FACE: FAST, ACCURATE AND CONTEXT-AWARE AUDIO ANNOTATION AND CLASSIFICATION

ABSTRACT

This paper presents a context-aware framework for feature selection and classification that enables fast and accurate audio event annotation and classification. The context-aware design starts by exploring feature extraction techniques to find a combination that yields high classification accuracy with minimal computational effort. This exploration also investigates the audio Tempo representation (Tempogram), an advantageous feature extraction method that previous work on environmental audio classification has overlooked. The proposed annotation method considers outlier, inlier, and hard-to-predict data samples to realize context-aware Active Learning, reaching an average accuracy of $90\%$ when only $15\%$ of the data are initially annotated. Our proposed sound classification algorithm obtains an average prediction accuracy of $98.05\%$ on the UrbanSound8K dataset. The notebooks containing our source code and implementation results are available at https://github.com/gitmehrdad/FACE.

Keywords Context-aware Machine Learning $\cdot$ Active Learning $\cdot$ Sound Classification $\cdot$ Audio Annotation $\cdot$ Audio Tempo Representation (Tempogram) $\cdot$ Semi-supervised Audio Classification $\cdot$ Feature Selection

1 Introduction

In recent years, sound event classification has gained attention due to its applications in audio analysis, music information retrieval, noise monitoring, animal call classification, and speech enhancement [1, 2, 3]. Progress in these fields requires annotated recordings [3] and large datasets rather than small ones [4]. Since labeling audio is time-consuming and expensive, building a large-scale labeled audio dataset is arduous [5]. Reliable methods for labeling datasets are therefore needed.

There are several methods for determining the labels of unlabeled data. Some papers, including [1, 4, 5, 6, 7, 8], pursue Semi-supervised Learning, while others, including [2, 3, 9, 10, 11, 12], use Active Learning. Given the superior annotation accuracy of Active Learning-based approaches, this paper also focuses on Active Learning to determine the labels of audio samples.

In certainty-based Active Learning, a small portion of the data with known labels first trains a classifier. The classifier then labels the unlabeled data, and the audio samples with the highest classification certainties join the training set. The cycle of classifier training, classification, and training set update continues until all audio samples are annotated.
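The certainty-based cycle described above can be illustrated with a minimal sketch. It is not the authors' implementation: the nearest-centroid classifier, the function names, the `batch_size` parameter, and the synthetic two-dimensional data are all assumptions chosen to keep the example self-contained.

```python
import numpy as np

def fit_centroids(X, y, n_classes):
    """Stand-in classifier (assumption): one mean vector per class.
    Requires at least one labeled sample for every class."""
    return np.stack([X[y == c].mean(axis=0) for c in range(n_classes)])

def predict_proba(centroids, X):
    """Softmax over negative squared distances to the class centroids."""
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    logits = -d2
    logits -= logits.max(axis=1, keepdims=True)
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)

def certainty_based_annotation(X, y_init, labeled_mask, n_classes, batch_size=20):
    """Repeat train -> predict -> promote the most certain samples
    until every sample carries a label."""
    y = y_init.copy()
    labeled = labeled_mask.copy()
    while not labeled.all():
        centroids = fit_centroids(X[labeled], y[labeled], n_classes)
        pool = np.flatnonzero(~labeled)               # still-unlabeled indices
        proba = predict_proba(centroids, X[pool])
        certainty = proba.max(axis=1)
        top = np.argsort(certainty)[::-1][:batch_size]  # most certain first
        chosen = pool[top]
        y[chosen] = proba[top].argmax(axis=1)         # accept predicted labels
        labeled[chosen] = True                        # they join the training set
    return y

# Synthetic demo data (hypothetical, not UrbanSound8K features).
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [6.0, 0.0], [0.0, 6.0]])
y_true = np.repeat(np.arange(3), 100)
X = centers[y_true] + rng.normal(scale=0.5, size=(300, 2))

labeled_mask = np.zeros(300, dtype=bool)
labeled_mask[::7] = True                              # roughly 15% initially labeled
y_init = np.where(labeled_mask, y_true, -1)           # -1 marks "no label yet"

y_pred = certainty_based_annotation(X, y_init, labeled_mask, n_classes=3)
accuracy = (y_pred == y_true).mean()
```

Promoting only the most certain predictions in each round limits the number of wrong labels that enter the training set early, at the cost of more training rounds.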

Several methods have been proposed for audio event classification. The works in [1, 4, 5, 7, 8] use deep multi-layer neural networks to classify the data. The main issue challenging some Deep Learning solutions is low prediction accuracy when the model overfits because training data are scarce. In the audio classification task, where a sufficient number of training samples is usually available, overfitting is not a concern; in the audio annotation task, however, labels are available for only a small portion of the data, and the overfitting problem makes deep multi-layer neural networks less attractive. The works in [2, 3, 6] employ classic machine learning classifiers to classify the data. These methods are potentially less susceptible to overfitting, since they have fewer unknown parameters to determine during training. Nevertheless, classic machine learning approaches suffer from the fixed input size issue.

Given the considerable achievements of Context-aware Machine Learning [13], this paper proposes context-aware solutions for the problems described above. A context-aware design considers the current situation when making decisions. For example, depending on the system's status, a context-aware Machine Learning system might dynamically repeat model fine-tuning and hyperparameter adjustment. The first research contribution of this paper is a heuristic approach for context-aware feature selection that provides summarized, fixed-size input vectors, yielding a fast, accurate, and overfitting-resistant classification model. The second contribution is the investigation of the audio signal's Cyclic Tempogram feature in the audio signal classification task. The third contribution is a context-aware, Active Learning-based method for audio signal annotation. The fourth contribution is a context-aware classifier design that aims at optimum classification results. Section 2 describes these contributions in data preparation and feature extraction, data annotation, and audio classification. Section 3 discusses the implementation results for the proposed methods. Section 4 concludes the paper.

2 The Proposed Method

2.1 Data Preparation and Feature Extraction

Assume a dataset of audio signals with a duration of four seconds and a sampling rate of $22.05\,\mathrm{kHz}$. Since each audio signal consists of $4 \times 22050$ samples, the input vector of a classifier operating directly on that dataset would contain 88200 variables. These variables might distinguish the audio signals from each other; however, processing such lengthy input vectors requires large amounts of memory and expensive computational resources. Besides, as the classifier's input vector grows longer, the trained model tends to be more susceptible to overfitting [14]. So, instead of using the time-domain content of an audio signal as the classifier's input vector, distinctive features extracted from that content form the input vector. A context-aware feature extraction provides good classification accuracy and a low likelihood of overfitting.

Some previous works, including [15, 16, 17, 18], discussed the feature extraction methods applicable to the sound event classification task. Following a context-aware approach, we evaluate different feature extraction methods to find the most effective selection among them. Since this paper aims at fast classification, we did not evaluate methods that offer a remarkable accuracy gain but have a low feature extraction speed, such as the Scattering Transform. The setup for the ablation study on the features consists of a dataset, a feature extraction method, and a classifier.

The employed dataset is UrbanSound8K (US8K), presented in [19], which consists of 10 classes of urban audio events: air conditioner, car horn, children playing, dog bark, drilling, engine idling, gunshot, jackhammer, siren, and street music. The classes contain different numbers of audio samples, and for at least half of the classes the samples are hard to distinguish from those of other classes. There are 8732 audio samples with different sampling rates and a maximum duration of 4 seconds. In this paper, the first step of data preparation is resampling the audio signals to a sampling rate of $22.05\,\mathrm{kHz}$ to obtain a uniform dataset. Resampling often compresses the data, since the original sampling rate of the audio signals usually exceeds $22.05\,\mathrm{kHz}$. Since the US8K dataset does not specify a validation set, we use $10\%$ of the training samples as validation samples. All context-aware decisions in this paper are made based on this validation set.

The examined features are the local autocorrelation of the onset strength envelope (Tempogram), Chromagram, Mel-scaled Spectrogram, Mel-frequency cepstral coefficients (MFCCs), Spectral Contrast, Spectral flatness, Spectral bandwidth, Spectral centroid, Roll-off frequency, the RMS value of each frame of the audio samples, tonal centroid features (Tonnetz), and the zero-crossing rate of the audio time series. Because the Tempogram, proposed in [20], has not previously been investigated in environmental sound classification papers, this paper studies it in that setting for the first time.
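The $10\%$ validation hold-out mentioned above can be sketched as follows. The source does not say how the validation samples are drawn, so the random shuffle, the fixed seed, the function name `holdout_split`, and the count of 1000 training samples are assumptions made only for illustration.

```python
import random

def holdout_split(indices, val_fraction=0.10, seed=0):
    """Shuffle the training indices and set aside a fraction for validation.
    Random (non-stratified) sampling is an assumption, not the paper's stated method."""
    shuffled = list(indices)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]   # (train, validation)

# Hypothetical training set of 1000 clips, identified by index.
train_idx, val_idx = holdout_split(range(1000))
```

Fixing the seed keeps the validation set identical across runs, which matters when every later design decision is tuned against it.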

Most of the mentioned feature extraction methods operate on windows of the audio signal, so the extracted features are called static, since they represent the information of specific windows of the signal. The delta and delta-delta coefficients of an audio feature, which are its first-order and second-order derivatives respectively, are called dynamic features. The delta features show the speech rate, and the delta-delta features indicate the speech's acceleration [21]. Some feature extraction methods generate a Spectrogram, a visual representation of the audio signal's frequency spectrum. Treating the Spectrogram as an image, we can borrow feature extraction techniques from Computer Vision, so we apply a Gaussian filter to the feature vectors before taking derivatives.

According to [14], feature selection helps to overcome the model's overfitting problem by reducing the input vector's dimensions, and it also helps to obviate the fixed input size issue mentioned in the previous section. The input vector's length depends on the number of windows used during feature extraction. When the number of windows is too high, the information in consecutive windows does not differ substantially; when it is too low, the extracted features do not suffice to distinguish different audio samples easily. To reduce the data dimensions, the mean and the variance of each extracted feature, computed across the windows, replace the original data. The same replacement applies to the delta and delta-delta vectors. The final feature vector therefore comprises six vectors of floating-point numbers, containing the mean and variance values of the original feature and of its first-order and second-order derivatives.

In this paper, we used the feature extraction tools provided by Librosa, a Python package, and left most arguments of the feature extraction functions at their default values. Nevertheless, some function arguments, including the window length and the number of samples per window, were adjusted using the validation data. Before the experiments, we used quantile information to transform the features into standardized vectors whose elements all lie in the range $(-1, 1)$.
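The six-vector summary described above can be sketched with NumPy alone. This is an illustration under stated assumptions, not the authors' Librosa pipeline: the derivative is approximated with `np.gradient`, the Gaussian kernel width is arbitrary, the statistics of the original feature are taken on the unsmoothed values, and the input matrix is random data. The 135 rows and 173 frames are hypothetical values, with 135 chosen only so that the output length equals the 810 numbers reported later in the paper.

```python
import numpy as np

def gaussian_kernel(sigma=1.0, radius=3):
    """Normalized 1-D Gaussian kernel (sigma and radius are assumptions)."""
    t = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (t / sigma) ** 2)
    return k / k.sum()

def smooth_along_time(feat, sigma=1.0):
    """Gaussian-filter every feature row; axis 1 indexes the windows (frames)."""
    k = gaussian_kernel(sigma)
    half = len(k) // 2
    padded = np.pad(feat, ((0, 0), (half, half)), mode="edge")
    return np.stack([np.convolve(row, k, mode="valid") for row in padded])

def summarize(feat, sigma=1.0):
    """Collapse a (n_coeffs, n_frames) matrix into 6 * n_coeffs numbers:
    mean and variance of the feature, its delta, and its delta-delta."""
    smoothed = smooth_along_time(feat, sigma)   # filter before differentiating
    delta = np.gradient(smoothed, axis=1)       # first-order derivative
    delta2 = np.gradient(delta, axis=1)         # second-order derivative
    parts = []
    for m in (feat, delta, delta2):
        parts.append(m.mean(axis=1))
        parts.append(m.var(axis=1))
    return np.concatenate(parts)

# Hypothetical feature matrix: 135 coefficient rows over 173 frames.
rng = np.random.default_rng(0)
feat = rng.normal(size=(135, 173))
vec = summarize(feat)                           # fixed-size vector of length 810
```

Because the statistics are taken across frames, the output length depends only on the number of coefficient rows, so clips of different durations map to vectors of the same size.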

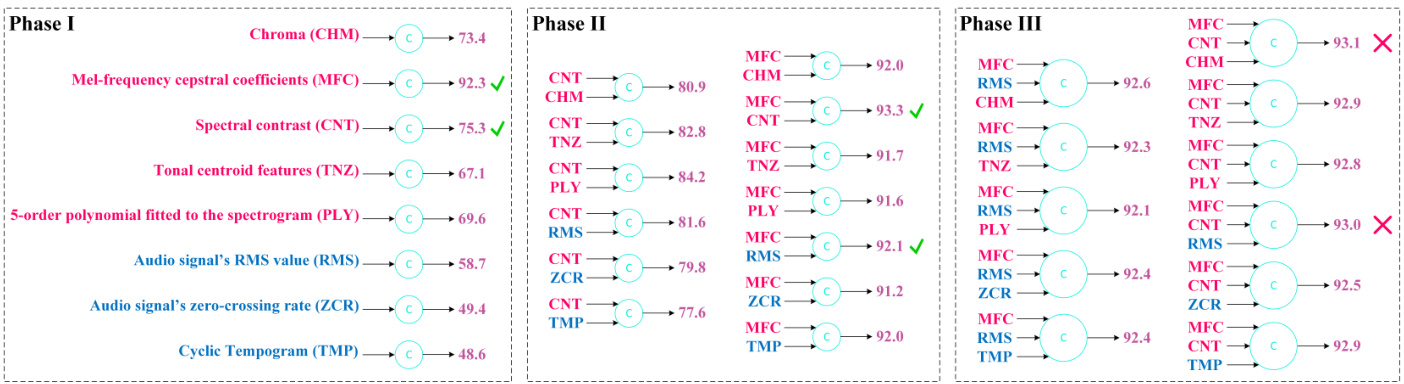

Figure 1: Feature selection results based on the proposed heuristic approach

A context-aware choice of classifier is the one best able to distinguish the data. Feature selection and classifier selection pose a dilemma: a classifier might hide the distinction that some features provide, while a feature set might hide the resolution that some classifiers provide. So, initially, we explore the extracted features using a specific classifier chosen according to our assumptions about the data distribution. After feature selection, we search for the best classifier to distinguish the data.

As the t-SNE plot of the UrbanSound8K dataset samples in [22] shows, the data distribution in the feature space is heterogeneous and sparse. In a heterogeneous data distribution, the neighborhood of each data sample contains samples with different class labels. In a sparse distribution, same-class data samples do not form dense areas. Assuming that the feature selection in our work also results in a heterogeneous and sparse data distribution, we search for a classifier that can separate the data classes.

Classifiers that draw a linear decision boundary fail to separate sparse and heterogeneous data, and classifiers that use non-linear kernels require expensive computation. So, a decision tree classifier with an appropriate depth seems a suitable choice. The high potential for overfitting with a decision tree classifier necessitates using an ensemble of tree classifiers. Despite the improvement, overfitting still limits the accuracy of such an ensemble, and the XGBoost classifier offers a solution by adjusting the decision boundaries through regularization [14].

Inspired by the One-vs-All approach, which enables binary classifiers to handle multi-class classification, we propose to employ a One-vs-All XGBoost classifier. Instead of a single multi-class XGBoost classifier, multiple binary XGBoost classifiers draw the decision boundaries, and each classifier tries to separate the members of one specific class from all other data. Since a binary XGBoost classifier has a lighter workload than a multi-class one, the computational overhead of the proposed method is not an issue.
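The One-vs-All arrangement described above can be sketched generically: one binary model per class, with the final label taken from the model that reports the highest score. To keep the example dependency-free, a plain logistic regression stands in for the binary XGBoost classifier; that substitution, the class names `LogisticBinary` and `OneVsAll`, and the synthetic data are all assumptions.

```python
import numpy as np

class LogisticBinary:
    """Stand-in binary learner (assumption): logistic regression trained by
    gradient descent. The paper uses binary XGBoost classifiers instead."""
    def __init__(self, lr=0.1, epochs=300):
        self.lr, self.epochs = lr, epochs

    def fit(self, X, t):
        Xb = np.hstack([X, np.ones((len(X), 1))])       # append a bias column
        self.w = np.zeros(Xb.shape[1])
        for _ in range(self.epochs):
            p = 1.0 / (1.0 + np.exp(-Xb @ self.w))
            self.w -= self.lr * Xb.T @ (p - t) / len(X)
        return self

    def score(self, X):
        Xb = np.hstack([X, np.ones((len(X), 1))])
        return 1.0 / (1.0 + np.exp(-Xb @ self.w))       # P(sample is in the class)

class OneVsAll:
    """Train one binary model per class; predict the class whose model scores highest."""
    def __init__(self, make_binary):
        self.make_binary = make_binary

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.models_ = [self.make_binary().fit(X, (y == c).astype(float))
                        for c in self.classes_]
        return self

    def predict(self, X):
        scores = np.column_stack([m.score(X) for m in self.models_])
        return self.classes_[scores.argmax(axis=1)]

# Synthetic three-class demo data (hypothetical, not UrbanSound8K features).
rng = np.random.default_rng(0)
centers = np.array([[4.0, 0.0], [-2.0, 3.46], [-2.0, -3.46]])
y = np.repeat(np.arange(3), 100)
X = centers[y] + rng.normal(scale=0.5, size=(300, 2))

train, test = np.arange(0, 300, 2), np.arange(1, 300, 2)
clf = OneVsAll(LogisticBinary).fit(X[train], y[train])
ova_accuracy = (clf.predict(X[test]) == y[test]).mean()
```

Since the wrapper only needs `fit` and `score` from the binary learner, any binary classifier exposing those two methods could replace the stand-in.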

Figure 1 depicts the feature selection results. To avoid examining all feature combinations, we followed a heuristic approach: at each phase, we add one new feature to each feature set, report the classification accuracy on the validation samples, move the top-two feature sets to the next phase, and stop the investigation once the classification accuracy stops improving. In Figure 1, the results of Phase III show no improvement over Phase II; therefore, we pick the MFC+CNT combination from Phase II as our final selection. Figure 1 uses method abbreviations in Phase II and Phase III of the experiment and shows the time-domain features in blue and the frequency-domain features in red.

Figure 1 does not report the Mel-scaled Spectrogram results, since MFCC features are not only convertible to Mel-scaled Spectrogram features but also provide higher classification accuracy. Spectral flatness, Spectral bandwidth, Spectral centroid, and Roll-off frequency were excluded from Figure 1 because of their poor classification accuracy. Note that the reported accuracies belong to a simple classification procedure in which no Active Learning or other accuracy optimization technique is applied.

As seen in Figure 1, the remarkable accuracy that the MFCC feature provides on its own explains its widespread use in previous works. The feature vector of the selected combination consists of 810 floating-point numbers, which substantially reduces the computational resources required for the annotation and classification tasks, because 810 features are processed instead of 88200.
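The phase-by-phase heuristic described above behaves like a small beam search over feature sets. The sketch below is an illustration, not the authors' notebook code: the function name, the `beam_width` parameter, and the toy scoring table are assumptions, and the scores are invented numbers rather than the accuracies in Figure 1. Only the MFC and CNT abbreviations come from the paper; TMP and CHR are hypothetical labels.

```python
def greedy_top_k_selection(features, evaluate, beam_width=2):
    """Grow feature sets by one feature per phase, keep the best `beam_width`
    sets, and stop as soon as a phase fails to improve the best accuracy."""
    beam = [(frozenset(), float("-inf"))]
    best_set, best_acc = frozenset(), float("-inf")
    while True:
        candidates = {}
        for fs, _ in beam:
            for f in features:
                if f not in fs:
                    new = fs | {f}
                    if new not in candidates:        # evaluate each set once
                        candidates[new] = evaluate(new)
        if not candidates:
            break                                    # every feature already used
        ranked = sorted(candidates.items(), key=lambda kv: kv[1], reverse=True)
        if ranked[0][1] <= best_acc:
            break                                    # accuracy stopped improving
        best_set, best_acc = ranked[0]
        beam = ranked[:beam_width]                   # top sets move to next phase
    return best_set, best_acc

# Toy validation scores: hypothetical values, NOT the paper's results.
toy_score = {"MFC": 0.80, "CNT": 0.55, "TMP": 0.50, "CHR": 0.45}

def toy_evaluate(fs):
    score = max(toy_score[f] for f in fs)
    if {"MFC", "CNT"} <= fs:
        score += 0.05                                # pretend these two complement each other
    return score - 0.01 * (len(fs) - 1)              # pretend extra features add noise

selected, selected_acc = greedy_top_k_selection(
    ["MFC", "CNT", "TMP", "CHR"], toy_evaluate
)
```

In practice `evaluate` would train the chosen classifier on the candidate feature set and return its validation accuracy, so each phase costs a number of training runs that grows only linearly with the number of features.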

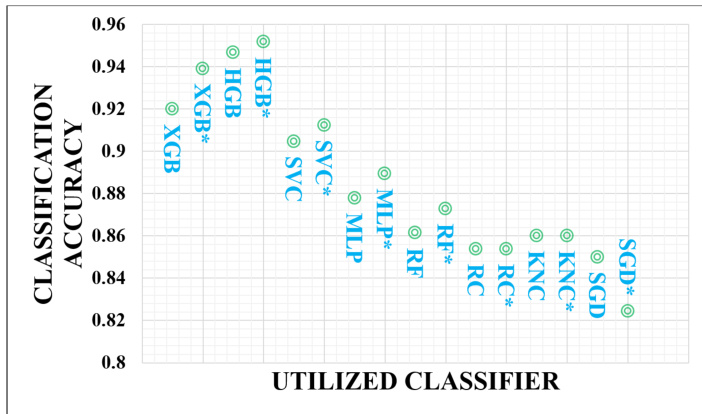

Figure 2: The classification accuracy vs. the utilized classifier

Figure 2 shows the classification results obtained with various classifiers when no Active Learning or other accuracy optimization technique is applied. The experiment focuses on finding the best classifier for the annotation task, so it explores only classic machine-learning methods. In Figure 2, XGB, HGB, SVC, MLP, RF, RC, KNC, and SGD respectively denote XGBoost, the Histogram-based Gradient Boosting Classifier, the Support Vector Machine, the Multi-layer Perceptron, the Random Forest, the Ridge Classifier, the K-nearest-neighbors Classifier, and the Regula