Pix3D: Dataset and Methods for Single-Image 3D Shape Modeling

Xingyuan Sun∗1,2 Jiajun Wu∗1 Xiuming Zhang1 Zhoutong Zhang1 Chengkai Zhang1 Tianfan Xue3 Joshua B. Tenenbaum1 William T. Freeman1,3

1 Massachusetts Institute of Technology, 2 Shanghai Jiao Tong University, 3 Google Research

Figure 1: We present Pix3D, a new large-scale dataset of diverse image-shape pairs. Each 3D shape in Pix3D is associated with a rich and diverse set of images, each with an accurate 3D pose annotation to ensure precise 2D-3D alignment. In comparison, existing datasets have limitations: 3D models may not match the objects in images; pose annotations may be imprecise; or the dataset may be relatively small.

Abstract

We study 3D shape modeling from a single image and make contributions to it in three aspects. First, we present Pix3D, a large-scale benchmark of diverse image-shape pairs with pixel-level 2D-3D alignment. Pix3D has wide applications in shape-related tasks including reconstruction, retrieval, viewpoint estimation, etc. Building such a large-scale dataset, however, is highly challenging; existing datasets either contain only synthetic data, or lack precise alignment between 2D images and 3D shapes, or only have a small number of images. Second, we calibrate the evaluation criteria for 3D shape reconstruction through behavioral studies, and use them to objectively and systematically benchmark cutting-edge reconstruction algorithms on Pix3D. Third, we design a novel model that simultaneously performs 3D reconstruction and pose estimation; our multi-task learning approach achieves state-of-the-art performance on both tasks.

1. Introduction

The computer vision community has put major effort into building datasets. In 3D vision, there are rich 3D CAD model repositories like ShapeNet [7] and the Princeton Shape Benchmark [50], large-scale datasets associating images and shapes like Pascal 3D+ [65] and ObjectNet3D [64], and benchmarks with fine-grained pose annotations for shapes in images like IKEA [39]. Why do we need one more?

Looking at Figure 1, we see that existing datasets have limitations for the task of modeling a 3D object from a single image. ShapeNet is a large dataset of 3D models, but does not come with real images; Pascal 3D+ and ObjectNet3D have real images, but the image-shape alignment is rough because the 3D models do not match the objects in images; IKEA has high-quality image-3D alignment, but it only contains 90 3D models and 759 images.

We desire a dataset that has all three merits: a large-scale dataset of real images and ground-truth shapes with precise 2D-3D alignment. Our dataset, named Pix3D, has 395 3D shapes of nine object categories. Each shape is associated with a set of real images, capturing the exact object in diverse environments. Further, the 10,069 image-shape pairs have precise 3D annotations, giving pixel-level alignment between shapes and their silhouettes in the images.

Building such a dataset, however, is highly challenging. For each object, it is difficult to simultaneously collect its high-quality geometry and in-the-wild images. We can crawl many images of real-world objects, but we do not have access to their shapes; 3D CAD repositories offer object geometry, but do not come with real images. Further, for each image-shape pair, we need a precise pose annotation that aligns the shape with its projection in the image.

We overcome these challenges by constructing Pix3D in three steps. First, we collect a large number of image-shape pairs by crawling the web and performing 3D scans ourselves. Second, we collect 2D keypoint annotations of objects in the images on Amazon Mechanical Turk, with which we optimize for 3D poses that align shapes with image silhouettes. Third, we filter out image-shape pairs with poor alignment and, at the same time, collect attributes (i.e., truncation, occlusion) for each instance, again by crowdsourcing.

In addition to high-quality data, we need a proper metric to objectively evaluate the reconstruction results. A well-designed metric should reflect the visual appeal of the reconstructions. In this paper, we calibrate commonly used metrics, including intersection over union, Chamfer distance, and earth mover's distance, on how well they capture human perception of shape similarity. Based on this, we benchmark state-of-the-art algorithms for 3D object modeling on Pix3D to demonstrate their strengths and weaknesses.

With its high-quality alignment, Pix3D is also suitable for object pose estimation and shape retrieval. To demonstrate that, we propose a novel model that performs shape and pose estimation simultaneously. Given a single RGB image, our model first predicts its 2.5D sketches, and then regresses the 3D shape and the camera parameters from the estimated 2.5D sketches. Experiments show that multi-task learning helps to boost the model’s performance.

Our contributions are three-fold. First, we build a new dataset for single-image 3D object modeling; Pix3D has a diverse collection of image-shape pairs with precise 2D-3D alignment. Second, we calibrate metrics for 3D shape reconstruction based on their correlations with human perception, and benchmark state-of-the-art algorithms on 3D reconstruction, pose estimation, and shape retrieval. Third, we present a novel model that simultaneously estimates object shape and pose, achieving state-of-the-art performance on both tasks.

2. Related Work

Datasets of 3D shapes and scenes. For decades, researchers have been building datasets of 3D objects, either as a repository of 3D CAD models [4, 5, 50] or as images of 3D shapes with pose annotations [35, 48]. Both directions have witnessed the rapid development of web-scale databases: ShapeNet [7] was proposed as a large repository of more than 50K models covering 55 categories, and Xiang et al. built Pascal 3D+ [65] and ObjectNet3D [64], two large-scale datasets with alignment between 2D images and the 3D shape inside. While these datasets have helped to advance the field of 3D shape modeling, they have their respective limitations: datasets like ShapeNet or Elastic2D3D [33] do not have real images, and recent 3D reconstruction challenges using ShapeNet have had to rely exclusively on synthetic images [68]; Pascal 3D+ and ObjectNet3D have only rough alignment between images and shapes, because objects in the images are matched to a pre-defined set of CAD models, not their actual shapes. This has limited their usage as a benchmark for 3D shape reconstruction [60].

With depth sensors like Kinect [24, 27], the community has built various RGB-D or depth-only datasets of objects and scenes. We refer readers to the review article from Firman [14] for a comprehensive list. Among those, many object datasets are designed for benchmarking robot manipulation [6, 23, 34, 52]. These datasets often contain a relatively small set of hand-held objects in front of clean backgrounds. Tanks and Temples [31] is an exciting new benchmark with 14 scenes, designed for high-quality, large-scale, multi-view 3D reconstruction. In comparison, our dataset, Pix3D, focuses on reconstructing a 3D object from a single image, and contains many more real-world objects and images.

Probably the dataset closest to Pix3D is the large collection of object scans from Choi et al. [8], which contains a rich and diverse set of shapes, each with an RGB-D video. Their dataset, however, is not ideal for single-image 3D shape modeling for two reasons. First, the object of interest may be truncated throughout the video; this is especially the case for large objects like sofas. Second, their dataset does not explore the various contexts that an object may appear in, as each shape is only associated with a single scan. In Pix3D, we address both problems by leveraging powerful web search engines and crowdsourcing.

Another closely related benchmark is IKEA [39], which provides accurate alignment between images of IKEA objects and 3D CAD models. This dataset is therefore particularly suitable for fine pose estimation. However, it contains only 759 images and 90 shapes, relatively small for shape modeling*. In contrast, Pix3D contains 10,069 images (13.3×) and 395 shapes (4.4×) of greater variations.

Researchers have also explored constructing scene datasets with 3D annotations. Notable attempts include LabelMe3D [47], NYU-D [51], SUN RGB-D [54], KITTI [16], and modern large-scale RGB-D scene datasets [10, 41, 55]. These datasets are either synthetic or contain only 3D surfaces of real scenes. Pix3D, in contrast, offers accurate alignment between 3D object shape and 2D images in the wild.

Single-image 3D reconstruction. The problem of recovering object shape from a single image is challenging, as it requires both powerful recognition systems and prior shape knowledge. Using deep convolutional networks, researchers have made significant progress in recent years [9, 17, 21, 29, 42, 44, 57, 60, 61, 63, 67, 53, 62]. While most of these approaches represent objects in voxels, there have also been attempts to reconstruct objects in point clouds [12] or octrees [45, 58]. In this paper, we demonstrate that our newly proposed Pix3D serves as an ideal benchmark for evaluating these algorithms. We also propose a novel model that jointly estimates an object's shape and its 3D pose.

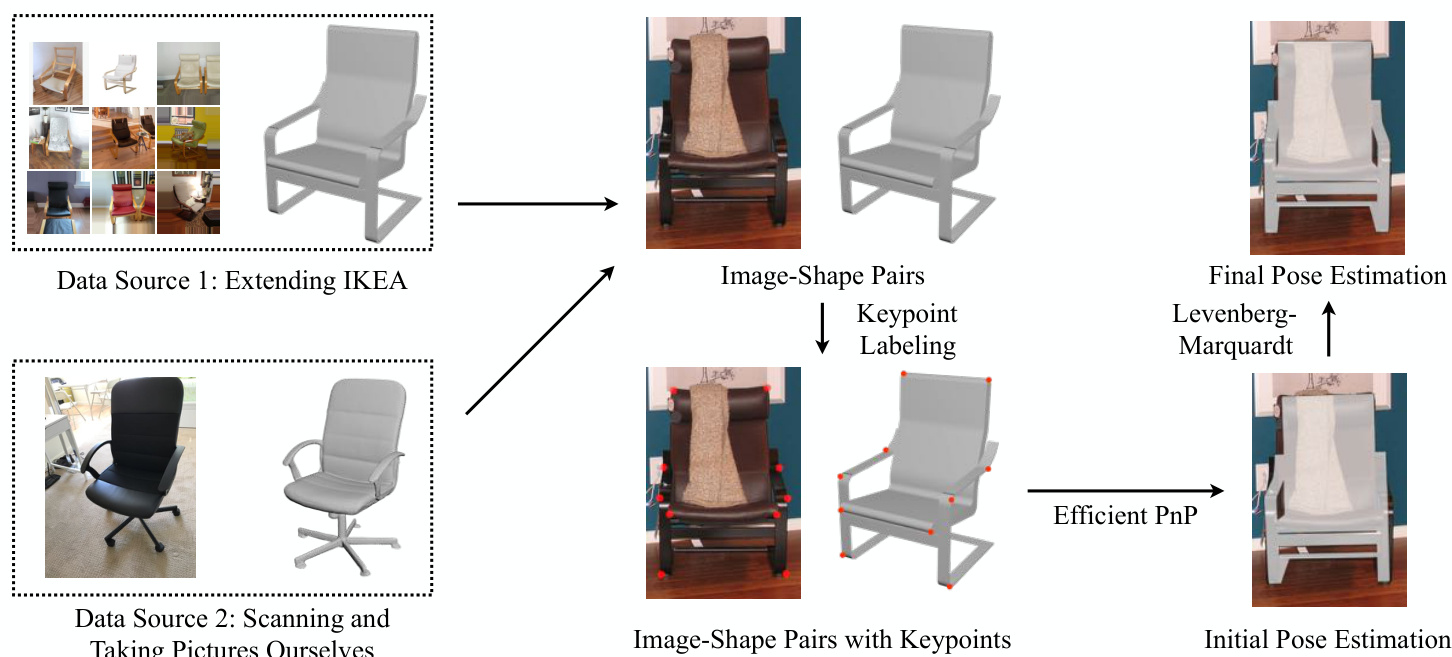

Figure 2: We build the dataset in two steps. First, we collect image-shape pairs by crawling web images of IKEA furniture as well as scanning objects and taking pictures ourselves. Second, we align the shapes with their 2D silhouettes by minimizing the distance between the 2D coordinates of the keypoints and their projected positions from 3D, using the Efficient PnP and the Levenberg-Marquardt algorithm.

Shape retrieval. Another related research direction is retrieving similar 3D shapes given a single image, instead of reconstructing the object's actual geometry [1, 15, 19, 49]. Pix3D contains shapes with significant inter-class and intra-class variations, and is therefore suitable for both general-purpose and fine-grained shape retrieval tasks.

3D pose estimation. Many of the aforementioned object datasets include annotations of object poses [35, 39, 48, 64, 65]. Researchers have also proposed numerous methods for 3D pose estimation [13, 43, 56, 59]. In this paper, we show that Pix3D is also a proper benchmark for this task.

3. Building Pix3D

Figure 2 summarizes how we build Pix3D. We collect raw images from web search engines and shapes from 3D repositories; we also take pictures and scan shapes ourselves. Finally, we use labeled keypoints on both 2D images and 3D shapes to align them.

3.1. Collecting Image-Shape Pairs

We obtain raw image-shape pairs in two ways. One is to crawl images of IKEA furniture from the web and align them with CAD models provided in the IKEA dataset [39]. The other is to directly scan 3D shapes and take pictures.

Extending IKEA. The IKEA dataset [39] contains 219 3D shapes, but provides only 759 images for 90 shapes. Therefore, we choose to keep the 3D shapes from the IKEA dataset, but expand the set of 2D images using online image search engines and crowdsourcing.

For each 3D shape, we first search for its corresponding 2D images through Google, Bing, and Baidu, using its IKEA model name as the keyword. We obtain 104,220 images for the 219 shapes. We then use Amazon Mechanical Turk (AMT) to remove irrelevant ones. For each image, we ask three AMT workers to label whether this image matches the 3D shape or not. For images whose three responses differ, we ask three additional workers and decide whether to keep them based on majority voting. We end up with 14,600 images for the 219 IKEA shapes.

3D scan. We scan non-IKEA objects with a Structure Sensor† mounted on an iPad. We choose to use the Structure Sensor because its mobility enables us to capture a wide range of shapes.

The iPad RGB camera is synchronized with the depth sensor at $30\mathrm{Hz}$, and calibrated by the Scanner App provided by Occipital, Inc.‡ The resolution of RGB frames is $2592\times1936$, and the resolution of depth frames is $320\times240$. For each object, we take a short video and fuse the depth data to get its 3D mesh using the fusion algorithm provided by Occipital, Inc. We also take 10–20 images of each scanned object in front of various backgrounds from different viewpoints, making sure the object is neither cropped nor occluded. In total, we have scanned 209 objects and taken 2,313 images. Combining these with the IKEA shapes and images, we have 418 shapes and 16,913 images altogether.

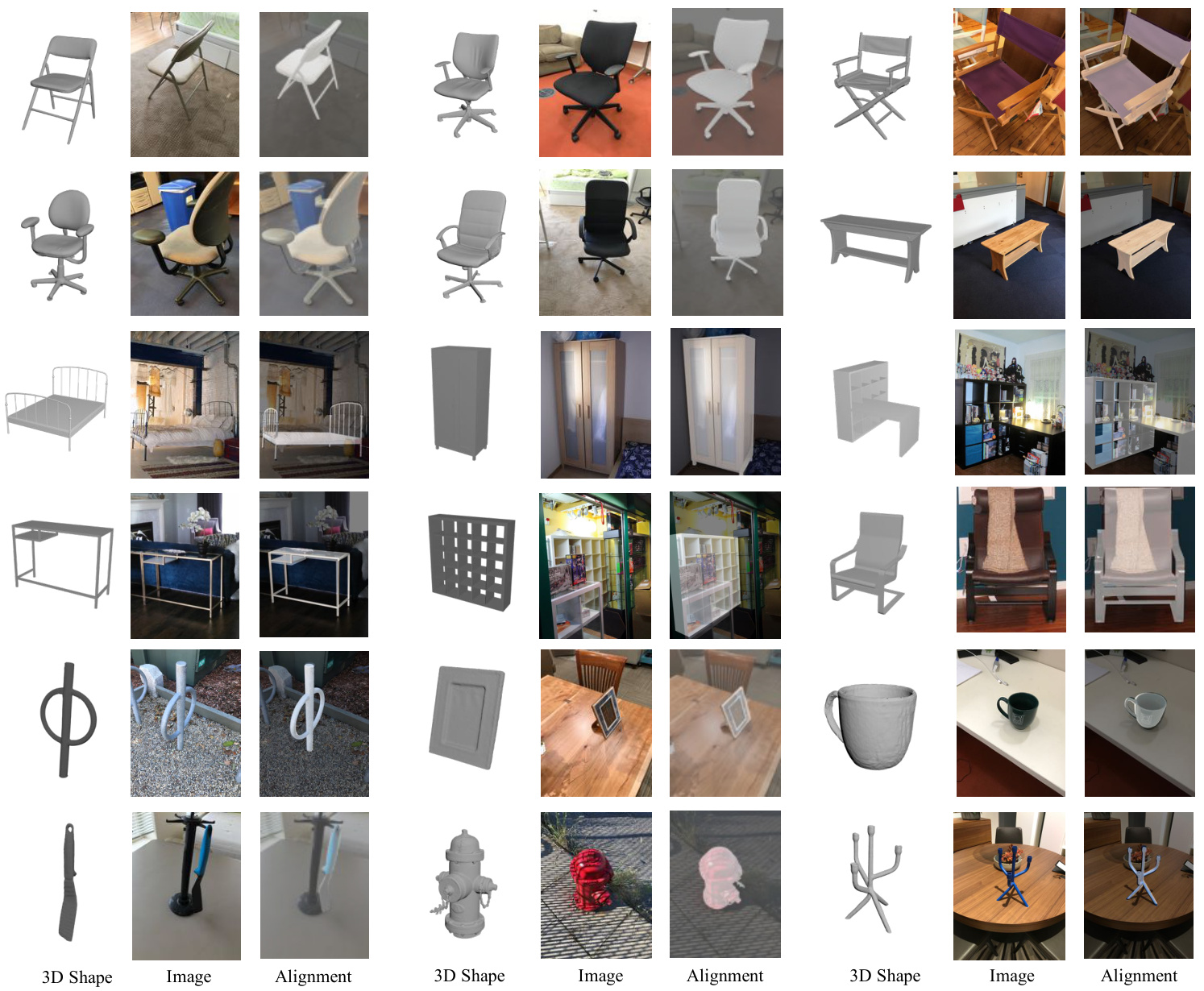

Figure 3: Sample images and shapes in Pix3D. From left to right: 3D shapes, 2D images, and 2D-3D alignment. Rows 1–2 show some chairs we scanned, rows 3–4 show a few IKEA objects, and rows 5–6 show some objects of other categories we scanned.

3.2. Image-Shape Alignment

To align a 3D CAD model with its projection in a 2D image, we need to solve for its 3D pose (translation and rotation), and the camera parameters used to capture the image.

We use a keypoint-based method inspired by Lim et al. [39]. Denote the keypoints' 2D coordinates as $X_{\mathrm{2D}}=\{\mathbf{x}_{1},\mathbf{x}_{2},\cdots,\mathbf{x}_{n}\}$ and their corresponding 3D coordinates as $X_{\mathrm{3D}}=\{\mathbf{X}_{1},\mathbf{X}_{2},\cdots,\mathbf{X}_{n}\}$. We solve for camera parameters and 3D poses that minimize the reprojection error of the keypoints. Specifically, we want to find the projection matrix $P$ that minimizes

$$
\mathcal{L}(P;X_{\mathrm{3D}},X_{\mathrm{2D}})=\sum_{i}\left\|\mathrm{Proj}_{P}(\mathbf{X}_{i})-\mathbf{x}_{i}\right\|_{2}^{2}, \tag{1}
$$

where $\mathrm{Proj}_{P}(\cdot)$ is the projection function.

Under the central projection assumption (zero skew, square pixels, and the optical center at the center of the frame), we have $P=K[R|T]$, where $K$ is the camera intrinsic matrix; $R\in\mathbb{R}^{3\times3}$ and $T\in\mathbb{R}^{3}$ represent the object's 3D rotation and 3D translation, respectively. We know

$$
K=\begin{bmatrix} f & 0 & w/2 \\ 0 & f & h/2 \\ 0 & 0 & 1 \end{bmatrix},
$$

where $f$ is the focal length, and $w$ and $h$ are the width and height of the image. Therefore, there are altogether seven parameters to be estimated: rotations $\theta,\phi,\psi$ , translations $x,y,z$ , and focal length $f$ (Rotation matrix $R$ is determined by $\theta,\phi$ , and $\psi$ ).
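
As a rough illustration of Equation 1 under this camera model, the following is a minimal Python sketch, not the authors' implementation. The function names `project` and `reprojection_error` are hypothetical, and the rotation is passed directly as a 3×3 matrix because the text does not specify the Euler-angle convention for $\theta,\phi,\psi$.

```python
def project(point, R, T, f, w, h):
    """Project a 3D point with P = K [R | T] (central projection assumption)."""
    # Camera-frame coordinates: X_cam = R @ X + T
    xc, yc, zc = (
        sum(R[r][c] * point[c] for c in range(3)) + T[r] for r in range(3)
    )
    # Apply K: focal length f, principal point at the image center (w/2, h/2)
    return (f * xc / zc + w / 2.0, f * yc / zc + h / 2.0)


def reprojection_error(points_3d, points_2d, R, T, f, w, h):
    """Sum of squared 2D distances between projected and annotated keypoints."""
    total = 0.0
    for X, x in zip(points_3d, points_2d):
        u, v = project(X, R, T, f, w, h)
        total += (u - x[0]) ** 2 + (v - x[1]) ** 2
    return total
```

With an identity rotation, a point on the optical axis lands at the image center $(w/2, h/2)$, which is a quick sanity check on the intrinsic matrix above.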

To solve Equation 1, we first calculate a rough 3D pose using the Efficient $\mathrm{P}n\mathrm{P}$ algorithm [36] and then refine it using the Levenberg-Marquardt algorithm [37, 40], as shown in Figure 2. Details of each step are described below.

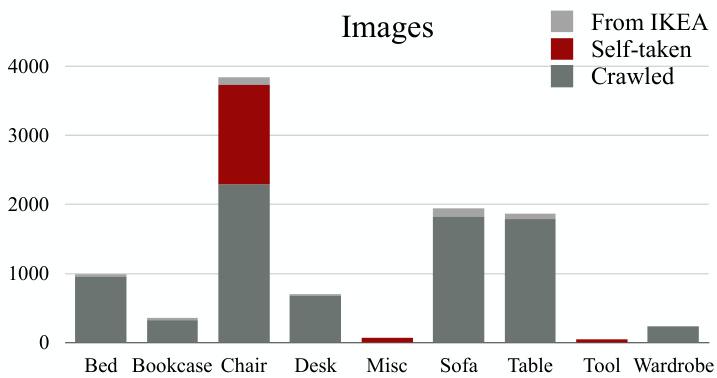

Figure 4: The distribution of images across categories

Efficient P$n$P. Perspective-$n$-Point (P$n$P) is the problem of estimating the pose of a calibrated camera given paired 3D points and 2D projections. The Efficient P$n$P (EPnP) algorithm solves the problem using virtual control points [37]. Because EPnP does not estimate the focal length, we enumerate the focal length $f$ from 300 to 2,000 with a step size of 10, solve for the 3D pose with each $f$, and choose the one with the minimum projection error.
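
The focal-length enumeration can be sketched as a simple grid search. This is only an illustration under stated assumptions: `solve_pose` is a hypothetical callback standing in for an EPnP solver, assumed to return a pose and its projection error for a given focal length; it is not part of any particular library.

```python
def enumerate_focal_length(solve_pose, f_min=300, f_max=2000, step=10):
    """Try each candidate focal length and keep the pose with the lowest error.

    `solve_pose(f)` is a hypothetical callback: it should run a PnP solver
    (for example EPnP) with focal length f and return (pose, projection_error).
    """
    best = None
    for f in range(f_min, f_max + 1, step):  # 300, 310, ..., 2000
        pose, err = solve_pose(f)
        if best is None or err < best[2]:
            best = (f, pose, err)
    return best  # (focal length, pose, projection error)
```

With the range stated in the text, the loop evaluates 171 candidate focal lengths per image.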

The Levenberg-Marquardt algorithm (LMA). We take the output of EPnP with 50 random disturbances as the initial states, and run LMA on each of them. Finally, we choose the solution with the minimum projection error.

Implementation details. For each 3D shape, we manually label its 3D keypoints. The number of keypoints ranges from 8 to 24. For each image, we ask three AMT workers to label if each keypoint is visible on the image, and if so, where it is. We only consider visible keypoints during the optimization.

The 2D keypoint annotations are noisy, which severely hurts the performance of the optimization algorithm. We try two methods to increase its robustness. The first is to use RANSAC. The second is to use only a subset of 2D keypoint annotations. For each image, denote $C=\{c_{1},c_{2},c_{3}\}$ as its three sets of human annotations. We then enumerate the seven nonempty subsets $C_{k}\subseteq C$; for each keypoint, we compute the median of its 2D coordinates in $C_{k}$. We apply our optimization algorithm on every subset $C_{k}$, and keep the output with the minimum projection error. After that, we let three AMT workers choose, for each image, which of the two methods offers better alignment, or whether neither performs well. At the same time, we also collect attributes (i.e., truncation, occlusion) for each image. Finally, we fine-tune the annotations ourselves using the GUI offered in ObjectNet3D [64]. Altogether there are 395 3D shapes and 10,069 images. Sample 2D-3D pairs are shown in Figure 3.
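
The subset-based strategy can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `candidate_keypoint_sets` is hypothetical, the median is assumed to be taken per coordinate, and every keypoint is assumed to be labeled as visible by every worker.

```python
from itertools import combinations
from statistics import median


def candidate_keypoint_sets(annotations):
    """Yield (subset indices, fused keypoints) for every nonempty subset.

    `annotations` holds one labeling per worker; each labeling is a list of
    (x, y) tuples, one per keypoint, in the same keypoint order.
    """
    n = len(annotations)
    for size in range(1, n + 1):
        for idx in combinations(range(n), size):
            chosen = [annotations[i] for i in idx]
            # Per keypoint, take the coordinate-wise median over the subset
            fused = [
                (median(p[0] for p in pts), median(p[1] for p in pts))
                for pts in zip(*chosen)
            ]
            yield idx, fused
```

For three workers this yields seven candidate keypoint sets; each would then be passed to the pose optimization, and the result with the lowest projection error kept.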

4. Exploring Pix3D

We now present some statistics of Pix3D, and contrast it with its predecessors.

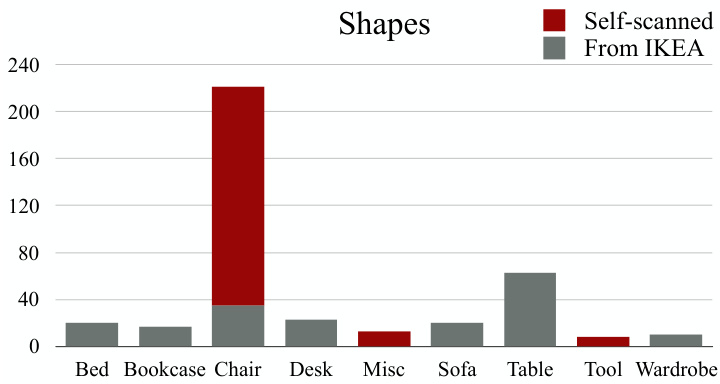

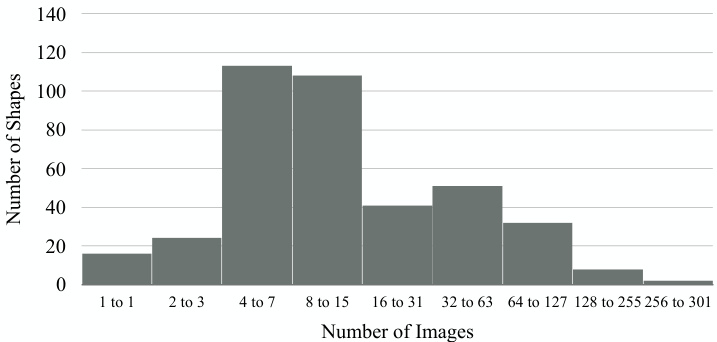

Dataset statistics. Figures 4 and 5 show the category distributions of 2D images and 3D shapes in Pix3D; Figure 6 shows the distribution of the number of images each model has. Our dataset covers a large variety of shapes, each of which has a large number of in-the-wild images. Chairs make up a significant part of Pix3D, because they are common, highly diverse, and well-studied in recent literature [11, 60, 20].

Figure 5: The distribution of shapes across categories

Figure 6: Number of images available for each shape

Quantitative evaluation. As a quantitative comparison of the quality of Pix3D and other datasets, we randomly select 25 chair and 25 sofa images from PASCAL 3D+ [65], ObjectNet3D [64], IKEA [39], and Pix3D. For each image, we render the projected 2D silhouette of the shape using its pose annotation provided by the dataset. We then manually annotate the ground truth object masks in these images, and calculate Intersection over Union (IoU) between the projections and the ground truth. For each image-shape pair, we also ask 50 AMT workers whether they think the image is picturing the 3D ground truth shape provided by the dataset.
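
For reference, the IoU between a rendered silhouette and an annotated mask can be computed as in the sketch below. It assumes both masks are equally sized nested lists of 0/1 values; the name `mask_iou` and the convention of returning 1.0 when both masks are empty are choices made for this illustration.

```python
def mask_iou(mask_a, mask_b):
    """IoU between two binary masks given as equally sized nested lists."""
    inter = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            inter += 1 if (a and b) else 0  # pixel set in both masks
            union += 1 if (a or b) else 0   # pixel set in at least one mask
    # Assumed convention: two empty masks count as a perfect match
    return inter / union if union else 1.0
```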

From Table 1, we see that Pix3D has much higher IoUs than PASCAL 3D+ and ObjectNet3D, and slightly higher IoUs compared with the IKEA dataset. Human raters also judge that images and shapes match in IKEA and Pix3D, but not in PASCAL 3D+ or ObjectNet3D. In addition, we observe that many CAD models in the IKEA dataset are of an incorrect scale, making it challenging to align the shapes with images. For example, there are only 15 unoccluded and untruncated images of sofas in IKEA, while Pix3D has 1,092.

5. Metrics

Designing a good evaluation metric is important to encourage researchers to design algorithms that reconstruct high-quality 3D geometry, rather than low-quality 3D reconstructions that overfit to a certain metric.

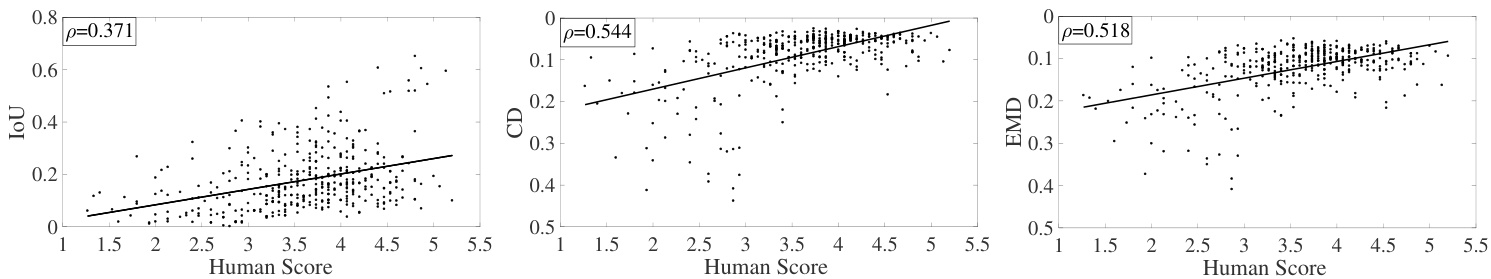

Figure 7: Scatter plots between humans’ ratings of reconstructed shapes and their IoU, CD, and EMD. The three metrics have a Pearson’s coefficient of 0.371, 0.544, and 0.518, respectively.

Table 1: We compute the Intersection over Union (IoU) between manually annotated 2D masks and the 2D projections of 3D shapes. We also ask humans to judge whether the object in the images matches the provided shape.

Many 3D reconstruction papers use Intersection over Union (IoU) to evaluate the similarity between ground truth and reconstructed 3D voxels, which may significantly deviate from human perception. In contrast, metrics like shortest distance and geodesic distance are more commonly used than IoU for matching meshes in graphics [32, 25]. Here, we conduct behavioral studies to calibrate IoU, Chamfer distance (CD) [2], and Earth Mover’s distance (EMD) [46] on how well they reflect human perception.

5.1. Definitions

The definition of IoU is straightforward. For Chamfer distance (CD) and Earth Mover’s distance (EMD), we first convert voxels to point clouds, and then compute CD and EMD between pairs of point clouds.

| Dataset | Chairs: IoU | Chairs: Match? | Sofas: IoU | Sofas: Match? |
|---|---|---|---|---|
| PASCAL 3D+ [65] | 0.514 | 0.00 | 0.813 | 0.00 |
| ObjectNet3D [64] | 0.570 | 0.16 | 0.773 | 0.08 |
| IKEA [39] | 0.748 | 1.00 | 0.918 | 1.00 |
| Pix3D (ours) | 0.835 | 1.00 | 0.926 | 1.00 |

Table 2: Spearman’s rank correlation coefficients between different metrics. IoU, EMD, and CD have a correlation coefficient of 0.32, 0.43, and 0.49 with human judgments, respectively.

Voxels to a point cloud. We first extract the isosurface of each predicted voxel using the Lewiner marching cubes [38] algorithm. In practice, we use 0.1 as a universal surface value for extraction. We then uniformly sample points on the surface meshes and create the densely sampled point clouds. Finally, we randomly sample 1,024 points from each point cloud and normalize them into a unit cube for distance calculation.

Chamfer distance (CD). The Chamfer distance (CD) between $S_{1},S_{2}\subseteq\mathbb{R}^{3}$ is defined as

$$
\mathrm{CD}(S_{1},S_{2})=\frac{1}{|S_{1}|}\sum_{x\in S_{1}}\min_{y\in S_{2}}\|x-y\|_{2}+\frac{1}{|S_{2}|}\sum_{y\in S_{2}}\min_{x\in S_{1}}\|x-y\|_{2}.
$$

For each point in each cloud, CD finds the nearest point in the other point set, and sums the distances up. CD has been used in recent shape retrieval challenges [68].
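
A direct, brute-force reading of the CD definition is sketched below. It is meant only to make the formula concrete for small point sets given as lists of coordinate tuples; the function name is hypothetical, and practical implementations typically use vectorized or spatially indexed nearest-neighbor search instead.

```python
import math


def chamfer_distance(s1, s2):
    """Chamfer distance between two point sets (lists of coordinate tuples)."""

    def mean_nearest(src, dst):
        # Average distance from each point in src to its nearest point in dst
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return mean_nearest(s1, s2) + mean_nearest(s2, s1)
```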

| | IoU | EMD | CD | Human |
|---|---|---|---|---|
| IoU | 1 | 0.55 | 0.60 | 0.32 |
| EMD | 0.55 | 1 | 0.78 | 0.43 |
| CD | 0.60 | 0.78 | 1 | 0.49 |
| Human | 0.32 | 0.43 | 0.49 | 1 |

Earth Mover's distance (EMD). We follow the definition of EMD in Fan et al. [12]. The Earth Mover's distance (EMD) between $S_{1},S_{2}\subseteq\mathbb{R}^{3}$ (of equal size, i.e., $|S_{1}|=|S_{2}|$) is

$$
\mathrm{EMD}(S_{1},S_{2})=\frac{1}{|S_{1}|}\min_{\phi:S_{1}\to S_{2}}\sum_{x\in S_{1}}\|x-\phi(x)\|_{2},
$$

where $\phi:S_{1}\rightarrow S_{2}$ is a bijection. We divide EMD by the size of the point cloud for normalization. In practice, calculating the exact EMD value is computationally expensive; we instead use a $(1+\epsilon)$ approximation algorithm [3].
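
To make the definition concrete, the sketch below computes the exact EMD by enumerating every bijection. This is not the $(1+\epsilon)$ approximation algorithm mentioned above: the enumeration is factorial in the number of points, so it is only usable for tiny point sets, and the function name is hypothetical.

```python
import math
from itertools import permutations


def emd_bruteforce(s1, s2):
    """Exact EMD between equal-size point sets by trying every bijection."""
    if len(s1) != len(s2):
        raise ValueError("point sets must have equal size")
    # Each permutation of s2 corresponds to one bijection phi: s1 -> s2
    best = min(
        sum(math.dist(x, y) for x, y in zip(s1, perm))
        for perm in permutations(s2)
    )
    return best / len(s1)  # normalize by the point-cloud size
```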

5.2. Experiments

We then conduct two user studies to compare these metrics and benchmark how they capture human perception.

Which one looks better? We run three shape reconstruction algorithms (3D-R2N2 [9], DRC [60], and 3D-VAE-GAN [63]) on 200 randomly selected images of chairs. We then, for each image and every pair of its three reconstructions, ask three AMT workers to choose the one that looks closer to the object in the image. We also compute how each pair of objects ranks in each metric. Finally, we calculate the Spearman's rank correlation coefficients between different metrics (i.e., IoU, EMD, CD, and human perception). Table 2 suggests that EMD and CD correlate better with human ratings.
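
For readers unfamiliar with the statistic, Spearman's rank correlation is the Pearson correlation of the ranks of two score lists. The sketch below shows only the coefficient itself, with ties given average ranks; how the pairwise human choices are turned into scores is not specified in the text, so that step is left out, and all function names are hypothetical.

```python
def average_ranks(values):
    """1-based ranks of values, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def spearman(a, b):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(a), average_ranks(b))
```

Note that IoU is a similarity (higher is better) while CD and EMD are distances (lower is better), so one of the two would need its sign flipped before such a comparison with human preference.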

How good is it? We randomly select 400 images, and show each of them to 15 AMT workers, together with the voxel prediction by DRC [60] and the ground truth shape. We then ask them to rate the reconstruction, on a scale of 1 to 7, based on how similar it is to the ground truth. The scatter plot in Figure 7 suggests that CD and EMD have higher Pearson’s coefficients with human responses.

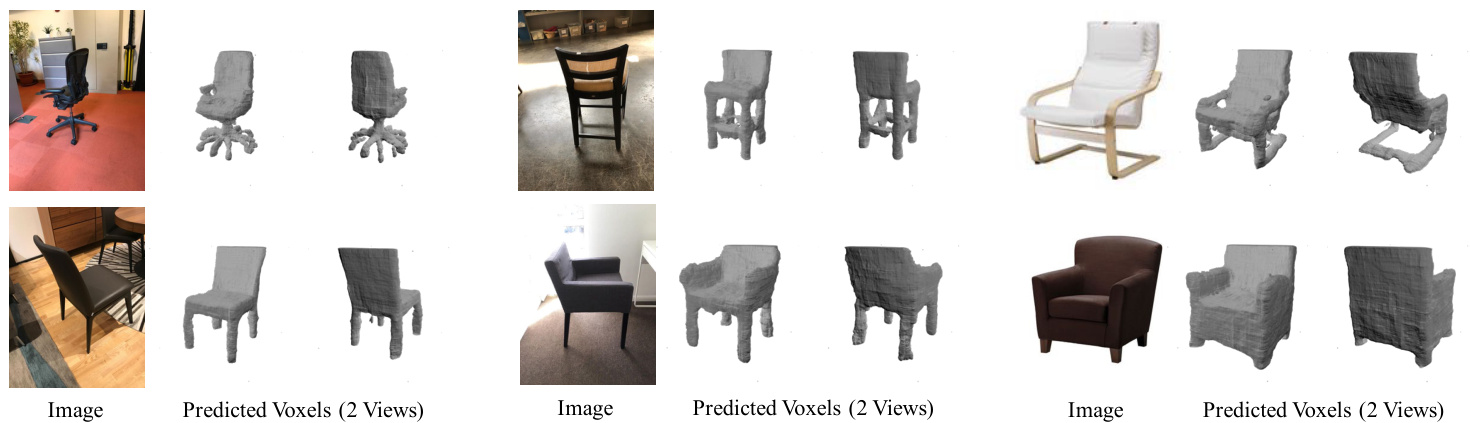

Figure 8: Results on 3D reconstructions of chairs. We show two views of the predicted voxels for each example.

| Method | IoU | EMD | CD |
|---|---|---|---|
| 3D-R2N2 [9] | 0.136 | 0.211 | 0.239 |
| PSGN [12] | N/A | 0.216 | 0.200 |
| 3D-VAE-GAN [63] | 0.171 | 0.176 | 0.182 |
| DRC [60] | 0.265 | 0.144 | 0.160 |
| MarrNet* [61] | 0.231 | 0.136 | 0.144 |
| AtlasNet [18] | N/A | 0.128 | 0.125 |
| Ours (w/o Pose) | 0.267 | 0.124 | 0.124 |
| Ours (w/ Pose) | 0.282 | 0.118 | 0.119 |

Table 3: Results on 3D shape reconstruction. Our model achieves the highest IoU and the lowest EMD and CD. We also compare our full model with a variant that does not have the view estimator. Results show that multi-task learning helps boost its performance. As MarrNet and PSGN predict viewer-centered shapes, while the other methods are object-centered, we rotate their reconstructions into the canonical view using ground truth pose annotations before evaluation.

6. Approach

Pix3D serves as a benchmark for shape modeling tasks including reconstruction, retrieval, and pose estimation. Here, we design a new model that simultaneously performs shape reconstruction and pose estimation, and evaluate it on Pix3D.

Our model is an extension of MarrNet [61], both of which use 2.5D sketches (the object's depth, surface normals, and silhouette) as an intermediate representation. It contains four modules: (1) a 2.5D sketch estimator that predicts the depth, surface normals, and silhouette of the object; (2) a 2.5D sketch encoder that encodes the 2.5D sketches into a low-dimensional latent vector; (3) a 3D shape decoder and (4) a view estimator that decode a latent vector into a 3D shape and camera parameters, respectively. Different from MarrNet [61], our model has an additional branch for pose estimation. We briefly describe the modules below; please refer to the supplementary material for more details.

2.5D sketch estimator. The first module takes an RGB ima