AudioCLIP: Extending CLIP to Image, Text and Audio⋆

Abstract In the past, the rapidly evolving field of sound classification greatly benefited from the application of methods from other domains. Today, we observe a trend toward fusing domain-specific tasks and approaches, which provides the community with outstanding new models. In this work, we present an extension of the CLIP model that handles audio in addition to text and images. Our proposed model incorporates the ESResNeXt audio model into the CLIP framework using the AudioSet dataset. This combination enables the proposed model to perform bimodal and unimodal classification and querying, while retaining CLIP's ability to generalize to unseen datasets in a zero-shot inference fashion. AudioCLIP achieves new state-of-the-art results in the Environmental Sound Classification (ESC) task, outperforming other approaches with accuracies of 90.07% on the UrbanSound8K and 97.15% on the ESC-50 datasets. Further, it sets new baselines in the zero-shot ESC task on the same datasets (68.78% and 69.40%, respectively).

Finally, we also assess the cross-modal querying performance of the proposed model, as well as the influence of full and partial training on the results. For the sake of reproducibility, our code is publicly available.

Keywords: Multimodal learning · Audio classification · Zero-shot inference.

1 Introduction

Recent advances in the sound classification community have produced many powerful audio-domain models that demonstrate impressive results. The combination of widely known datasets, such as AudioSet [7], UrbanSound8K [25] and ESC-50 [19], with domain-specific and inter-domain techniques has driven the rapid development of audio-dedicated methods and approaches [15,10,30].

Previously, researchers focused mostly on the classification task using the audible modality exclusively. In recent years, however, multimodal approaches to audio-related tasks have become increasingly popular [14,2,34]. For audio-specific tasks, this meant using either the textual or the visual modality in addition to sound. While combining audio with one additional modality is not rare, combining more than two modalities is still uncommon in the audio domain. At the same time, the limited amount of high-quality labeled data constrains the development of the field in both the unimodal and the multimodal direction. This lack of data has challenged researchers and sparked a cautious growth of interest in zero- and few-shot learning approaches based on contrastive learning methods that rely on textual descriptions [13,32,33].

In our work, we propose an approach that integrates a high-performance audio model, ESResNeXt [10], into a contrastive text-image model, namely CLIP [21], thus obtaining a tri-modal hybrid architecture. The base CLIP model demonstrates impressive performance and strong domain adaptation capabilities, which are referred to as “zero-shot inference” in the original paper [21]. To stay consistent with the CLIP terminology, we use the term “zero-shot” in the sense defined in [21].

As we will show, the joint use of three modalities during training allows the model to outperform previous models on the environmental sound classification task, extends the zero-shot capabilities of the base architecture to the audio modality, and introduces the ability to perform cross-modal querying using text, image and audio in any combination.

The remainder of this paper is organized as follows. In Section 2, we discuss current approaches to handling audio both on its own and jointly with additional modalities. Then, we describe the models that serve as the basis of our proposed hybrid architecture in Section 3, its training and evaluation in Section 4, and the obtained results in Section 5. Finally, we summarize our work and highlight follow-up research directions in Section 6.

2 Related Work

In this section, we provide an overview of the audio-related tasks and approaches that intersect in our work. Beginning with a description of the environmental sound classification task, we connect it to zero-shot classification by describing existing methods for handling multiple modalities in a single model.

The environmental sound classification task consists of assigning correct labels to samples belonging to sound classes that surround us in everyday life (e.g., “alarm clock”, “car horn”, “jackhammer”, “mouse clicking”, “cat”). To solve this task, different approaches were proposed, including one-dimensional [27,28] or two-dimensional Convolutional Neural Networks (CNN) operating on static [18,24,32,9,15,17,33,8,30] or trainable [23,10] time-frequency transformations of raw audio. While the first approaches relied on task-specific model designs, later results confirmed that domain adaptation from the visual domain is beneficial [9,17,10]. However, the visual modality was used sequentially, meaning that only one modality was processed at a time.

The joint use of several modalities first appeared in video-related tasks [14,34,6] and was later adapted to the sound classification task [13,31]. However, despite their multimodal design, such approaches used at most two modalities simultaneously, while recent studies suggest that using more modalities is beneficial [2,1].

The multimodal approaches described above share the common key idea of contrastive learning. This technique belongs to the branch of self-supervised learning that, among other benefits, helps to overcome the lack of high-quality labeled data. This makes it possible to apply contrastive learning-based training to zero-shot classification tasks [13,32,33].

In summary, our proposed model employs contrastive learning to train on the textual, visual and audible modalities, is able to perform modality-specific classification or, more generally, querying, and is implicitly able to generalize to previously unseen datasets in a zero-shot inference setup.

3 Model

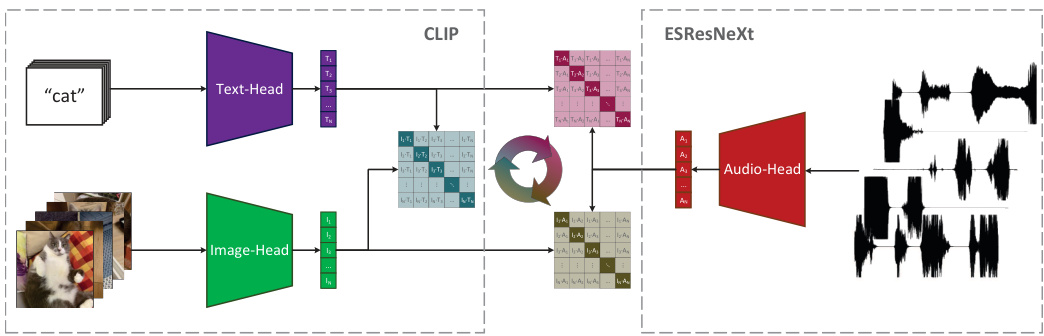

In this section, we describe the key components that make up the proposed model and how it handles its input. At a high level, our hybrid architecture combines a ResNet-based CLIP model [21] for the visual and textual modalities with an ESResNeXt model [10] for the audible modality, as shown in Figure 1.

Figure 1. Overview of the proposed AudioCLIP model. On the left, the workflow of the text-image model CLIP is shown. By training the text- and image-heads jointly, CLIP learns to align representations of the same concept in a shared multimodal embedding space. On the right, the audio model ESResNeXt is shown. Here, the added audible modality interacts with the two others, enabling the model to handle three modalities simultaneously.

3.1 CLIP

Conceptually, the CLIP model consists of two subnetworks: the text and image encoding heads. Both parts of the CLIP model were pre-trained jointly under natural language supervision [21]. This training setup enabled the model to generalize its classification ability to image samples from previously unseen datasets, using only the provided labels and without any additional fine-tuning.

For the text encoding part, a slightly modified [22] Transformer [29] architecture was used [21]. For the chosen 12-layer model, the input text was represented by a lower-cased byte pair encoding (BPE) with a vocabulary size of 49,408 [21]. Due to computational constraints, the maximum sequence length was capped at 76 tokens [21].

For the image encoding part of the CLIP model, two different architectures were considered. One was a Vision Transformer (ViT) [21,5], whose architecture is similar to that of the text-head. The other was a modified ResNet-50 [11], whose global average pooling layer was replaced by a QKV-attention layer [21]. As mentioned at the beginning of Section 3, for the proposed hybrid model we chose the ResNet-based variant of the CLIP model because of its lower computational complexity compared to the ViT-based one.
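
To make the difference from global average pooling concrete, the following NumPy sketch pools a convolutional feature map with attention instead of a plain mean. It is a simplified, single-head variant built on our own assumptions: the query is the spatial mean (i.e., what global average pooling would return), the keys and values come from the mean token plus all spatial positions, the weights are random, positional embeddings are omitted, and `attention_pool` is a hypothetical name. CLIP's actual layer is multi-head and fully learned.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Numerically stable softmax.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def attention_pool(features, w_q, w_k, w_v, w_o):
    """Pool a (C, H, W) feature map into one vector via single-head QKV attention.

    Simplified sketch (assumption): no positional embeddings, one head.
    """
    c = features.shape[0]
    tokens = features.reshape(c, -1).T                      # (H*W, C)
    mean_token = tokens.mean(axis=0, keepdims=True)         # (1, C), the GAP output
    tokens = np.concatenate([mean_token, tokens], axis=0)   # (H*W + 1, C)

    q = mean_token @ w_q                                    # (1, d) single query
    k = tokens @ w_k                                        # (H*W + 1, d)
    v = tokens @ w_v                                        # (H*W + 1, d)
    weights = softmax(q @ k.T / np.sqrt(k.shape[1]))        # (1, H*W + 1), sums to 1
    return (weights @ v @ w_o)[0]                           # (out_dim,)


rng = np.random.default_rng(0)
C, H, W, D, OUT = 64, 7, 7, 32, 16
fmap = rng.normal(size=(C, H, W))
w_q, w_k, w_v = (rng.normal(scale=C ** -0.5, size=(C, D)) for _ in range(3))
w_o = rng.normal(scale=D ** -0.5, size=(D, OUT))
pooled = attention_pool(fmap, w_q, w_k, w_v, w_o)
print(pooled.shape)  # (16,)
```

Because the attention weights sum to one, a spatially constant feature map gives the same result as averaging followed by the value and output projections; for non-uniform maps, the layer can weight informative positions more strongly than a plain mean.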

Given an input batch of $N$ text-image pairs, both CLIP subnetworks produce the corresponding embeddings, which are mapped linearly into a multimodal embedding space of size 1024 [21]. In such a setup, CLIP learns to maximize the cosine similarity between matching textual and visual representations while minimizing it between non-matching ones, which is achieved using a symmetric cross-entropy loss over the similarity scores [21].
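
The symmetric cross-entropy objective can be sketched in a few lines of NumPy. This is an illustration under our own assumptions, not CLIP's training code: the temperature is fixed through a hypothetical `logit_scale` argument (CLIP learns it during training), and the embeddings are random stand-ins for the outputs of the two heads.

```python
import numpy as np


def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    # Numerically stable log-softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def l2_normalize(x: np.ndarray) -> np.ndarray:
    # Scale each row to unit length so dot products become cosine similarities.
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def symmetric_contrastive_loss(emb_a: np.ndarray, emb_b: np.ndarray,
                               logit_scale: float = 100.0) -> float:
    """Symmetric cross-entropy over cosine similarities of N matching pairs.

    Row i of emb_a and row i of emb_b form a positive pair; every other
    combination in the batch acts as a negative. logit_scale is fixed here
    (assumption), whereas CLIP learns it.
    """
    logits = logit_scale * l2_normalize(emb_a) @ l2_normalize(emb_b).T  # (N, N)
    idx = np.arange(logits.shape[0])
    loss_a_to_b = -log_softmax(logits, axis=1)[idx, idx].mean()  # rows: find matching b
    loss_b_to_a = -log_softmax(logits, axis=0)[idx, idx].mean()  # columns: find matching a
    return float((loss_a_to_b + loss_b_to_a) / 2)


rng = np.random.default_rng(0)
text = rng.normal(size=(8, 1024))
image_matched = text + 0.1 * rng.normal(size=text.shape)  # aligned pairs
image_random = rng.normal(size=text.shape)                 # unrelated pairs
print(symmetric_contrastive_loss(text, image_matched)
      < symmetric_contrastive_loss(text, image_random))   # True
```

The diagonal of the similarity matrix holds the matching pairs, so the loss is small when each text is closest to its own image and vice versa; averaging the row-wise and column-wise terms is what makes the objective symmetric.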

3.2 ESResNeXt

For the audio encoding part, we decided to use the ESResNeXt model [10], which is based on the ResNeXt-50 [3] architecture and incorporates a trainable time-frequency transformation based on complex frequency B-spline wavelets [26]. The chosen model has a moderate number of trainable parameters ($\sim30$M), while performing competitively on a large-scale audio dataset, namely AudioSet [7], and providing state-of-the-art-level classification results on the UrbanSound8K [25] and ESC-50 [19] datasets. Additionally, the ESResNeXt model supports implicit processing of multi-channel audio input and provides improved robustness against additive white Gaussian noise and sample rate decrease [10].
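
The idea of a wavelet-based time-frequency transformation can be illustrated with a small NumPy filter bank. The wavelet form below, $\sqrt{f_b}\,\mathrm{sinc}(f_b t / m)^m e^{2\pi i f_c t}$, is one commonly used parameterization of the complex frequency B-spline wavelet; the exact parameterization, kernel length and filter layout in ESResNeXt may differ. The function names and parameter values are hypothetical, and in a trainable transform the center frequencies and bandwidths would be learned rather than fixed.

```python
import numpy as np


def fbsp_wavelet(t, fc: float, fb: float, m: int = 1):
    """Complex frequency B-spline wavelet sqrt(fb) * sinc(fb*t/m)**m * exp(2j*pi*fc*t).

    t is in seconds, fc (center frequency) and fb (bandwidth) in Hz, m is the
    spline order. np.sinc is the normalized sinc, sin(pi*x) / (pi*x).
    """
    return np.sqrt(fb) * np.sinc(fb * t / m) ** m * np.exp(2j * np.pi * fc * t)


def wavelet_filterbank(signal, sample_rate, center_freqs, bandwidths,
                       kernel_size=1023, m=1):
    """Convolve a mono signal with a bank of fbsp wavelets; return magnitudes.

    In a trainable transform, center_freqs and bandwidths would be learned
    parameters (assumption); here they are fixed for illustration.
    """
    t = (np.arange(kernel_size) - kernel_size // 2) / sample_rate
    out = np.empty((len(center_freqs), len(signal)))
    for i, (fc, fb) in enumerate(zip(center_freqs, bandwidths)):
        kernel = fbsp_wavelet(t, fc, fb, m)
        out[i] = np.abs(np.convolve(signal, kernel, mode="same"))
    return out  # (num_filters, num_samples) time-frequency magnitudes


sr = 16_000
time = np.arange(sr // 2) / sr                    # 0.5 s
tone = np.sin(2 * np.pi * 1000.0 * time)          # 1 kHz test tone
bank = wavelet_filterbank(tone, sr, center_freqs=[250.0, 1000.0, 4000.0],
                          bandwidths=[100.0, 100.0, 100.0])
print(bank.shape)                                 # (3, 8000)
print(bank[:, 2000:6000].mean(axis=1).argmax())   # 1, i.e. the 1 kHz filter
```

Each filter acts as a band-pass centered at `fc` with a width controlled by `fb`, so stacking the magnitude responses yields a spectrogram-like representation whose frequency layout could be adjusted by gradient descent.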

3.3 Hybrid Model – AudioCLIP

In this work, we introduce an additional, audible modality into the novel CLIP framework, which naturally extends the existing model. We consider the newly added modality to be as important as the original ones. This modification became possible through the use of the AudioSet [7] dataset, which we found suitable for this purpose, as described in Section 4.1.

Thus, the proposed AudioCLIP model incorporates three subnetworks: the text-, image- and audio-heads. In addition to the existing text-to-image similarity loss term, two new ones are introduced: text-to-audio and image-to-audio. The proposed model is able to process all three modalities simultaneously, as well as any pair of them.
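
One way to express the three loss terms, and the ability to work with any subset of modalities, is to sum a CLIP-style pairwise loss over every pair of modalities present in a batch. The sketch below assumes an unweighted sum and a fixed `logit_scale`; both choices, as well as the function names, are ours and hypothetical.

```python
from itertools import combinations

import numpy as np


def log_softmax(x, axis):
    # Numerically stable log-softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def pairwise_loss(emb_a, emb_b, logit_scale=100.0):
    # Symmetric cross-entropy over cosine similarities (same form as CLIP's loss).
    a = emb_a / np.linalg.norm(emb_a, axis=1, keepdims=True)
    b = emb_b / np.linalg.norm(emb_b, axis=1, keepdims=True)
    logits = logit_scale * a @ b.T
    idx = np.arange(len(logits))
    return float(-(log_softmax(logits, 1)[idx, idx].mean()
                   + log_softmax(logits, 0)[idx, idx].mean()) / 2)


def multimodal_loss(embeddings: dict, logit_scale=100.0) -> float:
    """Sum the pairwise loss over every pair of modalities in the batch.

    With text, image and audio this yields the text-image, text-audio and
    image-audio terms; with two modalities a single term remains.
    Equal weighting of the terms is an assumption.
    """
    names = sorted(embeddings)
    return sum(pairwise_loss(embeddings[x], embeddings[y], logit_scale)
               for x, y in combinations(names, 2))


rng = np.random.default_rng(0)
batch = {name: rng.normal(size=(4, 1024)) for name in ("text", "image", "audio")}
print(len(list(combinations(sorted(batch), 2))))  # 3 loss terms
```

Because the pairs are derived from whichever modalities are supplied, the same function covers the tri-modal case and any bimodal one without special-casing.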

4 Experimental Setup

In this section, we describe the datasets used, the data augmentation methods applied, the training process and its hyper-parameters, and finally the performance evaluation methods.

4.1 Datasets

In this work, five image, audio and mixed datasets were used, either directly or indirectly. Here, we describe these datasets and define their roles in the training and evaluation processes.

Composite CLIP Dataset: In order to train CLIP, its authors constructed a new dataset. It consists of roughly 400M text-image pairs based on a set of $\sim$500k text-based queries, with each query covering up to $\sim$20k pairs [21]. In this work, the CLIP dataset was used indirectly, as the source of the initial weights of the text- and image-heads (CLIP model).

ImageNet: ImageNet is a large-scale visual dataset described in [4] that contains more than 1M images across 1,000 classes. For the purposes of this work, the ImageNet dataset served as the source of the initial weights of the ESResNeXt model and as a target for the zero-shot inference task.

AudioSet: Proposed in [7], the AudioSet dataset provides a large-scale collection of audible data ($\sim$1.8M training samples and a $\sim$20k-sample evaluation set) organized into 527 classes in a non-exclusive way. Each sample is a snippet of up to 10 s from a YouTube video, defined by the corresponding video ID and timings.

For this work, we acquired video frames in addition to audio tracks. Thus, the AudioSet dataset became the glue between the vanilla CLIP framework and our tri-modal extension on top of it. In particular, audio tracks and the respective class labels were used to perform image-to-audio transfer learning for the ESResNeXt model, and then, the extracted frames in addition to audio and class names served as an input for the hybrid AudioCLIP model.

During training, ten equally spaced frames were extracted from each video recording, and one of them was picked uniformly at random and passed through the AudioCLIP model. In the evaluation phase, the same extraction procedure was performed, except that only the central frame was presented to the model. Performance metrics are reported on the evaluation set of the AudioSet dataset.
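
The frame selection described above can be sketched as follows. The exact spacing rule and the choice of "central" frame for an even number of frames are not specified in the text, so both are our assumptions, as are the function names and the example frame count.

```python
from __future__ import annotations

import random


def equally_spaced_indices(num_video_frames: int, num_samples: int = 10) -> list[int]:
    """Indices of num_samples frames spread evenly from the first to the last frame."""
    step = (num_video_frames - 1) / (num_samples - 1)
    return [round(i * step) for i in range(num_samples)]


def select_frame(indices: list[int], training: bool, rng: random.Random) -> int:
    if training:
        return rng.choice(indices)  # uniform random choice among the extracted frames
    # "Central" frame; with an even count we take the upper middle one (assumption).
    return indices[len(indices) // 2]


idx = equally_spaced_indices(250)   # e.g. a 10 s clip at 25 fps (assumption)
print(idx[0], idx[-1])              # 0 249
print(select_frame(idx, training=False, rng=random.Random(0)) == idx[5])  # True
```

Random selection during training acts as a mild visual augmentation, while the fixed central frame keeps evaluation deterministic.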

UrbanSound8K: The UrbanSound8K dataset provides 8,732 monaural and binaural audio tracks sampled at frequencies in the range of 16 to 48 kHz; each track is no longer than 4 s. The audio recordings are organized into ten classes: “air conditioner”, “car horn”, “children playing”, “dog bark”, “drilling”, “engine idling”, “gun shot”, “jackhammer”, “siren”, and “street music”. To ensure correct evaluation, the UrbanSound8K dataset was split by its authors into 10 non-overlapping folds [25], which we used in this work.

On this dataset, we performed zero-shot inference using the AudioCLIP model trained on AudioSet. In addition, the audio encoding head was fine-tuned on the UrbanSound8K dataset in both a standalone and a cooperative fashion, and the classification performance in both setups was assessed.
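
Zero-shot inference reduces to a nearest-neighbor search in the shared embedding space: each class name is embedded by the text-head, each clip by the audio-head, and the class with the highest cosine similarity wins. In the sketch below, random vectors stand in for the head outputs (hypothetical data), and we use the bare class names as text queries; whether a prompt template is applied is not specified here.

```python
import numpy as np

URBANSOUND8K_CLASSES = [
    "air conditioner", "car horn", "children playing", "dog bark", "drilling",
    "engine idling", "gun shot", "jackhammer", "siren", "street music",
]


def l2_normalize(x: np.ndarray) -> np.ndarray:
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def zero_shot_predict(audio_emb: np.ndarray, class_text_emb: np.ndarray) -> np.ndarray:
    """Return, for each clip, the index of the class name with the highest cosine similarity."""
    similarity = l2_normalize(audio_emb) @ l2_normalize(class_text_emb).T  # (clips, classes)
    return similarity.argmax(axis=1)


# Stand-ins for the outputs of the text- and audio-heads (hypothetical data).
rng = np.random.default_rng(0)
class_text_emb = rng.normal(size=(len(URBANSOUND8K_CLASSES), 1024))
audio_emb = class_text_emb[[3, 0, 8]] + 0.1 * rng.normal(size=(3, 1024))
print([URBANSOUND8K_CLASSES[i] for i in zero_shot_predict(audio_emb, class_text_emb)])
# ['dog bark', 'air conditioner', 'siren']
```

No parameters are updated in this procedure, which is what distinguishes it from the standalone and cooperative fine-tuning setups.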

ESC-50: The ESC-50 dataset provides 2,000 single-channel, 5 s long audio tracks sampled at 44.1 kHz. As the name suggests, the dataset consists of 50 classes that can be divided into 5 major groups: animal, natural and water, non-speech human, interior, and exterior sounds. To ensure correct evaluation, the ESC-50 dataset was split by its author into 5 non-overlapping folds [19], which we used in this work.
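
Evaluation on predefined folds follows the usual leave-one-fold-out scheme: each fold serves once as the test set while the remaining folds are used for training, and the per-fold accuracies are averaged. The sketch below shows only this bookkeeping. `evaluate_fold` is a hypothetical user-supplied callback, and the dummy version merely checks that train and test never overlap.

```python
import numpy as np


def cross_validate(fold_ids, evaluate_fold):
    """Leave-one-fold-out evaluation over predefined folds.

    evaluate_fold(train_mask, test_mask) is a user-supplied callback that trains
    on the samples in train_mask and returns the accuracy on those in test_mask.
    Returns the mean accuracy and the per-fold accuracies.
    """
    fold_ids = np.asarray(fold_ids)
    scores = []
    for fold in np.unique(fold_ids):
        test_mask = fold_ids == fold
        scores.append(evaluate_fold(~test_mask, test_mask))
    return float(np.mean(scores)), scores


# ESC-50-like layout: 2,000 clips in 5 equally sized folds.
esc50_folds = np.repeat(np.arange(1, 6), 400)


def dummy_evaluate(train_mask, test_mask):
    assert not np.any(train_mask & test_mask)  # folds never overlap
    return test_mask.sum() / len(test_mask)    # placeholder "accuracy"


mean_acc, per_fold = cross_validate(esc50_folds, dummy_evaluate)
print(len(per_fold), round(mean_acc, 3))  # 5 0.2
```

The same function applies to UrbanSound8K by passing its ten fold labels instead.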

On this dataset, we performed zero-shot inference using the AudioCLIP model trained on AudioSet. In addition, the audio encoding head was fine-tuned on the ESC-50 dataset in both a standalone and a cooperative fashion, and the classification performance in both setups was assessed.

4.2 Data Augmentation

Compared to the composite CLIP dataset (Section 4.1), the audio datasets provide at least two orders of magnitude fewer training samples, which makes overfitting an issue, especially for the UrbanSound8K and ESC-50 datasets. To address this challenge, we applied several data augmentation techniques, which we describe in this section.

Time Scaling: A simultaneous change of a track's duration and pitch is achieved by random scaling along the time axis. This kind of augmentation combines two computationally expensive ones, namely time stretching and pitch shifting. As a faster alternative to the combination of these techniques, time scaling with random factors drawn uniformly from the range [−1.5, 1.5] provides a lightweight yet powerful method to fight overfitting [9].
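
Time scaling can be implemented as plain resampling of the waveform. If the result is played back at the original sample rate, its duration and pitch change together. The NumPy sketch below uses linear interpolation. Since a negative length ratio has no direct meaning for resampling, it interprets the stated range as a symmetric log-scale range and draws factors log-uniformly from [1/1.5, 1.5]. This interpretation, the interpolation method and the function names are our assumptions.

```python
import numpy as np


def time_scale(signal: np.ndarray, factor: float) -> np.ndarray:
    """Resample a 1-D waveform to `factor` times its length by linear interpolation.

    Played back at the original sample rate, factor > 1 makes the track longer
    and lower-pitched, factor < 1 shorter and higher-pitched.
    """
    n_out = max(1, int(round(len(signal) * factor)))
    positions = np.linspace(0, len(signal) - 1, n_out)
    return np.interp(positions, np.arange(len(signal)), signal)


def random_time_scale(signal: np.ndarray, rng: np.random.Generator,
                      max_factor: float = 1.5) -> np.ndarray:
    # Assumption: the factor is drawn log-uniformly from [1/max_factor, max_factor].
    factor = max_factor ** rng.uniform(-1.0, 1.0)
    return time_scale(signal, factor)


ramp = np.arange(100, dtype=float)
print(len(time_scale(ramp, 1.5)), len(time_scale(ramp, 0.5)))  # 150 50
```

A single interpolation pass is far cheaper than separate phase-vocoder time stretching and pitch shifting, which is the motivation given above.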

Time Inversion: Inverting a track along its time axis is analogous to randomly flipping an image, an augmentation technique widely used in the visual domain. In this work, random time inversion with a probability of 0.5 was applied to the training samples, similarly to [10].
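
A minimal sketch of random time inversion, assuming waveforms stored as NumPy arrays with time on the last axis so that multi-channel input is handled the same way; the function name is hypothetical.

```python
import numpy as np


def random_time_inversion(signal: np.ndarray, rng: np.random.Generator,
                          p: float = 0.5) -> np.ndarray:
    """With probability p, reverse the waveform along its last (time) axis."""
    if rng.random() < p:
        return signal[..., ::-1].copy()
    return signal


rng = np.random.default_rng(0)
stereo = np.array([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])  # (channels, samples)
print(random_time_inversion(stereo, rng, p=1.0))
```

Reversing all channels together keeps inter-channel timing consistent, mirroring how a horizontal image flip is applied to all color channels at once.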

Random Crop and Padding: Because track durations must be aligned before the samples are processed by the model, we randomly cropped samples longer than the longest track of the non-augmented dataset and randomly padded shorter ones. During the evaluation phase, the random operation was replaced by its centered counterpart.
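
The crop-or-pad step can be sketched as a single function that uses a random offset during training and a centered one during evaluation. Zero-padding, the time-on-last-axis layout and the function name are our assumptions.

```python
import numpy as np


def crop_or_pad(signal: np.ndarray, target_len: int, rng=None) -> np.ndarray:
    """Crop or zero-pad the last (time) axis to target_len.

    A random offset is used when rng is given (training); otherwise the
    operation is centered (evaluation). Zero-padding is an assumption.
    """
    n = signal.shape[-1]
    if n == target_len:
        return signal
    if n > target_len:
        excess = n - target_len
        start = rng.integers(0, excess + 1) if rng is not None else excess // 2
        return signal[..., start:start + target_len]
    missing = target_len - n
    left = rng.integers(0, missing + 1) if rng is not None else missing // 2
    pad = [(0, 0)] * (signal.ndim - 1) + [(left, missing - left)]
    return np.pad(signal, pad)


print(crop_or_pad(np.arange(10), 6))  # [2 3 4 5 6 7]
```

In training, `target_len` would be the length of the longest track in the non-augmented dataset, so cropping only occurs after augmentations such as time scaling have lengthened a sample.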

Random Noise: Adding random noise has been shown to help overcome overfitting in visual-related tasks [12]. Also, the robustness evaluation of the ESResNeXt model suggested that the chosen audio encoding model is more robust against additive white Gaussian noise (AWGN) [10]. In this work, we extended the set of data augmentation techniques with AWGN, whose signal-to-noise ratio (SNR) varied uniformly at random from 10.0 dB to 120 dB. The probability of adding noise was set to 0.25.
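
Adding AWGN at a prescribed SNR amounts to scaling the noise variance to the signal power: $P_\text{noise} = P_\text{signal} / 10^{\text{SNR}/10}$. The NumPy sketch below uses the probability and SNR range stated above. The function names are hypothetical, and measuring signal power as the mean square over the whole clip is our assumption.

```python
import numpy as np


def add_awgn(signal: np.ndarray, snr_db: float, rng: np.random.Generator) -> np.ndarray:
    """Add white Gaussian noise so that the signal-to-noise ratio equals snr_db."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise


def random_awgn(signal, rng, p=0.25, snr_range=(10.0, 120.0)):
    """With probability p, add AWGN at an SNR drawn uniformly from snr_range (in dB)."""
    if rng.random() < p:
        return add_awgn(signal, rng.uniform(*snr_range), rng)
    return signal


rng = np.random.default_rng(0)
t = np.arange(100_000) / 44_100
clean = np.sin(2 * np.pi * 440.0 * t)
noisy = add_awgn(clean, snr_db=10.0, rng=rng)
measured = 10 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
print(round(measured, 1))
```

At the upper end of the range (120 dB), the added noise is practically inaudible, so the augmentation spans everything from strongly corrupted to virtually clean samples.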

4.3 Training

The entire training process was divided into consecutive steps, which made the acquisition of the final AudioCLIP model reliable and ensured its high performance. As described in Section 3.1, we took a ResNet-based CLIP text-image model pre-trained on its own dataset (Section 4.1) [21] and combined it with the ESResNeXt audio model, which was initialized with ImageNet weights and then pre-trained on the AudioSet dataset [10].

While the CLIP model was already pre-trained on text-image pairs, we decided to first perform an extended AudioSet pre-training of the audio-head, as it improved the performance of the base ESResNeXt model (Table 1), and then to continue training in a tri-modal setting, combining it with the two other heads. Here, the whole AudioCLIP model was trained jointly on the AudioSet dataset using audio snippets, the corresponding video frames and the assigned textual labels.

Finally, the audio-head of the trained AudioCLIP model was fine-tuned on the UrbanSound8K and ESC-50 datasets in a bimodal manner (audio and text), using sound recordings and the corresponding textual labels.

The trained AudioCLIP model and its audio encoding head were evaluated on the ImageNet dataset as well as on three audio datasets: AudioSet, UrbanSound8K, and ESC-50.

Audio-Head Pre-Training

The initialization of the audio-head's parameters was split into two steps. First, the ImageNet-initialized ESResNeXt model was trained on the AudioSet dataset in a standalone fashion. Then, the pre-trained audio-head was incorporated into the AudioCLIP model and trained further under the cooperative supervision of the text- and image-heads.

Standalone: The first pre-training step used the AudioSet dataset for weight initialization. Here, the ESResNeXt model was trained using the same setup as described in [10], except for the number of training epochs. In this work, we increased the training time, which resulted in better evaluation performance on the AudioSet dataset and on the subsequent downstream tasks, as described in Section 5.1, where this effect is quantified separately.

Cooperative: The further pre-training of the audio-head was done jointly with the text- and image-heads. Here, the audio-head pre-trained in the standalone step was modified slightly by replacing its classification layer with a randomly initialized one whose number of output neurons equals the size of CLIP's embedding space.
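
The layer swap can be illustrated with plain NumPy arrays. The dictionary layout of the audio-head, the backbone feature width `FEATURE_DIM = 2048`, and the uniform initialization are our assumptions, and the function names are hypothetical. Only `EMBED_DIM = 1024` and the 527 AudioSet classes come from the text.

```python
import numpy as np

EMBED_DIM = 1024          # size of CLIP's multimodal embedding space (Section 3.1)
FEATURE_DIM = 2048        # assumed width of the audio backbone's pooled features
NUM_AUDIOSET_CLASSES = 527


def replace_classifier(head: dict, out_dim: int, rng: np.random.Generator) -> dict:
    """Return a copy of a (hypothetical) audio-head with a freshly initialized final layer.

    The backbone entries are kept as they are; only the linear classification
    layer is swapped for one with out_dim outputs.
    """
    in_dim = head["fc_weight"].shape[1]
    bound = 1.0 / np.sqrt(in_dim)  # simple uniform initialization (assumption)
    new_head = dict(head)
    new_head["fc_weight"] = rng.uniform(-bound, bound, size=(out_dim, in_dim))
    new_head["fc_bias"] = rng.uniform(-bound, bound, size=out_dim)
    return new_head


def project(head: dict, features: np.ndarray) -> np.ndarray:
    # Final linear layer: pooled backbone features -> class logits or embeddings.
    return features @ head["fc_weight"].T + head["fc_bias"]


rng = np.random.default_rng(0)
audioset_head = {
    "backbone": "pre-trained ESResNeXt weights (placeholder)",
    "fc_weight": rng.normal(size=(NUM_AUDIOSET_CLASSES, FEATURE_DIM)),
    "fc_bias": np.zeros(NUM_AUDIOSET_CLASSES),
}
clip_head = replace_classifier(audioset_head, EMBED_DIM, rng)
features = rng.normal(size=(4, FEATURE_DIM))
print(project(audioset_head, features).shape, project(clip_head, features).shape)
# (4, 527) (4, 1024)
```

After the swap, the audio-head outputs vectors of the same size as the text and image embeddings, so its outputs can enter the same cosine-similarity losses as the other two heads.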

In this setup, the audio-head was trained as a part of the AudioCLIP model, which made its outputs compatible with the embeddings of the vanilla CLIP model. The parameters of the two other subnetworks, namely the text- and image-heads, were frozen during the cooperative pre-training of the audio encoding head; thus, these heads served as teachers in a multimodal knowledge distillation setup.

The performance of the AudioCLIP model trained in such a fashion was assessed and is described in Section 5.

AudioCLIP Training

The joint training of the audio-head made it compatible with the vanilla CLIP model; however, the distribution of images and textual descriptions in the AudioSet dataset does not match that of the CLIP dataset. This could lead to suboptimal performance of the resulting AudioCLIP model on the target dataset as well as on downstream tasks.
