V2VNet: Vehicle-to-Vehicle Communication for Joint Perception and Prediction


Abstract. In this paper, we explore the use of vehicle-to-vehicle (V2V) communication to improve the perception and motion forecasting performance of self-driving vehicles. By intelligently aggregating the information received from multiple nearby vehicles, we can observe the same scene from different viewpoints. This allows us to see through occlusions and detect actors at long range, where the observations are very sparse or non-existent. We also show that our approach of sending compressed deep feature map activations achieves high accuracy while satisfying communication bandwidth requirements.


Keywords: Autonomous Driving, Object Detection, Motion Forecast


1 Introduction


While a world densely populated with self-driving vehicles (SDVs) might seem futuristic, these vehicles will one day soon be the norm. They will provide safer, cheaper and less congested transportation solutions for everyone, everywhere. A core component of self-driving vehicles is their ability to perceive the world. From sensor data, the SDV needs to reason about the scene in 3D, identify the other agents, and forecast how their futures might play out. These tasks are commonly referred to as perception and motion forecasting. Both strong perception and motion forecasting are critical for the SDV to plan and maneuver through traffic to get from one point to another safely.


The reliability of perception and motion forecasting algorithms has significantly improved in the past few years due to the development of neural network architectures that can reason in 3D and intelligently fuse multi-sensor data (e.g., images, LiDAR, maps) [28, 29]. Motion forecasting algorithm performance has been further improved by building good multimodal distributions [4,6,12,19] that capture diverse actor behaviour and by modelling actor interactions [3,25,36,37]. Recently, [5, 31] propose approaches that perform joint perception and motion forecasting, dubbed perception and prediction (P&P), further increasing the accuracy while being computationally more efficient than classical two-step pipelines.


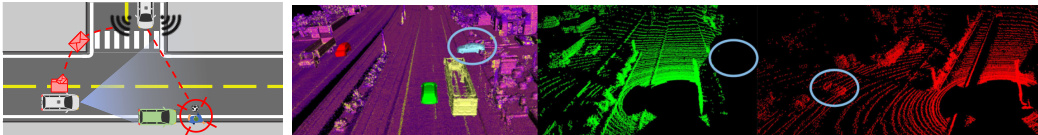

Fig. 1: Left: Safety-critical scenario of a pedestrian coming out of occlusion. V2V communication can leverage the fact that multiple self-driving vehicles see the scene from different viewpoints, and can thus see through occluders. Right: Example V2V-Sim scene. Virtual scene with an occluded actor (blue) and SDVs (red and green), together with the rendered LiDAR from each SDV in the scene.


Despite these advances, challenges remain. For example, objects that are heavily occluded or far away result in sparse observations and pose a challenge for modern computer vision systems. Failing to detect and predict the intention of these hard-to-see actors might have catastrophic consequences in safety-critical situations when there are only a few milliseconds to react: imagine the SDV driving along a road when a child chasing after a soccer ball runs into the street from behind a parked car (Fig. 1, left). This situation is difficult for both SDVs and human drivers to correctly perceive and adjust for. The crux of the problem is that the SDV and the human can only see the scene from a single viewpoint.


However, SDVs could have super-human capabilities if we equip them with the ability to transmit information and utilize the information received from nearby vehicles to better perceive the world. Then the SDV could see behind the occlusion and detect the child earlier, allowing for a safer avoidance maneuver.


In this paper, we consider the vehicle-to-vehicle (V2V) communication setting, where each vehicle can broadcast and receive information to/from nearby vehicles (within a 70m radius). Note that this broadcast range is realistic based on existing communication protocols [21]. We show that to achieve the best compromise of having strong perception and motion forecasting performance while also satisfying existing hardware transmission bandwidth capabilities, we should send compressed intermediate representations of the P&P neural network. Thus, we derive a novel P&P model, called V2VNet, which utilizes a spatially aware graph neural network (GNN) to aggregate the information received from all the nearby SDVs, allowing us to intelligently combine information from different points in time and viewpoints in the scene.


To evaluate our approach, we require a dataset where multiple self-driving vehicles are in the same local traffic scene. Unfortunately, no such dataset exists. Therefore, our second contribution is a new dataset, dubbed V2V-Sim (see Fig. 1, right), that mimics the setting where there are multiple SDVs driving in the area. Towards this goal, we use a high-fidelity LiDAR simulator [33], which uses a large catalog of static 3D scenes and dynamic objects built from real-world data, to simulate realistic LiDAR point clouds for a given traffic scene. With this simulator, we can recreate traffic scenarios recorded from the real world and simulate them as if a percentage of the vehicles are SDVs in the network. We show that V2VNet and other V2V methods significantly boost performance relative to the single-vehicle system, and that our compressed intermediate representations reduce bandwidth requirements without sacrificing performance. We hope this work brings attention to the potential benefits of the V2V setting for bringing safer autonomous vehicles on the road. To enable this, we plan to release this new dataset and organize a challenge with a leaderboard and evaluation server.


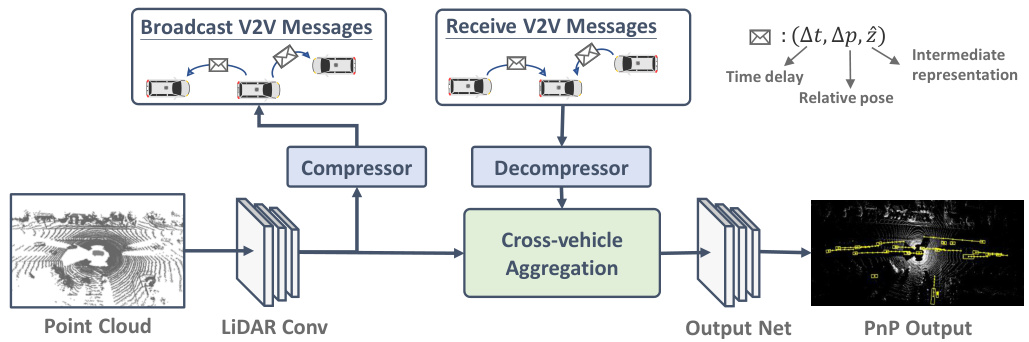

Fig. 2: Overview of V2VNet.


2 Related Work


Joint Perception and Prediction: Detection and motion forecasting play a crucial role in any autonomous driving system. [3–5, 25, 30, 31] unified 3D detection and motion forecasting for self-driving, gaining two key benefits: (1) Sharing computation of both tasks achieves efficient memory usage and fast inference time. (2) Jointly reasoning about detection and motion forecasting improves accuracy and robustness. We build upon these existing P&P models by incorporating V2V communication to share information from different SDVs, enhancing detection and motion forecasting.


Vehicle-to-Vehicle Perception: For the perception task, prior work has utilized messages encoding three types of data: raw sensor data, output detections, or metadata messages that contain vehicle information such as location, heading and speed. [34,38] associate the received V2V messages with outputs of local sensors. [8] aggregate LiDAR point clouds from other vehicles, followed by a deep network for detection. [35, 44] process sensor measurements via a deep network and then generate perception outputs for cross-vehicle data sharing. In contrast, we leverage the power of deep networks by transmitting a compressed intermediate representation. Furthermore, while previous works demonstrate results on a limited number of simple and unrealistic scenarios, we showcase the effectiveness of our model on a diverse large-scale self-driving V2V dataset.


Aggregation of Multiple Beliefs: In the V2V setting, the receiver vehicle should collect and aggregate information from an arbitrary number of sender vehicles for downstream inference. A straightforward approach is to perform permutation-invariant operations such as pooling [10,40] over features from different vehicles. However, this strategy ignores cross-vehicle relations (spatial locations, headings, times) and fails to jointly reason about features from the sender and receiver. On the other hand, recent work on graph neural networks (GNNs) has shown success in processing graph-structured data [15, 18, 26, 46]. MPNN [17] abstracts the commonalities of GNNs with a message passing framework. GGNN [27] introduces a gating mechanism for node update in the propagation step. Graph neural networks have also been effective in self-driving: [3, 25] propose a spatially-aware GNN and an interaction transformer to model the interactions between actors in self-driving scenes. [41] uses GNNs to estimate value functions of map nodes and share vehicle information for coordinated route planning. We believe GNNs are tailored for V2V communication, as each vehicle can be a node in the graph. V2VNet leverages GNNs to aggregate and combine messages from other vehicles.


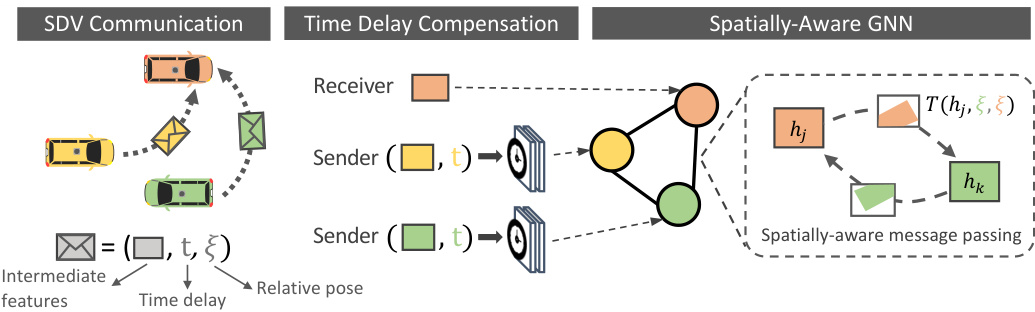

Fig. 3: After SDVs communicate messages, each receiver SDV compensates for time-delay of the received messages, and a GNN aggregates the spatial messages to compute the final intermediate representation.


Active Perception: In V2V perception, the receiving vehicle should aggregate information from different viewpoints such that its field of view is maximized, placing more trust in the view that can see better. Our work is related to a long line of work in active perception, which focuses on deciding what action the agent should take to better perceive the environment. Active perception has been effective in localization and mapping [13,22], vision-based navigation [14], serving as a learning signal [20, 48], and various other robotics applications [9]. In this work, rather than actively steering SDVs to obtain better viewpoints and send information to the others, we consider a more realistic scenario where multiple SDVs have their own routes but are currently in the same geographical area, allowing the SDVs to see better by sharing perception messages.


3 Perceiving the World by Leveraging Multiple Vehicles


In this paper, we design a novel perception and motion forecasting model that enables the self-driving vehicle to leverage the fact that several SDVs may be present in the same geographic area. Following the success of joint perception and prediction algorithms [3, 5, 30, 31], which we call P&P, we design our approach as a joint architecture to perform both tasks, which is enhanced to incorporate information received from other vehicles. Specifically, we would like to devise our P&P model to do the following: given sensor data the SDV should (1) process this data, (2) broadcast it, (3) incorporate information received from other nearby SDVs, and then (4) generate final estimates of where all traffic participants are in the 3D space and their predicted future trajectories.


Two key questions arise in the V2V setting: (i) what information should each vehicle broadcast to retain all the important information while minimizing the transmission bandwidth required? (ii) how should each vehicle incorporate the information received from other vehicles to increase the accuracy of its perception and motion forecasting outputs? In this section we address these two questions.


3.1 Which Information should be Transmitted


An SDV can choose to broadcast three types of information: (i) the raw sensor data, (ii) the intermediate representations of its P&P system, or (iii) the output detections and motion forecast trajectories. While all three message types are valuable for improving performance, we would like to minimize the message sizes while maximizing P&P accuracy gains. Note that small message sizes are critical because we want to leverage cheap, low-bandwidth, decentralized communication devices. While sending raw measurements minimizes information loss, it requires more bandwidth. Furthermore, the receiving vehicle would need to process all additional sensor data received, which might prevent it from meeting the real-time inference requirements. On the other hand, transmitting the outputs of the P&P system is very good in terms of bandwidth, as only a few numbers need to be broadcast. However, we may lose valuable scene context and uncertainty information that could be very important for fusing the information well.


In this paper, we argue that sending intermediate representations of the P&P network achieves the best of both worlds. First, each vehicle processes its own sensor data and computes its intermediate feature representation. This representation is compressed and broadcast to nearby SDVs. Then, each SDV's intermediate representation is updated using the messages received from other SDVs. The updated representation is further processed through additional network layers to produce the final perception and motion forecasting outputs. This approach has two advantages: (1) Intermediate representations in deep networks can be easily compressed [11, 43], while retaining important information for downstream tasks. (2) It has low computation overhead, as the sensor data from other vehicles has already been pre-processed.


In the following, we first showcase how to compute the intermediate representations and how to compress them. We then show how each vehicle should incorporate the received information to increase the accuracy of its P&P outputs.


Algorithm 1 Cross-vehicle Aggregation


3.2 Leveraging Multiple Vehicles


V2VNet has three main stages: (1) a convolutional network block that processes raw sensor data and creates a compressible intermediate representation, (2) a cross-vehicle aggregation stage, which aggregates information received from multiple vehicles with the vehicle’s internal state (computed from its own sensor data) to compute an updated intermediate representation, and (3) an output network that computes the final P&P outputs. We now describe these steps in more detail. We refer the reader to Fig. 2 for our V2VNet architecture.


LiDAR Convolution Block: Following the architecture from [45], we extract features from LiDAR data and transform them into bird’s-eye-view (BEV). Specifically, we voxelize the past five LiDAR point cloud sweeps into 15.6 cm$^3$ voxels, apply several convolutional layers, and output feature maps of shape $H\times W\times C$, where $H\times W$ denotes the scene range in BEV, and $C$ is the number of feature channels. We use 3 layers of $3\times 3$ convolution filters (with strides of 2, 1, 2) to produce a $4\times$ downsampled spatial feature map. This is the intermediate representation that we then compress and broadcast to other nearby SDVs.
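
The voxelization and the 4x downsampling factor can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the binary occupancy encoding, the stacking of sweeps along the channel axis, and the padding of 1 are all assumptions made for illustration.

```python
import numpy as np

def voxelize(points, lo, hi, voxel):
    """Binary occupancy grid for one sweep; points is (N, 3), lo/hi are bounds in meters."""
    lo, hi = np.asarray(lo, float), np.asarray(hi, float)
    shape = np.ceil((hi - lo) / voxel).astype(int)
    inside = np.all((points >= lo) & (points < hi), axis=1)
    idx = np.floor((points[inside] - lo) / voxel).astype(int)
    idx = np.minimum(idx, shape - 1)  # guard against floating-point edge cases
    grid = np.zeros(tuple(shape), dtype=np.float32)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = 1.0
    return grid

def stack_sweeps(sweeps, lo, hi, voxel):
    """Concatenate the height slices of several sweeps along the channel axis (assumed layout)."""
    return np.concatenate([voxelize(p, lo, hi, voxel) for p in sweeps], axis=-1)

def conv_out_size(size, stride, kernel=3, pad=1):
    """Spatial output size of a single convolution layer."""
    return (size + 2 * pad - kernel) // stride + 1

def backbone_out_size(size, strides=(2, 1, 2)):
    """Apply the three 3x3 layers with strides 2, 1, 2 in sequence."""
    for s in strides:
        size = conv_out_size(size, s)
    return size
```

With strides of 2, 1 and 2, a 64-cell axis shrinks to 32, stays at 32, and then shrinks to 16, which is the 4x reduction stated in the text.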


Compression: We now describe how each vehicle compresses its intermediate representations prior to transmission. We adapt Ballé et al.'s variational image compression algorithm [2] to compress our intermediate representations; a convolutional network learns to compress our representations with the help of a learned hyperprior. The latent representation is then quantized and encoded losslessly with very few bits via entropy encoding. Note that our compression module is differentiable and therefore trainable, allowing our approach to learn how to preserve the feature map information while minimizing bandwidth.
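
To give a feel for the quantize-then-entropy-code step, the sketch below rounds a latent tensor to integers and estimates how many bits an ideal entropy coder would need. It is only a proxy under stated assumptions: it uses the empirical symbol distribution rather than the learned hyperprior of [2], and the function names are hypothetical.

```python
import numpy as np

def quantize(latent):
    """Round each latent value to the nearest integer symbol."""
    return np.round(latent).astype(np.int32)

def empirical_bits(symbols):
    """Total bits under an ideal entropy coder that knows the empirical symbol distribution."""
    _, counts = np.unique(symbols, return_counts=True)
    p = counts / counts.sum()
    return float(-(counts * np.log2(p)).sum())

def bits_per_element(latent):
    """Average code length per latent element after quantization."""
    q = quantize(latent)
    return empirical_bits(q) / q.size
```

A latent whose quantized values are concentrated on a few symbols costs few bits, which is the behavior a rate term in the training objective encourages.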


Cross-vehicle Aggregation: After the SDV computes its intermediate representation and transmits its compressed bitstream, it decodes the representation received from other vehicles. Specifically, we apply entropy decoding to the bit stream and apply a decoder CNN to extract the decompressed feature map. We then aggregate the received information from other vehicles to produce an updated intermediate representation. Our aggregation module has to handle the fact that different SDVs are located at different spatial locations and see the actors at different timestamps due to the rolling shutter of the LiDAR sensor and the different triggering per vehicle of the sensors. This is important as the intermediate feature representations are spatially aware.


Table 1: Detection Average Precision (AP) at $\mathrm{IoU={0.5,0.7}}$ , prediction with $\ell_{2}$ error at recall 0.9 at different timestamps, and Trajectory Collision Rate (TCR).

| Method | AP@IoU=0.5 ↑ | AP@IoU=0.7 ↑ | $\ell_{2}$ Error (m) ↓ at 1.0s | $\ell_{2}$ Error (m) ↓ at 2.0s | $\ell_{2}$ Error (m) ↓ at 3.0s | TCR ↓ ($\tau$=0.01) |
|---|---|---|---|---|---|---|
| No Fusion | 77.3 | 68.5 | 0.43 | 0.67 | 0.98 | 2.84 |
| Output Fusion | 90.8 | 86.3 | 0.29 | 0.50 | 0.80 | 3.00 |
| LiDAR Fusion | 92.2 | 88.5 | 0.29 | 0.50 | 0.79 | 2.31 |
| V2VNet | 93.1 | 89.9 | 0.29 | 0.50 | 0.78 | 2.25 |
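
The gaps in Table 1 can be read off with a few lines of arithmetic. The sketch below copies a subset of the table values; the dictionary layout and helper names are illustrative choices.

```python
# Values copied from Table 1 (AP in points, l2 error in meters at 3.0 s, TCR as reported).
results = {
    "No Fusion":     {"ap50": 77.3, "ap70": 68.5, "l2_3s": 0.98, "tcr": 2.84},
    "Output Fusion": {"ap50": 90.8, "ap70": 86.3, "l2_3s": 0.80, "tcr": 3.00},
    "LiDAR Fusion":  {"ap50": 92.2, "ap70": 88.5, "l2_3s": 0.79, "tcr": 2.31},
    "V2VNet":        {"ap50": 93.1, "ap70": 89.9, "l2_3s": 0.78, "tcr": 2.25},
}

def ap_gain(method, key="ap70", baseline="No Fusion"):
    """Absolute AP improvement over the single-vehicle baseline, in points."""
    return results[method][key] - results[baseline][key]

def relative_reduction(method, key, baseline="No Fusion"):
    """Fractional reduction of a lower-is-better metric relative to the baseline."""
    base = results[baseline][key]
    return (base - results[method][key]) / base
```

For example, `ap_gain("V2VNet")` is about 21.4 points at IoU 0.7, and `relative_reduction("V2VNet", "l2_3s")` is roughly 0.20. The same helper returns a negative value for Output Fusion on TCR, since its reported TCR (3.00) is higher than that of No Fusion (2.84).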


Towards this goal, each vehicle uses a fully-connected graph neural network (GNN) [39] as the aggregation module, where each node in the GNN is the state representation of an SDV in the scene, including itself (see Fig. 3). Each SDV maintains its own local graph based on which SDVs are within range (i.e., 70 m). GNNs are a natural choice as they handle dynamic graph topologies, which arise in the V2V setting. GNNs are deep-learning models tailored to graph-structured data: each node maintains a state representation, and for a fixed number of iterations, messages are sent between nodes and the node states are updated based on the aggregated received information using a neural network. Note that the GNN messages are different from the messages transmitted/received by the SDVs: the GNN computation is done locally by the SDV. We design our GNN to temporally warp and spatially transform the received messages to the receiver’s coordinate system. We now describe the aggregation process that the receiving vehicle performs. We refer the reader to Alg. 1 for pseudocode.


We first compensate for the time delay between the vehicles to create an initial state for each node in the graph. Specifically, for each node, we apply a convolutional neural network (CNN) that takes as input the received intermediate representation $\hat{z}_{i}$, the relative 6DoF pose $\varDelta p_{i}$ between the receiving and transmitting SDVs, and the time delay $\varDelta t_{i\rightarrow k}$ with respect to the receiving vehicle sensor time. Note that for the node representing the receiving car, $\hat{z}$ is directly its intermediate representation. The time delay is computed as the time difference between the sweep start times of each vehicle, based on universal GPS time. We then take the time-delay-compensated representation and concatenate it with zeros to augment the capacity of the node state to aggregate the information received from other vehicles after propagation (line 3 in Alg. 1).
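
The input assembly and zero-padding described above can be sketched as follows. The learned compensation CNN itself is omitted. Tiling the pose and delay as constant channels, the sign convention of the delay, and all function names are assumptions for illustration only.

```python
import numpy as np

def sweep_delay(receiver_sweep_start, sender_sweep_start):
    """Time delay in seconds between sweep start times (sign convention is an assumption)."""
    return receiver_sweep_start - sender_sweep_start

def delay_cnn_input(z_hat, rel_pose, delay):
    """Append the 6-DoF relative pose and the scalar delay to z_hat as constant channels."""
    H, W, _ = z_hat.shape
    cond = np.concatenate([np.asarray(rel_pose, dtype=float), [delay]]).astype(z_hat.dtype)
    tiled = np.broadcast_to(cond, (H, W, cond.size))
    return np.concatenate([z_hat, tiled], axis=-1)

def init_node_state(compensated, extra_channels):
    """Concatenate zeros to the compensated features to enlarge the node state."""
    H, W, _ = compensated.shape
    pad = np.zeros((H, W, extra_channels), dtype=compensated.dtype)
    return np.concatenate([compensated, pad], axis=-1)
```

The zero channels carry no information at initialization; they give the node state spare capacity that later message-passing iterations can fill.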


Next we perform GNN message passing. The key insight is that because the other SDVs are in the same local area, the node representations will have overlapping fields of view. If we intelligently transform the representations and share information between nodes where the fields of view overlap, we can enhance the SDV’s understanding of the scene and produce better P&P outputs. Fig. 3 visually depicts our spatial aggregation module. We first apply a relative spatial transformation $\xi_{i\to k}$ to warp the intermediate state of the $i$-th node to send a GNN message to the $k$-th node. We then perform joint reasoning on the spatially-aligned feature maps of both nodes using a CNN. The final modified message is computed as in Alg. 1 line 7, where $T$ applies the spatial transformation and resampling of the feature state via bilinear interpolation, and $M_{i\to k}$ masks out non-overlapping areas between the fields of view. Note that with this design, our messages maintain spatial awareness.
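
A minimal NumPy sketch of the warp-and-mask step is given below, assuming a planar rigid transform expressed directly in grid coordinates. The function name `warp_bilinear` and the convention that `rot` and `trans` map receiver grid coordinates to sender grid coordinates are illustrative assumptions; the learned CNN that reasons over the aligned maps is not shown.

```python
import numpy as np

def warp_bilinear(feat, rot, trans):
    """Resample feat (H, W, C) onto the receiver grid with bilinear interpolation.

    rot (2x2) and trans (2,) map receiver (row, col) coordinates to sender coordinates.
    Returns the warped features and a boolean mask of cells that fall inside the sender map.
    """
    H, W, _ = feat.shape
    rows, cols = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    tgt = np.stack([rows, cols], axis=-1).astype(np.float64)
    src = tgt @ rot.T + trans
    r, c = src[..., 0], src[..., 1]
    mask = (r >= 0) & (r <= H - 1) & (c >= 0) & (c <= W - 1)
    # Top-left neighbor, clipped so that the +1 neighbor stays in bounds.
    r0 = np.clip(np.floor(r).astype(int), 0, H - 2)
    c0 = np.clip(np.floor(c).astype(int), 0, W - 2)
    fr = np.clip(r - r0, 0.0, 1.0)[..., None]
    fc = np.clip(c - c0, 0.0, 1.0)[..., None]
    out = ((1 - fr) * (1 - fc) * feat[r0, c0]
           + (1 - fr) * fc * feat[r0, c0 + 1]
           + fr * (1 - fc) * feat[r0 + 1, c0]
           + fr * fc * feat[r0 + 1, c0 + 1])
    # Zero out cells with no overlap between the two fields of view.
    return out * mask[..., None], mask
```

The returned mask plays the role of $M_{i\to k}$: cells of the receiver grid that fall outside the sender's feature map contribute nothing to the message.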


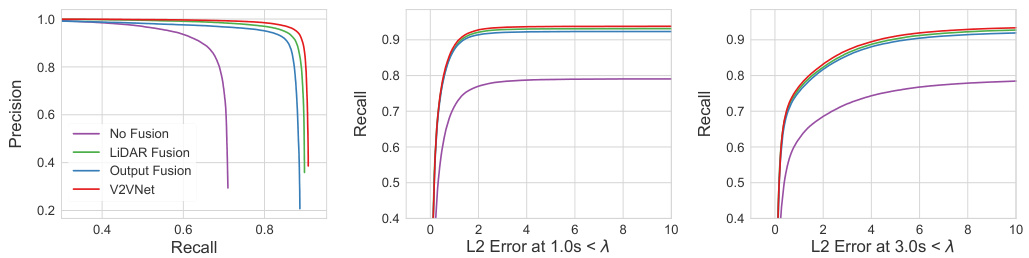

Fig. 4: Left: Detection Precision-Recall (PR) Curve at $\mathrm{IoU=0.7}$ . Center/Right: Recall as a function of $L_{2}$ Error Prediction at 1.0s and 3.0s.


We next aggregate at each node the received messages via a mask-aware permutation-invariant function $\phi_{M}$ and update the node state with a convolutional gated recurrent unit (ConvGRU) (Alg. 1 line 8), where $j\in N(i)$ are the neighboring nodes in the network for node $i$ and $\phi_{M}$ is the mean operator. The mask-aware accumulation operator ensures only overlapping fields of view are considered. In addition, the gating mechanism in the node update enables information selection for the accumulated received messages based on the current belief of the receiving SDV. After the final iteration, a multilayer perceptron outputs the updated intermediate representation (Alg. 1 line 11). We repeat this message propagation scheme for a fixed number of iterations.
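
The mask-aware mean and the gated update can be sketched in NumPy as below. This is a simplification under stated assumptions: the GRU gates are applied per pixel (equivalent to 1x1 convolutions) rather than with the spatial kernels of a ConvGRU, biases are omitted, and the weight matrices `Wz`, `Wr`, `Wh` are hypothetical parameters.

```python
import numpy as np

def masked_mean(messages, masks):
    """Mean over senders that counts only cells where the fields of view overlap.

    messages: (J, H, W, C) float array; masks: (J, H, W) boolean array.
    """
    m = masks[..., None].astype(messages.dtype)
    total = (messages * m).sum(axis=0)
    count = m.sum(axis=0)
    return total / np.maximum(count, 1.0)  # cells with no sender stay at zero

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_update(h, a, Wz, Wr, Wh):
    """Per-pixel GRU update; h, a: (H, W, C), weights: (2C, C)."""
    ha = np.concatenate([h, a], axis=-1)
    z = sigmoid(ha @ Wz)   # update gate: how much of the new candidate to accept
    r = sigmoid(ha @ Wr)   # reset gate: how much of the old state feeds the candidate
    cand = np.tanh(np.concatenate([r * h, a], axis=-1) @ Wh)
    return (1 - z) * h + z * cand
```

Because the mean is taken only over senders whose mask covers a cell, a cell observed by a single neighbor is not diluted by neighbors that cannot see it.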


Output Network: After performing message passing, we apply a set of four Inception-like [42] convolutional blocks to capture multi-scale context efficiently, which is important for prediction. Finally, we take the feature map and exploit two network branches to output detection and motion forecasting estimates respectively. The detection output is $(x,y,w,h,\theta)$ , denoting the position, size and orientation of each object. The output of the motion forecast branch is parameterized as $(x_{t},y_{t})$ , which denotes the object’s location at future time step $t$ . We forecast the motion of the actors for the next 3 seconds at 0.5 s intervals. Please see supplementary for additional architecture and implementation details.
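
The output parameterization can be made concrete with a short sketch. It assumes that $w$ is measured along the heading and $h$ across it, which the text does not specify; the function names are hypothetical.

```python
import numpy as np

def forecast_times(horizon_s=3.0, step_s=0.5):
    """Future time stamps, in seconds, at which waypoints (x_t, y_t) are predicted."""
    n = int(round(horizon_s / step_s))
    return step_s * np.arange(1, n + 1)

def box_corners(x, y, w, h, theta):
    """Four BEV corners of a detection (x, y, w, h, theta), returned as a (4, 2) array."""
    local = 0.5 * np.array([[w, h], [w, -h], [-w, -h], [-w, h]])
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])
    return local @ rot.T + np.array([x, y])
```

A 3-second horizon at 0.5 s intervals gives six waypoints per actor, so the motion forecast branch outputs twelve numbers per detection under this parameterization.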


3.3 Learning


We first pretrain the LiDAR backbone and output headers, bypassing the cross-vehicle aggregation stage. Our loss function is cross-entropy on the vehicle classification output and smooth $\ell_{1}$ on the bounding box parameters. We apply hard-negative mining to improve performance. We then jointly fine-tune the LiDAR backbone, cross-vehicle aggregation, and output header modules on our novel V2V dataset (see Sec. 4) with synchronized inputs (no time delay) using the same loss function. We do not use the temporal warping function at this stage. During training, for every example in the mini-batch, we randomly sample the number of connected vehicles uniformly on $[0,\min(c,6)]$, where $c$ is the number of candidate vehicles available. This ensures that V2VNet can handle arbitrary graph connectivity while keeping the number of vehicles on the V2V network within the GPU memory constraints. Finally, the temporal warping function is trained to compensate for time delay with asynchronous inputs, where all other parts of the network are fixed. We uniformly sample the time delay between 0.0s and 0.1s (the time of one 10Hz LiDAR sweep). We then train the compression module with the main network (backbone, aggregation, output header) fixed. We use a rate-distortion objective, which aims to maxim