Cross-modal Local Shortest Path and Global Enhancement for Visible-Thermal Person Re-Identification

跨模态局部最短路径与全局增强的可见光-热成像行人重识别

Abstract—In the Visible-Thermal cross-modal person re-identification (VTReID) task, it is necessary to handle not only the recognition difficulty caused by human posture and occlusion but also the modal differences caused by different imaging systems. In this paper, we propose the Cross-modal Local Shortest Path and Global Enhancement (CM-LSP-GE) modules, a two-stream network based on joint learning of local and global features. The core idea is to use local feature alignment to address occlusion and to address the modal difference by strengthening global features. First, an attention-based two-stream ResNet network is designed to extract dual-modality features and map them to a unified feature space. Second, to address cross-modal person pose and occlusion problems, each image is cut horizontally into several equal parts to obtain local features, and the shortest path between the local features of two images is used to achieve fine-grained local feature alignment. Third, a batch normalization enhancement module applies an enhancement strategy to the global features, which increases the differences between classes. A multi-granularity loss fusion strategy further improves the performance of the algorithm. Finally, a joint learning mechanism of local and global features is used to improve cross-modal person re-identification accuracy. Experimental results on two typical datasets show that our model clearly outperforms most state-of-the-art methods. In particular, on the SYSU-MM01 dataset, our model achieves gains of 2.89% and 7.96% in Rank-1 and mAP, respectively, in the all-search mode. The source code will be released soon.

摘要—在可见光-热红外跨模态行人重识别(VTReID)任务中,除了考虑人体姿态和遮挡带来的识别困难外,还需解决不同成像系统导致的模态差异。本文提出基于局部与全局特征联合学习的双流网络框架CM-LSP-GE模块,核心思想是通过局部特征对齐解决遮挡问题,通过全局特征增强解决模态差异。首先设计基于注意力机制的双流ResNet网络提取双模态特征并映射至统一特征空间;其次将图像水平切分为若干等份获取局部特征,通过计算两图局部特征间最短路径实现细粒度局部特征对齐,以解决跨模态行人姿态与遮挡问题;第三采用批归一化增强模块对全局特征实施增强策略,从而扩大类间差异;多粒度损失融合策略进一步提升了算法性能;最终通过局部与全局特征的联合学习机制提升跨模态行人重识别准确率。在两个典型数据集上的实验结果表明,本模型显著优于现有最优方法,特别是在SYSU-MM01数据集的全搜索模式下,Rank-1和mAP指标分别获得2.89%和7.96%的提升。源代码即将开源。

Index Terms—Visible-Thermal person re-identification, cross-modal, local feature alignment, multi-branch

索引术语—可见光-热成像行人重识别,跨模态,局部特征对齐,多分支

I. INTRODUCTION

I. 引言

PERSON re-identification (Re-ID) [1] is an important technology in video tracking that studies how to match person images across different camera views. Traditional Re-ID focuses mainly on the visible modality, but in real applications low illumination often makes identification impossible, so around-the-clock tracking cannot be achieved. To obtain pedestrian identity information at night, researchers turned to VT-ReID [2], which studies the matching of pedestrian images from one modality (infrared) to another (visible) and is a cross-modal technology for intelligent, around-the-clock pedestrian tracking.

行人重识别 (Re-ID) [1]是视频跟踪中的一项重要技术,主要研究从不同摄像机视角匹配行人图像。传统Re-ID主要关注可见光模态,但在实际应用环境中常因光照不足导致无法识别,从而无法实现全天候跟踪。为获取夜间行人识别信息,研究者转向VT-Reid [2]研究,该技术主要研究从一种模态(红外)到另一种模态(可见光)的行人图像匹配,是实现全天候行人智能跟踪的跨模态追踪技术。

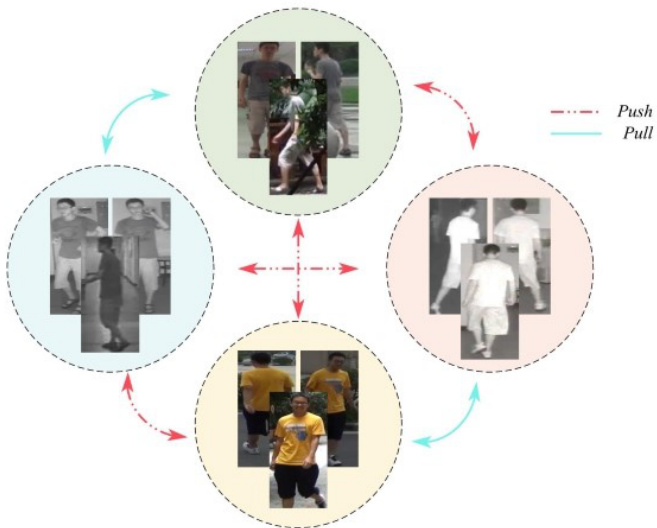

As shown in Fig. 1, the main challenge of VT-ReID is to handle not only pedestrian posture differences and body-part occlusion but also the image inconsistency caused by the modal difference.

如图 1 所示,当前 VT-Reid 的主要挑战不仅在于解决行人姿态差异和身体部位遮挡问题,还需解决由模态差异导致的图像不一致性问题。

To improve the accuracy of cross-modal pedestrian re-identification, researchers have proposed machine learning models based on hand-designed features and on deep learning networks. Because hand-designed features represent only a limited set of low-level pedestrian features, methods built on them, such as HOG [3] and LOMO [4], cannot complete the re-identification task satisfactorily. At present, deep learning is widely used for re-identification, and the common solution is to encode the pedestrian features of different modalities into a common feature space for similarity measurement.

为提高跨模态行人重识别的准确性,研究者们提出了基于手工设计特征和深度学习网络的机器学习模型。由于手工特征仅能表示有限的行人底层特征(这些特征只是全部特征的一部分),诸如HOG [3]、LOMO [4]等机器学习方法无法令人满意地完成重识别任务。当前,深度学习被广泛应用于解决重识别问题,其常见解决方案是将不同模态的行人特征编码至公共特征空间进行相似性度量。

Fig. 1: Difficulties in cross-modal person re-identification. Sample images are from the SYSU-MM01 dataset [2].

图 1: 跨模态行人重识别技术难点。样本图像来自SYSU-MM01数据集 [2]

To alleviate the modal differences in cross-modal matching, we use an attention-based two-stream ResNet network to extract pedestrian features from visible and infrared images and map them to a unified feature space for similarity measurement.

为了缓解跨模态匹配中的模态差异,本文采用基于注意力机制的双流ResNet网络提取可见光和红外图像中行人的特征,并将其映射到统一的特征空间进行相似性度量。

Most existing work focuses only on global coarse-grained feature extraction and ignores feature enhancement after extraction. In this paper, we design a feature enhancement module based on batch normalization to enhance the extracted global features and improve the feature representation of different pedestrians.

大多数工作仅关注全局粗粒度特征提取过程,而忽略了提取后特征增强方法的研究。本文设计了一个基于批归一化 (batch normalization) 的特征增强模块,用于提升提取后的全局特征并改善不同行人的特征表示。

However, focusing only on global features is not enough; local features also play an important role in the VTReID task. When body parts are missing due to occlusion, it is difficult to extract global features that truly characterize the person, which easily leads to incorrect classification. Because local information (e.g., head, body) of pedestrians in the images can be well distinguished and aids global feature learning, in this paper the pedestrian images of the two modalities are divided equally in the horizontal direction, and the shortest path algorithm is then used to achieve cross-modal local feature alignment. The joint learning mechanism based on local and global features effectively improves the algorithm's performance.

然而,仅关注全局特征是不够的,局部特征在跨模态行人重识别(VTReID)任务中也起着重要作用。当行人因遮挡导致身体部位缺失时,难以从这些图像中提取全局特征并真实表征该行人,容易导致错误分类。考虑到图像中行人的局部信息(如头部、躯干)能够被良好区分并辅助全局特征学习,本文对两种不同模态下的行人图像进行水平方向等分切割,随后采用最短路径算法实现跨模态局部特征对齐。最终,基于局部与全局特征的联合学习机制能有效提升算法性能。

Finally, the choice of backbone network affects the final classification accuracy. We therefore investigate how different ResNet variants in the two-stream feature extraction network affect the final identification accuracy, to further improve the performance of the network. In summary, the contributions of this paper are:

最后,分类任务中不同的主干网络会影响最终的分类准确率。为此,我们研究了ResNet双流特征提取网络的不同变体对最终识别准确率的影响,以进一步提升网络性能。综上所述,本文的贡献在于:

• We propose an attention-based two-stream ResNet network for VT cross-modal feature acquisition.

• We propose a method for cross-modal local feature alignment based on the shortest path (CM-LSP), which effectively addresses the occlusion problem in cross-modal pedestrian re-identification and improves the robustness of the algorithm.

• We design a batch normalized global feature enhancement (BN-GE) method to address insufficient global feature discrimination, and propose a multi-granularity loss fusion strategy to guide network learning.

• Our method achieves strong results on the SYSU-MM01 and RegDB datasets and can serve as a research baseline for future work.

• 我们提出了一种基于注意力机制的双流ResNet网络,用于可见光-热红外(VT)跨模态特征提取。

• 我们提出了一种基于最短路径的跨模态局部特征对齐方法(CM-LSP),有效解决了跨模态行人重识别中的遮挡问题,提升了算法鲁棒性。

• 我们设计了批归一化全局特征增强方法(BN-GE)以解决全局特征区分度不足的问题,并提出多粒度损失融合策略来指导网络学习。

• 本方法在SYSU-MM01和RegDB数据集上取得了优异效果,可作为提升未来研究质量的基准方案。

II. RELATED WORK

II. 相关工作

Improving cross-modal recognition accuracy in VTReID requires not only handling pedestrian posture and occlusion but also overcoming the cross-modal discrepancy. Machine learning based on hand-crafted features has performed poorly because such features represent only some low-level pedestrian characteristics. Researchers have therefore turned to deep learning, a more powerful approach, for feature acquisition. The mainstream approaches include image generation-based methods, feature extractor-based methods, and metric learning-based methods.

为了提高VTReID中的跨模态识别准确率,不仅需要解决行人姿态和遮挡问题,还需突破跨模态差异的困境。基于人工特征的机器学习方法已被证明效果不佳,因为它们仅能表示一些低层次的行人特征。因此,研究者转向更强大的深度学习方法进行特征提取,主流方法包括:基于图像生成的方法、基于特征提取器的方法以及基于度量学习的方法。

Image Generation Based Methods fulfil the re-identification task by generating fake images with a generative adversarial network (GAN) to reduce the cross-modal difference at the image level. Vladimir et al. [5] first proposed ThermalGAN to transform visible images into infrared images and then perform pedestrian recognition in the infrared modality. Zhang et al. [6] proposed a teacher-student GAN model (TS-GAN) that uses modal transitions to better guide the learning of discriminative features. Xia et al. [7] proposed an image modal translation network that performs image modal transformation through a cycle-consistency adversarial network. All of the above methods use GANs to generate fake images that reduce cross-modal differences at the image level. However, because the color attributes of pedestrian appearance can change, multiple seemingly reasonable images may be generated, and it is difficult to determine which generated target is correct, which leads to false identifications. Methods based on image generation therefore often suffer from uncertain algorithm performance.

基于图像生成的方法通过生成对抗网络 (GAN) 生成虚假图像,从图像层面减少跨模态差异,从而完成重识别任务。Vladimir [5] 等人首次提出 ThermalGAN,将可见光图像转换为红外图像,进而在红外模态下完成行人识别。Zhang [6]等人提出师生式 GAN 模型 (TS-GAN),利用模态转换更好地指导判别特征学习。Xia [7]等人设计了一种图像模态平移网络,通过循环一致性对抗网络实现图像模态转换。上述方法均利用 GAN 生成虚假图像,从图像层面减小跨模态差异。但由于行人外观颜色属性的变化,可能生成多个看似合理的图像,难以确定哪个生成目标正确,导致识别过程出现错误。基于图像生成的方法往往存在算法性能不确定性问题。

Feature Extractor Based Methods extract modality-distinctive and modality-consistent features according to the discrepancy between modalities, so the extraction of rich features is the key to these algorithms. Wu et al. [2] analyzed the performance of different network structures, including one-stream and two-stream networks, and proposed deep zero-padding for training a one-stream network so that domain-specific nodes evolve automatically in the network for cross-modality matching. Kang et al. [8] presented a one-stream model that places visible and infrared images in different channels or creates input images by concatenating different channels. Fan et al. [9] proposed a cross-spectral bi-subspace matching one-stream model to address the matching difference between cross-modal classes. All three of these one-stream algorithms have low accuracy because of the structural limitation of the one-stream network, which can extract only some common features and cannot extract the discriminative features of both modalities.

基于特征提取器的方法主要用于根据不同模态间的差异,从不同模态中提取区分性与一致性特征。因此,丰富特征的提取是算法的核心。Wu[2]等人分析了一流网络和双流网络等不同网络结构的性能,提出了深度零填充技术,通过训练一流网络实现跨模态匹配中领域特定节点的自动演化。Kang[8]团队提出的一流模型将可见光与红外图像置于不同通道,或通过通道连接构建输入图像。Fan[9]等人提出的跨光谱双子空间匹配一流模型,则用于解决跨模态类别间的匹配差异问题。上述三种单流算法由于网络结构缺陷,仅能提取部分共性特征而无法捕获双模态中的判别性特征,导致准确率较低。

In contrast to the one-stream network, the two-stream network extracts the features of each modality with parallel branches, which gives it an advantage in extracting distinguishing features. Ye et al. [10] applied a two-stream AlexNet network to obtain dual-modality features and then projected these features into a common feature space. On this basis, Jiang et al. [11] designed a multi-granularity attention network to extract coarse-grained features separately. Ran et al. [12] mapped global features to the same feature space and added local discriminative feature learning, which improved performance to some extent. To verify how the stream structure's ability to acquire features affects performance, Emrah Basaran [13] designed a four-stream ResNet framework that adds gray flow and LZM on top of the two-stream network. However, the experiments showed that this method has a large computational cost, a high training cost, and unsatisfactory results. Overall, the two-stream structure performs best for VT-ReID tasks.

与单流网络相反,双流网络通过并行架构提取不同模态特征,使网络具备提取区分性特征的优势。Ye [10] 等人采用双流AlexNet网络获取双模态特征,并将这些特征投影到公共特征空间。基于此,Jiang [11] 团队设计了多粒度注意力网络分别提取粗粒度特征。Ran [12] 团队将全局特征映射到同一特征空间,并加入局部判别特征学习模块,使算法性能得到一定提升。为验证网络流结构对特征获取能力的影响,Emrah Basaran [13] 在双流网络基础上设计了包含灰度流和LZM的四流ResNet框架。但实验表明该方法计算量大、训练成本高且效果欠佳。总体而言,双流网络在VT-ReID任务结构中表现最优。

Most of the above methods use ResNet as the feature extraction network. However, ResNet variants such as SE [14], CBAM [15], GC [16], SK [17], ST [18], NAM [19], ResNetXT [20], and SN [21] are widely used in classification and recognition tasks and have achieved good accuracy improvements.

上述方法大多采用ResNet作为特征提取网络。然而,ResNet的变体如SE [14]、CBAM [15]、GC [16]、SK [17]、ST [18]、NAM [19]、ResNetXT [20]和SN [21]被广泛应用于分类与识别任务,并取得了显著的精度提升。

Metric Learning Based Methods focus on designing loss functions that pull similar samples across modalities closer together and push different samples apart. Based on the hierarchical feature extraction of the two-stream network, Ye et al. [22], [23] designed the dual-constrained top-ranking loss and the bidirectional exponential angular triplet loss on global features. Zhu et al. [24] proposed the hetero-center loss to constrain the intra-class center distance between the two heterogeneous modalities and supervise the learning of cross-modal invariant information from the perspective of global features. Ling et al. [25] proposed a center-guided metric learning method that enforces distance constraints among cross-modal class centers and samples. Liu et al. [26] proposed a dual-modality triplet loss that considers both inter-modality difference and intra-modality variation, and introduced a mid-level feature fusion module. Hao et al. [27] proposed an end-to-end two-stream hypersphere manifold embedding network with classification and identification losses, which constrains the intra-modality and cross-modality variations on the hypersphere. Zhao et al. [28] introduced a hard-sample quintuplet loss to guide global feature learning. Liu et al. [29], [30] introduced the heterogeneous center-based triplet loss and the dual-granularity triplet loss, covering cross-modal global feature alignment, coarse-grained feature learning, and a part-level feature extraction block. Ling et al. [31] used the Earth Mover's Distance to alleviate the impact of intra-identity variations during modality alignment, together with a Multi-Granularity Structure that aligns modalities on both coarse- and fine-grained features. To capture subtle differences, Wu et al. [32] proposed the center clustering loss, the separation loss, and a modality alignment module to find the nuances of different modalities in an unsupervised manner.

基于度量学习的方法主要关注通过设计损失函数强制缩短跨模型相似样本间的距离,同时扩大不同样本间的距离。基于双流网络的分层特征提取,Ye [22][23]等人从全局特征出发设计了双约束顶级排序损失和双向指数角度三元组损失。Zhu [24]团队提出使用异质中心损失约束两种异构模态间的类内中心距离,从全局特征角度监控跨模态不变信息的学习。Ling [25]等人提出中心引导的度量学习方法,用于加强跨模态类中心与样本间的距离约束。Liu [26]团队提出同时考虑模态间差异与模态内变化的双模态三元组损失,并引入中层特征融合模块。Hao [27]等人设计了端到端的双流超球面流形嵌入网络,通过分类与识别损失在超球面上约束模态内变化与跨模态变化。Zhao [28]团队引入困难样本五元组损失指导全局特征学习。Liu [29][30]等人从跨模态全局特征对齐、粗粒度特征学习及局部特征提取模块出发,提出基于异构中心的三元组损失和双粒度三元组损失。Ling [31]团队设计的推土机距离(EMD)可减轻模态对齐过程中身份内部变异的影响,并通过多粒度结构实现从粗粒度到细粒度特征的跨模态对齐。为捕捉细微差异特征,Wu [32]等人提出中心聚类损失、分离损失和模态对齐模块,以无监督方式发现不同模态的细微差异。

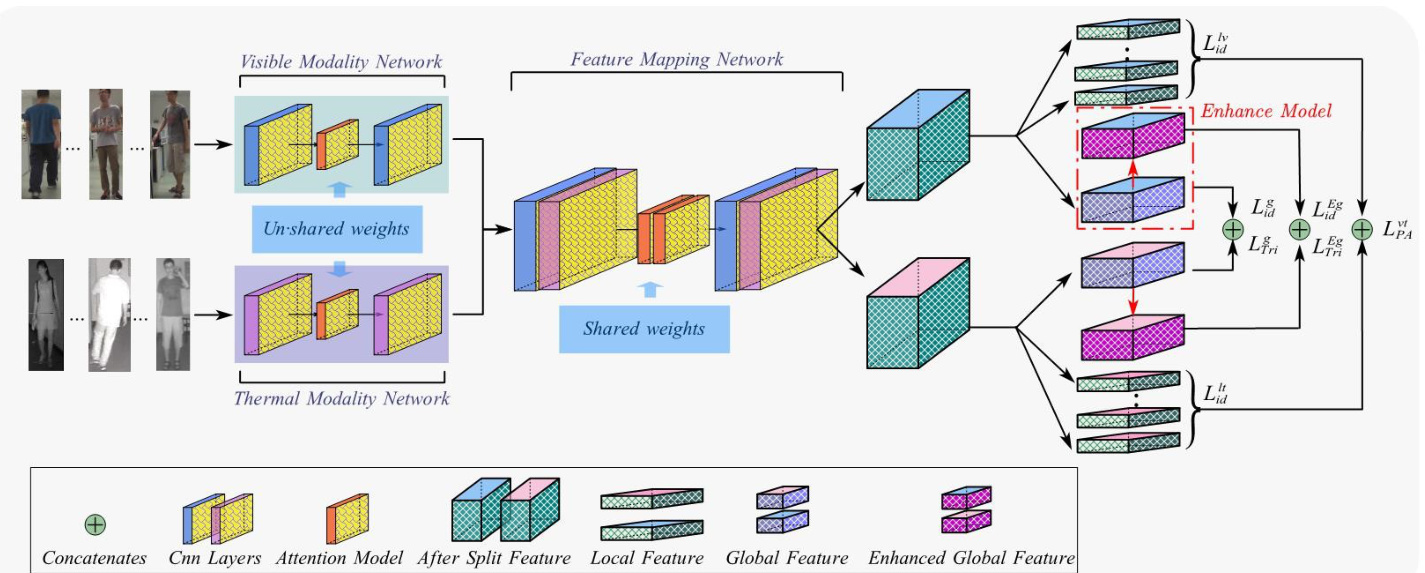

Fig. 2: The model proposed in this paper consists of three main components: an attention-based two-stream backbone network, the cross-modal local feature alignment module, and a global feature enhancement module. First, the attention-based two-stream network extracts the features of both modalities and maps them to the same feature space. The FMN output features are then split to obtain the per-modality features (After Split Feature), which are further divided into per-modality global features and local features consisting of a certain number of horizontal slices.

图 2: 本文提出的模型包含三个主要组件:基于注意力机制的双流主干网络、跨模态局部特征对齐模块和全局特征增强模块。首先,基于注意力的双流网络提取双模态特征并将其映射到同一特征空间。通过FMN输出特征进行分割得到单模态分割特征后,这些特征继续被划分为单模态全局特征和包含若干水平切片的局部特征。

The above literature shows that coarse-grained global features play the major role in recognition, while fine-grained local features complement them and further improve ReID accuracy. To address occlusion and the modal difference in cross-modal VTReID, we use a multi-granularity fusion loss to guide network learning. First, to address the modal difference, we design a classification loss and a hard-sample triplet loss on global features at the overall level, together with a classification loss on local features at the part level. Second, to address part occlusion and ensure the shortest distance between parts of the same kind, we devise a part alignment loss. Finally, the multi-granularity loss jointly constrains feature learning.

从上述文献可知,粗粒度的全局特征在识别中起主导作用,而细粒度的局部特征作为全局特征的补充能有效提升ReID精度。为解决跨模态VTReID中的遮挡问题和模态差异,我们采用多粒度融合损失指导网络学习。首先,为消除模态差异,从整体层面设计了基于全局特征的分类损失和困难样本三元组损失;同时从局部层面设计了基于局部特征的分类损失。其次,为解决部件遮挡问题并确保同类部件间距离最近,设计了部件对齐损失。最终通过多粒度损失联合约束特征学习。

III. METHODOLOGY

III. 方法论

Overview. In this section, we propose the CM-LSP-GE method. As shown in Fig. 2, the model consists of four main parts: (1) an attention-based two-stream network, which includes the thermal mode network (TMN), the visible mode network (VMN), and the fusion module network (FMN); (2) a cross-modal local feature alignment module; (3) a batch normalized global feature enhancement module; and (4) the multi-granularity fusion loss.

概述。在本节中,我们提出了一种CM-LSP-GE方法。如图2所示,该模型主要由四部分组成:(1) 基于注意力的双流网络,包括热模态网络(TMN)、可见模态网络(VMN)和融合模块网络(FMN);(2) 跨模态局部特征对齐模块;(3) 批归一化全局特征增强模块;(4) 多粒度融合损失。

A. Attention-based two-stream network

A. 基于注意力机制的双流网络

In cross-modal person re-identification, the two-stream network is often used for feature extraction because it learns discriminative features of the different modalities well; it mainly comprises feature extraction and feature mapping. At present, most mainstream methods use ResNet50 as the backbone for feature extraction. However, some recently improved ResNet variants have achieved better performance in tasks such as image classification and recognition. It is therefore worthwhile to find a better backbone for the VT-ReID task and provide a new reference for future research.

在跨模态行人重识别项目中,双流网络因其对不同模态特征的判别性学习优势常被用于特征提取,主要包括特征提取和特征映射两个部分。当前主流方法大多采用ResNet50作为特征提取主干网络,但部分最新改进版ResNet在图像分类、识别等任务中已展现出更优性能。因此,有必要为VTReid任务寻找更优主干网络,为后续研究提供新参考。

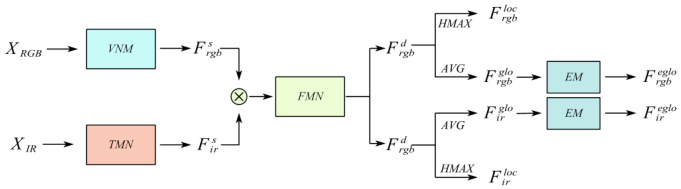

Fig. 3: Schematic diagram of the two-stream feature flow

图 3: 双流特征流示意图

Fig. 3 shows how the features of a sample are obtained. Let the visible and infrared images be $X_{rgb}$ and $X_{ir}$, respectively. They are fed into the VMN and TMN networks, respectively, for feature learning to obtain the shallow features $F_{rgb}^{s}$ and $F_{ir}^{s}$. The two shallow features are then concatenated into a new feature, which is input into the FMN network for fusion feature learning and feature mapping, and the segmented features $F_{rgb}^{d}$ and $F_{ir}^{d}$, represented in a unified feature space, are obtained. The segmented features are processed by the adaptive average pooling layer to obtain the global features $F_{rgb}^{glo}$ and $F_{ir}^{glo}$, while the local features $F_{rgb}^{loc}$ and $F_{ir}^{loc}$ are obtained via the horizontal adaptive maximum pooling layer. Finally, the global features are enhanced by the EM module to obtain the enhanced features $F_{rgb}^{eglo}$ and $F_{ir}^{eglo}$. In the network inference stage, the distance matrix is constructed from $F^{eglo}$ for similarity analysis.

如图 3 所示,我们将介绍如何获取样本特征。假设可见光图像和红外图像分别定义为 $X_{r g b}$ 和 $X_{i r}$,它们分别输入 VMN 和 TMN 网络进行特征学习,得到浅层特征 $F_{r g b}^{s}$ 和 $F_{i r}^{s}$,然后将这两个浅层特征连接为新特征,输入 FMN 网络进行融合特征学习和特征映射,最终得到统一特征空间中的分割特征 $F_{r g b}^{d}$ 和 $F_{i r}^{d}$。分割特征通过自适应平均池化层处理得到全局特征 $F_{r g b}^{g l o}$ 和 $F_{i r}^{g l o}$,而局部特征 $F_{r g b}^{l o c}$ 和 $F_{i r}^{l o c}$ 则通过水平自适应最大池化层获得。最后,全局特征通过 EM 模块增强,得到增强特征 $F_{r g b}^{e g l o}$ 和 $F_{i r}^{e g l o}$。在网络推理阶段,使用 $F^{e g l o}$ 构建距离矩阵进行相似性分析。
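The two pooling steps in this pipeline can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the toy tensor shape, and the use of `np.array_split` to form equal horizontal stripes are assumptions made for illustration, and a deep learning framework's adaptive pooling layers may place stripe boundaries differently when the height is not divisible by the number of parts.

```python
import numpy as np

def global_avg_pool(feat):
    """Average over the spatial axes: (C, H, W) -> (C,), giving a global feature."""
    return feat.mean(axis=(1, 2))

def horizontal_max_pool(feat, parts):
    """Split the height axis into `parts` stripes and max-pool each one.

    (C, H, W) -> (parts, C): one local feature vector per horizontal stripe.
    """
    stripes = np.array_split(feat, parts, axis=1)
    return np.stack([s.max(axis=(1, 2)) for s in stripes])

# Toy stand-in for one unified-space feature map (C=8, H=10, W=4); values are random.
rng = np.random.default_rng(0)
f_d = rng.standard_normal((8, 10, 4))
f_glo = global_avg_pool(f_d)               # global feature, shape (8,)
f_loc = horizontal_max_pool(f_d, parts=5)  # five local features, shape (5, 8)
```

Each row of `f_loc` then plays the role of one horizontal part in the local alignment step, while `f_glo` is the input to the global enhancement step.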

B. Cross-modal Local Feature Alignment Module

B. 跨模态局部特征对齐模块

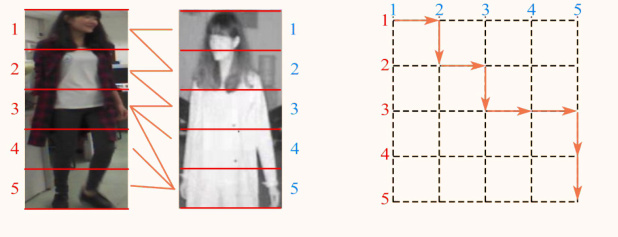

When a pedestrian is occluded, some body parts are missing, and it is difficult for global features to truly characterize the pedestrian. Therefore, we design a shortest-path alignment module based on local features, which aligns body parts by dividing each image into equal horizontal parts and calculating the shortest path between the local features of the two images.

当行人遮挡发生时,很难真正利用缺失部分作为区分特征来识别全局行人特征。因此,我们设计了基于局部特征的最短路径对齐模块,通过均衡图像分割并计算两图中局部特征之间的最短路径来实现组件对齐。

Fig. 4: Cross-modal Local Feature Alignment

图 4: 跨模态局部特征对齐

As shown in Fig. 4, the visible and infrared images are each divided equally into five parts, and the local feature representations are defined as $F_{rgb}^{\mathrm{loc}}=\{f_{r}^{1},f_{r}^{2},\dots,f_{r}^{i}\}$ and $F_{ir}^{\mathrm{loc}}=\{f_{t}^{1},f_{t}^{2},\dots,f_{t}^{i}\}$, where the visible local features are calculated as in Eq. 1:

如图 4 所示,可见光与红外图像被均分为五部分,局部特征表示定义为 $F_{r g b}^{\mathrm{loc}}=\{f_{r}^{1},f_{r}^{2},\dots,f_{r}^{i}\}$ 和 $F_{i r}^{\mathrm{loc}}=\{f_{t}^{1},f_{t}^{2},\dots,f_{t}^{i}\}$ ,其中可见光局部特征计算公式如式 (1) 所示。

$$

f_{r}^{i}=\underset{i\in(1,2,...,h)}{\operatorname{HMax}}\left(F_{rgb}^{d}\right)_{[i\times d]},\quad d=2^{n},\ n\in(0,1,2,...,11)

$$

where $i$ is the horizontal feature position, $d$ is the local feature dimension, and $h$ is the height of the input image.

其中 $i$ 为水平特征位置,$d$ 为局部特征维度,$h$ 为输入图像高度。

The infrared local features are calculated in the same way, which gives the local feature representations of both modalities. The distance between the local features of the two images is then defined as in Eq. 2:

在采用类似方法计算红外局部特征时,我们获得了双模态状态的局部特征表示,随后定义了用于计算两图间距离的公式2。该距离方程定义为:

$$

d_{i,j}=\left\|\frac{f_{r}^{i}-\operatorname{Mean}(f_{r}^{i})}{\operatorname{Max}(f_{r}^{i})-\operatorname{Min}(f_{r}^{i})}-\frac{f_{t}^{j}-\operatorname{Mean}(f_{t}^{j})}{\operatorname{Max}(f_{t}^{j})-\operatorname{Min}(f_{t}^{j})}\right\|_{1}

$$

where $i,j\in(1,2,3,...,h)$ index the parts of the two images and $d_{i,j}$ is the distance between local features of different modalities.

其中 $i,j\in(1,2,3,...,h)$ 表示图像的各个部分,$d_{i,j}$ 是不同模态局部特征之间的距离。
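As a rough illustration of Eq. 2, the sketch below normalizes each local feature vector by its own mean and range and then takes the L1 distance between every visible/thermal pair. The function names are hypothetical, and the small constant added to the denominator is an assumption introduced here only to avoid division by zero for constant vectors; it is not part of Eq. 2.

```python
import numpy as np

def normalize(f, eps=1e-12):
    """(f - Mean(f)) / (Max(f) - Min(f)) for each local feature vector (last axis).

    `eps` is an added safeguard (an assumption, not in Eq. 2).
    """
    span = f.max(axis=-1, keepdims=True) - f.min(axis=-1, keepdims=True)
    return (f - f.mean(axis=-1, keepdims=True)) / (span + eps)

def local_distance_matrix(f_r, f_t):
    """Pairwise L1 distances between normalized local features.

    f_r: (H_r, d) visible parts, f_t: (H_t, d) thermal parts -> (H_r, H_t).
    """
    n_r, n_t = normalize(f_r), normalize(f_t)
    return np.abs(n_r[:, None, :] - n_t[None, :, :]).sum(axis=-1)

# Two parts whose features differ only by scale and offset normalize to the same vector.
f_r = np.array([[0.0, 1.0, 2.0], [2.0, 4.0, 6.0]])
f_t = np.array([[2.0, 1.0, 0.0]])
D_same = local_distance_matrix(f_r, f_r)
D_flip = local_distance_matrix(f_r, f_t)
```

Because the normalization removes per-part offset and scale, the distance depends on the shape of each feature vector rather than on its absolute magnitude.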

Then, we construct the distance matrix $D$ from $d_{i,j}$ and define $S_{i,j}$ as the total distance between the local features of the two images, taken as the length of the shortest path from $(1,1)$ to $(H,H)$ in $D$. The shortest path between the two images is calculated by Eq. 3.

接着,我们根据 $d_{i,j}$ 构建距离矩阵 $D$,并定义 $S_{i,j}$ 为两幅图像局部特征之间的总距离,即从 $(1,1)$ 到 $(H,H)$ 的最短距离。两个图之间的最短路径通过公式 3 计算。
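Eq. 3 is not reproduced in this section, so the following is only a sketch under a stated assumption: the path from $(1,1)$ to $(H,H)$ moves one step right or one step down at a time, so the accumulated cost at each cell is its own distance plus the smaller of the costs above and to the left. This is a common dynamic-programming formulation for shortest-path part alignment, and the function name is hypothetical; the paper's actual recurrence may differ.

```python
def shortest_path_distance(D):
    """Length of the cheapest monotone path from the top-left to the bottom-right of D.

    Assumption: only right and down moves are allowed, so
    S[i][j] = D[i][j] + min(S[i-1][j], S[i][j-1]).
    """
    rows, cols = len(D), len(D[0])
    S = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            if i == 0 and j == 0:
                best_prev = 0.0
            elif i == 0:
                best_prev = S[i][j - 1]      # first row: can only come from the left
            elif j == 0:
                best_prev = S[i - 1][j]      # first column: can only come from above
            else:
                best_prev = min(S[i - 1][j], S[i][j - 1])
            S[i][j] = best_prev + D[i][j]
    return S[-1][-1]

# Toy 3x3 distance matrix; the cheapest path visits 1, 3, 1, 1, 1.
total = shortest_path_distance([[1, 3, 1], [1, 5, 1], [4, 2, 1]])
```

Under this assumption the cost is computed in time proportional to the number of cells in $D$, which is small when each image is cut into only a few horizontal parts.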

Fig. 5: Global feature enhancement module

图 5: 全局特征增强模块

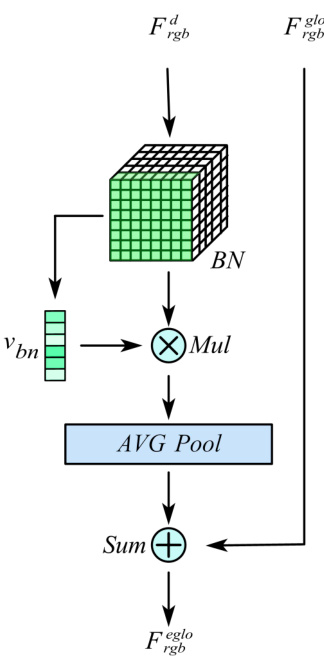

C. Batch normalized global feature enhancement module

C. 批量归一化全局特征增强模块

Global features undoubtedly play an important role in person re-identification, so we design a BN-based global feature enhancement module to further improve the discrimination of the global representations of different pedestrians across modalities. Fig. 5 illustrates the module, taking the visible modality as an example.

毫无疑问,全局特征在行人重识别中起着重要作用。为了进一步提升跨模态下不同行人全局表征的区分能力,我们设计了一个基于批归一化(BN)的全局特征增强模块。如图 5 所示,以可见光模态为例。

First, the unified-space output feature $F_{rgb}^{d}$ is normalized by the batch normalization (BN) layer. At the same time, the vector $v_{bn}$ is calculated from the BN weights. The normalized feature is multiplied by $v_{bn}$ to obtain a new feature, which is passed through the adaptive average pooling layer to obtain a new weight matrix. Finally, $F_{rgb}^{glo}$ and this pooled feature are summed linearly to obtain $F_{rgb}^{eglo}$.

首先,统一的空域输出特征 $F_{r g b}^{d}$ 通过批归一化层(BN)进行归一化处理。同时,根据BN权重计算向量$v_{bn}$。使用BN对特征 $F_{r g b}^{d}$ 进行归一化后,将其与$v_{bn}$相乘得到新特征,并通过自适应平均池化层生成新的权重矩阵。最终通过线性叠加 $F_{r g b}^{g l o}$ 与该特征,获得 $F_{r g b}^{e g l o}$。

Then, we derive the calculation process from the perspective of the formula. We first define the BN layer calculation, as in Eq. 4:

接着,我们从公式角度推导计算过程。首先定义BN层计算,如式4所示:

$$

B N(x)=\gamma\frac{x-\mu_{b}}{\sqrt{\sigma_{b}^{2}+\varepsilon}}+\beta

$$

where $\gamma$ is the scale factor, $\beta$ is the shift factor, $\mu_{b}$ and $\sigma_{b}^{2}$ are the mean and variance of one batch, respectively, and $\varepsilon$ is a small hyperparameter that keeps the denominator away from zero.

其中 $\gamma$ 是缩放因子,$\beta$ 是平移因子,$\mu_{b}$ 和 $\sigma_{b}^{2}$ 分别表示一个批次的均值和方差,$\varepsilon$ 是一个超参数。

We define the weight vector $v_{b n}=\lambda_{j}/\sum_{j=0}\lambda_{j}$ ,where $\lambda_{j}$ is the weight factor in the BN layer.

我们将权重向量定义为 $v_{b n}=\lambda_{j}/\sum_{j=0}\lambda_{j}$ ,其中 $\lambda_{j}$ 是 BN (Batch Normalization) 层中的权重因子。

Finally, the enhanced global features are calculated as in Eq. 5:

最后,增强的全局特征按式5计算:

$$

F_{rgb}^{eglo}=F_{rgb}^{glo}+\operatorname{Avg}\left(v_{bn}\cdot BN(F_{rgb}^{d})\right)

$$

where $Avg$ denotes adaptive average pooling.

其中 $A v g$ 是自适应平均池化 (adaptive average pooling) 的计算过程。
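Eq. 4 and Eq. 5 can be combined into a short NumPy sketch. Several points are assumptions rather than details from the paper: the weight factors $\lambda_j$ are taken to be the BN scale parameters $\gamma$ (one per channel), the batch statistics are computed per channel over the batch and spatial axes, the average pooling reduces each map to a single value per channel, and the function names are hypothetical.

```python
import numpy as np

def batch_norm(x, gamma, beta, eps=1e-5):
    """Eq. 4 for x of shape (B, C, H, W), using per-channel batch statistics."""
    mu = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mu) / np.sqrt(var + eps)
    return gamma[None, :, None, None] * x_hat + beta[None, :, None, None]

def enhance_global(f_d, f_glo, gamma, beta):
    """Eq. 5: F^eglo = F^glo + Avg(v_bn * BN(F^d)).

    f_d: (B, C, H, W) unified-space feature, f_glo: (B, C) global feature.
    """
    # Assumption: lambda_j in the definition of v_bn are the BN scale weights gamma.
    v_bn = gamma / gamma.sum()
    weighted = v_bn[None, :, None, None] * batch_norm(f_d, gamma, beta)
    return f_glo + weighted.mean(axis=(2, 3))  # Avg: pool each channel map to one value

# Toy batch: B=4 samples, C=3 channels, 6x2 spatial map; values are random.
rng = np.random.default_rng(0)
f_d = rng.standard_normal((4, 3, 6, 2))
f_glo = f_d.mean(axis=(2, 3))
gamma, beta = np.array([1.0, 2.0, 3.0]), np.zeros(3)
f_eglo = enhance_global(f_d, f_glo, gamma, beta)
```

Under these assumptions, channels with larger BN scale weights contribute more to the added term, which is the sense in which the BN weights act as channel importance. Note that `gamma.sum()` must be nonzero for `v_bn` to be defined.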

D. The Multi-granularity Fusion Loss

D. 多粒度融合损失

The multi-granularity fusion loss proposed in this paper is described in detail below. We use the two-stream network to obtain global and local features and then use them to compute the classification loss and the triplet loss. The classification loss is widely used in ReID; it is a cross-entropy loss computed from pedestrian identity labels and is referred to as the id loss in this paper.

本文提出的多粒度融合损失函数详述如下。我们采用双流网络分别提取全局和局部特征,随后利用这些特征计算分类损失(classification loss)和三重损失(triple loss)。分类损失在行人重识别任务中广泛应用,主要通过行人身份标签计算交叉熵损失,本文将其称为id损失。

First, we define the global and local classification losses $L_{id}^{g}$ and $L_{id}^{lv}$ as in Eq. 6 and Eq. 7, respectively:

首先,我们将全局和局部分类损失分别定义为 $L_{i d}^{g}$ 和 $L_{i d}^{l v}$ ,如式6和式7所示:

$$

L_{id}^{g}=\sum_{i=1}^{N}-q_{i}\log(p_{i}^{g})

$$

$$

L_{id}^{lv}=\sum_{j=2}^{S}\sum_{i=1}^{N}-q_{i}\log(p_{i}^{j})

$$

where $N$ is the total number of identity classes in the training dataset, $q_{i}$ is the true probability distribution of the sample, $S$ is the number of horizontal slices, and $p_{i}^{g}$ and $p_{i}^{j}$ are the predicted probability distributions for the global feature and the $j$-th local slice, respectively.

其中 $N$ 是训练数据集中类别的总数,$q_{i}$ 是样本的真实概率分布,$S$ 是水平切片的数量,$p_{i}^{j}$ 和 $p_{i}^{g}$ 是预测的概率分布。
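A minimal sketch of the two id losses for a single sample follows. It assumes the predicted distributions come from a softmax over classifier logits, which the text does not state, and the function names are hypothetical. The local loss here sums over whichever slice logits are passed in; Eq. 7 as printed starts its sum at $j=2$, so the caller decides which slices to include.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(q, p):
    """sum_i -q_i * log(p_i); classes with q_i == 0 contribute nothing."""
    return sum(-qi * math.log(pi) for qi, pi in zip(q, p) if qi > 0)

def id_losses(q, global_logits, local_logits):
    """Return (L_id^g, L_id^lv) for one sample.

    q: true distribution over N classes, global_logits: N logits,
    local_logits: one list of N logits per horizontal slice.
    """
    l_g = cross_entropy(q, softmax(global_logits))
    l_lv = sum(cross_entropy(q, softmax(z)) for z in local_logits)
    return l_g, l_lv

# Three classes, true class 1, uninformative logits: each term equals log(3).
l_g, l_lv = id_losses([0, 1, 0], [0.0, 0.0, 0.0], [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]])
```

With a one-hot $q$, each term reduces to the negative log-probability of the true identity, so the local loss grows with the number of slices included.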

For each of the $P$ randomly selected person identities, $K$ visible images and $K$ thermal images are randomly sampled, giving $2\times P\times K$ images in total. We define the heterogeneous center-based triplet loss as in Eq. 8:

对于随机选取的 $P$ 个人物身份,各随机采样 $K$ 张可见光图像和 $K$ 张热成像图像,总计 $2\times P\times K$ 张图像。我们将基于异构中心的三元组损失定义为式 (8