Gallery Filter Network for Person Search

Abstract

In person search, we aim to localize a query person from one scene in other gallery scenes. The cost of this search operation depends on the number of gallery scenes, making it beneficial to reduce the pool of likely scenes. We describe and demonstrate the Gallery Filter Network (GFN), a novel module which can efficiently discard gallery scenes from the search process and benefit scoring for persons detected in the remaining scenes. We show that the GFN is robust under a range of different conditions by testing on different retrieval sets, including cross-camera, occluded, and low-resolution scenarios. In addition, we develop the base SeqNeXt person search model, which improves and simplifies the original SeqNet model. We show that the SeqNeXt+GFN combination yields significant performance gains over other state-of-the-art methods on the standard PRW and CUHK-SYSU person search datasets. To aid experimentation for this and other models, we provide standardized tooling for the data processing and evaluation pipeline typically used for person search research.

1. Introduction

In the person search problem, a query person image crop is used to localize co-occurrences in a set of scene images, known as a gallery. The problem may be split into two parts: 1) person detection, in which all person bounding boxes are localized within each gallery scene, and 2) person re-identification (re-id), in which detected gallery person crops are compared against a query person crop. Two-step person search methods [6,10,14,20,36,44] tackle each of these parts explicitly with separate models. In contrast, end-to-end person search methods [3–5, 7, 9, 13, 15, 19, 21, 22, 26, 29, 38–43, 45] use a single model, typically sharing backbone features for detection and re-identification.

For both model types, the same steps are needed: 1) computation of detector backbone features, 2) detection of person bounding boxes, and 3) computation of feature embeddings for each bounding box, to be used for retrieval. Improvement of person search model efficiency is typically

对于两种模型类型,都需要执行相同的步骤:1) 计算检测器主干特征,2) 检测人物边界框,3) 为每个边界框计算特征嵌入以用于检索。提升人物搜索模型效率通常

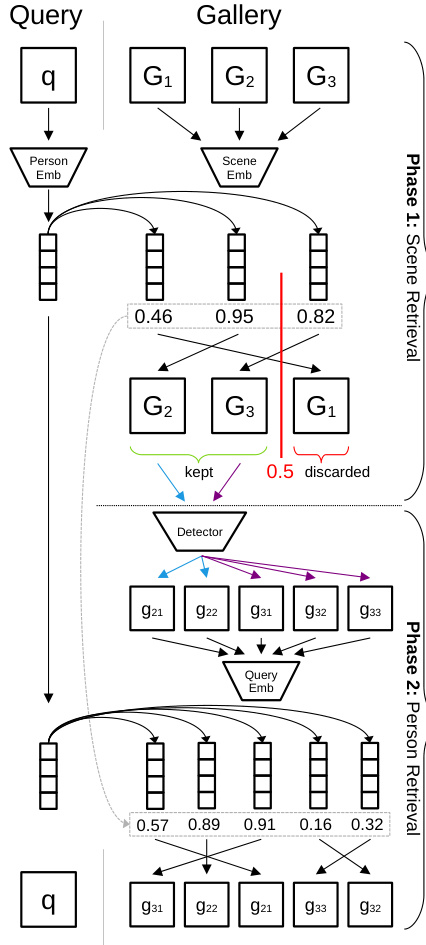

1b. Query-scene scores are computed using cosine similarity of extracted embeddings.

1b. 查询场景分数通过提取嵌入向量的余弦相似度计算。

2a. Detection is performed on highscoring scenes.

2a. 检测在高分场景中进行。

2b. Embeddings are extracted from detected boxes.

2b. 从检测到的边界框中提取嵌入向量 (embeddings)。

1a. Person and scene embeddings are extracted using embedding (Emb) modules. 1c. Using a hard threshold, low-scoring scenes are discarded: no need to perform detection. 2c. Query-detect similarity scores are computed and combined with queryscene scores. 2d. Combined scores are sorted to determine final ranking. Figure 1: An illustration of our proposed two-phase retrieval inference pipeline. In the first phase, the Gallery Filter Network discards scenes unlikely to contain the query person. The second phase is the standard person retrieval process, in which persons are detected, corresponding embeddings extracted, and these embeddings are compared to the query to produce a ranking.

图 1: 我们提出的两阶段检索推理流程示意图。第一阶段,Gallery Filter Network 会过滤掉不太可能包含查询人物的场景。第二阶段是标准的人物检索流程,包括人物检测、对应嵌入向量的提取,以及这些嵌入向量与查询的比对以生成排名。

1a. 使用嵌入 (Emb) 模块提取人物和场景的嵌入向量。

1c. 通过硬阈值过滤低分场景:无需执行检测。

2c. 计算查询-检测相似度分数,并与查询-场景分数结合。

2d. 对综合分数排序以确定最终排名。

focused on reducing the cost of one or more of these steps. We propose the second and third steps can be avoided altogether for some subset of gallery scenes by splitting the retrieval process into two phases: scene retrieval, followed by typical person retrieval. This two-phase process is visualized in Figure 1. We call the module implementing scene retrieval the Gallery Filter Network (GFN), since its function is to filter scenes from the gallery.

By performing the cheaper query-scene comparison before detection is needed, the GFN allows for a modular computational pipeline for practical systems, in which one process can determine which scenes are of interest, and another can detect and extract person embeddings only for interesting scenes. This could serve as an efficient filter for video frames in a high frame rate context, or to cheaply reduce the search space when querying large image databases.
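To make the two-phase flow concrete, here is a minimal Python sketch under stated assumptions: embeddings are plain lists of floats, the GFN score is the cosine similarity between the query and scene embeddings, and `detect_and_embed` is a hypothetical callback standing in for the detector plus re-id head. It is not the authors' implementation; it only shows that the expensive call runs solely for scenes that pass the threshold.

```python
import math


def cosine_sim(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def two_phase_search(query_emb, scene_embs, detect_and_embed, threshold):
    """Phase 1: score every gallery scene against the query, drop low scorers.
    Phase 2: run the (expensive) detector only on the surviving scenes.

    detect_and_embed(scene_idx) is a hypothetical callback that returns the
    person embeddings detected in that scene.
    Returns (list of (scene_idx, re-id score, GFN score), kept scene indices).
    """
    kept = []
    for idx, scene_emb in enumerate(scene_embs):
        s_gfn = cosine_sim(query_emb, scene_emb)
        if s_gfn >= threshold:  # scenes scoring below the threshold are removed
            kept.append((idx, s_gfn))

    results = []
    for idx, s_gfn in kept:
        # Detection and embedding extraction happen only here, for kept scenes
        for person_emb in detect_and_embed(idx):
            results.append((idx, cosine_sim(query_emb, person_emb), s_gfn))
    return results, [idx for idx, _ in kept]
```

For example, with query `[1.0, 0.0]`, scenes `[[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]`, and a threshold of 0.5, the second scene (similarity 0) is discarded, so the detector callback is invoked only for scenes 0 and 2.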

The GFN also provides a mechanism to incorporate global context into the gallery ranking process. Instead of combining global context features with intermediate model features as in [10, 21], we explicitly compare global scene embeddings to query embeddings. The resulting score can be used not only to filter out gallery scenes using a hard threshold, but also to weight predicted box scores for remaining scenes.

We show that both the hard-thresholding and score-weighting mechanisms are effective for the benchmark PRW and CUHK-SYSU datasets, resulting in state-of-the-art retrieval performance (+2.7% top-1 accuracy on the PRW dataset over the previous best model), with improved efficiency (over 50% per-query cost savings on the CUHK-SYSU dataset vs. the same model without the GFN). Additionally, we contribute to the data processing and evaluation frameworks used by most person search methods with publicly available code. That work is described in Supplementary Material Section A.

1.1. Contributions

Our contributions are as follows:

All of our code and model configurations are made publicly available¹.

2. Related Work

Person Search. Beginning with the release of two benchmark person search datasets, PRW [44] and CUHK-SYSU [39], there has been continual development of new deep learning models for person search. Most methods utilize the Online Instance Matching (OIM) Loss from [39] for the re-id feature learning objective. Several methods [21,40,43] enhance this objective using variations of a triplet loss [33].

Many methods make modifications to the object detection sub-module. In [3, 21, 40], a variation of the Feature Pyramid Network (FPN) [24] is used to produce multi-scale feature maps for detection and re-id. Models in [3, 40] are based on the Fully-Convolutional One-Stage (FCOS) detector [34]. In COAT [42], a Cascade R-CNN-style [2] transformer-augmented [35] detector is used to refine box predictions. We use a variation of the single-scale two-stage Faster R-CNN [31] approach from the SeqNet model [22].

Query-Based Search Space Reduction. In [4, 26], query information is used to iteratively refine the search space within a gallery scene until the query person is localized. In [10], Region Proposal Network (RPN) proposals are filtered by similarity to the query, reducing the number of proposals for expensive RoI-pooled feature computations. Our method uses query features to perform a coarser-grained but more efficient search space reduction by filtering out full scenes before expensive detector features are computed.

Query-Scene Prediction. In the Instance Guided Proposal Network (IGPN) [10], a global relation branch is used for binary prediction of query presence in a scene image. This is similar in principle to the GFN prediction, but it is done using expensive intermediate query-scene features, in contrast to our cheaper modular approach to the task.

Backbone Variation. While the original ResNet50 [17] backbone used in SeqNet and most other person search models has been effective to date, many newer architectures have since been introduced. With the recent advent of vision transformers (ViT) [11] and a cascade of improvements, including the Swin Transformer [27] and the Pyramid Vision Transformer v2 [37] (used by the PSTR person search model [3]), transformer-based feature extraction has grown in popularity. However, an efficiency gap with CNN models remains, and newer CNNs such as ConvNeXt [28] have closed the performance gap with ViT-based models while retaining the inherent efficiency of convolutional layers. For this reason, we explore ConvNeXt as a replacement for the ResNet50 backbone, since it is more efficient than ViT alternatives.

3. Methods

3.1. Base Model

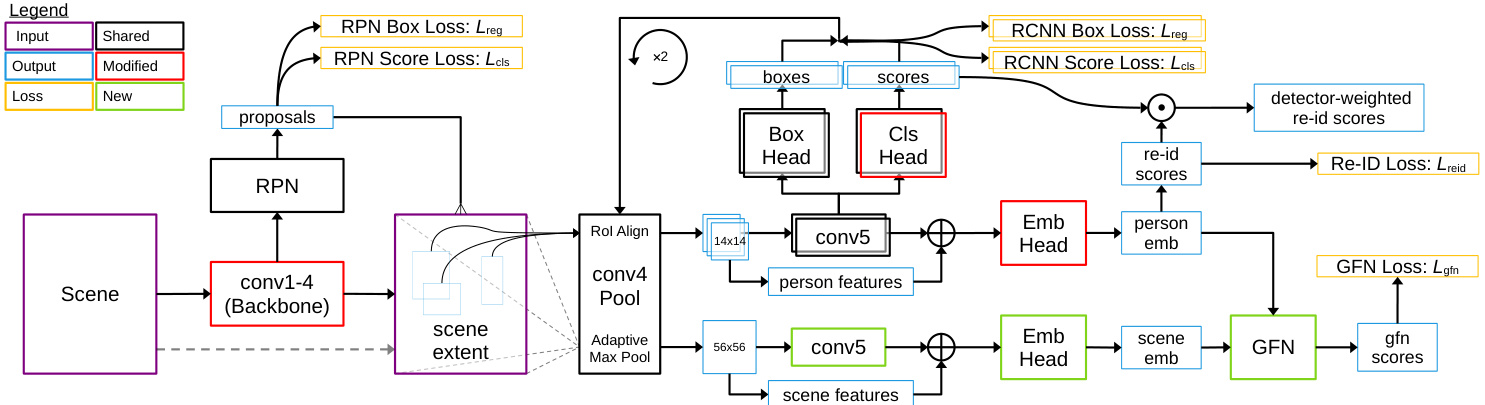

Our base person search model is an end-to-end architecture based on SeqNet [22]. We make modifications to the model backbone, simplify the two-stage detection pipeline, and improve the training recipe, resulting in superior performance. Since the model inherits heavily from SeqNet, and uses a ConvNeXt base, we refer to it simply as SeqNeXt to distinguish it from the original model. Our model, combined with the GFN module, is shown in Figure 2.

Figure 2: Architecture of the SeqNeXt person search model augmented with the GFN. Modules modified from SeqNet are colored red, and new modules, related to the GFN, are colored green. The model follows the standard Faster R-CNN paradigm, with backbone features from conv4 being used to generate proposals via the RPN. conv4 features are pooled for RPN proposals and passed through the conv5 head to generate refined proposals. This process is repeated with the refined proposals to generate the final boxes. conv4 features are also used to generate both person embeddings and scene embeddings in the same way: the person box or scene passes through the pooling block and then a duplicated conv5 head, and conv4, conv5 features are concatenated and passed through an embedding (Emb) head. In the pooling block, RoI Align [16] is used for person and proposal features, while adaptive max pooling is used for scene features. GFN scores are generated using person and scene embeddings from the same or different scenes. Person re-id scores are combined with the score output of the second R-CNN stage to produce detector-weighted scores.

Backbone Features. Following SeqNet’s usage of the first four CNN blocks (conv1-4) from ResNet50 for backbone features, we use the analogous layers in terms of downsampling from ConvNeXt, also referred to as conv1-4 for convenience.

Multi-Stage Refinement and Inference. We simplify the detection pipeline of SeqNet by duplicating the Faster R-CNN head [31] in place of the Norm-Aware Embedding (NAE) head from [7]. We still weight person similarity scores using the output of the detector, but use the second-stage class score instead of the first-stage score as in SeqNet. This is depicted in Figure 2 as “detector-weighted re-id scores”.

Additionally during inference, we do not use the Context Bipartite Graph Matching (CBGM) algorithm from SeqNet, discussed in Supplementary Material Section E.

Augmentation. At training time, after resizing images to $900\times1500$ (Window Resize), we apply one of two random cropping methods with equal probability: 1) Random Focused Crop (RFC): randomly take a $512\times512$ crop at the original image resolution that contains at least one known person; 2) Random Safe Crop (RSC): randomly crop the image such that all persons are contained, then resize the crop to $512\times512$. This cropping strategy allowed us to train with larger batch sizes, while improving performance through stronger regularization. At inference time, we resize to $900\times1500$, as in other models. We also consider a variant of Random Focused Crop (RFC2), which resizes images so that the “focused” person box is not clipped.
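Random Safe Crop is the more mechanical of the two strategies, so a minimal sketch of it follows. It assumes boxes are `(x1, y1, x2, y2)` pixel tuples, that each crop edge is sampled uniformly between the image border and the union of all person boxes, and that the crop is then resized anisotropically to a square; the paper does not specify these sampling or resizing details, and the function name is hypothetical.

```python
import random


def random_safe_crop(img_w, img_h, boxes, out_size=512, rng=random):
    """Hypothetical Random Safe Crop: pick a random window that still contains
    every person box, then map the boxes into an out_size x out_size crop.

    boxes: list of (x1, y1, x2, y2) tuples in pixel coordinates.
    Assumption: uniform edge sampling and an anisotropic resize to a square.
    Returns ((left, top, right, bottom), boxes in resized-crop coordinates).
    """
    # Union of all person boxes: the crop must enclose this region
    ux1 = min(b[0] for b in boxes)
    uy1 = min(b[1] for b in boxes)
    ux2 = max(b[2] for b in boxes)
    uy2 = max(b[3] for b in boxes)

    # Each crop edge may land anywhere between the image border and the union box
    left = rng.uniform(0, ux1)
    top = rng.uniform(0, uy1)
    right = rng.uniform(ux2, img_w)
    bottom = rng.uniform(uy2, img_h)

    # Scale factors for resizing the crop to out_size x out_size
    sx = out_size / (right - left)
    sy = out_size / (bottom - top)
    new_boxes = [
        ((x1 - left) * sx, (y1 - top) * sy, (x2 - left) * sx, (y2 - top) * sy)
        for x1, y1, x2, y2 in boxes
    ]
    return (left, top, right, bottom), new_boxes
```

Passing a seeded `random.Random` instance as `rng` makes the crop reproducible, which is convenient when debugging a data pipeline.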

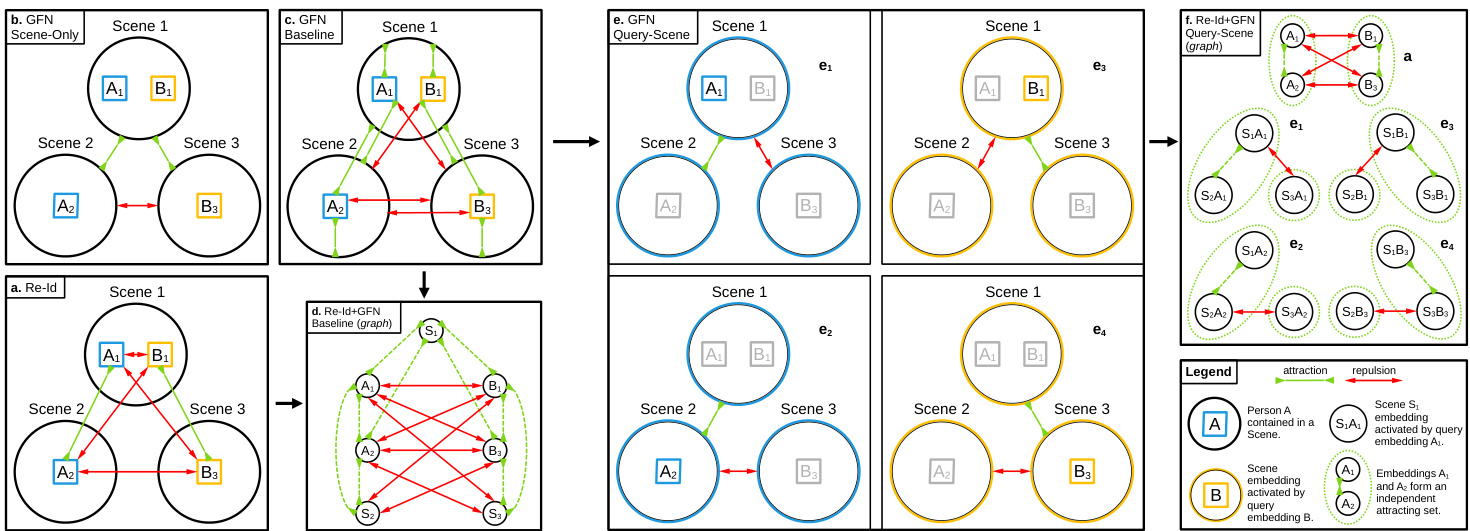

Objective. As in other person search models, we employ the Online Instance Matching (OIM) Loss [39], represented as $\mathcal{L}_{\mathrm{reid}}$ . This is visualized in Figure 3a. For all diagrams in Figure 3, we borrow from the spring analogy for metric learning used in DrLIM [12], with the concept of attractions and repulsions.

The full loss is the sum of the detector, re-id, and GFN losses:

$$

\mathcal{L}=\mathcal{L}_{\mathrm{det}}+\mathcal{L}_{\mathrm{reid}}+\mathcal{L}_{\mathrm{gfn}}

$$

3.2. Gallery Filter Network

Our goal is to design a module which removes low-scoring scenes and reweights boxes from higher-scoring scenes. Let $s_{\mathrm{reid}}$ be the cosine similarity of a predicted gallery box embedding with the query embedding, $s_{\mathrm{det}}$ be the detector box score, $s_{\mathrm{gfn}}$ be the cosine similarity for the corresponding gallery scene from the GFN, $\sigma(x)=\frac{1}{1+e^{-x}}$ be the sigmoid function, $\alpha$ be a temperature constant, and $\lambda_{\mathrm{gfn}}$ be the GFN score threshold. At inference time, scenes scoring below $\lambda_{\mathrm{gfn}}$ are removed, and detection is performed for the remaining scenes, with the final score for detected boxes given by $s_{\mathrm{final}}=s_{\mathrm{reid}}\cdot s_{\mathrm{det}}\cdot\sigma(s_{\mathrm{gfn}}/\alpha)$.
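The inference-time scoring rule translates almost directly into code. The sketch below assumes per-box lists of `s_reid` and `s_det` for a single gallery scene; the function name and the `alpha` and `lam` values used in the usage note are placeholders, not the paper's settings.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def final_scores(s_reid, s_det, s_gfn, alpha, lam):
    """Combined box scores s_reid * s_det * sigmoid(s_gfn / alpha) for one scene.

    Returns None when the scene's GFN score falls below the threshold lam,
    i.e. the scene is filtered out and detection is never run on it.
    """
    if s_gfn < lam:
        return None
    weight = sigmoid(s_gfn / alpha)  # same GFN weight for every box in the scene
    return [r * d * weight for r, d in zip(s_reid, s_det)]
```

With `s_reid=[0.8]`, `s_det=[0.9]`, `s_gfn=0.0`, `alpha=1.0`, and `lam=-0.5`, the scene passes the threshold and the single box scores about 0.36, because the sigmoid of 0 is 0.5. Since the GFN weight is shared by all boxes in a scene, it reorders boxes across scenes but not within one.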

The module should score as many scenes that do not contain the query person as possible below $\lambda_{\mathrm{gfn}}$, while positively impacting the scores of boxes from any remaining scenes. To this end, we consider three variations of the standard contrastive objective [8, 30] in Sections 3.2.1-3.2.3, in addition to a number of architectural and optimization considerations in Section 3.2.4.

Figure 3: Visual representation of the re-id and GFN optimization objectives. In a), b), c), and e), circles represent scene images which contain one or more different person identities, labeled A and B. We show a system of three scenes with two unique person identities. Green connectors represent attraction, meaning two embeddings are pushed together by an objective, and red connectors represent repulsion, meaning two embeddings are pulled apart by an objective. In a) we show the standard re-id loss objective. In b) we show the scene-only GFN objective. In c) we show the baseline GFN objective, and in e) we show the combined query-scene GFN objective. In d) we show the graph form of the baseline GFN objective and re-id objective together, and in f) we show the graph form of the combined query-scene GFN objective and re-id objective together, with green ellipses surrounding independent sets in each multipartite component.

3.2.1 Baseline Objective

The goal of the baseline GFN optimization is to push person embeddings toward scene embeddings when a person is contained within a scene, and to pull them apart when the person is not in the scene, shown in Figure 3c.

Let $x_{i}\in\mathbb{R}^{d}$ denote the embedding extracted from person $q_{i}$ located in some scene $s_{j}$ . Let $y_{j}\in\mathbb{R}^{d}$ denote the embedding extracted from scene $s_{j}$ . Let $X$ be the set of all person embeddings $x_{i}$ , and $Y$ the set of all scene embeddings $y_{j}$ , with $N=|X|,M=|Y|$ .

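We define the query-scene indicator function to denote positive query-scene pairs as

$$

\mathcal{I}_{i,j}^{Q}=\begin{cases}1 & \text{if } q_{i}\in s_{j}\\ 0 & \text{otherwise}\end{cases}

$$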

We then define a set to denote indices for a specific positive pair and all negative pairs:

$$

K_{i,j}^{Q}=\{k\in\{1,\ldots,M\}\mid k=j \ \text{or}\ \mathcal{I}_{i,k}^{Q}=0\}

$$

Define $\mathrm{sim}(u,v)=u^{\top}v/(\|u\|\|v\|)$, the cosine similarity between two vectors $u,v\in\mathbb{R}^{d}$, and let $\tau$ be a temperature constant. Then the loss for a positive query-scene pair is the cross-entropy loss

$$

\ell_{i,j}^{Q}=-\log\frac{\exp(\mathrm{sim}(x_{i},y_{j})/\tau)}{\sum_{k\in K_{i,j}^{Q}}\exp(\mathrm{sim}(x_{i},y_{k})/\tau)}

$$

The baseline Gallery Filter Network loss sums positive pair losses over all query-scene pairs:

$$

\mathcal{L}_{\mathrm{gfn}}^{Q}=\sum_{i=1}^{N}\sum_{j=1}^{M}\mathcal{I}_{i,j}^{Q}\,\ell_{i,j}^{Q}

$$
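As a reading aid, here is a minimal pure-Python sketch of the baseline loss $\mathcal{L}_{\mathrm{gfn}}^{Q}$, assuming embeddings are lists of floats and the indicator is given as a 0/1 matrix `indicator[i][j]` (person $i$ in scene $j$). It has no autograd and no lookup table, so it only illustrates how the index set $K_{i,j}^{Q}$ restricts each softmax to the positive scene plus the negatives; the function names are hypothetical.

```python
import math


def cosine_sim(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def logsumexp(values):
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def baseline_gfn_loss(person_embs, scene_embs, indicator, tau):
    """L^Q_gfn: sum of ell^Q_{i,j} over all positive (person i, scene j) pairs.

    indicator[i][j] is 1 if person i appears in scene j (I^Q_{i,j}), else 0.
    """
    M = len(scene_embs)
    total = 0.0
    for i, x in enumerate(person_embs):
        logits = [cosine_sim(x, y) / tau for y in scene_embs]
        for j in range(M):
            if not indicator[i][j]:
                continue
            # K^Q_{i,j}: the positive scene j plus every scene without person i
            K = [k for k in range(M) if k == j or not indicator[i][k]]
            # Cross-entropy: -log softmax of the positive logit over K
            total += logsumexp([logits[k] for k in K]) - logits[j]
    return total
```

Other scenes that also contain person $i$ are excluded from the denominator, so they are not treated as negatives for the pair $(i, j)$.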

3.2.2 Combined Query-Scene Objective

While it is possible to train the GFN directly with person and scene embeddings using the loss in Equation 5, we show that this objective is ill-posed without modification. The problem is that we have constructed a system of opposing attractions and repulsions. We can formalize this concept by interpreting the system as a graph $G(V,E)$, visualized in Figure 3d. Let the vertices $V$ correspond to person, scene, and/or combined person-scene embeddings, where an edge in $E$ (red arrow) connecting any two nodes in $V$ represents a negative pair used in the optimization objective. Let any group of nodes connected by green dashed arrows (not edges in $G$) be an independent set, representing positive pairs in the optimization objective. Then each connected component of $G$ must be multipartite, or the optimization problem will be ill-posed by design, as in the baseline objective.

To learn whether a person is contained within a scene while preventing this conflict of attractions and repulsions, we need to apply some unique transformation to query and scene embeddings before the optimization. One such option is to combine a query person embedding separately with the query scene and gallery scene embeddings to produce fused representations. This allows us to disentangle the web of interactions between query and scene embeddings, while still learning the desired relationship, visualized in Figure 3e. The person embedding used to fuse with each scene embedding in a pair is left colored, and the corresponding scenes are colored according to that person embedding. Person embeddings present in scenes which are not used are grayed out.

In the graph-based representation, shown in Figure 3f, this modified scheme using query-scene embeddings always results in a graph comprising some number of star-graph connected components. Since these star-graph components are multipartite (indeed bipartite) by design, the issue of conflicting attractions and repulsions is avoided.

To combine a query and scene embedding into a single query-scene embedding, we define a function $f:\mathbb{R}^{d}\times\mathbb{R}^{d}\rightarrow\mathbb{R}^{d}$, such that $z_{i,j}=f(x_{i},y_{j})$ and $w_{i}=f(x_{i},y^{x_{i}})$, where $y^{x_{i}}$ is the embedding of the scene that person $i$ is present in. Borrowing from SENet [18] and QEEPS [29], we choose a sigmoid-activated elementwise excitation, with $\odot$ denoting the elementwise product. “BN” is a Batch Normalization layer, used to mirror the architecture of the other embedding heads, and $\beta$ is a temperature constant.

$$

f(x,y)=\mathrm{BN}(\sigma(x/\beta)\odot y)

$$
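A minimal sketch of the combination function $f$ on a batch of (query, scene) pairs follows. It assumes training-mode batch statistics and omits the learnable scale and shift of a real Batch Normalization layer, so it illustrates the data flow rather than reproducing the model's layer; the function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def excite(x, y, beta):
    """Elementwise excitation sigma(x / beta) * y, before batch norm."""
    return [sigmoid(a / beta) * b for a, b in zip(x, y)]


def batch_norm(batch, eps=1e-5):
    """Normalize each feature over the batch to zero mean and unit variance.

    Assumption: biased batch variance, no learned affine parameters.
    """
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for k in range(d):
        col = [row[k] for row in batch]
        mean = sum(col) / n
        var = sum((c - mean) ** 2 for c in col) / n
        for r in range(n):
            out[r][k] = (col[r] - mean) / math.sqrt(var + eps)
    return out


def combine(xs, ys, beta):
    """f(x, y) = BN(sigma(x / beta) * y) for a batch of (x, y) pairs."""
    return batch_norm([excite(x, y, beta) for x, y in zip(xs, ys)])
```

The query acts as a soft gate in (0, 1) on each scene feature: a query feature of 0 passes half of the scene feature (`excite([0.0, 0.0], [2.0, 4.0], 1.0)` gives `[1.0, 2.0]`), while strongly positive query features let the corresponding scene features through almost unchanged.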

Other choices are possible for $f$, but the elementwise product is critical, because it excites the features within a scene that are most relevant to a given query, eliciting the relationship shown in Figure 3e.

The loss for a positive query-scene pair is the cross-entropy loss

$$

\ell_{i,j}^{C}=-\log\frac{\exp(\mathrm{sim}(w_{i},z_{i,j})/\tau)}{\sum_{k\in K_{i,j}^{Q}}\exp(\mathrm{sim}(w_{i},z_{i,k})/\tau)}

$$

The query-scene combined Gallery Filter Network loss sums positive pair losses over all query-scene pairs:

$$

\mathcal{L}_{\mathrm{gfn}}^{C}=\sum_{i=1}^{N}\sum_{j=1}^{M}\mathcal{I}_{i,j}^{Q}\,\ell_{i,j}^{C}

$$
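The combined objective differs from the baseline only in what is compared: $w_i = f(x_i, y^{x_i})$ against $z_{i,k} = f(x_i, y_k)$. The sketch below makes that substitution explicit. It assumes a `home_scene[i]` list giving the index of the scene person $i$ was cropped from, and, for brevity, drops the BN layer from $f$; it is an illustration with hypothetical names, not the authors' code.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def cosine_sim(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def f(x, y, beta):
    # Query-scene combination sigma(x / beta) * y; BN omitted (assumption)
    return [sigmoid(a / beta) * b for a, b in zip(x, y)]


def combined_gfn_loss(person_embs, home_scene, scene_embs, indicator, tau, beta):
    """L^C_gfn: sum of ell^C_{i,j} over positive (person i, scene j) pairs."""
    M = len(scene_embs)
    total = 0.0
    for i, x in enumerate(person_embs):
        w = f(x, scene_embs[home_scene[i]], beta)  # w_i = f(x_i, y^{x_i})
        z = [f(x, y, beta) for y in scene_embs]    # z_{i,k} = f(x_i, y_k)
        logits = [cosine_sim(w, z_k) / tau for z_k in z]
        for j in range(M):
            if not indicator[i][j]:
                continue
            # Same index set K^Q_{i,j} as the baseline objective
            K = [k for k in range(M) if k == j or not indicator[i][k]]
            total += logsumexp([logits[k] for k in K]) - logits[j]
    return total
```

Because every comparison for person $i$ is between embeddings that were all gated by the same $x_i$, the negatives for one person never interact with those of another, which is the star-graph structure described above.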

3.2.3 Scene-Only Objective

As a control for the query-scene objective, we also define a simpler objective which uses scene embeddings only, depicted in Figure 3b. This objective attempts to learn the less discriminative concept of whether two scenes share any persons in common, and has the same optimization issue of conflicting attractions and repulsions as the baseline objective. At inference time, it is used in the same way as the other GFN methods.

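We define the scene-scene indicator function to denote positive scene-scene pairs as

$$

\mathcal{I}_{i,j}^{S}=\begin{cases}1 & \text{if } \exists\, q:\ q\in s_{i} \text{ and } q\in s_{j}\\ 0 & \text{otherwise}\end{cases}

$$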

Similar to Section 3.2.1, we define an index set: $K_{i,j}^{S}=\{k\in\{1,\ldots,M\}\mid k=j \ \text{or}\ \mathcal{I}_{i,k}^{S}=0\}$. Then the loss for a positive scene-scene pair is the cross-entropy loss

$$

\ell_{i,j}^{S}=-\log\frac{\exp(\mathrm{sim}(y_{i},y_{j})/\tau)}{\sum_{k\in K_{i,j}^{S}}\exp(\mathrm{sim}(y_{i},y_{k})/\tau)}

$$

The scene-only Gallery Filter Network loss sums positive pair losses over all scene-scene pairs:

$$

\mathcal{L}_{\mathrm{gfn}}^{S}=\sum_{i=1}^{M}\sum_{j=1}^{M}[i\neq j]\,\mathcal{I}_{i,j}^{S}\,\ell_{i,j}^{S}

$$

where $[i\neq j]$ is 1 if $i\neq j$ else 0.
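A minimal sketch of the scene-only loss follows, assuming each scene is described by the set of person identities it contains (so $\mathcal{I}_{i,j}^{S}$ is a set-intersection test) and that embeddings are plain lists of floats. Names are hypothetical; the point is how $[i\neq j]$ and $K_{i,j}^{S}$ interact.

```python
import math


def cosine_sim(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def scene_indicator(scene_ids):
    """I^S[i][j] = 1 if scenes i and j share at least one person identity."""
    M = len(scene_ids)
    return [[1 if scene_ids[i] & scene_ids[j] else 0 for j in range(M)] for i in range(M)]


def scene_only_gfn_loss(scene_embs, scene_ids, tau):
    """L^S_gfn over all ordered pairs of distinct scenes that share a person."""
    S = scene_indicator(scene_ids)
    M = len(scene_embs)
    total = 0.0
    for i in range(M):
        logits = [cosine_sim(scene_embs[i], y) / tau for y in scene_embs]
        for j in range(M):
            if i == j or not S[i][j]:  # the factor [i != j] * I^S_{i,j}
                continue
            # K^S_{i,j}: scene j plus every scene sharing no person with scene i
            K = [k for k in range(M) if k == j or not S[i][k]]
            total += logsumexp([logits[k] for k in K]) - logits[j]
    return total
```

A scene that contains at least one person always shares identities with itself, so it never appears in its own denominator.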

3.2.4 Architecture and Optimization

We consider a number of design choices for the architecture and optimization strategy of the GFN to improve its performance.

Architecture. Scene embeddings are extracted in the same way as person embeddings, except that a larger $56\times56$ pooling size with adaptive max pooling is used vs. the person pooling size of $14\times14$ with RoI Align. This larger scene pooling size is needed to adequately summarize scene information, since the scene extent is much larger than a typical person bounding box. In addition, the scene conv5 head and Emb Head are duplicated from the corresponding person modules (no weight-sharing), shown in Figure 2.
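Adaptive max pooling maps a feature map of any spatial size to a fixed grid, which is what lets whole scenes of varying shape be summarized at $56\times56$. The sketch below shows the bin arithmetic on a single 2-D channel using the floor/ceil boundary convention of PyTorch's `AdaptiveMaxPool2d`; a real backbone feature map would be pooled per channel, and the function name is hypothetical.

```python
def adaptive_max_pool2d(feat, out_h, out_w):
    """Max-pool a 2-D grid (list of rows) to a fixed out_h x out_w size.

    Bin i along an axis of length n covers [floor(i*n/out), ceil((i+1)*n/out)),
    so bins may overlap and they always cover the whole input.
    """
    in_h, in_w = len(feat), len(feat[0])
    out = []
    for i in range(out_h):
        h0 = (i * in_h) // out_h
        h1 = ((i + 1) * in_h + out_h - 1) // out_h  # integer ceiling
        row = []
        for j in range(out_w):
            w0 = (j * in_w) // out_w
            w1 = ((j + 1) * in_w + out_w - 1) // out_w
            row.append(max(feat[r][c] for r in range(h0, h1) for c in range(w0, w1)))
        out.append(row)
    return out
```

For a $4\times4$ map holding 0 to 15 in row-major order, pooling to $2\times2$ returns `[[5, 7], [13, 15]]`. When the output grid is larger than the input, bins simply repeat input cells.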

Lookup Table. Similar to the methodology used for the OIM objective [39], we use a lookup table (LUT) to store scene and person embeddings from previous batches, refreshing the LUT fully during each epoch. We compare the person and scene embeddings in each batch, which have gradients, with some subset of the embeddings in the LUT, which do not have gradients. Therefore only comparisons of embeddings within the batch, or between the batch and the LUT, have gradients.
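The lookup table bookkeeping can be sketched as below. This is a hypothetical illustration, assuming one slot per scene (or person) index, overwritten whenever that item appears in a batch. Stored vectors are plain copies, standing in for detached tensors, so comparisons against them would carry no gradient back to earlier batches. How the paper selects the compared subset of the LUT is not specified here, so `negatives` simply returns every filled entry not in the current batch.

```python
class EmbeddingLUT:
    """Hypothetical lookup table holding one embedding per item index."""

    def __init__(self, size, dim):
        self.table = [[0.0] * dim for _ in range(size)]
        self.filled = [False] * size

    def update(self, indices, embeddings):
        # Overwrite the entries for items seen in this batch. Over one epoch
        # every item is visited once, so the table is fully refreshed.
        for idx, emb in zip(indices, embeddings):
            self.table[idx] = list(emb)  # copy: plays the role of .detach()
            self.filled[idx] = True

    def negatives(self, exclude):
        """Stored (index, embedding) pairs for items not in the current batch."""
        skip = set(exclude)
        return [(i, e) for i, e in enumerate(self.table) if self.filled[i] and i not in skip]
```

In a PyTorch training loop the same role would typically be played by a buffer tensor updated with detached batch embeddings.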

Query Prototype Embeddings. Rather than using person embeddings directly from a given batch, we can use the identity prototype embeddings stored in the OIM LUT, similar to [19]. To do so, during training we look up the prototype embedding for each batch person's identity in the OIM LUT and substitute it into the objective. In doing so, we discard gradients from batch person embeddings, meaning that we only pass gradients through scene embeddings, and therefore only update the scene embedding module. This choice is examined in an ablation in Section 4.4.

4. Experiments and Analysis

4.1. Datasets and Evaluation

Datasets. For our experiments, we use the two standard person search datasets, CUHK-SYSU [39] and Person Re-identification in the Wild (PRW) [44]. CUHK-SYSU comprises a mixture of imagery from hand-held cameras and shots from movies and TV shows, resulting in significant visual diversity. It contains 18,184 scene images annotated with 96,143 person bounding boxes from tracked (known) and untracked (unknown) persons, with 8,432 known identities. PRW comprises video frames from six surveillance cameras at Tsinghua University in Beijing. It contains 11,816 scene images annotated with 43,110 person bounding boxes from known and unknown persons, with 932 known identities.

The standard test retrieval partition for the CUHK-SYSU dataset has 2,900 query persons, with a gallery size of 100 scenes per query. The standard test retrieval partition for the PRW dataset has 2,057 query persons and uses all 6,112 test scenes in the gallery, excluding the query scene itself. For a more robust analysis, we additionally divide the given train set into separate train and validation sets, as further discussed in Supplementary Material Section A.

Evaluation Metrics. As in other works, we use the standard re-id metrics of mean average precision (mAP) and top-1 accuracy (top-1). For detection metrics, we use recall and average precision at 0.5 IoU (Recall, AP).

In addition, we report GFN mAP and top-1, which treat the GFN scores as a scene retrieval ranking. To compute these values, we calculate the GFN score of each gallery scene for a query, and consider a gallery scene a match if the query person is present in it.
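The per-query scene retrieval metrics can be sketched as follows, assuming standard non-interpolated average precision (the mean of precision at each matching rank) and that every matching scene appears in the ranked gallery. The evaluation code used for the reported numbers may differ in details such as tie handling; the function name is hypothetical.

```python
def scene_retrieval_metrics(gfn_scores, matches):
    """Average precision and top-1 for one query's ranked gallery scenes.

    gfn_scores[k]: GFN score of gallery scene k for this query.
    matches[k]: True if the query person appears in scene k.
    """
    # Rank scenes by descending GFN score
    order = sorted(range(len(gfn_scores)), key=lambda k: gfn_scores[k], reverse=True)
    hits, precisions = 0, []
    for rank, k in enumerate(order, start=1):
        if matches[k]:
            hits += 1
            precisions.append(hits / rank)  # precision at this matching rank
    ap = sum(precisions) / len(precisions) if precisions else 0.0
    top1 = 1.0 if order and matches[order[0]] else 0.0
    return ap, top1
```

Averaging `ap` and `top1` over all queries gives GFN mAP and top-1. For scores `[0.9, 0.8, 0.7]` with matches `[True, False, True]`, the precisions at the two matches are 1 and 2/3, so AP is 5/6.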

4.2. Implementation Details

We use the SGD optimizer with momentum for ResNet models, with a starting learning rate of 3e-3, and Adam for ConvNeXt models, with a starting learning rate of 1e-4. We train all models for 30 epochs, reducing the learning rate by a factor of 10 at epochs 15 and 25. Gradients are clipped to a norm of 10 for all models.
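The schedule and clipping rule can be expressed in a few lines. This framework-free sketch assumes 0-indexed epochs, with the decay applied from epoch 15 onward, and clipping by the global L2 norm over all gradients; in PyTorch the same ideas are usually expressed with `torch.optim.lr_scheduler.MultiStepLR` and `torch.nn.utils.clip_grad_norm_`, though the paper does not state which utilities were used.

```python
import math


def lr_at_epoch(base_lr, epoch, milestones=(15, 25), gamma=0.1):
    """Step schedule: multiply the LR by gamma at each milestone (0-indexed epochs)."""
    return base_lr * gamma ** sum(epoch >= m for m in milestones)


def clip_by_global_norm(grads, max_norm=10.0):
    """Rescale a list of gradient vectors so their joint L2 norm is at most max_norm."""
    total = math.sqrt(sum(g * g for vec in grads for g in vec))
    if total <= max_norm:
        return grads
    scale = max_norm / total
    return [[g * scale for g in vec] for vec in grads]
```

For the ConvNeXt setting this gives 1e-4 for epochs 0 to 14, 1e-5 for epochs 15 to 24, and 1e-6 for the last five epochs. A gradient of `[30.0, 40.0]` (norm 50) is scaled down to roughly `[6.0, 8.0]`.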


Models are trained on a single Quadro RTX 6000 GPU (24 GB VRAM). Training for 30 epochs with the final model configuration takes 11 hours on the PRW dataset and 21 hours on the CUHK-SYSU dataset.


Our baseline model for the ablation studies has a ConvNeXt Base backbone, an embedding dimension of 2,048, and a scene embedding pool size of $56\times56$, and is trained with $512\times512$ image crops using the combined cropping strategy $(\mathrm{RSC}{+}\mathrm{RFC})$. It uses the combined prototype feature version of the GFN objective. The final model configuration, used for comparison with other state-of-the-art models, is trained with $640\times640$ image crops using the altered combined cropping strategy $(\mathrm{RSC}{+}\mathrm{RFC2})$. It uses the combined batch feature version of the GFN objective.
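The two configurations differ in only three settings. The sketch below records them as an immutable config object so the difference can be checked mechanically. The class and field names are hypothetical, and it assumes that the final configuration keeps every setting the text does not say was changed (backbone, embedding dimension, pool size).

```python
from dataclasses import dataclass, fields, replace
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class SearchModelConfig:
    """Hypothetical container for the settings named in the text (field names are illustrative)."""
    backbone: str = "ConvNeXt Base"
    embedding_dim: int = 2048
    scene_pool_size: Tuple[int, int] = (56, 56)
    crop_size: Tuple[int, int] = (512, 512)
    crop_strategy: str = "RSC+RFC"
    gfn_objective: str = "combined prototype"


# Baseline configuration used for the ablation studies.
BASELINE = SearchModelConfig()

# Final configuration: only the settings the text says changed are overridden.
FINAL = replace(BASELINE, crop_size=(640, 640), crop_strategy="RSC+RFC2",
                gfn_objective="combined batch")


def changed_fields(a: SearchModelConfig, b: SearchModelConfig) -> Dict[str, Tuple[Any, Any]]:
    """Return {field: (value_in_a, value_in_b)} for every field that differs."""
    return {
        f.name: (getattr(a, f.name), getattr(b, f.name))
        for f in fields(a)
        if getattr(a, f.name) != getattr(b, f.name)
    }
```

Deriving `FINAL` from `BASELINE` with `dataclasses.replace` keeps the unchanged settings in one place, so an ablation table and the final model cannot silently drift apart.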


| Occluded | mAP | top-1 |
|---|---|---|
| SeqNeXt | 91.1 | 89.8 |
| SeqNeXt+GFN | 92.0 | 90.9 |

| Low-Resolution | mAP | top-1 |
|---|---|---|
| SeqNeXt | 91.4 | 92.4 |
| SeqNeXt+GFN | 92.0 | 93.1 |

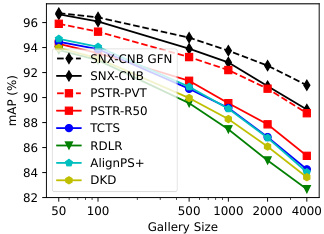

Figure 4: Effect of gallery size on mAP for the CUHK-SYSU dataset. SNX-CNB = SeqNeXt ConvNeXt Base. GFN helps more as gallery size increases.

Table 1: Performance metrics on two CUHK-SYSU retrieval partitions using either Occluded (top) or Low-Resolution (bottom) query persons.


Additional implementation details are given in Supplementary Material Section B.


4.3. Comparison to State-of-the-art


We compare against state-of-the-art methods on the standard benchmarks in Table 2. The GFN benefits all metrics, especially top-1 accuracy on the PRW dataset, which improves by 4.6% for the ResNet50 backbone and 2.9% for the ConvNeXt Base backbone. Our best model, SeqNeXt+GFN with ConvNeXt Base, improves mAP by 1.8% on PRW and 1.2% on CUHK-SYSU over the previous best model, PSTR. This benefit extends to larger gallery sizes on CUHK-SYSU, as shown in Figure 4. In fact, GFN score-weighting helps more as gallery size increases. This is expected: down-weighting contextually unlikely scenes, as opposed to discriminating between persons within a single scene, has a greater effect when the query is compared against more scenes.
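The gallery-size effect follows from how a per-scene weight acts on a person ranking. The sketch below assumes, hypothetically, that each detection's re-id similarity is multiplied by a sigmoid of its scene's GFN score; the exact combination used by the GFN is described earlier in the paper and may differ. Because every person in a scene shares the same positive multiplier, the weighting cannot reorder persons within one scene; it only moves whole scenes up or down, so its influence grows with the number of competing scenes.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def weighted_ranking(detections: List[Tuple[str, str, float]],
                     gfn_scores: Dict[str, float]) -> List[Tuple[str, float]]:
    """Rank gallery persons by re-id similarity times a per-scene GFN weight.

    detections: (person_id, scene_id, reid_similarity) triples.
    gfn_scores: raw GFN score per scene; the sigmoid weighting is an assumption.
    """
    weighted = [(pid, sim * sigmoid(gfn_scores[scene])) for pid, scene, sim in detections]
    return sorted(weighted, key=lambda item: item[1], reverse=True)


# A confuser in a contextually unlikely scene narrowly beats the true match on re-id alone.
detections = [("match", "scene_a", 0.70), ("confuser", "scene_b", 0.75)]
gfn_scores = {"scene_a": 2.0, "scene_b": -2.0}
print(weighted_ranking(detections, gfn_scores)[0][0])  # match
```

Here the weights are about 0.88 and 0.12, giving weighted scores of about 0.62 for the true match and 0.09 for the confuser.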


The GFN benefits CUHK-SYSU retrieval scenarios with occluded or low-resolution query persons, as shown in Table 1. This indicates that high-quality views of the query person are not essential for the GFN to work.


The GFN also benefits both cross-camera and same-camera retrieval, as shown in Table 3. Strong cross-camera performance shows that the GFN generalizes to varying locations and does not simply pick the most visually similar scene. Strong same-camera performance shows that the GFN is able to use query information even when all gallery scenes are contextually similar.
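The same-camera and cross-camera settings are obtained by partitioning each query's gallery by camera. A minimal sketch, assuming PRW frame names follow the pattern `c<camera>s<sequence>_<frame>.jpg` (for example `c1s1_000151.jpg`); the helper names are hypothetical. Each partition can then be scored with the usual mAP and top-1 metrics.

```python
import re
from typing import List, Tuple

# Assumed PRW naming convention: camera index follows "c", sequence index follows "s".
_PRW_NAME = re.compile(r"^c(\d+)s\d+_")


def camera_id(frame_name: str) -> int:
    """Parse the camera index from a PRW-style frame name such as 'c1s1_000151.jpg'."""
    match = _PRW_NAME.match(frame_name)
    if match is None:
        raise ValueError(f"unrecognized frame name: {frame_name}")
    return int(match.group(1))


def split_gallery_by_camera(query_scene: str,
                            gallery: List[str]) -> Tuple[List[str], List[str]]:
    """Split a gallery into same-camera and cross-camera scenes relative to the query scene."""
    query_cam = camera_id(query_scene)
    same = [scene for scene in gallery if camera_id(scene) == query_cam]
    cross = [scene for scene in gallery if camera_id(scene) != query_cam]
    return same, cross
```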


To showcase these benefits, we provide some qualitative results in Supplementary Material Section C. These examples show that the GFN uses local person information combined with global context to improve retrieval ranking, even in the presence of difficult confusers.


4.4. Ablation Studies


We conduct a series of ablations using the PRW dataset to show how detection, re-id, and GFN performance are each impacted by variations in model architecture, data augmentation, and GFN design choices.


Table 4: Comparison of different options for the GFN optimization objective. “None” does not use the GFN, Scene-Only uses the objective in Section 3.2.3, Base uses the baseline objective in Section 3.2.1, Combined (Comb.) uses the query-scene objective in Section 3.2.2, Batch indicates that batch query embeddings are used, and Proto indicates that prototype query embeddings are used. The baseline model is marked with $\dagger$, and the final model is highlighted in gray.


| Method | Backbone | CUHK-SYSU mAP | CUHK-SYSU top-1 | PRW mAP | PRW top-1 |
|---|---|---|---|---|---|
| Two-step | | | | | |
| IDE [44] | ResNet50 | - | - | | |
| MGTS [6] | VGG16 | 83.0 | 83.7 | | |
| CLSA [20] | ResNet50 | 87.2 | 88.5 | | |
| IGPN [10] | ResNet50 | 90.3 | 91.4 | | |
| RDLR [14] | ResNet50 | 93.0 | 94.2 | | |
| TCTS [36] | ResNet50 | 93.9 | 95.1 | | |
| End-to-end | | | | | |
| OIM [39] | ResNet50 | 75.5 | 78.7 | | |
| IAN [38] | ResNet50 | 76.3 | 80.1 | | |
| NPSM [26] | ResNet50 | 77.9 | 81.2 | | |
| RCAA [4] | ResNet50 | 79.3 | 81.3 | | |
| CTXG [41] | ResNet50 | 84.1 | 86.5 | | |
| QEEPS [29] | ResNet50 | 88.9 | 89.1 | | |
| APNet [45] | ResNet50 | 88.9 | 89.3 | | |
| HOIM [5] | ResNet50 | 89.7 | 90.8 | | |
| BINet [9] | ResNet50 | 90.0 | 90.7 | | |
| NAE+ [7] | ResNet50 | 92.1 | 92.9 | | |
| PGSFL [19] | ResNet50 | 92.3 | 94.7 | | |
| DKD [43] | ResNet50 | 93.1 | 94.2 | | |
| DMRN [15] | ResNet50 | 93.2 | 94.2 | | |
| AGWF [13] | ResNet50 | 93.3 | 94.2 | | |
| AlignPS [40] | ResNet50 | 94.0 | 94.5 | | |
| SeqNet [22] | ResNet50 | 93.8 | 94.6 | | |
| SeqNet+CBGM [22] | ResNet50 | 94.8 | 95.7 | | |
| COAT [42] | ResNet50 | 94.2 | 94.7 | | |
| COAT+CBGM [42] | ResNet50 | 94.8 | 95.2 | | |
| MHGAM [21] | ResNet50 | 94.9 | 95.9 | | |
| PSTR [3] | ResNet50 | 94.2 | 95.2 | | |
| PSTR [3] | PVTv2-B2 | 95.2 | 96.2 | | |
| SeqNeXt (ours) | ResNet50 | 94.1 | 94.7 | | |
| SeqNeXt+GFN (ours) | ResNet50 | 94.7 | 95.3 | | |
| SeqNeXt (ours) | ConvNeXt | 96.1 | 96.5 | | |
| SeqNeXt+GFN (ours) | ConvNeXt | 96.4 | 97.0 | | |

Table 2: Standard performance metrics (mAP and top-1 accuracy) of state-of-the-art two-step and end-to-end models on the CUHK-SYSU and PRW benchmarks. ConvNeXt backbone = ConvNeXt Base.

Table 3: Performance on the PRW test set for query and gallery scenes from the same camera (Same Cam ID) or different cameras (Cross Cam ID).


| Method | Same Cam ID mAP | Same Cam ID top-1 | Cross Cam ID mAP | Cross Cam ID top-1 |
|---|---|---|---|---|
| HOIM [5] | - | - | | |
| NAE+ [7] | - | - | | |
| SeqNet [22] | - | - | 43.6 | |
| SeqNet+CBGM [22] | - | - | 44.3 | |
| AGWF [13] | - | - | 48.0 | |
| COAT+CBGM [42] | - | - | 51.7 | |
| SeqNeXt (ours) | 82.9 | 98.5 | 55.3 | |
| SeqNeXt+GFN (ours) | 85.1 | 98.6 | 56.4 | |

In the corresponding metrics tables, we report re-id results computed with the GFN-modified scores as mAP and top-1, and the change relative to the unmodified mAP and top-1 as $\Delta\mathrm{mAP}$ and $\Delta$top-1. This isolates the change in re-id performance due specifically to GFN score-weighting. We mark the baseline configuration in a table with the $\dagger$ symbol and highlight the final model configuration in gray.


| GFN Objective | Detection Recall | Detection AP | Re-id mAP | Re-id top-1 | Re-id $\Delta$mAP | Re-id $\Delta$top-1 | GFN mAP | GFN top-1 |
|---|---|---|---|---|---|---|---|---|
| None | 96.0 | 93.6 | 58.6 | 88.7 | - | - | - | - |
| Scene-Only | 96.0 | 93.4 | 56.5 | 91.9 | -0.9 | +2.8 | 16.1 | 73.3 |
| Base Batch | 95.7 | 93.1 | 53.9 | 86.6 | -2.6 | -2.0 | 23.8 | 58.4 |
| Base Proto | 96.0 | 93.6 | 55.0 | 86.2 | -3.0 | -2.7 | 22.9 | 57.8 |
| Comb. Batch | 96.2 | 93.6 | 59.5 | 92.2 | +1.1 | +2.9 | 20.5 | 78.8 |
| Comb. Proto$^{\dagger}$ | 96.0 | 93.4 | 58.8 | 92.3 | +1.1 | +3.5 | 20.4 | 78.5 |

Results for most of the ablations are shown in Supplementary Material Section D, including model modifications, image augmentation, scene pooling size, embedding dimension, and GFN sampling.


GFN Objective. We analyze the impact of the various GFN objective choices discussed in Section 3.2; comparisons are shown in Table 4. Most importantly, re-id mAP without the GFN is relatively high, but re-id top-1 is much lower than with the best GFN methods. Conversely, the Scene-Only method achieves competitive re-id top-1 but reduced re-id mAP.


The Base methods were found to be significantly worse than all other methods, with GFN score-weighting actually reducing re-id performance. The Combined methods were the most effective: they outperform the Base and Scene-Only methods on the re-id metrics and on GFN top-1, showcasing the improvements discussed in Section 3.2.2. The success of the Combined objective can be explained by two factors: 1) the similarity relationship between scene embeddings and 2) the query information given by query-scene embeddings. The Scene-Only objective, which uses only the similarity between scene embeddings, is functional but not as effective as the Combined objective, which uses both scene similarity and query information. Since the Scene-Only objective incorporates background information but does not use query information, we reason that the additional benefit of the Combined objective comes from the described mechanism of query excitation of scene features, and not from, e.g., simple matching of the query background with the gallery scene image.
