Knowledge Graphs and Pre-trained Language Models enhanced Representation Learning for Conversational Recommender Systems

Abstract—Conversational recommender systems (CRS) use natural language interactions and dialogue history to infer user preferences and provide accurate recommendations. Because conversation context and background knowledge are limited, existing CRSs rely on external sources such as knowledge graphs to enrich the context and model entities based on their interrelations. However, these methods ignore the rich intrinsic information within entities. To address this, we introduce the Knowledge-Enhanced Entity Representation Learning (KERL) framework, which leverages both a knowledge graph and a pre-trained language model to improve the semantic understanding of entities for CRS. In KERL, entity textual descriptions are encoded by a pre-trained language model, while a knowledge graph reinforces the representations of these entities. We also employ positional encoding to capture the temporal order of entities in a conversation. The enhanced entity representations are then used to build a recommender component that fuses entity and contextual representations for more informed recommendations, and a dialogue component that includes informative entity-related content in the response text. To facilitate our study, we construct a high-quality knowledge graph with aligned entity descriptions, the Wiki Movie Knowledge Graph (WikiMKG). Experimental results show that KERL achieves state-of-the-art results in both recommendation and response generation. Our code is publicly available at https://github.com/icedpanda/KERL.

Index Terms—Pre-trained language model, conversational recommender system, knowledge graph, representation learning.

I. INTRODUCTION

This research was supported in part by the Australian Research Council (ARC) under grants FT 210100097 and DP 240101547, and the CSIRO – National Science Foundation (US) AI Research Collaboration Program. Zhangchi Qiu is supported by the Griffith University Postgraduate Research Scholarship. Manuscript received xx; revised xxx; accepted xxx. Date of publication xxx; date of current version xxx. (Corresponding author: Alan Wee-Chung Liew.)

Zhangchi Qiu, Ye Tao, Shirui Pan, and Alan Wee-Chung Liew are with the School of Information and Communication Technology, Griffith University, Gold Coast, Queensland 4222, Australia (e-mail: {zhangchi.qiu, griffithuni.edu.au; {s.pan, a.liew}@griffith.edu.au).

TABLE I: An illustrative example of a chatbot-user conversation on movie recommendations, with items (movies) in italic blue font and entities (e.g., movie genres) in italic red font.

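| Speaker | Utterance |
| --- | --- |
| User | Hi, I really love comedies. Could you recommend one? Something in the style of *Grease (1978)*? |
| Chatbot | Have you seen *Step Brothers (2008)*? It's hilarious. |
| User | Yes, it was great! Maybe a musical this time? Something like *Chicago (2002)*? |
| Chatbot | If you like that genre, you should love *Wedding Crashers (2005)*. It has a very similar style. |
| User | I haven't seen that one yet. Sounds perfect! Thanks! |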

These methods have several drawbacks. They may yield recommendations that are misaligned with the user's current interests, often suggesting items similar to those the user previously interacted with. Furthermore, they are poor at capturing sudden changes in user preferences, which makes them less responsive to the user's evolving interests.

These drawbacks motivated the development of conversational recommender systems (CRSs) [6]–[10], which address the limitations of traditional recommender systems by using natural language processing (NLP) techniques to engage users in multi-turn dialogues. This allows a CRS to elicit user preferences and provide personalized recommendations, with accompanying explanations, that are better aligned with the user's current situation. In the example shown in Table I, a CRS aims to recommend a movie suitable for the user. To accomplish this, the CRS first offers a recommendation based on the user's expressed preferences. As the user provides feedback, the CRS refines its understanding of the user's current interests and adjusts its recommendations accordingly, yielding more personalized and relevant suggestions.

To develop an effective CRS, existing studies [8], [9], [11] have explored integrating external data sources, such as knowledge graphs (KGs) and relevant reviews [10], to supplement the limited contextual and background information in dialogues. These systems extract entities from the conversation history and search the knowledge graph for relevant candidate items to recommend.

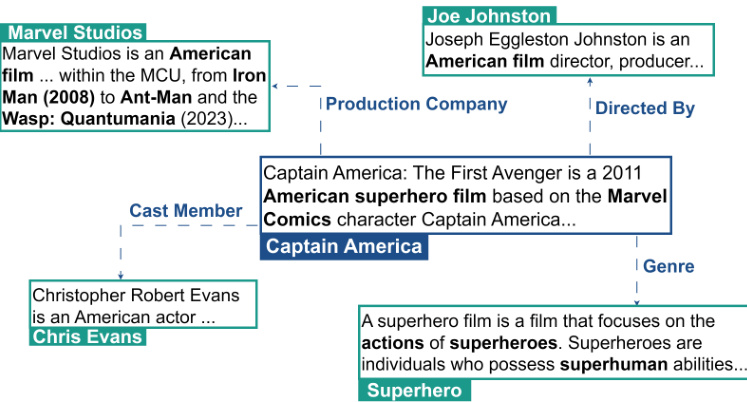

Fig. 1: Example of a KG that incorporates entity descriptions. The descriptions contain rich information that can help improve the semantic understanding of entities.

Despite the advances made by these studies, three main challenges remain to be addressed:

To address these issues, we propose a novel framework that leverages KGs and pre-trained language models (PLMs) for enhanced representation learning in CRSs. We call this framework KERL, short for Knowledge-enhanced Entity Representation Learning. First, we use a PLM to directly encode the textual descriptions of entities into embeddings, which are then combined with graph neural networks to learn entity embeddings that incorporate both topological and textual information. This yields a more comprehensive representation of entities and their relationships (challenge 1). Second, we adopt a positional encoding inspired by the Transformer [12] to account for the order in which entities appear in the context history (challenge 2), along with a conversational history encoder that captures contextual information in the conversation. Combining these two components lets us understand the user's preferences from both entity and contextual perspectives, leading to more informed recommendations that better align with the user's current interests and context. However, because these two user preference embeddings come from different embedding spaces, we employ contrastive learning [13] to pull together two views of the same user, namely entity-level and contextual-level user preferences, while pushing apart views of unrelated users. Finally, we integrate the knowledge-enhanced entity representations with the pre-trained BART [14] model as our dialogue generation module. This lets us exploit the capabilities of BART while incorporating entity knowledge to generate more diverse and informative responses (challenge 3), providing a more comprehensive and engaging user experience.

The contributions can be summarized as follows: (1) We construct a movie knowledge graph (WikiMKG) with entity description information. (2) We use a knowledge-enhanced entity representation learning approach to enrich entity representations so that they capture both topological and textual information. (3) We use positional encoding to capture the order in which entities appear in a conversation, allowing a more precise understanding of the user's current preferences. (4) We adopt a contrastive learning scheme to bridge the gap between entity-level and contextual-level user preferences. (5) We integrate entity descriptions and a pre-trained BART model to compensate for limited contextual information and enable the generation of informative responses.

II. RELATED WORK

A. Conversational Recommender System

With the rapid development of dialogue systems [15]–[19], there has been growing interest in using interactive conversations to better understand users' dynamic intents and preferences. This has led to the rapidly expanding area of conversational recommender systems [6], [20], [21], which aim to provide personalized recommendations through natural language interactions.

In the realm of CRS, one approach uses predefined actions, such as item attributes and intent slots [6], [20], [22], [23], to interact with users. This category of CRS primarily focuses on completing the recommendation task efficiently within a limited number of conversational turns. To this end, these methods adopt reinforcement learning [20], [23]–[25] and multi-armed bandits [6] to help the system find the optimal interaction strategy. However, they still struggle to generate human-like conversations, which are crucial for a more engaging and personalized CRS.

Another category of CRS focuses on generating both accurate recommendations and human-like responses by incorporating a generation-based dialogue component. Li et al. [21] proposed an HRED-based [7] baseline model and released a CRS dataset for movie recommendation. However, the limited contextual information in dialogues makes it challenging to capture user preferences accurately. To address this issue, existing studies introduce entity-oriented knowledge graphs [8], [11], word-oriented knowledge graphs [9], and review information [10]. This information is also used in text generation to provide knowledge-aware responses. Although these integrations have enriched the system's knowledge, effectively fusing this information into the recommendation and generation processes remains a challenge. Zhou et al. [26] therefore proposed a contrastive learning approach to better fuse this external information and improve system performance. Additionally, instead of modeling entity representations with a KG, Yang et al. [27] converted entity metadata into text sentences to reflect the semantic representation of items. However, such an approach cannot capture multi-hop information.

Inspired by the success of PLMs, Wang et al. [28] combined DialoGPT [17] with an entity-oriented KG to seamlessly integrate recommendation into dialogue generation using a vocabulary pointer. Furthermore, Wang et al. [29] introduced a knowledge-enhanced prompt learning approach based on a fixed DialoGPT [17] to perform both recommendation and conversation tasks. These studies do not exploit the information in the textual descriptions of entities or the order in which entities appear in the dialogue. In contrast, our proposed KERL uses a PLM to encode entity descriptions and positional encoding to account for sequence order, leading to a more comprehensive understanding of entities and conversations.

B. Knowledge Graph Embedding

Knowledge graph embedding (KGE) techniques map entities and relations into low-dimensional vector spaces and have evolved beyond simple structural information to include rich semantic context. These embeddings are vital for tasks such as graph completion [30]–[32], question answering [33], [34], and recommendation [35], [36]. Conventional KGE methods such as TransE [37], RotatE [38], and DistMult [39] focus on the structural aspects of KGs and fall into translation-based or semantic matching categories according to their scoring functions [40], [41]. Recent research capitalizes on advances in NLP to encode rich textual information about entities and relations. DKRL [30] was an early adopter, encoding entity descriptions with convolutional neural networks. PretrainKGE [42] advanced this approach further by employing BERT [43] as an encoder, initializing additional learnable knowledge embeddings, and then discarding the PLM after fine-tuning for efficiency. Subsequent work, including KEPLER [44] and JAKET [45], uses PLMs to encode textual descriptions as entity embeddings and jointly optimizes knowledge embedding objectives and masked language modeling. LMKE [46] further introduced a contrastive learning method that significantly improves the learning of PLM-generated embeddings for KGE tasks. Compared with these methods, our work enhances conversational recommender systems by integrating a PLM with a KG to produce enriched knowledge embeddings, which we then align with user preferences inferred from the conversation history. This tailors the embeddings to both the recommendation and generation tasks in CRS.

III. METHODOLOGY

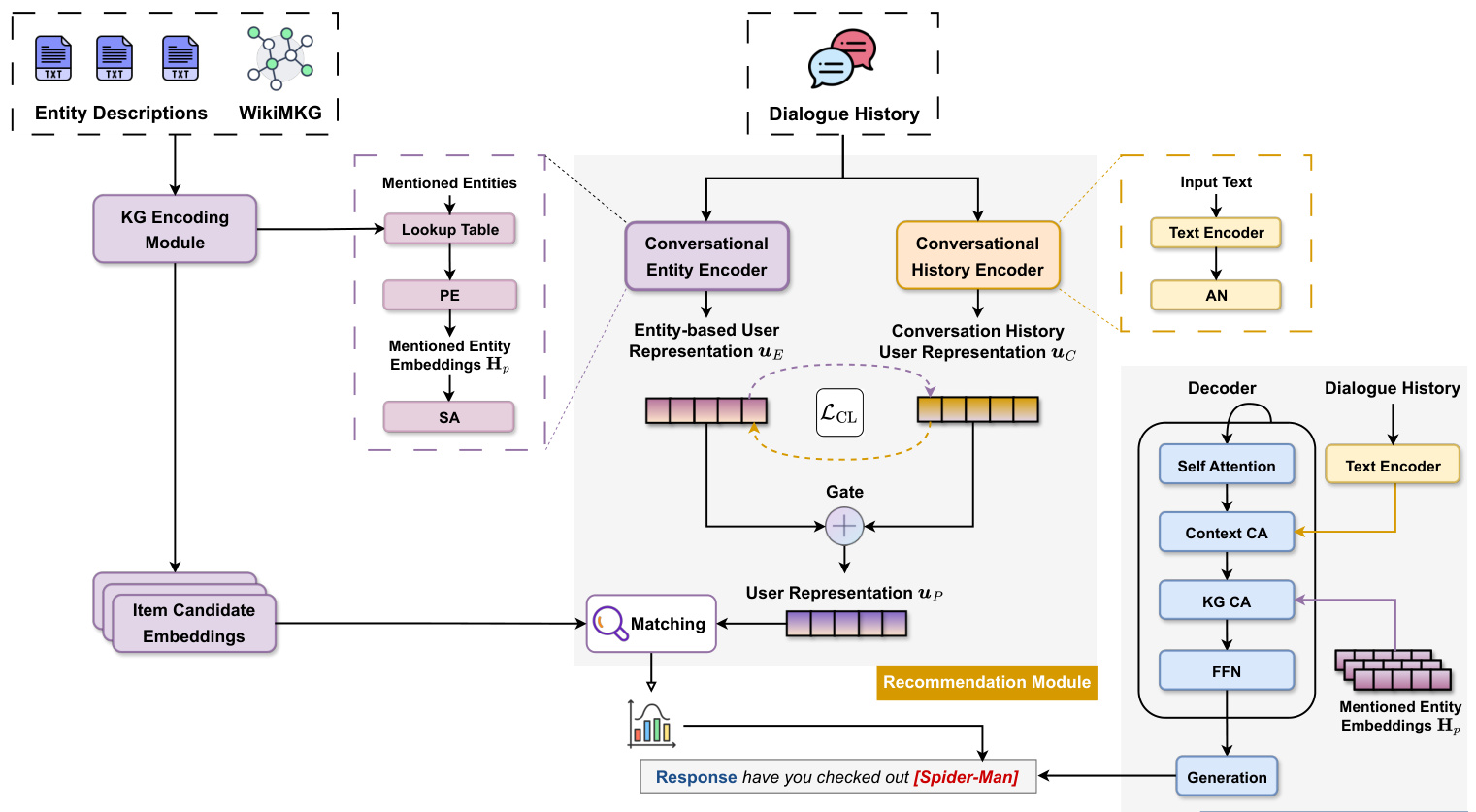

In this section, we present KERL, whose overview is shown in Figure 2. It consists of three main modules: a knowledge graph encoding module, a recommendation module, and a response generation module. We first formalize the conversational recommendation task in Section III-A and give a brief architecture overview in Section III-B. We then describe how entity information is encoded (Section III-C), followed by our approach to the recommendation (Section III-D) and conversation (Section III-E) tasks. Finally, we present the training algorithm of KERL in Section III-F.

A. Problem Formulation

Formally, let $C=\{c_{1},c_{2},\dots,c_{m}\}$ denote the history context of a conversation, where $c_{m}$ is the utterance at the $m$-th round. Each utterance comes from either the seeker (i.e., the user) or the recommender (i.e., the system). At the $m$-th round, the recommendation module selects items $\mathcal{T}_{m+1}\subseteq\mathcal{T}$ from the set of candidate items $\mathcal{T}$ based on the estimated user preference, and the response generation module generates a response $c_{m+1}$ to the prior utterances. Note that $\mathcal{T}_{m+1}$ can be empty when no recommendation is needed (i.e., chit-chat).

For the knowledge graph, let $\mathcal{G}=\{(h,r,t)\mid h,t\in\mathcal{E},\ r\in\mathcal{R}\}$ denote the knowledge graph, where each triplet $(h,r,t)$ describes a relation $r$ between the head entity $h$ and the tail entity $t$. The entity set $\mathcal{E}$ contains all movie items in $\mathcal{T}$ as well as non-item entities that are movie properties (e.g., directors and production companies).
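To make the notation concrete, the following Python sketch models the objects defined above: a conversation history $C$, knowledge-graph triples $(h,r,t)$, the entity set $\mathcal{E}$, and the split of $\mathcal{E}$ into items and non-item properties. The class names, the speaker labels, and the toy triples are hypothetical illustrations, not part of WikiMKG or the KERL code.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Triple:
    """One KG fact (h, r, t): relation r holds between head h and tail t."""
    head: str
    relation: str
    tail: str


@dataclass
class Conversation:
    """History context C = {c_1, ..., c_m}; each turn records its speaker."""
    turns: list = field(default_factory=list)

    def add(self, speaker: str, text: str) -> None:
        if speaker not in ("seeker", "recommender"):
            raise ValueError(f"unknown speaker: {speaker}")
        self.turns.append((speaker, text))


def entity_set(graph: set) -> set:
    """E: every entity that appears as a head or a tail in G."""
    return {t.head for t in graph} | {t.tail for t in graph}


# Hypothetical toy data for illustration only (not taken from WikiMKG).
items = {"Grease (1978)", "Chicago (2002)"}      # candidate items T
graph = {
    Triple("Grease (1978)", "genre", "musical"),
    Triple("Chicago (2002)", "genre", "musical"),
    Triple("Chicago (2002)", "director", "Rob Marshall"),
}
conv = Conversation()
conv.add("seeker", "Something like Grease (1978)?")
entities = entity_set(graph)
non_items = entities - items    # movie properties such as genres and directors
recommended: set = set()        # T_{m+1} may be empty during chit-chat
```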

B. Architecture Overview

This section describes how the knowledge graph encoding module, the knowledge-enhanced recommendation module, and the knowledge-enhanced response generation module work together, as shown in Figure 2. These components jointly process user inputs and generate personalized recommendations, as illustrated below with the scenario of suggesting a superhero movie.

• Knowledge Graph Encoding Module: This module integrates textual descriptions and entity relationships from WikiMKG. When a user mentions an interest in superhero films and says they have watched Avengers: Infinity War, this module extracts and encodes the relevant entity information (e.g., actors, directors, and movie characteristics). This provides a rich understanding of the entities and lays the foundation for contextually aware recommendations.

• Knowledge-enhanced Recommendation Module: This module combines the encoded entity information with the user's conversational history to capture the essence of the ongoing dialogue. For instance, when a user who has been discussing Avengers: Infinity War asks for something different, this module can suggest a new superhero movie that matches their current preferences.

Fig. 2: The overview of the framework of the proposed KERL in a movie recommendation scenario. The Attention Network (AN) selectively focuses on relevant tokens. Positional Encoding (PE) and Self-Attention (SA) mechanisms preserve the sequence order and context, respectively. Context Cross-Attention (CA) and KG Cross-Attention integrate conversational and knowledge graph cues. The Recommendation Module matches items to user preferences, and the Response Generation Module formulates natural language suggestions.

• Knowledge-enhanced Response Generation Module: This final module brings together the insights from the KG and the conversational context to formulate coherent and engaging responses. In our superhero movie scenario, it would generate a natural language suggestion that not only aligns with the user’s expressed interest, but also fits seamlessly into the flow of the conversation. For example, the system suggests “Have you checked out Spider-Man?” as a fresh yet relevant choice.

C. Knowledge Graph Encoding Module

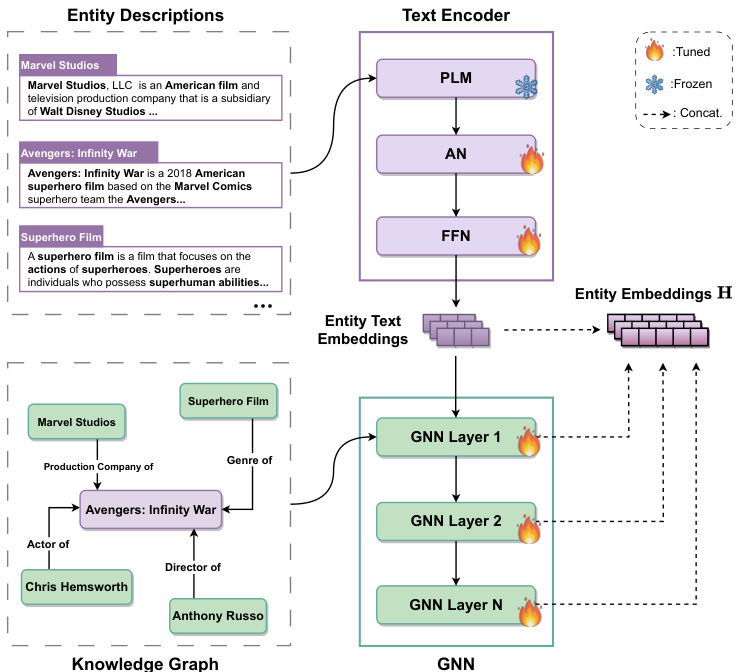

The Knowledge Graph Encoding Module, illustrated in detail in Figure 3, is central to the proposed framework. It is designed to integrate rich textual descriptions with the complex entity relationships in the KG. This integration is crucial because it lets the system capture both the semantic and the structural nuances of entities, providing a solid foundation for the CRS's contextual awareness and recommendation mechanisms.

- PLM for Entity Encoding: Previous studies modeled entity representations based on their relationships with other entities, without taking into account the rich information contained in the entities' textual descriptions. To address this limitation, we propose to leverage these textual descriptions to encode entities into vector representations. Our approach builds on the capabilities of PLMs such as BERT [43], BART [14], and GPT-2 [47], which have demonstrated strong performance on a wide range of NLP tasks. Within our framework, a domain-specific or generic PLM serves as the fundamental component for encoding textual information, and the entity text encoder is designed to be adaptable so that it is compatible with a range of PLMs. In this study, we use a pre-trained DistilBERT [48] as our entity text encoder to capture contextual word representations.

In entity descriptions, different words carry different degrees of importance for representing the entity. Averaging the hidden word representations, which assigns equal weight to all words, may therefore lose crucial information. We thus adopt an attention network that combines multi-head self-attention [12] with an attention-pooling block [49]. This allows the model to focus selectively on the important words in an entity description and to learn more informative entity representations. More specifically, given an entity description $[w_{1},w_{2},\dots,w_{k}]$, where $k$ is the number of words in the description, we first transform the words into embeddings using the BERT model. These embeddings are fed into multiple Transformer [12] layers to produce hidden word representations. We then use an attention network and a feed-forward network to summarize the hidden word representations into a single entity embedding. The attention weights are calculated as follows:

Fig. 3: The Knowledge Graph Encoding Module employs a PLM for textual semantics and a GNN for structural relations. It generates entity embeddings that include item embeddings, which are a subset used for recommendations. “AN” denotes the attention network.

$$
\alpha_{i}^{w}=\frac{\exp\left(\mathbf{q}_{w}^{\top}\sigma(\mathbf{V}_{w}\mathbf{w}_{i}+\mathbf{v}_{w})\right)}{\sum_{j=1}^{k}\exp\left(\mathbf{q}_{w}^{\top}\sigma(\mathbf{V}_{w}\mathbf{w}_{j}+\mathbf{v}_{w})\right)}\tag{1}
$$

where $\alpha_{i}^{w}$ denotes the attention weight of the $i$-th word in the entity description, $\mathbf{w}_{i}$ is the word representation obtained from the top layer of BERT, $\sigma$ is the tanh activation function, $\mathbf{V}_{w}$ and $\mathbf{v}_{w}$ are projection parameters, and $\mathbf{q}_{w}$ is the query vector. To generate the entity embedding, we take the attention-weighted sum of the contextual word representations and pass it through a feed-forward network:

$$
\mathbf{h}_{e}^{d}=\mathrm{FFN}\left(\sum_{i=1}^{k}\alpha_{i}^{w}\mathbf{w}_{i}\right)\tag{2}
$$

where $\mathrm{FFN}(\cdot)$ denotes a fully connected feed-forward network consisting of two linear transformations with an activation layer, and $\mathbf{h}_{e}^{d}$ is the entity embedding that captures the rich information in its textual description. We omit the equations for BERT and multi-head attention.
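A minimal NumPy sketch of Equations 1 and 2 follows. It assumes the top-layer word representations $\mathbf{w}_i$ are already available as rows of a matrix (random stand-ins replace DistilBERT and the extra Transformer layers here), and it assumes a ReLU inside the FFN, since the activation is not specified above. The function names `attention_pool` and `ffn` are hypothetical.

```python
import numpy as np


def attention_pool(W, V_w, v_w, q_w):
    """Eq. (1) plus the weighted sum in Eq. (2); W holds the k word vectors w_i as rows, shape (k, d)."""
    scores = np.tanh(W @ V_w.T + v_w) @ q_w   # q_w^T tanh(V_w w_i + v_w), shape (k,)
    scores = scores - scores.max()            # stabilizes exp; the softmax is unchanged
    alpha = np.exp(scores) / np.exp(scores).sum()
    return alpha, alpha @ W                   # weights (k,), pooled vector (d,)


def ffn(x, W1, b1, W2, b2):
    """Two linear maps with a ReLU in between (the activation is an assumption)."""
    return np.maximum(0.0, x @ W1 + b1) @ W2 + b2


rng = np.random.default_rng(0)
k, d, a, hidden, d_out = 5, 8, 4, 16, 6
W = rng.normal(size=(k, d))   # stand-in for top-layer word representations
V_w, v_w, q_w = rng.normal(size=(a, d)), rng.normal(size=a), rng.normal(size=a)
alpha, pooled = attention_pool(W, V_w, v_w, q_w)
h_d = ffn(pooled, rng.normal(size=(d, hidden)), np.zeros(hidden),
          rng.normal(size=(hidden, d_out)), np.zeros(d_out))   # entity embedding h_e^d
```

Setting $\mathbf{q}_{w}$ to zero gives every word the same weight $1/k$, which reduces the pooling to the plain average discussed above; a learned query lets informative words dominate.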

- Knowledge Graph Embedding: In addition to the textual descriptions of entities, semantic relationships between entities can provide valuable information and context. To capture this information, we adopt Relational Graph Convolutional Networks (R-GCNs) [8], [26], [50] to encode structural and relational information between entities. Formally, the representation of the entity $e$ at the $(\ell{+}1)$ -th layer is calculated as follows:

$$
\mathbf{h}_{e}^{(\ell+1)}=\sigma\left(\sum_{r\in\mathcal{R}}\sum_{e'\in\mathcal{E}_{e}^{r}}\frac{1}{Z_{e,r}}\mathbf{W}_{r}^{(\ell)}\mathbf{h}_{e'}^{(\ell)}+\mathbf{W}_{e}^{(\ell)}\mathbf{h}_{e}^{(\ell)}\right)\tag{3}
$$

where $\mathbf{h}_{e}^{(\ell)}$ is the embedding of entity $e$ at the $\ell$-th layer, and $\mathbf{h}_{e}^{(0)}$ is the textual embedding of the entity produced by the text encoder in Equation 2 (i.e., $\mathbf{h}_{e}^{(0)}=\mathbf{h}_{e}^{d}$). The set $\mathcal{E}_{e}^{r}$ consists of the neighboring entities connected to entity $e$ through relation $r$. $\mathbf{W}_{r}^{(\ell)}$ and $\mathbf{W}_{e}^{(\ell)}$ are learnable parameter matrices, $Z_{e,r}$ is a normalization factor, and $\sigma$ is the activation function. Since the outputs of different layers carry information from different hops, we use the layer-aggregation mechanism [51] to concatenate the representations from all layers into a single vector:

$$
\mathbf{h}_{e}^{*}=\mathbf{h}_{e}^{(0)}\parallel\mathbf{h}_{e}^{(1)}\parallel\cdots\parallel\mathbf{h}_{e}^{(\ell)}\tag{4}
$$

where $\parallel$ denotes concatenation. In this way, propagation enriches the entity embeddings, and the propagation depth can be controlled by adjusting $\ell$. Finally, we obtain the hidden representations of all entities in $\mathcal{G}$, which are further used for user modeling and candidate matching.
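The sketch below illustrates Equations 3 and 4 with NumPy on a toy graph. Several choices are assumptions rather than details stated in the text: $Z_{e,r}$ is taken to be the neighbor count $|\mathcal{E}_{e}^{r}|$, $\sigma$ is ReLU, $\mathbf{W}_{e}^{(\ell)}$ is read as one self-connection matrix shared by all entities (as in the original R-GCN formulation), and every layer keeps the same width. `rgcn_layer` and `encode_entities` are hypothetical names.

```python
import numpy as np


def rgcn_layer(H, edges, W_rel, W_self):
    """One R-GCN layer in the spirit of Eq. (3).

    H: (n, d) embeddings at layer l; edges: (src, rel, dst) triples meaning src is in E_dst^rel;
    W_rel: (num_relations, d, d_out); W_self: (d, d_out) self-connection weight.
    """
    counts = {}
    for _, rel, dst in edges:                 # Z_{e,r} = |E_e^r| (assumed normalizer)
        counts[(dst, rel)] = counts.get((dst, rel), 0) + 1
    out = H @ W_self                          # W_e h_e self-connection term
    for src, rel, dst in edges:               # relation-specific, normalized neighbor messages
        out[dst] += H[src] @ W_rel[rel] / counts[(dst, rel)]
    return np.maximum(0.0, out)               # sigma taken to be ReLU (assumption)


def encode_entities(H0, edges, layers):
    """Stack R-GCN layers and concatenate every layer's output (Eq. 4)."""
    reps, H = [H0], H0
    for W_rel, W_self in layers:
        H = rgcn_layer(H, edges, W_rel, W_self)
        reps.append(H)
    return np.concatenate(reps, axis=1)       # h^(0) || h^(1) || ... || h^(l)


rng = np.random.default_rng(0)
n, d, num_rel = 4, 3, 2
H0 = rng.normal(size=(n, d))                  # stand-in for the textual embeddings h_e^d
edges = [(0, 0, 1), (2, 0, 1), (3, 1, 2)]     # entity 0 receives no incoming edges
layers = [(rng.normal(size=(num_rel, d, d)), rng.normal(size=(d, d))) for _ in range(2)]
H_star = encode_entities(H0, edges, layers)
```

Because layer 0 is kept in the concatenation, the first block of $\mathbf{h}_{e}^{*}$ is exactly the textual embedding, so description information survives however many hops are added.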

To preserve the relational reasoning within the structure of the knowledge graph, we employ the TransE [37] scoring function as our knowledge embedding objective to train our knowledge graph embedding. This widely used method learns to embed each entity and relation by optimizing the translation principle of $h+r\approx t$ for a valid triple $(h,r,t)$ . Formally, the score function $d_{r}$ for a triplet $(h,r,t)$ in the knowledge graph $\mathcal{G}$ is defined as follows:

$$
d_{r}(h,t)=\lVert\mathbf{e}_{h}+\mathbf{r}-\mathbf{e}_{t}\rVert_{p}\tag{5}
$$

where $h$ and $t$ are the head and tail entities, respectively; $\mathbf{e}_{h}$ and $\mathbf{e}_{t}$ are their embeddings from Equation 4; $\mathbf{r}\in\mathbb{R}^{d}$ is the embedding of relation $r$, stored as one row of a $|\mathcal{R}|\times d$ relation embedding matrix; $d$ is the embedding dimension; and $p$ is the order of the norm. Training the knowledge graph embeddings focuses on the relative order of valid and broken triplets and encourages the model to discriminate between them through a margin-based ranking loss [38]:

$$
\begin{aligned}
\mathcal{L}_{\mathrm{KE}}=&-\log\sigma\left(\gamma-d_{r}(h,t)\right)\\
&-\sum_{i=1}^{k}\frac{1}{k}\log\sigma\left(d_{r}(h_{i}',t_{i}')-\gamma\right)
\end{aligned}\tag{6}
$$

where $\gamma$ is a fixed margin, $\sigma$ is the sigmoid function, and $(h_{i}^{'},r,t_{i}^{'})$ are broken triplets that are constructed by randomly corrupting either the head or tail entity of the positive triples $k$ times.
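Equations 5 and 6, together with negative sampling, can be sketched as below. This is an illustrative NumPy version under stated assumptions: entities and relations are integer ids indexing embedding matrices, $p=1$ by default, and `corrupt` does not filter out corruptions that happen to be valid triples (practical implementations often do). All function names are hypothetical.

```python
import numpy as np


def transe_distance(e_h, r, e_t, p=1):
    """Eq. (5): ||e_h + r - e_t||_p (smaller means a more plausible triple)."""
    return np.linalg.norm(e_h + r - e_t, ord=p, axis=-1)


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x)) = -log(1 + exp(-x))."""
    return -np.logaddexp(0.0, -x)


def ke_loss(pos_dist, neg_dists, gamma):
    """Eq. (6): one positive distance and k corrupted-triple distances."""
    return -log_sigmoid(gamma - pos_dist) - np.mean(log_sigmoid(neg_dists - gamma))


def corrupt(triple, num_entities, k, rng):
    """Replace the head or the tail (chosen at random) with a random entity id, k times.

    Filtering out corruptions that happen to be true triples is omitted here.
    """
    h, r, t = triple
    negs = []
    for _ in range(k):
        e = int(rng.integers(num_entities))
        negs.append((e, r, t) if rng.random() < 0.5 else (h, r, e))
    return negs


rng = np.random.default_rng(0)
E, R = rng.normal(size=(10, 4)), rng.normal(size=(3, 4))   # entity / relation embeddings
h, r, t = 0, 1, 2
E[t] = E[h] + R[r]                  # make (0, 1, 2) a perfect translation
negs = corrupt((h, r, t), 10, 5, rng)
pos = transe_distance(E[h], R[r], E[t])
neg = np.array([transe_distance(E[a], R[b], E[c]) for a, b, c in negs])
loss = ke_loss(pos, neg, gamma=2.0)
```

Computing $\log\sigma(x)$ through `np.logaddexp` avoids overflow when distances are far from the margin $\gamma$.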

D. Knowledge-enhanced Recommendation Module

The Knowledge-enhanced Recommendation Module combines the entity-based user representation with the contextual dialogue history to deliver precise, context-aware recommendations. It employs a conversational entity encoder that integrates positional encoding with self-attention to reflect the sequential significance of entities in the dialogue. Complementing this, a conversational history encoder captures the textual nuances of user interactions. A contrastive learning strategy aligns these two representations, sharpening the system's ability to identify the user's preferences and make more personalized recommendations.
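The contrastive objective is only described at a high level here. As a rough illustration, assuming an InfoNCE-style loss with in-batch negatives and cosine similarity (the exact formulation used by KERL may differ), the entity-level and contextual-level views of each user in a batch can be aligned as in the sketch below. Both views are assumed to have already been projected to the same dimension; `info_nce` and the temperature `tau=0.1` are hypothetical choices.

```python
import numpy as np


def info_nce(z_entity, z_context, tau=0.1):
    """InfoNCE over a batch: row i of both matrices belongs to user i.

    The matching row is the positive; every other row in the batch is a negative.
    """
    a = z_entity / np.linalg.norm(z_entity, axis=1, keepdims=True)
    b = z_context / np.linalg.norm(z_context, axis=1, keepdims=True)
    logits = a @ b.T / tau                       # cosine similarity / temperature
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_prob))           # pull positives up, push negatives down


rng = np.random.default_rng(0)
z = rng.normal(size=(8, 16))
aligned = info_nce(z, z)            # both views agree for every user
mismatched = info_nce(z, z[::-1])   # views paired with the wrong users
```

Minimizing this loss raises each user's own cross-view similarity relative to the similarities with other users in the batch, which is the "pull together, push apart" behavior described in the introduction.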

- Conversational Entity Encoder: To effectively capture user preferences with respect to the mentioned entities, we employ positional encoding and a self-attention mechanism [52]. First, we extract the non-item entities and item entities in the conversation history $C$ that match the entity set $\mathcal{E}$. Here, item entities refer to movie items, while non-item entities are movie properties such as the director and production company. We can then represent a user as a set of entities $\mathbf{E}_{u}=\{e_{1},e_{2},\dots,e_{i}\}$, where $e_{i}\in\mathcal{E}$. After looking up the entities in $\mathbf{E}_{u}$ in the entity representation matrix $\mathbf{H}$ (see Equation 4), we obtain the corresponding embeddings $\mathbf{H}_{u}=(\mathbf{h}_{1}^{*},\mathbf{h}_{2}^{*},\dots,\mathbf{h}_{i}^{*})$.
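Since the positional encoding is described as inspired by the Transformer [12], one plausible sketch assumes the fixed sinusoidal encoding from that paper, added to $\mathbf{H}_{u}$ before a single-head scaled dot-product self-attention layer. The function names, the single head, and the random weights are assumptions, and the step that pools the resulting sequence into one user vector is omitted.

```python
import numpy as np


def sinusoidal_pe(n, d):
    """Transformer-style encoding: sin on even dims, cos on odd dims (d must be even)."""
    assert d % 2 == 0
    pos = np.arange(n)[:, None]
    angle = pos / np.power(10000.0, np.arange(0, d, 2)[None, :] / d)
    pe = np.zeros((n, d))
    pe[:, 0::2] = np.sin(angle)
    pe[:, 1::2] = np.cos(angle)
    return pe


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over the entity sequence."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[1])
    scores = scores - scores.max(axis=1, keepdims=True)
    A = np.exp(scores)
    A = A / A.sum(axis=1, keepdims=True)          # row-wise softmax
    return A @ V


rng = np.random.default_rng(0)
n, d = 4, 8
H_u = rng.normal(size=(n, d))       # stand-in for (h_1^*, ..., h_i^*) in mention order
Wq, Wk, Wv = (rng.normal(size=(d, d)) for _ in range(3))
out_with_pe = self_attention(H_u + sinusoidal_pe(n, d), Wq, Wk, Wv)
```

Without the encoding, self-attention is permutation-equivariant: reversing the mentioned entities merely reverses the outputs, so the order of mentions is invisible to the model. Adding the encoding breaks this symmetry, which is what allows earlier and later mentions to be represented differently.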
