Why Is It Hate Speech? Masked Rationale Prediction for Explainable Hate Speech Detection

Abstract

In a hate speech detection model, we should consider two critical aspects in addition to detection performance: bias and explainability. Hate speech cannot be identified solely by the presence of specific words. The model should be able to reason like humans and be explainable. To improve performance on these two aspects, we propose Masked Rationale Prediction (MRP) as an intermediate task. MRP predicts the masked human rationales (snippets of a sentence that are grounds for human judgment) by referring to surrounding tokens combined with their unmasked rationales. Because the model learns to reason from rationales through MRP, it detects hate speech robustly in terms of bias and explainability. The proposed method generally achieves state-of-the-art performance on various metrics, demonstrating its effectiveness for hate speech detection. Warning: This paper contains samples that may be upsetting.

1 Introduction

With the recent growth of social media and online communities, hate speech, a critical social problem, can spread easily. The spread of hate strengthens discrimination and prejudice against the targeted social groups and can violate their human rights. Moreover, online hatred extends offline and can lead to real-world crimes. Therefore, properly regulating online hate speech is important for addressing the many social problems related to aversion.

In addition to detection performance, two essential considerations are involved in implementing a hate speech detection model: bias and explainability. Hate speech should be judged not by any specific word but by the context in which the word is used. A text can be hate speech even if it contains no word generally considered vicious. Conversely, a specific expression does not always imply hatred (e.g., ‘nigger’) (Del Vigna et al., 2017). However, the mere presence of such a word can cause a model to make a biased detection of hate speech. This erroneous judgment may inadvertently strengthen discrimination against the group targeted by the expression (Sap et al., 2019; Davidson et al., 2019). In this respect, the model's bias toward specific expressions should be eliminated.

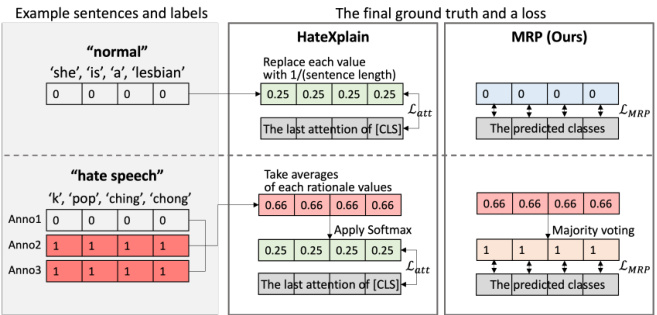

Figure 1: Examples of the two methods for obtaining the final ground truths. Example input sentences are shown with their class and human rationale labels. In this figure, HateXplain uses the same ground truth for both the normal and the hateful sentence when computing the loss, whereas our method can distinguish the two classes from their ground truths.

Expressions that can cause biased judgments should be interpreted in context. That is, hate speech detection models must be able to make judgments based on context, as humans do. Therefore, the model should be explainable to humans so that the rationale behind a result can be explained (Liu et al., 2018). Here, a rationale is a piece of a sentence that justifies the model's prediction about that sentence, as defined in related research (Hancock et al., 2018; Lei et al., 2016).

To the best of our knowledge, HateXplain (Mathew et al., 2020) is the first hate speech detection benchmark dataset that considers both of these aspects. Its authors proposed a method that uses rationales as attention ground truths to improve performance on both aspects. However, when most tokens in a hateful sentence are annotated as the human rationale, the rationale information can become meaningless, because the ground-truth attention becomes hard to distinguish from that of a normal sentence, as shown in Figure 1. This can hinder the model's learning.

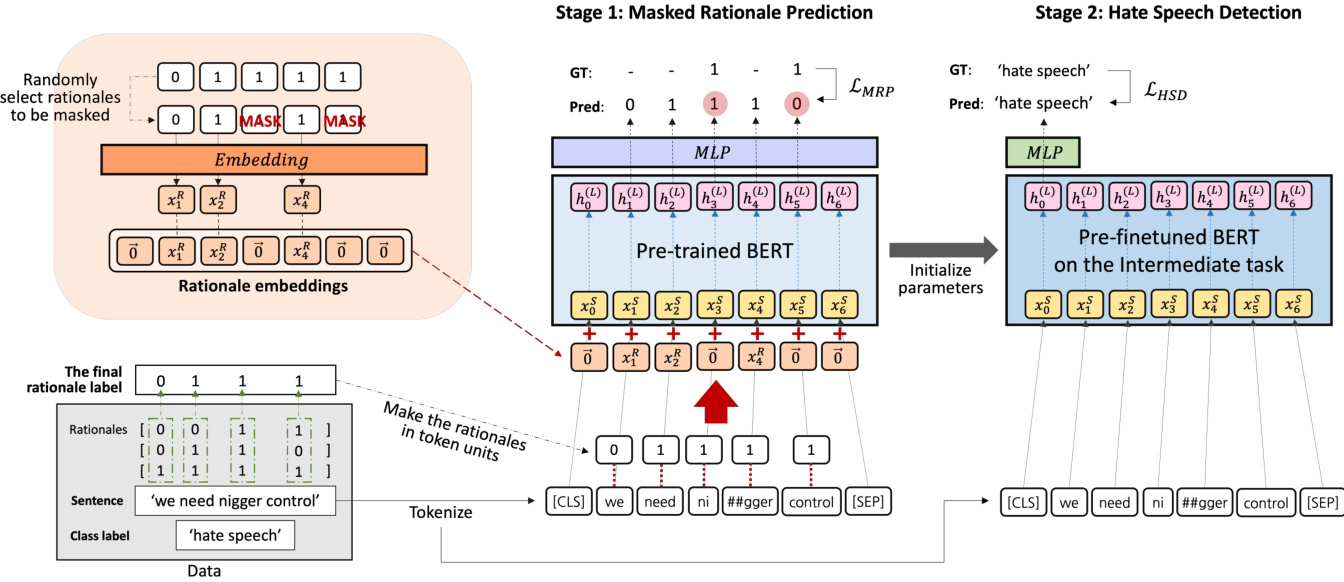

Figure 2: Framework of the proposed method. We finetune a pre-trained BERT in two training stages: Masked Rationale Prediction (MRP), then hate speech detection. In MRP, the partially masked rationale label is converted into rationale embeddings, which are added to BERT's input embeddings. The model predicts the masked rationale of each token. The model for hate speech detection is initialized with the parameters updated during MRP.

In this paper, we present a method that implements a hate speech detection model more effectively by using human rationales of hate to finetune a pre-trained language model. To achieve this, we propose Masked Rationale Prediction (MRP) as an intermediate task before finetuning the model on hate speech detection. MRP trains a model to predict the human rationale label of each token by referring to the context of the input sentence. The model takes as input the sentence tokens together with the human rationale information of some of those tokens. It then predicts the rationales of the remaining tokens, whose rationales are masked. We embed the rationales so that the human rationales are provided as per-token input. The partial rationales are masked while the rationale embeddings are created: the embedding vectors of the masked rationales are replaced with zero vectors.

MRP allows the model to judge the masked rationale of each token by considering the surrounding tokens with unmasked rationales. In this way, the model learns a human-like reasoning process for determining the context-dependent abusiveness of tokens. The model parameters trained on MRP become the initial parameter values for hate speech detection in the following training stage. Building on how humans reason about hate, the model thus gains improved abilities in terms of bias and explainability when detecting hate speech. We experimented with BERT (Devlin et al., 2018) as the pre-trained model. As a result, our models finetuned in the proposed way, BERT-MRP and BERT-RP, achieve state-of-the-art performance overall on the 11 metrics of the HateXplain benchmark, which fall into three types: performance-based, bias-based, and explainability-based (Mathew et al., 2020). The two models, especially BERT-MRP, also show the best results in a qualitative evaluation of explicit and implicit hate speech detection.

The main contributions of this paper are:

• We propose a method that uses human rationales as input by transforming them into rationale embeddings. Combining the embedded rationales with the corresponding input sentence provides per-token information about the human rationales during model training.
• We propose Masked Rationale Prediction (MRP), a learning method that leads the model to predict the masked rationales by considering the surrounding tokens. This allows the model to learn the reasoning process in context.
• We finetune a pre-trained BERT in two stages: on MRP as an intermediate task and then on hate speech detection. The parameters trained on human reasoning about hate become a sufficient basis not only for detection but also for addressing model bias and explainability.

2 Related works

Hate speech detection

With the advance of deep learning, hate speech detection studies have used neural networks (Badjatiya et al., 2017; Han and Eisenstein, 2019) and word embedding methods (McKeown and McGregor, 2018). More recently, Transformer-based (Vaswani et al., 2017) models have shown remarkable results. Because hate speech detection can be treated as a classification task, BERT has been adopted in various hate speech detection studies. Mandl et al. (2019) and Ranasinghe et al. (2019) compared a BERT-based model with Recurrent Neural Network (RNN)-based models and showed that the BERT-based model outperforms the others. Furthermore, some studies have considered the model's bias and explainability. Vaidya et al. (2020) improved accuracy and reduced unintended bias with multi-task learning that predicts the toxicity of a text together with target group labels as additional information. Mathew et al. (2020) used the dataset's rationales as additional information for finetuning BERT to address bias and explainability. To improve performance on these two considerations, we propose a more effective finetuning approach based on BERT and the rationales by adopting the pre-training framework.

Pre-finetuning on an intermediate task

Recently, finetuning a pre-trained model on a downstream task has become the norm (Howard and Ruder, 2018; Radford et al., 2018). However, when the finetuning dataset is small relative to the model's size, there is no guarantee that the finetuned model will be sufficiently well-adjusted to the target downstream task (Phang et al., 2018). Pre-finetuning is a technique that trains the model on an intermediate task before the target task (Aghajanyan et al., 2021). This can help the model learn data patterns or reduce tuning time, so that it converges quickly and fits the target task better. According to Pruksachatkun et al. (2020) and Aghajanyan et al. (2021), the more closely the intermediate task is related to the target task, the greater the benefit of pre-finetuning, and inference tasks involving a reasoning process yield remarkable improvements in target task performance. We adopt this method to train a pre-trained language model in two stages for hate speech detection.

As the intermediate task, we propose MRP, which guides the model to infer the human rationale of each token based on surrounding tokens.

Explainable NLP and rationale

Explaining the rationale behind an AI model's result is necessary for the model to be explainable to humans (Liu et al., 2018). Some Natural Language Processing (NLP) studies define a rationale as the snippets of an input text that support the model's prediction (Hancock et al., 2018; Lei et al., 2016). Lei et al. (2016) implemented a generator that produces the words considered rationales and used them as input to an encoder for sentiment classification. Bao et al. (2018) mapped human rationales onto model attention values to address the low-resource problem by learning a domain-invariant token representation. For hate speech detection, HateXplain employs human rationales as ground-truth attention so that the model concentrates on aggressive tokens. Unlike these approaches, we use masked human rationale label embeddings as input, where they serve as useful additional information for each token.

Masked label prediction

The UniMP model presented by Shi et al. (2020) addresses the graph node classification problem using graph transformer networks (Yun et al., 2019). It maximizes the propagation of the information required to reconstruct partially observable labels by using both feature information and label information as inputs. To prevent overfitting caused by excessive information, however, some of the label information is masked, and the masked labels are predicted. We apply a similar method to text data in an intermediate task with rationales. With the additional rationale information, the model better understands input sentences, and performance on the downstream task improves.

3 Method

Hate speech detection can be described as a text classification problem. Following the problem setting of HateXplain, we define it as a three-class classification over the categories ‘hate speech,’ ‘offensive,’ and ‘normal.’ We finetune a pre-trained BERT on hate speech detection; note that other Transformer encoder-based models could be used instead. Before finetuning the model on this task, we pre-finetune it on an intermediate task, for which we propose Masked Rationale Prediction (MRP). Our method is illustrated in Figure 2.

3.1 Masked rationale prediction

For MRP, we use the human rationales of hate provided by the HateXplain dataset. Annotators marked some words in a sentence as rationales for judging the sentence as abusive. A rationale label is a list containing, for each word of the corresponding sentence, 1 (rationale) or 0 (non-rationale). Sentences whose final class is ‘normal’ have no such labels. Because each sentence was annotated by two or three people, some pre-processing is required to obtain the final rationale labels for MRP. To merge the multiple rationale labels into one per sentence, we average the annotators' rationale values for each word; if the average is over 0.5, the word's final rationale is 1, and otherwise it is 0. The final rationale label is the list of these values. For ‘normal’ sentences, a list of zeros is used. Accordingly, the final rationale label contains as many 0s and 1s as there are words in the sentence. When a sentence is tokenized, its rationale label is likewise converted into token units.
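
The label pre-processing above can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' code. The function names are hypothetical. The word-to-token step assumes a `word_ids` list giving each token's source-word index (`None` for special tokens, similar to what fast Hugging Face tokenizers expose). It also assumes that every sub-word token simply inherits its word's label.

```python
from typing import List, Optional


def aggregate_rationales(annotator_rationales: List[List[int]], num_words: int) -> List[int]:
    """Merge per-annotator word-level rationales (1 = rationale, 0 = not) into one label per word.

    A word's final rationale is 1 if the annotators' mean is over 0.5, else 0.
    An empty list (e.g. a sentence whose final class is 'normal') yields all zeros.
    """
    if not annotator_rationales:
        return [0] * num_words
    k = len(annotator_rationales)
    return [1 if sum(r[i] for r in annotator_rationales) / k > 0.5 else 0
            for i in range(num_words)]


def expand_to_tokens(word_labels: List[int], word_ids: List[Optional[int]]) -> List[int]:
    """Map word-level labels to token level (hypothetical helper).

    word_ids[t] is the index of the word that token t came from, or None for
    special tokens such as [CLS] and [SEP], which get 0. ASSUMPTION: every
    sub-word token inherits the label of its word.
    """
    return [0 if w is None else word_labels[w] for w in word_ids]


# Three annotators, three words: the per-word means are 2/3, 2/3, and 1/3.
labels = aggregate_rationales([[1, 0, 1], [1, 1, 0], [0, 1, 0]], num_words=3)
print(labels)                                              # [1, 1, 0]
print(expand_to_tokens(labels, [None, 0, 0, 1, 2, None]))  # [0, 1, 1, 1, 0, 0]
```

The comparison is a strict inequality. If two annotators disagree, the mean is exactly 0.5, so the word is not marked as a rationale.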

MRP is based on token classification: it predicts the rationale label $R$ of each token in an input sentence $S$. In our MRP, the rationale labels are used as inputs along with the sentences. $S$ is embedded in the same way as in BERT. We denote the embedded $S$ as $X^{S}=\{x_{0}^{S},x_{1}^{S},\cdots,x_{n-1}^{S}\}\in\mathbb{R}^{n\times d}$, where $n$ is the sequence length and $d$ is the embedding size. To use $R$ as input, we pass it through an embedding layer to obtain $X^{R}=\{x_{0}^{R},x_{1}^{R},\cdots,x_{n-1}^{R}\}\in\mathbb{R}^{n\times d}$, as shown in Figure 2. The rationale embeddings reflect whether each token serves as a ground for the human judgment.
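
The embedding layer for $R$ can be pictured as a lookup table indexed by the 0/1 label. The NumPy sketch below assumes a two-row table filled with random values; in training, this table would be learned together with BERT. It uses toy sizes $n=6$ and $d=8$. `rationale_table` is a hypothetical name.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 6, 8                       # toy sequence length and embedding size (BERT-base uses d = 768)

# ASSUMPTION: one row per rationale value (row 0 = non-rationale, row 1 = rationale).
rationale_table = rng.normal(scale=0.02, size=(2, d))

R = np.array([0, 1, 1, 1, 0, 0])  # token-level rationale label R, including [CLS]/[SEP] slots
X_R = rationale_table[R]          # X^R: row t is the embedding of R[t]
print(X_R.shape)                  # (6, 8)
```

$X^{R}$ has the same shape as $X^{S}$. The two can therefore be summed element-wise, the same way BERT sums its token, position, and segment embeddings.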

MRP differs from BERT's Masked Language Modeling (MLM) in how masking is applied: we do not mask the tokens; we mask the rationales. To construct the partially masked rationale embeddings $\tilde{X}^{R}$, some rationales are randomly selected to be masked. Every rationale except the masked ones is transformed into its corresponding embedding vector. At each masked position, a zero vector replaces the embedding vector. For example, if we mask $x_{1}^{R}$ and $x_{3}^{R}$, the rationale embedding matrix becomes $\tilde{X}^{R}=\{\vec{0},\vec{0},x_{2}^{R},\vec{0},\cdots,x_{n-2}^{R},\vec{0}\}$. The first and last rationale embeddings, which correspond to the [CLS] and [SEP] tokens respectively, are also replaced with $\vec{0}$.
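
Here is a minimal NumPy sketch of the masking step. It relies on two assumptions that the text above does not fix. First, masked positions are drawn uniformly at random according to a hypothetical `mask_ratio`. Second, the [CLS]/[SEP] slots are zeroed but excluded from the index set $M$ used in the loss.

```python
import numpy as np


def mask_rationale_embeddings(X_R: np.ndarray, mask_ratio: float, rng: np.random.Generator):
    """Build the partially masked rationale embeddings X~^R (hypothetical helper).

    Positions 0 ([CLS]) and n-1 ([SEP]) are always zeroed. ASSUMPTION: a
    mask_ratio share of the inner positions is drawn uniformly at random and
    zeroed too; only those inner positions are returned as the index set M.
    """
    n = X_R.shape[0]
    inner = np.arange(1, n - 1)
    k = int(round(mask_ratio * len(inner)))
    masked_idx = np.sort(rng.choice(inner, size=k, replace=False))
    X_tilde = X_R.copy()
    X_tilde[[0, n - 1]] = 0.0    # [CLS] / [SEP] rationale slots -> zero vectors
    X_tilde[masked_idx] = 0.0    # masked rationales -> zero vectors
    return X_tilde, masked_idx


rng = np.random.default_rng(0)
X_R = np.ones((6, 4))            # stand-in rationale embeddings
X_tilde, M = mask_rationale_embeddings(X_R, mask_ratio=0.5, rng=rng)
print(M)                         # two of the inner indices 1..4
```

Setting `mask_ratio=1.0` hides every inner rationale. This corresponds to the 100% setting that the Figure 4 caption identifies with BERT-RP.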

The MRP model predicts the rationale by taking the sum of the embedded tokens $X^{S}$ and the partially masked rationales ${\tilde{X}}^{R}$ as input. We then get:

$$
\begin{aligned}
H_{MRP}^{(0)} &= X^{S}+\tilde{X}^{R},\\
H_{MRP}^{(l+1)} &= \mathrm{Transformer}\big(H_{MRP}^{(l)}\big),\\
\hat{X}^{R} &= \mathrm{MLP}\big(H_{MRP}^{(L)}\big).
\end{aligned}
$$

The $l$-th hidden state passes through a Transformer layer to produce the $(l+1)$-th hidden state. The last hidden state $H_{MRP}^{(L)}$ is then mapped to the predicted rationales by a Multi-Layer Perceptron (MLP). In other words, the model is guided to predict the masked rationales by referring to the token representations combined with their observed (unmasked) rationales.
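
To make the three equations concrete, here is a NumPy forward pass with toy sizes. It is a sketch with stated simplifications, not BERT. Each layer is a single-head, post-LayerNorm encoder block with a ReLU feed-forward network, and all parameters are random. The MLP head is assumed to have two layers with a tanh activation. All function names are hypothetical.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def layer_norm(x: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    return (x - x.mean(-1, keepdims=True)) / np.sqrt(x.var(-1, keepdims=True) + eps)


def transformer_layer(H: np.ndarray, p: dict) -> np.ndarray:
    """Toy post-LN encoder layer: single-head self-attention + ReLU feed-forward.
    (BERT uses multi-head attention, GELU, and learned LayerNorm gains; omitted here.)"""
    d = H.shape[-1]
    A = softmax((H @ p["Wq"]) @ (H @ p["Wk"]).T / np.sqrt(d))
    H = layer_norm(H + A @ (H @ p["Wv"]) @ p["Wo"])
    return layer_norm(H + np.maximum(0.0, H @ p["W1"]) @ p["W2"])


def mrp_forward(X_S, X_R_tilde, layers, head):
    H = X_S + X_R_tilde                      # H^(0) = X^S + X~^R
    for p in layers:                         # H^(l+1) = Transformer(H^(l))
        H = transformer_layer(H, p)
    W_a, W_b = head                          # ASSUMPTION: two-layer tanh MLP head
    return softmax(np.tanh(H @ W_a) @ W_b)   # X^R-hat: per-token P(non-rationale), P(rationale)


def init_layer(rng, d, d_ff):
    shapes = {"Wq": (d, d), "Wk": (d, d), "Wv": (d, d), "Wo": (d, d),
              "W1": (d, d_ff), "W2": (d_ff, d)}
    return {k: rng.normal(scale=0.02, size=s) for k, s in shapes.items()}


rng = np.random.default_rng(0)
n, d = 6, 16
layers = [init_layer(rng, d, 4 * d) for _ in range(2)]   # L = 2 toy layers
head = (rng.normal(scale=0.02, size=(d, d)), rng.normal(scale=0.02, size=(d, 2)))
probs = mrp_forward(rng.normal(size=(n, d)), np.zeros((n, d)), layers, head)
print(probs.shape)                                       # (6, 2)
```

For MRP, only the per-token output shape matters. Each row of `probs` is the model's belief about whether the corresponding token is a rationale.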

The loss $\mathcal{L}_{M R P}$ is calculated with only the predictions of the masked rationales. Therefore, our objective function is:

$$
\begin{aligned}
&\arg\max_{\theta}\ \log p_{\theta}\big(\hat{X}^{R}\mid X^{S},\tilde{X}^{R}\big),\\
&\log p_{\theta}\big(\hat{X}^{R}\mid X^{S},\tilde{X}^{R}\big)=\sum_{m\in M}\log p_{\theta}\big(x_{m}^{R}\mid X^{S},\tilde{X}^{R}\big),
\end{aligned}
$$

where $M$ is the set of indices of the masked rationales.
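
The objective can be sketched as a masked negative log-likelihood, in which gold labels at positions outside $M$ never enter the sum. This NumPy sketch assumes that the per-token probabilities come from a head like the one in the equations above. The clipping constant is an arbitrary safeguard against `log(0)`, and `mrp_loss` is a hypothetical name.

```python
import numpy as np


def mrp_loss(probs: np.ndarray, R: np.ndarray, masked_idx: np.ndarray) -> float:
    """Negative MRP objective: -sum over m in M of log p(x_m^R | X^S, X~^R).

    probs:      (n, 2) predicted distribution over {0: non-rationale, 1: rationale}
    R:          (n,) gold token-level rationale labels
    masked_idx: indices in M; all other positions are ignored
    """
    p_gold = probs[masked_idx, R[masked_idx]]
    return float(-np.log(np.clip(p_gold, 1e-12, 1.0)).sum())


probs = np.array([[0.9, 0.1], [0.2, 0.8], [0.5, 0.5], [0.7, 0.3]])
R = np.array([0, 1, 1, 0])
print(mrp_loss(probs, R, np.array([1, 2])))   # -(log 0.8 + log 0.5) ≈ 0.916
```

The sketch sums over $M$ to mirror the objective. Many training loops average over $|M|$ instead, which only rescales the gradient.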

3.2 Hate speech detection

Hate speech detection is implemented as three-class text classification. The model predicts which category $Y$ the input sentence belongs to among ‘hate speech’, ‘offensive’, and ‘normal’. A head that outputs the predicted class $\hat{Y}$ is placed on top of BERT. Before training, all model parameters except the head are initialized with the parameters updated on the intermediate task MRP. Because the heads of the two stages have different forms, the head parameters are randomly initialized. Consequently, the rationale labels play no functional role in the finetuning stage on hate speech detection. The rationale embeddings are treated as $[0]_{n\times d}$, so the input is $H_{HSD}^{(0)}=X^{S}$.

In this stage, the model does not refer to the rationale labels. The parameters trained during MRP serve as a basis for reasoning about hatefulness in context. Because the task is a multi-class classification problem, the loss $\mathcal{L}_{HSD}$ is computed with a cross-entropy function, and the objective is:

$$
\arg\max_{\theta}\ \log p_{\theta}\big(\hat{Y}\mid X^{S}\big).
$$

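
The hand-off between the two stages and the stage-two loss can be sketched as follows. The sketch assumes a hypothetical naming scheme in which task-head parameters start with `head.`. The class-index order is also an assumption. In this stage the rationale embeddings are all zero, so the encoder input reduces to $X^{S}$.

```python
import numpy as np


def init_hsd_from_mrp(mrp_params: dict, d: int, num_classes: int, rng: np.random.Generator) -> dict:
    """Reuse every MRP parameter except the MRP head; add a fresh classification head.

    ASSUMPTION: parameter names starting with "head." belong to the task head.
    """
    params = {k: v.copy() for k, v in mrp_params.items() if not k.startswith("head.")}
    params["head.W"] = rng.normal(scale=0.02, size=(d, num_classes))   # random init
    params["head.b"] = np.zeros(num_classes)
    return params


def hsd_cross_entropy(logits: np.ndarray, y: np.ndarray) -> float:
    """Mean cross-entropy over a batch; logits (B, 3), y (B,) integer class ids
    (hypothetical order: 0 = hate speech, 1 = offensive, 2 = normal)."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(y)), y].mean())


mrp = {"encoder.W": np.ones((4, 4)), "head.W1": np.ones((4, 4)), "head.W2": np.ones((4, 2))}
hsd = init_hsd_from_mrp(mrp, d=4, num_classes=3, rng=np.random.default_rng(0))
print(sorted(hsd))                                            # ['encoder.W', 'head.W', 'head.b']
print(hsd_cross_entropy(np.zeros((2, 3)), np.array([0, 2])))  # log(3) ≈ 1.0986
```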

Table 1: Results for the performance-based and bias-based metrics. Bold scores are the best for each metric, and underlined scores are the second best; the same convention applies to Table 2.

| Model | Rationale | Pre-fin. | Acc. | Macro F1 | AUROC | GMB-Sub. | GMB-BPSN | GMB-BNSP |
|---|---|---|---|---|---|---|---|---|
| BERT | | | 69.0 | 67.4 | 84.3 | 76.2 | 70.9 | 75.7 |
| BERT-HateXplain | √ | | 69.8 | 68.7 | 85.1 | 80.7 | 74.5 | 76.3 |
| BERT-MLM | | √ | 70.0 | 67.5 | 85.4 | 79.0 | 67.7 | 80.9 |
| BERT-RP | √ | √ | 70.7 | 69.3 | 85.3 | 81.4 | 74.6 | 84.8 |
| BERT-MRP | √ | √ | 70.4 | 69.9 | 86.2 | 81.5 | 74.8 | 85.4 |

Table 2: Results for the explainability-based metrics. For Sufficiency (a Faithfulness metric), lower is better; for all other metrics, higher is better.

| Model | Rationale | Pre-fin. | Plausibility: IOU F1 ↑ | Plausibility: Token F1 ↑ | Plausibility: AUPRC ↑ | Faithfulness: Comprehensiveness ↑ | Faithfulness: Sufficiency ↓ |
|---|---|---|---|---|---|---|---|
| BERT [Att] | | | 13.0 | 49.7 | 77.8 | 44.7 | 5.7 |
| BERT [LIME] | | | 11.8 | 46.8 | 74.7 | 43.6 | 0.8 |
| BERT-HateXplain [Att] | √ | | 12.0 | 41.1 | 62.6 | 42.4 | 16.0 |
| BERT-HateXplain [LIME] | √ | | 11.2 | 45.2 | 72.2 | 50.0 | 0.4 |
| BERT-MLM [Att] | | √ | 13.5 | 43.5 | 60.8 | 40.1 | 11.9 |
| BERT-MLM [LIME] | | √ | 11.3 | 47.2 | 76.5 | 43.4 | -5.5 |
| BERT-RP [Att] | √ | √ | 13.8 | 50.3 | 73.8 | 45.4 | 7.2 |
| BERT-RP [LIME] | √ | √ | 11.4 | 49.3 | 77.7 | 48.6 | -2.6 |
| BERT-MRP [Att] | √ | √ | 14.1 | 50.4 | 74.5 | 47.9 | 6.7 |
| BERT-MRP [LIME] | √ | √ | 12.9 | 50.1 | 79.2 | 48.3 | -1.2 |

4 Experiments

4.1 Dataset

We use the HateXplain dataset for both the intermediate and the target task. It contains 20,148 items collected from Twitter and Gab. Each item consists of one English sentence with its own ID and annotations of its category label, target groups, and rationales, provided by two or three annotators. Based on the IDs, the dataset is split 8:1:1 into training, validation, and test sets. Because we follow the fixed split provided with the dataset, no model can access any test data during the training phases of any stage.

4.2 Metrics

We evaluate the models with the metrics of HateXplain, which fall into three types: performance-based, bias-based, and explainability-based. The performance-based metrics measure how well the model distinguishes among the three classes (i.e., hate speech, offensive, and normal); accuracy, macro F1 score, and AUROC score are used.

The bias-based metrics evaluate how biased the model is toward specific expressions or profanities that are easily assumed to be hateful. HateXplain follows the AUC-based metrics developed by Borkan et al. (2019). For these metrics, the model classifies the data as ‘toxic’ (hate speech or offensive) or ‘non-toxic’ (normal). To evaluate the model's predictions, the data are separated into four subsets: $D_{g}^{+}$, $D_{g}^{-}$, $D^{+}$, and $D^{-}$. The target group labels are used to divide the data into subgroups. Notations with $g$ denote the data of a specific subgroup $g$, and notations without $g$ denote the remaining data. $+$ and $-$ indicate toxic and non-toxic data, respectively. Based on these subsets, three AUC metrics are calculated.

Subgroup AUC evaluates how biased the model is with respect to the context of each target group: $AUC(D_{g}^{-}+D_{g}^{+})$. The higher the score, the less biased the model's predictions are for that social group.

BPSN (Background Positive, Subgroup Negative) AUC measures the model's false-positive rates regarding the target groups: $AUC(D^{+}+D_{g}^{-})$. The higher the score, the less likely the model is to confuse non-toxic sentences targeting the specific subgroup with toxic sentences targeting the other groups.

BNSP (Background Negative, Subgroup Positive) AUC measures the model's false-negative rates regarding the target groups: $AUC(D^{-}+D_{g}^{+})$. The higher the score, the less likely the model is to confuse toxic sentences targeting the specific subgroup with non-toxic sentences targeting the other groups.
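
The three subsets map naturally onto boolean masks. The sketch below computes all three AUCs for a single subgroup $g$. It implements AUC directly as the Mann-Whitney probability so the example needs nothing beyond NumPy; in practice a library routine such as scikit-learn's `roc_auc_score` would normally be used. The function names are hypothetical. Each subset must contain both toxic and non-toxic items for its AUC to be defined.

```python
import numpy as np


def auc(scores: np.ndarray, labels: np.ndarray) -> float:
    """ROC AUC as the Mann-Whitney probability P(score_pos > score_neg); ties count 1/2."""
    pos, neg = scores[labels], scores[~labels]
    diff = pos[:, None] - neg[None, :]
    return float(((diff > 0) + 0.5 * (diff == 0)).mean())


def bias_aucs(scores, toxic, in_group) -> dict:
    """scores: predicted toxicity; toxic: gold 'toxic' flag; in_group: item targets subgroup g."""
    scores = np.asarray(scores, dtype=float)
    toxic = np.asarray(toxic, dtype=bool)
    in_group = np.asarray(in_group, dtype=bool)
    subsets = {
        "subgroup": in_group,                                 # D_g^- + D_g^+
        "bpsn": (toxic & ~in_group) | (~toxic & in_group),    # D^+ + D_g^-
        "bnsp": (~toxic & ~in_group) | (toxic & in_group),    # D^- + D_g^+
    }
    return {name: auc(scores[m], toxic[m]) for name, m in subsets.items()}


# Items 0-1 target subgroup g; items 2-3 target other groups.
print(bias_aucs(scores=[0.9, 0.6, 0.5, 0.1], toxic=[1, 0, 1, 0], in_group=[1, 1, 0, 0]))
# {'subgroup': 1.0, 'bpsn': 0.0, 'bnsp': 1.0}
```

In the toy data, the subgroup's non-toxic item (0.6) is scored above a toxic background item (0.5). BPSN therefore drops to 0.0, which is exactly the false-positive pattern this metric is meant to expose.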

Following the HateXplain benchmark, we calculate the GMB (Generalized Mean of Bias) of each of the three metrics as the final score. This combines the ten per-subgroup scores of each metric into one overall measure. The formula is $M_{p}(m_{s})=\big(\frac{1}{N}\sum_{s=1}^{N}m_{s}^{p}\big)^{\frac{1}{p}}$, where $M_{p}$ is the $p$-th power-mean function, $m_{s}$ is the bias metric $m$ calculated for a specific subgroup $s$, and $N$ is the number of subgroups, which is 10 in this paper.
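
The power mean can be checked numerically. The text above does not state $p$. This sketch assumes $p=-5$, the value used by Borkan et al. (2019), which makes the aggregate lean toward the worst-scoring subgroup. `generalized_mean` is a hypothetical name.

```python
import numpy as np


def generalized_mean(per_group_scores, p: float = -5.0) -> float:
    """M_p(m_s) = ((1/N) * sum_s m_s**p) ** (1/p) over the N per-subgroup scores.

    ASSUMPTION: p = -5 by default; negative p pulls the result toward the
    weakest subgroup, while p = 1 gives the arithmetic mean.
    """
    m = np.asarray(per_group_scores, dtype=float)
    return float(np.mean(m ** p) ** (1.0 / p))


print(generalized_mean([1.0, 0.5], p=1.0))   # 0.75 (arithmetic mean)
print(generalized_mean([1.0, 0.5]))          # ≈ 0.571, closer to the weaker subgroup
```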

The explainability-based metrics evaluate how explainable the model is. HateXplain follows ERASER (DeYoung et al., 2019), a benchmark for evaluating the explainability of NLP models based on rationales. The metrics are divided into Plausibility and Faithfulness. Plausibility refers to how well the model's rationale matches the human rationale, and it is measured under both discrete and soft selection. For discrete selection, we convert token scores to binary values using a threshold (here 0.5), and we then measure the IOU F1 score and the Token F1 score. For soft selection, we compute AUPRC by sweeping a threshold over the token scores.
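
Discrete-selection plausibility can be sketched as follows. This is a simplified sketch. `iou` returns only the overlap between one predicted rationale and one human rationale. ERASER's IOU F1 goes further: it counts a prediction as a match when that overlap exceeds a threshold (0.5) and then computes F1 over the matches. AUPRC is omitted. All function names are hypothetical.

```python
import numpy as np


def binarize(token_scores, threshold: float = 0.5) -> np.ndarray:
    """Discrete selection: a token is predicted as rationale if its score is over the threshold."""
    return np.asarray(token_scores, dtype=float) > threshold


def token_f1(pred, gold) -> float:
    """Token-level F1 between predicted and human rationale masks."""
    pred, gold = np.asarray(pred, dtype=bool), np.asarray(gold, dtype=bool)
    tp = int(np.sum(pred & gold))
    if tp == 0:
        return 0.0
    precision, recall = tp / pred.sum(), tp / gold.sum()
    return float(2 * precision * recall / (precision + recall))


def iou(pred, gold) -> float:
    """Intersection-over-union of the predicted and human token sets."""
    pred, gold = np.asarray(pred, dtype=bool), np.asarray(gold, dtype=bool)
    union = int(np.sum(pred | gold))
    return float(np.sum(pred & gold) / union) if union else 0.0


pred = binarize([0.9, 0.6, 0.4, 0.1])          # -> [True, True, False, False]
gold = [1, 0, 1, 0]
print(token_f1(pred, gold), iou(pred, gold))   # 0.5 and 1/3
```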

Faithfulness evaluates the influence of the model's rationale on its prediction and consists of Comprehensiveness and Sufficiency. Comprehensiveness assumes that the model's prediction becomes less confident when the rationales are removed. It is calculated as $m(x_{i})_{j}-m(x_{i}\backslash r_{i})_{j}$. Here $m(x_{i})_{j}$ is the probability that model $m$ assigns to class $j$ for input sentence $x_{i}$, and $x_{i}\backslash r_{i}$ is the sentence obtained by removing the predicted rationale tokens $r_{i}$ from $x_{i}$. The higher the score, the more influential the model's rationales are in its prediction. Sufficiency captures the extent to which the extracted rationales alone suffice for the model to make a prediction: $m(x_{i})_{j}-m(r_{i})_{j}$. A low score on this metric means that the rationales are adequate for the prediction.
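
Both faithfulness scores only require re-running the model on edited inputs. To keep the arithmetic easy to follow, the sketch below uses a hypothetical bag-of-words `toy_model` rather than BERT. `<slur>` is a placeholder token.

```python
import math
from typing import Callable, List, Sequence

Model = Callable[[List[str]], List[float]]   # tokens -> class probabilities


def comprehensiveness(model: Model, tokens: List[str], rationale: Sequence[int], j: int) -> float:
    """m(x_i)_j - m(x_i without r_i)_j: confidence lost when the rationale tokens are removed."""
    rest = [t for t, r in zip(tokens, rationale) if not r]
    return model(tokens)[j] - model(rest)[j]


def sufficiency(model: Model, tokens: List[str], rationale: Sequence[int], j: int) -> float:
    """m(x_i)_j - m(r_i)_j: confidence lost when only the rationale tokens are kept."""
    only = [t for t, r in zip(tokens, rationale) if r]
    return model(tokens)[j] - model(only)[j]


def toy_model(tokens: List[str]) -> List[float]:
    """HYPOTHETICAL bag-of-words classifier: P(toxic) = sigmoid(sum of token weights)."""
    weights = {"<slur>": 3.0}
    p_toxic = 1.0 / (1.0 + math.exp(-sum(weights.get(t, 0.0) for t in tokens)))
    return [1.0 - p_toxic, p_toxic]


tokens, rationale = ["they", "are", "<slur>"], [0, 0, 1]
print(comprehensiveness(toy_model, tokens, rationale, j=1))   # ≈ 0.453 (sigmoid(3) - 0.5)
print(sufficiency(toy_model, tokens, rationale, j=1))         # 0.0
```

Removing the single rationale token drops $P(\text{toxic})$ from about 0.95 to 0.5, giving high comprehensiveness. Keeping only that token preserves the prediction exactly, giving a sufficiency of 0.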

In addition, following the HateXplain benchmark, these scores are calculated either from the attention scores of the last layer or with the LIME method (Ribeiro et al., 2016). These are marked as [Att] and [LIME], respectively, in Table 2. DeYoung et al. (2019) and Mathew et al. (2020) give more detailed explanations.

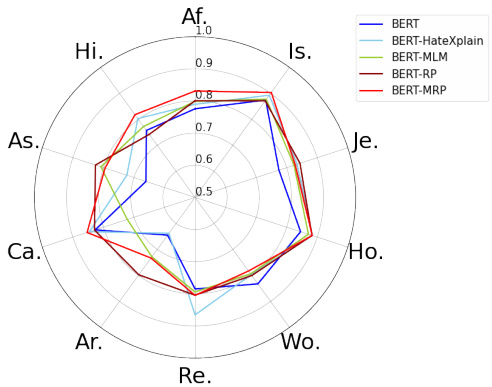

Figure 3: Subgroup AUC scores (one of the bias-based metrics) for each of the ten target groups. Clockwise, the target group labels are ‘African’, ‘Islam’, ‘Jewish’, ‘Homosexual’, ‘Women’, ‘Refugee’, ‘Arab’, ‘Caucasian’, ‘Asian’, and ‘Hispanic’. The BPSN and BNSP scores are given in the Appendix.

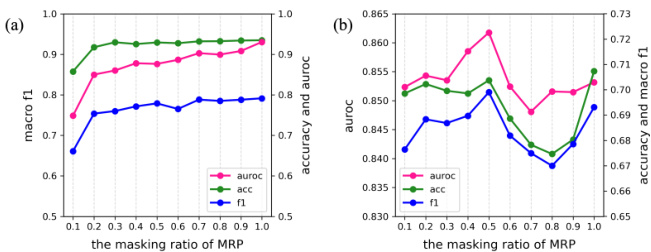

Figure 4: Test classification scores of the proposed models for different masking ratios in MRP. (a) shows token classification after training on MRP in the first stage, and (b) shows hate speech detection in the final stage. Masking the rationales of 100% of tokens is equivalent to BERT-RP.

4.3 Models and Experimental settings

The models evaluated in Table 1 and Table 2 are as follows. All models use a BERT-base-uncased model as the pre-trained model and are finetuned on hate speech detection. BERT in the tables is simply finetuned on hate speech detection with a fully-connected layer as the head for the three-class classification described