ASR in German: A Detailed Error Analysis

Abstract— The number of freely available systems for automatic speech recognition (ASR) based on neural networks is growing steadily, and their predictions are becoming increasingly reliable. However, trained models are typically evaluated exclusively with statistical metrics such as word error rate (WER) or character error rate (CER). These metrics provide no insight into the nature or impact of the errors produced when transcribing speech input. This work presents a selection of ASR model architectures pretrained on German and evaluates them on a benchmark of diverse test datasets. It identifies prediction errors shared across architectures and classifies them into categories. It then traces the sources of the errors in each category back to the training data and to other sources. Finally, it discusses solutions for creating qualitatively better training datasets and more robust ASR systems.

Keywords—Automatic Speech Recognition, German

I. INTRODUCTION

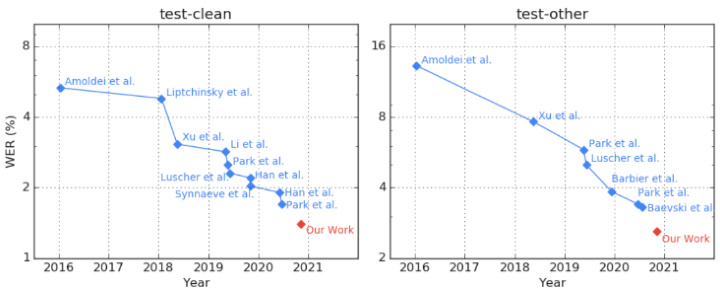

“Benchmarks that are nearing or have reached saturation are problematic, since either they cannot be used for measuring and steering progress any longer, or – perhaps even more problematic – they see continued use but become misleading measures: actual progress of model capabilities is not properly reflected, statistical significance of differences in model performance is more difficult to achieve, and remaining progress becomes increasingly driven by over-optimization for specific benchmark characteristics that are not generalizable to other data distributions” [1]. Hence, novel benchmarks need to be created to complement or replace older benchmarks (ibid.). This description also applies to the English automatic speech recognition (ASR) benchmark LibriSpeech, as seen in Figure 1. Other widely used benchmarks include Switchboard, TIMIT and WSJ. However, even on those, some systems already achieve low single-digit word error rates (WER), and small improvements are hard to interpret [2]. In the case of Switchboard, a considerable portion of the remaining errors involves filler words, hesitations and non-verbal backchannel cues (ibid.).

This paper investigates whether benchmarks for German ASR exhibit similar saturation. Far less data is available for German than for English, and correspondingly fewer benchmark results have been published. The investigation includes a detailed error analysis and traces errors back to their sources. The goal is to determine which specific improvements in training data quality are necessary to increase model performance, and how evaluations can represent model performance more realistically.

The rest of the paper is structured as follows. Related work is presented in Section II. Section III gives an overview of the ASR models considered and explains which ones were chosen for further analysis. Section IV describes the datasets used for the analysis. Section V reports results in terms of WER, and Section VI presents the detailed error analysis. Section VII briefly discusses possible resolutions for the different kinds of errors. Section VIII presents the conclusion and an outlook.

Figure 1: LibriSpeech benchmark reaching saturation [3]

II. RELATED WORK

Previous work analyzed the robustness of English ASR models by evaluating them on a diverse set of test data [4], [5] (see TABLE I). Likhomanenko et al. studied models trained on a specific dataset. They found a large discrepancy between the WER such a model achieves on the test split of its own training dataset and the WER it achieves on other datasets. In other words, ASR models often do not generalize well. The authors therefore recommend that future English models always be evaluated on a diverse set of datasets comprising at least Switchboard, Common Voice and TED-LIUM v3. To reach that conclusion, they evaluated a single ASR model on five different public datasets.

TABLE I. BENCHMARKS USED FOR ASR EVALUATIONS

| Source | Datasets for evaluation |
|---|---|
| [4] EN | WSJ, LibriSpeech, TED-LIUM, Switchboard, MCV |
| [5] EN | ST, LibriSpeech, TED-LIUM, TIMIT, Voxforge, RT, AMI, MCV |
| [5] DE | Voxforge, MCV, Tuda, SC10, Verbmobil II, Hempel |
| ours DE | Voxforge, MCV, Tuda, VoxPopuli, MLS, M-AILabs, SWC, HUI, German TED Talks, ALC, BAS SI100, Thorsten, Bundestag |

Ulasik et al. collected a similar benchmark corpus of publicly available English and German data, called CEASR. They used it to evaluate four commercial and three open-source ASR systems. They found that, on some datasets, the commercial systems outperform the open-source systems by a large margin. They also found large performance differences for individual systems across datasets. However, the open-source systems they used seem somewhat outdated even for the year of publication [6], and they were evaluated on English only. For German, only anonymous commercial systems were evaluated. The best-performing of these achieves 11.8% WER on Mozilla Common Voice (MCV). This is worse than Scribosermo, an open-source model based on Quartznet that achieves 7.7% WER on MCV [7]. On Tuda (see Section IV), Scribosermo reports 11.7% WER, whereas Ulasik et al. report 13.05% for the best commercial system. In contrast, our work evaluates six state-of-the-art (SOTA) open-source models for German on thirteen datasets.

Aksënova et al. analyzed the requirements for a new ASR benchmark that addresses the shortcomings of existing ones [2]. They state that a next-generation benchmark should cover the following application areas: dictation, voice search and control, audiobooks, voicemail, conversations and meetings, as well as podcasts, movies and TV shows. Furthermore, it should cover technical challenges such as varying speaking rates, acoustic environments, sampling frequencies and terminology (ibid.). They identified coherent transcription conventions as a key element for building high-quality datasets, along with detecting and correcting errors in existing corpora. This paper applies these approaches to German.

Keung et al. [8] show that modern ASR architectures may even start emitting repetitive, nonsensical transcriptions when faced with audio from a domain that was not covered at training time. We found similar issues in our analysis.

III. ASR MODELS

The literature presents a large variety of ASR models [9]. Following the classification in [9], our selection considers speaker-independent models with a very large vocabulary for continuous as well as spontaneous speech. We further require a publicly available implementation, provided either by the original authors of the model or by a third party. Finally, we only use models that are already pretrained on German. After initial tests, we excluded models that performed poorly without a language model. These include Mozilla DeepSpeech [10] and Scribosermo [7], both of which are available as part of the Coqui project.

The Kaldi project is one of the most popular open-source ASR projects, but it does not provide any publicly available pretrained models for German. The WERs reported for its German model on MCV, Tuda, SWC and Voxforge are worse than those of all models tested in this work except Quartznet. This holds even though Tuda and SWC were included in Kaldi's training data. SpeechBrain [11] can be seen as a potential successor of Kaldi, with a more modern architecture and a focus on neural models. On Hugging Face, it provides a CRDNN with CTC/Attention trained on German MCV 7.0, with a self-reported WER of 15.37% on MCV 7.0. CRDNN denotes an architecture that combines convolutional, recurrent and fully connected layers.

Most of the tested models are provided by Nvidia as part of the NeMo toolkit [12]. Quartznet and Citrinet share a very similar architecture. Quartznet targets small, lightweight models with fast inference [13]. Citrinet, in contrast, aims at scaling to higher accuracy while keeping inference moderately fast [14]. ContextNet was originally developed by Google [15] and was adopted by Nvidia for inclusion in the NeMo toolkit. Finally, we evaluated two Conformer models [16] trained by Nvidia: one uses CTC loss, and the other is a Conformer Transducer [17]. All Nvidia models are available online. We always use the “large” models when different versions are available. All Nvidia models except Quartznet are trained on the German parts of Mozilla Common Voice v7.0, Multilingual LibriSpeech and VoxPopuli. Quartznet was pretrained on 3,000 hours of English data and then finetuned on Mozilla Common Voice v6.0 (see TABLE II).

TABLE II. EVALUATED ASR MODELS AND THEIR TRAINING DATA

| Model | Training datasets |
|---|---|
| Citrinet | German MCV 7, MLS, VoxPopuli |
| ContextNet | German MCV 7, MLS, VoxPopuli |
| Conformer CTC | German MCV 7, MLS, VoxPopuli |
| Conformer Transducer | German MCV 7, MLS, VoxPopuli |
| Wav2Vec 2.0 | pretrained: MLS EN; finetuned: MCV 6 |
| Quartznet | pretrained: 3,000 h EN; finetuned: MCV 6 |

The last model evaluated in detail is Wav2Vec 2.0 [3] from Facebook AI Research (FAIR). Several pretrained versions are available on Hugging Face. We picked Wav2Vec2-Large-XLSR-53-German by Jonatas Grosman [18] because it had the lowest self-reported WER on Common Voice (12.06%). By comparison, 18.5% is reported for the original model provided by FAIR. The model was recently updated with the new MCV v8 data [19], but this update was not included in the evaluation.

All models were evaluated without a language model, so as not to introduce another source of error into the analysis. Note that two of the six evaluated models, ContextNet and Conformer Transducer, are autoregressive (based on RNN-T loss). All other models are based on CTC loss.
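Without a language model, the output of a CTC model is determined by the acoustic model alone. The simplest LM-free decoding strategy for CTC is greedy (best-path) decoding, sketched below under stated assumptions. The toy vocabulary, the blank index 0 and the function name are hypothetical, and the toolkits' actual decoders may differ in detail. RNN-T models such as ContextNet and Conformer Transducer use a different, autoregressive decoding procedure, which is not shown here.

```python
from itertools import groupby


def ctc_greedy_decode(frame_scores: list[list[float]], vocab: list[str], blank: int = 0) -> str:
    """Best-path CTC decoding without a language model.

    frame_scores: one score vector per audio frame (e.g. log-probabilities).
    vocab: output symbol for each index; index `blank` is the CTC blank (assumed 0).
    """
    # 1) pick the highest-scoring symbol in every frame
    best_path = [max(range(len(scores)), key=scores.__getitem__) for scores in frame_scores]
    # 2) merge consecutive repeats, 3) drop blanks
    collapsed = [idx for idx, _ in groupby(best_path)]
    return "".join(vocab[idx] for idx in collapsed if idx != blank)


# Toy example with hypothetical one-hot frame scores
vocab = ["_", "a", "b", " "]
path = [1, 1, 0, 1, 2, 2, 0, 0, 3, 2]
scores = [[1.0 if i == p else 0.0 for i in range(len(vocab))] for p in path]
print(ctc_greedy_decode(scores, vocab))  # prints: aab b
```

The blank between the two `a` frames is what allows a doubled letter to survive the merging of repeats.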

IV. DATASETS

The evaluation was intended to include as many diverse publicly available datasets as possible. These should contain spontaneous as well as continuous speech, shorter and longer sentences, and a diverse vocabulary. This diversity serves two purposes: finding systematic errors that the models have learned, and determining how well the selected models generalize to unseen data.

Mozilla Common Voice [20] is a crowdsourced multilingual speech corpus with regular releases and a steadily growing amount of data. Its 8.0 release from January 2022 contains 1,062 hours of German speech (965 h in v7.0). Tuda is a dataset for German ASR collected by TU Darmstadt in 2015 [21]. It contains 127 hours of training data from 147 speakers with very little noise. In contrast to these, some of the datasets collected from the Bavarian Archive for Speech Signals (BAS) were not intended for ASR but “were originally intended either for research into the (dialectal) variation of German or for studies in conversation analysis and related fields” [22].

Several datasets are based on the LibriVox project [23], in which volunteers read and record public domain books. Among them is the M-AILabs dataset [24], which contains 237 hours of German speech from 29 speakers. It also includes recordings of Angela Merkel, a speaker from a political context and thus quite different from the other speakers. We therefore excluded this part from the M-AILabs dataset and list it separately. Voxforge is another dataset derived from LibriVox [10]. It contains only 35 hours of speech from 180 speakers. MLS is the Multilingual LibriSpeech corpus [25] compiled by Facebook. It is also based on LibriVox and contains over 3,000 hours of German speech. Finally, the HUI speech corpus was originally designed for text-to-speech (TTS) systems [26]. With 326 hours of German speech recorded by 122 different LibriVox speakers, it can also be used for ASR. We expect the LibriVox-based datasets to overlap substantially, but we did not analyze the exact extent of this overlap.

Thorsten Voice [27] is similar to the HUI dataset. It was also created for training TTS systems and contains 23 hours of German speech. Unlike HUI, however, it was recorded by a single speaker. It contains mostly short sentences, primarily related to voice assistant prompts. All words and utterances are pronounced in an unambiguous and very clear manner.

Political speeches are another major source of German audio data. VoxPopuli is a multilingual dataset based on speeches held in the European Parliament [28]. It contains both unlabeled and labeled data, and the labeled part yields 268 hours of German speech suitable for training and evaluation. At Hof University, an ASR dataset based on speeches in the German Bundestag was prepared from 211 hours of committee sessions and 393 hours of plenary sessions. The test split of this dataset has already been released.

The Spoken Wikipedia Corpus (SWC) is a multilingual dataset comprising 285 hours of read articles from Wikipedia [29]. “Being recorded by volunteers reading complete articles, the data represents the way a user naturally speaks very well, arguably better than a controlled recording in a lab. The vocabulary is quite large due to the encyclopedic nature of the articles” [30].

TABLE III. DATASETS USED IN EVALUATION

| Dataset | Hours (total / test) | Speakers | Type | Duration min / avg / max (s) |
|---|---|---|---|---|
| MCV 7.0 | 965 / 26.8 | 15,620 | read | 1.3 / 6.1 / 11.2 |
| Tuda | 127 / 11.9 | 147 | read | 2.5 / 8.4 / 33.1 |
| SWC | 285 / 9.1 | 363 | read | 5.0 / 7.9 / 24.9 |
| M-AILabs | 237 / 10.8 | 29 | read | 0.4 / 7.2 / 24.2 |
| MLS | 3287 / 14.3 | 244 | read | 10 / 15.2 / 22 |
| VoxForge | 35 / 2.7 | 180 | read | 1.2 / 5.1 / 17.0 |
| HUI | 326 / 16.3 | 122 | read | 5.0 / 9.0 / 34.3 |
| Thorsten | 23 / 1.1 | 1 | read | 0.2 / 3.4 / 11.5 |
| VoxPopuli | 268 / 4.9 | 530 | spontaneous | 0.6 / 9.0 / 36.4 |
| Bundestag | 604 / 5.1 | n/a | spontaneous | 5.0 / 7.2 / 35.6 |
| Merkel | 1.0 | 1 | spontaneous | 0.7 / 6.9 / 17.1 |
| TED Talks | 16 / 1.6 | 71 | spontaneous | 0.2 / 5.1 / 118 |
| ALC | 95 / 2.6 | n/a | read | 2.0 / 12.5 / 62 |
| BAS SI100 | 31 / 1.8 | 101 | read | 2.1 / 12.7 / 54.1 |

Besides the political speeches, one of the few datasets with spontaneous speech is the TED Talks corpus [31]. It is similar to the English TED-LIUM corpus [32] and contains data from German, Swiss and Austrian speakers. Although it contains only 16 hours of speech, it is a valuable addition because of its spontaneous speaking style and its speakers from different German-speaking countries.

Additionally, we included a few corpora that were collected for linguistic research. The first is ALC, a corpus of language use under the influence of alcohol [33]. The second is BAS SI100, a corpus created by LMU Munich with 101 speakers (50 female, 50 male, 1 unknown). Each speaker read ~100 sentences from either the SZ subcorpus or the CeBit subcorpus [34]. The SZ subcorpus contains 544 sentences from newspaper articles (“Süddeutsche Zeitung”). The CeBit subcorpus contains 483 sentences from newspaper articles about CeBit 1995.

TABLE III summarizes the key metrics of the datasets. For M-AILabs, the number of speakers and the total hours refer to the whole dataset. The test hours and durations, however, refer to the test split without Merkel; the statistics of the Merkel subset are reported separately. We used the official test splits where available. For the other datasets, we created a random test split containing 10% of each dataset. Including further datasets proved unnecessarily tedious because transcriptions come in diverse formats and no tool was available to convert automatically between the different styles.
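The splitting procedure is not specified beyond the 10% ratio. A minimal reproducible version might look as follows, assuming an utterance-level split with a fixed seed; the function name and seed value are hypothetical. If speaker IDs are available, a speaker-disjoint split would be a stricter alternative, since an utterance-level split can place the same speaker in both parts.

```python
import random


def random_test_split(utterance_ids, test_fraction=0.1, seed=0):
    """Return (train_ids, test_ids) with `test_fraction` of the utterances held out.

    Utterance-level split: the same speaker may end up in both parts.
    """
    ids = sorted(utterance_ids)       # fixed order, so the seed alone determines the split
    random.Random(seed).shuffle(ids)  # local RNG, leaves the global random state untouched
    n_test = round(len(ids) * test_fraction)
    return ids[n_test:], ids[:n_test]


utterances = [f"utt{i:04d}" for i in range(1000)]
train, test = random_test_split(utterances)
print(len(train), len(test))  # prints: 900 100
```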

V. EVALUATION RESULTS

First, we conducted a conventional WER-based evaluation of all models on every dataset. This puts the models' accuracy into perspective and also collects prediction errors for further analysis.
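Corpus-level WER divides the total number of word substitutions, deletions and insertions by the number of reference words. The paper does not state whether its WERs are pooled over a dataset or averaged per utterance. The sketch below assumes pooling and simple whitespace tokenization of already normalized transcripts; the function names are illustrative.

```python
def edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Levenshtein distance between two token sequences (all edits cost 1)."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,             # deletion of r
                          curr[j - 1] + 1,         # insertion of h
                          prev[j - 1] + (r != h))  # substitution, or match at no cost
        prev = curr
    return prev[-1]


def corpus_wer(pairs: list[tuple[str, str]]) -> float:
    """Pooled WER over (reference, hypothesis) pairs."""
    errors = sum(edit_distance(ref.split(), hyp.split()) for ref, hyp in pairs)
    ref_words = sum(len(ref.split()) for ref, _ in pairs)
    return errors / ref_words


pairs = [("das ist ein test", "das ist test"), ("hallo welt", "hallo welt")]
print(corpus_wer(pairs))  # 1 deletion / 6 reference words
```

Pooling weights long utterances more heavily than per-utterance averaging does, which matters for datasets with very uneven utterance lengths such as TED Talks.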

TABLE IV. WORD ERROR RATES FOR ALL MODELS AND ALL DATASETS

| Dataset | Citrinet | Conformer CTC | Conformer Transducer | ContextNet | Wav2Vec 2.0 | Quartznet |
|---|---|---|---|---|---|---|
| MCV 7.0 | 8.78% | 8.00% | 6.28% | 7.33% | 10.97% | 13.90% |
| Bundestag | 13.25% | 13.65% | 11.16% | 14.44% | 21.78% | 28.61% |
| VoxPopuli | 10.35% | 10.82% | 8.98% | 10.13% | 21.96% | 28.34% |
| Merkel | 13.63% | 17.17% | 13.49% | 15.92% | 21.81% | 27.57% |
| MLS | 5.56% | 5.16% | 4.11% | 4.62% | 13.04% | 20.34% |
| M-AILabs | 5.52% | 5.56% | 4.28% | 4.32% | 9.94% | 18.47% |
| Voxforge | 4.15% | 3.95% | 3.36% | 4.16% | 5.64% | 7.58% |
| HUI | 2.31% | 2.45% | 1.89% | 2.02% | 8.52% | 14.66% |
| Thorsten | 6.74% | 8.49% | 6.20% | 9.21% | 7.57% | 5.95% |
| Tuda | 9.16% | 7.81% | 5.82% | 7.91% | 12.69% | 20.31% |
| SWC | 10.15% | 9.36% | 8.04% | 9.29% | 15.01% | 16.49% |
| German TED | 34.53% | 35.77% | 31.98% | 35.58% | 41.90% | 47.75% |
| ALC | 31.42% | 31.30% | 25.90% | 26.85% | 40.94% | 45.53% |
| BAS SI100 | 23.13% | 24.81% | 22.82% | 22.74% | 28.84% | 28.94% |
| Average | 12.76% | 13.16% | 11.02% | 12.47% | 18.62% | 23.17% |
| Median | 9.65% | 8.93% | 7.16% | 9.25% | 14.02% | 20.33% |

The numbers reported in TABLE IV show that Conformer Transducer outperforms all other models in terms of average and median WER. Quartznet scores worst on all but a single test set (Thorsten). Citrinet, Conformer CTC and ContextNet perform very similarly, with average WERs within 0.7 percentage points of each other. However, Conformer CTC has the best median of these three models, although its average is the worst. This means that its WERs vary more across datasets than those of the other two models, which we count as an indicator of lower robustness. Conversely, Quartznet and Citrinet have the smallest differences between average and median, which we count as an indicator of robustness. Wav2Vec 2.0 is the second-worst model and, similar to Quartznet, performs exceptionally poorly on German TED and ALC. We hypothesize that this stems from filler words like “äh” and “hm” appearing in their predicted transcripts, whereas all other models omit them. These fillers are also missing from all ground truth transcripts. For Wav2Vec 2.0, the WERs on the three political datasets are nearly identical. For the Conformer models and ContextNet, the VoxPopuli WERs are much better than those for Bundestag and Merkel. This is expected, since VoxPopuli is part of their training data. However, it is not clear why Bundestag yields lower WERs than Merkel for these models; one possible reason is that the Bundestag transcripts contain fewer errors. For the four NeMo models trained on German data, the HUI dataset yields the lowest WERs of all datasets. We attribute this to the alignment process used to create HUI, in which ASR was already applied and sentences that could not be aligned with ASR were discarded. On the one hand, the WERs for Merkel differ considerably from those for the rest of M-AILabs; on the other hand, they are similar to those for Bundestag. Both observations confirm our decision to treat Merkel separately.
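The robustness argument above compares the average and median WER of each model. The sketch below recomputes both statistics from the per-dataset values in TABLE IV for three of the models. A mean well above the median signals that a few hard datasets (German TED, ALC, BAS SI100) pull the average upward. The gap is a heuristic reading of the table, not a formal robustness measure, and the helper name is illustrative.

```python
from statistics import mean, median

# Per-dataset WERs in % from TABLE IV (order: MCV 7.0, Bundestag, VoxPopuli, Merkel,
# MLS, M-AILabs, Voxforge, HUI, Thorsten, Tuda, SWC, German TED, ALC, BAS SI100)
WER = {
    "Citrinet": [8.78, 13.25, 10.35, 13.63, 5.56, 5.52, 4.15, 2.31, 6.74, 9.16, 10.15, 34.53, 31.42, 23.13],
    "Conformer CTC": [8.00, 13.65, 10.82, 17.17, 5.16, 5.56, 3.95, 2.45, 8.49, 7.81, 9.36, 35.77, 31.30, 24.81],
    "Quartznet": [13.90, 28.61, 28.34, 27.57, 20.34, 18.47, 7.58, 14.66, 5.95, 20.31, 16.49, 47.75, 45.53, 28.94],
}


def mean_median_gap(values: list[float]) -> float:
    """Mean minus median; a large positive gap means a few datasets have much higher WER."""
    return mean(values) - median(values)


for model, values in WER.items():
    print(f"{model:14s} mean={mean(values):5.2f} median={median(values):5.2f} "
          f"gap={mean_median_gap(values):4.2f}")
```

With 14 datasets, the median is the average of the 7th and 8th smallest values, so it ignores the three high-WER datasets entirely. The mean does not, which is why the gap reflects sensitivity to those datasets.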

In general, the datasets with spontaneous speech show a higher WER than the ones with continuous speech, which is in line with findings for the English language [4].

TABLE V. PERFORMANCE COMPARISON OF ASR MODELS

| Model | Parameters | Disk size | Autoregressive | RTF | Runtime (rel. to Quartznet) |
|---|---|---|---|---|---|
| Citrinet | 142.0 M | 532 MB | no | 0.007 | 158% |
| Conformer CTC | 118.8 M | 452 MB | no | 0.006 | 124% |
| Conformer Transducer | 118.0 M | 446 MB | yes | 0.015 | 323% |
| ContextNet | 112.7 M | 476 MB | yes | 0.013 | 291% |
| Wav2Vec 2.0 | 317.0 M | 1204 MB | no | 0.021 | 464% |
| Quartznet | 18.9 M | 71 MB | no | 0.005 | 100% |

We evaluated the speed of the models in a batch operation on ~1 h of audio in 600 files, using an AMD Ryzen 3700X with 16 GB RAM and an Nvidia GTX 1080 GPU. All models perform reasonably well (see TABLE V). Quartznet is the fastest, with Conformer CTC and Citrinet close behind. ContextNet and Conformer Transducer take about three times as long as Quartznet due to their autoregressive nature. Wav2Vec 2.0 performs worst due to its large model size and needs 4.6 times as long as Quartznet.
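The real-time factor (RTF) is conventionally the processing time divided by the duration of the processed audio. An RTF of 0.005 on ~1 h of audio therefore corresponds to roughly 18 s of processing. A minimal sketch of how both columns of TABLE V could be derived follows. Here `transcribe` is a hypothetical stand-in for a model's batch-transcription call, not a specific toolkit API. Recomputing the relative runtimes from the rounded RTF values in the table gives slightly different percentages (e.g. 0.021 / 0.005 = 420% instead of 464%). The reported percentages were therefore presumably computed from unrounded timings.

```python
import time
from typing import Callable, Sequence


def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """RTF = processing time / audio duration; values below 1 are faster than real time."""
    return processing_seconds / audio_seconds


def measure_rtf(transcribe: Callable[[Sequence[str]], object],
                audio_files: Sequence[str], total_audio_seconds: float) -> float:
    """Time one batch call of a (hypothetical) `transcribe` function and return its RTF."""
    start = time.perf_counter()
    transcribe(audio_files)
    elapsed = time.perf_counter() - start
    return real_time_factor(elapsed, total_audio_seconds)


def relative_runtime(rtf_model: float, rtf_reference: float) -> float:
    """Runtime relative to a reference model; 1.0 means as fast as the reference."""
    return rtf_model / rtf_reference


print(real_time_factor(18.0, 3600.0))  # 18 s of processing for 1 h of audio
```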

VI. ERROR IDENTIFICATION

As shown in TABLE IV, WER can be used to determine how well models perform in general and relative to each other. However, a single metric is not sufficient to identify the cases in which ASR systems perform particularly poorly, or the reasons why. The following sections describe how we collected cross-model and model-exclusive prediction errors and how we classified them further. They also describe how the root causes of these errors can be traced back to the training and test data.

A. Method

For a deeper error analysis, we created subsets of the total error set of all models (all predictions with $\mathrm{WER}\neq0$, excluding pure spacing errors). On the one hand, we formed difference sets to identify model- and architecture-specific error sources. On the other hand, we formed the intersection of the incorrectly predicted transcripts of all models to identify model-independent error causes, which are the focus of this work. We expected consistent cross-model errors to be traceable to shared training data or to ambiguous and low-quality test data.
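The set construction described above can be sketched as follows. The sketch assumes that each model's transcripts are stored as a dictionary from utterance id to text. It also interprets "pure spacing errors" as predictions that match the reference once all whitespace is removed; this is our interpretation, not a definition from the paper. Function names and the toy data are hypothetical.

```python
def error_ids(references: dict[str, str], predictions: dict[str, str]) -> set[str]:
    """Utterance ids with WER != 0, ignoring pure spacing errors."""
    errors = set()
    for utt_id, ref in references.items():
        hyp = predictions[utt_id]
        if hyp.split() == ref.split():
            continue  # identical word sequences, i.e. WER == 0
        if "".join(hyp.split()) == "".join(ref.split()):
            continue  # only word boundaries differ, e.g. "vereins kasse" vs "vereinskasse"
        errors.add(utt_id)
    return errors


def cross_model_errors(error_sets: dict[str, set[str]]) -> set[str]:
    """Utterances mispredicted by every model (intersection)."""
    return set.intersection(*error_sets.values())


def model_exclusive_errors(error_sets: dict[str, set[str]], model: str) -> set[str]:
    """Utterances mispredicted only by `model` (difference set)."""
    others = set().union(*(s for name, s in error_sets.items() if name != model))
    return error_sets[model] - others


refs = {"u1": "an einem stand", "u2": "die vereinskasse", "u3": "hallo"}
preds = {
    "A": {"u1": "an einem strand", "u2": "die vereins kasse", "u3": "hallo"},
    "B": {"u1": "an einem strand", "u2": "die vereinskasse", "u3": "halo"},
}
error_sets = {model: error_ids(refs, p) for model, p in preds.items()}
print(cross_model_errors(error_sets))           # {'u1'}
print(model_exclusive_errors(error_sets, "B"))  # {'u3'}
```

Architecture-specific difference sets follow the same pattern, with the per-model sets first merged per architecture family.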

In addition, we accumulated the vocabulary of the models' training datasets and compared it to that of the test datasets. This comparison shows how well the models generalize to unseen words. It also shows how many words were regularly mispredicted although they were contained in the training datasets.
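A minimal sketch of the vocabulary comparison follows, assuming whitespace-tokenized, normalized transcripts. To stay short, it counts a known word as "mispredicted" when it does not occur anywhere in the hypothesis of the same utterance. This is a bag-of-words approximation rather than a word alignment, and the threshold `min_misses` and all names are hypothetical.

```python
from collections import Counter


def vocabulary(transcripts: list[str]) -> set[str]:
    return {word for text in transcripts for word in text.split()}


def oov_rate(train_vocab: set[str], test_transcripts: list[str]) -> float:
    """Share of test tokens that never occur in the training transcripts."""
    tokens = [word for text in test_transcripts for word in text.split()]
    return sum(word not in train_vocab for word in tokens) / len(tokens)


def missed_known_words(train_vocab: set[str], pairs: list[tuple[str, str]],
                       min_misses: int = 2) -> dict[str, int]:
    """In-vocabulary reference words absent from the hypothesis in >= min_misses utterances.

    Bag-of-words approximation: no alignment between reference and hypothesis is computed.
    """
    misses = Counter()
    for ref, hyp in pairs:
        hyp_words = set(hyp.split())
        for word in set(ref.split()):
            if word in train_vocab and word not in hyp_words:
                misses[word] += 1
    return {word: n for word, n in misses.items() if n >= min_misses}


train_vocab = vocabulary(["an einem stand werden waffeln verkauft"])
pairs = [("waffeln verkauft", "waffen verkauft"), ("frische waffeln", "frische waffen")]
print(oov_rate(train_vocab, [ref for ref, _ in pairs]))  # 1 of 4 test tokens is unseen
print(missed_known_words(train_vocab, pairs))            # {'waffeln': 2}
```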

B. Error Classification

From the intersection of the cross-model prediction errors described above, we extracted 2,000 samples and manually assigned them to error categories. This partitioning was intended to specify more precisely the impact and the cause of different groups of errors in incorrect transcript predictions. Every mispredicted transcript across all models could be assigned to at least one of the following categories.

1) Negligible Errors

This category includes alternative forms of otherwise correct transcript predictions. For example, most of the models were trained on non-normalized text containing common abbreviations such as “etc.” for “et cetera”. Against normalized ground truth transcripts, this increases the WER but does not represent an actual error. All models except Quartznet and Wav2Vec 2.0 were trained on data with non-normalized abbreviations, which appear in the MLS and VoxPopuli training datasets. This category also includes words that can be spelled correctly in multiple ways (including obsolete orthography).
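One way to keep such negligible errors out of the WER is to normalize reference and hypothesis identically before scoring. A minimal sketch follows, assuming a small hand-written abbreviation table and lowercase, punctuation-free scoring. The table entries other than “etc.” are hypothetical examples, and a real table would have to be curated per dataset.

```python
import re

# Hypothetical abbreviation table; a real one would need to be curated per dataset.
ABBREVIATIONS = {"etc.": "et cetera", "z.b.": "zum beispiel", "usw.": "und so weiter"}


def normalize(text: str) -> str:
    """Lowercase, expand known abbreviations, strip punctuation, collapse whitespace."""
    tokens = [ABBREVIATIONS.get(tok, tok) for tok in text.lower().split()]
    text = re.sub(r"[^\w\s]", "", " ".join(tokens))  # \w is Unicode-aware: ä, ö, ü, ß survive
    return " ".join(text.split())


reference = "Äpfel, Birnen et cetera"
prediction = "Äpfel Birnen etc."
print(normalize(reference) == normalize(prediction))  # True: no word error remains
```

Abbreviations are expanded before punctuation is stripped. Otherwise “z.B.” would collapse to “zb” and no longer match the table.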

2) Minor Errors (non-context-breaking)

Quartznet and Wav2Vec 2.0 in particular, which were trained on less German data than all other models, often produce transcript predictions with redundant or omitted single letters. They also predict hard (voiceless) instead of soft (voiced) consonants and vice versa (e.g., confusing d and t). None of these errors distorts the meaning. Humans quickly recognize them as spelling errors, and a language model can usually correct them. They therefore have little impact on the contextual interpretation of model outputs. However, if these minor errors are counted, they raise the character error rate only slightly but quickly drive the WER to unrealistically high values.
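A toy example makes the gap between the two metrics concrete. The sentence is invented for illustration. Two d/t confusions leave every word recognizable but turn two of six words into errors. The sketch counts spaces as characters for CER, which is one common convention among several.

```python
def levenshtein(a, b) -> int:
    """Edit distance between two sequences (works for strings and for word lists)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = curr
    return prev[-1]


def wer(ref: str, hyp: str) -> float:
    return levenshtein(ref.split(), hyp.split()) / len(ref.split())


def cer(ref: str, hyp: str) -> float:
    return levenshtein(ref, hyp) / len(ref)  # spaces count as characters here


ref = "der hund läuft durch den garten"
hyp = "der hunt läuft durch den garden"  # two d/t confusions, meaning intact
print(f"WER={wer(ref, hyp):.1%} CER={cer(ref, hyp):.1%}")  # WER=33.3% CER=6.5%
```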

3) Major Errors (context-breaking)

This category covers completely mistranscribed or omitted words. It also covers omitted or inserted letters that change the meaning of a transcript or drop necessary information and sentence components. These errors form the most critical category, and identifying their causes was a central goal. They are discussed further in the following section. A particularly humorous example is:

Ground truth: “an einem stand werden waffeln verkauft um die vereinskasse aufzubessern” (EN: waffles sold)

Prediction: “an einem strand werden waffen verkauft um die vereinskasse aufzubessern” (EN: weapons sold)

4) Names, Loan Words and Anglicisms

Names, loan words and anglicisms are a common source of errors in ASR systems, for three reasons. First, names can often be spelled in several ways, and only a small fraction of common names usually appears in training datasets. Second, foreign words are strongly domain dependent. Third, some anglicisms sound like German words and can therefore often only be interpreted correctly from context. Anglicisms are closely related to code-switching ASR.

Although errors in this subcategory often have similar effects to contextual understanding as major errors, they have been separated due to being genera