Multi-Modal Open-Domain Dialogue

多模态开放域对话

Abstract

摘要

Recent work in open-domain conversational agents has demonstrated that significant improvements in model engaging ness and humanness metrics can be achieved via massive scaling in both pre-training data and model size (Adiwardana et al., 2020; Roller et al., 2020). However, if we want to build agents with human-like abilities, we must expand beyond handling just text. A particularly important topic is the ability to see images and communicate about what is perceived. With the goal of engaging humans in multi-modal dialogue, we investigate combining components from state-of-the-art open-domain dialogue agents with those from state-of-the-art vision models. We study incorporating different image fusion schemes and domain-adaptive pre-training and fine-tuning strategies, and show that our best resulting model outperforms strong existing models in multi-modal dialogue while simultaneously performing as well as its predecessor (text-only) BlenderBot (Roller et al., 2020) in text-based conversation. We additionally investigate and incorpo- rate safety components in our final model, and show that such efforts do not diminish model performance with respect to engaging ness metrics.

开放域对话智能体的最新研究表明,通过大规模扩展预训练数据和模型规模,可以显著提升模型的互动性和拟人化指标 (Adiwardana et al., 2020; Roller et al., 2020) 。然而,若要构建具备类人能力的智能体,我们必须突破纯文本处理的局限。其中尤为关键的是视觉感知与图像交流能力。为实现多模态人机对话的目标,我们探索将顶尖开放域对话模型与前沿视觉模型相结合的技术路径。通过研究不同图像融合方案、领域自适应预训练及微调策略,我们证明最优模型不仅在多模态对话任务上超越现有强基线,同时在纯文本对话中保持与前代文本模型BlenderBot (Roller et al., 2020) 相当的性能。我们还在最终模型中集成安全组件,验证了这些设计不会损害模型在互动性指标上的表现。

1 Introduction

1 引言

An important goal of artificial intelligence is the construction of open-domain conversational agents that can engage humans in discourse. Indeed, the future of human interaction with AI is predicated on models that can exhibit a number of different conversational skills over the course of rich dialogue. Much recent work has explored building and training dialogue agents that can blend such skills throughout natural conversation, with the ultimate goal of providing an engaging and interesting experience for humans (Smith et al., 2020; Shuster et al., 2019b). Coupled with the advancement of large-scale model training schemes, such models are becoming increasingly engaging and humanlike when compared to humans (Zhang et al., 2020; Adiwardana et al., 2020; Roller et al., 2020).

人工智能的一个重要目标是构建能够与人类进行对话的开放域对话智能体。事实上,未来人类与AI的交互将基于能够在丰富对话过程中展现多种对话技能的模型。近期许多研究都致力于构建和训练能在自然对话中融合这些技能的对话智能体,其终极目标是为人类提供引人入胜的交互体验 (Smith et al., 2020; Shuster et al., 2019b)。随着大规模模型训练方案的进步,这类模型在与人类对比时正变得越来越吸引人且拟人化 (Zhang et al., 2020; Adiwardana et al., 2020; Roller et al., 2020)。

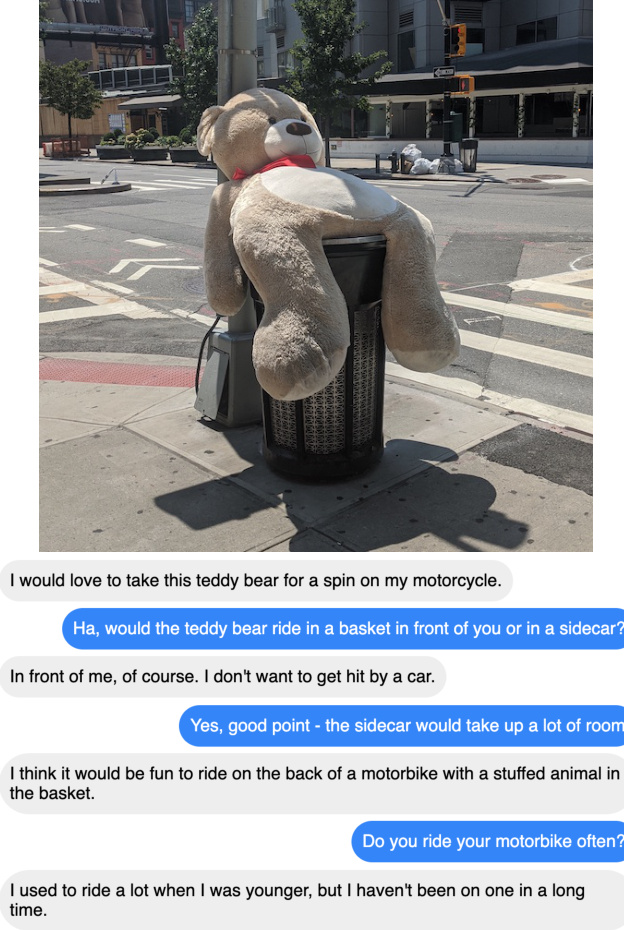

Figure 1: Paper author (right speaker) conversing with our MMB DegenPos model (left speaker). This example was cherry picked. We show more sample conversations in Section 6.2.

图 1: 论文作者(右)与我们的MMB DegenPos模型(左)对话示例。此案例经过精选,更多对话样本见第6.2节。

In order to better approach human-like ability, however, it is necessary that agents can converse with both textual and visual context, similarly to how humans interact in the real world; indeed, communication grounded in images is naturally engaging to humans (Hu et al., 2014). Recent efforts have gone beyond classical, fact-based tasks such as image captioning or visual question answering (Antol et al., 2015; Das et al., 2017a) to produce models that can respond and communicate about images in the flow of natural conversation (Shuster et al., 2020, 2019b).

然而,为了更好地接近人类的能力,AI智能体需要能够结合文本和视觉上下文进行对话,就像人类在现实世界中的互动方式一样;事实上,基于图像的交流对人类而言天然具有吸引力 (Hu et al., 2014)。近期研究已超越图像描述或视觉问答等传统基于事实的任务 (Antol et al., 2015; Das et al., 2017a),开发出了能在自然对话流中响应和讨论图像的模型 (Shuster et al., 2020, 2019b)。

In this work, we explore the extension of largescale conversational agents to image-based dialogue. We combine representations from imagebased models that have been trained on object detection tasks (Lu et al., 2020, 2019) with representations from Transformers with billions of parameters pre-trained on massive (text-only) dialogue datasets, to produce responses conditioned on both visual and textual context. To ensure that our model retains the ability to engage in regular, text-based conversation, we include in our training procedure multi-tasking with datasets expressly designed to instill conversational skills in the model (Smith et al., 2020).

在本工作中,我们探索将大规模对话智能体扩展至基于图像的对话领域。我们将基于图像的目标检测任务训练模型(Lu等人,2020,2019)的表征与基于海量(纯文本)对话数据集预训练的数十亿参数Transformer表征相结合,生成同时基于视觉和文本上下文的响应。为确保模型保留常规文本对话能力,我们在训练过程中引入了专门设计用于培养对话技能的多任务数据集(Smith等人,2020)。

We find that our best resulting models are as proficient in text-only conversation as the current best reported dialogue models, with respect to both automated metrics measuring performance on the relevant datasets and human evaluations of engagingness. Simultaneously, our model significantly outperforms recent strong multi-modal dialogue models when in an image-dialogue regime; we measure several metrics via pairwise human judgments using ACUTE-Eval (Li et al., 2019b) to show that our model is not only more engaging but can also discuss and reference visual context throughout a conversation. See Figure 1 for one sample cherrypicked conversation with our model, with random and lemon-picked conversations in Figures 2 and 3.

我们发现,无论是在相关数据集上的自动化性能指标还是人类对互动性的评估,我们最终的最佳模型在纯文本对话方面的熟练程度都与当前报道的最佳对话模型相当。同时,在图像对话场景下,我们的模型显著优于近期表现优异的多模态对话模型;我们通过ACUTE-Eval (Li et al., 2019b) 进行成对人类评判来衡量多项指标,结果表明我们的模型不仅更具吸引力,还能在整个对话过程中讨论和引用视觉上下文。图1展示了与模型的一次精选对话示例,随机和刻意挑选的对话分别见图2和图3。

One important avenue that we explore with our best models is safety - that is, ensuring that our models are not offensive to their conversational partners. Dialogue safety is indeed a well-studied, but still unsolved, research area (Dinan et al., 2019b; Liu et al., 2019; Dinan et al., 2019a; Blod- gett et al., 2020; Khatri et al., 2018; Schafer and Burtenshaw, 2019; Zhang et al., 2018a), yet we note that safety in the context of image-dialogue is relatively less explored. In this work we examine gender bias and toxicity of text generations in the context of various styles from the Image-Chat dataset (Shuster et al., 2020). Notably, after tuning the model to reduce toxicity and gender bias, we find that model engaging ness does not diminish.

我们探索最佳模型的一个重要方向是安全性——即确保我们的模型不会冒犯对话伙伴。对话安全确实是一个被广泛研究但仍未解决的领域 (Dinan et al., 2019b; Liu et al., 2019; Dinan et al., 2019a; Blodgett et al., 2020; Khatri et al., 2018; Schafer and Burtenshaw, 2019; Zhang et al., 2018a),但我们注意到图像对话场景下的安全性研究相对较少。本研究基于Image-Chat数据集 (Shuster et al., 2020) 分析了不同风格下文本生成存在的性别偏见和毒性问题。值得注意的是,在调整模型以降低毒性和性别偏见后,我们发现模型的对话吸引力并未减弱。

We make publicly available the training procedure, initial pre-trained model weights, and datasets in ParlAI 1 to allow for fully reproducible results.

我们公开了训练流程、初始预训练模型权重以及 ParlAI 数据集 [1] ,以确保结果完全可复现。

2 Related Work

2 相关工作

2.1 Multi-Modal Models and Tasks

2.1 多模态模型与任务

Rich Representations Modeling multi-modal inputs, i.e. in the visual $^+$ textual context, is a well-researched area. Much of the existing literature explores similar architectures to our setup, i.e., using standard Transformer-based models to jointly encode text and images (Li et al., 2019a; Kiela et al., 2019). Others have explored modifications to the standard self-attention scheme in Transformers by incorporating additional co-attention (Lu et al., 2019; Tan and Bansal, 2019) or crossattention (Stefanini et al., 2020) layers. These models have primarily been used for generating rich joint representations of images and text for use in downstream tasks, and they primarily focus on the encoding aspect.

丰富表示建模

多模态输入(即视觉$^+$文本上下文)建模是一个被广泛研究的领域。现有文献大多探索与我们方案类似的架构,例如使用基于标准Transformer的模型联合编码文本和图像(Li et al., 2019a; Kiela et al., 2019)。其他研究则通过引入额外的共注意力(Lu et al., 2019; Tan and Bansal, 2019)或交叉注意力层(Stefanini et al., 2020),对Transformer中的标准自注意力机制进行改进。这些模型主要用于生成图像和文本的丰富联合表示以用于下游任务,且主要侧重于编码方面。

Visual Dialogue/Caption Generation Many tasks have been designed to measure the ability of a model to produce text in the context of images. Specifically, COCO Captions (Chen et al., 2015) and Flickr30k (Young et al., 2014) require a model to produce a caption for a given image. A variety of sequence-to-sequence (Vinyals et al., 2015; Xu et al., 2015; Anderson et al., 2018) and retrievalbased (Gu et al., 2018; Faghri et al., 2018; Nam et al., 2016) models have been applied to these tasks, however they do not go beyond the one-turn text generation expected for captioning an image. Other recent architectures have explored text generation (Wang et al., 2020; Park et al., 2020) in the context of the Visual Dialog (Das et al., 2017b) task; however, this task is primarily used to measure a model’s ability to answer questions about an image in the flow of a natural conversation, which differs somewhat from the open-domain dialogue task. Further still, there have been recent forays into open-domain natural dialogue in the context of images, e.g. in the Image-Chat (Shuster et al., 2020) and Image-grounded Conversations (Most af azad eh et al., 2017) tasks. Again, retrievalbased (Shuster et al., 2020; Ju et al., 2019) and sequence-to-sequence (Shuster et al., 2019b, 2020)

视觉对话/描述生成

许多任务旨在衡量模型在图像上下文中生成文本的能力。具体而言,COCO Captions (Chen et al., 2015) 和 Flickr30k (Young et al., 2014) 要求模型为给定图像生成描述。多种序列到序列模型 (Vinyals et al., 2015; Xu et al., 2015; Anderson et al., 2018) 和基于检索的模型 (Gu et al., 2018; Faghri et al., 2018; Nam et al., 2016) 已应用于这些任务,但它们并未超越图像描述所需的单轮文本生成。

近期其他架构探索了视觉对话 (Visual Dialog, Das et al., 2017b) 任务中的文本生成 (Wang et al., 2020; Park et al., 2020),但该任务主要用于衡量模型在自然对话流中回答图像相关问题的能力,与开放域对话任务略有不同。此外,近期还出现了基于图像的开放域自然对话尝试,例如 Image-Chat (Shuster et al., 2020) 和 Image-grounded Conversations (Mostafazadeh et al., 2017) 任务。同样,基于检索 (Shuster et al., 2020; Ju et al., 2019) 和序列到序列 (Shuster et al., 2019b, 2020) 方法被应用于此。

models have been used to conduct dialogue in this regime.

模型已被用于在此领域进行对话。

2.2 Multi-Task Training / Using Pre-Trained Representations

2.2 多任务训练/使用预训练表示

Our multi-modal model is constructed from models pre-trained in other, related domains; specifically, we seek to fuse the resulting weights of large-scale, uni-modal pre-training to achieve good performance on downstream, multi-modal tasks. Adapting pre-trained representations to later downstream tasks has been shown to be successful in NLP (Peters et al., 2019; Devlin et al., 2019) and dialogue in particular (Roller et al., 2020; Mazaré et al., 2018). Additionally, large-scale multi-modal pre-training has been shown to be effective in other downstream multi-modal tasks (Li et al., 2020; Chen et al., 2020; Singh et al., 2020b). Our work does not contain multi-modal pre-training in itself, but rather we explore what some have deemed “domain-adaptive pre-training” (Gururangan et al., 2020) or “intermediate task transfer” (Pr uk s a chat kun et al., 2020), in which pre-trained representations are “adapted” to a certain domain via an intermediate training step, before training/evaluating on the requisite downstream tasks. It is also worth noting that we employ multi-task training, to both help generalize the applicability of the model and improve its performance on downstream tasks/evaluations; this has been shown recently to help in both image-based (Singh et al., 2020b; Ju et al., 2019; Lu et al., 2020) and text-based (Shuster et al., 2019b; Roller et al., 2020) tasks.

我们的多模态模型由在其他相关领域预训练的模型构建而成;具体而言,我们试图融合大规模单模态预训练的权重结果,以在下游多模态任务中实现良好性能。将预训练表征适配到下游任务的方法已在自然语言处理(NLP)领域(Peters等人,2019;Devlin等人,2019)尤其是对话系统(Roller等人,2020;Mazaré等人,2018)中被证明有效。此外,大规模多模态预训练在其他下游多模态任务(Li等人,2020;Chen等人,2020;Singh等人,2020b)中也展现出优势。本研究本身不包含多模态预训练,而是探索了所谓的"领域自适应预训练"(Gururangan等人,2020)或"中间任务迁移"(Pr uk s a chat kun等人,2020)——通过中间训练步骤将预训练表征"适配"到特定领域,再对必要下游任务进行训练/评估。值得注意的是,我们采用多任务训练来提升模型的泛化能力及下游任务表现,近期研究证明该方法对图像类(Singh等人,2020b;Ju等人,2019;Lu等人,2020)和文本类(Shuster等人,2019b;Roller等人,2020)任务均有助益。

2.3 Comparison to Existing Models

2.3 与现有模型的对比

In this work, we compare our best resulting model to the following existing models in the literature:

在本研究中,我们将最佳成果模型与文献中的以下现有模型进行了对比:

• The 2.7-billion-parameter Transformer sequence-to-sequence model from Roller et al. (2020), known as “BST Generative 2.7B model” in that work, pre-trained on 1.5B comments from a third-party Reddit dump hosted by pushshift.io (Baumgartner et al., 2020). We refer to this model as “BlenderBot”. • DialoGPT, a GPT-2-based model trained on 147M exchanges from public-domain socialmedia conversations (Zhang et al., 2020) • Meena, a 2.6B-parameter Transformer sequence-to-sequence model trained on

• Roller等人 (2020) 提出的27亿参数Transformer序列到序列模型(在该研究中称为"BST Generative 2.7B模型"),基于pushshift.io提供的第三方Reddit评论数据集(Baumgartner等人, 2020)中15亿条评论进行预训练。我们将该模型称为"BlenderBot"。

• DialoGPT:基于GPT-2的模型,在1.47亿条公开社交媒体对话数据上训练(Zhang等人, 2020)

• Meena:26亿参数Transformer序列到序列模型

341GB of conversations (Adiwardana et al., 2020)

341GB的对话数据 (Adiwardana et al., 2020)

• The Image+Seq2Seq model from dodecaDialogue (Shuster et al., 2019b), a Transformer sequence-to-sequence model in which the encoder is passed pre-trained image features from the ResNeXt-IG-3.5B model (Mahajan et al., 2018). We use the do dec a Dialogue model fine-tuned on Image-Chat (and we refer to this model as “Dodeca”).

• 来自dodecaDialogue (Shuster等人, 2019b) 的Image+Seq2Seq模型:这是一个Transformer序列到序列模型,其编码器接收来自ResNeXt-IG-3.5B模型 (Mahajan等人, 2018) 的预训练图像特征。我们使用在Image-Chat上微调过的dodecaDialogue模型(将该模型称为"Dodeca")。

• 2AMMC (Ju et al., 2019), in which multiple Transformers are attended over in order to make use of a combination of ResNeXtIG-3.5B and Faster R-CNN image features (Girshick et al., 2018). We specifically use the 2AMMC model from Ju et al. (2019) because that model has the best test-set performance on Image-Chat in that work.

• 2AMMC (Ju等人,2019),该模型通过叠加多个Transformer来结合ResNeXtIG-3.5B和Faster R-CNN图像特征 (Girshick等人,2018)。我们专门采用Ju等人 (2019) 的2AMMC模型,因为该工作在Image-Chat测试集上取得了最佳性能。

3 Model Architectures

3 模型架构

The inputs to our models are visual and/or textual context, where applicable. We explore different ways to encode images from their pixels to vector representations, and we additionally compare ways of combining (fusing) the image and text representations before outputting a response.

我们模型的输入包括视觉和/或文本上下文(如适用)。我们探索了多种将图像从像素编码为向量表示的方法,并进一步比较了在输出响应前融合图像和文本表示的不同方式。

3.1 Image Encoders

3.1 图像编码器

Converting an image from pixels to a vector represent ation is a well-researched problem, and thus we explore using two different image encoders to determine the best fit for our tasks.

将图像从像素转换为向量表示是一个经过深入研究的问题,因此我们探索使用两种不同的图像编码器来确定最适合我们任务的方案。

• ResNeXt WSL We first experiment with image representations obtained from pre-training a ResNeXt $32\mathrm{x}48\mathrm{d}$ model on nearly 1 billion public images (Mahajan et al., 2018), with subsequent fine-tuning on the ImageNet1K dataset (Russ a kov sky et al., 2015) 2. The output of this model is a 2048-dimensional vector, and we refer to these representations as “ResNeXt WSL” features.

• ResNeXt WSL 我们首先试验了基于近10亿张公开图像预训练的ResNeXt $32\mathrm{x}48\mathrm{d}$模型(Mahajan等人,2018)获得的图像表征,随后在ImageNet1K数据集(Russakovsky等人,2015)上进行微调。该模型输出为2048维向量,我们将这些表征称为"ResNeXt WSL"特征。

• ResNeXt WSL Spatial One can also take the output of the image encoder prior to its final fully-connected layer to obtain “spatial” image features, resulting in a $2048\times7\times7.$ - dimensional vector. We explore results with these features as well, and refer to them as “ResNeXt WSL Spatial”.

• ResNeXt WSL 空间特征 也可以在图像编码器的最终全连接层之前获取其输出,从而得到“空间”图像特征,形成一个 $2048\times7\times7$ 维向量。我们也探索了使用这些特征的结果,并将其称为“ResNeXt WSL 空间特征”。

• Faster R-CNN Finally, we consider Faster RCNN features (Ren et al., 2017), using models trained in the Detectron framework (Girshick et al., 2018); specifically, we use a ResNeXt152 backbone trained on the Visual Genome dataset (Krishna et al., 2016) with the attribute head (Singh et al., 2020a) 3. The Faster RCNN features are $2048\times100$ -dimensional represent at ions, and we refer to these features as “Faster R-CNN”.

• Faster R-CNN 最后,我们采用 Detectron 框架 (Girshick et al., 2018) 训练的 Faster RCNN 特征 (Ren et al., 2017) ,具体使用基于 Visual Genome 数据集 (Krishna et al., 2016) 训练并配备属性头 (Singh et al., 2020a) 的 ResNeXt152 主干网络。Faster RCNN 特征为 $2048\times100$ 维表示,我们将这些特征称为 "Faster R-CNN"。

3.2 Multi-Modal Architecture

3.2 多模态架构

To jointly encode visual and textual context, we use a modification of a standard Transformer sequenceto-sequence architecture (Vaswani et al., 2017), whereby we experiment with different ways of combining (fusing) the image and text representations to generate an output sequence. Our Transformer model has 2 encoder layers, 24 decoder layers, 2560-dimensional embeddings, and 32 attention heads, and the weights are initialized from a 2.7-billion parameter model pre-trained on 1.5B comments from a third-party Reddit dump hosted by pushshift.io (Baumgartner et al., 2020) to generate a comment conditioned on the full thread leading up to the comment (Roller et al., 2020). From this base model, we explore two possible fusion schemes.

为了共同编码视觉和文本上下文,我们采用了一种改进的标准Transformer序列到序列架构 (Vaswani et al., 2017) ,通过实验探索不同方式来融合图像和文本表示以生成输出序列。我们的Transformer模型包含2个编码器层、24个解码器层、2560维嵌入和32个注意力头,其权重初始化为一个基于pushshift.io托管的第三方Reddit数据集中15亿条评论预训练的27亿参数模型 (Baumgartner et al., 2020) ,该模型用于生成以前置完整讨论串为条件的评论 (Roller et al., 2020) 。基于这个基础模型,我们研究了两种可能的融合方案。

Late Fusion The late fusion method is the same as used in Shuster et al. (2019b), whereby the encoded image is projected to the same dimension as the text encoding of the Transformer encoder, concatenated with this output as an extra “token” output, and finally fed together as input to the decoder.

晚期融合

晚期融合方法与 Shuster 等人 (2019b) 使用的方法相同,即将编码后的图像投影到与 Transformer 编码器的文本编码相同的维度,作为额外的 "token" 输出与该编码结果拼接,最终作为解码器的共同输入。

Early Fusion We additionally experiment with an earlier fusion scheme to allow greater interac- tion between the image and text in the sequenceto-sequence architecture. In a similar fashion to VisualBERT (Li et al., 2019a) and multi-modal Bitransformers (Kiela et al., 2019), we concatenate the projected image encoding from the visual input with the token embeddings from the textual input, assign each a different segment embedding, and jointly encode the text and image in the encoder. The encoder thus performs full self-attention across the textual and visual context, with the entire output used as normal in the sequence-to-sequence architecture.

早期融合

我们还尝试了一种更早的融合方案,以增强序列到序列架构中图像与文本的交互。类似于 VisualBERT (Li et al., 2019a) 和多模态 Bitransformers (Kiela et al., 2019) 的做法,我们将视觉输入中投影后的图像编码与文本输入的 Token 嵌入拼接起来,为两者分配不同的片段嵌入,并在编码器中联合编码文本和图像。因此,编码器在文本和视觉上下文中执行完整的自注意力机制,整个输出按常规方式用于序列到序列架构。

As our resulting model can be seen as a multimodal extension to the BlenderBot model (Roller et al., 2020), we refer to it as “Multi-Modal BlenderBot” (MMB).

由于我们的最终模型可视为BlenderBot模型 (Roller et al., 2020) 的多模态扩展版本,我们将其称为"多模态BlenderBot (Multi-Modal BlenderBot, MMB)"。

4 Training Details

4 训练细节

When training the model, we fix the weights of the pre-trained image encoders, except the linear projection to the Transformer output dimension, and fine-tune all of the weights of the Transformer encoder/decoder.

在训练模型时,我们固定预训练图像编码器的权重(仅调整线性投影至Transformer输出维度的部分),并对Transformer编码器/解码器的所有权重进行微调。

4.1 Domain-Adaptive Pre-Training

4.1 领域自适应预训练

During training, the vast majority of trainable model weights are initialized from a large, 2.7B parameter Transformer pre-trained solely on tex- tual input. As our end goal is to achieve improved performance on multi-modal tasks, we found that training first on domain-specific/related data was helpful in order to adapt the Transformer model to an image setting. Following (Singh et al., 2020b), we experimented with pre-training on COCO Captions (Chen et al., 2015) - a dataset of over 120k images with 5 captions each, resulting in over $600\mathrm{k}$ utterances - in which the model is trained to generate a caption solely from image input. We additionally explored multi-tasked training with COCO Captions and on the same third-party Reddit dump hosted by pushshift.io (Baumgartner et al., 2020) as the one used in pre-training the Transformer model, to see whether it was necessary to ensure the model did not stray too far from its ability to handle pure textual input.

在训练过程中,绝大多数可训练模型权重都初始化自一个仅基于文本输入预训练的27亿参数Transformer。由于我们的最终目标是提升多模态任务性能,我们发现先使用领域相关数据进行训练有助于使Transformer模型适应图像场景。参照(Singh et al., 2020b)的方法,我们在COCO Captions(Chen et al., 2015)数据集上进行了预训练实验——该数据集包含超过12万张图像(每张图配5条描述),总计超$600\mathrm{k}$条文本语句——训练模型仅根据图像输入生成描述。我们还尝试了COCO Captions与第三方Reddit语料库(pushshift.io托管,Baumgartner et al., 2020)的多任务训练(该Reddit语料与Transformer预训练所用数据相同),以验证是否需要确保模型不偏离其处理纯文本输入的能力。

During domain-adaptive pre-training, we trained the model on 8 GPUs for $10\mathrm{k}{-}30\mathrm{k}$ SGD updates, using early-stopping on the validation set. The models were optimized using Adam (Kingma and Ba, 2014), with sweeps over a learning rate between 5e-6 and 3e-5, using 100 warmup steps.

在领域自适应预训练阶段,我们使用8块GPU对模型进行了 $10\mathrm{k}{-}30\mathrm{k}$ 次SGD更新训练,并在验证集上采用早停策略。模型优化采用Adam (Kingma and Ba, 2014) 算法,学习率在5e-6至3e-5之间进行扫描,并设置100步预热步数。

4.2 Fine-tuning Datasets

4.2 微调数据集

The goal of our resulting model is to perform well in a multi-modal dialogue setting; thus, we fine-tune the model on both dialogue and imagedialogue datasets. For dialogue-based datasets, we consider the same four as in Roller et al. (2020): ConvAI2 (Dinan et al., 2020b), Empathetic Dialogues (Rashkin et al., 2019), Wizard of Wikipedia (Dinan et al., 2019c), and Blended Skill Talk (Smith et al., 2020). To model image-dialogue, we con- sider the Image-Chat dataset (Shuster et al., 2020).

我们最终模型的目标是在多模态对话场景中表现良好;因此,我们在对话和图像对话数据集上对模型进行了微调。对于基于对话的数据集,我们采用了与Roller等人 (2020) 相同的四个数据集:ConvAI2 (Dinan等人, 2020b)、Empathetic Dialogues (Rashkin等人, 2019)、Wizard of Wikipedia (Dinan等人, 2019c) 以及Blended Skill Talk (Smith等人, 2020)。为了建模图像对话,我们使用了Image-Chat数据集 (Shuster等人, 2020)。

We give a brief description below of the five datasets; more information can be found in Roller et al. (2020) and Shuster et al. (2020).

我们简要介绍以下五个数据集;更多信息可参阅 Roller et al. (2020) 和 Shuster et al. (2020)。

ConvAI2 The ConvAI2 dataset (Dinan et al., 2020b) is based on the Persona-Chat (Zhang et al., 2018b) dataset, and contains 140k training utterances in which crowd workers were given prepared “persona” lines, e.g. “I like dogs” or “I play basketball”, and then paired up and asked to get to know each other through conversation.

ConvAI2

ConvAI2数据集 (Dinan et al., 2020b) 基于Persona-Chat (Zhang et al., 2018b) 数据集构建,包含14万条训练语句。该数据集的构建方式为:向众包工作人员提供预设的"人设"语句(例如"我喜欢狗"或"我打篮球"),随后将参与者两两配对,要求他们通过对话互相了解。

Empathetic Dialogues (ED) The Empathetic Dialogues dataset (Rashkin et al., 2019) was created via crowd workers as well, and involves two speakers playing different roles in a conversation. One is a “listener”, who displays empathy in a conversation while conversing with someone who is describing a personal situation. The model is trained to act like the “listener”. The resulting dataset contains $50\mathrm{k\Omega}$ utterances.

共情对话数据集 (ED)

共情对话数据集 (Rashkin et al., 2019) 同样通过众包方式构建,要求两名对话者扮演不同角色。其中"倾听者"需在与他人讨论个人经历时展现共情能力,模型被训练模拟该角色。最终数据集包含 $50\mathrm{k\Omega}$ 条话语。

Wizard of Wikipedia (WoW) The Wizard of Wikipedia dataset (Dinan et al., 2019c) involves two speakers discussing a given topic in depth, comprising 194k utterances. One speaker (the “apprentice”) attempts to dive deep on and learn about a chosen topic; the other (the “wizard”) has access to a retrieval system over Wikipedia, and is tasked with teaching their conversational partner about a topic via grounding their responses in a knowledge source.

维基百科奇才 (WoW)

维基百科奇才数据集 (Dinan et al., 2019c) 包含两位发言者围绕特定主题展开的深度讨论,共包含19.4万条对话。其中一位发言者("学徒")试图深入探索并学习选定主题;另一位("奇才")则拥有基于维基百科的检索系统,其任务是通过将回答基于知识源来教导对话伙伴理解该主题。

Blended Skill Talk (BST) Blended Skill Talk (Smith et al., 2020) is a dataset that essentially combines the three above. That is, crowd workers are paired up similarly to the three previous datasets, but now all three “skills” (personalization, empathy, and knowledge) are at play throughout the dialogue: the speakers are tasked with blending the skills while engaging their partners in conversation. The resulting dataset contains $74\mathrm{k\Omega}$ utterances.

混合技能对话 (BST)

混合技能对话 (Smith等, 2020) 是一个本质上结合了上述三个数据集的数据集。也就是说,与之前三个数据集类似,众包工作者被配对进行对话,但现在所有三种"技能"(个性化、共情和知识)在整个对话过程中都发挥作用:说话者的任务是在与伙伴交谈时融合这些技能。最终的数据集包含 $74\mathrm{k\Omega}$ 条话语。

Image-Chat (IC) The Image-Chat dataset (Shuster et al., 2020) contains $200\mathrm{k\Omega}$ dialogues over 200k images: crowd workers were tasked with discussing an image in the context of a given style, e.g. “Happy”, “Cheerful”, or “Sad”, in order to hold an engaging conversation. The resulting dataset contains over $400\mathrm{k\Omega}$ utterances. For each conversation in the dataset, the two speakers are each assigned a style in which that speaker responds, and these styles are optionally fed into models as part of the input, alongside the dialogue context. There are 215 styles in total, and styles are divided into 3 categories, “positive”, “neutral”, and “negative”.4

Image-Chat (IC)

Image-Chat数据集 (Shuster等人, 2020) 包含基于20万张图像的$200\mathrm{k\Omega}$组对话:众包工作人员需在指定风格(如"快乐"、"欢快"或"悲伤")的语境下讨论图像以展开引人入胜的对话。最终数据集包含超过$400\mathrm{k\Omega}$条话语。数据集中每个对话的两位发言者均被分配特定应答风格,这些风格可随对话上下文作为输入的一部分馈入模型。共包含215种风格,分为"积极"、"中立"和"消极"三大类[4]。

In the fine-tuning stage, we consider two different regimes: one in which we multi-task train on the five datasets together, and one in which we train on Image-Chat alone. While the latter regime is useful in exploring upper bounds of model performance, our main goal is to build a model that can display the requisite skills of an engaging convers ation a list (empathy, personalization, knowledge) while also having the ability to respond to and converse about images; thus, we are more interested in the former training setup. In this stage, we train the models on 8 GPUs for around $10\mathrm{k\Omega}$ train updates using a similar optimization setup as in the domain-adaptive pre-training stage.

在微调阶段,我们考虑两种不同的训练模式:一种是在五个数据集上联合进行多任务训练,另一种是仅在Image-Chat数据集上单独训练。虽然后一种模式有助于探索模型性能的上限,但我们的主要目标是构建一个既能展现吸引人的对话所需技能(共情、个性化、知识),又能响应和讨论图像的模型;因此,我们更关注前一种训练方式。在此阶段,我们使用与领域自适应预训练阶段相似的优化设置,在8块GPU上进行了约$10\mathrm{k\Omega}$次训练更新。

5 Experiments

5 实验

5.1 Automatic Evaluations

5.1 自动评估

5.1.1 Results on Pre-Training Datasets

5.1.1 预训练数据集结果

To fully understand the effects of various training data and image features, as well as multi-modal fusion schemes, we measure model perplexity on the COCO and pushshift.io Reddit validation sets. We are primarily interested in performance on COCO Captions, as the model has already been extensively pre-trained on the pushshift.io Reddit data. The results are shown in Table 1.

为了全面理解不同训练数据和图像特征以及多模态融合方案的影响,我们在COCO和pushshift.io Reddit验证集上测量了模型困惑度。我们主要关注COCO Captions上的性能表现,因为该模型已在pushshift.io Reddit数据上进行了大量预训练。结果如表1所示。

Training Data We first note that, regardless of image fusion and image feature choices, we see the best performance on COCO Captions by simply fine-tuning exclusively on that data. This is an expected result, though we do see that in nearly every scenario the decrease in perplexity is not large (e.g. 5.23 for Faster R-CNN early fusion multi-tasking, down to 4.83 with just COCO Captions).

训练数据

我们首先注意到,无论选择何种图像融合和图像特征,仅在COCO Captions数据上进行微调即可获得最佳性能。这一结果符合预期,尽管在几乎所有场景中,困惑度(perplexity)的下降幅度并不大(例如Faster R-CNN早期融合多任务处理的困惑度为5.23,而仅使用COCO Captions时降至4.83)。

Image Features Across all training setups, we see that using spatially-based image features (ResNeXt WSL Spatial, Faster R-CNN) yields better performance than just a single vector image represent ation (ResNeXt WSL). This difference is particularly noticeable when training with COCO and pushshift.io Reddit, where with Faster R-CNN features the model obtains an average ppl of 9.13 over the two datasets, while with ResNeXt WSL features the model only obtains $10.1\mathrm{ppl}$ . We find that using Faster R-CNN features additionally outperforms using ResNeXt WSL Spatial features, where using the latter obtains an average of $10.0\mathrm{ppl}$ over the two datasets.

图像特征

在所有训练设置中,我们发现使用基于空间位置的特征(ResNeXt WSL Spatial、Faster R-CNN)比单一向量图像表征(ResNeXt WSL)能带来更好的性能。这一差异在使用COCO和pushshift.io Reddit数据集训练时尤为明显:采用Faster R-CNN特征时模型在两个数据集上的平均困惑度(ppl)为9.13,而使用ResNeXt WSL特征时仅获得$10.1\mathrm{ppl}$。进一步实验表明,Faster R-CNN特征也优于ResNeXt WSL Spatial特征——后者在两个数据集上的平均困惑度为$10.0\mathrm{ppl}$。

Table 1: Model performance, measured via perplexity on validation data, on domain-adaptive pre-training datasets, comparing various image features and image fusion techniques. The top three rows involve multi-task training on COCO Captions and pushshift.io Reddit, while the bottom three rows involve single task training on COCO Captions only. We note that early fusion with Faster R-CNN features yields the best performance on COCO Captions.

表 1: 模型在领域自适应预训练数据集上的性能表现(通过验证数据的困惑度衡量),比较了不同图像特征和图像融合技术。前三行涉及 COCO Captions 和 pushshift.io Reddit 的多任务训练,后三行仅涉及 COCO Captions 的单任务训练。我们注意到,使用 Faster R-CNN 特征的早期融合在 COCO Captions 上表现最佳。

| 图像特征 | 图像融合 | COCO (ppl) | pushshift.io Reddit (ppl) | 平均 |

|---|---|---|---|---|

| COCO & pushshift.io Reddit 训练数据 | ||||

| ResNeXtWSL | 晚期 早期 | 11.11 6.69 | 13.80 13.50 | 12.45 10.10 |

| ResNeXt WSL Spatial | 晚期 早期 | 7.43 6.53 | 13.00 13.46 | 10.22 10.00 |

| Faster R-CNN | 晚期 早期 | 5.26 5.23 | 13.17 13.15 | 9.21 9.13 |

| 仅 COCO 训练数据 | ||||

| ResNeXtWSL | 晚期 早期 | 5.82 6.21 | 19.52 21.30 | 12.67 13.76 |

| ResNeXt WSL Spatial | 晚期 早期 | 6.51 6.19 | 16.50 18.77 | 11.51 |

| Faster R-CNN | 晚期 早期 | 5.21 4.83 | 17.88 18.81 | 12.48 11.55 11.82 |

Table 2: Ablation analysis of the impact of various image features, training data (including domain-adaptive pretraining), and image fusion techniques on the datasets described in Section 4.2, where $\mathrm{BST^{+}}$ refers to the four text-only dialogue datasets (ConvAI2, ED, WoW, and BST). The numbers shown are model perplexities measured on each of the datasets’ validation data. Performance on the first turn of Image-Chat is also measured to highlight model performance when only given visual context. We note that using Faster R-CNN image features results in the best average performance, as well as the best performance on Image-Chat.

表 2: 不同图像特征、训练数据(包括领域自适应预训练)和图像融合技术对4.2节所述数据集的消融分析结果,其中$\mathrm{BST^{+}}$指四个纯文本对话数据集(ConvAI2、ED、WoW和BST)。表中数值为各数据集验证数据上的模型困惑度。同时测量Image-Chat首轮表现以突显仅提供视觉上下文时的模型性能。我们注意到使用Faster R-CNN图像特征能取得最佳平均性能,以及在Image-Chat上的最优表现。

| 图像特征 | 训练数据 | 图像融合 | ConvAI2 | ED | WoW | BST | IC首轮 | IC | 文本平均 | 全体平均 |

|---|---|---|---|---|---|---|---|---|---|---|

| 无 | 无 BST+ BST+ +IC | 无 | 12.31 8.74 8.72 | 10.21 8.32 8.24 | 13.00 8.78 8.81 | 12.41 10.08 10.03 | 32.36 38.94 16.03 | 21.48 23.13 13.21 | 11.98 8.98 8.95 | 13.88 14.76 9.83 |

| ResNeXtWSL | BST++IC BST++IC BST++IC+COCO+Reddit BST++IC+COCO+Reddit BST++IC+COCO BST++IC+COCO | 晚期 早期 晚期 早期 晚期 早期 晚期 | 8.71 8.80 9.27 9.34 8.79 8.91 | 8.25 8.32 8.87 8.90 8.36 8.38 8.24 | 8.87 8.79 9.45 9.48 9.00 8.99 8.88 | 10.09 10.17 10.74 10.78 10.21 10.29 10.10 | 16.20 15.16 17.56 15.87 16.00 14.64 | 13.27 12.99 14.44 13.88 13.31 12.85 | 8.98 9.02 9.58 9.62 9.09 9.14 | 9.84 9.81 10.56 10.48 9.93 9.88 |

| ResNeXtWSL空间特征 | BST++IC BST++IC BST++IC+COCO+Reddit BST++IC+COCO+Reddit BST++IC+COCO BST++IC+COCO | 早期 晚期 早期 晚期 早期 | 8.71 8.79 8.76 9.30 8.73 8.81 | 8.29 8.31 8.82 8.31 8.34 | 8.92 8.88 9.46 8.87 8.99 | 10.15 10.14 10.76 10.13 10.22 | 15.39 15.34 15.20 15.67 15.04 14.76 | 13.02 13.02 13.04 13.79 12.98 12.87 | 8.98 9.04 9.02 9.56 9.01 9.09 | 9.78 9.83 9.83 10.43 9.84 9.80 |

| Faster R-CNN | BST+IC BST++IC BST++IC+COCO+Reddit BST++IC+COCO+Reddit BST++IC+COCO BST++IC+COCO | 晚期 早期 晚期 早期 晚期 早期 | 8.70 8.81 8.75 8.78 8.74 8.81 | 8.24 8.33 8.31 8.31 8.33 8.34 | 8.92 8.81 8.93 8.85 8.87 8.93 | 10.07 10.15 10.14 10.15 10.13 10.19 | 13.97 13.66 13.83 13.51 13.85 | 12.48 12.43 12.49 12.36 12.51 | 8.98 9.03 9.03 9.02 9.02 | 9.68 9.71 9.73 9.69 9.72 |

Image Fusion Finally, holding all other variables constant, we find that using our early fusion scheme yields improvements over using a late fusion scheme. E.g., with Faster-R-CNN features in the COCO-only setup, we see a decrease in perplexity from 5.21 to 4.83; with ResNeXt WSL Spatial image features, we see perplexity differences ranging from 0.3 to 0.9 depending on the training data.

图像融合

最后,在保持其他所有变量不变的情况下,我们发现采用早期融合方案相比晚期融合方案能带来性能提升。例如,在仅使用COCO数据集的配置中,采用Faster-R-CNN特征时,困惑度从5.21降至4.83;而使用ResNeXt WSL空间图像特征时,根据训练数据的不同,困惑度差异在0.3到0.9之间波动。

5.1.2 Results on Fine-Tuned Datasets

5.1.2 微调数据集上的结果

We conduct the same ablation setups for training on the dialogue and image-and-dialogue datasets as we did in the domain-adapative pre-training setup; the results for multi-tasking all of the datasets are in Table 2, while results for fine-tuning on Image

我们对对话数据集以及图像-对话混合数据集进行了与领域自适应预训练相同的消融实验设置。多任务联合训练所有数据集的结果见表2,而仅在Image数据集上微调的结果则显示在

Table 3: Ablation analysis of the impacts of various image features, training data (including domain-adaptive pretraining), and image fusion techniques when training on the Image-Chat dataset alone (i.e., ignoring the text-only dialogue datasets). As in Table 2, we note that Faster R-CNN features yield the best results on Image-Chat.

表 3: 仅使用Image-Chat数据集训练时(即忽略纯文本对话数据集),不同图像特征、训练数据(包括领域自适应预训练)和图像融合技术的消融分析。如表2所示,我们注意到Faster R-CNN特征在Image-Chat上取得了最佳结果。

| 图像特征 | 训练数据 | 图像融合 | IC第一轮 | IC |

|---|---|---|---|---|

| 无 | 无Image Chat | 无 | 32.36 28.71 | 21.48 13.17 |

| ResNeXt WSL | IC IC IC + COCO +Reddit IC + COCO + Reddit IC + COCO IC + COCO | 晚期 早期 晚期 早期 晚期 早期 | 14.80 16.00 16.73 15.71 14.70 14.62 | 12.83 13.21 13.92 13.53 12.95 12.92 |

| ResNeXt WSL Spatial | IC IC IC + COCO + Reddit IC + COCO +Reddit IC + COCO IC + COCO | 晚期 早期 晚期 早期 晚期 | 15.34 15.27 15.09 15.55 15.02 | 13.01 13.00 12.95 13.50 12.95 |

| Faster R-CNN | IC IC IC + COCO +Reddit IC + COCO + Reddit IC + COCO IC + COCO | 早期 晚期 早期 晚期 早期 晚期 早期 | 14.62 13.99 13.76 13.75 13.44 13.82 13.56 | 12.87 12.51 12.42 12.43 12.29 12.48 12.37 |

Table 4: Test results of best multi-task model on $\mathrm{BST^{+}}$ and Image Chat datasets, measured via perplexity (ppl), F1, BLEU-4, and ROUGE-L scores. ConvAI2 results are reported on the validation set, as the test set is hidden.

表 4: 最佳多任务模型在 $\mathrm{BST^{+}}$ 和 Image Chat 数据集上的测试结果,通过困惑度 (ppl)、F1、BLEU-4 和 ROUGE-L 分数衡量。ConvAI2 结果在验证集上报告,因为测试集被隐藏。

| 模型 | ConvAI2 | ED | WoWSeen | BST | IC | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| F1 | B | R | F1 | B | R | F1 | B | R | F1 | B | R | F1 | B | R | |

| DialoGPT (Zhang et al., 2020) | 11.4 | 0.1 | 8.5 | 10.8 | 0.3 | 8.2 | 8.6 | 0.1 | 5.9 | 10.5 | 0.1 | 7.6 | 6.2 | 0.1 | 5.2 |

| Dodeca (Shuster et al., 2019b) | 21.7 | 5.5 | 33.7 | 19.3 | 3.7 | 31.4 | 38.4* | 21.0* | 45.4* | 12.9 | 2.1 | 24.6 | |||

| 2AMMC (Ju et al., 2019) | 9.3 | 0.1 | 11.0 | ||||||||||||

| BlenderBot (Roller et al., 2020) | 18.4 | 1.1 | 22.7 | 19.1 | 1.4 | 24.2 | 18.8 | 2.3 | 17.5 | 17.8 | 1.0 | 19.2 | 9.2 | 0.1 | 12.3 |

| Multi-Modal BlenderBot (ours) | 18.4 | 1.1 | 22.6 | 19.2 | 1.5 | 24.5 | 18.6 | 2.2 | 17.4 | 17.8 | 1.0 | 19.3 | 13.1 | 0.4 | 18.0 |

Table 5: Test performance of existing models on the datasets considered, compared to MMB (specifically, the “MMB Style” model discussed in Section 5.2.2), in terms of F1, BLEU-4 (B), and ROUGE-L (R) scores. * indicates that gold knowledge was utilized in the WoW task.

表 5: 现有模型在考虑的数据集上的测试性能,与 MMB (具体指第 5.2.2 节讨论的 "MMB Style" 模型) 在 F1、BLEU-4 (B) 和 ROUGE-L (R) 分数上的对比。* 表示在 WoW 任务中使用了黄金知识。

| 数据集 | PPL | F1 | BLEU-4 | ROUGE-L |

|---|---|---|---|---|

| Image Chat (第一轮) | 13.56 | 11.96 | 0.411 | 16.72 |

| Image Chat | 12.64 | 13.14 | 0.418 | 18.00 |

| BlendedSkillTalk | 9.98 | 17.84 | 0.980 | 19.25 |

| Wizard of Wikipedia (已见) | 8.82 | 18.63 | 2.224 | 17.39 |

| ConvAI2 | 8.78 | 18.41 | 1.080 | 22.64 |

| EmpatheticDialogues | 8.46 | 19.23 | 1.448 | 24.46 |

Chat alone are in Table 3.

仅聊天内容见表3。

From these extensive ablations, we note some interesting conclusions.

从这些广泛的消融实验中,我们得出了一些有趣的结论。

Text-Only Datasets First, we look at the performance of our models on the text-only datasets. The second-to-last column in Table 2 shows the average perplexity across the text-only datasets. If we compare the model that performs best on Image-Chat across all sets of image features (Faster-R-CNN features with $\mathrm{BST^{+}+I C+C O C O+R e}$ eddit training data with early fusion) to the model in row 2, which is trained both without images and without Image-Chat on the text-only datasets, we see that the perplexity differences are quite small: that is, including training on an image-dialogue dataset, and overloading the Transformer encoder/decoder to incorporate image features, does not hinder dialogue performance.

纯文本数据集

首先,我们观察模型在纯文本数据集上的表现。表2的倒数第二列显示了纯文本数据集的平均困惑度。若将在Image-Chat上表现最佳的模型(采用Faster-R-CNN特征、结合$\mathrm{BST^{+}+I C+C O C O+R e}$编辑训练数据及早期融合)与第2行模型(未使用图像数据且未在Image-Chat上训练的纯文本模型)进行对比,可发现两者困惑度差异极小:这表明加入图像对话数据集训练,以及让Transformer编码器/解码器额外处理图像特征,并不会损害对话性能。

Training Data Across all image-feature choices, we see that the choice of training data indeed makes a difference in performance on Image-Chat. Examining the early fusion model in Table 2, by including COCO Captions (and, in some cases, pushshift.io Reddit) in the training data we see drops in perplexity from 12.99 to 12.85, 13.02 to 12.87, and 12.43 to 12.36 with ResNeXt WSL, ResNeXt WSL Spatial, and Faster R-CNN features respectively. The decrease in perplexity indicates that domain-adaptive pre-training indeed improves performance on Image-Chat. This difference is highlighted even more when we measure performance on the first turn of Image-Chat, in which the model must generate a response given no textual context: 15.16 to 14.64, 15.34 to 14.76, and 13.66 to 13.51. We note a similar trend in Table 3.

训练数据

在所有图像特征选择中,我们发现训练数据的选择确实会影响Image-Chat的性能表现。观察表2中的早期融合模型,当训练数据包含COCO Captions(以及部分情况下加入pushshift.io Reddit数据)时,ResNeXt WSL、ResNeXt WSL Spatial和Faster R-CNN特征分别使困惑度从12.99降至12.85、13.02降至12.87、12.43降至12.36。困惑度的下降表明领域自适应预训练确实能提升Image-Chat的性能。当我们测量Image-Chat首轮对话(模型需在无文本上下文条件下生成回复)的性能时,这种差异更为显著:15.16降至14.64、15.34降至14.76、13.66降至13.51。表3中也呈现类似趋势。

Image Features Again, we see that using Faster R-CNN features leads to dramatic improvements compared to using the ResNeXt WSL features (spatial or otherwise), yielding 12.36 perplexity on Image-Chat compared to 12.85 and 12.87 perplexity with ResNeXt WSL (non-spatial and spatial respectively) during multi-tasking, and 12.29 perplexity on Image-Chat compared to 12.92 and 12.87 respectively for single-task training on Image-Chat (see Table 3).

图像特征分析

我们再次发现,与使用ResNeXt WSL特征(空间或其他类型)相比,采用Faster R-CNN特征能带来显著提升:多任务训练时,Image-Chat上的困惑度(perplexity)为12.36,而ResNeXt WSL(非空间和空间特征)分别为12.85和12.87;单任务训练时,Image-Chat的困惑度为12.29,而ResNeXt WSL对应结果为12.92和12.87(见表3)。

Image Fusion Finally, we note as before that using our early fusion technique improves performance on Image-Chat across all ablation regimes. While the average perplexity across the dialogue datasets is best when using late image fusion, we obtain the best image chat perplexity when performing early image fusion.

图像融合

最后需要指出的是,与之前一样,使用我们的早期融合技术能提升所有消融方案下的Image-Chat性能。虽然在对话数据集中采用后期图像融合时平均困惑度最佳,但在执行早期图像融合时我们获得了最优的图像聊天困惑度。

Final Test Results Following the ablation analyses, we decide to compare our best multi-tasked and single-tasked trained model (with respect to the fine-tuning datasets), where we use Faster RCNN image features and an early fusion scheme, to existing models in the literature. For this comparison, we consider additional metrics that can be computed on the actual model generations: F1, BLEU-4 and ROUGE-L. We generate model responses during inference with the same generation scheme as in Roller et al. (2020) - beam search with beam size of 10, minimum beam length of 20, and tri-gram blocking within the current generation and within the full textual context. The test performance of our best multitask model on the various datasets is shown in Table 4, with comparisons to existing models from Section 2.3 in Table 5; all evaluations are performed in ParlAI 5.

最终测试结果

在消融分析后,我们决定将最佳多任务和单任务训练模型(基于微调数据集)与文献中的现有模型进行比较,其中使用了Faster RCNN图像特征和早期融合方案。为此比较,我们考虑了可在实际模型生成上计算的额外指标:F1、BLEU-4和ROUGE-L。在推理过程中,我们采用与Roller等人(2020)相同的生成方案生成模型响应——束搜索(beam size为10,最小束长度为20),并在当前生成和完整文本上下文中进行三元组阻塞。表4展示了我们最佳多任务模型在各数据集上的测试性能,表5则与第2.3节中的现有模型进行了对比;所有评估均在ParlAI 5中完成。

We first note that the Dodeca model performs well across the board, and indeed has the highest ROUGE-L, BLEU-4, and F1 scores for the three text-only datasets. Higher BLEU-4 scores can be attributed to specifying a smaller minimum generation length, as forcing the BlenderBot models to generate no less than 20 tokens hurts precision when compared to reference labels - this was verified as we tried generating with a smaller minimum length (5 tokens) and saw a $20%$ increase in BLEU-4 on Image-Chat for Multi-Modal BlenderBot. Higher ROUGE-L scores can additionally be attributed to specifying a larger minimum generation length; this was also verified by generating with a higher minimum length (50 tokens) where we saw nearly a $40%$ increase in ROUGE-L score. Nevertheless, we do not report an exhaustive search over parameters here for our model, and instead compare it to BlenderBot with the same settings next.

我们首先注意到,Dodeca模型在所有方面都表现良好,并且在三个纯文本数据集上确实获得了最高的ROUGE-L、BLEU-4和F1分数。较高的BLEU-4分数可以归因于指定了较小的最小生成长度,因为强制BlenderBot模型生成不少于20个token会降低与参考标签相比的精确度——这一点在我们尝试以更小的最小长度(5个token)生成时得到了验证,发现多模态BlenderBot在Image-Chat上的BLEU-4提高了20%。较高的ROUGE-L分数还可以归因于指定了更大的最小生成长度;这一点同样通过以更高的最小长度(50个token)生成得到了验证,我们看到ROUGE-L分数提升了近40%。尽管如此,我们并未在此对模型参数进行详尽搜索,而是接下来在相同设置下将其与BlenderBot进行比较。

When compared to its predecessor, text-only BlenderBot, MMB performs nearly the same on all four text-only datasets, indicating that MMB has not lost its proficiency in text-only dialogue. Additionally, when comparing performance on ImageChat to models trained on multi-modal data, MMB outperforms Dodeca in terms of F1 score (13.1 vs. 12.9) and outperforms 2AMMC on all three metrics. For the 2AMMC model, these metrics are computed under the assumption that the model’s chosen response (from a set of candidate responses collated from the Image-Chat training set) is the “generated” response.

与前代纯文本BlenderBot相比,MMB在所有四个纯文本数据集上表现几乎持平,表明MMB未丧失纯文本对话能力。此外,在多模态数据训练的模型对比中,MMB在ImageChat上的F1分数优于Dodeca (13.1 vs. 12.9),并在全部三项指标上超越2AMMC。对于2AMMC模型,其指标计算基于以下假设:模型从Image-Chat训练集整理的候选响应集合中选择的响应被视为"生成"响应。

5.2 Human Evaluations

5.2 人工评估

5.2.1 Summary of Human Evaluations

5.2.1 人工评估总结

Since our model must demonstrate compelling performance both in general chit-chat dialogue and when responding to an image when conversing with humans, we present several types of human evaluations in this section. Section 5.2.2 presents evaluations of chit-chat performance, Section 5.2.3 shows evaluations on how well the model responds to sample images, and Section 5.2.4 combines both of these skills by demonstrating how well the model performs when talking to a human about an image.

由于我们的模型在与人类对话时,既需要在日常闲聊中表现出色,又需要在回应图像时展现吸引力,因此本节将呈现多种类型的人工评估。5.2.2节展示闲聊性能评估,5.2.3节呈现模型对示例图像的响应能力评估,5.2.4节则通过演示模型在人类围绕图像对话时的综合表现,将这两项技能结合起来进行评估。

Table 6: Per-turn annotations and mean engagingness ratings of human/model conversations with MMB Style and BlenderBot. Both models perform roughly equivalently on these metrics. Ranges given are plus/minus one standard deviation.

表 6: 人类与MMB Style和BlenderBot模型对话的每轮标注及平均吸引力评分。两个模型在这些指标上表现大致相当。给出的范围为±1个标准差。

| MMB Style | BlenderBot | |

|---|---|---|

| 矛盾 | 2.15% | 3.37% |

| 英语不规范 | 0.27% | 0.26% |

| 重复 | 1.34% | 1.55% |

| 无关内容 | 2.42% | 2.33% |

| 无意义 | 4.03% | 2.07% |

| 无问题(良好) | 91.13% | 91.45% |

| 平均吸引力 | 4.70±0.60 | 4.70±0.60 |

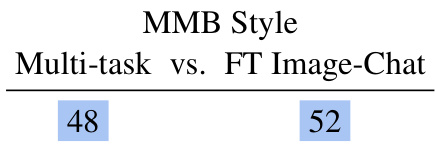

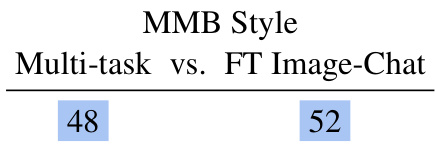

Table 7: ACUTE-Evals (engaging ness and human- ness) on human/model conversations with MMB Style, MMB Degendered, and BlenderBot. No ratings are statistically significant ratings per matchup).

表 7: 采用MMB Style、MMB Degendered和BlenderBot进行人机对话的ACUTE-Evals(参与度与拟人性)评估。所有评分均无统计学显著性 ( 每组对比的评分数量)。

| 50% 人类胜率 | 败率 % | |||

|---|---|---|---|---|

| MMBStyle | MMBStyle | MMBDegen50 | BB45 | |

| MMBDegen BlenderBot | 50 | 43 | ||

| MMBStyle | 55 | 57 52 | 53 | |

| MMB Degen BlenderBot | 48 47 | 47 | 53 |

Table 8: ACUTE-Evals (engaging ness and humanness) show that MMB Style outperforms DialoGPT with standard generation parameters (GPT-2 medium, beam search with beam width 10), DialoGPT with the same parameters but a min beam length of 20 (to match BlenderBot’s setting), and Meena. Asterisk indicates significance (two-tailed binomial test, $p<0.05)$ .

表 8: ACUTE-Evals (吸引力和拟人性) 显示,MMB Style 在标准生成参数 (GPT-2 medium,束搜索宽度为 10) 下优于 DialoGPT,与 DialoGPT 相同参数但最小束长度为 20 (以匹配 BlenderBot 的设置) 以及 Meena。星号表示显著性 (双尾二项检验,$p<0.05$)。

| Baseline VS | MMB | |

|---|---|---|

| DialoGPTstd.beam | 17 * | 83 * |

| DialoGPT minbeam 20 | 29 * | 71 * |

| Meena | 37 * | 63 * |

| Human | ||

| DialoGPT std.beam | 33 * | 67 * |

| DialoGPT min beam 20 | 40 * | 60 * |

| Meena | 36 * | 64 * |

5.2.2 Human/Model Chats Without Images

5.2.2 无图像的人类/模型对话

We compare MMB Style, our model exposed to Image-Chat styles during training, to BlenderBot by having crowd sourced workers chat with our models, over 50 conversations per model. Each conversation consists of 7 turns per speaker, with the human speaking first by saying “Hi!”, following the convention of Adiwardana et al. (2020). After every model response, the human records if the response contains any one of a number of different issues. Finally, at the end of the conversation, the human gives a 1-to-5 Likert-scale rating of the model’s overall engaging ness. No Image-Chat style is shown to MMB Style at the beginning of these conversations, matching its training setup in which no style was given when training on dialogue datasets.

我们将MMB Style(训练过程中接触过Image-Chat风格的模型)与BlenderBot进行对比,通过众包工作者与模型进行对话(每个模型超过50轮对话)。每轮对话包含每位参与者7次发言,按照Adiwardana等人(2020)的惯例,人类首先以"你好!"开始对话。在每次模型回应后,人类会记录回应是否包含若干问题中的任何一种。最后,在对话结束时,人类会以1-5分的李克特量表对模型的整体吸引力进行评分。这些对话开始时不会向MMB Style展示任何Image-Chat风格,与其在对话数据集训练时的设置保持一致(训练时也未提供任何风格)。

Table 6 shows that humans flag the models’ responses at comparable rates for most categories of issues, with BlenderBot being flagged slightly more often for contradictions and repetitive ness and MMB Style flagged more often for being nonsensical; however, the mean engaging ness rating of the two models across conversations is the same (both 4.7 out of 5).

表 6: 数据显示,在大多数问题类别中,人类对模型响应的标记率相当。BlenderBot 在自相矛盾和重复性方面被标记的频率略高,而 MMB Style 因无意义内容被标记的频率更高。不过,两种模型在对话中的平均吸引力评分相同 (均为 4.7 分,满分 5 分)。

We then perform ACUTE-Evals (Li et al., 2019b) on the collected conversations of MMB Style and BlenderBot in order for crowd sourced raters to directly compare conversations from different models in an A/B setting. For each comparison, we ask each rater to compare conversations on one of two metrics, following Li et al. (2019b):

我们随后对收集到的MMB Style和BlenderBot对话进行ACUTE-Evals (Li et al., 2019b) ,以便众包评分者在A/B测试中直接比较不同模型的对话。对于每次比较,我们要求每位评分者根据Li et al. (2019b) 的方法,在以下两个指标之一上对对话进行比较:

Results are shown in Table 7: raters choose conversations from one model over the other roughly equally, with no statistically significant differences among models.

结果如表 7 所示:评分者选择不同模型的对话比例大致相当,各模型之间无统计学显著差异。

In Table 8, we also compare MMB Style to two other baseline models, DialoGPT and Meena. Raters are significantly more likely to prefer MMB Style over both of these models with respect to both the engaging ness and humanness metrics.

在表8中,我们还将MMB Style与其他两个基线模型DialoGPT和Meena进行了比较。评分者明显更倾向于认为MMB Style在这两个模型的吸引力和人性化指标上表现更优。

5.2.3 Initial Responses to Images

5.2.3 对图像的初始响应

We measure MMB Style’s ability to communicate about what it perceives visually by generating responses of the model to 100 images in the test set of Image-Chat.6 Only Image-Chat images for which the first speaker speaks with a style in the list of “positive” or ‘neutral” styles are included when creating this set of 100 images, and all images for which the first speaker has a style in the “negative” list are filtered out. As a comparison, we also generate responses from two previous models trained on Image-Chat data, Dodeca and 2AMMC.

我们通过让模型对Image-Chat测试集中的100张图片生成回复,来评估MMB Style在视觉感知表达方面的能力。在选取这100张图片时,仅包含首轮发言者使用"积极"或"中性"风格对话的Image-Chat图片,同时过滤掉所有首轮发言者采用"消极"风格对话的图片。作为对比,我们还生成了两个先前基于Image-Chat数据训练的模型(Dodeca和2AMMC)的响应结果。

Table 9: Example outputs from MMB Style, Dodeca fine-tuned on Image-Chat, and 2AMMC, on images from Shuster et al. (2020). Image-Chat styles fed to the models are shown above the models’ responses.

表 9: 来自 MMB Style、在 Image-Chat 上微调的 Dodeca 以及 2AMMC 的示例输出,图片来自 Shuster 等人 (2020)。输入模型的 Image-Chat 风格显示在模型响应上方。

| 图片 | 输出 | |

|---|---|---|

| (风格) MMB | 甜蜜 | |

| 我想带我妻子来这里庆祝我们的纪念日。那会非常浪漫。 | ||

| Dodeca | 多美的景色啊! | |

| 2AMMC 哦,多么棒的蜜月胜地,还有可爱的山景。 | ||

| (风格) MMB | 母性 我想带我的孩子来这里,向他们展示地球及其居民的美丽 | |

| Dodeca | 我想带我的孩子来这里。 | |

| 2AMMC 我想把我的孩子安全地藏在那个洞穴里躲避暴风雨。 | ||

| (风格) | 恐惧 | |

| MMB | 我希望那只狗不要咬我。我不想被狗咬。 | |

| Dodeca | 我希望那只狗不要咬我。 | |

| 2AMMC 这只狗看起来准备攻击。它正专注于某物,也许是 | ||

| (风格) | 另一只动物?我希望它不会追赶它或周围的任何人! 活泼 | |

| MMB | 我想爬到那个雕塑的顶部,在喷泉里玩耍。 | |

| Dodeca | 我想参观这个地方! | |

| (风格) | 2AMMC 谁想和我一起在喷泉里放泡泡浴? 自负(自以为是,傲慢) | |

| MMB | 我的后院有一个和这个一模一样的雕像,但比这个好多了。 | |

| Dodeca 2AMMC 我可以做一个看起来更好的围栏 | 我可以拍一张比那更好的照片。 | |

| (风格) MMB | 共情 这些花让我想起了我祖母的花园。我非常想念她。 | |

| Dodeca | 我为这些花感到难过。 | |

| (风格) | 2AMMC 我无法想象农民把它们都围起来有多困难。他工作如此努力。 自恋(自我中心,自负) | |

| 我永远不会去这样的海滩。我的海滩好多了。 Dodeca 我可以拍一张比那更好的照片。 | ||

| 2AMMC 我可以游到那些岩石那里。水流很强,但我是一个更强的游泳者。 | ||

| (风格) | 喧闹(吵闹,大声) | |

| MMB Dodeca | 我想跳进那个水坑里玩泥巴!那会很有趣! | |

| 这看起来是个聚会的好地方! | ||

| 2AMMC 我会骑着大声的摩托车穿过这里,然后开个派对! | ||

| 2AMMC 脸上满是风,闻到咸水的味道,感受到海洋的凉爽,还有正在创造的美好回忆! | (风格) | 快乐 |

| MMB | 我等不及要去海滩和我最好的朋友放风筝了! | |

| Dodeca 多么美好的一天! |

| 损失百分比 MMB Dodeca 2AMMC | |||

|---|---|---|---|

| MMB 风格百分比 | 65* | 49 | |

| Win Dodeca | 35* | 39* | |

| * | |||

| 2AMMC | 51 | 61 |

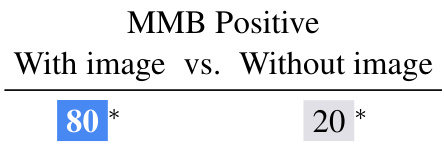

Table 10: ACUTE-Evals on the image-response metric show that MMB Style and 2AMMC significantly outperform Dodeca fine-tuned on Image-Chat. ACUTEEvals are measured on the models’ first response to an image only.

表 10: 基于图像响应指标的ACUTE-Evals结果显示,MMB Style和2AMMC显著优于在Image-Chat上微调的Dodeca。ACUTE-Evals仅测量模型对图像的首次响应。

Table 11: ACUTE-Evals show no significant difference on the image-response metric for MMB Style vs. an equivalent model only fine-tuned on Image-Chat and no dialogue datasets. ACUTE-Evals are measured on the models’ first response to an image.

表 11: ACUTE-Evals 显示在图像响应指标上,MMB Style 与仅在 Image-Chat 上微调且未使用对话数据集的等效模型无显著差异。ACUTE-Evals 测量的是模型对图像的首轮响应。

Table 12: ACUTE-Evals show that MMB Style significantly outperforms Dodeca and often 2AMMC on various metrics on human/model conversation about an image.

表 12: ACUTE-Evals 显示,在关于图像的人机对话中,MMB Style 在多项指标上显著优于 Dodeca 和 2AMMC。

| n b0 En an % Win | Loss % | |||

|---|---|---|---|---|

| MMB | Dodeca | 2AMMC | ||

| MMB Style Dodeca | 70 * | 66 * | ||

| 30 * 34 * | * | 38 * | ||

| 2AMMC MMB Style | 62 * | 58 * | ||

| Dodeca 2AMMC | 30 * | 70 | ||

| 42 * | 51 | |||

| 49 * | ||||

| e a Im | MMB Style | 61 | 52 | |

| Dodeca | 39 * | 44 | ||

| 2AMMC 48 | 56 |

Among the three models, 2AMMC alone is a retrieval model: it retrieves its response from the set of utterances in the Image-Chat training set. Examples of models’ responses to images are in Table 9.

在这三个模型中,只有2AMMC是检索模型:它从Image-Chat训练集中的话语集合中检索响应。模型对图像的响应示例如表9所示。

We run ACUTE-Evals to ask raters to compare these models’ responses on the following metric (henceforth referred to as the Image-response metric): “Who talks about the image better?” The same image is used for both sides of each A/B comparison between model responses.

我们运行ACUTE-Evals评估,要求评分者根据以下指标(以下简称图像响应指标)比较这些模型的响应:"谁能更好地描述图像?" 在模型响应的每次A/B比较中,双方使用相同的图像。

We find that raters choose both the MMB Style and 2AMMC models’ responses significantly more often than those of Dodeca (Table 10). We also find no significant difference in the rate at which MMB Style image responses are chosen compared to the same model fine-tuned only on Image-Chat and not on dialogue datasets (Table 11), which implies that multitasking on dialogue datasets does not degrade the ability to effectively respond to an image.

我们发现评分者选择MMB Style和2AMMC模型回复的频率显著高于Dodeca (表10)。同时,与仅在Image-Chat数据集微调(未在对话数据集微调)的同模型相比,MMB Style的图像回复被选择率未出现显著差异 (表11),这表明对话数据集的多任务训练不会削弱模型对图像的有效响应能力。

5.2.4 Human/Model Chats About Images

5.2.4 人类/模型关于图像的对话

In order to most meaningfully assess MMB Style’s ability to simultaneously engage in general chitchat and talk about an image, we perform ACUTEEvals where we ask raters to evaluate model performance through the span of an entire conversation about an image. For each conversation, an image from the subset of Image-Chat test set images discussed in Section 5.2.3 is first shown to both the human and the model. Then, the model responds to the image, and the human responds to the model to carry the conversation forward. The conversation continues for 6 human utterances and 7 model utterances total.

为了更有意义地评估MMB Style在闲聊和图像讨论中的综合能力,我们进行了ACUTEEvals评估,要求评分者通过整段关于图像的对话来评估模型表现。每次对话会先向人类和模型展示5.2.3节讨论过的Image-Chat测试集子集中的一张图像。随后模型对图像作出回应,人类再回应模型以推进对话。每段对话共包含6轮人类发言和7轮模型发言。

Ratings are shown in Table 12: MMB Style

评分如表 12: MMB 风格所示

Table 13: The frequency of utterances containing gendered words is greatly reduced for degendered models (MMB Degendered, MMB DegenPos), given contexts from ConvAI2 and the same generation parameters as in Roller et al. (2020).

表 13: 在ConvAI2上下文和Roller等人(2020)相同生成参数下,去性别化模型(MMB Degendered, MMB DegenPos)包含性别词汇的话语频率大幅降低。

| 男性词汇 | 女性词汇 | |

|---|---|---|

| Goldresponse | 5.80% | 5.25% |

| BlenderBot | 5.55% | 3.25% |

| MMBStyle | 6.25% | 3.90% |

| MMBDegendered | 0.65% | 0.85% |

| MMBDegenPos | 0.75% | 0.90% |

| BST | Conv | ED | Wow | IC | Avg | |

|---|---|---|---|---|---|---|

| Style | 10.15 | 8.78 | 8.31 | 8.88 | 12.36 | 9.70 |

| Degen | 10.14 | 8.76 | 8.21 | 9.01 | 12.58 | 9.74 |

| Pos | 10.15 | 8.76 | 8.27 | 8.95 | 12.55 | 9.74 |

| DP | 10.36 | 8.97 | 8.34 | 9.41 | 12.65 | 9.95 |

Table 14: Perplexities of MMB Style, MMB Degendered, MMB Positive, and MMB DegenPos on the validation set. For Image-Chat, styles are used in the context for all models, for consistency. (MMB Positive and MMB DegenPos observed styles for $25%$ of ImageChat examples during training.)

表 14: MMB Style、MMB Degendered、MMB Positive 和 MMB DegenPos 在验证集上的困惑度。对于 Image-Chat,所有模型的上下文中都使用了风格,以确保一致性。(MMB Positive 和 MMB DegenPos 在训练期间观察到 $25%$ 的 ImageChat 示例具有风格。)

Table 15: ACUTE-Evals on the models’ first response to an image show no significant differences in how well MMB models can respond to the image, even if the model is degendered or was trained to not require concrete Image-Chat styles.

表 15: 模型对图像首次响应的ACUTE评估显示,即使模型经过去性别化训练或无需具体Image-Chat风格,MMB模型在图像响应能力上均无显著差异。

| Loss % | |||

|---|---|---|---|

| Style Degen | Pos | DP | |

| MMB Style MMB Degendered | 54 | 49 | 56 |

| 46 51 | 48 | 52 | |

| Win MMB Positive | 52 | 41 | |

| MMB DegenPos | 44 | 48 | 59 |

Table 16: ACUTE-Evals show that the MMB Positive model is significantly better at responding to an image than an equivalent model not shown any images during training or inference.

表 16: ACUTE-Evals 显示,MMB Positive 模型在响应图像方面明显优于在训练或推理期间未显示任何图像的等效模型。

performs significantly better than Dodeca and 2AMMC on the engaging ness and humanness metrics, and it performs significantly better than Dodeca on the image-response metric.

在吸引力和人性化指标上表现显著优于Dodeca和2AMMC,同时在图像响应指标上显著优于Dodeca。

6 Analysis of Safety and Gender Bias

6 安全性与性别偏见分析

6.1 De gender ing Models

6.1 去性别化模型

We would like to reduce the ways in which the MMB Style model could potentially display gender bias: for instance, there is no safeguard against it mis gender ing a person in an image, and many common text datasets are known to contain gender bias (Dinan et al., 2019a, 2020a), which may lead to bias in models trained on them. To remedy this, we train a version of the MMB Style model in which the label of each training example is run through a classifier that identifies whether it contains female or male words, and then a string representing that classification is appended to the example’s context string (Dinan et al., 2019a), for input to the model. At inference time, the string representing a classification of “no female or male words” is appended to the context, nudging the model to generate a response containing no gendered words. The fraction of utterances produced by this model that still contain gendered words is shown in Table 13. Compared to the gold response, the original BlenderBot, and MMB Style, this degendered MMB model (which we call “MMB Degendered”) reduces the likelihood of producing an utterance with male word(s) by roughly a factor of 9 and of producing an utterance with female word(s) by roughly a factor of 4, given a context from the ConvAI2 validation set. ACUTE-Evals in Table 7 show that this de gender ing does not lead to a significant drop in the humanness or engaging ness of the model’s responses during a conversation.

我们希望通过减少MMB Style模型可能展现性别偏见的方式来解决这一问题:例如,目前没有防止该模型对图像中人物性别识别错误的保护机制,且许多常见文本数据集已知存在性别偏见 (Dinan et al., 2019a, 2020a),这可能导致基于这些数据训练的模型产生偏见。为改善此问题,我们训练了一个改进版的MMB Style模型,其中每个训练样本的标签会通过一个分类器检测是否包含女性或男性词汇,随后将该分类结果以字符串形式附加到样本的上下文字符串中 (Dinan et al., 2019a) 作为模型输入。在推理阶段,代表"无女性或男性词汇"分类结果的字符串会被附加到上下文中,引导模型生成不含性别词汇的回应。该模型生成语句中仍包含性别词汇的比例如 表 13 所示。与标准回答、原始BlenderBot及MMB Style相比,在ConvAI2验证集的上下文条件下,这个去性别化的MMB模型(我们称为"MMB Degendered")生成含男性词汇语句的概率降低了约9倍,生成含女性词汇语句的概率降低了约4倍。 表 7 中的ACUTE-Evals评估表明,这种去性别化处理不会导致模型在对话中生成回答的人类化程度或吸引力出现显著下降。

6.2 Removing Dependence on Style

6.2 消除对风格的依赖

Since each of the images that MMB Style saw during training was associated with an Image-Chat style, it relies on an input style during inference in order to be able to discuss an image. However, this results in a model whose utterances will necessarily strongly exhibit a particular style. (For example, see the “Playf