DTrOCR: Decoder-only Transformer for Optical Character Recognition

Abstract

Typical text recognition methods rely on an encoder-decoder structure, in which the encoder extracts features from an image, and the decoder produces recognized text from these features. In this study, we propose a simpler and more effective method for text recognition, known as the Decoder-only Transformer for Optical Character Recognition (DTrOCR). This method uses a decoder-only Transformer to take advantage of a generative language model that is pre-trained on a large corpus. We examined whether a generative language model that has been successful in natural language processing can also be effective for text recognition in computer vision. Our experiments demonstrated that DTrOCR outperforms current state-of-the-art methods by a large margin in the recognition of printed, handwritten, and scene text in both English and Chinese.

1. Introduction

The aim of text recognition, also known as optical character recognition (OCR), is to convert the text in images into digital text sequences. Many studies have been conducted on this technology owing to its wide range of real-world applications, including reading license plates and handwritten text, analyzing documents such as receipts and invoices [23, 58], and analyzing road signs in automated driving and natural scenes [14, 16]. However, the various fonts, lighting variations, complex backgrounds, low-quality images, occlusion, and text deformation make text recognition challenging. Numerous methods have been proposed to overcome these challenges.

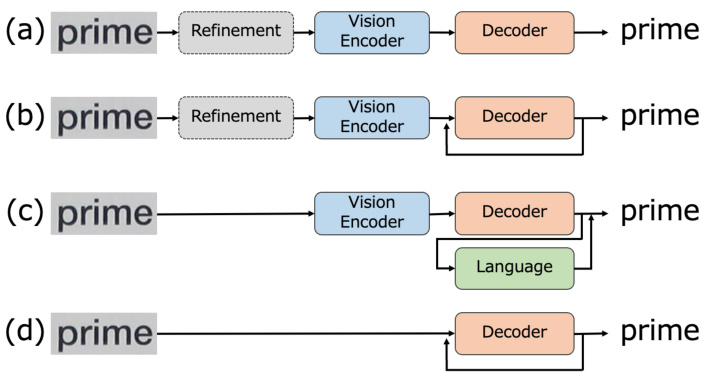

Existing approaches have mainly employed an encoder-decoder architecture for robust text recognition [3, 13, 47]. In such methods, the encoder extracts the intermediate features from the image, and the decoder predicts the corresponding text sequence. Figures 1 (a)–(c) present the encoder-decoder model patterns of previous studies. The methods in Figures 1 (a) and (b) employ the convolutional neural network (CNN) [20] and Vision Transformer (ViT) [11] families as image encoders, with the recurrent neural network (RNN) [22] and Transformer [51] families as decoders, which can be used for batch inference (Figure 1 (a)) or for recursively inferring characters one by one (Figure 1 (b)). Certain modules apply image curve correction [2] and high-resolution enhancement [38] to the input images to boost the accuracy. Owing to the limited information available from images, several methods in recent years have focused on linguistic information and have employed language models (LMs) in the decoder either externally [13] or internally [3, 29], as illustrated in Figure 1 (c). Although these approaches can achieve high accuracy by leveraging linguistic information, the additional computational cost is an issue.

Figure 1. Model patterns for text recognition. The existing methods in (a) to (c) consist of a vision encoder to extract the image features and a decoder to predict text sequences from the features. Some methods use refinement modules to deal with low-quality images and an LM to correct the output text. Our approach differs significantly. As shown in (d), it consists of a simple model pattern in which the image is fed directly into the decoder to generate text.

LMs based on generative pre-training have been used successfully in various natural language processing (NLP) tasks [43, 44] in recent years. These models are built with a decoder-only Transformer. The model passes the input text directly to the decoder, which outputs the subsequent word token. The model is pre-trained on a large corpus to generate the continuation of a given text. To generate this continuation accurately, the model needs to understand the meaning and context of the words and acquire language knowledge. As the linguistic information obtained in this way is powerful, the model can be fine-tuned for various tasks in NLP. However, the applicability of such models to text recognition has yet to be demonstrated.

Motivated by the above observations, this study presents a novel text recognition framework known as the Decoder-only Transformer for Optical Character Recognition (DTrOCR), which does not require a vision encoder for feature extraction. The proposed method transforms a pre-trained generative LM with a high language representation capability into a text recognition model. Generative models that are used in NLP take a text sequence as input and generate the following text autoregressively. In this work, the model is trained to use an image as the input. The input image is converted into a patch sequence, and the recognition results are output autoregressively. The structure of the proposed method is depicted in Figure 1 (d). DTrOCR does not require vision encoders such as a CNN or ViT but has a simple model structure with only a decoder that leverages the internal generative LM. In addition, the proposed method employs fine-tuning from a pre-trained model, which reduces the computational resources required. Despite its simple structure, DTrOCR outperforms existing methods on various text recognition benchmarks, such as handwritten, printed, and natural scene image text, in English and Chinese. The main contributions of this work are summarized as follows:

• We propose a novel decoder-only text recognition method known as DTrOCR, which differs from the mainstream encoder-decoder approach.

• Despite its simple structure, DTrOCR achieves state-of-the-art results on various benchmarks by leveraging the internal LM, without relying on complex pre- or post-processing.

2. Related Works

Scene Text Recognition. Scene text recognition refers to text recognition in natural scene images. Existing methods can be divided into three categories: word-based, character-based, and sequence-based approaches. Word-based approaches perform text recognition as image classification, in which each word is a direct classification class [24]. Character-based approaches perform text recognition using detection, recognition, and grouping on a character-by-character basis [53]. Sequence-based approaches deal with the task as sequence labeling and are mainly realized using encoder-decoder structures [13, 29, 47]. The encoder, which can be constructed using the CNN and ViT families, aims to extract a visual representation of a text image. The goal of the decoder is to map the representation to text with connectionist temporal classification (CTC)-based methods [18, 47] or attention mechanisms [3, 29, 32, 61].

The encoder has been improved using a neural architecture search [65] and a graph convolutional network [59], whereas the decoder has been enhanced using multistep reasoning [5], two-dimensional features [28], semantic learning [42], and feedback [4]. Furthermore, the accuracy can be improved by converting low-resolution inputs into high-resolution inputs [38], normalizing curved and irregular text images [2, 33, 48], or using diffusion models [15]. Some methods have predicted the text directly from encoders alone for computational efficiency [1, 12]. However, these approaches do not use linguistic information and face difficulties when the characters are hidden or unclear.

Therefore, methods that leverage language knowledge have been proposed in recent years to make the models more robust. ABINet uses bi-directional context via external LMs [13]. In VisionLAN, an internal LM is constructed by selectively masking the image features of individual characters during training [56]. The learning of internal LMs using permutation language modeling was proposed in PARSeq [3]. In TrOCR, LMs that are pre-trained on an NLP corpus using masked language modeling (MLM) are used as the decoder [29]. MaskOCR includes sophisticated MLM pre-training methods to enhance the Transformer-based encoder-decoder structure [34]. The outputs of these encoders are either passed directly to the decoder or intricately linked by a cross-attention mechanism.

Our approach exhibits similarities to TrOCR [29] as both use linguistic information and pre-trained LMs for the decoding process. However, our method differs in two significant aspects. First, we use generative pre-training [43] as the pre-training method in the decoder, which predicts the next word token for generating text, instead of solving masked fill-in-the-blank problems using MLM. Second, we eliminate the encoder that is otherwise used to obtain elaborate features from images. The images are divided into patches and fed directly into the decoder. As a result, no complicated connections such as cross-attention are required to link the encoder and decoder because the image and text information are handled at the same sequence level. This enables our text recognition model to be simple yet effective.

Handwritten Text Recognition. Handwritten text recognition (HWR) has long been studied, and the recent methods have been reviewed [35]. In addition, the effects of different attention mechanisms of encoder-decoder structures in the HWR domain have been compared [36]. The combination of RNNs and CTC decoders has been established as the primary approach in this field [6, 36, 55], with improvements such as multi-dimensional long short-term memory [41] and attention mechanisms [10, 25] having been applied. In recent years, extensions using LMs have also been implemented [29]. Thus, we tested the effectiveness of our method in HWR to confirm its scalability.

Chinese Text Recognition. Text recognition tasks on alphabets and symbols in English have been studied, and significant accuracy improvements have been achieved [3, 13]. The adaptation of recognition models to Chinese text recognition (CTR) has been investigated in recent years [34, 62]. However, studies on CTR remain lacking. CTR is a challenging task as Chinese has substantially more characters than English and contains many similar-appearing characters. Thus, we validated our method to determine whether it can be applied to Chinese in addition to English text.

Generative Pre-Trained Transformer. The generative pre-trained Transformer (GPT) has emerged in NLP and has attracted attention owing to its strong results in various tasks [43, 44]. The model acquires linguistic ability by predicting the continuation of a given text. GPT comprises a decoder-only autoregressive Transformer that does not require an encoder to acquire the input text features. Whereas previous studies [29] examined the adaptation of LMs that are learned using MLM to text recognition models, this study explores the extension of GPT to text recognition.

3. Method

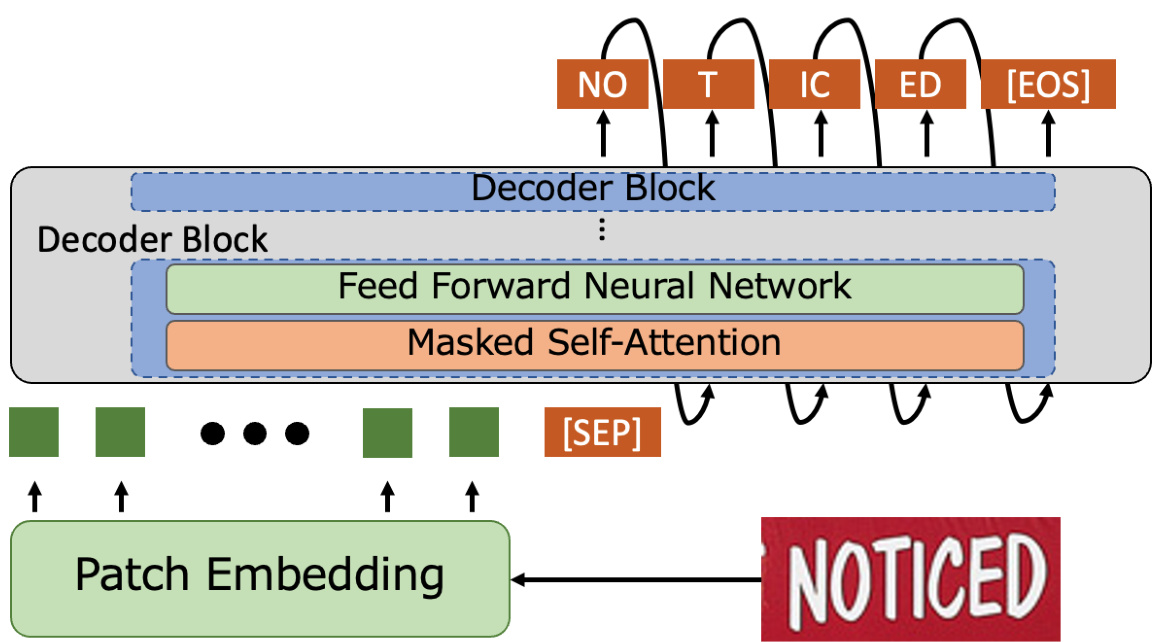

The pipeline of our method is illustrated in Figure 2. We use a generative LM [44] that incorporates Transformers [51] with a self-attention mechanism. Our model comprises two main components: the patch embedding module and the Transformer decoder. The input text image undergoes patch embedding, which divides it into a sequence of patch-sized images. Subsequently, this sequence is passed through the decoder together with the positional embedding. The decoder predicts the first word token after receiving a special token [SEP] that separates the image and text sequences. Thereafter, it predicts the subsequent tokens in an autoregressive manner until the special token [EOS], which indicates the end of the text, and produces the final result. The modules and training methods are described in detail in the following sections.
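
The sequence layout described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the `<patch_i>` placeholder strings, the helper names `build_training_sequence` and `prediction_targets`, and the sub-word split of the text are all hypothetical.

```python
from typing import List

SEP = "[SEP]"  # separates the image patches from the text tokens
EOS = "[EOS]"  # marks the end of the recognized text


def build_training_sequence(num_patches: int, text_tokens: List[str]) -> List[str]:
    """Lay out one sequence: patch slots, [SEP], text tokens, [EOS]."""
    # Placeholder names stand in for the patch embeddings (hypothetical).
    patch_slots = [f"<patch_{i}>" for i in range(num_patches)]
    return patch_slots + [SEP] + text_tokens + [EOS]


def prediction_targets(sequence: List[str]) -> List[str]:
    """Tokens the decoder has to predict: everything after [SEP]."""
    return sequence[sequence.index(SEP) + 1:]


seq = build_training_sequence(num_patches=4, text_tokens=["hel", "lo"])
print(seq)
print(prediction_targets(seq))
```

Because image patches and text tokens share one sequence, the same decoder stack processes both, which is what removes the need for a separate encoder.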

3.1. Patch Embedding Module

As the input to the Transformer is a sequence of tokens, the patch embedding module transforms the image into a token sequence so that it can be input into the decoder. We employ the patch embedding procedure proposed in [11]. The input image is resized to a fixed-size image $\boldsymbol{I}\in\mathbb{R}^{W\times H\times C}$, where $W$, $H$, and $C$ are the width, height, and number of channels of the image, respectively. The input image is divided into patches of fixed size $p_{w}\times p_{h}$, where $p_{w}$ and $p_{h}$ are the width and height of the patch, respectively. The patch images are first transformed into vectors and adjusted to fit the input dimensions of the Transformer. Position encoding is added to preserve the information on the position of each patch. Subsequently, the resulting sequence, which contains both the transformed patches and position information, is sent to the decoder.
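
The patch-splitting step can be sketched in plain Python. This is a simplified illustration under stated assumptions: the image is a nested list indexed as `image[y][x][channel]`, patches do not overlap, they are emitted in raster order, and the learned projection to the model dimension and the position encoding are omitted. The function name `split_into_patches` is hypothetical.

```python
from typing import List

Image = List[List[List[float]]]  # indexed as image[y][x][channel]


def split_into_patches(image: Image, p_w: int, p_h: int) -> List[List[float]]:
    """Cut an H x W x C image into non-overlapping p_h x p_w patches.

    Patches are emitted in raster order (left to right, top to bottom) and
    each one is flattened into a vector of length p_w * p_h * C.
    """
    height, width = len(image), len(image[0])
    if height % p_h or width % p_w:
        raise ValueError("image size must be a multiple of the patch size")
    patches = []
    for top in range(0, height, p_h):
        for left in range(0, width, p_w):
            flat = [
                value
                for y in range(top, top + p_h)
                for x in range(left, left + p_w)
                for value in image[y][x]
            ]
            patches.append(flat)
    return patches


# Toy image with H=4, W=8, C=3, cut into 4 x 4 patches.
H, W, C = 4, 8, 3
image = [[[float(y * W + x)] * C for x in range(W)] for y in range(H)]
patches = split_into_patches(image, p_w=4, p_h=4)
# (W / p_w) * (H / p_h) patches, each of length p_w * p_h * C
print(len(patches), len(patches[0]))
```

The sequence length seen by the decoder therefore grows with $(W/p_{w})\cdot(H/p_{h})$, so the fixed resize step also fixes the number of image tokens.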

3.2. Decoder Module

The decoder performs text recognition using a given image sequence. The decoder first consumes the input image sequence and then generates the first predicted token after a beginning token. This beginning token is the special token [SEP], which marks the division between the image and text sequences. Thereafter, the model uses the image and the predicted token sequence to generate text autoregressively until it reaches the token [EOS]. The decoder output is projected by a linear layer from the dimension of the model to the vocabulary size $V$. Thereafter, the probabilities over the vocabulary are computed using a softmax function. Finally, beam search is employed to obtain the final output. The model is trained with the cross-entropy loss function.
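
The output head described above (linear projection to $V$ logits, softmax, cross-entropy) can be illustrated with toy numbers. This is a sketch using only the standard library: the weight values, the omission of a bias term, and the function names are assumptions made for illustration, not details from the paper.

```python
import math
from typing import List


def project(hidden: List[float], weight: List[List[float]]) -> List[float]:
    """Linear layer without bias: map a d_model vector to V logits."""
    return [sum(h * w for h, w in zip(hidden, row)) for row in weight]


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over the vocabulary."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs: List[float], target: int) -> float:
    """Negative log-probability assigned to the correct token."""
    return -math.log(probs[target])


# Toy setting: d_model = 2, vocabulary size V = 3.
hidden = [1.0, -1.0]
weight = [[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # one row per vocabulary entry
logits = project(hidden, weight)
probs = softmax(logits)
loss = cross_entropy(probs, target=0)
print(logits, probs, loss)
```

In training, this loss would be averaged over the text positions of each sequence; at inference time the probabilities feed the search procedure instead.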

The decoder uses GPT [43, 44] to recognize the text accurately using linguistic knowledge. GPT is trained to predict the next word in a sentence by maximizing the likelihood of the observed next token, which is equivalent to minimizing the cross-entropy. Pre-trained models are publicly available, which eliminates the need for computational resources to acquire language knowledge.
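
For reference, a common way to write the next-token objective in this setting is given below. The notation is an assumption introduced here and does not appear in the source: $x_{1},\dots,x_{N}$ are the embedded image patches, $y_{1},\dots,y_{T}$ are the text tokens with $y_{T}=\text{[EOS]}$, and $\theta$ denotes the decoder parameters.

$$
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_{\theta}\left(y_{t} \mid x_{1},\dots,x_{N},\ \text{[SEP]},\ y_{1},\dots,y_{t-1}\right)
$$

Minimizing $\mathcal{L}$ is the same as maximizing the likelihood of the ground-truth text given the image, and each term in the sum is the cross-entropy between the predicted distribution at step $t$ and the true token $y_{t}$.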

The decoder is a stack of blocks, each consisting of one Transformer layer [51]. Each block includes masked multi-head self-attention and a feed-forward network. As opposed to previous encoder-decoder structures, this method only uses a decoder for prediction, thereby eliminating the need for cross-attention between the image features and text and significantly simplifying the design.
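
The masking in masked self-attention can be sketched as a lower-triangular pattern. This sketch assumes the standard GPT-style causal mask applied uniformly over image and text positions; the source does not spell out the mask, so treat that as an assumption. The function names are hypothetical.

```python
from typing import List


def causal_mask(seq_len: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def masked_scores(scores: List[List[float]], mask: List[List[bool]]) -> List[List[float]]:
    """Set disallowed attention scores to -inf so softmax gives them zero weight."""
    return [
        [s if allowed else float("-inf") for s, allowed in zip(row, mask_row)]
        for row, mask_row in zip(scores, mask)
    ]


# Three patch positions, [SEP], and one text token share a sequence of length 5.
mask = causal_mask(5)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Under this pattern, the last row shows a text token attending to every patch position and to [SEP], which is how image information reaches the text positions without cross-attention.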

3.3. Pre-training with Synthetic Datasets

The decoder of our method gains language knowledge through GPT pre-training in NLP. However, this pre-training does not connect the knowledge with image information. Thus, we trained the model on artificially generated datasets that included various text forms, such as scene, handwritten, and printed text, to help it acquire both image and language knowledge. Further details are provided in the experimental section.

3.4. Fine-Tuning with Real-World Datasets

Recent studies have demonstrated that synthetic datasets alone are insufficient for handling real-world problems [2, 3]. Text shapes, fonts, and features may vary depending on the type of recognition required, such as printed or handwritten text. Therefore, we fine-tuned the pre-trained models using actual data for specific tasks to solve real-world text recognition issues effectively. The training procedure for the real datasets was the same as that for the synthetic ones.

Figure 2. Architecture of the proposed DTrOCR, which consists of patch embedding and decoder modules. The input images are transformed into one-dimensional sequences using the patch embedding and then sent to the decoder along with the positional encoding. The decoder uses the special token [SEP] to indicate sequence separation. Thereafter, it predicts the subsequent word token conditioned on the preceding sequence. It continues to generate text autoregressively until it reaches the end-of-text token [EOS].

3.5. Inference

Inference follows the same procedure as training. Patch embedding is applied to the input image, and the decoder generates predicted tokens iteratively until the [EOS] token is reached.
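
Iterative generation with beam search can be sketched with a toy stand-in for the decoder. Everything here is illustrative: `toy_decoder` and its probability table are invented, the image patches and [SEP] are left implicit, and scores are raw summed log-probabilities without length normalization, which a practical system might add.

```python
import math
from typing import Callable, Dict, List, Tuple

EOS = "[EOS]"
NextLogProbs = Callable[[List[str]], Dict[str, float]]


def beam_search(step: NextLogProbs, beam_width: int = 2, max_len: int = 10) -> List[str]:
    """Return the highest-scoring token sequence found with a fixed-width beam.

    `step(prefix)` stands in for the decoder: given the tokens generated so
    far, it returns a log-probability for each candidate next token.
    """
    beams: List[Tuple[float, List[str]]] = [(0.0, [])]
    finished: List[Tuple[float, List[str]]] = []
    for _ in range(max_len):
        candidates = []
        for score, prefix in beams:
            for token, logp in step(prefix).items():
                candidates.append((score + logp, prefix + [token]))
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for score, tokens in candidates[:beam_width]:
            # Hypotheses ending in [EOS] stop growing; the rest stay in the beam.
            (finished if tokens[-1] == EOS else beams).append((score, tokens))
        if not beams:
            break
    pool = finished or beams
    return max(pool, key=lambda c: c[0])[1]


def toy_decoder(prefix: List[str]) -> Dict[str, float]:
    """Hypothetical next-token distribution; unknown prefixes emit [EOS]."""
    table = {
        (): {"c": 0.6, "e": 0.4},
        ("c",): {"a": 0.5, "o": 0.5},
        ("e",): {"a": 0.9, "o": 0.1},
    }
    probs = table.get(tuple(prefix), {EOS: 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}


print(beam_search(toy_decoder, beam_width=2))  # ['e', 'a', '[EOS]']
print(beam_search(toy_decoder, beam_width=1))  # ['c', 'a', '[EOS]']
```

With a beam width of 1 the search reduces to greedy decoding and commits to "c" first; keeping two hypotheses lets it recover the sequence with the higher overall probability.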

4. Experiments

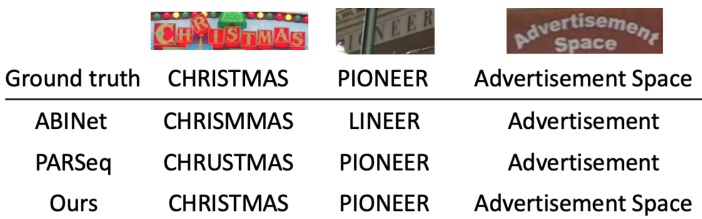

Figure 3. Comparison of the recognition results of state-of-the-art methods [3, 13] and the proposed method. The result corresponding to an image is shown on each line, with the ground truth at the top. The proposed method is robust to occlusion and irregularly arranged scenes and remains accurate even for text on two lines.

We evaluated the performance of the proposed method on scene image, printed, and handwritten text recognition in English and Chinese.

4.1. Datasets

Pre-training with Synthetic Datasets. Our proposed method was pre-trained using synthetic datasets to connect the visual and language information in the LM of the decoder. Previous studies [29] obtained training data by extracting available PDFs from the Internet and using real-world receipt data with annotations generated by commercial OCR. However, substantial time and effort are required to prepare such data. To make the process more reproducible, we created annotated datasets from a text corpus using an artificial generation method. As the corpus for generating synthetic text images, we used large datasets that are commonly used to train LMs: PILE [17] for English, and CC100 [57] and the Chinese NLP Corpus for Chinese, with preprocessing [68].

We used three open-source libraries to create synthetic datasets from our corpus. We randomly divided the corpus into three categories: scene, printed, and handwritten text recognition, with a distribution of $60\%$, $20\%$, and $20\%$, respectively, to ensure text recognition accuracy. We generated four billion horizontal and two billion vertical images of text for scene text recognition using SynthTIGER [60]. We employed the default font for English and 64 commonly used fonts for Chinese. For the recognition of multiple lines of English text, we used the Multiline text image configuration in SynthTIGER, set the word count to five, and generated 100 million images using the MJSynth [24] and SynthText [19] corpora.
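
The random 60/20/20 division of the corpus can be sketched as below. The source gives only the proportions, so the per-entry weighted sampling, the fixed seed, and the names `SPLIT` and `assign_categories` are assumptions for illustration.

```python
import random
from typing import Dict, List

# Proportions stated in the text; the category names are shorthand.
SPLIT = {"scene": 0.6, "printed": 0.2, "handwritten": 0.2}


def assign_categories(texts: List[str], seed: int = 0) -> Dict[str, List[str]]:
    """Randomly route each corpus entry to one rendering category."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    names = list(SPLIT)
    weights = [SPLIT[name] for name in names]
    buckets: Dict[str, List[str]] = {name: [] for name in names}
    for text in texts:
        buckets[rng.choices(names, weights=weights, k=1)[0]].append(text)
    return buckets


corpus = [f"sentence {i}" for i in range(10_000)]
buckets = assign_categories(corpus)
for name, items in buckets.items():
    print(name, round(len(items) / len(corpus), 3))
```

Each bucket would then be passed to the corresponding image generator, so every text string is rendered in exactly one style.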

We created two billion samples for printed text recognition using the default settings of Text Render. Additionally, we employed TRDG to generate another two billion samples for handwritten text recognition. We followed the methods outlined in previous studies for this process [29]. We used 5,427 English and four Chinese handwriting fonts.

Table 1. Word accuracy on English scene text recognition benchmark datasets with 36 characters. “Synth” and “Real” refer to synthetic and real training datasets, respectively.

| Method | Training data | IIIT5k (3,000 samples) |
|---|---|---|
| CRNN [47] | Synth | 81.8 |
| ViTSTR-Base [1] | Synth | 88.4 |
| TRBA [2] | Synth | 92.1 |
| ABINet [13] | Synth | 96.2 |
| PlugNet [38] | Synth | 94.4 |
| SRN [61] | Synth | 94.8 |
| TextScanner [53] | Synth | 95.7 |
| AutoSTR [65] | Synth | 94.7 |
| PREN2D [59] | Synth | 95.6 |
| VisionLAN [56] | Synth | 95.8 |
| JVSR [5] | Synth | 95.2 |
| CVAE-Feed [4] | Synth | 95.2 |
| DiffusionSTR [15] | Synth | 97.3 |
| TrOCR-Base [29] | Synth | 90.1 |
| TrOCR-Large [29] | Synth | 91.0 |
| PARSeq [3] | Synth | 97.0 |
| MaskOCR-Base [34] | Synth | 95.8 |
| MaskOCR-Large [34] | Synth | 96.5 |
| SVTR-Base [12] | Synth | 96.0 |
| SVTR-Large [12] | Synth | 96.3 |
| DTrOCR (ours) | Synth | 98.4 |
| CRNN [3, 47] | Real | 94.6 |
| TRBA [2, 3] | Real | 98.6 |
| ABINet [3, 13] | Real | 98.6 |
| PARSeq [3] | Real | 99.1 |
| DTrOCR (ours) | Real | 99.6 |

Table 2. Word-level recall, precision and F1 on SROIE Task 2.

| Model | Recall | Precision | F1 |
|---|---|---|---|
| CRNN [47] | 28.71 | 48.58 | 36.09 |
| H&H Lab [23] | 96.35 | 96.52 | 96.43 |
| MSOLab [23] | 94.77 | 94.88 | 94.82 |
| CLOVA OCR [23] | 94.30 | 94.88 | 94.59 |
| TrOCR-Large [29] | 96.59 | 96.57 | 96.58 |
| DTrOCR (ours) | 98.24 | 98.51 | 98.37 |

Table 3. Character error rate (CER) on the IAM Handwriting Database, where a lower score is better.

| Model | Training data | External LM | CER |
|---|---|---|---|
| Bluche et al. [6] | Synthetic+IAM | Yes | 3.20 |
| Michael et al. [36] | IAM | No | 4.87 |
| Wang et al. [55] | IAM | No | 6.40 |
| Kang et al. [25] | Synthetic+IAM | No | 4.67 |
| Diaz et al. [10] | Internal+IAM | Yes | 2.75 |
| TrOCR-Large [29] | Synthetic+IAM | No | 2.89 |
| DTrOCR (ours) | Synthetic+IAM | No | 2.38 |
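
The character error rate reported for the IAM Handwriting Database is conventionally the edit distance between the prediction and the reference, divided by the reference length. The sketch below assumes that convention and a case-sensitive comparison without text normalization; the exact evaluation protocol is not given in this section, and the function names are hypothetical.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance: minimum substitutions, insertions, and deletions."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            current.append(min(
                previous[j] + 1,                           # drop a reference character
                current[j - 1] + 1,                        # extra hypothesis character
                previous[j - 1] + (ref_char != hyp_char),  # substitution or match
            ))
        previous = current
    return previous[-1]


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: edit distance divided by the reference length."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return edit_distance(reference, hypothesis) / len(reference)


print(cer("handwriting", "handwritting"))  # one extra character over 11 reference characters
```

A CER of 2.38 in Table 3 corresponds to 0.0238 on this scale, that is, roughly 2.4 character edits per 100 reference characters.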

Fine-Tuning with Real-World and Evaluation Datasets. We fine-tuned the pre-trained models on each dataset and evaluated their performance on benchmarks. English scene text recognition models have traditionally been trained on large synthetic datasets owing to the limited availability of labeled real datasets. However, with the increasing amount of real-world datasets, models are now also being trained on real data. Therefore, following previous studies [2, 3], we trained our models on both synthetic and real datasets to validate the performance. Specifically, we used MJSynth [24] and SynthText [19] as synthetic datasets and COCO-Text [52], RCTW [49], Uber-Text [67], ArT [7], LSVT [50], MLT19 [39], and ReCTS [66] as real datasets. Each model was evaluated on six standard scene text datasets: ICDAR 2013 (IC13) [27], Street View Text (SVT) [54], IIIT5K-Words (IIIT5K) [37], ICDAR 2015 (IC15) [26], Street View Text-Perspective (SVTP) [40], and CUTE80 (CUTE) [45]. The initial three datasets mainly consist of standard text images, whereas the remaining datasets include images of text that are either curved or in perspective.
