# Compositional Learning of Image-Text Query for Image Retrieval

Source: https://arxiv.org/pdf/2006.11149v3

Abstract

In this paper, we investigate the problem of retrieving images from a database based on a multi-modal (image-text) query. Specifically, the query text prompts some modification of the query image, and the task is to retrieve images with the desired modifications. For instance, a user of an E-Commerce platform is interested in buying a dress that should look similar to her friend’s dress, but should be white with a ribbon sash. In this case, we would like the algorithm to retrieve dresses with the desired modifications to the query dress. We propose an autoencoder-based model, ComposeAE, to learn the composition of image and text query for retrieving images. We adopt a deep metric learning approach and learn a metric that pushes the composition of source image and text query closer to the target images. We also propose a rotational symmetry constraint on the optimization problem. Our approach outperforms the state-of-the-art method TIRG [23] on three benchmark datasets, namely MIT-States, Fashion200k and Fashion IQ. To ensure a fair comparison, we introduce strong baselines by enhancing the TIRG method. To ensure reproducibility of the results, we publish our code here: https://github.com/ecom-research/ComposeAE.

1. Introduction

One of the peculiar features of human perception is multi-modality. We unconsciously attach attributes to objects, which can sometimes uniquely identify them. For instance, when a person says "apple", it is quite natural that an image of an apple, which may be green or red in color, forms in their mind. In information retrieval, the user seeks information from a retrieval system by sending a query. Traditional information retrieval systems allow only a unimodal query, i.e., either a text or an image. Advanced information retrieval systems should enable users to express the concept in their mind by allowing a multi-modal query.

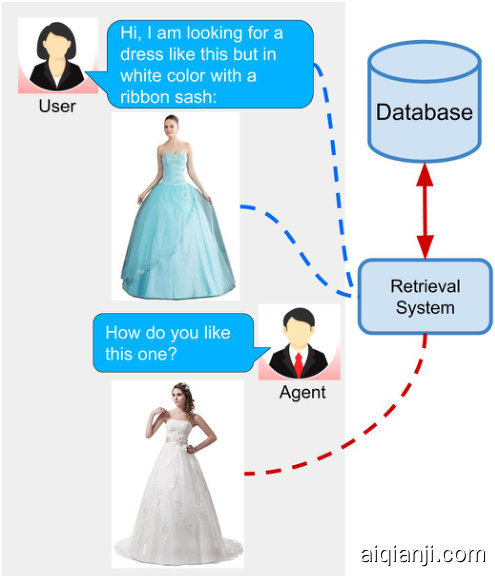

Figure 1. Potential application scenario of this task

In this work, we consider such an advanced retrieval system, where users can retrieve images from a database based on a multi-modal query. Concretely, we have an image retrieval task where the input query is specified in the form of an image and natural language expressions describing the desired modifications in the query image. Such a retrieval system offers a natural and effective interface [18]. This task has applications in the domain of E-Commerce search, surveillance systems and internet search. Fig. 1 shows a potential application scenario of this task.

Recently, Vo et al. [23] proposed the Text Image Residual Gating (TIRG) method for composing the query image and text for image retrieval. They achieved state-of-the-art (SOTA) results on this task. However, their approach does not perform well in real-world application scenarios, i.e., with long and detailed texts (see Sec. 4.4). We think the reason is that their approach is too focused on changing the image space and does not give the query text its due importance. The gating connection takes the element-wise product of the query image features with the image-text representation after passing it through two fully connected layers. In short, TIRG assigns huge importance to the query image features by putting them directly into the final composed representation. Similar to [19, 22], they employ an LSTM for extracting features from the query text. This works fine for simple queries but fails for more realistic queries.

In this paper, we attempt to overcome these limitations by proposing ComposeAE, an autoencoder-based approach for composing the modalities in the multi-modal query. We employ a pre-trained BERT model [1] for extracting text features, instead of an LSTM. We hypothesize that by jointly conditioning on both left and right context, BERT is able to give a better representation for complex queries. Similar to TIRG [23], we use a pre-trained ResNet-17 model for extracting image features. The extracted image and text features have different statistical properties, as they are extracted from independent uni-modal models. We argue that it will not be beneficial to fuse them by passing them through a few fully connected layers, as is typically done in image-text joint embeddings [24].

We adopt a novel approach and map these features to a complex space. We propose that the target image representation is an element-wise rotation of the representation of the source image in this complex space. The information about the degree of rotation is specified by the text features. We learn the composition of these complex vectors and their mapping to the target image space by adopting a deep metric learning (DML) approach. In this formulation, text features take a central role in defining the relationship between query image and target image. This also implies that the search space for learning the composition features is restricted. From a DML point of view, this restriction proves to be quite vital in learning a good similarity metric.

We also propose an explicit rotational symmetry constraint on the optimization problem based on our novel formulation of composing the image and text features. Specifically, we require that multiplication of the target image features with the complex conjugate of the query text features should yield a representation similar to the query image features. We explore the effectiveness of this constraint in our experiments (see Sec. 4.5).

We validate the effectiveness of our approach on three datasets: MIT-States, Fashion200k and Fashion IQ. In Sec. 4, we show empirically that ComposeAE is able to learn a better composition of image and text queries and outperforms the SOTA method. In DML, it has recently been shown that improvements in reported results are exaggerated and that performance comparisons are done unfairly [11]. In our experiments, we took special care to ensure a fair comparison. For instance, we introduce several variants of TIRG, some of which show huge improvements over the original TIRG. We also conduct several ablation studies to quantify the contribution of different modules to the improvement in ComposeAE performance.

Our main contributions are summarized below:

• We propose a ComposeAE model to learn the composed representation of image and text query.

• We adopt a novel approach and argue that the source image and the target image lie in a common complex space. They are rotations of each other and the degree of rotation is encoded via query text features.

• We propose a rotational symmetry constraint on the optimization problem.

• ComposeAE outperforms the SOTA method TIRG by a huge margin, i.e., 30.12% on Fashion200k and 11.13% on MIT-States on the Recall@10 metric.

• We enhance SOTA method TIRG [23] to ensure fair comparison and identify its limitations.

2. Related Work

Deep metric learning (DML) has become a popular technique for solving retrieval problems. DML aims to learn a metric such that the distances between samples of the same class are smaller than the distances between samples of different classes. A task in which DML has been employed extensively is cross-modal retrieval, i.e., retrieving images based on a text query and retrieving captions from the database based on an image query [24, 9, 28, 2, 8, 26].

In the domain of Visual Question Answering (VQA), many methods have been proposed to fuse the text and image inputs [19, 17, 16]. We review below a few closely related methods. Relationship [19] is a method based on relational reasoning. Image features are extracted from a CNN and text features from an LSTM to create a set of relationship features. These features are then passed through an MLP, and the composed representation is obtained by averaging them. The FiLM [17] method tries to “influence” the source image by applying an affine transformation to the output of a hidden layer in the network. In order to perform complex operations, this linear transformation needs to be applied to several hidden layers. Another prominent method is parameter hashing [16], where one of the fully-connected layers in a CNN acts as the dynamic parameter layer.

In this work, we focus on the image retrieval problem based on the image and text query. This task has been studied recently by Vo et al. [23]. They propose a gated feature connection in order to keep the composed representation of query image and text in the same space as that of the target image. They also incorporate a residual connection which learns the similarity between the concatenation of image-text features and the target image features. Another simple but effective approach is Show and Tell [22]. They train an LSTM to predict the next word in the sequence after it has seen the image and the previous words. The final state of this LSTM is considered the composed representation. Han et al. [6] present an interesting approach to learn spatially-aware attributes from product descriptions and then use them to retrieve products from the database. However, their text query is limited to a predefined set of attributes. Nagarajan et al. [12] proposed an embedding approach, “Attribute as Operator”, where the text query is embedded as a transformation matrix. The image features are then transformed with this matrix to get the composed representation.

This task is also closely related to the interactive image retrieval task [4, 21] and the attribute-based product retrieval task [27, 23]. These approaches have their limitations: the query texts are limited to a fixed set of relative attributes [27], multiple rounds of natural language queries are required as input [4, 21], or the query text can be only one word, i.e., an attribute [6]. In contrast, the input query text in our approach is not limited to a fixed set of attributes and does not require multiple interactions with the user. Different from our work, the focus of these methods is on modeling the interaction between the user and the agent.

3. Methodology

3.1. Problem Formulation

Let $\mathcal{X}=\{x_{1},x_{2},\cdots,x_{n}\}$ denote the set of query images, $\mathcal{T}=\{t_{1},t_{2},\cdots,t_{n}\}$ denote the set of query texts and $\mathcal{Y}=\{y_{1},y_{2},\cdots,y_{n}\}$ denote the set of target images. Let $\psi(\cdot)$ denote the pre-trained image model, which takes an image as input and returns image features in a $d$-dimensional space. Let $\kappa(\cdot,\cdot)$ denote the similarity kernel, which we implement as a dot product between its inputs. The task is to learn a composed representation of the image-text query, denoted by $g(x,t;\Theta)$, by maximising

$$
\max_{\Theta}\ \kappa(g(x,t;\Theta),\psi(y)), \tag{1}
$$
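As a rough illustration of this objective at retrieval time, the sketch below treats $\kappa$ as a plain dot product and ranks a small database of target features by their similarity to a composed query feature. The function names `kappa` and `rank_targets` and the toy vectors are illustrative assumptions, not part of the paper.

```python
from typing import List, Sequence


def kappa(u: Sequence[float], v: Sequence[float]) -> float:
    """Similarity kernel, implemented as a dot product of its inputs."""
    return sum(a * b for a, b in zip(u, v))


def rank_targets(query: Sequence[float],
                 targets: List[Sequence[float]]) -> List[int]:
    """Return target indices sorted from most to least similar to the query."""
    scores = [kappa(query, t) for t in targets]
    return sorted(range(len(targets)), key=lambda i: scores[i], reverse=True)


# Toy composed query feature g(x, t) and three candidate features psi(y).
composed = [1.0, 0.0, 1.0]
database = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.9], [0.5, 0.5, 0.0]]
ranking = rank_targets(composed, database)
```

Training adjusts $\Theta$ so that the true target image ends up at the front of such a ranking.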

3.2. Motivation for Complex Projection

In deep learning, researchers aim to formulate the learning problem in such a way that the solution space is restricted in a meaningful way. This helps in learning better and robust representations. The objective function (Equation 1) maximizes the similarity between the output of the composition function of the image-text query and the target image features. Thus, it is intuitive to model the query image, query text and target image lying in some common space. One drawback of TIRG is that it does not emphasize the importance of text features in defining the relationship between the query image and the target image.

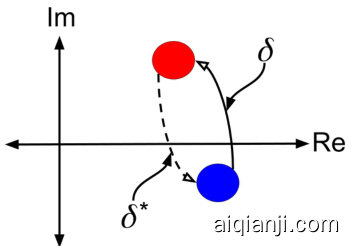

Figure 2. Conceptual Diagram of Rotation of the Images in Complex Space. Blue and Red Circle represent the query and the target image respectively. $\delta$ represents the rotation in the complex space, learned from the query text features. $\delta^{*}$ represents the complex conjugate of the rotation in the complex space.

In Equation 1, $\Theta$ denotes all the network parameters.

Based on these insights, we restrict the compositional learning of query image and text features in such a way that: (i) query and target image features lie in the same space, (ii) text features encode the transition from query image to target image in this space and (iii) transition is symmetric, i.e. some function of the text features must encode the reverse transition from target image to query image.

In order to incorporate these characteristics in the composed representation, we propose that the query image and target image are rotations (transitions) of each other in a complex space. The rotation is determined by the text features. This enables incorporating the desired text information about the image in the common complex space. The reason for choosing the complex space is that some function of text features required for the transition to be symmetric can easily be defined as the complex conjugate of the text features in the complex space (see Fig. 2).
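The symmetry argument can be checked numerically with a minimal sketch: rotating each complex coordinate by an angle and then multiplying by the complex conjugate of that rotation recovers the starting point. The helper names `rotate` and `rotate_back`, the vectors and the angles are all hypothetical; in the model the angles would be derived from the text features.

```python
import cmath
from typing import List


def rotate(vec: List[complex], angles: List[float]) -> List[complex]:
    """Rotate each complex coordinate by its own angle (element-wise)."""
    return [v * cmath.exp(1j * a) for v, a in zip(vec, angles)]


def rotate_back(vec: List[complex], angles: List[float]) -> List[complex]:
    """Undo the rotation by multiplying with the complex conjugate."""
    return [v * cmath.exp(1j * a).conjugate() for v, a in zip(vec, angles)]


query = [1 + 2j, -0.5 + 0.3j]   # hypothetical query-image representation
angles = [0.7, -1.2]            # hypothetical angles derived from the text
target = rotate(query, angles)
recovered = rotate_back(target, angles)
```

Because each rotation factor has unit modulus, the rotation changes only the phase of every coordinate and leaves its magnitude untouched.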

Choosing such projection also enables us to define a constraint on the optimization problem, referred to as rotational symmetry constraint (see Equations 12, 13 and 14). We will empirically verify the effectiveness of this constraint in learning better composed representations. We will also explore the effect on performance if we fuse image and text information in the real space. Refer to Sec. 4.5.

An advantage of modelling the reverse transition in this way is that we do not require captions of query image. This is quite useful in practice, since a user-friendly retrieval system will not ask the user to describe the query image for it. In the public datasets, query image captions are not always available, e.g. for Fashion IQ dataset. In addition to that, it also forces the model to learn a good “internal” representation of the text features in the complex space.

Interestingly, such restrictions on the learning problem serve as implicit regularization; e.g., the text features only influence the angles of the composed representation. This is in line with recent developments in deep learning theory [14, 13]. Neyshabur et al. [15] showed that imposing simple but global constraints on the parameter space of deep networks is an effective way of analyzing learning theoretic properties and aids in decreasing the generalization error.

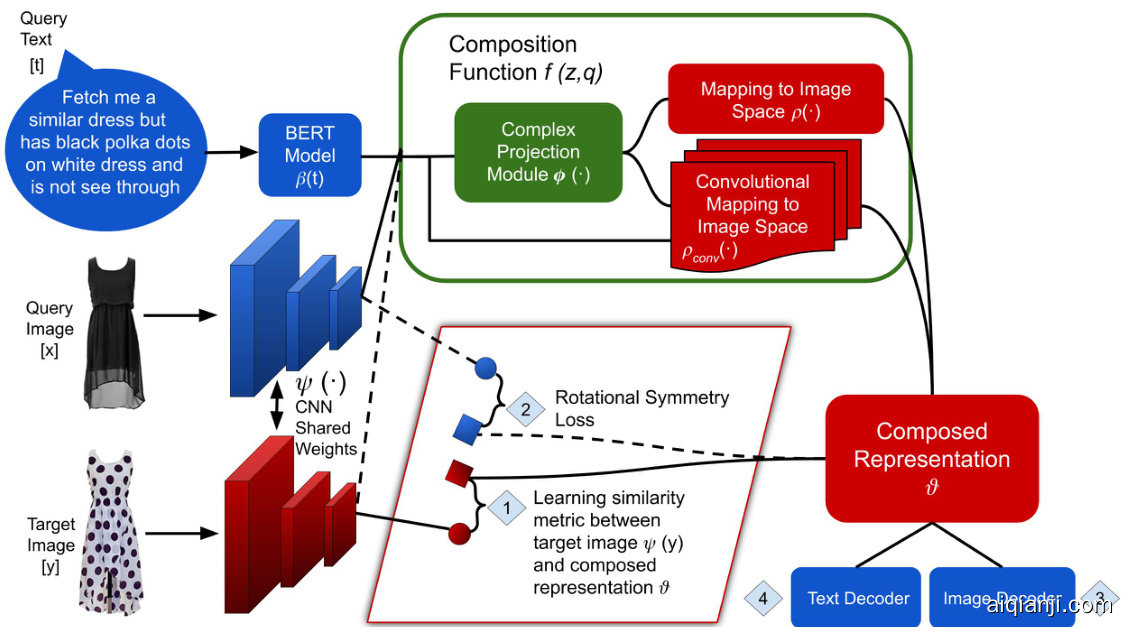

Figure 3. ComposeAE Architecture: Image retrieval using text and image query. Dotted lines indicate connections needed for calculating rotational symmetry loss (see Equations 12, 13 and 14). Here 1 refers to $L_{BASE}$, 2 refers to $L_{SYM}^{BASE}$, 3 refers to $L_{RI}$ and 4 refers to $L_{RT}$.

3.3. Network Architecture

We now describe ComposeAE, an autoencoder-based approach for composing the modalities in the multi-modal query. Figure 3 presents an overview of the ComposeAE architecture.

For the image query, we extract the image feature vector living in a $d$ -dimensional space, using the image model $\psi(\cdot)$ (e.g. ResNet-17), which we denote as:

$$
\psi(x)=z\in\mathbb{R}^{d}.
$$

Similarly, for the text query $t$ , we extract the text feature vector living in an $h$ -dimensional space, using the BERT model [1], $\beta(\cdot)$ as:

$$
\beta(t)=q\in\mathbb{R}^{h}.
$$

Since the image features $z$ and text features $q$ are extracted from independent uni-modal models, they have different statistical properties and follow complex distributions. Typically, in image-text joint embeddings [23, 24], these features are combined using fully connected layers or gating mechanisms.

In contrast to this we propose that the source image and target image are rotations of each other in some complex space, say, $\mathbb{C}^{k}$ . Specifically, the target image representation is an element-wise rotation of the representation of the source image in this complex space. The information of how much rotation is needed to get from source to target image is encoded via the query text features. During training, we learn the appropriate mapping functions which give us the composition of $z$ and $q$ in $\mathbb{C}^{k}$ .

More precisely, to model the text features $q$ as specifying element-wise rotation of source image features, we learn a mapping $\gamma\colon\mathbb{R}^{k}\to\{D\in\mathbb{R}^{k\times k}\mid D\text{ is diagonal}\}$ and obtain the coordinate-wise complex rotations via

$$
\delta=\mathcal{E}\{j\gamma(q)\},
$$

where $\mathcal{E}$ denotes the matrix exponential function and $j$ is the square root of $-1$. The mapping $\gamma$ is implemented as a multilayer perceptron (MLP), i.e., two fully-connected layers with non-linear activation.
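Since $\gamma(q)$ is diagonal, the matrix exponential reduces to an element-wise complex exponential of the diagonal entries. The sketch below checks this with a truncated power series; the helper names `matmul` and `matrix_exp` and the diagonal values are illustrative assumptions rather than the output of a learned MLP.

```python
import cmath
from typing import List

Matrix = List[List[complex]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain square-matrix product."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def matrix_exp(m: Matrix, terms: int = 40) -> Matrix:
    """Truncated power series: sum of m^p / p! for p = 0 .. terms - 1."""
    n = len(m)
    result = [[1 + 0j if i == j else 0j for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for p in range(1, terms):
        # term_p = term_{p-1} @ m / p
        term = [[x / p for x in row] for row in matmul(term, m)]
        result = [[r + t for r, t in zip(rr, tr)]
                  for rr, tr in zip(result, term)]
    return result


gamma_q = [0.3, -1.1, 2.0]  # hypothetical diagonal entries of gamma(q)
n = len(gamma_q)
j_gamma = [[1j * gamma_q[i] if i == k else 0j for k in range(n)]
           for i in range(n)]
delta = matrix_exp(j_gamma)
elementwise = [cmath.exp(1j * g) for g in gamma_q]
```

Each diagonal entry of `delta` matches the corresponding entry of `elementwise` and has unit modulus, so an implementation only needs the diagonal and can avoid a full matrix exponential.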

Next, we learn a mapping function, $\eta:\mathbb{R}^{d}\rightarrow\mathbb{C}^{k}$, which maps image features $z$ to the complex space. $\eta$ is also implemented as an MLP. The composed representation, denoted by $\phi\in\mathbb{C}^{k}$, can be written as:

$$
\phi=\delta\eta(z)
$$

The optimization problem defined in Eq. 1 aims to maximize the similarity between the composed features and the target image features extracted from the image model. Thus, we need to learn a mapping function, $\rho:\mathbb{C}^{k}\mapsto\mathbb{R}^{d}$, from the complex space $\mathbb{C}^{k}$ back to the $d$-dimensional real space in which the extracted target image features lie. $\rho$ is implemented as an MLP.

In order to better capture the underlying cross-modal similarity structure in the data, we learn another mapping, denoted as $\rho_{conv}$. The convolutional mapping is implemented as two fully connected layers followed by a single convolutional layer. It learns 64 convolutional filters, and adaptive max pooling is applied to their outputs to obtain the representation from this convolutional mapping. This enables learning effective local interactions among different features. In addition to $\phi$, $\rho_{conv}$ also takes the raw features $z$ and $q$ as input. $\rho_{conv}$ plays a really important role for queries where the query text asks for a modification that is spatially localized, e.g., when a user wants a t-shirt with a different logo on the front (see second row in Fig. 4).

Let $f(z,q)$ denote the overall composition function which learns how to effectively compose extracted image and text features for target image retrieval. The final representation, $\vartheta\in\mathbb{R}^{d}$ , of the composed image-text features can be written as follows:

$$
\vartheta=f(z,q)=a\rho(\phi)+b\rho_{conv}(\phi,z,q),
$$

where $a$ and $b$ are learnable parameters.
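The data flow of the composition function can be sketched end to end as follows. The learned mappings $\eta$, $\gamma$, $\rho$ and $\rho_{conv}$ are MLP or convolutional networks in the paper; here they are replaced by trivial stand-in functions (an assumption made purely for illustration), and `compose` is a hypothetical name.

```python
import cmath
from typing import Callable, List

Vec = List[float]
CVec = List[complex]


def compose(z: Vec, q: Vec,
            eta: Callable[[Vec], CVec],
            gamma: Callable[[Vec], Vec],
            rho: Callable[[CVec], Vec],
            rho_conv: Callable[[CVec, Vec, Vec], Vec],
            a: float, b: float) -> Vec:
    """theta = a * rho(phi) + b * rho_conv(phi, z, q), phi = delta * eta(z)."""
    delta = [cmath.exp(1j * angle) for angle in gamma(q)]  # diagonal of delta
    phi = [d * e for d, e in zip(delta, eta(z))]           # element-wise rotation
    main = rho(phi)
    conv = rho_conv(phi, z, q)
    return [a * m + b * c for m, c in zip(main, conv)]


# Toy stand-ins for the learned mappings (assumptions, not the real networks).
eta = lambda z: [complex(v, 0.0) for v in z]
gamma = lambda q: q
rho = lambda phi: [p.real for p in phi]
rho_conv = lambda phi, z, q: [p.imag for p in phi]

theta = compose([1.0, 2.0], [0.0, cmath.pi / 2], eta, gamma, rho, rho_conv,
                a=1.0, b=0.5)
```

In a trained model, `a` and `b` would be learned jointly with the mappings rather than fixed by hand.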

In autoencoder terminology, the encoder has learnt the composed representation of image and text query, $\vartheta$. Next, we learn to reconstruct the extracted image features $z$ and text features $q$ from $\vartheta$. Separate decoders are learned for each modality, i.e., an image decoder and a text decoder, denoted by $d_{img}$ and $d_{txt}$ respectively. The reason for using the decoders and reconstruction losses is two-fold: first, they act as a regularizer on the learnt composed representation and, second, they force the composition function to retain relevant text and image information in the final representation. Empirically, we have seen that these losses reduce the variation in performance and aid in preventing overfitting.

3.4. Training Objective

We adopt a deep metric learning (DML) approach to train ComposeAE. Our training objective is to learn a similarity metric, $\kappa(\cdot,\cdot):\mathbb{R}^{d}\times\mathbb{R}^{d}\mapsto\mathbb{R}$ , between composed image-text query features $\vartheta$ and extracted target image features $\psi(y)$ . The composition function $f(z,q)$ should learn to map semantically similar points from the data manifold in $\mathbb{R}^{d}\times\mathbb{R}^{h}$ onto metrically close points in $\mathbb{R}^{d}$ . Analogously, $f(\cdot,\cdot)$ should push the composed representation away from non-similar images in $\mathbb{R}^{d}$ .

For sample $i$ from the training mini-batch of size $N$, let $\vartheta_{i}$ denote the composition feature, $\psi(y_{i})$ denote the target image features and $\psi(\tilde{y}_{i})$ denote the features of a randomly selected negative image from the mini-batch. We follow TIRG [23] in choosing the base loss for the datasets.

Accordingly, for the MIT-States dataset, we employ the triplet loss with soft margin as the base loss.

In this loss, $M$ denotes the number of triplets for each training sample $i$. In our experiments, we choose the same value as in the TIRG code, i.e., 3.
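The equation for this loss does not appear in the text above. The sketch below therefore assumes the commonly used soft-margin form, $\log(1+\exp(\kappa(\vartheta_{i},\psi(\tilde{y}_{i}))-\kappa(\vartheta_{i},\psi(y_{i}))))$ averaged over all $M\cdot N$ triplets; both this form and the function names are assumptions, not a transcription of the paper's equation.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def kappa(u: Vec, v: Vec) -> float:
    """Dot-product similarity kernel."""
    return sum(a * b for a, b in zip(u, v))


def soft_margin_triplet_loss(composed: List[Vec],
                             positives: List[Vec],
                             negatives: List[List[Vec]]) -> float:
    """Average of log(1 + exp(neg_sim - pos_sim)) over all triplets.

    negatives[i] holds the M negative feature vectors for sample i.
    """
    total, count = 0.0, 0
    for theta, pos, negs in zip(composed, positives, negatives):
        pos_sim = kappa(theta, pos)
        for neg in negs:
            total += math.log1p(math.exp(kappa(theta, neg) - pos_sim))
            count += 1
    return total / count


# One sample with M = 3 hypothetical negatives.
loss_value = soft_margin_triplet_loss(
    composed=[[1.0, 0.0]],
    positives=[[2.0, 0.0]],
    negatives=[[[0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]],
)
```

The loss shrinks towards zero as the target similarity exceeds every negative similarity by a growing margin, and equals $\log 2$ per triplet when the two similarities tie.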

For the Fashion200k and Fashion IQ datasets, the base loss is the softmax loss with similarity kernels, denoted as $L_{SMAX}$. For each training sample $i$, we normalize the similarity between the composed query-image features $(\vartheta_{i})$ and the target image features by dividing it by the sum of similarities between $\vartheta_{i}$ and all the target images in the batch. This is equivalent to the classification-based loss in [23, 3, 20, 10].
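A minimal sketch of such a batch-wise softmax loss is given below. It assumes the similarities are exponentiated before normalization, as in a standard softmax cross-entropy, and that target $i$ is the correct match for query $i$; the equation itself is not shown in the text, so this form and the function names are assumptions.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def kappa(u: Vec, v: Vec) -> float:
    """Dot-product similarity kernel."""
    return sum(a * b for a, b in zip(u, v))


def softmax_similarity_loss(composed: List[Vec], targets: List[Vec]) -> float:
    """Mean of -log(exp(k_ii) / sum_j exp(k_ij)) over the batch."""
    total = 0.0
    for i, theta in enumerate(composed):
        sims = [kappa(theta, t) for t in targets]
        peak = max(sims)  # subtract the maximum for numerical stability
        log_sum = peak + math.log(sum(math.exp(s - peak) for s in sims))
        total += log_sum - sims[i]
    return total / len(composed)


# Hypothetical batch of two queries whose matching targets are well separated.
separated = softmax_similarity_loss([[10.0, 0.0], [0.0, 10.0]],
                                    [[1.0, 0.0], [0.0, 1.0]])
# Batch where every target looks the same to every query.
ambiguous = softmax_similarity_loss([[1.0, 0.0], [0.0, 1.0]],
                                    [[1.0, 1.0], [1.0, 1.0]])
```

When all targets are equally similar to a query, the loss equals the logarithm of the batch size, which is the chance-level value for this classification view.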

In addition to the base loss, we also incorporate two reconstruction losses in our training objective. They act as regularizers on the learning of the composed representation. The image reconstruction loss is given by:

$$
L_{RI}=\frac{1}{N}\sum_{i=1}^{N}\left\Vert z_{i}-\hat{z}_{i}\right\Vert_{2}^{2},
$$

where $\hat{z}_{i}=d_{img}(\vartheta_{i})$.
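The image reconstruction loss is the squared Euclidean distance between each extracted feature vector and its reconstruction, averaged over the batch. A minimal sketch follows, with `reconstruction_loss` as a hypothetical name and toy vectors standing in for $z_{i}$ and the decoder outputs $\hat{z}_{i}$.

```python
from typing import List, Sequence


def reconstruction_loss(originals: List[Sequence[float]],
                        reconstructions: List[Sequence[float]]) -> float:
    """Mean over the batch of the squared L2 distance ||z_i - z_hat_i||^2."""
    total = 0.0
    for z, z_hat in zip(originals, reconstructions):
        total += sum((a - b) ** 2 for a, b in zip(z, z_hat))
    return total / len(originals)


# Toy batch of N = 2 feature vectors and their hypothetical reconstructions.
z_batch = [[1.0, 2.0], [3.0, 4.0]]
z_hat_batch = [[1.0, 0.0], [0.0, 4.0]]
loss_ri = reconstruction_loss(z_batch, z_hat_batch)
```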

其中 $\hat{z}{i}=d_{i m g}(\vartheta_{i}