TMCIR: Token Merge Benefits Composed Image Retrieval

Abstract

Composed Image Retrieval (CIR) retrieves target images using a multi-modal query that combines a reference image with text describing desired modifications. The primary challenge is effectively fusing this visual and textual information. Current cross-modal feature fusion approaches for CIR exhibit an inherent bias in intention interpretation: they tend to disproportionately emphasize either the reference image features (visual-dominant fusion) or the textual modification intent (text-dominant fusion through image-to-text conversion). Such an imbalanced representation often fails to accurately capture and reflect the user's actual search intent in the retrieval results. To address this challenge, we propose TMCIR, a novel framework that advances composed image retrieval through two key innovations: 1) Intent-Aware Cross-Modal Alignment. We first contrastively fine-tune CLIP encoders using intent-reflecting pseudo-target images, synthesized from reference images and textual descriptions via a diffusion model. This step enhances the text encoder's ability to capture nuanced intents in textual descriptions. 2) Adaptive Token Fusion. We then contrastively fine-tune all encoders by comparing adaptive token-fusion features with the target image. This mechanism dynamically balances visual and textual representations within the contrastive learning pipeline, optimizing the composed feature for retrieval. Extensive experiments on the Fashion-IQ and CIRR datasets demonstrate that TMCIR significantly outperforms state-of-the-art methods, particularly in capturing nuanced user intent.

CCS Concepts

• Composed Image Retrieval;

Keywords

Composed Image Retrieval, Contrastive Learning.

1 Introduction

Retrieving images based on a combination of a reference image and textual modification instructions defines the task of Composed Image Retrieval (CIR) [5, 20, 37]. Specifically, the goal of CIR is to retrieve a target image from a candidate set that maintains overall visual similarity to the reference image while fulfilling the localized modification requirements specified in the textual description. CIR enables precise, interactive retrieval, making it valuable for applications like e-commerce and personalized web search.

However, composed queries from two distinct modalities introduce unique challenges. Unlike text-to-image or image-to-image retrieval, which rely on a single query type, CIR must interpret relative changes described in text and apply them to the specific visual content of the reference image. The core difficulty lies in effectively integrating these cross-modal signals into a unified representation for similarity comparison with candidate images. To achieve this integration, most current approaches employ visual-dominant feature fusion mechanisms [1, 8, 11, 24], which extract image and text features separately and then combine them. However, these methods exhibit two critical limitations: 1) they often fail to preserve essential visual details from the reference image; 2) they tend to inadvertently incorporate irrelevant background information (i.e., regions unrelated to the textual modifications) into the final query representation. These shortcomings become particularly pronounced in scenarios requiring fine-grained image modifications, such as precise color variations or localized texture alterations, where maintaining both visual fidelity and modification accuracy is paramount.

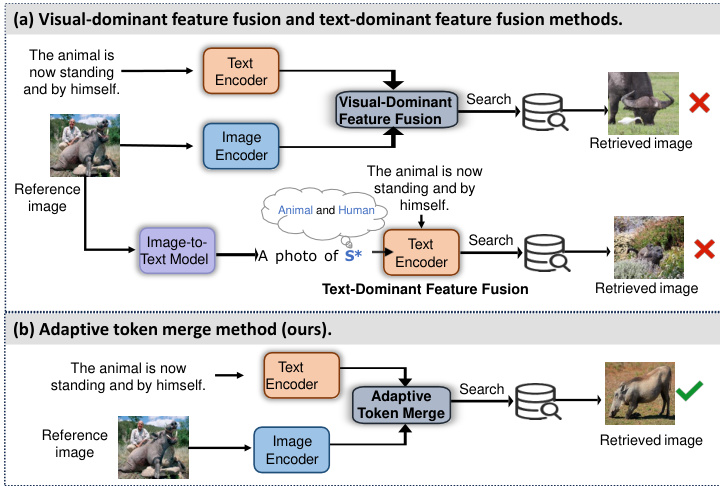

Figure 1: Workflows of existing CIR methods and our proposed TMCIR

Other recent approaches have adopted text-dominant fusion mechanisms that leverage CLIP-based image-to-text conversion [5, 12], where reference images are mapped to pseudo-word embeddings for integration with textual descriptions. While this paradigm benefits from established cross-modal alignment, it faces fundamental limitations: 1) the generated pseudo-word tokens primarily capture global image semantics while losing fine-grained visual details; 2) the constrained length of the word tokens restricts comprehensive visual representation. These factors lead to granularity mismatches in the cross-modal representations, ultimately compromising retrieval accuracy.

As shown in Fig. 1(a), both visual-dominant and text-dominant fusion methods fail to accurately capture and reflect the user's actual search intent in the retrieval results. To address these challenges, we propose the TMCIR framework, which is designed to preserve the critical visual information in the reference image while accurately conveying the modification intent that the user specifies in the textual description. TMCIR comprises two key steps:

1) Intent-Aware Cross-Modal Alignment. This step precisely captures the textual intent of the description, addressing a critical limitation in CIR. Conventional target images often contain extraneous variations (e.g., lighting conditions, irrelevant background objects, or stylistic inconsistencies) that deviate from the intent specified by the text, making them suboptimal for fine-tuning. To overcome this, we introduce a pseudo-target generation module that leverages a diffusion model conditioned on both the reference image and the relative textual description. The generated pseudo-target image removes this noise and serves as a cleaner supervisory signal that faithfully reflects the intended modifications. Using an image-text paired dataset constructed from these pseudo-targets and their corresponding descriptions, we contrastively fine-tune the pre-trained visual and textual encoders. This ensures precise cross-modal token alignment in a shared embedding space, with a focused emphasis on preserving the intent expressed in the description.

2) Adaptive Token Fusion. Following alignment fine-tuning, we introduce an adaptive fusion strategy that computes token-wise cosine similarity between the outputs of the visual and textual encoders and adds positional encodings for weighted feature fusion (Fig. 1(b)). This design serves two purposes: (i) the positional cues establish explicit correspondences between textual concepts and their spatial counterparts in the image; (ii) the similarity-weighted fusion preserves critical visual details while precisely encoding the nuanced modification intents specified in relative descriptions. The fused representations then drive a final contrastive fine-tuning stage, in which we optimize all encoders by comparing the adaptive token-fusion features against target images. This dynamic balancing mechanism refines both modalities simultaneously within a unified contrastive framework, ultimately producing composed features that are discriminative for retrieval.

Figure 2: Retrieval examples using the proposed TMCIR, CLIP4CIR [4] (visual-dominant feature fusion), and Pic2word [31] (text-dominant fusion) methods, respectively.

Experiments conducted on the Fashion-IQ and CIRR datasets demonstrate that our method achieves state-of-the-art performance on both benchmarks. As shown in Figure 2, a user provides a reference image of a black T-shirt accompanied by the relative description “change the black T-shirt to purple and add intricate patterns”.

CLIP4CIR [4] employs visual-dominant feature fusion, which risks incorporating irrelevant visual elements (e.g., background clutter) that dilute the precise modification intent, leading to erroneous results. Conversely, Pic2word [31] uses text-dominant fusion, which may suppress relevant visual details and likewise weaken the intended modifications, resulting in comparable failures. Both approaches highlight the need for a balanced fusion strategy that preserves critical visual features while faithfully capturing textual intentions. Our adaptive token fusion approach analyzes the image in finer detail. It partitions the reference image, allowing visual tokens from different regions to be compared with keywords in the description. The mechanism can distinguish the T-shirt region from the background and, via cosine similarity, identify tokens highly correlated with keywords like “purple”, “T-shirt”, and “patterns”. Tokens from the T-shirt region receive higher weights, while background tokens are attenuated. Simultaneously, the textual information for “purple” guides the adjustment of the token representations associated with the T-shirt, generating a joint representation that accurately fulfills the modification requirements.

In summary, our contributions are as follows:

• We propose a novel CIR approach that integrates intent-aware cross-modal alignment (IACMA) and adaptive token fusion (ATF) to better capture user intent. IACMA leverages a diffusion model to generate pseudo-target images that reflect the user's modification intent more accurately than potentially noisy real target images, providing a purer supervisory signal for encoder fine-tuning. ATF adaptively fuses visual and textual tokens through similarity-weighted integration and positional encoding. This strategy preserves key visual details while accurately capturing subtle user modification intent.

• Experimental results on the Fashion-IQ and CIRR datasets indicate that our proposed method outperforms current state-of-the-art CIR approaches in both retrieval accuracy and robustness.

2 Related work

Composed Image Retrieval. Mainstream CIR methods fall into two categories. The first focuses on reference image features: the features of the reference image are fused with those of the relative caption, and the fused feature is then compared with the features of all candidate images to find the closest match [1, 9, 11, 24, 37]. Various feature fusion mechanisms [37] and attention mechanisms [9, 11] have shown strong performance in CIR. Subsequently, capitalizing on the capabilities of pre-trained models, a number of CIR methods fuse image and text features extracted by visual and text encoders [4, 13, 30]. The second category transforms the reference image into a pseudo-word embedding [3, 31], which is then combined with the relative caption for text-to-image retrieval. However, pseudo-word embeddings learned in this manner often capture only global semantic information while lacking fine-grained visual details, and their limited length makes it difficult to represent the visual content of the reference image comprehensively. Liu et al. created text with semantics opposite to those of the original text and added learnable tokens to it, retrieving images with two distinct queries [26]. Nevertheless, this fixed text-prompt learning approach does not modify the intrinsic information within the relative caption itself, which constrains retrieval performance. Although existing composed image retrieval methods achieve varying degrees of visual and textual information fusion, they still fall short of fully preserving the detailed information in the reference image and accurately aligning with the user’s modification intent.
Motivated by this, we propose the TMCIR framework, which effectively addresses the issues of information loss and insufficient cross-modal alignment through the generation of pseudo-target images, task-specific fine-tuning of encoders, and a similarity-based token fusion module. As a result, our approach achieves significant improvements in both retrieval accuracy and robustness.

Token Merge for Modal Fusion. Multi-modal fusion requires the compression of redundant features and efficient representation learning, both of which are critical for enhancing a model’s generalization and inference efficiency. Traditional multi-modal fusion approaches often suffer from redundant features and escalated computational costs due to overlapping information across modalities, particularly in resource-constrained settings. The Token Merge method was originally proposed for vision Transformers to reduce unnecessary computation by merging redundant or semantically similar tokens [6]. Subsequently, this method was adapted to multi-modal tasks where researchers attempted to dynamically fuse tokens from different modalities to construct a more compact and expressive joint representation. In tasks such as image–text retrieval, image–text generation, and video semantic understanding, Token Merge not only improves computational efficiency but also significantly alleviates the issues of information conflicts and semantic inconsistencies during cross-modal alignment [7, 23, 28, 32].

In the context of composed image retrieval, the Token Merge method is used to address the inconsistent granularity between cross-modal feature alignment and fusion. For example, Wang et al. [38] emphasized cross-modal entity alignment during fine-tuning by employing contrastive learning and entity-level masked modeling to promote effective information interaction and structural unification between image and text. To further exploit the potential of localized textual representations, NSFSE [39] adaptively delineated the boundaries between matching and non-matching samples by comprehensively considering the correspondence of positive and negative pairs.

Furthermore, efficient inference and generation in multi-modal models can also be achieved by directly pruning tokens in the language model. Huang [16] introduced a training-free method that reduces video redundancy by merging spatio-temporal tokens, thereby improving model efficiency. Compared with traditional attention-intensive interaction mechanisms, Token Merge offers a more flexible and controllable computational pathway for multi-modal fusion, with promising scalability and practical engineering prospects.

In our work, we apply the Token Merge technique to the fusion of visual and textual information in composed image retrieval. By adaptively merging visual tokens and textual tokens, our approach better preserves essential visual details from the reference image and fine-grained semantic information from the text. This results in more precise cross-modal token alignment and fusion, ultimately enhancing the retrieval performance.

3 Method

3.1 Preliminary

Assume that a composed image retrieval (CIR) dataset contains $N$ annotated triplet samples, where the $i$-th triplet sample $x_{i}$ is represented as:

$$

x_{i}=(r_{i},m_{i},t_{i}),\quad r_{i},t_{i}\in\Omega,\quad m_{i}\in\mathcal{T}.

$$

Here, $r_{i}$, $m_{i}$, and $t_{i}$ denote the reference image, the relative description, and the target image of the $i$-th triplet sample, respectively. The term “relative” emphasizes that $m_{i}$ specifies the modifications to be applied to $r_{i}$ to obtain the target image, i.e., it describes how the target image differs from the reference image. $\Omega$ denotes the candidate image set, which contains all reference and target images, and $\mathcal{T}$ denotes the text set containing all relative descriptions.

In the CIR task, the query $q_{i}$, composed of the reference image $r_{i}$ and the relative description $m_{i}$, is used to retrieve the target image $t_{i}$ from the candidate set $\Omega$. In the classical CIR training paradigm, multiple annotated triplet samples are first grouped into a mini-batch. Within the same batch, the reference images and relative descriptions are encoded into query representations by a query encoder $F(\cdot)$, while the target images are encoded by an image encoder $G(\cdot)$ to obtain their embeddings. For brevity, we denote the representations of the triplet $(r_{i},m_{i},t_{i})$ as

$$

q_{i}=F(r_{i},m_{i})\quad\mathrm{and}\quad v_{i}=G(t_{i}),

$$

where $v_{i}$ denotes the embedding of the target image. The cosine similarity function $f(\cdot,\cdot)$ computes the similarity between the query representation and the target image embedding. Most current methods adopt a contrastive learning paradigm, which pulls together the query and target image representations of positive pairs (i.e., the query paired with its matching target image) while pushing apart those of negative pairs (i.e., a query paired with a target image from a different triplet). The corresponding loss function is formulated as:

$$

L_{c l}=\frac{1}{B}\sum_{i=1}^{B}-\log\left(\frac{\exp(f(q_{i},v_{i})/\tau)}{\sum_{j=1}^{B}\exp(f(q_{i},v_{j})/\tau)}\right),

$$

where $B$ is the batch size and $\tau$ is the temperature hyperparameter, which controls the sharpness of the similarity distribution and thus regulates the strength of the contrastive signal.

Our method follows the same contrastive learning paradigm.
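
The batch loss $L_{cl}$ can be sketched in plain Python as below. This is a minimal illustration and not the authors' implementation. It assumes the query embeddings $q_i$ and target embeddings $v_i$ are already computed, and it uses lists in place of a deep-learning framework. The helper names `cosine` and `batch_contrastive_loss` are our own. For query $i$, the target $v_i$ is the positive and every other target in the batch serves as a negative.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity f(a, b); both vectors are assumed to be non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def batch_contrastive_loss(queries: List[Vector], targets: List[Vector], tau: float) -> float:
    """L_cl: batch mean of -log softmax_j(f(q_i, v_j) / tau) evaluated at j = i."""
    batch_size = len(queries)
    total = 0.0
    for i in range(batch_size):
        logits = [cosine(queries[i], targets[j]) / tau for j in range(batch_size)]
        # Stable log-sum-exp: subtract the maximum logit before exponentiating.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(z - peak) for z in logits))
        total += log_denominator - logits[i]
    return total / batch_size


# Usage: each target is aligned with its own query, so the loss is close to 0.
queries = [[1.0, 0.0], [0.0, 1.0]]
targets = [[2.0, 0.0], [0.0, 3.0]]
loss = batch_contrastive_loss(queries, targets, tau=0.07)
```

A smaller `tau` scales up every logit. As a result, the same similarity gap produces a larger penalty whenever a negative target outranks the positive one.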

3.2 Overview

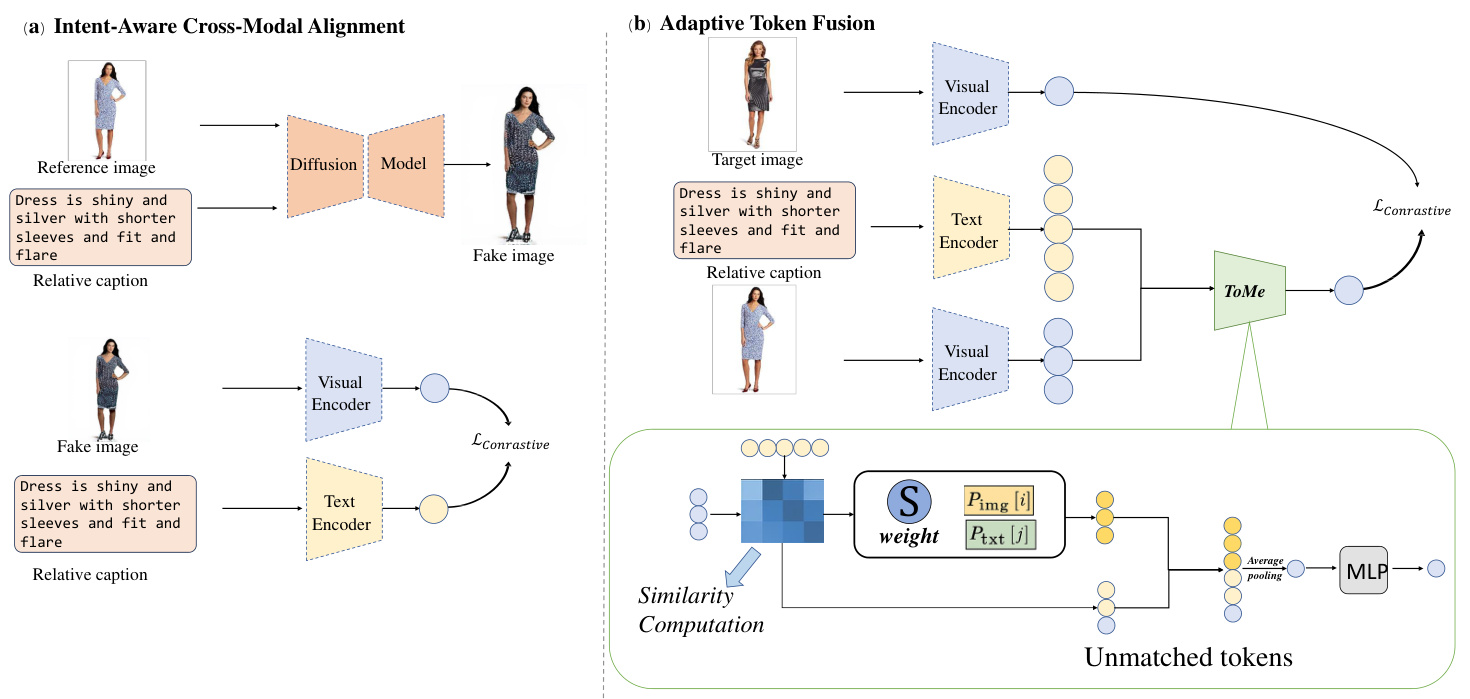

As depicted in Figure 1, our proposed TMCIR pipeline comprises two steps:

(1) Intent-Aware Cross-Modal Alignment. This step contains the Pseudo Target Image Generation (PTIG) and Encoder Fine-Tuning for Token Alignment (EFTTA) modules. In the PTIG module, a diffusion model, specifically Stable Diffusion 3, generates a pseudo target image $p_{i}$ conditioned on the reference image $r_{i}$ and the relative description $m_{i}$. This pseudo target image accurately reflects the modification requirements specified in $m_{i}$, ensuring a high level of controllability and reproducibility. In the EFTTA module, we construct an image-text pair dataset from the relative descriptions and the pseudo target images, and then fine-tune the visual and text encoders of the CLIP model. This process promotes more consistent cross-modal token distributions in the shared embedding space. Here, token alignment refers to harmonizing tokens from different modalities in the embedding space so that their semantic representations and attention patterns become more correlated and comparable.

Figure 3: An Overview of the TMCIR Framework. It consists of two modules: the "Intent-Aware Cross-Modal Alignment" module and the "Adaptive Token Fusion" module. First, we input the reference image and the relative description into a diffusion model to generate a pseudo-target image. Through contrastive learning, we guide the visual and textual encoders to achieve cross-modal token distribution alignment. Then, the reference image and the relative description are fused using an adaptive token fusion strategy based on positional encoding and semantic similarity, generating a joint representation that captures both the user intent and the key visual information from the reference image.

(2) Adaptive Token Fusion. After obtaining visual and text tokens from the fine-tuned visual and text encoders, we apply an adaptive token fusion strategy. Token merging is performed token by token: we compute the cosine similarity between individual visual and text tokens, merge matching pairs by weighted averaging, and incorporate their positional encodings. This fusion strategy constructs a unified and semantically rich cross-modal representation.

In the following subsections, we provide details for these two steps.

3.3 Intent-Aware Cross-Modal Alignment

The Intent-Aware Cross-Modal Alignment step aims to enhance the text encoder's ability to capture nuanced intents in textual descriptions. It comprises two modules: Pseudo Target Image Generation and Encoder Fine-Tuning for Token Alignment.

Pseudo Target Image Generation. Large-scale vision-language models (e.g., CLIP [29]) benefit from training data comprising hundreds of millions to billions of image-text pairs, and they have demonstrated excellent generalization across numerous downstream tasks. This has inspired the application of such foundation models to the composed image retrieval (CIR) task. Existing methods typically rely on various modality fusion strategies to integrate the bimodal features extracted by the visual and text encoders. However, the visual encoder in pre-trained models primarily focuses on global visual information, while the text encoder captures generic language features. Directly employing the original encoders often leads to inconsistent token distributions between the visual and text modalities on specific CIR datasets. This inconsistency results in suboptimal similarity computations during the token fusion phase and, consequently, inferior retrieval performance.

To address this issue, we first select image-text pairs from existing CIR datasets (in our experiments, typically all available pairs or a fixed number per batch). We then perform task-specific fine-tuning of the visual and text encoders so that they produce more consistent token representations for the reference image and the relative description. The manually collected triplet samples in current CIR datasets contain target images from diverse sources, which may include background interference or noise not aligned with the modification description. We therefore generate a pseudo target image $p_{i}$ by conditioning a diffusion model $D$ on the reference image $r_{i}$ and the relative description $m_{i}$:

$$

p_{i}=D(r_{i},m_{i}).

$$

The pseudo target image $p_{i}$ accurately embodies the modification requirements stipulated in $m_{i}$ , while excluding irrelevant background noise. This provides a purer and more precise supervisory signal for subsequent encoder fine-tuning. The pseudo target image $p_{i}$ and the relative description $m_{i}$ are then combined to form an image-text pair dataset $\mathcal{D}$ :

$$

\mathcal{D}=\{(m_{i},p_{i})\mid i=1,2,\ldots,N\}.

$$

We utilize $\mathcal{D}$ as a dedicated dataset in a distinct training stage for fine-tuning the encoders, separate from the original CIR training set.
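
Constructing $\mathcal{D}$ amounts to replacing each triplet's real target with a generated one and pairing it with the triplet's description. The sketch below makes several assumptions. Images are represented by string identifiers. A hypothetical `fake_edit` stub stands in for the diffusion model $D$ (Stable Diffusion 3 in this paper). `Triplet` and `build_pseudo_target_dataset` are illustrative names, not part of any released code.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Triplet:
    reference: str    # r_i (an image identifier in this sketch)
    description: str  # m_i, the relative description
    target: str       # t_i, the real target image; not used to build D


def build_pseudo_target_dataset(
    triplets: List[Triplet],
    generate: Callable[[str, str], str],
) -> List[Tuple[str, str]]:
    """Return D = [(m_i, p_i)] where p_i = generate(r_i, m_i)."""
    return [(x.description, generate(x.reference, x.description)) for x in triplets]


def fake_edit(reference: str, description: str) -> str:
    # Hypothetical placeholder for a text-conditioned image-editing diffusion call.
    return f"pseudo({reference} | {description})"


triplets = [Triplet("r1", "make it red", "t1"), Triplet("r2", "add a collar", "t2")]
dataset = build_pseudo_target_dataset(triplets, fake_edit)
```

The real targets $t_i$ are dropped when $\mathcal{D}$ is built. As a result, this fine-tuning stage is supervised only by the description-conditioned pseudo targets.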

Encoder Fine-Tuning for Token Alignment. After constructing the image-text pair dataset $\mathcal{D}=\{(m_{i},p_{i})\}_{i=1}^{N}$ as described above, we adopt a contrastive learning strategy to fine-tune the CLIP-pretrained visual encoder and text encoder, further enhancing their cross-modal representation and alignment abilities for the CIR task.

Specifically, for each image-text pair $(m_{i},p_{i})$, the relative description $m_{i}$ is input into the text encoder $E_{T}$ to obtain the text feature vector:

$$

t_{i}=E_{T}(m_{i})

$$

while the pseudo target image $p_{i}$ is input into the visual encoder $E_{V}$ to obtain the image feature vector:

$$

v_{i}=E_{V}(p_{i})

$$

We then normalize these feature representations:

$$

\hat{t}_{i}=\frac{t_{i}}{\lVert t_{i}\rVert_{2}},\quad\hat{v}_{i}=\frac{v_{i}}{\lVert v_{i}\rVert_{2}}

$$

to facilitate subsequent cosine similarity calculations.
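
A small worked check shows why the normalization helps. Once $t_i$ and $v_i$ have unit $\ell_2$ norm, their plain dot product equals their cosine similarity, so $\mathrm{sim}(\hat{v}_i,\hat{t}_j)$ in the InfoNCE loss that follows reduces to a dot product. The vectors here are toy stand-ins for encoder outputs (assumed non-zero), and `l2_normalize` and `dot` are illustrative helper names.

```python
import math
from typing import List, Sequence


def l2_normalize(x: Sequence[float]) -> List[float]:
    """Return x / ||x||_2; x is assumed to be non-zero."""
    norm = math.sqrt(sum(value * value for value in x))
    return [value / norm for value in x]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


text_feature = [3.0, 4.0]    # toy stand-in for t_i = E_T(m_i)
image_feature = [4.0, 3.0]   # toy stand-in for v_i = E_V(p_i)
t_hat = l2_normalize(text_feature)
v_hat = l2_normalize(image_feature)

# Cosine similarity computed from the raw features ...
cos_sim = dot(text_feature, image_feature) / (
    math.sqrt(dot(text_feature, text_feature)) * math.sqrt(dot(image_feature, image_feature))
)
# ... matches the plain dot product of the normalized features (0.96 here).
normalized_dot = dot(t_hat, v_hat)
```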

Next, we optimize the model parameters using the InfoNCE loss function:

$$

\mathcal{L}=-\frac{1}{N}\sum_{i=1}^{N}\log\frac{\exp(\mathrm{sim}(\hat{v}_{i},\hat{t}_{i})/\tau)}{\sum_{j=1}^{N}\exp(\mathrm{sim}(\hat{v}_{i},\hat{t}_{j})/\tau)}

$$

where $\mathrm{sim}(\cdot,\cdot)$ denotes the cosine similarity function and $\tau$ is a learnable temperature parameter that controls the smoothness of the similarity distribution.

Following fine-tuning, the token representations produced by the visual and text encoders in the shared embedding space exhibit improved distribution consistency and cross-modal alignment, thereby providing a robust foundation for the subsequent token merging module.

3.4 Adaptive Token Fusion

Following the fine-tuning of the visual and text encoders, the resulting visual tokens and text tokens are well-aligned in the shared embedding space. To effectively integrate the dual-modal information, we design a token merging module based on similarity computation.

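Let $V=\{\mathbf{v}_{i}\}_{i=1}^{L}$ denote the $L$ visual tokens of the reference image and $T=\{\mathbf{t}_{j}\}_{j=1}^{M}$ the $M$ text tokens of the relative description. We compute the similarity matrix $\mathbf{S}\in\mathbb{R}^{L\times M}$ between the image token set $V$ and the text token set $T$: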

$$

\mathbf{S}_{ij}=\frac{\mathbf{v}_{i}\cdot\mathbf{t}_{j}}{\lVert\mathbf{v}_{i}\rVert\,\lVert\mathbf{t}_{j}\rVert}.

$$

For each text token, we iterate over all visual tokens and treat a pair $(\mathbf{v}_{i},\mathbf{t}_{j})$ as a valid matching token pair if its similarity $\mathbf{S}_{ij}$ exceeds a preset threshold $\tau$.
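
The following is a minimal sketch of the similarity matrix and the threshold-based matching. It assumes that tokens are plain Python lists of floats and that no token is a zero vector. We name the threshold `threshold` to keep it distinct from the temperature $\tau$ of the contrastive losses. The text does not say whether a token may match several counterparts, so this sketch keeps every pair above the threshold. All function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Token = Sequence[float]


def cosine(a: Token, b: Token) -> float:
    """Cosine similarity between two non-zero token vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def similarity_matrix(visual: List[Token], text: List[Token]) -> List[List[float]]:
    """S[i][j] = cos(v_i, t_j), an L x M matrix (L visual tokens, M text tokens)."""
    return [[cosine(v, t) for t in text] for v in visual]


def match_pairs(S: List[List[float]], threshold: float) -> List[Tuple[int, int]]:
    """Every (i, j) with S[i][j] > threshold; text tokens form the outer loop."""
    num_visual = len(S)
    num_text = len(S[0]) if S else 0
    return [
        (i, j)
        for j in range(num_text)      # for each text token t_j ...
        for i in range(num_visual)    # ... scan every visual token v_i
        if S[i][j] > threshold
    ]


# Usage with toy 2-D tokens: v_0 matches t_0 and v_1 matches t_1.
visual_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
text_tokens = [[1.0, 0.1], [0.0, 1.0]]
S = similarity_matrix(visual_tokens, text_tokens)
pairs = match_pairs(S, threshold=0.9)
```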

Fusion Strategy: For each matching token pair $(\mathbf{v}_{i},\mathbf{t}_{j})$, we compute a weighted average using their similarity $\mathbf{S}_{ij}$ as the weight and add their positional encodings ($\mathbf{P}_{\mathrm{img}}[i]$ and $\mathbf{P}_{\mathrm{txt}}[j]$) to preserve spatial information. The fused representation $\mathbf{f}_{i,j}$ is calculated as:

$$

\mathbf{f}_{i,j}=\frac{\mathbf{S}_{ij}\cdot\mathbf{v}_{i}+\mathbf{S}_{ij}\cdot\mathbf{t}_{j}}{2\mathbf{S}_{ij}+\epsilon}+0.5\cdot\left(\mathbf{P}_{\mathrm{img}}[i]+\mathbf{P}_{\mathrm{txt}}[j]\right),

$$

where $\epsilon$ is a small constant to prevent division by zero.
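
The fusion rule can be transcribed directly. `fuse_pair` is an illustrative name, and the positional encodings are passed in as precomputed vectors; this is an assumption, since their form is not specified here. Note that, as written, both tokens carry the same weight $\mathbf{S}_{ij}$. For $\mathbf{S}_{ij}>0$, the first term therefore equals $(\mathbf{v}_i+\mathbf{t}_j)/2$ up to $\epsilon$. The usage lines check this numerically.

```python
from typing import List, Sequence


def fuse_pair(
    v: Sequence[float], t: Sequence[float], s: float,
    p_img: Sequence[float], p_txt: Sequence[float], eps: float = 1e-8,
) -> List[float]:
    """f_ij = (s * v + s * t) / (2 * s + eps) + 0.5 * (P_img[i] + P_txt[j])."""
    return [
        (s * vi + s * ti) / (2 * s + eps) + 0.5 * (pe_i + pe_j)
        for vi, ti, pe_i, pe_j in zip(v, t, p_img, p_txt)
    ]


# Usage: the same pair fused with two different similarities gives (almost) the same token.
high = fuse_pair([1.0, 0.0], [0.0, 1.0], 0.95, [0.2, 0.0], [0.0, 0.2])
low = fuse_pair([1.0, 0.0], [0.0, 1.0], 0.30, [0.2, 0.0], [0.0, 0.2])
```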

For visual tokens and text tokens that do not find a matching counterpart, we retain them by directly adding their positional residuals:

$$

\begin{aligned}
\tilde{\mathbf{v}}_{i}&=\mathbf{v}_{i}+0.5\cdot\mathbf{P}_{\mathrm{img}}[i], &&\text{if } i\notin\mathcal{M}_{I},\\
\tilde{\mathbf{t}}_{j}&=\mathbf{t}_{j}+0.5\cdot\mathbf{P}_{\mathrm{txt}}[j], &&\text{if } j\notin\mathcal{M}_{T},
\end{aligned}

$$

where $\mathcal{M}$ denotes the set of matching pairs, and $\mathcal{M}_{I}$ and $\mathcal{M}_{T}$ denote the indices of matched visual and text tokens, respectively. The fused tokens $\mathbf{f}_{i,j}$ and the retained tokens $\tilde{\mathbf{v}}_{i}$ and $\tilde{\mathbf{t}}_{j}$ form the token set $\mathbf{Z}$ of size $N_{Z}$. We then apply average pooling to $\mathbf{Z}$ to obtain a single token representation $\mathbf{z}$:

$$

\mathbf{z}=\frac{1}{N_{Z}}\sum_{n=1}^{N_{Z}}\mathbf{Z}_{n},

$$

and pass it through a fully connected layer $\mathrm{F}$ to obtain the final cross-modal embedding vector $V_{Q}$ :

$$

V_{Q}=\operatorname{F}(\mathbf{z}).

$$
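
The remaining steps can be sketched as follows: retaining unmatched tokens, average pooling, and the fully connected projection. Here we read $\mathbf{Z}$ as the fused tokens plus the retained unmatched tokens; this is our interpretation. The fused tokens are passed in precomputed. For illustration, the learnable layer $\mathrm{F}$ is replaced by a fixed weight matrix and bias. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def assemble_tokens(
    visual: List[Vector], text: List[Vector],
    pos_img: List[Vector], pos_txt: List[Vector],
    pairs: List[Tuple[int, int]], fused: List[List[float]],
) -> List[List[float]]:
    """Z = fused tokens + unmatched tokens with half of their positional encoding added."""
    matched_visual = {i for i, _ in pairs}  # M_I
    matched_text = {j for _, j in pairs}    # M_T
    Z = [list(f) for f in fused]
    for i, v in enumerate(visual):
        if i not in matched_visual:
            Z.append([x + 0.5 * p for x, p in zip(v, pos_img[i])])
    for j, t in enumerate(text):
        if j not in matched_text:
            Z.append([x + 0.5 * p for x, p in zip(t, pos_txt[j])])
    return Z


def average_pool(Z: List[List[float]]) -> List[float]:
    """z = (1 / N_Z) * sum_n Z_n, computed per dimension."""
    n = len(Z)
    return [sum(column) / n for column in zip(*Z)]


def linear(z: Vector, weight: List[List[float]], bias: Vector) -> List[float]:
    """Fully connected layer: output_k = weight[k] . z + bias[k]."""
    return [sum(w * x for w, x in zip(row, z)) + b for row, b in zip(weight, bias)]


# Usage: v_0 and t_0 were merged into one fused token; v_1 is unmatched.
Z = assemble_tokens(
    visual=[[1.0, 0.0], [0.0, 1.0]], text=[[1.0, 1.0]],
    pos_img=[[0.0, 0.0], [0.2, 0.2]], pos_txt=[[0.0, 0.0]],
    pairs=[(0, 0)], fused=[[1.0, 0.5]],
)
z = average_pool(Z)
V_Q = linear(z, weight=[[1.0, 0.0], [0.0, 1.0]], bias=[0.0, 0.0])
```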

Learning Objective: Our training objective for the composed image retrieval (CIR) task is to align the joint feature representation $V_{Q}$ of the mixed-modal query $(r,m)$ with the feature representation $V_{T}$ of the target image $t$ . In each training iteration, we process a mini-batch of