A Survey on Knowledge Distillation of Large Language Models

大语言模型知识蒸馏综述

Abstract—In the era of Large Language Models (LLMs), Knowledge Distillation (KD) emerges as a pivotal methodology for transferring advanced capabilities from leading proprietary LLMs, such as GPT-4, to their open-source counterparts like LLaMA and Mistral. Additionally, as open-source LLMs flourish, KD plays a crucial role in both compressing these models, and facilitating their selfimprovement by employing themselves as teachers. This paper presents a comprehensive survey of KD’s role within the realm of LLM, highlighting its critical function in imparting advanced knowledge to smaller models and its utility in model compression and selfimprovement. Our survey is meticulously structured around three foundational pillars: algorithm, skill, and vertical iz ation – providing a comprehensive examination of KD mechanisms, the enhancement of specific cognitive abilities, and their practical implications across diverse fields. Crucially, the survey navigates the interaction between data augmentation (DA) and KD, illustrating how DA emerges as a powerful paradigm within the KD framework to bolster LLMs’ performance. By leveraging DA to generate context-rich, skillspecific training data, KD transcends traditional boundaries, enabling open-source models to approximate the contextual adeptness, ethical alignment, and deep semantic insights characteristic of their proprietary counterparts. This work aims to provide an insightful guide for researchers and practitioners, offering a detailed overview of current methodologies in knowledge distillation and proposing future research directions. By bridging the gap between proprietary and open-source LLMs, this survey underscores the potential for more accessible, efficient, and powerful AI solutions. Most importantly, we firmly advocate for compliance with the legal terms that regulate the use of LLMs, ensuring ethical and lawful application of KD of LLMs. An associated Github repository is available at https://github.com/Tebmer/Awesome-Knowledge-Distillation-of-LLMs.

摘要—在大语言模型(LLM)时代,知识蒸馏(KD)成为将GPT-4等领先专有大语言模型的高级能力迁移至LLaMA、Mistral等开源模型的关键方法。此外,随着开源大语言模型的蓬勃发展,KD在模型压缩和通过自我教学实现模型自提升方面都发挥着至关重要的作用。本文系统综述了KD在大语言模型领域的作用,重点阐述了其在向小型模型传递高级知识方面的核心功能,以及在模型压缩与自提升中的应用价值。我们的综述围绕算法、技能和垂直化三大支柱展开——全面考察了KD机制、特定认知能力的增强及其在不同领域的实际应用。尤为关键的是,本文探讨了数据增强(DA)与KD的协同作用,阐明了DA如何作为KD框架内的强大范式来提升大语言模型性能。通过利用DA生成富含上下文、技能特定的训练数据,KD突破了传统限制,使开源模型能够接近专有模型所具备的上下文适应能力、伦理对齐和深层语义理解。本工作旨在为研究者和实践者提供深度指南,详细梳理当前知识蒸馏方法体系,并展望未来研究方向。通过弥合专有与开源大语言模型之间的鸿沟,本综述揭示了构建更易获取、更高效、更强大AI解决方案的潜力。最重要的是,我们坚决主张遵守大语言模型使用的法律条款,确保LLM知识蒸馏的伦理与合法应用。相关Github仓库详见https://github.com/Tebmer/Awesome-Knowledge-Distillation-of-LLMs。

Index Terms—Large language models, knowledge distillation, data augmentation, skill distillation, supervised fine-tuning

索引术语—大语言模型 (Large Language Model)、知识蒸馏 (knowledge distillation)、数据增强 (data augmentation)、技能蒸馏 (skill distillation)、监督微调 (supervised fine-tuning)

1 INTRODUCTION

1 引言

In the evolving landscape of artificial intelligence (AI), proprietary 1 Large Language Models (LLMs) such as GPT3.5 (Ouyang et al., 2022), GPT-4 (OpenAI et al., 2023), Gemini (Team et al., 2023) and Claude2 have emerged as groundbreaking technologies, reshaping our understanding of natural language processing (NLP). These models, characterized by their vast scale and complexity, have unlocked new realms of possibility, from generating human-like text to offering sophisticated problem-solving capabilities. The core significance of these LLMs lies in their emergent abilities (Wei et al., 2022a,b; Xu et al., 2024a), a phenomenon where the models display capabilities beyond their explicit training objectives, enabling them to tackle a diverse array of tasks with remarkable proficiency. These models excel in understanding and generation, driving applications from creative generation to complex problem-solving (OpenAI et al., 2023; Liang et al., 2022). The potential of these models extends far beyond current applications, promising to revolutionize industries, augment human creativity, and redefine our interaction with technology.

在人工智能 (AI) 快速发展的背景下,诸如 GPT3.5 (Ouyang et al., 2022)、GPT-4 (OpenAI et al., 2023)、Gemini (Team et al., 2023) 和 Claude2 等专有大语言模型 (LLM) 已成为突破性技术,重塑了我们对自然语言处理 (NLP) 的认知。这些模型以其庞大的规模和复杂性为特征,开启了从生成类人文本到提供复杂问题解决能力的新可能性领域。这些大语言模型的核心意义在于其涌现能力 (Wei et al., 2022a,b; Xu et al., 2024a),即模型展现出超越其明确训练目标的能力,使其能够以卓越的熟练度处理各种任务。这些模型在理解和生成方面表现出色,推动了从创意生成到复杂问题解决的应用 (OpenAI et al., 2023; Liang et al., 2022)。这些模型的潜力远超当前应用范围,有望彻底改变行业、增强人类创造力并重新定义我们与技术的交互方式。

Despite the remarkable capabilities of proprietary LLMs like GPT-4 and Gemini, they are not without their shortcomings, particularly when viewed in light of the advantages offered by open-source models. A significant drawback is their limited accessibility and higher cost (OpenAI et al., 2023). These proprietary models often come with substantial usage fees and restricted access, making them less attainable for individuals and smaller organizations. In terms of data privacy and security (Wu et al., 2023a), using these proprietary LLMs frequently entails sending sensitive data to external servers, which raises concerns about data privacy and security. This aspect is especially critical for users handling confidential information. Moreover, the generalpurpose design of proprietary LLMs, while powerful, may not always align with the specific needs of niche applications. The constraints of accessibility, cost, and adaptability thus present significant challenges in leveraging the full potential of proprietary LLMs.

尽管像GPT-4和Gemini这样的专有大语言模型具有卓越能力,但它们并非没有缺点,尤其是与开源模型提供的优势相比。一个显著缺陷是其有限的可用性和较高成本 (OpenAI et al., 2023)。这些专有模型通常伴随着高昂的使用费用和受限的访问权限,使得个人和小型组织难以获取。在数据隐私和安全性方面 (Wu et al., 2023a),使用这些专有大语言模型往往需要将敏感数据发送到外部服务器,这引发了关于数据隐私和安全的担忧。对于处理机密信息的用户而言,这一点尤为关键。此外,专有大语言模型的通用设计虽然强大,但可能并不总是符合特定领域应用的需求。因此,可用性、成本和适应性的限制对充分发挥专有大语言模型的潜力构成了重大挑战。

In contrast to proprietary LLMs, open-source models like LLaMA (Touvron et al., 2023) and Mistral (Jiang et al., 2023a) bring several notable advantages. One of the primary benefits of open-source models is their accessibility and adaptability. Without the constraints of licensing fees or restrictive usage policies, these models are more readily available to a broader range of users, from individual researchers to smaller organizations. This openness fosters a more collaborative and inclusive AI research environment, encouraging innovation and diverse applications. Additionally, the customizable nature of open-source LLMs allows for more tailored solutions, addressing specific needs that generic, large-scale models may not meet.

与专有大语言模型相比,LLaMA (Touvron et al., 2023) 和 Mistral (Jiang et al., 2023a) 等开源模型具有多项显著优势。开源模型的主要优点之一是其可访问性和适应性。由于不受许可费用或严格使用政策的限制,这些模型更容易被更广泛的用户群体获取,从个体研究者到小型组织皆可受益。这种开放性促进了更具协作性和包容性的人工智能研究环境,鼓励创新和多样化应用。此外,开源大语言模型的可定制特性使其能够提供更贴合需求的解决方案,解决通用大规模模型可能无法满足的特定需求。

However, the open-source LLMs also have their own set of drawbacks, primarily stemming from their relatively limited scale and resources compared to their proprietary counterparts. One of the most significant limitations is the smaller model scale, which often results in lower performance on real-world tasks with a bunch of instructions (Zheng et al., 2023a). These models, with fewer parameters, may struggle to capture the depth and breadth of knowledge embodied in larger models like GPT-4. Additionally, the pre-training investment in these open-source models is typically less substantial. This reduced investment can lead to a narrower range of pre-training data, potentially limiting the models’ understanding and handling of diverse or specialized topics (Liang et al., 2022; Sun et al., 2024a). Moreover, open-source models often undergo fewer fine-tuning steps due to resource constraints. Fine-tuning is crucial for optimizing a model’s performance for specific tasks or industries, and the lack thereof can hinder the model’s effectiveness in specialized applications. This limitation becomes particularly evident when these models are compared to the highly fine-tuned proprietary LLMs, which are often tailored to excel in a wide array of complex scenarios (OpenAI et al., 2023).

然而,开源大语言模型也存在固有缺陷,主要源于其规模与资源相较于闭源模型的局限性。最显著的制约在于模型规模较小,这往往导致其在处理复杂指令的现实任务时表现欠佳 (Zheng et al., 2023a) 。这类参数量较少的模型难以企及GPT-4等大型模型所涵盖的知识深度与广度。此外,开源模型通常获得的预训练投入相对有限,可能导致预训练数据覆盖面较窄,进而制约模型对多元化或专业化主题的理解与处理能力 (Liang et al., 2022; Sun et al., 2024a) 。由于资源限制,开源模型的微调步骤也往往较少,而微调对于优化模型在特定任务或行业的性能至关重要。这种缺陷在与经过深度优化的闭源大语言模型对比时尤为明显——后者通常被精心调校以适应各种复杂场景 (OpenAI et al., 2023) 。

Primarily, recognizing the disparities between proprietary and open-source LLMs, KD techniques have surged as a means to bridge the performance gap between these models (Gou et al., 2021; Gupta and Agrawal, 2022). Knowledge distillation, in this context, involves leveraging the more advanced capabilities of leading proprietary models like GPT-4 or Gemini as a guiding framework to enhance the competencies of open-source LLMs. This process is akin to transferring the ‘knowledge’ of a highly skilled teacher to a student, wherein the student (e.g., open-source LLM) learns to mimic the performance characteristics of the teacher (e.g., proprietary LLM). Compared to traditional knowledge distillation algorithms (Gou et al., 2021), data augmentation (DA) (Feng et al., 2021) has emerged as a prevalent paradigm to achieve knowledge distillation of LLMs, where a small seed of knowledge is used to prompt the LLM to generate more data with respect to a specific skill or domain (Taori et al., 2023). Secondly, KD still retains its fundamental role in compressing LLMs, making them more efficient without significant loss in performance. (Gu et al., 2024; Agarwal et al., 2024). More recently, the strategy of employing open-source LLMs as teachers for their own self-improvement has emerged as a promising approach, enhancing their capabilities significantly (Yuan et al., 2024a; Chen et al., 2024a). Figure 1 provides an illustration of these three key roles played by KD in the context of LLMs.

首先,认识到专有大语言模型与开源大语言模型之间的差异后,知识蒸馏(KD)技术作为弥合两者性能差距的手段迅速兴起(Gou等人,2021;Gupta和Agrawal,2022)。在此背景下,知识蒸馏利用GPT-4或Gemini等领先专有模型的先进能力作为指导框架,来提升开源大语言模型的性能。这一过程类似于将高技能教师的"知识"传授给学生,其中学生(如开源大语言模型)学习模仿教师(如专有大语言模型)的性能特征。与传统知识蒸馏算法(Gou等人,2021)相比,数据增强(DA)(Feng等人,2021)已成为实现大语言模型知识蒸馏的流行范式,即利用少量知识种子促使大语言模型生成特定技能或领域的更多数据(Taori等人,2023)。其次,知识蒸馏仍保留其在压缩大语言模型方面的基本作用,使其更高效且性能无明显损失(Gu等人,2024;Agarwal等人,2024)。最近,采用开源大语言模型作为教师进行自我提升的策略成为一种有前景的方法,显著增强了其能力(Yuan等人,2024a;Chen等人,2024a)。图1展示了知识蒸馏在大语言模型中的这三个关键作用。

A key aspect of the knowledge distillation is the enhancement of skills such as advanced context following (e.g., in-context learning (Huang et al., 2022a) and instruction following (Taori et al., 2023)), improved alignment with user intents (e.g., human values/principles (Cui et al., 2023a), and thinking patterns like chain-of-thought (CoT) (Mukherjee et al., 2023)), and NLP task specialization (e.g., semantic understanding (Ding et al., 2023a), and code generation (Chaudhary, 2023)). These skills are crucial for the wide array of applications that LLMs are expected to perform, ranging from casual conversations to complex problem-solving in specialized domains. For instance, in vertical domains like healthcare (Wang et al., 2023a), law (LAW, 2023), or science (Zhang et al., 2024), where accuracy and context-specific knowledge are paramount, knowledge distillation allows open-source models to significantly improve their performance by learning from the proprietary models that have been extensively trained and fine-tuned in these areas.

知识蒸馏的一个关键方面在于提升多项技能,包括高级上下文跟随(例如上下文学习 [Huang et al., 2022a] 和指令跟随 [Taori et al., 2023])、增强用户意图对齐(如人类价值观/原则 [Cui et al., 2023a] 和思维链 (CoT) 等思考模式 [Mukherjee et al., 2023]),以及 NLP 任务专项能力(例如语义理解 [Ding et al., 2023a] 和代码生成 [Chaudhary, 2023])。这些技能对大语言模型应对从日常对话到专业领域复杂问题解决等广泛场景至关重要。以医疗 [Wang et al., 2023a]、法律 [LAW, 2023] 或科学 [Zhang et al., 2024] 等垂直领域为例,当准确性和领域知识成为核心需求时,知识蒸馏能让开源模型通过向经过专业训练的商业模型学习,显著提升性能。

Fig. 1: KD plays three key roles in LLMs: 1) Primarily enhancing capabilities, 2) offering traditional compression for efficiency, and 3) an emerging trend of self-improvement via self-generated knowledge.

图 1: 知识蒸馏 (KD) 在大语言模型中发挥三大关键作用:1) 主要提升模型能力,2) 提供传统压缩方案以提高效率,3) 通过自生成知识实现自我提升的新兴趋势。

The benefits of knowledge distillation in the era of LLMs are multifaceted and transformative (Gu et al., 2024). Through a suite of distillation techniques, the gap between proprietary and open-source models is significantly narrowed (Chiang et al., 2023; Xu et al., 2023a) and even filled (Zhao et al., 2023a). This process not only streamlines computational requirements but also enhances the environmental sustainability of AI operations, as open-source models become more proficient with lesser computational over- head. Furthermore, knowledge distillation fosters a more accessible and equitable AI landscape, where smaller entities and individual researchers gain access to state-of-the-art capabilities, encouraging wider participation and diversity in AI advancements. This democratization of technology leads to more robust, versatile, and accessible AI solutions, catalyzing innovation and growth across various industries and research domains.

在大语言模型(LLM)时代,知识蒸馏(Knowledge Distillation)的优势具有多面性和变革性(Gu et al., 2024)。通过一系列蒸馏技术,专有模型与开源模型之间的差距被显著缩小(Chiang et al., 2023; Xu et al., 2023a)甚至消除(Zhao et al., 2023a)。这一过程不仅简化了计算需求,还提升了AI运行的环境可持续性——开源模型能以更低的计算开销实现更高性能。此外,知识蒸馏促进了更普惠、更公平的AI生态,使小型机构和独立研究者也能获得尖端能力,从而推动AI发展更广泛的参与度和多样性。这种技术民主化催生出更健壮、通用且易获取的AI解决方案,为各行业和研究领域注入创新动能与发展活力。

The escalating need for a comprehensive survey on the knowledge distillation of LLMs stems from the rapidly evolving landscape of AI (OpenAI et al., 2023; Team et al., 2023) and the increasing complexity of these models. As AI continues to penetrate various sectors, the ability to efficiently and effectively distill knowledge from proprietary LLMs to open-source ones becomes not just a technical aspiration but a practical necessity. This need is driven by the growing demand for more accessible, cost-effective, and adaptable AI solutions that can cater to a diverse range of applications and users. A survey in this field is vital for synthesizing the current methodologies, challenges, and breakthroughs in knowledge distillation. It may serve as a beacon for researchers and practitioners alike, guiding them to distill complex AI capabilities into more manageable and accessible forms. Moreover, such a survey can illuminate the path forward, identifying gaps in current techniques and proposing directions for future research.

对大语言模型知识蒸馏进行全面综述的需求日益增长,这源于AI领域的快速发展 (OpenAI等, 2023; Team等, 2023) 以及这些模型日益增加的复杂性。随着AI持续渗透各行各业,将知识从专有大语言模型高效蒸馏至开源模型的能力,已不仅是一项技术追求,更是实际需求。这一需求受到以下因素的推动:市场对更易获取、更具成本效益且适应性强的AI解决方案的需求不断增长,这些方案需要满足多样化应用场景和用户群体。

该领域的综述对于梳理当前知识蒸馏的方法论、挑战与突破至关重要。它能为研究者和从业者指明方向,帮助他们将复杂的AI能力蒸馏为更易管理和使用的形式。此外,这类综述能够揭示未来发展方向,指出现有技术的不足,并为后续研究提出建议路径。

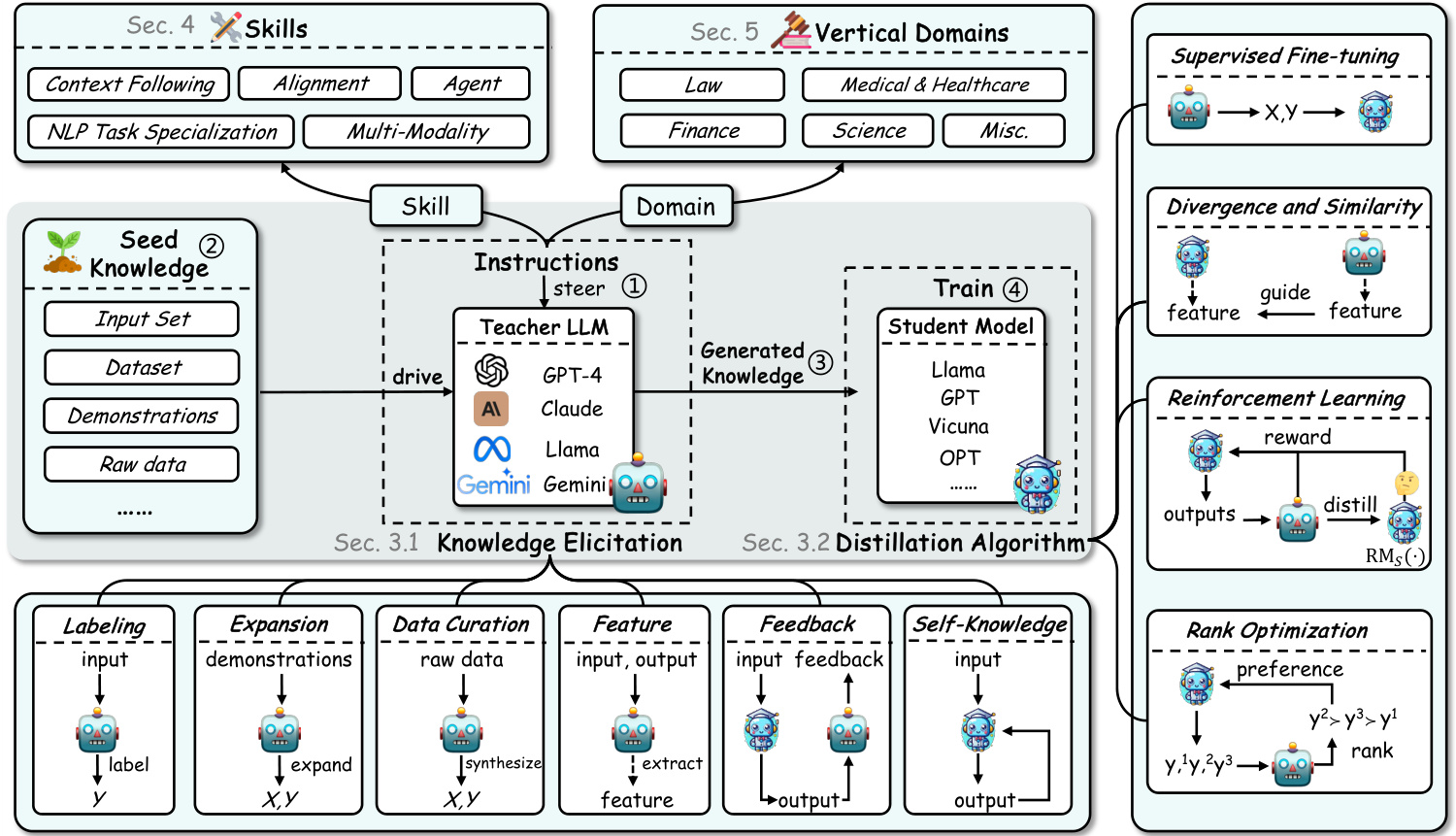

Fig. 2: An overview of this survey on knowledge distillation of large language models. Note that ‘Section’ is abbreviated as ‘Sec.’ in this figure. $\mathrm{RM}_{S}(\cdot)$ denotes the student reward model. denote the steps in KD of LLMs.

图 2: 大语言模型知识蒸馏综述概览。注意图中将"Section"缩写为"Sec."。$\mathrm{RM}_{S}(\cdot)$表示学生奖励模型。表示大语言模型知识蒸馏的步骤。

Survey Organization. The remainder of this survey is organized into several comprehensive sections, each designed to offer a deep dive into the multifaceted aspects of knowledge distillation within the realm ofLLMs. Following this introduction, §2 provides a foundational overview of knowledge distillation, comparing traditional techniques with those emerging in the era of LLMs and highlighting the role of data augmentation (DA) in this context. §3 delves into the approaches to elicit knowledge from teacher LLMs and core distillation algorithms, examining methods from supervised fine-tuning to more complex strategies involving divergence and similarity, reinforcement learning, and ranking optimization. Then, $\S4$ focuses on skill distillation, exploring how student models can be enhanced to improve context understanding, alignment with user intentions, and performance across a variety of NLP tasks. This includes discussions on natural language understanding (NLU), generation (NLG), information retrieval, recommendation systems, and the evaluation of text generation. In §5, we venture into domain-specific vertical distillation, showcasing how knowledge distillation techniques are applied within specialized fields such as law, healthcare, finance, and science, illustrating the practical implications and transformative impact of these approaches. The survey suggests open problems in §6, identifying current challenges and gaps in knowledge distillation research that offer opportunities for future work. Finally, the conclusion and discussion in $^{\S7}$ synthesize the insights gained, reflecting on the implications for the broader AI and NLP research community and proposing directions for future research. Figure 2 shows an overview of this survey.

调查结构。本综述的其余部分分为几个综合章节,每章旨在深入探讨大语言模型(LLM)领域中知识蒸馏的多方面内容。在引言之后,第2节提供了知识蒸馏的基础概述,比较了传统技术与大语言模型时代新兴技术,并重点阐述了数据增强(DA)在此背景下的作用。第3节深入探讨从教师大语言模型中提取知识的方法和核心蒸馏算法,研究范围从监督微调到涉及散度与相似性、强化学习和排序优化等更复杂的策略。接着第4节聚焦技能蒸馏,探索如何增强学生模型以提升上下文理解、用户意图对齐及各类NLP任务表现,涵盖自然语言理解(NLU)、生成(NLG)、信息检索、推荐系统以及文本生成评估等讨论。第5节深入特定领域垂直蒸馏,展示知识蒸馏技术在法律、医疗、金融和科学等专业领域的应用,阐明这些方法的实际意义和变革性影响。第6节提出开放性问题,指出当前知识蒸馏研究中的挑战与空白,为未来工作提供机遇。最后第7节的结论与讨论整合所得洞见,反思对更广泛AI和NLP研究界的影响,并提出未来研究方向。图2展示了本综述的概览结构。

2 OVERVIEW

2 概述

2.1 Comparing Traditional Recipe

2.1 传统食谱对比

The concept of knowledge distillation in the field of AI and deep learning (DL) refers to the process of transferring knowledge from a large, complex model (teacher) to a smaller, more efficient model (student) (Gou et al., 2021). This technique is pivotal in mitigating the challenges posed by the computational demands and resource constraints of deploying large-scale models in practical applications.

AI和深度学习(DL)领域中的知识蒸馏(Knowledge Distillation)概念,指的是将知识从庞大复杂的教师模型(teacher)迁移到更精简高效的学生模型(student)的过程 (Gou et al., 2021)。该技术对于缓解实际应用中部署大规模模型带来的计算需求和资源限制等挑战具有关键作用。

Historically, knowledge distillation techniques, prior to the era of LLMs, primarily concentrated on transferring knowledge from complex, often cumbersome neural networks to more compact and efficient architectures (Sanh et al., 2019; Kim and Rush, 2016). This process was largely driven by the need to deploy machine learning models in resource-constrained environments, such as mobile devices or edge computing platforms, where the computational power and memory are limited. The focus was predominantly on ad-hoc neural architecture selection and training objectives tailored for single tasks. These earlier methods involved training a smaller student network to mimic the output of a larger teacher network, often through techniques like soft target training, where the student learns from the softened softmax output of the teacher. Please refer to the survey (Gou et al., 2021) for more details on general knowledge distillation techniques in AI and DL.

历史上,在大语言模型(LLM)时代之前,知识蒸馏技术主要集中于将复杂且通常笨重的神经网络知识迁移到更紧凑高效的架构中(Sanh等人,2019;Kim和Rush,2016)。这一过程主要受限于在资源受限环境(如移动设备或边缘计算平台)中部署机器学习模型的需求,因为这些环境的计算能力和内存有限。研究重点主要集中在针对单一任务定制的临时神经网络架构选择和训练目标上。这些早期方法通过训练较小的学生网络来模仿较大教师网络的输出,通常采用软目标训练等技术,使学生从教师软化后的softmax输出中学习。更多关于人工智能和深度学习(DL)中通用知识蒸馏技术的细节,请参阅综述(Gou等人,2021)。

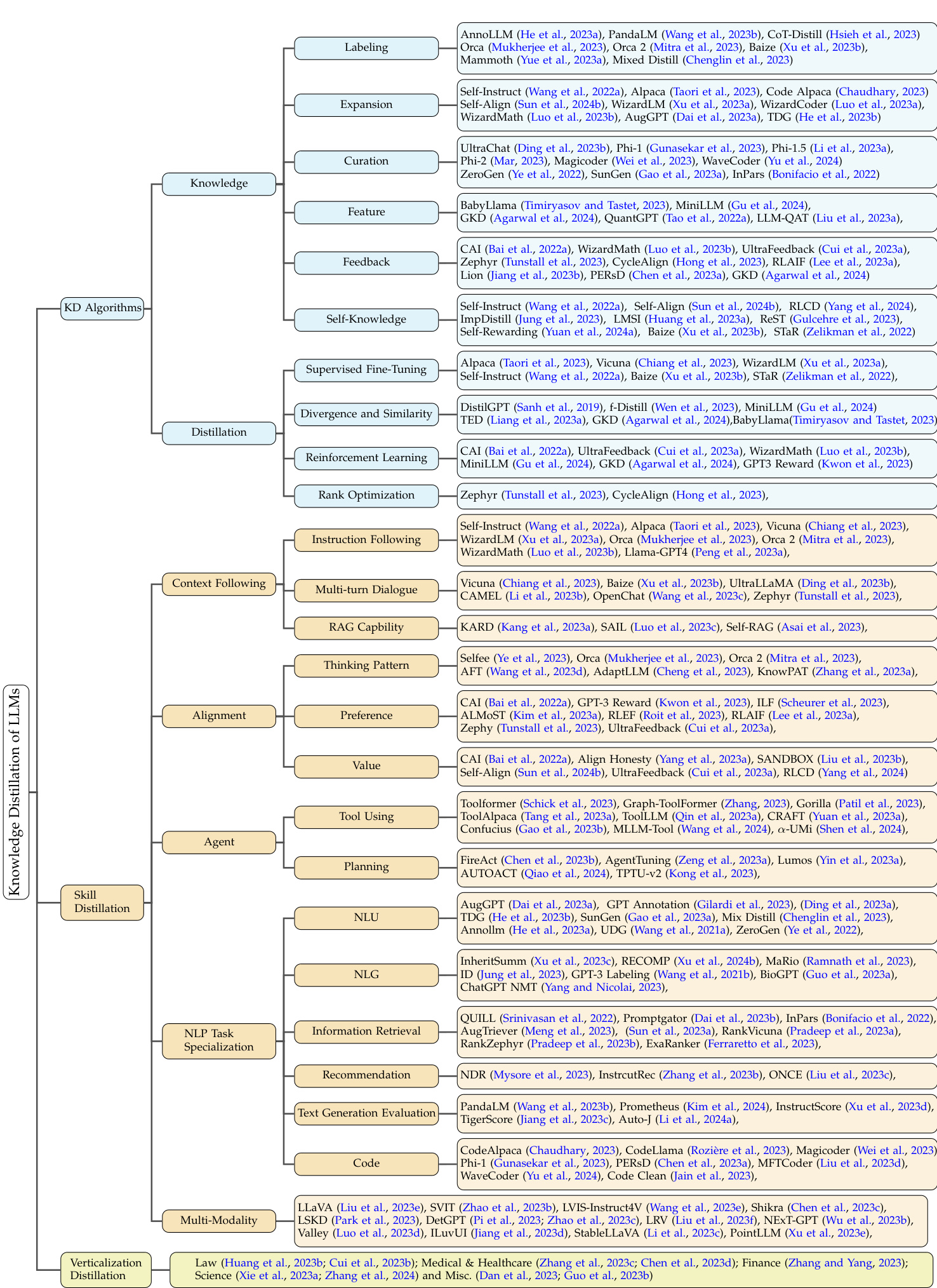

Fig. 3: Taxonomy of Knowledge Distillation of Large Language Models. The detailed taxonomy of Vertical iz ation Distillation is shown in Figure 7.

图 3: 大语言模型知识蒸馏分类体系。垂直化蒸馏的详细分类见图 7。

In contrast, the advent of LLMs has revolutionized the knowledge distillation landscape. The current era of knowledge distillation in LLMs shifts the focus from mere architecture compression to knowledge eli citation and transfer (Taori et al., 2023; Chaudhary, 2023; Tunstall et al., 2023). This paradigm change is largely due to the expansive and deep-seated knowledge that LLMs like GPT-4 and Gemini possess. And the inaccessible parameters of LLMs make it hard to compress them by using pruning (Han et al., 2016) or quantization (Liu et al., 2023a) techniques. Unlike the earlier era, where the goal was to replicate the output behavior of the teacher model or reduce the model size, the current focus in LLM-based knowledge distillation is to elicit the specific knowledge these models have.

相比之下,大语言模型(LLM)的出现彻底改变了知识蒸馏的格局。当前LLM知识蒸馏的时代,重点已从单纯的结构压缩转向知识提取与迁移 (Taori et al., 2023; Chaudhary, 2023; Tunstall et al., 2023)。这一范式转变主要归功于GPT-4和Gemini等大语言模型所具备的广博而深层的知识。由于LLM参数不可访问,使得通过剪枝(Han et al., 2016)或量化(Liu et al., 2023a)技术来压缩它们变得困难。与早期目标不同——当时旨在复现教师模型的输出行为或缩小模型规模,当前基于LLM的知识蒸馏更关注提取这些模型所拥有的特定知识。

The key to this modern approach lies in heuristic and carefully designed prompts, which are used to elicit specific knowledge (Ding et al., 2023b) or capabilities (Chaudhary, 2023) from the LLMs. These prompts are crafted to tap into the LLM’s understanding and capabilities in various domains, ranging from natural language understanding (He et al., 2023a) to more complex cognitive tasks like reasoning (Hsieh et al., 2023) and problem-solving (Qiao et al., 2024). The use of prompts as a means of knowledge elicitation offers a more flexible and dynamic approach to distillation. It allows for a more targeted extraction of knowledge, focusing on specific skills or domains of interest. This method is particularly effective in harnessing the emergent abilities of LLMs, where the models exhibit capabilities beyond their explicit training objectives.

这一现代方法的关键在于启发式精心设计的提示词(prompt),它们被用来从大语言模型中提取特定知识(Ding et al., 2023b)或能力(Chaudhary, 2023)。这些提示词经过精心设计,旨在挖掘大语言模型在多个领域的理解能力,涵盖从自然语言理解(He et al., 2023a)到推理(Hsieh et al., 2023)和问题解决(Qiao et al., 2024)等更复杂的认知任务。将提示词作为知识提取手段,为蒸馏过程提供了更灵活动态的途径,能够更有针对性地提取特定技能或目标领域的知识。这种方法在利用大语言模型涌现能力方面尤为有效,这些能力往往超出模型的显式训练目标。

Furthermore, this era of knowledge distillation also emphasizes the transfer of more abstract qualities such as reasoning patterns (Mitra et al., 2023), preference alignment (Cui et al., 2023a), and value alignment (Sun et al., 2024b). This is in stark contrast to the earlier focus on output replication (Taori et al., 2023), indicating a shift towards a more holistic and comprehensive transfer of cognitive capabilities. The current techniques involve not just the replication of outputs, but also the emulation of the thought processes (Mitra et al., 2023) and decision-making (Asai et al., 2023) patterns of the teacher model. This involves complex strategies like chain-of-thought prompting, where the student model is trained to learn the reasoning process of the teacher, thereby enhancing its problem-solving and decision-making capabilities.

此外,这一知识蒸馏时代还强调更抽象特质的迁移,例如推理模式 (Mitra et al., 2023)、偏好对齐 (Cui et al., 2023a) 和价值对齐 (Sun et al., 2024b)。这与早期专注于输出复现 (Taori et al., 2023) 形成鲜明对比,标志着向更全面认知能力迁移的转变。当前技术不仅涉及输出复现,还包括对教师模型思维过程 (Mitra et al., 2023) 和决策模式 (Asai et al., 2023) 的模拟。这涉及思维链提示等复杂策略,即训练学生模型学习教师的推理过程,从而增强其问题解决和决策能力。

2.2 Relation to Data Augmentation (DA)

2.2 与数据增强 (DA) 的关系

In the era of LLMs, Data Augmentation (DA) (Wang et al., 2022a; Ye et al., 2022) emerges as a critical paradigm integral to the process of knowledge distillation. Unlike traditional DA techniques such as paraphrasing (Gangal et al., 2022) or back-translation (Longpre et al., 2019), which primarily aim at expanding the training dataset in a somewhat mechanical manner, DA within the context of LLMs focuses on the generation of novel, context-rich training data tailored to specific domains and skills.

在大语言模型 (LLM) 时代,数据增强 (Data Augmentation, DA) [Wang et al., 2022a; Ye et al., 2022] 已成为知识蒸馏过程中不可或缺的关键范式。与传统 DA 技术 (如复述 [Gangal et al., 2022] 或回译 [Longpre et al., 2019]) 主要机械式扩展训练数据集不同,大语言模型语境下的 DA 专注于生成针对特定领域和技能、具有丰富上下文的新颖训练数据。

The relationship between DA and KD in LLMs is both symbiotic and foundational. By leveraging a set of seed knowledge, KD employs DA to prompt LLMs to produce explicit data that encapsulates specific skills or domain expertise (Chaudhary, 2023; West et al., 2022). This method stands out as a potent mechanism for bridging the knowledge and capability gap between proprietary and opensource models. Through DA, LLMs are prompted to create targeted, high-quality datasets that are not merely larger in volume but are also rich in diversity and specificity. This approach enables the distillation process to be more effective, ensuring that the distilled models not only replicate the teacher model’s output behavior but also embody its deep-seated understanding and cognitive strategies.

大语言模型中数据增强(DA)与知识蒸馏(KD)的关系既是共生的,也是基础性的。通过利用一组种子知识,KD采用DA来促使大语言模型生成包含特定技能或领域专业知识的显式数据(Chaudhary, 2023; West et al., 2022)。这种方法作为一种强大的机制脱颖而出,能够弥合专有模型与开源模型之间的知识和能力差距。通过DA,大语言模型被引导创建有针对性的高质量数据集,这些数据集不仅在数量上更大,而且在多样性和特异性方面也很丰富。这种方法使蒸馏过程更加有效,确保蒸馏后的模型不仅复制教师模型的输出行为,还体现其深层次的理解和认知策略。

DA acts as a force multiplier, enabling the distilled models to acquire and refine capabilities that would otherwise require exponentially larger datasets and computational resources. It facilitates a more effective transfer of knowledge, focusing on the qualitative aspects of learning rather than quantitative expansion. This strategic use of DA within KD processes underscores a pivotal shift towards a more efficient, sustainable, and accessible approach to harnessing the power of LLMs. It empowers open-source models with the ability to approximate the contextual adeptness, ethical alignment, and deep semantic insights characteristic of their proprietary counterparts, thereby democratizing access to advanced AI capabilities and fostering innovation across a broader spectrum of applications and users.

数据增强(Data Augmentation, DA)作为效能倍增器,使蒸馏模型能够获取并完善那些原本需要指数级更大数据集和计算资源才能获得的能力。它促进了更高效的知识迁移,聚焦于学习质量而非数量扩张。这种在知识蒸馏(Knowledge Distillation, KD)过程中对DA的策略性运用,标志着向更高效、可持续和普惠化利用大语言模型(LLM)能力的关键转变。它使开源模型能够逼近专有模型特有的语境适应力、伦理对齐和深层语义洞察力,从而推动先进AI技术的民主化应用,并在更广泛的应用场景和用户群体中激发创新。

2.3 Survey Scope

2.3 调查范围

Building on the discussions introduced earlier, this survey aims to comprehensively explore the landscape of knowledge distillation within the context of LLMs, following a meticulously structured taxonomy as in Figure 3. The survey’s scope is delineated through three primary facets: KD Algorithms, Skill Distillation, and Vertical iz ation Distillation. Each facet encapsulates a range of subtopics and methodologies. It’s important to note that KD algorithms provide the technical foundations for skill distillation and vertical iz ation distillation.

基于前文的讨论,本综述旨在全面探索大语言模型(LLM)背景下的知识蒸馏(Knowledge Distillation)领域,并遵循图3所示的精细分类体系。研究范围通过三个主要维度界定:KD算法(KD Algorithms)、技能蒸馏(Skill Distillation)和垂直领域蒸馏(Verticalization Distillation),每个维度涵盖若干子主题与方法论。需特别指出的是,KD算法为技能蒸馏和垂直领域蒸馏提供了技术基础。

KD Algorithms. This segment focuses on the technical foundations and methodologies of knowledge distillation. It includes an in-depth exploration of the processes involved in constructing knowledge from teacher models (e.g., proprietary LLMs) and integrating this knowledge into student models (e.g., open-source LLMs). Under the umbrella of ‘knowledge’, we delve into strategies such as labeling (Hsieh et al., 2023), expansion (Taori et al., 2023), curation (Gunasekar et al., 2023), feature understanding (Agarwal et al., 2024), feedback mechanisms (Tunstall et al., 2023), and selfknowledge generation (Wang et al., 2022a). This exploration seeks to uncover the various ways in which knowledge can be identified, expanded, and curated for effective distillation. The ‘distillation’ subsection examines learning approaches like supervised fine-tuning (SFT) (Wang et al., 2022a), divergence minimization (Agarwal et al., 2024), reinforcement learning techniques (Cui et al., 2023a), and rank optimization strategies (Tunstall et al., 2023). Together, these techniques demonstrate how KD enables open-source models to obtain knowledge from proprietary ones.

KD算法。本节重点探讨知识蒸馏的技术基础和方法论,包括深入分析从教师模型(如专有大语言模型)构建知识并将其整合到学生模型(如开源大语言模型)的过程。在"知识"框架下,我们研究了标注策略(Hsieh等人,2023)、扩展方法(Taori等人,2023)、知识筛选(Gunasekar等人,2023)、特征理解(Agarwal等人,2024)、反馈机制(Tunstall等人,2023)以及自知识生成(Wang等人,2022a),旨在揭示知识识别、扩展与筛选的有效蒸馏途径。"蒸馏"部分则探讨了监督微调(SFT)(Wang等人,2022a)、差异最小化(Agarwal等人,2024)、强化学习技术(Cui等人,2023a)和排序优化策略(Tunstall等人,2023)等学习方法。这些技术共同展示了知识蒸馏如何使开源模型从专有模型中获取知识。

Skill Distillation. This facet examines the specific competencies and capabilities enhanced through KD. It encompasses detailed discussions on context following (Taori et al., 2023; Luo et al., 2023c), with subtopics like instruction following and retrieval-augmented generation (RAG) Capability. In the realm of alignment (Mitra et al., 2023; Tunstall et al., 2023), the survey investigates thinking patterns, persona/preference modeling, and value alignment. The ‘agent’ category delves into skills such as Tool Using and Planning. NLP task specialization (Dai et al., 2023a; Jung et al., 2023; Chaudhary, 2023) is scrutinized through lenses like natural language understanding (NLU), natural language generation (NLG), information retrieval, recommendation systems, text generation evaluation, and code generation. Finally, the survey addresses multi-modality (Liu et al., 2023e; Zhao et al., 2023b), exploring how KD enhances LLMs’ ability to integrate multiple forms of input.

技能蒸馏 (Skill Distillation)。这一维度探讨通过知识蒸馏 (KD) 强化的具体能力范畴,包含对上下文跟随 (Taori et al., 2023; Luo et al., 2023c) 的详细讨论,其子主题涵盖指令跟随和检索增强生成 (RAG) 能力。在对齐领域 (Mitra et al., 2023; Tunstall et al., 2023),研究调查了思维模式、角色/偏好建模和价值对齐。"智能体"类别则深入探讨工具使用和规划等技能。通过自然语言理解 (NLU)、自然语言生成 (NLG)、信息检索、推荐系统、文本生成评估和代码生成等视角,审视NLP任务专项能力 (Dai et al., 2023a; Jung et al., 2023; Chaudhary, 2023)。最后,研究讨论了多模态能力 (Liu et al., 2023e; Zhao et al., 2023b),探索知识蒸馏如何增强大语言模型整合多形式输入的能力。

Vertical iz ation Distillation. This section assesses the application of KD across diverse vertical domains, offering insights into how distilled LLMs can be tailored for specialized fields such as Law (LAW, 2023), Medical & Healthcare (Wang et al., 2023a), Finance (Zhang and Yang, 2023), Science (Zhang et al., 2024), among others. This exploration not only showcases the practical implications of KD techniques but also highlights their transformative impact on domain-specific AI solutions.

垂直领域蒸馏。本节评估了知识蒸馏 (Knowledge Distillation, KD) 在不同垂直领域的应用,探讨了如何为大语言模型定制法律 (LAW, 2023)、医疗健康 (Wang et al., 2023a)、金融 (Zhang and Yang, 2023)、科学 (Zhang et al., 2024) 等专业领域蒸馏方案。该研究不仅展示了知识蒸馏技术的实际应用价值,更凸显了其对垂直领域AI解决方案的变革性影响。

Through these facets, this survey provides a comprehensive analysis of KD in LLMs, guiding researchers and practitioners through methodologies, challenges, and opport unities in this rapidly evolving domain.

通过这些方面,本综述对大语言模型(LLM)中的知识蒸馏(KD)进行了全面分析,为研究人员和实践者在这个快速发展的领域中提供了方法论、挑战和机遇的指导。

Declaration. This survey represents our earnest effort to provide a comprehensive and insightful overview of knowledge distillation techniques applied to LLMs, focusing on algorithms, skill enhancement, and domain-specific applications. Given the vast and rapidly evolving nature of this field, especially with the prevalent practice of eliciting knowledge from training data across academia, we acknowledge that this manuscript may not encompass every pertinent study or development. Nonetheless, it endeavors to introduce the foundational paradigms of knowledge distillation, highlighting key methodologies and their impacts across a range of applications.

声明:本综述旨在全面深入地概述大语言模型 (LLM) 知识蒸馏技术,聚焦算法、技能增强及领域应用。鉴于该领域发展迅猛且学术界普遍存在从训练数据中提取知识的实践,我们承认本文可能无法涵盖所有相关研究或进展。尽管如此,我们仍致力于阐释知识蒸馏的基础范式,重点分析核心方法及其在多类应用中的影响。

2.4 Distillation Pipeline in LLM Era

2.4 大语言模型 (Large Language Model) 时代的蒸馏流程

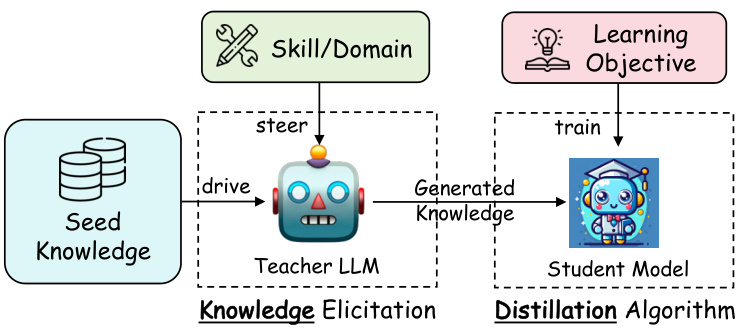

Fig. 4: An illustration of a general pipeline to distill knowledge from a large language model to a student model.

图 4: 大语言模型向学生模型蒸馏知识的通用流程示意图。

The general distillation pipeline of LLMs is a structured and methodical process aimed at transferring knowledge from a sophisticated teacher model to a less complex student model. This pipeline is integral for leveraging the advanced capabilities of models like GPT-4 or Gemini in more accessible and efficient open-source counterparts. The outline of this pipeline can be broadly categorized into four distinct stages, each playing a crucial role in the successful distillation of knowledge. An illustration is shown in Figure 4. The detailed pipeline could also be seen in Figure 2.

大语言模型 (LLM) 的通用蒸馏流程是一个结构化、系统化的过程,旨在将复杂教师模型的知识迁移至更精简的学生模型。该流程对于将 GPT-4 或 Gemini 等先进模型的能力移植到更易获取、高效的开源替代品中至关重要。该流程可大致划分为四个关键阶段,每个阶段都在知识蒸馏过程中发挥重要作用。如图 4 所示,具体流程亦可参见图 2。

I. Target Skill or Domain Steering Teacher LLM. The first stage involves directing the teacher LLM towards a specific target skill or domain. This is achieved through carefully crafted instructions or templates that guide the LLM’s focus. These instructions are designed to elicit responses that demonstrate the LLM’s proficiency in a particular area, be it a specialized domain like healthcare or law, or a skill such as reasoning or language understanding.

I. 目标技能或领域引导教师大语言模型

第一阶段涉及将教师大语言模型引导至特定目标技能或领域。这通过精心设计的指令或模板实现,以指导大语言模型的关注点。这些指令旨在激发大语言模型在特定领域(如医疗或法律等专业领域)或技能(如推理或语言理解)中展现其熟练度的响应。

II. Seed Knowledge as Input. Once the target area is defined, the next step is to feed the teacher LLM with seed knowledge. This seed knowledge typically comprises a small dataset or specific data clues relevant to the elicit skill or domain knowledge from the teacher LLM. It acts as a catalyst, prompting the teacher LLM to generate more elaborate and detailed outputs based on this initial information. The seed knowledge is crucial as it provides a foundation upon which the teacher model can build and expand, thereby creating more comprehensive and in-depth knowledge examples.

II. 种子知识作为输入。定义目标领域后,下一步是向教师大语言模型(LLM)输入种子知识。这些种子知识通常包含与目标技能或领域知识相关的小型数据集或特定数据线索,其作用是作为催化剂,促使教师大语言模型基于初始信息生成更详尽细致的输出。种子知识至关重要,它为教师模型提供了可扩展的基础,从而生成更全面深入的知识示例。

III. Generation of Distillation Knowledge. In response to the seed knowledge and steering instructions, the teacher LLM generates knowledge examples. These examples are predominantly in the form of question-and-answer (QA) dialogues or narrative explanations, aligning with the natural language processing/understanding capabilities of the LLM. In certain specialized cases, the outputs may also include logits or hidden features, although this is less common due to the complexity and specific requirements of such data forms. The generated knowledge examples constitute the core of the distillation knowledge, encapsulating the advanced understanding and skills of the teacher LLM.

III. 蒸馏知识的生成。针对种子知识和引导指令,教师大语言模型生成知识示例。这些示例主要以问答(QA)对话或叙述性解释的形式呈现,与大语言模型的自然语言处理/理解能力相匹配。在某些专业场景中,输出也可能包含logits或隐藏特征,但由于此类数据形式的复杂性和特殊要求,这种情况较为少见。生成的知识示例构成了蒸馏知识的核心,封装了教师大语言模型的高级理解能力和技能。

IV. Training the Student Model with a Specific Learning Objective. The final stage involves the utilization of the generated knowledge examples to train the student model. This training is guided by a loss function that aligns with the learning objectives. The loss function quantifies the student model’s performance in replicating or adapting the knowledge from the teacher model. By minimizing this loss, the student model learns to emulate the target skills or domain knowledge of the teacher, thereby acquiring similar capabilities. The process involves iterative ly adjusting the student model’s parameters to reduce the discrepancy between its outputs and those of the teacher model, ensuring the effective transfer of knowledge.

四、基于特定学习目标训练学生模型

最终阶段利用生成的知识样本训练学生模型,训练过程由符合学习目标的损失函数 (loss function) 指导。该损失函数量化学生模型在复制或适配教师模型知识时的表现,通过最小化损失值使学生模型学会模仿教师的目标技能或领域知识,从而获得相近能力。该过程通过迭代调整学生模型参数来缩小其输出与教师模型输出的差异,确保知识迁移的有效性。

In essential, the above four stages can be abstracted as two formulations. The first formulation represents the process of eliciting knowledge:

本质上,上述四个阶段可以抽象为两种表述。第一种表述代表知识获取的过程:

$$

{\mathcal{D}}{I}^{(\mathrm{kd})}={\mathrm{Parse}(o,s)|o\sim p_{T}(\mathsf{o}|I\oplus s),\forall s\sim{\mathcal{S}}},

$$

$$

{\mathcal{D}}{I}^{(\mathrm{kd})}={\mathrm{Parse}(o,s)|o\sim p_{T}(\mathsf{o}|I\oplus s),\forall s\sim{\mathcal{S}}},

$$

where $\bigoplus$ denotes fusing two pieces of text, $I$ denotes an instruction or a template for a task, skill, or domain to steer the LLM and elicit knowledge, $s\sim S$ denotes an example of the seed knowledge, upon which the LLM can explore to generate novel knowledge, Parse $(o,s)$ stands for to parse the distillation example ( e.g., $(x,y))$ from the teacher LLM’s output $o$ (plus the input $s$ in some cases), and $p_{T}$ represents the teacher LLM with parameters $\theta_{T}$ . Given the datasets $\mathcal{D}_{I}^{(\mathrm{kd})}$ built for distillation, we then define a learning objective as

其中 $\bigoplus$ 表示融合两段文本,$I$ 表示用于引导大语言模型并激发知识的任务、技能或领域的指令/模板,$s\sim S$ 表示种子知识的示例(大语言模型可基于此探索生成新知识),Parse $(o,s)$ 表示从教师大语言模型的输出 $o$(某些情况下还需结合输入 $s$)中解析蒸馏样本(例如 $(x,y)$),$p_{T}$ 表示参数为 $\theta_{T}$ 的教师大语言模型。基于构建的蒸馏数据集 $\mathcal{D}_{I}^{(\mathrm{kd})}$,我们定义如下学习目标:

$$

\begin{array}{r}{\mathcal{L}=\displaystyle\sum_{I}\mathcal{L}{I}(\mathcal{D}{I}^{(\mathrm{kd})};\theta_{S}),}\end{array}

$$

$$

\begin{array}{r}{\mathcal{L}=\displaystyle\sum_{I}\mathcal{L}{I}(\mathcal{D}{I}^{(\mathrm{kd})};\theta_{S}),}\end{array}

$$

where $\sum I$ denotes there could be multiple tasks or skills being distilled into one student model, $\bar{\mathcal{L}{I}}(\cdot;\cdot)$ stands for a specific learning objective, and $\theta_{S}$ parameter ize s the student model.

其中 $\sum I$ 表示可能有多个任务或技能被蒸馏到一个学生模型中,$\bar{\mathcal{L}{I}}(\cdot;\cdot)$ 代表特定的学习目标,而 $\theta_{S}$ 是学生模型的参数。

Following our exploration of the distillation pipeline and the foundational concepts underlying knowledge distillation in the LLM era, we now turn our focus to the specific algorithms that have gained prominence in this era.

在我们探讨了大语言模型(LLM)时代的知识蒸馏流程和基础概念后,现在将重点关注该时期涌现的重要算法。

3 KNOWLEDGE DISTILLATION ALGORITHMS

3 知识蒸馏算法

This section navigates through the process of knowledge distillation. According to Section 2.4, it is categorized into two principal steps: ‘Knowledge,’ focusing on eliciting knowledge from teacher LLMs (Eq.1), and ‘Distillation,’ centered on injecting this knowledge into student models (Eq.2). We will elaborate on these two processes in the subsequent sections.

本节将介绍知识蒸馏的过程。根据第2.4节的内容,该过程可分为两个主要步骤:"知识"(Knowledge)和"蒸馏"(Distillation)。"知识"步骤侧重于从教师大语言模型(LLM)中提取知识(式1),而"蒸馏"步骤则专注于将这些知识注入学生模型(式2)。我们将在后续章节中详细阐述这两个过程。

3.1 Knowledge

3.1 知识

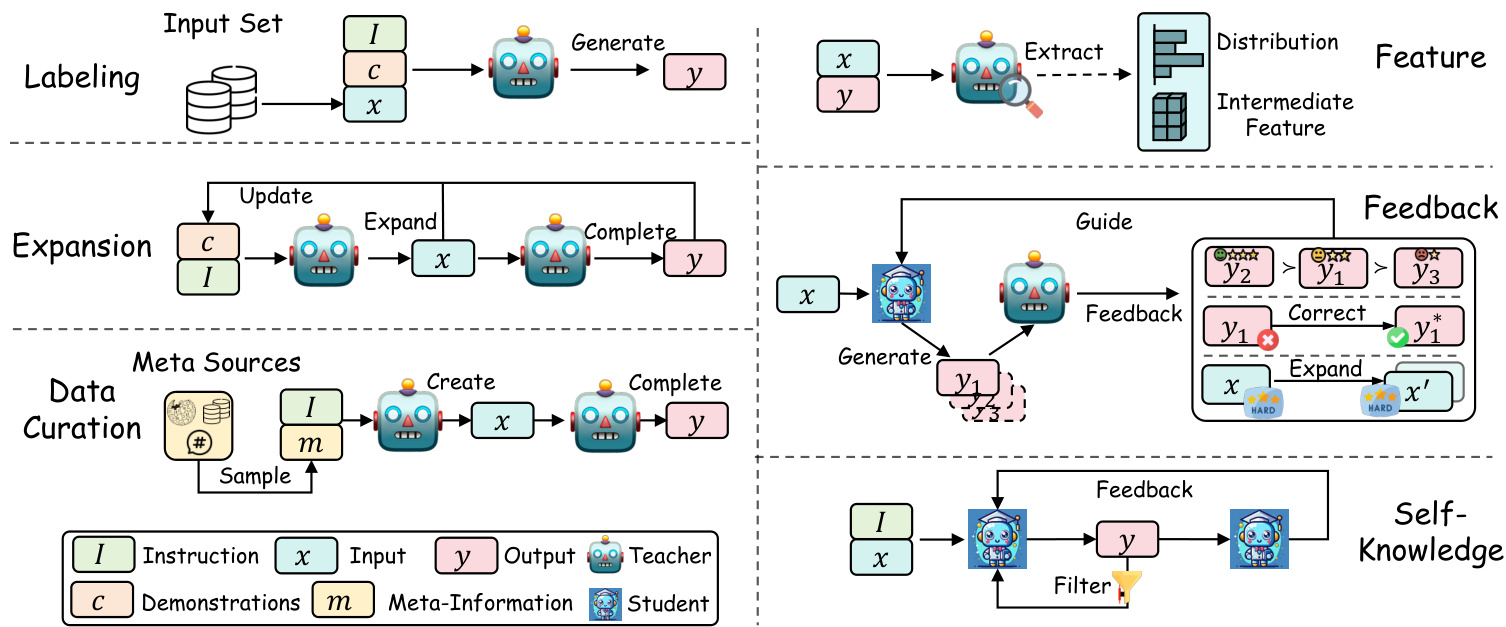

This section focuses on the approaches to elicit knowledge from teacher LLMs. According to the manners to acquire knowledge, we divided them into Labeling, Expansion, Data Curation, Feature, Feedback, and Self-Knowledge. Figure 5 shows an illustration of these knowledge eli citation methods.

本节重点探讨从教师大语言模型中提取知识的方法。根据知识获取方式,我们将其分为标注 (Labeling)、扩展 (Expansion)、数据整理 (Data Curation)、特征 (Feature)、反馈 (Feedback) 和自我知识 (Self-Knowledge)。图5展示了这些知识提取方法的示意图。

3.1.1 Labeling

3.1.1 标注

Labeling knowledge refers to using a teacher LLM to label the output $y$ for a given input $x$ as the seed knowledge, according to the instruction $I$ or demonstrations $c,$ where $c=(x_{1},y_{1}),\ldots,(x_{n},y_{n})$ . This method of eliciting knowledge from teacher LLMs is straightforward yet effective and has been widely applied across various tasks and applications. It requires only the collection of an input dataset and feeding it into LLMs to obtain the desired generations. Moreover, the generation of $y$ is controllable through the predefined $I$ and $c$ . This process can be formulated as follows:

标注知识是指利用教师大语言模型根据指令$I$或演示$c$(其中$c=(x_{1},y_{1}),\ldots,(x_{n},y_{n})$)为给定输入$x$标注输出$y$作为种子知识。这种从教师大语言模型中提取知识的方法简单却有效,已被广泛应用于各种任务和应用场景。它仅需收集输入数据集并输入大语言模型即可获得所需生成结果。此外,通过预定义的$I$和$c$可实现对$y$生成过程的控制。该过程可表述如下:

$$

{\mathcal{D}}^{(\mathrm{lab})}={x,y|x\sim\chi,y\sim p_{T}(y|I\oplus c\oplus x)}.

$$

$$

{\mathcal{D}}^{(\mathrm{lab})}={x,y|x\sim\chi,y\sim p_{T}(y|I\oplus c\oplus x)}.

$$

Input $x$ could be sourced from existing NLP task datasets, which serve as typical reservoirs for distillation efforts. Numerous works have sought to harness the capabilities of powerful LLMs as teachers for annotating dataset samples across a range of tasks. For instance, efforts in natural language understanding involve using LLMs to categorize text (Gilardi et al., 2023; Ding et al., 2023a; He et al., 2023a), while in natural language generation, LLMs assist in generating sequences for outputs (Hsieh et al., 2023; Jung et al., 2023; Wang et al., 2021b). Text generation evaluation tasks leverage LLMs to label evaluated results (Li et al., 2024b; Wang et al., 2023b), and reasoning tasks utilize LLMs for labeling Chains of Thought (CoT) explanations (Hsieh et al., 2023; Li et al., 2022; Ho et al., 2023; Magister et al., 2023; Fu et al., 2023; Ramnath et al., 2023; Li et al., 2023d; Liu et al., $2023\mathrm{g},$ ), among others. Rather than concentrating on specific tasks, many current works focus on labeling outputs based on instructions, thereby teaching student models to solve tasks in a more flexible way by following instructions. Collections of various NLP tasks, complemented by instructional templates, serve as valuable input sources for $x$ . For instance, FLAN-v2 collections (Longpre et al., 2023) offers extensive publicly available sets of tasks with instructions, which are labeled with responses generated by teacher LLMs in Orca (Mukherjee et al., 2023; Mitra et al., 2023). The instructions from these NLP tasks are built from predefined templates, which lack diversity and may have gaps between human’s natural query. The real conversations between humans and chat models provide large-scale data with real queries and generations labeled by powerful LLMs, like ShareGPT. Additionally, Xu et al. (2023b) and Anand et al. (2023) label the real questions sampled from forums like Quora and Stack Overflow.

输入 $x$ 可以来源于现有的自然语言处理(NLP)任务数据集,这些数据集是蒸馏工作的典型资源库。许多研究致力于利用强大 大语言模型 作为教师,为各类任务的数据集样本进行标注。例如,在自然语言理解领域,研究利用 大语言模型 对文本进行分类 (Gilardi et al., 2023; Ding et al., 2023a; He et al., 2023a);在自然语言生成领域,大语言模型 协助生成输出序列 (Hsieh et al., 2023; Jung et al., 2023; Wang et al., 2021b)。文本生成评估任务借助 大语言模型 标注评估结果 (Li et al., 2024b; Wang et al., 2023b),而推理任务则利用 大语言模型 标注思维链(CoT)解释 (Hsieh et al., 2023; Li et al., 2022; Ho et al., 2023; Magister et al., 2023; Fu et al., 2023; Ramnath et al., 2023; Li et al., 2023d; Liu et al., $2023\mathrm{g},$ )等。与聚焦特定任务不同,当前许多研究专注于基于指令标注输出,从而指导学生模型以更灵活的方式遵循指令解决问题。各类NLP任务的集合配合指令模板,为 $x$ 提供了宝贵的输入来源。例如,FLAN-v2集合 (Longpre et al., 2023) 提供了大量带指令的公开任务集,这些任务在Orca (Mukherjee et al., 2023; Mitra et al., 2023) 中由教师 大语言模型 生成响应标注。这些NLP任务的指令基于预定义模板构建,缺乏多样性且可能与人类自然查询存在差距。人类与聊天模型间的真实对话(如ShareGPT)提供了大规模真实查询数据,并由强大 大语言模型 标注生成内容。此外,Xu et al. (2023b) 和 Anand et al. (2023) 对来自Quora和Stack Overflow等论坛的真实问题进行了标注。

Moreover, the process of labeling could be guided by instructions $I$ or demonstrations c. A commonly used instruction type for guiding labeling is chain-of-thought (CoT) prompt (Hsieh et al., 2023; Fu et al., 2023; Magister et al., 2023). Mukherjee et al. (2023) add multiple system messages (e.g. “You must generate a detailed and long answer.” or “explain like $\mathrm{{I^{\prime}m}}$ five, think step-by-step”) to elicit rich signals. Yue et al. (2023a) and Chenglin et al. (2023) label a hybrid of knowledge of chain-of-thought (CoT) and program-of-thought (PoT) rationales. Xu et al. (2023b) propose a self-chat technique that two teacher LLMs simulate the real conversational to generate multi-turn dialogues for a question from Quora and Stack Overflow.

此外,标注过程可以通过指令 $I$ 或演示 c 进行引导。一种常用的引导标注指令类型是思维链 (CoT) 提示 (Hsieh et al., 2023; Fu et al., 2023; Magister et al., 2023)。Mukherjee et al. (2023) 添加了多条系统消息 (例如 "你必须生成详细且冗长的答案" 或 "用五岁小孩能懂的方式解释,逐步思考") 以激发丰富信号。Yue et al. (2023a) 和 Chenglin et al. (2023) 标注了思维链 (CoT) 与程序思维 (PoT) 推理的混合知识。Xu et al. (2023b) 提出了一种自对话技术,让两个教师大语言模型模拟真实对话,为 Quora 和 Stack Overflow 的问题生成多轮对话。

3.1.2 Expansion

3.1.2 扩展

While the labeling approach is simple and effective, it faces certain limitations. Primarily, it is constrained by the scale and variety of the input data. In real-world applications, especially those involving user conversations, there are also concerns regarding the privacy of the data involved. To address these limitations, various expansion methods have been proposed (Wang et al., 2022a; Taori et al., 2023; Chaudhary, 2023; Si et al., 2023; Ji et al., 2023a; Luo et al., 2023b,a; Wu et al., 2023c; Sun et al., 2024b; Xu et al., 2023a; Guo et al., 2023c; Roziere et al., 2023; West et al., 2022). These methods take the demonstrations as seed knowledge and aim to expand a large scale and various data by in-context learning.

虽然标注方法简单有效,但它面临一些局限性。主要受限于输入数据的规模和多样性。在实际应用中,特别是涉及用户对话的场景,还存在数据隐私方面的顾虑。为解决这些限制,研究者提出了多种扩展方法 (Wang et al., 2022a; Taori et al., 2023; Chaudhary, 2023; Si et al., 2023; Ji et al., 2023a; Luo et al., 2023b,a; Wu et al., 2023c; Sun et al., 2024b; Xu et al., 2023a; Guo et al., 2023c; Roziere et al., 2023; West et al., 2022)。这些方法将演示样本作为种子知识,通过上下文学习来扩展大规模多样化数据。

A key characteristic of these expansion methods is the utilization of the in-context learning ability of LLMs to generate data similar to the provided demonstrations $c$ . Unlike in the labeling approach, where the input $x$ is sampled from the existing dataset, in the expansion approach, both $x$ and $y$ are generated by teacher LLMs. This process can be formulated as follows:

这些扩展方法的一个关键特性是利用大语言模型 (LLM) 的上下文学习能力来生成与给定演示样本 $c$ 相似的数据。与标注方法中从现有数据集采样输入 $x$ 不同,在扩展方法中,$x$ 和 $y$ 均由教师大语言模型生成。该过程可表述如下:

$$

{\mathcal D}^{(\mathrm{exp})}={(x,y)|x\sim p_{T}(x|I\oplus c),y\sim p_{T}(y|I\oplus x)}.

$$

$$

{\mathcal D}^{(\mathrm{exp})}={(x,y)|x\sim p_{T}(x|I\oplus c),y\sim p_{T}(y|I\oplus x)}.

$$

Fig. 5: An illustration of different knowledge eli citation methods from teacher LLMs. Labeling: The teacher generates the output from the input; Expansion: The teacher generates samples similar to the given demonstrations through incontext learning; Data Curation: The teacher synthesizes data according to meta-information, such as a topic or an entity; Feature: Feed the data into the teacher and extract its internal knowledge, such as logits and features; Feedback: The teacher provides feedback on the student’s generations, such as preferences, corrections, expansions of challenging samples, etc; Self-Knowledge: The student first generates outputs, which is then filtered for high quality or evaluated by the student itself.

图 5: 教师大语言模型中不同知识提取方法的示意图。标注 (Labeling): 教师根据输入生成输出;扩展 (Expansion): 教师通过上下文学习生成与给定示例相似的样本;数据整理 (Data Curation): 教师根据元信息(如主题或实体)合成数据;特征提取 (Feature): 将数据输入教师模型并提取其内部知识(如logits和特征);反馈 (Feedback): 教师对学生的生成结果提供反馈(如偏好、修正、挑战性样本的扩展等);自知识 (Self-Knowledge): 学生首先生成输出,随后自行筛选高质量结果或进行自我评估。

In this formulation, $x$ and $y$ represent the new inputoutput pairs generated by the teacher LLM. The input $x$ is generated based on a set of input-output demonstrations $c$ . The output $y$ is then generated in response to the new input $x$ under the guidance of an instruction $I$ . Note that the demonstrations could be predefined or dynamically updated by adding the newly generated samples.

在这个公式中,$x$ 和 $y$ 表示由教师大语言模型生成的新输入-输出对。输入 $x$ 是基于一组输入-输出演示 $c$ 生成的。随后,输出 $y$ 在指令 $I$ 的指导下响应新输入 $x$ 而生成。需要注意的是,演示可以是预定义的,也可以通过添加新生成的样本动态更新。

Expansion techniques have been widely utilized to extract extensive instruction-following knowledge from teacher LLMs. Wang et al. (2022a) first introduces an iterative boots trapping method, Self-Instruct, to utilize LLMs to generate a wide array of instructions based on several demonstrations sampled from 175 manually-written instructions. The newly generated instructions are then added back to the initial pool, benefiting subsequent expansion iterations. Subsequently, Taori et al. (2023) applies this expansion method to a more powerful teacher LLM, textdavinci-003, to distill 52K high-quality data. To improve the diversity and coverage during expansion, Wu et al. (2023c) and (Sun et al., 2024b) prompt the teacher LLM to generate instructions corresponding to some specific topics. Xu et al. (2023a) propose an Evol-Instruct method to expand the instructions from two dimensions: difficulty (e.g. rewriting the question to be more complex) and diversity (e.g. generating more long-tailed instructions). This EvolInstruct method is domain-agnostic and has been used to expand the distillation of coding (Luo et al., 2023a) and math (Luo et al., 2023b). Additionally, expansion methods can significantly augment NLP task datasets with similar samples, thereby enhancing task performance. For instance, AugGPT (Dai et al., 2023a) leverages a teacher LLM to rephrase each sentence in the training samples into multiple conceptually similar, but semantically varied, samples to improve classification performance. Similarly, TDG (He et al., 2023b) proposes the Targeted Data Generation (TDG) framework, which automatically identifies challenging subgroups within data and generates new samples for these subgroups using LLMs through in-context learning.

扩展技术已被广泛用于从教师大语言模型中提取大量指令遵循知识。Wang等人(2022a)首次提出了一种迭代自举方法Self-Instruct,利用大语言模型基于175条人工编写指令中的若干示例生成多样化指令。新生成的指令随后被添加回初始池,助力后续扩展迭代。Taori等人(2023)将此扩展方法应用于更强大的教师模型textdavinci-003,蒸馏出52K高质量数据。为提高扩展过程中的多样性和覆盖率,Wu等人(2023c)和Sun等人(2024b)引导教师模型生成特定主题对应的指令。Xu等人(2023a)提出Evol-Instruct方法,从难度(如改写问题使其更复杂)和多样性(如生成更多长尾指令)两个维度扩展指令。这种Evol-Instruct方法不依赖特定领域,已被用于扩展编码(Luo等人,2023a)和数学(Luo等人,2023b)的蒸馏。此外,扩展方法能通过生成相似样本显著增强NLP任务数据集,从而提升任务表现。例如AugGPT(Dai等人,2023a)利用教师模型将训练样本中的每个句子改写成多个概念相似但语义不同的样本,以提高分类性能。类似地,TDG(He等人,2023b)提出目标数据生成(TDG)框架,通过上下文学习自动识别数据中的困难子群,并利用大语言模型为这些子群生成新样本。

In summary, the expansion method leverages the incontext learning strengths of LLMs to produce more varied and extensive datasets with both inputs and outputs. However, the quality and diversity of the generated data are heavily reliant on the teacher LLMs and the initial seed demonstrations. This dependence can lead to a dataset with inherent bias from LLMs (Yu et al., $2023\mathsf{a}.$ ; Wei et al., 2023) and a homogeneity issue where the generations may be prone to similarity ultimately, limiting the diversity this method seeks to achieve (Ding et al., 2023b). Moreover, the expansion process may inadvertently amplify any biases present in the seed data.

总之,扩展方法利用大语言模型(LLM)的上下文学习优势,生成输入输出更多样、更广泛的数据集。然而,生成数据的质量和多样性高度依赖于教师大语言模型和初始种子示例。这种依赖性可能导致数据集存在大语言模型固有偏见(Yu et al., $2023\mathsf{a}$; Wei et al., 2023)以及同质化问题,即生成内容最终可能趋于相似,从而限制该方法试图实现的多样性(Ding et al., 2023b)。此外,扩展过程可能会无意中放大种子数据中存在的任何偏见。

3.1.3 Data Curation

3.1.3 数据治理

The pursuit of high-quality and scalable data generation in knowledge distillation from LLMs has led to the emergence of the Data Curation approach. This method arises in response to the limitations observed in both the Labeling and Expansion approaches. These methods often yield data of variable quality and face constraints in quantity. In Labeling, the seed knowledge is sourced from task datasets, leading to potential noise and dirty data. Meanwhile, in Expansion, the input $x$ is derived from seed demonstrations, which can result in homogeneous data when generated in large quantities. To overcome these challenges, the Data Curation method curates high-quality or large-scale data by extensive meta-information as seed knowledge (Ding et al., 2023b; Gunasekar et al., 2023; Li et al., 2023a; Mar, 2023; Liu et al., 2023d; Wei et al., 2023; Yu et al., 2024; Ye et al., 2022; Gao et al., 2023a; Yang and Nicolai, 2023).

在大语言模型知识蒸馏中追求高质量、可扩展的数据生成,催生了数据精选(Data Curation)方法。该方法的出现是为了应对标注(Labeling)和扩展(Expansion)两种途径的局限性——这些方法往往产生质量参差不齐的数据且面临数量限制。标注法的种子知识来源于任务数据集,可能导致噪声和脏数据;而扩展法的输入$x$源自种子示例,大规模生成时易产生同质化数据。为突破这些限制,数据精选法通过海量元信息作为种子知识(Ding et al., 2023b; Gunasekar et al., 2023; Li et al., 2023a; Mar, 2023; Liu et al., 2023d; Wei et al., 2023; Yu et al., 2024; Ye et al., 2022; Gao et al., 2023a; Yang and Nicolai, 2023),实现了高质量或大规模数据的精选。

A distinct feature of Data Curation is its approach to synthesize data from scratch. Numerous diverse metainformation, such as topics or knowledge points, could be incorporated into this process to generate controllable $x$ and $y$ . Thus, this process can be meticulously controlled to yield datasets that are not only large in scale but also of high quality. The formulation for Data Curation can be represented as:

数据整理 (Data Curation) 的一个显著特点是其从零开始合成数据的方法。在此过程中可以融入多种多样的元信息 (metainformation) ,例如主题或知识点,从而生成可控的 $x$ 和 $y$ 。因此,这一过程可以被精细控制,最终产出不仅规模庞大而且质量优异的数据集。数据整理的公式化表示如下:

$$

{\mathcal{D}}^{\mathrm{(cur)}}={(x,y)|x\sim p_{T}(x|I\oplus m),y\sim p_{T}(y|I\oplus x)}.

$$

$$

{\mathcal{D}}^{\mathrm{(cur)}}={(x,y)|x\sim p_{T}(x|I\oplus m),y\sim p_{T}(y|I\oplus x)}.

$$

In this formulation, $m$ represents the diverse metainformation used to guide the synthesis of $x,$ and $I$ is the instruction guiding teacher LLMs to generate $x$ or $y$ .

在此公式中,$m$ 代表用于指导 $x$ 合成的多样化元信息,$I$ 是指导教师大语言模型生成 $x$ 或 $y$ 的指令。

Different studies primarily vary in their source and method of leveraging meta-information. UltraChat (Ding et al., 2023b) effectively demonstrates the process of curating both high-quality and diverse data by distilled knowledge. They collect extensive meta-information across three domains: Questions about the World, Creation and Generation, and Assistance on Existing Materials. For example, under Questions about the World, they explore 30 meta-topics like ”Technology” and ”Food and Drink.” the teacher LLMs then use this meta-information to distill a broad array of instructions and conversations, achieving a substantial scale of 1.5 million instances. UltraChat stands out with its lexical and topical diversity. The UltraLLaMA model, finetuned on this data, consistently surpasses other open-source models. Another notable series, phi (Gunasekar et al., 2023; Li et al., 2023a; Mar, 2023), focuses on distilling smaller, high-quality datasets akin to ”textbooks.” Phi-1(Gunasekar et al., 2023) experiments with synthesizing ”textbook quality” data in the coding domain. Their approach involves distilling clear, self-contained, instructive, and balanced content from LLMs, guided by random topics or function names to enhance diversity. The distilled data is a synthesis of 1 billion tokens of Python textbooks, complete with natural language explanations and code snippets, as well as 180 million tokens of Python exercises with solutions. Remarkably, the phi-1 model, despite its smaller size, outperforms nearly all open-source models on coding benchmarks like HumanEval and MBPP while being 10 times smaller in model size and 100 times smaller in dataset size. MFTCoder (Liu et al., 2023d) utilizes hundreds of Python knowledge points as meta-information to create a Code Exercise Dataset. In contrast, Magicoder (Wei et al., 2023) and WaveCoder (Yu et al., 2024) get raw code collections from open-source code datasets, using this as meta-information for generating instructional data. In the context of NLU tasks, certain studies (Ye et al., 2022; Gao et al., $2023\mathsf{a},$ ; Wang et al., 2021a) explore the use of labels as meta-information to synthesize corresponding samples for data augmentation. Similarly, in information retrieval tasks, there are efforts to utilize documents as meta-information for generating potential queries, thereby constructing large-scale retrieval pairs (Bonifacio et al., 2022; Meng et al., 2023).

不同研究主要在元信息的来源和利用方法上存在差异。UltraChat (Ding et al., 2023b) 通过知识蒸馏有效展示了同时获取高质量与多样化数据的过程。他们收集了三大领域的广泛元信息:"关于世界的问题"、"创作与生成"以及"现有材料辅助"。例如在"关于世界的问题"下,他们探索了"技术"、"食品饮料"等30个元主题,随后教师大语言模型利用这些元信息蒸馏出大量指令和对话,最终达到150万实例的规模。UltraChat以其词汇和主题多样性著称,基于该数据微调的UltraLLaMA模型持续超越其他开源模型。另一个著名系列phi (Gunasekar et al., 2023; Li et al., 2023a; Mar, 2023) 则专注于蒸馏类似"教科书"的小型高质量数据集。Phi-1(Gunasekar et al., 2023) 尝试在编码领域合成"教科书级"数据,其方法是通过随机主题或函数名引导,从大语言模型中蒸馏出清晰、自包含、具有指导性且平衡的内容。最终蒸馏数据包含10亿token的Python教科书(含自然语言解释和代码片段)以及1.8亿token的Python练习题(含解答)。值得注意的是,phi-1模型虽然在规模上小10倍、数据集小100倍,却在HumanEval和MBPP等编码基准测试中超越几乎所有开源模型。MFTCoder (Liu et al., 2023d) 使用数百个Python知识点作为元信息构建代码练习数据集,而Magicoder (Wei et al., 2023) 和WaveCoder (Yu et al., 2024) 则从开源代码数据集获取原始代码集合作为生成教学数据的元信息。在自然语言理解任务中,部分研究 (Ye et al., 2022; Gao et al., $2023\mathsf{a},$ ; Wang et al., 2021a) 探索将标签作为元信息来合成相应样本以实现数据增强。类似地,在信息检索任务中也有研究尝试以文档为元信息生成潜在查询,从而构建大规模检索对 (Bonifacio et al., 2022; Meng et al., 2023)。

In conclusion, Data Curation through teacher LLMs has emerged as a promising technique for synthesizing datasets that are not only high-quality and diverse but also large in scale. The success of models like phi-1 in specialized domains underscores the efficacy of this method. The ability to create synthetic datasets will become a crucial technical skill and a key area of focus in AI (Li et al., 2023a).

总之,通过教师大语言模型进行数据治理已成为一种前景广阔的技术,不仅能合成高质量、多样化的数据集,还能实现大规模生成。phi-1等模型在专业领域的成功验证了该方法的有效性。创建合成数据集的能力将成为一项关键技术技能,也是人工智能领域的重点研究方向 (Li et al., 2023a)。

3.1.4 Feature

3.1.4 特征

The previously discussed knowledge eli citation methods are typically applied to powerful black-box models, which are expensive and somewhat un reproducible due to calling API. In contrast, white-box distillation offers a more transparent and accessible approach for researchers. It involves leveraging the output distributions, intermediate features, or activation s from teacher LLMs, which we collectively refer to as Feature knowledge. White-box KD approaches have predominantly been studied for smaller encoder-based LMs, typically those with fewer than 1 billion parameters (cf. Gou et al. (2021) for detail). However, recent research has begun to explore white-box distillation in the context of generative LLMs (Timiryasov and Tastet, 2023; Liang et al., 2023a; Gu et al., 2024; Agarwal et al., 2024; Liu et al., 2023a; Wen et al., 2023; Wan et al., 2024a; Zhao and Zhu, 2023; Qin et al., 2023b; Boizard et al., 2024; Zhong et al., 2024).

先前讨论的知识提炼方法通常应用于强大的黑盒模型,这些模型由于需要调用API而成本高昂且难以复现。相比之下,白盒蒸馏为研究者提供了更透明、更易获取的途径。该方法利用教师大语言模型的输出分布、中间特征或激活值,我们统称为特征知识 (Feature knowledge)。白盒知识蒸馏 (KD) 方法主要针对较小的基于编码器的语言模型进行研究,通常参数少于10亿 (详见Gou等人 (2021) )。然而,最近的研究开始探索生成式大语言模型中的白盒蒸馏 (Timiryasov和Tastet, 2023; Liang等人, 2023a; Gu等人, 2024; Agarwal等人, 2024; Liu等人, 2023a; Wen等人, 2023; Wan等人, 2024a; Zhao和Zhu, 2023; Qin等人, 2023b; Boizard等人, 2024; Zhong等人, 2024)。

The typical method for acquiring this feature knowledge involves teacher LLMs annotating the output sequence $y$ with its internal representations. These annotations are then distilled into the student model using methods such as Kullback-Leibler Divergence (KLD). The process of eliciting feature knowledge can be formulated as follows:

获取这一特征知识的典型方法涉及教师大语言模型用其内部表征对输出序列 $y$ 进行标注。随后,这些标注通过诸如Kullback-Leibler散度(KLD)等方法蒸馏到学生模型中。特征知识的激发过程可表述如下:

$$

{\mathcal{D}}^{(\mathrm{feat})}={(x,y,\phi_{\mathrm{feat}}(x,y;\theta_{T}))|x\sim\chi,y\sim y}.

$$

$$

{\mathcal{D}}^{(\mathrm{feat})}={(x,y,\phi_{\mathrm{feat}}(x,y;\theta_{T}))|x\sim\chi,y\sim y}.

$$

In this formulation, $\mathcal{V}$ is the output set, which can be generated by teacher LLMs, the student model, or directly sourced from the dataset. $\phi_{\mathrm{feat}}(\cdot;\theta_{T})$ represents the operation of extracting feature knowledge (such as output distribution) from the teacher LLM.

在此公式中,$\mathcal{V}$ 是输出集,可由教师大语言模型、学生模型生成,或直接从数据集中获取。$\phi_{\mathrm{feat}}(\cdot;\theta_{T})$ 表示从教师大语言模型中提取特征知识(如输出分布)的操作。

The most straightforward method to elicit feature knowledge of teacher is to label a fixed dataset of sequences with token-level probability distributions (Sanh et al., 2019; Wen et al., 2023). To leverage the rich semantic and syntactic knowledge in intermediate layers of the teacher model, TED (Liang et al., 2023a) designs task-aware layer-wise distillation. They align the student’s hidden representations with those of the teacher at each layer, selectively extracting knowledge pertinent to the target task. Gu et al. (2024) and Agarwal et al. (2024) introduce a novel approach where the student model first generates sequences, termed ‘selfgenerated sequences.’ The student then learns by using feedback (i.e. output distribution) from teacher on these sequences. This method is particularly beneficial when the student model lacks the capacity to mimic teacher’s distribution. Moreover, various LLM-quantization methods with distilling feature knowledge from teacher LLMs have been proposed (Tao et al., 2022a; Liu et al., 2023a; Kim et al., 2023b). These methods aim to preserve the original output distribution when quantizing the LLMs, ensuring minimal loss of performance. Additionally, feature knowledge could serve as a potent source for multi-teacher knowledge distillation. Timiryasov and Tastet (2023) leverages an ensemble of GPT-2 and LLaMA as teacher models to extract output distributions. Similarly, FuseLLM (Wan et al., 2024a) innovatively combines the capabilities of various LLMs through a weighted fusion of their output distributions, integrating them into a singular LLM. This approach has the potential to significantly enhance the student model’s capabilities, surpassing those of any individual teacher LLM.

激发教师模型特征知识最直接的方法是为固定序列数据集标注token级别的概率分布 (Sanh et al., 2019; Wen et al., 2023)。为利用教师模型中间层丰富的语义和句法知识,TED (Liang et al., 2023a) 设计了任务感知的逐层蒸馏方法,通过将学生模型的隐藏表示与教师模型每一层对齐,选择性提取与目标任务相关的知识。Gu et al. (2024) 和 Agarwal et al. (2024) 提出了一种创新方法:学生模型首先生成"自生成序列",然后通过教师模型对这些序列的反馈(即输出分布)进行学习。当学生模型难以模仿教师分布时,这种方法尤为有效。此外,研究者们提出了多种结合教师大语言模型特征知识的量化方法 (Tao et al., 2022a; Liu et al., 2023a; Kim et al., 2023b),这些方法旨在量化大语言模型时保持原始输出分布,确保性能损失最小。特征知识还可作为多教师知识蒸馏的强大来源:Timiryasov and Tastet (2023) 采用GPT-2和LLaMA的集成作为教师模型来提取输出分布;类似地,FuseLLM (Wan et al., 2024a) 通过加权融合多个大语言模型的输出分布,创新性地将其整合为单一模型。这种方法有望显著提升学生模型能力,使其超越任何单个教师大语言模型。

In summary, feature knowledge offers a more transparent alternative to black-box methods, allowing for deeper insight into and control over the distillation process. By utilizing feature knowledge from teacher LLMs, such as output distributions and intermediate layer features, white-box approaches enable richer knowledge transfer. While showing promise, especially in smaller models, its application is not suitable for black-box LLMs where internal parameters are inaccessible. Furthermore, student models distilled from white-box LLMs may under perform compared to their black-box counterparts, as the black-box teacher LLMs (e.g. GPT-4) tend to be more powerful.

总之,特征知识为黑盒方法提供了更透明的替代方案,使人们能够更深入地洞察和控制蒸馏过程。通过利用教师大语言模型中的特征知识(如输出分布和中间层特征),白盒方法实现了更丰富的知识迁移。尽管这一方法在小型模型中展现出潜力,但由于无法获取内部参数,它并不适用于黑盒大语言模型。此外,从白盒大语言模型蒸馏出的学生模型性能可能逊色于黑盒模型,因为黑盒教师大语言模型(如GPT-4)通常更强大。

3.1.5 Feedback

3.1.5 反馈

Most previous works predominantly focus on one-way knowledge transfer from the teacher to the student for imitation, without considering feedback from the teacher on the student’s generation. The feedback from the teacher typically offers guidance on student-generated outputs by providing preferences, assessments, or corrective information. For example, a common form of feedback involves teacher ranking the student’s generations and distilling this preference into the student model through Reinforcement Learning from AI Feedback (RLAIF) (Bai et al., 2022a). Here is a generalized formulation for eliciting feedback knowledge:

以往的研究大多侧重于教师向学生单向传递知识以供模仿,而忽略了教师对学生生成结果的反馈。教师的反馈通常通过提供偏好、评估或修正信息来指导学生生成输出。例如,一种常见的反馈形式是教师对学生生成内容进行排序,并通过AI反馈强化学习 (Reinforcement Learning from AI Feedback, RLAIF) (Bai et al., 2022a) 将这些偏好提炼到学生模型中。以下是获取反馈知识的通用公式:

$$

\mathcal{D}^{(\mathrm{fb})}={(x,y,\phi_{\mathrm{fb}}(x,y;\theta_{T}))|x\sim\chi,y\sim p_{S}(y|x)},

$$

$$

\mathcal{D}^{(\mathrm{fb})}={(x,y,\phi_{\mathrm{fb}}(x,y;\theta_{T}))|x\sim\chi,y\sim p_{S}(y|x)},

$$

where $y$ denotes the output generated by the student model in response to $x,$ and $\phi_{\mathrm{fb}}(\cdot;\theta_{T})\rangle$ represents providing feedback from teacher LLMs. This operation evaluates the student’s output $y$ given the input $x.$ , by offering assessment, corrective information, or other forms of guidance. This feedback knowledge can not only be distilled into the student to also generate feedback (such as creating a student preference model) but, more importantly, enable the student to refine its responses based on the feedback. Various methods have been explored to elicit this advanced knowledge (Bai et al., 2022a; Luo et al., 2023b; Cui et al., 2023a; Kwon et al., 2023; Jiang et al., 2023b; Chen et al., 2023a; Gu et al., 2024; Agarwal et al., 2024; Chen et al., 2024b; Guo et al., 2024; Ye et al., 2023; Hong et al., 2023; Lee et al., 2023a).

其中 $y$ 表示学生模型根据输入 $x$ 生成的输出,$\phi_{\mathrm{fb}}(\cdot;\theta_{T})\rangle$ 代表教师大语言模型提供的反馈。该操作通过评估、纠正信息或其他形式的指导,对给定输入 $x$ 时学生输出 $y$ 进行评价。这种反馈知识不仅可以蒸馏到学生模型中使其也能生成反馈(例如创建学生偏好模型),更重要的是能让学生根据反馈优化其响应。已有多种方法被探索用于获取这种高级知识 (Bai et al., 2022a; Luo et al., 2023b; Cui et al., 2023a; Kwon et al., 2023; Jiang et al., 2023b; Chen et al., 2023a; Gu et al., 2024; Agarwal et al., 2024; Chen et al., 2024b; Guo et al., 2024; Ye et al., 2023; Hong et al., 2023; Lee et al., 2023a)。

Preference, as previously discussed, represents a notable form of feedback knowledge from teacher models. Various knowledge of preferences could be distilled from teachers by prompting it with specific criteria. Bai et al. (2022a) introduce RLAIF for distilling harmlessness preferences from LLMs. This involves using an SFT-trained LLM to generate response pairs for each prompt, then ranking them for harmlessness to create a preference dataset. This dataset is distilled into a Preference Model (PM), which then guides the RL training of a more harmless LLM policy. WizardMath (Luo et al., 2023b) places emphasis on mathematical reasoning. They employ ChatGPT as teacher to directly provide process supervision and evaluate the correctness of each step in the generated solutions. To scale up highquality distilled preference data, Cui et al. (2023a) develop a large-scale preference dataset for distilling better preference models, Ultra Feedback. It compiles various instructions and models to produce comparative data. Then, GPT-4 is used to score candidates from various aspects of preference, including instruction-following, truthfulness, honesty and helpfulness.

偏好 (Preference) 作为教师模型反馈知识的重要形式,可以通过特定标准提示从教师模型中提取多种偏好知识。Bai et al. (2022a) 提出 RLAIF 方法,用于从大语言模型中提取无害偏好:首先使用经过监督微调 (SFT) 的大语言模型为每个提示生成响应对,然后根据无害性进行排序以构建偏好数据集,最终将其蒸馏为偏好模型 (PM) 来指导强化学习训练,从而获得更具无害性的大语言模型策略。WizardMath (Luo et al., 2023b) 专注于数学推理领域,采用 ChatGPT 作为教师模型直接提供过程监督,评估生成解题过程中每个步骤的正确性。为扩大高质量蒸馏偏好数据规模,Cui et al. (2023a) 开发了大规模偏好数据集 Ultra Feedback,通过整合多样化指令和模型生成对比数据,并利用 GPT-4 从指令遵循、真实性、诚实性和帮助性等多维度对候选响应进行评分,以蒸馏更优的偏好模型。

Beyond merely assessing student generations, teachers can also furnish extensive feedback on instances where students under perform. In Lion (Jiang et al., 2023b), teacher model pinpoints instructions that pose challenges to the student model, generating new, more difficult instructions aimed at bolstering the student’s abilities. PERsD (Chen et al., 2023a) showcases a method where teacher offers tailored refinement feedback on incorrect code snippets generated by students, guided by the specific execution errors encountered. Similarly, SelFee (Ye et al., 2023) leverages ChatGPT to generate feedback and revise the student’s answer based on the feedback. In contrast, FIGA (Guo et al., 2024) revises the student’s response by comparing it to the ground-truth response. Furthermore, teacher model’s distribution over the student’s generations can itself act as a form of feedback. MiniLLM (Gu et al., 2024) and GKD (Agarwal et al., 2024) present an innovative strategy wherein the student model initially generates sequences, followed by teacher model producing an output distribution as feedback. This method leverages the teacher’s insight to directly inform and refine the student model’s learning process.

除了评估学生生成的内容外,教师还可以针对学生表现不佳的案例提供详细反馈。在Lion (Jiang et al., 2023b)中,教师模型会识别对学生模型构成挑战的指令,并生成新的、更复杂的指令以提升学生能力。PERsD (Chen et al., 2023a)展示了一种方法,教师根据具体执行错误,对学生生成的错误代码片段提供定制化的改进反馈。类似地,SelFee (Ye et al., 2023)利用ChatGPT生成反馈,并基于反馈修改学生答案。相比之下,FIGA (Guo et al., 2024)通过将学生回答与标准答案对比来进行修正。此外,教师模型对学生生成内容的分布本身也可作为一种反馈形式。MiniLLM (Gu et al., 2024)和GKD (Agarwal et al., 2024)提出了一种创新策略:学生模型首先生成序列,随后教师模型生成输出分布作为反馈。这种方法利用教师的洞察力直接指导并优化学生模型的学习过程。

3.1.6 Self-Knowledge

3.1.6 自我认知

The knowledge could also be elicited from the student itself, which we refer to as Self-Knowledge. In this setting, the same model acts both as the teacher and the student, iterative ly improving itself by distilling and refining its own previously generated outputs. This knowledge uniquely circumvents the need for an external, potentially proprietary, powerful teacher model, such as GPT-series LLMs. Furthermore, it allows the model to surpass the limitations or “ceiling” inherent in traditional teacher-student methods. Eliciting self-knowledge could be formulated as:

知识也可以从学生本身中提取,我们称之为自我知识 (Self-Knowledge)。在这种设定下,同一个模型同时扮演教师和学生的角色,通过蒸馏和优化自身先前生成的输出迭代地提升自己。这种知识独特地规避了对GPT系列大语言模型等外部、可能专有的强大教师模型的需求。此外,它使模型能够超越传统师生方法固有的限制或"天花板"。自我知识的提取可以表述为:

$$

\mathcal{D}^{(\mathrm{sk})}={(x,y,\phi_{\mathrm{sk}}(x,y))|x\sim\mathcal{S},y\sim p_{S}(y|I\oplus x)},

$$

$$

\mathcal{D}^{(\mathrm{sk})}={(x,y,\phi_{\mathrm{sk}}(x,y))|x\sim\mathcal{S},y\sim p_{S}(y|I\oplus x)},

$$

where $\phi_{\mathrm{sk}}(\cdot)$ is a generalized function that represents an additional process to the self-generated outputs $y,$ which could include but is not limited to filtering, rewarding, or any other mechanisms for enhancing or evaluating $y$ . It could be governed by external tools or the student itself $\theta_{S}$ . Recent research in this area has proposed various innovative methodologies to elicit self-knowledge, demonstrating its potential for creating more efficient and autonomous learning systems. (Allen-Zhu and Li, 2020; Wang et al., 2022a; Sun et al., 2024b; Yang et al., 2024; Jung et al., 2023; Huang et al., 2023a; Gulcehre et al., 2023; Yuan et al., 2024a; Xu et al., 2023b; Zelikman et al., 2022; Chen et al., 2024a; Zheng et al., 2024; Li et al., 2024c; Zhao et al., 2024; Singh et al., 2023; Chen et al., 2024c; Hosseini et al., 2024)

其中 $\phi_{\mathrm{sk}}(\cdot)$ 是一个广义函数,表示对自生成输出 $y$ 的附加处理过程,可能包括但不限于过滤、奖励或其他用于增强或评估 $y$ 的机制。该过程可由外部工具或学生模型本身 $\theta_{S}$ 控制。该领域最新研究提出了多种激发自我认知的创新方法,证明了其在构建更高效自主学习系统方面的潜力 (Allen-Zhu and Li, 2020; Wang et al., 2022a; Sun et al., 2024b; Yang et al., 2024; Jung et al., 2023; Huang et al., 2023a; Gulcehre et al., 2023; Yuan et al., 2024a; Xu et al., 2023b; Zelikman et al., 2022; Chen et al., 2024a; Zheng et al., 2024; Li et al., 2024c; Zhao et al., 2024; Singh et al., 2023; Chen et al., 2024c; Hosseini et al., 2024)。

A notable example of this methodology is SelfInstruct (Wang et al., 2022a), which utilizes GPT-3 for data augmentation through the Expansion approach, generating additional data samples to enhance the dataset. This enriched dataset subsequently fine-tunes the original model. Other methods aim to elicit targeted knowledge from student models by modifying prompts, and leveraging these data for further refinement. In Self-Align (Sun et al., 2024b), they find that models fine-tuned by Self-Instruct data tend to generate short or indirect responses. They prompt this model with verbose instruction to produce indepth and detailed responses. Then, they employ contextdistillation (Askell et al., 2021) to distill these responses paired with non-verbose instructions back to the model. Similarly, RLCD (Yang et al., 2024) introduces the use of contrasting prompts to generate preference pairs from an unaligned LLM, encompassing both superior and inferior examples. A preference model trained on these pairs then guides the enhancement of the unaligned model through reinforcement learning. Several other approaches employ filtering methods to refine self-generated data. For example, Impossible Distillation (Jung et al., 2023) targets sentence sum mari z ation tasks, implementing filters based on entailment, length, and diversity to screen self-generated summaries. LMSI (Huang et al., 2023a) generates multiple CoT reasoning paths and answers for each question, and then retains only those paths that lead to the most consistent answer.

该方法论的一个显著案例是SelfInstruct (Wang et al., 2022a),它通过扩展(Expansion)方法利用GPT-3进行数据增强,生成额外数据样本以扩充数据集。经过强化的数据集随后用于微调原始模型。其他方法则致力于通过修改提示词从学生模型中提取目标知识,并利用这些数据进行进一步优化。在Self-Align (Sun et al., 2024b)中,研究者发现经Self-Instruct数据微调的模型倾向于生成简短或间接的响应。他们通过详细指令提示该模型,使其产生深入细致的回答,继而运用上下文蒸馏(context distillation) (Askell et al., 2021)技术将这些回答与非详细指令配对后反哺给模型。类似地,RLCD (Yang et al., 2024)引入对比提示词方法,从未对齐的大语言模型中生成包含优劣示例的偏好对,基于这些配对训练的偏好模型通过强化学习指导未对齐模型的改进。另有若干方法采用过滤机制优化自生成数据。例如Impossible Distillation (Jung et al., 2023)针对文本摘要任务,基于蕴含关系、长度和多样性等维度实施过滤以筛选自生成摘要。LMSI (Huang et al., 2023a)为每个问题生成多条思维链(CoT)推理路径和答案,仅保留能得出最一致答案的路径。

Note that refined self-knowledge can be iterative ly acquired as the student model continuously improves, further enhancing the student’s capabilities. This is Gulcehre et al. (2023) introduces a Reinforced Self-Training (ReST) framework that cyclically alternates between Grow and Improve stages to progressively obtain better self-knowledge and refine the student model. During the Grow stage, the student model generates multiple output predictions. Then, in the Improve stage, these self-generated outputs are ranked and filtered using a scoring function. Subsequently, the language model undergoes fine-tuning on this curated dataset, employing an offline RL objective. Self-Play (Chen et al., 2024a) introduces a framework resembling iterative DPO, where the language model is fine-tuned to differentiate the self-generated responses from the human-annotated data. These self-generated responses could be seen as “negative knowledge” to promote the student to better align with the target distribution. Self-Rewarding (Yuan et al., 2024a) explores a novel and promising approach by utilizing the language model itself as a reward model. It employs LLMas-a-Judge prompting to autonomously assign rewards for the self-generated responses. The entire process can then be iterated, improving instruction following and reward modeling capabilities.

需要注意的是,精炼的自我认知可以随着学生模型的持续改进而迭代获取,从而进一步提升学生模型的能力。Gulcehre等人(2023)提出的强化自训练(ReST)框架通过"成长"和"改进"阶段的循环交替,逐步获得更好的自我认知并优化学生模型。在成长阶段,学生模型生成多个输出预测;在改进阶段,这些自生成输出会通过评分函数进行排序和筛选。随后,语言模型基于这个精选数据集采用离线强化学习目标进行微调。Self-Play(Chen等人,2024a)提出了一个类似迭代DPO的框架,通过微调语言模型来区分自生成响应与人工标注数据。这些自生成响应可视为"负知识",用于促进学生模型更好地对齐目标分布。Self-Rewarding(Yuan等人,2024a)探索了一种新颖且有前景的方法,将语言模型本身作为奖励模型,采用LLMas-a-Judge提示来自主评估自生成响应的奖励值。整个过程可迭代执行,从而同步提升指令跟随和奖励建模能力。

3.2 Distillation

3.2 蒸馏

This section focuses on the methodologies for effectively transferring the elicited knowledge from teacher LLMs into student models. We explore a range of distillation techniques, from the strategies that enhance imitation by $S u\cdot$ - pervised Fine-Tuning, Divergence and Similarity, to advanced methods like Reinforcement Learning and Rank Optimization, as shown in Figure 3.

本节重点探讨如何有效地将教师大语言模型(LLM)中提取的知识迁移到学生模型中。我们研究了一系列蒸馏技术,从通过监督微调(SFT)、散度与相似性增强模仿的策略,到强化学习和排序优化等先进方法,如图 3 所示。

3.2.1 Supervised Fine-Tuning

3.2.1 监督微调

Supervised Fine-Tuning (SFT), or called Sequence-Level KD (SeqKD) (Kim and Rush, 2016), is the simplest and one of the most effective methods for distilling powerful black-box

监督微调 (Supervised Fine-Tuning, SFT) ,或称序列级知识蒸馏 (Sequence-Level KD, SeqKD) (Kim and Rush, 2016) ,是蒸馏强大黑盒模型最简单且最有效的方法之一

TABLE 1: Functional forms of $D$ for various divergence types. $p\mathrm{.}$ : reference

| Divergence Type | D(p,q) Function |

| Forward KLD | ∑p(t) 1og |

| Reverse KLD | |

| JS Divergence | (∑p(t) log 2p(t) +∑q(t)log 2q(t) p(t)+q(t) p(t)+q(t) |

表 1: 不同散度类型中 $D$ 的函数形式。$p\mathrm{.}$ : 参考

| 散度类型 | D(p,q) 函数 |

|---|---|

| 前向KLD | ∑p(t) 1og |

| 反向KLD | |

| JS散度 | (∑p(t) log 2p(t) +∑q(t)log 2q(t) p(t)+q(t) p(t)+q(t) |

TABLE 2: Summary of similarity functions in knowledge distillation.

| SimilarityFunctionLF | Expression |

| L2-Norm Distance | Φr(fr(,y))-Φs(fs(,y))ll2 |

| L1-Norm Distance | ΦT(fT(c,y))-Φs(fs(c,y))1 |

| Cross-EntropyLoss | ∑ΦT(fr(c,y))log(Φs(fs(x,y))) |

| MaximumMeanDiscrepancy | MMD(ΦT(fT(c,y)),Φs(fs(x,y))) |

表 2: 知识蒸馏中的相似度函数总结。

| 相似度函数 | 表达式 |

|---|---|

| L2范数距离 | Φr(fr(,y))-Φs(fs(,y))ll2 |

| L1范数距离 | ΦT(fT(c,y))-Φs(fs(c,y))1 |

| 交叉熵损失 | ∑ΦT(fr(c,y))log(Φs(fs(x,y))) |

| 最大均值差异 | MMD(ΦT(fT(c,y)),Φs(fs(x,y))) |

LLMs. SFT finetunes student model by maximizing the likelihood of sequences generated by the teacher LLMs, aligning the student’s predictions with those of the teacher. This process can be mathematically formulated as minimizing the objective function:

大语言模型。监督式微调 (SFT) 通过最大化教师大语言模型生成序列的似然概率来微调学生模型,使学生的预测与教师保持一致。该过程可数学表述为最小化目标函数:

$$

\mathcal{L}{\mathrm{SFT}}=\mathbb{E}{x\sim\mathcal{X},y\sim p_{T}(y|x)}\left[-\log p_{S}(y|x)\right],

$$

$$

\mathcal{L}{\mathrm{SFT}}=\mathbb{E}{x\sim\mathcal{X},y\sim p_{T}(y|x)}\left[-\log p_{S}(y|x)\right],

$$

where $y$ is the output sequence produced by the teacher model. This simple yet highly effective technique forms the basis of numerous studies in the field. Numerous researchers have successfully employed SFT to train student models using sequences generated by teacher LLMs (Taori et al., 2023; Chiang et al., 2023; Wu et al., 2023c; Xu et al., $2023\mathsf{a},$ ; Luo et al., 2023b). Additionally, SFT has been explored in many self-distillation works (Wang et al., 2022a; Huang et al., 2023c; Xu et al., 2023b; Zelikman et al., 2022). Due to the large number of KD works applying SFT, we only list representative ones here. More detailed works can be found in §4.

其中 $y$ 是由教师模型生成的输出序列。这一简单却高效的技术构成了该领域众多研究的基础。许多研究者已成功运用监督式微调 (SFT) 技术,通过教师大语言模型生成的序列来训练学生模型 (Taori et al., 2023; Chiang et al., 2023; Wu et al., 2023c; Xu et al., $2023\mathsf{a},$ ; Luo et al., 2023b)。此外,监督式微调也在众多自蒸馏研究中得到探索 (Wang et al., 2022a; Huang et al., 2023c; Xu et al., 2023b; Zelikman et al., 2022)。由于采用监督式微调的知识蒸馏研究数量庞大,此处仅列举代表性成果,更多细节研究可参阅§4节。

3.2.2 Divergence and Similarity

3.2.2 发散性与相似性

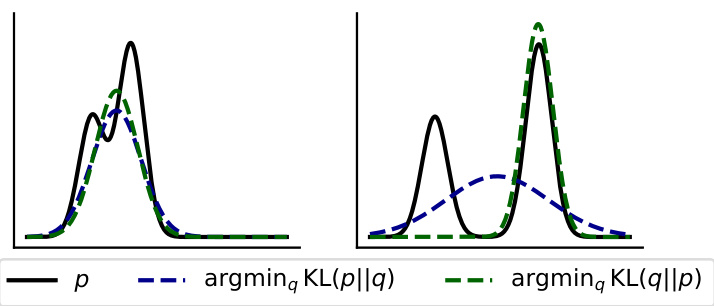

This section mainly concentrates on algorithms designed for distilling feature knowledge from white-box teacher LLMs, including distributions and hidden state features. These algorithms can be broadly categorized into two groups: those minimizing divergence in probability distributions and those aimed at enhancing the similarity of hidden states.

本节主要关注从白盒教师大语言模型中提取特征知识的算法,包括概率分布和隐藏状态特征。这些算法大致可分为两类:最小化概率分布差异的算法和提升隐藏状态相似度的算法。

Divergence. Divergence-based methods minimize divergence between the probability distributions of the teacher and student models, represented by a general divergence function $D$ :

散度。基于散度的方法通过最小化教师模型和学生模型概率分布之间的散度来实现知识迁移,这一过程由通用散度函数 $D$ 表示:

$$

\begin{array}{r}{L_{\mathrm{Div}}=\underset{x\sim\mathcal{X},y\sim\mathcal{Y}}{\mathbb{E}}\left[D\left(p_{T}(y|x),p_{S}(y|x)\right)\right],}\end{array}

$$

$$

\begin{array}{r}{L_{\mathrm{Div}}=\underset{x\sim\mathcal{X},y\sim\mathcal{Y}}{\mathbb{E}}\left[D\left(p_{T}(y|x),p_{S}(y|x)\right)\right],}\end{array}

$$