RETHINKING ATTENTION WITH PERFORMERS

重新思考注意力机制与Performers

Krzysztof Cho roman ski∗1, Valerii Li kho s her s to v∗2, David Dohan∗1, Xingyou Song∗1 Andreea Gane∗1, Tamas Sarlos∗1, Peter Hawkins∗1, Jared Davis∗3, Afroz Mohiuddin1 Lukasz Kaiser1, David Belanger1, Lucy Colwell1,2, Adrian Weller2,4 1Google 2 University of Cambridge 3DeepMind 4Alan Turing Institute

Krzysztof Choromanski∗1, Valerii Likhosherstov∗2, David Dohan∗1, Xingyou Song∗1, Andreea Gane∗1, Tamas Sarlos∗1, Peter Hawkins∗1, Jared Davis∗3, Afroz Mohiuddin1, Lukasz Kaiser1, David Belanger1, Lucy Colwell1,2, Adrian Weller2,4

1Google 2剑桥大学 3DeepMind 4艾伦·图灵研究所

ABSTRACT

摘要

We introduce Performers, Transformer architectures which can estimate regular (softmax) full-rank-attention Transformers with provable accuracy, but using only linear (as opposed to quadratic) space and time complexity, without relying on any priors such as sparsity or low-rankness. To approximate softmax attentionkernels, Performers use a novel Fast Attention Via positive Orthogonal Random features approach (FAVOR $+$ ), which may be of independent interest for scalable kernel methods. $\mathrm{FAVOR+}$ can also be used to efficiently model kernel iz able attention mechanisms beyond softmax. This representational power is crucial to accurately compare softmax with other kernels for the first time on large-scale tasks, beyond the reach of regular Transformers, and investigate optimal attention-kernels. Performers are linear architectures fully compatible with regular Transformers and with strong theoretical guarantees: unbiased or nearly-unbiased estimation of the attention matrix, uniform convergence and low estimation variance. We tested Performers on a rich set of tasks stretching from pixel-prediction through text models to protein sequence modeling. We demonstrate competitive results with other examined efficient sparse and dense attention methods, showcasing effectiveness of the novel attention-learning paradigm leveraged by Performers.

我们推出Performers,这是一种Transformer架构,能够以可证明的准确度估计常规(softmax)全秩注意力Transformer,同时仅使用线性(而非平方级)空间和时间复杂度,且不依赖任何先验假设(如稀疏性或低秩性)。为近似softmax注意力核,Performers采用了一种新颖的基于正交随机特征的快速注意力方法(FAVOR+),该方法对于可扩展核方法可能具有独立价值。FAVOR+还可用于高效建模超越softmax的其他可核化注意力机制。这种表征能力首次使得在大规模任务(超出常规Transformer处理范围)上准确比较softmax与其他核函数成为可能,并能探究最优注意力核。Performers作为线性架构,完全兼容常规Transformer并具备强大理论保证:注意力矩阵的无偏/近无偏估计、一致收敛性及低估计方差。我们在从像素预测到文本建模乃至蛋白质序列分析的多样化任务上测试了Performers,结果表明其与现有高效稀疏/稠密注意力方法相比具有竞争力,展现了Performers所采用的新型注意力学习范式的有效性。

1 INTRODUCTION AND RELATED WORK

1 引言与相关工作

Transformers (Vaswani et al., 2017; Dehghani et al., 2019) are powerful neural network architectures that have become SOTA in several areas of machine learning including natural language processing (NLP) (e.g. speech recognition (Luo et al., 2020)), neural machine translation (NMT) (Chen et al., 2018), document generation/sum mari z ation, time series prediction, generative modeling (e.g. image generation (Parmar et al., 2018)), music generation (Huang et al., 2019), and bioinformatics (Rives et al., 2019; Madani et al., 2020; Ingraham et al., 2019; Elnaggar et al., 2019; Du et al., 2020).

Transformer (Vaswani等人, 2017; Dehghani等人, 2019) 是一种强大的神经网络架构,已在包括自然语言处理 (NLP) (如语音识别 (Luo等人, 2020))、神经机器翻译 (NMT) (Chen等人, 2018)、文档生成/摘要、时间序列预测、生成式建模 (如图像生成 (Parmar等人, 2018))、音乐生成 (Huang等人, 2019) 和生物信息学 (Rives等人, 2019; Madani等人, 2020; Ingraham等人, 2019; Elnaggar等人, 2019; Du等人, 2020) 在内的多个机器学习领域成为SOTA (state-of-the-art)。

Transformers rely on a trainable attention mechanism that identifies complex dependencies between the elements of each input sequence. Unfortunately, the regular Transformer scales quadratically with the number of tokens $L$ in the input sequence, which is prohibitively expensive for large $L$ and precludes its usage in settings with limited computational resources even for moderate values of $L$ . Several solutions have been proposed to address this issue (Beltagy et al., 2020; Gulati et al., 2020; Chan et al., 2020; Child et al., 2019; Bello et al., 2019). Most approaches restrict the attention mechanism to attend to local neighborhoods (Parmar et al., 2018) or incorporate structural priors on attention such as sparsity (Child et al., 2019), pooling-based compression (Rae et al., 2020) clustering/binning/convolution techniques (e.g. (Roy et al., 2020) which applies $k$ -means clustering to learn dynamic sparse attention regions, or (Kitaev et al., 2020), where locality sensitive hashing is used to group together tokens of similar embeddings), sliding windows (Beltagy et al., 2020), or truncated targeting (Chelba et al., 2020). There is also a long line of research on using dense attention matrices, but defined by low-rank kernels substituting softmax (Katha ro poul os et al., 2020; Shen et al., 2018). Those methods critically rely on kernels admitting explicit representations as dot-products of finite positive-feature vectors.

Transformer依赖于一种可训练的注意力机制,能够识别每个输入序列元素之间的复杂依赖关系。然而,标准Transformer的计算复杂度会随着输入序列中token数量$L$呈二次方增长,这对于较大的$L$值来说成本过高,甚至在中等$L$值情况下也会因计算资源限制而无法使用。已有若干解决方案被提出以应对该问题 (Beltagy et al., 2020; Gulati et al., 2020; Chan et al., 2020; Child et al., 2019; Bello et al., 2019)。多数方法将注意力机制限制在局部邻域内 (Parmar et al., 2018),或引入结构化先验如稀疏性 (Child et al., 2019)、基于池化的压缩 (Rae et al., 2020)、聚类/分箱/卷积技术(例如 (Roy et al., 2020) 采用$k$-均值聚类学习动态稀疏注意力区域,(Kitaev et al., 2020) 使用局部敏感哈希对相似嵌入的token进行分组)、滑动窗口 (Beltagy et al., 2020) 或截断目标 (Chelba et al., 2020)。另有一系列研究采用稠密注意力矩阵,但通过低秩核函数替代softmax (Katharopoulos et al., 2020; Shen et al., 2018),这些方法的核心在于要求核函数能显式表示为有限正特征向量的点积。

The approaches above do not aim to approximate regular attention, but rather propose simpler and more tractable attention mechanisms, often by incorporating additional constraints (e.g. identical query and key sets as in (Kitaev et al., 2020)), or by trading regular with sparse attention using more layers (Child et al., 2019). Unfortunately, there is a lack of rigorous guarantees for the representation power produced by such methods, and sometimes the validity of sparsity patterns can only be verified empirically through trial and error by constructing special GPU operations (e.g. either writing $\mathrm{C}{+}{+}$ CUDA kernels (Child et al., 2019) or using TVMs (Beltagy et al., 2020)). Other techniques which aim to reduce Transformers’ space complexity include reversible residual layers allowing one-time activation storage in training (Kitaev et al., 2020) and shared attention weights (Xiao et al., 2019). These constraints may impede application to long-sequence problems, where approximations of the attention mechanism are not sufficient. Approximations based on truncated back-propagation (Dai et al., 2019) are also unable to capture long-distance correlations since the gradients are only propagated inside a localized window. Other methods propose biased estimation of regular attention but only in the non-causal setting and with large mean squared error (Wang et al., 2020).

上述方法并不旨在逼近常规注意力机制,而是通过引入额外约束(例如 (Kitaev et al., 2020) 中采用的查询与键集合相同策略)或通过增加层数来替换密集注意力为稀疏注意力 (Child et al., 2019) ,从而提出更简单、更易处理的注意力机制。遗憾的是,这类方法缺乏对表征能力的严格理论保证,有时甚至需要通过试错法构建特殊GPU操作(例如编写 $\mathrm{C}{+}{+}$ CUDA内核 (Child et al., 2019) 或使用TVM框架 (Beltagy et al., 2020))来经验性验证稀疏模式的有效性。其他降低Transformer空间复杂度的技术包括允许训练时单次激活存储的可逆残差层 (Kitaev et al., 2020) 和共享注意力权重 (Xiao et al., 2019) ,但这些约束可能阻碍其在长序列问题中的应用——此时仅靠注意力机制的近似并不充分。基于截断反向传播的近似方法 (Dai et al., 2019) 同样无法捕捉长距离依赖关系,因为梯度仅在局部窗口内传播。另有方法提出对常规注意力的有偏估计,但仅适用于非因果场景且存在较大均方误差 (Wang et al., 2020) 。

In response, we introduce the first Transformer architectures, Performers, capable of provably accurate and practical estimation of regular (softmax) full-rank attention, but of only linear space and time complexity and not relying on any priors such as sparsity or low-rankness. Performers use the Fast Attention Via positive Orthogonal Random features (FAVOR+) mechanism, leveraging new methods for approximating softmax and Gaussian kernels, which we propose. We believe these methods are of independent interest, contributing to the theory of scalable kernel methods. Consequently, Performers are the first linear architectures fully compatible (via small amounts of fine-tuning) with regular Transformers, providing strong theoretical guarantees: unbiased or nearly-unbiased estimation of the attention matrix, uniform convergence and lower variance of the approximation.

对此,我们推出了首个Transformer架构——Performers,能够以可证明的准确性和实用性估计常规(softmax)全秩注意力,同时仅具有线性空间和时间复杂度,且不依赖任何先验假设(如稀疏性或低秩性)。Performers采用基于正交随机特征的快速注意力机制(FAVOR+),利用我们提出的新方法来近似softmax和高斯核函数。我们认为这些方法本身具有独立价值,为可扩展核方法理论做出了贡献。因此,Performers是首个与常规Transformer完全兼容(通过少量微调)的线性架构,并提供强大的理论保证:注意力矩阵的无偏或近乎无偏估计、均匀收敛性以及更低的近似方差。

$\mathrm{FAVOR+}$ can be also applied to efficiently model other kernel iz able attention mechanisms beyond softmax. This representational power is crucial to accurately compare softmax with other kernels for the first time on large-scale tasks, that are beyond the reach of regular Transformers, and find for them optimal attention-kernels. $\mathrm{FAVOR+}$ can also be applied beyond the Transformer scope as a more scalable replacement for regular attention, which itself has a wide variety of uses in computer vision (Fu et al., 2019), reinforcement learning (Zambaldi et al., 2019), training with softmax cross entropy loss, and even combinatorial optimization (Vinyals et al., 2015).

$\mathrm{FAVOR+}$ 还可用于高效建模除 softmax 之外的其他可核化注意力机制。这种表征能力首次使得在大规模任务中准确比较 softmax 与其他核函数成为可能(这些任务超出了常规 Transformer 的处理范围),并为其找到最优注意力核。$\mathrm{FAVOR+}$ 的应用不仅限于 Transformer 领域,它还能作为常规注意力的可扩展替代方案——常规注意力本身在计算机视觉 (Fu et al., 2019)、强化学习 (Zambaldi et al., 2019)、使用 softmax 交叉熵损失进行训练,甚至组合优化 (Vinyals et al., 2015) 中都有广泛应用。

We test Performers on a rich set of tasks ranging from pixel-prediction through text models to protein sequence modeling. We demonstrate competitive results with other examined efficient sparse and dense attention methods, showcasing the effectiveness of the novel attention-learning paradigm leveraged by Performers. We emphasize that in principle, $\mathrm{FAVOR+}$ can also be combined with other techniques, such as reversible layers (Kitaev et al., 2020) or cluster-based attention (Roy et al., 2020).

我们在从像素预测到文本模型再到蛋白质序列建模的丰富任务集上测试了Performers。实验结果表明,与其他经过验证的高效稀疏和稠密注意力方法相比,Performers具有竞争力,展现了其采用的新型注意力学习范式的有效性。需要强调的是,FAVOR+ 原则上也可与其他技术结合使用,例如可逆层 (Kitaev et al., 2020) 或基于聚类的注意力机制 (Roy et al., 2020)。

2 FAVOR $^+$ MECHANISM & POSITIVE ORTHOGONAL RANDOM FEATURES

2 FAVOR$^+$机制与正交随机特征

Below we describe in detail the $\mathrm{FAVOR+}$ mechanism - the backbone of the Performer′s architecture. We introduce a new method for estimating softmax (and Gaussian) kernels with positive orthogonal random features which FAVOR $^{+}$ leverages for the robust and unbiased estimation of regular (softmax) attention and show how $\mathrm{FAVOR+}$ can be applied for other attention-kernels.

下面我们详细描述 $\mathrm{FAVOR+}$ 机制——Performer架构的核心。我们提出了一种使用正交互随机特征估计softmax(及高斯)核的新方法,FAVOR$^{+}$ 利用该方法对常规(softmax)注意力进行鲁棒且无偏的估计,并展示如何将 $\mathrm{FAVOR+}$ 应用于其他注意力核。

2.1 PRELIMINARIES - REGULAR ATTENTION MECHANISM

2.1 基础知识 - 常规注意力机制

Let $L$ be the size of an input sequence of tokens. Then regular dot-product attention (Vaswani et al., 2017) is a mapping which accepts matrices Q, K, $\mathbf{V}\in\mathbb{R}^{L\times d}$ as input where $d$ is the hidden dimension (dimension of the latent representation). Matrices $\mathbf{Q},\mathbf{K},\mathbf{V}$ are intermediate representations of the input and their rows can be interpreted as queries, keys and values of the continuous dictionary data structure respectively. Bidirectional (or non-directional (Devlin et al., 2018)) dot-product attention has the following form, where $\mathbf{A}\in\mathbb{R}^{L\times L}$ is the so-called attention matrix:

设 $L$ 为输入 token 序列的长度。常规的点积注意力机制 (Vaswani et al., 2017) 是一个映射函数,其输入为矩阵 Q、K 和 $\mathbf{V}\in\mathbb{R}^{L\times d}$,其中 $d$ 表示隐藏维度(潜在表征的维度)。矩阵 $\mathbf{Q}$、$\mathbf{K}$、$\mathbf{V}$ 是输入的中间表征,它们的行向量可分别解释为连续字典数据结构中的查询 (query)、键 (key) 和值 (value)。双向(或称无方向性 (Devlin et al., 2018))点积注意力的形式如下,其中 $\mathbf{A}\in\mathbb{R}^{L\times L}$ 即为所谓的注意力矩阵:

$$

\operatorname{Att}_ {\leftrightarrow}(\mathbf{Q},\mathbf{K},\mathbf{V})=\mathbf{D}^{-1}\mathbf{A}\mathbf{V},\quad\mathbf{A}=\exp(\mathbf{Q}\mathbf{K}^{\top}/{\sqrt{d}}),\quad\mathbf{D}=\operatorname{diag}(\mathbf{A}\mathbf{1}_{L}).

$$

$$

\operatorname{Att}_ {\leftrightarrow}(\mathbf{Q},\mathbf{K},\mathbf{V})=\mathbf{D}^{-1}\mathbf{A}\mathbf{V},\quad\mathbf{A}=\exp(\mathbf{Q}\mathbf{K}^{\top}/{\sqrt{d}}),\quad\mathbf{D}=\operatorname{diag}(\mathbf{A}\mathbf{1}_{L}).

$$

Here $\exp(\cdot)$ is applied element wise, ${\bf1}_{L}$ is the all-ones vector of length $L$ , and $\mathrm{diag}(\cdot)$ is a diagonal matrix with the input vector as the diagonal. Time and space complexity of computing (1) are $\bar{O(L^{2}d)}$ and $O(L^{2}+L d)$ respectively, because A has to be stored explicitly. Hence, in principle, dot-product attention of type (1) is incompatible with end-to-end processing of long sequences. Bidirectional attention is applied in encoder self-attention and encoder-decoder attention in $\mathrm{Seq2Seq}$ architectures.

这里 $\exp(\cdot)$ 是逐元素应用的, ${\bf1}_{L}$ 是长度为 $L$ 的全1向量, $\mathrm{diag}(\cdot)$ 是以输入向量为对角线的对角矩阵。计算式(1)的时间复杂度为 $\bar{O(L^{2}d)}$ ,空间复杂度为 $O(L^{2}+L d)$ ,因为需要显式存储矩阵A。因此,原则上这类点积注意力(1)无法实现长序列的端到端处理。双向注意力应用于 $\mathrm{Seq2Seq}$ 架构中的编码器自注意力层和编码器-解码器注意力层。

Another important type of attention is unidirectional dot-product attention which has the form:

另一种重要的注意力类型是单向点积注意力 (unidirectional dot-product attention),其形式为:

$$

\operatorname{Att}_ {\rightarrow}(\mathbf{Q},\mathbf{K},\mathbf{V})=\widetilde{\mathbf{D}}^{-1}\widetilde{\mathbf{A}}\mathbf{V},\quad\widetilde{\mathbf{A}}=\operatorname{tril}(\mathbf{A}),\quad\widetilde{\mathbf{D}}=\operatorname{diag}(\widetilde{\mathbf{A}}\mathbf{1}_{L}),

$$

$$

\operatorname{Att}_ {\rightarrow}(\mathbf{Q},\mathbf{K},\mathbf{V})=\widetilde{\mathbf{D}}^{-1}\widetilde{\mathbf{A}}\mathbf{V},\quad\widetilde{\mathbf{A}}=\operatorname{tril}(\mathbf{A}),\quad\widetilde{\mathbf{D}}=\operatorname{diag}(\widetilde{\mathbf{A}}\mathbf{1}_{L}),

$$

where $\operatorname{tril}(\cdot)$ returns the lower-triangular part of the argument matrix including the diagonal. As discussed in (Vaswani et al., 2017), unidirectional attention is used for auto regressive generative modelling, e.g. as self-attention in generative Transformers as well as the decoder part of Seq2Seq Transformers.

其中 $\operatorname{tril}(\cdot)$ 返回参数矩阵包含对角线的下三角部分。如 (Vaswani et al., 2017) 所述,单向注意力用于自回归生成建模,例如作为生成式 Transformer 中的自注意力机制以及 Seq2Seq Transformer 的解码器部分。

We will show that attention matrix A can be approximated up to any precision in time $O(L d^{2}\log(d))$ . For comparison, popular methods leveraging sparsity via Locality-Sensitive Hashing (LSH) techniques (Kitaev et al., 2020) have $O(L d^{2}\log\breve{L})$ time complexity. In the main body of the paper we will describe $\mathrm{FAVOR+}$ for bidirectional attention. Completely analogous results can be obtained for the unidirectional variant via the mechanism of prefix-sums (all details in the Appendix B.1).

我们将证明注意力矩阵A可在$O(L d^{2}\log(d))$时间内逼近任意精度。作为对比,基于局部敏感哈希(LSH)技术的流行稀疏化方法(Kitaev et al., 2020)具有$O(L d^{2}\log\breve{L})$时间复杂度。论文主体部分将针对双向注意力机制描述$\mathrm{FAVOR+}$方法,通过前缀和机制(附录B.1详述)可完全类推至单向注意力变体。

2.2 GENERALIZED KERNEL IZ ABLE ATTENTION

2.2 广义核注意力机制 (Generalized Kernelizable Attention)

$\mathrm{FAVOR+}$ works for attention blocks using matrices $\mathbf{A}\in\mathbb{R}^{L\times L}$ of the form $\mathbf{A}(i,j)=\mathrm{K}(\mathbf{q}_ {i}^{\top},\mathbf{k}_ {j}^{\top})$ , with ${\bf q}_ {i}/{\bf k}_ {j}$ standing for the $i^{t h}/j^{t h}$ query/key row-vector in ${\bf Q}/{\bf K}$ and kernel $\mathrm{K}:\mathbb{R}^{d}\times\mathbb{R}^{d}\rightarrow\mathbb{R}_ {+}$ defined for the (usually randomized) mapping: $\phi:\mathbb{R}^{d}\rightarrow\mathbb{R}_{+}^{r}$ (for some $r>0$ ) as:

$\mathrm{FAVOR+}$ 适用于使用矩阵 $\mathbf{A}\in\mathbb{R}^{L\times L}$ 的注意力块,其形式为 $\mathbf{A}(i,j)=\mathrm{K}(\mathbf{q}_ {i}^{\top},\mathbf{k}_ {j}^{\top})$。其中 ${\bf q}_ {i}/{\bf k}_ {j}$ 表示 ${\bf Q}/{\bf K}$ 中的第 $i^{t h}/j^{t h}$ 个查询/键行向量,核函数 $\mathrm{K}:\mathbb{R}^{d}\times\mathbb{R}^{d}\rightarrow\mathbb{R}_ {+}$ 定义为(通常随机化的)映射 $\phi:\mathbb{R}^{d}\rightarrow\mathbb{R}_{+}^{r}$(对于某些 $r>0$)的形式:

$$

\begin{array}{r}{\mathrm{K}(\mathbf{x},\mathbf{y})=\mathbb{E}[\phi(\mathbf{x})^{\top}\phi(\mathbf{y})].}\end{array}

$$

$$

\begin{array}{r}{\mathrm{K}(\mathbf{x},\mathbf{y})=\mathbb{E}[\phi(\mathbf{x})^{\top}\phi(\mathbf{y})].}\end{array}

$$

We call $\phi(\mathbf{u})$ a random feature map for $\mathbf{u}\in\mathbb{R}^{d}$ . For $\mathbf{Q}^{\prime},\mathbf{K}^{\prime}\in\mathbb{R}^{L\times r}$ with rows given as $\phi(\mathbf{q}_ {i}^{\top})^{\top}$ and $\phi(\mathbf{k}_{i}^{\top})^{\top}$ respectively, Equation 3 leads directly to the efficient attention mechanism of the form:

我们称 $\phi(\mathbf{u})$ 为 $\mathbf{u}\in\mathbb{R}^{d}$ 的随机特征映射。对于 $\mathbf{Q}^{\prime},\mathbf{K}^{\prime}\in\mathbb{R}^{L\times r}$,其行向量分别为 $\phi(\mathbf{q}_ {i}^{\top})^{\top}$ 和 $\phi(\mathbf{k}_{i}^{\top})^{\top}$,式3可直接导出以下形式的高效注意力机制:

$$

\widehat{\mathrm{Att}...}(\mathbf{Q},\mathbf{K},\mathbf{V})=\widehat{\mathbf{D}}^{-1}(\mathbf{Q}^{\prime}((\mathbf{K}^{\prime})^{\top}\mathbf{V})),\qquad\widehat{\mathbf{D}}=\mathrm{diag}(\mathbf{Q}^{\prime}((\mathbf{K}^{\prime})^{\top}\mathbf{1}_{L})).

$$

$$

\widehat{\mathrm{Att}...}(\mathbf{Q},\mathbf{K},\mathbf{V})=\widehat{\mathbf{D}}^{-1}(\mathbf{Q}^{\prime}((\mathbf{K}^{\prime})^{\top}\mathbf{V})),\qquad\widehat{\mathbf{D}}=\mathrm{diag}(\mathbf{Q}^{\prime}((\mathbf{K}^{\prime})^{\top}\mathbf{1}_{L})).

$$

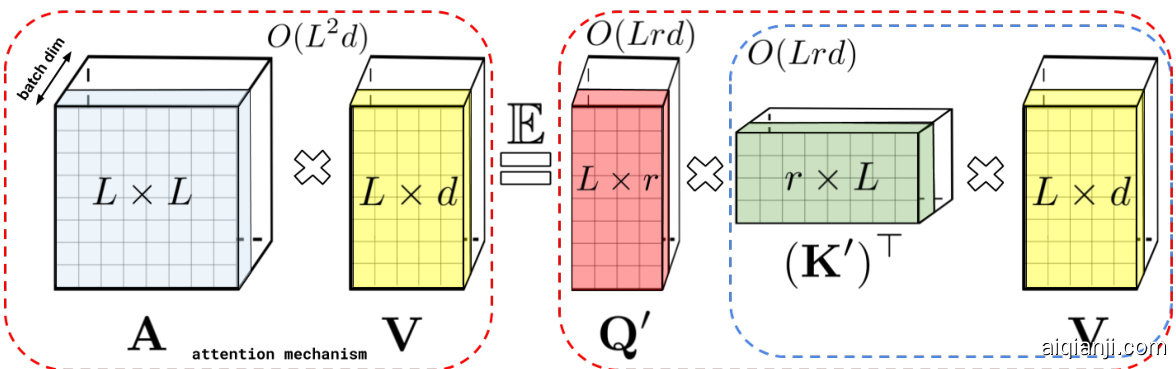

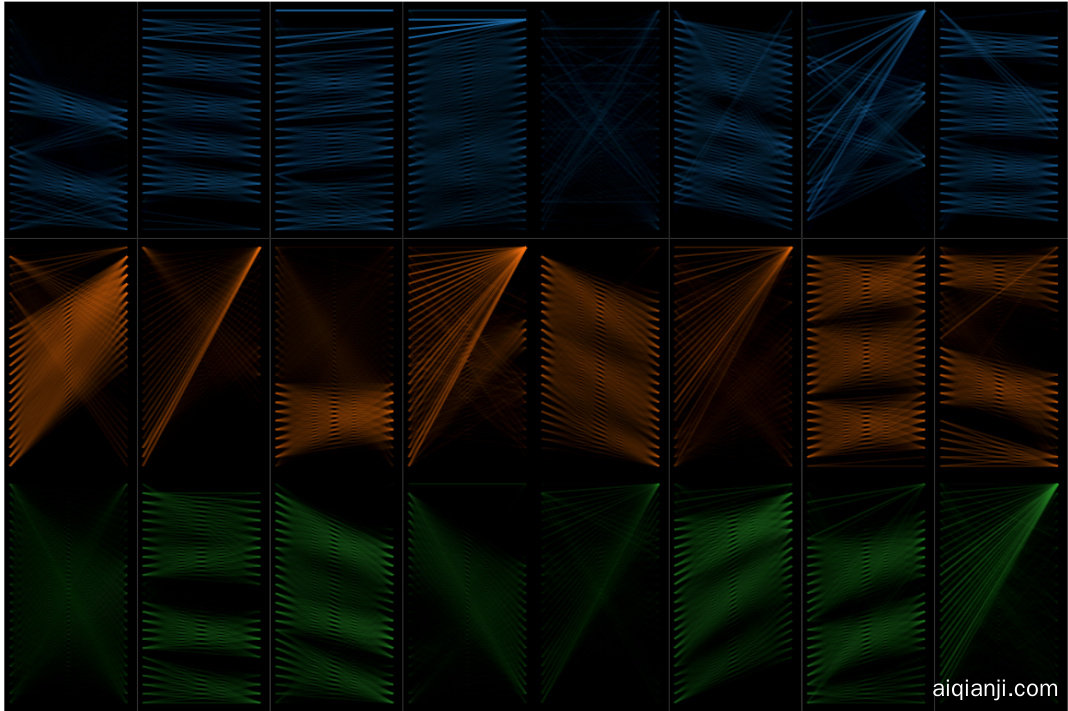

Here $\widehat{\mathrm{Att}_{\leftrightarrow}}$ stands for the approximate attention and brackets indicate the order of computations. It is easy to see that such a mechanism is characterized by space complexity $O(L r+L d+r d)$ and time complexity $O(L r d)$ as opposed to $O(L^{2}+L d)$ and $\dot{O}(\dot{L}^{2}d)$ of the regular attention (see also Fig. 1).

这里 $\widehat{\mathrm{Att}_{\leftrightarrow}}$ 表示近似注意力,括号表示计算顺序。容易看出,这种机制的空间复杂度为 $O(L r+L d+r d)$,时间复杂度为 $O(L r d)$,而常规注意力的空间复杂度为 $O(L^{2}+L d)$,时间复杂度为 $\dot{O}(\dot{L}^{2}d)$ (另见图 1)。

Figure 1: Approximation of the regular attention mechanism AV (before $\mathbf{D}^{-1}$ -re normalization) via (random) feature maps. Dashed-blocks indicate order of computation with corresponding time complexities attached.

图 1: 通过(随机)特征映射对常规注意力机制 AV (在 $\mathbf{D}^{-1}$ 归一化之前)的近似。虚线框表示计算顺序并标注相应时间复杂度。

The above scheme constitutes the FA-part of the $\mathrm{FAVOR+}$ mechanism. The remaining $\mathrm{OR}+$ part answers the following questions: (1) How expressive is the attention model defined in Equation 3, and in particular, can we use it in principle to approximate regular softmax attention ? (2) How do we implement it robustly in practice, and in particular, can we choose $r\ll L$ for $L\gg d$ to obtain desired space and time complexity gains? We answer these questions in the next sections.

上述方案构成了 $\mathrm{FAVOR+}$ 机制的 FA 部分。剩余的 $\mathrm{OR}+$ 部分则解答以下问题:(1) 公式3中定义的注意力模型表达能力如何?特别是,我们能否在原则上用它来近似常规的 softmax 注意力?(2) 如何在实践中稳健地实现它?特别是,对于 $L\gg d$ 的情况,我们能否选择 $r\ll L$ 以获得预期的空间和时间复杂度优势?我们将在接下来的章节中回答这些问题。

2.3 HOW TO AND HOW NOT TO APPROXIMATE SOFTMAX-KERNELS FOR ATTENTION

2.3 如何正确与错误地近似注意力机制中的Softmax核函数

It turns out that by taking $\phi$ of the following form for functions $f_{1},...,f_{l}:\mathbb{R}\to\mathbb{R}$ , function $g:\mathbb{R}^{d}\rightarrow\mathbb{R}$ and deterministic vectors $\omega_{i}$ or $\omega_{1},...,\omega_{m}\overset{\mathrm{iid}}{\sim}\mathcal{D}$ for some distribution $\mathcal{D}\in\mathcal{P}(\mathbb{R}^{d})$ :

结果表明,对于函数 $f_{1},...,f_{l}:\mathbb{R}\to\mathbb{R}$、函数 $g:\mathbb{R}^{d}\rightarrow\mathbb{R}$ 以及确定性向量 $\omega_{i}$ 或从某分布 $\mathcal{D}\in\mathcal{P}(\mathbb{R}^{d})$ 独立同分布抽取的 $\omega_{1},...,\omega_{m}\overset{\mathrm{iid}}{\sim}\mathcal{D}$,采用如下形式的 $\phi$ 时:

$$

\phi(\mathbf{x})=\frac{h(\mathbf{x})}{\sqrt{m}}(f_{1}(\omega_{1}^{\top}\mathbf{x}),...,f_{1}(\omega_{m}^{\top}\mathbf{x}),...,f_{l}(\omega_{1}^{\top}\mathbf{x}),...,f_{l}(\omega_{m}^{\top}\mathbf{x})),

$$

$$

\phi(\mathbf{x})=\frac{h(\mathbf{x})}{\sqrt{m}}(f_{1}(\omega_{1}^{\top}\mathbf{x}),...,f_{1}(\omega_{m}^{\top}\mathbf{x}),...,f_{l}(\omega_{1}^{\top}\mathbf{x}),...,f_{l}(\omega_{m}^{\top}\mathbf{x})),

$$

we can model most kernels used in practice. Furthermore, in most cases $\mathcal{D}$ is isotropic (i.e. with pdf function constant on a sphere), usually Gaussian. For example, by taking $h(\mathbf{x})=1$ , $l=1$ and $\mathbf{\bar{\mathcal{D}}}=\mathcal{N}(0,\mathbf{I}_ {d})$ we obtain estimators of the so-called PNG-kernels (Cho roman ski et al., 2017) (e.g. $f_{1}=\mathrm{sgn}$ corresponds to the angular kernel). Configurations: $h(\mathbf{x})=1$ , $l=2$ , $f_{1}=\sin$ , $f_{2}=\cos$ correspond to shift-invariant kernels, in particular $\mathcal{D}=\mathcal{N}(0,\mathbf{I}_ {d})$ leads to the Gaussian kernel $\mathrm{K_{gauss}}$ (Rahimi & Recht, 2007). The softmax-kernel which defines regular attention matrix $\mathbf{A}$ is given as:

我们可以对实践中使用的大多数核(kernel)进行建模。此外,在大多数情况下$\mathcal{D}$是各向同性的(即概率密度函数在球面上为常数),通常为高斯分布。例如,通过取$h(\mathbf{x})=1$、$l=1$和$\mathbf{\bar{\mathcal{D}}}=\mathcal{N}(0,\mathbf{I}_ {d})$,我们得到了所谓的PNG核(PNG-kernels)估计量(Cho roman ski et al., 2017)(例如$f_{1}=\mathrm{sgn}$对应于角度核(angular kernel))。配置:$h(\mathbf{x})=1$、$l=2$、$f_{1}=\sin$、$f_{2}=\cos$对应于平移不变核(shift-invariant kernels),特别是$\mathcal{D}=\mathcal{N}(0,\mathbf{I}_{d})$会得到高斯核$\mathrm{K_{gauss}}$(Rahimi & Recht, 2007)。定义常规注意力矩阵$\mathbf{A}$的softmax核表示为:

$$

\mathrm{SM}(\mathbf{x},\mathbf{y}){\stackrel{\mathrm{def}}{=}}\exp(\mathbf{x}^{\top}\mathbf{y}).

$$

$$

\mathrm{SM}(\mathbf{x},\mathbf{y}){\stackrel{\mathrm{def}}{=}}\exp(\mathbf{x}^{\top}\mathbf{y}).

$$

In the above, without loss of generality, we omit $\sqrt{d}$ -re normalization since we can equivalently re normalize input keys and queries. Since: $\begin{array}{r}{\mathrm{SM}(\mathbf{x},\mathbf{y})=\exp(\frac{|\mathbf{x}|^{2}}{2})\mathrm{K}_ {\mathrm{gauss}}(\mathbf{x},\mathbf{y})\exp(\frac{|\mathbf{y}|^{2}}{2})}\end{array}$ , based on what we have said, we obtain random feature map unbiased approximation of $\mathrm{SM}(\bar{{\bf x}},{\bf y})$ using trigonometric functions with: $\begin{array}{r}{h(\mathbf{x})=\exp(\frac{|\mathbf{x}|^{2}}{2})}\end{array}$ , $l=2$ , $f_{1}=\sin$ , $f_{2}=\cos$ . We call it $\widehat{\mathrm{SM}}_{m}^{\mathrm{trig}}(\mathbf{x},\mathbf{y})$ There is however a caveat there. The attention module from (1) constructs for each token, a convex combination of value-vectors with coefficients given as corresponding re normalized kernel scores. That is why kernels producing non-negative scores are used. Applying random feature maps with potentially negative dimension-values $(\sin{\it\Delta\phi}/\cos)$ leads to unstable behaviours, especially when kernel scores close to 0 (which is the case for many entries of $\mathbf{A}$ corresponding to low relevance tokens) are approximated by estimators with large variance in such regions. This results in abnormal behaviours, e.g. negative-diagonal-values re normalize rs $\mathbf{D}^{-1}$ , and consequently either completely prevents training or leads to sub-optimal models. We demonstrate empirically that this is what happens for SMm and provide detailed theoretical explanations showing that the variance of SMm is large trig trig asd approximated values tend to 0 (see: Section 3). This is one of the main reasons wdhy the robust random feature map mechanism for approximating regular softmax attention was never proposed.

在上述讨论中,不失一般性,我们省略了 $\sqrt{d}$ 的重新归一化,因为可以等价地对输入键和查询进行重新归一化。由于: $\begin{array}{r}{\mathrm{SM}(\mathbf{x},\mathbf{y})=\exp(\frac{|\mathbf{x}|^{2}}{2})\mathrm{K}_ {\mathrm{gauss}}(\mathbf{x},\mathbf{y})\exp(\frac{|\mathbf{y}|^{2}}{2})}\end{array}$ ,根据前述内容,我们利用三角函数获得了 $\mathrm{SM}(\bar{{\bf x}},{\bf y})$ 的无偏随机特征图近似,其中: $\begin{array}{r}{h(\mathbf{x})=\exp(\frac{|\mathbf{x}|^{2}}{2})}\end{array}$ , $l=2$ , $f_{1}=\sin$ , $f_{2}=\cos$ 。我们称之为 $\widehat{\mathrm{SM}}_{m}^{\mathrm{trig}}(\mathbf{x},\mathbf{y})$ 。

然而这里需要注意一个关键问题。式 (1) 中的注意力模块为每个 token 构建了一个值向量的凸组合,其系数由对应的重新归一化核分数给出。这就是为什么需要使用产生非负分数的核函数。应用可能具有负维度值的随机特征图 $(\sin{\it\Delta\phi}/\cos)$ 会导致不稳定行为,尤其是当接近 0 的核分数(对应于低相关性 token 的 $\mathbf{A}$ 中许多条目)被这些区域方差较大的估计器近似时。这会导致异常行为,例如负对角值的重新归一化矩阵 $\mathbf{D}^{-1}$ ,进而完全阻碍训练或导致次优模型。我们通过实验证明这正是 SMm 出现的问题,并提供详细的理论解释,表明 SMm 的方差在近似值趋近 0 时会显著增大(参见第 3 节)。这也是为什么从未有人提出用于近似常规 softmax 注意力的鲁棒随机特征图机制的主要原因之一。

We propose a robust mechanism in this paper. Furthermore, the variance of our new unbiased positive random feature map estimator tends to 0 as approximated values tend to 0 (see: Section 3).

本文提出了一种鲁棒机制。此外,随着近似值趋近于0,我们新的无偏正随机特征映射估计器的方差也趋近于0(详见:第3节)。

Lemma 1 (Positive Random Features (PRFs) for Softmax). For $\mathbf{x},\mathbf{y}\in\mathbb{R}^{d}$ , ${\bf z}={\bf x}+{\bf y}$ we have:

引理1 (Softmax的正随机特征(PRFs))。对于 $\mathbf{x},\mathbf{y}\in\mathbb{R}^{d}$ , ${\bf z}={\bf x}+{\bf y}$ ,我们有:

$\mathrm{SM}(\mathbf{x},\mathbf{y})=\mathbb{E}_ {\omega\sim\mathcal{N}(0,\mathbf{I}_ {d})}\Big[\mathrm{exp}\Big(\omega^{\top}\mathbf{x}-\frac{|\mathbf{x}|^{2}}{2}\Big)\mathrm{exp}\Big(\omega^{\top}\mathbf{y}-\frac{|\mathbf{y}|^{2}}{2}\Big)\Big]=\Lambda\mathbb{E}_ {\omega\sim\mathcal{N}(0,\mathbf{I}_ {d})}\mathrm{cosh}(\omega^{\top}\mathbf{z}),$ where $\begin{array}{r}{\Lambda=\exp(-\frac{|\mathbf{x}|^{2}+|\mathbf{y}|^{2}}{2})}\end{array}$ and cosh is hyperbolic cosine. Consequently, softmax-kernel admits $a$ positive random feature map unbiased approximation with $\begin{array}{r}{h(\mathbf{x})=\exp(-\frac{\left|\mathbf{x}\right|^{2}}{2})}\end{array}$ , $l=1$ , $f_{1}=\exp$ and $\mathcal{D}=\mathcal{N}(0,\mathbf{I}_ {d})$ or: $\begin{array}{r}{h(\mathbf{x})=\frac{1}{\sqrt{2}}\exp(-\frac{|\mathbf{x}|^{2}}{2})}\end{array}$ , $l=2$ , $f_{1}(u)=\exp(u)$ , $f_{2}(u)=\exp(-u)$ and the same $\mathcal{D}$ (the latter for further variance reduction). We call related estimators: $\widehat{\mathrm{SM}}_ {m}^{+}$ and $\widehat{\mathrm{SM}}_{m}^{\mathrm{hyp+}}$ .

$\mathrm{SM}(\mathbf{x},\mathbf{y})=\mathbb{E}_ {\omega\sim\mathcal{N}(0,\mathbf{I}_ {d})}\Big[\mathrm{exp}\Big(\omega^{\top}\mathbf{x}-\frac{|\mathbf{x}|^{2}}{2}\Big)\mathrm{exp}\Big(\omega^{\top}\mathbf{y}-\frac{|\mathbf{y}|^{2}}{2}\Big)\Big]=\Lambda\mathbb{E}_ {\omega\sim\mathcal{N}(0,\mathbf{I}_ {d})}\mathrm{cosh}(\omega^{\top}\mathbf{z}),$ 其中 $\begin{array}{r}{\Lambda=\exp(-\frac{|\mathbf{x}|^{2}+|\mathbf{y}|^{2}}{2})}\end{array}$ 且 cosh 为双曲余弦函数。因此,softmax核函数允许通过正随机特征映射进行无偏近似,其中 $\begin{array}{r}{h(\mathbf{x})=\exp(-\frac{\left|\mathbf{x}\right|^{2}}{2})}\end{array}$,$l=1$,$f_{1}=\exp$ 且 $\mathcal{D}=\mathcal{N}(0,\mathbf{I}_ {d})$;或采用 $\begin{array}{r}{h(\mathbf{x})=\frac{1}{\sqrt{2}}\exp(-\frac{|\mathbf{x}|^{2}}{2})}\end{array}$,$l=2$,$f_{1}(u)=\exp(u)$,$f_{2}(u)=\exp(-u)$ 及相同 $\mathcal{D}$(后者可进一步降低方差)。我们将相关估计器称为:$\widehat{\mathrm{SM}}_ {m}^{+}$ 和 $\widehat{\mathrm{SM}}_{m}^{\mathrm{hyp+}}$。

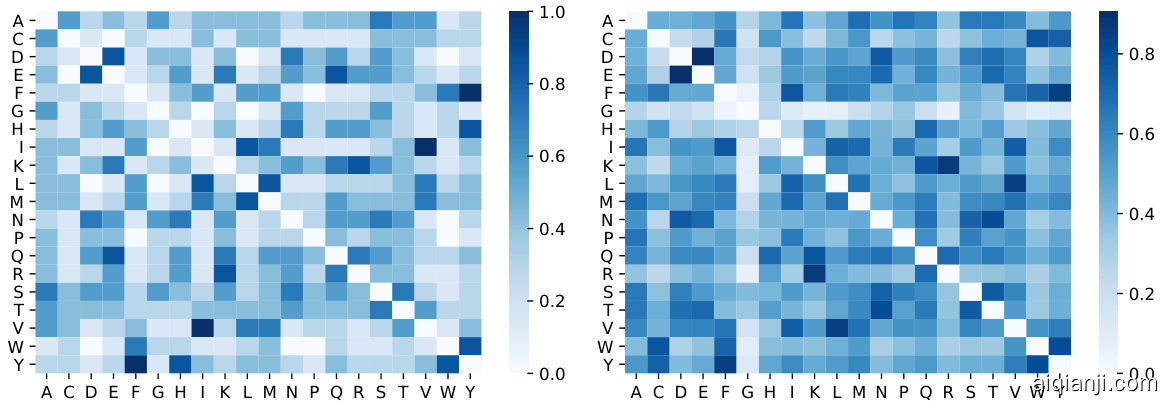

Figure 2: Left: Sym met rize d (around origin) utility function $r$ (defined as the ratio of the mean squared errors (MSEs) of estimators built on: trigonometric and positive random features) as a function of the angle $\phi$ (in radians) between input feature vectors and their lengths $l$ . Larger values indicate regions of $(\phi,l)$ -space with better performance of positive random features. We see that for critical regions with $\phi$ large enough (small enough softmax-kernel values) our method is arbitrarily more accurate than trigonometric random features. Plot presented for domain $[-\pi,\pi]\times[-2,2]$ . Right: The slice of function $r$ for fixed $l=1$ and varying angle $\phi$ . Right Upper Corner: Comparison of the MSEs of both the estimators in a low softmax-kernel value region.

图 2: 左图: 对称化(关于原点)的效用函数 $r$ (定义为基于三角随机特征和正随机特征构建的估计器均方误差(MSE)之比)随输入特征向量间夹角 $\phi$ (弧度制)及其长度 $l$ 变化的关系。较大值表示 $(\phi,l)$ 空间中正随机特征性能更优的区域。可见在 $\phi$ 足够大(softmax核函数值足够小)的关键区域,我们的方法比三角随机特征精度有显著提升。绘图展示区间为 $[-\pi,\pi]\times[-2,2]$。右图: 固定 $l=1$ 时函数 $r$ 随角度 $\phi$ 变化的切片。右上角: 在softmax核函数值较低区域两种估计器MSE的对比。

In Fig. 2 we visualize the advantages of positive versus standard trigonometric random features. In critical regions, where kernel values are small and need careful approximation, our method outperforms its counterpart. In Section 4 we further confirm our method’s advantages empirically, using positive features to efficiently train softmax-based linear Transformers. If we replace in (7) $\omega$ with $\sqrt{d}\frac{\omega}{|\omega|}$ , we obtain the so-called regularized softmax-kernel SMREG which we can approximate in a similar manner, simply changing $\mathcal{D}=\mathcal{N}(0,\mathbf{I}_ {d})$ to ${\mathcal{D}}={\mathrm{Unif}}({\sqrt{d}}S^{d-1})$ , a distribution corresponding to Haar measure on the sphere of radius $\sqrt{d}$ in $\mathbb{R}^{d}$ , obtaining estimator $\widehat{\mathrm{SMREG}_{m}^{+}}$ . As we show in Section 3, such random features can also be used to accurately approximate regular softmax-kernel.

在图 2 中,我们展示了正三角随机特征相对于标准三角随机特征的优势。在核值较小且需要精确逼近的关键区域,我们的方法表现优于对比方案。在第 4 节中,我们通过实验进一步验证了该方法的优势,使用正特征高效训练基于 softmax 的线性 Transformer。若将式 (7) 中的 $\omega$ 替换为 $\sqrt{d}\frac{\omega}{|\omega|}$ ,则可得到所谓的正则化 softmax 核 SMREG,我们可用类似方式进行逼近,只需将 $\mathcal{D}=\mathcal{N}(0,\mathbf{I}_ {d})$ 改为 ${\mathcal{D}}={\mathrm{Unif}}({\sqrt{d}}S^{d-1})$ (该分布对应于 $\mathbb{R}^{d}$ 中半径为 $\sqrt{d}$ 的球面上的哈尔测度),从而获得估计量 $\widehat{\mathrm{SMREG}_{m}^{+}}$ 。如第 3 节所示,此类随机特征也可用于精确逼近常规 softmax 核。

2.4 ORTHOGONAL RANDOM FEATURES (ORFS)

2.4 正交随机特征 (ORFs)

The above constitutes the $\mathsf{R}+$ part of the $\mathrm{FAVOR+}$ method. It remains to explain the O-part. To further reduce the variance of the estimator (so that we can use an even smaller number of random features $r$ ), we entangle different random samples $\omega_{1},...,\omega_{m}$ to be exactly orthogonal. This can be done while maintaining unbiased ness whenever isotropic distributions $\mathcal{D}$ are used (i.e. in particular in all kernels we considered so far) by the standard Gram-Schmidt orthogonal iz ation procedure (see (Cho roman ski et al., 2017) for details). ORFs is a well-known method, yet it turns out that it works particularly well with our introduced PRFs for softmax. This leads to the first theoretical results showing that ORFs can be applied to reduce the variance of softmax/Gaussian kernel estimators for any dimensionality $d$ rather than just asymptotically for large enough $d$ (as is the case for previous methods, see: next section) and leads to the first exponentially small bounds on large deviations probabilities that are strictly smaller than for non-orthogonal methods. Positivity of random features plays a key role in these bounds. The ORF mechanism requires $m\leq d$ , but this will be the case in all our experiments. The pseudocode of the entire $\mathrm{FAVOR+}$ algorithm is given in Appendix B.

上述内容构成了 $\mathrm{FAVOR+}$ 方法的 $\mathsf{R}+$ 部分。接下来需要解释O部分。为了进一步降低估计器的方差(从而可以使用更少的随机特征 $r$),我们将不同的随机样本 $\omega_{1},...,\omega_{m}$ 进行正交化处理。在使用各向同性分布 $\mathcal{D}$ 时(即特别是目前为止我们考虑的所有核函数),通过标准的Gram-Schmidt正交化程序(详见 (Cho roman ski et al., 2017)),可以在保持无偏性的同时实现这一点。ORFs是一种众所周知的方法,但事实证明它特别适用于我们提出的softmax PRFs。这首次从理论上证明,ORFs可以应用于降低任何维度 $d$ 下softmax/高斯核估计器的方差,而不仅仅是在足够大的 $d$ 时渐进有效(如之前的方法所示,见下一节),并且首次得到了严格小于非正交方法的指数级小的大偏差概率界。随机特征的正定性在这些界中起着关键作用。ORF机制要求 $m\leq d$,但这在我们所有的实验中都会满足。完整的 $\mathrm{FAVOR+}$ 算法伪代码见附录B。

Our theoretical results are tightly aligned with experiments. We show in Section 4 that PRFs $^{+}$ ORFs drastically improve accuracy of the approximation of the attention matrix and enable us to reduce $r$ which results in an accurate as well as space and time efficient mechanism which we call FAVOR+.

我们的理论结果与实验高度吻合。在第4节中,我们将展示PRFs$^{+}$ORFs能显著提升注意力矩阵近似的准确度,并使我们能够减小$r$,从而获得一种既精确又节省空间和时间的机制,我们称之为FAVOR+。

3 THEORETICAL RESULTS

3 理论结果

We present here the theory of positive orthogonal random features for softmax-kernel estimation. All these results can be applied also to the Gaussian kernel, since as explained in the previous section, one can be obtained from the other by re normalization (see: Section 2.3). All proofs and additional more general theoretical results with a discussion are given in the Appendix.

我们在此提出用于softmax核估计的正交随机特征理论。所有这些结果同样适用于高斯核 (Gaussian kernel) ,因为正如前文所述,通过重新归一化可以从一个核获得另一个核 (参见:第2.3节) 。所有证明及更一般的理论结果与讨论详见附录。

Lemma 2 (positive (hyperbolic) versus trigonometric random features). The following is true:

引理 2 (正(双曲)与三角随机特征). 以下结论成立:

$$

\begin{array}{r l r}&{}&{\mathrm{MSE}(\widehat{\mathrm{SM}}_ {m}^{\mathrm{trig}}({\bf x},{\bf y}))=\displaystyle\frac{1}{2m}\exp(|{\bf x}+{\bf y}|^{2})\mathrm{SM}^{-2}({\bf x},{\bf y})(1-\exp(-|{\bf x}-{\bf y}|^{2}))^{2},}\ &{}&{\mathrm{MSE}(\widehat{\mathrm{SM}}_ {m}^{+}({\bf x},{\bf y}))=\displaystyle\frac{1}{m}\exp(|{\bf x}+{\bf y}|^{2})\mathrm{SM}^{2}({\bf x},{\bf y})(1-\exp(-|{\bf x}+{\bf y}|^{2})),}\ &{}&{\mathrm{MSE}(\widehat{\mathrm{SM}}_ {m}^{\mathrm{byp}+}({\bf x},{\bf y}))=\displaystyle\frac{1}{2}(1-\exp(-|{\bf x}+{\bf y}|^{2}))\mathrm{MSE}(\widehat{\mathrm{SM}}_{m}^{+}({\bf x},{\bf y})),}\end{array}

$$

$$

\begin{array}{r l r}&{}&{\mathrm{MSE}(\widehat{\mathrm{SM}}_ {m}^{\mathrm{trig}}({\bf x},{\bf y}))=\displaystyle\frac{1}{2m}\exp(|{\bf x}+{\bf y}|^{2})\mathrm{SM}^{-2}({\bf x},{\bf y})(1-\exp(-|{\bf x}-{\bf y}|^{2}))^{2},}\ &{}&{\mathrm{MSE}(\widehat{\mathrm{SM}}_ {m}^{+}({\bf x},{\bf y}))=\displaystyle\frac{1}{m}\exp(|{\bf x}+{\bf y}|^{2})\mathrm{SM}^{2}({\bf x},{\bf y})(1-\exp(-|{\bf x}+{\bf y}|^{2})),}\ &{}&{\mathrm{MSE}(\widehat{\mathrm{SM}}_ {m}^{\mathrm{byp}+}({\bf x},{\bf y}))=\displaystyle\frac{1}{2}(1-\exp(-|{\bf x}+{\bf y}|^{2}))\mathrm{MSE}(\widehat{\mathrm{SM}}_{m}^{+}({\bf x},{\bf y})),}\end{array}

$$

for independent random samples $\omega_{i}$ , and where MSE stands for the mean squared error.

对于独立随机样本 $\omega_{i}$,其中 MSE 表示均方误差。

Thus, for $\mathrm{SM}(\mathbf{x},\mathbf{y})\rightarrow0$ we have: $\mathrm{MSE}(\widehat{\mathrm{SM}}_ {m}^{\mathrm{trig}}({\bf x},{\bf y}))\rightarrow\infty$ and $\mathrm{MSE}(\widehat{\mathrm{SM}}_ {m}^{+}({\bf x},{\bf y}))\rightarrow0$ . Furthermore, the hyperbolic estimator provides a ddd it ional accuracy improve med nts that are strictly better than those from $\widehat{\mathrm{SM}}_{2m}^{+}(\mathbf{x},\mathbf{y})$ with twice as many random features. The next result shows that the regularized soft madx-kernel is in practice an accurate proxy of the softmax-kernel in attention.

因此,当 $\mathrm{SM}(\mathbf{x},\mathbf{y})\rightarrow0$ 时,我们有:$\mathrm{MSE}(\widehat{\mathrm{SM}}_ {m}^{\mathrm{trig}}({\bf x},{\bf y}))\rightarrow\infty$ 且 $\mathrm{MSE}(\widehat{\mathrm{SM}}_ {m}^{+}({\bf x},{\bf y}))\rightarrow0$。此外,双曲估计器提供了额外的精度提升,其表现严格优于使用两倍随机特征的 $\widehat{\mathrm{SM}}_{2m}^{+}(\mathbf{x},\mathbf{y})$。接下来的结果表明,正则化后的softmax核实际上是注意力机制中softmax核的精确代理。

Theorem 1 (regularized versus softmax-kernel). Assume that the $L_{\infty}$ -norm of the attention matrix for the softmax-kernel satisfies: $|\mathbf{A}|_{\infty}\leq C$ for some constant $C~\geq~1$ . Denote by $\mathbf{A}^{\mathrm{reg}}$ the corresponding attention matrix for the regularized softmax-kernel. The following holds:

定理1 (正则化与softmax核). 假设softmax核的注意力矩阵满足 $L_{\infty}$ 范数条件: $|\mathbf{A}|_{\infty}\leq C$ (其中常数 $C~\geq~1$). 记 $\mathbf{A}^{\mathrm{reg}}$ 为正则化softmax核对应的注意力矩阵, 则有:

$$

\operatorname*{inf}_ {i,j}\frac{\mathbf{A}^{\mathrm{reg}}(i,j)}{\mathbf{A}(i,j)}\geq1-\frac{2}{d^{\frac{1}{3}}}+o\left(\frac{1}{d^{\frac{1}{3}}}\right),a n d\operatorname*{sup}_{i,j}\frac{\mathbf{A}^{\mathrm{reg}}(i,j)}{\mathbf{A}(i,j)}\leq1.

$$

$$

\operatorname*{inf}_ {i,j}\frac{\mathbf{A}^{\mathrm{reg}}(i,j)}{\mathbf{A}(i,j)}\geq1-\frac{2}{d^{\frac{1}{3}}}+o\left(\frac{1}{d^{\frac{1}{3}}}\right),a n d\operatorname*{sup}_{i,j}\frac{\mathbf{A}^{\mathrm{reg}}(i,j)}{\mathbf{A}(i,j)}\leq1.

$$

Furthermore, the latter holds for $d\geq2$ even if the $L_{\infty}$ -norm condition is not satisfied, i.e. the regularized softmax-kernel is a universal lower bound for the softmax-kernel.

此外,即使不满足 $L_{\infty}$ 范数条件,后者在 $d\geq2$ 时仍然成立,即正则化 softmax-kernel 是 softmax-kernel 的通用下界。

Consequently, positive random features for SMREG can be used to approximate the softmax-kernel. Our next result shows that orthogonality provably reduces mean squared error of the estimation with positive random features for any dimensionality $d>0$ and we explicitly provide the gap.

因此,SMREG的正随机特征可用于近似softmax核函数。我们的下一个结果表明,对于任意维度$d>0$,正交性可证明降低正随机特征估计的均方误差,并明确给出了误差差距。

Theorem 2. If $\widehat{\mathrm{SM}}_ {m}^{\mathrm{ort+}}(\mathbf{x},\mathbf{y})$ stands for the modification of $\widehat{\mathrm{SM}}_{m}^{+}(\mathbf{x},\mathbf{y})$ with orthogonal random features (and th usd for $m\leq d,$ ), then the following holds for an y $d>0$ :

定理 2. 若 $\widehat{\mathrm{SM}}_ {m}^{\mathrm{ort+}}(\mathbf{x},\mathbf{y})$ 表示采用正交随机特征改进后的 $\widehat{\mathrm{SM}}_{m}^{+}(\mathbf{x},\mathbf{y})$ (因此适用于 $m\leq d$ ), 则对于任意 $d>0$ 有:

$$

\mathrm{dSE}(\widehat{\mathrm{SM}}_ {m}^{\mathrm{ort+}}(\mathbf{x},\mathbf{y}))\leq\mathrm{MSE}(\widehat{\mathrm{SM}}_{m}^{+}(\mathbf{x},\mathbf{y}))-\frac{2(m-1)}{m(d+2)}\left(\mathrm{SM}(\mathbf{x},\mathbf{y})-\exp\left(-\frac{|\mathbf{x}|^{2}+|\mathbf{y}|^{2}}{2}\right)\right)^{2}.

$$

$$

\mathrm{dSE}(\widehat{\mathrm{SM}}_ {m}^{\mathrm{ort+}}(\mathbf{x},\mathbf{y}))\leq\mathrm{MSE}(\widehat{\mathrm{SM}}_{m}^{+}(\mathbf{x},\mathbf{y}))-\frac{2(m-1)}{m(d+2)}\left(\mathrm{SM}(\mathbf{x},\mathbf{y})-\exp\left(-\frac{|\mathbf{x}|^{2}+|\mathbf{y}|^{2}}{2}\right)\right)^{2}.

$$

Furthermore, completely analogous result holds for the regularized softmax-kernel SMREG.

此外,完全类似的结果也适用于正则化 softmax 核 (SMREG)。

For the regularized softmax-kernel, orthogonal features provide additional concentration results - the first exponentially small bounds for probabilities of estimators’ tails that are strictly better than for non-orthogonal variants for every $d>0$ . Our next result enables us to explicitly estimate the gap.

对于正则化的 softmax 核函数,正交特征提供了额外的集中性结果——这是首个关于估计量尾概率的指数级小界,且对于所有 $d>0$ 都严格优于非正交变体。我们的下一个结果使我们能够明确估计这一差距。

Theorem 3. Let x $\cdot,\mathbf{y}\in\mathbb{R}^{d}$ . The following holds for any $a>\mathrm{SMREG}(\mathbf{x},\mathbf{y})$ , $\theta>0$ and $m\leq d$ :

定理3. 设x $\cdot,\mathbf{y}\in\mathbb{R}^{d}$。对于任意$a>\mathrm{SMREG}(\mathbf{x},\mathbf{y})$、$\theta>0$和$m\leq d$,以下不等式成立:

$$

\begin{array}{r l}&{\widehat{\mathbb{P}[\widehat{\mathrm{SMREG}}_ {m}^{+}(\mathbf{x},\mathbf{y})>a]}\le\exp(-\theta m a)M_{Z}(\theta)^{m},\quad\widehat{\mathbb{P}[\widehat{\mathrm{SMREG}}_ {m}^{\mathrm{ort+}}(\mathbf{x},\mathbf{y})>a]}}\ &{\le\exp(-\theta m a)\bigg(M_{Z}(\theta)^{m}-\exp\left(-\frac{m}{2}(|\mathbf{x}|^{2}+|\mathbf{y}|^{2})\right)\frac{\theta^{4}m(m-1)}{4(d+2)}|\mathbf{x}+\mathbf{y}|^{4}\bigg)}\end{array}

$$

$$

\begin{array}{r l}&{\widehat{\mathbb{P}[\widehat{\mathrm{SMREG}}_ {m}^{+}(\mathbf{x},\mathbf{y})>a]}\le\exp(-\theta m a)M_{Z}(\theta)^{m},\quad\widehat{\mathbb{P}[\widehat{\mathrm{SMREG}}_ {m}^{\mathrm{ort+}}(\mathbf{x},\mathbf{y})>a]}}\ &{\le\exp(-\theta m a)\bigg(M_{Z}(\theta)^{m}-\exp\left(-\frac{m}{2}(|\mathbf{x}|^{2}+|\mathbf{y}|^{2})\right)\frac{\theta^{4}m(m-1)}{4(d+2)}|\mathbf{x}+\mathbf{y}|^{4}\bigg)}\end{array}

$$

where $\widehat{\mathrm{SMREG}}_ {m}^{\mathrm{ort+}}(\mathbf{x},\mathbf{y})$ stands for the modification of $\widehat{\mathrm{SMREG}_ {m}^{+}(\mathbf{x},\mathbf{y})}$ with ORFs, $\boldsymbol{X}=$ $\begin{array}{r}{\Lambda\exp(\sqrt{d}\frac{\omega^{\top}}{|\omega|_ {2}}({\bf x}+{\bf y}))}\end{array}$ , $\omega\sim\mathcal{N}(0,\mathbf{I}_ {d})$ , $\Lambda$ is as in Lemma $I$ and $M_{Z}$ is the moment generating function of $Z$ .

其中 $\widehat{\mathrm{SMREG}}_ {m}^{\mathrm{ort+}}(\mathbf{x},\mathbf{y})$ 表示用 ORFs 改进的 $\widehat{\mathrm{SMREG}_ {m}^{+}(\mathbf{x},\mathbf{y})}$,$\boldsymbol{X}=$ $\begin{array}{r}{\Lambda\exp(\sqrt{d}\frac{\omega^{\top}}{|\omega|_ {2}}({\bf x}+{\bf y}))}\end{array}$,$\omega\sim\mathcal{N}(0,\mathbf{I}_ {d})$,$\Lambda$ 如引理 $I$ 所述,$M_{Z}$ 是 $Z$ 的矩母函数。

We see that ORFs provide exponentially small and sharper bounds for critical regions where the softmax-kernel is small. Below we show that even for the $\mathrm{SM}^{\mathrm{trig}}$ mechanism with ORFs, it suffices to take $m=\Theta(d\log(d))$ random projections to accurately approximate the attention matrix (thus if not attention re normalization, PRFs would not be needed). In general, $m$ depends on the dimensionality $d$ of the embeddings, radius $R$ of the ball where all queries/keys live and precision parameter $\epsilon$ (see: Appendix F.6 for additional discussion), but does not depend on input sequence length $L$ .

我们发现,ORFs(正交随机特征)为softmax核函数较小的关键区域提供了指数级更小且更尖锐的边界。下文将证明,即使对于采用ORFs的$\mathrm{SM}^{\mathrm{trig}}$机制,也只需$m=\Theta(d\log(d))$次随机投影即可精确逼近注意力矩阵(因此若非注意力重归一化,PRFs将不再必需)。一般而言,$m$取决于嵌入维度$d$、所有查询/键所在球的半径$R$以及精度参数$\epsilon$(详见附录F.6的进一步讨论),但与输入序列长度$L$无关。

Theorem 4 (uniform convergence for attention approximation). Assume that $L_{2}$ -norms of queries/keys are upper-bounded by $R>0$ . Define $l~=~R d^{-\frac{1}{4}}$ and take $\begin{array}{r}{h^{\ast}=\exp(\frac{l^{2}}{2})}\end{array}$ . Then for any $\epsilon>0$ , $\begin{array}{r}{\delta=\frac{\epsilon}{(h^{*})^{2}}}\end{array}$ and the number of random projections $\begin{array}{r}{m=\Theta(\frac{d}{\delta^{2}}\log(\frac{4d^{\frac{3}{4}}R}{\delta}))}\end{array}$ the following holds for the attention approximation mechanism leveraging estimators $\widehat{\mathrm{SM}}^{\mathrm{trig}}$ with ORFs: $|\widehat{\mathbf A}-\mathbf A|_{\infty}\le\epsilon$ with any constant probability, where $\widehat{\bf A}$ approximates the attent iodn matrix A.

定理4 (注意力近似的一致收敛性)。假设查询/键的$L_{2}$-范数上界为$R>0$。定义$l~=~R d^{-\frac{1}{4}}$并取$\begin{array}{r}{h^{\ast}=\exp(\frac{l^{2}}{2})}\end{array}$。则对于任意$\epsilon>0$,$\begin{array}{r}{\delta=\frac{\epsilon}{(h^{*})^{2}}}\end{array}$和随机投影数量$\begin{array}{r}{m=\Theta(\frac{d}{\delta^{2}}\log(\frac{4d^{\frac{3}{4}}R}{\delta}))}\end{array}$,以下结论对采用ORF估计器$\widehat{\mathrm{SM}}^{\mathrm{trig}}$的注意力近似机制成立:以任意常数概率有$|\widehat{\mathbf A}-\mathbf A|_{\infty}\le\epsilon$,其中$\widehat{\bf A}$是注意力矩阵A的近似。

4 EXPERIMENTS

4 实验

We implemented our setup on top of pre-existing Transformer training code in Jax (Frostig et al., 2018) optimized with just-in-time $(\ j a x\cdot\ j\mathtt{i t})$ compilation, and complement our theory with empirical evidence to demonstrate the practicality of $\mathrm{FAVOR+}$ in multiple settings. Unless explicitly stated, a Performer replaces only the attention component with our method, while all other components are exactly the same as for the regular Transformer. For shorthand notation, we denote unidirectional/causal modelling as (U) and bidirectional/masked language modelling as (B).

我们在Jax (Frostig等人,2018) 现有的Transformer训练代码基础上实现了实验配置,通过即时编译 $(jax \cdot jit)$ 进行优化,并用实证数据补充理论分析,以证明FAVOR+在多种场景下的实用性。除非特别说明,Performer仅用我们的方法替换注意力模块,其余组件与常规Transformer完全相同。简写标记中,单向/因果建模记为(U),双向/掩码语言建模记为(B)。

In terms of baselines, we use other Transformer models for comparison, although some of them are restricted to only one case - e.g. Reformer (Kitaev et al., 2020) is only (U), and Linformer (Wang et al., 2020) is only (B). Furthermore, we use PG-19 (Rae et al., 2020) as an alternative (B) pre training benchmark, as it is made for long-length sequence training compared to the (now publicly unavailable) BookCorpus (Zhu et al., 2015) $^+$ Wikipedia dataset used in BERT (Devlin et al., 2018) and Linformer. All model and token iz ation hyper parameters are shown in Appendix A.

在基线方面,我们使用其他Transformer模型进行比较,尽管其中一些仅限于单一场景——例如Reformer (Kitaev et al., 2020)仅支持(U)情况,Linformer (Wang et al., 2020)仅支持(B)情况。此外,我们采用PG-19 (Rae et al., 2020)作为替代的(B)预训练基准,因为相比BERT (Devlin et al., 2018)和Linformer使用的(现已公开不可用的)BookCorpus (Zhu et al., 2015) $^+$ Wikipedia数据集,PG-19专为长序列训练设计。所有模型和token化的超参数详见附录A。

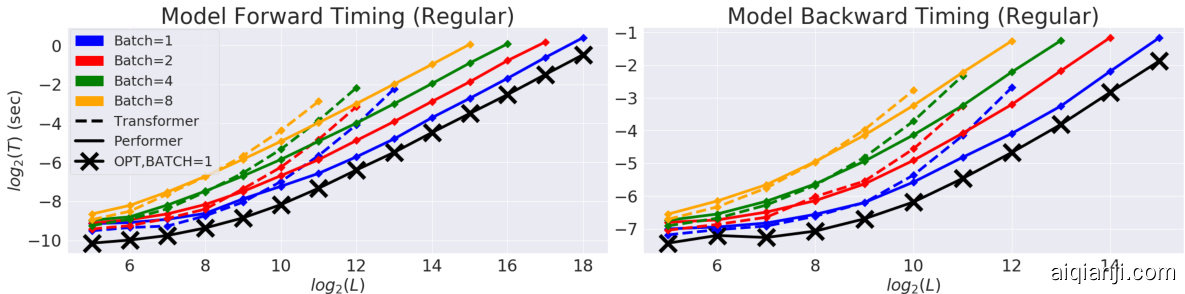

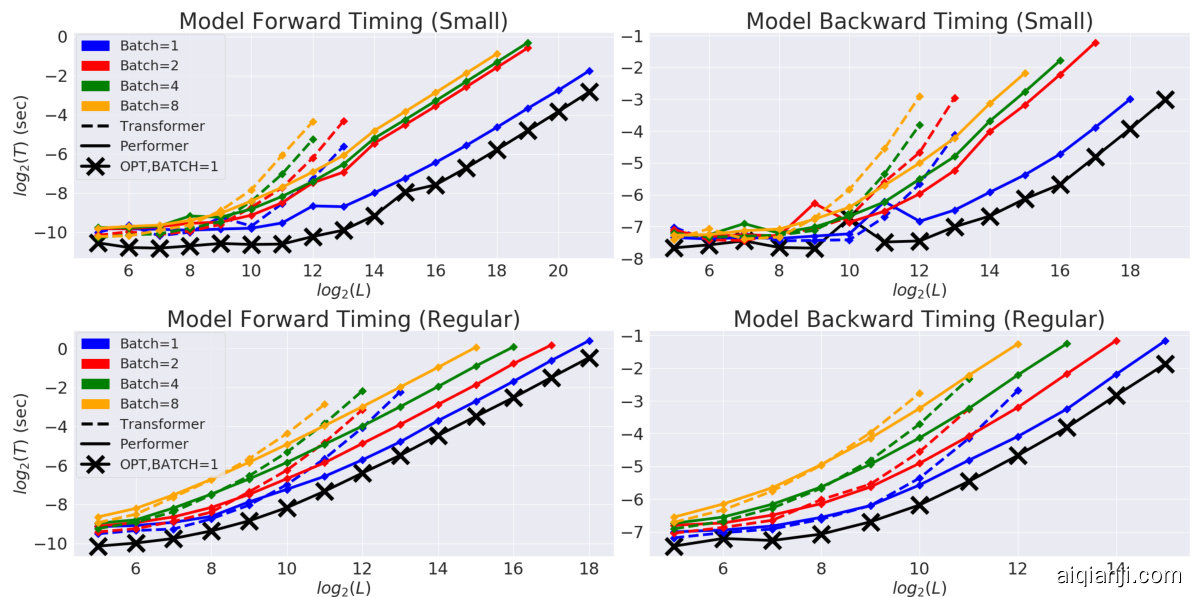

Figure 3: Comparison of Transformer and Performer in terms of forward and backward pass speed and maximum $L$ allowed. "X" (OPT) denotes the maximum possible speedup achievable, when attention simply returns the $\mathbf{V}$ -matrix. Plots shown up to when a model produces an out of memory error on a V100 GPU with 16GB. Vocabulary size used was 256. Best in color.

图 3: Transformer 和 Performer 在前向传播与反向传播速度及最大允许序列长度 $L$ 上的对比。 "X" (OPT) 表示注意力机制直接返回 $\mathbf{V}$ 矩阵时可达到的理论最大加速比。实验在显存为16GB的V100 GPU上进行,当模型触发内存错误时停止测试。词表大小设置为256。建议查看彩色版本。

4.1 COMPUTATIONAL COSTS

4.1 计算成本

We compared speed-wise the backward pass of the Transformer and the Performer in (B) setting, as it is one of the main computational bottlenecks during training, when using the regular default size $(n_{h e a d s},n_{l a y e r s},d_{f f},d\bar{)}=(8,6,2048,512)$ , where $d_{f f}$ denotes the width of the MLP layers.

我们在(B)设置下比较了Transformer和Performer的反向传播速度,因为这是训练过程中的主要计算瓶颈之一。测试使用的是常规默认尺寸$(n_{heads},n_{layers},d_{ff},d\bar{)}=(8,6,2048,512)$,其中$d_{ff}$表示MLP层的宽度。

We observed (Fig. 3) that in terms of $L$ , the Performer reaches nearly linear time and sub-quadratic memory consumption (since the explicit $O(L^{2})$ attention matrix is not stored). In fact, the Performer achieves nearly optimal speedup and memory efficiency possible, depicted by the "X"-line when attention is replaced with the "identity function" simply returning the $\mathbf{V}$ -matrix. The combination of both memory and backward pass efficiencies for large $L$ allows respectively, large batch training and lower wall clock time per gradient step. Extensive additional results are demonstrated in Appendix E by varying layers, raw attention, and architecture sizes.

我们观察到 (图 3) ,在 $L$ 方面,Performer 达到了近乎线性的时间和次二次方的内存消耗 (因为显式的 $O(L^{2})$ 注意力矩阵未被存储) 。实际上,Performer 实现了近乎最优的速度提升和内存效率,如用返回 $\mathbf{V}$ 矩阵的"恒等函数"替代注意力时的"X"线所示。对于大 $L$ ,内存和后向传播效率的结合分别支持了大批量训练和更短的每梯度步实际耗时。附录 E 通过变化层数、原始注意力和架构规模展示了更多实验结果。

4.2 SOFTMAX ATTENTION APPROXIMATION ERROR

4.2 SOFTMAX注意力近似误差

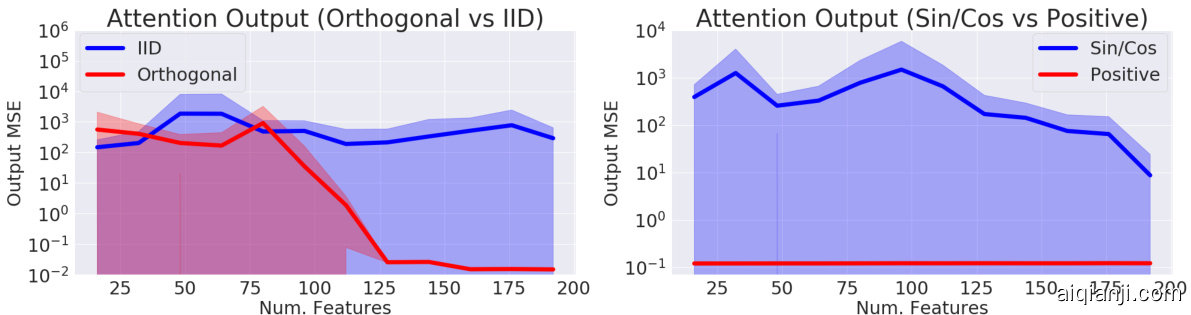

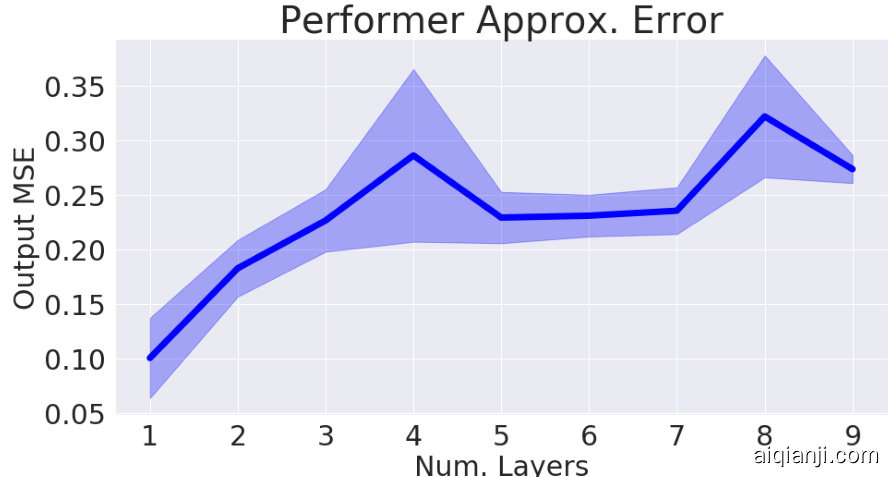

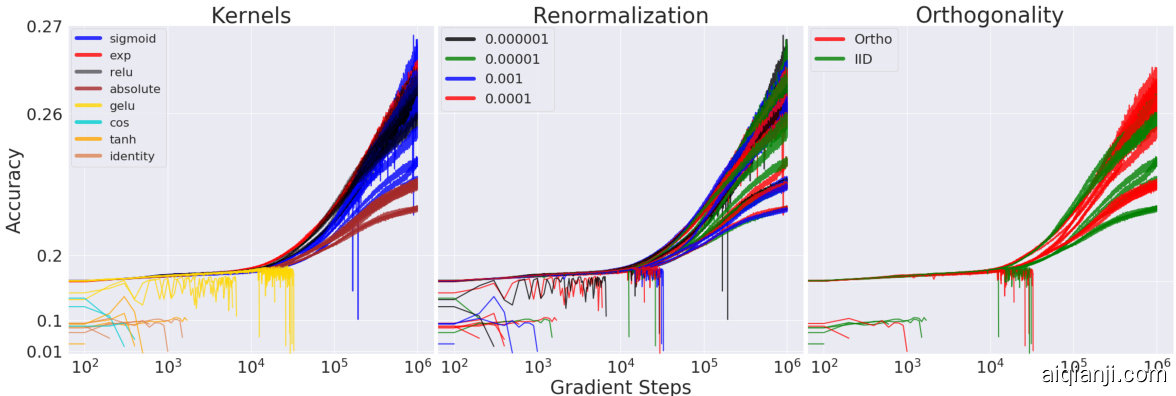

We further examined the approximation error via $\mathrm{FAVOR+}$ in Fig. 4. We demonstrate that 1. Orthogonal features produce lower error than unstructured (IID) features, 2. Positive features produce lower error than trigonometric sin/cos features. These two empirically validate the PORF mechanism.

我们进一步通过图4中的$\mathrm{FAVOR+}$检验了近似误差。结果表明:1. 正交特征比非结构化(IID)特征产生更低的误差;2. 正特征比三角函数sin/cos特征产生更低的误差。这两点从实证角度验证了PORF机制。

Figure 4: MSE of the approximation output when comparing Orthogonal vs IID features and trigonometric sin/cos vs positive features. We took $L=4096$ , $d=16$ , and varied the number of random samples $m$ . Standard deviations shown across 15 samples of appropriately normalized random matrix input data.

图 4: 正交特征与独立同分布(IID)特征、三角函数sin/cos与正特征在近似输出时的均方误差(MSE)对比。实验参数设置为$L=4096$、$d=16$,并随机采样数量$m$进行变量控制。误差线表示对15组经过适当归一化的随机矩阵输入数据的标准差。

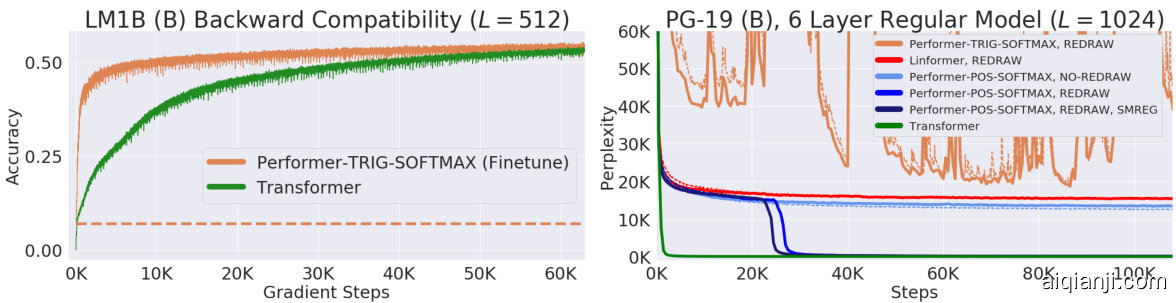

To further improve overall approximation of attention blocks across multiple iterations which further improves training, random samples should be periodically redrawn (Fig. 5, right). This is a cheap procedure, but can be further optimized (Appendix B.2).

为了进一步提升多轮迭代中注意力块的整体近似效果(从而改善训练效果),应定期重新抽取随机样本(图 5,右)。这一过程计算成本较低,但可进一步优化(附录 B.2)。

4.3 SOFTMAX APPROXIMATION ON TRANSFORMERS

4.3 Transformer 上的 Softmax 近似

Even if the approximation of the attention mechanism is tight, small errors can easily propagate throughout multiple Transformer layers (e.g. MLPs, multiple heads), as we show in Fig. 14 (Appendix). In other words, the model’s Lipschitz constant can easily scale up small attention approximation error, which means that very tight approximations may sometimes be needed. Thus, when applying $\operatorname{FAVOR}(+)$ ’s softmax approximations on a Transformer model (i.e. "Performer-XSOFTMAX"), we demonstrate that:

即使注意力机制的近似足够紧密,微小的误差仍容易在多个Transformer层(如MLP、多头注意力)中传播,如图14(附录)所示。换言之,模型的Lipschitz常数容易放大注意力近似误差,这意味着有时需要极其精确的近似。因此,在Transformer模型上应用$\operatorname{FAVOR}(+)$的softmax近似(即"Performer-XSOFTMAX")时,我们证明:

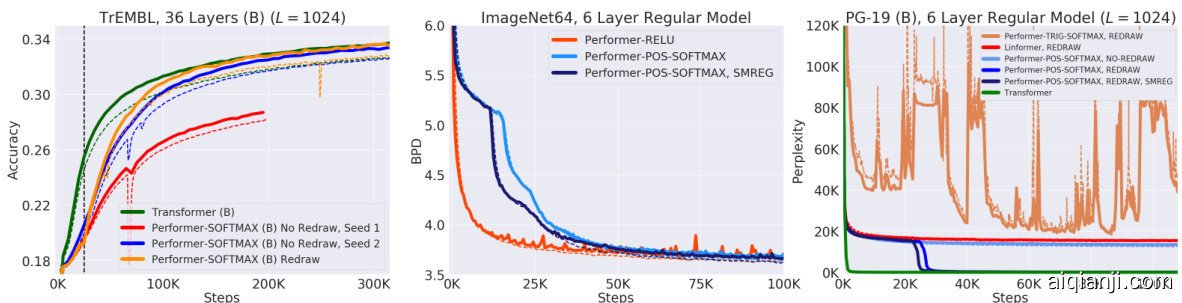

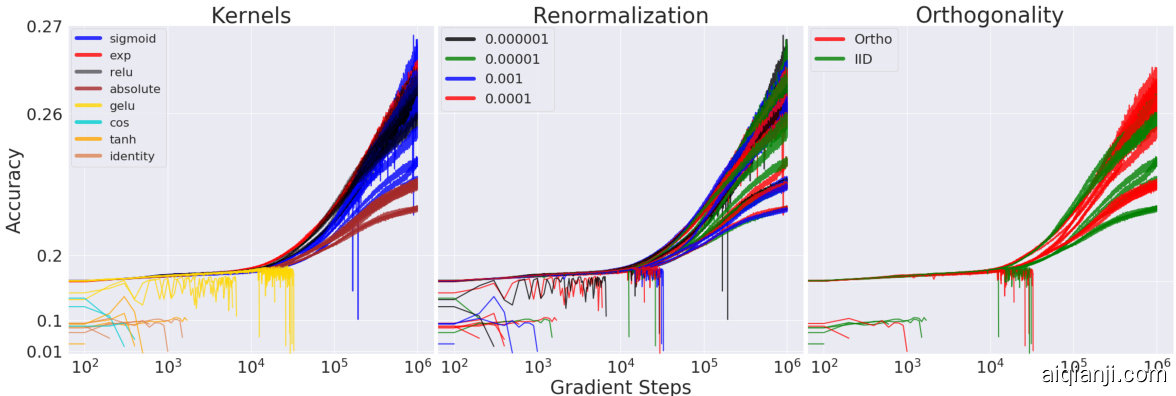

- Backwards compatibility with pretrained models is available as a benefit from softmax approximation, via small finetuning (required due to error propagation) even for trigonometric features (Fig. 5, left) on the LM1B dataset (Chelba et al., 2014). However, when on larger dataset PG-19, 2. Positive (POS) softmax features (with redrawing) become crucial for achieving performance matching regular Transformers (Fig. 5, right).

- 通过softmax近似带来的优势,可实现与预训练模型的向后兼容性,即使在LM1B数据集 (Chelba et al., 2014) 上针对三角特征 (图5左) 也只需少量微调 (由于误差传播而必需) 。然而在更大规模的PG-19数据集上

- 正向 (POS) softmax特征 (带重绘) 成为实现与常规Transformer性能匹配的关键要素 (图5右)

Figure 5: We transferred the original pretrained Transformer’s weights into the Performer, which produces an initial non-zero 0.07 accuracy (dotted orange line), but quickly recovers accuracy in a small fraction of the original number of gradient steps. However on PG-19, Trigonometric (TRIG) softmax approximation becomes highly unstable (full curve in Appendix D.2), while positive features (POS) (without redrawing) and Linformer (which also approximates softmax) even with redrawn projections, plateau at the same perplexity. Positive softmax with feature redrawing is necessary to match the Transformer, with SMREG (regular iz ation from Sec. 3) allowing faster convergence. Additional ablation studies over many attention kernels, showing also that trigonometric random features lead even to NaN values in training are given in Appendix D.3.

图 5: 我们将原始预训练 Transformer 的权重迁移到 Performer 中,初始获得了非零的 0.07 准确率 (橙色虚线),但在原始梯度步数的一小部分内迅速恢复了准确率。然而在 PG-19 数据集上,三角 (TRIG) softmax 近似变得极不稳定 (完整曲线见附录 D.2),而正特征 (POS) (无需重绘) 和 Linformer (同样近似 softmax) 即使采用重绘投影,困惑度仍停滞在同一水平。只有采用特征重绘的正 softmax 才能匹配 Transformer 表现,配合 SMREG (第 3 节的正则化方法) 可实现更快收敛。附录 D.3 提供了对多种注意力核的消融研究,表明三角随机特征甚至会导致训练中出现 NaN 值。

4.4 MULTIPLE LAYER TRAINING FOR PROTEINS

4.4 蛋白质的多层训练

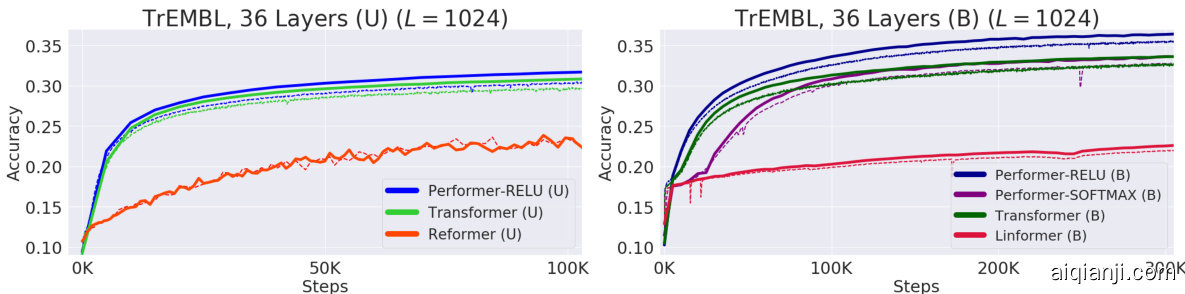

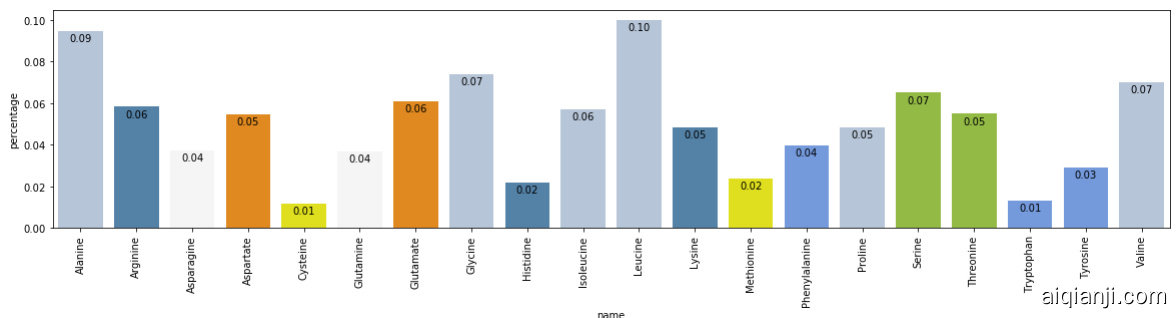

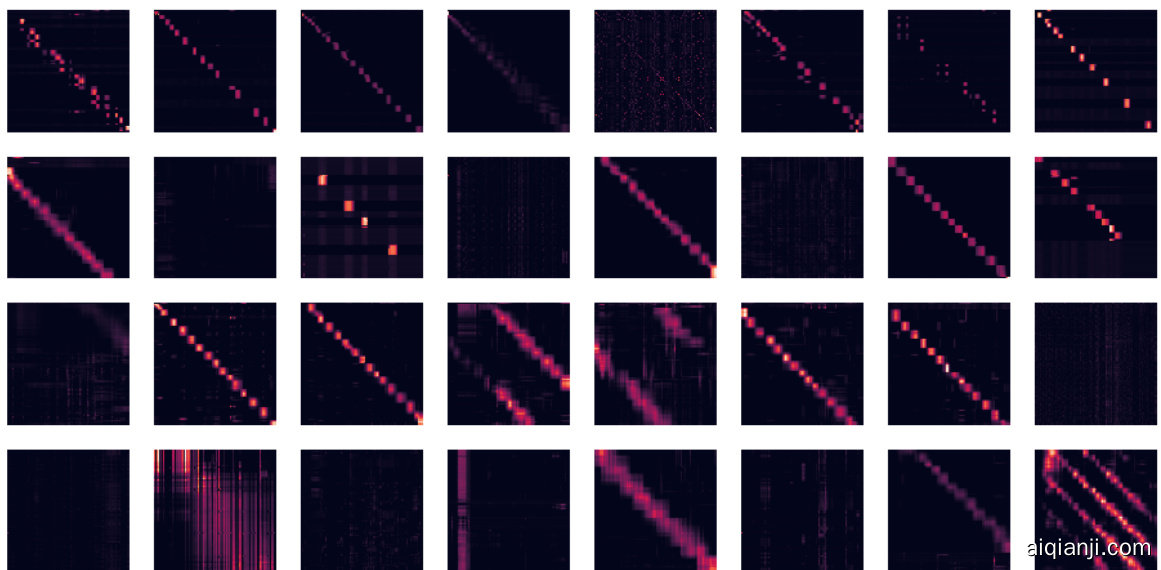

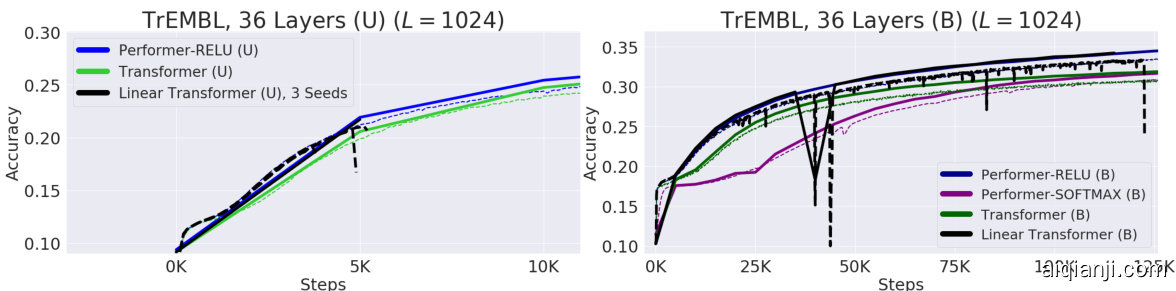

We further benchmark the Performer on both (U) and (B) cases by training a 36-layer model using protein sequences from the Jan. 2019 release of TrEMBL (Consortium, 2019), similar to (Madani et al., 2020). In Fig. 6, the Reformer and Linformer significantly drop in accuracy on the protein dataset. Furthermore, the usefulness of generalized attention is evidenced by Performer-RELU (taking $f=\mathrm{ReLU}$ in Equation 5) achieving the highest accuracy in both (U) and (B) cases. Our proposed softmax approximation is also shown to be tight, achieving the same accuracy as the exact-softmax Transformer and confirming our theoretical claims from Section 3.

我们进一步在(U)和(B)两种情况下对Performer进行基准测试,使用2019年1月发布的TrEMBL蛋白质序列(Consortium, 2019)训练了一个36层模型,方法类似于(Madani et al., 2020)。在图6中,Reformer和Linformer在蛋白质数据集上的准确率显著下降。此外,广义注意力机制的有效性通过Performer-RELU(在公式5中取$f=\mathrm{ReLU}$)在(U)和(B)情况下均获得最高准确率得到证实。我们提出的softmax近似也被证明是紧致的,达到了与精确softmax Transformer相同的准确率,验证了第3节的理论主张。

Figure 6: Train $=$ Dashed, Validation $=$ Solid. For TrEMBL, we used the exact same model parameters $(n_{h e a d s},n_{l a y e r s},d_{f f},d)=(8,36,1024,512)$ from (Madani et al., 2020) for all runs. For fairness, all TrEMBL experiments used 16x16 TPU-v2’s. Batch sizes were maximized for each separate run given the compute constraints. Hyper parameters can be found in Appendix A. Extended results including dataset statistics, out of distribution evaluations, and visualization s, can be found in Appendix C.

图 6: 训练集 $=$ 虚线, 验证集 $=$ 实线。对于TrEMBL, 我们在所有实验中均采用与 (Madani et al., 2020) 完全相同的模型参数 $(n_{heads},n_{layers},d_{ff},d)=(8,36,1024,512)$。为确保公平性, 所有TrEMBL实验均使用16x16 TPU-v2芯片。在计算资源限制下, 每次实验的批次大小均设置为最大值。超参数详见附录A。扩展结果 (包括数据集统计、分布外评估和可视化) 详见附录C。

4.5 LARGE LENGTH TRAINING - COMMON DATASETS

4.5 大长度训练 - 常用数据集

On the standard (U) ImageNet64 benchmark from (Parmar et al., 2018) with $L=12288$ which is unfeasible for regular Transformers, we set all models to use the same $(n_{h e a d s},d_{f f},d)$ but varying $\it{n_{l a y e r s}}$ . Performer/6-layers matches the Reformer/12-layers, while the Performer/12-layers matches the Reformer/24-layers (Fig. 7: left). Depending on hardware (TPU or GPU), we also found that the Performer can be $2\mathbf{x}$ faster than the Reformer via Jax optimization s for the (U) setting.

在 (Parmar et al., 2018) 提出的标准 (U) ImageNet64 基准测试中,当 $L=12288$ 时,常规 Transformer 无法处理。我们设定所有模型使用相同的 $(n_{heads},d_{ff},d)$ 参数,但调整 $\it{n_{layers}}$ 层数。6 层 Performer 与 12 层 Reformer 性能相当,而 12 层 Performer 与 24 层 Reformer 表现一致 (图 7: 左)。根据硬件 (TPU 或 GPU) 差异,我们还发现通过 Jax 优化,Performer 在 (U) 设置下可比 Reformer 快 $2\mathbf{x}$。

For a proof of principle study, we also create an initial protein benchmark for predicting interactions among groups of proteins by concatenating protein sequences to length $L=8192$ from TrEMBL, long enough to model protein interaction networks without the large sequence alignments required by existing methods (Cong et al., 2019). In this setting, a regular Transformer overloads memory even at a batch size of 1 per chip, by a wide margin. Thus as a baseline, we were forced to use a significantly smaller variant, reducing to $(n_{h e a d s},n_{l a y e r s},d_{f f},d)=(8,{1,2,3}$ , 256, 256). Meanwhile, the Performer trains efficiently at a batch size of 8 per chip using the standard $(8,6,2048,512)$ architecture. We see in Fig. 7 (right subfigure) that the smaller Transformer $(n_{l a y e r}=3)$ ) is quickly bounded at $\approx19%$ , while the Performer is able to train continuously to $\approx24%$ .

为进行原理验证研究,我们还通过将TrEMBL中的蛋白质序列连接至长度$L=8192$,创建了一个初步的蛋白质相互作用预测基准。该长度足以建模蛋白质相互作用网络,而无需现有方法所需的大规模序列比对(Cong等人,2019)。在此设置下,常规Transformer即使在每芯片批次大小为1时仍会大幅超出内存容量。因此作为基线,我们被迫使用显著缩小的变体,参数降至$(n_{heads},n_{layers},d_{ff},d)=(8,{1,2,3}$, 256, 256)。与此同时,Performer采用标准$(8,6,2048,512)$架构,能以每芯片批次大小8高效训练。图7(右侧子图)显示,缩小版Transformer$(n_{layer}=3)$)很快在$\approx19%$处达到瓶颈,而Performer能持续训练至$\approx24%$。

Figure 7: Train $=$ Dashed, Validation $=$ Solid. For ImageNet64, all models used the standard $(n_{h e a d s},d_{f f},d)=$ (8, 2048, 512). We further show that our positive softmax approximation achieves the same performance as ReLU in Appendix D.2. For concatenated TrEMBL, we varied $n_{\mathrm{layers}} \in {1, 2, 3}$ for the smaller Transformer. Hyper parameters can be found in Appendix A.

图 7: 训练 $=$ 虚线,验证 $=$ 实线。对于 ImageNet64,所有模型均采用标准 $(n_{heads},d_{ff},d)=$ (8, 2048, 512)。我们进一步证明在附录 D.2 中,我们的正 softmax 近似能达到与 ReLU 相同的性能。对于拼接的 TrEMBL,我们为较小的 Transformer 设置了 $n_{\mathrm{layers}} \in {1, 2, 3}$ 的变化。超参数详见附录 A。

5 CONCLUSION

5 结论

We presented Performer, a new type of Transformer, relying on our Fast Attention Via positive Orthogonal Random features $({\mathrm{FAVOR}}+{}$ ) mechanism to significantly improve space and time complexity of regular Transformers. Our mechanism provides to our knowledge the first effective unbiased estimation of the original softmax-based Transformer with linear space and time complexity and opens new avenues in the research on Transformers and the role of non-spars if ying attention mechanisms.

我们提出了Performer,这是一种新型Transformer,它基于我们通过正正交随机特征实现的快速注意力机制(FAVOR+),显著改善了常规Transformer的空间和时间复杂度。据我们所知,我们的机制首次提供了对原始基于softmax的Transformer的有效无偏估计,具有线性空间和时间复杂度,并为Transformer研究以及非稀疏化注意力机制的作用开辟了新途径。

6 BROADER IMPACT

6 更广泛的影响

We believe that the presented algorithm can be impactful in various ways:

我们相信所提出的算法能在多方面产生重要影响:

Biology and Medicine: Our method has the potential to directly impact research on biological sequence analysis by enabling the Transformer to be applied to much longer sequences without constraints on the structure of the attention matrix. The initial application that we consider is the prediction of interactions between proteins on the proteome scale. Recently published approaches require large evolutionary sequence alignments, a bottleneck for applications to mammalian genomes (Cong et al., 2019). The potentially broad translational impact of applying these approaches to biological sequences was one of the main motivations of this work. We believe that modern bioinformatics can immensely benefit from new machine learning techniques with Transformers being among the most promising. Scaling up these methods to train faster more accurate language models opens the door to the ability to design sets of molecules with pre-specified interaction properties. These approaches could be used to augment existing physics-based design strategies that are of critical importance for example in the development of new nano particle vaccines (Marc and all i et al., 2019).

生物学与医学:我们的方法通过使Transformer能够应用于更长的序列而不受注意力矩阵结构的限制,有望直接影响生物序列分析研究。我们考虑的首个应用是蛋白质组规模上的蛋白质相互作用预测。近期发表的方法需要大量进化序列比对,这对哺乳动物基因组应用形成了瓶颈(Cong等人,2019)。将这些方法应用于生物序列可能带来的广泛转化影响,是本研究的主要动机之一。我们认为现代生物信息学可从新型机器学习技术中极大获益,其中Transformer最具前景。通过扩展这些方法来训练更快、更准确的大语言模型,为设计具有预设相互作用特性的分子组合开辟了新途径。这些方法可用于增强现有的基于物理学的设计策略,例如在新型纳米颗粒疫苗开发中具有关键作用(Marc等人,2019)。

Environment: As we have shown, Performers with $\mathrm{FAVOR+}$ are characterized by much lower compute costs and substantially lower space complexity which can be directly translated to $\mathrm{CO_{2}}$ emission reduction (Strubell et al., 2019) and lower energy consumption (You et al., 2020), as regular Transformers require very large computational resources.

环境:正如我们所展示的,采用$\mathrm{FAVOR+}$的Performers具有更低的计算成本和显著降低的空间复杂度,这可以直接转化为$\mathrm{CO_{2}}$排放减少 (Strubell et al., 2019) 和更低的能耗 (You et al., 2020),因为常规Transformer需要非常大的计算资源。

Research on Transformers: We believe that our results can shape research on efficient Transformers architectures, guiding the field towards methods with strong mathematical foundations. Our research may also hopefully extend Transformers also beyond their standard scope (e.g. by considering the Generalized Attention mechanism and connections with kernels). Exploring scalable Transformer architectures that can handle $L$ of the order of magnitude few thousands and more, preserving accuracy of the baseline at the same time, is a gateway to new breakthroughs in bio-informatics, e.g. language modeling for proteins, as we explained in the paper. Our presented method can be potentially a first step.

Transformer研究:我们相信,这些成果能推动高效Transformer架构的研究,为该领域奠定坚实的数学基础。我们的研究还可能将Transformer的应用范围拓展至传统领域之外(例如通过广义注意力机制 (Generalized Attention) 与核方法的关联)。正如论文所述,探索可扩展的Transformer架构(在保持基线精度的同时处理数千量级及以上的序列长度$L$),将为生物信息学带来新突破(如蛋白质语言建模)。我们提出的方法有望成为这一进程的起点。

Backward Compatibility: Our Performer can be used on the top of a regular pre-trained Transformer as opposed to other Transformer variants. Even if up-training is not required, $\mathrm{FAVOR+}$ can still be used for fast inference with no loss of accuracy. We think about this backward compatibility as a very important additional feature of the presented techniques that might be particularly attractive for practitioners.

向后兼容性:我们的Performer可以在常规预训练Transformer之上使用,与其他Transformer变体不同。即使不需要重新训练,$\mathrm{FAVOR+}$仍可用于快速推理且不损失准确性。我们认为这种向后兼容性是所提出技术的一个非常重要的附加特性,对实践者可能特别具有吸引力。

Attention Beyond Transformers: Finally, FAVOR $+$ can be applied to approximate exact attention also outside the scope of Transformers. This opens a large volume of new potential applications including: hierarchical attention networks (HANS) (Yang et al., 2016), graph attention networks (Velickovic et al., 2018), image processing (Fu et al., 2019), and reinforcement learning/robotics (Tang et al., 2020).

注意力机制超越Transformer:最后,FAVOR $+$ 也可以用于近似精确注意力,而不仅限于Transformer领域。这为大量新应用开启了可能,包括:层次注意力网络 (HANs) (Yang等人, 2016)、图注意力网络 (Velickovic等人, 2018)、图像处理 (Fu等人, 2019) 以及强化学习/机器人学 (Tang等人, 2020)。

7 ACKNOWLEDGEMENTS

7 致谢

We thank Nikita Kitaev and Wojciech Gajewski for multiple discussions on the Reformer, and also thank Aurko Roy and Ashish Vaswani for multiple discussions on the Routing Transformer. We further thank Joshua Meier, John Platt, and Tom Weingarten for many fruitful discussions on biological data and useful comments on this draft. We lastly thank Yi Tay and Mostafa Dehghani for discussions on comparing baselines.

我们感谢 Nikita Kitaev 和 Wojciech Gajewski 就 Reformer 进行的多次讨论,同时感谢 Aurko Roy 和 Ashish Vaswani 对 Routing Transformer 的多次探讨。此外,我们感谢 Joshua Meier、John Platt 和 Tom Weingarten 在生物数据方面的有益讨论以及对本草稿的宝贵意见。最后,我们感谢 Yi Tay 和 Mostafa Dehghani 关于基准比较的讨论。

Valerii Li kho s her s to v acknowledges support from the Cambridge Trust and DeepMind. Lucy Colwell acknowledges support from the Simons Foundation. Adrian Weller acknowledges support from a Turing AI Fellowship under grant EP/V025379/1, The Alan Turing Institute under EPSRC grant EP/N510129/1 and U/B/000074, and the Leverhulme Trust via CFI.

Valerii Likhosherstov 感谢 Cambridge Trust 和 DeepMind 的支持。Lucy Colwell 感谢 Simons Foundation 的支持。Adrian Weller 感谢 Turing AI Fellowship (资助号 EP/V025379/1)、The Alan Turing Institute (EPSRC 资助号 EP/N510129/1 和 U/B/000074) 以及 Leverhulme Trust 通过 CFI 提供的支持。

REFERENCES

参考文献

Irwan Bello, Barret Zoph, Ashish Vaswani, Jonathon Shlens, and Quoc V. Le. Attention augmented convolutional networks. CoRR, abs/1904.09925, 2019. URL http://arxiv.org/abs/ 1904.09925.

Irwan Bello、Barret Zoph、Ashish Vaswani、Jonathon Shlens 和 Quoc V. Le。注意力增强卷积网络。CoRR,abs/1904.09925,2019。URL http://arxiv.org/abs/1904.09925。

Djork-Arné Clevert, Thomas Unter thin er, and Sepp Hochreiter. Fast and accurate deep network learning by exponential linear units (elus). In 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, Conference Track Proceedings, 2016. URL http://arxiv.org/abs/1511.07289.

Djork-Arné Clevert、Thomas Unterthiner 和 Sepp Hochreiter。通过指数线性单元 (ELUs) 实现快速准确的深度网络学习。见《第四届国际学习表征会议》(ICLR 2016),2016年5月2-4日,波多黎各圣胡安,会议论文集,2016。URL http://arxiv.org/abs/1511.07289。

Olga Kovaleva, Alexey Romanov, Anna Rogers, and Anna Rumshisky. Revealing the dark secrets of bert. arXiv preprint arXiv:1908.08593, 2019.

Olga Kovaleva、Alexey Romanov、Anna Rogers 和 Anna Rumshisky。揭示 BERT 的黑暗秘密。arXiv 预印本 arXiv:1908.08593,2019。

Zhuoran Shen, Mingyuan Zhang, Shuai Yi, Junjie Yan, and Haiyu Zhao. Factorized attention: Self-attention with linear complexities. CoRR, abs/1812.01243, 2018. URL http://arxiv. org/abs/1812.01243.

Zhuoran Shen、Mingyuan Zhang、Shuai Yi、Junjie Yan 和 Haiyu Zhao。《因子化注意力:线性复杂度的自注意力机制》。CoRR,abs/1812.01243,2018。URL http://arxiv.org/abs/1812.01243。

Emma Strubell, Ananya Ganesh, and Andrew McCallum. Energy and policy considerations for deep learning in NLP. CoRR, abs/1906.02243, 2019. URL http://arxiv.org/abs/1906. 02243.

Emma Strubell、Ananya Ganesh 和 Andrew McCallum。自然语言处理中深度学习的能耗与政策考量。CoRR, abs/1906.02243, 2019. 网址 http://arxiv.org/abs/1906.02243。

Yujin Tang, Duong Nguyen, and David Ha. Neuro evolution of self-interpret able agents. CoRR, abs/2003.08165, 2020. URL https://arxiv.org/abs/2003.08165.

Yujin Tang、Duong Nguyen和David Ha。自解释智能体的神经进化。CoRR,abs/2003.08165,2020。URL https://arxiv.org/abs/2003.08165。

Yi Tay, Mostafa Dehghani, Samira Abnar, Yikang Shen, Dara Bahri, Philip Pham, Jinfeng Rao, Liu Yang, Sebastian Ruder, and Donald Metzler. Long range arena: A benchmark for efficient transformers. 2021.

Yi Tay、Mostafa Dehghani、Samira Abnar、Yikang Shen、Dara Bahri、Philip Pham、Jinfeng Rao、Liu Yang、Sebastian Ruder 和 Donald Metzler。Long Range Arena:高效 Transformer 的基准测试。2021。

Yao-Hung Hubert Tsai, Shaojie Bai, Makoto Yamada, Louis-Philippe Morency, and Ruslan Salakhutdinov. Transformer dissection: An unified understanding for transformer’s attention via the lens of kernel. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pp. 4335–4344, 2019.

Yao-Hung Hubert Tsai、Shaojie Bai、Makoto Yamada、Louis-Philippe Morency和Ruslan Salakhutdinov。Transformer剖析:通过核视角统一理解Transformer注意力机制。载于《2019年自然语言处理经验方法会议暨第九届自然语言处理国际联合会议论文集》(EMNLP-IJCNLP),第4335-4344页,2019年。

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Ł ukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Advances in Neural Information Processing Systems 30, pp. 5998–6008. Curran Associates, Inc., 2017. URL http://papers. nips.cc/paper/7181-attention-is-all-you-need.pdf.

Ashish Vaswani、Noam Shazeer、Niki Parmar、Jakob Uszkoreit、Llion Jones、Aidan N Gomez、Łukasz Kaiser 和 Illia Polosukhin。Attention is all you need。载于《神经信息处理系统进展》第30卷,第5998–6008页。Curran Associates公司,2017年。URL http://papers.nips.cc/paper/7181-attention-is-all-you-need.pdf。

Petar Velickovic, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Liò, and Yoshua Bengio. Graph attention networks. In 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings. OpenReview.net, 2018. URL https://openreview.net/forum?id $=$ rJXMpikCZ.

Petar Velickovic、Guillem Cucurull、Arantxa Casanova、Adriana Romero、Pietro Liò和Yoshua Bengio。图注意力网络。载于《第六届国际学习表征会议》(ICLR 2018),加拿大不列颠哥伦比亚省温哥华,2018年4月30日至5月3日,会议论文集。OpenReview.net,2018。网址 https://openreview.net/forum?id $=$ rJXMpikCZ。

Jesse Vig. A multiscale visualization of attention in the transformer model. arXiv preprint arXiv:1906.05714, 2019.

Jesse Vig. Transformer模型注意力的多尺度可视化. arXiv preprint arXiv:1906.05714, 2019.

Jesse Vig and Yonatan Belinkov. Analyzing the structure of attention in a transformer language model. CoRR, abs/1906.04284, 2019. URL http://arxiv.org/abs/1906.04284.

Jesse Vig 和 Yonatan Belinkov. 分析Transformer语言模型中的注意力结构. CoRR, abs/1906.04284, 2019. URL http://arxiv.org/abs/1906.04284.

Jesse Vig, Ali Madani, Lav R. Varshney, Caiming Xiong, Richard Socher, and Nazneen Fatema Rajani. Bertology meets biology: Interpreting attention in protein language models. CoRR, abs/2006.15222, 2020. URL https://arxiv.org/abs/2006.15222.

Jesse Vig、Ali Madani、Lav R. Varshney、Caiming Xiong、Richard Socher 和 Nazneen Fatema Rajani。BERTology 遇见生物学:解析蛋白质语言模型中的注意力机制。CoRR, abs/2006.15222, 2020. URL https://arxiv.org/abs/2006.15222。

Oriol Vinyals, Meire Fortunato, and Navdeep Jaitly. Pointer networks. In Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, December 7-12, 2015, Montreal, Quebec, Canada, pp. 2692–2700, 2015.

Oriol Vinyals、Meire Fortunato 和 Navdeep Jaitly。指针网络 (Pointer Networks)。载于《神经信息处理系统进展 28:2015 年神经信息处理系统年会》,2015 年 12 月 7-12 日,加拿大魁北克蒙特利尔,第 2692–2700 页,2015 年。

Sinong Wang, Belinda Z. Li, Madian Khabsa, Han Fang, and Hao Ma. Linformer: Self-attention with linear complexity. CoRR, abs/2006.04768, 2020. URL https://arxiv.org/abs/2006. 04768.

Sinong Wang、Belinda Z. Li、Madian Khabsa、Han Fang 和 Hao Ma。Linformer: 线性复杂度的自注意力机制。CoRR, abs/2006.04768, 2020。URL https://arxiv.org/abs/2006.04768。

Tong Xiao, Yinqiao Li, Jingbo Zhu, Zhengtao Yu, and Tongran Liu. Sharing attention weights for fast transformer. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI 2019, Macao, China, August 10-16, 2019, pp. 5292–5298. ijcai.org, 2019. doi: 10.24963/ijcai.2019/735. URL https://doi.org/10.24963/ijcai.2019/735.

Tong Xiao、Yinqiao Li、Jingbo Zhu、Zhengtao Yu 和 Tongran Liu。共享注意力权重实现快速Transformer。载于《第二十八届国际人工智能联合会议论文集》(IJCAI 2019),2019年8月10-16日,中国澳门,第5292–5298页。ijcai.org, 2019。doi: 10.24963/ijcai.2019/735。网址 https://doi.org/10.24963/ijcai.2019/735。

Zichao Yang, Diyi Yang, Chris Dyer, Xiaodong He, Alexander J. Smola, and Eduard H. Hovy. Hierarchical attention networks for document classification. In NAACL HLT 2016, The 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego California, USA, June 12-17, 2016, pp. 1480–1489. The Association for Computational Linguistics, 2016. doi: 10.18653/v1/n16-1174. URL https: //doi.org/10.18653/v1/n16-1174.

Zichao Yang, Diyi Yang, Chris Dyer, Xiaodong He, Alexander J. Smola, 和 Eduard H. Hovy。面向文档分类的层次化注意力网络。载于《NAACL HLT 2016:北美计算语言学协会人类语言技术分会2016年会论文集》,美国加州圣地亚哥,2016年6月12-17日,第1480–1489页。计算语言学协会,2016年。doi: 10.18653/v1/n16-1174。URL https://doi.org/10.18653/v1/n16-1174。

APPENDIX: RETHINKING ATTENTION WITH PERFORMERS

附录:重新思考Performer中的注意力机制

A HYPER PARAMETERS FOR EXPERIMENTS

实验超参数

This optimal setting (including comparisons to approximate softmax) we use for the Performer is specified in the Generalized Attention (Subsec. A.4), and unless specifically mentioned (e.g. using name "Performer-SOFTMAX"), "Performer" refers to using this generalized attention setting.

我们在广义注意力机制 (附录A.4) 中为Performer指定了这一最优配置 (包括与近似softmax的对比) 。除非特别说明 (例如使用名称"Performer-SOFTMAX") ,否则"Performer"均指采用该广义注意力配置。

A.1 METRICS

A.1 指标

We report the following evaluation metrics:

我们报告以下评估指标:

- Accuracy: For unidirectional models, we measure the accuracy on next-token prediction, averaged across all sequence positions in the dataset. For bidirectional models, we mask each token with $15%$ probability (same as (Devlin et al., 2018)) and measure accuracy across the masked positions.

- 准确率:对于单向模型,我们测量下一个token预测的准确率,取数据集中所有序列位置的平均值。对于双向模型,我们以15%的概率掩码每个token (与 (Devlin et al., 2018) 相同),并测量掩码位置的准确率。

- Perplexity: For unidirectional models, we measure perplexity across all sequence positions in the dataset. For bidirectional models, similar to the accuracy case, we measure perplexity across the masked positions.

- 困惑度:对于单向模型,我们测量数据集中所有序列位置的困惑度。对于双向模型,类似于准确度的情况,我们测量被遮蔽位置的困惑度。

- Bits Per Dimension/Character (BPD/BPC): This calculated by loss divided by $\ln(2)$ .

- 每维度/字符比特数 (BPD/BPC): 由损失值除以 $\ln(2)$ 计算得出。

We used the full evaluation dataset for TrEMBL in the plots in the main section, while for other datasets such as ImageNet64 and PG-19 which have very large evaluation dataset sizes, we used random batches $\mathord{\left(>2048\right.}$ samples) for plotting curves.

在主章节的图表中,我们使用了TrEMBL的完整评估数据集,而对于其他数据集(如ImageNet64和PG-19)这类评估数据集规模极大的情况,我们采用随机批次 $\mathord{\left(>2048\right.}$ 样本)来绘制曲线。

A.1.1 PG-19 PREPROCESSING

A.1.1 PG-19 预处理

The PG-19 dataset (Rae et al., 2020) is presented as a challenging long range text modeling task. It consists of out-of-copyright Project Gutenberg books published before 1919. It does not have a fixed vocabulary size, instead opting for any token iz ation which can model an arbitrary string of text. We use a unigram Sentence Piece vocabulary (Kudo & Richardson, 2018) with 32768 tokens, which maintains whitespace and is completely invertible to the original book text. Perplexities are calculated as the average log-likelihood per token, multiplied by the ratio of the sentence piece token iz ation to number of tokens in the original dataset. The original dataset token count per split is: train $_{1}=$ 1973136207, validation=3007061, test=6966499. Our sentence piece token iz ation yields the following token counts per split: train=3084760726, valid=4656945, and test=10699704. This gives log likelihood multipliers of train $=1.5634$ , valid $=1.5487$ , test $=1.5359$ per split before computing perplexity, which is equal to exp(log likelihood multiplier $^*$ loss).

PG-19数据集 (Rae等人, 2020) 被设计为一个具有挑战性的长文本建模任务。该数据集由1919年前出版的公共版权古腾堡计划书籍构成,不设固定词汇表,而是采用可对任意文本字符串建模的token化方案。我们使用包含32768个token的unigram Sentence Piece词汇表 (Kudo & Richardson, 2018),该方案保留空格且可完全还原至原始书籍文本。困惑度计算为每个token的平均对数似然,乘以sentence piece token化数量与原始数据集token数量的比值。原始数据集各分区的token数量为:训练集$_{1}=$1973136207、验证集=3007061、测试集=6966499。我们的sentence piece token化产生以下分区token数量:训练集=3084760726、验证集=4656945、测试集=10699704。由此得到计算困惑度前的对数似然乘数:训练集$=1.5634$、验证集$=1.5487$、测试集$=1.5359$,其值等于exp(对数似然乘数$^*$损失)。

Preprocessing for TrEMBL is extensively explained in Appendix C.

TrEMBL 的预处理过程在附录 C 中有详细说明。

A.2 TRAINING HYPER PARAMETERS

A.2 训练超参数

Unless specifically stated, all Performer $^+$ Transformer runs by default used 0.5 grad clip, 0.1 weight decay, 0.1 dropout, $10^{-3}$ fixed learning rate with Adam hyper parameters $(\beta_{1}=0.9,\beta_{2}=0.98,\epsilon=$ $10^{-9^{\circ}}/$ ), with batch size maximized (until TPU memory overload) for a specific model.

除非特别说明,所有Performer$^+$ Transformer默认运行参数为:梯度裁剪0.5、权重衰减0.1、dropout 0.1、固定学习率$10^{-3}$(Adam优化器超参数$\beta_{1}=0.9$,$\beta_{2}=0.98$,$\epsilon=10^{-9^{\circ}}$),并针对特定模型最大化批次大小(直至TPU内存溢出)。

All 36-layer protein experiments used the same amount of compute (i.e. 16x16 TPU-v2, 8GB per chip). For concatenated experiments, 16x16 TPU-v2’s were also used for the Performer, while 8x8’s were used for the 1-3 layer $d=256$ ) Transformer models (using 16x16 did not make a difference in accuracy).

所有36层蛋白质实验均使用相同计算量(即16x16 TPU-v2芯片组,每芯片8GB)。在级联实验中,Performer同样使用16x16 TPU-v2配置,而1-3层( $d=256$ )Transformer模型则采用8x8配置(使用16x16配置不会影响准确率)。

Note that Performers are using the same training hyper parameters as Transformers, yet achieving competitive results - this shows that FAVOR can act as a simple drop-in without needing much tuning.

请注意,Performers 使用了与 Transformer 相同的训练超参数,却取得了具有竞争力的结果——这表明 FAVOR 可以作为即插即用的模块,无需过多调参。

A.3 APPROXIMATE SOFTMAX ATTENTION DEFAULT VALUES

A.3 近似Softmax注意力默认值

The optimal values, set to default parameters 1 , are: re normalize attention $=$ True, numerical stabilizer $=10^{-6}$ , number of features $=256$ , ortho features $=$ True, ortho scaling $=0.0$ .

最优值(设为默认参数1)为:重归一化注意力(re normalize attention)=True,数值稳定器(numerical stabilizer)=10^(-6),特征数量(number of features)=256,正交特征(ortho features)=True,正交缩放(ortho scaling)=0.0。

A.4 GENERALIZED ATTENTION DEFAULT VALUES

A.4 广义注意力默认值

The optimal values, set to default parameters 2 , are: re normalize attention $=$ True, numerical stabilizer $=0.0$ , number of features $=256$ , kernel $=$ ReLU, kernel epsilon $=10^{-3}$ .

最优值(采用默认参数2)设置为:重归一化注意力 (re normalize attention) $=$ True、数值稳定器 (numerical stabilizer) $=0.0$、特征数量 (number of features) $=256$、核函数 (kernel) $=$ ReLU、核函数epsilon值 (kernel epsilon) $=10^{-3}$。

A.5 REFORMER DEFAULT VALUES

A.5 REFORMER 默认值

For the Reformer, we used the same hyper parameters as mentioned for protein experiments, without gradient clipping, while using the defaults3 (which instead use learning rate decay) for ImageNet-64. In both cases, the Reformer used the same default LSH attention parameters.

对于Reformer,我们采用了与蛋白质实验相同的超参数设置,未使用梯度裁剪,而对ImageNet-64则采用默认参数(含学习率衰减)。两种情况下,Reformer均使用默认的LSH注意力参数。

A.6 LINFORMER DEFAULT VALUES

A.6 LINFORMER 默认值

Using our standard pipeline as mentioned above, we replaced the attention function with the Linformer variant via Jax, with $\bar{\delta}=10^{-6}$ , $k=600$ (same notation used in the paper (Wang et al., 2020)), where $\delta$ is the exponent in a re normalization procedure using $e^{-\delta}$ as a multiplier in order to approximate softmax, while $k$ is the dimension of the projections of the $\mathbf{Q}$ and $\mathbf{K}$ matrices. As a sanity check, we found that our Linformer implementation in Jax correctly approximated exact softmax’s output within 0.02 error for all entries.

使用上述标准流程,我们通过Jax将注意力函数替换为Linformer变体,其中 $\bar{\delta}=10^{-6}$ 、 $k=600$ (沿用论文 (Wang et al., 2020) 的符号表示)。这里 $\delta$ 是重归一化过程中的指数项,采用 $e^{-\delta}$ 作为乘数来近似softmax,而 $k$ 是矩阵 $\mathbf{Q}$ 和 $\mathbf{K}$ 的投影维度。经检验,我们的Jax版Linformer实现能正确逼近标准softmax输出,所有条目误差均在0.02以内。

Note that for rigorous comparisons, our Linformer hyper parameters are even stronger than the defaults found in (Wang et al., 2020), as:

需要注意的是,为了进行严谨比较,我们的Linformer超参数设置甚至比 (Wang et al., 2020) 中的默认配置更强,具体表现为:

• We use $k=600$ , which is more than twice than the default $k=256$ from the paper, and also twice than our default $m=256$ number of features. • We also use redrawing, which avoids "unlucky" projections on $\mathbf{Q}$ and $\mathbf{K}$ .

• 我们使用 $k=600$ ,这比论文默认的 $k=256$ 高出两倍多,也达到我们默认特征数 $m=256$ 的两倍。

• 我们还采用重绘 (redrawing) 技术,以避免在 $\mathbf{Q}$ 和 $\mathbf{K}$ 上出现"不理想"的投影。

B MAIN ALGORITHM: FAVOR+

B 主要算法:FAVOR+

We outline the main algorithm for $\mathrm{FAVOR+}$ formally:

我们正式概述 $\mathrm{FAVOR+}$ 的主要算法:

| Algorithm1:FAVOR+(bidirectional or unidirectional). |

| Input : Q, K, V E RLxd,isBidirectional - binary flag. Result: Att(Q, K, V) E RLxL if isBidirectional, Att→(Q, K, V) E RLxL otherwise. Compute Q' and K' as described in Section 2.2 and Section 2.3 and take C := [V 1L]; ifisBidirectional then |

| Buf1 := (K')TC ∈ RM×(d+1), Buf2 := Q'Buf1 E RLx(d+1); else |

| Compute G and its prefix-sum tensor GPs according to (11); |

| [GPS:Q1 GLS.QL] Buf2 := E RL×(d+1). end |

算法1: FAVOR+(双向或单向)

输入: Q, K, V ∈ R^(L×d), isBidirectional - 二元标志

结果: 若isBidirectional为真则输出Att(Q, K, V) ∈ R^(L×L), 否则输出Att→(Q, K, V) ∈ R^(L×L)

按第2.2节和第2.3节所述计算Q'和K', 并取C := [V 1L];

若isBidirectional为真则

Buf1 := (K')^T C ∈ R^(M×(d+1)), Buf2 := Q' Buf1 ∈ R^(L×(d+1));

否则

根据式(11)计算G及其前缀和张量GPs;

[GPS:Q1 GLS.QL] Buf2 := ∈ R^(L×(d+1))

结束

B.1 UNIDIRECTIONAL CASE AND PREFIX SUMS

B.1 单向情况与前缀和

We explain how our analysis from Section 2.2 can be extended to the unidirectional mechanism in this section. Notice that this time attention matrix A is masked, i.e. all its entries not in the lower-triangular part (which contains the diagonal) are zeroed (see also Fig. 8).

我们解释如何将第2.2节的分析扩展到本节中的单向机制。注意这次的注意力矩阵A是被掩码的,即所有不在下三角部分(包含对角线)的条目都被置零(另见图8)。

Figure 8: Visual representation of the prefix-sum algorithm for unidirectional attention. For clarity, we omit attention normalization in this visualization. The algorithm keeps the prefix-sum which is a matrix obtained by summing the outer products of random features corresponding to keys with value-vectors. At each given iteration of the prefix-sum algorithm, a random feature vector corresponding to a query is multiplied by the most recent prefix-sum (obtained by summing all outer-products corresponding to preceding tokens) to obtain a new row of the matrix AV which is output by the attention mechanism.

图 8: 单向注意力机制中前缀和算法的可视化图示。为清晰起见,本示意图省略了注意力归一化步骤。该算法维护的前缀和是一个矩阵,通过累加键(keys)对应的随机特征与值向量(value-vectors)的外积获得。在每次前缀和算法迭代中,查询(query)对应的随机特征向量会与最新的前缀和(即前面所有token对应外积的累加结果)相乘,从而得到注意力机制输出的矩阵AV的新行。

For the unidirectional case, our analysis is similar as for the bidirectional case, but this time our goal is to compute $\operatorname{tril}(\mathbf{Q}^{\prime}(\mathbf{K}^{\prime})^{\top})\mathbf{C}$ without constructing and storing the $L\times L$ -sized matrix tril $(\mathbf{Q}^{\prime}(\mathbf{\breve{K}}^{\prime})^{\top})$ explicitly, where $\mathbf{C} = \left[ V \quad \mathbf{1}_L \right] \in \mathbb{R}^{L \times (d+1)}$ . In order to do so, observe that $\forall1\leq i\leq L$ :

对于单向情况,我们的分析与双向情况类似,但这次的目标是在不显式构建和存储 $L\times L$ 大小的矩阵 $\operatorname{tril}(\mathbf{Q}^{\prime}(\mathbf{\breve{K}}^{\prime})^{\top})$ 的情况下计算 $\operatorname{tril}(\mathbf{Q}^{\prime}(\mathbf{K}^{\prime})^{\top})\mathbf{C}$ ,其中 $\mathbf{C} = \left[ V \quad \mathbf{1}_L \right] \in \mathbb{R}^{L \times (d+1)}$ 。为此,观察到 $\forall1\leq i\leq L$ :

$$