Direct Feedback Alignment Scales to Modern Deep Learning Tasks and Architectures

直接反馈对齐可扩展至现代深度学习任务与架构

Julien Launay1,2 Iacopo Poli1 François Boniface1

Julien Launay1,2 Iacopo Poli1 François Boniface1

Florent Krzakala1,2,3

Florent Krzakala1,2,3

1LightOn 2LPENS, École Normale Supérieure

1LightOn 2LPENS, 巴黎高等师范学院

3 IdePhics, EPFL

3 IdePhics, EPFL

{firstname}@lighton.ai

{firstname}@lighton.ai

lair.lighton.ai/dfa-scales

lair.lighton.ai/dfa-scales

Abstract

摘要

Despite being the workhorse of deep learning, the back propagation algorithm is no panacea. It enforces sequential layer updates, thus preventing efficient parallelization of the training process. Furthermore, its biological plausibility is being challenged. Alternative schemes have been devised; yet, under the constraint of synaptic asymmetry, none have scaled to modern deep learning tasks and architectures. Here, we challenge this perspective, and study the applicability of Direct Feedback Alignment (DFA) to neural view synthesis, recommend er systems, geometric learning, and natural language processing. In contrast with previous studies limited to computer vision tasks, our findings show that it successfully trains a large range of state-of-the-art deep learning architectures, with performance close to fine-tuned back propagation. When a larger gap between DFA and back propagation exists, like in Transformers, we attribute this to a need to rethink common practices for large and complex architectures. At variance with common beliefs, our work supports that challenging tasks can be tackled in the absence of weight transport.

尽管反向传播算法是深度学习的核心方法,但它并非万能解药。该算法强制要求逐层顺序更新,导致训练过程无法高效并行化。此外,其生物学合理性正受到质疑。虽然已有替代方案被提出,但在突触不对称性的约束下,尚无方案能适配现代深度学习任务与架构。本文突破这一认知局限,研究了直接反馈对齐 (Direct Feedback Alignment, DFA) 在神经视图合成、推荐系统、几何学习及自然语言处理中的适用性。与先前局限于计算机视觉任务的研究不同,我们的实验表明:DFA 能成功训练多种前沿深度学习架构,其性能接近精调的反向传播算法。当 DFA 与反向传播存在较大差距时(如 Transformer 架构),我们认为这需要重新思考大型复杂架构的常规设计范式。与传统认知相悖的是,本研究证实:即使不依赖权重传输机制,也能攻克具有挑战性的任务。

1 Introduction

1 引言

While the back propagation algorithm (BP) [1, 2] is at the heart of modern deep learning achievements, it is not without pitfalls. For one, its weight updates are non-local and rely on upstream layers. Thus, they cannot be easily parallel i zed [3], incurring important memory and compute costs. Moreover, its biological implementation is problematic [4, 5]. For instance, BP relies on the transpose of the weights to evaluate updates. Hence, synaptic symmetry is required between the forward and backward path: this is implausible in biological brains, and known as the weight transport problem [6].

虽然反向传播算法(BP)[1,2]是现代深度学习成就的核心,但它并非没有缺陷。首先,其权重更新是非局部的,且依赖于上游层。因此,它们无法轻易并行化[3],导致显著的内存和计算成本。此外,其生物学实现也存在问题[4,5]。例如,BP依赖权重的转置来评估更新。因此,前向和反向路径之间需要突触对称性:这在生物大脑中是不合理的,被称为权重传输问题[6]。

Consequently, alternative training algorithms have been developed. Some of these algorithms are explicitly biologically inspired [7–13], while others focus on making better use of available compute resources [3, 14–19]. Despite these enticing characteristics, none has been widely adopted, as they are often demonstrated on a limited set of tasks. Moreover, as assessed in [20], their performance on challenging datasets under the constraint of synaptic asymmetry is disappointing.

因此,人们开发了替代的训练算法。其中一些算法明确受到生物学启发 [7-13],而另一些则专注于更好地利用可用的计算资源 [3, 14-19]。尽管这些特性很吸引人,但由于它们通常只在有限的任务集上得到验证,因此并未被广泛采用。此外,如 [20] 中所评估的,在突触不对称性的约束下,这些算法在具有挑战性的数据集上的表现令人失望。

We seek to broaden this perspective, and demonstrate the applicability of Direct Feedback Alignment (DFA) [19] in state-of-the-art settings: from applications of fully connected networks such as neural view synthesis and recommend er systems, to geometric learning with graph convolutions, and natural language processing with Transformers. Our results define new standards for learning without weight transport and show that challenging tasks can indeed be tackled under synaptic asymmetry.

我们试图拓宽这一视角,并展示直接反馈对齐 (Direct Feedback Alignment, DFA) [19] 在先进场景中的适用性:从全连接网络的应用(如神经视图合成和推荐系统),到图卷积的几何学习,再到基于 Transformer 的自然语言处理。我们的研究结果为无需权重传递的学习设立了新标准,并证明在突触不对称条件下确实能攻克复杂任务。

34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, Canada.

第34届神经信息处理系统大会 (NeurIPS 2020),加拿大温哥华。

1.1 Related work

1.1 相关工作

Training a neural network is a credit assignment problem: an update is derived for each parameter from its contribution to a cost function. To solve this problem, a spectrum of algorithms exists [21].

训练神经网络是一个信用分配问题:每个参数的更新都源于其对成本函数的贡献。为解决这一问题,存在一系列算法 [21]。

Biologically motivated methods Finding a training method applicable under the constraints of biological brains remains an open problem. End-to-end propagation of gradients is unlikely to occur [22], implying local learning is required. Furthermore, the weight transport problem enforces synaptic asymmetry [6]. Inspired by auto-encoders, target propagation methods (TP) [10–12] train distinct feedback connections to invert the feed forward ones. Feedback alignment (FA) [13] replaces the transpose of the forward weights used in the backward pass by a random matrix. Throughout training, the forward weights learn to align with the arbitrary backward weights, eventually approximating BP.

生物启发方法

在生物大脑的约束条件下寻找适用的训练方法仍是一个开放性问题。梯度的端到端传播不太可能发生 [22],这意味着需要局部学习。此外,权重传输问题强制突触不对称性 [6]。受自编码器启发,目标传播方法 (TP) [10–12] 通过训练独立的反馈连接来反转前馈连接。反馈对齐 (FA) [13] 用随机矩阵替代反向传播中使用的前向权重转置。在整个训练过程中,前向权重逐渐与任意反向权重对齐,最终逼近反向传播 (BP)。

Beyond biological considerations As deep learning models grow bigger, large-scale distributed training is increasingly desirable. Greedy layer-wise training [14] allows networks to be built layer by layer, limiting the depth of back propagation. To enable parallel iz ation of the backward pass, updates must only depend on local quantities. Unsupervised learning is naturally suited for this, as it relies on local losses such as Deep InfoMax [17] and Greedy InfoMax [18]. More broadly, synthetic gradient methods, like decoupled neural interfaces [3, 15] and local error signals (LES) [16], approximate gradients using layer-wise trainable feedback networks, or using reinforcement learning [23]. DFA [19] expands on FA and directly projects a global error to each layer. A shared feedback path is still needed, but it only depends on a simple random projection operation.

超越生物学考量

随着深度学习模型规模扩大,大规模分布式训练日益成为必要。贪婪逐层训练 [14] 使网络能够逐层构建,限制反向传播的深度。为实现反向传播的并行化,更新必须仅依赖于局部量。无监督学习天然适合此场景,因其依赖局部损失函数(如Deep InfoMax [17] 和 Greedy InfoMax [18])。更广泛而言,合成梯度方法(如解耦神经接口 [3,15] 和局部误差信号 (LES) [16])通过逐层可训练反馈网络或强化学习 [23] 来近似梯度。DFA [19] 在FA基础上扩展,直接将全局误差投影至各层。仍需共享反馈路径,但其仅依赖简单的随机投影操作。

Performance of alternative methods Local training methods are successful in unsupervised learning [18]. Even in a supervised setting, they scale to challenging datasets like CIFAR-100 or ImageNet [14, 16]. Thus, locality is not too penalizing. However, FA, and DFA are unable to scale to these tasks [20]. In fact, DFA is unable to train convolutional layers [24], and has to rely on transfer learning in image tasks [25]. To enable feedback alignment techniques to perform well on challenging datasets, some form of weight transport is necessary: either by explicitly sharing sign information [26–28], or by introducing dedicated phases of alignment for the forward and backward weights where some information is shared [29, 30]. To the best of our knowledge, no method compatible with the weight transport problem has ever been demonstrated on challenging tasks.

替代方法的性能

局部训练方法在无监督学习中取得了成功[18]。即使在有监督环境下,它们也能扩展到CIFAR-100或ImageNet等具有挑战性的数据集[14, 16]。因此,局部性并不会造成太大影响。然而,FA(反馈对齐)和DFA(直接反馈对齐)无法扩展到这些任务[20]。事实上,DFA无法训练卷积层[24],在图像任务中必须依赖迁移学习[25]。

为了使反馈对齐技术能在具有挑战性的数据集上表现良好,某种形式的权重传递是必要的:要么通过显式共享符号信息[26–28],要么通过引入前向与后向权重的专用对齐阶段来共享部分信息[29, 30]。据我们所知,目前尚未有任何与权重传递问题兼容的方法在挑战性任务中得到验证。

1.2 Motivations and contributions

1.2 研究动机与贡献

We focus on DFA, a compromise between biological and computational considerations. Notably, DFA is compatible with synaptic asymmetry: this asymmetry raises important challenges, seemingly preventing learning in demanding settings. Moreover, it allows for asynchronous weight updates, and puts a single operation at the center of the training stage. This enables new classes of training co-processors [31, 32], leveraging dedicated hardware to perform the random projection.

我们关注DFA (direct feedback alignment) ,这是一种在生物与计算考量之间的折衷方案。值得注意的是,DFA与突触不对称性兼容:这种不对称性带来了重要挑战,似乎阻碍了在高要求场景下的学习。此外,它支持异步权重更新,并将单一操作置于训练阶段的核心位置。这使得新型训练协处理器 [31, 32] 成为可能,可利用专用硬件执行随机投影。

Extensive survey We apply DFA in a large variety of settings matching current trends in machine learning. Previous works have found that DFA is unsuitable for computer vision tasks [20, 24]; but computer vision alone cannot be the litmus test of a training method. Instead, we consider four vastly different domains, across eight tasks, and with eleven different architectures. This constitutes a survey of unprecedented scale for an alternative training method, and makes a strong case for the possibility of learning without weight transport in demanding scenarios.

广泛实验

我们将DFA应用于多种符合当前机器学习趋势的场景。先前研究发现DFA不适用于计算机视觉任务[20, 24],但仅凭计算机视觉不能作为训练方法的试金石。为此,我们在四个截然不同的领域、八项任务和十一种不同架构中进行验证。这构成了替代训练方法史无前例的大规模实验,有力证明了在严苛场景下无需权重传递(weight transport)的学习可能性。

Challenging settings We demonstrate the ability of DFA to tackle challenging tasks. We successfully learn and render real-world 3D scenes (section 3.1.1); we perform recommendation at scale (section 3.1.2); we explore graph-based citation networks (section 3.2); and we consider language modelling with a Transformer (section 3.3). We study tasks at the state-of-the-art level, that have only been recently successfully tackled with deep learning.

挑战性场景

我们展示了DFA应对挑战性任务的能力。我们成功学习并渲染了真实世界的3D场景(第3.1.1节);实现了大规模推荐系统(第3.1.2节);探索了基于图的引文网络(第3.2节);并研究了使用Transformer进行语言建模(第3.3节)。这些研究均处于前沿水平,其中部分任务直到最近才被深度学习成功攻克。

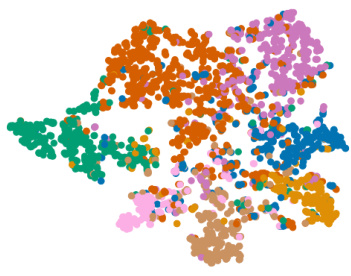

Modern architectures We prove that the previously established failure of DFA to train convolutions does not generalize. By evaluating performance metrics, comparing against a shallow baseline, measuring alignment, and visualizing t-SNE embeddings, we show that learning indeed occurs in layers involving graph convolutions and attention. This significantly broadens the applicability of DFA–previously thought to be limited to simple problems like MNIST and CIFAR-10.

现代架构

我们证明先前关于DFA无法训练卷积的结论并不具有普适性。通过评估性能指标、对比浅层基线、测量对齐度以及可视化t-SNE嵌入,我们证明了涉及图卷积和注意力机制的层确实发生了学习。这显著拓宽了DFA的适用性范围——此前人们认为它仅适用于MNIST和CIFAR-10等简单任务。

2 Methods

2 方法

Forward pass In a fully connected network, at layer $i$ out of $N$ , neglecting its biases, with $\mathbf{W}_ {i}$ its weight matrix, $f_{i}$ its non-linearity, and $\mathbf{h}_{i}$ its activation s, the forward pass is:

前向传播 在全连接网络中,忽略偏置项,设第 $i$ 层(共 $N$ 层)的权重矩阵为 $\mathbf{W}_ {i}$,非线性激活函数为 $f_{i}$,激活值为 $\mathbf{h}_{i}$,则前向传播过程可表示为:

$$

\forall i\in[1,\ldots,N]:\mathbf{a}_ {i}=\mathbf{W}_ {i}\mathbf{h}_ {i-1},\mathbf{h}_ {i}=f_{i}(\mathbf{a}_{i}).

$$

$$

\forall i\in[1,\ldots,N]:\mathbf{a}_ {i}=\mathbf{W}_ {i}\mathbf{h}_ {i-1},\mathbf{h}_ {i}=f_{i}(\mathbf{a}_{i}).

$$

$\mathbf{h}_ {0}=X$ is the input data, and ${\mathbf h}_ {N}=f({\mathbf a}_{N})=\hat{\mathbf y}$ are the predictions. A task-specific cost function $\mathcal{L}(\hat{\mathbf{y}},\mathbf{y})$ is computed to quantify the quality of the predictions with respect to the targets $\mathbf{y}$ .

$\mathbf{h}_ {0}=X$ 是输入数据,${\mathbf h}_ {N}=f({\mathbf a}_{N})=\hat{\mathbf y}$ 是预测值。通过计算任务特定的成本函数 $\mathcal{L}(\hat{\mathbf{y}},\mathbf{y})$ 来量化预测结果相对于目标值 $\mathbf{y}$ 的质量。

Backward pass with BP The weight updates are computed by back propagation of the error vector. Using the chain-rule of derivatives, each neuron is updated based on its contribution to the cost function. Leaving aside the specifics of the optimizer used, the equation for the weight updates is:

基于BP的反向传播

误差向量通过反向传播计算权重更新。利用导数的链式法则,每个神经元根据其对代价函数的贡献进行更新。不考虑所用优化器的具体细节,权重更新的公式为:

$$

\delta\mathbf{W}_ {i}=-\frac{\partial\mathcal{L}}{\partial\mathbf{W}_ {i}}=-[(\mathbf{W}_ {i+1}^{T}\delta\mathbf{a}_ {i+1})\odot f_{i}^{\prime}(\mathbf{a}_ {i})]\mathbf{h}_ {i-1}^{T},\delta\mathbf{a}_ {i}=\frac{\partial\mathcal{L}}{\partial\mathbf{a}_{i}}

$$

$$

\delta\mathbf{W}_ {i}=-\frac{\partial\mathcal{L}}{\partial\mathbf{W}_ {i}}=-[(\mathbf{W}_ {i+1}^{T}\delta\mathbf{a}_ {i+1})\odot f_{i}^{\prime}(\mathbf{a}_ {i})]\mathbf{h}_ {i-1}^{T},\delta\mathbf{a}_ {i}=\frac{\partial\mathcal{L}}{\partial\mathbf{a}_{i}}

$$

Backward pass with DFA The gradient signal $\mathbf{W}_ {i+1}^{T}\delta\mathbf{a}_ {i+1}$ of the $(\mathrm{i}{+}1)$ -th layer violates synaptic asymmetry. DFA replaces it with a random projection of the topmost derivative of the loss, $\delta\mathbf{a}_ {y}$ . For common classification and regression losses such as the mean squared error or the negative log likelihood, this corresponds to a random projection of the global error $\mathbf{e}=\hat{\mathbf{y}}-\mathbf{y}$ . With $B_{i}$ , a fixed random matrix of appropriate shape drawn at initialization for each layers:

基于DFA的反向传播

第$(i{+}1)$层的梯度信号$\mathbf{W}_ {i+1}^{T}\delta\mathbf{a}_ {i+1}$违背了突触对称性。DFA将其替换为损失函数最高层导数$\delta\mathbf{a}_ {y}$的随机投影。对于均方误差或负对数似然等常见分类回归损失函数,这相当于对全局误差$\mathbf{e}=\hat{\mathbf{y}}-\mathbf{y}$进行随机投影。其中$B_{i}$是初始化时为每层生成的固定形状随机矩阵:

$$

\delta\mathbf{W}_ {i}=-[(\mathbf{B}_ {i}\delta\mathbf{a}_ {y})\odot f_{i}^{\prime}(\mathbf{a}_ {i})]\mathbf{h}_ {i-1}^{T},\delta\mathbf{a}_ {y}=\frac{\partial\mathcal{L}}{\partial\mathbf{a}_{y}}

$$

$$

\delta\mathbf{W}_ {i}=-[(\mathbf{B}_ {i}\delta\mathbf{a}_ {y})\odot f_{i}^{\prime}(\mathbf{a}_ {i})]\mathbf{h}_ {i-1}^{T},\delta\mathbf{a}_ {y}=\frac{\partial\mathcal{L}}{\partial\mathbf{a}_{y}}

$$

We provide details in appendix $\mathrm{^C}$ regarding adapting DFA beyond fully-connected layers.

我们在附录 $\mathrm{^C}$ 中提供了关于将DFA (Direct Feedback Alignment) 应用于全连接层之外的详细说明。

3 Experiments

3 实验

We study the applicability of DFA to a diverse set of applications requiring state-of-the-art architectures. We start with fully connected networks, where DFA has already been demonstrated, and address new challenging settings. We then investigate geometric learning: we apply DFA to graph neural networks in classification tasks on citation networks, as well as graph auto encoders. These architectures feature graph convolutions and attention layers. Finally, we use DFA to train a transformer-based Natural Language Processing (NLP) model on a dataset of more than 100 million tokens.

我们研究了直接反馈对齐(DFA)在需要最先进架构的多样化应用中的适用性。首先从已证实DFA有效的全连接网络出发,探讨了更具挑战性的新场景。随后研究了几何学习领域:将DFA应用于引文网络分类任务中的图神经网络(graph neural networks)和图自编码器(graph auto encoders),这些架构包含图卷积(graph convolutions)和注意力层(attention layers)。最后,我们使用DFA在超过1亿token的数据集上训练了基于Transformer的自然语言处理(NLP)模型。

3.1 Fully connected architectures

3.1 全连接架构

DFA has been successful at training fully connected architectures, with performance on-par with back propagation [19, 20]. However, only computer vision tasks have been considered, where fully connected networks considerably under perform their convolutional counterpart. Here, we focus on tasks where fully connected architectures are state-of-the-art. Moreover, the architectures considered are deeper and more complex than those necessary to solve a simple task like MNIST.

DFA 在训练全连接架构方面取得了成功,其性能与反向传播相当 [19, 20]。然而,此前仅考虑了计算机视觉任务,其中全连接网络的性能明显低于卷积网络。本文聚焦于全连接架构处于领先水平的任务。此外,所研究的架构比解决 MNIST 等简单任务所需的网络更深、更复杂。

3.1.1 Neural view synthesis with Neural Radiance Fields

3.1.1 基于神经辐射场 (Neural Radiance Fields) 的神经视图合成

The most recent state-of-the-art neural view synthesis methods are based on large fully connected networks: this is an ideal setting for a first evaluation of DFA on a challenging task.

最先进的神经视图合成方法基于大型全连接网络:这是对DFA在挑战性任务上进行初步评估的理想场景。

Background There has been growing interest in methods capable of synthesising novel renders of a 3D scene using a dataset of past renders. The network is trained to learn an inner representation of the scene, and a classical rendering system can then query the model to generate novel views. With robust enough methods, real-world scenes can also be learned from a set of pictures.

背景

人们对能够利用过往渲染数据集合成3D场景新视图的方法兴趣日益增长。这类网络通过学习场景的内部表征进行训练,随后传统渲染系统可通过查询该模型生成新视角。若方法足够鲁棒,甚至能从一组图片中学习真实世界场景。

Until recently, most successful neural view synthesis methods were based on sampled volumetric representations [33–35]. In this context, Convolutional Neural Networks (CNNs) can be used to smooth out the discrete sampling of 3D space [36, 37]. However, these methods scale poorly to higher resolutions, as they still require finer and finer sampling. Conversely, alternative schemes based on a continuous volume representation have succeeded in generating high-quality renders [38], even featuring complex phenomenons such as view-dependant scattering [39]. These schemes make point-wise predictions, and use fully connected neural networks to encode the scene. Beyond 3D scenes, continuous implicit neural representations can be used to encode audio and images [40].

直到最近,大多数成功的神经视图合成方法都基于采样体积表示 [33-35]。在此背景下,卷积神经网络 (CNN) 可用于平滑3D空间的离散采样 [36, 37]。然而,这些方法难以扩展到更高分辨率,因为它们仍需要越来越精细的采样。相反,基于连续体积表示的替代方案已成功生成高质量渲染 [38],甚至能呈现视角依赖散射等复杂现象 [39]。这些方案采用逐点预测,并使用全连接神经网络对场景进行编码。除3D场景外,连续隐式神经表示还可用于编码音频和图像 [40]。

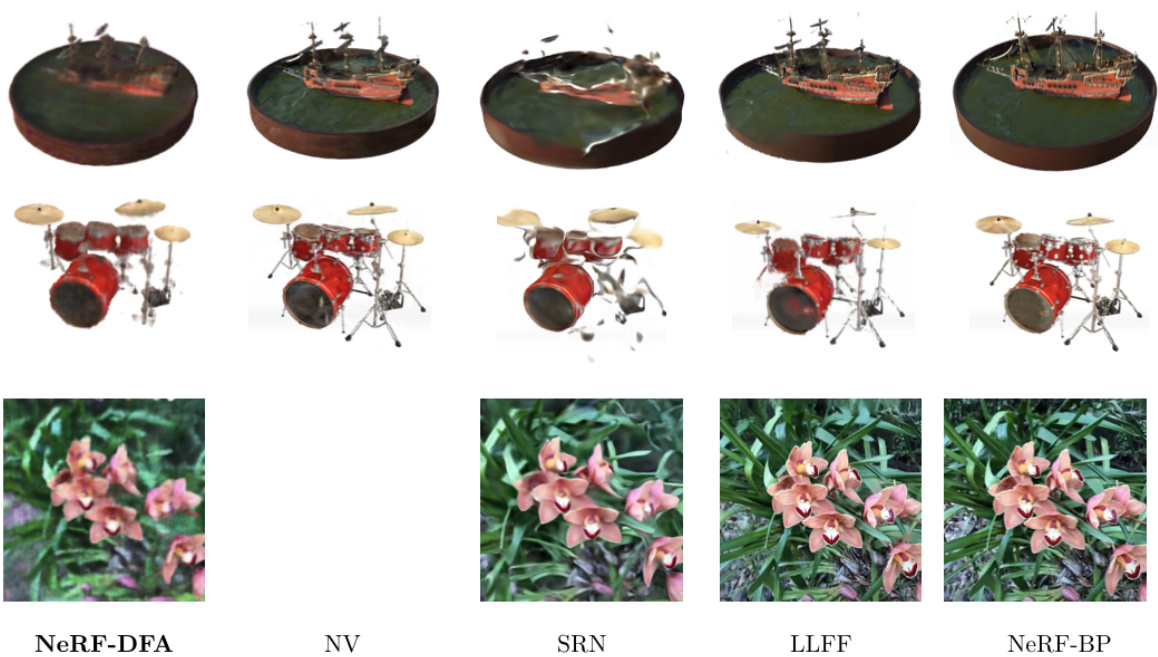

Figure 1: Comparisons of NeRF-DFA with state-of-the-art methods trained with BP on the most challenging synthetic and real-world scenes. While NeRF-DFA generates render of lower quality, they maintain multi-view consistency and exhibit no geometric artefacts. BP results from [39].

图 1: NeRF-DFA与基于反向传播(BP)训练的前沿方法在最具挑战性的合成场景和真实场景下的对比。虽然NeRF-DFA生成的渲染质量较低,但保持了多视角一致性且未出现几何伪影。BP结果来自[39]。

Setting We employ Neural Radiance Fields (NeRF) [39], the state-of-the-art for neural view synthesis. NeRF represents scenes as a continuous 5D function of space–three spatial coordinates, two viewing angles–and outputs a point-wise RGB radiance and opacity. A ray-casting renderer can then query the network to generate arbitrary views of the scene. The network modeling the continuous function is 10 layers deep. Two identical networks are trained: the coarse network predictions inform the renderer about the spatial coordinates that the fine network should preferentially evaluate to avoid empty space and occluded regions.

我们采用神经辐射场 (Neural Radiance Fields, NeRF) [39] 这一神经视图合成领域的最先进技术。NeRF 将场景表示为空间的三维坐标和两个视角方向的连续5D函数,并输出逐点的RGB辐射度和不透明度。随后,光线投射渲染器可通过查询该网络生成场景的任意视图。建模该连续函数的网络深度为10层。训练两个相同结构的网络:粗粒度网络的预测结果会指导渲染器优先让细粒度网络评估哪些空间坐标,以避免空区域和遮挡区域。

Results We report quantitative results of training NeRF with DFA in Table 1. Neural view synthesis methods are often better evaluated qualitatively: we showcase some renders in Figure 1.

结果

我们在表 1 中报告了使用 DFA (Direct Feedback Alignment) 训练 NeRF (Neural Radiance Fields) 的定量结果。神经视角合成方法通常更适合定性评估:我们在图 1 中展示了一些渲染效果。

On a dataset of renders featuring complex scenes with non-Lambertian materials (NeRF-Synthetic [39]), NeRF-DFA outperforms two previous fine-tuned state-of-the-art methods–Scene Representation Networks (SRN) [38] and Local Light Field Fusion (LLFF) [35]–and nearly matches the performance of Neural Volumes (NV) [37]. While DFA under performs alternative methods trained with BP on the real world view dataset (LLFF-Real [35]), its performance remains significant: real world view synthesis is a challenging tasks, and this level of PSNR indicates that learning is indeed happening.

在包含非朗伯材质复杂场景的渲染数据集(NeRF-Synthetic [39])上,NeRF-DFA 的表现优于两种先前经过微调的最先进方法——场景表示网络(SRN)[38] 和局部光场融合(LLFF)[35]——并几乎与神经体积(NV)[37] 的性能相当。尽管 DFA 在真实世界视图数据集(LLFF-Real [35])上的表现不如使用反向传播(BP)训练的其他方法,但其性能仍然显著:真实世界视图合成是一项具有挑战性的任务,而这一水平的 PSNR 表明学习确实在发生。

In particular, we find that NeRF-DFA retains the key characteristics of NeRF-BP: it can render viewdependant effects, and is multi-view consistent. The last point is an especially important achievement, and most visible in the video linked in appendix E, as it is a challenge for most algorithms [33– 35, 38]. The main drawback of NeRF-DFA appears to be a seemingly lower render definition. The

我们发现NeRF-DFA保留了NeRF-BP的关键特性:能够渲染视角相关效果,并保持多视角一致性。最后一点尤为重要(这在附录E链接的视频中最为明显),因为这对大多数算法而言都是个挑战[33-35, 38]。NeRF-DFA的主要缺点似乎是渲染清晰度略显不足。

Table 1: Peak Signal to Noise Ratio (PSNR, higher is better) of neural view synthesis methods trained with back propagation against NeRF trained with DFA. Even when trained with DFA, NeRF outperforms two state-of-the-art methods on a synthetic dataset (NeRF-Synthetic), and achieves fair performance on a challenging real world views datasets (LLFF-Real). BP results from [39].

| NV | SRN | LLFF | NeRF | ||

| BP | BP | BP | BP | DFA | |

| NeRF-Synthetic | 26.05 | 22.26 | 24.88 | 31.01 | 25.41 |

| LLFF-Real | / | 22.84 | 24.13 | 26.50 | 20.77 |

表 1: 基于反向传播训练的神经视图合成方法与基于DFA训练的NeRF在峰值信噪比(PSNR,值越高越好)上的对比。即使采用DFA训练,NeRF在合成数据集(NeRF-Synthetic)上仍优于两种先进方法,并在具有挑战性的真实场景视图数据集(LLFF-Real)上取得不错表现。BP结果来自[39]。

| NV | SRN | LLFF | NeRF | |

|---|---|---|---|---|

| BP | BP | BP | BP | |

| NeRF-Synthetic | 26.05 | 22.26 | 24.88 | 31.01 |

| LLFF-Real | / | 22.84 | 24.13 | 26.50 |

NeRF architecture has not been fine-tuned to achieve these results: DFA works out-of-the-box on this advanced method. Future research focusing on architectural changes to NeRF could improve performance with DFA; some preliminary results are included in appendix E of the supplementary.

NeRF架构并未经过微调来达成这些结果:DFA在这一先进方法上可直接使用。未来针对NeRF架构改进的研究或能提升DFA性能,补充材料附录E中已包含部分初步成果。

3.1.2 Click-through rate prediction with recommend er systems

3.1.2 推荐系统中的点击率预测

We have demonstrated that DFA can train large fully connected networks on the difficult task of neural view synthesis. We now seek to use DFA in more complex heterogeneous architectures, combining the use of fully connected networks with other machine learning methods. Recommend er systems are an ideal application for such considerations.

我们已经证明DFA能够在神经视图合成这一困难任务上训练大型全连接网络。现在,我们尝试将DFA应用于更复杂的异构架构,将全连接网络与其他机器学习方法相结合。推荐系统正是这类应用的理想场景。

Background Recommend er systems are used to model the behavior of users and predict future interactions. In particular, in the context of click-through rate (CTR) prediction, these systems model the probability of a user clicking on a given item. Building recommend er systems is hard [41]: their input is high-dimensional and sparse, and the model must learn to extract high-order combinatorial features from the data. Moreover, they need to do so efficiently, as they are used to make millions of predictions and the training data may contain billions of examples.

背景

推荐系统用于建模用户行为并预测未来交互。特别是在点击率 (CTR) 预测场景中,这些系统会建模用户点击特定内容的概率。构建推荐系统具有挑战性 [41]:其输入是高维且稀疏的,模型必须学会从数据中提取高阶组合特征。此外,系统需要高效运行,因为它们需要处理数百万次预测任务,且训练数据可能包含数十亿样本。

Factorization Machines (FM) [42] use inner-products of latent vectors between features to extract pairwise feature interactions. They constitute an excellent baseline for shallow recommend er systems, but fail to efficiently transcribe higher-level features. To avoid extensive feature engineering, it has been suggested that deep learning can be used in conjunction with wide shallow models to extract these higher-level features [43]. In production, these systems are regularly retrained on massive datasets: the speedup allowed by backward unlocking in DFA is thus of particular interest.

因子分解机 (Factorization Machines, FM) [42] 通过特征间潜在向量的内积来提取成对特征交互。它们为浅层推荐系统提供了优秀的基线模型,但无法有效刻画高阶特征。为避免大量特征工程,研究建议将深度学习与宽浅层模型结合来提取这些高阶特征 [43]。在生产环境中,这类系统需定期基于海量数据集重新训练:因此 DFA 中反向解锁带来的加速效果尤为重要。

Setting Deep Factorization Machines (DeepFM) [44] combine FM and a deep fully connected neural network, which we train with DFA. The input embedding is still trained directly via gradient descent, as weight transport is not necessary to back propagate through the FM. Deep & Cross Networks (DCN) [45] replace the FM with a Cross Network, a deep architecture without nonlinear i ties capable of extracting high-degree interactions across features. We train the fully connected network, the deep cross network, and the embeddings with DFA. Finally, Adaptative Factorization Network (AFN) [46] uses Logarithmic Neural Networks [47] to enhance the representational power of its deep component. We evaluate these methods on the Criteo dataset [48], which features nearly 46 million samples of one million sparse features. This is a difficult task, where performance improvements of the AUC on the 0.001-level can enhance CTR significantly [43].

深度分解机 (DeepFM) [44] 将FM与深度全连接神经网络相结合,我们使用DFA对其进行训练。输入嵌入仍通过梯度下降直接训练,因为FM的反向传播不需要权重传输。深度交叉网络 (DCN) [45] 用交叉网络替代FM,这是一种无非线性约束的深度架构,能够提取特征间的高阶交互。我们使用DFA训练全连接网络、深度交叉网络和嵌入层。最后,自适应分解网络 (AFN) [46] 采用对数神经网络 [47] 来增强其深层组件的表征能力。我们在Criteo数据集 [48] 上评估这些方法,该数据集包含近4600万样本和100万稀疏特征。这是一项具有挑战性的任务,AUC提升0.001级别即可显著改善CTR [43]。

Results Performance metrics are reported in Table 2. To obtain these results, a simple hyperparameter grid search over optimization and regular iz ation parameters was performed for BP and DFA independently. DFA successfully trains all methods above the FM baseline, and in fact matches BP performance in both DeepFM and AFN. Because of their complexity, recommend er systems require intensive tuning and feature engineering to perform at the state-of-the-art level–and reproducing existing results can be challenging [49]. Hence, it is not surprising that a performance gap exists with Deep&Cross–further fine-tuning may be necessary for DFA to reach BP performance.

结果

性能指标如表 2 所示。为了获得这些结果,我们分别对 BP (Backpropagation) 和 DFA (Direct Feedback Alignment) 进行了简单的超参数网格搜索,涵盖优化和正则化参数。DFA 成功训练了所有方法,性能均超过 FM (Factorization Machines) 基线,甚至在 DeepFM 和 AFN (Adaptive Factorization Network) 中与 BP 表现相当。由于推荐系统的复杂性,要达到最先进水平需要大量调参和特征工程 [49],因此复现现有结果可能具有挑战性。Deep&Cross 存在性能差距并不意外,DFA 可能需要进一步微调才能达到 BP 的水平。

Alignment measurements corroborate that learning is indeed occurring in the special layers of Deep&Cross and AFN–see appendix A of the supplementary for details. Our results on recommend er systems support that DFA can learn in a large variety of settings, and that weight transport is not necessary to solve a difficult recommendation task.

对齐测量结果证实,Deep&Cross和AFN的特殊层确实发生了学习行为(详见补充材料附录A)。我们在推荐系统上的实验结果表明,DFA (Direct Feedback Alignment) 能够在多种场景中有效学习,且无需权重传输即可解决复杂的推荐任务。

Table 2: AUC (higher is better) and log loss (lower is better) of recommend er systems trained on the Criteo dataset [48]. Even in complex heterogeneous architectures, DFA performance is in line with BP. Values in bold indicate DFA AUC within 0.001 from the BP AUC or better.

| FM | DeepFM | Deep&Cross | AFN | ||||

| BP | DFA | BP | DFA | BP | DFA | ||

| AUC | 0.7915 | 0.7954 | 0.7956 | 0.8104 | 0.8009 | 0.7933 | 0.7924 |

| Loss | 0.4687 | 0.4610 | 0.4624 | 0.4414 | 0.4502 | 0.4630 | 0.4621 |

表 2: 在Criteo数据集[48]上训练的推荐系统的AUC(越高越好)和log loss(越低越好)。即使在复杂的异构架构中,DFA性能也与BP相当。加粗数值表示DFA的AUC与BP的AUC相差不超过0.001或更优。

| FM | DeepFM | DeepFM | Deep&Cross | Deep&Cross | AFN | AFN | |

|---|---|---|---|---|---|---|---|

| BP | DFA | BP | DFA | BP | DFA | ||

| AUC | 0.7915 | 0.7954 | 0.7956 | 0.8104 | 0.8009 | 0.7933 | 0.7924 |

| Loss | 0.4687 | 0.4610 | 0.4624 | 0.4414 | 0.4502 | 0.4630 | 0.4621 |

3.2 Geometric Learning with Graph Convolutional Networks

3.2 基于图卷积网络的几何学习

The use of sophisticated architectures beyond fully connected layers is necessary for certain tasks, such as geometric learning [50], where information lies in a complex structured domain. To address geometric learning tasks, methods capable of handling graph-based data are commonly needed. Graph convolutional neural networks (GCNNs) [51–54] have demonstrated the ability to process large-scale graph data efficiently. We study the applicability of DFA to these methods, including recent architectures based on an attention mechanism. Overall, this is an especially interesting setting, as DFA fails to train more classic 2D image convolutional layers [24].

规则:

- 输出中文翻译部分时仅保留翻译标题,无冗余内容

- 保持原始段落格式与术语(如FLAC、JPEG)

- 保留公司缩写(Microsoft/Amazon/OpenAI)及人名

- 保留文献引用标记(如[20])

- 图表标题格式转换(Figure 1:→图1:)

- 全角括号替换为半角括号并添加空格

- 专业术语首现标注英文(如生成式AI(Generative AI))

- 使用AI术语对照表(如LLM→大语言模型)

翻译结果:

超越全连接层的复杂架构对于某些任务是必要的,例如几何学习[50],这类任务的信息存在于复杂的结构化领域中。为解决几何学习任务,通常需要能够处理基于图数据的方法。图卷积神经网络(GCNNs)[51–54]已证明能高效处理大规模图数据。我们研究了直接反馈对齐(DFA)在这些方法中的适用性,包括基于注意力机制的最新架构。总体而言,这是特别值得关注的场景,因为DFA无法训练更传统的2D图像卷积层[24]。

Background Complex data like social networks or brain connect ome s lie on irregular or nonEuclidean domains. They can be represented as graphs, and efficient processing in the spectral domain is possible. Non-spectral techniques to apply neural networks to graphs have also been developed [55–57], but they exhibit unfavorable scaling properties. The success of CNNs in deep learning can be attributed to their ability to efficiently process structured high-dimensional data by sharing local filters. Thus, a generalization of the convolution operator to the graph domain is desirable: [51] first proposed a spectral convolution operation for graphs, and [52] introduced a form of regular iz ation to enforce spatial locality of the filters. We use DFA to train different such GCNNs implementations. We study both spectral and non-spectral convolutions, as well as methods inspired by the attention mechanism. We consider the task of semi-supervised node classification: nodes from a graph are classified using their relationship to other nodes as well as node-wise features.

背景

社交网络或脑连接组等复杂数据存在于不规则或非欧几里得域中。它们可以表示为图(graph),并能在谱域(spectral domain)中进行高效处理。虽然已有研究开发了将神经网络应用于图的非谱方法(non-spectral techniques) [55–57],但这些方法存在扩展性不足的问题。CNN在深度学习中的成功可归因于其通过共享局部滤波器高效处理结构化高维数据的能力。因此,有必要将卷积算子推广到图域(graph domain):[51]首次提出了图的谱卷积操作(spectral convolution operation),[52]则引入了一种正则化形式来增强滤波器的空间局部性。我们使用DFA训练不同的图卷积神经网络(GCNN)实现,同时研究谱卷积与非谱卷积,以及受注意力机制启发的方法。我们考虑半监督节点分类任务(semi-supervised node classification):利用图中节点与其他节点的关系及节点特征对节点进行分类。

Setting Fast Localized Convolutions (ChebConv) [53] approximate the graph convolution kernel with Chebyshev polynomials, and are one of the first scalable convolution methods on graph. Graph Convolutions (GraphConv) [54] remove the need for an explicit para me tri z ation of the kernel by enforcing linearity of the convolution operation on the graph Laplacian spectrum. It is often considered as the canonical graph convolution. More recent methods do not operate in the spectral domain. Spline Convolutions (SplineConv) [58] use a spline-based kernel, enabling the inclusion of information about the relative positioning of nodes, enhancing their representational power–for instance in the context of 3D meshes. Graph Attention Networks (GATConv) [59] use self-attention [60] layers to enable predictions at a given node to attend more specifically to certain parts of its neighborhood. Finally, building upon Jumping Knowledge Network [61], Just Jump (DNAConv) [62] uses multihead attention [63] to enhance the aggregation process in graph convolutions and enable deeper architectures. Note our implementation of DFA allows for limited weight transport within attention – see appendix D. We use PyTorch Geometric [64] for implementation of all of these methods. We evaluate performance on three citation network datasets: Cora, CiteSeer, and PubMed [65].

规则:

- 快速局部卷积 (ChebConv) [53] 使用切比雪夫多项式近似图卷积核,是最早可扩展的图卷积方法之一。

- 图卷积 (GraphConv) [54] 通过强制图拉普拉斯谱上卷积操作的线性特性,消除了对核显式参数化的需求,通常被视为标准图卷积方法。

- 近期方法不再局限于谱域操作:

- 样条卷积 (SplineConv) [58] 采用基于样条的核函数,可整合节点相对位置信息(例如在3D网格场景中增强表征能力)

- 图注意力网络 (GATConv) [59] 利用自注意力机制 [60],使节点预测能更聚焦于邻域的特定部分

- Just Jump (DNAConv) [62] 基于跳跃知识网络 [61],通过多头注意力 [63] 增强图卷积的聚合过程并支持更深层架构(注:我们的DFA实现允许注意力机制内有限权重传输,详见附录D)

- 所有方法均通过PyTorch Geometric [64] 实现,并在三个引文网络数据集(Cora、CiteSeer和PubMed [65])上评估性能

Results We report classification accuracy in Table 3. BP and DFA regular iz ation and optimization hyper parameters are fine-tuned separately on the Cora dataset. In general, we find that less regular iz ation and lower learning rates are needed with DFA. DFA successfully trains all graph methods, independent of whether they use the spectral domain or not, and even if they use attention. Furthermore, for GraphConv, SplineConv, and GATConv DFA performance nearly matches BP.

结果

我们在表3中报告了分类准确率。BP和DFA的正则化及优化超参数在Cora数据集上分别进行了微调。总体而言,我们发现DFA所需的正则化更少且学习率更低。DFA成功训练了所有图方法,无论它们是否使用谱域,甚至包括使用注意力机制的模型。此外,对于GraphConv、SplineConv和GATConv,DFA的性能几乎与BP相当。

As GCNNs struggle with learning meaningful representations when stacking many layers [66], all architectures but DNAConv are quite shallow (two layers). However, DFA performance is still significantly higher than that of a shallow training method–see appendix B for details. The lower performance on DNAConv is not a failure to learn: alignment measurements in appendix A show that learning is indeed occurring. It may be explained instead by a need for more in-depth fine-tuning, as this is a deep architecture with 5 successive attention layers.

由于GCNN在堆叠多层时难以学习有意义的表示[66],除DNAConv外的所有架构都相当浅(仅两层)。然而DFA性能仍显著高于浅层训练方法(详见附录B)。DNAConv的较低性能并非学习失败:附录A中的对齐测量表明学习确实在发生。这可能是因为需要更深入的微调,因为该架构具有5个连续的注意力层。

Table 3: Classification accuracy $%$ , higher is better) of graph convolution methods trained with BP and DFA, on citation networks [65]. But for ChebConv and DNAConv, DFA performance nearly matches BP performance. Values in bold when DFA is within $2.5%$ of BP.

| ChebConv | GraphConv | SplineConv | GATConv | DNAConv | ||||||

| BP | DFA | BP | DFA | BP | DFA | BP | DFA | BP | DFA | |

| Cora | 79.2 | 75.4 | 80.1 | 79.9 | 81.0 | 77.7 | 82.6 | 80.6 | 84.6 | 82.9 |

| CiteSeer | 69.5 | 67.6 | 71.6 | 69.4 | 70.0 | 69.8 | 72.0 | 71.2 | 73.4 | 70.8 |

| PubMed | 79.5 | 75.7 | 78.8 | 77.8 | 77.5 | 77.2 | 77.7 | 77.1 | 87.2 | 79.9 |

表 3: 图卷积方法在引用网络[65]上使用BP和DFA训练的分类准确率(单位:%,越高越好)。但对于ChebConv和DNAConv,DFA性能几乎与BP性能相当。当DFA与BP相差在2.5%以内时,数值加粗显示。

| ChebConv | GraphConv | SplineConv | GATConv | DNAConv | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| BP | DFA | BP | DFA | BP | DFA | BP | DFA | BP | DFA | |

| Cora | 79.2 | 75.4 | 80.1 | 79.9 | 81.0 | 77.7 | 82.6 | 80.6 | 84.6 | 82.9 |

| CiteSeer | 69.5 | 67.6 | 71.6 | 69.4 | 70.0 | 69.8 | 72.0 | 71.2 | 73.4 | 70.8 |

| PubMed | 79.5 | 75.7 | 78.8 | 77.8 | 77.5 | 77.2 | 77.7 | 77.1 | 87.2 | 79.9 |

Table 4: AUC and Average Precision (AP, higher is better) for a GraphConv GAE trained with BP or DFA on citation networks. DFA reproduces BP performance.

| Cora | GAE BP DFA | |

| AUC 0.918 | 0.900 | |

| CiteSeer | AP AUC | 0.918 0.900 0.886 |

| AP | 0.879 0.895 0.889 | |

| PubMed | AUC AP | 0.967 0.945 0.966 0.945 |

表 4: 在引文网络上使用BP或DFA训练的GraphConv GAE的AUC和平均精度(AP, 越高越好)。DFA复现了BP的性能。

| Cora | GAE BP DFA |

|---|---|

| AUC 0.918 | 0.900 |

| CiteSeer | AP AUC |

| 0.918 0.900 0.886 | |

| AP | |

| 0.879 0.895 0.889 | |

| PubMed | AUC AP |

| 0.967 0.945 0.966 0.945 |

Figure 2: t-SNE visualization of the hidden layer activation s of a two-layer GraphConv trained on Cora with DFA. Classes forms clear clusters, indicating that a useful intermediary representation is learned. Colors represent different classes.

图 2: 在 Cora 数据集上使用 DFA (Direct Feedback Alignment) 训练的双层 GraphConv 隐藏层激活的 t-SNE 可视化。不同类别形成清晰聚类,表明学习到了有效的中间表征。颜色代表不同类别。

We further demonstrate that DFA helps graph convolutions learn meaningful representations by aplying t-SNE [67, 68] to the hidden layer activation s in GraphConv (Figure 2). Cluster of classes are well-separated, indicating that a useful intermediary representation is derived by the first layer.

我们进一步证明,通过将t-SNE [67, 68]应用于GraphConv中的隐藏层激活(图2),DFA有助于图卷积学习有意义的表征。类别簇被很好地分离,表明第一层导出了有用的中间表征。

Graph auto encoders We consider one last application of graph convolutions, in the context of graph auto encoders (GAE). We train a non-probabilistic GAE [69] based on GraphConv with DFA, and report results in Table 4. DFA performance is always in line with BP.

图自编码器

我们考虑图卷积在图自编码器 (GAE) 中的最后一个应用。我们基于 GraphConv 和 DFA 训练了一个非概率 GAE [69],并在表 4 中报告了结果。DFA 的表现始终与 BP 一致。

3.3 Natural Language Processing with Transformers

3.3 基于Transformer的自然语言处理

We complete our study by training a Transformer [63] on a language modelling task. Transformers have proved successful in text, image, music generation, machine translation, and many supervised NLP tasks [63, 70–73]. Here, we demonstrate that DFA can train them, and we show the influence of tuning the optimizer hyper parameters in narrowing the gap with BP.

我们通过在大语言模型任务上训练一个Transformer [63]来完成研究。Transformer已在文本、图像、音乐生成、机器翻译及众多监督式NLP任务中取得显著成功[63, 70–73]。本文证明DFA算法能够有效训练Transformer,并通过调整优化器超参数缩小了与反向传播(BP)的性能差距。

Background NLP has largely benefited from advances in deep learning. Recurrent Neural Networks were responsible for early breakthroughs, but their sequential nature prevented efficient parallel iz ation of data processing. Transformers are attention-based models that do not rely on recurrence or convolution. Their ability to scale massively has allowed the training of models with several billion parameters [74, 75], obtaining state-of-the-art results on all NLP tasks: Transformers now top the prominent SQuAD 2.0 [76, 77] and SuperGLUE [78] benchmarks. In parallel, transfer learning in NLP has leaped forward thanks to language modelling, the unsupervised task of predicting the next word. It can leverage virtually unlimited data from web scraping [79]. This enabled the training of universal language models [80] on extremely large and diversified text corpora. These models are useful across a wide range of domains, and can solve most NLP tasks after fine-tuning.

背景

自然语言处理(NLP)在很大程度上受益于深度学习的进步。循环神经网络(RNN)曾带来早期突破,但其顺序特性阻碍了数据处理的并行化。Transformer是基于注意力机制的模型,不依赖循环或卷积结构。其强大的扩展能力使得训练具有数十亿参数的模型成为可能[74,75],并在所有NLP任务中取得最先进成果:Transformer目前主导着著名的SQuAD 2.0[76,77]和SuperGLUE[78]基准测试。与此同时,得益于语言建模(预测下一个词的无监督任务),NLP中的迁移学习实现了飞跃。这种方法可以利用网络爬取的海量数据[79],从而能够在极大规模且多样化的文本语料库上训练通用语言模型[80]。这些模型适用于广泛领域,经过微调后能解决大多数NLP任务。

Setting The prominence of both language modelling and Transformers gives us the ideal candidate for our NLP experiments: we train a Transformer to predict the next word on the WikiText-103 dataset [81], a large collection of good and featured Wikipedia articles. We use byte-pair-encoding [82] with 32,000 tokens. We adopt a Generative Pre-Training (GPT) setup [70]: we adapt the Transformer, originally an encoder-decoder model designed for machine translation, to language modelling. We keep only the encoder and mask the tokens to predict. Our architecture consists in 6 layers, 8 attention heads, a model dimension of 512, and a hidden size of 2048 in the feed-forward blocks. The text is sliced in chunks of 128 tokens and batches of 64 such chunks, resulting in 8192 tokens per batch. Our baseline is trained with BP using the optimization setup of [63]. We found perplexity after 20 epochs to be an excellent indicator of perplexity at convergence; to maximize the number of experiments we could perform, we report the best validation perplexity after 20 epochs. We study two ways of implementing DFA: applying the feedback after every encoder block (macro) or after every layer in those blocks (micro). The macro setting enables weight transport at the block-scale, and some weight transport remain in the micro setting as well: to train the input embeddings layer, by back propagation through the first encoder block, and for the values matrices in attention – see Appendix $\mathrm{D}$ for details.

设定

语言建模和Transformer的突出地位为我们的NLP实验提供了理想候选方案:我们在WikiText-103数据集[81](一个优质精选维基百科文章的大型集合)上训练Transformer来预测下一个词。我们使用包含32,000个token的字节对编码(BPE)[82],并采用生成式预训练(GPT)配置[70]:将原本为机器翻译设计的编码器-解码器架构的Transformer适配至语言建模任务。我们仅保留编码器并对待预测token进行掩码处理。模型架构包含6层、8个注意力头、512维模型维度,以及前馈块中2048维的隐藏层大小。文本被切分为128个token的片段,每批处理64个此类片段,形成每批8192个token的规模。

基线模型采用[63]的优化配置进行反向传播(BP)训练。我们发现20轮训练后的困惑度能有效反映收敛时的困惑度;为最大化实验数量,我们报告20轮后最佳验证困惑度。我们研究了两种实现直接反馈对齐(DFA)的方法:在每编码器块后施加反馈(宏观)或在块内每层后施加反馈(微观)。宏观设置支持块级权重传输,而微观设置中仍保留部分权重传输机制:通过首编码器块的反向传播训练输入嵌入层,以及注意力机制中的值矩阵——详见附录$\mathrm{D}$。

Table 5: Best validation perplexity after 20 epochs of a Transformer trained on WikiText-103 (lower is better). The BP and DFA baselines share all hyper-parameters. In Macro the feedback is applied after every transformer layer, implying weight transport at the layer-scale, while in Micro the feedback is applied after every sub-layer. The learning rate of Adam without the learning rate scheduler is $5.10^{-5}$ . With the scheduler, the initial learning rate is $1.10^{-4}$ and it is multiplied by 0.2 when performance plateaus, with a patience of 1. * score after 22 epochs to let the learning rate scheduler take effect

| DFA | BP | |||||

| Baseline | +Adam | + β2 = 0.999 | + LR schedule | Baseline | + β2 = 0.999 | |

| Macro | 95.0 | 77.1 | 55.0 | 52.0 | 34.4 | 29.8 |

| Micro | 182 | 166 | 99.9 | 93.3* | ||

表 5: 在 WikiText-103 上训练 20 个周期后的最佳验证困惑度 (越低越好) 。BP 和 DFA 基线共享所有超参数。Macro 模式下反馈应用于每个 Transformer 层后 (隐含层尺度权重传递) ,Micro 模式下反馈应用于每个子层后。未使用学习率调度器的 Adam 学习率为 $5.10^{-5}$ 。使用调度器时初始学习率为 $1.10^{-4}$ ,性能停滞时乘以 0.2 (耐心值为 1) 。* 表示运行 22 个周期以让学习率调度器生效后的分数

| DFA | BP | |||||

|---|---|---|---|---|---|---|

| Baseline | +Adam | + β2=0.999 | + LR schedule | Baseline | + β2=0.999 | |

| Macro | 95.0 | 77.1 | 55.0 | 52.0 | 34.4 | 29.8 |

| Micro | 182 | 166 | 99.9 | 93.3* | 34.4 | 29.8 |

Results Our results are summarized in Table 5. Hyper-parameters fine-tuned for BP did not fare well with DFA, but changes in the optimizer narrowed the gap between BP and DFA considerably. The learning rate schedule used on top of Adam [83] in [63] proved detrimental. Using Adam alone required reducing the learning rate between BP and DFA. Increasing $\beta_{2}$ from 0.98 [63] to 0.999 improved performance significantly. Finally, a simple scheduler that reduces the learning rate when the validation perplexity plateaus helped reducing it further. Considering that the perplexity of the shallow baseline is over 400, DFA is clearly able to train Transformers. However, our results are not on par with BP, especially in the micro setting. A substantial amount of work remains to make DFA competitive with BP, even more so in a minimal weight transport scenario. The large performance improvements brought by small changes in the optimizer indicate that intensive fine-tuning, common in publications introducing state-of-the-art results, could close the gap between BP and DFA.

结果 我们的结果总结在表5中。为反向传播(BP)优化的超参数在直接反馈对齐(DFA)中表现不佳,但优化器的调整显著缩小了BP与DFA之间的差距。文献[63]中在Adam[83]基础上采用的学习率调度策略被证明是有害的。单独使用Adam时需要在BP和DFA之间降低学习率。将$\beta_{2}$从0.98[63]提升至0.999带来了显著的性能改善。最后,当验证困惑度趋于平稳时降低学习率的简单调度器进一步缩小了差距。考虑到浅层基线的困惑度超过400,DFA显然能够训练Transformer模型。然而我们的结果仍落后于BP,尤其在微观设置中。要使DFA与BP具有竞争力仍需大量工作,在最小权重传输场景中更是如此。优化器微小改动带来的大幅性能提升表明,引入最先进成果的论文中常见的密集调参可能弥合BP与DFA之间的差距。

4 Conclusion and outlooks

4 结论与展望

We conducted an extensive study demonstrating the ability of DFA to train modern architectures. We considered a broad selection of domains and tasks, with complex models featuring graph convolutions and attention. Our results on large networks like NeRF and Transformers are encouraging, suggesting that with further tuning, such leading architectures can be effectively trained with DFA. Future work on principled training with DFA–in particular regarding the influence of common practices and whether new procedures are required–will help close the gap with BP.

我们开展了一项广泛研究,证明了直接反馈对齐(DFA)训练现代架构的能力。研究覆盖了多个领域和任务,采用包含图卷积和注意力机制的复杂模型。在NeRF和Transformer等大型网络上的实验结果令人鼓舞,表明通过进一步调优,DFA能有效训练这类前沿架构。未来关于DFA规范化训练的研究——特别是常规实践的影响以及是否需要新流程——将有助于缩小与反向传播(BP)的差距。

More broadly, we verified for the first time that learning under synaptic asymmetry is possible beyond fully-connected layers, and in tasks significantly more difficult than previously considered. This addresses a notable concern in biologically-plausible architectures. DFA still requires an implausible global feedback pathway; however, local training has already been demonstrated at scale. The next step towards biologically-compatible learning is a local method without weight transport.

更广泛地说,我们首次验证了在突触不对称性下学习不仅限于全连接层,还能应用于比以往研究更复杂的任务。这解决了生物合理性架构中一个显著问题。虽然DFA仍需依赖不合理的全局反馈通路,但局部训练已在大规模场景中得到验证。实现生物兼容学习的下一步是开发无需权重传递的局部训练方法。

While the tasks and architectures we have considered are not biologically inspired, they constitute a good benchmark for behavioural realism [20]. Any learning algorithm claiming to approximate the brain should reproduce its ability to solve complex and unseen task. Furthermore, even though the current implementation of mechanisms like attention is devoid of biological considerations, they represent broader concepts applicable to human brains [84]. Understanding how our brain learns is a gradual process, and future research could incorporate further realistic elements, like spiking neurons.

虽然我们所考虑的任务和架构并非受生物学启发,但它们构成了衡量行为真实性的良好基准 [20]。任何声称能近似大脑的学习算法,都应重现其解决复杂未知任务的能力。此外,尽管当前注意力等机制的实现未考虑生物学因素,但它们代表了适用于人脑的更广泛概念 [84]。理解大脑的学习机制是一个渐进过程,未来研究可融入更多现实元素(如脉冲神经元)。

Finally, unlocking the backward pass in large architectures like Transformers is promising. More optimized implementation of DFA–built at a lower-level of existing ML libraries–could unlock significant speed-up. Leveraging the use of a single random projection as the cornerstone of training, dedicated accelerators may employ more exotic hardware architectures. This will open new possibilities in the asynchronous training of massive models.

最后,在Transformer等大型架构中解锁反向传递过程前景广阔。通过更低层级优化现有机器学习库中的直接反馈对齐(DFA)实现,可能带来显著的加速效果。以单一随机投影作为训练基石,专用加速器可采用更前沿的硬件架构,这将为超大规模模型的异步训练开辟新的可能性。

Broader Impact

更广泛的影响

Of our survey This study is the first experimental validation of DFA as an effective training method in a wide range of challenging tasks and neural networks architectures. This significantly broadens the applications of DFA, and more generally brings new insight on training techniques alternative to backpropagation. From neural rendering and recommend er systems, to natural language processing or geometric learning, each of these applications has its own potential impact. Our task selection process was motivated by current trends in deep learning, as well as by technically appealing mechanisms (graph convolutions, attention). A limit of our survey is that our–arguably biased–selection of tasks cannot be exhaustive. Our experiments required substantial cloud compute resources, with state-ofthe-art GPU hardware. Nevertheless, as this study provides new perspectives for hardware accelerator technologies, it may favor the application of neural networks in fields previously inaccessible because of computational limits. Future research on DFA should continue to demonstrate its use in novel contexts of interest as they are discovered.

关于我们的调查

本研究首次通过实验验证了DFA作为一种有效的训练方法在多种具有挑战性的任务和神经网络架构中的适用性。这显著拓宽了DFA的应用范围,并且更广泛地为替代反向传播的训练技术提供了新的见解。从神经渲染和推荐系统,到自然语言处理或几何学习,这些应用中的每一个都具有其潜在的独特影响。我们的任务选择过程受到当前深度学习趋势的驱动,同时也考虑了技术上具有吸引力的机制(如图卷积、注意力机制)。我们调查的一个局限在于,我们可能带有偏见的任务选择无法做到完全全面。我们的实验需要大量的云计算资源,并使用了最先进的GPU硬件。尽管如此,由于这项研究为硬件加速器技术提供了新的视角,它可能有助于神经网络在之前因计算限制而无法涉足的领域中的应用。未来关于DFA的研究应继续探索并展示其在新兴感兴趣领域中的应用。

Of the considered applications Each of the applications considered in our study has a wide potential impact, consider for example the impact of textual bias in pretrained word embeddings [85]. We refer to [86] and references therein for a discussion of ethical concerns of AI applications.

在我们的研究中,所考虑的每个应用都具有广泛的潜在影响,例如预训练词嵌入中的文本偏见影响 [85]。关于AI应用的伦理问题讨论,请参阅 [86] 及其参考文献。

Of DFA as a training method DFA enables parallel iz ation of the backward pass and places a single operation at the center of the training process, opening the prospect of reducing the power consumption of training chips by an order of magnitude [31]. Not only is more efficient training a path to more environmentally responsible machine learning [87], but it may lower the barrier of entry, supporting equality and sustainable development goals. A significant downside of moving from BP to DFA is a far more limited understanding of how to train models and how the trained models behave. There is a clear empirical understanding of the impact of techniques such as batch normalization or skip connections on the performance of BP; new insights need to be obtained for DFA. BP also enjoys decades of works on topics like adversarial attacks, interpret ability, and fairness. Much of this work has to be cross-checked for alternative training methods, something we encourage further research to consider as the next step towards safely and responsively scaling up DFA.

作为训练方法的DFA

DFA实现了反向传播的并行化,并将单一操作置于训练过程的核心,有望将训练芯片的功耗降低一个数量级[31]。高效训练不仅是实现更环保机器学习[87]的途径,还可能降低准入门槛,支持平等与可持续发展目标。

从BP转向DFA的主要缺点是人们对如何训练模型及训练后模型行为的理解更为有限。关于批归一化或跳跃连接等技术对BP性能的影响已有明确的实证认知,而DFA需要获得新的见解。BP还受益于对抗攻击、可解释性和公平性等主题数十年的研究成果。这些工作大多需要针对替代训练方法进行交叉验证,我们鼓励进一步研究考虑将其作为安全、负责任地扩展DFA的下一步。

Of biologically motivated methods Finally, a key motivation for this study was to demonstrate that learning challenging tasks was po