LAMBDA NETWORKS: MODELING LONG-RANGE INTERACTIONS WITHOUT ATTENTION

LAMBDA NETWORKS:无需注意力机制的长程交互建模

Irwan Bello Google Research, Brain team ibello@google.com

Irwan Bello

Google Research, Brain团队

ibello@google.com

ABSTRACT

摘要

We present lambda layers – an alternative framework to self-attention – for capturing long-range interactions between an input and structured contextual information (e.g. a pixel surrounded by other pixels). Lambda layers capture such interactions by transforming available contexts into linear functions, termed lambdas, and applying these linear functions to each input separately. Similar to linear attention, lambda layers bypass expensive attention maps, but in contrast, they model both content and position-based interactions which enables their application to large structured inputs such as images. The resulting neural network architectures, Lambda Networks, significantly outperform their convolutional and attention al counterparts on ImageNet classification, COCO object detection and COCO instance segmentation, while being more computationally efficient. Additionally, we design Lambda Res Nets, a family of hybrid architectures across different scales, that considerably improves the speed-accuracy tradeoff of image classification models. Lambda Res Nets reach excellent accuracies on ImageNet while being $3.2\cdot4.4\mathrm{x}$ faster than the popular Efficient Nets on modern machine learning accelerators. When training with an additional 130M pseudo-labeled images, Lambda Res Nets achieve up to a $\mathbf{9.5x}$ speed-up over the corresponding EfficientNet checkpoints 1.

我们提出lambda层——一种替代自注意力(self-attention)的框架——用于捕获输入与结构化上下文信息之间的长程交互(例如被其他像素包围的单个像素)。lambda层通过将可用上下文转换为线性函数(称为lambda)并分别对每个输入应用这些线性函数,来实现此类交互建模。与线性注意力类似,lambda层绕过了昂贵的注意力图计算,但不同之处在于它们同时建模基于内容和位置的交互,这使得其能够处理图像等大型结构化输入。由此构建的神经网络架构Lambda Networks在ImageNet分类、COCO目标检测和COCO实例分割任务上显著优于卷积和注意力基线模型,同时具有更高的计算效率。此外,我们设计了Lambda Res Nets这一跨不同尺度的混合架构家族,显著改善了图像分类模型的速度-精度权衡。Lambda Res Nets在现代机器学习加速器上比流行的Efficient Nets快$3.2\cdot4.4\mathrm{x}$倍的同时,在ImageNet上达到了优异精度。当使用额外1.3亿张伪标注图像进行训练时,Lambda Res Nets相比对应EfficientNet检查点实现了高达$\mathbf{9.5x}$的加速[1]。

CONTENTS

目录

3 Lambda Layers

3 Lambda层

4 Related Work 8

4 相关工作

5 Experiments 9

5 实验 9

6 Discussion 12

6 讨论 12

A Practical Modeling Recommendations 18

实用建模建议 18

C Additional Related Work 21

C 其他相关工作 21

D Additional Experiments 25

D 补充实验 25

E Experimental Details

E 实验细节

29

29

1 INTRODUCTION

1 引言

Modeling long-range dependencies in data is a central problem in machine learning. Selfattention (Bahdanau et al., 2015; Vaswani et al., 2017) has emerged as a popular approach to do so, but the costly memory requirement of self-attention hinders its application to long sequences and multidimensional data such as images2. Linear attention mechanisms (Katha ro poul os et al., 2020; Cho roman ski et al., 2020) offer a scalable remedy for high memory usage but fail to model internal data structure, such as relative distances between pixels or edge relations between nodes in a graph.

建模数据中的长程依赖是机器学习的核心问题。自注意力机制 (Bahdanau et al., 2015; Vaswani et al., 2017) 已成为解决该问题的流行方法,但其高昂的内存需求阻碍了其在长序列和多维数据(如图像2)上的应用。线性注意力机制 (Katharopoulos et al., 2020; Choromanski et al., 2020) 为高内存消耗提供了可扩展的解决方案,但无法建模内部数据结构,例如像素间的相对距离或图中节点间的边关系。

This work addresses both issues. We propose lambda layers which model long-range interactions between a query and a structured set of context elements at a reduced memory cost. Lambda layers transform each available context into a linear function, termed a lambda, which is then directly applied to the corresponding query. Whereas self-attention defines a similarity kernel between the query and the context elements, a lambda layer instead summarizes contextual information into a fixed-size linear function (i.e. a matrix), thus bypassing the need for memory-intensive attention maps. This difference is illustrated in Figure 1.

本研究同时解决了这两个问题。我们提出了Lambda层(Lambda layers),该层能以较低内存成本建模查询(query)与结构化上下文元素集合之间的长程交互。Lambda层将每个可用上下文转换为线性函数(称为lambda),随后直接应用于对应查询。自注意力(self-attention)机制在查询与上下文元素间建立相似性核函数,而Lambda层则将上下文信息汇总为固定尺寸的线性函数(即矩阵),从而避免生成内存密集型的注意力图。图1展示了这一差异。

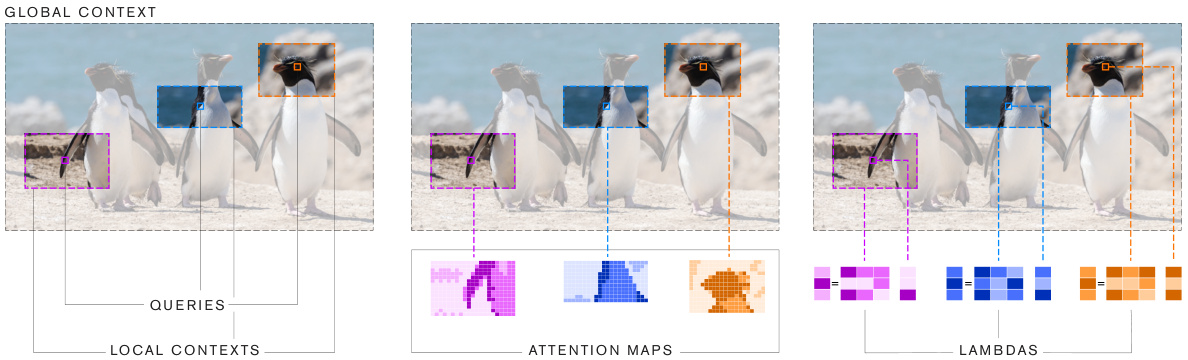

Figure 1: Comparison between self-attention and lambda layers. (Left) An example of 3 queries and their local contexts within a global context. (Middle) Self-attention associates each query with an attention distribution over its context. (Right) The lambda layer transforms each context into a linear function lambda that is applied to the corresponding query.

图 1: 自注意力机制与lambda层的对比。(左) 3个查询及其在全局上下文中的局部上下文示例。(中) 自注意力机制为每个查询生成一个基于其上下文的注意力分布。(右) lambda层将每个上下文转换为线性函数lambda并应用于对应查询。

Lambda layers are versatile and can be implemented to model both content-based and position-based interactions in global, local or masked contexts. The resulting neural networks, Lambda Networks, are computationally efficient, model long-range dependencies at a small memory cost and can therefore be applied to large structured inputs such as high resolution images.

Lambda层具有多功能性,可用于建模全局、局部或掩码上下文中的基于内容和基于位置的交互。由此产生的神经网络——Lambda网络,计算效率高,能以较小的内存成本建模长程依赖关系,因此可应用于高分辨率图像等大型结构化输入。

We evaluate Lambda Networks on computer vision tasks where works using self-attention are hindered by large memory costs (Wang et al., 2018; Bello et al., 2019), suffer impractical implementations (Rama chandra n et al., 2019), or require vast amounts of data (Do sov it ski y et al., 2020). In our experiments spanning ImageNet classification, COCO object detection and COCO instance segmentation, Lambda Networks significantly outperform their convolutional and attention al counterparts, while being more computationally efficient and faster than the latter. We summarize our contributions:

我们在计算机视觉任务上评估了Lambda Networks,这些任务中基于自注意力(self-attention)的工作常受限于高昂内存开销(Wang等人,2018;Bello等人,2019)、存在实现可行性问题(Ramachandran等人,2019)或需要海量数据(Dosovitskiy等人,2020)。在ImageNet分类、COCO目标检测和COCO实例分割的实验中,Lambda Networks在计算效率与速度均优于卷积和注意力机制方案的同时,取得了显著性能提升。我们的贡献总结如下:

| A content-based interaction considers the content of the context but ignores the relation between |

| the query position and the context (e.g. relative distance between two pixels). A position-based interaction considers the relationbetween the query position and the context position. |

基于内容的交互 (content-based interaction) 会考虑上下文内容但忽略查询位置与上下文的关系 (例如两个像素的相对距离) 。基于位置的交互 (position-based interaction) 会考虑查询位置与上下文位置的关系。

Table 1: Definition of content-based vs position-based interactions.

表 1: 基于内容与基于位置的交互定义。

simply replacing the 3x3 convolutions in the bottleneck blocks of the ResNet-50 architecture (He et al., 2016) with lambda layers yields a $+1.5%$ top-1 ImageNet accuracy improvement while reducing parameters by $40%$ .

只需将ResNet-50架构(He et al., 2016)瓶颈块中的3x3卷积替换为lambda层,就能在减少40%参数量的同时,将ImageNet top-1准确率提升1.5%。

2 MODELING LONG-RANGE INTERACTIONS

2 长程交互建模

In this section, we formally define queries, contexts and interactions. We motivate keys as a requirement for capturing interactions between queries and their contexts and show that lambda layers arise as an alternative to attention mechanisms for capturing long-range interactions.

在本节中,我们正式定义查询(query)、上下文(context)和交互(interaction)。我们将键(key)作为捕捉查询与其上下文之间交互的必要条件,并展示lambda层可作为注意力机制的替代方案来捕捉长程交互。

Notation. We denote scalars, vectors and tensors using lower-case, bold lower-case and bold upper-case letters, e.g., $n,x$ and $\boldsymbol{X}$ . We denote $|n|$ the cardinality of a set whose elements are indexed by $n$ . We denote ${\boldsymbol{x}}_ {n}$ the $n$ -th row of $\boldsymbol{X}$ . We denote $x_{i j}$ the $|i j|$ elements of $\boldsymbol{X}$ . When possible, we adopt the terminology of self-attention to ease readability and highlight differences.

符号表示。我们用小写字母、粗体小写字母和粗体大写字母分别表示标量、向量和张量,例如 $n,x$ 和 $\boldsymbol{X}$。用 $|n|$ 表示由 $n$ 索引的集合的基数。用 ${\boldsymbol{x}}_ {n}$ 表示 $\boldsymbol{X}$ 的第 $n$ 行。用 $x_{i j}$ 表示 $\boldsymbol{X}$ 的 $|i j|$ 元素。在可能的情况下,我们采用自注意力 (self-attention) 的术语以提高可读性并突出差异。

Defining queries and contexts. Let ${\mathcal{Q}}={(\pmb{q}_ {n},n)}$ and ${\mathcal{C}}={(c_{m},m)}$ denote structured collections of vectors, respectively referred to as the queries and the context. Each query $(\pmb q_{n},n)$ is characterized by its content $\pmb q_{n}\in\mathbb{R}^{|k|}$ and position $n$ . Similarly, each context element $(\pmb{c}_ {m},m)$ is characterized by its content $c_{m}$ and its position $m$ in the context. The $(n,m)$ pair may refer to any pairwise relation between structured elements, e.g. relative distances between pixels or edges between nodes in a graph.

定义查询与上下文。设 ${\mathcal{Q}}={(\pmb{q}_ {n},n)}$ 和 ${\mathcal{C}}={(c_{m},m)}$ 分别表示结构化向量集合,称为查询和上下文。每个查询 $(\pmb q_{n},n)$ 由其内容 $\pmb q_{n}\in\mathbb{R}^{|k|}$ 和位置 $n$ 表征。类似地,每个上下文元素 $(\pmb{c}_ {m},m)$ 由其内容 $c_{m}$ 及其在上下文中的位置 $m$ 表征。$(n,m)$ 对可指代结构化元素间的任意成对关系,例如像素间的相对距离或图中节点间的边。

Defining interactions. We consider the general problem of mapping a query $(\pmb q_{n},n)$ to an output vector $\pmb{y}_ {n}\in\mathbb{R}^{|v|}$ given the context $\mathcal{C}$ with a function $F:((\pmb{q}_ {n},n),\mathcal{C})\mapsto\pmb{y}_ {n}$ . Such a function may act as a layer in a neural network when processing structured inputs. We refer to $(\pmb{q}_ {n},\pmb{c}_ {m})$ interactions as content-based and $(\pmb q_{n},(n,m))$ interactions as position-based. We note that while absolute positional information is sometimes directly added to the query (or context element) content3, we consider this type of interaction to be content-based as it ignores the relation $(n,m)$ between the query and context element positions.

定义交互。我们考虑将查询 $( \pmb q_{n},n )$ 映射到输出向量 $\pmb{y}_ {n}\in\mathbb{R}^{|v|}$ 的通用问题,给定上下文 $\mathcal{C}$ 和函数 $F:((\pmb{q}_ {n},n),\mathcal{C})\mapsto\pmb{y}_ {n}$。在处理结构化输入时,此类函数可作为神经网络的层。我们将 $(\pmb{q}_ {n},\pmb{c}_ {m})$ 交互称为基于内容的交互,而 $(\pmb q_{n},(n,m))$ 交互称为基于位置的交互。需要注意的是,虽然绝对位置信息有时会直接添加到查询(或上下文元素)内容中 [3],但我们认为此类交互仍属于基于内容,因为它忽略了查询与上下文元素位置之间的关系 $(n,m)$。

Introducing keys to capture long-range interactions. In the context of deep learning, we prioritize fast batched linear operations and use dot-product operations as our interactions. This motivates introducing vectors that can interact with the queries via a dot-product operation and therefore have the same dimension as the queries. In particular, content-based interactions $\left({{q}_ {n}},{{c}_ {m}}\right)$ require a $|k|$ -dimensional vector that depends on $c_{m}$ , commonly referred to as the key $k_{m}$ . Conversely, position-based interactions $(\pmb q_{n},(n,m))$ require a relative position embedding $e_{n m}\in\mathbb{R}^{|k|}$ (Shaw et al., 2018). As the query/key depth $|k|$ and context spatial dimension $|m|$ are not in the output $\pmb{y}_{n}\in\mathbb{R}^{|v|}$ , these dimensions need to be contracted as part of the layer computations. Every layer capturing long-range interactions can therefore be characterized based on whether it contracts the query depth or the context positions first.

引入键(key)以捕捉长程交互。在深度学习背景下,我们优先考虑快速批处理线性运算,并采用点积运算作为交互方式。这促使我们引入能与查询(query)通过点积交互的向量,因此其维度需与查询相同。具体而言:

- 基于内容的交互 $\left({{q}_ {n}},{{c}_ {m}}\right)$ 需要一个依赖 $c_{m}$ 的 $|k|$ 维向量,通常称为键 $k_{m}$;

- 基于位置的交互 $(\pmb q_{n},(n,m))$ 则需要相对位置嵌入 $e_{n m}\in\mathbb{R}^{|k|}$ (Shaw et al., 2018)。

由于查询/键深度 $|k|$ 和上下文空间维度 $|m|$ 不会出现在输出 $\pmb{y}_{n}\in\mathbb{R}^{|v|}$ 中,这些维度需作为层计算的一部分进行收缩。因此,每个捕捉长程交互的层可根据其优先收缩查询深度还是上下文位置来表征。

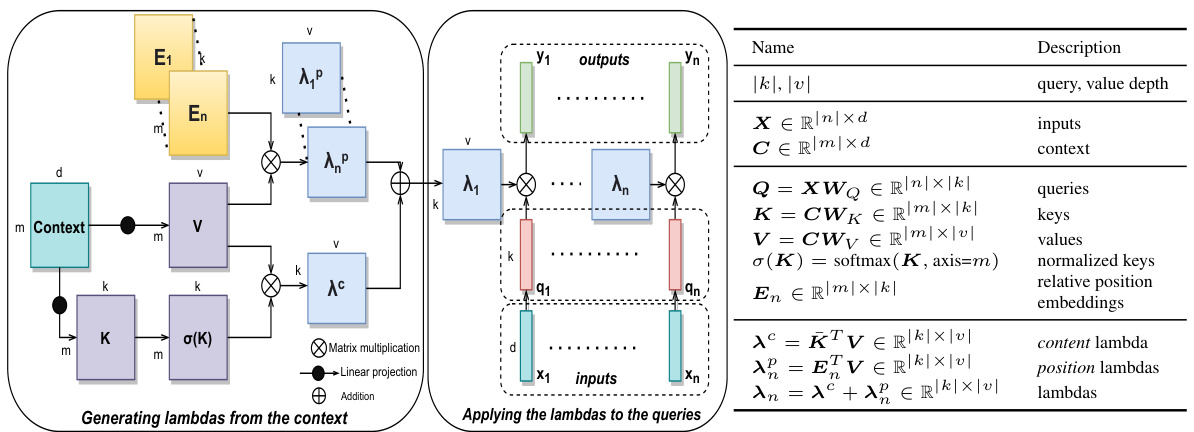

Figure 2: Computational graph of the lambda layer. Contextual information for query position $n$ is summarized into a lambda $\lambda_{n}\in\mathbb{R}^{|k|\times|v|}$ . Applying the lambda dynamically distributes contextual features to produce the output as $\pmb{y}_ {n}=\dot{\lambda}_ {n}^{T}\dot{\pmb{q}_{n}}$ . This process captures content-based and position-based interactions without producing attention maps.

图 2: Lambda层的计算图。查询位置$n$的上下文信息被汇总为一个lambda$\lambda_{n}\in\mathbb{R}^{|k|\times|v|}$。应用该lambda动态分配上下文特征以生成输出$\pmb{y}_ {n}=\dot{\lambda}_ {n}^{T}\dot{\pmb{q}_{n}}$。此过程无需生成注意力图即可捕获基于内容和基于位置的交互。

Attention al interactions. Contracting the query depth first creates a similarity kernel (the attention map) between the query and context elements and is known as the attention operation. As the number of context positions $|m|$ grows larger and the input and output dimensions $|k|$ and $|v|$ remain fixed, one may hypothesize that computing attention maps become wasteful, given that the layer output is a vector of comparatively small dimension $|v|\ll|m|$ .

注意力交互。首先收缩查询深度会在查询和上下文元素之间创建一个相似性核(即注意力图),这一过程被称为注意力操作。随着上下文位置数量 $|m|$ 增大,而输入输出维度 $|k|$ 和 $|v|$ 保持不变,考虑到该层输出是维度相对较小的向量 $|v|\ll|m|$,可以推测计算注意力图会变得低效。

Lambda interactions. Instead, it may be more efficient to simply map each query to its output as ${\pmb y}_ {n}=F(({\pmb q}_ {n},n),{\mathcal{C}})=\lambda({\mathcal{C}},n)({\pmb q}_ {n})$ for some linear function $\dot{\lambda(\mathcal{C},n)}:\mathbb{R}^{|k|}\stackrel{..}{\rightarrow}\mathbb{R}^{|v|}$ . In this scenario, the context is aggregated into a fixed-size linear function $\lambda_{n}=\lambda({\mathcal{C}},n)$ . Each $\lambda_{n}$ acts as a small linear function4 that exists independently of the context (once computed) and is discarded after being applied to its associated query $q_{n}$ .

Lambda交互。相反,更高效的做法可能是直接将每个查询映射到其输出,即 ${\pmb y}_ {n}=F(({\pmb q}_ {n},n),{\mathcal{C}})=\lambda({\mathcal{C}},n)({\pmb q}_ {n})$ ,其中 $\dot{\lambda(\mathcal{C},n)}:\mathbb{R}^{|k|}\stackrel{..}{\rightarrow}\mathbb{R}^{|v|}$ 为某个线性函数。在此场景中,上下文被聚合为一个固定大小的线性函数 $\lambda_{n}=\lambda({\mathcal{C}},n)$ 。每个 $\lambda_{n}$ 作为独立于上下文(一旦计算完成)存在的小型线性函数4,并在应用于其关联查询 $q_{n}$ 后被丢弃。

3 LAMBDA LAYERS

3 LAMBDA 层

3.1 LAMBDA LAYER: TRANSFORMING CONTEXTS INTO LINEAR FUNCTIONS.

3.1 LAMBDA 层:将上下文转换为线性函数

A lambda layer takes the inputs $X\in\mathbb{R}^{|n|\times d_{i n}}$ and the context $C\in\mathbb{R}^{|m|\times d_{c}}$ as input and generates linear function lambdas that are then applied to the queries, yielding outputs $\bar{Y}\in\mathbb{R}^{|n|\times d_{o u t}}$ . Without loss of generality, we assume $d_{i n}=d_{c}=d_{o u t}=d$ . As is the case with $s e l f$ -attention, we we may have $C=X$ . In the rest of this paper, we focus on a specific instance of a lambda layer and show that it captures long-range content and position-based interactions without materializing attention maps. Figure 2 presents the computational graph of the lambda layer.

Lambda层以输入 $X\in\mathbb{R}^{|n|\times d_{i n}}$ 和上下文 $C\in\mathbb{R}^{|m|\times d_{c}}$ 作为输入,生成线性函数lambda,随后将其应用于查询(query)以产生输出 $\bar{Y}\in\mathbb{R}^{|n|\times d_{o u t}}$ 。在不失一般性的前提下,我们假设 $d_{i n}=d_{c}=d_{o u t}=d$ 。与自注意力(self-attention)机制类似,可能存在 $C=X$ 的情况。本文后续将聚焦于lambda层的一个具体实例,展示其无需显式构建注意力图即可捕获长距离内容与基于位置的交互。图2展示了lambda层的计算图。

We first describe the lambda layer when applied to a single query $(\pmb q_{n},n)$ .

我们首先描述应用于单个查询 $(\pmb q_{n},n)$ 的 lambda 层。

Generating the contextual lambda function. We wish to generate a linear function $\mathbb{R}^{|k|}\rightarrow\mathbb{R}^{|v|}$ , i.e. a matrix $\lambda_{n}\in\mathbb{R}^{|k|\times|v|}$ . The lambda layer first computes keys $\kappa$ and values $V$ by linearly projecting the context, and keys are normalized across context positions via a softmax operation yielding normalized keys $\bar{\kappa}$ . The $\lambda_{n}$ matrix is obtained by using the normalized keys $\bar{\kappa}$ and position embeddings $E_{n}$ to aggregate the values $V$ as

生成上下文lambda函数。我们希望生成一个线性函数$\mathbb{R}^{|k|}\rightarrow\mathbb{R}^{|v|}$,即一个矩阵$\lambda_{n}\in\mathbb{R}^{|k|\times|v|}$。lambda层首先通过对上下文进行线性投影计算键$\kappa$和值$V$,并通过softmax操作对上下文位置上的键进行归一化,得到归一化键$\bar{\kappa}$。矩阵$\lambda_{n}$是通过使用归一化键$\bar{\kappa}$和位置嵌入$E_{n}$来聚合值$V$得到的。

$$

\lambda_{n}=\sum_{m}({\bar{k}}_ {m}+e_{n m}){\pmb v}_ {m}^{T}=\underbrace{{\bar{\bf K}}^{T}{\pmb V}}_ {\mathrm{contentlambda}}+\underbrace{\pmb{E}_ {n}^{T}{\pmb V}}_{\mathrm{positionlambda}}\in\mathbb{R}^{|k|\times|v|}

$$

$$

\lambda_{n}=\sum_{m}({\bar{k}}_ {m}+e_{n m}){\pmb v}_ {m}^{T}=\underbrace{{\bar{\bf K}}^{T}{\pmb V}}_ {\mathrm{contentlambda}}+\underbrace{\pmb{E}_ {n}^{T}{\pmb V}}_{\mathrm{positionlambda}}\in\mathbb{R}^{|k|\times|v|}

$$

where we also define the content lambda λc and position lambda λpn.

我们还定义了内容lambda λc和位置lambda λpn。

Applying lambda to its query. The query $q_{n}\in\mathbb{R}^{|k|}$ is obtained from the input ${\boldsymbol{x}}_{n}$ via a learned linear projection and the output of the lambda layer is obtained as

将lambda应用于其查询。查询$q_{n}\in\mathbb{R}^{|k|}$通过学习的线性投影从输入${\boldsymbol{x}}_{n}$获得,lambda层的输出计算如下

$$

\pmb{y}_ {n}=\pmb{\lambda}_ {n}^{T}\pmb{q}_ {n}=(\pmb{\lambda}^{c}+\pmb{\lambda}_ {n}^{p})^{T}\pmb{q}_{n}\in\mathbb{R}^{|v|}.

$$

$$

\pmb{y}_ {n}=\pmb{\lambda}_ {n}^{T}\pmb{q}_ {n}=(\pmb{\lambda}^{c}+\pmb{\lambda}_ {n}^{p})^{T}\pmb{q}_{n}\in\mathbb{R}^{|v|}.

$$

Interpretation of lambda layers. The columns of the $\lambda_{n}\in\mathbb{R}^{|k|\times|v|}$ matrix can be viewed as a fixed-size set of $|k|$ contextual features. These contextual features are aggregated based on the context’s content (content-based interactions) and structure (position-based interactions). Applying the lambda then dynamically distributes these contextual features based on the query to produce the output as $\begin{array}{r}{{\pmb y}_ {n}=\sum_{k}q_{n k}{\pmb\lambda}_{n k}}\end{array}$ . This process captures content and position-based interactions without producing atten tion maps.

Lambda层的解释。矩阵$\lambda_{n}\in\mathbb{R}^{|k|\times|v|}$的列可视为一组固定大小的$|k|$个上下文特征。这些上下文特征基于上下文内容(基于内容的交互)和结构(基于位置的交互)进行聚合。随后应用lambda运算,根据查询(query)动态分配这些上下文特征,生成输出$\begin{array}{r}{{\pmb y}_ {n}=\sum_{k}q_{n k}{\pmb\lambda}_{n k}}\end{array}$。该过程在不生成注意力图(attention maps)的情况下,捕获了基于内容和位置的交互。

Normalization. One may modify Equations 1 and 2 to include non-linear i ties or normalization operations. Our experiments indicate that applying batch normalization (Ioffe & Szegedy, 2015) after computing the queries and the values is helpful.

归一化。可以对公式1和公式2进行修改,加入非线性或归一化操作。实验表明,在计算查询(query)和值(value)后应用批量归一化(batch normalization) [20] 是有效的。

3.2 A MULTI-QUERY FORMULATION TO REDUCE COMPLEXITY.

3.2 降低复杂度的多查询 (Multi-Query) 方案

Complexity analysis. For a batch of $|b|$ examples, each containing $|n|$ inputs, the number of arithmetic operations and memory footprint required to apply our lambda layer are respectively $\Theta(b n m k v)$ and $\Theta(k n m+b n k v)$ . We still have a quadratic memory footprint with respect to the input length due to the $e_{n m}$ relative position embeddings. However this quadratic term does not scale with the batch size as is the case with the attention operation which produces per-example attention maps. In practice, the hyper parameter $|k|$ is set to a small value (such as $|k|{=}16)$ and we can process large batches of large inputs in cases where attention cannot (see Table 4). Additionally, position embeddings can be shared across lambda layers to keep their $\Theta(k n m)$ memory footprint constant - whereas the memory footprint of attention maps scales with the number of layers5.

复杂度分析。对于包含 $|b|$ 个样例的批次,每个样例有 $|n|$ 个输入,应用我们的 lambda 层所需的算术运算次数和内存占用分别为 $\Theta(b n m k v)$ 和 $\Theta(k n m+b n k v)$。由于 $e_{n m}$ 相对位置嵌入的存在,我们仍然面临与输入长度平方相关的内存占用。但这个平方项不会像注意力操作那样随批次大小而扩展,后者会为每个样例生成注意力图。实践中,超参数 $|k|$ 被设置为较小值(例如 $|k|{=}16)$,这使得我们能够处理注意力机制无法应对的大批量长输入情况(见表 4)。此外,位置嵌入可以在多个 lambda 层间共享,从而保持其 $\Theta(k n m)$ 的内存占用恒定——而注意力图的内存占用会随网络层数增加而增长[5]。

Multi-query lambda layers reduce time and space complexities. Recall that the lambda layer maps inputs $\pmb{x}_ {n}\in\mathbb{R}^{d}$ to outputs $\pmb{y}_ {n}\in\mathbb{R}^{d}$ . As presented in Equation 2, this implies that $|v|{=}d$ . Small values of $|v|$ may therefore act as a bottleneck on the feature vector ${\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_{{\mathbf{}}}$ but larger output dimensions $|v|$ can incur an excessively large computational cost given our $\Theta(b n m k v)$ and $\Theta(k n m+b n k v)$ time and space complexities.

多查询lambda层降低了时间和空间复杂度。回顾lambda层将输入$\pmb{x}_ {n}\in\mathbb{R}^{d}$映射到输出$\pmb{y}_ {n}\in\mathbb{R}^{d}$。如公式2所示,这意味着$|v|{=}d$。较小的$|v|$值可能成为特征向量${\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_{{\mathbf{}}}$的瓶颈,而较大的输出维度$|v|$在$\Theta(b n m k v)$和$\Theta(k n m+b n k v)$的时间与空间复杂度下会产生过高的计算成本。

We propose to decouple the time and space complexities of our lambda layer from the output dimension $d$ . Rather than imposing $|v|{=}\mathrm{d}$ , we create $|h|$ queries ${\pmb q_{n}^{h}}$ , apply the same lambda $\lambda_{n}$ to each query $\pmb q_{n}^{h}$ , and concatenate the outputs as ${\pmb y}_ {n}=\mathrm{concat}({\pmb\lambda}_ {n}{\pmb q}_ {n}^{1},\cdot\cdot\cdot,{\pmb\lambda}_ {n}{\pmb q}_ {n}^{|h|})$ . We now have $|v|{=}d/|h|$ , which reduces complexity by a factor of $|h|$ . The number of heads $|h|$ controls the size of the lambdas $\lambda_{n}\in\mathbb{R}^{|k|\times|d|/|h|}$ relative to the total size of the queries $\pmb q_{n}\in\mathbb{R}^{|h k|}$ .

我们提出将lambda层的时间和空间复杂度与输出维度$d$解耦。不再强制要求$|v|{=}\mathrm{d}$,而是创建$|h|$个查询${\pmb q_{n}^{h}}$,对每个查询$\pmb q_{n}^{h}$应用相同的lambda$\lambda_{n}$,并将输出拼接为${\pmb y}_ {n}=\mathrm{concat}({\pmb\lambda}_ {n}{\pmb q}_ {n}^{1},\cdot\cdot\cdot,{\pmb\lambda}_ {n}{\pmb q}_ {n}^{|h|})$。此时$|v|{=}d/|h|$,复杂度降低了$|h|$倍。头数$|h|$控制lambda$\lambda_{n}\in\mathbb{R}^{|k|\times|d|/|h|}$相对于查询$\pmb q_{n}\in\mathbb{R}^{|h k|}$总大小的比例。

| def lambda_layer(queries,1 """Multi-query #b:batch,n: #k: query/key # h: number of content_lambda = einsum(softmax(keys),values,'bmk,bmv->bkv') |

def lambda_layer(queries,1 """Multi-query #b:batch,n: #k: query/key # h: number of content_lambda = einsum(softmax(keys),values,'bmk,bmv->bkv')

We refer to this operation as a multi-query lambda layer and present an implementation using einsum6 in Figure 3. The lambda layer is robust to $|k|$ and $|h|$ hyper parameter choices (see Appendix D.1), which enables flexibility in controlling its complexity. We use $\vert h\vert{=}4$ in most experiments.

我们将这一操作称为多查询lambda层,并在图3中展示了使用einsum6的实现方式。该lambda层对超参数$|k|$和$|h|$的选择具有鲁棒性(见附录D.1),从而能够灵活控制其复杂度。在大多数实验中,我们使用$\vert h\vert{=}4$。

We note that while this resembles the multi-head or multi-query (Shazeer, 2019)7 attention formulation, the motivation is different. Using multiple queries in the attention operation increases represent at ional power and complexity. In contrast, using multiple queries in the lambda layer decreases complexity and representational power (ignoring the additional queries).

我们注意到,虽然这与多头或多查询 (Shazeer, 2019) [7] 注意力公式相似,但动机不同。在注意力操作中使用多个查询会增加表示能力和复杂性。相反,在 lambda 层中使用多个查询会降低复杂性和表示能力 (忽略额外的查询)。

Extending the multi-query formulation to linear attention. Finally, we point that our analysis extends to linear attention which can be viewed as a content-only lambda layer (see Appendix C.3 for a detailed discussion). We anticipate that the multi-query formulation can also bring computational benefits to linear attention mechanisms.

将多查询(multi-query)公式扩展到线性注意力机制。最后需要指出,我们的分析同样适用于线性注意力机制——这种机制可被视为仅含内容的lambda层(详见附录C.3)。我们预计多查询公式也能为线性注意力机制带来计算优势。

3.3 MAKING LAMBDA LAYERS TRANSLATION E QUI VARIANT.

3.3 实现Lambda层翻译等效变体

Using relative position embeddings $e_{n m}$ enables making explicit assumptions about the structure of the context. In particular, translation e qui variance (i.e. the property that shifting the inputs results in an equivalent shift of the outputs) is a strong inductive bias in many learning scenarios. We obtain translation e qui variance in position interactions by ensuring that the position embeddings satisfy $e_{n m}=e_{t(n)t(m)}$ for any translation $t$ . In practice, we define a tensor of relative position embeddings $\pmb{R}\in\mathbb{R}^{|r|\times|k|}$ , where $r$ indexes the possible relative positions for all $(n,m)$ pairs, and reindex8 it into $\pmb{{\cal E}}\in\mathbb{R}^{|n|\times|m|\times|k|}$ such that $e_{n m}=r_{r(n,m)}$ .

使用相对位置嵌入 $e_{n m}$ 能够显式建模上下文的结构假设。其中,平移等变性 (即输入偏移导致输出等效偏移的特性) 是许多学习场景中的强归纳偏置。我们通过确保位置嵌入满足 $e_{n m}=e_{t(n)t(m)}$(对于任意平移 $t$)来实现位置交互的平移等变性。实践中,我们定义相对位置嵌入张量 $\pmb{R}\in\mathbb{R}^{|r|\times|k|}$(其中 $r$ 索引所有 $(n,m)$ 对的可能相对位置),并将其重新索引为 $\pmb{{\cal E}}\in\mathbb{R}^{|n|\times|m|\times|k|}$ 使得 $e_{n m}=r_{r(n,m)}$。

3.4 LAMBDA CONVOLUTION: MODELING LONGER RANGE INTERACTIONS IN LOCAL CONTEXTS.

3.4 LAMBDA卷积:在局部上下文中建模长程交互

Despite the benefits of long-range interactions, locality remains a strong inductive bias in many tasks. Using global contexts may prove noisy or computationally excessive. It may therefore be useful to restrict the scope of position interactions to a local neighborhood around the query position $n$ as is the case for local self-attention and convolutions. This can be done by zeroing out the relative embeddings for context positions $m$ outside of the desired scope. However, this strategy remains costly for large values of $|m|$ since the computations still occur - they are only being zeroed out.

尽管长程交互具有优势,但在许多任务中局部性仍是强归纳偏置。使用全局上下文可能引入噪声或导致计算冗余。因此,将位置交互范围限制在查询位置 $n$ 的局部邻域内可能更为有效,如局部自注意力 (local self-attention) 和卷积操作所示。这可通过将超出目标范围的上下文位置 $m$ 的相对嵌入置零来实现。然而,当 $|m|$ 值较大时,该策略仍存在较高计算成本——因为运算依然执行,只是结果被置零。

Lambda convolution In the case where the context is arranged in a multidimensional grid, we can equivalently compute positional lambdas from local contexts by using a regular convolution.

Lambda卷积

当上下文排列为多维网格时,我们可以通过常规卷积从局部上下文中等价地计算位置lambda。

Table 2: Alternatives for capturing long-range interactions. The lambda layer captures content and position-based interactions at a reduced memory cost compared to relative attention (Shaw et al., 2018; Bello et al., 2019). Using a multi-query lambda layer reduces complexities by a factor of $|h|$ . Additionally, position-based interactions can be restricted to a local scope by using the lambda convolution which has linear complexity. $b$ : batch size, $h$ : number of heads/queries, $n$ : input length, $m$ : context length, $r$ : local scope size, $k$ : query/key depth, $d$ : dimension output.

| Operation | Head configuration | Interactions | Time complexity | Space complexity |

| Attention Relativeattention | multi-head multi-head | content-only content & position | (bnm(hk + d)) (bnm(hk + d)) | O(bhnm bhnm |

| Lambdalayer Lambdaconvolution | multi-head multi-query multi-query | content-only content&position content & position | O(bnkd) (bnmkd/h) (bnrkd/h) | O(bkd O(knm+bnkd/h) (kr +bnkd/h) |

表 2: 捕捉长距离交互的替代方案。与相对注意力 (Shaw et al., 2018; Bello et al., 2019) 相比,lambda层以更低的内存成本捕捉基于内容和位置的交互。使用多查询lambda层可将复杂度降低 $|h|$ 倍。此外,通过使用具有线性复杂度的lambda卷积,可以将基于位置的交互限制在局部范围内。$b$: 批大小, $h$: 头数/查询数, $n$: 输入长度, $m$: 上下文长度, $r$: 局部范围大小, $k$: 查询/键深度, $d$: 输出维度。

| 操作 | 头配置 | 交互 | 时间复杂度 | 空间复杂度 |

|---|---|---|---|---|

| 注意力 相对注意力 | 多头 多头 | 仅内容 内容 & 位置 | (bnm(hk + d)) (bnm(hk + d)) | O(bhnm bhnm) |

| Lambda层 Lambda卷积 | 多头 多查询 多查询 | 仅内容 内容&位置 内容 & 位置 | O(bnkd) (bnmkd/h) (bnrkd/h) | O(bkd) O(knm+bnkd/h) (kr +bnkd/h) |

We term this operation the lambda convolution. A n-dimensional lambda convolution can be implemented using an n-d depthwise convolution with channel multiplier or (n+1)-d convolution that treats the $v$ dimension in $V$ as an extra spatial dimension. We present both implementations in Appendix B.1.

我们将这一操作称为lambda卷积。n维lambda卷积可以通过使用带有通道乘数的n维深度卷积或将$V$中的$v$维度视为额外空间维度的(n+1)维卷积来实现。我们在附录B.1中提供了这两种实现方案。

As the computations are now restricted to a local scope, the lambda convolution obtains linear time and memory complexities with respect to the input length9. The lambda convolution is readily usable with additional functionalities such as dilation and striding and enjoys optimized implementations on specialized hardware accelerators (Nickolls & Dally, 2010; Jouppi et al., 2017). This is in stark contrast to implementations of local self-attention that require materializing feature patches of overlapping query and context blocks (Parmar et al., 2018; Rama chandra n et al., 2019), increasing memory consumption and latency (see Table 4).

由于计算现在被限制在局部范围内,Lambda卷积在输入长度上实现了线性的时间和内存复杂度[9]。Lambda卷积可直接配合膨胀(dilation)和步长(striding)等附加功能使用,并能在专用硬件加速器上获得优化实现 (Nickolls & Dally, 2010; Jouppi et al., 2017)。这与局部自注意力(local self-attention)的实现形成鲜明对比——后者需要实例化重叠查询块和上下文块的特征补丁 (Parmar et al., 2018; Ramachandran et al., 2019),从而增加内存消耗和延迟(见表4)。

4 RELATED WORK

4 相关工作

Table 2 reviews alternatives for capturing long-range interactions and contrasts them with the proposed multi-query lambda layer. We discuss related works in details in the Appendix C.

表 2: 回顾了捕捉长程交互的替代方案,并与提出的多查询 lambda 层进行对比。附录 C 详细讨论了相关工作。

Channel and linear attention The lambda abstraction, i.e. transforming available contexts into linear functions that are applied to queries, is quite general and therefore encompasses many previous works. Closest to our work are channel and linear attention mechanisms (Hu et al., 2018c; Katha ro poul os et al., 2020; Cho roman ski et al., 2020). Such mechanisms also capture long-range interactions without materializing attention maps and can be viewed as specific instances of a contentonly lambda layer. Lambda layers formalize and extend such approaches to consider both contentbased and position-based interactions, enabling their use as a stand-alone layer on highly structured data such as images. Rather than attempting to closely approximate an attention kernel as is the case with linear attention, we focus on the efficient design of contextual lambda functions and repurpose a multi-query formulation (Shazeer, 2019) to further reduce computational costs.

通道与线性注意力

Lambda抽象(即将可用上下文转化为应用于查询的线性函数)具有高度通用性,因此涵盖了许多先前工作。与我们研究最接近的是通道注意力和线性注意力机制(Hu et al., 2018c; Katharopoulos et al., 2020; Choromanski et al., 2020)。这些机制同样无需显式构建注意力图就能捕获长程交互,可视为纯内容型lambda层的特定实例。Lambda层通过形式化扩展这些方法,同时考虑基于内容和基于位置的交互,使其能作为独立层处理高度结构化数据(如图像)。不同于线性注意力试图紧密逼近注意力核的做法,我们专注于上下文lambda函数的高效设计,并复用多查询框架(Shazeer, 2019)以进一步降低计算成本。

Self-attention in the visual domain In contrast to natural language processing tasks where it is now the de-facto standard, self-attention has enjoyed steady but slower adoption in the visual domain (Wang et al., 2018; Bello et al., 2019; Rama chandra n et al., 2019; Carion et al., 2020). Concurrently to this work, Do sov it ski y et al. (2020) achieve a strong $88.6%$ accuracy on ImageNet by pre-training a Transformer on sequences of image patches on a large-scale dataset of 300M images.

视觉领域的自注意力机制

与自然语言处理任务中自注意力已成为事实标准不同,视觉领域对自注意力机制的采用虽稳步推进但相对缓慢 (Wang et al., 2018; Bello et al., 2019; Ramachandran et al., 2019; Carion et al., 2020)。在本研究同期,Dosovitskiy等人 (2020) 通过在3亿张图像的大规模数据集上对图像块序列预训练Transformer,在ImageNet上实现了88.6%的高准确率。

5 EXPERIMENTS

5 实验

In subsequent experiments, we evaluate lambda layers on standard computer vision benchmarks: ImageNet classification (Deng et al., 2009), COCO object detection and instance segmentation (Lin et al., 2014). The visual domain is well-suited to showcase the flexibility of lambda layers since (1) the memory footprint of self-attention becomes problematic for high-resolution imagery and (2) images are highly structured, making position-based interactions crucial.

在后续实验中,我们在标准计算机视觉基准上评估lambda层:ImageNet分类 (Deng et al., 2009)、COCO目标检测与实例分割 (Lin et al., 2014)。视觉领域非常适合展示lambda层的灵活性,因为:(1) 自注意力(self-attention)的内存占用对高分辨率图像会形成瓶颈;(2) 图像具有高度结构性,使得基于位置的交互至关重要。

Lambda Res Nets We construct Lambda Res Nets by replacing the 3x3 convolutions in the bottleneck blocks of the ResNet architecture (He et al., 2016). When replacing all such convolutions, we simply denote the name of the layer being tested (e.g. conv $^+$ channel attention or lambda layer). We denote Lambda Res Nets the family of hybrid architectures described in Table 18 (Appendix E.1). Unless specified otherwise, all lambda layers use $|k|{=}16$ , $|h|{=}4$ with a scope size of $|m|{=}23\mathrm{x}23$ and are implemented as in Figure 3. Additional experiments and details can be found in the Appendix.

Lambda Res Nets

我们通过替换ResNet架构 (He et al., 2016) 瓶颈块中的3x3卷积来构建Lambda Res Nets。当替换所有此类卷积时,我们仅标注被测试层的名称(例如conv $^+$ 通道注意力或lambda层)。Lambda Res Nets指代表18(附录E.1)中描述的混合架构家族。除非另有说明,所有lambda层均使用 $|k|{=}16$ 、 $|h|{=}4$ ,作用域大小为 $|m|{=}23\mathrm{x}23$ ,其实现如图3所示。更多实验细节详见附录。

5.1 LAMBDA LAYERS OUTPERFORM CONVOLUTIONS AND ATTENTION LAYERS.

5.1 LAMBDA层性能优于卷积层和注意力层

We first consider the standard ResNet-50 architecture with input image size $224\mathrm{x}224$ . In Table 3, we compare the lambda layer against (a) the standard convolution (i.e. the baseline ResNet-50) (b) channel attention (squeeze-and-excitation) and (c) multiple self-attention variants. The lambda layer strongly outperforms all baselines at a fraction of the parameter cost and notably obtains a $+0.8%$ improvement over channel attention.

我们首先考虑输入图像尺寸为 $224\mathrm{x}224$ 的标准 ResNet-50 架构。在表 3 中,我们将 lambda 层与以下方法进行对比:(a) 标准卷积(即基线 ResNet-50)、(b) 通道注意力(squeeze-and-excitation)以及(c) 多种自注意力变体。lambda 层以极少的参数量显著优于所有基线方法,特别是相比通道注意力获得了 $+0.8%$ 的提升。

Table 3: Comparison of the lambda layer and attention mechanisms on ImageNet classification with a ResNet50 architecture. The lambda layer strongly outperforms attention alternatives at a fraction of the parameter cost. All models are trained in mostly similar setups (see Appendix E.2) and we include the reported improvements compared to the convolution baseline in parentheses. See Appendix B.4 for a description of the $|u|$ hyper parameter. † Our implementation.

| Layer | Params (M) | top-1 |

| Conv (He et al.,2016)t | 25.6 | 76.9 |

| Conv + channel attention (Hu et al.,2018c) | 28.1 | 77.6 (+0.7) |

| Conv+linear attention (Chenet al.,2018) | 33.0 | 77.0 |

| Conv + linear attention (Shen et al., 2018) | 77.3 (+1.2) | |

| Conv +relative self-attention(Bello et al.,2019) | 25.8 | 77.7 (+1.3) |

| Local relative self-attention (Ramachandran et al.,2019) | 18.0 | 77.4 (+0.5) |

| Localrelativeself-attention(Huetal.,2019) | 23.3 | 77.3 (+1.0) |

| Local relative self-attention (Zhao et al.,2020) | 20.5 | 78.2 (+1.3) |

| Lambda layer | 15.0 | 78.4 (+1.5) |

| Lambda layer (|u|=4) | 16.0 | 78.9 (+2.0) |

表 3: 在ResNet50架构的ImageNet分类任务上,lambda层与注意力机制的对比。lambda层以极少的参数量显著优于各类注意力变体。所有模型均在基本相同的设置下训练(详见附录E.2),括号内为相对卷积基线的改进值。超参数$|u|$的说明见附录B.4。†表示我们的实现。

| Layer | Params (M) | top-1 |

|---|---|---|

| Conv (He et al.,2016)† | 25.6 | 76.9 |

| Conv + channel attention (Hu et al.,2018c) | 28.1 | 77.6 (+0.7) |

| Conv+linear attention (Chen et al.,2018) | 33.0 | 77.0 |

| Conv + linear attention (Shen et al., 2018) | - | 77.3 (+1.2) |

| Conv +relative self-attention(Bello et al.,2019) | 25.8 | 77.7 (+1.3) |

| Local relative self-attention (Ramachandran et al.,2019) | 18.0 | 77.4 (+0.5) |

| Localrelativeself-attention(Hu et al.,2019) | 23.3 | 77.3 (+1.0) |

| Local relative self-attention (Zhao et al.,2020) | 20.5 | 78.2 (+1.3) |

| Lambda layer | 15.0 | 78.4 (+1.5) |

| Lambda layer ( | u | =4) |

.2 COMPUTATIONAL BENEFITS OF LAMBDA LAYERS OVER SELF-ATTENTIO

2.2 Lambda层相较于自注意力机制的计算优势

In Table 4, we compare lambda layers against self-attention and present through puts, memory complexities and ImageNet accuracies. Our results highlight the weaknesses of self-attention: selfattention cannot model global interactions due to large memory costs, axial self-attention is still memory expensive and local self-attention is prohibitively slow. In contrast, the lambda layer can capture global interactions on high-resolution images and obtains a $+1.0%$ improvement over local self-attention while being almost $3\mathbf{x}$ faster10. Additionally, positional embeddings can be shared across lambda layers to further reduce memory requirements, at a minimal degradation cost. Finally, the lambda convolution has linear memory complexity, which becomes practical for very large images as seen in detection or segmentation. We also find that the lambda layer outperforms local self-attention when controlling for the scope size11 ( $78.1%$ vs $77.4%$ for $|m|{=}7\mathbf{x}7,$ , suggesting that the benefits of the lambda layer go beyond improved speed and s cal ability.

在表4中,我们将lambda层与自注意力机制(self-attention)进行对比,展示了吞吐量、内存复杂度及ImageNet准确率。结果揭示了自注意力的三大缺陷:全局交互建模因内存开销过大而受限,轴向自注意力仍存在高内存消耗,局部自注意力则存在严重速度瓶颈。相比之下,lambda层能在高分辨率图像上捕捉全局交互,较局部自注意力实现$+1.0%$精度提升的同时提速近$3\mathbf{x}$。通过共享位置嵌入,lambda层可进一步降低内存需求且仅带来微小性能损失。其线性内存复杂度特性使其在检测/分割等超大图像任务中极具实用性。控制感受野范围时,lambda层仍优于局部自注意力( $78.1%$ vs $77.4%$ @ $|m|{=}7\mathbf{x}7$ ),这表明其优势不仅体现在速度与可扩展性层面。

| Layer | SpaceComplexity | Memory (GB) | Throughput | top-1 |

| Globalself-attention | O(blhn | 120 | OOM | OOM |

| Axialself-attention | O(blhn√n) | 4.8 | 960ex/s | 77.5 |

| Local self-attention (7x7) | O(blhnm | 440ex/s | 77.4 | |

| Lambdalayer | (lkn²) | 1.9 | 1160ex/s | 78.4 |

| Lambda layer (|k|=8) | O(lkn² | 0.95 | 1640ex/s | 77.9 |

| Lambda layer (shared embeddings) | O(kn 2 | 0.63 | 1210ex/s | 78.0 |

| Lambda convolution (7x7) | O(lknm) | 1100ex/s | 78.1 |

| 层 | 空间复杂度 | 内存 (GB) | 吞吐量 | top-1 |

|---|---|---|---|---|

| 全局自注意力 (Global self-attention) | O(blhn | 120 | OOM | OOM |

| 轴向自注意力 (Axial self-attention) | O(blhn√n) | 4.8 | 960ex/s | 77.5 |

| 局部自注意力 (7x7) | O(blhnm | - | 440ex/s | 77.4 |

| Lambda层 | O(lkn²) | 1.9 | 1160ex/s | 78.4 |

| Lambda层 ( | k | =8) | O(lkn² | 0.95 |

| Lambda层 (共享嵌入) | O(kn² | 0.63 | 1210ex/s | 78.0 |

| Lambda卷积 (7x7) | O(lknm) | - | 1100ex/s | 78.1 |

Table 4: The lambda layer reaches higher ImageNet accuracies while being faster and more memory-efficient than self-attention alternatives. Memory is reported assuming full precision for a batch of 128 inputs using default hyper parameters. The memory cost for storing the lambdas matches the memory cost of activation s in the rest of the network and is therefore ignored. b: batch size, $h$ : number of heads/queries, $n$ : input length, $m$ : context length, $k$ : query/key depth, l: number of layers.

表 4: Lambda层在达到更高ImageNet准确率的同时,比自注意力替代方案速度更快且内存效率更高。内存数据基于使用默认超参数、128输入批次的全精度计算得出。存储lambda的内存成本与网络中其余部分的激活内存成本相当,因此忽略不计。b: 批次大小, $h$: 头数/查询数, $n$: 输入长度, $m$: 上下文长度, $k$: 查询/键深度, l: 层数。

5.3 HYBRIDS IMPROVE THE SPEED-ACCURACY TRADEOFF OF IMAGE CLASSIFICATION.

5.3 混合模型提升图像分类的速度-准确率权衡

Studying hybrid architectures. In spite of the memory savings compared to self-attention, capturing global contexts with the lambda layer still incurs a quadratic time complexity (Table 2), which remains costly at high resolution. Additionally, one may hypothesize that global contexts are most beneficial once features contain semantic information, i.e. after having been processed by a few operations, in which case using global contexts in the early layers would be wasteful. In the Appendix 5.3, we study hybrid designs that use standard convolutions to capture local contexts and lambda layers to capture global contexts. We find that such convolution-lambda hybrids have increased representational power at a negligible decrease in throughput compared to their purely convolutional counterparts.

研究混合架构。尽管与自注意力(self-attention)相比节省了内存,但使用lambda层捕获全局上下文仍会产生二次时间复杂度(表2),在高分辨率下成本依然高昂。此外,可以假设全局上下文最有利于包含语义信息的特征,即经过若干操作处理后,在这种情况下早期层使用全局上下文将是浪费的。在附录5.3中,我们研究了使用标准卷积捕获局部上下文和lambda层捕获全局上下文的混合设计。研究发现,与纯卷积架构相比,这种卷积-lambda混合架构在吞吐量几乎不降低的情况下提高了表征能力。

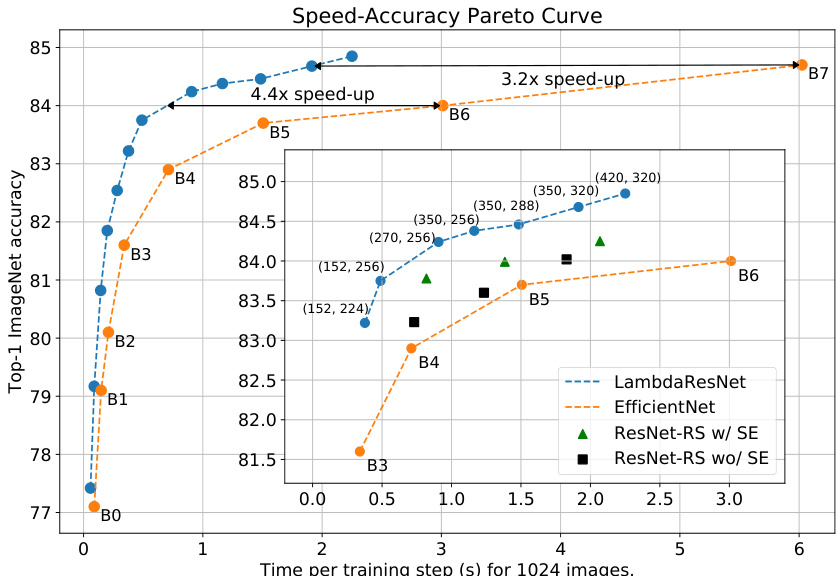

Lambda Res Nets significantly improve the speed-accuracy tradeoff of ImageNet classification. We design a family of hybrid Lambda Res Nets across scales based on our study of hybrid architectures and the scaling/training strategies from Bello et al. (2021) (see Section E.1). Figure 4 presents the speed-accuracy Pareto curve of Lambda Res Nets compared to Efficient Nets (Tan & Le, 2019) on TPUv3 hardware. In order to isolate the benefits of lambda layers, we additionally compare against the same architectures when replacing lambda layers by (1) standard 3x3 convolutions (denoted ResNet-RS wo/ SE) and (2) 3x3 convolutions with squeeze-and-excitation (denoted ResNet-RS w/ SE). All architectures are trained for 350 epochs using the same regular iz ation methods and evaluated at the same resolution they are trained at.

Lambda Res Nets显著提升了ImageNet分类任务中速度与精度的权衡关系。基于对混合架构的研究以及Bello等人(2021)提出的扩展/训练策略(参见E.1节),我们设计了一系列跨尺度的混合Lambda Res Nets变体。图4展示了在TPUv3硬件上,Lambda Res Nets与Efficient Nets(Tan & Le, 2019)的速度-精度帕累托曲线对比。为了单独评估lambda层的优势,我们还对比了两种变体:(1) 用标准3x3卷积(标记为ResNet-RS wo/SE)替换lambda层,(2) 使用带压缩激励模块的3x3卷积(标记为ResNet-RS w/SE)。所有架构均采用相同的正则化方法训练350个epoch,并在训练分辨率下进行评估。

Lambda Res Nets outperform the baselines across all scales on the speed-accuracy trade-off. LambdaResNets are $3.2\cdot4.4\mathrm{x}$ faster than Efficient Nets and $1.6\cdot2.3\mathrm{x}$ faster than ResNet-RS when controlling for accuracy, thus significantly improving the speed-accuracy Pareto curve of image classification12. Our largest model, Lambda Res Net-420 trained at image size 320, achieves a strong $84.9%$ top-1 ImageNet accuracy, $0.9%$ over the corresponding architecture with standard $3\mathrm{x}3$ convolutions and $0.65%$ over the corresponding architecture with squeeze-and-excitation.

Lambda Res Nets 在速度-准确率权衡方面全面超越基线模型。在控制准确率的情况下,LambdaResNets 比 Efficient Nets 快 $3.2\cdot4.4\mathrm{x}$,比 ResNet-RS 快 $1.6\cdot2.3\mathrm{x}$,显著提升了图像分类任务的速度-准确率帕累托曲线[12]。我们最大的模型 Lambda Res Net-420 在 320 像素图像尺寸下训练,取得了 84.9% 的 ImageNet 最高准确率,比使用标准 $3\mathrm{x}3$ 卷积的对应架构高出 0.9%,比使用压缩激励(squeeze-and-excitation)的对应架构高出 0.65%。

Scaling to larger datasets with pseudo-labels We train Lambda Res Nets in a semi-supervised learning setting using 130M pseudo-labeled images from the JFT dataset, as done for training the Efficient Net-Noisy Student checkpoints (Xie et al., 2020). Table 5 compares the through puts and ImageNet accuracies of a representative set of models with similar accuracies when trained using the JFT dataset. Lambda Res Net-152, trained and evaluated at image size 288, achieves a strong $86.7%$ top-1 ImageNet accuracy while being more parameter-efficient and 9.5x faster than the Efficient NetNoisy Student checkpoint with the same accuracy.

使用伪标签扩展更大数据集

我们采用半监督学习方式训练Lambda Res Nets,使用来自JFT数据集的1.3亿张伪标签图像,该方法与训练Efficient Net-Noisy Student检查点时采用的方式相同(Xie et al., 2020)。表5比较了使用JFT数据集训练时,一组具有相似准确率的代表性模型的吞吐量和ImageNet准确率。在288像素图像尺寸下训练和评估的Lambda Res Net-152,实现了86.7%的ImageNet top-1准确率,同时比具有相同准确率的Efficient Net-Noisy Student检查点参数效率更高、速度快9.5倍。

Figure 4: Speed-accuracy comparison between Lambda Res Nets and Efficient Nets. When matching the training and regular iz ation setup of Efficient Nets, Lambda Res Nets are $3.2\textrm{-}4.4\mathrm{X}$ faster than Efficient Nets and $1.6-2.3\mathrm{x}$ faster than ResNet-RS with squeeze-and-excitation. LambdaResNets are annotated with (depth, image size). Our largest Lambda Res Net, Lambda Res Net-420 trained at image size 320, reaches a strong $84.9%$ top-1 accuracy.

图 4: Lambda Res Nets与Efficient Nets的速度-精度对比。当采用与Efficient Nets相同的训练和正则化设置时,Lambda Res Nets比Efficient Nets快$3.2\textrm{-}4.4\mathrm{X}$,比带有squeeze-and-excitation的ResNet-RS快$1.6-2.3\mathrm{x}$。LambdaResNets标注了(深度, 图像尺寸)。我们最大的Lambda Res Net——图像尺寸为320的Lambda Res Net-420,达到了强劲的$84.9%$ top-1准确率。

Table 5: Comparison of models trained on extra data. ViT-L/16 is pre-trained on JFT and finetuned on ImageNet at resolution $384\mathrm{x}384$ , while Efficient Net and Lambda Res Net are co-trained on ImageNet and JFT pseudo-labels. Training and inference throughput is shown for 8 TPUv3 cores.

| Architecture | Params s (M) | Train (ex/s) | Infer (ex/s) | ImageNet top-1 |

| LambdaResNet-152 | 51 | 1620 | 6100 | 86.7 |

| EfficientNet-B7 | 66 | 170 (9.5x) | 980 (6.2x) | 86.7 |

| ViT-L/16 | 307 | 180 (9.0x) | 640 (9.5x) | 87.1 |

表 5: 基于额外数据训练的模型对比。ViT-L/16 在 JFT 上预训练并在 $384\mathrm{x}384$ 分辨率下对 ImageNet 微调,而 Efficient Net 和 Lambda Res Net 则在 ImageNet 和 JFT 伪标签上联合训练。训练和推理吞吐量基于 8 个 TPUv3 核心展示。

| Architecture | Params (M) | Train (ex/s) | Infer (ex/s) | ImageNet top-1 |

|---|---|---|---|---|

| LambdaResNet-152 | 51 | 1620 | 6100 | 86.7 |

| EfficientNet-B7 | 66 | 170 (9.5x) | 980 (6.2x) | 86.7 |

| ViT-L/16 | 307 | 180 (9.0x) | 640 (9.5x) | 87.1 |

5.4 OBJECT DETECTION AND INSTANCE SEGMENTATION RESULTS

5.4 目标检测与实例分割结果

In Table 6, we evaluate Lambda Res Nets as a backbone in Mask-RCNN (He et al., 2017) on the COCO object detection and instance segmentation tasks. Using lambda layers yields consistent gains across all object sizes, especially the small objects which are the hardest to locate. This indicates that lambda layers are also competitive for more complex visual tasks that require localization information.

在表6中,我们评估了将Lambda Res Nets作为Mask-RCNN (He et al., 2017) 主干网络在COCO目标检测和实例分割任务中的表现。使用lambda层在所有物体尺寸上都带来了稳定的性能提升,尤其是最难定位的小物体。这表明lambda层对于需要定位信息的更复杂视觉任务同样具有竞争力。

Table 6: COCO object detection and instance segmentation with Mask-RCNN architecture on $\mathbf{1024x1024}$ inputs. Mean Average Precision (AP) for small, medium, large objects $(\mathrm{s}/\mathrm{m}/1)$ . Using lambda layers yields consistent gains across all object sizes, especially small objects.

| Backbone | APbb COCO | APbb s/m/l | APmask coco | APmask s/m/l |

| ResNet-101 | 48.2 | 29.9/50.9/64.9 | 42.6 | 24.2/45.6/60.0 |

| ResNet-101+SE | 48.5 | 29.9/51.5/65.3 | 42.8 | 24.0/46.0/60.2 |

| LambdaResNet-101 | 49.4 | 31.7/52.2/65.6 | 43.5 | 25.9 / 46.5 / 60.8 |

| ResNet-152 | 48.9 | 29.9/51.8/ 66.0 | 43.2 | 24.2/46.1/61.2 |

| ResNet-152+SE | 49.4 | 30.0/52.3/66.7 | 43.5 | 24.6/46.8/61.8 |

| LambdaResNet-152 | 50.0 | 31.8/53.4/67.0 | 43.9 | 25.5/47.3/62.0 |

表 6: 使用Mask-RCNN架构在$\mathbf{1024x1024}$输入尺寸下的COCO目标检测与实例分割结果。小/中/大物体的平均精度均值(AP) $(\mathrm{s}/\mathrm{m}/1)$。采用lambda层在所有物体尺寸上均获得稳定提升,尤其对小物体效果显著。

| Backbone | APbb COCO | APbb s/m/l | APmask coco | APmask s/m/l |

|---|---|---|---|---|

| ResNet-101 | 48.2 | 29.9/50.9/64.9 | 42.6 | 24.2/45.6/60.0 |

| ResNet-101+SE | 48.5 | 29.9/51.5/65.3 | 42.8 | 24.0/46.0/60.2 |

| LambdaResNet-101 | 49.4 | 31.7/52.2/65.6 | 43.5 | 25.9/46.5/60.8 |

| ResNet-152 | 48.9 | 29.9/51.8/66.0 | 43.2 | 24.2/46.1/61.2 |

| ResNet-152+SE | 49.4 | 30.0/52.3/66.7 | 43.5 | 24.6/46.8/61.8 |

| LambdaResNet-152 | 50.0 | 31.8/53.4/67.0 | 43.9 | 25.5/47.3/62.0 |

6 DISCUSSION

6 讨论

How do lambda layers compare to the attention operation? Lambda layers scale favorably compared to self-attention. Vanilla Transformers using self-attention have $\Theta({\bar{b l h n^{2}}})$ memory footprint, whereas Lambda Networks have $\Theta(l k n^{2})$ memory footprint (or $\Theta(k n^{2})$ when sharing positional embeddings across layers). This enables the use of lambda layers at higher-resolution and on larger batch sizes. Additionally, the lambda convolution enjoys a simpler and faster implementation than its local self-attention counterpart. Finally, our ImageNet experiments show that lambda layers outperforms self-attention, demonstrating that the benefits of lambda layers go beyond improved speed and s cal ability.

Lambda层与注意力机制相比如何?

Lambda层在扩展性上优于自注意力机制。使用自注意力的标准Transformer具有$\Theta({\bar{b l h n^{2}}})$的内存占用,而Lambda网络的内存占用为$\Theta(l k n^{2})$(或在层间共享位置嵌入时为$\Theta(k n^{2})$)。这使得Lambda层能够在更高分辨率和更大批量大小下使用。此外,Lambda卷积的实现比局部自注意力更简单、更快速。最后,我们的ImageNet实验表明,Lambda层的性能优于自注意力机制,证明Lambda层的优势不仅限于速度和可扩展性的提升。

How are lambda layers different than linear attention mechanisms? Lambda layers generalize and extend linear attention formulations to capture position-based interactions, which is crucial for modeling highly structured inputs such as images (see Table 10 in Appendix D.1). As the aim is not to approximate an attention kernel, lambda layers allow for more flexible non-linear i ties and normalization s which we also find beneficial (see Table 12 in Appendix D.1). Finally, we propose multi-query lambda layers as a means to reduce complexity compared to the multi-head (or single-head) formulation typically used in linear attention works. Appendix C.3 presents a detailed discussion of linear attention.

Lambda层与线性注意力机制有何不同?Lambda层对线性注意力公式进行了泛化和扩展,以捕捉基于位置的交互作用,这对于建模图像等高结构化输入至关重要(参见附录D.1中的表10)。由于目标并非近似注意力核,Lambda层允许更灵活的非线性关系和归一化操作,这些特性也被证明具有优势(参见附录D.1中的表12)。最后,我们提出多查询Lambda层作为降低复杂度的方法,相较于线性注意力工作中常用的多头(或单头)公式。附录C.3对线性注意力进行了详细讨论。

How to best use lambda layers in the visual domain? The improved s cal ability, speed and ease of implementation of lambda layers compared to global or local attention makes them a strong candidate for use in the visual domain. Our ablations demonstrate that lambda layers are most beneficial in the intermediate and low-resolution stages of vision architectures when optimizing for the speed-accuracy tradeoff. It is also possible to design architectures that rely exclusively on lambda layers which can be more parameter and flops efficient. We discuss practical modeling recommendations in Appendix A.

如何在视觉领域最佳使用lambda层?相比全局或局部注意力机制,lambda层在可扩展性、速度和实现便捷性方面的改进使其成为视觉领域的理想选择。我们的消融实验表明,在优化速度-精度权衡时,lambda层在视觉架构的中低分辨率阶段最具优势。此外,还可以设计完全依赖lambda层的架构,这种架构在参数量和计算量(FLOPs)方面可能更高效。我们在附录A中讨论了实际建模建议。

Generality of lambda layers. While this work focuses on static image tasks, we note that lambda layers can be instantiated to model interactions on structures as diverse as graphs, time series, spatial lattices, etc. We anticipate that lambda layers will be helpful in more modalities, including multimodal tasks. We discuss masked contexts and auto-regressive tasks in the Appendix B.2.

Lambda层的通用性。尽管本研究聚焦于静态图像任务,但我们注意到Lambda层可实例化用于建模多种结构的交互,如图结构、时间序列、空间网格等。我们预期Lambda层将在更多模态中发挥作用,包括多模态任务。关于掩码上下文和自回归任务的讨论详见附录B.2。

Conclusion. We propose a new class of layers, termed lambda layers, which provide a scalable framework for capturing structured interactions between inputs and their contexts. Lambda layers summarize available contexts into fixed-size linear functions, termed lambdas, that are directly applied to their associated queries. The resulting neural networks, Lambda Networks, are computationally efficient and capture long-range dependencies at a small memory cost, enabling their application to large structured inputs such as high-resolution images. Extensive experiments on computer vision tasks showcase their versatility and superiority over convolutional and attention al networks. Most notably, we introduce Lambda Res Nets, a family of hybrid Lambda Networks which reach excellent ImageNet accuracies and achieve up to $9.5\mathrm{x}$ speed-ups over the popular Efficient Nets, significantly improving the speed-accuracy tradeoff of image classification models.

结论。我们提出了一类称为Lambda层的新型网络层,它为捕捉输入与其上下文之间的结构化交互提供了可扩展的框架。Lambda层将可用上下文归纳为固定大小的线性函数(称为lambdas),并直接应用于其关联查询。由此产生的神经网络——Lambda网络,计算高效且能以较小的内存开销捕获长程依赖关系,使其能够应用于高分辨率图像等大型结构化输入。在计算机视觉任务上的大量实验表明,其多功能性优于卷积网络和注意力网络。最值得注意的是,我们引入了Lambda Res Nets这一混合Lambda网络家族,它在ImageNet上达到了优异的准确率,并比流行的Efficient Nets实现了高达$9.5\mathrm{x}$的加速,显著改善了图像分类模型的速度-准确率权衡。

ACKNOWLEDGMENTS

致谢

The author would like to thank Barret Zoph and William Fedus for endless discussions, fruitful suggestions and careful revisions; Jonathon Shlens, Mike Mozer, Prajit Rama chandra n, Ashish Vaswani, Quoc Le, Neil Housby, Jakob Uszkoreit, Margaret Li, Krzysztof Cho roman ski for many insightful comments; Hedvig Rausing for the antarctic info graphics; Zolan Brinnes for the OST; Andrew Brock, Sheng Li for assistance with profiling Efficient Nets; Adam Kraft, Thang Luong and Hieu Pham for assistance with the semi-supervised experiments and the Google Brain team for useful discussions on the paper.

作者要感谢Barret Zoph和William Fedus持续不断的讨论、富有成效的建议和细致的修订;感谢Jonathon Shlens、Mike Mozer、Prajit Ramachandran、Ashish Vaswani、Quoc Le、Neil Housby、Jakob Uszkoreit、Margaret Li、Krzysztof Choromanski提供的许多深刻见解;感谢Hedvig Rausing提供的南极信息图表;感谢Zolan Brinnes制作的OST;感谢Andrew Brock和Sheng Li在分析EfficientNets时给予的帮助;感谢Adam Kraft、Thang Luong和Hieu Pham在半监督实验中的协助;感谢Google Brain团队对本文的有益讨论。

REFERENCES

参考文献

Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. Neural machine translation by jointly learning to align and translate. In International Conference on Learning Representations, 2015.

Dzmitry Bahdanau、Kyunghyun Cho 和 Yoshua Bengio。通过联合学习对齐与翻译实现神经机器翻译。收录于国际学习表征会议,2015年。

Irwan Bello, Hieu Pham, Quoc V. Le, Mohammad Norouzi, and Samy Bengio. Neural combinatorial optimization with reinforcement learning. 2016. URL http://arxiv.org/abs/1611. 09940.

Irwan Bello、Hieu Pham、Quoc V. Le、Mohammad Norouzi 和 Samy Bengio。基于强化学习的神经组合优化。2016。URL http://arxiv.org/abs/1611.09940。

Angelos Katha ro poul os, Apoorv Vyas, Nikolaos Pappas, and Francois Fleuret. Transformers are rnns: Fast auto regressive transformers with linear attention. 2020.

Angelos Katharopoulos, Apoorv Vyas, Nikolaos Pappas, 和 Francois Fleuret. Transformer是RNN:具有线性注意力的快速自回归Transformer. 2020.

Nikita Kitaev, Lukasz Kaiser, and Anselm Levskaya. Reformer: The efficient transformer. arXiv preprint arXiv:2001.04451, 2020.

Nikita Kitaev、Lukasz Kaiser 和 Anselm Levskaya。Reformer: 高效的 Transformer。arXiv 预印本 arXiv:2001.04451,2020。

attention. Proceedings of Machine Learning Research, pp. 2048–2057. PMLR, 2015.

注意力。机器学习研究论文集,第2048-2057页。PMLR,2015年。

Han Zhang, Ian Goodfellow, Dimitris Metaxas, and Augustus Odena. Self-attention generative adversarial networks. 2019.

Han Zhang、Ian Goodfellow、Dimitris Metaxas 和 Augustus Odena。自注意力生成对抗网络。2019年。

Hang Zhang, Chongruo Wu, Zhongyue Zhang, Yi Zhu, Zhi Zhang, Haibin Lin, Yue Sun, Tong He, Jonas Mueller, R. Manmatha, Mu Li, and Alexander Smola. Resnest: Split-attention networks. 2020.

张航、吴崇若、张忠岳、朱毅、张智、林海滨、孙悦、何通、Jonas Mueller、R. Manmatha、李沐、Alexander Smola。ResNeSt:拆分注意力网络。2020。

Hengshuang Zhao, Jiaya Jia, and Vladlen Koltun. Exploring self-attention for image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

Hengshuang Zhao、Jiaya Jia 和 Vladlen Koltun。探索自注意力机制在图像识别中的应用。发表于 IEEE/CVF 计算机视觉与模式识别会议 (CVPR),2020 年 6 月。

Barret Zoph and Quoc V. Le. Neural architecture search with reinforcement learning. In International Conference on Learning Representations, 2017.

Barret Zoph 和 Quoc V. Le. 基于强化学习的神经网络架构搜索. 发表于 International Conference on Learning Representations, 2017.

A PRACTICAL MODELING RECOMMENDATIONS

实用建模建议

I want to make it faster on TPUs/GPUs... Hybrid models reach a better speed-accuracy tradeoff. Global contexts can be computationally wasteful, especially in the early high resolution layers where features lack semantic information, and can be replaced by lambda convolutions with smaller scopes (e.g. $|m|{=}5\mathrm{x}5$ or $7\mathrm{x}7$ ) or the standard 3x3 convolution. Additionally, using a hybrid can require less tuning when starting from a working model/training setup.

我想在TPU/GPU上提升速度...混合模型能实现更好的速度-准确率权衡。全局上下文计算可能造成资源浪费,尤其在早期高分辨率层中特征缺乏语义信息时,可替换为小范围lambda卷积(例如 $|m|{=}5\mathrm{x}5$ 或 $7\mathrm{x}7$ )或标准3x3卷积。此外,基于现有可用模型/训练方案时,采用混合架构通常需要更少的调参工作。

I want to make to minimize FLOPS (e.g. embedded applications)... Consider a hybrid with inverted bottlenecks, as done in Section D.3.2. To further reduce FLOPS, prefer lambda convolutions with smaller scopes (e.g. $|m|{=}5\mathrm{x}5$ or $7\mathrm{x}7$ ).

我希望最小化FLOPS (例如嵌入式应用)... 考虑采用D.3.2节所述的倒置瓶颈混合结构。为进一步降低FLOPS,建议选用较小作用域的lambda卷积 (例如 $|m|{=}5\mathrm{x}5$ 或 $7\mathrm{x}7$)。

I encounter memory issues... Memory footprint can be reduced by sharing position embeddings across layers (especially layers with the highest resolution). Using the lambda convolution is more memory efficient. Reducing the query depth $|k|$ or increasing the number of heads $|h|$ also decreases memory consumption.

我遇到了内存问题... 可以通过跨层共享位置嵌入(尤其是最高分辨率的层)来减少内存占用。使用lambda卷积更节省内存。降低查询深度$|k|$或增加头数$|h|$也能减少内存消耗。

I’m experiencing instability... We found it important to initialize the $\gamma$ parameter in the last batchnorm layer of the ResNet’s bottleneck blocks to 0 (this is the default in most codebases). Normalizing the keys (i.e. with the softmax) along the context’s length is important. Early experiments which employed 2 lambda layers sequentially in the same residual block were unstable, suggesting that using 2 lambda layers in sequence should be avoided.

我们发现在ResNet瓶颈块的最后一个批归一化(batchnorm)层中将$\gamma$参数初始化为0非常重要(这是大多数代码库的默认设置)。沿上下文长度对键进行归一化(例如使用softmax)至关重要。早期实验中在同一残差块中连续使用2个lambda层会导致不稳定,这表明应避免连续使用2个lambda层。

Which implementation of the lambda convolution should I use? In our experiments using Tensorflow 1.x on TPUv3 hardware, we found both the n-d depthwise and $(\mathrm{n}{+}1)$ -d convolution implementations to have similar speed. We point out that this can vary across software/hardware stacks.

应该使用哪种 lambda 卷积实现?我们在 TPUv3 硬件上使用 Tensorflow 1.x 进行实验时,发现 n-d depthwise 和 $(\mathrm{n}{+}1)$ -d 卷积实现的速度相近。需要指出的是,这种结果可能因软件/硬件栈的不同而有所差异。

What if my task doesn’t require position-based interactions? Computational costs in the lambda layer are dominated by position-based interactions. If your task doesn’t require them, you can try the content-only lambda layer or any other linear attention mechanism. We recommend using the multi-query formulation (as opposed to the usual multi-head) and scaling other dimensions of the model.

如果我的任务不需要基于位置的交互怎么办?Lambda层中的计算成本主要由基于位置的交互决定。如果您的任务不需要这些交互,可以尝试仅含内容的lambda层或其他线性注意力机制。我们建议使用多查询(multi-query)公式(而非常见的多头(multi-head)机制),并调整模型的其他维度。

B ADDITIONAL VARIANTS

B 附加变体

B.1 COMPLETE CODE WITH LAMBDA CONVOLUTION

B.1 使用 Lambda 卷积的完整代码

Figure 5: Pseudo-code for the multi-query lambda layer and the 1d lambda convolution. A n-d lambda convolution can equivalently be implemented via a regular $(\mathrm{n}{+}1)$ -d convolution or a nd depthwise convolution with channel multiplier. The embeddings can be made to satisfy various conditions (e.g. translation e qui variance and masking) when computing positional lambdas with the einsum implementation.

图 5: 多查询lambda层和一维lambda卷积的伪代码。n维lambda卷积可通过常规的$(\mathrm{n}{+}1)$维卷积或带通道乘数的n维深度卷积等效实现。在使用einsum实现位置lambda计算时,嵌入可被设计为满足各种条件(例如平移等变性和掩码)。

B.2 GENERATING LAMBDAS FROM MASKED CONTEXTS

B.2 从掩码上下文中生成 Lambda 表达式

In some applications, such as denoising tasks or auto-regressive training, it is necessary to restrict interactions to a sub-context $\mathcal{C}_ {n}\subset\mathcal{C}$ when generating $\lambda_{n}$ for query position $n$ . For example, parallel auto-regressive training requires masking the future to ensure that the output ${\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}$ only depends on past context positions $m<n$ . Self-attention achieves this by zeroing out the irrelevant attention weights $\pmb{a}_ {n m^{\prime}}=0\forall m^{\prime}\notin\mathcal{C}_ {n}$ , thus guaranteeing that $\begin{array}{r}{\pmb{y}_ {n}=\sum_{m}\pmb{a}_ {n m}\pmb{v}_ {m}}\end{array}$ only depends on $\scriptstyle{{\mathcal{C}}_{n}}$ .

在某些应用中,例如去噪任务或自回归训练,当为查询位置 $n$ 生成 $\lambda_{n}$ 时,需要将交互限制在子上下文 $\mathcal{C}_ {n}\subset\mathcal{C}$ 内。例如,并行自回归训练需要掩码未来信息,以确保输出 ${\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}{\mathbf{}}_ {{\mathbf{}}}$ 仅依赖于过去的上下文位置 $m<n$。自注意力机制通过将无关注意力权重置零 $\pmb{a}_ {n m^{\prime}}=0\forall m^{\prime}\notin\mathcal{C}_ {n}$ 来实现这一点,从而保证 $\begin{array}{r}{\pmb{y}_ {n}=\sum_{m}\pmb{a}_ {n m}\pmb{v}_ {m}}\end{array}$ 仅依赖于 $\scriptstyle{{\mathcal{C}}_{n}}$。

Similarly, one can block interactions between queries and masked context positions when generating lambdas by applying a mask before summing the contributions of context positions. As long as the mask is shared across all elements in the batch, computing masked lambdas does not require materializing per-example attention maps and the complexities are the same as for global context case. See Figure 6 for an implementation.

toward the masked context positions when generating lambdas by applying a mask before summing the contributions of context positions. As long as the mask is shared across all elements in the batch, computing masked lambdas does not require materializing per-example attention maps and the complexities are the same as for global context case. See Figure 6 for an implementation.

同样地,在生成lambda时,可以通过在汇总上下文位置贡献前应用掩码来阻止查询与掩码上下文位置之间的交互。只要掩码在批次中的所有元素间共享,计算掩码lambda就不需要具体化每个样本的注意力图,其复杂度与全局上下文情况相同。具体实现见图 6。

B.3 MULTI-HEAD VS MULTI-QUERY LAMBDA LAYERS

B.3 多头 (Multi-Head) 与多查询 (Multi-Query) Lambda 层的对比

In this section, we motivate using a multi-query formulation as opposed to the usual multi-head formulation used in self-attention. Figure 7 presents the implementation of a multi-head lambda layer. Table 7 compares complexities for multi-head and multi-query lambda layers. Using a multi-query formulation reduces computations by a factor of $|h|$ (the number of queries per lambda) compared to the multi-head formulation. We also found in early experimentation that multi-query lambdas yield a better speed-accuracy trade-off. Additionally, the multi-head lambda layer does not enjoy a simple local implementation as the lambda convolution.

在本节中,我们阐述了采用多查询 (multi-query) 公式而非自注意力中常用的多头 (multi-head) 公式的动机。图 7 展示了多头 lambda 层的实现方式。表 7 对比了多头与多查询 lambda 层的计算复杂度。与多头公式相比,多查询公式能将计算量减少 $|h|$ 倍 (即每个 lambda 的查询数量)。早期实验还表明,多查询 lambda 能实现更优的速度-精度权衡。此外,多头 lambda 层无法像 lambda 卷积那样实现简单的局部化操作。

def masked lambda layer(queries, normalized keys, embeddings, values, mask): """Masked multi−query lambda layer. Args: queries: a tensor with shape [b, h, n, k]. normalized keys: a tensor with shape [b, m, k]. embeddings: a tensor with shape [k, n, m]. values: a tensor with shape [b, m, v]. mask: a tensor of 0 and 1s with shape [n, m]. """ # We show the general case but a cumulative sum may be faster for masking the future. # Note that each query now also has its own content lambda since every query # interacts with a different context. # Keys should be normalized by only considering the elements in their contexts. content mu $=$ einsum(normalized keys, values, ’bmk,bmv−>bmkv’) content lambdas $=$ einsum(content mu, mask, ’bmkv, $\mathtt{n m->}$ bnkv’) embeddings $=$ einsum(embeddings, mask, ’knm, $\mathtt{n m->k n m}^{\prime}$ ) # apply mask to embeddings position lambdas $=$ einsum(embeddings, values, $^{,}\mathtt{k n m}$ ,bmv− $>$ bnkv’) content output $=$ einsum(queries, content lambda, ’bhnk,bnkv− $\mathrm{\sim}$ bnhv’) position output $=$ einsum(queries, position lambdas, ’bhnk,bnkv− $\mathrm{\sim}$ bnhv’) output $=$ reshape(content output $^+$ position output, [b, n, d]) return output

def masked_lambda_layer(queries, normalized_keys, embeddings, values, mask):

"""带掩码的多查询lambda层。

参数:

queries: 形状为[b, h, n, k]的张量。

normalized_keys: 形状为[b, m, k]的张量。

embeddings: 形状为[k, n, m]的张量。

values: 形状为[b, m, v]的张量。

mask: 由0和1组成的形状为[n, m]的张量。

"""

# 我们展示了通用情况,但对于掩码未来信息,累积求和可能更快。

# 注意现在每个查询也有自己的内容lambda,因为每个查询

# 与不同的上下文交互。

# 键应仅通过考虑其上下文中的元素进行归一化。

content_mu = einsum(normalized_keys, values, 'bmk,bmv->bmkv')

content_lambdas = einsum(content_mu, mask, 'bmkv, nm−>bnkv')

embeddings = einsum(embeddings, mask, 'knm, nm−>knm') # 对嵌入应用掩码

position_lambdas = einsum(embeddings, values, 'knm, bmv->bnkv')

content_output = einsum(queries, content_lambda, 'bhnk,bnkv−>bnhv')

position_output = einsum(queries, position_lambdas, 'bhnk,bnkv−>bnhv')

output = reshape(content_output + position_output, [b, n, d])

return output

def multihead lambda layer(queries, keys, embeddings, values, impl $=^{\prime}$ einsum’): """Multi−head lambda layer.""" content lambda $=$ einsum(softmax(keys), values, ’bhmk,bhmv−>bhkv’) position lambdas $=$ einsum(embeddings, values, ’hnmk,bhmv− $>$ bnhkv’) content output $=$ einsum(queries, content lambda, ’bhnk,bhkv− $>$ bnhv’) position output $=$ einsum(queries, position lambdas, ’bhnk,bnkv−>bnhv’) output $=$ reshape(content output $^+$ position output, [b, n, d]) return output

def multihead lambda layer(queries, keys, embeddings, values, impl $=^{\prime}$ einsum’): """多头lambda层""" content lambda $=$ einsum(softmax(keys), values, ’bhmk,bhmv−>bhkv’) position lambdas $=$ einsum(embeddings, values, ’hnmk,bhmv− $>$ bnhkv’) content output $=$ einsum(queries, content lambda, ’bhnk,bhkv− $>$ bnhv’) position output $=$ einsum(queries, position lambdas, ’bhnk,bnkv−>bnhv’) output $=$ reshape(content output $^+$ position output, [b, n, d]) return output

Figure 6: Pseudo-code for masked multi-query lambda layer. Figure 7: Pseudo-code for the multi-head lambda layer. This is only shown as an example as we recommend multi-query lambdas instead. Table 7: Complexity comparison between a multi-head and a multi-query lambda layer. Using a multi-query formulation reduces complexity by a factor $|h|$ (the number of queries per lambda) compared to the standard multi-head formulation.

| Operation | Time complexity | Space complexity |

| Multi-head lambda layer | O(bnmkd) | (knm + bnkd) |

| Multi-query lambda layer | (bnmkd/h) | (hknm +bnkd/h) |

图 6: 掩码多查询 lambda 层的伪代码。

图 7: 多头 lambda 层的伪代码。此处仅作为示例展示,我们推荐使用多查询 lambda 层。

表 7: 多头与多查询 lambda 层的复杂度对比。相比标准多头形式,多查询形式将复杂度降低了 $|h|$ 倍(每个 lambda 的查询数量)。

| 操作 | 时间复杂度 | 空间复杂度 |

|---|---|---|

| 多头 lambda 层 | O(bnmkd) | (knm + bnkd) |

| 多查询 lambda 层 | (bnmkd/h) | (hknm + bnkd/h) |

B.4 ADDING EXPRESSIVITY WITH AN EXTRA DIMENSION

B.4 通过额外维度增强表现力

We briefly experiment with a variant that enables increasing the cost of computing the lambdas while keeping the cost of applying them constant. This is achieved by introducing an additional dimension, termed the intra-depth with corresponding hyper parameter $|u|$ , in keys, position embeddings and values. Each key (or positional embedding) is now a $|k|\times|u|$ matrix instead of a $|k|$ -dimensional vector. Similarly, each value is now a $|\boldsymbol{v}|\times|\boldsymbol{u}|$ matrix instead of a $|v|$ -dimensional vector. The lambdas are obtained via summing over context positions and the intra-depth position $|u|$ and have $|\boldsymbol{k}|\times|\boldsymbol{v}|$ shape similar to the default case. See Figure 8 for an implementation and Table 8 for the complexities. Experiments (see Appendix D.1) demonstrate that this variant results in accuracy improvements but we find that using $\lvert u\rvert=1$ (i.e. the default case) is optimal when controlling for speed on modern machine learning accelerators.

我们简要尝试了一种变体方法,通过引入称为内部深度(intra-depth)的额外维度(对应超参数$|u|$),在保持应用成本不变的同时提高计算lambda的成本。此时每个键(或位置嵌入)变为$|k|\times|u|$矩阵而非$|k|$维向量,同理每个值变为$|\boldsymbol{v}|\times|\boldsymbol{u}|$矩阵而非$|v|$维向量。lambda通过聚合上下文位置和内部深度位置$|u|$获得,其$|\boldsymbol{k}|\times|\boldsymbol{v}|$形状与默认情况相同。具体实现见图8,复杂度分析见表8。实验(见附录D.1)表明该变体能提升精度,但发现控制现代机器学习加速器速度时,采用$\lvert u\rvert=1$(即默认情况)为最优方案。

def compute position lambdas(embeddings, values, impl $=^{\prime}$ einsum’): """Compute position lambdas with intra−depth u.""" if impl $==$ ’conv’: # values: [b, n, v, u] shape # embeddings: [r, 1, u, k] shape position lambdas $=$ conv2d(values, embeddings) # Transpose from shape [b, n, v, k] to shape [b, n, k, v] position lambdas $=$ transpose(position lambdas, [0, 1, 3, 2]) elif impl $==$ ’einsum’: # embeddings: [k, n, m, u] shape position lambdas $=$ einsum(embeddings, values, ’knmu,bmvu−>bnkv’) return position lambdas

def compute_position_lambdas(embeddings, values, impl $=^{\prime}$ einsum'): """计算带层内u的位置lambda值"""

if impl $==$ 'conv':

values: [b, n, v, u] 形状

embeddings: [r, 1, u, k] 形状

position_lambdas $=$ conv2d(values, embeddings)

从[b, n, v, k]转置为[b, n, k, v]

position_lambdas $=$ transpose(position_lambdas, [0, 1, 3, 2])

elif impl $==$ 'einsum':

embeddings: [k, n, m, u] 形状

position_lambdas $=$ einsum(embeddings, values, 'knmu,bmvu->bnkv')

return position_lambdas

def lambda layer(queries, keys, embeddings, values, impl $(=^{,}$ einsum’): """Multi−query lambda layer with intra−depth u.""" content lambda $=$ einsum(softmax(keys), values, ’bmku,bmvu− $\mathrm{\sim}$ bkv’) position lambdas $=$ compute position lambdas(embeddings, values, lambda conv) content output $=$ einsum(queries, content lambda, ’bhnk,bkv−>bnhv’) position output $=$ einsum(queries, position lambdas, ’bhnk,bnkv−>bnhv’) output $=$ reshape(content output $^+$ position output, [b, n, d]) return output

def lambda层(queries, keys, embeddings, values, impl $(=^{,}$ einsum'): """带深度内u的多查询lambda层"""

content_lambda $=$ einsum(softmax(keys), values, 'bmku,bmvu- $\mathrm{\sim}$ bkv')

position_lambdas $=$ compute_position_lambdas(embeddings, values, lambda_conv)

content_output $=$ einsum(queries, content_lambda, 'bhnk,bkv->bnhv')

position_output $=$ einsum(queries, position_lambdas, 'bhnk,bnkv->bnhv')

output $=$ reshape(content_output $^+$ position_output, [b, n, d])

return output

Figure 8: Pseudo-code for the multi-query lambda layer with intra-depth $|u|$ . Lambdas are obtained by reducing over the context positions and the intra-depth dimension. This variant allocates more computation for generating the lambdas while keeping the cost of applying them constant. The equivalent n-d lambda convolution can be implemented with a regular $(\mathrm{n}{+}1)$ )-d convolution. Table 8: Complexity for a multi-query lambda layer with intra-depth $|u|$ .

| Operation | Time complexity | Space complexity |

| Lambda layer (lul >1) | (bnmkud /h) | O(knmu + bnku) |

图 8: 带内部深度 $|u|$ 的多查询 lambda 层伪代码。通过减少上下文位置和内部深度维度获得 lambdas。该变体在保持应用成本不变的同时,为生成 lambdas 分配了更多计算量。等效的 n-d lambda 卷积可通过常规 $(\mathrm{n}{+}1)$ )-d 卷积实现。

表 8: 带内部深度 $|u|$ 的多查询 lambda 层复杂度

| 操作 | 时间复杂度 | 空间复杂度 |

|---|---|---|

| Lambda层 (lul >1) | (bnmkud /h) | O(knmu + bnku) |

C ADDITIONAL RELATED WORK

C 其他相关工作

In this section, we review the attention operation and related works on improving its s cal ability. We discuss connections between lambda layers and channel, spatial or linear attention mechanisms and show how they can be cast as less flexible specific instances of lambda layers. We conclude with a brief review of self-attention in the visual domain and discuss connections with expert models.

在本节中,我们回顾注意力操作及其可扩展性改进的相关工作。探讨了lambda层与通道、空间或线性注意力机制之间的联系,并展示如何将它们视为lambda层灵活性较低的具体实例。最后简要回顾了视觉领域的自注意力机制,并讨论了与专家模型的关联。

C.1 SOFTMAX ATTENTION

C.1 Softmax注意力机制

Softmax attention Softmax-attention produces a distribution over the context for each query $\pmb q_{n}$ as $\pmb{a}_ {n}=\mathrm{softmax}(\pmb{K q}_ {n})\in\mathbb{R}^{|m|}$ where the keys $\kappa$ are obtained from the context $C$ . The attention distribution $\pmb{a}_ {n}$ is then used to form a linear combination of values $V$ obtained from the context as $\begin{array}{r}{\pmb{y}_ {n}=\pmb{V}^{T}\pmb{a}_ {n}=\sum_{m}a_{n m}\pmb{v}_ {m}\in\mathbb{R}^{|v|}}\end{array}$ . As we take a weighted sum of the values13, we transform the query $\mathbf{\it{q}}_ {n}$ into the output ${\boldsymbol{\mathbf{\mathit{y}}}}_ {n}$ and discard its attention distribution ${\bf{{a}}}_{n}$ . This operation captures content-based interactions, but not position-based interactions.

Softmax注意力机制通过softmax运算为每个查询向量$\pmb q_{n}$生成上下文上的分布$\pmb{a}_ {n}=\mathrm{softmax}(\pmb{K q}_ {n})\in\mathbb{R}^{|m|}$,其中键$\kappa$源自上下文$C$。该注意力分布随后用于构建值$V$的线性组合$\begin{array}{r}{\pmb{y}_ {n}=\pmb{V}^{T}\pmb{a}_ {n}=\sum_{m}a_{n m}\pmb{v}_ {m}\in\mathbb{R}^{|v|}}\end{array}$。通过对值进行加权求和13,查询向量$\mathbf{\it{q}}_ {n}$被转换为输出${\boldsymbol{\mathbf{\mathit{y}}}}_ {n}$,其注意力分布${\bf{{a}}}_{n}$则被丢弃。该运算捕捉了基于内容的交互,但未包含基于位置的交互。

Relative attention In order to model position-based interactions, relative attention (Shaw et al., 2018) introduces a learned matrix of $|m|$ positional embeddings $\pmb{{\cal E}}_ {n}\in\mathbb{R}^{|m|\times|k|}$ and computes the attention distribution as $\pmb{a}_ {n}=\mathrm{softmax}((\pmb{K}+\pmb{E}_ {n})\pmb{q}_{n})\in\mathbb{R}^{|m|}$ . The attention distribution now also depends on the query position $n$ relative to positions of context elements $m$ . Relative attention therefore captures both content-based and position-based interactions.

相对注意力 (Relative attention)

为了建模基于位置的交互,相对注意力 (Shaw et al., 2018) 引入了一个可学习的 $|m|$ 位置嵌入矩阵 $\pmb{{\cal E}}_ {n}\in\mathbb{R}^{|m|\times|k|}$,并将注意力分布计算为 $\pmb{a}_ {n}=\mathrm{softmax}((\pmb{K}+\pmb{E}_ {n})\pmb{q}_{n})\in\mathbb{R}^{|m|}$。此时注意力分布还依赖于查询位置 $n$ 相对于上下文元素位置 $m$ 的关系。因此,相对注意力同时捕捉了基于内容和基于位置的交互。

C.2 SPARSE ATTENTION

C.2 稀疏注意力 (Sparse Attention)

A significant challenge in applying (relative) attention to large inputs comes from the quadratic $\Theta(|b n m|)$ memory footprint required to store attention maps. Many recent works therefore propose to impose specific patterns to the attention maps as a means to reduce the context size $|m|$ and consequently the memory footprint of the attention operation. These approaches include local attention patterns (Dai et al., 2019; Parmar et al., 2018; Rama chandra n et al., 2019), axial attention patterns (Ho et al., 2019; Wang et al., 2020a), static sparse attention patterns (Child et al.; Beltagy et al., 2020) or dynamic sparse attention patterns (Kitaev et al., 2020). See Tay et al. (2020) for a review. Their implementations can be rather complex, sometimes require low-level kernel implementations to get computational benefits or may rely on specific assumptions on the shape of the inputs (e.g., axial attention).

在大型输入上应用(相对)注意力机制的一个重大挑战来自于存储注意力图所需的二次方内存占用 $\Theta(|b n m|)$。因此,许多近期研究提出通过为注意力图施加特定模式来减小上下文规模 $|m|$,从而降低注意力操作的内存开销。这些方法包括局部注意力模式 (Dai et al., 2019; Parmar et al., 2018; Ramachandran et al., 2019)、轴向注意力模式 (Ho et al., 2019; Wang et al., 2020a)、静态稀疏注意力模式 (Child et al.; Beltagy et al., 2020) 或动态稀疏注意力模式 (Kitaev et al., 2020)。具体综述可参见 Tay et al. (2020)。这些方法的实现可能相当复杂,有时需要底层内核实现以获得计算优势,或者可能依赖于输入形状的特定假设(例如轴向注意力)。

In contrast, lambda layers are simple to implement for both global and local contexts using simple einsum and convolution primitives and capture dense content and position-based interactions with no assumptions on the input shape.

相比之下,lambda层通过简单的einsum和卷积原语即可实现全局与局部上下文的建模,无需对输入形状做任何假设,就能捕捉密集内容交互和基于位置的交互关系。

C.3 LINEAR ATTENTION: CONNECTIONS AND DIFFERENCES

C.3 线性注意力:关联与差异

Another approach to reduce computational requirements of attention mechanisms consists in approxima ting the attention operation in linear space and time complexity, which is referred to as linear (or efficient) attention. Linear attention mechanisms date back to de Brébisson & Vincent (2016); Britz et al. (2017) and were later introduced in the visual domain by Chen et al. (2018); Shen et al. (2018). They are recently enjoying a resurgence of popularity with many works modifying the popular Transformer architecture for sequential processing applications (Katha ro poul os et al., 2020; Wang et al., 2020b; Choromanski et al., 2020).

另一种降低注意力机制计算需求的方法是在线性时间和空间复杂度下近似注意力操作,这被称为线性(或高效)注意力。线性注意力机制可追溯至de Brébisson & Vincent (2016)和Britz et al. (2017)的研究,随后由Chen et al. (2018)和Shen et al. (2018)引入视觉领域。近年来该技术重新流行,许多研究通过改进流行的Transformer架构来适应序列处理应用(Katharopoulos et al., 2020; Wang et al., 2020b; Choromanski et al., 2020)。

Linear attention via kernel factorization Linear attention is typically obtained by reinterpreting attention as a similarity kernel and leveraging a low-rank kernel factorization as

线性注意力通过核因子化实现

线性注意力通常通过将注意力重新解释为相似性核并利用低秩核因子化来获得

$$

\mathrm{Attention}(Q,K,V)=\operatorname{softmax}(Q K^{T})V\sim\phi(Q)(\phi(K^{T})V)

$$

$$

\mathrm{Attention}(Q,K,V)=\operatorname{softmax}(Q K^{T})V\sim\phi(Q)(\phi(K^{T})V)

$$

for some feature function $\phi$ . Computing $\phi(K^{T})V\in\mathbb{R}^{|k|\times|v|}$ first bypasses the need to materialize the attention maps $\phi(Q)\phi(K^{T})$ and the operation therefore has linear complexity with respect to the input length $|n|$ .

对于某些特征函数 $\phi$,先计算 $\phi(K^{T})V\in\mathbb{R}^{|k|\times|v|}$ 可以避免显式生成注意力图 $\phi(Q)\phi(K^{T})$,因此