Visual Spatial Reasoning

Abstract

Spatial relations are a basic part of human cognition. However, they are expressed in natural language in a variety of ways, and previous work has suggested that current vision-and-language models (VLMs) struggle to capture relational information. In this paper, we present Visual Spatial Reasoning (VSR), a dataset containing more than 10k natural text-image pairs with 66 types of spatial relations in English (such as under, in front of, and facing). While using a seemingly simple annotation format, we show how the dataset includes challenging linguistic phenomena, such as varying reference frames. We demonstrate a large gap between human and model performance: the human ceiling is above $95\%$, while state-of-the-art models only achieve around $70\%$. We observe that VLMs’ by-relation performances have little correlation with the number of training examples and that the tested models are in general incapable of recognising relations concerning the orientations of objects.

1 Introduction

Multimodal NLP research has developed rapidly in recent years, with substantial performance gains on tasks such as visual question answering (VQA) (Antol et al., 2015; Johnson et al., 2017; Goyal et al., 2017; Hudson and Manning, 2019; Zellers et al., 2019), vision-language reasoning or entailment (Suhr et al., 2017, 2019; Xie et al., 2019; Liu et al., 2021), and referring expression comprehension (Yu et al., 2016; Liu et al., 2019). Existing benchmarks, such as NLVR2 (Suhr et al., 2019) and VQA (Goyal et al., 2017), define generic paradigms for testing vision-language models (VLMs). However, as we further discuss in $\S2$, these benchmarks are not ideal for probing VLMs as they typically conflate multiple sources of error and do not allow controlled analysis on specific linguistic or cognitive properties, making it difficult to categorise and fully understand the model failures. In particular, spatial reasoning has been found to be challenging for current models, and much more challenging than capturing properties of individual entities (Kuhnle et al., 2018; Cirik et al., 2018; Akula et al., 2020), even for state-of-the-art models such as CLIP (Radford et al., 2021; Subramanian et al., 2022).

Another line of work generates synthetic datasets in a controlled manner to target specific relations and properties when testing VLMs, e.g., CLEVR (Liu et al., 2019) and ShapeWorld (Kuhnle and Copestake, 2018). However, synthetic datasets may accidentally overlook challenges (such as orientations of objects which we will discuss in $\S5$ ), and using natural images allows us to explore a wider range of language use.

To address the lack of probing evaluation benchmarks in this field, we present VSR (Visual Spatial Reasoning), a controlled dataset that explicitly tests VLMs for spatial reasoning. We choose spatial reasoning as the focus because it is one of the most fundamental capabilities for both humans and VLMs. Such relations are crucial to how humans organise their mental space and make sense of the physical world, and therefore fundamental for a grounded semantic model (Talmy, 1983).

The VSR dataset contains natural image-text pairs in English, with the data collection process explained in $\S3$. Each example in the dataset consists of an image and a natural language description that states a spatial relation between two objects in the image (two examples are shown in Fig. 1 and Fig. 2). A VLM needs to classify the image-caption pair as either true or false, indicating whether the caption correctly describes the spatial relation. The dataset covers 66 spatial relations and has ${>}10\mathrm{k}$ data points, using 6,940 images from MS COCO (Lin et al., 2014).

Situating one object in relation to another requires a frame of reference: a system of coordinates against which the objects can be placed. Drawing on detailed studies of more than forty typologically diverse languages, Levinson (2003) concludes that the diversity can be reduced to three major types: intrinsic, relative, and absolute. An intrinsic frame is centred on an object, e.g., behind the chair, meaning at the side with the backrest. A relative frame is centred on a viewer, e.g., behind the chair, meaning further away from someone’s perspective. An absolute frame uses fixed coordinates, e.g., north of the chair, using cardinal directions. In English, absolute frames are rarely used when describing relations on a small scale, and they do not appear in our dataset. However, intrinsic and relative frames are widely used, and present an important source of variation. We discuss the impact on data collection in $\S3.2$, and analyse the collected data in $\S4$.

We test four popular VLMs, i.e., VisualBERT (Li et al., 2019), LXMERT (Tan and Bansal, 2019), ViLT (Kim et al., 2021), and CLIP (Radford et al., 2021) on VSR, with results given in $\S5$. While the human ceiling is above $95\%$, all four models struggle to reach $70\%$ accuracy. We conduct a comprehensive analysis of the failures of the investigated VLMs and highlight that (1) positional encodings are extremely important for the VSR task; (2) models’ by-relation performance barely correlates with the number of training examples; (3) in fact, several spatial relations that concern the orientation of objects are especially challenging for current VLMs; (4) VLMs have extremely poor generalisation on unseen concepts.

2 Related Work

2.1 Comparison with synthetic datasets

Synthetic language-vision reasoning datasets, e.g., SHAPES (Andreas et al., 2016), CLEVR (Liu et al., 2019), NLVR (Suhr et al., 2017), and ShapeWorld (Kuhnle and Copestake, 2018), enable full control of dataset generation and could potentially benefit probing of the spatial reasoning capability of VLMs. They share a goal similar to ours: to diagnose and pinpoint weaknesses in VLMs. However, synthetic datasets necessarily simplify the problem as they have inherently bounded expressivity. In CLEVR, objects can only be spatially related via four relationships (“left”, “right”, “behind”, and “in front of”), while VSR covers 66 relations.

Figure 1: Caption: The potted plant is at the right side of the bench. Label: True.

Figure 2: Caption: The cow is ahead of the person. Label: False.

Synthetic data does not always accurately reflect the challenges of reasoning in the real world. For example, objects like spheres, which often appear in synthetic datasets, do not have orientations. In real images, orientations matter and human language use depends on that. Furthermore, synthetic images do not take the scene as a context into account. The interpretation of object relations can depend on such scenes (e.g., the degree of closeness can vary in open space and indoor scenes).

Last but not least, the vast majority of spatial relationships cannot be determined by rules. Even for seemingly simple relationships like “left/right of”, determining two objects’ spatial relationship can depend on the observer’s viewpoint, on whether the objects have a front, and, if so, on their orientations.

2.2 Spatial relations in existing vision-language datasets

Several existing vision-language datasets with natural images also contain spatial relations (e.g., NLVR2, COCO, and VQA datasets). Suhr et al. (2019) summarise that there are 9 prevalent linguistic phenomena/challenges in NLVR2, such as coreference, existential quantifiers, hard cardinality, and spatial relations, and 4 in VQA datasets (Antol et al., 2015; Hudson and Manning, 2019). However, the different challenges are entangled in these datasets. Sentences contain complex lexical and syntactic information and can thus conflate different sources of error, making it hard to identify the exact challenge and preventing categorised analysis. Yatskar et al. (2016) extract 6 types of visual spatial relations directly from MS COCO images with annotated bounding boxes. But rule-based automatic extraction can be restrictive, as most relations are complex and cannot be identified by relying on bounding boxes alone. Recently, Rösch and Libovický (2022) extract captions that contain 28 positional keywords from MS COCO and swap the keywords with their antonyms to construct a challenging probing dataset. However, the COCO captions also have the error-conflation problem. Also, the number of examples and types of relations are restricted by COCO captions.

Visual Genome (Krishna et al., 2017) also contains annotations of objects’ relations, including spatial relations. However, it is only a collection of true statements and contains no negative ones, so it cannot be framed as a binary classification task. It is non-trivial to automatically construct negative examples, since multiple relations can be plausible for a pair of objects in a given image. Relation classifiers are harder to learn than object classifiers on this dataset (Liu and Emerson, 2022).

Parcalabescu et al. (2022) propose a benchmark called VALSE for testing VLMs’ capabilities on various linguistic phenomena. VALSE has a subset focusing on “relations” between objects. It uses texts modified from COCO’s original captions. However, it is a zero-shot benchmark without a training set, containing just 535 data points, so it is not ideal for large-scale probing on a wide spectrum of spatial relations.

2.3 Spatial reasoning without grounding

There has also been interest in probing models’ spatial reasoning capability without visual input. For example, Collell et al. (2018); Mirzaee et al. (2021); Liu et al. (2022) probe pretrained text-only models or VLMs’ spatial reasoning capabilities with text-only questions. However, a text-only dataset cannot evaluate how a model relates language to grounded spatial information. In contrast, VSR focuses on the joint understanding of vision and language input.

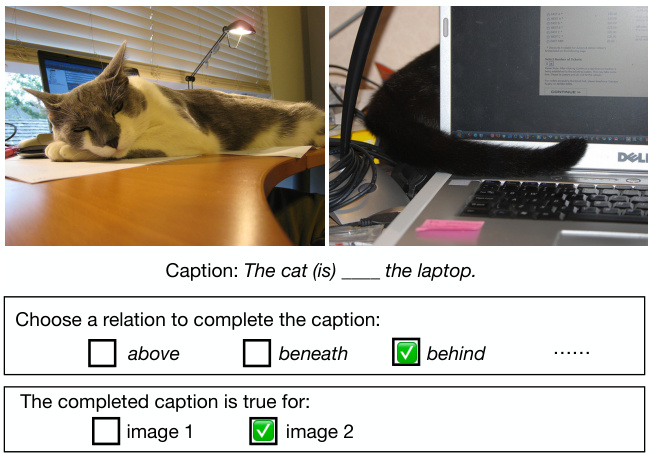

Figure 3: An annotation example of concepts “cat” & “laptop” in contrastive caption generation. The example generates two data points for our dataset: one “True” instance when the completed caption is paired with image 2 (right) and one “False” instance when paired with image 1 (left).

2.4 Spatial reasoning as a sub-component

Last but not least, some vision-language tasks and models require spatial reasoning as a sub-component. For example, Lei et al. (2020) propose $\mathrm{TVQA+}$, a spatio-temporal video QA dataset containing bounding boxes for objects referred to in the questions. Models then need to conduct QA while simultaneously detecting the correct object of interest. Christie et al. (2016) propose a method for simultaneous image segmentation and prepositional phrase attachment resolution. Models have to reason about objects’ spatial relations in the visual scene to determine the assignment of prepositional phrases. However, if spatial reasoning is only a sub-component of a task, error analysis becomes more difficult. In contrast, VSR provides a focused evaluation of spatial relations, which are particularly challenging for current models.

3 Dataset Creation

In this section we detail how VSR is constructed. The data collection process can generally be split into two phases: (1) contrastive caption generation (§3.1) and (2) second-round validation (§3.2). We then discuss annotator hiring & payment (§3.3), dataset splits (§3.4), and the human ceiling & agreement of VSR (§3.5).

3.1 Contrastive Template-based Caption Generation (Fig. 3)

In order to highlight spatial relations and avoid annotators frequently choosing trivial relations (such as “near to”), we use a contrastive caption generation approach. Specifically, first, a pair of images, each containing two concepts of interest, is randomly sampled from MS COCO (we use the train and validation sets of COCO 2017). Second, an annotator is given a template containing the two concepts and is required to choose a spatial relation from a pre-defined list (Table 1) that makes the caption correct for one image but incorrect for the other image. We detail these steps and explain the rationale in the following.

Image pair sampling. MS COCO 2017 contains 123,287 images and has labelled the segmentation and classes of 886,284 instances (individual objects). Leveraging the segmentation, we first randomly select two concepts (e.g., “cat” and “laptop” in Fig. 3), then retrieve all images containing the two concepts in COCO 2017 (train and validation sets). Images that contain multiple instances of either concept are then filtered out to avoid referential ambiguity. Among the single-instance images, we also filter out any image with an instance pixel area $<30{,}000$, to exclude extremely small instances. After these filtering steps, we randomly sample a pair from the remaining images. We repeat this process to obtain a large number of individual image pairs for caption generation.
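
The filtering and sampling steps above can be sketched as follows. This is a minimal illustration, not the authors' released code: the flat annotation records (`image_id`, `category`, `area`) are a simplified, hypothetical stand-in for COCO instance annotations, and we assume the area threshold is applied to both instances of interest.

```python
import random
from collections import defaultdict

MIN_AREA = 30_000  # pixel-area threshold stated in the text


def eligible_images(annotations, concept_a, concept_b, min_area=MIN_AREA):
    """Return ids of images with exactly one large-enough instance of each concept."""
    # image_id -> category -> list of instance areas
    per_image = defaultdict(lambda: defaultdict(list))
    for ann in annotations:
        per_image[ann["image_id"]][ann["category"]].append(ann["area"])

    keep = []
    for image_id, by_category in per_image.items():
        areas_a = by_category.get(concept_a, [])
        areas_b = by_category.get(concept_b, [])
        # Drop images that lack a concept or contain several instances of it.
        if len(areas_a) != 1 or len(areas_b) != 1:
            continue
        # Drop images where either instance is extremely small (assumption: both are checked).
        if areas_a[0] < min_area or areas_b[0] < min_area:
            continue
        keep.append(image_id)
    return sorted(keep)


def sample_image_pair(image_ids, rng):
    """Randomly pick two distinct images, or None if fewer than two remain."""
    if len(image_ids) < 2:
        return None
    return tuple(rng.sample(image_ids, 2))
```

Passing a seeded `random.Random` instance as `rng` keeps the sampling reproducible across runs.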

Fill in the blank: template-based caption generation. Given a pair of images, the annotator needs to come up with a valid caption that is a correct description for one image but incorrect for the other. In this way, the annotator should focus on the key difference between the two images (which should be a spatial relation between the two objects of interest) and choose a caption that differentiates the two. Similar paradigms are also used in the annotation of previous vision-language reasoning datasets such as NLVR(2) (Suhr et al., 2017, 2019) and MaRVL (Liu et al., 2021). To discourage annotators from writing modifiers and from differentiating the image pair by anything other than accurate spatial relations, we opt for a template-based classification task instead of free-form caption writing. Besides, the template-generated dataset can be easily categorised based on relations and their categories. Specifically, the annotator is given instance pairs as shown in Fig. 3.

The caption template has the format of “The ENT1 (is) the ENT2.”, and the annotators are instructed to select a relation from a fixed set to fill in the slot. The copula “is” can be omitted for grammaticality. For example, for “contains” and “has as a part”, “is” should be discarded in the template when extracting the final caption.
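
As an illustration of the template, the sketch below fills in the slot and drops the copula where needed. The function name and the `NO_COPULA` set are hypothetical; the set lists only the two relations named in the text, and other relations may need the same treatment.

```python
# Relations for which the copula "is" is dropped. Only these two are named in
# the text; the complete set is an assumption left open here.
NO_COPULA = {"contains", "has as a part"}


def fill_template(ent1, relation, ent2, no_copula=NO_COPULA):
    """Instantiate "The ENT1 (is) <relation> the ENT2." for a chosen relation."""
    copula = "" if relation in no_copula else "is "
    return f"The {ent1} {copula}{relation} the {ent2}."
```

For instance, `fill_template("potted plant", "at the right side of", "bench")` reproduces the caption of Fig. 1.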

The fixed set of spatial relations enables us to obtain full control of the generation process. The full list of relations used is given in Table 1. It contains 71 spatial relations and is adapted from the summarised relation table of Marchi Fagundes et al. (2021). We made minor changes to filter out clearly unusable relations, made relation names grammatical under our template, and reduced repeated relations. In our final dataset, 66 out of the 71 available relations are actually included (the other 5 are either not selected by annotators or are selected but the captions did not pass the validation phase).

3.2 Second-round Human Validation

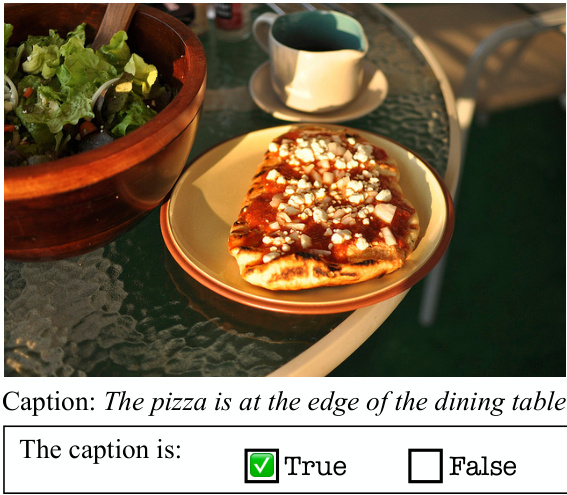

In the second-round validation, every annotated data point is reviewed by at least 3 additional human annotators (validators). Given a data point (consisting of an image and a caption), the validator gives either a True or False label as shown in Fig. 4 (the original label is hidden). In our final dataset, we exclude instances with fewer than 2 validators agreeing with the original label.

Design choice on reference frames. During validation, a validator needs to decide whether a statement is true or false for an image. However, as discussed in $\S1$ , interpreting a spatial relation requires choosing a frame of reference. For some images, a statement can be both true and false, depending on the choice. As a concrete example, in Fig. 1, while the potted plant is on the left side from the viewer’s perspective (relative frame), the potted plant is at the right side if the bench is used to define the coordinate system (intrinsic frame).

In order to ensure that annotations are consistent across the dataset, we communicated to the annotators that, for relations such as “left”/“right” and “in front of”/“behind”, they should consider both possible reference frames, and assign the label True when a caption is true from either the intrinsic or the relative frame. Only when a caption is incorrect under both reference frames (e.g., if the caption is “The potted plant is under the bench.” for Fig. 1) should a False label be assigned.
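
The labelling rule can be written as a one-line disjunction over the two frames. The sketch below is illustrative only: the function and argument names are hypothetical, and using `None` for a frame that does not apply (e.g., an object without a front) is our assumption.

```python
def vsr_label(true_in_intrinsic, true_in_relative):
    """True if the caption holds under either reference frame, else False.

    A frame that does not apply may be passed as None (assumption) and is
    treated the same as "not true under that frame".
    """
    return bool(true_in_intrinsic) or bool(true_in_relative)
```

For Fig. 1, “at the right side of” holds in the intrinsic frame but not the relative one, so `vsr_label(True, False)` gives `True`; “under” holds in neither, so the label is `False`.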

Table 1: The 71 available spatial relations. 66 of them appear in our final dataset ( $^*$ indicates not used).

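| Category | Spatial relations |
|---|---|
| Adjacency | adjacent to, alongside, at the side of, at the right side of, at the left side of, attached to, at the back of, ahead of, against, at the edge of |
| Directional | off, past, toward, down, deep down$^*$, up$^*$, away from, along, around, from$^*$, into, to$^*$, across, across from, through, down from |
| Orientation | facing, facing away from, parallel to, perpendicular to |
| Projective | on top of, beneath, beside, behind, left of, right of, under, in front of, below, above, over, in the middle of |
| Proximity | by, close to, near, far from, far away from |
| Topological | connected to, detached from, has as a part, part of, contains, within, at, on, in, with, surrounding, among, consists of, out of, between, inside, outside, touching |
| Unallocated | beyond, next to, opposite to, after$^*$, among, enclosed by |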

Figure 4: A second-round validation example.

On a practical level, this adds difficulty to the task, since a model cannot naively rely on pixel locations of the objects in the images, but also needs to correctly identify orientations of objects. However, the task is well-defined: a model that can correctly simulate both reference frames would be able to perfectly solve this task.

From a theoretical perspective, by involving more diverse reference frames, we are also demonstrating the complexity of human cognitive processes when understanding a scene, since different people approach a scene with different frames. Attempting to enforce a specific reference frame would be methodologically difficult and result in an unnaturally restricted dataset.

3.3 Annotator Hiring and Organisation

Annotators were hired from prolific.co. We required them to (1) have at least a bachelor’s degree, (2) be fluent in English, and (3) have a ${>}99\%$ historical approval rate on the platform. All annotators were paid 12 GBP per hour.

For caption generation, we released the task with batches of 200 instances and the annotator was required to finish a batch in 80 minutes. An annotator could not take more than one batch per day. In this way we had a diverse set of annotators and could also prevent annotators from becoming fatigued. For second-round validation, we grouped 500 data points in one batch and an annotator was asked to label each batch in 90 minutes.

In total, 24 annotators participated in caption generation and 45 participated in validation. 4 people participated in both phases, which should have minimally impacted the validation quality. The annotators had diverse demographic backgrounds: they were born in 15 countries, were living in 13 countries, and had 12 nationalities. 50 annotators were born and living in the same country while others had moved to different ones. The vast majority of our annotators were residing in the UK (32), South Africa (9), and Ireland (7). The ratio for holding a Bachelor/Master/PhD as the highest degree was $12.5\%/76.6\%/10.9\%$. Only 7 annotators were non-native English speakers while the other 58 were native speakers. $56.7\%$ of the annotators self-identified as female and $43.3\%$ as male.

3.4 Dataset Splits

We split the 10,972 validated data points into train/dev/test sets in two different ways. The statistics of the two splits are shown in Table 2. In the following, we explain how they are created.

Random split: We split the dataset randomly into train/dev/test with a ratio of 70/10/20.

Concept zero-shot split: We create another split, the concept zero-shot split, where train/dev/test have no overlapping concepts. That is, if “dog” appears in the train set, then it does not appear in the dev or test sets. This is done by randomly grouping concepts into three sets with a ratio of 50/20/30 of all concepts. This reduces the dataset size, since data points involving concepts from different parts of the train/dev/test split must be filtered out. The concept zero-shot split is a more challenging setup, since the model has to learn concepts and relations in a compositional way instead of remembering the co-occurrence statistics of the two.
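
A minimal sketch of the concept zero-shot split, under stated assumptions: each example is a dictionary with hypothetical keys `ent1` and `ent2` holding its two concepts, and the rounding used to turn the 50/20/30 ratio into group sizes is our choice, not something the text specifies.

```python
import random


def concept_zero_shot_split(examples, ratios=(0.5, 0.2, 0.3), seed=0):
    """Group concepts into train/dev/test and keep examples whose concepts share a group."""
    concepts = sorted({c for ex in examples for c in (ex["ent1"], ex["ent2"])})
    rng = random.Random(seed)
    rng.shuffle(concepts)

    n_train = round(ratios[0] * len(concepts))
    n_dev = round(ratios[1] * len(concepts))
    group = {}
    for i, concept in enumerate(concepts):
        if i < n_train:
            group[concept] = "train"
        elif i < n_train + n_dev:
            group[concept] = "dev"
        else:
            group[concept] = "test"

    splits = {"train": [], "dev": [], "test": []}
    for ex in examples:
        g1, g2 = group[ex["ent1"]], group[ex["ent2"]]
        # Examples mixing concepts from different groups are filtered out,
        # which is why this split is smaller than the random split.
        if g1 == g2:
            splits[g1].append(ex)
    return splits, group
```

Because every cross-group example is discarded, the three splits together are smaller than the input, mirroring the reduction from 10,972 to 5,560 data points reported in Table 2.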

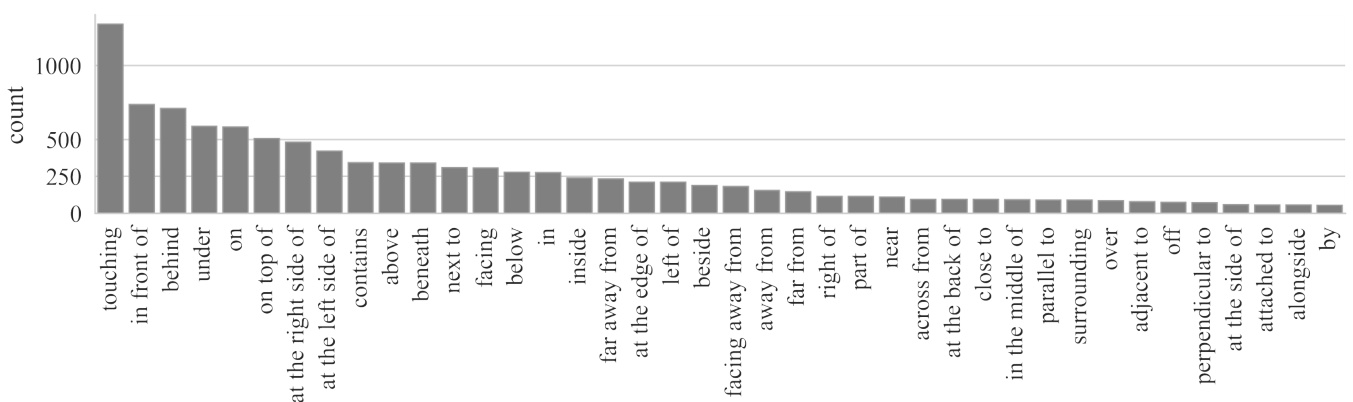

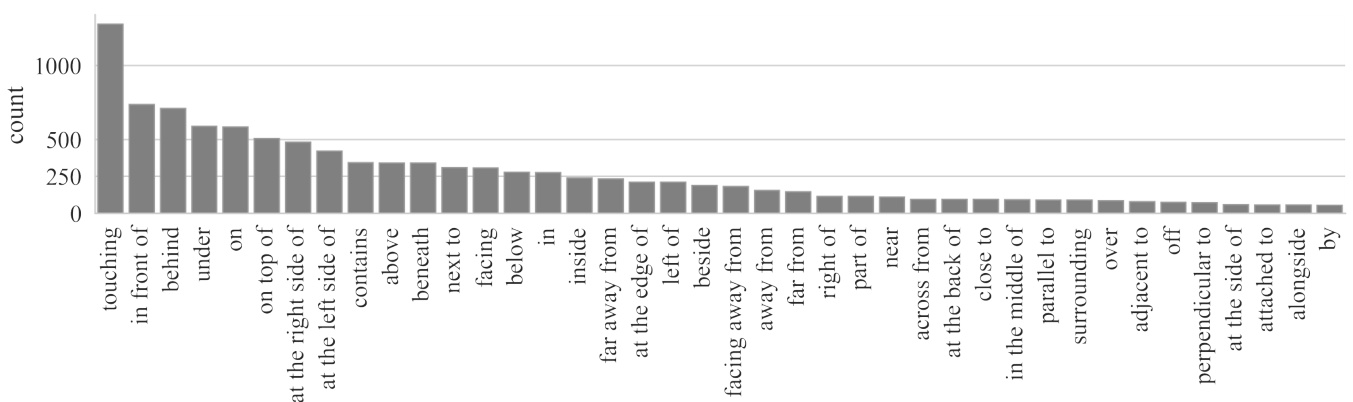

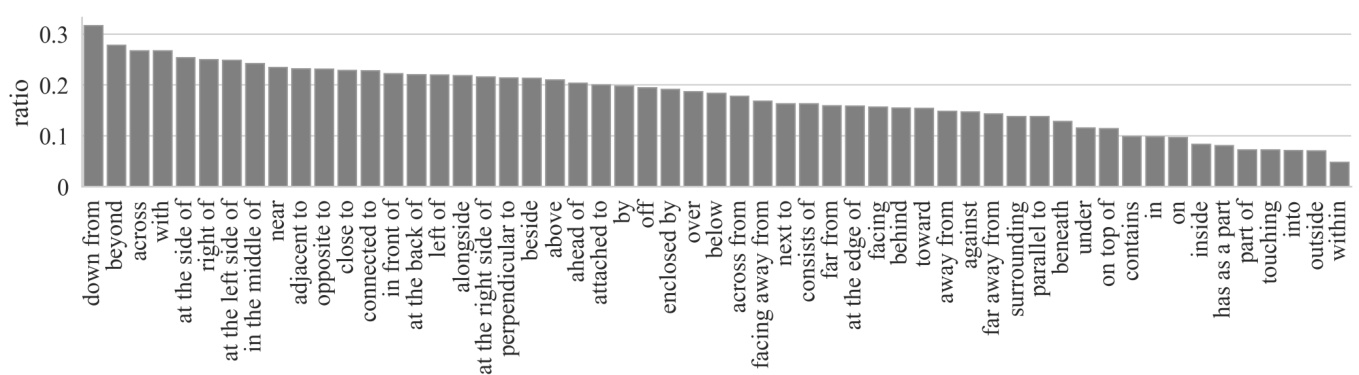

Figure 5: Relation distribution of the final dataset (sorted by frequency). Top 40 most frequent relations are included. It is clear that the relations follow a long-tailed distribution.

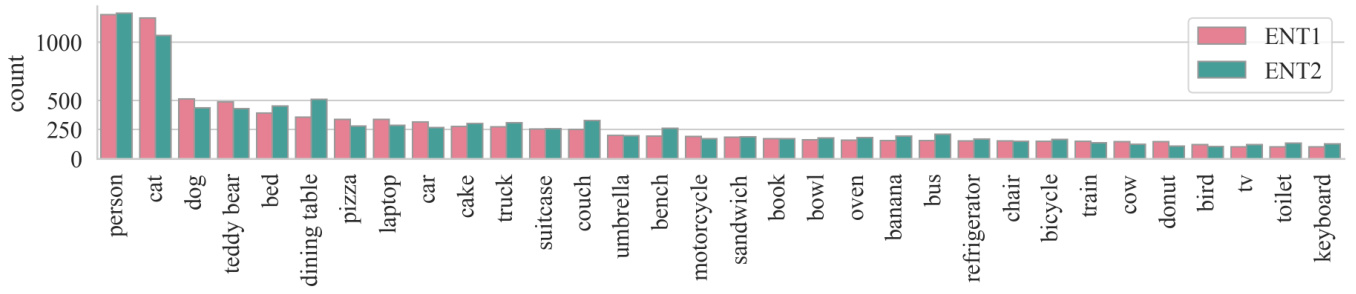

Figure 6: Concept distribution. Only concepts with $>100$ frequencies are included.

Table 2: Statistics of the random & zero-shot splits.

| split | train | dev | test | total |
|---|---|---|---|---|
| random | 7,680 | 1,097 | 2,195 | 10,972 |
| zero-shot | 4,713 | 231 | 616 | 5,560 |

3.5 Human Ceiling and Agreement

We randomly sample 500 data points from the final random split test set of the dataset for computing the human ceiling and inter-annotator agreement. We hide the labels of the 500 examples and two additional annotators are asked to label True/False for them. On average, the two annotators achieve an accuracy of $95.4\%$ on the VSR task. We further compute Fleiss’ kappa among the original annotation and the predictions of the two human annotators. The Fleiss’ kappa score is 0.895, indicating near-perfect agreement according to Landis and Koch (1977).
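
For reference, Fleiss’ kappa can be computed with the standard formula as sketched below. This is a generic implementation, not the authors' script; here each row of `ratings` would hold the three labels for one data point (the original annotation plus the two additional annotators).

```python
def fleiss_kappa(ratings):
    """Fleiss' kappa for a list of items, each rated by the same number of raters."""
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if any(len(row) != n_raters for row in ratings):
        raise ValueError("every item needs the same number of ratings")

    categories = sorted({label for row in ratings for label in row})
    # counts[i][j]: number of raters assigning category j to item i
    counts = [[row.count(c) for c in categories] for row in ratings]

    # Observed agreement, averaged over items.
    p_items = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_items) / n_items

    # Chance agreement from the overall category proportions.
    p_cat = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(len(categories))
    ]
    p_e = sum(p * p for p in p_cat)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when only one category is used")
    return (p_bar - p_e) / (1 - p_e)
```

Values between 0.81 and 1.00 fall in the highest agreement band of Landis and Koch (1977), which is where the reported 0.895 sits.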

4 Dataset Analysis

In this section we compute some basic statistics of our collected data (§4.1), analyse where human annotators have agreed/disagreed (§4.2), and present a case study on reference frames (§4.3).

4.1 Basic Statistics of VSR

After the first phase of contrastive template-based caption generation (§3.1), we collected 12,809 raw data points. In the second-round validation phase (§3.2), we collected 39,507 validation labels. Every data point received at least 3 validation labels. In $69.1\%$ of the data points, all validators agree with the original label. $85.6\%$ of the data points have at least $\frac{2}{3}$ of the validators agreeing with the original label. We use $\frac{2}{3}$ as the threshold and exclude all instances with lower validation agreement. After this filtering, 10,972 data points remained and are used as our final dataset.
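
The agreement threshold can be expressed as a small filter. The sketch below is illustrative (the function name is hypothetical); it uses exact fractions so that a data point with exactly two of three validators agreeing is kept rather than lost to floating-point rounding.

```python
from fractions import Fraction


def passes_validation(original_label, validator_labels, threshold=Fraction(2, 3)):
    """Keep a data point if at least `threshold` of validators agree with its label."""
    if not validator_labels:
        raise ValueError("at least one validation label is required")
    agree = sum(1 for label in validator_labels if label == original_label)
    return Fraction(agree, len(validator_labels)) >= threshold
```

With three validators this reduces to requiring at least two agreeing labels, matching the exclusion rule described in §3.2.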

Here we provide basic statistics of the two components in the VSR captions: the concepts and the relations. Fig. 5 demonstrates the relation distribution. “touching” is most frequently used by annotators. The relations that reflect the most basic relative coordinates of objects are also very frequent, e.g., “behind”, “in front of”, “on”, “under”, “at the left/right side of”. Fig. 6 shows the distribution of concepts in the dataset. Note that the set of concepts is bounded by MS COCO and the distribution also largely follows MS COCO. Animals such as “cat”, “dog”, “person” are the most frequent. Indoor objects such as “dining table” and “bed” are also very dominant. In Fig. 6, we separate the concepts that appear at ENT1 and ENT2 positions of the sentence and their distributions are generally similar.

Figure 7: Per-relation probability of two randomly chosen annotators disagreeing with each other (sorted from high to low). Only relations with $>20$ data points are included in the figure.

4.2 Where do annotators disagree?

While we propose using data points with high validation agreement for model evaluation and development, the unfiltered dataset is a valuable resource for understanding cognitive and linguistic phenomena. We sampled 100 examples where annotators disagree, and found that around 30 of them are caused by annotation errors but the rest are genuinely ambiguous and can be interpreted in different ways. This shows a level of intrinsic ambiguity of the task and variation among people.

Along with the validated VSR dataset, we also release the full unfiltered dataset, with annotators’ and validators’ metadata, as a second version to facilitate linguistic studies. For example, researchers could investigate questions such as where disagreement is more likely to happen and how people from different regions or cultural backgrounds might perceive spatial relations differently.

To illustrate this, the probability of two randomly chosen annotators disagreeing with each other is given for each relation in Fig. 7. Some of the relations with high disagreement can be interpreted in the intrinsic reference frame, which requires identifying the orientations of objects, e.g., “at the side of” and “in front of”. Other relations have a high level of vagueness, e.g., for the notion of closeness: “near” and “close to”. By contrast, part-whole relations, such as “has as a part” and “part of”, and in/out relations, such as “within”, “into”, “outside”, and “inside”, have the least disagreement.
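
One way to compute such a per-relation disagreement probability is sketched below. The text does not spell out the exact estimator, so two assumptions are made here: for each data point we take the chance that two labels drawn without replacement differ, and we then average these values over the data points of a relation.

```python
from collections import defaultdict


def pair_disagreement(labels):
    """Probability that two labels drawn without replacement differ."""
    n = len(labels)
    if n < 2:
        raise ValueError("need at least two labels")
    n_true = sum(1 for label in labels if label)
    n_false = n - n_true
    # Disagreeing pairs divided by all unordered pairs.
    return (n_true * n_false) / (n * (n - 1) / 2)


def disagreement_by_relation(data_points):
    """Average pairwise disagreement per relation, sorted from high to low.

    `data_points` is an iterable of (relation, labels) tuples (hypothetical layout).
    """
    per_relation = defaultdict(list)
    for relation, labels in data_points:
        per_relation[relation].append(pair_disagreement(labels))
    scores = {rel: sum(vals) / len(vals) for rel, vals in per_relation.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

Pooling all label pairs of a relation instead of averaging per data point would be an equally plausible reading and gives slightly different numbers when data points have unequal numbers of labels.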

4.3 Case Study: Reference Frames

It is known that the relative reference frame is often preferred in English, at least in standard varieties. For example, Edmonds-Wathen (2012) compares Standard Australian English and Aboriginal English, as spoken by school children at a school on Croker Island, investigating the use of the relations “in front of” and “behind” when describing simple line drawings of a person and a tree. Speakers of Standard Australian English were found to prefer the relative frame, while speakers of Aboriginal English were found to prefer the intrinsic frame.

Our methodology allows us to investigate reference frame usage across a wide variety of spatial relations, using a wide selection of natural images. To understand the frequency of annotators using relative vs. intrinsic frame