EFFICIENT REMOTE SENSING WITH HARMONIZED TRANSFER LEARNING AND MODALITY ALIGNMENT

高效遥感:基于统一迁移学习与模态对齐的方法

ABSTRACT

摘要

With the rise of Visual and Language Pre-training (VLP), an increasing number of downstream tasks are adopting the paradigm of pre-training followed by fine-tuning. Although this paradigm has demonstrated potential in various multimodal downstream tasks, its implementation in the remote sensing domain encounters some obstacles. Specifically, the tendency for same-modality embeddings to cluster together impedes efficient transfer learning. To tackle this issue, we review the aim of multimodal transfer learning for downstream tasks from a unified perspective, and rethink the optimization process based on three distinct objectives. We propose “Harmonized Transfer Learning and Modality Alignment (HarMA)”, a method that simultaneously satisfies task constraints, modality alignment, and single-modality uniform alignment, while minimizing training overhead through parameter-efficient fine-tuning. Remarkably, without the need for external data for training, HarMA achieves state-of-the-art performance in two popular multimodal retrieval tasks in the field of remote sensing. Our experiments reveal that HarMA achieves competitive and even superior performance to fully fine-tuned models with only minimal adjustable parameters. Due to its simplicity, HarMA can be integrated into almost all existing multimodal pre-training models. We hope this method can facilitate the efficient application of large models to a wide range of downstream tasks while significantly reducing resource consumption.

随着视觉与语言预训练 (VLP) 的兴起,越来越多的下游任务开始采用预训练后微调的模式。尽管该模式在多模态下游任务中展现出潜力,但在遥感领域的应用仍面临一些障碍。具体而言,同模态嵌入倾向于聚集的特性会阻碍高效的迁移学习。为解决这一问题,我们从统一视角重新审视多模态迁移学习在下游任务中的目标,并基于三个不同目标重新思考优化过程。我们提出“协调迁移学习与模态对齐 (HarMA)”方法,该方法在满足任务约束、模态对齐和单模态均匀对齐的同时,通过高效参数微调最小化训练开销。值得注意的是,无需额外训练数据,HarMA 便在遥感领域两个主流多模态检索任务中实现了最先进的性能。实验表明,仅需极少量可调参数,HarMA 就能达到与全参数微调模型相当甚至更优的性能。由于其简洁性,HarMA 可集成到几乎所有现有多模态预训练模型中。我们希望该方法能促进大模型在广泛下游任务中的高效应用,同时显著降低资源消耗。

1 INTRODUCTION

1 引言

The advent of Visual and Language Pre-training (VLP) has spurred a surge in studies employing large-scale pre-training and subsequent fine-tuning for diverse multimodal tasks (Tan & Bansal, 2019; Li et al., 2020; 2021; 2022; Liu et al., 2023). When conducting transfer learning for downstream tasks in the multimodal domain, the common practice is to first perform large-scale pre-training and then fully fine-tune on a specific domain dataset (Hu & Singh, 2021; Akbari et al., 2021; Zhang et al., 2024b), which is also the case in the field of remote sensing image-text retrieval (Cheng et al., 2021; Pan et al., 2023b). However, this method has at least two notable limitations. Firstly, fully fine-tuning a large model is extremely expensive and not scalable (Zhang et al., 2024a). Secondly, the pre-trained model has already been trained on a large dataset for a long time, and fully fine-tuning on a small dataset may lead to reduced generalization ability or overfitting.

视觉与语言预训练 (Visual and Language Pre-training, VLP) 的出现推动了采用大规模预训练和后续微调来处理多样化多模态任务的研究热潮 (Tan & Bansal, 2019; Li et al., 2020; 2021; 2022; Liu et al., 2023)。在多模态领域进行下游任务迁移学习时,通常的做法是先进行大规模预训练,然后在特定领域数据集上完全微调 (Hu & Singh, 2021; Akbari et al., 2021; Zhang et al., 2024b),遥感图像-文本检索领域也是如此 (Cheng et al., 2021; Pan et al., 2023b)。然而,这种方法至少存在两个显著局限:首先,完全微调大模型成本极高且难以扩展 (Zhang et al., 2024a);其次,预训练模型已在大型数据集上经过长期训练,在小数据集上完全微调可能导致泛化能力下降或过拟合。

Recently, several works have attempted to use Parameter-Efficient Fine-Tuning (PEFT) to address this issue, aiming to freeze most of the model parameters and fine-tune only a few (Houlsby et al., 2019; Mao et al., 2021; Zhang et al., 2022). This strategy seeks to incorporate domain-specific knowledge into the model while preserving the bulk of its original learned information. For example, Houlsby et al. (2019) attempted to fine-tune the pre-trained model by simply introducing a single-modality MLP layer. In contrast, Yuan et al. (2023) designed a cross-modal interaction adapter, aiming to enhance the model’s ability to integrate multimodal knowledge. Although the above works have achieved promising results, they either concentrate on single-modality features or overlook potential semantic mismatches when modeling the visual-language joint space.

最近,多项研究尝试使用参数高效微调 (Parameter-Efficient Fine-Tuning, PEFT) 来解决这一问题,旨在冻结大部分模型参数,仅微调少量参数 (Houlsby et al., 2019; Mao et al., 2021; Zhang et al., 2022)。该策略试图在保留模型原有学习信息的同时,融入特定领域的知识。例如,Houlsby et al. (2019) 尝试通过简单引入单模态 MLP 层来微调预训练模型。相比之下,Yuan et al. (2023) 设计了一个跨模态交互适配器,旨在增强模型整合多模态知识的能力。尽管上述工作取得了不错的效果,但它们要么专注于单模态特征,要么在建模视觉-语言联合空间时忽略了潜在的语义不匹配问题。

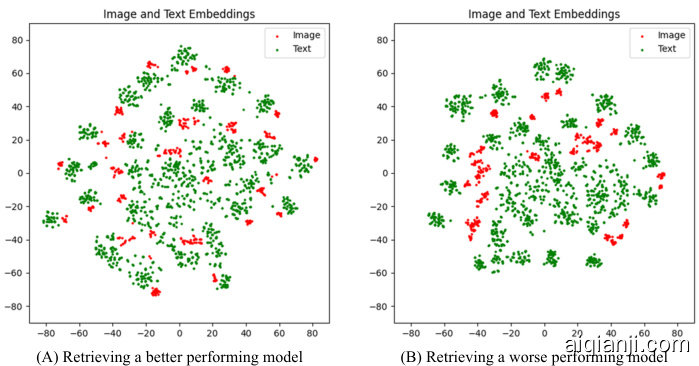

We have observed that poorly performing models sometimes exhibit a clustering phenomenon within the same modality embedding. Figure 1 visualizes the last-layer embeddings of two models with differing performance in the field of remote sensing image-text retrieval; the clustering phenomenon is noticeably more pronounced in the right image than in the left. We hypothesize that this may be attributed to the high intra-class and inter-class similarity of remote sensing images, leading to semantic confusion when modeling a low-rank visual-language joint space. This raises a critical question: “How can we model a highly aligned visual-language joint space while ensuring efficient transfer learning?”

我们观察到,性能较差的模型有时会在同一模态嵌入中表现出聚类现象。图 1 展示了两个在遥感图像-文本检索领域表现不同的模型最后一层嵌入的可视化结果;右图中的聚类现象明显比左图更为突出。我们推测,这可能归因于遥感图像的高类内和类间相似性,导致在建模低秩视觉-语言联合空间时出现语义混淆。这引发了一个关键问题:"如何在确保高效迁移学习的同时,建模高度对齐的视觉-语言联合空间?"

Figure 1: In remote sensing image-text retrieval, excessive clustering of the same modality sometimes leads to a decrease in performance. The experiment was conducted on the RSITMD dataset.

图 1: 在遥感图文检索任务中,同模态过度聚类有时会导致性能下降。该实验在RSITMD数据集上进行。

In the brains of congenitally blind individuals, parts of the visual cortex can take on the function of language processing (Bedny et al., 2011). Concurrently, in the typical human cortex, several small regions—such as the Angular Gyrus and the Visual Word Form Area (VWFA)—serve as hubs for integrated visual-language processing (Houk & Wise, 1995). These areas hierarchically manage both low-level and high-level stimuli information (Chen et al., 2019). Inspired by this natural phenomenon, we propose “Efficient Remote Sensing with Harmonized Transfer Learning and Modality Alignment (HarMA)”. Specifically, similar to the information processing methods of the human brain, we designed a hierarchical multimodal adapter with mini-adapters. This framework emulates the human brain’s strategy of utilizing shared mini-regions to process neural impulses originating from both visual and linguistic stimuli. It models the visual-language semantic space from low to high levels by hierarchically sharing multiple mini-adapters. Finally, we introduced a new objective function to alleviate the severe clustering of features within the same modality. Thanks to its simplicity, the method can be easily integrated into almost all existing multimodal frameworks.

在先天失明者的大脑中,视觉皮层的部分区域能够承担语言处理功能 (Bedny et al., 2011)。与此同时,典型人类大脑皮层中的多个小区域——如角回 (Angular Gyrus) 和视觉词形区 (Visual Word Form Area, VWFA)——充当着视觉-语言整合处理的枢纽 (Houk & Wise, 1995)。这些区域以层级化方式管理着从低阶到高阶的刺激信息 (Chen et al., 2019)。受此自然现象启发,我们提出了"高效遥感与协调迁移学习及模态对齐方法 (Harmonized Transfer Learning and Modality Alignment, HarMA)"。具体而言,我们仿照人脑信息处理方式,设计了一个包含微型适配器 (mini-adapter) 的层级化多模态适配框架。该框架模拟了人脑利用共享微型区域来处理源自视觉与语言刺激的神经脉冲策略,通过层级化共享多个微型适配器,实现了从低阶到高阶的视觉-语言语义空间建模。最后,我们引入新的目标函数来缓解同模态内部特征严重聚集的问题。得益于其简洁性,该方法可轻松集成到几乎所有现有多模态框架中。

2 METHOD

2 方法

2.1 OVERALL FRAMEWORK

2.1 整体框架

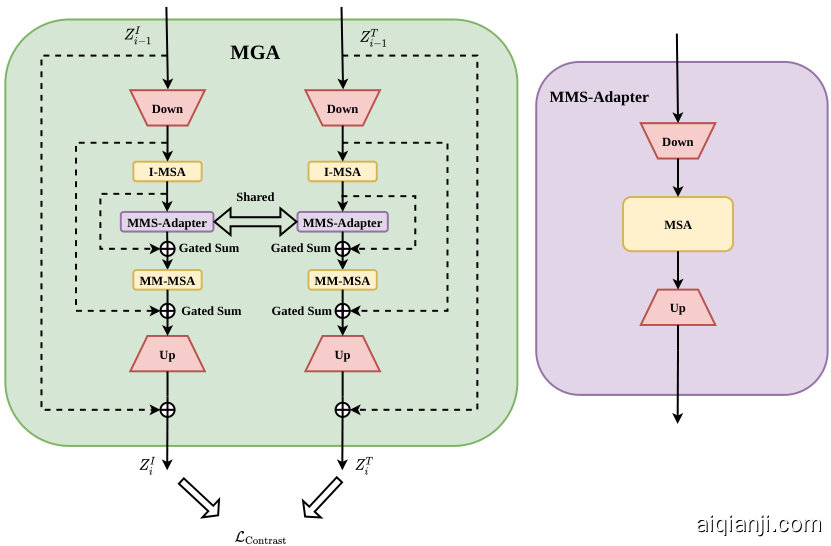

Figure 2: The overall framework of the proposed method.

图 2: 所提方法的整体框架。

Figure 2 illustrates our proposed HarMA framework. It begins by extracting representations with image and text encoders, similar to CLIP (Radford et al., 2021b). These features are then processed by our multimodal gated adapter to obtain refined feature representations. Unlike the simple linear-layer interaction used by Yuan et al. (2023), we employ a shared mini-adapter as the interaction layer within the entire adapter. After that, we optimize using a contrastive learning objective and our adaptive triplet loss.

图 2 展示了我们提出的 HarMA 框架。该框架首先使用图像和文本编码器提取表征,类似于 CLIP (Radford et al., 2021b)。这些特征随后通过我们的多模态门控适配器进行处理,以获得精细化的特征表示。与 Yuan et al. (2023) 中使用的简单线性层交互不同,我们在整个适配器中采用共享的微型适配器作为交互层。之后,我们使用对比学习目标和自适应三元组损失进行优化。

2.2 MULTIMODAL GATED ADAPTER

2.2 多模态门控适配器

Previous parameter-efficient fine-tuning methods in the multimodal domain (Jiang et al., 2022b; Yuan et al., 2023) use a simple shared-weight method for modal interaction, potentially causing semantic matching confusion in the inherent modal embedding space. To address this, we designed a cross-modal adapter with an adaptive gating mechanism (Figure 3).

多模态领域先前的参数高效微调方法 (Jiang et al., 2022b; Yuan et al., 2023) 采用简单的共享权重机制进行模态交互,可能导致固有模态嵌入空间中的语义匹配混乱。为此,我们设计了具有自适应门控机制的跨模态适配器 (图 3)。

Figure 3: The specific structure of the multimodal gated adapter. The overall structure is shown on the left, while the structure of the shared multimodal sub-adapter is displayed on the right.

图 3: 多模态门控适配器的具体结构。左侧展示了整体结构,右侧显示了共享多模态子适配器的结构。

In this module, the extracted features $z_{I}$ and $z_{T}$ are first projected into low-dimensional embeddings. After a non-linear activation, each feature $z_{I}$ ($z_{T}$) is further enhanced by I-MSA, which shares parameters with the subsequent MM-MSA. The features are then fed into our designed Multimodal Sub-Adapter (MMS-Adapter) for further interaction. The structure of this module is shown on the right side of Figure 3.

在此模块中,提取的特征$z_{I}$和$z_{T}$首先被投影到低维嵌入空间。通过非线性激活和随后的I-MSA处理,不同特征$z_{I}$ ($z_{T}$) 在特征表达上得到进一步增强。I-MSA与后续的MM-MSA共享参数。这些特征随后被输入到我们设计的Multimodal Sub-Adapter (MMS-Adapter)中进行进一步交互,该模块结构如图 3 右侧所示。

The MMS-Adapter, akin to standard adapters, aligns multimodal context representations via shared-weight self-attention. However, directly outputting these aligned representations after projection negatively impacts image-text retrieval performance, likely due to off-diagonal semantic key matches in the feature’s low-dimensional manifold space. This contradicts contrastive learning objectives.

MMS-Adapter与标准适配器类似,通过共享权重的自注意力机制对齐多模态上下文表征。然而,这些对齐表征的直接后投影输出会对图文检索性能产生负面影响,这可能是由于特征的低维流形空间中出现了非对角语义键匹配。这一现象与对比学习目标相矛盾。

To tackle this, the already aligned representations are further processed in the MSA with shared weights, thereby reducing model parameters and leveraging prior modality knowledge. In addition, to ensure a finer-grained semantic match between image and text, we introduce early image-text matching supervision on the output of the multimodal gated adapter (MGA), significantly mitigating the above issue.

为了解决这一问题,已对齐的表征会在共享权重的MSA(多头自注意力)中进一步处理,从而减少模型参数并利用先前的模态知识。此外,为确保图像与文本之间更细粒度的语义匹配,我们在MGA(多模态门控适配器)输出中引入了早期图文匹配监督机制,显著缓解了上述问题的发生。

Ultimately, features are projected back to their original dimensions before adding the skip connection. The final layer is initialized to zero to safeguard the performance of the pre-trained model during the initial stages of training. Algorithm 1 summarizes the proposed method.

最终,特征在添加跳跃连接前会被投影回原始维度。最后一层被初始化为零,以在训练初期保护预训练模型的性能。算法1总结了所提出的方法。
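The effect of the zero-initialized final layer can be illustrated with a minimal sketch. It assumes a plain bottleneck adapter (down-projection, ReLU, up-projection, skip connection) rather than the full gated adapter of Figure 3; the function names, the dimensions `d` and `r`, and the choice of ReLU are illustrative assumptions, not taken from the paper.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def relu(m):
    """Element-wise ReLU (an assumed activation; the paper only says 'non-linear')."""
    return [[max(0.0, v) for v in row] for row in m]

def residual_adapter(z, w_down, w_up):
    """z: (tokens, d), w_down: (d, r), w_up: (r, d).

    Projects down, applies the activation, projects back to the
    original dimension, then adds the skip connection.
    """
    hidden = relu(matmul(z, w_down))
    update = matmul(hidden, w_up)
    return [[a + b for a, b in zip(z_row, u_row)] for z_row, u_row in zip(z, update)]

random.seed(0)
d, r = 4, 2  # hypothetical feature and bottleneck sizes
z = [[random.uniform(-1, 1) for _ in range(d)] for _ in range(3)]
w_down = [[random.uniform(-1, 1) for _ in range(r)] for _ in range(d)]
w_up_zero = [[0.0] * d for _ in range(r)]  # final layer initialized to zero

# With a zero final layer the adapter is the identity map at the start of training.
out = residual_adapter(z, w_down, w_up_zero)
```

Because the update term is exactly zero at initialization, the frozen backbone's features pass through unchanged, and the adapter only departs from the pre-trained behavior as the final layer is trained.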

2.3 OBJECTIVE FUNCTION

2.3 目标函数

Here, $L_{\mathrm{task}}^{i}$ represents the task loss for the $i$ -th task, and $L_{\mathrm{align}}^{j k}$ denotes the alignment loss between different pairs of modalities $(j,k)$ . The expectation is taken over the data distribution $\mathcal{D}$ for each task. $\theta^{*}$ represents the target parameters for transfer learning.

这里,$L_{\mathrm{task}}^{i}$ 表示第 $i$ 个任务的任务损失,$L_{\mathrm{align}}^{j k}$ 表示不同模态对 $(j,k)$ 之间的对齐损失。期望是针对每个任务的数据分布 $\mathcal{D}$ 计算的。$\theta^{*}$ 表示迁移学习的目标参数。

In this equation, $L_{\mathrm{ini}}$ represents the initial optimization objective (Equation 1), which is composed of the task loss and alignment loss. $L_{\mathrm{uniform}}^{i}$ denotes the single-modality uniformity loss for the $i$-th modality, and $D(\theta,\theta^{*})$ is a cost measure between the original and updated model parameters, constrained to be less than $\delta$. $\delta$ is the minimum parameter update cost in the ideal state.

在该等式中,$L_{\mathrm{ini}}$ 表示初始优化目标 (公式 1),由任务损失和对齐损失组成。$L_{\mathrm{uniform}}^{i}$ 表示第 $i$ 个模态的单模态均匀性损失,$D(\theta,\theta^{*})$ 是原始模型参数与更新后模型参数之间的成本度量,约束条件为小于 $\delta$。$\delta$ 是理想状态下的最小参数更新成本。
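The two optimization problems described in the paragraphs above are not reproduced in this text. One form consistent with the stated definitions is sketched below; it is an assumption based only on those definitions, not the authors' exact equations, and the summation ranges are left unspecified because the source does not give them.

$$
\min_{\theta^{*}}\ L_{\mathrm{ini}}(\theta^{*})=\sum_{i}\mathbb{E}_{\mathcal{D}}\left[L_{\mathrm{task}}^{i}(\theta^{*})\right]+\sum_{j\neq k}L_{\mathrm{align}}^{jk}(\theta^{*})
$$

$$
\min_{\theta^{*}}\ L_{\mathrm{ini}}(\theta^{*})+\sum_{i}L_{\mathrm{uniform}}^{i}(\theta^{*})\quad\text{s.t.}\quad D(\theta,\theta^{*})<\delta
$$

Read this way, the first problem covers task performance and cross-modal alignment, while the second adds the single-modality uniformity term and bounds the cost of moving away from the pre-trained parameters $\theta$.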

We observe that existing works often only explore one or two objectives, with most focusing either on how to efficiently fine-tune parameters for downstream tasks (Jiang et al., 2022b; Jie & Deng, 2022; Yuan et al., 2023) or on modality alignment (Chen et al., 2020; Ma et al., 2023; Pan et al., 2023a). Few can simultaneously satisfy the three requirements outlined in the above formula. We have satisfied the need for efficient transfer learning by introducing adapters that mimic the human brain. This prompts us to ask: how can we fulfill the latter two objectives—high alignment of embeddings across different modalities while preventing excessive clustering of embeddings within the same modality?

我们注意到,现有研究往往只探索一两个目标,其中大多数要么关注如何高效微调参数以适应下游任务 (Jiang et al., 2022b; Jie & Deng, 2022; Yuan et al., 2023) ,要么聚焦模态对齐 (Chen et al., 2020; Ma et al., 2023; Pan et al., 2023a) 。很少有工作能同时满足上述公式中的三个要求。通过引入模拟人脑的适配器 (adapter) ,我们已满足高效迁移学习的需求。这促使我们思考:如何实现后两个目标——在确保跨模态嵌入高度对齐的同时,避免同一模态内嵌入的过度聚类?

2.3.1 ADAPTIVE TRIPLET LOSS

2.3.1 自适应三元组损失

In image-text retrieval tasks, the bidirectional triplet loss established by Faghri et al. (2017) has become a mainstream loss function. However, if we choose to accumulate the losses of all samples in image-text matching, the model may struggle to optimize due to the high intra-class similarity and inter-class similarity prevalent in most regions of the images within the field of remote sensing (Yuan et al., 2022a). Therefore, we propose an Adaptive Triplet Loss that automatically mines and optimizes hard samples:

在图文检索任务中,Faghri等人(2017) 提出的双向三元组损失已成为主流损失函数。然而,若选择累积图像-文本匹配中所有样本的损失,由于遥感领域图像大部分区域普遍存在较高的类内相似性和类间相似性 (Yuan等人, 2022a),模型可能难以优化。因此,我们提出一种自动挖掘并优化困难样本的自适应三元组损失:

$$
\mathcal{L}_{\mathrm{ada\text{-}triplet}}=\frac{1}{2}\left(\sum_{i=1}^{N}\sum_{j=1}^{N}w_{s,ij}\left[m+s_{ij}-s_{ii}\right]_{+}+\sum_{j=1}^{N}\sum_{i=1}^{N}w_{s,ji}\left[m+s_{ji}-s_{ii}\right]_{+}\right),
$$

where $s_{ij}$ is the dot product between image feature $i$ and text feature $j$, and $w_{s,ij}$ and $w_{s,ji}$ are the weights of the corresponding sample pairs, determined by the loss size of different samples:

其中 $s_{ij}$ 是图像特征 $i$ 与文本特征 $j$ 的点积,$w_{s,ij}$ 和 $w_{s,ji}$ 分别是对应样本对的权重,由不同样本的损失大小决定:

$$
w_{s,ij}=\left(1-\exp\left(-\left[m+s_{ij}-s_{ii}\right]_{+}\right)\right)^{\gamma},\quad w_{s,ji}=\left(1-\exp\left(-\left[m+s_{ji}-s_{ii}\right]_{+}\right)\right)^{\gamma},
$$

where $\gamma$ is a hyperparameter adjusting the size of the weights. This loss function aims to bring the features of positive samples closer together, while distancing those between positive and negative samples. By dynamically adjusting the focus between hard and easy samples, our approach effectively satisfies the other two objectives proposed above. It not only aligns different modality samples at a fine-grained level but also prevents over-aggregation among samples of the same modality, thereby enhancing the model’s matching capability. Also, following Radford et al. (2021b), we utilize a contrastive learning objective to align image and text semantic features. Consequently, the total objective is defined as:

其中 $\gamma$ 是一个调整权重大小的超参数。该损失函数旨在拉近正样本特征之间的距离,同时推远正负样本之间的特征。通过动态调整难样本与易样本的关注度,我们的方法有效满足了上述另外两个目标:不仅能在细粒度上对齐不同模态的样本,还能防止同模态样本的过度聚集,从而提升模型匹配能力。此外,我们沿用 (Radford et al., 2021b) 的方法,采用对比学习目标来对齐图像与文本语义特征。因此,总目标函数定义为:

$$
\mathcal{L}_{\mathrm{total}}=\lambda_{1}\mathcal{L}_{\mathrm{ada\text{-}triplet}}+\lambda_{2}\mathcal{L}_{\mathrm{contrastive}}.
$$

The $\lambda_{1}$ and $\lambda_{2}$ are parameters for balancing the loss. We offer detailed information on contrastive learning in Appendix A.4.

$\lambda_{1}$ 和 $\lambda_{2}$ 是用于平衡损失的参数。我们在附录 A.4 中提供了关于对比学习 (contrastive learning) 的详细信息。
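The adaptive triplet loss and the weighted total objective can be sketched in plain Python as follows. This is an illustrative reading of the formulas in this section, not the authors' implementation. The default values of `margin`, `gamma`, `lam1`, and `lam2` are hypothetical, and skipping the diagonal terms ($i=j$) is an assumption made here so that a matched pair is not treated as its own negative.

```python
import math

def hinge(x):
    """[x]_+ = max(0, x)."""
    return max(0.0, x)

def adaptive_triplet_loss(s, margin=0.2, gamma=2.0):
    """s[i][j]: similarity of image i and text j; s[i][i] are matched pairs.

    Each hinge term is scaled by w = (1 - exp(-hinge)) ** gamma, so terms
    with a larger violation (harder negatives) receive a larger weight.
    """
    n = len(s)
    total = 0.0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue  # assumption: diagonal (matched) terms are skipped
            # First sum uses s_ij, second sum uses s_ji, both against s_ii.
            for negative in (s[i][j], s[j][i]):
                h = hinge(margin + negative - s[i][i])
                w = (1.0 - math.exp(-h)) ** gamma
                total += w * h
    return 0.5 * total

def total_loss(l_ada_triplet, l_contrastive, lam1=1.0, lam2=1.0):
    """Weighted sum of the two objectives; the lambda values are placeholders."""
    return lam1 * l_ada_triplet + lam2 * l_contrastive

# Toy similarity matrix: text 1 scores higher than the match for image 0.
sims = [[0.5, 0.6],
        [0.1, 0.5]]
weighted = adaptive_triplet_loss(sims, margin=0.2, gamma=2.0)
unweighted = adaptive_triplet_loss(sims, margin=0.2, gamma=0.0)  # all weights equal 1
```

Setting `gamma=0` makes every weight equal to one, which recovers the unweighted bidirectional triplet loss; larger `gamma` values suppress small violations more strongly.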

3 EXPERIMENTS

3 实验

We evaluate our proposed HarMA framework on two widely used remote sensing (RS) image-text datasets: RSICD (Lu et al., 2017) and RSITMD (Yuan et al., 2022a). We use standard recall at TOP-K $(\mathrm{R}@\mathrm{K},\mathrm{K}=1,5,10)$ and mean recall (mR) to assess our model.

我们在两个广泛使用的遥感 (RS) 图文数据集上评估了提出的 HarMA 框架:RSICD (Lu et al., 2017) 和 RSITMD (Yuan et al., 2022a)。采用标准 TOP-K 召回率 $(\mathrm{R}@\mathrm{K},\mathrm{K}=1,5,10)$ 和平均召回率 (mR) 作为评估指标。
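As a sketch of how these metrics are typically computed, the snippet below derives R@K from the rank of the first correct item for each query and takes mR as the mean of the six R@K values over both retrieval directions. That definition of mR is an assumption here, although it agrees with the rows of Table 1; the function names and the rank-list input format are illustrative.

```python
def recall_at_k(ranks, k):
    """ranks: 1-based rank of the first correct item for each query.

    Returns the percentage of queries whose correct item is in the top k.
    """
    return 100.0 * sum(1 for r in ranks if r <= k) / len(ranks)

def mean_recall(i2t_ranks, t2i_ranks, ks=(1, 5, 10)):
    """Average of R@K over both directions and all K values."""
    scores = [recall_at_k(i2t_ranks, k) for k in ks]
    scores += [recall_at_k(t2i_ranks, k) for k in ks]
    return sum(scores) / len(scores)

# Toy example with four queries per direction.
example_ranks = [1, 3, 7, 20]
r1 = recall_at_k(example_ranks, 1)
r5 = recall_at_k(example_ranks, 5)
r10 = recall_at_k(example_ranks, 10)
mr = mean_recall(example_ranks, example_ranks)

# Cross-check against one Table 1 row (HarMA with GeoRSCLIP on the first test set).
table_row = [20.52, 41.37, 54.66, 15.84, 41.92, 59.39]
table_mr = sum(table_row) / len(table_row)
```

Averaging the six reported recalls for that row gives a value that rounds to the reported mR of 38.95, which supports reading mR as a simple mean.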

3.1 COMPARATIVE EXPERIMENTS

3.1 对比实验

In this section, we compare the proposed method with state-of-the-art retrieval techniques on two remote sensing multimodal retrieval benchmarks. The backbone networks employed in our experiments are the CLIP (ViT-B-32) (Radford et al., 2021a) and the GeoRSCLIP (Zhang et al., 2023).

在本节中,我们在两个遥感多模态检索基准上,将所提方法与最先进的检索技术进行了比较。实验中采用的骨干网络为CLIP (ViT-B-32) (Radford等人, 2021a) 和 GeoRSCLIP (Zhang等人, 2023)。

Table 1: Retrieval Performance Test. $\ddagger$ : RSICD Test Set; ⋇ : RSITMD Test Set; $\dagger$ : The parameter count of a single adapter module, and $\dagger^{\prime}$ represents the results referenced from Yuan et al. (2023). Red: Our method; Blue: Full fine-tuned CLIP & GeoRSCLIP.

表 1: 检索性能测试。$\ddagger$: RSICD测试集;⋇: RSITMD测试集;$\dagger$: 单个适配器模块参数量,$\dagger^{\prime}$表示引用自Yuan等人(2023)的结果。红色: 我们的方法;蓝色: 全微调CLIP & GeoRSCLIP。

| Method | Backbone (image/text) | Trainable params | I2T R@1 | I2T R@5 | I2T R@10 | T2I R@1 | T2I R@5 | T2I R@10 | mR |
|---|---|---|---|---|---|---|---|---|---|
| Traditional methods | | | | | | | | | |
| GaLR with MR (Yuan et al., 2022b) | ResNet18, biGRU | 46.89M | 6.59 | 19.85 | 31.04 | 4.69 | 19.48 | 32.13 | 18.96 |
| PIR (Pan et al., 2023a) | SwinTransformer, Bert | | 9.88 | 27.26 | 39.16 | 6.97 | 24.56 | 38.92 | 24.46 |
| CLIP-based methods | | | | | | | | | |
| Full-FT CLIP (Radford et al., 2021a) | CLIP(ViT-B-32) | 151M | 15.89 | 36.14 | 47.93 | 12.21 | 32.97 | 48.84 | 32.33 |
| Full-FT GeoRSCLIP (Zhang et al., 2023) | GeoRSCLIP(ViT-B-32-RET-2) | 151M | 18.85 | 38.15 | 53.16 | 14.27 | 39.71 | 57.49 | 36.94 |
| Full-FT GeoRSCLIP (w/ Extra Data) | GeoRSCLIP(ViT-B-32-RET-2) | 151M | 21.13 | 41.72 | 55.63 | 15.59 | 41.19 | 57.99 | 38.87 |
| Adapter (Houlsby et al., 2019) | CLIP(ViT-B-32) | 0.17M | 8.73 | 24.73 | 37.81 | 8.43 | 26.02 | 43.33 | 24.84 |
| CLIP-Adapter (Gao et al., 2021) | CLIP(ViT-B-32) | 0.52M | 7.11 | 19.48 | 31.01 | 7.67 | 24.87 | 39.73 | 21.65 |
| AdaptFormer (Chen et al., 2022) | CLIP(ViT-B-32) | 0.17M | 12.46 | 28.49 | 41.86 | 9.09 | 29.89 | 46.81 | 28.10 |
| Cross-Modal Adapter (Jiang et al., 2022a) | CLIP(ViT-B-32) | 0.16M | 11.18 | 27.31 | 40.62 | 9.57 | 30.74 | 48.36 | 27.96 |
| UniAdapter (Lu et al., 2023) | CLIP(ViT-B-32) | 0.55M | 12.65 | 30.81 | 42.74 | 9.61 | 30.06 | 47.16 | 28.84 |
| PE-RSITR (Yuan et al., 2023) | CLIP(ViT-B-32) | 0.16M | 14.13 | 31.51 | 44.78 | 11.63 | 33.92 | 50.73 | 31.12 |
| Ours (HarMA w/o Extra Data) | CLIP(ViT-B-32) | 0.50M | 16.36 | 34.48 | 47.74 | 12.92 | 37.17 | 53.07 | 33.62 |
| Ours (HarMA w/o Extra Data) | GeoRSCLIP(ViT-B-32-RET-2) | 0.50M | 20.52 | 41.37 | 54.66 | 15.84 | 41.92 | 59.39 | 38.95 |
| Full-FT CLIP (Radford et al., 2021a) | CLIP(ViT-B-32)* | 151M | 26.99 | 46.9 | 58.85 | 20.53 | 52.35 | 71.15 | 46.13 |
| Full-FT GeoRSCLIP (Zhang et al., 2023) | GeoRSCLIP(ViT-B-32-RET-2)* | 151M | 30.53 | 49.78 | 63.05 | 24.91 | 57.21 | 75.35 | 50.14 |
| Full-FT GeoRSCLIP (w/ Extra Data) | GeoRSCLIP(ViT-B-32-RET-2)* | 151M | 32.30 | 53.32 | 67.92 | 25.04 | 57.88 | 74.38 | 51.81 |
| CLIP-Adapter (Gao et al., 2021) | CLIP(ViT-B-32)* | 0.52M | 12.83 | 28.84 | 39.05 | 13.30 | 40.20 | 60.06 | 32.38 |
| AdaptFormer (Chen et al., 2022) | CLIP(ViT-B-32)* | 0.17M | 16.71 | 30.16 | 42.91 | 14.27 | 41.53 | 61.46 | 34.81 |
| Cross-Modal Adapter (Jiang et al., 2022a) | CLIP(ViT-B-32)* | 0.16M | 18.16 | 36.08 | 48.72 | 16.31 | 44.33 | 64.75 | 38.06 |
| UniAdapter (Lu et al., 2023) | CLIP(ViT-B-32)* | 0.55M | 19.86 | 36.32 | 51.28 | 17.54 | 44.89 | 56.46 | 39.23 |
| PE-RSITR (Yuan et al., 2023) | CLIP(ViT-B-32)* | 0.16M | 23.67 | 44.07 | 60.36 | 20.10 | 50.63 | 67.97 | 44.47 |
| Ours (HarMA w/o Extra Data) | CLIP(ViT-B-32)* | 0.50M | 25.81 | 48.37 | 60.61 | 19.92 | 53.27 | 71.21 | 46.53 |
| Ours (HarMA w/o Extra Data) | GeoRSCLIP(ViT-B-32-RET-2)* | 0.50M | 32.74 | 53.76 | 69.25 | 25.62 | 57.65 | 74.60 | 52.27 |

Table 1 presents the retrieval performance on RSICD and RSITMD. Firstly, as indicated in the first column, our method surpasses traditional state-of-the-art approaches while requiring significantly fewer tuned parameters. Secondly, when using CLIP (ViT-B-32) (Radford et al., 2021a) as the backbone, our approach achieves competitive and even superior performance compared to fully fine-tuned methods. Specifically, when compared with methods that have a similar number of tunable parameters, our method’s mean recall (mR) is approximately $50\%$ higher than that of CLIP-Adapter (Gao et al., 2021) and $12.7\%$ higher than that of UniAdapter (Lu et al., 2023) on RSICD, and $18.6\%$ higher than that of UniAdapter on RSITMD. Remarkably, by utilizing the pretrained weights of GeoRSCLIP, HarMA establishes a new benchmark in the remote sensing field for two popular multimodal retrieval tasks. It modifies less than $4\%$ of the total model parameters, outperforming all current parameter-efficient fine-tuning methods and even surpassing the image-text retrieval performance of fully fine-tuned GeoRSCLIP on RSICD and RSITMD.

表1展示了在RSICD和RSITMD上的检索性能。首先,如第一列所示,我们的方法在需要显著更少调参量的情况下超越了传统最先进方法。其次,当使用CLIP (ViT-B-32) (Radford et al., 2021a)作为主干网络时,我们的方法相比完全微调方法实现了具有竞争力甚至更优的性能。具体而言,在与可调参量数量相近的方法对比时,我们的方法在RSICD上的平均召回率(mR)比CLIP-Adapter (Gao et al., 2021)提升约$50\%$,比UniAdapter (Lu et al., 2023)提升$12.7\%$;在RSITMD上比UniAdapter提升$18.6\%$。值得注意的是,通过利用GeoRSCLIP的预训练权重,HarMA为遥感领域的两个热门多模态检索任务设立了新基准,仅修改不到$4\%$的总模型参量,就超越了当前所有参数高效微调方法,甚至在RSICD和RSITMD上超越了完全微调GeoRSCLIP的图文检索性能。

4 CONCLUSION

4 结论

In this paper, we have revisited the learning objectives of multimodal downstream tasks from a unified perspective and proposed HarMA, an efficient framework that addresses the suboptimal multimodal alignment in remote sensing. HarMA uniquely enhances uniform alignment while preserving pretrained knowledge. Through the use of lightweight adapters and adaptive losses, HarMA achieves state-of-the-art retrieval performance with minimal parameter updates, surpassing even full fine-tuning. Despite all the benefits, one potential limitation is that designing pairwise objective functions may not provide more robust distribution constraints. Our future work will focus on extending this approach to more multimodal tasks.

本文从统一视角重新审视了多模态下游任务的学习目标,提出HarMA框架——一种解决遥感领域多模态次优对齐问题的高效方案。该框架在保持预训练知识的同时,通过独特设计实现了均匀对齐增强。借助轻量级适配器(adapter)和自适应损失函数,HarMA仅需极少的参数更新即达到最先进的检索性能,甚至超越全参数微调。尽管优势显著,该方法可能存在一个潜在局限:成对目标函数设计可能无法提供更强健的分布约束。我们未来工作将重点扩展该方法至更多多模态任务场景。

Zilun Zhang, Tiancheng Zhao, Yulong Guo, and Jianwei Yin. RS5M: A large scale vision-language dataset for remote sensing vision-language foundation model. arXiv preprint arXiv:2306.11300, 2023.

张子伦, 赵天成, 郭玉龙, 尹建伟. RS5M: 面向遥感视觉-语言基础模型的大规模视觉-语言数据集. arXiv预印本 arXiv:2306.11300, 2023.

APPENDIX

附录

A PRELIMINARIES

初步准备

A.1 VISION TRANSFORMER (VIT)

A.1 视觉Transformer (ViT)

In the pipeline of ViT (Dosovitskiy et al., 2020), given an input image $I\in\mathbb{R}^{C\times H\times W}$ segmented into $N\times N$ patches, we define the input matrix $\mathbf{X}\in\mathbb{R}^{(N^{2}+1)\times D}$ consisting of patch embeddings and a class token. The self-attention mechanism in each Transformer layer transforms $\mathbf{X}$ into keys $K$, values $V$, and queries $Q$, each in $\mathbb{R}^{(N^{2}+1)\times D}$, and computes the self-attention (Vaswani et al., 2017) output as:

在ViT (Dosovitskiy等人,2020)的流程中,给定输入图像$I\in\mathbb{R}^{C\times H\times W}$,将其分割为$N\times N$个图像块后,定义输入矩阵$\mathbf{X}\in\mathbb{R}^{(N^{2}+1)\times D}$,该矩阵由图像块嵌入和一个类别Token组成。每个Transformer层中的自注意力机制将$\mathbf{X}$转换为键$K$、值$V$和查询$Q$ (均为$\mathbb{R}^{(N^{2}+1)\times D}$),并计算自注意力 (Vaswani等人,2017) 输出如下:

$$
\mathrm{Attention}(Q,K,V)=\mathrm{Softmax}\left(\frac{QK^{T}}{\sqrt{D}}\right)V.
$$

The subsequent processing involves a two-layer MLP, which refines the output across the embedded dimensions.

后续处理涉及一个双层MLP(多层感知机),用于在嵌入维度上优化输出。
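The attention formula above can be written out as a minimal single-head sketch in plain Python. It assumes $Q$, $K$, and $V$ are already given as lists of rows and omits the learned projections, multiple heads, and the MLP; the helper names are illustrative.

```python
import math

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention: Softmax(Q K^T / sqrt(D)) V.

    q, k: (tokens, D); v: (tokens, D_v). Matrices are lists of rows.
    """
    d = len(q[0])
    scores = [[sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d) for k_row in k]
              for q_row in q]
    weights = [softmax(row) for row in scores]
    return [[sum(w * v_row[c] for w, v_row in zip(w_row, v)) for c in range(len(v[0]))]
            for w_row in weights]

# With all-zero queries and keys every score is equal, so the output
# is the plain average of the value rows.
uniform_out = attention([[0.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]])
```

Dividing by $\sqrt{D}$ keeps the dot products in a range where the softmax does not saturate as the embedding dimension grows.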

A.2 ADAPTER

A.2 适配器

Adapters (Houlsby et al., 2019) are modular additions to pre-trained models, designed to allow adaptation to new tasks by modifying only a small portion of the model’s parameters. An adapter consists of a sequence of linear transformations and a non-linear activation:

适配器 (Houlsby et al., 2019) 是对预训练模型的模块化补充,旨在通过仅修改模型的一小部分参数来实现对新任务的适应。一个适配器由一系列线性变换和一个非线性激活组成:

$$
\mathrm{Adapter}(\mathbf{Z})=\left[\mathbf{W}^{\mathrm{up}}\sigma\left(\mathbf{W}^{\mathrm{down}}\mathbf{Z}^{T}\right)\right]^{T},
$$

where $\mathbf{W}^{\mathrm{down}}$ and $\mathbf{W}^{\mathrm{up}}$ are the weights for down-projection and up-projection, while $\sigma$ denotes a non-linear activation function that introduces non-linearity into the model.

其中 $\mathbf{W}^{\mathrm{down}}$ 和 $\mathbf{W}^{\mathrm{up}}$ 分别是下投影和上投影的权重,而 $\sigma$ 表示引入模型非线性的非线性激活函数。

Integrating adapters into the ViT architecture allows for efficient fine-tuning on specific tasks while keeping the majority of the Transformer’s weights unchanged. This approach not only retains the pre-trained visual understanding but also reduces the risk of catastrophic forgetting.

将适配器集成到ViT架构中,可以在保持Transformer大部分权重不变的同时,高效地对特定任务进行微调。这种方法不仅保留了预训练获得的视觉理解能力,还降低了灾难性遗忘的风险。

A.3 TRIPLET LOSS

A.3 三元组损失 (Triplet Loss)

In the domain of image-text retrieval tasks, the bidirectional triplet loss introduced by Faghri et al. (2017) has been widely adopted as a standard loss function. The formulation of the triplet loss is given by:

在图文检索任务领域,Faghri等人 (2017) 提出的双向三元组损失 (triplet loss) 已被广泛采用为标准损失函数。其数学表达式为:

$$
\mathcal{L}_{\mathrm{triplet}}=\frac{1}{2}\left(\sum_{i=1}^{N}\sum_{j=1}^{N}\left[m+s_{ij}-s_{ii}\right]_{+}+\sum_{j=1}^{N}\sum_{i=1}^{N}\left[m+s_{ji}-s_{ii}\right]_{+}\right).
$$

Here $m$ denotes the margin, a hyperparameter that defines the minimum distance between the non-matching pairs. The function $s_{ij}$ represents the similarity score between the $i$-th image and the $j$-th text, and $s_{ii}$ is the similarity score between matching image-text pairs. The objective of the triplet loss is to ensure that the distance between non-matching pairs is greater than the distance between matching pairs by at least the margin $m$.

$m$ 表示间隔 (margin),这是一个定义不匹配对之间最小距离的超参数。函数 $s_{i j}$ 表示第 $i$ 张图像与第 $j$ 段文本之间的相似度分数,而 $s_{i i}$ 是匹配的图像-文本对之间的相似度分数。三元组损失 (triplet loss) 的目标是确保不匹配对之间的距