SA-DVAE: Improving Zero-Shot Skeleton-Based Action Recognition by Disentangled Variational Autoencoders

Abstract. Existing zero-shot skeleton-based action recognition methods utilize projection networks to learn a shared latent space of skeleton features and semantic embeddings. The inherent imbalance in action recognition datasets, characterized by variable skeleton sequences yet constant class labels, presents significant challenges for alignment. To address this imbalance, we propose SA-DVAE (Semantic Alignment via Disentangled Variational Autoencoders), a method that first adopts feature disentanglement to separate skeleton features into two independent parts, one semantic-related and the other semantic-irrelevant, to better align skeleton and semantic features. We implement this idea via a pair of modality-specific variational autoencoders coupled with a total correlation penalty. We conduct experiments on three benchmark datasets, NTU RGB+D, NTU RGB+D 120 and PKU-MMD, and our experimental results show that SA-DVAE improves performance over existing methods. The code is available at https://github.com/pha123661/SA-DVAE.

Keywords: Skeleton-based Action Recognition · Zero-Shot and Generalized Zero-Shot Learning · Feature Disentanglement

1 Introduction

Action recognition is a long-standing, active research area because it is challenging and has a wide range of applications such as surveillance, monitoring, and human-computer interfaces. Based on input data types, studies on human action recognition fall into several lines: image-based, video-based, depth-based, and skeleton-based. In this paper, we focus on skeleton-based action recognition, which is enabled by advances in pose estimation [24,27] and sensor [14,28] technologies and has emerged as a viable alternative to video-based action recognition due to its resilience to variations in appearance and background. Some existing skeleton-based action recognition methods already achieve remarkable performance on large-scale action recognition datasets [5, 17, 23] through supervised learning, but labeling data is expensive and time-consuming. For cases where training data are difficult to obtain or restricted by privacy issues, zero-shot learning (ZSL) offers an alternative by recognizing unseen actions through supporting information such as the names, attributes, or descriptions of the unseen classes. Zero-shot learning therefore involves multiple types of input data and aims to learn an effective way of relating their representations. For skeleton-based zero-shot action recognition, several methods have been proposed to align skeleton features and text features in the same space.

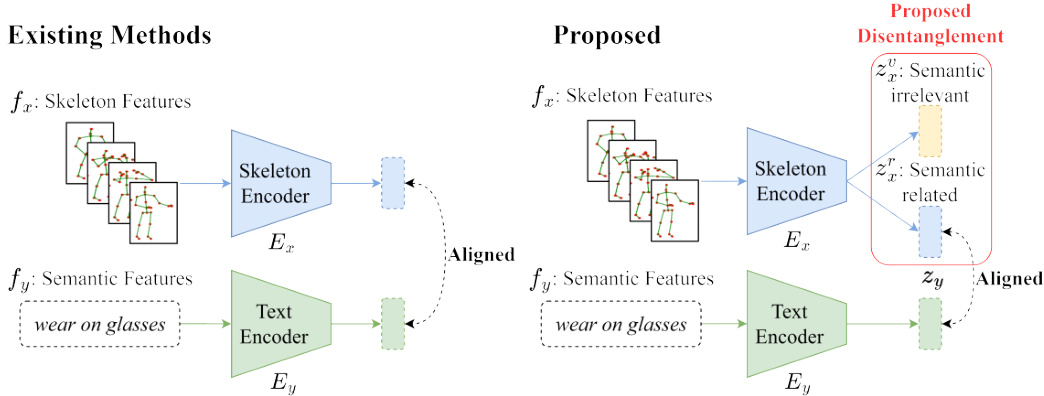

Fig. 1: Comparison with existing methods. Our method is the first to apply feature disentanglement to skeleton-based zero-shot action recognition. All existing methods directly align skeleton features with textual ones, whereas ours aligns only the semantic-related part of the skeleton features with the textual ones.

However, to the best of our knowledge, all existing methods assume that the skeleton sequences of each class are well captured and highly consistent, so their ideas mainly focus on how to semantically optimize text representations. After carefully examining the source videos in two widely used benchmark datasets, NTU RGB+D and PKU-MMD, we found this assumption questionable. We observe that for some labels, camera positions and differences in how actors perform the action introduce significant noise. To address this, and inspired by an existing ZSL method [3] which shows that semantic-irrelevant features can be separated from semantic-related ones, we propose SA-DVAE for skeleton-based action recognition. SA-DVAE tackles the generalization problem by disentangling the skeleton latent feature space into two components, a semantic-related term and a semantic-irrelevant term, as shown in Fig. 1. This enables the model to learn more robust and generalizable visual embeddings by focusing solely on the semantic-related term for action recognition. In addition, SA-DVAE implements a learned total correlation penalty that encourages independence between the two factorized latent features and minimizes the information shared by the two representations. This penalty is realized by an adversarial discriminator that aims to estimate the lower bound of the total correlation between the factorized latent features.

The contributions of our paper are as follows:

2 Related Work

The proposed SA-DVAE method covers two research fields, zero-shot learning and action recognition, and uses feature disentanglement to deal with noise in skeleton data. Here we discuss the most closely related work in the literature.

Skeleton-Based Zero-Shot Action Recognition. ZSL aims to train a model under the condition that some classes are unseen during training. The more challenging generalized zero-shot learning (GZSL) setting expands the task to classifying both seen and unseen classes during testing [19]. ZSL relies on semantic information to bridge the gap between seen and unseen classes.

Existing methods address the skeleton-text zero-shot action recognition problem by constructing a shared space for both modalities. ReViSE [13] learns autoencoders for each modality and aligns them by minimizing the maximum mean discrepancy loss between the latent spaces. Building on the concept of feature generation, CADA-VAE [22] employs variational autoencoders (VAEs) for each modality, aligning the latent spaces through cross-modal reconstruction and minimizing the Wasserstein distance between the inference models. These methods then learn classifiers on the shared space to conduct classification.

SynSE [8] and JPoSE [25] are two methods that leverage part-of-speech (PoS) information to improve the alignment between text descriptions and their corresponding visual representations. SynSE extends CADA-VAE by decomposing text descriptions by PoS tags, creating individual VAEs for each PoS label, and aligning them in the skeleton space. Similarly, JPoSE [25] learns multiple shared latent spaces for each PoS label using projection networks. JPoSE employs uni-modal triplet loss to maintain the neighborhood structure of each modality within the shared space and cross-modal triplet loss to align the two modalities.

On the other hand, SMIE [29] focuses on maximizing mutual information between skeleton and text feature spaces, utilizing a Jensen-Shannon Divergence estimator trained with contrastive learning. It also considers temporal information in action sequences by promoting an increase in mutual information as more frames are observed.

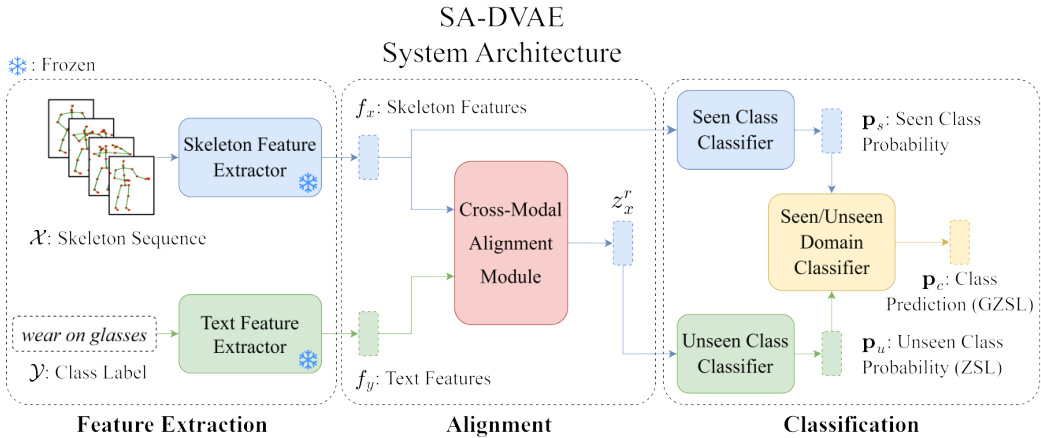

Fig. 2: System architecture of SA-DVAE. First, the feature extractors extract skeleton and text features. Next, the cross-modal alignment module aligns the two modalities and generates semantic-related unseen skeleton features ($z_{x}^{r}$). These generated features are used to train the classifiers.

While JPoSE and SynSE demonstrate the benefits of incorporating PoS information, they rely heavily on it and require additional PoS tagging effort. Furthermore, the two methods neglect the inherent asymmetry between modalities: they align both the semantic-related and the semantic-irrelevant parts of the skeleton features with the semantic features, missing the chance to further improve recognition accuracy. In contrast, our approach uses simple class labels without the need for PoS tags, and uses only semantic-related skeleton information to align with text data.

Feature Disentanglement in Generalized Zero-Shot Learning. Feature disentanglement refers to the process of separating the underlying factors of variation in data [2]. Because zero-shot learning methods are sensitive to the quality of both visual and semantic features, feature disentanglement serves as an effective approach to refining either visual or semantic features and to addressing the domain shift problem [19], thereby producing more robust and generalizable representations.

SDGZSL [3] decomposes visual embeddings into semantic-consistent and semantic-unrelated components using shared class-level attributes, and learns an additional relation network to maximize compatibility between semantic-consistent representations and their corresponding semantic embeddings. This approach is motivated by the transfer of knowledge from intermediate semantics (e.g., class attributes) to unseen classes. In contrast, SA-DVAE addresses the inherent asymmetry between the text and skeleton modalities, enabling the direct use of text descriptions instead of relying on predefined class attributes.

3 Methodology

Fig. 2 shows the overall architecture of our method, which consists of three main components: a) two modality-specific feature extractors, b) a cross-modal alignment module, and c) three classifiers, for seen actions, unseen actions, and their domains. The cross-modal alignment module learns a shared latent space via cross-modal reconstruction, where feature disentanglement is applied to prioritize the alignment of semantic-related information ($z_{x}^{r}$ and $z_{y}$). To improve the effectiveness of the disentanglement, we use a discriminator as an adversarial total correlation penalty between the disentangled features.

Problem Definition. Let $\mathcal{D}$ be a skeleton-based action dataset consisting of a set of skeleton sequences $\mathcal{X}$ and a label set $\mathcal{V}$, in which each label is a piece of text description. $\mathcal{X}$ is split into a seen subset $\mathcal{X}_{s}$ and an unseen subset $\mathcal{X}_{u}$, where only $\mathcal{X}_{s}$ and $\mathcal{V}$ may be used to train a model that classifies $x\in\mathcal{X}_{u}$. By definition, there are two evaluation protocols. GZSL asks to predict the class of $x$ among all classes $\mathcal{V}$, whereas ZSL predicts it only among $\mathcal{V}_{u}=\{y_{i}:x_{i}\in\mathcal{X}_{u}\}$.
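
The two protocols differ only in the candidate label set. The following Python sketch is a minimal illustration on hypothetical toy data (the function name `split_label_sets` and the action labels are ours, not from the paper): it partitions samples by a held-out label list and returns the ZSL and GZSL candidate sets as defined above. Note that the text of every label, including unseen ones, remains usable for training; only the unseen skeleton sequences are withheld.

```python
def split_label_sets(samples, unseen_labels):
    """Toy partition of (sequence_id, label) pairs into X_s / X_u and the two candidate label sets."""
    held_out = set(unseen_labels)
    x_s = [(x, y) for x, y in samples if y not in held_out]  # seen sequences: usable for training
    x_u = [(x, y) for x, y in samples if y in held_out]      # unseen sequences: evaluation only
    v_s = {y for _, y in x_s}                                # seen labels
    v_u = {y for _, y in x_u}                                # V_u = {y_i : x_i in X_u}
    zsl_candidates = v_u                                     # ZSL: choose among unseen classes only
    gzsl_candidates = v_s | v_u                              # GZSL: choose among all classes
    return x_s, x_u, zsl_candidates, gzsl_candidates


samples = [("s1", "drink water"), ("s2", "sit down"), ("s3", "wave"), ("s4", "drink water")]
x_s, x_u, zsl, gzsl = split_label_sets(samples, ["wave"])
print(zsl)           # {'wave'}
print(sorted(gzsl))  # ['drink water', 'sit down', 'wave']
```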

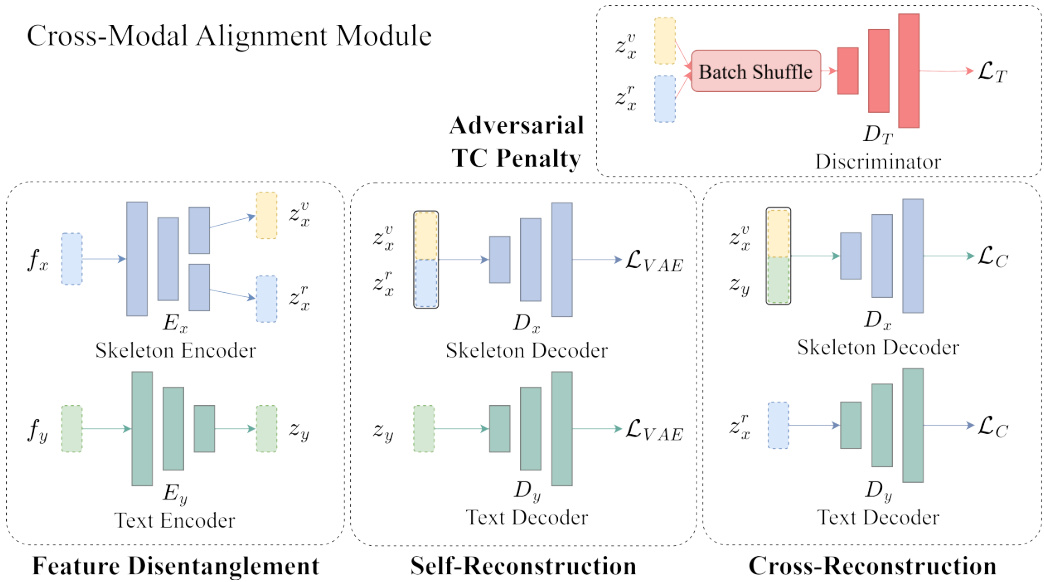

Cross-Modal Alignment Module. We train a skeleton representation model (Shift-GCN [4] or ST-GCN [26], depending on experimental settings) on the seen classes using the standard cross-entropy loss. This model extracts our skeleton features, denoted as $f_{x}$. We use a pre-trained language model (Sentence-BERT [21] or CLIP [20]) to extract the text features of our labels, denoted as $f_{y}$. Because $f_{x}$ and $f_{y}$ belong to two unrelated modalities, we train two modality-specific VAEs to adapt $f_{x}$ and $f_{y}$ to our recognition task, and illustrate their data flow in Fig. 3. Our encoders $E_{x}$ and $E_{y}$ transform $f_{x}$ and $f_{y}$ into representations $z_{x}$ and $z_{y}$ in a shared latent space via the reparameterization trick [15]. To optimize the VAEs, we introduce a loss in the form of the evidence lower bound (ELBO)

$$
\mathcal{L}=\mathbb{E}_{q_{\phi}(z|f)}\left[\log p_{\theta}(f|z)\right]-\beta\,D_{KL}\left(q_{\phi}(z|f)\,\|\,p_{\theta}(z)\right),
$$

where $\beta$ is a hyperparameter, $f$ and $z$ are the observed data and latent variables, the first term measures reconstruction quality, and the second term is the Kullback-Leibler divergence between the approximate posterior $q_{\phi}(z|f)$ and the prior $p_{\theta}(z)$. The hyperparameter $\beta$ balances the quality of reconstruction against the alignment of the latent variables with the prior distribution [9]. We use a multivariate Gaussian as the prior distribution.
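
To make the two ELBO terms concrete, the sketch below assumes (for illustration only) a diagonal Gaussian posterior parameterized by a mean and a log-variance, the standard normal $N(0, I)$ as the prior, and a unit-variance Gaussian decoder, so that $-\log p_{\theta}(f|z)$ reduces to half the squared error up to a constant. It returns the negative of $\mathcal{L}$ without that constant, i.e. the quantity one would minimize; the function names are hypothetical.

```python
import math
import random


def reparameterize(mu, logvar, rng=random):
    # z = mu + sigma * eps with eps ~ N(0, I); the randomness lives in eps, so in an
    # autodiff framework z stays a differentiable function of mu and logvar
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    # closed form of KL( N(mu, diag(exp(logvar))) || N(0, I) )
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, logvar))


def negative_elbo(f, f_rec, mu, logvar, beta):
    # -log N(f; f_rec, I) = 0.5 * ||f - f_rec||^2 + const; the constant is dropped
    recon = 0.5 * sum((a - b) ** 2 for a, b in zip(f, f_rec))
    return recon + beta * kl_to_standard_normal(mu, logvar)


rng = random.Random(0)
mu, logvar = [0.3, -0.1], [-1.0, -0.5]
z = reparameterize(mu, logvar, rng)
print(negative_elbo([1.0, 2.0], [0.9, 2.2], mu, logvar, beta=0.5))
```

Raising `beta` increases the weight of the KL term, pulling the posterior toward the prior at the expense of reconstruction, which is the trade-off described above.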

Feature Disentanglement. We observe that although two skeleton sequences may belong to the same class (i.e., share the same text description), their movements can vary substantially due to stylistic factors such as the actors' body shapes and movement ranges, and the cameras' positions and view angles. To the best of our knowledge, existing methods have not addressed this issue. For example, Zhou et al. [29] and Gupta et al. [8] neglect it and force $f_{x}$ and $f_{y}$ to be aligned. We therefore tackle the inherent asymmetry between the two modalities to improve recognition performance.

We design our skeleton encoder $E_{x}$ as a two-head network, of which one head generates a semantic-related latent vector $z_{x}^{r}$ and the other generates a semantic-irrelevant vector $z_{x}^{v}$. We assume that $z_{x}^{r}$ and $z_{x}^{v}$ follow their own multivariate normal distributions $N(\mu_{x}^{r},\Sigma_{x}^{r})$ and $N(\mu_{x}^{v},\Sigma_{x}^{v})$, and our text encoder $E_{y}$ generates a latent feature $z_{y}$, which also follows a multivariate normal distribution $N(\mu_{y},\Sigma_{y})$.
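
A two-head encoder can be sketched as one input feeding two independent Gaussian heads. The toy version below is an illustration under our own assumptions, not the authors' architecture: each head is a single randomly initialized linear layer (layer sizes are not specified here), and each head outputs a mean and a log-variance, i.e. a diagonal $\Sigma$, from which $z_{x}^{r}$ and $z_{x}^{v}$ are sampled with the reparameterization trick. The class and method names are hypothetical.

```python
import math
import random


def linear(weights, bias, x):
    # y = W x + b, with W stored as a list of rows
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


class TwoHeadEncoder:
    """Toy stand-in for E_x: one Gaussian head for z_x^r, another for z_x^v."""

    def __init__(self, in_dim, r_dim, v_dim, seed=0):
        self.rng = random.Random(seed)
        # each attribute is a (weights, bias) pair with hypothetical random initialization
        self.mu_r, self.logvar_r = self._layer(in_dim, r_dim), self._layer(in_dim, r_dim)
        self.mu_v, self.logvar_v = self._layer(in_dim, v_dim), self._layer(in_dim, v_dim)

    def _layer(self, in_dim, out_dim):
        weights = [[self.rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
        return weights, [0.0] * out_dim

    def _sample(self, mu, logvar):
        # reparameterization trick: z = mu + exp(0.5 * logvar) * eps
        return [m + math.exp(0.5 * lv) * self.rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

    def __call__(self, f_x):
        stats_r = (linear(*self.mu_r, f_x), linear(*self.logvar_r, f_x))
        stats_v = (linear(*self.mu_v, f_x), linear(*self.logvar_v, f_x))
        z_r = self._sample(*stats_r)  # semantic-related latent
        z_v = self._sample(*stats_v)  # semantic-irrelevant latent
        return z_r, z_v, stats_r, stats_v


encoder = TwoHeadEncoder(in_dim=4, r_dim=3, v_dim=2)
z_r, z_v, stats_r, stats_v = encoder([1.0, 0.5, -0.2, 0.3])
```

Because each head returns its own statistics, a separate KL term against the prior can be computed per head, so the two latents are regularized independently.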

Fig. 3: Cross-Modal Alignment Module. This module serves two primary tasks: latent space construction through self-reconstruction and cross-modal alignment via cross-reconstruction. The skeleton features are disentangled into semantic-related ($z_{x}^{r}$) and semantic-irrelevant ($z_{x}^{v}$) factors.

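Following the ELBO form above, we define the losses of the skeleton VAE and the text VAE as

$$
\mathcal{L}_{x}=\mathbb{E}_{q_{\phi}(z_{x}|f_{x})}\left[\log p_{\theta}(f_{x}|z_{x})\right]-\beta_{x}D_{KL}\left(q_{\phi}(z_{x}^{r}|f_{x})\,\|\,p_{\theta}(z_{x}^{r})\right)-\beta_{x}D_{KL}\left(q_{\phi}(z_{x}^{v}|f_{x})\,\|\,p_{\theta}(z_{x}^{v})\right),
$$

$$
\mathcal{L}_{y}=\mathbb{E}_{q_{\phi}(z_{y}|f_{y})}\left[\log p_{\theta}(f_{y}|z_{y})\right]-\beta_{y}D_{KL}\left(q_{\phi}(z_{y}|f_{y})\,\|\,p_{\theta}(z_{y})\right),
$$

where $z_{x}=z_{x}^{v}\oplus z_{x}^{r}$, $\beta_{x}$ and $\beta_{y}$ are hyperparameters, $p_{\theta}(z_{x}^{r})$, $p_{\theta}(z_{x}^{v})$, $p_{\theta}(f_{x}|z_{x})$, $p_{\theta}(z_{y})$, and $p_{\theta}(f_{y}|z_{y})$ are the probabilities under their presumed distributions, $q_{\phi}(z_{x}|f_{x})$, $q_{\phi}(z_{x}^{r}|f_{x})$, and $q_{\phi}(z_{x}^{v}|f_{x})$ are the probabilities computed through our skeleton encoder $E_{x}$, and $q_{\phi}(z_{y}|f_{y})$ is the one computed through our text encoder $E_{y}$. We set the overall VAE loss as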

$$
\mathcal{L}_{VAE}=\mathcal{L}_{x}+\mathcal{L}_{y}.
$$

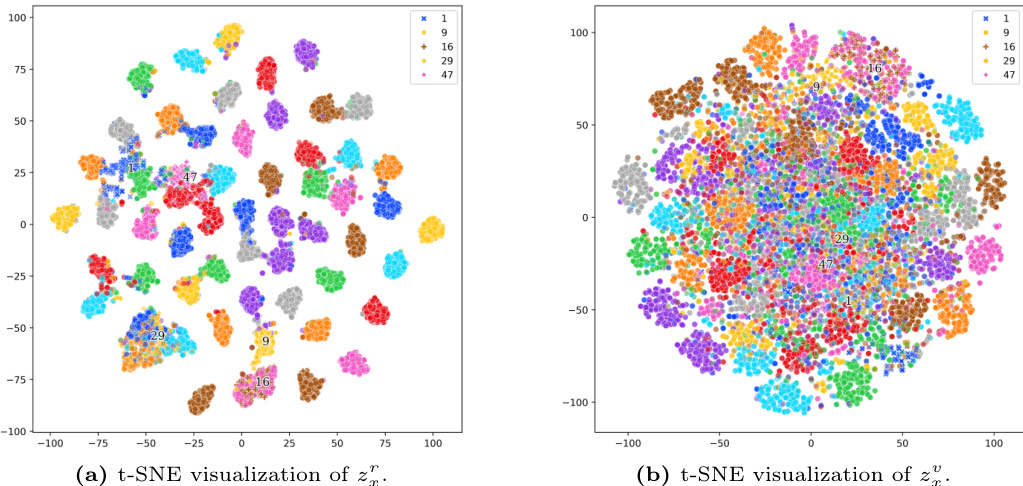

To better understand our method, we present t-SNE visualizations of the semantic-related and semantic-irrelevant terms, $z_{x}^{r}$ and $z_{x}^{v}$, in Fig. 4. Figure 4a displays the t-SNE results for $z_{x}^{r}$, showing clear class clusters that indicate effective disentanglement. In contrast, Figure 4b shows the t-SNE results for $z_{x}^{v}$, where class separation is less distinct. This indicates that while our method effectively clusters the semantic-related features, the semantic-irrelevant features remain more dispersed because they contain instance-specific information.

Fig. 4: t-SNE visualizations of $z_{x}^{r}$ and $z_{x}^{v}$. Best viewed in color.

Cross-Alignment Loss. Because we want our latent text features $z_{y}$ to align with semantic-related skeleton features $z_{x}^{r}$ only, regardless of the semantic-irrelevant features $z_{x}^{v}$ , we regulate them by setting up a cross-alignment loss

$$
\mathcal{L}_{C}=\|D_{y}(z_{x}^{r})-f_{y}\|_{2}^{2}+\|D_{x}(z_{x}^{v}\oplus z_{y})-f_{x}\|_{2}^{2}
$$

to train our VAEs for skeleton and text, where $D_{x}$ and $D_{y}$ are the skeleton and text decoders and $\oplus$ denotes concatenation. This loss enforces that skeleton features be reconstructable from text features and vice versa. To reconstruct skeleton features from text features, $z_{x}^{v}$ is employed to supply the necessary style information, mitigating the information gap between the class label and the skeleton sequence.
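
A minimal sketch of $\mathcal{L}_{C}$ for a single sample, assuming latent vectors are Python lists (so list concatenation plays the role of $\oplus$) and treating the decoders $D_{x}$ and $D_{y}$ as arbitrary callables. The `identity` decoder below is a hypothetical placeholder, not a trained network. Since $D_{x}$ receives $z_{x}^{v}\oplus z_{y}$, the sketch assumes $z_{y}$ has the same dimension as $z_{x}^{r}$.

```python
def sq_l2(a, b):
    # squared Euclidean distance ||a - b||_2^2
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def cross_alignment_loss(z_r, z_v, z_y, f_x, f_y, dec_x, dec_y):
    """L_C = ||D_y(z_x^r) - f_y||^2 + ||D_x(z_x^v (+) z_y) - f_x||^2 for one sample."""
    # text is reconstructed from the semantic-related skeleton latent only
    text_term = sq_l2(dec_y(z_r), f_y)
    # skeleton is reconstructed from the text latent plus the style latent z_x^v
    skeleton_term = sq_l2(dec_x(z_v + z_y), f_x)
    return text_term + skeleton_term


def identity(z):
    # hypothetical placeholder decoder used only for illustration
    return z


print(cross_alignment_loss([1.0, 2.0], [0.0], [1.0, 2.0], [0.0, 1.0, 2.0], [0.0, 0.0], identity, identity))  # 5.0
```

Only $z_{x}^{r}$ enters the text reconstruction, so the style latent $z_{x}^{v}$ is never asked to explain the label text.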

Adversarial Total Correlation Penalty. We expect the features $z_{x}^{r}$ and $z_{x}^{v}$ to be statistically independent, so we impose an adversarial total correlation penalty [3] on them. We train a discriminator $D_{T}$ that, given a latent skeleton vector $z_{x}^{v}\oplus z_{x}^{r}$, predicts the probability that $z_{x}^{v}$ and $z_{x}^{r}$ come from the same skeleton feature $f_{x}$. Ideally, $D_{T}$ returns $1$ if $z_{x}^{v}$ and $z_{x}^{r}$ are generated together, and $0$ otherwise. To train $D_{T}$, we design a loss

$$
\mathcal{L}_{T}=\log D_{T}(z_{x})+\log\left(1-D_{T}(\tilde{z}_{x})\right),
$$

where $\tilde{z}_{x}$ is an altered feature vector, created as follows. From a batch of $N$ training samples, our encoder $E_{x}$ generates $N$ pairs of $z_{x,i}^{v}$ and $z_{x,i}^{r}$, $i=1,\ldots,N$. We randomly permute the indices $i$ of $z_{x,i}^{v}$ while keeping $z_{x,i}^{r}$ unchanged, and then concatenate them as $\tilde{z}_{x}$. $D_{T}$ is trained to maximize $\mathcal{L}_{T}$, while $E_{x}$ is adversarially trained to minimize it. This training process encourages the encoder to generate independent latent representations. Combining the three losses, we set the overall loss

$$
\mathcal{L}=\mathcal{L}_{VAE}+\lambda_{1}\mathcal{L}_{C}+\lambda_{2}\mathcal{L}_{T},
$$

where the hyperparameters $\lambda_{1}$ and $\lambda_{2}$ balance the three losses.
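
The permutation trick behind $\tilde{z}_{x}$ and the objective $\mathcal{L}_{T}$ can be sketched as follows. This is an illustrative sketch under our own assumptions: the discriminator `d_t` is any callable returning a probability, the objective is averaged over the batch, and a small `eps` keeps the logarithms finite. It only evaluates the objective; during training, $D_{T}$ would take gradient ascent steps on it and $E_{x}$ gradient descent steps, as described above. The function names are hypothetical.

```python
import math
import random


def shuffled_pairs(z_r_batch, z_v_batch, rng=random):
    # z~_x: permute the sample indices of z_x^v, keep z_x^r in place, concatenate as z^v (+) z^r
    order = list(range(len(z_v_batch)))
    rng.shuffle(order)
    return [z_v_batch[j] + z_r for j, z_r in zip(order, z_r_batch)]


def tc_objective(d_t, z_r_batch, z_v_batch, rng=random, eps=1e-7):
    """Batch mean of L_T = log D_T(z_x) + log(1 - D_T(z~_x))."""
    joint = [z_v + z_r for z_r, z_v in zip(z_r_batch, z_v_batch)]  # pairs generated together
    fake = shuffled_pairs(z_r_batch, z_v_batch, rng)                # pairs mixed across samples
    total = sum(math.log(d_t(a) + eps) + math.log(1.0 - d_t(b) + eps) for a, b in zip(joint, fake))
    return total / len(joint)


z_r_batch = [[1.0], [2.0], [3.0]]
z_v_batch = [[10.0], [20.0], [30.0]]
print(tc_objective(lambda z: 0.5, z_r_batch, z_v_batch, random.Random(0)))
```

A random permutation can leave some indices in place (one fixed point on average), so a small fraction of the "altered" vectors are in fact genuine pairs; this fraction shrinks as the batch size $N$ grows.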

Seen, Unseen and Domain Classifiers. Because there are two protocols, ZSL and GZSL, to evaluate a zero-shot recognition model, we use a different setting for each. For the ZSL protocol, we only need to predict the probabilities of the classes $\mathcal{V}_{u}$ from a given skeleton sequence, so we use a classifier $C_{u}$, a single-layer MLP (multilayer perceptron) with a softmax output layer, to predict the probabilities of the classes $\mathcal{V}_{u}$ from $z_{y}$ by

$$
\mathbf{p}_{u}=C_{u}(z_{y})=C_{u}(E_{y}(f_{y})),
$$

where $\dim(\mathbf{p}_{u})=|\mathcal{V}_{u}|$. During inference, given an unseen skeleton feature $f_{x}^{u}$, we obtain $z_{x}^{u}=E_{x}(f_{x}^{u})$, separate $z_{x}^{u}$ into $z_{x}^{v,u}$ and $z_{x}^{r,u}$, and compute $\mathbf{p}_{u}=C_{u}(z_{x}^{r,u})$ to predict its class as $y_{\hat{i}}$, where

$$
\hat{i}=\underset{i=1,\ldots,|\mathcal{V}_{u}|}{\arg\max}\;p_{u}^{i},
$$

where $p_{u}^{i}$ is the i-th probability value of $\mathbf{p}_{u}$ .
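
For the ZSL protocol, inference reduces to one linear layer, a softmax, and an argmax over $\mathcal{V}_{u}$. In the sketch below, the weights, labels, and function names are hypothetical. Following the description above, $C_{u}$ would be trained on $z_{y}$ computed from the unseen labels' text and then, at test time, applied to $z_{x}^{r,u}$, with $z_{x}^{v,u}$ simply discarded.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def make_single_layer_classifier(weights, bias):
    # C_u: one linear layer followed by softmax; weights has |V_u| rows
    def classify(z):
        logits = [sum(w * zi for w, zi in zip(row, z)) + b for row, b in zip(weights, bias)]
        return softmax(logits)
    return classify


def predict_unseen(c_u, z_r, unseen_labels):
    p_u = c_u(z_r)                                     # only z_x^{r,u} is used
    i_hat = max(range(len(p_u)), key=p_u.__getitem__)  # argmax over V_u
    return unseen_labels[i_hat], p_u


c_u = make_single_layer_classifier([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]], [0.0, 0.0, 0.0])
label, p_u = predict_unseen(c_u, [0.2, 2.0], ["wave", "kick", "clap"])
print(label)  # kick
```

Because $z_{y}$ and $z_{x}^{r}$ share one latent space, a classifier fit only on text-derived latents can be applied directly to skeleton-derived ones.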

For the GZSL protocol, we need to predict the probabilities of all classes in $\mathcal{V}=\mathcal{V}_{u}\cup\mathcal{V}_{s}$, where $\mathcal{V}_{s}=\{y_{i}:x_{i}\in\mathcal{X}_{s}\}$. We follow the approach proposed by Gupta et al. [8] and use an additional classifier $C_{s}$ for seen classes and a domain classifier $C_{d}$ to merge the two arrays of probabilities. Gupta et al. were the first to apply Atzmon and Chechik's idea [1] to skeleton-based action recognition, outperforming the typical single-classifier approach. The advantage of using dual classifiers is also reported in a review paper [19]. Like $C_{u}$, our $C_{s}$ is a single-layer MLP with a softmax output layer, but it uses skeleton features $f_{x}$ rather than latent features to produce probabilities

$$
\mathbf{p}_{s}=C_{s}(f_{x}),
$$

where $\dim(\mathbf{p}_{s})=|\mathcal{V}_{s}|$.

We train $C_{s}$ and $C_{u}$ first, and then freeze their parameters to train $C_{d}$, a logistic regression whose input vector is $\mathbf{p}_{s}^{\prime}\oplus\mathbf{p}_{u}$, where $\mathbf{p}_{s}^{\prime}$ is the temperature-tuned [10] top-$k$ pooling result of $\mathbf{p}_{s}$ with $k=\dim(\mathbf{p}_{u})$. $C_{d}$ yields a probability value $p_{d}$ that the source skeleton belongs to a seen class. We use the L-BFGS algorithm [16] to train $C_{d}$ and use it during inference to predict the probabilities of $x$ as

$$
\mathbf{p}(y|x)=C_{d}(\mathbf{p}_{s}^{\prime}\oplus\mathbf{p}_{u})\,\mathbf{p}_{s}\oplus\left(1-C_{d}(\mathbf{p}_{s}^{\prime}\oplus\mathbf{p}_{u})\right)\mathbf{p}_{u}=p_{d}\,\mathbf{p}_{s}\oplus(1-p_{d})\,\mathbf{p}_{u}
$$

and decide the class of $x$ as $y_{\hat{i}}$, where

$$
\hat{i}=\underset{i=1,\ldots,|\mathcal{V}|}{\arg\max}\;p^{i},
$$

where $p^{i}$ is the $i$-th probability value of $\mathbf{p}(y|x)$.
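
The GZSL gating can be sketched end to end as follows. The exact form of the temperature tuning is not spelled out here, so we assume a temperature-scaled softmax over the seen-class logits of $C_{s}$, followed by keeping the $k$ largest probabilities in descending order. Sorting makes $\mathbf{p}_{s}^{\prime}$ independent of class order and gives it the fixed length $k=\dim(\mathbf{p}_{u})$. The logistic-regression weights, probability vectors, labels, and function names are hypothetical placeholders. In practice the weights would be fit with an L-BFGS solver, for example scikit-learn's `LogisticRegression(solver="lbfgs")`.

```python
import math


def topk_pooled_probs(seen_logits, k, temperature):
    # p_s': temperature-scaled softmax over seen classes, keep the k largest values, sorted descending
    scaled = [v / temperature for v in seen_logits]
    m = max(scaled)
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return sorted((e / total for e in exps), reverse=True)[:k]


def domain_gate(weights, bias, p_s_pooled, p_u):
    # C_d: logistic regression on p_s' (+) p_u, returning p_d = P(x belongs to a seen class)
    x = p_s_pooled + p_u
    logit = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


def gzsl_predict(p_d, p_s, p_u, seen_labels, unseen_labels):
    # p(y|x) = p_d * p_s (+) (1 - p_d) * p_u over all classes V = V_s ∪ V_u
    p = [p_d * v for v in p_s] + [(1.0 - p_d) * v for v in p_u]
    labels = list(seen_labels) + list(unseen_labels)  # same order as the concatenation
    i_hat = max(range(len(p)), key=p.__getitem__)
    return labels[i_hat], p


p_s = [0.6, 0.3, 0.1]  # hypothetical C_s output over three seen classes
p_u = [0.9, 0.1]       # hypothetical C_u output over two unseen classes
pooled = topk_pooled_probs([2.0, 1.0, 0.0], k=len(p_u), temperature=2.0)
p_d = domain_gate([0.0] * (len(pooled) + len(p_u)), 0.0, pooled, p_u)  # zero weights give p_d = 0.5
label, p = gzsl_predict(p_d, p_s, p_u, ["sit", "stand", "jump"], ["wave", "kick"])
print(label)  # wave
```

Since $p_{d}$ and $1-p_{d}$ sum to one, the merged vector remains a probability distribution over $\mathcal{V}$ whenever $\mathbf{p}_{s}$ and $\mathbf{p}_{u}$ are.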
