Revisiting Oxford and Paris: Large-Scale Image Retrieval Benchmarking

重访牛津与巴黎:大规模图像检索基准测试

Abstract

摘要

In this paper we address issues with image retrieval benchmarking on the standard and popular Oxford 5k and Paris 6k datasets. In particular, annotation errors, the size of the dataset, and the level of challenge are addressed: new annotation for both datasets is created with extra attention to the reliability of the ground truth. Three new protocols of varying difficulty are introduced. The protocols allow fair comparison between different methods, including those using a dataset pre-processing stage. For each dataset, 15 new challenging queries are introduced. Finally, a new set of 1M hard, semi-automatically cleaned distractors is selected.

本文探讨了标准且广泛使用的Oxford 5k和Paris 6k数据集在图像检索基准测试中存在的问题。重点关注了标注错误、数据集规模和挑战级别:我们为两个数据集重新创建了标注,并特别关注真实标注的可靠性。引入了三种不同难度的新评估协议,这些协议支持包括使用数据集预处理阶段在内的各类方法进行公平比较。针对每个数据集新增了15个具有挑战性的查询项。最后,我们还筛选出一组包含100万张经过半自动清理的困难干扰图像。

An extensive comparison of the state-of-the-art methods is performed on the new benchmark. Different types of methods are evaluated, ranging from local-feature-based to modern CNN-based methods. The best results are achieved by taking the best of the two worlds. Most importantly, image retrieval appears far from being solved.

在新基准上对当前最先进方法进行了全面比较。评估了从基于局部特征到现代CNN (Convolutional Neural Network) 方法的各类技术,最佳结果通过融合两类方法的优势获得。最重要的是,图像检索领域显然远未达到成熟阶段。

1. Introduction

1. 引言

Image retrieval methods have gone through significant development in the last decade, starting with descriptors based on local features, first organized in bag-of-words [41], and further expanded by spatial verification [33], Hamming embedding [16], and query expansion [7]. Compact representations reducing the memory footprint and speeding up queries started with aggregating local descriptors [18]. Nowadays, the most efficient retrieval methods are based on fine-tuned convolutional neural networks (CNNs) [10, 37, 30].

图像检索方法在过去十年中经历了显著发展,最初是基于局部特征的描述符,首次以词袋模型 [41] 组织,随后通过空间验证 [33]、汉明嵌入 [16] 和查询扩展 [7] 进一步扩展。减少内存占用并加速查询的紧凑表示始于局部描述符聚合 [18]。如今,最高效的检索方法基于微调的卷积神经网络 (CNN) [10, 37, 30]。

In order to measure progress and compare different methods, standardized image retrieval benchmarks are used. Besides the fact that a benchmark should simulate a real-world application, there are a number of properties that determine the quality of a benchmark: the reliability of the annotation, the size, and the challenge level.

为了衡量进展并比较不同方法,通常会使用标准化的图像检索基准测试。一个优质的基准测试除了需要模拟真实应用场景外,还需具备以下关键特性:标注的可靠性、数据规模以及挑战难度。

Errors in the annotation may systematically corrupt the comparison of different methods. Datasets that are too small are prone to over-fitting and do not allow the evaluation of the efficiency of the methods. The reliability of the annotation and the size of the dataset are competing factors, as it is difficult to secure accurate human annotation of large datasets. The size is commonly increased by adding a distractor set, which contains irrelevant images that are selected in an automated manner (different tags, GPS information, etc.). Finally, benchmarks where all the methods achieve almost perfect results [23] cannot be used for further improvement or qualitative comparison.

标注中的错误可能会系统性破坏不同方法的比较。过小的数据集容易导致过拟合,且无法评估方法的效率。标注的可靠性和数据集规模是相互制约的因素,因为很难确保对大型数据集进行准确的人工标注。通常通过添加干扰集来增加规模,这些干扰集包含以自动化方式选择的无关图像(不同标签、GPS信息等)。最后,所有方法都取得近乎完美结果的基准 [23] 无法用于进一步改进或定性比较。

Many datasets have been introduced to measure the performance of image retrieval. Oxford [33] and Paris [34] datasets belong to the most popular ones. Numerous methods of image retrieval [7, 31, 5, 27, 47, 3, 48, 20, 37, 10] and visual localization [9, 1] have used these datasets for evaluation. One reason for their popularity is that, in contrast to datasets that contain small groups of 4-5 similar images like Holidays [16] and UKBench [29], Oxford and Paris contain queries with up to hundreds of positive images.

许多数据集被引入用于评估图像检索性能。Oxford [33]和Paris [34]数据集属于最受欢迎的基准集。大量图像检索方法[7, 31, 5, 27, 47, 3, 48, 20, 37, 10]与视觉定位研究[9, 1]都采用这些数据集进行评测。相较于Holidays [16]和UKBench [29]等仅含4-5张相似图像的小规模数据集,Oxford和Paris的流行优势在于其查询样本可包含多达数百张正例图像。

Despite the popularity, there are known issues with the two datasets, which are related to all three important properties of evaluation benchmarks. First, there are errors in the annotation, including both false positives and false negatives. Further inaccuracy is introduced by queries of different sides of a landmark, sharing the annotation despite being visually distinguishable. Second, the annotated datasets are relatively small (5,062 and 6,392 images respectively). Third, current methods report near-perfect results on both the datasets. It has become difficult to draw conclusions from quantitative evaluations, especially given the annotation errors [14].

尽管这两个数据集广受欢迎,但它们存在一些已知问题,涉及评估基准的三大关键特性。首先,标注中存在错误,包括误报和漏报。此外,由于地标不同侧面的查询共享标注(尽管视觉上可区分),进一步引入了不准确性。其次,标注数据集规模相对较小(分别为5,062和6,392张图像)。第三,当前方法在这两个数据集上报告的结果近乎完美。考虑到标注错误[14],从定量评估中得出结论已变得十分困难。

The lack of difficulty is not caused by an absence of non-trivial instances in the dataset, but by the annotation. The annotation was introduced about ten years ago. At that time, the annotators had a different perception of what the limits of image retrieval are. Many instances that are nowadays considered a change of viewpoint and expected to be retrieved are de facto excluded from the evaluation by being labelled as Junk.

难度不足并非源于数据集中缺乏复杂实例,而是由标注方式导致。该标注体系引入于约十年前,当时标注者对图像检索的边界认知存在差异。如今被视为视角变化且应被检索到的众多实例,实际上因被标记为Junk (垃圾数据) 而被排除在评估范围之外。

The size issue of the datasets is partially addressed by the Oxford 100k distractor set. However, this contains false negative images, as well as images that are not challenging. State-of-the-art methods maintain near-perfect results even in the presence of these distractors. As a result, additional computational effort is spent with little benefit in drawing conclusions.

牛津100k干扰集部分解决了数据集规模问题。然而,该集合包含假阴性图像以及缺乏挑战性的图像。即使存在这些干扰项,最先进方法仍能保持近乎完美的结果。因此,额外的计算投入对得出结论几乎无益。

Contributions. As a first contribution, we generate new annotation for the Oxford and Paris datasets, update the evaluation protocol, define new, more difficult queries, and create a new set of challenging distractors. As an outcome we produce Revisited Oxford, Revisited Paris, and an accompanying distractor set of one million images. We refer to them as $\mathcal{R}$Oxford, $\mathcal{R}$Paris, and $\mathcal{R}1\mathbf{M}$ respectively.

贡献。首先,我们为Oxford和Paris数据集生成新的标注,更新评估协议,定义新的、更具挑战性的查询,并创建一组新的干扰图像。最终我们构建了Revisited Oxford、Revisited Paris数据集,以及一个包含一百万张图像的干扰集,分别简称为ROxford、RParis和${\mathcal{R}}1\mathbf{M}$。

As a second contribution, we provide extensive evaluation of image retrieval methods, ranging from local-feature based to CNN-descriptor based approaches, including various methods of re-ranking.

作为第二项贡献,我们对图像检索方法进行了全面评估,涵盖基于局部特征到基于CNN描述符的多种方法,包括各类重排序技术。

2. Revisiting the datasets

2. 重新审视数据集

In this section we describe in detail why and how we revisit the annotation of the Oxford and Paris datasets, and present a new evaluation protocol and an accompanying challenging set of one million distractor images. The revisited benchmark is publicly available.

在本节中,我们将详细阐述为何及如何重新审视Oxford和Paris数据集的标注,提出新的评估协议,并附带包含一百万张干扰图像的挑战集。修订后的基准测试已公开提供2。

2.1. The original datasets

2.1. 原始数据集

The original Oxford and Paris datasets consist of 5,063 and 6,392 high-resolution $(1024\times768)$ images, respectively. Each dataset contains 55 queries comprising 5 queries per landmark, coming from a total of 11 landmarks. Given a landmark query image, the goal is to retrieve all database images depicting the same landmark. The original annotation (labeling) is performed manually and consists of 11 ground-truth lists, since 5 images of the same landmark form a query group. Three labels are used, namely, positive, junk, and negative.

牛津和巴黎原始数据集分别包含5,063张和6,392张高分辨率$(1024\times768)$图像。每个数据集包含55个查询(每个地标5个查询),共涵盖11个地标。给定一个地标查询图像,目标是检索出描绘同一地标的所有数据库图像。原始标注(标签)为人工完成,包含11个基准真值列表(因为同一地标的5张图像构成一个查询组)。标注使用三种标签:正样本、干扰样本和负样本[3]。

Positive images clearly depict more than $25\%$ of the landmark, junk less than $25\%$, while the landmark is not shown in negative ones. The performance is measured via mean average precision (mAP) [33] over all 55 queries, while junk images are ignored, i.e. the evaluation is performed as if they were not present in the database.

正样本图像清晰展示了超过25%的地标区域,干扰样本则少于25%,而负样本中完全不包含该地标。性能评估采用所有55个查询的平均精度均值(mAP) [33],其中干扰样本被忽略(即评估时视为数据库中不存在这些样本)。

2.2. Revisiting the annotation

2.2. 重新审视标注

The annotation is performed by five annotators in the following steps.

标注工作由五名标注员完成,具体步骤如下。

Query groups. Query groups share the same ground-truth list and simplify the labeling problem, but also cause some inaccuracies in the original annotation. The Balliol and Christ Church landmarks are depicted from a different (not fully symmetric) side in the $2^{\mathrm{nd}}$ and $4^{\mathrm{th}}$ query, respectively. Arc de Triomphe has three day and two night queries, while day-night matching is considered a challenging problem [49, 35]. We alleviate this by splitting these cases into separate groups. As a result, we form 13 and 12 query groups on Oxford and Paris, respectively.

查询组。查询组共享相同的地面实况列表,简化了标注问题,但也导致原始标注存在一些不准确性。Balliol和Christ Church地标在第2次和第4次查询中分别从不同(非完全对称)的侧面进行描绘。凯旋门(Arc de Triomphe)包含三个白天和两个夜晚的查询,而昼夜匹配被认为是一个具有挑战性的问题[49,35]。我们通过将这些案例拆分为独立组来缓解这一问题。最终,我们在Oxford和Paris数据集上分别形成了13个和12个查询组。

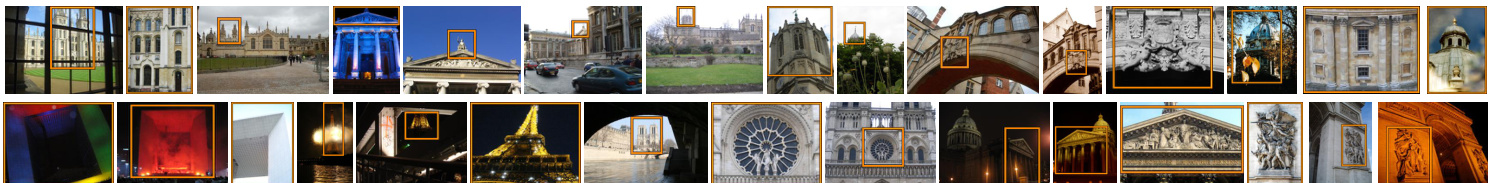

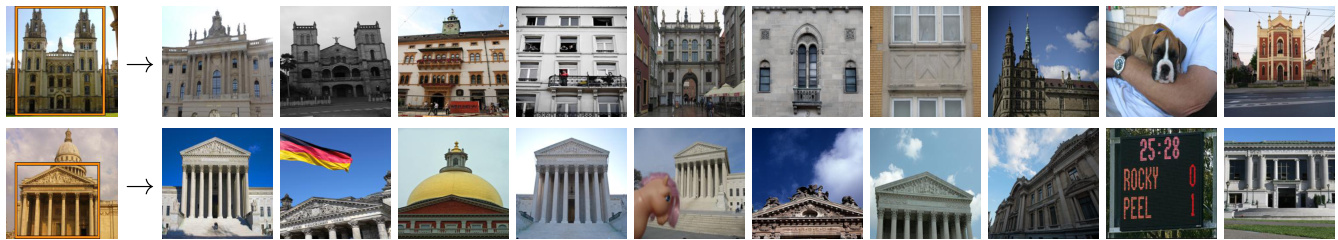

Additional queries. We introduce new and more challenging queries (see Figure 1) compared to the original ones. There are 15 new queries per dataset, originating from five out of the original 11 landmarks, with three queries per landmark. Along with the 55 original queries, they comprise the new set of 70 queries per dataset. The query groups, defined by visual similarity, number 26 and 25 for $\mathcal{R}$Oxford and $\mathcal{R}$Paris, respectively. As in the original datasets, the query object bounding boxes simulate not only a user attempting to remove background clutter, but also cases of large occlusion.

附加查询。我们引入了比原查询更具挑战性的新查询(见图 1)。每个数据集新增 15 个查询,源自原始 11 个地标中的 5 个,每个地标对应 3 个查询。结合原有的 55 个查询,每个数据集现共包含 70 个查询。根据视觉相似性定义的查询组数量分别为:$\mathcal{R}$Oxford 26 组,RParis 25 组。与原数据集一致,查询对象边界框不仅模拟用户试图消除背景干扰的情况,还模拟大面积遮挡的场景。

Labeling step 1: Selection of potential positives. Each annotator manually inspects the whole dataset and marks images depicting any side or version of a landmark. The goal is to collect all images that are originally incorrectly labeled as negative. Even uncertain cases are included in this step and the process is repeated for each landmark. Apart from inspecting the whole dataset, an interactive retrieval tool is used to actively search for further possible positive images. All images marked in this phase are merged together with images originally annotated as positive or junk, creating a list of potential positives for each landmark.

标注步骤1:潜在正样本筛选。每位标注员手动检查整个数据集,标记出描绘地标任何侧面或版本的图像。此阶段目标是收集所有原始标注错误的负样本图像,不确定案例也会被纳入,并针对每个地标重复该流程。除全量数据检查外,还使用交互式检索工具主动搜索更多潜在正样本图像。本阶段标记的图像将与原始标注为正样本或垃圾样本的图片合并,形成每个地标的潜在正样本列表。

Labeling step 2: Label assignment. In this step, each annotator manually inspects the list of potential positives for each query group and assigns labels. The possible labels are Easy, Hard, Unclear, and Negative. All images not in the list of potential positives are automatically marked negative. The instructions given to the annotators for each of the labels are as follows.

标注步骤2:标签分配。在此步骤中,每位标注员手动检查每个查询组的潜在正例列表并分配标签。可能的标签包括简单(Easy)、困难(Hard)、不明确(Unclear)和负例(Negative)。所有不在潜在正例列表中的图像将自动标记为负例。提供给标注员的各标签说明如下。

• Easy: The image clearly depicts the query landmark from the same side, with no large viewpoint change, no significant occlusion, no extreme illumination change, and no severe background clutter. In the case of fully symmetric sides, any side is valid.

- 简单:图像清晰展示了查询地标的同一侧面,无显著视角变化、明显遮挡、极端光照变化或严重背景杂乱。对于完全对称的侧面,任意侧面均有效。

Table 1. Number of images switching their labeling from the original annotation (positive, junk, negative) to the new one (easy, hard, unclear, negative).

| Original label | $\mathcal{R}$Oxford Easy | $\mathcal{R}$Oxford Hard | $\mathcal{R}$Oxford Unclear | $\mathcal{R}$Oxford Negative | $\mathcal{R}$Paris Easy | $\mathcal{R}$Paris Hard | $\mathcal{R}$Paris Unclear | $\mathcal{R}$Paris Negative |
|---|---|---|---|---|---|---|---|---|
| Positive | 438 | 50 | 93 | 1 | 1222 | 643 | 136 | 6 |
| Junk | 50 | 222 | 72 | 9 | 91 | 813 | 835 | 61 |
| Negative | 1 | 72 | 133 | 63768 | 16 | 147 | 273 | 71621 |

表 1: 图像标注从原始标注(正样本、干扰项、负样本)切换到新标注(简单、困难、不确定、负样本)的数量统计。

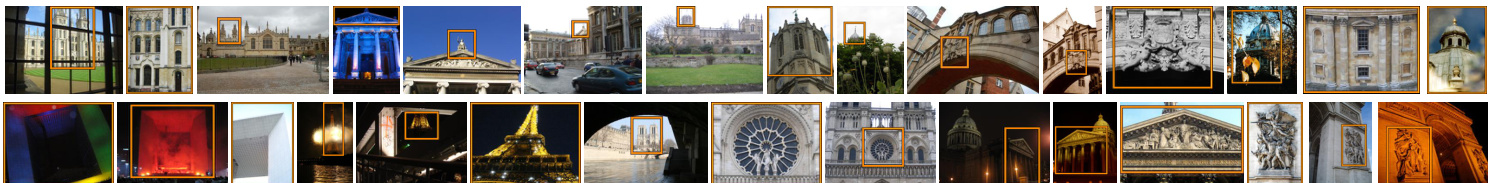

Figure 1. The newly added queries for the $\mathcal{R}$Oxford (top) and $\mathcal{R}$Paris (bottom) datasets. Merged with the original queries, they comprise a new set of 70 queries in total.

Figure 2. Examples of extreme labeling mistakes in the original labeling. We show the query (blue) image and the associated database images that were originally marked as negative (red) or positive (green). Best viewed in color.

图 1: ROxford(上)和RParis(下)数据集新增的查询图像。与原始查询合并后,共计形成包含70个查询的新集合。

图 2: 原始标注中极端错误的示例。我们展示查询(蓝色)图像及原被标记为负样本(红色)或正样本(绿色)的关联数据库图像。建议彩色查看。

• Hard: The image depicts the query landmark, but with viewing conditions that are difficult to match with the query. The depicted (side of the) landmark is recognizable without any contextual visual information.

• Unclear: (a) The image possibly depicts the landmark in question, but the content is not enough to make a certain guess about the overlap with the query region, or context is needed to clarify. (b) The image depicts a different side of a partially symmetric building, where the symmetry is significant and discriminative enough.

• Negative: The image does not satisfy any of the previous conditions. For instance, it depicts a different side of the landmark compared to that of the query, with no discriminative symmetries. If the image has any physical overlap with the query, it is never negative, but rather unclear, easy, or hard according to the above.

困难:图像描绘了查询地标,但视角条件难以与查询匹配。无需任何上下文视觉信息即可识别所描绘的地标(侧面)。

• 不明确:(a) 图像可能描绘了目标地标,但内容不足以确定与查询区域的重叠程度,或需要上下文来澄清。(b) 图像描绘了部分对称建筑的另一侧,其对称性显著且具有足够区分度。

• 负面:图像不满足上述任何条件。例如,与查询相比,它描绘了地标的另一侧,且无区分性对称特征。若图像与查询存在物理重叠,则根据上述标准归为不明确、简单或困难,而非负面。

Labeling step 3: Refinement. For each query group, each image in the list of potential positives has been assigned a five-tuple of labels, one per annotator. We perform majority voting in two steps to define the final label. The first step is voting for $\{\mathrm{easy}, \mathrm{hard}\}$, $\{\mathrm{unclear}\}$, or $\{\mathrm{negative}\}$, grouping easy and hard together. In case the majority goes to $\{\mathrm{easy}, \mathrm{hard}\}$, the second step is to decide which of the two. Draws in the first step are assigned to unclear, and draws in the second step to hard. Illustrative examples are $(\mathrm{EEHUU})\to\mathrm{E}$, $(\mathrm{EHUUN})\to\mathrm{U}$, and $(\mathrm{HHUNN})\to\mathrm{U}$. Finally, for each query group, we inspect images by descending label entropy to make sure there are no errors.

标注步骤3:精修。对于每个查询组,潜在正例列表中的每张图像都被分配了一个五元组标签(每位标注者一个)。我们通过两步多数投票来确定最终标签:第一步在{简单(easy)、困难(hard)}、{不明确(unclear)}或{负例(negative)}之间投票,其中简单和困难归为一组。若多数票属于{easy,hard},则第二步决定具体是二者中的哪一个。第一步平局时归为不明确,第二步平局时归为困难。示例包括(EEHUU)→E、(EHUUN)→U以及(HHUNN)→U。最后,对于每个查询组,我们按标签熵降序检查图像以确保无误。
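The two-step vote described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' tooling: the function names `final_label` and `label_entropy` are hypothetical, "majority" is assumed to mean the group with the most votes, and any tie for the top count in the first step is treated as a draw.

```python
from collections import Counter
from math import log2


def final_label(votes):
    """Return the final label for a five-tuple of votes such as "EEHUU".

    Labels: E (easy), H (hard), U (unclear), N (negative).
    Assumption: "majority" means the group with the most votes.
    """
    counts = Counter(votes)
    # Step 1: vote among {easy, hard}, {unclear}, {negative}.
    groups = {
        "EH": counts["E"] + counts["H"],
        "U": counts["U"],
        "N": counts["N"],
    }
    best = max(groups.values())
    winners = [name for name, count in groups.items() if count == best]
    if len(winners) > 1:
        return "U"  # a draw in the first step is assigned to unclear
    if winners[0] != "EH":
        return winners[0]
    # Step 2: decide between easy and hard; a draw is assigned to hard.
    return "E" if counts["E"] > counts["H"] else "H"


def label_entropy(votes):
    """Shannon entropy (in bits) of the label distribution of one image."""
    total = len(votes)
    return -sum(c / total * log2(c / total) for c in Counter(votes).values())


if __name__ == "__main__":
    for votes in ("EEHUU", "EHUUN", "HHUNN"):
        print(votes, "->", final_label(votes))
    # Images with the most annotator disagreement are inspected first.
    print(sorted(["EEEEE", "EHUUN", "EEHUU"], key=label_entropy, reverse=True))
```

Sorting by `label_entropy` in descending order puts the images with the strongest annotator disagreement at the front of the inspection queue, which matches the final check described in the text.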

Revisited datasets: $\mathcal{R}$Oxford and $\mathcal{R}$Paris. Images from which the queries are cropped are excluded from the evaluation dataset. This way, unfair comparisons are avoided in the case of methods performing off-line preprocessing of the database [2, 14]; any preprocessing should not include any part of query images. The revisited datasets, namely, $\mathcal{R}$Oxford and $\mathcal{R}$Paris, comprise 4,993 and 6,322 images respectively, after removing the 70 queries.

重新评估的数据集:Oxford和Paris。评估数据集中排除了从中裁剪查询的图像。这样可避免对数据库进行离线预处理的方法[2,14]产生不公平比较;任何预处理都不应包含查询图像的任何部分。重新评估的数据集,即$\mathcal{R}$Oxford和RParis,在移除70个查询后分别包含4,993和6,322张图像。

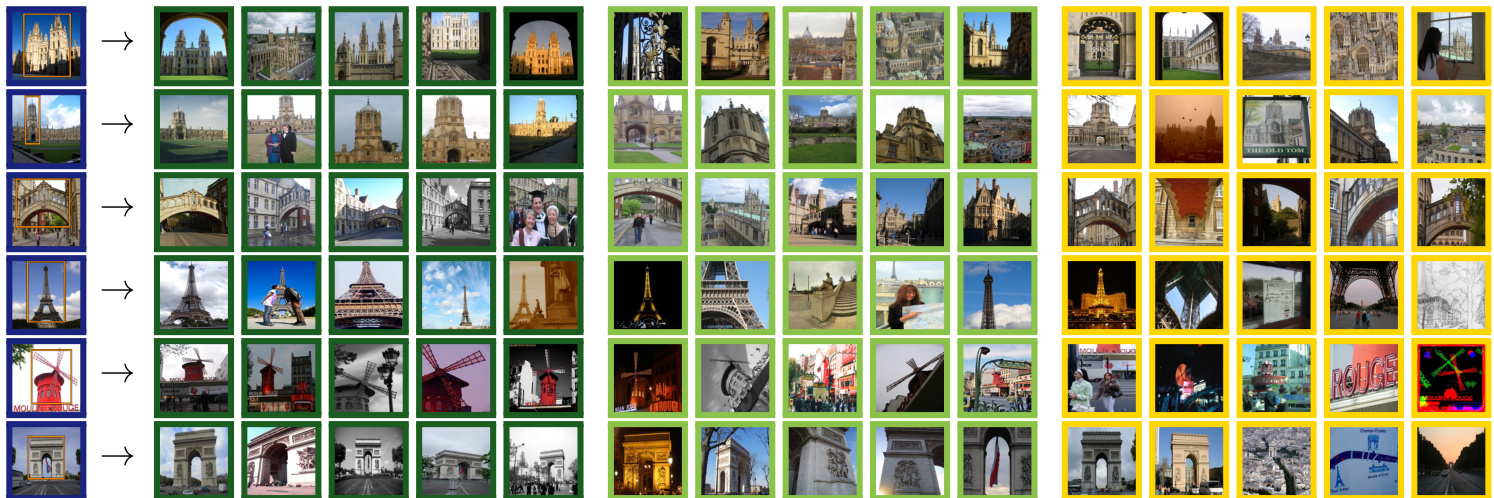

In Table 1, we show statistics of label transitions from the old to the new annotations. Note that errors in the original annotation that affect the evaluation, e.g. negative moving to easy or hard, are not uncommon. The transitions from junk to easy or hard reflect the greater challenges of the new annotation. Representative examples of extreme labeling errors of the original annotation are shown in Figure 2. In Figure 3, representative examples of easy, hard, and unclear images are presented for several queries. These examples help in understanding the level of challenge of each evaluation protocol listed below.

在表1中,我们展示了从旧标注到新标注的标签转换统计。需要注意的是,原始标注中影响评估的错误(例如负面样本转为简单或困难样本)并不罕见。从垃圾样本转为简单或困难样本的转换反映了新标注面临的更大挑战。图2展示了原始标注中极端标签错误的代表性案例。图3则针对多个查询展示了简单、困难和模糊图像的代表性示例,这将有助于理解下文列出的每种评估协议的挑战级别。

2.3. Evaluation protocol

2.3. 评估协议

Only the cropped regions are to be used as queries; never the full image, since the ground-truth labeling strictly considers only the visual content inside the query region.

仅将裁剪区域用作查询;切勿使用完整图像,因为真实标注严格仅考虑查询区域内的视觉内容。

The standard practice of reporting mean average precision (mAP) [33] for performance evaluation is followed. Additionally, mean precision at rank $K$ $(\mathrm{mP}@K)$ is reported. The former reflects the overall quality of the ranked list. The latter reflects the quality of the results of a search engine as they would be visually inspected by a user. More importantly, it is correlated to performance of subsequent processing steps [7, 21]. During the evaluation, positive images should be retrieved, while there is also an ignore list per query. Three evaluation setups of different difficulty are defined by treating labels (easy, hard, unclear) as positive or negative, or ignoring them:

遵循标准做法,报告平均精度均值 (mAP) [33] 进行性能评估。此外,还报告了排名 $K$ 处的平均精度 $(\mathrm{mP}@K)$。前者反映了排序列表的整体质量,后者则反映了搜索引擎结果在用户视觉检查时的质量。更重要的是,它与后续处理步骤的性能相关 [7, 21]。在评估过程中,应检索正样本图像,同时每个查询还有一个忽略列表。通过将标签(简单、困难、不明确)视为正样本、负样本或忽略它们,定义了三种不同难度的评估设置:

• Easy (E): Easy images are treated as positive, while Hard and Unclear are ignored (same as Junk in [33]).

• Medium (M): Easy and Hard images are treated as positive, while Unclear are ignored.

• Hard (H): Hard images are treated as positive, while Easy and Unclear are ignored.

简单 (E): 简单图像视为正样本,困难 (Hard) 和模糊 (Unclear) 图像被忽略 (与 [33] 中的 Junk 处理方式相同)。

中等 (M): 简单和困难图像视为正样本,模糊图像被忽略。

困难 (H): 困难图像视为正样本,简单和模糊图像被忽略。

If there are no positive images for a query in a particular setting, then that query is excluded from the evaluation.

如果在特定设置中没有查询的正样本图像,则该查询将从评估中排除。
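The three setups and the ignore-list handling can be illustrated with a short Python sketch. The names `PROTOCOLS`, `average_precision`, `precision_at_k`, and `mean_over_queries` are hypothetical. The sketch uses the plain (non-interpolated) definition of average precision and divides precision at rank $K$ by $K$, both of which are simplifying assumptions, so values may differ slightly from an official evaluation script.

```python
# Which labels count as positive and which are ignored in each setup.
PROTOCOLS = {
    "easy": {"positive": {"easy"}, "ignore": {"hard", "unclear"}},
    "medium": {"positive": {"easy", "hard"}, "ignore": {"unclear"}},
    "hard": {"positive": {"hard"}, "ignore": {"easy", "unclear"}},
}


def average_precision(ranked_ids, labels, protocol):
    """AP for one query; `labels` maps image id -> 'easy' | 'hard' | 'unclear'.

    Images missing from `labels` are negative. Returns None when the query
    has no positives in this setup, so that it can be excluded.
    """
    spec = PROTOCOLS[protocol]
    num_pos = sum(1 for label in labels.values() if label in spec["positive"])
    if num_pos == 0:
        return None
    hits, rank, total = 0, 0, 0.0
    for image_id in ranked_ids:
        label = labels.get(image_id, "negative")
        if label in spec["ignore"]:
            continue  # evaluated as if the image were not in the database
        rank += 1
        if label in spec["positive"]:
            hits += 1
            total += hits / rank
    return total / num_pos


def precision_at_k(ranked_ids, labels, protocol, k):
    """Fraction of positives among the top-k results after removing ignored images."""
    spec = PROTOCOLS[protocol]
    kept = [i for i in ranked_ids if labels.get(i, "negative") not in spec["ignore"]]
    return sum(labels.get(i, "negative") in spec["positive"] for i in kept[:k]) / k


def mean_over_queries(values):
    """Mean of per-query scores, skipping excluded (None) queries."""
    kept = [v for v in values if v is not None]
    return sum(kept) / len(kept) if kept else None


if __name__ == "__main__":
    ranked = ["d", "a", "b", "c"]  # "d" has no label, so it is negative
    labels = {"a": "easy", "b": "unclear", "c": "hard"}
    for name in PROTOCOLS:
        print(name, average_precision(ranked, labels, name))
```

Returning `None` for a query without positives keeps the exclusion rule explicit: `mean_over_queries` simply drops such queries before averaging.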

Figure 3. Sample query (blue) images and images that are respectively marked as easy (dark green), hard (light green), and unclear (yellow). Best viewed in color.

图 3: 查询图像(蓝色)示例及分别标记为简单(深绿)、困难(浅绿)和不确定(黄色)的图像。建议彩色查看。

Figure 4. Sample false negative images in Oxford100k.

图 4: Oxford100k数据集中的假阴性样本图像。

The original annotation and evaluation protocol is closest to our Easy setup. Even though this setup is now trivial for the best performing methods, it can still be used for evaluation of e.g. near duplicate detection or retrieval with ultra short codes. The other setups, Medium and Hard, are challenging and even the best performing methods achieve relatively low scores. See Section 4 for details.

原始标注和评估协议最接近我们的简易设置 (Easy setup)。尽管这一设置对性能最佳的方法来说已微不足道,但仍可用于评估近重复检测或超短代码检索等任务。中等 (Medium) 和困难 (Hard) 设置则具有挑战性,即使表现最佳的方法得分也相对较低。详见第4节。

2.4. Distractor set $\mathcal{R}1\mathbf{M}$

2.4. 干扰项集 1M

Large-scale experiments on the Oxford and Paris datasets are commonly performed with the accompanying distractor set of 100k images, namely Oxford100k [33]. Recent results [14, 13] show that the performance only slightly degrades by adding Oxford100k in the database compared to a small-scale setting. Moreover, it is not manually cleaned and, as a consequence, Oxford and Paris landmarks are depicted in some of the distractor images (see Figure 4), hence adding further noise to the evaluation procedure.

在Oxford和Paris数据集上进行的大规模实验通常使用附带的10万张干扰图像集(即Oxford100k [33])。近期研究[14, 13]表明,与小型设置相比,在数据库中添加Oxford100k仅会轻微降低性能。此外,该干扰集未经过人工清理,因此部分干扰图像中仍包含Oxford和Paris地标(见图4),这给评估过程引入了额外噪声。

Larger distractor sets are used in the literature [33, 34, 16, 44], but none of them are standardized to provide a testbed for direct large-scale comparison, nor are they manually cleaned [16]. Some of the distractor sets are also biased, since they contain images of different resolution than the Oxford and Paris datasets.

文献中使用了更大的干扰项集 [33, 34, 16, 44],但它们均未经过标准化处理以提供直接大规模比较的测试基准,也未经过人工清洗 [16]。部分干扰项集还存在偏差,因为它们包含的图像分辨率与Oxford和Paris数据集不同。

We construct a new distractor set with exactly 1,001,001 high-resolution $(1024\times768)$ images, which we refer to as the $\mathcal{R}1\mathbf{M}$ dataset. It is cleaned by a semi-automatic process. We automatically pick hard images for a number of state-of-the-art methods, resulting in a challenging large-scale setup.

我们构建了一个全新的干扰项集,包含1,001,001张高分辨率 $(1024\times768)$ 图像,称为 $\mathcal{R}1\mathbf{M}$ 数据集。该数据集通过半自动化流程进行清洗,并针对多种前沿方法自动筛选出困难样本,从而形成具有挑战性的大规模实验设置。

YFCC100M and semi-automatic cleaning. We randomly choose 5M images with GPS information from the YFCC100M dataset [43]. Then, we exclude the UK, France, and Las Vegas; the latter due to the Eiffel Tower and Arc de Triomphe replicas. We end up with roughly 4.1M images that are available for downloading in high resolution. We rank images with the same search tool as used in labeling step 1. Then, we manually inspect the top 2k images per landmark, and remove those depicting the query landmarks (faulty GPS, toy models, and paintings/photographs of landmarks). In total, we find 110 such images.

YFCC100M与半自动清洗。我们从YFCC100M数据集[43]中随机选取500万张含GPS信息的图像,随后排除英国、法国和拉斯维加斯的数据(后者的剔除原因是埃菲尔铁塔和凯旋门的复制品)。最终获得约410万张可下载的高分辨率图像。使用与标注步骤1相同的搜索工具对图像进行排序,随后人工检查每个地标的前2000张图像,剔除包含查询地标的错误数据(GPS错误、玩具模型以及地标的绘画/照片)。共发现110张此类问题图像。

Unbiased mining of distracting images. We propose a way to keep the most challenging 1M out of the 4.1M images. We run all 70 queries against the 4.1M database with a number of methods. For each query and for each distractor image we count the fraction of easy or hard images that are ranked after it. We sum these fractions over all queries of $\mathcal{R}$Oxford and $\mathcal{R}$Paris and over different methods, resulting in a measurement of how distracting each distractor image is. We choose the set of 1M most distracting images and refer to it as the $\mathcal{R}1\mathbf{M}$ distractor set.

无偏见的干扰图像挖掘。我们提出了一种方法,从410万张图像中筛选出最具挑战性的100万张。通过多种方法对410万数据库执行全部70次查询,针对每个查询和每张干扰图像,统计排在它之后的简单或困难图像比例。我们将这些比例在ROxford和RParis的所有查询及不同方法上求和,从而量化每张干扰图像的干扰程度。最终选出最具干扰性的100万张图像,称为${\mathcal{R}}1\mathbf{M}$干扰集。
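The distraction measurement can be sketched as follows. This is an illustrative reading of the description above, not the authors' code: `distraction_scores` and `select_hardest` are hypothetical names, and each ranked list is assumed to contain only the easy or hard images of the query together with the candidate distractors.

```python
from collections import defaultdict


def distraction_scores(rankings, positives):
    """Sum, over methods and queries, the fraction of positives ranked after each distractor.

    rankings:  {method: {query: ranked list of image ids}}
    positives: {query: set of ids labeled easy or hard for that query}
    Assumption: every non-positive id in a ranked list is a candidate distractor.
    """
    scores = defaultdict(float)
    for per_query in rankings.values():
        for query, ranked in per_query.items():
            pos = positives[query]
            if not pos:
                continue
            # Number of positives that still appear later in the list.
            remaining = sum(1 for image_id in ranked if image_id in pos)
            for image_id in ranked:
                if image_id in pos:
                    remaining -= 1
                else:
                    scores[image_id] += remaining / len(pos)
    return dict(scores)


def select_hardest(scores, n):
    """Keep the n distractors with the highest accumulated score."""
    return sorted(scores, key=scores.get, reverse=True)[:n]


if __name__ == "__main__":
    rankings = {"method_a": {"q1": ["d1", "p1", "d2", "p2"]}}
    positives = {"q1": {"p1", "p2"}}
    scores = distraction_scores(rankings, positives)
    print(scores, select_hardest(scores, 1))
```

A distractor that outranks every positive contributes 1 for that query and method, while one ranked below all positives contributes 0, so the accumulated score grows with how often and how badly an image disturbs the ranking.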

Three complementary retrieval methods are chosen to compute this measurement. These are fine-tuned ResNet with GeM pooling [37], pre-trained (on ImageNet) AlexNet with MAC pooling [38], and ASMK [46]. More details on these methods are given in Section 3. Finally, we perform a sanity check to show that this selection process is not significantly biased to distract only those 3 methods. This includes two additional methods, VLAD [18] and fine-tuned ResNet with R-MAC pooling by Gordo et al. [10]. As shown in Table 2, the performance on the hardest 1M distractors is hardly affected whether one of those additional methods participates or not in the selection process. This suggests that the mining process is not biased towards particular methods.

我们选择了三种互补的检索方法来计算这一指标:采用GeM池化的微调ResNet [37]、基于ImageNet预训练的MAC池化AlexNet [38]以及ASMK [46]。这些方法的详细说明见第3节。最后我们通过验证实验表明,该筛选过程不会显著偏袒那三种方法——我们额外测试了VLAD [18]和Gordo等人提出的R-MAC池化微调ResNet [10]。如表2所示,无论是否加入这两种额外方法参与筛选,在100万最难干扰项上的性能表现几乎不受影响,说明挖掘过程不存在方法偏好性。

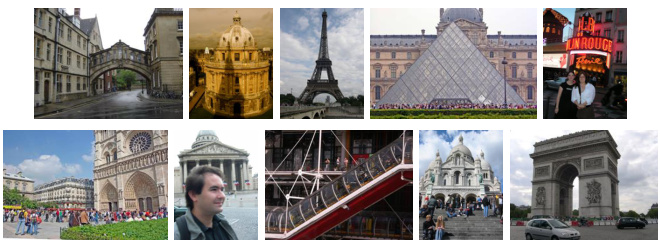

Table 2 also shows that the distractor set we choose (version 1M (1,2,3) in the Table) is much harder than a random 1M subset and nearly as hard as all 4M distractor images. Example images from the set ${\mathcal{R}}1\mathbf{M}$ are shown in Figure 5.

表 2 还显示,我们选择的干扰项集 (表格中的 version 1M (1,2,3) ) 比随机 1M 子集要难得多,且几乎与全部 4M 干扰图像难度相当。图 5 展示了来自 ${\mathcal{R}}1\mathbf{M}$ 集合的示例图像。

Figure 5. The most distracting images per query for two queries.

图 5: 两个查询中每个查询最分散注意力的图像。

3. Extensive evaluation

3. 广泛评估

We evaluate a number of state-of-the-art approaches on the new benchmark and offer a rich testbed for future comparisons. We list them in this section and they belong to two main categories, namely, classical retrieval approaches using local features and CNN-based methods producing global image descriptors.

我们在新基准上评估了多种先进方法,为未来的比较提供了丰富的测试平台。本节列举的这些方法主要分为两大类:使用局部特征的经典检索方法和生成全局图像描述符的基于CNN的方法。

3.1. Local-feature-based methods

3.1. 基于局部特征的方法

Methods based on local invariant features [25, 26] and the Bag-of-Words (BoW) model [41, 33, 7, 34, 6, 27, 47, 4, 52, 54, 42] were dominating the field of image retrieval until the advent of CNN-based approaches [38, 3, 48, 20, 1, 10, 37, 28, 51]. A typical pipeline consists of invariant local feature detection [26], local descriptor extraction [25], quantization with a visual codebook [41], typically created with $k$-means, assignment of descriptors to visual words and finally descriptor aggregation in a single embedding [19, 32] or individual feature indexing with an inverted file structure [45, 33, 31]. We consider state-of-the-art methods from both categories. In particular, we use up-right Hessian-affine (HesAff) features [31], RootSIFT (rSIFT) descriptors [2], and create the codebooks on the landmark dataset from [37], same as the one used for the whitening of CNN-based methods. Note that we always crop the queries according to the defined region and then perform any processing, to be directly comparable to CNN-based methods.

基于局部不变特征的方法 [25, 26] 和词袋模型 (BoW) [41, 33, 7, 34, 6, 27, 47, 4, 52, 54, 42] 在基于 CNN 的方法 [38, 3, 48, 20, 1, 10, 37, 28, 51] 出现之前一直主导着图像检索领域。典型流程包括:局部不变特征检测 [26]、局部描述子提取 [25]、使用视觉词典进行量化 [41](通常通过 $k$ 均值方法生成)、将描述子分配到视觉单词,最后通过单一嵌入 [19, 32] 进行描述子聚合或采用倒排文件结构 [45, 33, 31] 进行独立特征索引。我们综合考量了两类方法中的前沿技术,具体采用直立海森仿射 (HesAff) 特征 [31]、RootSIFT (rSIFT) 描述子 [2],并在与 CNN 方法白化处理相同的地标数据集 [37] 上构建词典。需注意,我们会根据定义区域裁剪查询图像后再进行所有处理,以确保与基于 CNN 的方法具有直接可比性。

| Distractor set | $\mathcal{R}$Oxford (1) | $\mathcal{R}$Oxford (2) | $\mathcal{R}$Oxford (3) | $\mathcal{R}$Oxford (4) | $\mathcal{R}$Oxford (5) | $\mathcal{R}$Paris (1) | $\mathcal{R}$Paris (2) | $\mathcal{R}$Paris (3) | $\mathcal{R}$Paris (4) |
|---|---|---|---|---|---|---|---|---|---|
| 4M | 33.3 | 11.1 | 33.2 | 33.7 | 15.6 | 40.7 | 11.4 | 30.0 | 45.4 |
| 1M (1,2,3) | 33.9 | 11.1 | 34.8 | 33.9 | 17.4 | 44.1 | 11.8 | 31.7 | 48.1 |
| 1M (1,2,3,4) | 33.7 | 11.1 | 34.8 | 33.8 | 17.5 | 43.8 | 11.8 | 31.8 | 47.7 |
| 1M (1,2,3,5) | 33.7 | 11.1 | 34.6 | 33.9 | 17.2 | 43.5 | 11.7 | 31.4 | 47.7 |
| 1M (random) | 37.6 | 13.7 | 37.4 | 38.9 | 20.4 | 47.3 | 16.2 | 34.2 | 53.1 |

Table 2. Performance (mAP) evaluation with the Medium protocol for different distractor sets. The methods considered are (1) Fine-tuned ResNet101 with GeM pooling [37]; (2) Off-the-shelf AlexNet with MAC pooling [38]; (3) HesAff–rSIFT–ASMK⋆ [46]; (4) Fine-tuned ResNet101 with R-MAC pooling [10]; (5) HesAff–rSIFT–VLAD [18]. The sanity check includes evaluation for different distractor sets, i.e. all, hardest subset chosen by methods (1,2,3), (1,2,3,4), (1,2,3,5), and a random 1M sample.

表 2: 采用Medium协议在不同干扰集下的性能(mAP)评估。评估方法包括:(1) 使用GeM池化的微调ResNet101 [37];(2) 使用MAC池化的现成AlexNet [38];(3) HesAff–rSIFT–ASMK⋆ [46];(4) 使用R-MAC池化的微调ResNet101 [10];(5) HesAff–rSIFT–VLAD [18]。完整性检查涵盖不同干扰集的评估,包括:全部样本、由方法(1,2,3)选出的最难子集、(1,2,3,4)、(1,2,4,5)以及随机100万样本。

We additionally follow the same BoW-based pipeline while replacing Hessian-affine and RootSIFT with the deep local attentive features (DELF) [30]. The default extraction approach is followed (i.e. at most 1000 features per image), but we reduce the descriptor dimensionality to 128 rather than 40, to be comparable to RootSIFT. This variant is a bridge between classical approaches and deep learning.

此外,我们沿用相同的基于词袋模型(BoW)的流程,但将hessian-affine和RootSIFT替换为深度局部注意力特征(DELF) [30]。遵循默认提取方法(即每张图像最多提取1000个特征),但我们将描述符维度降至128而非40,以保持与RootSIFT的可比性。该变体是经典方法与深度学习之间的桥梁。

VLAD. The Vector of Locally Aggregated Descriptors [18] (VLAD) is created by first-order statistics of the local descriptors. The residual vectors between descriptors and the closest centroid are aggregated w.r.t. a codebook whose size is 256 in our experiments. We reduce its dimensionality down to 2048 with PCA, while square-root normalization is also used [15].

VLAD。局部聚合描述子向量 (VLAD) [18] 是通过局部描述子的一阶统计量构建的。在实验中,我们使用大小为256的码本对描述子与其最近质心之间的残差向量进行聚合,并通过PCA (主成分分析) 将其维度降至2048,同时采用平方根归一化 [15]。
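The aggregation step can be illustrated with a small NumPy sketch. It is a minimal version under stated assumptions rather than the evaluated implementation: the function name `vlad` is hypothetical, the codebook is passed in as an array of centroids, square-root normalization is taken to be a signed element-wise square root followed by L2 normalization, and the PCA reduction to 2048 dimensions is left out.

```python
import numpy as np


def vlad(descriptors, centroids):
    """Aggregate local descriptors (n, d) against a codebook (k, d) into a k*d vector."""
    # Squared Euclidean distances via |x|^2 - 2 x.c + |c|^2, shape (n, k).
    d2 = (
        (descriptors ** 2).sum(axis=1)[:, None]
        - 2.0 * descriptors @ centroids.T
        + (centroids ** 2).sum(axis=1)[None, :]
    )
    nearest = d2.argmin(axis=1)

    k, d = centroids.shape
    v = np.zeros((k, d))
    for j in range(k):
        members = descriptors[nearest == j]
        if len(members):
            # Sum of residuals to the closest centroid (first-order statistics).
            v[j] = (members - centroids[j]).sum(axis=0)

    v = v.ravel()
    # Assumed form of square-root normalization: signed square root, then L2.
    v = np.sign(v) * np.sqrt(np.abs(v))
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else v


if __name__ == "__main__":
    codebook = np.array([[0.0, 0.0], [10.0, 10.0]])
    local = np.array([[1.0, 0.0], [0.0, 1.0], [10.0, 12.0]])
    print(vlad(local, codebook))
```

With a codebook of 256 centroids and 128-dimensional descriptors, as in the text, the output has 256 × 128 = 32,768 dimensions before any PCA reduction.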

$\mathbf{SMK^{\star}}$. The binarized version of the Selective Match Kernel [46] $(\mathbf{SMK}^{\star})$, a simple extension of the Hamming Embedding [16] (HE) technique, uses an inverted file structure to separately index binarized residual vectors while it performs the matching with a selective monomial kernel function. The codebook size is 65,536 in our experiments, while burstiness normalization [17] is always used. Multiple assignment to three nearest words is used on the query side, while the Hamming distance threshold is set to 52 out of 128 bits. The rest are the default parameters.

$\mathbf{SMK^{\star}}$。选择性匹配核[46]的二值化版本$(\mathbf{S}\mathbf{M}\mathbf{K}^{\star})$ )是汉明嵌入[16] (HE) 技术的简单扩展,它使用倒排文件结构分别索引二值化残差向量,同时通过选择性单项核函数进行匹配。我们的实验中码本大小为65,536,且始终使用突发性归一化[17]。查询端采用三近邻词多重分配策略,汉明距离阈值设置为128位中的52位。其余参数保持默认值。
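To make the role of the Hamming distance threshold concrete, the sketch below scores a pair of 128-bit codes that fall in the same visual word. It is only an illustration: the function names are hypothetical, the mapping from Hamming distance $h$ to similarity $1 - 2h/128$ and the monomial exponent `ALPHA = 3.0` are assumptions not given in the text, and inverted-file indexing, multiple assignment, and burstiness normalization are omitted.

```python
import numpy as np

BITS = 128               # length of the binarized residual codes
HAMMING_THRESHOLD = 52   # threshold stated in the text
ALPHA = 3.0              # hypothetical monomial exponent, not given in the text


def hamming(a, b):
    """Hamming distance between two codes packed as uint8 arrays of BITS // 8 bytes."""
    return int(np.unpackbits(np.bitwise_xor(a, b)).sum())


def selective_similarity(a, b):
    """Selective monomial score for two codes assigned to the same visual word."""
    h = hamming(a, b)
    if h > HAMMING_THRESHOLD:
        return 0.0  # the match is discarded as unreliable
    u = 1.0 - 2.0 * h / BITS  # assumed similarity in [-1, 1]
    return u ** ALPHA


if __name__ == "__main__":
    code = np.zeros(BITS // 8, dtype=np.uint8)
    near = code.copy()
    near[0] = 0xFF   # flips 8 bits
    far = code.copy()
    far[:7] = 0xFF   # flips 56 bits, above the threshold
    print(selective_similarity(code, near), selective_similarity(code, far))
```

Under these assumptions the threshold of 52 bits corresponds to a similarity of about 0.19, so only pairs that agree on a clear majority of bits contribute to the image score.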

$\mathbf{ASMK^{\star}}$. The binarized version of the Aggregated Selective Match Kernel [46] $(\mathbf{ASMK}^{\star})$ is an extension of $\mathrm{SMK^{\star}}$ that jointly encodes local descriptors that are assigned to the same visual word and handles the burstiness phenomenon. The same parametrization as for $\mathrm{SMK^{\star}}$ is used.

$\mathbf{ASMK^{\star}}$。聚合选择性匹配核 [46] 的二进制版本 $(\mathbf{ASMK}^{\star})$ ) 是 $\mathrm{SMK^{\star}}$ 的扩展,它联合编码分配到同一视觉词的局部描述符,并处理突发性现象。参数化方式与 $\mathrm{SMK^{\star}}$ 相同。

SP. Spatial verification (SP) is known to be crucial for particular object retrieval [33] and is performed with the RANSAC algorithm [8]. It is applied on the 100 top-ranked images, as these are formed by a first filtering step, e.g. the $\mathrm{SMK^{\star}}$ or ${\mathrm{ASMK}}^{\star}$ method. Its result is the number of inlier correspondences, which is one of the most intuitive similarity measures and makes it possible to detect true positive images. To consider an image spatially verified, we require 5 inliers with ${\mathrm{ASMK}}^{\star}$ and 10 with other methods.

SP. 空间验证 (SP) 对特定物体检索至关重要 [33],通常通过 RANSAC 算法 [8] 实现。该步骤作用于前 100 张候选图像(由 $\mathrm{SMK^{\star}}$ 或 ${\mathrm{ASMK}}^{\star}$ 等方法初步筛选得出),其输出结果为内点匹配数——这是最直观的相似度度量之一,可有效识别真阳性图像。我们设定空间验证通过标准为:使用 ${\mathrm{ASMK}}^{\star}$ 时需至少 5 个内点,其他方法需 10 个内点。

HQE. Query expansion (QE), firstly introduced by Chum et al. [7] in the visual domain, typically uses spatial verification to select true positive among the top retrieved result and issues an enhanced query including the verified images. Hamming Query Expans