A Partition Filter Network for Joint Entity and Relation Extraction

Abstract

In joint entity and relation extraction, existing work either encodes task-specific features sequentially, leading to an imbalance in inter-task feature interaction where features extracted later have no direct contact with those extracted first, or encodes entity features and relation features in parallel, meaning that feature representation learning for each task is largely independent of the other except for input sharing. We propose a partition filter network to model two-way interaction between tasks properly, where feature encoding is decomposed into two steps: partition and filter. In our encoder, we leverage two gates, an entity gate and a relation gate, to segment neurons into two task partitions and one shared partition. The shared partition represents inter-task information valuable to both tasks and is evenly shared across the two tasks to ensure proper two-way interaction. The task partitions represent intra-task information and are formed through the concerted efforts of both gates, making the encoding of task-specific features mutually dependent. Experimental results on six public datasets show that our model performs significantly better than previous approaches. In addition, contrary to what previous work has claimed, our auxiliary experiments suggest that relation prediction contributes to named entity prediction in a non-negligible way. The source code can be found at https://github.com/Coopercoppers/PFN.

1 Introduction

Joint entity and relation extraction aims to simultaneously extract entities and relations from a given text to form relational triples $(s, r, o)$. The extracted information supports many downstream tasks, such as knowledge graph construction (Riedel et al., 2013), question answering (Diefenbach et al., 2018) and text summarization (Gupta and Lehal, 2010).

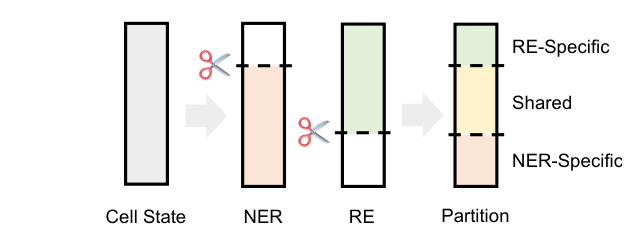

Figure 1: Partition process of cell neurons. The entity and relation gates divide neurons into task-related and task-unrelated ones. Neurons related to both tasks form the shared partition, while the rest form the two task partitions.

Conventionally, Named Entity Recognition (NER) and Relation Extraction (RE) are performed in a pipelined manner (Zelenko et al., 2002; Chan and Roth, 2011). These approaches are flawed in that they ignore the intimate connection between NER and RE, and they also suffer from error propagation. To overcome these issues, joint extraction of entities and relations was proposed and has demonstrated stronger performance on both tasks. In early work, joint methods mainly relied on elaborate feature engineering to establish interaction between NER and RE (Yu and Lam, 2010; Li and Ji, 2014; Miwa and Sasaki, 2014). More recently, end-to-end neural networks have proven successful in extracting relational triples (Zeng et al., 2014; Gupta et al., 2016; Katiyar and Cardie, 2017; Shen et al., 2021) and have since become the mainstream approach to joint entity and relation extraction.

According to how they encode task-specific features, most existing methods fall into two categories: sequential encoding and parallel encoding. In sequential encoding, task-specific features are generated one after another, so features extracted first are not affected by those extracted later. Zeng et al. (2018) and Wei et al. (2020) are typical examples of this category: their methods extract features for different tasks in a predefined order. In parallel encoding, task-specific features are generated independently from a shared input. Compared with sequential encoding, models built on this scheme need not worry about the implications of encoding order. For example, Fu et al. (2019) encode entity and relation information separately using common features derived from their GCN encoder. Since both sets of task-specific features are extracted through isolated sub-modules, this approach falls into the category of parallel encoding.

However, neither encoding design models two-way interaction between the NER and RE tasks properly. In sequential encoding, interaction is unidirectional and follows a fixed order, so the NER and RE tasks are exposed to different amounts of information. In parallel encoding, although encoding order is no longer a concern, interaction exists only through input sharing. To introduce two-way interaction into feature encoding, we adopt an alternative design: joint encoding. This design encodes task-specific features jointly with a single encoder that contains a mutual section for inter-task communication.

In this work, we instantiate joint encoding with a partition filter encoder. Our encoder first sorts and partitions each neuron according to its contribution to the individual tasks, using the entity and relation gates. This process forms two task partitions and one shared partition (see Figure 1). Each task partition is then combined with the shared partition to generate task-specific features, filtering out irrelevant information stored in the opposite task partition.

Task interaction in our encoder is achieved in two ways. First, the partitions, especially the task-specific ones, are formed through the concerted efforts of the entity and relation gates, so the formation of the entity and relation features determined by these partitions is interdependent. Second, the shared partition, which represents information useful to both tasks, is equally accessible when forming both sets of task-specific features, ensuring balanced two-way interaction. The contributions of our work are summarized below:

- We propose the partition filter network, a framework designed specifically for joint encoding. This method encodes task-specific features and guarantees proper two-way interaction between NER and RE.

- We conduct extensive experiments on six datasets. The main results show that our method is superior to other baseline approaches, and the ablation study provides insight into what works best for our framework.

- Contrary to what previous work has claimed, our auxiliary experiments suggest that relation prediction is contributory to named entity prediction in a non-negligible way.

2 Related Work

In recent years, joint entity and relation extraction approaches have focused on tackling the triple overlapping problem and modelling task interaction. Solutions to these issues have been explored in recent work (Zheng et al., 2017; Zeng et al., 2018, 2019; Fu et al., 2019; Wei et al., 2020). The triple overlapping problem refers to triples sharing the same entity (SEO, i.e. Single Entity Overlap) or the same entity pair (EPO, i.e. Entity Pair Overlap). For example, in "Adam and Joe were born in the USA", the triples (Adam, birthplace, USA) and (Joe, birthplace, USA) share only one entity, "USA", so they are SEO triples; in "Adam was born in the USA and lived there ever since", the triples (Adam, birthplace, USA) and (Adam, residence, USA) share both entities, so they are EPO triples. Generally, there are two ways of tackling the problem. One is through generative methods such as seq2seq (Zeng et al., 2018, 2019), where entity and relation mentions can be decoded multiple times in the output sequence; the other is to model each relation separately with sequences (Wei et al., 2020), graphs (Fu et al., 2019) or tables (Wang and Lu, 2020). Our method uses relation-specific tables (Miwa and Sasaki, 2014) to handle each relation separately.

Task interaction modeling, however, has not been well handled by most previous work. In some previous approaches, task interaction is achieved by having entity and relation prediction share the same features (Tran and Kavuluru, 2019; Wang et al., 2020b). This can be problematic, as information about entities and relations can sometimes be contradictory. Moreover, models that use sequential encoding (Bekoulis et al., 2018b; Eberts and Ulges, 2019; Wei et al., 2020) or parallel encoding (Fu et al., 2019) lack proper two-way interaction in feature extraction, so predictions made on these features suffer from improper interaction. In our work, the partition filter encoder is built on joint encoding and handles the communication of inter-task information more appropriately, avoiding the problems of sequential and parallel encoding (exposure bias and insufficient interaction), while keeping intra-task information away from the opposite task to mitigate negative transfer between the tasks.

3 Problem Formulation

Our framework splits joint entity and relation extraction into two sub-tasks: NER and RE. Formally, given an input sequence $s=\{w_{1},\dots,w_{L}\}$ with $L$ tokens, $w_{i}$ denotes the $i$-th token in $s$. For NER, we aim to extract all typed entities, whose set is denoted as $S$, where $\langle w_{i},e,w_{j}\rangle\in S$ signifies that tokens $w_{i}$ and $w_{j}$ are the start and end tokens of an entity of type $e\in\mathcal{E}$, and $\mathcal{E}$ is the set of entity types. For RE, the goal is to identify all head-only triples, whose set is denoted as $T$: each triple $\langle w_{i},r,w_{j}\rangle\in T$ indicates that tokens $w_{i}$ and $w_{j}$ are the start tokens of the subject and object entities with relation $r\in\mathcal{R}$, where $\mathcal{R}$ is the set of relation types. Combining the results of NER and RE, we can extract relational triples with complete entity spans.
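To make the two target sets concrete, the sketch below converts fully annotated triples into the NER set $S$ and the head-only RE set $T$. It is a minimal illustration under our own assumptions: the helper name `to_targets`, the inclusive token indices and the entity types `PER`/`LOC` are hypothetical and not part of the original formulation.

```python
from typing import List, Set, Tuple

# Hypothetical representation: a span is (start, end, entity_type), end inclusive.
Span = Tuple[int, int, str]
FullTriple = Tuple[Span, str, Span]  # (subject span, relation, object span)


def to_targets(triples: List[FullTriple]) -> Tuple[Set[Tuple[int, str, int]], Set[Tuple[int, str, int]]]:
    """Split full triples into the NER target set S and the head-only RE set T."""
    S: Set[Tuple[int, str, int]] = set()  # <w_i, e, w_j>: start token, entity type, end token
    T: Set[Tuple[int, str, int]] = set()  # <w_i, r, w_j>: subject start, relation, object start
    for (s_start, s_end, s_type), rel, (o_start, o_end, o_type) in triples:
        S.add((s_start, s_type, s_end))
        S.add((o_start, o_type, o_end))
        T.add((s_start, rel, o_start))  # RE keeps only the start token of each entity
    return S, T


# Tokens: Adam(0) was(1) born(2) in(3) New(4) York(5)
S, T = to_targets([((0, 0, "PER"), "birthplace", (4, 5, "LOC"))])
print(sorted(S))  # [(0, 'PER', 0), (4, 'LOC', 5)]
print(sorted(T))  # [(0, 'birthplace', 4)]
```

Because $T$ stores only start tokens, the end of the object span "New York" can only be recovered from $S$, which is why the NER and RE results have to be combined to obtain complete triples.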

4 Model

This section describes our model design. Our model consists of a partition filter encoder and two task units, namely the NER unit and the RE unit. The partition filter encoder generates task-specific features, which are sent to the task units as input for entity and relation prediction. We discuss each component in detail in the following three sub-sections.

4.1 Partition Filter Encoder

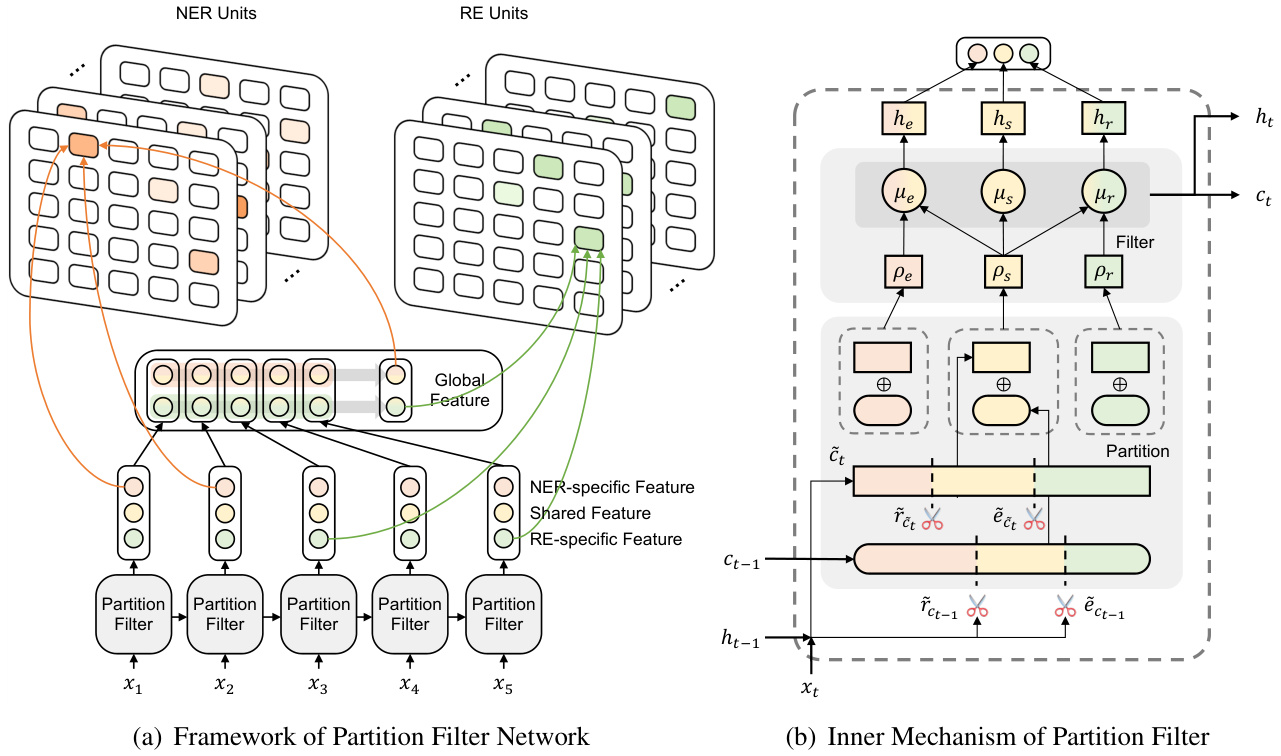

Similar to an LSTM, the partition filter encoder is a recurrent feature encoder that stores information in intermediate memories. At each time step, the encoder first divides neurons into three partitions: the entity partition, the relation partition and the shared partition. It then generates task-specific features by selecting and combining these partitions, filtering out information irrelevant to each task. As shown in Figure 2, this module is designed specifically to extract task-specific features jointly, and it strictly follows two steps: partition and filter.

Partition. This step divides cell neurons into three partitions: two task partitions storing intra-task information, namely the entity partition and the relation partition, and one shared partition storing inter-task information. The neurons to be divided are those of the candidate cell $\tilde{c}_{t}$, representing current information, and of the previous cell $c_{t-1}$, representing history information. $c_{t-1}$ is the direct input from the last time step, and $\tilde{c}_{t}$ is calculated in the same manner as in an LSTM:

$$
\tilde{c}_{t}=\tanh(\operatorname{Linear}([x_{t};h_{t-1}]))
$$

where Linear stands for the operation of linear transformation.

For neuron partition we leverage an entity gate $\tilde{e}$ and a relation gate $\tilde{r}$, which Shen et al. (2019) refer to as master gates. As illustrated in Figure 1, each gate represents one specific task and divides neurons into two segments according to their usefulness to that task. For example, the entity gate $\tilde{e}$ separates neurons into two partitions: NER-related and NER-unrelated. The shared partition is formed by combining the partition results of both gates, and neurons in it can be regarded as information valuable to both tasks. To model two-way interaction properly, inter-task information in the shared partition is equally accessible to both tasks (discussed in the filter step below). In addition, information valuable to only one task is invisible to the opposite task and is stored in the corresponding task partition. The gates are computed with the cummax activation function $\mathrm{cummax}(\cdot)=\mathrm{cumsum}(\mathrm{softmax}(\cdot))$, whose output can be seen as an approximation of a binary gate of the form $(0,\ldots,0,1,\ldots,1)$:

$$
\begin{aligned}
\tilde{e}&=\mathrm{cummax}(\operatorname{Linear}([x_{t};h_{t-1}]))\\
\tilde{r}&=1-\mathrm{cummax}(\operatorname{Linear}([x_{t};h_{t-1}]))
\end{aligned}
$$
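A minimal plain-Python sketch of the cummax activation and the two gates follows. The logits are made up and stand in for the two $\operatorname{Linear}([x_t;h_{t-1}])$ outputs; giving each gate its own logits reflects our assumption that the two linear layers have separate parameters.

```python
import math
from typing import List


def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [v / total for v in exps]


def cummax(z: List[float]) -> List[float]:
    """cummax(z) = cumsum(softmax(z)): non-decreasing values in (0, 1], ending at 1."""
    out, running = [], 0.0
    for p in softmax(z):
        running += p
        out.append(running)
    return out


# Made-up logits standing in for Linear([x_t; h_{t-1}]); we assume each gate has
# its own linear layer, so each gets its own logits.
e_logits = [-2.0, -1.0, 4.0, 0.5, -3.0, -2.5]
r_logits = [-1.0, -2.0, -3.0, 4.0, -2.0, -1.0]

e_gate = cummax(e_logits)                          # roughly (0, 0, 1, 1, 1, 1)
r_gate = [1.0 - v for v in cummax(r_logits)]       # roughly (1, 1, 1, 0, 0, 0)
shared = [e * r for e, r in zip(e_gate, r_gate)]   # large only where both gates are on
print([round(v, 2) for v in e_gate])
print([round(v, 2) for v in r_gate])
print([round(v, 2) for v in shared])
```

The position of the largest logit acts as a soft cut-off: $\tilde{e}$ switches on after its cut-off and $\tilde{r}$ switches off after its own, so here only neuron 2 is claimed by both gates.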

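The intuition behind the gate equations above is to identify two cut-off points, displayed as scissors in Figure 2, which naturally divide a set of neurons into three segments. Since $\tilde{e}$ rises from 0 to 1 and $\tilde{r}$ falls from 1 to 0 along the neuron dimension, neurons on which both gates are close to 1 form the shared partition, while neurons claimed by only one gate form that gate's task partition.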

As a result, the gates divide neurons into three partitions: the entity partition $\rho_{e}$, the relation partition $\rho_{r}$ and the shared partition $\rho_{s}$. The partitions for the previous cell $c_{t-1}$ are formulated as below (the partitions for the candidate cell $\tilde{c}_{t}$ are computed in the same way):

Figure 2: (a) Overview of PFN. The framework consists of three components: the partition filter encoder, the NER unit and the RE unit. In the task units, we use table filling for word-pair prediction. Orange, yellow and green represent NER-related, shared and RE-related components or features, respectively. (b) Detailed depiction of the partition filter encoder at a single time step. We decompose feature encoding into two steps: partition and filter (shown in the gray area). In partition, we first segment neurons into two task partitions and one shared partition. Then, in filter, partitions are selected and combined to form task-specific features and shared features, filtering out information irrelevant to each task.

$$
\begin{aligned}
\rho_{s,c_{t-1}}&=\tilde{e}_{c_{t-1}}\circ\tilde{r}_{c_{t-1}}\\
\rho_{e,c_{t-1}}&=\tilde{e}_{c_{t-1}}-\rho_{s,c_{t-1}}\\
\rho_{r,c_{t-1}}&=\tilde{r}_{c_{t-1}}-\rho_{s,c_{t-1}}
\end{aligned}
$$

Note that the three partitions do not, in general, sum to one. This ensures that some information is discarded during forward message passing, so the message is not overloaded, similar to the forgetting mechanism of an LSTM.
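A short derivation (our own addition, using only the partition equations above and dropping the cell subscript for brevity) makes the discarded share explicit:

$$
\begin{aligned}
\rho_{s}+\rho_{e}+\rho_{r}
&=\tilde{e}\circ\tilde{r}+(\tilde{e}-\tilde{e}\circ\tilde{r})+(\tilde{r}-\tilde{e}\circ\tilde{r})\\
&=\tilde{e}+\tilde{r}-\tilde{e}\circ\tilde{r}\\
&=\mathbf{1}-(\mathbf{1}-\tilde{e})\circ(\mathbf{1}-\tilde{r})
\end{aligned}
$$

Since both gates lie in $[0,1]$, the sum never exceeds 1, and the missing amount $(\mathbf{1}-\tilde{e})\circ(\mathbf{1}-\tilde{r})$ is largest for neurons that neither gate claims. For example, with $\tilde{e}=0.2$ and $\tilde{r}=0.3$ on one neuron, the three partitions sum to $0.44$, so $0.56$ of that neuron is discarded.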

Next, we aggregate the partition information from both target cells, $c_{t-1}$ and $\tilde{c}_{t}$, to form the three final partitions. For each partition, we add up the related information from both cells:

$$
\begin{aligned}
\rho_{e}&=\rho_{e,c_{t-1}}\circ c_{t-1}+\rho_{e,\tilde{c}_{t}}\circ\tilde{c}_{t}\\
\rho_{r}&=\rho_{r,c_{t-1}}\circ c_{t-1}+\rho_{r,\tilde{c}_{t}}\circ\tilde{c}_{t}\\
\rho_{s}&=\rho_{s,c_{t-1}}\circ c_{t-1}+\rho_{s,\tilde{c}_{t}}\circ\tilde{c}_{t}
\end{aligned}
$$
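The partition and aggregation equations above can be sketched element-wise in plain Python. This is a minimal illustration, not the released implementation; the helper names `partition` and `aggregate` and the near-binary gate values are hypothetical.

```python
from typing import List, Tuple

Vec = List[float]
Parts = Tuple[Vec, Vec, Vec]  # (rho_e, rho_r, rho_s)


def partition(e_gate: Vec, r_gate: Vec) -> Parts:
    """Partition weights for one cell: entity, relation and shared."""
    rho_s = [e * r for e, r in zip(e_gate, r_gate)]  # shared = e ∘ r
    rho_e = [e - s for e, s in zip(e_gate, rho_s)]   # entity = e - shared
    rho_r = [r - s for r, s in zip(r_gate, rho_s)]   # relation = r - shared
    return rho_e, rho_r, rho_s


def aggregate(parts_prev: Parts, parts_cand: Parts, c_prev: Vec, c_cand: Vec) -> Parts:
    """rho_x = rho_{x,c_{t-1}} ∘ c_{t-1} + rho_{x,c~_t} ∘ c~_t for x in (e, r, s)."""
    rho_e, rho_r, rho_s = (
        [wp * cp + wc * cc for wp, cp, wc, cc in zip(pp, c_prev, pc, c_cand)]
        for pp, pc in zip(parts_prev, parts_cand)
    )
    return rho_e, rho_r, rho_s


# Near-binary gates over 5 neurons (hypothetical values).
prev = partition([0.0, 0.0, 1.0, 1.0, 1.0], [1.0, 1.0, 1.0, 0.0, 0.0])
cand = partition([0.0, 1.0, 1.0, 1.0, 1.0], [1.0, 1.0, 0.0, 0.0, 0.0])
print(prev)  # ([0.0, 0.0, 0.0, 1.0, 1.0], [1.0, 1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0, 0.0])
rho_e, rho_r, rho_s = aggregate(prev, cand, c_prev=[0.5] * 5, c_cand=[-0.2] * 5)
```

With hard 0/1 gates each neuron of each cell lands in exactly one partition (or none), while soft gates let a neuron contribute fractionally to several partitions.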

Filter. We propose three types of memory blocks: entity memory $\mu_{e}$, relation memory $\mu_{r}$ and shared memory $\mu_{s}$. In $\mu_{e}$, information in the entity partition and the shared partition is selected, whereas information in the relation partition, which we assume is irrelevant or even harmful to the NER task, is filtered out. The same logic applies to $\mu_{r}$, where information in the entity partition is filtered out and the rest is kept. In addition, information in the shared partition is stored in $\mu_{s}$:

$$
\mu_{e}=\rho_{e}+\rho_{s};\quad\mu_{r}=\rho_{r}+\rho_{s};\quad\mu_{s}=\rho_{s}
$$

Note that inter-task information in the shared partition is accessible to both the entity memory and the relation memory, allowing balanced interaction between NER and RE. In contrast, in sequential and parallel encoding, relation features have no direct impact on the formation of entity features.

After the memories are updated, entity features $h_{e}$, relation features $h_{r}$ and shared features $h_{s}$ are generated from the corresponding memories:

$$
\begin{aligned}
h_{e}&=\tanh(\mu_{e})\\
h_{r}&=\tanh(\mu_{r})\\
h_{s}&=\tanh(\mu_{s})
\end{aligned}
$$

Following the partition and filter steps, information in all three memories is used to form the cell state $c_{t}$, which is then used to generate the hidden state $h_{t}$ (the hidden and cell states at time step $t$ are input to the next time step):

$$
\begin{aligned}
c_{t}&=\operatorname{Linear}([\mu_{e,t};\mu_{r,t};\mu_{s,t}])\\
h_{t}&=\tanh(c_{t})
\end{aligned}
$$
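Putting the partition and filter steps together, one encoder time step might be sketched as follows in plain Python. This is not the released PFN code: the name `PFNCell`, the random initialisation, the sizes and the assumption that each of the four gates ($\tilde{e}$ and $\tilde{r}$ for both $c_{t-1}$ and $\tilde{c}_t$) has its own linear layer are ours.

```python
import math
import random
from typing import List, Tuple

Vec = List[float]
Mat = List[List[float]]


def linear(W: Mat, b: Vec, x: Vec) -> Vec:
    return [sum(w * v for w, v in zip(row, x)) + bias for row, bias in zip(W, b)]


def init(out_dim: int, in_dim: int, rng: random.Random) -> Tuple[Mat, Vec]:
    W = [[rng.uniform(-0.5, 0.5) for _ in range(in_dim)] for _ in range(out_dim)]
    return W, [0.0] * out_dim


def tanh(v: Vec) -> Vec:
    return [math.tanh(a) for a in v]


def cummax(z: Vec) -> Vec:
    m = max(z)
    exps = [math.exp(a - m) for a in z]
    total, running, out = sum(exps), 0.0, []
    for e in exps:
        running += e / total
        out.append(running)
    return out


def split(e_gate: Vec, r_gate: Vec) -> Tuple[Vec, Vec, Vec]:
    """(rho_e, rho_r, rho_s) partition weights for one cell."""
    shared = [e * r for e, r in zip(e_gate, r_gate)]
    return ([e - s for e, s in zip(e_gate, shared)],
            [r - s for r, s in zip(r_gate, shared)],
            shared)


class PFNCell:
    """One partition filter step with random weights (illustrative sketch only)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0):
        rng = random.Random(seed)
        d = input_size + hidden_size
        self.cand = init(hidden_size, d, rng)                       # candidate cell layer
        self.gates = [init(hidden_size, d, rng) for _ in range(4)]  # assumed: one layer per gate
        self.out = init(hidden_size, 3 * hidden_size, rng)          # Linear([mu_e; mu_r; mu_s])

    def step(self, x: Vec, h_prev: Vec, c_prev: Vec):
        xh = x + h_prev                                   # [x_t; h_{t-1}] (list concatenation)
        c_cand = tanh(linear(*self.cand, xh))             # candidate cell c~_t
        e_prev, r_prev, e_cand, r_cand = [cummax(linear(*g, xh)) for g in self.gates]
        r_prev = [1.0 - v for v in r_prev]                # relation gates use 1 - cummax
        r_cand = [1.0 - v for v in r_cand]
        parts_prev, parts_cand = split(e_prev, r_prev), split(e_cand, r_cand)
        # Partition: aggregate entity/relation/shared information from c_{t-1} and c~_t.
        rho_e, rho_r, rho_s = (
            [wp * cp + wc * cc for wp, cp, wc, cc in zip(pp, c_prev, pc, c_cand)]
            for pp, pc in zip(parts_prev, parts_cand))
        # Filter: each task memory keeps its own partition plus the shared one.
        mu_e = [a + s for a, s in zip(rho_e, rho_s)]
        mu_r = [a + s for a, s in zip(rho_r, rho_s)]
        mu_s = rho_s
        c_t = linear(*self.out, mu_e + mu_r + mu_s)
        h_t = tanh(c_t)
        return h_t, c_t, (tanh(mu_e), tanh(mu_r), tanh(mu_s))


cell = PFNCell(input_size=4, hidden_size=6)
h, c = [0.0] * 6, [0.0] * 6
features = []
for x_t in ([0.1, 0.2, 0.3, 0.4], [0.5, -0.1, 0.0, 0.2], [-0.3, 0.4, 0.1, 0.0]):
    h, c, (h_e, h_r, h_s) = cell.step(x_t, h, c)
    features.append((h_e, h_r, h_s))
```

Each step returns the recurrent state $(h_t, c_t)$ together with the per-token features $h_e$, $h_r$ and $h_s$ that the global representation and task units consume.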

4.2 Global Representation

In our model, we employ a unidirectional encoder for feature encoding. The backward encoder of the bidirectional setting is replaced with a task-specific global representation that captures the semantics of future context; empirically, this proves more effective. For each task, the global representation combines task-specific features and shared features and is computed by:

$$
\begin{aligned}
h_{g_{e},t}&=\tanh(\operatorname{Linear}([h_{e,t};h_{s,t}]))\\
h_{g_{r},t}&=\tanh(\operatorname{Linear}([h_{r,t};h_{s,t}]))\\
h_{g_{e}}&=\operatorname{maxpool}(h_{g_{e},1},\dots,h_{g_{e},L})\\
h_{g_{r}}&=\operatorname{maxpool}(h_{g_{r},1},\dots,h_{g_{r},L})
\end{aligned}
$$
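As a small illustration of the global representation, the sketch below applies $\tanh(\operatorname{Linear}([h_{e,t};h_{s,t}]))$ at every step and then max-pools element-wise over time. The feature values and the hand-picked weight matrix `W` are hypothetical and chosen only so the pooling is easy to follow.

```python
import math
from typing import List

Vec = List[float]


def linear(W: List[Vec], b: Vec, x: Vec) -> Vec:
    return [sum(w * v for w, v in zip(row, x)) + bias for row, bias in zip(W, b)]


def global_representation(h_task: List[Vec], h_shared: List[Vec], W: List[Vec], b: Vec) -> Vec:
    """tanh(Linear([h_task_t; h_shared_t])) at every step, then element-wise max over t."""
    per_step = [[math.tanh(v) for v in linear(W, b, ht + hs)] for ht, hs in zip(h_task, h_shared)]
    return [max(column) for column in zip(*per_step)]


# Toy sequence of L = 3 steps with 2-dimensional task and shared features.
h_e = [[0.1, 0.2], [0.5, -0.3], [-0.2, 0.0]]
h_s = [[0.0, 0.4], [0.1, 0.1], [0.3, -0.6]]
# Hypothetical weights that copy h_e[0] and h_s[1] so the max-pool is easy to follow.
W = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
h_g_e = global_representation(h_e, h_s, W, [0.0, 0.0])
print([round(v, 4) for v in h_g_e])  # [0.4621, 0.3799]
```

Each output dimension takes its maximum from whichever step is most active, so the pooled vector summarizes the whole sentence, including tokens to the right of any given position.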

4.3 Task Units

Our model consists of two task units: the NER unit and the RE unit. In the NER unit, the objective is to identify and categorize all entity spans in a given sentence. More specifically, the task is treated as a type-specific table-filling problem. Given an entity type set $\mathcal{E}$, for each type $k$ we fill out a table whose element $e_{ij}^{k}$ represents the probability of words $w_{i}$ and $w_{j}$ being the start and end positions of an entity of type $k$. For each word pair $(w_{i},w_{j})$, we concatenate the word-level entity features $h_{i}^{e}$ and $h_{j}^{e}$ with the sentence-level global features $h_{g_{e}}$ and feed the result into a fully-connected layer with ELU activation to obtain the entity span representation $h_{ij}^{e}$:

$$
h_{ij}^{e}=\mathrm{ELU}(\operatorname{Linear}([h_{i}^{e};h_{j}^{e};h_{g_{e}}]))
$$

With the span representation, we predict whether the span is an entity of type $k$ by feeding it into a feed-forward layer:

$$
\begin{aligned}
e_{ij}^{k}&=p\big(e=\langle w_{i},k,w_{j}\rangle \mid e\in S\big)\\
&=\sigma\big(\operatorname{Linear}(h_{ij}^{e})\big),\quad\forall k\in\mathcal{E}
\end{aligned}
$$

where $\sigma$ represents the sigmoid activation function.
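A plain-Python sketch of type-specific table filling follows: for every word pair $(i, j)$ it computes $\sigma(\operatorname{Linear}(\mathrm{ELU}(\operatorname{Linear}([h_i;h_j;h_g]))))$, giving one probability per type. The sizes, random weights and helper names are hypothetical. The sketch fills all $L\times L$ pairs; whether pairs with $j<i$ are masked is not stated in the text. The RE unit can reuse the same function with relation features $h^{r}$, the global feature $h_{g_r}$ and $|\mathcal{R}|$ outputs, reading $(i, j)$ as subject and object start tokens.

```python
import math
import random
from typing import List, Tuple

Vec = List[float]
Layer = Tuple[List[Vec], Vec]  # (weights, bias)


def linear(W: List[Vec], b: Vec, x: Vec) -> Vec:
    return [sum(w * v for w, v in zip(row, x)) + bias for row, bias in zip(W, b)]


def elu(v: Vec, alpha: float = 1.0) -> Vec:
    return [a if a > 0 else alpha * (math.exp(a) - 1.0) for a in v]


def sigmoid(v: Vec) -> Vec:
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


def fill_table(h: List[Vec], h_g: Vec, span_layer: Layer, score_layer: Layer) -> List[List[Vec]]:
    """table[i][j][k] = sigma(Linear(ELU(Linear([h_i; h_j; h_g]))))[k] for all pairs (i, j)."""
    L = len(h)
    return [[sigmoid(linear(*score_layer, elu(linear(*span_layer, h[i] + h[j] + h_g))))
             for j in range(L)] for i in range(L)]


def rand_matrix(rng: random.Random, rows: int, cols: int) -> List[Vec]:
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d, d_span, num_types, L = 3, 4, 2, 5                  # hypothetical sizes; num_types = |E|
span_layer = (rand_matrix(rng, d_span, 3 * d), [0.0] * d_span)
score_layer = (rand_matrix(rng, num_types, d_span), [0.0] * num_types)
h_ent = rand_matrix(rng, L, d)                        # stands in for word-level features h_i^e
h_g_e = rand_matrix(rng, 1, d)[0]                     # stands in for the global feature h_{g_e}
table = fill_table(h_ent, h_g_e, span_layer, score_layer)
```

The result is an $L\times L\times|\mathcal{E}|$ array of independent sigmoid scores, so one word pair can be labelled with several types and overlapping spans remain representable.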

Computation in the RE unit is mostly symmetrical to that in the NER unit. Given a set of gold relation triples denoted as $T$, this unit aims to identify all triples in the sentence. We only predict the starting word of each entity in this unit, as entity span prediction is already covered by the NER unit. Similar to NER, we treat relation extraction as a relation-specific table-filling problem. Given a relation label set $\mathcal{R}$, for each relation $l\in\mathcal{R}$ we fill out a table whose element $r_{ij}^{l}$ represents the probability of words $w_{i}$ and $w_{j}$ being the starting words of the subject and object entities, respectively. In this way, all triples involving relation $l$ can be extracted with one relation table. For each triple $(w_{i},l,w_{j})$, as in the NER unit, the triple representation $h_{ij}^{r}$ and the relation score $r_{ij}^{l}$ are calculated as follows:

$$
\begin{aligned}
h_{ij}^{r}&=\mathrm{ELU}(\operatorname{Linear}([h_{i}^{r};h_{j}^{r};h_{g_{r}}]))\\
r_{ij}^{l}&=p(r=\langle w_{i},l,w_{j}\rangle \mid r\in T)\\
&=\sigma(\operatorname{Linear}(h_{ij}^{r})),\quad\forall l\in\mathcal{R}
\end{aligned}
$$

4.4 Training and Inference

For a given training dataset, the loss function $L$ that guides the model during training consists of two parts: $L_{n e r}$ for NER unit and $L_{r e}$ for RE unit:

$$
\begin{aligned}
L_{ner}&=\sum_{\hat{e}_{ij}^{k}\in S}\mathrm{BCELoss}(e_{ij}^{k},\hat{e}_{ij}^{k})\\
L_{re}&=\sum_{\hat{r}_{ij}^{l}\in T}\mathrm{BCELoss}(r_{ij}^{l},\hat{r}_{ij}^{l})
\end{aligned}
$$

$\hat{e}_{ij}^{k}$ and $\hat{r}_{ij}^{l}$ are the ground-truth labels of the entity table and the relation table, respectively, and $e_{ij}^{k}$ and $r_{ij}^{l}$ are the predicted ones. We adopt BCELoss for each task. The training objective is to minimize the loss function $L=L_{ner}+L_{re}$.
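A minimal sketch of the summed binary cross-entropy objective, with tables given as nested lists indexed `[i][j][k]`. Here we assume the sum covers every cell of each table (both positive and negative labels); the clamping constant `eps` and the toy tables are our own choices.

```python
import math
from typing import List, Union

Table = Union[float, List["Table"]]


def flatten(table: Table) -> List[float]:
    if isinstance(table, (int, float)):
        return [float(table)]
    return [v for sub in table for v in flatten(sub)]


def bce_sum(pred: Table, gold: Table, eps: float = 1e-7) -> float:
    """Summed binary cross-entropy over every cell of a (nested) table."""
    total = 0.0
    for p, y in zip(flatten(pred), flatten(gold)):
        p = min(max(p, eps), 1.0 - eps)  # clamp to keep log() finite
        total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total


# Toy 2x2 tables with one entity type and one relation type: [i][j][k].
ner_pred = [[[0.9], [0.2]], [[0.1], [0.8]]]
ner_gold = [[[1], [0]], [[0], [1]]]
re_pred = [[[0.5], [0.5]], [[0.5], [0.5]]]
re_gold = [[[0], [1]], [[0], [0]]]
loss = bce_sum(ner_pred, ner_gold) + bce_sum(re_pred, re_gold)  # L = L_ner + L_re
```

An uninformative prediction of 0.5 costs $\ln 2$ per cell regardless of the label, which is why the RE table contributes $4\ln 2$ here while the mostly correct NER table contributes much less.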

During inference, we extract relational triples by combining the results of the NER and RE units. For each legitimate triple prediction $(s_{i,j}^{k},l,o_{m,n}^{k^{\prime}})$, where $l$ is the relation label, $k$ and $k^{\prime}$ are the entity type labels, and $i,j$ and $m,n$ are the start and end indices of the subject entity $s$ and the object entity $o$, respectively, the following conditions should be satisfied:

$$
e_{ij}^{k}\geq\lambda_{e};\quad e_{mn}^{k^{\prime}}\geq\lambda_{e};\quad r_{im}^{l}\geq\lambda_{r}
$$

$\lambda_{e}$ and $\lambda_{r}$ are threshold hyper-parameters for entity and relation prediction, both set to 0.5 without further fine-tuning.
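The three inference conditions can be sketched as a brute-force decoder over the predicted tables. The dictionaries keyed by type or relation name, the helper names and the toy scores are hypothetical; only the thresholding rule comes from the text.

```python
from typing import Dict, List, Tuple

Grid = List[List[float]]
Span = Tuple[int, int, str]  # (start, end, entity type)


def decode(ent: Dict[str, Grid], rel: Dict[str, Grid],
           lam_e: float = 0.5, lam_r: float = 0.5) -> List[Tuple[Span, str, Span]]:
    """Keep (s_{i,j}^k, l, o_{m,n}^{k'}) when e_ij^k, e_mn^k' >= lam_e and r_im^l >= lam_r."""
    spans = [(i, j, k) for k, grid in ent.items()
             for i, row in enumerate(grid) for j, p in enumerate(row) if p >= lam_e]
    triples = []
    for subj in spans:
        for obj in spans:
            for label, grid in rel.items():
                if grid[subj[0]][obj[0]] >= lam_r:  # relation table is indexed by start tokens
                    triples.append((subj, label, obj))
    return triples


def zeros(n: int) -> Grid:
    return [[0.0] * n for _ in range(n)]


# Tokens: Adam(0) was(1) born(2) in(3) New(4) York(5); types and scores are made up.
ent = {"PER": zeros(6), "LOC": zeros(6)}
rel = {"birthplace": zeros(6)}
ent["PER"][0][0] = 0.9  # "Adam"
ent["LOC"][4][5] = 0.8  # "New York"
ent["LOC"][4][4] = 0.3  # "New" alone falls below lam_e and is dropped
rel["birthplace"][0][4] = 0.7
print(decode(ent, rel))  # [((0, 0, 'PER'), 'birthplace', (4, 5, 'LOC'))]
```

The relation table only links start tokens 0 and 4; the full object span (4, 5) comes from the entity table, which is how the head-only RE output and the NER output combine into a complete triple.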

5 Experiment

5.1 Dataset, Evaluation and Implementation Details

We evaluate our model on six datasets: NYT (Riedel et al., 2010), WebNLG (Zeng et al., 2018), ADE (Gurulingappa et al., 2012), SciERC (Luan et al., 2018), ACE04 and ACE05 (Walker et al., 2006). Descriptions of the datasets can be found in Appendix A.

Following previous work, we assess our model on NYT/WebNLG under partial match, where only the tail of an entity is annotated. Besides, as entity type information is not annotated in these datasets, we set the type of all entities to a single label "NONE", so entity types are not predicted by our model. On ACE05, ACE04, ADE and SciERC, we assess our model under exact match, where both the head and the tail of an entity are annotated. For ADE and ACE04, 10-fold and 5-fold cross-validation are used to evaluate the model, respectively, and 15% of the training set is used to construct the development set. For evaluation metrics, we report F1 scores for both NER and RE. In NER, an entity is considered correct only if its type and boundary are correct. In RE, a triple is correct only if the types and boundaries of both entities and their relation type are correct. In addition, we report the Macro-F1 score on ADE and the Micro-F1 score on the other datasets.
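As an illustration of micro-averaged F1 under exact match, the sketch below pools true positives, predictions and gold items over all sentences before computing precision and recall. It is our own minimal version (the Macro-F1 variant used for ADE is not shown), and the triples are hypothetical.

```python
from typing import List, Set, Tuple


def micro_f1(preds: List[Set[tuple]], golds: List[Set[tuple]]) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall and F1 over all sentences (exact set matching)."""
    tp = sum(len(p & g) for p, g in zip(preds, golds))
    n_pred = sum(len(p) for p in preds)
    n_gold = sum(len(g) for g in golds)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Triples carry full boundaries and types, so a single wrong boundary is a miss.
t1 = ((0, 0, "PER"), "birthplace", (4, 5, "LOC"))
t2 = ((2, 2, "PER"), "birthplace", (4, 5, "LOC"))
t3 = ((0, 1, "PER"), "residence", (3, 3, "LOC"))
t3_bad = ((0, 0, "PER"), "residence", (3, 3, "LOC"))  # wrong subject end boundary
golds = [{t1, t2}, {t3}]
preds = [{t1}, {t3_bad}]
precision, recall, f1 = micro_f1(preds, golds)
```

Here one of two predictions is correct and one of three gold triples is found, giving precision 0.5, recall 1/3 and F1 0.4; `t3_bad` counts as wrong even though its relation and object are right.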