Semantic Segmentation-Assisted Instance Feature Fusion for Multi-Level 3D Part Instance Segmentation

Abstract

Recognizing 3D part instances from a 3D point cloud is crucial for 3D structure and scene understanding. Several learning-based approaches use semantic segmentation and instance center prediction as training tasks but fail to further exploit the inherent relationship between shape semantics and part instances. In this paper, we present a new method for 3D part instance segmentation. Our method exploits semantic segmentation to fuse nonlocal instance features, such as center prediction, and further enhances the fusion scheme in a multi- and cross-level way. We also propose a semantic region center prediction task and leverage its predictions to improve the clustering of instance points. Our method outperforms existing methods by a large margin on the PartNet benchmark. We also demonstrate that our feature fusion scheme can be applied to other existing methods to improve their performance in indoor scene instance segmentation tasks.

1. Introduction

3D instance segmentation is the task of distinguishing 3D instances from 3D data at the object or part level and extracting the instance semantics simultaneously [33, 39, 22, 43]. It is essential for various applications, such as remote sensing, autonomous driving, mixed reality, 3D reverse engineering, and robotics. However, it is also a challenging task due to the diverse geometry and irregular distribution of 3D instances. Extracting part-level instances like chair wheels and desk legs is more difficult than segmenting object-level instances like beds and bookshelves, as the shapes of parts vary widely in structure and geometry, while part-annotated data are scarce.

A popular learning-based approach to 3D instance segmentation follows the encoder-decoder paradigm, which predicts pointwise semantic labels and pointwise instance-aware features concurrently [22, 32, 10, 25, 21, 42, 17]. Instance-aware features can be either 3D instance centers, which have a clear geometric and semantic meaning, or feature vectors embedded in a high-dimensional space, where the feature vectors of points within the same instance should be similar and those of points belonging to different instances should be far apart. Instance-aware features are used to group points into 3D instances via suitable clustering algorithms. Point semantics is usually used only in the clustering step. As the point set with the same semantics in a scene is composed of one or multiple 3D instances, it is natural to ask how to exploit this relation fully. The works of [38] and [45] associate semantic features with instance-aware features to improve the learning of both. However, they only fuse instance features with semantic features in a pointwise manner, without using semantics-similar points to provide nonlocal and robust guidance to instance features.

In this study, we leverage the probability vectors of semantic segmentation to help aggregate the instance features of points in an explicit and nonlocal way. We call our approach semantic segmentation-assisted instance feature fusion. The aggregated instance feature combined with the pointwise instance feature provides both global and local guidance to improve instance center prediction robustly, whose accuracy is critical to the final quality of instance clustering. Compared to existing feature fusion schemes [38, 45], our feature fusion strategy is more effective and simpler, as verified by our experiments.

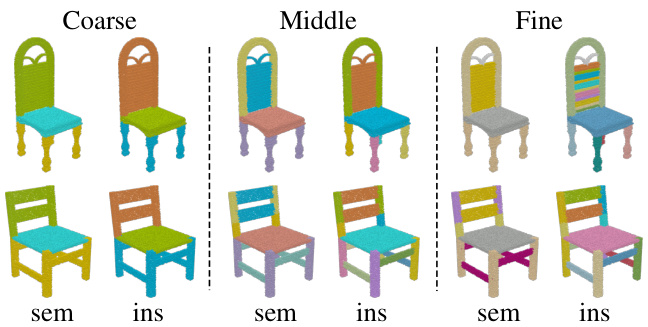

Human-made 3D shapes, such as chairs, are composed of a set of meaningful parts and exhibit hierarchical 3D structures (see Fig. 1). Extracting multi-level part instances from a point cloud is challenging, especially for fine-level 3D instances such as chair wheels. Existing studies perform 3D part instance segmentation on each structural level independently and suffer from insufficient labeled data for some shape categories. By utilizing the hierarchy of shape semantics and part instances, we extend our feature fusion scheme in a multi- and cross-level manner, where the probability feature vectors at all levels are used to aggregate instance features. Furthermore, to better distinguish part instances that are very close to each other, we propose to predict the centers of groups of part instances that share the same semantics, called semantic region centers, and to push the predicted instance centers away from them. On the PartNet dataset [27], in which 3D shapes have three-level semantic part instances, our approach exceeds all existing approaches on part-category mean average precision (mAP, $\mathrm{IoU}>0.5$) by an average margin of $+6.6\%$ over 24 shape categories.

Figure 1: Illustration of 3D models with fine-grained and hierarchical part structures. Models are selected from PartNet [27]. From left to right: part semantics and part instances at the coarse, middle and fine level. Point colors are assigned to distinguish different part semantics and part instances.

Our semantic segmentation-assisted instance feature fusion scheme is simple and lightweight; it is not limited to 3D part instance segmentation and can be extended to 3D instance segmentation for indoor scenes. We integrated our feature fusion scheme into several state-of-the-art 3D instance segmentation frameworks and observed consistent improvements on the ScanNet [8] and S3DIS [2] benchmarks, which demonstrates the efficacy and generality of our approach.

Contributions. We make two contributions to 3D instance segmentation: (1) We propose an instance feature fusion strategy that directly fuses instance features in a nonlocal way under the guidance of semantic segmentation to improve instance center prediction. This strategy is lightweight and easily incorporated into many 3D instance segmentation frameworks for both 3D object and part instance segmentation. (2) Our multi- and cross-level instance feature fusion and the use of the semantic region center are effective for multi-level part instance segmentation and achieve the best performance on the PartNet benchmark. Our code and trained models are publicly available at https://isunchy.github.io/projects/3d_instance_segmentation.html.

2. Related Work

2D instance segmentation. As surveyed by [14], four typical paradigms exist in the literature. The methods in the first paradigm generate mask proposals and then assign suitable shape semantics to the proposals [11, 30, 37]. The second one detects multiple objects using boxes and then extracts object masks within the boxes. Mask R-CNN [16] is one of the representative methods. The third is a bottom-up approach that predicts the semantic label of each pixel and then groups pixels into 2D instances [3]. Its computation is relatively heavy due to per-pixel prediction. The fourth paradigm uses dense sliding-window techniques to generate mask proposals and mask scores for better instance segmentation [9, 5]. For detailed surveys, see [14, 44, 26].

3D instance segmentation. The existing 3D approaches follow the paradigms of 2D instance segmentation (cf. surveys [13, 18]). Proposal-based methods [27, 20] predict a fixed number of instance segmentation masks and match them with the ground truth using the Hungarian algorithm or a trainable assignment module. The learned matching scores are used to group 3D points into instances. Detection-based methods [19, 40, 39, 10] generate high-objectness 3D proposals such as boxes and then refine them to obtain instance masks.

Clustering-based methods first produce per-point predictions and then use clustering methods to group points into instances. SGPN [36] predicts the similarity score of any two points and merges points into instance groups according to the scores. MASC [24] predicts the multiscale affinity between neighboring voxels for instance clustering. Hao et al. [15] regress the instance voxel occupancy for more accurate segmentation outputs. PointGroup [21] uses both the original and offset-shifted point sets to group points into candidate instances. DyCo3D [17] improves PointGroup by introducing a dynamic-convolution-based instance decoder. Observing that non-end-to-end clustering-based methods often exhibit over-segmentation and under-segmentation, Chen et al. [4] and Liang et al. [23] proposed mid-level shape representations to generate instance proposals hierarchically in an end-to-end training manner. Liu et al. [25] approximate the distributions of centers to select center candidates for instance prediction. As mentioned in Section 1, most clustering-based methods treat semantic segmentation and instance feature learning as separate tasks in a multi-task setup; only the works of [38] and [45] fuse the network features of the instance prediction branch and the semantic segmentation branch to improve the performance of both branches. Unlike the pointwise fusion of [38] and [45], our method fuses instance features in a nonlocal manner guided by semantic outputs, which is more robust and effective.

Part instance segmentation. Unlike object-level 3D instance segmentation, part-level 3D instance segmentation is less studied due to limited annotated data and the difficulty posed by shape parts that are similar in geometry but different in semantics. Mo et al. [27] present PartNet, a large-scale dataset of 3D objects with fine-grained, instance-level, and hierarchical part information. For the part instance segmentation task, they developed a detection-by-segmentation method and trained a specific network to extract part instances per structural level, where the semantic hierarchy was used for part instance segmentation. Other object-level instance segmentation methods, such as [17, 42], have also been extended to the task of part instance segmentation, but they do not use the semantic hierarchy. Yu et al. [41] further enriched PartNet with information about the binary hierarchy and designed a recursive neural network to perform recursive binary decomposition to extract 3D parts. Our multi- and cross-level instance feature fusion uses the semantic hierarchy to improve instance center prediction. Furthermore, the use of semantic region centers assists instance grouping. The semantic region centers serve as the symmetric centers of groups of part instances with the same semantics and provide weak supervision for training.

3. Methodology

In this section, we first introduce our baseline neural network for single-level and multi-level 3D part instance segmentation in Section 3.1, then present the model enhanced by our semantic segmentation-assisted instance feature fusion module in Section 3.2 and the semantic region center prediction module in Section 3.3.

3.1. Baseline network

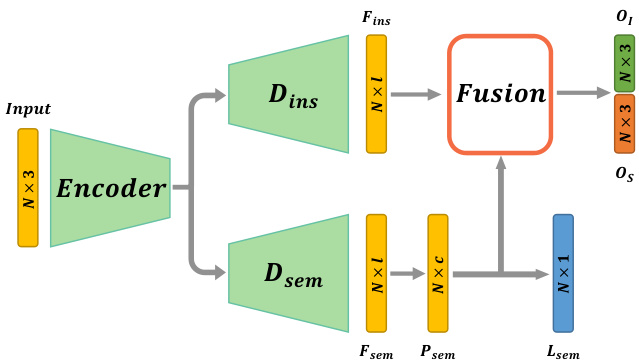

Our baseline network follows the encoder-decoder paradigm. The input to the encoder is a set of 3D points $\mathcal{S}$, in which each point may be equipped with additional signals such as the point normal and RGB color. Two parallel decoders follow the encoder: the semantic decoder $D_{sem}$ predicts the point-wise semantic labels, and the instance decoder $D_{ins}$ predicts the offset from each point to its corresponding instance center. The baseline network is depicted in Fig. 2, where the fusion module and the semantic region center are introduced in Sections 3.2 and 3.3, respectively.

The input points are shifted by the predicted offsets, and the shifted points with the same semantics are clustered into multiple 3D instances via the mean-shift algorithm [7]. In an ideal situation, all input points are shifted to their ground truth instance centers, but in practice, the accuracy of predicted offsets affects the performance of instance clustering.

Network structure. We choose O-CNN-based U-Nets [34, 35] as our encoder-decoder structure. The network is built on octree-based CNNs, and its memory and computational efficiency are similar to those of other sparse-convolution-based neural networks [12, 6]. The input point cloud is first converted to an octree, whose non-empty finest octants store the average signal of the points they contain. Both $D_{sem}$ and $D_{ins}$ output point-wise features via trilinear interpolation on sparse voxels: $F_{sem},F_{ins}\in\mathbb{R}^{N\times l}$, where $N$ is the number of points and $l$ is the dimension of the feature vectors.

Figure 2: Illustration of our network architecture for single-level part instance segmentation. The network takes a 3D point cloud as input. $N$ is the point number. A shared encoder and two parallel decoders $D_{s e m},D_{i n s}$ are used to output the pointwise semantic feature $F_{s e m}$ and instance feature $F_{i n s}$ to predict the point semantic label $L_{s e m}$ and the offset vector $O_{I}$ to the instance center, and the offset vector $O_{S}$ to the semantic region center. The feature fusion module aggregates the instance features of points according to semantic segmentation probability vectors to improve the offset prediction.

Semantic prediction and offset prediction. A two-layer MLP converts $F_{sem}$ to the segmentation probability $P_{sem}\in\mathbb{R}^{N\times c}$, where $c$ is the number of semantic classes. The segmentation label $L_{sem}$ is then determined from $P_{sem}$. Semantic segmentation is trained with the standard cross-entropy loss:

$$
L_{semantic}=\frac{1}{N}\sum_{i=1}^{N}\mathrm{CE}(p_{i},p_{i}^{*}).
$$

Here, $p_{i}$ is the predicted probability vector of the $i$-th point and $p_{i}^{*}$ is its ground-truth semantic label.
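The semantic loss can be illustrated with a minimal NumPy sketch. The softmax over MLP outputs and the argmax used to obtain labels are assumptions for illustration (the text only states that $L_{sem}$ is determined from $P_{sem}$), and the function names are hypothetical.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def semantic_loss(p_sem, labels, eps=1e-12):
    """Mean cross-entropy between (N, c) probabilities and (N,) integer labels."""
    n = p_sem.shape[0]
    picked = p_sem[np.arange(n), labels]   # probability assigned to the true class
    return float(-np.log(picked + eps).mean())

# Hypothetical MLP outputs for N = 2 points and c = 3 classes.
logits = np.array([[2.0, 0.0, 0.0],
                   [0.0, 3.0, 0.0]])
labels = np.array([0, 1])

p_sem = softmax(logits)          # segmentation probabilities P_sem
l_sem = p_sem.argmax(axis=1)     # assumed rule: most probable class per point
loss = semantic_loss(p_sem, labels)
```

With uniform probabilities over $c$ classes, this loss equals $\log c$, which gives a quick sanity check for an untrained network.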

Parallel to the semantic branch, another two-layer MLP maps $F_{ins}$ to the offset tensor $O_{I}\in\mathbb{R}^{N\times3}$, which is used to shift the input points to the centers of their target instances. The loss for predicting the offsets is the $L_{2}$ loss between the predicted and ground-truth offsets:

$$
L_{offset}=\frac{1}{N}\sum_{i=1}^{N}\|o_{i}-o_{i}^{*}\|_{2}.
$$

Here, $o_{i}$ is the predicted offset of the $i$-th point and $o_{i}^{*}$ is its ground-truth offset.
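As a small worked example of the offset loss, the sketch below computes the mean Euclidean distance between predicted and ground-truth offsets. Taking the instance center to be the centroid of the instance's points is an assumption made here for illustration.

```python
import numpy as np

def offset_loss(pred, gt):
    """Mean Euclidean distance between predicted and ground-truth (N, 3) offsets."""
    return float(np.linalg.norm(pred - gt, axis=1).mean())

# Three points of one instance; the center is assumed to be their centroid.
points = np.array([[0.0, 0.0, 0.0],
                   [1.0, 0.0, 0.0],
                   [0.0, 2.0, 0.0]])
center = points.mean(axis=0)
gt_offsets = center - points                 # o*: vector from each point to the center

# A prediction that is exact except for an error of length 0.5 on the first point.
pred_offsets = gt_offsets.copy()
pred_offsets[0] += np.array([0.3, 0.4, 0.0])

loss = offset_loss(pred_offsets, gt_offsets)  # (0.5 + 0 + 0) / 3
```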

Instance clustering. During the test phase, the network outputs pointwise semantics and offset vectors. We use the mean-shift algorithm to group the shifted points with the same semantics into disjoint instances.
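The clustering step can be sketched as follows. This is a simplified flat-kernel mean shift written for illustration, not the implementation used in the experiments; the bandwidth value, the iteration count, and the mode-merging threshold are all assumptions.

```python
import numpy as np

def mean_shift(points, bandwidth, n_iter=30):
    """Flat-kernel mean shift; returns one integer cluster id per point."""
    modes = points.copy()
    for _ in range(n_iter):
        # Move every mode to the mean of the input points within `bandwidth` of it.
        dist = np.linalg.norm(modes[:, None, :] - points[None, :, :], axis=2)
        weights = (dist <= bandwidth).astype(float)
        modes = weights @ points / weights.sum(axis=1, keepdims=True)
    # Merge modes that ended up at (almost) the same location.
    ids = np.full(len(points), -1)
    centers = []
    for i, mode in enumerate(modes):
        for j, center in enumerate(centers):
            if np.linalg.norm(mode - center) < bandwidth / 2:   # assumed threshold
                ids[i] = j
                break
        else:
            centers.append(mode)
            ids[i] = len(centers) - 1
    return ids

def cluster_instances(points, offsets, labels, bandwidth=0.1):
    """Cluster offset-shifted points separately for each semantic label."""
    shifted = points + offsets
    instance_ids = np.full(len(points), -1)
    next_id = 0
    for label in np.unique(labels):
        mask = labels == label
        local = mean_shift(shifted[mask], bandwidth)
        instance_ids[mask] = local + next_id
        next_id += local.max() + 1
    return instance_ids

# Toy data: two instances with label 0 and one instance with label 1.
gt_instance = np.array([0, 0, 0, 1, 1, 1, 2, 2])
labels = np.array([0, 0, 0, 0, 0, 0, 1, 1])
centers = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
points = centers[gt_instance] + 0.2 * np.sin(np.arange(24.0)).reshape(8, 3)
# Nearly exact offsets with a small deterministic error.
offsets = centers[gt_instance] - points + 0.01 * np.cos(np.arange(24.0)).reshape(8, 3)

instance_ids = cluster_instances(points, offsets, labels, bandwidth=0.1)
```

Because clustering runs per semantic label, two instances with different semantics are never merged even if their shifted points coincide.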

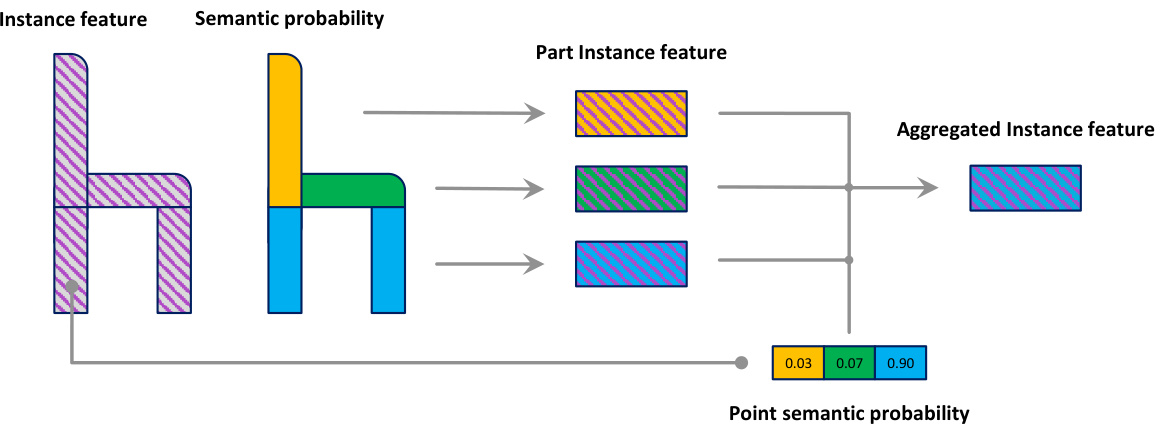

Figure 3: Semantic segmentation-assisted instance feature fusion pipeline. Given the per-point instance feature and semantic probability, we get the part instance features according to the instance feature of points associated with each semantic part. Then we obtain the aggregated instance feature for each point by combining part instance features using its semantic probability.

Multi-level part instances. For shapes with hierarchical, multi-level part instances, there are two naive ways to extend the baseline network: (1) train the baseline network for each level individually; (2) revise the baseline network to output multi-level semantics and multi-level offset vectors simultaneously by adding multiple prediction branches after $F_{ins}$ and $F_{sem}$. We denote the number of levels by $K$ and add a superscript $(k)$ to the symbols defined above to distinguish quantities at the $k$-th level, e.g., $F_{sem}^{(k)},F_{ins}^{(k)},P_{sem}^{(k)},c^{(k)},O_{I}^{(k)}$.

3.2. Semantic segmentation-assisted instance feature fusion

3.2.1 Single-level instance feature fusion

As the points within the same instance share the same instance center, it is essential to aggregate the instance features over these points to regress the offset to the instance center robustly. However, which points belong to the same instance is unknown at inference time; identifying them is the very objective of the task. The semantic decoder branch, in contrast, can predict the semantic region composed of a set of part instances, so we can aggregate the instance features over the semantic parts to provide nonlocal guidance to the input points. We propose a semantic segmentation-assisted instance feature fusion module that consists of two steps. In the first step, for each semantic part, we compute an instance feature from the points associated with this part. In the second step, each point receives an aggregated instance feature from the semantic parts according to its semantic probability vector. The instance feature fusion pipeline is illustrated in Fig. 3. Our feature aggregation procedure is as follows.

Part instance feature. We first aggregate the instance features with respect to each semantic label $m\in\{1,\ldots,c\}$ over the input:

$$
Z_{m}:=\frac{\sum_{\mathbf{p}\in\mathcal{S}}P_{sem}(\mathbf{p})|_{m}\cdot F_{ins}(\mathbf{p})}{\sum_{\mathbf{p}\in\mathcal{S}}P_{sem}(\mathbf{p})|_{m}}.
$$

$Z_{m}$ is the aggregated instance feature for the semantic part with semantic label $m$, and $P_{sem}(\mathbf{p})|_{m}$ is the probability that point $\mathbf{p}$ has semantic label $m$.

Aggregated instance feature. For each point $\mathbf{p}$, we combine the part instance features $Z_{m}$ using the semantic probability of $\mathbf{p}$ as follows:

$$
\hat{F}(\mathbf{p})=\sum_{m=1}^{c}P_{sem}(\mathbf{p})|_{m}\cdot Z_{m}.
$$

The above equations for all points can be written in matrix form: $\mathbf{Z}=(\mathbf{P_{sem}}\,./\,(\mathbf{I_{1}}\mathbf{P_{sem}}))^{T}\mathbf{F_{ins}}$ and $\hat{\mathbf{F}}=\mathbf{P_{sem}}\mathbf{Z}$, where $\mathbf{Z}\in\mathbb{R}^{c\times l}$, $\mathbf{P_{sem}}\in\mathbb{R}^{N\times c}$, $\mathbf{F_{ins}}\in\mathbb{R}^{N\times l}$, $\hat{\mathbf{F}}\in\mathbb{R}^{N\times l}$, $\mathbf{I_{1}}$ is an $N\times N$ matrix of all ones, and "./" denotes elementwise division.
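The matrix form maps directly onto a few array operations. The following NumPy sketch is an illustration under the shapes stated above; the function name is hypothetical, and the column sum replaces the explicit all-ones matrix product, which yields the same per-class normalizers.

```python
import numpy as np

def fuse_instance_features(p_sem, f_ins):
    """Semantic-probability-weighted aggregation of instance features.

    p_sem: (N, c) semantic probabilities; f_ins: (N, l) instance features.
    Returns Z with shape (c, l) and F_hat with shape (N, l).
    """
    col_sum = p_sem.sum(axis=0, keepdims=True)   # (1, c): total probability per class
    z = (p_sem / col_sum).T @ f_ins              # (c, l): part instance features Z_m
    f_hat = p_sem @ z                            # (N, l): aggregated feature per point
    return z, f_hat

# With one-hot probabilities, Z_m is the mean feature of the points in class m.
p_sem = np.array([[1.0, 0.0],
                  [1.0, 0.0],
                  [0.0, 1.0],
                  [0.0, 1.0]])
f_ins = np.array([[1.0, 0.0],
                  [3.0, 0.0],
                  [0.0, 2.0],
                  [0.0, 6.0]])
z, f_hat = fuse_instance_features(p_sem, f_ins)
```

In this one-hot case every point receives the mean instance feature of its semantic part; with soft probabilities, points near a semantic boundary receive a blend of the adjacent part features. A class whose total probability is exactly zero would cause a division by zero, so a small constant in the denominator may be needed in practice.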

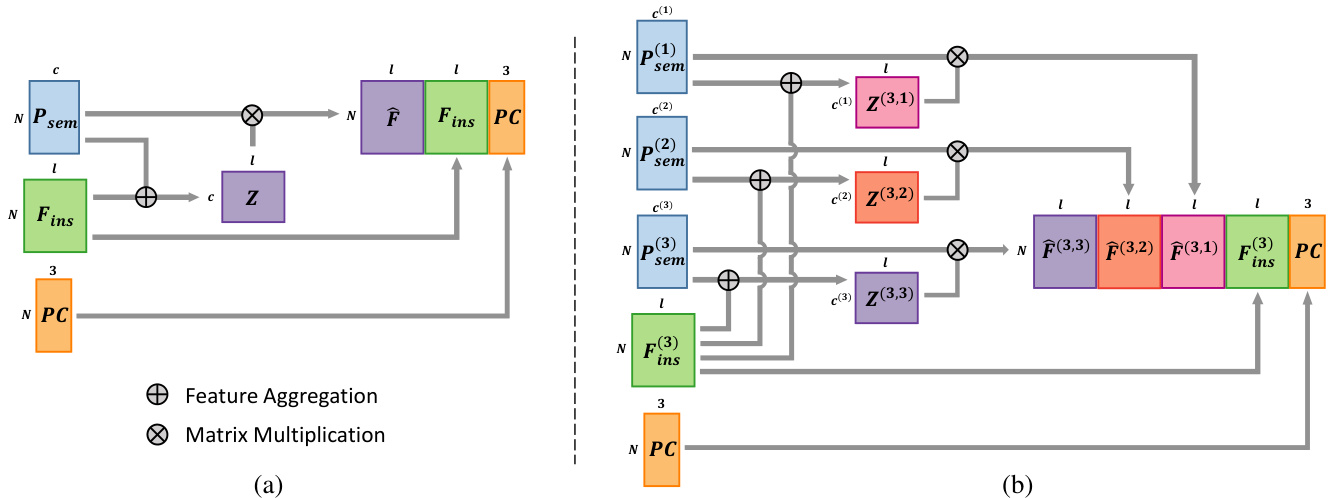

We concatenate the aggregated instance feature $\hat{F}(\mathbf{p})$, the local instance feature $F_{ins}(\mathbf{p})$, and the position of $\mathbf{p}$ to form a fused instance feature $F_{fusion}(\mathbf{p}):=[\hat{F}(\mathbf{p}),F_{ins}(\mathbf{p}),\mathbf{p}]$, and use it to predict the instance center offset. Fig. 4-(a) illustrates our feature fusion module for a single level. The overall network structure is shown in Fig. 2.
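To make the size of the fused feature concrete, the short sketch below concatenates placeholder arrays. The value $l=64$ is the feature dimension reported in Section 4.1.1; the array contents are dummy values chosen only to show the shapes.

```python
import numpy as np

n, l = 5, 64                        # 5 points; l = 64 as in the experiments
f_hat = np.zeros((n, l))            # placeholder for aggregated features F_hat
f_ins = np.ones((n, l))             # placeholder for local instance features F_ins
points = np.full((n, 3), 0.5)       # placeholder point positions

# F_fusion(p) = [F_hat(p), F_ins(p), p], so each row has 2 * l + 3 entries.
f_fusion = np.concatenate([f_hat, f_ins, points], axis=1)
```

Under these assumptions, the MLP that predicts the offsets takes a $2l+3=131$-dimensional input per point.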

3.2.2 Multi-level instance feature fusion

For shapes with multi-level part instances, our single-level instance feature fusion can be applied to each level individually. The naively extended baseline networks (Section 3.1) can benefit from this kind of instance feature fusion for multi-level part instance segmentation.

Figure 4: Semantic segmentation-assisted instance feature fusion for single-level and cross-level. (a) Single-level instance feature fusion. Instance features $\mathbf{F_{ins}}$ are aggregated to $\hat{\mathbf{F}}$ , with the help of semantic probability vectors $\mathbf{P_{sem}}$ . $\hat{\mathbf{F}}$ , $\mathbf{F_{ins}}$ and the point position PC are assembled to form the fused instance features $\mathbf{F_{fusion}}$ . (b) Cross-level instance feature fusion for a 3-level part instance segmentation. The fused features at the 3rd level are depicted. For clarity, we omit fused features at other levels.

3.2.3 Cross-level instance feature fusion

When multi-level part instances and semantic segmentation exhibit a hierarchical relationship, i.e., the fine-level part instances are contained within the coarser-level part instances and can inherit the semantics of their parent level, we leverage the semantic segmentation at multiple levels to fuse the instance features at each level. We call this strategy cross-level instance feature fusion. The exact fusion procedure is as follows.

Instance feature aggregation. On level $k$, we aggregate the instance features using the semantic probability vectors at the $r$-th level:

$$
Z_{m}^{(k,r)}:=\frac{\sum_{\mathbf{q}\in\mathcal{S}}P_{sem}^{(r)}(\mathbf{q})|_{m}\cdot F_{ins}^{(k)}(\mathbf{q})}{\sum_{\mathbf{q}\in\mathcal{S}}P_{sem}^{(r)}(\mathbf{q})|_{m}},\quad m\in\{1,\ldots,c^{(r)}\}.
$$

The features $Z_{m}^{(k,r)}$ are then averaged at each point $\mathbf{p}$ at the $k$-th level, with the semantic probabilities of $\mathbf{p}$ at the $r$-th level as weights:

$$
\hat{F}^{(k,r)}(\mathbf{p})=\sum_{m=1}^{c^{(r)}}P_{sem}^{(r)}(\mathbf{p})|_{m}\cdot Z_{m}^{(k,r)}.
$$

The fused instance feature of $\mathbf{p}$ at the $k$-th level is defined as follows:

$$
F_{fusion}^{(k)}(\mathbf{p}):=[\hat{F}^{(k,1)}(\mathbf{p}),\cdots,\hat{F}^{(k,K)}(\mathbf{p}),F_{ins}^{(k)}(\mathbf{p}),\mathbf{p}].
$$

It is mapped to the offset vectors at the $k$-th level by an MLP layer. We illustrate the cross-level instance feature fusion in Fig. 4-(b).
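The cross-level procedure repeats the single-level aggregation once per semantic level and concatenates the results. The sketch below is a minimal NumPy illustration; the function name, the class counts per level, and the random inputs are hypothetical.

```python
import numpy as np

def cross_level_fusion(p_sem_levels, f_ins_k, points):
    """Fused feature at level k built from the semantic probabilities of all K levels.

    p_sem_levels: list of K arrays, the r-th with shape (N, c_r).
    f_ins_k: (N, l) instance features at level k; points: (N, 3).
    Returns an array of shape (N, K * l + l + 3).
    """
    parts = []
    for p_sem_r in p_sem_levels:
        norm = p_sem_r.sum(axis=0, keepdims=True)   # (1, c_r) per-class totals
        z = (p_sem_r / norm).T @ f_ins_k            # (c_r, l): Z^(k, r)
        parts.append(p_sem_r @ z)                   # (N, l): F_hat^(k, r)
    return np.concatenate(parts + [f_ins_k, points], axis=1)

rng = np.random.default_rng(0)
n, l = 5, 4
p_levels = []
for c in (2, 3, 6):                                 # hypothetical class counts, K = 3
    p = rng.random((n, c))
    p_levels.append(p / p.sum(axis=1, keepdims=True))
f_ins_k = rng.random((n, l))
points = rng.random((n, 3))

fused = cross_level_fusion(p_levels, f_ins_k, points)   # shape (5, 3 * 4 + 4 + 3)
```

A level with a single semantic class contributes the global mean of the instance features to every point, so coarser levels supply broader context and finer levels supply more localized context.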

3.3. Semantic region center

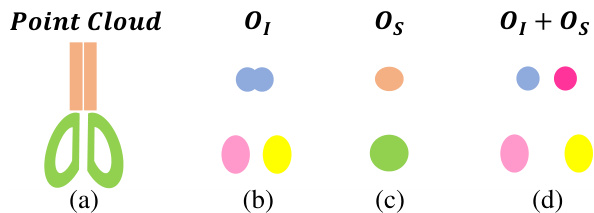

During the test phase, we use the mean-shift algorithm to split the offset-shifted points with the same semantics into different instances. For 3D instances that are close to each other, like the two blades of a pair of scissors shown in Fig. 5-(a), it is difficult to separate their points using mean-shift or other 3D point clustering algorithms, as the instance centers are very close to each other (see Fig. 5-(b)). We introduce the concept of the semantic region center, which is the center of the instance centers that share the same semantics. For human-made shapes, the semantic region center is usually the center of symmetrically arranged parts. Fig. 5-(c) illustrates the semantic region centers. To make instance clustering easier, the instance centers can be further shifted away from the semantic region center, as shown in Fig. 5-(d). In the offset prediction branch of our network, we also predict, for each point, the offset $O_{S}$ to its semantic region center.

Figure 5: Illustration of the use of semantic region centers. (a) Input point cloud of a scissor shape. Ground-truth part instances are colored according to their semantics. (b) Predicted instance centers. (c) Predicted semantic region centers. (d) By pushing the predicted instance centers away from the predicted semantic region centers, the shifted instance centers of the scissor blades become more distinguishable than in (b).
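The definition of the semantic region center can be illustrated by computing both kinds of centers from labeled data. This is a sketch under two assumptions: the instance center is taken to be the centroid of the instance's points, and the semantic region center is the unweighted mean of the instance centers with the same semantic label. The function name and the toy scissor layout are hypothetical.

```python
import numpy as np

def instance_and_region_centers(points, instance_ids, instance_semantics):
    """Per-point instance centers and semantic region centers.

    points: (N, 3); instance_ids: (N,) integers in [0, M);
    instance_semantics: (M,) semantic label of each instance.
    """
    n_inst = len(instance_semantics)
    inst_centers = np.stack([points[instance_ids == i].mean(axis=0)
                             for i in range(n_inst)])          # (M, 3)
    region_centers = np.empty_like(inst_centers)
    for label in np.unique(instance_semantics):
        same = instance_semantics == label
        # Average the centers of all instances that share this semantic label.
        region_centers[same] = inst_centers[same].mean(axis=0)
    return inst_centers[instance_ids], region_centers[instance_ids]

# Toy scissors: two blades (semantic label 0) and one handle (semantic label 1).
points = np.array([[-1.0, 0.0, 0.0], [-3.0, 0.0, 0.0],   # blade 0
                   [1.0, 0.0, 0.0], [3.0, 0.0, 0.0],     # blade 1
                   [0.0, 5.0, 0.0]])                     # handle
instance_ids = np.array([0, 0, 1, 1, 2])
instance_semantics = np.array([0, 0, 1])

c_inst, c_region = instance_and_region_centers(points, instance_ids, instance_semantics)
o_inst_gt = c_inst - points       # ground-truth offsets to instance centers
o_region_gt = c_region - points   # ground-truth offsets to semantic region centers
```

Here both blades share the region center at the origin, while the handle, being the only instance of its class, has a region center equal to its own instance center.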

In the instance clustering step, we shift the input points as follows:

$$
\hat{\mathbf{p}}:=\mathbf{p}+O_{I}(\mathbf{p})+\lambda\cdot\frac{O_{I}(\mathbf{p})-O_{S}(\mathbf{p})}{\|O_{I}(\mathbf{p})-O_{S}(\mathbf{p})\|}.
$$

Here, $\mathbf{p}\in\mathcal{S}$ and $\lambda>0$.
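The shifting rule can be sketched in NumPy as below. Since both offsets start at the same point, $O_{I}(\mathbf{p})-O_{S}(\mathbf{p})$ equals the vector from the predicted semantic region center to the predicted instance center, so the extra term moves each point a distance $\lambda$ further along that direction. The value $\lambda=0.1$ and the `eps` guard against a zero-length direction are assumptions for illustration and are not part of the formula above.

```python
import numpy as np

def shift_points(points, o_inst, o_region, lam, eps=1e-8):
    """Shift points to their instance centers, then push them away from the
    semantic region center by a distance `lam`."""
    diff = o_inst - o_region                          # region center -> instance center
    norm = np.linalg.norm(diff, axis=1, keepdims=True)
    direction = diff / np.maximum(norm, eps)          # eps guard is an added assumption
    return points + o_inst + lam * direction

# Two nearby instances with centers at (-0.05, 0, 0) and (0.05, 0, 0);
# their shared semantic region center is the origin.
points = np.array([[-0.2, 0.1, 0.0],
                   [0.3, -0.1, 0.0]])
inst_centers = np.array([[-0.05, 0.0, 0.0],
                         [0.05, 0.0, 0.0]])
region_center = np.zeros(3)

o_inst = inst_centers - points
o_region = region_center - points
shifted = shift_points(points, o_inst, o_region, lam=0.1)
gap_before = np.linalg.norm(inst_centers[0] - inst_centers[1])
gap_after = np.linalg.norm(shifted[0] - shifted[1])
```

In this toy case the gap between the two shifted points grows from 0.1 to 0.3, which makes them easier to separate with a fixed clustering bandwidth.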

Table 1: Part instance segmentation results on the test set of PartNet [27]. We report part-category $AP_{50}$ on three instance levels. The results of other methods are reported by PE [42]. Bold numbers are better. Some shape categories, marked by dashed lines, have no middle- and fine-level instances for the benchmark.

4. Experiments and Analysis

We design a series of experiments and ablation studies to demonstrate the efficacy of our approach and its superiority to other fusion schemes, including multi-level part instance segmentation on PartNet [27] (Section 4.1), and instance segmentation on indoor scene datasets (Section 4.2): ScanNet [8] and S3DIS [2].

我们设计了一系列实验和消融研究来证明方法的有效性及其相对于其他融合方案的优势,包括在PartNet [27]上的多层次部件实例分割(第4.1节)以及室内场景数据集(ScanNet [8]和S3DIS [2])上的实例分割(第4.2节)。

4.1. Part instance segmentation on PartNet

4.1 PartNet 上的部件实例分割

4.1.1 Experiments and comparison

4.1.1 实验与对比

Dataset PartNet is a large-scale dataset with fine-grained and hierarchical part annotations. It contains more than $570\mathrm{k}$ part instances over 26,671 3D models covering 24 object categories. It provides coarse-, middle-, and fine-grained part instance annotations.

数据集 PartNet 是一个具有细粒度和层次化部件标注的大规模数据集。它包含超过 $570\mathrm{k}$ 个部件实例,覆盖 26,671 个 3D 模型,涵盖 24 个物体类别。该数据集提供了粗粒度、中粒度和细粒度的部件实例标注。

Network configuration The encoder and decoders of our O-CNN-based U-Net had five levels of domain resolution, and the maximum octree depth was six. The feature dimension was set to 64. Details of the U-Net structure are provided in Appendix A. We implemented our network in the TensorFlow framework [1]. The network was trained for 100,000 iterations with a batch size of 8. We used the SGD optimizer with an initial learning rate of 0.1, which was decayed twice by a factor of 0.1, at the 50,000th and 75,000th iterations. Our code and trained models are available.

网络配置

我们基于O-CNN的U-Net编码器和解码器具有五级域分辨率,八叉树的最大深度为6。特征维度设置为64。U-Net结构的详细信息见附录A。我们在TensorFlow框架[1]中实现了该网络。网络训练了100000次迭代,批量大小为8。我们使用学习率为0.1的SGD优化器,并在第50000次和第75000次迭代时以0.1的因子进行两次衰减。我们的代码和训练模型已公开。
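The learning-rate schedule described above amounts to a two-step decay. A minimal sketch follows; the function name `learning_rate` is hypothetical, and whether the decay takes effect exactly at the milestone iteration or one step later is an assumption.

```python
def learning_rate(iteration, base_lr=0.1, milestones=(50000, 75000), factor=0.1):
    """Step schedule: multiply base_lr by `factor` once per milestone already reached."""
    passed = sum(1 for m in milestones if iteration >= m)  # assumption: inclusive milestones
    return base_lr * factor ** passed
```

Under these assumptions the rate is 0.1 for the first half of training, 0.01 for the next quarter, and 0.001 for the final quarter of the 100,000 iterations.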

Data processing The input point cloud contained 10,000 points and was scaled into a unit sphere. During training, we also augmented each shape by a uniform scaling with a scale ratio in $[0.75, 1.25]$, a random rotation whose pitch, yaw, and roll angles were less than $10^{\circ}$, and random translations along each coordinate axis within the interval $[-0.125,0.125]$. The train/test split is provided in PartNet. Note that not all categories have three-level part annotations. During training, we duplicated the labels at the coarser level to the finer level, if the latter was missing, to mimic the three-level shape structure. During the test phase, we only evaluated the output from the levels that exist in the data. The ground-truth instance centers and semantic region centers were pre-computed according to the semantic labels and part instances of PartNet.

数据处理

输入点云包含10,000个点,并被缩放至单位球体内。训练过程中,我们通过以下方式对每个形状进行数据增强:均匀缩放比例范围为[0.75, 1.25],随机旋转(俯仰角、偏航角和滚转角均小于$10^{\circ}$),以及沿各坐标轴在区间$[-0.125,0.125]$内的随机平移。训练/测试划分由PartNet提供。需注意并非所有类别都具备三级部件标注。训练时,若较细级别的标注缺失,我们会将较粗级别的标签复制至该级别以模拟三级形状结构。测试阶段仅评估数据中实际存在的级别输出。根据PartNet的语义标签和部件实例,我们预先计算了真实实例中心和语义区域中心。
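The augmentation can be sketched as below. Several details are assumptions rather than statements from the paper: the axis assigned to each of pitch, yaw, and roll, the composition order of the three rotations, the symmetric sampling range of the angles, and the order in which rotation, scaling, and translation are applied. The names `rotation_matrix` and `augment` are hypothetical.

```python
import math
import random


def rotation_matrix(pitch, yaw, roll):
    """Rotation Rz(roll) * Ry(yaw) * Rx(pitch); angles in radians (axis convention assumed)."""
    cx, sx = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    cz, sz = math.cos(roll), math.sin(roll)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def augment(points, rng=random):
    """Apply one random rotation, uniform scale, and translation to all points."""
    scale = rng.uniform(0.75, 1.25)
    max_angle = math.radians(10.0)
    rot = rotation_matrix(*(rng.uniform(-max_angle, max_angle) for _ in range(3)))
    shift = [rng.uniform(-0.125, 0.125) for _ in range(3)]
    out = []
    for p in points:
        rotated = [sum(rot[i][j] * p[j] for j in range(3)) for i in range(3)]
        out.append(tuple(scale * rotated[i] + shift[i] for i in range(3)))
    return out
```

In a full pipeline the pre-computed instance centers and semantic region centers would need the same transform so that the ground-truth offsets stay consistent with the augmented points.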

Experiment setup We set $\lambda = 0.05$ for Eq. (7). We used the mean-shift implementation in scikit-learn [28]. The default bandwidth of mean-shift was set to 0.1. All our experiments were conducted on an Azure Linux server with Intel Xeon Platinum 8168 CPU (2.7 GHz) and Tesla V100 GPU (16 GB memory). Our baseline network with cross-level fusion was the default configuration. In practice, we found that stopping the gradient from the fusion module to the semantic decoder helps maintain the semantic segmentation accuracy and slightly improves the instance segmentation. So, we enabled gradient stopping by default. An ablation study on gradient stopping is provided in Section 4.1.2.

实验设置

我们在公式(7)中设定$\lambda = 0.05$。采用scikit-learn [28]实现的均值漂移算法,其默认带宽设为0.1。所有实验均在配备Intel Xeon Platinum 8168处理器(2.7 GHz)和Tesla V100显卡(16GB显存)的Azure Linux服务器上完成。基线网络采用默认的跨层级融合配置。实验发现,阻断融合模块至语义解码器的梯度流有助于保持语义分割精度,同时小幅提升实例分割效果,因此默认启用了梯度阻断机制。梯度阻断的消融实验详见第4.1.2节。
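The clustering step can be illustrated with a dependency-free sketch. It swaps in a simplified flat-kernel mean shift written in plain Python, not the scikit-learn implementation used in the experiments, and it groups the shifted points separately for each semantic label. The names `mean_shift` and `cluster_instances`, the mode-merging rule, and the convergence tolerance are assumptions.

```python
import math


def mean_shift(points, bandwidth=0.1, max_iter=100, tol=1e-6):
    """Simplified flat-kernel mean shift; returns one cluster label per point."""
    modes = []
    for p in points:
        mode = tuple(p)
        for _ in range(max_iter):
            neighbors = [q for q in points if math.dist(mode, q) <= bandwidth]
            if not neighbors:
                break
            new_mode = tuple(
                sum(q[i] for q in neighbors) / len(neighbors) for i in range(len(mode))
            )
            moved = math.dist(mode, new_mode)
            mode = new_mode
            if moved < tol:
                break
        modes.append(mode)

    # Merge modes that converged to (almost) the same location.
    centers, labels = [], []
    for mode in modes:
        for k, center in enumerate(centers):
            if math.dist(mode, center) < bandwidth:
                labels.append(k)
                break
        else:
            centers.append(mode)
            labels.append(len(centers) - 1)
    return labels


def cluster_instances(shifted_points, semantic_ids, bandwidth=0.1):
    """Cluster shifted points independently within each semantic label."""
    instance_ids = [-1] * len(shifted_points)
    next_id = 0
    for sem in sorted(set(semantic_ids)):
        idx = [i for i, s in enumerate(semantic_ids) if s == sem]
        labels = mean_shift([shifted_points[i] for i in idx], bandwidth)
        for i, lab in zip(idx, labels):
            instance_ids[i] = next_id + lab
        next_id += max(labels) + 1
    return instance_ids
```

This sketch is quadratic in the number of points per iteration and is meant only to show the data flow; a library implementation would be the practical choice for 10,000-point inputs.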

Evaluation metrics We used the per-category mAP score with IoU thresholds of 0.25, 0.5, and 0.75 to evaluate the quality of part instance segmentation. They are denoted by $A P_{25}$, $A P_{50}$, and $A P_{75}$. ${\bf s}{-}A P_{50}$ is the metric proposed by [27], which averages the precision over the shapes.

评估指标

我们采用每类别的mAP分数,IoU阈值为0.25、0.5和0.75,以评估部件实例分割的质量。这些分数分别表示为$A P_{25}$、$A P_{50}$和$A P_{75}$。${\bf s}{-}A P_{50}$是[27]提出的指标,它对形状上的精度进行平均。
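To make the role of the IoU threshold concrete, the following sketch computes a simplified, non-interpolated average precision for one part category. It is not the PartNet benchmark's evaluation script: the greedy matching rule, the inclusive threshold comparison, and the names `iou` and `average_precision` are assumptions. Instances are represented as sets of point indices.

```python
def iou(a, b):
    """Intersection over union of two sets of point indices."""
    a, b = set(a), set(b)
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def average_precision(preds, gts, thresh=0.5):
    """Simplified AP for one category.

    preds: list of (confidence, set_of_point_indices).
    gts:   list of set_of_point_indices (ground-truth instances).
    """
    matched = set()
    hits_in_rank_order = []
    for _, mask in sorted(preds, key=lambda item: -item[0]):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            value = iou(mask, gt)
            if value > best_iou:
                best_iou, best_j = value, j
        if best_j >= 0 and best_iou >= thresh:  # assumption: inclusive threshold
            matched.add(best_j)
            hits_in_rank_order.append(1)
        else:
            hits_in_rank_order.append(0)

    # Sum the precision at every rank where a true positive occurs.
    ap, hits = 0.0, 0
    for rank, hit in enumerate(hits_in_rank_order, start=1):
        if hit:
            hits += 1
            ap += hits / rank
    return ap / len(gts) if gts else 0.0
```

Averaging such per-category values over all part categories gives an mAP-style score, and changing `thresh` to 0.25 or 0.75 corresponds to $A P_{25}$ and $A P_{75}$.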

Performance report and comparison We report $A P_{50}$ of our approach in all 24 shape categories in Table 1. We also report the performance of three comparison approaches: SGPN [36], PartNet [27], and PE [42]. The results are averaged over three levels of granularity. Our method outperformed the best competitor PE [42] by $6.6\%$, and also achieved the best performance in most categories. Our approach was also the best on other evaluation metrics, as shown in Table 2. Appendix C reports the per-category results of $A P_{25}$, $A P_{75}$, and ${\bf s}{-}A P_{50}$. As DyCo3D [17] only performed instance segmentation experiments in four categories of the PartNet dataset, we compare it with our approach on these categories separately in Table 3. Our method outperformed DyCo3D by a large margin.

性能报告与对比

我们在表1中报告了本方法在所有24种形状类别中的$AP_{50}$值,同时列出了三种对比方法(SGPN [36]、PartNet [27] 和 PE [42])的性能表现。所有结果均按三个粒度层级取平均值。我们的方法以$6.6\%$的优势超越最佳竞争对手PE [42],并在大多数类别中取得最优表现。如表2所示,本方法在其他评估指标上同样保持领先。附录C详细列出了$AP_{25}$、$AP_{75}$和${\bf s}{-}AP_{50}$的逐类别结果。由于DyCo3D [17]仅在PartNet数据集的四个类别中进行了实例分割实验,我们在表3中单独比较了这些类别的结果,本方法以显著优势超越DyCo3D。

Table 2: Part instance segmentation on the test set of PartNet. $A P_{25}$, $A P_{50}$, $A P_{75}$, and $\mathrm{s}{-}A P_{50}$ are averaged over three levels. The results of other methods are reported by PartNet [27] and PE [42].

表 2: PartNet 测试集上的部件实例分割结果。$A P_{25}$、$A P_{50}$、$A P_{75}$ 和 $\mathrm{s}{-}A P_{50}$ 为三个层级的平均值。其他方法的结果来自 PartNet [27] 和 PE [42]。

| Method | AP25 | AP50 | AP75 | s-AP50 | mIoU |
|---|---|---|---|---|---|
| SGPN [36] | - | 46.8 | - | 64.2 | - |
| PartNet [27] | 62.8 | 54.4 | 38.9 | 72.2 | - |
| PE [42] | 66.5 | 57.5 | 41.7 | - | - |
| Ours | 72.1 | 64.1 | 49.7 | 76.1 | 66.1 |

Table 3: Part instance segmentation on the four categories of PartNet. $A P_{50}$ is reported.

表 3: PartNet四类别的部件实例分割结果。报告指标为 $A P_{50}$。

| Method | Level | Chair | Lamp | Stora. | Table |
|---|---|---|---|---|---|
| DyCo3D [17] | Coarse | 81.0 | 37.3 | 44.5 | 55.0 |
| DyCo3D [17] | Middle | 41.3 | 28.8 | 38.9 | 32.5 |
| DyCo3D [17] | Fine | 33.4 | 20.5 | 30.4 | 24.9 |
| DyCo3D [17] | Avg | 51.9 | 28.9 | 37.9 | 37.5 |
| Ours | Coarse | 84.1 | 38.2 | 56.4 | 65.3 |
| Ours | Middle | 45.7 | 30.9 | 53.3 | 36.2 |
| Ours | Fine | 38.2 | 21.7 | 44.0 | 28.9 |
| Ours | Avg | 56.0 | 30.3 | 51.2 | 43.5 |

4.1.2 Ablation study

4.1.2 消融实验

We validated our network design on PartNet instance segmentation, especially for the fusion module and the semantic region centers. The variants of our network are listed below.

我们在PartNet实例分割任务上验证了网络设计,特别是融合模块和语义区域中心部分。网络变体如下所示。

For each variant, we use symbol $\dagger$ to indicate that the predicted semantic region centers are not used for instance clustering. The optimal variant is cross-level fusion. The performance of each variant is reported in Table 4.

对于每个变体,我们用符号 $\dagger$ 表示预测的语义区域中心未用于实例聚类。最佳变体是跨层级融合。各变体的性能如表 4 所示。

Table 4: Ablation studies of our approach on PartNet test data. Methods marked with $\dagger$ use the predicted instance centers only. Our default and optimal network setting is cross-level fusion.

表 4: 我们的方法在PartNet测试数据上的消融研究。标记为$\dagger$的方法仅使用预测的实例中心。我们的默认和最优网络设置是跨层级融合(cross-level fusion)。

| Method | AP25 | AP50 | AP75 | s-AP50 | mIoU |
|---|---|---|---|---|---|
| single-level baseline$\dagger$ | 67.3 | 57.9 | 45.3 | 74.4 | 64.9 |
| single-level baseline | 67.4 | 58.2 | 45.5 | 75.0 | 64.9 |
| single-level fusion$\dagger$ | 70.4 | 61.2 | 48.8 | 74.8 | 65.4 |
| single-level fusion | 71.1 | 62.1 | 49.0 | 75.8 | 65.4 |
| multi-level baseline$\dagger$ | 67.1 | 57.9 | 45.0 | 74.1 | 65.0 |
| multi-level baseline | 67.3 | 58.1 | 45.1 | 74.7 | 65.0 |
| multi-level fusion$\dagger$ | 70.9 | 61.8 | 48.8 | 74.8 | 65.5 |
| multi-level fusion | 71.5 | 62.5 | 49.2 | 75.6 | 65.5 |
| cross-level fusion$\dagger$ | 71.3 | 63.1 | 48.6 | 75.2 | 66.1 |
| cross-level fusion | 72.1 | 64.1 | 49.7 | 76.1 | 66.1 |
| cross-level fusion(gradient)$\dagger$ | 71.1 | 62.2 | 48.4 | 75.0 | 65.2 |
| cross-level fusion(gradient) | 71.8 | 63.3 | 49.3 | 75.9 | 65.2 |
| cross-level fusion(one-hot)$\dagger$ | 70.7 | 62.4 | 48.1 | 75.0 | 65.8 |
| cross-level fusion(one-hot) | 71.6 | 63.5 | 49.0 | 75.8 | 65.8 |
| cross-level fusion(backbone)$\dagger$ | 69.6 | 61.6 | 46.0 | 74.7 | 65.3 |
| cross-level fusion(backbone) | 70.2 | 62.4 | 47.1 | 75.3 | 65.3 |
| cross-level fusion(two-dir)$\dagger$ | 71.0 | 62.6 | 48.3 | 75.2 | 65.7 |
| cross-level fusion(two-dir) | 71.8 | 63.6 | 48.7 | 76.0 | 65.7 |
| ASIS fusion$\dagger$ | 68.2 | 59.0 | 45.0 | 74.7 | 65.1 |
| ASIS fusion | 68.6 | 59.1 | 45.9 | 75.0 | 65.1 |
| JSNet fusion$\dagger$ | 68.5 | 59.2 | 46.3 | 75.4 | 65.4 |
| JSNet fusion | 68.8 | 59.3 | 46.6 | 75.6 | 65.4 |

Single-level baseline versus multi-level baseline The single-level and multi-level baselines perform similarly under the same setting (with or without fusion and semantic region centers). However, the multi-level baseline requires much less training effort. The 24 categories of PartNet have 50 levels in total, so the single-level baseline must train 50 networks, whereas the multi-level baseline only needs to train 24.

单层基线与多层基线的比较

在相同设置下(无论是否融合及使用语义区域中心),单层基线和多层基线的性能差异不大。然而,多层基线的训练成本显著更低。PartNet的24个类别共有50个层级,单层基线需训练50个网络,而多层基线仅需训练24个网络。

Fusion module All baselines clearly improve when the fusion module is added. Single-level fusion and multi-level fusion increase $A P_{50}$ by $+3.9$ and $+4.4$ points over their respective baselines. Cross-level fusion surpasses them at $A P_{50}$ by a further $+2.0$ and $+1.6$ points. Note that the cross-level fusion network is slightly larger: on the Chair category, the numbers of network parameters of cross-level fusion, multi-level fusion, and the multi-level baseline are 8.13 M, 7.98 M, and $7.89\mathrm{M}$, respectively.

融合模块

显然,所有带有融合模块的基线性能均有所提升。单级融合和多级融合相比其基线在 $AP_{50}$ 上分别提升了 $+3.9$ 和 $+4.4$ 个百分点。跨级融合在 $AP_{50}$ 上进一步超越它们,分别高出 $+2.0$ 和 $+1.6$ 个百分点。此处,跨级融合的网络规模略大。在 Chair 类别中,跨级融合、多级融合和多级基线的网络参数量分别为 8.13 M、7.98 M 和 $7.89\mathrm{M}$。

Use of semantic region centers The instance segmentation performance is consistently improved by using semantic region centers. The improvement is also more noticeable when the fusion module is enabled to improve both the instance center prediction and the semantic region center prediction. For example, there is only $+(0.2\sim0.3)$ improvement when using semantic region centers on single-level baseline and multi-level baseline, while the improvement over cross-level fusion $\dagger$ is $+1.0$ .

使用语义区域中心

通过使用语义区域中心,实例分割性能得到持续提升。当启用融合模块以同时改进实例中心预测和语义区域中心预测时,提升效果更为显著。例如,在单层级基线和多层级基线上使用语义区域中心时仅带来 $+(0.2\sim0.3)$ 的提升,而对跨层级融合 $\dagger$ 的提升达到 $+1.0$。

In Fig. 6, we present the instance segmentation results of multi-level baseline, cross-level fusion$\dagger$, and cross-level fusion. The predicted instance centers are more compact and distinguishable when using the fusion module. The use of semantic region centers helps further separate close instances, e.g., the scissor blades in the 1st column, the bag handles in the 2nd column, and the chair back frames in the 7th column.

在图 6 中,我们展示了多级基线、跨级融合†和跨级融合的实例分割结果。使用融合模块时,预测的实例中心更加紧凑且易于区分。语义区域中心的使用有助于进一步分离相邻实例,例如第 1 列中的剪刀刃、第 2 列中的包把手以及第 7 列中的椅背框架。

Figure 6: Visual comparison of part instance segmentation on the test set of PartNet. Part instances at the fine level are colored with random colors. 1st row: part instance ground-truth. 2nd row: results of our multi-level baseline†. 3rd row: results of our cross-level fusion† without using semantic region centers. 4th row: results of our cross-level fusion using semantic region centers. The corresponding shifted points are rendered at the top left of each instance segmentation image. Green and red boxes represent good and bad instances, respectively.

图 6: PartNet测试集上部件实例分割的视觉对比。精细层级的部件实例采用随机颜色标注。第1行: 部件实例真实标注。第2行: 我们的多层级基线†结果。第3行: 未使用语义区域中心的跨层级融合†结果。第4行: 采用语义区域中心的跨层级融合结果。对应的偏移点渲染在每张实例分割图像的左上角。绿色和红色方框分别表示良好与欠佳的实例。

Stopping gradient One of the inputs of the fusion module is the semantic segmentation probability, so the gradients of the fusion module can back-propagate errors to the semantic branch. In our experiments, we found that this gradient back-propagation impairs semantic segmentation and leads to slightly worse instance segmentation results (see cross-level fusion(gradient) in Table 4).

停止梯度 (Stopping gradient)

融合模块的输入之一是语义分割概率。融合模块的梯度会将误差反向传播至语义分支。实验中我们发现,梯度反向传播会损害语义分割性能,并导致实例分割结果略微下降 (见表 4 中的跨层级融合(梯度) )。

Instance feature aggregation In our instance feature fusion module, we used the semantic probability of the point to aggregate the instance features f