The COT COLLECTION: Improving Zero-shot and Few-shot Learning of Language Models via Chain-of-Thought Fine-Tuning

COT COLLECTION:通过思维链微调提升大语言模型的零样本和少样本学习能力

Abstract

摘要

Language models (LMs) with less than 100B parameters are known to perform poorly on chain-of-thought (CoT) reasoning in contrast to large LMs when solving unseen tasks. In this work, we aim to equip smaller LMs with the step-by-step reasoning capability by instruction tuning with CoT rationales. In order to achieve this goal, we first introduce a new instructiontuning dataset called the COT COLLECTION, which augments the existing Flan Collection (including only 9 CoT tasks) with additional 1.84 million rationales across 1,060 tasks. We show that CoT fine-tuning Flan-T5 (3B & 11B) with COT COLLECTION enables smaller LMs to have better CoT capabilities on unseen tasks. On the BIG-Bench-Hard (BBH) benchmark, we report an average improvement of $+4.34%$ (Flan-T5 3B) and $+2.60%$ (Flan-T5 11B), in terms of zero-shot task accuracy. Furthermore, we show that instruction tuning with COT COLLECTION allows LMs to possess stronger fewshot learning capabilities on 4 domain-specific tasks, resulting in an improvement of $+2.24%$ (Flan-T5 3B) and $+2.37%$ (Flan-T5 11B), even outperforming ChatGPT utilizing demonstrations until the max length by a $+13.98%$ margin. Our code, the COT COLLECTION data, and model checkpoints are publicly available 1.

参数少于100B的语言模型(LM)在解决未见任务时,其思维链(CoT)推理能力明显逊色于大语言模型。本研究旨在通过CoT原理的指令微调,为小型LM赋予逐步推理能力。为此,我们首先引入了名为COT COLLECTION的新指令微调数据集,该数据集在原有Flan Collection(仅含9个CoT任务)基础上,新增了覆盖1,060个任务的184万条推理原理。实验表明,使用COT COLLECTION对Flan-T5(3B和11B)进行CoT微调后,小型LM在未见任务上展现出更强的CoT能力。在BIG-Bench-Hard(BBH)基准测试中,Flan-T5 3B和11B的零样本任务准确率分别平均提升$+4.34%$和$+2.60%$。此外,采用COT COLLECTION进行指令微调的模型在4个特定领域任务上表现出更强的少样本学习能力,Flan-T5 3B和11B分别提升$+2.24%$和$+2.37%$,甚至以$+13.98%$的优势超越了使用最大长度演示的ChatGPT。我们的代码、COT COLLECTION数据及模型检查点已公开1。

1 Introduction

1 引言

Language models (LMs) pre-trained on massive text corpora can adapt to downstream tasks in both zero-shot and few-shot learning settings by incorporating task instructions and demonstrations (Brown et al., 2020; Wei et al., 2021; Sanh et al., 2021; Mishra et al., 2022; Wang et al., 2022b; Iyer et al., 2022; Liu et al., 2022b; Chung et al., 2022; Long- pre et al., 2023; Ye et al., 2023). One approach that has been particularly effective in enabling LMs to excel at a multitude of tasks is Chain-of-Thought (CoT) prompting, making LMs generate a rationale to derive its final prediction in a sequential manner (Wei et al., 2022b; Kojima et al., 2022; Zhou et al., 2022; Zhang et al., 2022; Yao et al., 2023).

在大规模文本语料库上预训练的语言模型 (language model, LM) 可以通过融入任务指令和示例, 在零样本 (zero-shot) 和少样本 (few-shot) 学习场景中适应下游任务 [Brown et al., 2020; Wei et al., 2021; Sanh et al., 2021; Mishra et al., 2022; Wang et al., 2022b; Iyer et al., 2022; Liu et al., 2022b; Chung et al., 2022; Longpre et al., 2023; Ye et al., 2023]。其中, 思维链 (Chain-of-Thought, CoT) 提示法被证明能显著提升语言模型处理多任务的能力, 该方法使模型通过逐步生成推理过程来得出最终预测 [Wei et al., 2022b; Kojima et al., 2022; Zhou et al., 2022; Zhang et al., 2022; Yao et al., 2023]。

While CoT prompting works effectively for large LMs with more than 100 billion parameters, it does not necessarily confer the same benefits to smaller LMs (Tay et al., 2022; Suzgun et al., 2022; Wei et al., 2022a; Chung et al., 2022). The requirement of a large number of parameters consequently results in significant computational cost and accessibility issues (Kaplan et al., 2020; Min et al., 2022; Liu et al., 2022b; Mhlanga, 2023; Li et al., 2023).

虽然思维链 (CoT) 提示对参数量超过千亿的大语言模型效果显著,但它未必能为较小规模的模型带来同等优势 (Tay et al., 2022; Suzgun et al., 2022; Wei et al., 2022a; Chung et al., 2022)。这种对海量参数的需求进而导致了高昂的计算成本和可及性问题 (Kaplan et al., 2020; Min et al., 2022; Liu et al., 2022b; Mhlanga, 2023; Li et al., 2023)。

Recent work has focused on empowering relatively smaller LMs to effectively solve novel tasks as well, primarily through fine-tuning with rationales (denoted as CoT fine-tuning) and applying CoT prompting on a single target task (Shridhar et al., 2022; Ho et al., 2022; Fu et al., 2023). How- ever, solving a single task does not adequately address the issue of generalization to a broad range of unseen tasks. While Chung et al. (2022) leverage 9 publicly available CoT tasks during instruction tuning to solve multiple unseen tasks, the imbalanced ratio compared to 1,827 tasks used for direct finetuning results in poor CoT results across smaller LMs (Longpre et al., 2023). In general, the community still lacks a comprehensive strategy to fully leverage CoT prompting to solve multiple unseen novel tasks in the context of smaller LMs.

近期研究主要关注如何通过基于原理的微调(称为CoT微调)和在单一目标任务上应用CoT提示(Shridhar等人,2022;Ho等人,2022;Fu等人,2023),使相对较小的大语言模型也能有效解决新任务。然而,解决单一任务并不能充分应对广泛未见任务的泛化问题。尽管Chung等人(2022)在指令微调中利用了9个公开可用的CoT任务来解决多个未见任务,但与直接微调所用的1,827个任务相比,这种不平衡的比例导致较小的大语言模型的CoT效果较差(Longpre等人,2023)。总体而言,学界仍缺乏一个全面策略,以在较小的大语言模型背景下充分利用CoT提示来解决多个未见新任务。

To bridge this gap, we present the COT COLLECTION, an instruction tuning dataset that augments 1.84 million rationales from the FLAN Collection (Longpre et al., 2023) across 1,060 tasks. We fine-tune Flan-T5 (3B & 11B) using COT COLLECTION and denote the resulting model as CoTT5. We perform extensive comparisons of CoT-T5 and Flan-T5 under two main scenarios: (1) zeroshot learning and (2) few-shot learning.

为弥补这一差距,我们提出了COT COLLECTION,这是一个指令微调数据集,在1,060个任务中扩展了来自FLAN Collection (Longpre et al., 2023) 的184万条原理。我们使用COT COLLECTION对Flan-T5 (3B & 11B) 进行微调,并将所得模型命名为CoTT5。我们在两种主要场景下对CoT-T5和Flan-T5进行了广泛比较:(1) 零样本学习 (zero-shot learning) 和 (2) 少样本学习 (few-shot learning)。

In the zero-shot learning setting, CoT-T5 (3B & 11B) outperforms Flan-T5 (3B & 11B) by $+4.34%$ and $+2.60%$ on average accuracy across 27 datasets from the Big Bench Hard (BBH) benchmark (Suzgun et al., 2022) when evaluated with CoT prompting. During ablation experiments, we show that CoT fine-tuning T0 (3B) (Sanh et al., 2021) on a subset of the CoT Collection, specifically 163 training tasks used in T0, shows a performance increase of $+8.65%$ on average accuracy across 11 datasets from the P3 Evaluation benchmark. Moreover, we translate 80K instances of COT COLLECTION into 5 different languages (French, Japanese, Korean, Russian, Chinese) and observe that CoT fine-tuning mT0 (3B) (Mu en nigh off et al., 2022) on each language results in $2\mathrm{x}\sim10\mathrm{x}$ performance improvement on average accuracy across all 5 languages from the MGSM benchmark (Shi et al., 2022).

在零样本学习设置中,CoT-T5(3B和11B)在使用思维链提示(CoT prompting)评估时,在Big Bench Hard(BBH)基准测试(Suzgun等人,2022)的27个数据集上平均准确率分别比Flan-T5(3B和11B)高出$+4.34%$和$+2.60%$。在消融实验中,我们发现对T0(3B)(Sanh等人,2021)在思维链集合(CoT Collection)的子集(特别是T0中使用的163个训练任务)上进行微调后,在P3评估基准的11个数据集上平均准确率提升了$+8.65%$。此外,我们将思维链集合中的80K个实例翻译成5种不同语言(法语、日语、韩语、俄语、中文),并观察到对mT0(3B)(Muennighoff等人,2022)在每种语言上进行微调后,在MGSM基准测试(Shi等人,2022)的所有5种语言上平均准确率提升了$2\mathrm{x}\sim10\mathrm{x}$。

In the few-shot learning setting, where LMs must adapt to new tasks with a minimal number of instances, CoT-T5 (3B & 11B) exhibits a $+2.24%$ and $+2.37%$ improvement on average compared to using Flan-T5 (3B & 11B) as the base model on 4 different domain-specific tasks2. Moreover, it demonstrates $+13.98%$ and $+8.11%$ improvement over ChatGPT (OpenAI, 2022) and Claude (Anthropic, 2023) that leverages ICL with demonstrations up to the maximum input length.

在少样本学习场景下,当大语言模型需要以极少量样本适应新任务时,基于Flan-T5 (3B & 11B)的CoT-T5 (3B & 11B)在4个不同领域任务上平均实现了$+2.24%$和$+2.37%$的性能提升。此外,相较于采用最大输入长度演示样本的ChatGPT (OpenAI, 2022)和Claude (Anthropic, 2023),其性能优势分别达到$+13.98%$和$+8.11%$。

Our contributions are summarized as follows:

我们的贡献总结如下:

• We introduce COT COLLECTION, a new instruction dataset that includes 1.84 million rationales across 1,060 tasks that could be used for applying CoT fine-tuning to LMs. • With COT COLLECTION, we fine-tune Flan- T5, denoted as CoT-T5, which shows a nontrivial boost in zero-shot and few-shot learning capabilities with CoT Prompting. • For ablations, we show that CoT fine-tuning could improve the CoT capabilities of LMs in low-compute settings by using a subset of COT COLLECTION and training on (1) smaller number of tasks (T0 setting; 163 tasks) and (2) smaller amount of instances in 5 different languages (French, Japanese, Korean, Russian, Chinese; 80K instances).

• 我们推出COT COLLECTION,这是一个包含1060个任务中184万条推理链的新指令数据集,可用于对大语言模型进行思维链 (CoT) 微调。

• 基于COT COLLECTION,我们对Flan-T5进行微调得到CoT-T5模型,该模型在使用思维链提示时,零样本和少样本学习能力均有显著提升。

• 消融实验表明:在低算力环境下,通过使用COT COLLECTION子集(1)减少任务量(T0设定,163个任务)或(2)缩减5种语言(法语、日语、韩语、俄语、中文)的实例规模(8万条),仍能提升大语言模型的思维链能力。

2 Related Works

2 相关工作

2.1 Chain-of-Thought (CoT) Prompting

2.1 思维链 (Chain-of-Thought, CoT) 提示

Wei et al. (2022b) propose Chain of Thought (CoT) Prompting, a technique that triggers the model to generate a rationale before the answer. By generating a rationale, large LMs show improved reasoning abilities when solving challenging tasks. Kojima et al. (2022) show that by appending the phrase ‘Let’s think step by step’, large LMs could perform CoT prompting in a zero-shot setting. Different work propose variants of CoT prompting such as automatically composing CoT demonstrations (Zhang et al., 2022) and performing a finegrained search through multiple rationale candidates with a tree search algorithm (Yao et al., 2023). While large LMs could solve novel tasks with CoT Prompting, Chung et al. (2022) and Longpre et al. (2023) show that this effectiveness does not necessarily hold for smaller LMs. In this work, we aim to equip smaller LMs with the same capabilities by instruction tuning on large amount of rationales.

Wei等人 (2022b) 提出了思维链 (Chain of Thought, CoT) 提示技术,该方法通过触发模型在生成答案前先产生推理过程。实验表明,生成推理步骤能显著提升大语言模型解决复杂任务时的推理能力。Kojima等人 (2022) 发现只需追加"让我们逐步思考"的提示语,大语言模型就能实现零样本场景下的思维链推理。后续研究提出了多种改进方案,包括自动构建思维链演示样本 (Zhang等人, 2022) ,以及通过树搜索算法在多候选推理路径中进行细粒度筛选 (Yao等人, 2023) 。尽管大语言模型能通过思维链提示解决新任务,但Chung等人 (2022) 和Longpre等人 (2023) 的研究表明,较小规模语言模型未必具备同等能力。本研究旨在通过海量推理步骤的指令微调,使较小规模语言模型获得 comparable 的推理能力。

2.2 Improving Zero-shot Generalization

2.2 提升零样本 (Zero-shot) 泛化能力

Previous work show that instruction tuning enables generalization to multiple unseen tasks (Wei et al., 2021; Sanh et al., 2021; Aribandi et al., 2021; Ouyang et al., 2022; Wang et al., 2022b; Xu et al., 2022). Different work propose to improve instruction tuning by enabling cross-lingual generaliza- tion (Mu en nigh off et al., 2022), improving label generalization capability (Ye et al., 2022), and training modular, expert LMs (Jang et al., 2023). Meanwhile, a line of work shows that CoT fine-tuning could improve the reasoning abilities of LMs on a single-seen task (Zelikman et al., 2022; Shridhar et al., 2022; Ho et al., 2022; Fu et al., 2023). As a follow-up study, we CoT fine-tune on 1,060 instruction tasks and observe a significant improvement in terms of zero-shot generalization on multiple tasks.

先前研究表明,指令微调 (instruction tuning) 能够实现模型在未见任务上的泛化能力 (Wei et al., 2021; Sanh et al., 2021; Aribandi et al., 2021; Ouyang et al., 2022; Wang et al., 2022b; Xu et al., 2022)。不同研究团队通过提升跨语言泛化能力 (Muennighoff et al., 2022)、改进标签泛化能力 (Ye et al., 2022) 以及训练模块化专家大语言模型 (Jang et al., 2023) 来优化指令微调。同时,一系列研究表明,思维链 (CoT) 微调可以提升大语言模型在单一已知任务上的推理能力 (Zelikman et al., 2022; Shridhar et al., 2022; Ho et al., 2022; Fu et al., 2023)。作为后续研究,我们在1,060个指令任务上进行思维链微调,观察到模型在多项任务上的零样本泛化能力显著提升。

2.3 Improving Few-Shot Learning

2.3 改进少样本学习

For adapting LMs to new tasks with a few instances, recent work propose advanced parameter efficient fine-tuning (PEFT) methods, where a small number of trainable parameters are added (Hu et al., 2021; Lester et al., 2021; Liu et al., 2021, 2022b; Asai et al., 2022; Liu et al., 2022c). In this work, we show that a simple recipe of (1) applying LoRA (Hu et al., 2021) to a LM capable of per- forming CoT reasoning and (2) CoT fine-tuning on a target task results in strong few-shot performance.

为了让大语言模型能够用少量样本适应新任务,近期研究提出了先进的参数高效微调 (parameter efficient fine-tuning, PEFT) 方法,即仅添加少量可训练参数 (Hu et al., 2021; Lester et al., 2021; Liu et al., 2021, 2022b; Asai et al., 2022; Liu et al., 2022c)。本文证明,只需采用以下简单策略:(1) 对具备思维链 (CoT) 推理能力的大语言模型应用LoRA技术 (Hu et al., 2021),(2) 在目标任务上进行思维链微调,即可实现强大的少样本性能。

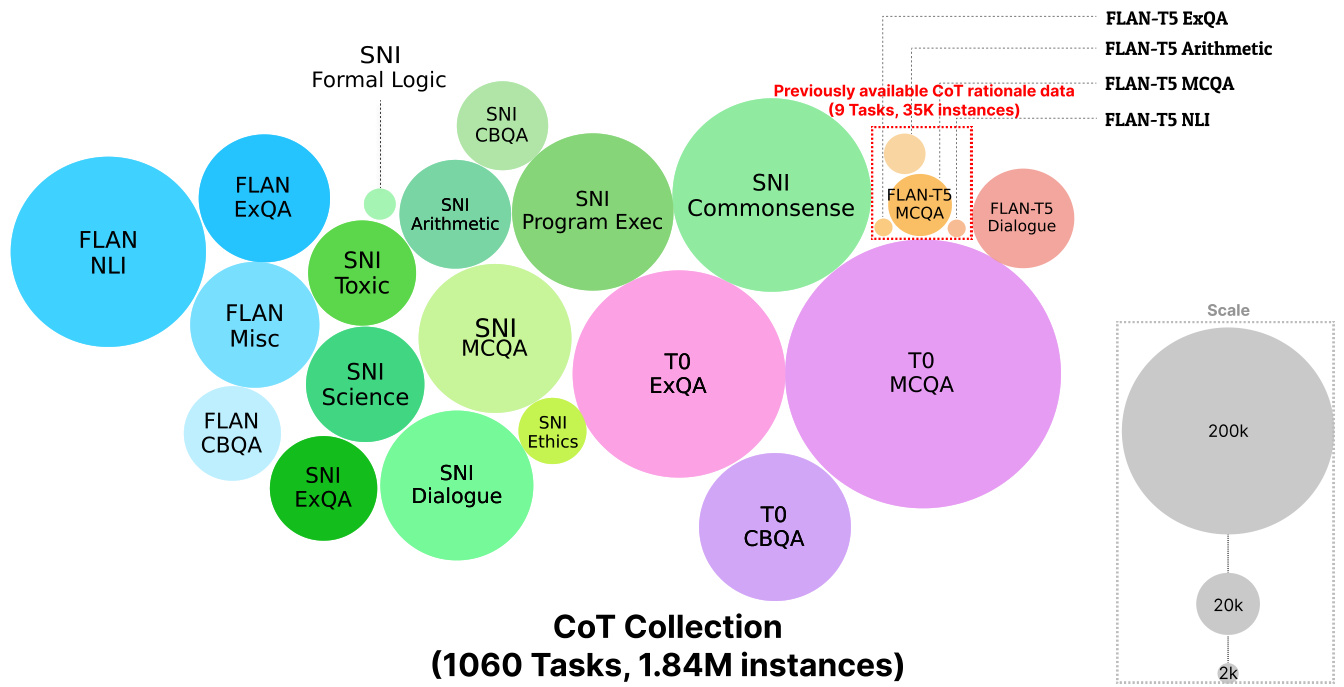

Figure 1: An illustration of the overall task group and dataset source of where we obtained the instances to augment the rationales in COT COLLECTION. Compared to the 9 datasets that provide publicly available rationales (included within ‘Flan-T5 ExQA’, ‘Flan-T5 Arithmetic’, ‘Flan-T5 MCQA’, ‘Flan-T5 NLI’ from the red box), we generate ${\sim}51.29$ times more rationales (1.84 million rationales) and ${\sim}117.78$ times more task variants (1,060 tasks).

图 1: 展示COT COLLECTION中用于增强原理的实例来源及整体任务组构成示意图。与提供公开原理的9个数据集(红色框内的"Flan-T5 ExQA"、"Flan-T5 Arithmetic"、"Flan-T5 MCQA"、"Flan-T5 NLI")相比,我们生成的原理数量多出${\sim}51.29$倍(184万条原理),任务变体数量多出${\sim}117.78$倍(1060个任务)。

3 The COT COLLECTION

3 COT 合集

Despite its effectiveness for CoT fine-tuning, rationale data still remains scarce. To the best of our knowledge, recent work mostly rely on 9 publicly available NLP datasets3 for fine-tuning with rationales (Zelikman et al., 2022; Shridhar et al., 2022; Chung et al., 2022; Ho et al., 2022; Longpre et al., 2023; Fu et al., 2023). This is due to the difficulty in gathering human-authored rationales (Kim et al., 2023). To this end, we create COT COLLECTION, an instruction-tuning dataset that includes $1.84\mathrm{mil}$ - lion rationales augmented across 1,060 tasks4. In this section, we explain the datasets we select to augment into rationales and how we perform the overall augmentation process.

尽管思维链 (CoT) 微调效果显著,但推理数据仍然稀缺。据我们所知,近期研究大多依赖9个公开NLP数据集3进行带推理的微调 (Zelikman et al., 2022; Shridhar et al., 2022; Chung et al., 2022; Ho et al., 2022; Longpre et al., 2023; Fu et al., 2023),这主要源于人工撰写推理的困难性 (Kim et al., 2023)。为此,我们构建了COT COLLECTION指令微调数据集,包含跨1,060个任务4增强的184万条推理数据。本节将阐述所选的数据集及其增强为推理的整体流程。

Broad Overview Given an input $X~=~[I,z]$ composed of an instruction $I$ , and an instance $z$ along with the answer $y$ , we obtain a rationale $r$ by applying in-context learning (ICL) with a large LM. Note that this differs from previous works which focused on generating new instances $z$ using large LMs (West et al., 2022; Liu et al., 2022a; Kim et al., 2022; Honovich et al., 2022; Wang et al., 2022a; Taori et al., 2023; Chiang et al., 2023) while we extend it to generating new rationales $r$ .

概要概览

给定一个由指令 $I$、实例 $z$ 和答案 $y$ 组成的输入 $X~=~[I,z]$,我们通过在大语言模型上应用上下文学习 (ICL) 来获得推理过程 $r$。需要注意的是,这与之前的工作不同,之前的工作主要关注使用大语言模型生成新实例 $z$ (West et al., 2022; Liu et al., 2022a; Kim et al., 2022; Honovich et al., 2022; Wang et al., 2022a; Taori et al., 2023; Chiang et al., 2023),而我们将这一方法扩展到了生成新的推理过程 $r$。

Source Dataset Selection As a source dataset to extract rationales, we choose the Flan Collection (Longpre et al., 2023), consisting of 1,836 di- verse NLP tasks from P3 (Sanh et al., 2021), SuperNatural Instructions (Wang et al., 2022b), Flan (Wei et al., 2021), and some additional dialogue & code datasets. We choose 1,060 tasks, narrowing our focus following the criteria as follows:

源数据集选择

作为提取原理的源数据集,我们选择了Flan Collection (Longpre等人,2023),该集合包含来自P3 (Sanh等人,2021)、SuperNatural Instructions (Wang等人,2022b)、Flan (Wei等人,2021)的1,836个多样化NLP任务,以及一些额外的对话和代码数据集。我们筛选出1,060个任务,并依据以下标准缩小研究范围:

• Generation tasks with long outputs are excluded since the total token length of appending $r$ and $y$ exceeds the maximum output token length (512 tokens) during training. • Datasets that are not publicly available such as DeepMind Coding Contents and Dr Repair (Yasunaga and Liang, 2020) are excluded. • Datasets where the input and output do not correspond to each other in the hugging face datasets (Lhoest et al., 2021) are excluded. • When a dataset appears in common across different sources, we prioritize using the task from P3 first, followed by SNI, and Flan. • During preliminary experiments, we find that for tasks such as sentiment analysis, sentence completion, co reference resolution, and word disambiguation, rationales generated by large LMs are very short and uninformative. We exclude these tasks to prevent negative transfer during multitask learning (Aribandi et al., 2021; Jang et al., 2023).

- 排除了输出较长的生成任务,因为在训练过程中追加 $r$ 和 $y$ 的总 token 长度超过了最大输出 token 长度 (512 tokens)。

- 排除了非公开可用的数据集,例如 DeepMind Coding Contents 和 Dr Repair (Yasunaga and Liang, 2020)。

- 排除了在 Hugging Face 数据集中输入与输出不对应的数据集 (Lhoest et al., 2021)。

- 当同一数据集出现在不同来源时,我们优先使用 P3 的任务,其次是 SNI 和 Flan。

- 在初步实验中,我们发现对于情感分析、句子补全、共指消解和词义消歧等任务,大语言模型生成的原理非常简短且信息量不足。为避免多任务学习中的负迁移 (Aribandi et al., 2021; Jang et al., 2023),我们排除了这些任务。

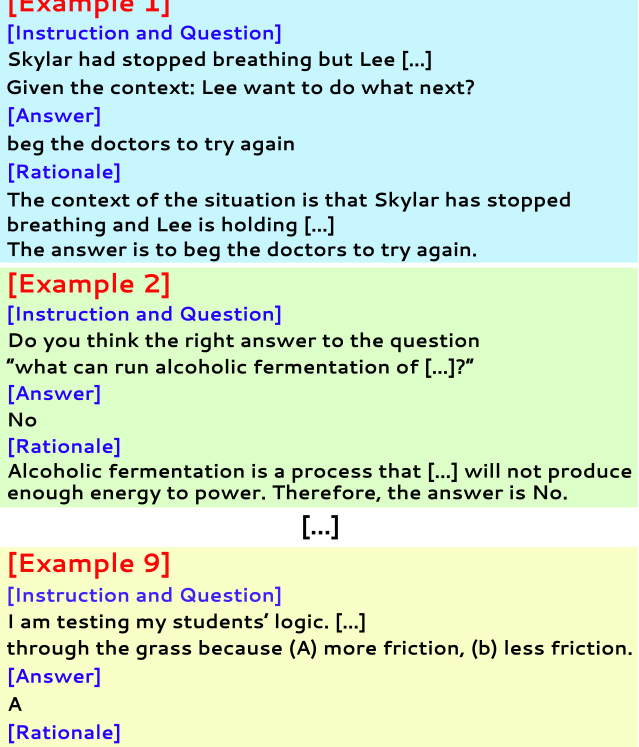

Creating Demonstrations for ICL We first create prompts to apply in-context learning (ICL) with large LMs for augmenting the instances in the selected tasks with rationales. Preparing demonstrations $\mathcal{D}^{t}$ for each task $t$ is the most straightforward, but it becomes infeasible to prepare demonstrations for each task as the number of tasks gets larger. Instead, we assign each task $t$ to $T_{k}$ , a family of tasks that shares a similar task format such as multiple choice QA, closed book QA, and dialogue generation. Each family of tasks share $\mathcal{D}^{T_{k}}$ , which consists of $6\sim8$ demonstrations. These $6\sim8$ demonstrations for each task group $T_{k}$ is manually created by 3 of the authors in this paper. Specifically, given 136 instances sampled from Flan Collection, two annotators are assigned to write a rationale, and the other third annotator conducts an $\mathbf{A}/\mathbf{B}$ testing between the two options. We manually create $\mathcal{D}^{T_{k}}$ across $k~=~26$ task groups. We include the prompts for all of the different task groups in Appendix D.

为ICL创建演示样本

我们首先创建提示(prompt),用于在大语言模型(LLM)中应用上下文学习(ICL),通过添加推理过程来增强选定任务中的实例。为每个任务$t$准备演示样本$\mathcal{D}^{t}$是最直接的方法,但随着任务数量增加,为每个任务单独准备样本变得不可行。因此,我们将每个任务$t$分配到$T_{k}$(共享相似任务格式的任务族,例如多选题QA、闭卷QA和对话生成)。每个任务族共享由$6\sim8$个演示样本组成的$\mathcal{D}^{T_{k}}$。这些样本由本文三位作者手动创建:从Flan Collection采样的136个实例中,两位标注者撰写推理过程,第三位标注者对两个选项进行$\mathbf{A}/\mathbf{B}$测试。我们手动创建了覆盖$k~=~26$个任务组的$\mathcal{D}^{T_{k}}$,所有任务组的提示模板详见附录D。

Rationale Augmentation We use the OpenAI $\mathrm{Codex^{5}}$ to augment rationales. Formally, given $(X_{i}^{t},y_{i}^{t})$ , the $i^{t h}$ instance of a task $t$ , the goal is to generate corresponding rationale $r_{i}^{t}$ . Note that during preliminary experiments, we found that ordering the label in front of the rationale within the demonstration $\mathcal{D}^{T_{k}}$ was crucial to generate good quality rationales. We conjecture this is because ordering the label in front of the rationale loosens the need for the large LM to solve the underlying task and only focus on generating a rationale. However, we also found that in some tasks such as arithmetic reasoning, large LMs fail to generate good-quality rationales. To mitigate this issue, we apply filtering to the augmented rationales. We provide the criteria used for the filtering phase and

原理增强

我们使用OpenAI的$\mathrm{Codex^{5}}$来增强原理。形式上,给定任务$t$的第$i^{t h}$个实例$(X_{i}^{t},y_{i}^{t})$,目标是生成对应的原理$r_{i}^{t}$。需要注意的是,在初步实验中,我们发现将标签放在演示$\mathcal{D}^{T_{k}}$中的原理之前,对于生成高质量的原理至关重要。我们推测这是因为将标签放在原理前面,降低了大语言模型解决底层任务的需求,使其仅专注于生成原理。然而,我们也发现,在某些任务(如算术推理)中,大语言模型无法生成高质量的原理。为了缓解这一问题,我们对增强后的原理进行了过滤。我们提供了过滤阶段使用的标准以及

[Example 1]

[示例 1]

Figure 2: MCQA Prompt used to augment rationales from P3 dataset. Through ICL, the large LM generates a rationale that is conditioned on the ground-truth label.

图 2: 用于增强P3数据集原理的MCQA提示模板。通过少样本学习(ICL) ,大语言模型基于正确答案标签生成相应原理说明。

the filtered cases at Appendix B. Also, we include analysis of the diversity and quality of COT COLLECTION compared to the existing 9 CoT tasks and human-authored rationales in Appendix A.

附录B中筛选的案例。此外,我们在附录A中分析了COT COLLECTION与现有9个CoT任务及人工编写原理在多样性和质量上的对比。

4 Experiments

4 实验

For our main experiments, we use Flan-T5 (Chung et al., 2022) as our base model, and obtain CoTT5 by CoT fine-tuning on the COT COLLECTION. Formally, given $X_{i}^{t}$ , the goal of CoT fine-tuning is to sequentially generate the rationale $r_{i}^{t}$ and answer $y_{i}^{t}$ . To indicate that $r_{i}^{t}$ should be generated before $y_{i}^{t}$ , the trigger phrase ‘Let’s think step by step’ is added during both training and evaluation. We mostly follow the details for training and evaluation from Chung et al. (2022), and provide additional details in Appendix C. In this section, we show how training on COT COLLECTION enhances zeroshot generalization capabilities (Section 4.2) and few-shot adaptation capabilities (Section 4.3).

在我们的主要实验中,我们使用Flan-T5 (Chung et al., 2022)作为基础模型,并通过在COT COLLECTION上进行思维链(CoT)微调获得CoTT5。形式化地,给定$X_{i}^{t}$,CoT微调的目标是顺序生成推理过程$r_{i}^{t}$和答案$y_{i}^{t}$。为了表明$r_{i}^{t}$应在$y_{i}^{t}$之前生成,在训练和评估阶段都添加了触发短语"Let's think step by step"。我们基本遵循Chung et al. (2022)中的训练和评估细节,并在附录C中提供了更多细节。本节将展示在COT COLLECTION上的训练如何增强零样本泛化能力(第4.2节)和少样本适应能力(第4.3节)。

4.1 Evaluation

4.1 评估

We evaluate under two different evaluation methods: Direct Evaluation and CoT Evaluation. For Direct Evaluation on classification tasks, we follow previous works using verbalize rs, choosing the option with the highest probability through comparison of logit values (Schick and Schütze, 2021;

我们在两种不同的评估方法下进行评估:直接评估 (Direct Evaluation) 和思维链评估 (CoT Evaluation)。对于分类任务的直接评估,我们遵循先前使用言语化器 (verbalizer) 的工作,通过比较 logit 值选择概率最高的选项 (Schick and Schütze, 2021;

Sanh et al., 2021; Ye et al., 2022; Jang et al., 2023), and measure the accuracy. For generation tasks, we directly compare the LM’s prediction with the answer and measure the EM score.

Sanh等人, 2021; Ye等人, 2022; Jang等人, 2023), 并测量准确性。对于生成任务, 我们直接将大语言模型的预测与答案进行比较, 并测量EM分数。

When evaluating with CoT Evaluation, smaller LMs including Flan-T5 often do not generate any rationales even with the trigger phrase ‘Let’s think step by step’. Therefore, we adopt a hard constraint of requiring the LM to generate $r_{i}^{t}$ with at least a minimum length of 8 tokens. In classification tasks, we divide into two steps where the LM first generates $r_{i}^{t}$ , and then verbalize rs are applied with a indicator phrase ‘[ANSWER]’ inserted between $r_{i}^{t}$ and the possible options. For generation tasks, we extract the output coming after the indicator phrase. Accuracy metric is used for classification tasks while EM metric is used for generation tasks.

在使用思维链(CoT)评估时,包括Flan-T5在内的小型大语言模型即使使用触发短语"Let's think step by step"也经常无法生成任何推理过程。因此,我们采用硬性约束,要求大语言模型生成的$r_{i}^{t}$至少包含8个token的最小长度。在分类任务中,我们分为两个步骤:首先让大语言模型生成$r_{i}^{t}$,然后在$r_{i}^{t}$和可能选项之间插入指示短语"[ANSWER]"来应用语言化表达。对于生成任务,我们提取指示短语后的输出内容。分类任务使用准确率(Accuracy)作为评估指标,而生成任务则采用精确匹配(EM)指标。

4.2 Zero-shot Generalization

4.2 零样本 (Zero-shot) 泛化

In this subsection, we show how training with COT COLLECTION could effectively improve the LM’s ability to solve unseen tasks. We have three difference experimental set-ups, testing different aspects: Setup #1: training on the entire 1060 tasks in COT COLLECTION and evaluating the reasoning capabilities of LMs with the Bigbench Hard (BBH) benchmark (Suzgun et al., 2022), Setup #2: training only on 163 tasks that T0 (Sanh et al., 2021) used for training (a subset of the COT COLLECTION), and evaluating the linguistic capabilities of LMs with the P3 evaluation benchmark (Sanh et al., 2021), and Setup #3: training with a translated, subset version of COT COLLECTION for each five different languages and evaluating how LMs could perform CoT reasoning in multilingual settings using the MGSM benchmark (Shi et al., 2022).

在本小节中,我们将展示如何通过COT COLLECTION训练有效提升大语言模型解决未知任务的能力。我们设计了三种不同的实验设置以测试不同方面:

设置#1:在COT COLLECTION全部1060个任务上训练,并使用Bigbench Hard (BBH)基准测试(Suzgun et al., 2022)评估大语言模型的推理能力;

设置#2:仅在T0 (Sanh et al., 2021)训练使用的163个任务(COT COLLECTION子集)上训练,通过P3评估基准(Sanh et al., 2021)测试大语言模型的语言能力;

设置#3:使用COT COLLECTION翻译后的五语言子集进行训练,并基于MGSM基准(Shi et al., 2022)评估大语言模型在多语言场景下的思维链推理表现。

Setup #1: CoT Fine-tuning with 1060 CoT Tasks We first perform experiments with our main model, CoT-T5, by training Flan-T5 on the entire COT COLLECTION and evaluate on the BBH benchmark (Suzgun et al., 2022). In addition to evaluating Flan-T5, we compare the performances of different baselines such as (1) T5-LM (Raffel et al., 2020): the original base model of Flan-T5, (2) T0 (Sanh et al., 2021): an instruction-tuned LM trained with P3 instruction dataset, (3) TkInstruct (Wang et al., 2022b): an instruction-tuned LM trained with SNI instruction dataset, and (4) GPT-3 (Brown et al., 2020): a pre-trained LLM with 175B parameters. For ablation purposes, we also train T5-LM with COT COLLECTION (denoted as ${}^{5}\mathrm{T}5+\mathrm{CoT}$ FT’). Note that FLAN Collection includes 15 million instances, hence ${\sim}8$ times larger compared to our COT COLLECTION.

实验设置1:基于1060项CoT任务进行微调

我们首先在完整COT COLLECTION数据集上对Flan-T5进行训练,并在BBH基准测试(Suzgun et al., 2022)中评估主要模型CoT-T5的表现。除Flan-T5外,我们还对比了以下基线模型:(1) T5-LM (Raffel et al., 2020):Flan-T5的原始基础模型;(2) T0 (Sanh et al., 2021):基于P3指令数据集微调的指令调优模型;(3) TkInstruct (Wang et al., 2022b):基于SNI指令数据集微调的指令调优模型;(4) GPT-3 (Brown et al., 2020):拥有1750亿参数的预训练大语言模型。为进行消融实验,我们同时使用COT COLLECTION训练了T5-LM (记为${}^{5}\mathrm{T}5+\mathrm{CoT}$ FT')。需注意FLAN Collection包含1500万实例,规模约为COT COLLECTION的${\sim}8$倍。

Table 1: Evaluation performance on all the 27 unseen datasets from BBH benchmark, including generation tasks. All evaluations are held in a zero-shot setting. The best comparable performances are bolded and second best underlined.

| 方法 | CoT | Direct | 总平均 |

|---|---|---|---|

| T5-LM-3B | 26.68 | 26.96 | 26.82 |

| TO-3B | 26.64 | 27.45 | 27.05 |

| TK-INSTRUCT-3B | 29.86 | 29.90 | 29.88 |

| TK-INSTRUCT-11B | 33.60 | 30.71 | 32.16 |

| T0-11B | 31.83 | 33.57 | 32.70 |

| FLAN-T5-3B | 34.06 | 37.14 | 35.60 |

| GPT-3(175B) | 38.30 | 33.60 | 38.30 |

| FLAN-T5-11B | 38.57 | 40.99 | 39.78 |

| T5-3B+CoTFT | 37.95 | 35.52 | 36.74 |

| CoT-T5-3B | 38.40 | 36.18 | 37.29 |

| T5-11B+CoT FT | 40.02 | 38.76 | 39.54 |

| CoT-T5-11B | 42.20 | 42.56 | 42.38 |

表 1: 在BBH基准测试的27个未见数据集(包括生成任务)上的评估性能。所有评估均在零样本设置下进行。最佳可比性能加粗显示,次佳性能加下划线标注。

The results on BBH benchmark are shown across Table 1 and Table 2. In Table 1, CoT-T5 (3B & 11B) achieves a $+4.34%$ and $+2.60%$ improvement over Flan-T5 (3B & 11B) with CoT Evaluation. Surprisingly, while CoT-T5-3B CoT performance improves $+4.34%$ with the cost of $0.96%$ degradation in Direct Evalution, CoT-T5-11B’s Direct Evaluation performance even improves, resulting in a $+2.57%$ total average improvement. Since COT COLLECTION only includes instances augmented with rationales, these results show that CoT fine-tuning could improve the LM’s capabilities regardless of the evaluation method. Also, T5-3B $+\mathrm{CoT}$ FT and $\ensuremath{\mathrm{T}}5\ensuremath{-}11\ensuremath{\mathrm{B}}+\ensuremath{\mathrm{CoT}}.$ FT outperforms FLAN-T5-3B and FLAN-T5-11B by a $+1.45%$ and $+3.89%$ margin, respectively, when evaluated with CoT evaluation. Moreover, $\mathrm{T}5{-}3\mathbf{B}+\mathrm{CoT}$ FINE-TUNING outperforms ${\sim}4$ times larger models such as T0-11B and Tk-Instruct-11B in both Direct and CoT Evaluation. The overall results indicate that (1) CoT fine-tuning on a diverse number of tasks enables smaller LMs to outperform larger LMs and (2) training with FLAN Collection and CoT Collection provides complementary improvements to LMs under different evaluation methods; CoT-T5 obtains good results across both evaluation methods by training on both datasets.

BBH基准测试结果如表1和表2所示。在表1中,CoT-T5 (3B & 11B) 通过CoT评估相比Flan-T5 (3B & 11B) 分别实现了$+4.34%$和$+2.60%$的提升。值得注意的是,虽然CoT-T5-3B的CoT性能提升$+4.34%$的同时导致直接评估下降$0.96%$,但CoT-T5-11B的直接评估性能反而有所提升,最终实现$+2.57%$的总平均改进。由于COT COLLECTION仅包含增强推理链的实例,这些结果表明无论采用何种评估方法,CoT微调都能提升语言模型的能力。此外,T5-3B $+\mathrm{CoT}$ FT和$\ensuremath{\mathrm{T}}5\ensuremath{-}11\ensuremath{\mathrm{B}}+\ensuremath{\mathrm{CoT}}$ FT在CoT评估下分别以$+1.45%$和$+3.89%$的优势超越FLAN-T5-3B和FLAN-T5-11B。更重要的是,$\mathrm{T}5{-}3\mathbf{B}+\mathrm{CoT}$微调模型在直接评估和CoT评估中均优于T0-11B和Tk-Instruct-11B等约4倍规模的模型。整体结果表明:(1) 在多任务上进行CoT微调可使较小语言模型超越较大模型;(2) FLAN Collection与CoT Collection训练能为不同评估方法下的语言模型提供互补性改进——通过联合训练这两个数据集,CoT-T5在两种评估方法下均取得良好表现。

In Table 2, CoT-T5-11B obtains same or better results on 15 out of 23 tasks when evaluated with Direct evaluation, and 17 out of 23 tasks when evaluated with CoT Evaluation compared to Flan

在表2中,与Flan相比,CoT-T5-11B采用直接评估 (Direct evaluation) 时在23个任务中的15个上取得相同或更好的结果,采用思维链评估 (CoT Evaluation) 时则在23个任务中的17个上表现更优。

Table 2: Evaluation performance on 23 unseen classification datasets from BBH benchmark. Scores of Vicuna, ChatGPT, Codex (teacher model of COT-T5), GPT-4 are obtained from Chung et al. (2022) and Mukherjee et al. (2023). Evaluations are held in a zero-shot setting. The best comparable performances are bolded and second best underlined among the open-sourced LMs.

表 2: BBH基准测试中23个未见分类数据集的评估表现。Vicuna、ChatGPT、Codex (COT-T5的教师模型) 和GPT-4的分数来自Chung等人 (2022) 和Mukherjee等人 (2023) 。评估在零样本设置下进行。开源大语言模型中最佳表现加粗显示,次佳表现加下划线。

| Task | CoT-T5-11B CoT | CoT-T5-11B Direct | FLAN-T5-11B CoT | FLAN-T5-11B Direct | VICUNA-13B Direct | CHATGPT Direct | CODEX Direct | GPT-4 Direct |

|---|---|---|---|---|---|---|---|---|

| BOOLEANEXPRESSIONS | 65.6 | 59.2 | 51.6 | 56.8 | 40.8 | 82.8 | 88.4 | 77.6 |

| CAUSALJUDGMENT | 60.4 | 60.2 | 58.3 | 61.0 | 42.2 | 57.2 | 63.6 | 59.9 |

| DATE UNDERSTANDING | 52.0 | 51.0 | 46.8 | 54.8 | 10.0 | 42.8 | 63.6 | 74.8 |

| DISAMBIGUATION QA | 63.4 | 68.2 | 63.2 | 67.2 | 18.4 | 57.2 | 67.2 | 69.2 |

| FORMAL FALLACIES | 51.2 | 55.2 | 54.4 | 55.2 | 47.2 | 53.6 | 52.4 | 64.4 |

| GEOMETRIC SHAPES | 22.0 | 10.4 | 12.4 | 21.2 | 3.6 | 25.6 | 32.0 | 40.8 |

| HYPERBATON | 65.2 | 64.2 | 55.2 | 70.8 | 44.0 | 69.2 | 60.4 | 62.8 |

| LOGICAL DEDUCTION (5) | 48.2 | 54.4 | 51.2 | 53.6 | 4.8 | 38.8 | 32.4 | 66.8 |

| LOGICAL DEDUCTION (7) | 52.4 | 60.6 | 57.6 | 60.0 | 1.2 | 39.6 | 26.0 | 66.0 |

| LOGICAL DEDUCTION (3) | 55.4 | 75.0 | 66.4 | 74.4 | 16.8 | 60.4 | 52.8 | 94.0 |

| MOVIE RECOMMENDATION | 44.6 | 52.8 | 32.4 | 36.4 | 43.4 | 55.4 | 84.8 | 79.5 |

| NAVIGATE | 59.0 | 60.0 | 60.8 | 61.6 | 46.4 | 55.6 | 50.4 | 68.8 |

| PENGUINS IN A TABLE | 39.1 | 41.8 | 41.8 | 41.8 | 15.1 | 45.9 | 66.4 | 76.7 |

| REASONING COLORED OBJ. | 32.6 | 33.2 | 22.8 | 23.2 | 12.0 | 47.6 | 67.6 | 84.8 |

| RUIN NAMES | 42.8 | 41.6 | 31.6 | 34.4 | 15.7 | 56.0 | 75.2 | 89.1 |

| SALIENT TRANS ERR. | 43.8 | 49.2 | 35.6 | 49.2 | 2.0 | 40.8 | 62.0 | 62.4 |

| SNARKS | 67.7 | 66.2 | 59.5 | 70.2 | 28.1 | 59.0 | 61.2 | 87.6 |

| SPORTS UNDERSTANDING | 64.8 | 66.4 | 56.0 | 60.0 | 48.4 | 79.6 | 72.8 | 84.4 |

| TEMPORAL SEQUENCES | 27.4 | 28.8 | 24.4 | 28.8 | 16.0 | 35.6 | 77.6 | 98.0 |

| TRACKING SHUFF OBJ.(5) | 20.0 | 13.2 | 19.6 | 15.2 | 9.2 | 18.4 | 20.4 | 25.2 |

| TRACKING SHUFF OBJ.(7) | 18.4 | 9.6 | 13.2 | 12.0 | 5.6 | 15.2 | 14.4 | 25.2 |

| TRACKING SHUFF OBJ.(3) | 41.8 | 31.2 | 28.8 | 24.4 | 23.2 | 31.6 | 37.6 | 42.4 |

| WEB OF LIES | 57.0 | 51.6 | 52.8 | 50.0 | 41.2 | 56.0 | 51.6 | 49.6 |

| AVERAGE | 47.60 | 48.00 | 43.32 | 47.05 | 23.30 | 48.90 | 52.80 | 67.40 |

Table 3: Evaluation performance on 11 different unseen P3 dataset (Sanh et al., 2021) categorized into 4 task categories. We report the direct performance of the baselines since they were not CoT fine-tuned on instruction data. The best comparable performances are bolded and second best underlined. We exclude Flan-T5 and CoT-T5 since they were trained on the unseen tasks (tasks from FLAN and SNI overlap with the P3 Eval datasets), breaking unseen task assumption.

| 方法 | 自然语言推理 | 句子补全 | 指代消解 | 词义消歧 | 总平均 | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| RTE | CB | AN.R1 | AN.R2 | AN.R3 | COPA | Hellasw. | StoryC. | Winogr. | WSC | WiC | ||

| T5-3B (Raffel et al., 2020) | 53.03 | 34.34 | 32.89 | 33.76 | 33.82 | 54.88 | 27.00 | 48.16 | 50.64 | 54.09 | 50.30 | 42.99 |

| TO-3B (Sanh et al., 2021) | 60.61 | 48.81 | 35.10 | 33.27 | 33.52 | 75.13 | 27.18 | 84.91 | 50.91 | 65.00 | 51.27 | 51.43 |

| ROE-3B (Jang et al., 2023) | 64.01 | 43.57 | 35.49 | 34.64 | 31.22 | 79.25 | 34.60 | 86.33 | 61.60 | 62.21 | 52.97 | 53.48 |

| KIC-770M (Pan et al., 2022) | 74.00 | 67.90 | 36.30 | 35.00 | 37.60 | 85.30 | 29.60 | 94.40 | 55.30 | 65.40 | 52.40 | 57.56 |

| FLIPPED-3B (Ye et al., 2022) | 71.05 | 57.74 | 39.99 | 37.05 | 37.73 | 89.88 | 41.64 | 95.88 | 58.56 | 58.37 | 50.42 | 58.03 |

| GPT-3 (175B) (Brown et al., 2020) | 63.50 | 46.40 | 34.60 | 35.40 | 34.50 | 91.00 | 78.90 | 83.20 | 70.20 | 65.40 | 45.92 | 59.00 |

| TO-11B (Sanh et al., 2021) | 80.83 | 70.12 | 43.56 | 38.68 | 41.26 | 90.02 | 33.58 | 92.40 | 59.94 | 61.45 | 56.58 | 60.76 |

| T5-3B+CoTFT-EVALW/DIRECT | 69.96 | 58.69 | 37.58 | 36.00 | 37.44 | 84.59 | 40.92 | 90.47 | 55.40 | 64.33 | 51.53 | 56.99 |

| TO-3B+CoTFT-EVALW/DIRECT | 80.79 | 65.00 | 39.49 | 35.13 | 38.58 | 88.27 | 41.04 | 92.13 | 56.40 | 65.96 | 53.60 | 59.67 |

| T5-3B+CoTFT-EVALW/CoT | 80.61 | 69.17 | 40.24 | 36.67 | 40.13 | 90.10 | 41.08 | 93.00 | 56.47 | 55.10 | 56.73 | 59.94 |

| TO-3B+CoTFT-EVALW/CoT | 80.25 | 72.62 | 41.71 | 37.22 | 41.89 | 90.88 | 39.50 | 94.47 | 57.47 | 50.58 | 54.27 | 60.08 |

表 3: 在11个未见过的P3数据集 (Sanh et al., 2021) 上的评估性能, 分为4个任务类别。我们报告了基线的直接性能, 因为它们未在指令数据上进行思维链微调。最佳可比性能加粗显示, 次佳性能加下划线。我们排除了Flan-T5和CoT-T5, 因为它们是在未见任务上训练的 (FLAN和SNI的任务与P3评估数据集存在重叠), 这违背了未见任务假设。

T5-11B. Interestingly, Vicuna (Chiang et al., 2023), a LM trained on long-form dialogues between users and GPT models, perform much worse compared to both CoT-T5 and Flan-T5. We conjecture that training on instruction datasets from existing academic benchmarks consisting CoT Collection and Flan Collection is more effective in enabling LMs to solve reasoning tasks compared to chat LMs.

T5-11B。有趣的是,Vicuna (Chiang et al., 2023) 这个基于用户与GPT模型长对话训练的大语言模型,表现远逊于CoT-T5和Flan-T5。我们推测,相比聊天型大语言模型,使用包含CoT Collection和Flan Collection的现有学术基准指令数据集进行训练,能更有效地提升大语言模型解决推理任务的能力。

Setup #2: CoT Fine-tuning with 163 CoT Tasks (T0 Setup) To examine whether the effect of CoT fine-tuning is dependent on large number of tasks and instances, we use the P3 training subset from the COT COLLECTION consisted of 644K instances from 163 tasks, and apply CoT finetuning to T0 (3B) (Sanh et al., 2021) and T5-LM (3B) (Raffel et al., 2020). Note that T0 is trained with 12M instances, hence ${\sim}18.63$ times larger. Then, we evaluate on the P3 evaluation benchmark which consists of 11 different NLP datasets. In addition to the baselines from the previous section (T5-LM, T0, and GPT-3), we also include LMs that are trained on the same T0 setup for comparison such as, (1) RoE (Jang et al., 2023): a modular expert LM that retrieves different expert models depending on the unseen task, (2) KiC (Pan et al., 2022): a retrieval-augmented model that is instruction-tuned to retrieve knowledge from a KB memory, and (3) Flipped (Ye et al., 2022): an instruction-tuned model that is trained to generate the instruction in order to resolve the LM overfitting to the output label as baseline models.

设置 #2: 使用163个思维链任务进行微调 (T0设置)

为验证思维链微调效果是否依赖于大量任务和实例,我们采用COT COLLECTION中的P3训练子集(包含来自163个任务的644K实例),对T0(3B) [Sanh等人,2021]和T5-LM(3B) [Raffel等人,2020]进行思维链微调。需注意T0使用12M实例训练,规模约为${\sim}18.63$倍。随后在包含11个不同NLP数据集的P3评估基准上进行测试。除上一节的基线模型(T5-LM、T0和GPT-3)外,我们还纳入采用相同T0设置训练的对比模型:(1) RoE [Jang等人,2023]:根据未见任务检索不同专家模型的模块化专家大语言模型;(2) KiC [Pan等人,2022]:通过指令调优从知识库内存检索知识的检索增强模型;(3) Flipped [Ye等人,2022]:通过生成指令来解决大语言模型输出标签过拟合问题的指令调优模型。

The results are shown in Table 3. Surprisingly, $\mathrm{T}5{-}3\mathbf{B}+\mathrm{CoT}\mathbf{F}$ T outperforms T0-3B by a $+8.24%$ margin when evaluated with CoT Evaluation, while using ${\sim}18.63$ times less instances. This supports that CoT fine-tuning is data efficient, being effective even with less number of instances and tasks. Moreover, $\mathrm{T}0{-}3\mathbf{B}+\mathrm{CoT}$ FT improves T0-3B by $+8.65%$ on average accuracy. When compared with T0-11B with ${\sim}4$ times more number of parameters, it achieves better performance at sentence completion, and word sense disambiguation (WSD) tasks, and obtains similar performances at natural language inference and co reference resolution tasks.

结果如表 3 所示。令人惊讶的是,在使用 CoT 评估时,$\mathrm{T}5{-}3\mathbf{B}+\mathrm{CoT}\mathbf{F}$ 的表现比 T0-3B 高出 $+8.24%$,而使用的实例数量却减少了 ${\sim}18.63$ 倍。这表明 CoT 微调具有数据高效性,即使在实例和任务数量较少的情况下也能有效工作。此外,$\mathrm{T}0{-}3\mathbf{B}+\mathrm{CoT}$ FT 将 T0-3B 的平均准确率提高了 $+8.65%$。与参数数量多出 ${\sim}4$ 倍的 T0-11B 相比,它在句子补全和词义消歧 (WSD) 任务上表现更好,在自然语言推理和共指消解任务上表现相近。

Setup #3: Multilingual Adaptation with CoT Fine-tuning In previous work, Shi et al. (2022) proposed MGSM, a multilingual reasoning benchmark composed of 10 different languages. In this subsection, we conduct a toy experiment to examine whether CoT fine-tuning could enable LMs to reason step-by-step in multilingual settings as well, using a subset of 5 languages (Korean, Russian, French, Chineses, Japanese) from MGSM.

设置 #3: 采用思维链微调的多语言适应

在之前的工作中,Shi等人 (2022) 提出了MGSM,这是一个由10种不同语言组成的多语言推理基准。在本小节中,我们进行了一个小规模实验,以检验思维链微调是否也能使大语言模型在多语言环境下进行逐步推理,实验中使用了MGSM中的5种语言子集 (韩语、俄语、法语、汉语、日语)。

In Table 4, current smaller LMs can be divided into three categories: (1) Flan-T5, a LM that is CoT fine-tuned with mostly English instruction data, (2) MT5 (Xue et al., 2021), a LM pretrained on diverse languages, but isn’t instruction tuned or CoT finetuned, (3) MT0 (Mu en nigh off et al., 2022), a LM that is instruction-tuned on diverse languages, but isn’t CoT fine-tuned. In relatively underrepresented languages such as Korean, Japanese, and Chinese, all three LMs get close to zero accuracy.

在表4中,当前较小规模的大语言模型可分为三类:(1) Flan-T5,一个主要使用英语指令数据进行思维链(CoT)微调的大语言模型;(2) MT5 (Xue et al., 2021),一个在多语言上预训练但未进行指令调优或CoT微调的大语言模型;(3) MT0 (Muennighoff et al., 2022),一个在多语言上进行指令调优但未进行CoT微调的大语言模型。在韩语、日语和汉语等相对资源不足的语言中,这三种大语言模型的准确率都接近于零。

A natural question arises whether training a multilingual LM that could reason step-by-step on different languages is viable. As a preliminary research, we examine whether CoT Fine-tuning on a single language with a small amount of CoT data could enable LMs to avoid achieving near zero score such as Korean, Chinese and Japanese subsets of MGSM. Since there is no publicly available multilingual instruction dataset, we translate 60K $\sim80\mathrm{K}$ instances from COT COLLECTION for each 5 languages using ChatGPT (OpenAI, 2022), and CoT fine-tune mT5 and mT0 on each of them.

一个自然的问题是,训练一个能在不同语言上逐步推理的多语言大语言模型是否可行。作为初步研究,我们探讨了在单一语言上使用少量思维链 (CoT) 数据进行微调,是否能让大语言模型避免在MGSM的韩语、汉语和日语子集等测试中接近零分的情况。由于目前没有公开的多语言指令数据集,我们使用ChatGPT (OpenAI, 2022) 将COT COLLECTION中的6万$\sim80\mathrm{K}$条实例分别翻译成5种语言,并对mT5和mT0模型进行了各语言的思维链微调。

The results are shown in Table 4. Across all the 5 different languages, CoT fine-tuning brings

结果如表 4 所示。在全部 5 种不同语言中,CoT (Chain-of-Thought) 微调带来了

| 方法 | ko | ru | fr | zh | ja |

|---|---|---|---|---|---|

| FLAN-T5-3B | 0.0 | 2.8 | 7.2 | 0.0 | 0.0 |

| FLAN-T5-11B | 0.0 | 5.2 | 13.2 | 0.0 | 0.0 |

| MT5-3.7B | 0.0 | 1.2 | 2.0 | 0.8 | 0.8 |

| MT0-3.7B | 0.0 | 4.8 | 7.2 | 1.6 | 2.4 |

| GPT-3 3(175B) | 0.0 | 4.4 | 10.8 | 6.8 | 0.8 |

| MT5-3.7B+CoTFT | 3.2 | 6.8 | 9.6 | 6.0 | 7.6 |

| MT0-3.7B+CoTFT | 7.6 | 10.4 | 15.6 | 11.2 | 11.0 |

Table 4: Evaluation performance on MGSM benchmark (Shi et al., 2022) across 5 languages (Korean, Russian, French, Chinese, Japanese, respectively). All evaluations are held in a zero-shot setting with CoT Evaluation except GPT-3 using a 6-Shot prompt for ICL. The best comparable performances are bolded and second best underlined. Note that $\mathrm{^{4}M T}5\mathrm{-}3.7\mathrm{B}+$ COT FT’ and $\mathrm{^{\circ}M T0-}3.7\mathrm{B}+\mathrm{CoT}$ FT’ are trained on a single language instead of multiple languages as mT5 and mT0.

表 4: MGSM基准测试(Shi et al., 2022)在5种语言(韩语、俄语、法语、汉语、日语)上的评估性能。除GPT-3使用6样本提示进行ICL外,所有评估均在零样本设置下使用思维链(CoT)评估进行。最佳性能加粗显示,次佳性能加下划线标注。注意 $\mathrm{^{4}M T}5\mathrm{-}3.7\mathrm{B}+$ CoT FT 和 $\mathrm{^{\circ}M T0-}3.7\mathrm{B}+\mathrm{CoT}$ FT 是在单一语言而非多语言(如mT5和mT0)上训练的。

about non-trivial gains in performance. Even for relatively low-resource languages such as Korean Japanese, and Chinese, CoT fine-tuning on the specific language allows the underlying LM to perform mathematical reasoning in the target language, which are considered very difficult (Shi et al., 2022). Considering that only a very small number of instances were used for language-specific adaptation $(60\mathrm{k}{-}80\mathrm{k})$ , CoT fine-tuning shows potential for efficient language adaptation.

在性能上取得显著提升。即使对于韩语、日语和中文等资源相对较少的语言,针对特定语言进行思维链 (CoT) 微调也能使底层大语言模型在目标语言中执行数学推理任务(这类任务通常被认为极具挑战性 [Shi et al., 2022])。值得注意的是,仅需极少量语种适配样本 $(60\mathrm{k}{-}80\mathrm{k})$ 即可实现这一目标,这表明CoT微调在高效语言适配方面具有潜力。

However, it is noteworthy that we limited our setting to training/evaluating on a single target language, without exploring the cross-lingual transfer of CoT capabilities among varied languages. The chief objective of this experimentation was to ascertain if introducing a minimal volume of CoT data could facilitate effective adaptation to the target language, specifically when addressing reasoning challenges. Up to date, no hypothesis has suggested that training with CoT in various languages could enable cross-lingual transfer of CoT abilities among different languages. We identify this as a promising avenue for future exploration.

然而值得注意的是,我们的实验设置仅限于在单一目标语言上进行训练/评估,并未探索思维链 (CoT) 能力在不同语言间的跨语言迁移。本实验的主要目的是验证:当处理推理任务时,引入少量思维链数据是否有助于有效适应目标语言。迄今为止,尚无假设表明用多种语言的思维链数据进行训练可以实现不同语言间思维链能力的跨语言迁移。我们认为这是一个值得未来探索的研究方向。

4.3 Few-shot Generalization

4.3 少样本 (Few-shot) 泛化

In this subsection, we show how CoT-T5 performs in a few-shot adaptation setting where a limited number of instances from the target task can be used for training, which is sometimes more likely in real-world scenarios.

在本小节中,我们将展示CoT-T5在少样本适应(few-shot adaptation)设置下的表现,该设置允许使用目标任务的有限实例进行训练,这在实际场景中更为常见。

Dataset Setup We choose 4 domain-specific datasets from legal and medical domains including LEDGAR (Tuggener et al., 2020), Case Hold (Zheng et al., 2021), MedNLI (Romanov and Shivade, 2018), and PubMedQA (Jin et al., 2019). To simulate a few-shot setting, we randomly sample 64 instances from the train split of each dataset.

数据集设置

我们从法律和医疗领域选择了4个特定领域数据集,包括LEDGAR (Tuggener et al., 2020)、Case Hold (Zheng et al., 2021)、MedNLI (Romanov and Shivade, 2018) 和 PubMedQA (Jin et al., 2019)。为模拟少样本设置,我们从每个数据集的训练集中随机抽取64个实例。

Table 5: Evaluation performance on 4 domain-specific datasets. FT. denotes Fine-tuning, COT FT. denotes CoT fine-tuning, and COT PT. denotes CoT Prompting. The best comparable performances are bolded and second best underlined. For a few-shot adaptation, we use 64 randomly sampled instances from each dataset.

表 5: 在4个特定领域数据集上的评估性能。FT. 表示微调 (Fine-tuning),COT FT. 表示思维链微调 (CoT fine-tuning),COT PT. 表示思维链提示 (CoT Prompting)。最佳性能加粗显示,次佳性能加下划线。对于少样本适应,我们从每个数据集中随机抽取64个样本。

| 方法 | #训练参数量 | Ledgar | Case Hold | MedNLI | PubmedQA | 总平均 |

|---|---|---|---|---|---|---|

| Flan-T5-3B+全参数微调 | 2.8B | 52.60 | 61.40 | 66.82 | 66.28 | 61.78 |

| Flan-T5-3B+全参数思维链微调 | 2.8B | 53.60 | 58.80 | 65.89 | 65.89 | 61.05 |

| CoT-T5-3B + 全参数思维链微调 (Ours) | 2.8B | 51.90 | 60.60 | 67.16 | 68.12 | 61.95 |

| Flan-T5-3B + LoRA微调 | 2.35M | 53.20 | 58.80 | 61.60 | 67.18 | 60.19 |

| Flan-T5-3B+LoRA思维链微调 | 2.35M | 51.20 | 61.60 | 62.59 | 66.06 | 60.36 |

| CoT-T5-3B + LoRA思维链微调 (Ours) | 2.35M | 54.80 | 63.60 | 68.00 | 69.66 | 64.02 |

| Flan-T5-11B + LoRA微调 | 4.72M | 55.30 | 64.90 | 75.91 | 70.25 | 66.59 |

| Flan-T5-11B+LoRA思维链微调 | 4.72M | 52.10 | 65.50 | 71.63 | 71.60 | 65.21 |

| CoT-T5-11B + LoRA思维链微调 (Ours) | 4.72M | 56.10 | 68.30 | 78.02 | 73.42 | 68.96 |

| Claude (Anthropic, 2023) + 上下文学习 | 0 | 55.70 | 57.20 | 75.94 | 54.58 | 60.85 |

| Claude (Anthropic,2023)+ 思维链提示 | 0 | 34.80 | 43.60 | 76.51 | 52.06 | 51.74 |

| ChatGPT (OpenAI, 2022) + 上下文学习 | 0 | 51.70 | 32.10 | 70.53 | 65.59 | 54.98 |

| ChatGPT (OpenAI, 2022) + 思维链提示 | 0 | 51.00 | 18.90 | 63.71 | 25.22 | 39.70 |

We report the average accuracy across 3 runs with different random seeds. We augment rationales for the 64 training instances using the procedure described in Section 3 for the rationale augmentation phase, utilizing the MCQA prompt from P3 dataset. In an applied setting, practitioners could obtain rationales written by human experts.

我们报告了使用不同随机种子进行3次运行的平均准确率。在理由增强阶段,我们采用P3数据集中的MCQA提示,按照第3节描述的程序对64个训练实例的理由进行了增强。在实际应用中,从业者可以获取由人类专家撰写的理由。

Training Setup We compare Flan-T5 & CoT-T5, across 3B and 11B scale and explore 4 different approaches for few-shot adaptation: (1) regular finetuning, (2) CoT fine-tuning, (3) LoRA fine-tuning, and (4) LoRA CoT fine-tuning. When applying Lora, we use a rank of 4 and train for 1K steps following Liu et al. (2022b). This results in training 2.35M parameters for 3B scale models and 4.72M parameters for 11B scale models. Also, we include Claude (Anthropic, 2023) and ChatGPT (OpenAI, 2022) as ICL baselines by appending demonstrations up to maximum context length6. Specifically, For CoT prompting, the demonstrations are sampled among 64 augmented rationales are used.

训练设置

我们比较了Flan-T5和CoT-T5在3B和11B规模下的表现,并探索了四种少样本适应方法:(1) 常规微调,(2) 思维链微调,(3) LoRA微调,(4) LoRA思维链微调。应用LoRA时,我们采用秩为4的配置,并按照Liu等人(2022b)的方法训练1000步。这使得3B规模模型训练参数达235万,11B规模模型达472万。同时,我们将Claude(Anthropic, 2023)和ChatGPT(OpenAI, 2022)作为上下文学习基线,通过添加示例直至最大上下文长度6。具体而言,对于思维链提示,从64个增强推理样本中选取演示案例。

Effect of LoRA The experimental results are shown in Table 5. Overall, CoT fine-tuning CoTT5 integrated with LoRA obtains the best results overall. Surprisingly for Flan-T5, applying full fine-tuning obtains better performance compared to its counterpart using LoRA fine-tuning. However, when using CoT-T5, LoRA achieves higher performance compared to full fine-tuning. We conjecture this to be the case because introducing only a few parameters enables CoT-T5 to maintain the CoT ability acquired during CoT fine-tuning.

LoRA的影响

实验结果如表5所示。总体而言,集成LoRA的CoT微调CoTT5取得了最佳效果。令人惊讶的是,对于Flan-T5,相比使用LoRA微调的版本,全参数微调表现更优。但在使用CoT-T5时,LoRA的性能反而超越了全参数微调。我们推测这是因为仅引入少量参数能使CoT-T5保持其在CoT微调阶段获得的思维链(CoT)能力。

Fine-tuning vs. CoT Fine-tuning While CoT fine-tuning obtains similar or lower performance compared to regular fine-tuning in Flan-T5, CoTT5 achieves higher performance with CoT finetuning compared to Flan-T5 regular fine-tuning. This results in CoT-T5 in combination with CoT fine-tuning showing the best performance in fewshot adaptation setting.

微调 vs. 思维链 (CoT) 微调

虽然 Flan-T5 的思维链微调相比常规微调取得了相似或更低的性能,但 CoT-T5 通过思维链微调实现了比 Flan-T5 常规微调更高的性能。这使得结合思维链微调的 CoT-T5 在少样本适应场景中表现出最佳性能。

Fine-tuning vs. ICL Lastly, fine-tuning methods obtain overall better results compared to ICL methods utilizing much larger, proprietary LLMs. We conjecture this to be the case due to the long input length of legal and medical datasets, making appending all available demonstrations (64) impossible. While increasing the context length could serve as a temporary solution, it would still mean that the inference time will increase quadratically in proportion to the input length, which makes ICL computationally expensive.

微调 vs 上下文学习

最后,与使用规模更大、专有大语言模型的上下文学习方法相比,微调方法总体上能获得更好的结果。我们推测这是由于法律和医疗数据集的输入长度较长,导致无法附加所有可用示例(64个)。虽然增加上下文长度可以作为一种临时解决方案,但这仍意味着推理时间将随输入长度呈二次方增长,使得上下文学习在计算上成本高昂。

5 Analysis of of CoT Fine-tuning

5 CoT微调分析

In this section, we conduct experiments to address the following two research questions:

在本节中,我们通过实验来解答以下两个研究问题:

• For practitioners, is it more effective to augment CoT rationales across diverse tasks or more instances with a fixed number of tasks? During CoT fine-tuning, does the LM maintain its performance on in-domain tasks without any catastrophic forgetting?

• 对于从业者而言,在多样化任务中增强思维链(CoT)推理更有效,还是在固定任务数量下增加更多实例更有效?在进行思维链微调时,大语言模型能否保持其在领域内任务上的性能而不出现灾难性遗忘?

5.1 Scaling the number of tasks & instances

5.1 扩展任务和实例数量

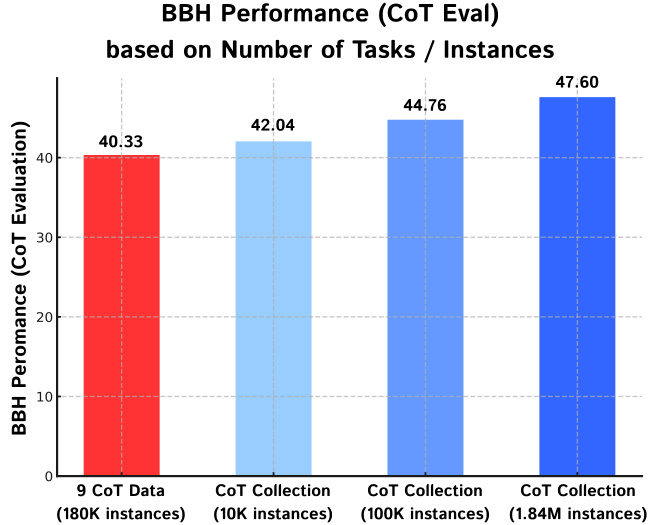

In our main experiments, we used a large number of instances (1.84M) across a large number of tasks (1,060) to apply CoT fine-tuning. A natural question arises: “Is it more effective to increase the number of tasks or the number of instances?” To address this question, we conduct an experiment of randomly sampling a small number of instances within the COT COLLECTION and comparing the BBH performance with (1) a baseline that is only CoT fine-tuned with the existing $9\mathrm{CoT}$ tasks and (2) COT-T5 that fully utilizes all the 1.84M instances. Specifically, we sample 10K, 100K instances within the COT COLLECTION and for the 9 CoT tasks, we fully use all the 180K instances. As COT-T5, we use Flan-T5 as our base model and use the same training configuration and evaluation setting (CoT Eval) during our experiments.

在我们的主要实验中,我们使用了大量任务(1,060个)中的大量实例(1.84M)来进行CoT微调。一个自然的问题是:"增加任务数量还是增加实例数量更有效?"为了回答这个问题,我们进行了一项实验:在COT COLLECTION中随机抽取少量实例,并将其BBH性能与(1)仅使用现有$9\mathrm{CoT}$任务进行CoT微调的基线、(2)充分利用所有1.84M实例的COT-T5进行对比。具体而言,我们在COT COLLECTION中抽取了10K和100K实例,而对于9个CoT任务,我们完全使用了所有180K实例。作为COT-T5,我们使用Flan-T5作为基础模型,并在实验过程中采用相同的训练配置和评估设置(CoT Eval)。

The results are shown in Figure 3, where surprisingly, only using 10K instances across 1,060 tasks obtains better performance compared to using 180K instances across 9 tasks. This shows that maintaining a wide range of tasks is more crucial compared to increasing the number of instances.

结果如图 3 所示,令人惊讶的是,仅在 1,060 个任务中使用 10K 样本的表现优于在 9 个任务中使用 180K 样本。这表明保持任务多样性比增加样本数量更为关键。

5.2 In-domain Task Accuracy of CoT-T5

5.2 CoT-T5的领域内任务准确率

It is well known that LMs that are fine-tuned on a wide range of tasks suffer from catastrophic forgetting (Chen et al., 2020; Jang et al., 2021, 2023), a phenomenon where an LM improves its performance on newly learned tasks while the performance on previously learned tasks diminishes.

众所周知,在广泛任务上进行微调的大语言模型会遭遇灾难性遗忘 (catastrophic forgetting) (Chen et al., 2020; Jang et al., 2021, 2023),即模型在新学习任务上性能提升的同时,先前已掌握任务的性能却出现下降。

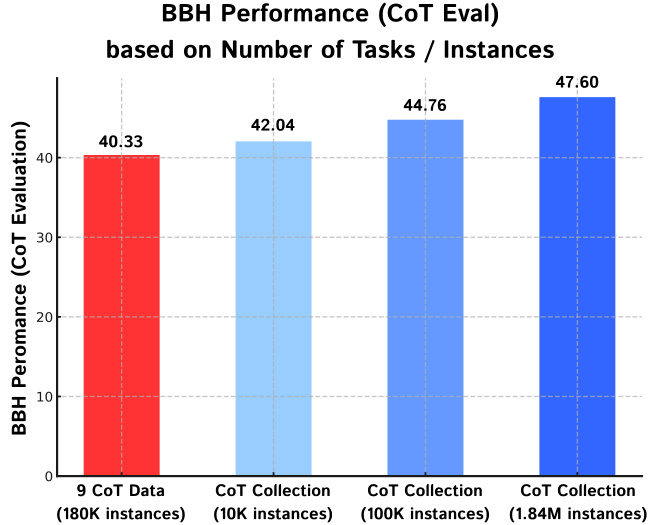

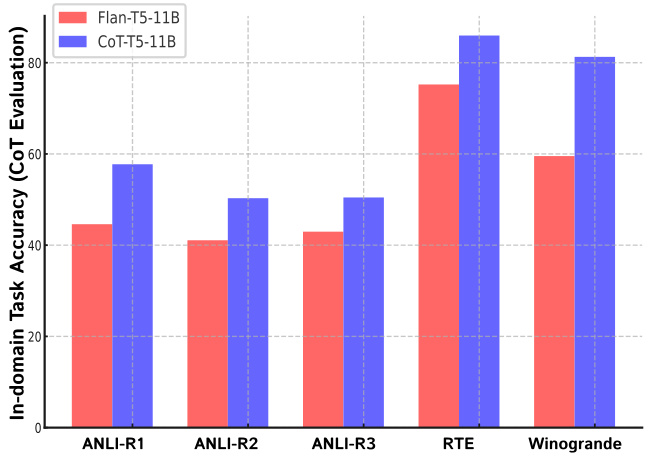

Figure 3: Scaling plot of increasing the number of instances within the COT COLLECTION compared to using the existing 9 CoT datasets. Even with less number of instances, maintaining a wider range of tasks is crucial to improve the CoT abilities of an underlying LLM. In-domain Task Accuracy (CoT Eval) Figure 4: In-domain task accuracy with CoT evaluation. CoT Fine-tuning with the COT COLLECTION also improves accuracy on in-domain tasks as well.

图 3: COT COLLECTION 增加实例数量与使用现有 9 个 CoT 数据集的扩展对比图。即使实例数量较少,保持更广泛的任务范围对于提升底层大语言模型的 CoT 能力至关重要。

图 4: 采用 CoT 评估的领域内任务准确率。使用 COT COLLECTION 进行 CoT 微调也能提高领域内任务的准确率。

While COT-T5 uses the same tasks as its base model (Flan-T5), we also check whether CoT finetuning on a wide range of tasks could possibly harm performance. For this purpose, we use the test set of 5 tasks within the COT COLLECTION, namely ANLI-R1, ANLI-R2, ANLI-R3, RTE, and Winogrande. Note that this differs with the Setup #2 in the main experiments in that we use different base models ( $\mathbf{\nabla}^{\prime}\mathbf{T}()\nu s$ Flan-T5), and the tasks are already used for CoT fine-tuning.

虽然COT-T5使用了与其基础模型(Flan-T5)相同的任务,但我们还检查了在广泛任务上进行思维链(CoT)微调是否会损害性能。为此,我们使用了COT COLLECTION中的5个任务的测试集,即ANLI-R1、ANLI-R2、ANLI-R3、RTE和Winogrande。请注意,这与主要实验中的设置#2不同,因为我们使用了不同的基础模型( $\mathbf{\nabla}^{\prime}\mathbf{T}()\nu s$ vs Flan-T5),且这些任务已用于CoT微调。

Results are shown in Figure 4, where COT-T5 consistently improves in-domain accuracy on the learned tasks as well. However, we conjecture that this is because we used the exact same task that Flan-T5 used to CoT fine-tuned COT-T5. Adding additional tasks that were not used to train Flan-T5 and COT-T5 could show different results, and we leave additional exploration of catastrophic forgetting during CoT fine-tuning to future work.

结果如图 4 所示,其中 COT-T5 在已学习任务上的领域内准确率也持续提升。然而,我们推测这是因为我们使用了与 Flan-T5 完全相同的任务对 COT-T5 进行思维链 (CoT) 微调。若加入未用于训练 Flan-T5 和 COT-T5 的其他任务,可能会呈现不同结果。关于思维链微调过程中的灾难性遗忘问题,我们留待未来工作中进一步探索。

6 Conclusion

6 结论

In this work, we show that augmenting rationales from an instruction tuning data using LLMs (Open AI Codex), and CoT fine-tuning could improve the reasoning capabilities of smaller LMs. Specifically, we construct COT COLLECTION, a largescale instruction-tuning dataset with 1.84M CoT rationales extracted across 1,060 NLP tasks. With our dataset, we CoT fine-tune Flan-T5 and obtain CoT-T5, which shows better zero-shot generalization performance and serves as a better base model when training with few number of instances. We hope COT COLLECTION could be beneficial in the development of future strategies for advancing the capabilities of LMs with CoT fine-tuning.

在本研究中,我们展示了利用大语言模型(OpenAI Codex)增强指令调优数据中的推理依据(rationales),并通过思维链(CoT)微调提升较小规模语言模型的推理能力。具体而言,我们构建了COT COLLECTION数据集——一个包含184万条跨1,060项NLP任务的思维链推理依据的大规模指令调优数据集。基于该数据集,我们对Flan-T5进行思维链微调得到CoT-T5模型,该模型展现出更优的零样本泛化性能,并在少样本训练时成为更优的基础模型。我们希望COT COLLECTION能助力未来通过思维链微调提升语言模型能力的策略开发。

Limitations

局限性

References

参考文献

Recently, there has been a lot of focus on distilling the ability to engage in dialogues with longform outputs in the context of instruction following (Taori et al., 2023; Chiang et al., 2023). Since our model COT-T5 is not trained to engage in dialogues with long-form responses from LLMs, it does not necessarily possess the ability to be applied in chat applications. In contrast, our work focuses on improving the zero-shot and few-shot capabilities by training on academic benchmarks (COT COLLECTION, Flan Collection), where LMs trained with chat data lack on. Utilizing both longform chat data from LLMs along with instruction data from academic tasks has been addressed in future work (Wang et al., 2023). Moreover, various applications have been introduced by using the FEEDBACK COLLECTION to train advanced chat models 7.

近期,指令跟随场景下长文本对话能力的提炼受到广泛关注 (Taori et al., 2023; Chiang et al., 2023)。由于我们的模型COT-T5未针对大语言模型的长文本对话进行训练,其未必具备应用于聊天场景的能力。相比之下,我们的工作聚焦于通过学术基准数据集 (COT COLLECTION, Flan Collection) 训练来提升零样本和少样本能力——这正是基于聊天数据训练的语言模型所欠缺的。如何结合大语言模型的长文本聊天数据与学术任务的指令数据,已有研究将其列为未来工作方向 (Wang et al., 2023)。此外,利用FEEDBACK COLLECTION训练高级聊天模型已催生多种应用7。

Also, since COT-T5 uses Flan-T5 as a base model, it doesn’t have the ability to perform stepby-step reasoning in diverse languages. Exploring how to efficiently and effectively train on CoT data from multiple languages is also a promising and important line of future work. While Shi et al. (2022) has shown that large LMs with more than 100B parameters have the ability to write CoT in different languages, our results show that smaller LMs show nearly zero accuracy when solving math problems in different languages. While CoT finetuning somehow shows slight improvement, a more comprehensive strategy of integrating the ability to write CoT in diverse language would hold crucial.

此外,由于COT-T5以Flan-T5为基础模型,它不具备用多种语言进行逐步推理的能力。探索如何高效地从多语言CoT数据中进行训练,也是一个有前景且重要的未来研究方向。虽然Shi等人 (2022) 表明参数量超过1000亿的大语言模型具备用不同语言编写CoT的能力,但我们的结果显示,较小规模语言模型在解决不同语言的数学问题时准确率几乎为零。尽管CoT微调显示出轻微改进,但整合多语言CoT编写能力的更全面策略将至关重要。

In terms of reproducibility, it is extremely concerning that proprietary LLMs shut down such as the example of the Codex, the LLM we used for rationale augmentation. We provide additional analysis on how different LLMs could be used for this process in Appendix A. Also, there is room of improvement regarding the quality of our dataset by using more powerful LLMs such as GPT-4 and better prompting techniques such as Tree of Thoughts (ToT) (Yao et al., 2023). This was examined by later work in Mukherjee et al. (2023) which used GPT-4 to augment 5 million rationales and Yue et al. (2023) which mixed Chain-of-Thoughts and Program of Thoughts (PoT) during fine-tuning. Using rationales extracted using Tree of Thoughts (Yao et al., 2023) could also be explored in future work.

在可复现性方面,像我们用于原理增强的Codex这样的专有大语言模型停止服务的情况令人极度担忧。我们在附录A中提供了关于如何用不同大语言模型完成这一过程的额外分析。此外,通过使用更强大的大语言模型(如GPT-4)和更好的提示技术(如思维树(ToT) (Yao et al., 2023),我们的数据集质量仍有提升空间。这一点在后续研究中得到了验证:Mukherjee et al. (2023) 使用GPT-4增强了500万条原理,Yue et al. (2023) 在微调阶段混合使用了思维链和程序思维(PoT)。未来工作中还可以探索使用思维树(Yao et al., 2023)提取的原理。

Shourya Aggarwal, Divyanshu Mandowara, Vishwajeet Agrawal, Dinesh Khandelwal, Parag Singla, and Dinesh Garg. 2021. Explanations for commonsense qa: New dataset and models. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 3050–3065.

Shourya Aggarwal、Divyanshu Mandowara、Vishwajeet Agrawal、Dinesh Khandelwal、Parag Singla和Dinesh Garg。2021。常识问答的解释:新数据集与模型。载于《第59届计算语言学协会年会暨第11届自然语言处理国际联合会议(第一卷:长论文)》,第3050–3065页。

Aida Amini, Saadia Gabriel, Shanchuan Lin, Rik Koncel-Kedziorski, Yejin Choi, and Hannaneh Ha- jishirzi. 2019. Mathqa: Towards interpret able math word problem solving with operation-based formalisms. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 2357–2367.

Aida Amini、Saadia Gabriel、Shanchuan Lin、Rik Koncel-Kedziorski、Yejin Choi和Hannaneh Hajishirzi。2019. MathQA: 基于运算形式化的可解释数学应用题求解方法。载于《2019年北美计算语言学协会人类语言技术会议论文集》第1卷(长文与短文),第2357–2367页。

Anthropic. 2023. Claude. https: //www.anthropic.com/index/ introducing-claude.

Anthropic. 2023. Claude. https: //www.anthropic.com/index/ introducing-claude.

Vamsi Aribandi, Yi Tay, Tal Schuster, Jinfeng Rao, Huaixiu Steven Zheng, Sanket Vaibhav Mehta, Honglei Zhuang, Vinh Q Tran, Dara Bahri, Jianmo Ni, et al. 2021. Ext5: Towards extreme multi- task scaling for transfer learning. arXiv preprint arXiv:2111.10952.

Vamsi Aribandi、Yi Tay、Tal Schuster、Jinfeng Rao、Huaixiu Steven Zheng、Sanket Vaibhav Mehta、Honglei Zhuang、Vinh Q Tran、Dara Bahri、Jianmo Ni等。2021。Ext5:面向迁移学习的极端多任务扩展。arXiv预印本arXiv:2111.10952。

Akari Asai, Mohammad reza Salehi, Matthew E Peters, and Hannaneh Hajishirzi. 2022. Attention al mixtures of soft prompt tuning for parameter-efficient multi-task knowledge sharing. arXiv preprint arXiv:2205.11961.

Akari Asai、Mohammad reza Salehi、Matthew E Peters和Hannaneh Hajishirzi。2022。基于注意力混合软提示调参的高效多任务知识共享方法。arXiv预印本arXiv:2205.11961。

Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neel a kant an, Pranav Shyam, Girish Sastry, Amanda Askell, et al. 2020. Language models are few-shot learners. Advances in neural information processing systems, 33:1877–1901.

Tom Brown、Benjamin Mann、Nick Ryder、Melanie Subbiah、Jared D Kaplan、Prafulla Dhariwal、Arvind Neelakantan、Pranav Shyam、Girish Sastry、Amanda Askell 等. 2020. 大语言模型是少样本学习者. 神经信息处理系统进展, 33:1877–1901.

Oana-Maria Camburu, Tim Rock t s chel, Thomas L ukasiewicz, and Phil Blunsom. 2018. e-snli: Natural language inference with natural language explanations. Advances in Neural Information Processing Systems, 31.

Oana-Maria Camburu、Tim Rocktäschel、Thomas Lukasiewicz 和 Phil Blunsom。2018. e-SNLI:带自然语言解释的自然语言推理。神经信息处理系统进展,31。

Sanyuan Chen, Yutai Hou, Yiming Cui, Wanxiang Che, Ting Liu, and Xiangzhan Yu. 2020. Recall and learn: Fine-tuning deep pretrained language models with less forgetting. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 7870–7881.

Sanyuan Chen、Yutai Hou、Yiming Cui、Wanxiang Che、Ting Liu和Xiangzhan Yu。2020。记忆与学习:减少遗忘的深度预训练语言模型微调。载于《2020年自然语言处理实证方法会议论文集》(EMNLP),第7870–7881页。

Wei-Lin Chiang, Zhuohan Li, Zi Lin, Ying Sheng, Zhanghao Wu, Hao Zhang, Lianmin Zheng, Siyuan Zhuang, Yonghao Zhuang, Joseph E. Gonzalez, Ion Stoica, and Eric P. Xing. 2023. Vicuna: An opensource chatbot impressing gpt-4 with $90%*$ chatgpt quality.

Wei-Lin Chiang、Zhuohan Li、Zi Lin、Ying Sheng、Zhanghao Wu、Hao Zhang、Lianmin Zheng、Siyuan Zhuang、Yonghao Zhuang、Joseph E. Gonzalez、Ion Stoica 和 Eric P. Xing。2023。Vicuna:一款以90% ChatGPT质量惊艳GPT-4的开源聊天机器人。

Hyung Won Chung, Le Hou, Shayne Longpre, Barret Zoph, Yi Tay, William Fedus, Eric Li, Xuezhi Wang, Mostafa Dehghani, Siddhartha Brahma, et al. 2022. Scaling instruction-finetuned language models. arXiv preprint arXiv:2210.11416.

Hyung Won Chung、Le Hou、Shayne Longpre、Barret Zoph、Yi Tay、William Fedus、Eric Li、Xuezhi Wang、Mostafa Dehghani、Siddhartha Brahma 等. 2022. 规模化指令微调语言模型. arXiv预印本 arXiv:2210.11416.

Karl Cobbe, Vineet Kosaraju, Mohammad Bavarian, Jacob Hilton, Reiichiro Nakano, Christopher Hesse, and John Schulman. 2021. Training verifiers to solve math word problems. arXiv preprint arXiv:2110.14168.

Karl Cobbe、Vineet Kosaraju、Mohammad Bavarian、Jacob Hilton、Reiichiro Nakano、Christopher Hesse 和 John Schulman。2021. 训练验证器解决数学应用题。arXiv预印本 arXiv:2110.14168。

Yao Fu, Hao Peng, Litu Ou, Ashish Sabharwal, and Tushar Khot. 2023. Specializing smaller language models towards multi-step reasoning. arXiv preprint arXiv:2301.12726.

Yao Fu、Hao Peng、Litu Ou、Ashish Sabharwal 和 Tushar Khot。2023。面向多步推理的小型语言模型专业化研究。arXiv预印本 arXiv:2301.12726。

Mor Geva, Daniel Khashabi, Elad Segal, Tushar Khot, Dan Roth, and Jonathan Berant. 2021. Did aristotle use a laptop? a question answering benchmark with implicit reasoning strategies. Transactions of the Association for Computational Linguistics, 9:346– 361.

Mor Geva、Daniel Khashabi、Elad Segal、Tushar Khot、Dan Roth 和 Jonathan Berant。2021。亚里士多德使用过笔记本电脑吗?一个蕴含隐式推理策略的问答基准。《计算语言学协会汇刊》9:346–361。

Olga Golovneva, Moya Chen, Spencer Poff, Martin Corredor, Luke Z ett le moyer, Maryam Fazel-Zarandi, and Asli Cel i kyi l maz. 2022. Roscoe: A suite of metrics for scoring step-by-step reasoning. arXiv preprint arXiv:2212.07919.

Olga Golovneva、Moya Chen、Spencer Poff、Martin Corredor、Luke Zettlemoyer、Maryam Fazel-Zarandi和Asli Celikyilmaz。2022。Roscoe: 一套用于评估逐步推理的指标集。arXiv预印本arXiv:2212.07919。

Google. 2023. Bard. blog.google/technology/ai/ bard-google-ai-search-updates/.

Google. 2023. Bard. https://blog.google/technology/ai/bard-google-ai-search-updates/.

Namgyu Ho, Laura Schmid, and Se-Young Yun. 2022. Large language models are reasoning teachers. arXiv preprint arXiv:2212.10071.

Namgyu Ho、Laura Schmid 和 Se-Young Yun。2022。大语言模型 (Large Language Model) 是推理导师。arXiv 预印本 arXiv:2212.10071。

Ari Holtzman, Jan Buys, Li Du, Maxwell Forbes, and Yejin Choi. 2019. The curious case of neural text degeneration. arXiv preprint arXiv:1904.09751.

Ari Holtzman、Jan Buys、Li Du、Maxwell Forbes 和 Yejin Choi。2019。神经文本退化的奇特案例。arXiv预印本 arXiv:1904.09751。

Or Honovich, Thomas Scialom, Omer Levy, and Timo Schick. 2022. Unnatural instructions: Tuning language models with (almost) no human labor. arXiv preprint arXiv:2212.09689.

Or Honovich、Thomas Scialom、Omer Levy 和 Timo Schick. 2022. 非自然指令: 以(几乎)无需人工的方式调优语言模型. arXiv预印本 arXiv:2212.09689.

Edward J Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. 2021. Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685.

Edward J Hu、Yelong Shen、Phillip Wallis、Zeyuan Allen-Zhu、Yuanzhi Li、Shean Wang、Lu Wang 和 Weizhu Chen。2021。LoRA: 大语言模型的低秩适配。arXiv预印本 arXiv:2106.09685。

Srinivasan Iyer, Xi Victoria Lin, Ramakanth Pasunuru, Todor Mihaylov, Dániel Simig, Ping Yu, Kurt Shuster, Tianlu Wang, Qing Liu, Punit Singh Koura, et al. 2022. Opt-iml: Scaling language model instruction meta learning through the lens of generalization. arXiv preprint arXiv:2212.12017.

Srinivasan Iyer、Xi Victoria Lin、Ramakanth Pasunuru、Todor Mihaylov、Dániel Simig、Ping Yu、Kurt Shuster、Tianlu Wang、Qing Liu、Punit Singh Koura等。2022。Opt-IML:通过泛化视角扩展语言模型指令元学习。arXiv预印本 arXiv:2212.12017。

Joel Jang, Seonghyeon Ye, Sohee Yang, Joongbo Shin, Janghoon Han, Gyeonghun Kim, Stanley Jungkyu Choi, and Minjoon Seo. 2021. Towards continual knowledge learning of language models. arXiv preprint arXiv:2110.03215.

Joel Jang、Seonghyeon Ye、Sohee Yang、Joongbo Shin、Janghoon Han、Gyeonghun Kim、Stanley Jungkyu Choi 和 Minjoon Seo。2021。语言模型的持续知识学习探索。arXiv预印本 arXiv:2110.03215。

Joel Jang, Seungone Kim, Seonghyeon Ye, Doyoung Kim, Lajanugen Logeswaran, Moontae Lee, Kyung- jae Lee, and Minjoon Seo. 2023. Exploring the benefits of training expert language models over instruction tuning. arXiv preprint arXiv:2302.03202.

Joel Jang、Seungone Kim、Seonghyeon Ye、Doyoung Kim、Lajanugen Logeswaran、Moontae Lee、Kyung-jae Lee 和 Minjoon Seo。2023。探索专家语言模型训练相较于指令调优的优势。arXiv预印本 arXiv:2302.03202。

Quentin Lhoest, Albert Villanova del Moral, Yacine Jernite, Abhishek Thakur, Patr