Enhancing Wrist Fracture Detection with YOLO: Analysis of State-of-the-art Single-stage Detection Models

Abstract

Diagnosing and treating abnormalities in the wrist, specifically distal radius and ulna fractures, is a crucial concern among children, adolescents, and young adults, with a higher incidence rate during puberty. However, the scarcity of radiologists and the lack of specialized training among medical professionals pose a significant risk to patient care. This problem is further exacerbated by the rising number of imaging studies and limited access to specialist reporting in certain regions, which highlights the need for innovative solutions to improve the diagnosis and treatment of wrist abnormalities. Automated wrist fracture detection using object detection has shown potential. However, current studies mainly use two-stage detection methods, and there is limited evidence for the effectiveness of single-stage methods. This study employs the state-of-the-art single-stage deep neural network-based detection models YOLOv5, YOLOv6, YOLOv7, and YOLOv8 to detect wrist abnormalities. Through extensive experimentation, we found that these YOLO models outperform the commonly used two-stage detection algorithm, Faster R-CNN, in fracture detection. Additionally, we compared the compound-scaled variants of each YOLO model. YOLOv8m demonstrated the highest fracture detection sensitivity of 0.92 and a mean average precision (mAP) of 0.95. YOLOv6m achieved the highest sensitivity across all classes at 0.83, while YOLOv8x recorded the highest mAP of 0.77 for all classes on the GRAZPEDWRI-DX pediatric wrist dataset. These results highlight the potential of single-stage models for enhancing pediatric wrist imaging.

1. Introduction

Wrist abnormalities are a common occurrence in children, adolescents, and young adults. Among them, wrist fractures such as distal radius and ulna fractures are the most common, with incidence peaks during puberty Hedstrom, Svensson, Bergstrom and Michno (2010); Randsborg et al. (2013); Landin (1997); Cheng and Shen (1993). Timely evaluation and treatment of these fractures are essential to prevent lifelong consequences. Digital radiography is a widely used imaging modality for obtaining wrist radiographs. X-ray is often the first and most common imaging modality used for wrist problems, although the choice of test depends on the suspected abnormality, the clinical presentation, and the available resources. If an X-ray does not provide a clear diagnosis, other imaging modalities such as MRI, CT, or ultrasound may be recommended. Surgeons or physicians in training then interpret the radiographs to diagnose wrist abnormalities. However, these medical professionals may lack the specialized training to assess such injuries accurately. They may also have to interpret radiographs without the support of an expert radiologist or qualified colleagues Hallas and Ellingsen (2006). Studies have shown that diagnostic errors in reading emergency X-rays can reach up to 26% Guly (2001); Mounts, Clingenpeel, McGuire, Byers and Kireeva (2011); Er, Kara, Oyar and Unluer (2013); Juhl, Moller-Madsen and Jensen (1990). Two further factors compound the problem and pose a high risk to patient care. The first is the shortage of radiologists, even in developed countries Burki (2018); Rimmer (2017); Makary and Takacs (2022). The second is limited access to specialist reporting in other parts of the world Rosman (2015).

The shortage is expected to escalate in the coming years because demand for imaging studies is growing faster than the supply of radiology residency positions. The number of imaging studies rises by an average of five percent annually, while the number of radiology residency positions grows by only two percent Smith-Bindman, Kwan, Marlow et al. (2019). Imaging modalities such as MRI, CT, and ultrasound can further assist in the diagnosis of wrist abnormalities, but some fractures may still be occult Fotiadou, Patel, Morgan and Karantanas (2011); Neubauer et al. (2016).

Recent advances in computer vision, specifically in object detection, have shown promising results in medical settings. Positive results in detecting pathologies in trauma X-rays have recently been published Adams, Henderson, Yi and Babyn (2020); Tanzi et al. (2020); Choi et al. (2020). In recent years, significant progress in the development of object detection algorithms has led to their widespread adoption in the medical community. An earlier object detection technique, the sliding window approach Lampert, Blaschko and Hofmann (2008), divides an image into a grid of overlapping regions and classifies each region as either containing the object of interest or not. Key implementations of this method include cascade classifiers that employ LBP (Local Binary Patterns) or Haar-like features. These classifiers are trained on positive examples of a specific object together with random negative images of the same size. Once optimized, the classifier can accurately identify the target object within a specific section of an image. To detect the object anywhere in the image, the classifier systematically examines each segment. The two feature types work differently. LBP characterizes the local texture of an image by comparing each pixel with its neighboring pixels. Haar-like features measure the difference between the summed pixel intensities of adjacent rectangular areas. The sliding window approach has several disadvantages, chief among them its computational cost, since a large number of regions must be classified. To address these issues, region-based methods were developed. These methods generate candidate object regions and classify only those regions as containing the object of interest or not.
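
The following Python sketch illustrates the difference between the two feature types and the cost of an exhaustive scan. It computes an 8-neighbour LBP code for one pixel and a two-rectangle Haar-like feature from an integral image. It also counts how many windows a single-scale sliding-window scan must classify. The neighbour ordering, the toy image, and the window size and stride are illustrative assumptions, not values taken from the cited work.

```python
def lbp_code(img, r, c):
    """8-neighbour Local Binary Pattern code of pixel (r, c).

    Each neighbour whose intensity is >= the centre sets one bit. Neighbours are
    visited clockwise from the top-left (an assumed, commonly used ordering).
    """
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    centre = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        if img[r + dr][c + dc] >= centre:
            code |= 1 << bit
    return code


def integral_image(img):
    """Summed-area table padded with a leading zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        for c in range(w):
            ii[r + 1][c + 1] = img[r][c] + ii[r][c + 1] + ii[r + 1][c] - ii[r][c]
    return ii


def rect_sum(ii, r, c, h, w):
    """Sum of the h x w rectangle whose top-left pixel is (r, c), in O(1)."""
    return ii[r + h][c + w] - ii[r][c + w] - ii[r + h][c] + ii[r][c]


def haar_two_rect(ii, r, c, h, w):
    """Two-rectangle Haar-like feature: left-half sum minus right-half sum."""
    half = w // 2
    return rect_sum(ii, r, c, h, half) - rect_sum(ii, r, c + half, h, half)


def count_windows(img_h, img_w, win, stride):
    """Number of windows a single-scale sliding-window scan has to classify."""
    return ((img_h - win) // stride + 1) * ((img_w - win) // stride + 1)


img = [[10, 10, 200, 200],
       [10, 50, 200, 200],
       [10, 10, 200, 200],
       [10, 10, 200, 200]]
print(lbp_code(img, 1, 1))                             # 28: three brighter right-hand neighbours
print(haar_two_rect(integral_image(img), 0, 0, 4, 4))  # -1480: dark left half, bright right half
print(count_windows(512, 512, 64, 8))                  # 3249 windows at a single scale
```

A real detector repeats the scan at several scales, which multiplies the window count. This is the computational cost that region-based and single-stage methods try to avoid.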

Single-stage detection is another improvement over the sliding window approach. It has gained popularity in recent years due to its efficiency and good performance. This approach uses a single forward pass through the network to predict bounding boxes and class probabilities. Because it does not need to generate candidate object regions, it is faster than region-based approaches. Two-stage detection generates candidate regions in the first stage and refines them in the second stage, at the cost of speed and computational efficiency. Single-stage detection instead balances speed and accuracy by predicting the final results in a single pass through the network.

作为对滑动窗口方法的改进,另一种近年因高效和良好性能而流行的技术是单阶段检测法。该方法通过单次网络前向传播同时预测边界框和类别概率,无需生成候选目标区域,因而比基于区域的方法更快速。双阶段检测首先生成候选区域,第二阶段进行细化,但牺牲了速度和计算效率;相比之下,单阶段检测通过单次网络推理直接输出最终结果,在速度与精度间实现了平衡。

Two-stage detection has been the most widely used approach for detecting wrist abnormalities in recent years. However, there has been limited research on how effectively single-stage detectors detect various wrist abnormalities, including fractures. In this study, we focus on the effectiveness of state-of-the-art (SOTA) single-stage detectors in detecting wrist abnormalities. Additionally, this study is unique in its use of GRAZPEDWRI-DX, a large, comprehensively annotated dataset presented in a recent publication Nagy, Janisch, Hržić, Sorantin and Tschauner (2022). The characteristics and complexity of the dataset are discussed in Section 4.

Wrist fractures represent just one of several typical wrist abnormalities. Other prevalent conditions include carpal tunnel syndrome (CTS), ganglion cysts, osteoarthritis, tendinitis, and sprains and strains. In the dataset we use, the annotated objects are categorized as fracture, periosteal reaction, metal, pronator sign, soft tissue, bone anomaly, bone lesion, and foreign body. Our primary goal is to detect these specific objects rather than to diagnose the overarching abnormalities. In our context, the presence of any of these objects (including fractures) in the wrist can be considered 'abnormal'. Moreover, objects other than fractures may suggest another associated wrist abnormality. For instance, a soft tissue finding might indicate CTS or a ganglion cyst. In CTS, swelling of the synovial tissue that lines the tendons in the carpal tunnel may be observable, whereas a ganglion cyst manifests as a soft tissue structure. The term 'bone lesion' denotes an anomalous area within the bone. Severe sprains can involve avulsion fractures, in which a ligament pulls a fragment of bone away.
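
One convention above is that any annotated object makes an image 'abnormal', and the fracture class alone gives a binary fracture/non-fracture target. The sketch below derives both image-level labels from object annotations. It assumes the common YOLO text label format: one `class_id x_center y_center width height` line per object, with coordinates normalized to [0, 1]. The `CLASS_NAMES` ordering and the helper names are hypothetical and are used only for illustration.

```python
# Hypothetical class list: the order (and hence the integer ids) is an
# illustrative assumption; take the real mapping from the dataset's metadata.
CLASS_NAMES = ["boneanomaly", "bonelesion", "foreignbody", "fracture",
               "metal", "periostealreaction", "pronatorsign", "softtissue"]


def parse_yolo_labels(text):
    """Parse YOLO-format lines 'class_id x_center y_center width height'."""
    objects = []
    for line in text.strip().splitlines():
        parts = line.split()
        if len(parts) != 5:
            continue  # skip blank or malformed lines
        xc, yc, w, h = map(float, parts[1:])
        objects.append((CLASS_NAMES[int(parts[0])], xc, yc, w, h))
    return objects


def image_labels(text):
    """Image-level flags derived from one image's object annotations."""
    names = {obj[0] for obj in parse_yolo_labels(text)}
    return {
        "abnormal": bool(names),          # any annotated object counts as abnormal
        "fracture": "fracture" in names,  # binary fracture / non-fracture target
    }


print(image_labels("3 0.41 0.55 0.10 0.08\n7 0.40 0.52 0.20 0.15"))  # fracture + soft tissue
print(image_labels("6 0.50 0.50 0.10 0.10"))                         # pronator sign only
print(image_labels(""))                                              # no annotated objects
```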

1.1. Study Objective & Research Questions

The primary objective of this study is to test the effectiveness of the state-of-the-art YOLO detection models YOLOv5, YOLOv6, YOLOv7, and YOLOv8 on "GRAZPEDWRI-DX", a comprehensively annotated dataset recently released to the public. We compare the performance of all variants within each YOLO model to determine whether a compound-scaled version of the same architecture improves performance. This study also investigates how effectively these single-stage detection methods detect fractures compared with a two-stage detection method widely used in the past. In addition to multi-class object detection, we evaluate the performance of a conventional CNN in binary classification, specifically in distinguishing between fractures and non-fractures. We hypothesize that fractures in the near vicinity of the wrist in pediatric X-ray images can be detected efficiently using the YOLO models proposed by Ultralytics (2022), Li, Li, Jiang, Weng, Geng, Li, Ke, Li, Cheng, Nie, Li, Zhang, Liang, Zhou, Xu, Chu and Wei (2022), Wang, Bochkovskiy and Liao (2022), and Ultralytics (2023), respectively.

We analyze the potential of utilizing object detection techniques in answering the following research questions (RQ):

1.2. Contribution

The major contributions of this article are as follows:

• A thorough performance assessment of SOTA YOLO detection models on the newly released GRAZPEDWRI-DX dataset, a large and diverse set of pediatric X-ray images. To the best of our knowledge, this is the first study of its kind.
• An in-depth comparison of the performance of the variants within each YOLO model utilized.
• A state-of-the-art mean average precision (mAP) score on the GRAZPEDWRI-DX dataset.
• A detailed performance analysis of single-stage detection models in comparison to the widely used two-stage detection model, Faster R-CNN.

2. Related Work

Fracture detection is a crucial aspect in the field of wrist trauma, and computer vision techniques have played a significant role in advancing the research in this area. This section provides a comprehensive overview of the existing studies on fracture detection and highlights the key findings. The studies are divided into two subheadings: "Two-stage detection" and "One-stage detection". The first subheading covers studies that have used two-stage detection techniques, while the second subheading focuses on studies that have only employed single-stage detection algorithms.

2.1. Two-stage detection

The detection of bone abnormalities, including fractures, has been widely studied in the literature, mainly using two-stage detection algorithms. For instance, Yahalomi, Chernofsky and Werman (2018) applied a Faster R-CNN model with a Visual Geometry Group (VGG16) backbone to identify distal radius fractures in anteroposterior wrist X-ray images. The model achieved a mAP of 0.87 when tested on a set of 1,312 images. However, the initial dataset consisted of only 95 anteroposterior images, with and without fractures, which were then augmented for both training and testing.

Thian, Li, Jagmohan, Sia, Chan and Tan (2019) developed two separate Faster R-CNN models with InceptionResNet for frontal and lateral projections of wrist images. The models were trained on 6,515 frontal and 6,537 lateral images, respectively. The frontal model detected 91% of fractures, with a specificity of 0.83 and a sensitivity of 0.96. The lateral model detected 96% of fractures, with a specificity of 0.86 and a sensitivity of 0.97. Both models achieved high area under the receiver operating characteristic curve (AUC-ROC) values: 0.92 for the frontal model and 0.93 for the lateral model. The overall per-study specificity was 0.73, the sensitivity was 0.98, and the AUC was 0.89.
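
Sensitivity and specificity are computed from confusion-matrix counts, as sketched below. The counts in the example are hypothetical and were chosen only to reproduce the magnitudes quoted for the frontal model.

```python
def sensitivity(tp, fn):
    """True-positive rate: share of fracture cases the model flags."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True-negative rate: share of fracture-free cases the model leaves unflagged."""
    return tn / (tn + fp)


# Hypothetical counts for 100 fracture and 100 fracture-free studies.
tp, fn, tn, fp = 96, 4, 83, 17
print(sensitivity(tp, fn), specificity(tn, fp))  # 0.96 0.83
```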

Guan, Zhang, Yao, Wang and Wang (2020) used a two-stage R-CNN method to achieve an average precision (AP) of 0.62 on approximately 4,000 X-ray images of arm fractures from the musculoskeletal radiographs (MURA) dataset. Wang, Yao, Zhang, Guan, Wang and Zhang (2021) developed a two-stage R-CNN network called ParallelNet, with a TripleNet backbone, for fracture detection in a dataset of 3,842 thigh fracture X-ray images. It achieved an AP of 0.88 at an Intersection over Union (IoU) threshold of 0.5.
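
The IoU threshold decides whether a predicted box counts as a correct detection. A minimal sketch for axis-aligned boxes in `(x1, y1, x2, y2)` pixel coordinates (an assumed box convention) is:

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


ground_truth = (0, 0, 10, 10)
print(iou(ground_truth, (2, 0, 12, 10)) >= 0.5)  # True: IoU = 80 / 120
print(iou(ground_truth, (5, 0, 15, 10)) >= 0.5)  # False: IoU = 50 / 150
```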

Qi, Zhao, Shi, Zuo, Zhang, Long, Wang and Wang (2020) used a Faster R-CNN model with an anchor-based approach, combined with a multi-resolution Feature Pyramid Network (FPN) and a ResNet50 backbone network. They tested the model on 2,333 X-ray images of different types of femoral fractures and obtained a mAP score of 0.69.

Raisuddin, Vaattovaara, Nevalainen et al. (2021) developed a deep learning-based pipeline called DeepWrist for detecting distal radius fractures. The model was trained on a dataset of 1,946 wrist studies and evaluated on two test sets. The first test set, comprising 207 cases, yielded an AP of 0.99. The second test set, comprising 105 challenging cases, yielded an AP of 0.64. The model generated heatmaps indicating the probability of a fracture in the vicinity of the wrist, but it did not provide a bounding box or polygon to clearly localize the fracture. The study was limited by its small dataset, which contained a disproportionate number of challenging cases.

Ma and Luo (2021) first classified the images in the Radiopaedia dataset into fracture and non-fracture categories using CrackNet. They then used Faster R-CNN for fracture detection on the 1,052 bone images in the dataset. With an accuracy of 0.88, a recall of 0.88, and a precision of 0.89, they demonstrated the usefulness of the proposed approach. Wu, Yan, Liu, Yu, Geng, Wu, Han, Guo and Gao (2021) applied a Feature Ambiguity Mitigate Operator model along with ResNeXt101 and an FPN to identify fractures in a collection of 9,040 radiographs of various body parts, including the hand, wrist, pelvis, knee, ankle, foot, and shoulder. They achieved an AP of 0.77.

Xue, Yan, Luo, Zhang, Chaikovska, Liu, Gao and Yang (2021) proposed a guided anchoring (GA) method for fracture detection in hand X-ray images using the Faster R-CNN model. The model predicts fracture positions from proposal regions that are refined by the GA module's learnable, flexible anchors. They evaluated the method on 3,067 images and achieved an AP score of 0.71.

Hardalaç, Uysal, Peker, Çiçeklidağ, Tolunay, Tokgöz, Kutbay, Demirciler and Mert (2022) conducted 20 fracture detection experiments using a dataset of wrist X-ray images from Gazi University Hospital. To improve the results, they developed an ensemble model, named WFD-C, by combining five different models. Of the 26 models evaluated for fracture detection, WFD-C achieved the highest average precision of 0.86. The study used both two-stage and single-stage detection methods. The two-stage models were Dynamic R-CNN, Faster R-CNN, and SABL and DCN models based on Faster R-CNN. The single-stage models were PAA, FSAF, RetinaNet and RegNet, SABL, and Libra.

Joshi, Singh and Joshi (2022) employed transfer learning with a modified Mask R-CNN to detect and segment fractures using two datasets: a surface crack image dataset of 3,000 images and a wrist fracture dataset of 315 images. They first trained the model on the surface crack dataset and then fine-tuned it on the wrist fracture dataset. At an IoU threshold of 0.5, they achieved an average precision of 0.923 for detection and 0.78 for segmentation. At the stricter threshold of 0.75, the values were 0.79 for detection and 0.52 for segmentation.

2.2. One-stage detection

Very few studies have demonstrated the performance of one-stage detectors in wrist trauma and fracture detection. Sha, Wu and Yu (2020a) used a YOLOv2 model to detect fractures in a dataset of 5,134 spinal CT images, resulting in a mAP of 0.75. In another study, the same authors (Sha, Yu and Wu (2020b)) applied a Faster R-CNN model to the same dataset, yielding a mAP of 0.73.

A recent study by Hržić et al. (2022) compared the performance of the YOLOv4 object detection model Bochkovskiy, Wang and Liao (2020) with that of the U-Net segmentation model proposed by Lindsey, Daluiski, Chopra, Lachapelle, Mozer, Sicular, Hanel, Gardner, Gupta, Hotchkiss et al. (2018) and with a group of radiologists on the "GRAZPEDWRI-DX" dataset. The authors trained two YOLOv4 models for this study. One identified the most probable fractured object in an image, and the other counted the number of fractures present in an image. The first YOLOv4 model achieved an AUC-ROC of 0.90 and an F1-score of 0.90, while the second achieved an AUC-ROC of 0.90 and an F1-score of 0.96. These results demonstrate the superior performance of YOLOv4 compared with traditional methods for fracture detection.
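
The two metrics reported above can be sketched as follows. F1 is the harmonic mean of precision and recall. AUC-ROC is computed here through its rank interpretation: the probability that a randomly chosen positive receives a higher score than a randomly chosen negative. The scores and counts are made up for illustration, and the quadratic pairwise loop suits only small examples.

```python
def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def auc_roc(pos_scores, neg_scores):
    """AUC-ROC as P(random positive outscores random negative); ties count 1/2."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos_scores) * len(neg_scores))


print(f1_score(tp=9, fp=1, fn=1))            # precision = recall = 0.9
print(auc_roc([0.9, 0.8, 0.4], [0.7, 0.3]))  # 5 of 6 pairs ranked correctly
```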

The "GRAZPEDWRI-DX" dataset used in this study was recently published by Nagy et al. (2022). The authors presented baseline results for the dataset using the COCO-pretrained YOLOv5m variant of YOLOv5. The model was trained on 15,327 (of 20,327) images and tested on 1,000 images. At an IoU threshold of 0.5, they achieved a mAP of 0.93 for fracture detection and an overall mAP of 0.62.
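
Detection mAP is the mean, over classes, of each class's average precision (AP) at a given IoU threshold. The sketch below computes single-class AP with greedy score-ordered matching and all-point interpolation of the precision-recall curve (VOC-style). Evaluation toolkits, including the one bundled with the YOLO repositories, may differ in interpolation details, so treat this as an illustration rather than the exact evaluator used here.

```python
def iou(a, b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thr=0.5):
    """All-point interpolated AP for one class.

    preds: list of (image_id, score, box); gts: dict image_id -> list of boxes.
    """
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in gts.items()}
    hits = []
    for img, _, box in sorted(preds, key=lambda p: p[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        # True positive only if it hits a ground-truth box not matched before.
        if best_j >= 0 and best_iou >= iou_thr and not matched[img][best_j]:
            matched[img][best_j] = True
            hits.append(1)
        else:
            hits.append(0)
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / n_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


gts = {"a": [(0, 0, 10, 10)], "b": [(0, 0, 10, 10)]}
preds = [("a", 0.9, (0, 0, 10, 10)),    # true positive
         ("b", 0.8, (20, 20, 30, 30)),  # false positive (no overlap)
         ("b", 0.7, (1, 0, 11, 10))]    # true positive (IoU = 90 / 110)
print(average_precision(preds, gts))    # 0.5 * 1 + 0.5 * (2 / 3), about 0.833
```

Averaging this quantity over all classes gives mAP at IoU 0.5. Raising `iou_thr` makes the same detections count as false positives more often, which is why reported scores drop at stricter thresholds.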

In conclusion, the literature review shows that most studies on fracture detection have used the two-stage detection approach. Additionally, the datasets used in these studies tend to be small compared with the dataset used in our study. This study builds upon the work of Hržić et al. (2022) and Nagy et al. (2022) through a comprehensive comparison of the state-of-the-art single-stage detection algorithms (YOLOv5, YOLOv6, YOLOv7, and YOLOv8) with the widely used two-stage model Faster R-CNN. The results provide valuable insights into the performance of these algorithms and contribute to ongoing research on wrist trauma and fracture detection.

3. Material & Methods

3.1. Research Design

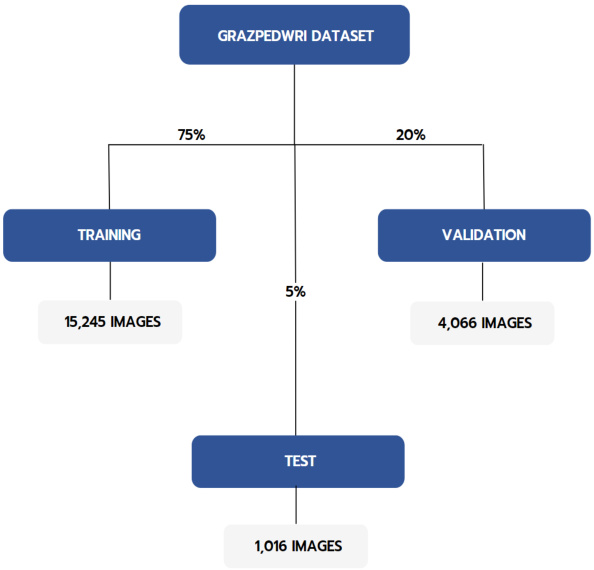

A quantitative (experimental) study is conducted using 20,327 images from 10,643 wrist radiography studies of 6,091 unique patients. The data were collected by the Division of Paediatric Radiology, Department of Radiology, Medical University of Graz, Austria. As shown in Fig. 1, the dataset was randomly partitioned into a training set of 15,245 images, a validation set of 4,066 images, and a test set of 1,016 images. In the following subsection, we describe the measurements used to assess the performance of the models.

Figure 1: Dataset split into training, validation, and test sets.
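
A random partition with the split sizes given above can be sketched with the standard library. The file names and the seed are hypothetical, and the text does not state how the split was seeded. This sketch splits at the image level. Keeping all images of one patient in the same subset would additionally require patient identifiers and is not shown.

```python
import random


def split_dataset(items, n_train, n_val, seed=0):
    """Shuffle items reproducibly and cut them into train / val / test lists.

    Whatever remains after n_train + n_val items becomes the test set.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


# Hypothetical file names standing in for the 20,327 images.
image_ids = [f"img_{i:05d}.png" for i in range(20327)]
train, val, test = split_dataset(image_ids, n_train=15245, n_val=4066, seed=42)
print(len(train), len(val), len(test))  # 15245 4066 1016
```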

3.2. Tools & Instruments

Python scripts were used to partition the dataset into training, validation, and test sets. The deep learning framework PyTorch was used to train the object detection models. To visualize, track, and compare model training, we employed the Weights and Biases (WANDB) platform. To take advantage of our system's graphics processing units (GPUs), we used CUDA and cuDNN. All training was performed on a Windows PC with the following hardware:
• an NVIDIA GeForce RTX 2080 SUPER with 8,192 MB of video memory;
• an Intel(R) Xeon(R) W-2223 CPU @ 3.60GHz;
• 64 GB of RAM.
The Python version used was 3.9.13.

3.3. Deep Learning Models For Object Detection

In this study, we employed four single-stage detection models, namely YOLOv5, YOLOv6, YOLOv7, and YOLOv8, as well as the two-stage detection model Faster R-CNN. To further optimize the performance of the single-stage models, we experimented with multiple variants of each YOLO model, ranging from 5 to 7 variants per model. This resulted in a total of 23 wrist abnormality detection procedures: 5 YOLOv5, 5 YOLOv6, 7 YOLOv7, and 5 YOLOv8 variants, plus Faster R-CNN.

The YOLO (You Only Look Once) algorithm, introduced by Redmon, Divvala, Girshick and Farhadi (2015), is a single-stage object detection approach. It uses a single pass of a convolutional neural network to predict the locations of objects in an image, which made it faster than other approaches of its time. When it was released in 2020, YOLOv4 achieved state-of-the-art mean average precision on the MS COCO dataset at real-time speed Bochkovskiy et al. (2020). Since its initial release, the algorithm has gone through several versions, from v1 to v8, and successive versions have generally offered smaller models, higher speed, and higher precision. Fig. 2 illustrates the general structure of YOLO and the backbones used in this study, such as CSP, VGG, and E-ELAN.

Figure 2: YOLO Architecture depicting the input, backbone, neck, head, and the output.
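
The single-pass idea is easiest to see in the original YOLO formulation of Redmon et al. The image is divided into an S x S grid, and each cell predicts B boxes (x, y, w, h, confidence) plus C class probabilities, so one forward pass yields an S x S x (B*5 + C) tensor. The sketch below decodes one cell. It assumes w and h are already fractions of the image size, with any square-root parameterization used during training already undone. The later versions studied here (v5 to v8) predict at several scales with anchor-based or anchor-free heads, so the sketch only illustrates the grid idea.

```python
def yolo_v1_output_size(S, B, C):
    """Values predicted by YOLOv1 for an S x S grid: S * S * (B * 5 + C)."""
    return S * S * (B * 5 + C)


def decode_cell(row, col, cell_pred, S, B, C, img_w, img_h):
    """Decode one grid cell of a YOLOv1-style prediction into boxes.

    cell_pred = B blocks of (x, y, w, h, confidence) followed by C class
    probabilities. (x, y) is the box centre relative to the cell; (w, h) are
    fractions of the image size. Returns (x1, y1, x2, y2, class_id, score).
    """
    class_probs = cell_pred[B * 5:B * 5 + C]
    cls = max(range(C), key=lambda c: class_probs[c])
    boxes = []
    for b in range(B):
        x, y, w, h, conf = cell_pred[b * 5:(b + 1) * 5]
        cx, cy = (col + x) / S * img_w, (row + y) / S * img_h
        bw, bh = w * img_w, h * img_h
        score = conf * class_probs[cls]  # class-specific confidence
        boxes.append((cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2, cls, score))
    return boxes


print(yolo_v1_output_size(S=7, B=2, C=20))  # 1470, i.e. a 7 x 7 x 30 tensor
probs = [0.0] * 20
probs[2] = 0.8
cell = [0.5, 0.5, 0.25, 0.25, 0.9] + [0.5, 0.5, 0.5, 0.5, 0.1] + probs
for box in decode_cell(3, 3, cell, S=7, B=2, C=20, img_w=448, img_h=448):
    print(box)
```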

3.3.1. The YOLOv5 Model

The YOLO framework comprises three components: the backbone, the neck, and the head. The backbone extracts image features using the CSPDarknet architecture, known for its superior performance Wang, Liao, Wu, Chen, Hsieh and Yeh (2020). We adopted the same architecture in our research. CSPDarknet involves convolution, pooling, and residual connections, represented as:

$$
F_{i}=f(F_{i-1},W_{i})+F_{i-1}
$$

(where $F_{i}$ and $F_{i-1}$ are the feature maps at the $i$-th and $(i-1)$-th layers, respectively, $W_{i}$ represents the weights and biases, and $f(\cdot)$ applies convolution and pooling operations). The spatial pyramid pooling (SPP) structure is then used to extract multi-scale features from CSPDarknet's output:

$$
F_{SPP}=g(F_{i})
$$

(where $F_{SPP}$ denotes the multi-scale feature maps and $g(\cdot)$ performs the SPP operation on $F_{i}$). The neck adopts the Path Aggregation Network (PANet) to aggregate backbone features, generating higher-level features for the output layers. The head constructs output vectors containing class probabilities, objectness scores, and bounding box coordinates. YOLOv5 encompasses five model variants ("n", "s", "m", "l", and "x"), which are compound-scaled versions of the same architecture. The variants trade detection accuracy against speed by scaling the network depth (the number of repeated layers) and width (the number of channels).
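
Three ingredients described in this subsection can be sketched in NumPy: the residual update $F_{i}=f(F_{i-1},W_{i})+F_{i-1}$, the SPP operation $g$, and compound scaling of the variants. This is a simplified sketch under stated assumptions, not the Ultralytics implementation:
• $f$ is reduced to a 1x1 convolution plus SiLU, so $F_{i}$ and $F_{i-1}$ have the same shape;
• the SPP pooling kernels (5, 9, 13) are assumed;
• the 1x1 convolutions that surround SPP in practice are omitted;
• the depth and width multipliers are the values we believe the YOLOv5 configuration files use.
All function names are our own.

```python
import math

import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def silu(x):
    """SiLU activation, x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def residual_block(feat, weight, bias):
    """F_i = f(F_{i-1}, W_i) + F_{i-1} on a (C, H, W) feature map.

    f is simplified to a 1x1 convolution (per-pixel channel mixing) plus SiLU,
    so the output keeps the input shape and the addition is well defined.
    """
    mixed = np.einsum("oi,ihw->ohw", weight, feat) + bias[:, None, None]
    return silu(mixed) + feat


def max_pool_same(feat, k):
    """Stride-1, k x k max pooling with 'same' padding (k odd)."""
    p = k // 2
    padded = np.pad(feat, ((0, 0), (p, p), (p, p)), constant_values=-np.inf)
    windows = sliding_window_view(padded, (k, k), axis=(1, 2))
    return windows.max(axis=(-2, -1))


def spp(feat, kernels=(5, 9, 13)):
    """F_SPP = g(F_i): concatenate F_i with max-pooled copies along channels."""
    return np.concatenate([feat] + [max_pool_same(feat, k) for k in kernels], axis=0)


# (depth_multiple, width_multiple) per YOLOv5 variant, as we understand the
# Ultralytics model YAML files; verify against the repository before relying on them.
YOLOV5_SCALES = {"n": (0.33, 0.25), "s": (0.33, 0.50), "m": (0.67, 0.75),
                 "l": (1.00, 1.00), "x": (1.33, 1.25)}


def scale_layer(repeats, channels, variant, divisor=8):
    """Compound-scale one layer: repeats by depth, channels by width."""
    gd, gw = YOLOV5_SCALES[variant]
    n = max(round(repeats * gd), 1) if repeats > 1 else repeats
    c = math.ceil(channels * gw / divisor) * divisor  # keep channels a multiple of 8
    return n, c


rng = np.random.default_rng(0)
f_prev = rng.standard_normal((4, 16, 16))
f_i = residual_block(f_prev, rng.standard_normal((4, 4)), np.zeros(4))
print(f_i.shape, spp(f_i).shape)  # (4, 16, 16) (16, 16, 16)
for v in YOLOV5_SCALES:
    print(v, scale_layer(9, 1024, v))  # e.g. a 9-repeat, 1024-channel stage
```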

3.3.2. The YOLOv6 Model

YOLOv6 features an anchor-free design and a reparameterized backbone, referred to as EfficientRep. The "n" and "s" variants use a VGG-style backbone, while the "m", "l", and "l6" variants use a CSP-style backbone. The neck, named Rep-PAN, is similar to that of YOLOv5. The head, however, is an efficient decoupled head, which improves accuracy and reduces computation, and its classification and detection branches do not share parameters. YOLOv6 includes five model variants ("n", "s", "m", "l", and "l6").
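
RepVGG-style reparameterized blocks, as we understand the idea behind EfficientRep, are trained with parallel branches and collapsed into a single convolution for inference. The NumPy sketch below shows this collapse for a 3x3 branch, a 1x1 branch, and an identity branch, and checks that both forms give the same output. Batch normalization, which in practice is folded into each branch first, is omitted, and all function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv3x3(x, k):
    """3x3 convolution (cross-correlation), stride 1, zero padding 1.

    x: (C_in, H, W); k: (C_out, C_in, 3, 3). Returns (C_out, H, W).
    """
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    win = sliding_window_view(xp, (3, 3), axis=(1, 2))  # (C_in, H, W, 3, 3)
    return np.einsum("oikl,ihwkl->ohw", k, win)


def conv1x1(x, k):
    """1x1 convolution; k: (C_out, C_in)."""
    return np.einsum("oi,ihw->ohw", k, x)


def multi_branch(x, k3, k1):
    """Training-time block: 3x3 branch + 1x1 branch + identity branch."""
    return conv3x3(x, k3) + conv1x1(x, k1) + x


def reparameterize(k3, k1):
    """Fold the 1x1 and identity branches into one 3x3 kernel for inference."""
    merged = k3.copy()
    merged[:, :, 1, 1] += k1                   # a 1x1 kernel is the centre tap of a 3x3 one
    merged[:, :, 1, 1] += np.eye(k3.shape[0])  # identity: centre tap 1 from channel c to c
    return merged


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))
k3, k1 = rng.standard_normal((3, 3, 3, 3)), rng.standard_normal((3, 3))
single = conv3x3(x, reparameterize(k3, k1))
print(np.allclose(multi_branch(x, k3, k1), single))  # True
```

The merge works because convolution is linear. Padding the 1x1 kernel and the identity mapping to 3x3 kernels with a single centre tap lets the three branches be summed into one kernel, so inference runs a single convolution.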

3.3.3. The YOLOv7 Model

YOLOv7 introduces several changes, including E-ELAN (Extended Efficient Layer Aggregation Network). E-ELAN uses expand, shuffle, and merge cardinality operations to improve the network's learning ability without disrupting the gradient path. Other changes include model scaling techniques, planned re-parameterization, and a coarse-to-fine auxiliary head. Model scaling adjusts the width, depth, and resolution of a model to meet specific application requirements. YOLOv7 uses compound scaling to scale network depth and width simultaneously in its concatenation-based architecture, maintaining an optimal structure while scaling.

Planned re-parameterization uses gradient flow propagation paths to identify the modules whose weights should be re-parameterized (averaged) for robustness. An auxiliary head in the middle of the network improves training, but it requires a coarse-to-fine approach for efficient supervision. The YOLOv7 family consists of seven variants: the "P5" models (v7, v7x, and v7-tiny) and the "P6" models (d6, e6, w6, and e6e).

3.3.4. The YOLOv8 Model

YOLOv8 is reported to provide significant advances in object detection over previous YOLO models, particularly in its compact versions, which target less powerful hardware. At the time of writing, the architecture of YOLOv8 has not been fully disclosed, and some of its features are still under development. So far, it has been confirmed that YOLOv8 has a new backbone, an anchor-free design, a revamped detection head, and a new loss function. We include the performance of this model on the GRAZPEDWRI-DX dataset as a benchmark for future studies, since further improvements to YOLOv8 may surpass the results obtained in this study. At release (January 10, 2023), YOLOv8 came in five versions: "n", "s", "m", "l", and "x".

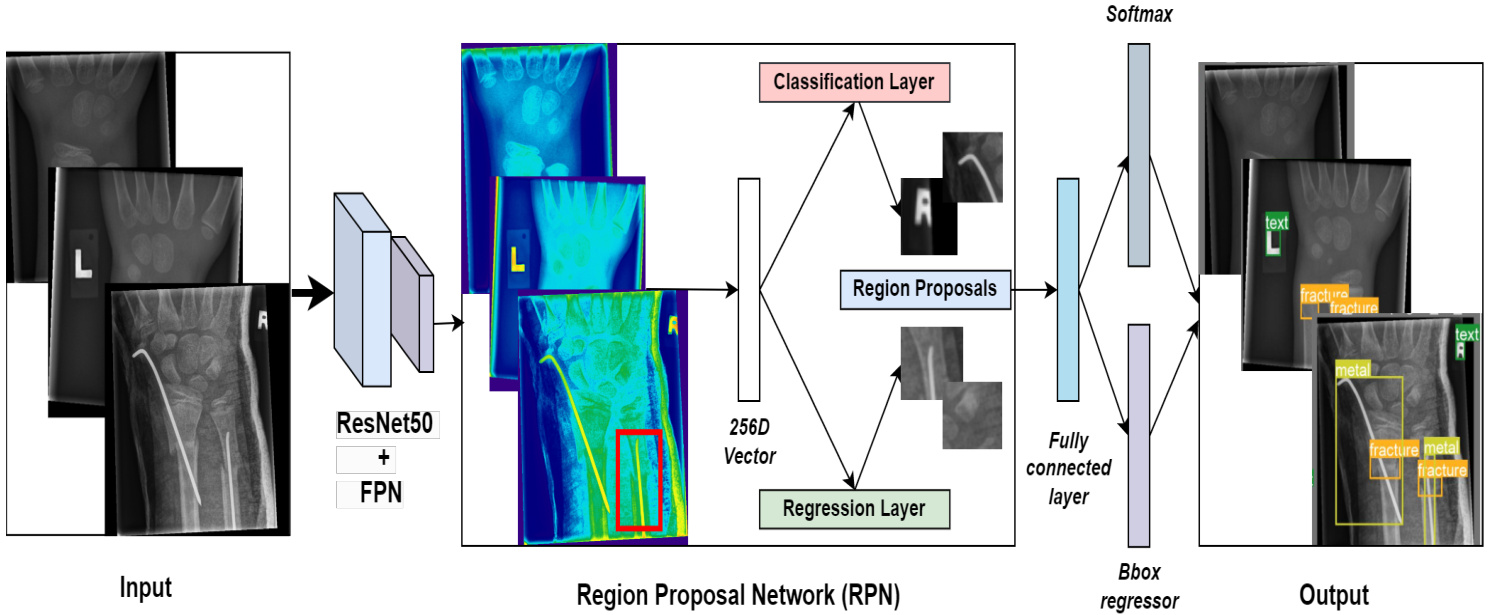

Figure 3: Faster R-CNN Pipeline.

3.3.5. Faster R-CNN

The Faster R-CNN model consists of a backbone, a region proposal network (RPN), and a detection network. ResNet50 with a Feature Pyramid Network (FPN) is used as the backbone for feature extraction. Anchors of various sizes and aspect ratios are generated at each feature-map location. The RPN selects suitable anchor boxes with a classifier that predicts whether an anchor box contains an object, using an IoU threshold of 0.5 against the ground truth. A regressor then predicts offsets for the anchor boxes that contain objects so that they fit the ground-truth boxes tightly. Finally, the RoI pooling layer converts the variable-sized proposals to a fixed size so that a classifier and a bounding-box regressor can be applied to them. Fig. 3 illustrates the architecture of Faster R-CNN.
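The anchor step above can be illustrated with a small sketch: anchors of several sizes and aspect ratios are placed at one feature-map location and labeled as object (1) or not (0) by their best IoU with a ground-truth box, using the 0.5 threshold stated above. This is a simplified illustration, not the torchvision implementation; real RPNs also use a separate background threshold and sample anchors per image, and the sizes, ratios, and boxes here are hypothetical.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

def anchors_at(cx: float, cy: float, sizes: List[float], ratios: List[float]) -> List[Box]:
    """Anchors centred at (cx, cy); ratio = height / width, area = size ** 2."""
    boxes = []
    for s in sizes:
        for r in ratios:
            w, h = s / math.sqrt(r), s * math.sqrt(r)
            boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return boxes

def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def label_anchors(anchors: List[Box], gt_boxes: List[Box], thresh: float = 0.5) -> List[int]:
    """1 if an anchor's best IoU with any ground-truth box reaches `thresh`, else 0."""
    return [int(max((iou(a, g) for g in gt_boxes), default=0.0) >= thresh) for a in anchors]

# Hypothetical example: 3 sizes x 3 ratios at pixel (64, 64), one 64x64 lesion box.
anchors = anchors_at(64, 64, sizes=[32, 64, 128], ratios=[0.5, 1.0, 2.0])
print(label_anchors(anchors, [(32, 32, 96, 96)]))
```

Only the anchors whose scale roughly matches the object pass the threshold; the RPN regressor then refines those anchors toward the ground-truth box.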

3.4. Training Details

For the YOLO variants, standard hyperparameters were used. The input resolution was fixed at 640 pixels. The optimizer was SGD with an initial learning rate $\alpha=1\times10^{-2}$, a final learning rate $\alpha_{f}=1\times10^{-2}$ (except for the YOLOv7 variants, which used $\alpha_{f}=1\times10^{-1}$), momentum of 0.937, and weight decay of $5\times10^{-4}$. Each variant was trained from scratch for 100 epochs and was observed to converge between epochs 90 and 100. Every variant was trained with a batch size of 16, except the "P6" variants of YOLOv7 (d6, e6, w6, and e6e), which were trained with a batch size of 8 due to computational constraints.
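The settings above can be collected into a small configuration. One interpretive assumption is needed: a final learning rate equal to the initial one would mean no decay at all, so the sketch assumes $\alpha_{f}$ follows the Ultralytics-style `lrf` convention, a factor such that the final rate is $\alpha \cdot \alpha_{f}$. The linear decay shown is the shape YOLOv5 uses by default as far as we know; other YOLO versions may use a cosine shape, and warm-up epochs are ignored here.

```python
# Hyperparameters reported above for the YOLO runs ("lrf" is an assumed
# interpretation of the final learning rate as a fraction of lr0).
HYP = {"lr0": 1e-2, "lrf": 1e-2, "momentum": 0.937, "weight_decay": 5e-4,
       "imgsz": 640, "batch_size": 16, "epochs": 100}

def linear_lr(epoch: int, lr0: float, lrf: float, epochs: int) -> float:
    """Linear decay from lr0 at epoch 0 to lr0 * lrf at the final epoch (no warm-up)."""
    return lr0 * ((1 - epoch / epochs) * (1.0 - lrf) + lrf)

for e in (0, 50, 100):
    print(e, linear_lr(e, HYP["lr0"], HYP["lrf"], HYP["epochs"]))

# YOLOv7 runs: same lr0 with lrf = 0.1, so training would end at 1e-3.
print(linear_lr(100, HYP["lr0"], 0.1, HYP["epochs"]))
```

Under this reading, the YOLOv5/v6/v8 runs end at $10^{-4}$ and the YOLOv7 runs at $10^{-3}$.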

For Faster R-CNN, the only differences were the learning rate ($\alpha=1\times10^{-3}$) and momentum (0.9); the weight decay was also $5\times10^{-4}$, and all other parameters matched the YOLO variants. As with the YOLO models, these parameters were not deliberately tuned; they are the default settings.

All binary classifiers were trained for a maximum of 100 epochs with a batch size of 64, a learning rate of $1\times10^{-3}$, and the Adam optimizer. Input images were resized to a resolution of 224 pixels.

3.5. Evaluation Metrics: mAP

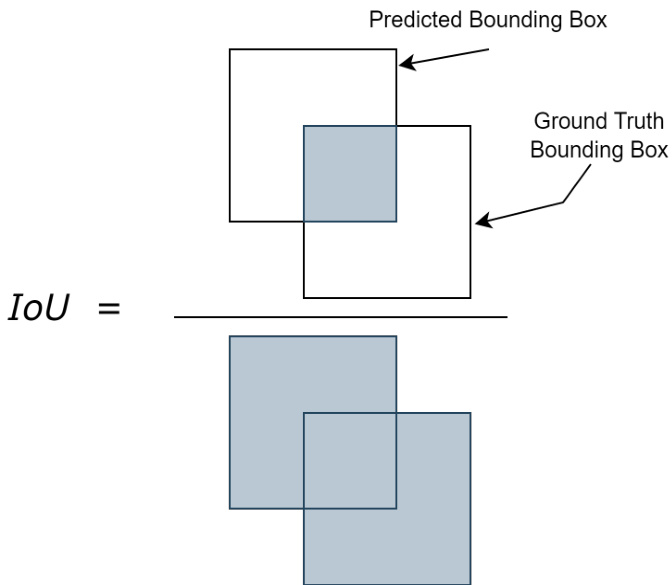

For the evaluation of object detection, a common way to determine whether the predicted location of an object is correct is to compute the Intersection over Union ($IoU$). It is defined as the ratio of the area of intersection of the predicted and ground-truth bounding boxes to the area of their union. A visual illustration of $IoU$ is presented in Fig. 4. Given a predicted bounding box $A$ and a ground-truth bounding box $B$ for the same image, the IoU is computed as:

$$
IoU(A,B)=\frac{|A\cap B|}{|A\cup B|},\qquad IoU(A,B)\in[0,1]
$$
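For axis-aligned boxes, the IoU formula reduces to a few comparisons. A minimal sketch, assuming boxes are given as (x1, y1, x2, y2) pixel corners (a convention chosen here for illustration):

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    # Width and height of the overlap; zero if the boxes do not intersect.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter  # |A ∪ B| = |A| + |B| - |A ∩ B|
    return inter / union if union > 0 else 0.0

pred = (10, 10, 50, 50)
gt = (20, 20, 60, 60)
print(round(iou(pred, gt), 4))  # 0.3913
```

Here the overlap is 30 × 30 = 900 and the union is 1600 + 1600 - 900 = 2300, so this prediction would fall below a 0.5 threshold.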

Commonly, if $IoU>0.5$, the detection is classified as a true positive; otherwise, it is classified as a false positive. Given the IoU, we can count the true positives $TP$ and false positives $FP$ and compute the average precision $AP$ for each object class $c$ as follows:

$$
AP(c)=\frac{TP(c)}{TP(c)+FP(c)}
$$
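Counting $TP(c)$ and $FP(c)$ requires matching predictions to ground-truth boxes. The sketch below uses a common greedy rule, assumed here rather than taken from the paper: predictions of one class are visited in descending confidence, each ground-truth box can be matched only once, and a match needs $IoU>0.5$. The boxes and scores are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def count_tp_fp(preds: List[Tuple[float, Box]], gts: List[Box],
                thresh: float = 0.5) -> Tuple[int, int]:
    """Greedily match (score, box) predictions of one class to ground-truth boxes.
    Each ground-truth box is matched at most once, so duplicates count as FP."""
    matched = set()
    tp = fp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(gts):
            if j not in matched:
                overlap = iou(box, gt)
                if overlap > best_iou:
                    best_j, best_iou = j, overlap
        if best_iou > thresh:
            matched.add(best_j)
            tp += 1
        else:
            fp += 1
    return tp, fp

gts = [(20, 20, 60, 60)]
preds = [(0.9, (22, 22, 60, 60)),      # good match -> TP
         (0.8, (21, 21, 61, 61)),      # second box on the same fracture -> FP
         (0.3, (100, 100, 120, 120))]  # no overlap -> FP
tp, fp = count_tp_fp(preds, gts)
print(tp, fp, tp / (tp + fp))
```

Note that the ratio in the equation is the precision at a single confidence cut-off; the one-to-one matching is what prevents a model from inflating $TP$ with repeated boxes on the same fracture.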

Figure 4: Visual illustration of Intersection over Union ($IoU$).

Finally, after computing $AP$ for each object class, we compute the mean average precision ($mAP$), the average of $AP$ over all $C$ classes under consideration:

$$
mAP=\frac{1}{C}\sum_{c=1}^{C}AP(c)
$$

$mAP$ is the standard metric for quantifying the performance of object detection algorithms. The metric $mAP_{0.5}$ denotes $mAP$ computed with an IoU threshold of 0.5 (a detection counts as a true positive when $IoU>0.5$); this is the threshold we use to assess the detection models.
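The per-class ratio above is evaluated at a single operating point. Detection benchmarks, and as far as we know the YOLO validation tooling, instead compute $AP$ as the area under the precision-recall curve traced by the detections ranked by confidence, and then average over classes as in the $mAP$ formula. The sketch below swaps in all-point interpolated AP for illustration; the per-class true-positive flags are hypothetical.

```python
from typing import Dict, List, Tuple

def average_precision(tp_flags: List[int], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    tp_flags: 1/0 per detection, sorted by descending confidence
              (1 = matched a ground-truth box with IoU > 0.5).
    num_gt:   number of ground-truth boxes of this class.
    """
    if num_gt == 0:
        return 0.0
    precision, recall, tp = [], [], 0
    for rank, flag in enumerate(tp_flags, start=1):
        tp += flag
        precision.append(tp / rank)
        recall.append(tp / num_gt)
    # Interpolate: precision at each rank becomes the max at this or any later rank.
    for i in range(len(precision) - 2, -1, -1):
        precision[i] = max(precision[i], precision[i + 1])
    # Sum rectangle areas under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

def mean_average_precision(per_class: Dict[str, Tuple[List[int], int]]) -> float:
    """mAP = (1 / C) * sum of AP(c) over the C classes."""
    aps = [average_precision(flags, n_gt) for flags, n_gt in per_class.values()]
    return sum(aps) / len(aps)

# Hypothetical ranked detections for two classes: (TP flags, number of GT boxes).
per_class = {
    "fracture": ([1, 1, 0, 1], 4),  # 3 of 4 fractures found, one false alarm
    "metal": ([1, 0], 1),
}
print(mean_average_precision(per_class))
```

Here the fracture class gets $AP = 0.25 + 0.25 + 0.25 \times 0.75 = 0.6875$, the metal class $AP = 1$, and $mAP = 0.84375$.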

3.5.1. Sensitivity

Sensitivity, in the context of our model, is the proportion of actual positives in the dataset (ground-truth fractures or abnormalities) that the model correctly detects. Specifically, it gauges the model's ability to correctly identify the presence of a fracture or abnormality. We prioritize this metric because of the potential consequences of false negatives in wrist trauma cases: missed fractures are a frequent cause of discrepancies between the initial interpretation of X-ray images and the final reading by certified radiologists. Sensitivity is calculated as follows:

$$
\mathrm{Sensitivity}=\frac{\mathrm{True~Positives}}{\mathrm{True~Positives}+\mathrm{False~Negatives}}
$$
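At the detection level, sensitivity counts ground-truth boxes rather than predictions: every annotated fracture that no prediction overlaps is a false negative. A minimal sketch, assuming a simplified rule in which a ground-truth box counts as detected if any prediction reaches $IoU>0.5$ with it (one-to-one matching is not enforced here); the boxes are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def count_tp_fn(preds: List[Box], gts: List[Box], thresh: float = 0.5) -> Tuple[int, int]:
    """A ground-truth box is a TP if some prediction overlaps it with IoU > thresh, else FN."""
    tp = sum(1 for g in gts if any(iou(p, g) > thresh for p in preds))
    return tp, len(gts) - tp

def sensitivity(tp: int, fn: int) -> float:
    """Sensitivity (recall) = TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) > 0 else 0.0

gts = [(20, 20, 60, 60), (100, 100, 140, 140)]  # two annotated fractures
preds = [(22, 22, 60, 60)]                      # only the first one is found
tp, fn = count_tp_fn(preds, gts)
print(sensitivity(tp, fn))  # 0.5
```

False positives do not enter this ratio at all, which is why sensitivity is usually reported alongside a precision-based metric such as $mAP$.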

4. Dataset

The dataset used in this study is GRAZPEDWRI-DX, a dataset for machine learning introduced by Nagy et al. (2022) and made publicly available to encourage computer vision research. It contains pediatric wrist radiographs in PNG format from 6,091 patients (mean age 10.9 years, range 0.2 to 19 years; 2,688 females, 3,402 males, 1 unknown) treated at the Division of Paediatric Radiology, Department of Radiology, Medical University of Graz, Austria. The dataset includes a total of 20,327 wrist images covering lateral and posteroanterior projections. The radiographs were acquired over 10 years, between 2008 and 2018, and were comprehensively annotated between 2018 and 2020 by expert radiologists and various medical students. As the X-ray images were annotated, the annotations were validated by three experienced radiologists, and this process was repeated until the three radiologists reached a consensus on the annotations and interpretations. We chose this dataset for our study for the following reasons:

4.1. Analysis of Objects in the Dataset

The dataset includes a total of 9 object classes: periosteal reaction, fracture, metal, pronator sign, soft tissue, bone anomaly, bone lesion, foreign body, and text. The "text" object is present in all X-ray images and identifies the side of the body (right or left hand) on which the X-ray was taken. The number of objects in the dataset is shown in Table 1. The table shows that "fracture" is the most common object in the wrist X-rays of the GRAZPEDWRI-DX dataset, followed by "periosteal reaction" and then "metal". The classes "bone anomaly", "bone lesion", and "foreign body" occur least often. Note that the table shows how many X-ray images contain a particular object, not the number of times the object is labeled in the dataset. Fig. 5 shows the class distribution as a histogram.

Table 1: Class Distribution

| Abnormality | Images | Percentage |
|---|---|---|
| Bone anomaly | 192 | 0.94% |
| Bone lesion | 42 | 0.21% |
| Foreign body | 8 | 0.04% |
| Fracture | 13550 | 66.6% |
| Metal | 708 | 3.48% |
| Periosteal reaction | 2235 | 11.0% |
| Pronator sign | 566 | 2.78% |
| Soft tissue | 439 | 2.16% |
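The percentages in Table 1 appear to be each image count divided by the 20,327 images in the dataset; the short check below reproduces them to within rounding. Treating 20,327 as the denominator is our inference from the numbers, not a statement from the dataset authors.

```python
TOTAL_IMAGES = 20_327  # wrist images in GRAZPEDWRI-DX, as stated in Section 4

# Images containing each class, copied from Table 1.
IMAGE_COUNTS = {
    "Bone anomaly": 192, "Bone lesion": 42, "Foreign body": 8, "Fracture": 13550,
    "Metal": 708, "Periosteal reaction": 2235, "Pronator sign": 566, "Soft tissue": 439,
}

def share_of_images(count: int, total: int = TOTAL_IMAGES) -> float:
    """Percentage of all images that contain a class."""
    return 100.0 * count / total

for name, count in IMAGE_COUNTS.items():
    print(f"{name:<20} {count:>6} {share_of_images(count):6.2f}%")
```

Because one image can contain several classes, these shares are not expected to sum to 100%.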

In Table 2, we show the number of images in which a particular anomaly occurs only once, twice, or multiple times. The column "Total" represents the total number of images in which a particular anomaly is present.
