Constrained Contrastive Distribution Learning for Unsupervised Anomaly Detection and Localisation in Medical Images

Abstract. Unsupervised anomaly detection (UAD) learns one-class classifiers exclusively with normal (i.e., healthy) images to detect any abnormal (i.e., unhealthy) samples that do not conform to the expected normal patterns. UAD has two main advantages over its fully supervised counterpart. First, it can directly leverage large datasets available from health screening programs that contain mostly normal image samples, avoiding the costly manual labelling of abnormal samples and the subsequent issues involved in training with extremely class-imbalanced data. Second, UAD approaches can potentially detect and localise any type of lesion that deviates from the normal patterns. One significant challenge faced by UAD methods is how to learn effective low-dimensional image representations to detect and localise subtle abnormalities, generally consisting of small lesions. To address this challenge, we propose a novel self-supervised representation learning method, called Constrained Contrastive Distribution learning for anomaly detection (CCD), which learns fine-grained feature representations by simultaneously predicting the distribution of augmented data and image contexts using contrastive learning with pretext constraints. The learned representations can be leveraged to train more anomaly-sensitive detection models. Extensive experimental results show that our method outperforms current state-of-the-art UAD approaches on three different colonoscopy and fundus screening datasets. Our code is available at https://github.com/tianyu0207/CCD.

Keywords: Anomaly detection $\cdot$ Unsupervised learning $\cdot$ Lesion detection and segmentation $\cdot$ Self-supervised pre-training $\cdot$ Colonoscopy.

1 Introduction

The classification and localisation of malignant tissues have been extensively investigated in medical imaging [1, 11, 22–24, 26, 29, 42, 43]. Such systems are useful in health screening programs that require radiologists to analyse large quantities of images [35, 41], where the majority contain normal (or healthy) cases, and a small minority have abnormal (or unhealthy) cases that can be regarded as anomalies. Hence, to avoid the difficulty of learning from such class-imbalanced training sets and the prohibitive cost of collecting large sets of manually labelled abnormal cases, several papers investigate anomaly detection (AD) with few or no labels as an alternative to traditional fully supervised imbalanced learning [1, 26, 28, 32, 33, 37, 38, 43–45]. Unsupervised anomaly detection (UAD) methods typically train a one-class classifier using data from the normal class only, and anomalies (or abnormal cases) are detected based on the extent to which the images deviate from the normal class.

Current anomaly detection approaches [7, 8, 14, 27, 37, 43, 46] train deep generative models (e.g., auto-encoder [19], GAN [15]) to reconstruct normal images, and anomalies are detected from the reconstruction error [33]. These approaches rely on a low-dimensional image representation that must be effective at reconstructing normal images, where the main challenge is to detect anomalies that show subtle deviations from normal images, such as those with small lesions [43]. Recently, self-supervised methods that learn auxiliary pretext tasks [2, 6, 13, 17, 18, 25] have been shown to learn effective representations for UAD in general computer vision tasks [2, 13, 18], so it is important to investigate whether self-supervision can also improve UAD for medical images.

The main challenge in designing UAD methods for medical imaging lies in how to devise effective pretext tasks. Self-supervised pretext tasks consist of predicting geometric or brightness transformations [2, 13, 18], or contrastive learning [6, 17]. These pretext tasks have been designed to work for downstream classification problems that are not related to anomaly detection, so they may degrade the detection performance of UAD methods [47]. Sohn et al. [40] tackle this issue by using smaller batch sizes than in [6, 17] and a new data augmentation method. However, the use of self-supervised learning in UAD for medical images has not been investigated, to the best of our knowledge. Further, although transformation prediction and contrastive learning show great success in self-supervised feature learning, there are no studies on how to properly combine these two approaches to learn more effective features for UAD.

In this paper, we propose Constrained Contrastive Distribution learning (CCD), a new self-supervised representation learning method designed specifically to learn normality information from exclusively normal training images. The contributions of CCD are: a) contrastive distribution learning, and b) two pretext learning constraints, both of which are customised for anomaly detection (AD). Unlike modern self-supervised learning (SSL) [6, 17], which focuses on learning generic semantic representations for enabling diverse downstream tasks, CCD instead contrasts the distributions of strongly augmented images (e.g., random permutations). The strongly augmented images resemble some types of abnormal images, so CCD is forced to learn discriminative normality representations by its contrastive distribution learning. The two pretext learning constraints on augmentation and location prediction are added to learn fine-grained normality representations for the detection of subtle abnormalities. These two unique components result in significantly improved self-supervised AD-oriented representation learning, substantially outperforming previous general-purpose SOTA SSL approaches [2, 6, 13, 18]. Another important contribution of CCD is that it is agnostic to downstream anomaly classifiers. We empirically show that our CCD improves the performance of three diverse anomaly detectors (F-anoGAN [37], IGD [8], and MS-SSIM [48]). Inspired by IGD [8], we apply our proposed CCD pre-training to both global images and local patches. Extensive experimental results on three different health screening medical imaging benchmarks, namely, colonoscopy images from two datasets [4, 27] and fundus images for glaucoma detection [21], show that our proposed self-supervised approach yields SOTA anomaly detection and localisation in medical images.

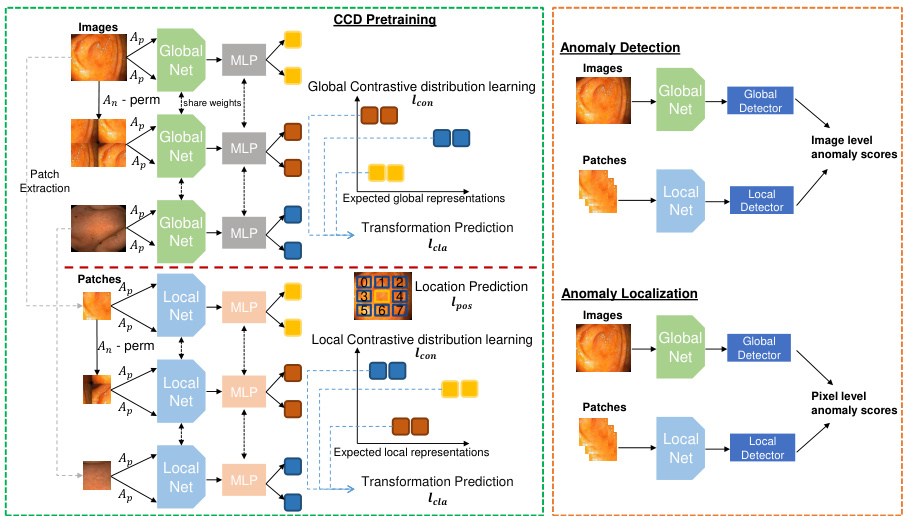

Fig. 1: Our proposed CCD framework. Left: the proposed pre-training method, which unifies contrastive distribution learning and pretext learning on both global and local perspectives (Sec. 2.1). Right: the inference for detection and localisation (Sec. 2.2).

2 Method

2.1 Constrained Contrastive Distribution Learning

Contrastive learning has been used by self-supervised learning methods to pre-train encoders with data augmentation [6, 17, 47] and a contrastive learning loss [39]. The idea is to sample functions from a data augmentation distribution (e.g., geometric and brightness transformations), and assume that the same image, under separate augmentations, forms one class to be distinguished from all other images in the batch [2, 13]. Another form of pre-training is based on a pretext task, such as solving jigsaw puzzles or predicting geometric and brightness transformations [6, 17]. These self-supervised learning approaches are useful to pre-train classification [6, 17] and segmentation models [31, 49]. Only recently has self-supervised learning using contrastive learning [40] and pretext learning [2, 13] been shown to be effective in anomaly detection. However, these two approaches have been explored separately. In this paper, we aim to harness the power of both approaches to learn more expressive pre-trained features specifically for UAD. To this end, we propose the novel Constrained Contrastive Distribution learning method (CCD).

Contrastive distribution learning is designed to enforce a non-uniform distribution of the representations in the space $\mathcal{Z}$, which has been associated with more effective anomaly detection performance [40]. Our CCD method constrains the contrastive distribution learning with two pretext learning tasks, with the goal of further enforcing the non-uniform distribution of the representations. The CCD loss is defined as

$$
\ell_{CCD}(\mathcal{D};\theta,\beta,\gamma)=\ell_{con}(\mathcal{D};\theta)+\ell_{cla}(\mathcal{D};\beta)+\ell_{pos}(\mathcal{D};\gamma), \tag{1}
$$

where $\ell_{con}(\cdot)$ is the contrastive distribution loss, $\ell_{cla}(\cdot)$ and $\ell_{pos}(\cdot)$ are two pretext learning tasks added to constrain the optimisation, and $\theta$, $\beta$ and $\gamma$ are trainable parameters. The contrastive distribution learning uses a set of weak data augmentations $\mathcal{A}_{p}=\{a_{l}:\mathcal{X}\to\mathcal{X}\}_{l=1}^{|\mathcal{A}_{p}|}$ and a set of strong data augmentations $\mathcal{A}_{n}=\{a_{l}:\mathcal{X}\to\mathcal{X}\}_{l=1}^{|\mathcal{A}_{n}|}$, where $a_{l}(\mathbf{x})$ denotes a particular data augmentation applied to $\mathbf{x}$, and the loss is defined as

$$
\ell_{con}(\mathcal{D};\theta)=-\mathbb{E}\left[\log\frac{\exp\left[\frac{1}{\tau}f_{\theta}\left(a(\tilde{\mathbf{x}}^{j})\right)^{\top}f_{\theta}\left(a^{\prime}(\tilde{\mathbf{x}}^{j})\right)\right]}{\exp\left[\frac{1}{\tau}f_{\theta}\left(a(\tilde{\mathbf{x}}^{j})\right)^{\top}f_{\theta}\left(a^{\prime}(\tilde{\mathbf{x}}^{j})\right)\right]+\sum_{i=1}^{M}\exp\left[\frac{1}{\tau}f_{\theta}\left(a(\tilde{\mathbf{x}}^{j})\right)^{\top}f_{\theta}\left(a^{\prime}(\tilde{\mathbf{x}}_{i}^{j})\right)\right]}\right], \tag{2}
$$

where the expectation is over $\mathbf{x}\in\mathcal{D}$, $\{\mathbf{x}_{i}\}_{i=1}^{M}\subset\mathcal{D}\setminus\{\mathbf{x}\}$, $a(\cdot),a^{\prime}(\cdot)\in\mathcal{A}_{p}$, $\tilde{\mathbf{x}}^{j}=a_{j}(\mathbf{x})$, $\tilde{\mathbf{x}}_{i}^{j}=a_{j}(\mathbf{x}_{i})$, and $a_{j}(\cdot)\in\mathcal{A}_{n}$. The images augmented with functions from the strong set $\mathcal{A}_{n}$ carry some 'abnormality' compared to the original images, which is helpful to learn a non-uniform distribution in the representation space $\mathcal{Z}$.
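As an illustration of the term inside the expectation in (2), the following minimal sketch computes the loss for a single anchor from feature vectors that are assumed to have been produced already by $f_{\theta}$. The function names, the plain-list feature representation, and the default temperature `tau=0.2` are assumptions made for this example and are not taken from the released code.

```python
import math


def dot(u, v):
    """Inner product of two equally sized feature vectors."""
    return sum(a * b for a, b in zip(u, v))


def contrastive_distribution_term(anchor, positive, negatives, tau=0.2):
    """Per-sample term of the contrastive distribution loss in (2).

    anchor    : f_theta(a(x~^j)), one weak view of a strongly augmented image
    positive  : f_theta(a'(x~^j)), a second weak view of the same image
    negatives : list of f_theta(a'(x~_i^j)) for the M other images
    tau       : temperature (hypothetical default, not from the paper)
    """
    pos_logit = dot(anchor, positive) / tau
    logits = [pos_logit] + [dot(anchor, n) / tau for n in negatives]
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
    return -(pos_logit - log_denominator)
```

Averaging this term over images, strong augmentations, and pairs of weak augmentations would approximate the expectation in (2); the term shrinks as the two views of the same strongly augmented image become more similar than the views of other images.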

We can then further constrain the training to learn more non-uniform representations with a self-supervised classification constraint $\ell_{cla}(\cdot)$ that forces the model to accurately classify the strong augmentation function:

$$
\ell_{cla}(\mathcal{D};\beta)=-\mathbb{E}_{\mathbf{x}\in\mathcal{D},\,a(\cdot)\in\mathcal{A}_{n}}\left[\log\mathbf{a}^{\top}f_{\beta}(f_{\theta}(a(\mathbf{x})))\right], \tag{3}
$$

where $f_{\beta}:\mathcal{Z}\rightarrow[0,1]^{|\mathcal{A}_{n}|}$ is a fully-connected (FC) layer, and $\mathbf{a}\in\{0,1\}^{|\mathcal{A}_{n}|}$ is a one-hot vector representing the strong augmentation $a(\cdot)\in\mathcal{A}_{n}$.
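The term inside the expectation in (3) is a cross-entropy between the one-hot augmentation label $\mathbf{a}$ and the output of $f_{\beta}$. The sketch below assumes that the FC layer produces raw scores which are normalised with a softmax so that the outputs lie in $[0,1]$; this normalisation and the function names are assumptions for illustration only.

```python
import math


def softmax(scores):
    """Map raw FC-layer scores to probabilities that sum to one."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def augmentation_classification_term(scores, aug_index):
    """Per-sample term of (3): -log(a^T f_beta(f_theta(a(x)))).

    scores    : raw scores, one per strong augmentation in A_n (assumed input)
    aug_index : index of the strong augmentation actually applied
    """
    probs = softmax(scores)
    one_hot = [1.0 if k == aug_index else 0.0 for k in range(len(scores))]
    return -math.log(sum(a * p for a, p in zip(one_hot, probs)))
```

With uniform scores over four strong augmentations the term equals $\log 4$, and it decreases as the score of the applied augmentation increases.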

The second constraint is based on the relative patch location from the centre of the training image; this positional information is important for segmentation tasks [20, 31]. This constraint is added to learn fine-grained features and achieve more accurate anomaly localisation. Inspired by [10], the positional constraint predicts the relative position of the paired image patches, with its loss defined as

$$
\ell_{pos}(\mathcal{D};\gamma)=-\mathbb{E}_{\{\mathbf{x}_{\omega_{1}},\mathbf{x}_{\omega_{2}}\}\sim\mathbf{x}\in\mathcal{D}}\left[\log\mathbf{p}^{\top}f_{\gamma}(f_{\theta}(\mathbf{x}_{\omega_{1}}),f_{\theta}(\mathbf{x}_{\omega_{2}}))\right], \tag{4}
$$

where $\mathbf{x}_{\omega_{1}}$ is a randomly selected fixed-size image patch from $\mathbf{x}$, $\mathbf{x}_{\omega_{2}}$ is another image patch from one of its eight neighbouring patches (as shown in 'patch location prediction' in Fig. 1), $f_{\gamma}:\mathcal{Z}\times\mathcal{Z}\to[0,1]^{8}$, and $\mathbf{p}\in\{0,1\}^{8}$ is a one-hot encoding of the synthetic class label.
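The sampling of the patch pair $(\mathbf{x}_{\omega_{1}},\mathbf{x}_{\omega_{2}})$ and its synthetic label can be sketched as follows. This is a minimal example under stated assumptions: the neighbours are taken to be directly adjacent, non-overlapping patches of the same size, the label ordering in `NEIGHBOUR_OFFSETS` is arbitrary, and the image is assumed to be at least three patches wide and high. None of these details are specified in the text above.

```python
import random

# (row, col) offsets of the eight neighbours, in units of one patch.
# The list index serves as the synthetic class label (hypothetical ordering).
NEIGHBOUR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1),
                     (0, -1),           (0, 1),
                     (1, -1),  (1, 0),  (1, 1)]


def sample_patch_pair(height, width, patch, rng=random):
    """Return the top-left corners of x_w1 and x_w2 and the label in 0..7.

    Assumes height >= 3 * patch and width >= 3 * patch.
    """
    # Keep the first patch one patch away from every border so that
    # all eight neighbours lie fully inside the image.
    top = rng.randint(patch, height - 2 * patch)
    left = rng.randint(patch, width - 2 * patch)
    label = rng.randrange(8)
    d_row, d_col = NEIGHBOUR_OFFSETS[label]
    neighbour = (top + d_row * patch, left + d_col * patch)
    return (top, left), neighbour, label
```

The one-hot vector $\mathbf{p}$ in (4) then has a single 1 at position `label`, and the loss term has the same cross-entropy form as the augmentation constraint.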

Overall, the constraints in (3) and (4) on the contrastive distribution loss in (2) are designed to make the representation distribution more non-uniform and to improve the discriminability of the representations between normal and abnormal samples, compared with [40].

2.2 Anomaly Detection and Localisation

Building upon the encoder $f_{\theta}(\cdot)$ pre-trained with the loss in (1), we fine-tune two state-of-the-art UAD methods, IGD [8] and F-anoGAN [37], and a baseline method, a multi-scale structural similarity index measure (MS-SSIM)-based auto-encoder [48]. All UAD methods use the same training set $\mathcal{D}$, which contains only normal image samples.

IGD [8] combines three loss functions: 1) two reconstruction losses, based on local and global multi-scale structural similarity index measure (MS-SSIM) [48] and mean absolute error (MAE), to train the encoder $f_{\theta}(\cdot)$ and decoder $g_{\phi}(\cdot)$; 2) a regularisation loss to train adversarial interpolations from the encoder [3]; and 3) an anomaly classification loss to train $h_{\psi}(\cdot)$. The anomaly detection score of image $\mathbf{x}$ is

$$
s_{IGD}(\mathbf{x})=\xi\ell_{rec}(\mathbf{x},\tilde{\mathbf{x}})+(1-\xi)(1-h_{\psi}(f_{\theta}(\mathbf{x}))), \tag{5}
$$

where $\tilde{\mathbf{x}}=g_{\phi}(f_{\theta}(\mathbf{x}))$ , $h_{\psi}(f_{\theta}(\mathbf{x}))\in[0,1]$ returns the likelihood that $\mathbf{x}$ belongs to the normal class, $\xi\in[0,1]$ is a hyper-parameter, and

$$
\ell_{rec}(\mathbf{x},\tilde{\mathbf{x}})=\rho\|\mathbf{x}-\tilde{\mathbf{x}}\|_{1}+\left(1-\rho\right)\left(1-\left(\nu m_{G}(\mathbf{x},\tilde{\mathbf{x}})+(1-\nu)m_{L}(\mathbf{x},\tilde{\mathbf{x}})\right)\right), \tag{6}
$$

with $\rho,\nu\in[0,1]$, and $m_{G}(\cdot)$ and $m_{L}(\cdot)$ denoting the global and local MS-SSIM scores [8]. Anomaly localisation uses (5) to compute $s_{IGD}(\mathbf{x}_{\omega})$, $\forall\omega\in\Omega$, where $\mathbf{x}_{\omega}\in\mathbb{R}^{\hat{H}\times\hat{W}\times C}$ is an image region; this forms a heatmap in which large values denote anomalous regions.
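The arithmetic of the IGD score in (5) and the reconstruction loss in (6) can be sketched as below. The MS-SSIM values $m_{G}$ and $m_{L}$ and the normal-class probability $h_{\psi}(f_{\theta}(\mathbf{x}))$ are taken as given inputs rather than computed, images are flattened into lists of pixel values, and the default weights of 0.5 are placeholders chosen for the example, not values reported in the paper.

```python
def reconstruction_loss(x, x_rec, ms_ssim_global, ms_ssim_local, rho=0.5, nu=0.5):
    """Reconstruction loss (6) for flattened images x and x_rec.

    ms_ssim_global, ms_ssim_local : precomputed m_G and m_L (assumed inputs)
    rho, nu                       : mixing weights in [0, 1] (placeholder defaults)
    """
    l1 = sum(abs(a - b) for a, b in zip(x, x_rec))
    ssim_mix = nu * ms_ssim_global + (1.0 - nu) * ms_ssim_local
    return rho * l1 + (1.0 - rho) * (1.0 - ssim_mix)


def igd_score(rec_loss, normal_prob, xi=0.5):
    """Anomaly score (5): larger values indicate a more anomalous input.

    normal_prob : h_psi(f_theta(x)), probability of the normal class
    xi          : weight in [0, 1] (placeholder default)
    """
    return xi * rec_loss + (1.0 - xi) * (1.0 - normal_prob)
```

Evaluating `igd_score` on every region $\mathbf{x}_{\omega}$ and arranging the results by region position would give the localisation heatmap described above.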

F-anoGAN [37] combines generative adversarial networks (GAN) and auto-encoder models to detect anomalies. Training involves the minimisation of reconstruction losses in both the original image and representation spaces to model $f_{\theta}(\cdot)$ and $g_{\phi}(\cdot)$. It also uses a GAN loss [15] to model $g_{\phi}(\cdot)$ and $h_{\psi}(\cdot)$. The anomaly detection score for image $\mathbf{x}$ is

$$
s_{FAN}(\mathbf{x})=\|\mathbf{x}-g_{\phi}(f_{\theta}(\mathbf{x}))\|+\kappa\|f_{\theta}(\mathbf{x})-f_{\theta}(g_{\phi}(f_{\theta}(\mathbf{x})))\|. \tag{7}
$$

Anomaly localisation at $\mathbf{x}_{\omega}\in\mathbb{R}^{\hat{H}\times\hat{W}\times C}$ is achieved by $\|\mathbf{x}_{\omega}-g_{\phi}(f_{\theta}(\mathbf{x}_{\omega}))\|$, $\forall\omega\in\Omega$.
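The F-anoGAN score in (7) adds an image-space residual to a representation-space residual. The sketch below assumes Euclidean norms, since the norm type is not stated above, and treats the encoder and decoder as arbitrary callables on flattened vectors; `encode`, `decode`, and the default `kappa=1.0` are hypothetical stand-ins for $f_{\theta}$, $g_{\phi}$, and $\kappa$.

```python
import math


def l2_norm(v):
    """Euclidean norm of a vector (assumed norm type)."""
    return math.sqrt(sum(a * a for a in v))


def fanogan_score(x, encode, decode, kappa=1.0):
    """Anomaly score (7) for a flattened image x.

    encode : callable standing in for f_theta
    decode : callable standing in for g_phi
    kappa  : weight of the representation residual (placeholder default)
    """
    z = encode(x)
    x_rec = decode(z)
    z_rec = encode(x_rec)
    image_residual = l2_norm([a - b for a, b in zip(x, x_rec)])
    feature_residual = l2_norm([a - b for a, b in zip(z, z_rec)])
    return image_residual + kappa * feature_residual
```

For localisation, only the image-space residual is evaluated on each region $\mathbf{x}_{\omega}$, as stated in the text.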

For the MS-SSIM auto-encoder [48], we train it with the MS-SSIM loss for reconstructing the training images. Anomaly detection for $\mathbf{x}$ is based on $s_{MSI}(\mathbf{x})=1-\left(\nu m_{G}(\mathbf{x},\tilde{\mathbf{x}})+(1-\nu)m_{L}(\mathbf{x},\tilde{\mathbf{x}})\right)$, with $\tilde{\mathbf{x}}$ as defined in (5). Anomaly localisation is performed with $s_{MSI}(\mathbf{x}_{\omega})$ at image regions $\mathbf{x}_{\omega}\in\mathbb{R}^{\hat{H}\times\hat{W}\times C}$, $\forall\omega\in\Omega$. Inspired by IGD [8], we