Patient Trajectory Prediction: Integrating Clinical Notes with Transformers

Keywords:

Trajectory prediction, Transformers, Knowledge integration, Deep learning

Abstract:

Predicting disease trajectories from electronic health records (EHRs) is a complex task due to major challenges such as data non-stationarity, the high granularity of medical codes, and the integration of multimodal data. EHRs contain both structured data, such as diagnostic codes, and unstructured data, such as clinical notes, which hold essential information that is often overlooked. Current models, primarily based on structured data, struggle to capture the complete medical context of patients, resulting in a loss of valuable information. To address this issue, we propose an approach that integrates unstructured clinical notes into transformer-based deep learning models for sequential disease prediction. This integration enriches the representation of patients' medical histories, thereby improving the accuracy of diagnosis predictions. Experiments on the MIMIC-IV datasets demonstrate that the proposed approach outperforms traditional models relying solely on structured data.

1 Introduction

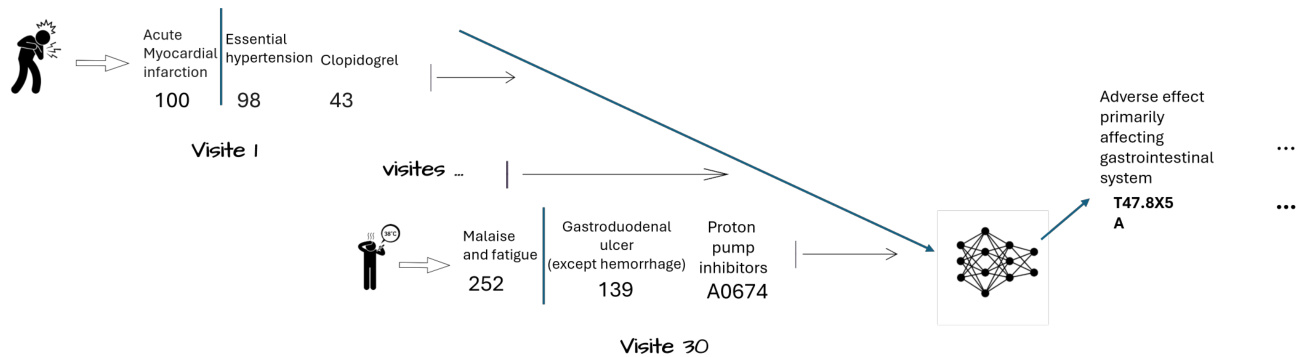

In healthcare, the exponential growth of Electronic Health Records (EHRs) has revolutionized patient care while posing new challenges. Healthcare professionals now frequently work with medical records spanning several decades and must process and analyze this vast amount of information to make informed decisions about patients' future health status. This evolution has accelerated the development of automated systems that predict future diagnoses from past medical data, which are becoming a key element of personalized and proactive medicine (Figure 1). Machine learning techniques, particularly deep learning, are increasingly used in medicine (Egger et al., 2022), owing to their adaptability and strong results. In medical imaging, for example, deep learning models have reached a high level of diagnostic performance, sometimes comparable to or even surpassing that of human experts (Mall et al., 2023). These results have led researchers to apply similar techniques to sequential disease prediction (Choi et al., 2016a; Rodrigues-Jr et al., 2021; Shankar et al., 2023), where the goal is to predict a patient's diagnoses at their next visit (N+1) from the content of their previous N visits. However, modeling patient trajectories from EHR data presents unique challenges:

• The non-stationarity of EHR data, whose distributions shift over time, limits the generalizability of models.

Addressing these challenges is essential to develop accurate and reliable patient trajectory prediction systems that can assist physicians in decision-making by providing comprehensive forecasts based on a patient's clinical history.

In light of these challenges, this article focuses on improving the accuracy of automated medical prognosis systems, particularly in predicting future diagnoses based on patients’ historical medical records.

Figure 1: Sequential disease predictions

Current coding systems, such as the International Classification of Diseases (ICD)², often do not fully capture the richness of information contained in clinical notes, which can lead to a loss of valuable information for predicting patient trajectories. To overcome this problem, we propose an approach aimed at improving the accuracy of diagnostic code predictions by integrating clinical note embeddings into transformers, which typically rely solely on medical codes. The note embeddings act as an additional discriminating signal: by enriching the input representation, they aim to reduce prediction errors and to recover valuable information often lost in coding systems such as ICD. By incorporating this additional context, our approach helps capture the reasons behind medication prescriptions, performed procedures, and diagnoses.

This article is organized as follows: Section 2 reviews the literature. Section 3 describes our approach, including the process of generating embeddings and their integration into transformers. In Section 4, we present our experimental results. Finally, Section 5 concludes this article and presents future work.

2 State of the Art

Various methods, based either on deep learning or on more traditional approaches, have been explored to predict patient trajectories. Doctor AI (Choi et al., 2016a) is a temporal model based on recurrent neural networks (RNNs), developed for and applied to longitudinal, time-stamped EHR data. Doctor AI predicts a patient's medical codes and estimates the time until the next visit. However, it is limited by a fixed window width, which proves inadequate because a patient's future diagnosis may depend on medical conditions outside this window. LIG-Doctor (Rodrigues-Jr et al., 2021) is an artificial neural network architecture designed to efficiently predict patient trajectories using minimal bidirectional recurrent networks (MGRU). MGRU handles the granularity of ICD-9 codes but suffers from the same limitations as Doctor AI. Choi et al. (2016b) propose RETAIN, an interpretable predictive model for healthcare that uses a reverse time attention mechanism: two RNNs are trained in reverse time order to learn the importance of previous visits, offering improved interpretability. DeepCare (Pham et al., 2017) employs Long Short-Term Memory (LSTM) networks for next-visit diagnosis code prediction, intervention recommendation, and future risk prediction. The Life Model (LM) framework (Manashty and Light, 2019) proposes an efficient representation of temporal data as concise sequences for training RNN-based models and introduces the Mean Tolerance Error (MTE) as both a loss function and a metric. Deep Patient (Miotto et al., 2016) introduced an unsupervised deep learning approach using Stacked Denoising Autoencoders (SDA) to extract meaningful feature representations from EHR data, but it does not consider temporal characteristics, which is limiting because time is inherent to a patient's trajectory.
In parallel, more classical methods such as Markov chains (Severson et al., 2020), Bayesian networks (Longato et al., 2022), and Hawkes processes (Lima, 2023) have been explored, but suffer from computational complexity when faced with massive data.

The introduction of transformers marked a further advance, exemplified by Clinical GAN (Shankar et al., 2023), a Generative Adversarial Network (GAN) method based on the Transformer architecture. In this approach, an encoder-decoder model serves as the generator, while an encoder-only Transformer acts as the critic. The goal is to address exposure bias (Arora et al., 2022), a general issue (i.e., not specific to the Transformer) that arises from the teacher-forcing training strategy. However, GANs are hard to scale and train reliably, suffering from training instability, non-convergence, and mode collapse (Saad et al., 2024).

Despite the development of various approaches for predicting medical codes, it is important to note that most proposed models have been trained on electronic health records (EHRs) containing only structured data on diagnoses and procedures, such as ICD and CCS codes. However, these data omit some essential contextual information, such as medical reasoning and patient-specific nuances, which can be captured through clinical notes.

Moreover, comparing results between different studies poses several challenges:

• Dataset Variation: Studies use different datasets (e.g., MIMIC-III vs. MIMIC-IV), which cover different patient populations and time periods (Johnson et al., 2016; Johnson et al., 2020). Because their distributions differ, one dataset may contain diagnoses that are harder to predict than another, leading to discrepancies in results. Such discrepancies reduce the reliability of cross-study comparisons and may affect the applicability of findings to clinical practice.

• Test Set Size: The size of the test set can significantly affect results. For instance, Shankar et al. (2023) used a test set of only 5% of their dataset (approximately 1,700 visits), which may not adequately represent the diversity and complexity of patient profiles.

• Lack of Standardization: Studies often lack transparency about their exact training datasets and preprocessing steps. In addition, inconsistent implementations of evaluation metrics can lead to discrepancies in reported results.

• Preprocessing Variations: Different preprocessing steps, such as tokenization and data cleaning, can affect model performance and hinder direct comparisons (Edin et al., 2023).

• Code Mapping Inconsistencies: Some approaches predict medical codes directly, while others map them to ICD codes. Variations in mapping schemes, such as whether or not the Clinical Classifications Software Refined (CCSR) is applied, lead to inconsistent final code representations (i.e., different target labels).

These challenges underscore the importance of careful consideration when comparing results across different studies in this field. To enhance comparability and reproducibility in research on patient trajectory prediction, it is crucial to standardize datasets, preprocessing methods, and evaluation metrics.

3 Proposed Methodology

In this section, we describe our approach for predicting patient trajectories, which relies on the MIMIC-IV datasets³,⁴. We detail our methodology, including data preprocessing, model architecture, and the integration of clinical notes.

3.1 Data Preprocessing

Preprocessing of MIMIC-IV data includes several operations:

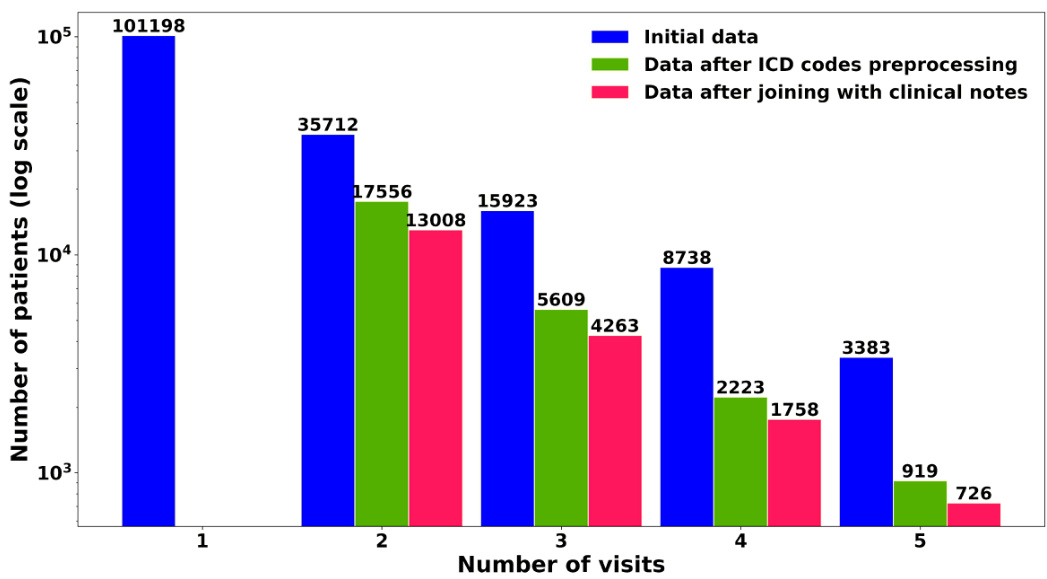

Table 1 presents code statistics before and after the preprocessing steps. Figure 2 further reveals a significant data imbalance: many more patients made only a single visit than multiple visits.
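
Statistics of the kind reported in Table 1 (distinct codes, mean ± standard deviation of codes per visit) and the visit-count distribution of Figure 2 can be computed along the following lines. This is a minimal sketch, not the authors' pipeline: it assumes a hypothetical layout in which each patient maps to a list of visits and each visit is a list of code strings, the toy codes are illustrative only, and the population standard deviation is an assumption since the text does not say which estimator was used.

```python
from collections import Counter
from statistics import mean, pstdev


def code_statistics(visits):
    """Return (number of distinct codes, mean codes per visit, std of codes per visit)."""
    distinct = len({code for visit in visits for code in visit})
    per_visit = [len(visit) for visit in visits]
    # Population standard deviation (assumption; a sample std would use statistics.stdev).
    return distinct, mean(per_visit), pstdev(per_visit)


def visit_count_distribution(patients):
    """Map 'number of visits' -> 'number of patients with that many visits'."""
    return Counter(len(visits) for visits in patients.values())


# Toy data (hypothetical): patient id -> list of visits -> list of codes.
patients = {
    "p1": [["I10", "E11"]],
    "p2": [["I10"], ["I10", "E11", "N18"]],
    "p3": [["J45"]],
}
all_visits = [visit for visits in patients.values() for visit in visits]
print(code_statistics(all_visits))
print(visit_count_distribution(patients))
```

On real data the same two functions would be applied once to the raw codes and once to the codes after preprocessing, giving the "at loading" and "after preprocessing" columns.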

In addition to structured data processing, clinical notes were also preprocessed to ensure consistency. Working with limited textual datasets poses challenges, particularly because subword tokenizers may split near-identical strings differently when their surface form varies slightly. Therefore, following the approach of Alsentzer et al. (2019), we standardized clinical notes by unifying medical abbreviations (e.g., "hr", "hrs", and "hr(s)" to "hours"), removing accents, converting Danish characters (such as "æ" to "ae"), and lowercasing all notes.
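
The normalization steps above can be sketched as a single function. This is a minimal illustration, not the authors' preprocessing code: only the "hours" abbreviations and the "æ" to "ae" mapping come from the text, while the "ø" and "å" mappings and the regex-based whole-word matching are assumptions.

```python
import re
import unicodedata

# Abbreviation variants unified to one form; only these entries are given in
# the text, a real list would be much longer.
ABBREVIATIONS = {"hr(s)": "hours", "hrs": "hours", "hr": "hours"}

# Letters that Unicode decomposition leaves intact. "æ" -> "ae" is from the
# text; the "ø" and "å" entries are illustrative assumptions.
DANISH_LETTERS = {"æ": "ae", "ø": "oe", "å": "aa"}

# Match whole abbreviations only, trying longer variants first so that
# "hr(s)" is not consumed as "hr" followed by "(s)".
_ABBREV_PATTERN = re.compile(
    r"(?<!\w)("
    + "|".join(re.escape(a) for a in sorted(ABBREVIATIONS, key=len, reverse=True))
    + r")(?!\w)"
)


def normalize_note(text: str) -> str:
    """Lowercase, transliterate Danish letters, strip accents, unify abbreviations."""
    text = text.lower()
    for letter, replacement in DANISH_LETTERS.items():
        text = text.replace(letter, replacement)
    # NFKD splits accented characters into base letter + combining mark;
    # dropping the combining marks removes the accents.
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    return _ABBREV_PATTERN.sub(lambda m: ABBREVIATIONS[m.group(1)], text)


print(normalize_note("Seen 2 Hrs ago in Næstved; café every 4 hr(s)."))
```

The Danish mapping runs before accent stripping because "å" would otherwise decompose to a plain "a", while "æ" and "ø" have no decomposition and would survive unchanged.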

3.2 Integration of Clinical Notes

Electronic Health Records (EHRs) typically contain both structured data (e.g., ICD and CCS codes) and unstructured clinical notes. While structured codes provide a standardized representation of diagnoses and procedures, we hypothesize that clinical notes contain additional valuable information that may not be fully captured by these codification systems.

Figure 2: Sample distribution of patients by visit count

Table 1: Code statistics before and after processing (number of distinct codes, and mean ± standard deviation of codes per visit).

| Code type | Statistic | At loading | After preprocessing |
|---|---|---|---|
| Procedure codes | Distinct codes | 8,482 | 470 |
| | Mean ± SD per visit | 3.03 ± 2.81 | 2.99 ± 2.77 |
| Diagnosis codes | Distinct codes | 15,763 | 762 |
| | Mean ± SD per visit | 12.50 ± 7.67 | 13.18 ± 8.58 |
| Medication codes | Distinct codes | 1,609 | 1,609 |
| | Mean ± SD per visit | 24.12 ± 28.19 | 24.12 ± 28.19 |

In light of this, we propose incorporating clinical note embeddings into transformer-based models, which have traditionally focused solely on clinical codes. Our model, Clinical Mosaic, aims to leverage both structured and unstructured data, offering a more comprehensive view of a patient's clinical state and history. This integration enriches the model's input and is intended to improve its ability to understand and predict patient trajectories.

3.2.1 Clinical Mosaic Model

To effectively exploit the information contained in clinical notes, we first need vector representations of them. BERT models (Devlin et al., 2018), and particularly Clinical BERT (Alsentzer et al., 2019), have proven able to capture relevant semantic representations in the medical domain. Clinical BERT is pretrained on MIMIC-III and specifically designed for medical notes. However, it has certain limitations that may affect its performance in our context:

- Limited sequence length: Clinical BERT was primarily pretrained on sequences of 128 tokens. This limitation may cause the model to underperform when generating representations for longer clinical texts, such as comprehensive discharge summaries. Prior work (e.g., Wang et al., 2024) shows that models trained with longer context lengths tend to outperform those trained on shorter sequences, as they can capture more long-range dependencies and contextual information.

- Outdated training data: Clinical BERT was pretrained on MIMIC-III, which is an older version of the MIMIC database. We are currently using MIMIC-IV-NOTES 2.2, which contains more recent and potentially more diverse clinical data. To the best of our knowledge, no publicly available model has been pretrained on this latest version of MIMIC-IV-NOTES.

These limitations may hinder the model's ability to fully capture the richness and complexity of clinical narratives. The mismatch between the pretraining data (MIMIC-III) and the target data (MIMIC-IV-NOTES 2.2) could result in suboptimal performance due to differences in language patterns, terminology, and structure.

To address this, we introduce Clinical Mosaic, an adaptation of the Mosaic BERT architecture (Portes et al., 2024) for clinical text. The model is pretrained with a sequence length of 512 tokens and uses Attention with Linear Biases (ALiBi) to improve extrapolation beyond this length without requiring learned positional embeddings. This allows better generalization to downstream tasks that may require longer contexts. Pretraining is conducted on 331,794 clinical notes (approximately 170 million tokens) from MIMIC-IV-NOTES 2.2, using 7 A40 GPUs with distributed data parallelism (DDP). The training parameters are detailed in Table 2. To facilitate further research and reproducibility, we publicly release the model weights⁵.
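
ALiBi replaces position embeddings with a fixed, head-specific penalty proportional to the distance between query and key positions. The sketch below follows the slope recipe of the original ALiBi paper, including its fallback for head counts that are not powers of two. The symmetric |i - j| distance is an assumption suited to a bidirectional encoder (the original paper targets causal decoders), and nothing here is taken from the Clinical Mosaic code.

```python
import math


def alibi_slopes(num_heads: int) -> list[float]:
    """Per-head slopes: a geometric sequence starting at 2^(-8/n)."""

    def power_of_two_slopes(n: int) -> list[float]:
        start = 2 ** (-8 / n)
        return [start ** (i + 1) for i in range(n)]

    if math.log2(num_heads).is_integer():
        return power_of_two_slopes(num_heads)
    # Other head counts: slopes of the closest lower power of two, topped up
    # with every other slope of the next power of two.
    closest = 2 ** math.floor(math.log2(num_heads))
    extra = alibi_slopes(2 * closest)[0::2][: num_heads - closest]
    return power_of_two_slopes(closest) + extra


def alibi_bias(seq_len: int, num_heads: int) -> list[list[list[float]]]:
    """bias[h][i][j] = -slope_h * |i - j|, added to the attention logits before softmax."""
    return [
        [[-m * abs(i - j) for j in range(seq_len)] for i in range(seq_len)]
        for m in alibi_slopes(num_heads)
    ]


bias = alibi_bias(seq_len=4, num_heads=8)
print(bias[0])  # head 0 (slope 0.5): the penalty grows linearly with token distance
```

Because the bias depends only on token distance, `alibi_bias` can be evaluated for any `seq_len`, including lengths beyond the 512 tokens seen during pretraining; there is no learned position table to run out of.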

Table 2: Training parameters of the Clinical Mosaic model

| Parameter | Value |
|---|---|
| Effective batch size | 224 |
| Training steps | 80,000 |
| Sequence length | 512 tokens |
| Optimizer | AdamW |
| Initial learning rate | 5e-4 |
| Learning rate schedule | Linear warmup for 33,000 steps, then cosine decay for 46,000 steps |
| Final learning rate | 1e-5 |
| Masking probability | 30% |
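
The learning rate schedule in Table 2 can be written as a piecewise function of the optimizer step. This is a hypothetical reconstruction from the table values only: warmup is assumed to start from zero, and since warmup plus decay covers 79,000 of the 80,000 listed steps, the sketch simply holds the final rate for the remaining steps, which is one possible reading.

```python
import math

# Values from Table 2; the exact shape of each phase is an assumption.
PEAK_LR = 5e-4
FINAL_LR = 1e-5
WARMUP_STEPS = 33_000
DECAY_STEPS = 46_000
TOTAL_STEPS = 80_000


def learning_rate(step: int) -> float:
    """Learning rate at a given optimizer step (hypothetical reconstruction)."""
    if step < WARMUP_STEPS:
        # Linear warmup, assumed to start from 0.
        return PEAK_LR * step / WARMUP_STEPS
    # Cosine decay from PEAK_LR to FINAL_LR; after WARMUP_STEPS + DECAY_STEPS
    # the progress is clamped at 1, so the rate stays at FINAL_LR.
    progress = min(step - WARMUP_STEPS, DECAY_STEPS) / DECAY_STEPS
    return FINAL_LR + 0.5 * (PEAK_LR - FINAL_LR) * (1 + math.cos(math.pi * progress))


for step in (0, 16_500, 33_000, 56_000, 79_000, TOTAL_STEPS):
    print(f"step {step:>6}: lr = {learning_rate(step):.2e}")
```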

3.2.2 Evaluation of Clinical Reasoning of Clinical Mosaic

To evaluate the performance of Clinical Mosaic, we fine-tuned the model on the Medical Natural Language Inference (MedNLI) dataset (Romanov and Shivade, 2018). MedNLI is a dataset designed for natural language inference tasks in the clinical domain, derived from MIMIC-III clinical notes. It consists of 14,049 pairs of premises and hypotheses, with the objective of classifying the relationship between each pair as entailment, contradiction, or neutral. We report our results on the same test set used by other models for consistency and comparability.

The MedNLI task evaluates several essential aspects of clinical language understanding, including semantic comprehension of medical terminology, logical reasoning in a clinical context, as well as the ability to discern nuanced relationships between clinical statements. Performance on this dataset serves as an indicator of a model’s ability to understand and reason about clinical language, a crucial foundation for predicting patient trajectories.

Experimental Setup. We fine-tuned Clinical Mosaic using the AdamW optimizer with a linear warmup and decay learning rate schedule. The backbone was initialized from the publicly released Sifal/Clinical Mosaic checkpoint on Hugging Face. The classifier head was trained with a higher learning rate than the backbone, allowing targeted adaptation to the MedNLI classification task. The batch size was set to 64, and gradient clipping was applied to stabilize training. We used early stopping with a patience of 10 epochs based on validation loss. The model achieved its best result at epoch 27, with an average loss of 0.0221 and a validation accuracy of 86.5%.
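
Early stopping with a patience of 10 epochs on validation loss can be sketched as a small helper class. This is an illustrative implementation, not the authors' training script: the class name, the `min_delta` parameter, the 40-epoch budget, and the synthetic loss curve are all assumptions, and the curve is constructed by hand (not real results) so that it improves until epoch 27 and then plateaus.

```python
class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` epochs."""

    def __init__(self, patience: int = 10, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as an improvement
        self.best_loss = float("inf")
        self.best_epoch = None
        self.epochs_without_improvement = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch  # a real loop would also checkpoint the model here
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


# Synthetic validation-loss curve (hypothetical): improves until epoch 27, then flat.
stopper = EarlyStopping(patience=10)
stopped_at = None
for epoch in range(1, 41):  # assumed 40-epoch budget
    val_loss = 1.0 / epoch if epoch <= 27 else 0.5
    if stopper.step(epoch, val_loss):
        stopped_at = epoch
        break
print(stopper.best_epoch, stopped_at)
```

With a patience of 10, a best epoch of 27 implies training halts at epoch 37 at the latest if no further improvement occurs, after which the epoch-27 checkpoint would be the one evaluated.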

From the few experiments we conducted, we found the model to be highly sensitive to the learning rate. This could be an artifact of using a lower Adam $\beta_2$ (0.98) than the commonly used value of 0.999, which may affect the optimizer's adaptation dynamics.

Table 3: Hyperparameter configuration used for fine-tuning Clinical Mosaic on MedNLI.

| Hyperparameter | Value |
|---|---|
| Learning rate (backbone) | 2e-5 |
| Learning rate (classifier) | 2e-4 |
| Weight decay | 1e-6 |
| Optimizer | AdamW |
| Betas | (0.9, 0.98) |
| Epsilon (ε) | 1e-6 |
| Batch size | 64 |
| Max gradient norm | 1.0 |
| Warmup epochs | 5 |
| Total epochs | 40 |
| Early stopping patience | 10 |
| Freeze backbone | False |

Table 4 presents the performance of Clinical Mosaic on the MedNLI task in comparison with other state-of-the-art models, including the original Clinical BERT model (Alsentzer et al., 2019).

Table 4: Accuracy of BERT variants and Clinical Mosaic on the MedNLI test set.

| Model | Accuracy |
|---|---|
| BERT | 77.6% |
| BioBERT | 80.8% |
| Discharge Summary BERT | 80.6% |
| Clinical Discharge BERT | 84.1% |
| Bio+Clinical BERT | 82.7% |
| Clinical Mosaic | 86.5% |

The results show that Clinical Mosaic achieves higher accuracy (86.5%) than existing models, including the original Clinical BERT (84.1%). This improvement suggests that our model optimizations and pretraining approach have strengthened its clinical language comprehension. To ensure reproducibility, our training script is available in the GitHub repository.

3.3 Fusion of Clinical Representations

To evaluate the impact of integrating clinical note embeddings into an encoder-decoder transformer,