DATA EXTRAPOLATION FOR TEXT-TO-IMAGE GENERATION ON SMALL DATASETS

ABSTRACT

Text-to-image generation requires a large amount of training data to synthesize high-quality images. To augment training data, previous methods rely on data interpolation such as cropping, flipping, and mixing up, which fails to introduce new information and yields only marginal improvements. In this paper, we propose a new data augmentation method for text-to-image generation using linear extrapolation. Specifically, we apply linear extrapolation only to text features, and new image data are retrieved from the internet by search engines. To ensure the reliability of new text-image pairs, we design two outlier detectors to purify the retrieved images. Based on extrapolation, we construct training samples dozens of times larger than the original dataset, resulting in a significant improvement in text-to-image performance. Moreover, we propose NULL-guidance to refine score estimation, and apply recurrent affine transformation to fuse text information. Our model achieves FID scores of 7.91, 9.52, and 5.00 on the CUB, Oxford, and COCO datasets, respectively. The code and data will be available on GitHub.

1 INTRODUCTION

Text-to-image generation aims to synthesize images according to textual descriptions. As the bridge between human language and generative models, text-to-image generation (Reed et al., 2016b; Ye et al., 2023; Sauer et al., 2023; Rombach et al., 2022; Ramesh et al., 2022) is applied to more and more domains, such as digital humans (Yin & Li, 2023), image editing (Brack et al., 2024), and computer-aided design (Liu et al., 2023). The diversity of applications leads to a large number of small datasets, where existing data are not sufficient to train high-quality generative models, and large generative models cannot overcome the long-tail effect of diverse applications.

To augment training data, existing methods typically rely on data interpolation techniques such as cropping, flipping, and mixing up images (Zhang et al., 2017). While these methods leverage human knowledge to create new perspectives on existing images or features, they do not introduce new information and yield only marginal improvements. Additionally, retrieval-based models (Chen et al., 2022; Sheynin et al., 2022; Li et al., 2022) employ retrieval methods to gather relevant training data from external databases like WikiImages. However, these external databases often contain very few images for specific entries, and their description styles differ significantly from those in text-to-image datasets. Furthermore, VQ-Diffusion (Gu et al., 2022) pre-trains its text-to-image model on the Conceptual Caption dataset with 15 million images, but the resulting improvements are not obvious.

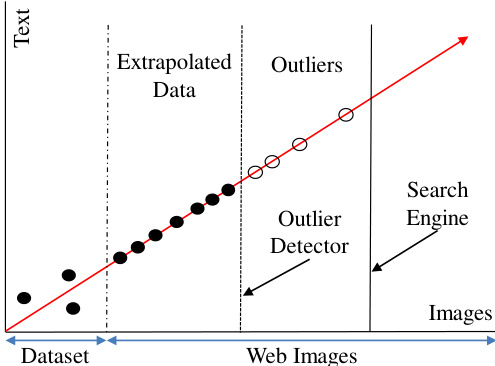

Figure 1: An illustration of linear data extrapolation. We use a search engine and outlier detectors to ensure image similarity. Extrapolation produces many more text-image pairs than the original dataset contains.

In this paper, we explore linear data extrapolation to augment training data. Linear extrapolation can be risky, as similar text-image pairs may not be nearby in Euclidean space. For information reliability, as depicted in Figure 1, we apply linear extrapolation only to text data, and new image data are retrieved from the internet by search engines. Outlier detectors are then designed to purify the retrieved web images. In this way, the reliability of new text-image pairs is guaranteed by search precision and outlier detection.

To detect outliers among web images, we divide outliers into irrelevant and similar ones. For detecting irrelevant outliers, the K-means algorithm (Lloyd, 1982) is used to cluster noisy web images into similar images and outliers. In the image feature space generated by a CLIP encoder (Radford et al., 2021), similar images will be close to dataset images, while outliers will be far away. Based on this observation, we remove images that differ significantly from dataset images. For detecting similar outliers, each web image is assigned a label by a fine-grained classifier trained on the original dataset. If the label does not match the search keyword, the image is considered an outlier and removed. For every purified web image, we extrapolate a new text description according to the local manifold of dataset images. Based on extrapolation, we construct training samples dozens of times larger than the original dataset.

Moreover, we propose NULL-condition guidance to refine score estimation for text-to-image generation. Classifier-free guidance (Ho & Salimans, 2022) uses a dummy label to refine label-conditioned image synthesis. Similarly, in text-to-image generation, such a dummy label can be replaced by a prompt with no new physical meaning. For example, “a picture of bird” provides no information for the CUB dataset, and “a picture of flower” provides no information for the Oxford dataset. In addition, we apply recurrent affine transformation (RAT) in the diffusion model to handle complex textual information.

The contributions of this paper are summarized as follows:

2 RELATED WORK

GAN-based text-to-image models. Text-to-image synthesis is a key task within conditional image synthesis (Feng et al., 2022; Tan et al., 2022; Peng et al., 2021; Hou et al., 2022). The pioneering work of Reed et al. (2016b) first tackled this task using conditional GANs (Mirza & Osindero, 2014). To better integrate text information into the synthesis process, DF-GAN (Tao et al., 2022) introduced a deep fusion method featuring multiple affine layers within a single block. Unlike previous approaches, DF-GAN eliminated the normalization operation without sacrificing performance, thus reducing computational demands and alleviating limitations associated with large batch sizes. Building on DF-GAN, RAT-GAN employed a recurrent neural network to progressively incorporate text information into the synthesized images. GALIP (Tao et al., 2023) and StyleGAN-T (Sauer et al., 2023) explore the potential of combining GAN models with transformers for large-scale text-to-image synthesis. However, the aforementioned GAN-based models often struggle to produce high-quality images.

Diffusion-based text-to-image models. Recently, diffusion models (Ho et al., 2020; Song & Ermon, 2019; Song et al., 2021; Hyvarinen, 2005) have demonstrated impressive generation performance across various tasks. Building on this success, Imagen (Saharia et al., 2022) and DALL·E 2 (Ramesh et al., 2022) can synthesize images that are sufficiently realistic for real-world applications. To alleviate computational burdens, they first generate $64\times64$ images and then upsample them to high resolution using another diffusion model. Additionally, the Latent Diffusion Model (Rombach et al., 2022) encodes high-resolution images into low-resolution latent codes, avoiding the exponential computation costs associated with increased resolution. DiT (Peebles & Xie, 2023) integrated latent diffusion models and transformers to enhance performance on large datasets. VQ-Diffusion (Gu et al., 2022) pre-trains its text-to-image model on the Conceptual Caption dataset, which contains 15 million text-image pairs, and then fine-tunes it on smaller datasets like CUB, Oxford, and COCO. Hence, VQ-Diffusion is the work most similar to ours, but we use significantly less pre-training data while achieving better results.

Data augmentation methods. Data augmentation increases training data to improve the performance of deep learning applications, from image classification (Krizhevsky et al., 2012) to speech recognition (Graves et al., 2013; Amodei et al., 2016). Common techniques include rotation, translation, cropping, resizing, flipping (LeCun et al., 2015; Vedaldi & Zisserman, 2016), and random erasing (Zhong et al., 2020) to promote visually plausible invariances. Similarly, label smoothing is widely used to boost the robustness and accuracy of trained models (Miller et al., 2019; Lukasik et al., 2020). Mixup (Zhang et al., 2017) involves training a neural network on convex combinations of examples and their labels. However, interpolated samples fail to introduce new information and therefore do not effectively address data scarcity. Hence, retrieval-based methods such as Re-Imagen (Chen et al., 2022; Sheynin et al., 2022; Li et al., 2022) retrieve relevant data from external databases to augment training data.

3 LINEAR EXTRAPOLATION FOR TEXT-TO-IMAGE GENERATION

In this section, we begin by collecting similar images from the internet. Next, we explain how to extrapolate text descriptions. Following that, we use the extrapolated text-image pairs to train a diffusion model with RAT blocks. Finally, we sample images using NULL-condition guidance.

3.1 COLLECTING SIMILAR AND CLEAN IMAGES

Linear extrapolation requires the images to be sufficiently close in semantic space. Hence, we automatically retrieve similar images by searching for their classification labels. However, search engines return both similar images and outliers. To eliminate unwanted outliers, we employ a cluster detector for irrelevant outliers and a classification detector for similar outliers. For the cluster detector, each image is encoded into a vector using the CLIP image encoder. Images retrieved with the same keyword are then clustered using K-means. If the distance from a cluster center to the dataset images exceeds a threshold, that cluster is excluded. For the classification detector, we train a fine-grained classification model on the original dataset, which assigns a label to each web image. If the label does not match the search keyword, the corresponding image is excluded.
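
The two detectors can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: it assumes CLIP features are already available as arrays, and the function names (`kmeans`, `cluster_filter`, `label_filter`), the number of clusters, the threshold value, and the choice of "distance to the nearest dataset feature" as the cluster criterion are all hypothetical.

```python
import numpy as np

def _assign(x, centers):
    """Index of the nearest center for every row of x."""
    dists = np.linalg.norm(x[:, None, :] - centers[None, :, :], axis=2)
    return dists.argmin(axis=1)

def kmeans(x, k, iters=20, seed=0):
    """Plain Lloyd's algorithm on the rows of x; returns (centers, labels)."""
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), size=k, replace=False)].astype(float)
    for _ in range(iters):
        labels = _assign(x, centers)
        for j in range(k):
            members = x[labels == j]
            if len(members) > 0:  # keep the old center if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers, _assign(x, centers)

def cluster_filter(web_feats, dataset_feats, k=2, threshold=1.0):
    """Boolean mask over web images: True means the image's cluster is kept."""
    centers, labels = kmeans(web_feats, k)
    # Assumed criterion: distance from a cluster center to its nearest dataset feature.
    center_dist = np.linalg.norm(
        centers[:, None, :] - dataset_feats[None, :, :], axis=2
    ).min(axis=1)
    return (center_dist <= threshold)[labels]

def label_filter(predicted_labels, keyword_label):
    """Keep only images whose predicted class equals the search keyword's class."""
    return np.asarray(predicted_labels) == keyword_label
```

In practice the two masks would be combined with a logical AND, so that an image survives only if it passes both detectors.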

3.2 LINEAR EXTRAPOLATION ON TEXT FEATURE SPACE

Here we describe how to extrapolate text descriptions for web images. Assuming that web images are sufficiently close to dataset images in semantic space, each web image can be represented by its $k$ nearest dataset images:

$$
\underset{\mathbf{w}}{\arg\min}\ \left\|\mathbf{f}-\mathbf{F}\mathbf{w}\right\|^{2},
$$

where $\mathbf{f}$ is the image feature of the web image, $\mathbf{w}=[w_{1},w_{2},...,w_{k}]$ are the reconstruction weights, and $\mathbf{F}=[\mathbf{f}_{1},\mathbf{f}_{2},...,\mathbf{f}_{k}]$ are the image features of the dataset images produced by the CLIP image encoder. Since the above equation is an overdetermined problem, we solve for the coefficients using least squares:

$$
\mathbf{w}=(\mathbf{F}^{T}\mathbf{F})^{-1}\mathbf{F}^{T}\mathbf{f}.
$$

We assume that the image feature space and the text feature space share the same local manifold. Hence, the image reconstruction coefficients $\mathbf{w}$ can be used to compute the text feature of a web image:

$$
\mathbf{s}=\mathbf{S}\mathbf{w},
$$

where $\mathbf{S}=[\mathbf{s}_{1},\mathbf{s}_{2},...,\mathbf{s}_{k}]$ contains the fake sentence features for the $k$ nearest dataset images, and $\mathbf{s}$ is the sentence feature for a web image.
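
The three equations of this subsection can be put together in a short NumPy sketch. It is a minimal illustration under stated assumptions: features are stored one per row, the function names `nearest_k` and `extrapolate_text_feature` are hypothetical, and `numpy.linalg.lstsq` is used in place of the explicit normal equation (the two agree when $\mathbf{F}$ has full column rank).

```python
import numpy as np

def nearest_k(f, dataset_image_feats, k):
    """Indices of the k dataset images closest to the web image feature f."""
    dists = np.linalg.norm(dataset_image_feats - f, axis=1)
    return np.argsort(dists)[:k]

def extrapolate_text_feature(f, dataset_image_feats, dataset_text_feats, k):
    """Return (s, w): the extrapolated sentence feature and the weights."""
    idx = nearest_k(f, dataset_image_feats, k)
    F = dataset_image_feats[idx].T  # (d_image, k), neighbors as columns
    S = dataset_text_feats[idx].T   # (d_text, k), matching sentence features
    # Least-squares solution of min_w ||f - F w||^2.
    w, *_ = np.linalg.lstsq(F, f, rcond=None)
    # Reuse the image-space weights in the text feature space: s = S w.
    return S @ w, w
```

Note that the weights are not constrained to be non-negative or to sum to one, so the resulting feature may lie outside the convex hull of its neighbors, which is what distinguishes extrapolation from interpolation.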

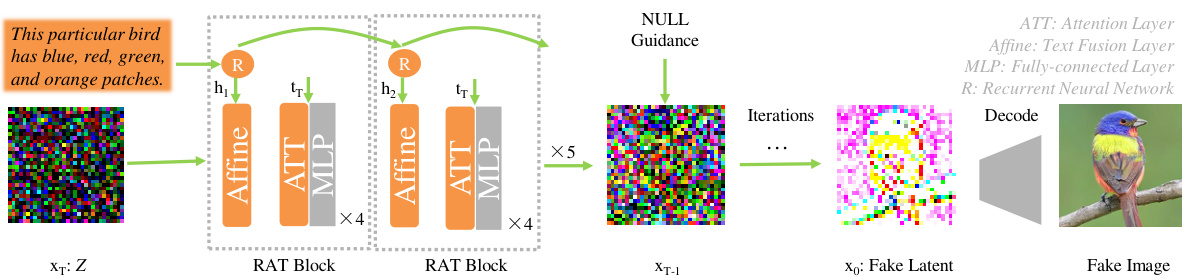

Figure 2: Latent diffusion model with recurrent affine transformation and NULL-guidance for text-to-image synthesis. The RAT blocks are connected by a recurrent neural network to ensure the global assignment of text information.

3.3 RECURRENT DIFFUSION TRANSFORMER ON LATENT SPACE

The training objective of the diffusion model is the squared error loss proposed by DDPM (Ho et al., 2020):

$$
L(\theta)=\left\|\epsilon-\epsilon_{\theta}\left(\sqrt{\bar{\alpha}_{t}}\mathbf{x}_{0}+\sqrt{1-\bar{\alpha}_{t}}\epsilon\right)\right\|^{2},
$$

where $\epsilon\sim\mathcal{N}(0,\mathbf{I})$ is the score noise injected at every diffusion step, and $\epsilon_{\theta}$ is the noise predicted by a diffusion network consisting of 12 transformer layers. $\alpha_{t}$ and $\bar{\alpha}_{t}$ are hyper-parameters controlling the speed of diffusion. The work on score mismatching (Ye & Liu, 2024) shows that predicting the score noise leads to an unbiased estimation.
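
As a rough illustration of this objective, the sketch below draws a noise sample, forms the noised input $\sqrt{\bar{\alpha}_{t}}\mathbf{x}_{0}+\sqrt{1-\bar{\alpha}_{t}}\epsilon$, and evaluates the squared error. The function name `ddpm_loss` and the callable `eps_model` (a stand-in for the diffusion network) are assumptions for illustration; text and time conditioning are omitted.

```python
import numpy as np

def ddpm_loss(eps_model, x0, alpha_bar_t, rng):
    """Squared error between the injected noise and the predicted noise."""
    eps = rng.standard_normal(x0.shape)                 # eps ~ N(0, I)
    x_t = np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps
    return float(np.sum((eps - eps_model(x_t)) ** 2))
```

A predictor that recovers the injected noise exactly drives this loss to zero, while a predictor that always outputs zeros pays the squared norm of the noise.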

Network architecture. As depicted in Fig. 8, the diffusion network consists of transformer blocks. Recurrent affine transformation is used to enhance the consistency between transformer blocks. To avoid directly mixing the text embedding and the time embedding, we stack four transformer blocks as a RAT block, and the text embedding is fed into the top of each RAT block. Each RAT block applies a channel-wise shifting operation on an image feature map:

$$
c^{\prime}=c+\beta,
$$

where $c$ is the image feature vector and $\beta$ is the shifting parameter predicted by a one-hidden-layer multi-layer perceptron (MLP) conditioned on the recurrent neural network hidden state $h_{t}$.

In each transformer block, we inject the time embedding through a channel-wise scaling operation and a channel-wise shifting operation on $c$. Finally, the image feature $c$ is multiplied by a scaling parameter $\alpha$. This process can be formally expressed as:

$$
c^{\prime}=\mathrm{Transformer}\left((1+\gamma)\cdot c+\beta\right)\cdot\alpha,
$$

where $\alpha,\gamma,\beta$ are parameters predicted by two one-hidden-layer MLPs conditioned on time embedding.

When applied to an image feature map composed of $w\times h$ feature vectors, the same affine transformation is repeated for every feature vector.
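
The channel-wise operations above map naturally onto array broadcasting. The following sketch is only meant to show the shapes involved: the feature map is assumed to be stored as `(h, w, channels)`, the modulation parameters are given directly rather than predicted by MLPs, and the `block` argument is a placeholder (identity by default) standing in for the transformer block. The names `rat_shift` and `time_modulated_block` are hypothetical.

```python
import numpy as np

def rat_shift(c, beta):
    """Text injection of a RAT block: c' = c + beta at every spatial position."""
    return c + beta  # beta has shape (channels,) and broadcasts over h and w

def time_modulated_block(c, gamma, beta, alpha, block=lambda x: x):
    """Time injection: c' = block((1 + gamma) * c + beta) * alpha."""
    return block((1.0 + gamma) * c + beta) * alpha
```

Because the parameters have one entry per channel, the same affine transformation is applied to each of the $w\times h$ feature vectors without an explicit loop.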

Early stop of fine-tuning. Extrapolation may produce training data very close to the original dataset, which makes fine-tuning saturate very quickly. Excessive fine-tuning epochs cause the model to forget knowledge gained from the extrapolated data and to overfit the small dataset. As a result, the training loss of the diffusion model becomes unreliable as a stopping criterion. Therefore, fine-tuning should be stopped when the FID score begins to increase.
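
One way to express this stopping rule is the loop sketched below. It is an assumption-laden illustration: `train_one_epoch` and `evaluate_fid` are hypothetical callables supplied by the user, and the `patience` parameter (how many non-improving evaluations to tolerate) is not part of the original description.

```python
def fine_tune_with_early_stop(train_one_epoch, evaluate_fid, max_epochs, patience=1):
    """Fine-tune until the FID stops improving; returns (best_epoch, best_fid)."""
    best_fid = float("inf")
    best_epoch = -1
    bad_epochs = 0
    for epoch in range(max_epochs):
        train_one_epoch(epoch)
        fid = evaluate_fid(epoch)
        if fid < best_fid:
            # FID improved: remember this epoch (a checkpoint would be saved here).
            best_fid, best_epoch, bad_epochs = fid, epoch, 0
        else:
            # FID started to increase: stop once patience is exhausted.
            bad_epochs += 1
            if bad_epochs >= patience:
                break
    return best_epoch, best_fid
```

The checkpoint from `best_epoch` would then be the one used for sampling.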

3.4 SYNTHESIZING FAKE IMAGES

Finally, we describe how to synthesize images from scratch. As depicted in Figure 8, the synthesis begins by sampling a random vector $z$ from a standard Gaussian distribution. This noise is then gradually denoised into an image latent code by the diffusion model. The reverse diffusion iterations are formulated as:

$$
\mathbf{x}_{t-1}=\frac{1}{\sqrt{\alpha_{t}}}\left(\mathbf{x}_{t}-\frac{1-\alpha_{t}}{\sqrt{1-\bar{\alpha}_{t}}}\epsilon_{\theta}\left(\mathbf{x}_{t},t\right)\right)+\sigma_{t}\mathbf{z},
$$

where $\alpha_{t}$, $\bar{\alpha}_{t}$, and $\sigma_{t}$ are diffusion hyper-parameters, and $\mathbf{z}$ is a random vector sampled from a standard Gaussian distribution. Finally, we decode image latent codes into images with the pre-trained decoder from Stable Diffusion (Rombach et al., 2022).
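
A minimal sketch of one reverse iteration and the surrounding sampling loop is given below. The names `reverse_step` and `sample`, the array-based schedules, and the zero-based time index are assumptions for illustration. Following the usual DDPM convention, no noise is added at the last step, which the text above does not state explicitly. Decoding the latent code with the Stable Diffusion decoder is outside the scope of the sketch.

```python
import numpy as np

def reverse_step(x_t, t, eps_model, alphas, alpha_bars, sigmas, rng):
    """One reverse diffusion iteration x_t -> x_{t-1}."""
    eps = eps_model(x_t, t)
    mean = (x_t - (1.0 - alphas[t]) / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
    # Assumed DDPM convention: the final step (t == 0) is deterministic.
    z = rng.standard_normal(x_t.shape) if t > 0 else np.zeros_like(x_t)
    return mean + sigmas[t] * z

def sample(shape, eps_model, alphas, alpha_bars, sigmas, rng):
    """Start from Gaussian noise and denoise down to a latent code."""
    x = rng.standard_normal(shape)
    for t in reversed(range(len(alphas))):
        x = reverse_step(x, t, eps_model, alphas, alpha_bars, sigmas, rng)
    return x
```

Here `alpha_bars` is the cumulative product of `alphas`, so the two schedules must be built together.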

NULL guidance. A sentence with no new information can noticeably boost text-to-image performance. This guidance is inspired by classifier-free diffusion guidance (Ho & Salimans, 2022), which uses a dummy class label to boost label-to-image performance. Similarly, we design CLIP prompts without obvious visual meaning and embed them into the diffusion model. Specifically, we denote the original score estimation based on the text description as $\epsilon_{\mathrm{text}}$ and the score estimation based on the null description as $\epsilon_{\mathrm{null}}$. We then mix these two estimations to obtain a more accurate estimation $\epsilon^{\prime}$:

$$
\epsilon^{\prime}=\left(\epsilon_{\mathrm{text}}-\epsilon_{\mathrm{null}}\right)\times\eta+\epsilon_{\mathrm{null}},
$$

where $\eta$ is the guidance ratio controlling the balance between the two estimations. When $\eta=1$, NULL guidance falls back to the ordinary score estimation $\epsilon_{\mathrm{text}}$. Usually, a NULL prompt with the average meaning of the dataset achieves the best performance.
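
The mixing rule is a one-line computation; the sketch below makes the limiting cases easy to check. The function name `null_guidance` is hypothetical, and the two inputs are assumed to be the network's noise predictions for the text prompt and for the NULL prompt at the same step.

```python
import numpy as np

def null_guidance(eps_text, eps_null, eta):
    """Mix text-conditioned and NULL-conditioned noise estimates."""
    return (eps_text - eps_null) * eta + eps_null

eps_text = np.array([1.0, 2.0])
eps_null = np.array([0.5, 1.0])
guided = null_guidance(eps_text, eps_null, eta=2.0)
```

With $\eta=0$ the result is the NULL estimate, with $\eta=1$ it is the text estimate, and values above 1 push the estimate further along the direction from the NULL estimate to the text estimate.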

4 EXPERIMENTS

Input text descriptions in the first row of Figure 3, from left to right: (1) A small bird with blue-grey wings, rust colored sides and white collar. (2) This bird is white from crown to belly, with gray wingbars and retrices. (3) This bird is mainly grey, it has brown on the feathers and back of the tail. (4) A bird with blue head, white belly and breast, and the bill is pointed. (5) This flower has a lot of dark red petals and no visible outer stigma or stamen. (6) This flower has petals that are purple and bunched together. (7) This flower has large smooth white petals that turn yellow toward the center. (8) A pale purple five petaled flower with yellow stamen and green stigma.

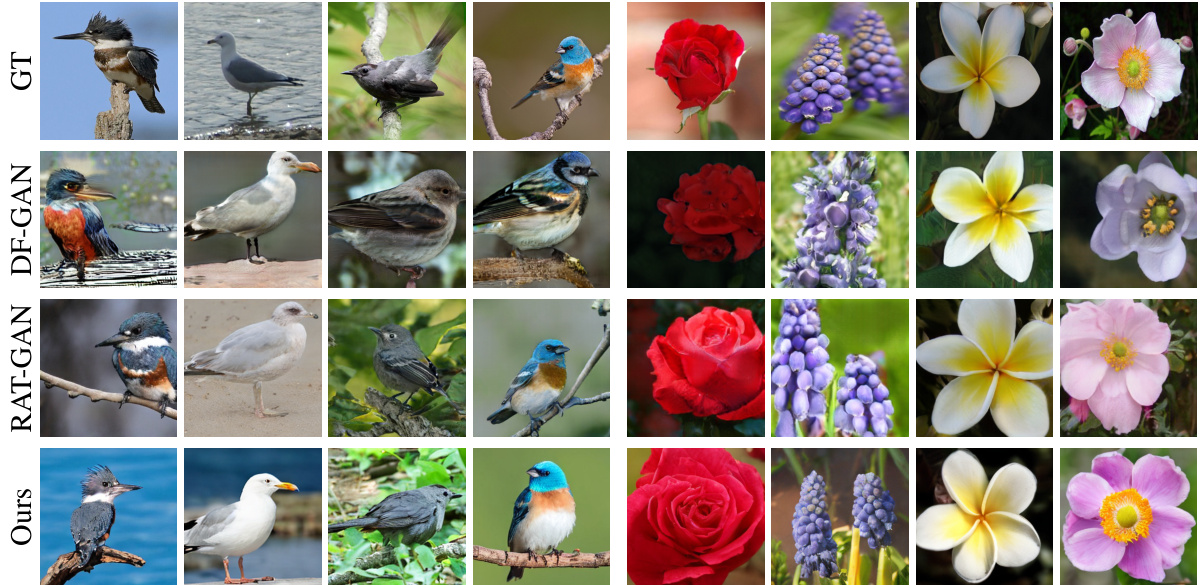

Figure 3: Qualitative comparison on the CUB and Oxford datasets. The input text descriptions are given in the first row, and the corresponding generated images from different methods are shown in the same column. Best viewed in color and zoomed in.

Datasets. We report results on the popular CUB, Oxford-102, and MS COCO datasets. The CUB dataset includes 200 categories with a total of 11,788 bird images, while the Oxford-102 dataset contains 102 categories with 8,189 flower images. Unlike the approaches taken in Reed et al. (2016a;b), we utilize the entire dataset for both training and testing. Each image is paired with 10 captions. To expand the original datasets, we collect 300,000 bird images and 130,000 flower images. The MS COCO dataset comprises 123,287 images, each with 5 sentence annotations. We use the official training split of COCO for training and the official validation split for testing. During mini-batch selection, a random image view (e.g., crop or flip) is chosen for one of the captions.

Web images. For the CUB and Oxford datasets, we collected 603,484 bird images and 331,602 flower images using search engines, utilizing fine-grained classification labels as search keywords. After removing detected outliers, we retained 399,246 bird images and 132,648 flower images. In the case of the COCO dataset, we gathered 770,059 daily images without applying any outlier detection, as the precise descriptions in COCO allow search engines to retrieve clean images effectively.

Training details. The text encoder is a pre-trained CLIP text encoder with an output of size 512. The latent encoder and decoder are pre-trained by Stable Diffusion (Rombach et al., 2022). We tried to pre-train new latent encoders on extrapolated data, but the results were not satisfactory. The Adam optimizer is used to optimize the network with a base learning rate of 0.0001 and a weight decay of 0. As in RAT-GAN, we use a mini-batch size of 24 to train the model. Most training and testing of our model is conducted on two RTX 3090 Ti GPUs, and the detailed training consumption is listed in Table 3.

Evaluation metrics. We adopt the widely used Inception Score (IS) (Salimans et al., 2016) and Fréchet Inception Distance (FID) (Heusel et al., 2017) to quantify the performance. On the MS COCO dataset, an Inception-v3 network pre-trained on the ImageNet dataset is used to compute the KL-divergence between the conditional class distribution (generated images) and the marginal class distribution (real images). A large IS indicates that the generated images are of high quality. The FID computes the Fréchet distance between the image feature distributions of the generated and real-world images. The image features are extracted by the same pre-trained Inception-v3 network. A lower FID implies that the generated images are closer to the real images. We only compare the FID on the COCO dataset. On the CUB and Oxford-102 datasets, pre-trained Inception models are fine-tuned on two fine-grained classification tasks (Zhang et al., 2019).
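
For reference, the Inception Score can be computed from class probabilities as sketched below. This is a hedged illustration that follows the standard formulation of Salimans et al. (2016), in which the marginal distribution is the average of the conditional distributions over the evaluated images. The function name `inception_score` and the small constant `eps` are assumptions, and the class probabilities are assumed to come from an Inception classifier that is not shown here.

```python
import numpy as np

def inception_score(probs, eps=1e-12):
    """probs: (n_images, n_classes) array of predicted class probabilities."""
    probs = np.asarray(probs, dtype=float)
    marginal = probs.mean(axis=0, keepdims=True)
    # KL divergence between each conditional distribution and the marginal.
    kl = np.sum(probs * (np.log(probs + eps) - np.log(marginal + eps)), axis=1)
    return float(np.exp(kl.mean()))
```

Confident and diverse predictions give a score close to the number of classes, while identical predictions for every image give a score of 1.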

There are two conflicts in evaluation methods in previous works. First, some studies report Inception Score (IS) using the ImageNet Inception model, while others use a fine-tuned version. Second, some works evaluate using the entire training data, whereas others use only the test split. To address these