SPIdepth: Strengthened Pose Information for Self-supervised Monocular Depth Estimation

SPIdepth: 增强位姿信息的自监督单目深度估计

Abstract

摘要

Self-supervised monocular depth estimation has garnered considerable attention for its applications in autonomous driving and robotics. While recent methods have made strides in leveraging techniques like the Self Query Layer (SQL) to infer depth from motion, they often overlook the potential of strengthening pose information. In this paper, we introduce SPIdepth, a novel approach that prioritizes enhancing the pose network for improved depth estimation. Building upon the foundation laid by SQL, SPIdepth emphasizes the importance of pose information in capturing fine-grained scene structures. By enhancing the pose network’s capabilities, SPIdepth achieves remarkable advancements in scene understanding and depth estimation. Experimental results on benchmark datasets such as KITTI, Cityscapes, and Make3D showcase SPIdepth’s state-of-the-art performance, surpassing previous methods by significant margins. Specifically, SPIdepth tops the self-supervised KITTI benchmark. Additionally, SPIdepth achieves the lowest AbsRel (0.029), SqRel (0.069), and RMSE (1.394) on KITTI, establishing new state-of-the-art results. On Cityscapes, SPIdepth improves over SQLdepth by 21.7% in AbsRel, 36.8% in SqRel, and 16.5% in RMSE, even without using motion masks. On Make3D, SPIdepth outperforms all other models in the zero-shot setting. Remarkably, SPIdepth achieves these results using only a single image for inference, surpassing even methods that use video sequences for inference, which demonstrates its efficacy and efficiency. Our approach represents a significant leap forward in self-supervised monocular depth estimation, underscoring the importance of strengthening pose information for advancing scene understanding in real-world applications. The code and pre-trained models are publicly available at https://github.com/Lavreniuk/SPIdepth.

自监督单目深度估计因其在自动驾驶和机器人领域的应用而备受关注。尽管现有方法通过自查询层(SQL)等技术从运动中推断深度取得了进展,但往往忽视了强化位姿信息的潜力。本文提出SPIdepth,这是一种通过优先增强位姿网络来改进深度估计的新方法。基于SQL框架,SPIdepth强调了位姿信息在捕捉细粒度场景结构中的重要性。实验结果表明,在KITTI、Cityscapes和Make3D等基准数据集上,SPIdepth以显著优势超越了现有方法。具体而言,SPIdepth在自监督KITTI基准测试中排名第一,并创下最低的AbsRel(0.029)、SqRel(0.069)和RMSE(1.394)记录。在Cityscapes数据集上,即使不使用运动掩码,SPIdepth相较SQLdepth在AbsRel指标提升21.7%,SqRel提升36.8%,RMSE提升16.5%。在Make3D的零样本测试中,SPIdepth超越了所有对比模型。值得注意的是,SPIdepth仅需单张图像即可实现这些成果,其性能甚至优于依赖视频序列进行推理的方法。代码和预训练模型已开源:https://github.com/Lavreniuk/SPIdepth。

1. Introduction

1. 引言

Monocular depth estimation is a critical component in the field of computer vision, with far-reaching applications in autonomous driving and robotics [14, 8, 1]. The evolution of this field has been marked by a transition towards self-supervised methods, which aim to predict depth from a single RGB image without extensive labeled data. These methods offer a promising alternative to traditional supervised approaches, which often require costly and time-consuming data collection with sensors such as LiDAR [45, 57, 30, 48, 11].

单目深度估计是计算机视觉领域的关键组成部分,在自动驾驶和机器人技术中具有深远应用 [14, 8, 1]。该领域的发展呈现出向自监督方法的转变趋势,这类方法旨在无需大量标注数据的情况下从单张RGB图像预测深度。相较于传统监督方法(通常需要LiDAR等传感器进行昂贵耗时的数据采集 [45, 57, 30, 48, 11]),这些方法提供了极具前景的替代方案。

Recent advancements have seen the emergence of novel techniques that use motion cues and the Self Query Layer (SQL) to infer depth information [45]. Despite their contributions, these methods have not fully capitalized on the potential of pose estimation. Addressing this gap, we present SPIdepth, an approach that prioritizes refining the pose network to enhance depth estimation accuracy. By focusing on the pose network, SPIdepth captures the intricate details of scene structures more effectively, leading to significant improvements in depth prediction.

近期研究进展中,涌现出利用运动线索和自查询层(SQL)推断深度信息的新技术[45]。尽管这些方法有所贡献,但尚未充分挖掘姿态估计的潜力。为此,我们提出SPIdepth方法,通过优先优化姿态网络来提升深度估计精度。该方法聚焦姿态网络,能更有效地捕捉场景结构的复杂细节,从而显著改进深度预测效果。

SPIdepth extends the capabilities of SQL by strengthening pose information, which is crucial for interpreting complex spatial relationships within a scene. Our extensive evaluations on the KITTI, Cityscapes, and Make3D benchmarks demonstrate SPIdepth’s superior performance, surpassing previous self-supervised methods in both accuracy and generalization. Remarkably, SPIdepth achieves these results using only a single image for inference, outperforming methods that rely on video sequences. Specifically, SPIdepth tops the self-supervised KITTI benchmark and achieves the lowest AbsRel (0.029), SqRel (0.069), and RMSE (1.394) on KITTI, establishing new state-of-the-art results. On Cityscapes, SPIdepth improves over SQLdepth by 21.7% in AbsRel, 36.8% in SqRel, and 16.5% in RMSE, even without using motion masks. On Make3D, SPIdepth outperforms all other models in the zero-shot setting.

SPIdepth通过增强的鲁棒位姿信息扩展了SQL的功能,这对于解析场景内复杂的空间关系至关重要。我们在KITTI、Cityscapes、Make3D等基准数据集上的大量评估表明,SPIdepth在精度和泛化能力上均超越了以往的自监督方法,仅需单张图像进行推理即可超越依赖视频序列的方法。具体而言,SPIdepth在自监督KITTI基准测试中排名第一,并以AbsRel(0.029)、SqRel(0.069)和RMSE(1.394)创下KITTI最低误差记录,确立了新的技术标杆。在Cityscapes数据集上,即使不使用运动掩码,SPIdepth相较SQLdepth在AbsRel(提升21.7%)、SqRel(提升36.8%)和RMSE(提升16.5%)指标上均取得显著改进。在Make3D的零样本测试中,SPIdepth性能优于所有其他模型。

The contributions of SPIdepth are significant, establishing a new state of the art in depth estimation. It underscores the importance of enhancing pose estimation within self-supervised learning. Our findings suggest that incorporating strong pose information is essential for advancing autonomous technologies and improving scene understanding.

SPIdepth的贡献显著,在深度估计领域确立了新的技术标杆。该研究强调了在自监督学习中提升姿态估计的重要性。我们的研究结果表明,整合强姿态信息对于推动自主技术进步和优化场景理解至关重要。

Our main contributions are as follows:

我们的主要贡献如下:

• Introducing SPIdepth, a novel self-supervised approach that significantly improves monocular depth estimation by focusing on the refinement of the pose network. This enhancement allows for more precise capture of scene structures, leading to substantial advancements in depth prediction accuracy.

• 介绍SPIdepth,这是一种新颖的自监督方法,通过专注于位姿网络(pose network)的优化,显著改进了单目深度估计。这一增强能够更精确地捕捉场景结构,从而大幅提升深度预测的准确性。

• Our self-supervised method sets a new benchmark in depth estimation, outperforming all existing methods on standard datasets like KITTI and Cityscapes using only a single image for inference, without the need for video sequences. Additionally, our approach achieves significant improvements in zero-shot performance on the Make3D dataset.

• 我们的自监督方法为深度估计设立了新标杆,仅需单张图像进行推理(无需视频序列),就在KITTI和Cityscapes等标准数据集上超越了所有现有方法。此外,该方法在Make3D数据集上实现了零样本性能的显著提升。

2. Related works

2. 相关工作

2.1. Supervised Depth Estimation

2.1. 监督式深度估计

The field of depth estimation has been significantly advanced by learning-based methods, notably Eigen et al. [10], who used a multi-scale convolutional neural network together with a scale-invariant loss function. Subsequent methods have typically fallen into two categories: regression-based approaches [10, 20, 58] that predict continuous depth values, and classification-based approaches [12, 7] that predict discrete depth levels.

深度估计领域因引入基于学习的方法而取得显著进展,其中Eigen等人[10]率先使用多尺度卷积神经网络和尺度不变损失函数。后续方法通常分为两类:预测连续深度值的回归方法[10, 20, 58],以及预测离散深度级别的分类方法[12, 7]。

To leverage the benefits of both, recent works [3, 21] have proposed a combined classification-regression approach: a set of depth bins is regressed, each pixel is classified over these bins, and the final depth is a weighted combination of the bin centers.

为了结合两种方法的优势,近期研究[3, 21]提出了一种分类-回归联合方法。该方法通过回归一组深度区间,然后将每个像素分类到这些区间中,最终深度为区间中心的加权组合。

2.2. Diffusion Models in Vision Tasks

2.2. 视觉任务中的扩散模型

Diffusion models, which are trained to reverse a forward noising process, have recently been applied to vision tasks, including depth estimation. These models generate realistic images from noise, guided by text prompts that are encoded into embeddings and influence the reverse diffusion process through cross-attention layers [36, 18, 29, 34, 37].

扩散模型 (Diffusion models) 通过训练逆转前向加噪过程,近期被应用于视觉任务(包括深度估计)。这类模型在文本提示的引导下从噪声生成逼真图像,其中文本提示被编码为嵌入向量,并通过交叉注意力层影响逆向扩散过程 [36, 18, 29, 34, 37]。

The VPD approach [59] encodes images into latent representations and processes them through the Stable Diffusion model [36]. Text prompts guide the reverse diffusion process through cross-attention, influencing the latent representations and feature maps. This method showed that aligning text prompts with images significantly improves depth estimation performance. Newer models further improve the accuracy of multimodal models based on Stable Diffusion [23, 22].

VPD方法 [59] 将图像编码为潜在表征(latent representations),并通过Stable Diffusion模型 [36] 进行处理。文本提示(prompt)通过交叉注意力机制引导逆向扩散过程,影响潜在表征和特征图。该方法表明,将文本提示与图像对齐能显著提升深度估计性能。基于Stable Diffusion的更新模型 [23, 22] 进一步提高了多模态模型的准确性。

2.3. Self-supervised Depth Estimation

2.3. 自监督深度估计

Ground truth data is not always available, prompting the development of self-supervised models that leverage either the temporal consistency found in sequences of monocular videos [61, 16], or the spatial correspondence in stereo vision [13, 15, 32].

真实标注数据并非总是可用,这促使了自监督模型的发展。这些模型要么利用单目视频序列中的时间一致性 [61, 16],要么利用立体视觉中的空间对应关系 [13, 15, 32]。

When only monocular video is available, models are trained so that a view of the target frame, synthesized from a neighboring frame using the predicted depth and camera motion, is consistent with the actual target frame. The initial framework, SfMLearner [61], learned depth estimation jointly with pose prediction, driven by photometric alignment losses. This approach has been refined in various ways, such as making image reconstruction losses more robust [17, 41], introducing feature-level loss functions [41, 56], and applying new constraints to the learning process [53, 54, 35, 63, 2].

当仅能获取单视图输入时,模型通过训练来寻找参考点生成视角与关联点真实视角之间的一致性。初始框架SfMLearner [61] 被开发用于结合位姿预测来学习深度估计,其驱动力来自基于光度对齐的损失函数。该方法通过多种途径得到改进,例如提升图像重建损失的鲁棒性 [17, 41]、引入特征级损失函数 [41, 56],以及对学习过程施加新约束 [53, 54, 35, 63, 2]。

In scenarios where stereo image pairs are available, the focus shifts to estimating the disparity map, which is inversely proportional to depth [39]. Disparity serves as a proxy for depth in the absence of direct measurements and is computed by exploiting the known geometry and alignment of the stereo camera setup: with stereo pairs, disparity estimation becomes a matter of finding correspondences between the two views. Early efforts in this domain, such as the work by Garg et al. [13], laid the groundwork for self-supervised learning paradigms that rely on the consistency of stereo images. These methods have been progressively enhanced with additional constraints such as left-right consistency checks [15].

在可获得立体图像对的场景中,重点转向推断视差图(disparity map),其与深度呈反比关系 [39]。视差估计至关重要,因为在缺乏直接测量的情况下,它可作为深度的替代指标。通过利用立体相机设置的已知几何和对齐关系来计算视差图。对于立体图像对,视差计算转化为寻找两个视图间对应关系的问题。该领域的早期工作(如Garg等人 [13] 的研究)为依赖立体图像一致性的自监督学习范式奠定了基础。这些方法通过左右一致性检查 [15] 等附加约束逐步得到增强。
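
For a rectified stereo pair, the inverse relation above is the standard pinhole-stereo formula $Z = fB/d$, with focal length $f$ in pixels, baseline $B$ in meters, and disparity $d$ in pixels. A minimal NumPy sketch; the function name and the example focal length and baseline are illustrative assumptions, not values taken from this paper.

```python
import numpy as np

def disparity_to_depth(disparity_px, focal_px, baseline_m, eps=1e-6):
    """Depth in meters from disparity in pixels for a rectified stereo pair: Z = f * B / d."""
    d = np.maximum(np.asarray(disparity_px, dtype=float), eps)  # guard against division by zero
    return focal_px * baseline_m / d

# Illustrative rig: f = 720 px, B = 0.54 m. Doubling the disparity halves the depth.
print(disparity_to_depth(np.array([10.0, 20.0]), 720.0, 0.54))
```

Working with disparity rather than depth is convenient for networks because its range is bounded near zero for distant points instead of growing without limit.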

Parallel to the pursuit of depth estimation is the broader field of unsupervised learning from video. This area explores pretext tasks designed to extract versatile visual features from video data. These features are foundational for a variety of vision tasks, including object detection and semantic segmentation. Notable tasks in this domain include ego-motion estimation, tracking, enforcing temporal coherence, verifying the order of events, and predicting object motion masks. The authors of [42] also proposed a framework for jointly training depth, camera motion, and scene motion from videos.

与深度估计研究并行的是更广泛的视频无监督学习领域。该领域探索如何设计预训练任务,以从视频数据中提取通用的视觉特征。这些特征是多种视觉任务的基础,包括目标检测和语义分割。该领域的代表性任务包括:自我运动估计、目标追踪、时序一致性保持、事件序列验证以及物体运动掩码预测。[42] 还提出了一个联合训练框架,可从视频中同步学习深度、相机运动和场景运动。

While self-supervised methods for depth estimation have advanced, they still make limited use of pose information.

虽然自监督深度估计方法已取得进展,但在有效利用位姿数据方面仍有不足。

3. Methodology

3. 方法论

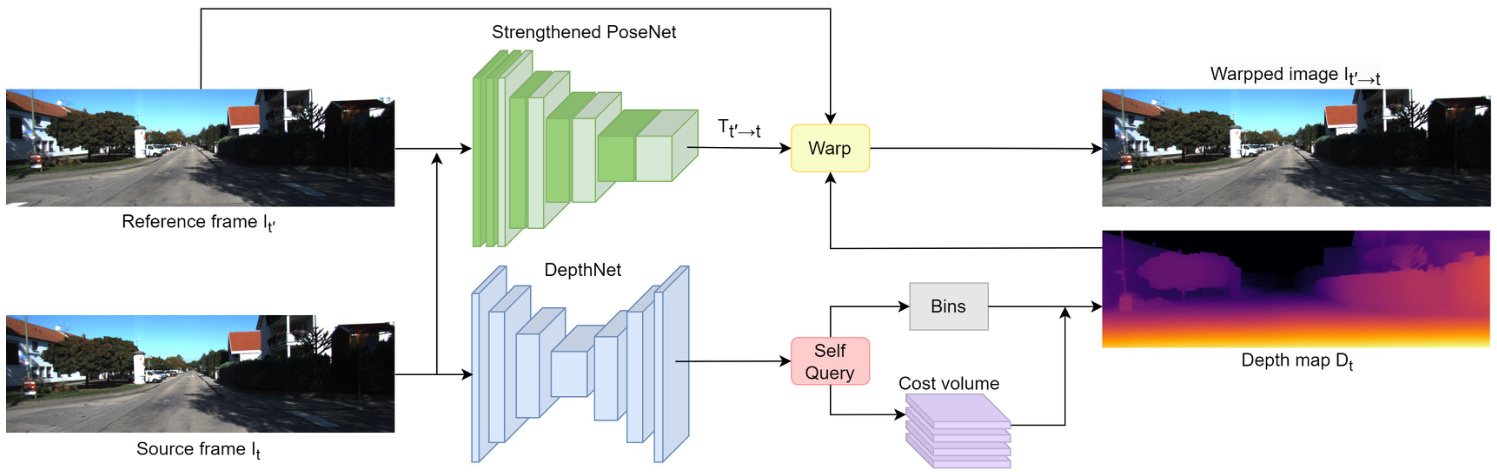

We address the task of self-supervised monocular depth estimation: predicting depth maps from single RGB images without ground truth, akin to learning structure from motion (SfM). Our approach, SPIdepth (Fig. 1), strengthens the pose network to enhance depth estimation accuracy. Unlike conventional methods that primarily focus on depth refinement, SPIdepth prioritizes improving the accuracy of the pose network to capture intricate scene structures more effectively, leading to significant advancements in depth prediction accuracy.

我们致力于自监督单目深度估计任务,重点研究从单张RGB图像预测深度图而无需真实值,类似于从运动恢复结构(SfM)的学习方式。我们的方法SPIdepth(图1)通过强化位姿网络来提升深度估计精度。与传统方法主要关注深度优化不同,SPIdepth优先提高位姿网络的准确性,从而更有效地捕捉复杂场景结构,实现深度预测精度的显著提升。

Figure 1: The SPIdepth architecture. An encoder-decoder extracts features from frame $I_{t}$ , which are then input into the Self Query Layer to obtain the depth map $D_{t}$ . Strengthened PoseNet predicts the relative pose between frame $I_{t}$ and reference frame $I_{t}^{\prime}$ using a powerful pose network, needed only during training. Pixels from frame $I_{t}^{\prime}$ are used to reconstruct frame $I_{t}$ with depth map $D_{t}$ and relative pose $T_{t^{\prime}\rightarrow t}$ . The loss function is based on the differences between the warped image $I_{t^{\prime}\rightarrow t}$ and the source image $I_{t}$ .

图 1: SPIdepth架构。编码器-解码器从帧$I_{t}$中提取特征,随后输入至自查询层(Self Query Layer)以获取深度图$D_{t}$。强化位姿网络(Strengthened PoseNet)通过高性能位姿网络预测帧$I_{t}$与参考帧$I_{t}^{\prime}$间的相对位姿,该网络仅在训练阶段需要。利用深度图$D_{t}$和相对位姿$T_{t^{\prime}\rightarrow t}$,通过帧$I_{t}^{\prime}$的像素重建帧$I_{t}$。损失函数基于变形图像$I_{t^{\prime}\rightarrow t}$与源图像$I_{t}$之间的差异构建。

Our method comprises two primary components: DepthNet for depth prediction and PoseNet for relative pose estimation.

我们的方法包含两个主要组件:用于深度预测的DepthNet和用于相对位姿估计的PoseNet。

DepthNet: DepthNet is the core component of our method, responsible for inferring depth maps from single RGB images. It uses a convolutional neural network to extract visual features from the input image. These features are then processed within an encoder-decoder framework to produce detailed, high-resolution visual representations $\mathbf{S}\in\mathbb{R}^{C\times h\times w}$. Skip connections within the architecture help preserve local, fine-grained visual cues.

DepthNet: 我们的方法采用DepthNet作为核心组件,负责从单张RGB图像推断深度图。为此,DepthNet采用精心设计的卷积神经网络架构,能够从输入图像中提取复杂的视觉特征。这些特征随后通过编码器-解码器框架进行处理,从而提取出维度为$\mathbb{R}^{C\times h\times w}$的精细高分辨率视觉表示$\mathbf{S}$。网络架构中跳跃连接(skip connections)的集成增强了局部细粒度视觉线索的保留能力。

The depth estimation process could be written as:

深度估计过程可以表示为:

$$

D_{t}=\mathrm{DepthNet}(I_{t})

$$

where $I_{t}$ denotes the input RGB image.

其中 $I_{t}$ 表示输入的 RGB 图像。

To ensure the efficacy and accuracy of DepthNet, we use a state-of-the-art pretrained ConvNeXt as the encoder. ConvNeXt’s pretraining on large datasets helps DepthNet capture detailed scene structures, improving depth prediction accuracy.

为确保DepthNet的效能和准确性,我们采用了最先进的ConvNext作为预训练编码器。ConvNext从大型数据集学习的能力有助于DepthNet捕捉细致的场景结构,从而提升深度预测的准确度。

PoseNet: PoseNet plays a crucial role in our methodology, estimating the relative pose between input and reference images for view synthesis. This estimation is essential for accurately aligning the predicted depth map with the reference image during view synthesis. To achieve robust and accurate pose estimation, PoseNet utilizes a powerful pretrained model, such as a ConvNet or Transformer. Leveraging the representations learned by the pretrained model enhances the model’s ability to capture complex scene structures and geometric relationships, ultimately improving depth estimation accuracy. Given a source image $I_{t}$ and a reference image $I_{t^{\prime}}$ , PoseNet predicts the relative pose $T_{t\rightarrow t^{\prime}}$ . The predicted depth map $D_{t}$ and relative pose $T_{t\rightarrow t^{\prime}}$ are then used to perform view synthesis:

PoseNet: PoseNet 在我们的方法中起着关键作用,用于估计输入图像与参考图像之间的相对位姿,以进行视图合成。这一估计对于在视图合成过程中将预测的深度图与参考图像准确对齐至关重要。为了实现稳健且精确的位姿估计,PoseNet 采用了强大的预训练模型,例如 ConvNet 或 Transformer。利用预训练模型学习到的表征,增强了模型捕捉复杂场景结构和几何关系的能力,最终提升了深度估计的准确性。给定源图像 $I_{t}$ 和参考图像 $I_{t^{\prime}}$,PoseNet 预测相对位姿 $T_{t\rightarrow t^{\prime}}$。随后,预测的深度图 $D_{t}$ 和相对位姿 $T_{t\rightarrow t^{\prime}}$ 被用于执行视图合成:

$$

I_{t^{\prime}\rightarrow t}=I_{t^{\prime}}\left\langle\mathrm{proj}\left(D_{t},T_{t\rightarrow t^{\prime}},K\right)\right\rangle

$$

where $\langle\cdot\rangle$ denotes the sampling operator, $K$ is the camera intrinsic matrix, and proj returns the 2D coordinates of the depths in $D_{t}$ when reprojected into the camera view of $I_{t^{\prime}}$.

其中 $\langle\rangle$ 表示采样运算符,proj 返回深度图 $D_{t}$ 中的深度值重投影到 $I_{t^{\prime}}$ 相机视图时的二维坐标。
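
The view-synthesis step can be sketched in NumPy as below. This is an illustrative reimplementation under stated assumptions, not the authors' code: PoseNet's output is assumed to be a 6-DoF vector (axis-angle rotation plus translation, a common parameterization) converted to $T_{t\rightarrow t^{\prime}}$ with Rodrigues' formula; `proj` back-projects each pixel of $I_t$ with $D_t$ and $K^{-1}$, moves it with $T_{t\rightarrow t^{\prime}}$, and projects it with $K$; and the sampling operator $\langle\cdot\rangle$ is taken to be bilinear interpolation on a single-channel image. All function names are hypothetical.

```python
import numpy as np

def pose_vec_to_mat(axis_angle, translation):
    """Hypothetical 6-DoF pose (axis-angle + translation) -> 4x4 rigid transform."""
    r = np.asarray(axis_angle, dtype=float)
    theta = np.linalg.norm(r)
    R = np.eye(3)
    if theta > 1e-8:
        k = r / theta
        Kx = np.array([[0.0, -k[2], k[1]],
                       [k[2], 0.0, -k[0]],
                       [-k[1], k[0], 0.0]])
        # Rodrigues' rotation formula
        R = R + np.sin(theta) * Kx + (1.0 - np.cos(theta)) * (Kx @ Kx)
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = translation
    return T

def proj(depth, T, K):
    """Pixel coordinates in I_{t'} of every pixel of I_t, given D_t, T_{t->t'} and K.

    depth: (H, W); T: (4, 4), assumed to map camera-t coordinates to camera-t'
    coordinates; K: (3, 3) intrinsics. Returns an (H, W, 2) array of (u, v).
    """
    H, W = depth.shape
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    pix = np.stack([u, v, np.ones_like(u)]).reshape(3, -1).astype(float)
    cam = (np.linalg.inv(K) @ pix) * depth.reshape(1, -1)      # back-project to 3D
    cam_h = np.vstack([cam, np.ones((1, cam.shape[1]))])       # homogeneous points
    p = K @ (T @ cam_h)[:3]                                    # move to view t', project
    uv = p[:2] / np.clip(p[2:3], 1e-6, None)                   # perspective divide
    return uv.reshape(2, H, W).transpose(1, 2, 0)

def bilinear_sample(img, uv):
    """Sampling operator: bilinearly read img (H, W) at float coordinates uv (..., 2)."""
    H, W = img.shape
    u = np.clip(uv[..., 0], 0, W - 1)                          # clamp to the image border
    v = np.clip(uv[..., 1], 0, H - 1)
    u0, v0 = np.floor(u).astype(int), np.floor(v).astype(int)
    u1, v1 = np.minimum(u0 + 1, W - 1), np.minimum(v0 + 1, H - 1)
    du, dv = u - u0, v - v0
    top = (1 - du) * img[v0, u0] + du * img[v0, u1]
    bottom = (1 - du) * img[v1, u0] + du * img[v1, u1]
    return (1 - dv) * top + dv * bottom
```

Under these assumptions the warped image is `bilinear_sample(I_src, proj(D_t, pose_vec_to_mat(r, t), K))`. Real training code performs the same computation on batched, differentiable tensors so that gradients reach both DepthNet and PoseNet.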

To capture intra-geometric clues for depth estimation, we employ a Self Query Layer (SQL) [45]. The SQL builds a self-cost volume to store relative distance representations, approximating relative distances between pixels and patches. Let $\mathbf{S}$ denote the immediate visual representations extracted by the encoder-decoder. The self-cost volume $\mathbf{V}$ is calculated as follows:

为了捕捉深度估计中的内部几何线索,我们采用了自查询层(SQL) [45]。该层通过构建自代价体积来存储相对距离表征,从而近似像素与图像块之间的相对距离。设S表示编码器-解码器提取的即时视觉表征,自代价体积$\mathbf{V}$的计算方式如下:

$$

V_{i,j,k}=Q_{i}^{T}\cdot S_{j,k}

$$

where $Q_{i}$ represents the coarse-grained queries, and $S_{j,k}$ denotes the per-pixel immediate visual representations.

其中 $Q_{i}$ 表示粗粒度查询,$S_{j,k}$ 表示逐像素的即时视觉表征。
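
Because each entry $V_{i,j,k}$ is a dot product between query $Q_i$ and the feature vector at pixel $(j,k)$, the whole self-cost volume is a single `einsum`. In the NumPy sketch below, the queries come from a hypothetical `patch_queries` helper that average-pools $\mathbf{S}$ into non-overlapping patches; this is only a stand-in for whatever query extractor SQL actually uses [45].

```python
import numpy as np

def patch_queries(S, patch=4):
    """Hypothetical query extractor: average-pool S (C, h, w) over patch x patch cells.

    Returns one C-dimensional coarse-grained query per cell, shape (num_cells, C).
    """
    C, h, w = S.shape
    hp, wp = h // patch, w // patch
    cells = S[:, :hp * patch, :wp * patch].reshape(C, hp, patch, wp, patch)
    return cells.mean(axis=(2, 4)).reshape(C, -1).T

def self_cost_volume(Q, S):
    """V[i, j, k] = Q_i^T S_{j,k}; Q: (num_queries, C), S: (C, h, w) -> (num_queries, h, w)."""
    return np.einsum("qc,chw->qhw", Q, S)

rng = np.random.default_rng(0)
S = rng.normal(size=(8, 8, 12))        # toy features: C=8, h=8, w=12
V = self_cost_volume(patch_queries(S), S)
print(V.shape)                         # one h x w plane per query
```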

We calculate depth bins by tallying latent depths within the self-cost volume $\mathbf{V}$ . These bins portray the distribution of depth values and are determined through regression using a multi-layer perceptron (MLP) to estimate depth. The process for computing the depth bins is as follows:

我们通过统计自代价体积 $\mathbf{V}$ 中的潜在深度来计算深度区间。这些区间描述了深度值的分布,并通过使用多层感知机 (MLP) 进行回归来估计深度。计算深度区间的过程如下:

$$

\mathbf{b}=\mathbf{MLP}\left(\bigoplus_{i=1}^{Q}\sum_{(j,k)=(1,1)}^{(h,w)}\operatorname{softmax}(V_{i})_{j,k}\cdot S_{j,k}\right)

$$

Here, $\oplus$ denotes concatenation, $Q$ is the number of coarse-grained queries, and $h$ and $w$ are the height and width of the immediate visual representations.

这里,$\oplus$ 表示连接操作,$Q$ 代表粗粒度查询的数量,$h$ 和 $w$ 则是即时视觉表征的高度和宽度。
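
A NumPy sketch of the bin-regression equation above. Assumptions: $\operatorname{softmax}(V_i)$ is taken over the $h \times w$ spatial positions of plane $i$, the MLP is a hypothetical two-layer ReLU network whose weights are passed in, and its output is normalized into positive bin widths that sum to one (a normalization assumed here, not stated in the text).

```python
import numpy as np

def spatial_softmax(V):
    """Softmax of each plane V_i over its h*w spatial positions."""
    q = V.shape[0]
    flat = V.reshape(q, -1)
    flat = np.exp(flat - flat.max(axis=1, keepdims=True))   # numerically stable exp
    return (flat / flat.sum(axis=1, keepdims=True)).reshape(V.shape)

def depth_bins(V, S, W1, b1, W2, b2):
    """b = MLP( concat_i sum_{j,k} softmax(V_i)_{j,k} * S_{j,k} ).

    V: (Q, h, w) self-cost volume; S: (C, h, w) features;
    W1, b1, W2, b2: weights of a hypothetical two-layer MLP.
    """
    A = spatial_softmax(V)                       # each plane sums to 1 over pixels
    pooled = np.einsum("qhw,chw->qc", A, S)      # attention-weighted feature per query
    x = pooled.reshape(-1)                       # concatenation over queries: (Q*C,)
    hidden = np.maximum(W1 @ x + b1, 0.0)        # ReLU hidden layer
    out = W2 @ hidden + b2                       # raw bin scores
    widths = np.exp(out - out.max())
    return widths / widths.sum()                 # assumed: normalized bin widths
```

Each query thus summarizes the whole feature map through its own attention pattern, and the MLP turns those summaries into an image-adaptive partition of the depth range.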

To generate the final depth map, we combine depth estimations from coarse-grained queries using a probabilistic linear combination approach. This involves applying a plane-wise softmax operation to convert the self-cost volume V into plane-wise probabilistic maps, which facilitates depth calculation for each pixel.

为生成最终深度图,我们采用概率线性组合方法整合来自粗粒度查询的深度估计。具体而言,通过应用平面级softmax运算将自代价体积(self-cost volume) V转换为平面级概率图,从而计算每个像素的深度。
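
The probabilistic linear combination can be sketched as follows. Assumptions: there is one depth bin per query plane, the plane-wise softmax is applied across the $Q$ planes at each pixel (our reading of the description above), bin centers are the midpoints of normalized widths laid out over a depth range $[d_{\min}, d_{\max}]$ whose default values here are illustrative, and the function name is hypothetical.

```python
import numpy as np

def final_depth(V, widths, d_min=1e-3, d_max=80.0):
    """Per-pixel depth as a probability-weighted sum of bin centers.

    V: (Q, h, w) self-cost volume; widths: (Q,) bin widths assumed to sum to 1.
    """
    edges = d_min + (d_max - d_min) * np.concatenate([[0.0], np.cumsum(widths)])
    centers = 0.5 * (edges[:-1] + edges[1:])           # (Q,) bin centers
    P = np.exp(V - V.max(axis=0, keepdims=True))
    P /= P.sum(axis=0, keepdims=True)                  # softmax across the Q planes per pixel
    return np.einsum("q,qhw->hw", centers, P)          # sum_i P_i(j, k) * c_i
```

For example, with four equal widths over $[0, 4]$ the centers are 0.5, 1.5, 2.5, and 3.5, so uniform probabilities give a depth of 2.0 at every pixel, and a pixel whose last plane dominates is pushed toward 3.5.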

During training, DepthNet and PoseNet are optimized jointly by minimizing the photometric reprojection error. Following established methods [13, 61, 62], for each pixel we take the minimum of the reconstruction loss $pe$ over the source frames, as in Equation 1, where $t^{\prime}\in\{t-1,t+1\}$.

在训练过程中,DepthNet和PoseNet通过最小化光度重投影误差同时进行优化。我们采用已有方法 [13, 61, 62],通过选择公式1中定义的重构损失$pe$的逐像素最小值来优化每个像素的损失,其中$t^{\prime}$的取值范围为$(t-1,t+1)$。

$$

L_{p}=\min_{t^{\prime}}pe\left(I_{t},I_{t^{\prime}\rightarrow t}\right)\tag{1}

$$

In real-world scenarios, stationary cameras and dynamic objects can degrade depth prediction. We use an auto-masking strategy [16] to filter out stationary pixels and low-texture regions, ensuring scalability and adaptability.

在实际场景中,静态摄像头和动态物体会影响深度预测。我们采用自动掩码策略 [16] 来过滤静止像素和低纹理区域,确保可扩展性和适应性。
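
The per-pixel minimum over source frames and the auto-mask can be sketched in NumPy. The mask follows the rule of [16] as we understand it: a pixel is kept only when the reprojection error of the warped source frame is lower than that of the unwarped source frame, which discards pixels that appear static relative to the camera. Inputs are precomputed `pe` maps, and the function name is hypothetical.

```python
import numpy as np

def min_reprojection_with_automask(pe_warped, pe_identity):
    """Per-pixel minimum reprojection loss and binary auto-mask.

    pe_warped:   list of (H, W) maps pe(I_t, I_{t'->t}), one per source frame t'
    pe_identity: list of (H, W) maps pe(I_t, I_{t'}) for the unwarped source frames
    Returns (L_p per pixel, mask mu with 1 = keep, 0 = ignore).
    """
    L_p = np.min(np.stack(pe_warped), axis=0)       # min over t' helps with occlusions
    L_id = np.min(np.stack(pe_identity), axis=0)    # error if the camera had not moved
    mu = (L_p < L_id).astype(float)                 # keep pixels where warping helps
    return L_p, mu
```

Taking the minimum rather than the average means a pixel occluded in one source frame can still be explained by the other, so it does not produce a large, misleading error.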

We employ the standard photometric loss combining L1 and SSIM [46] terms, as shown in Equation 2, where $\alpha$ balances the SSIM and L1 terms.

我们采用结合了L1和SSIM [46]的标准光度损失,如公式2所示。

$$

pe\left(I_{a},I_{b}\right)=\frac{\alpha}{2}\left(1-\mathrm{SSIM}\left(I_{a},I_{b}\right)\right)+\left(1-\alpha\right)\left\Vert I_{a}-I_{b}\right\Vert_{1}\tag{2}

$$
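
A NumPy sketch of $pe$ for single-channel images scaled to $[0, 1]$. Assumptions not stated in the text: SSIM is computed per pixel over 3×3 windows with the usual constants $C_1 = 0.01^2$ and $C_2 = 0.03^2$, and $\alpha = 0.85$, the value commonly used in Monodepth2-style training. Helper names are hypothetical.

```python
import numpy as np

def _box3(x):
    """3x3 mean filter with reflection padding (output has the same size as x)."""
    p = np.pad(x, 1, mode="reflect")
    H, W = x.shape
    return sum(p[i:i + H, j:j + W] for i in range(3) for j in range(3)) / 9.0

def ssim_map(a, b, C1=0.01 ** 2, C2=0.03 ** 2):
    """Per-pixel SSIM over 3x3 windows."""
    mu_a, mu_b = _box3(a), _box3(b)
    var_a = _box3(a * a) - mu_a ** 2
    var_b = _box3(b * b) - mu_b ** 2
    cov = _box3(a * b) - mu_a * mu_b
    num = (2 * mu_a * mu_b + C1) * (2 * cov + C2)
    den = (mu_a ** 2 + mu_b ** 2 + C1) * (var_a + var_b + C2)
    return num / den

def pe(a, b, alpha=0.85):
    """pe(I_a, I_b) = alpha/2 * (1 - SSIM) + (1 - alpha) * |I_a - I_b|, per pixel."""
    return alpha / 2 * (1 - ssim_map(a, b)) + (1 - alpha) * np.abs(a - b)
```

The SSIM term compares local structure and is tolerant of small brightness changes, while the L1 term anchors absolute intensities; for color images the L1 term is typically averaged over channels.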

To regularize depth in textureless regions, we use an edge-aware smoothness loss, where $d_{t}^{*}$ denotes the mean-normalized inverse depth:

为了在无纹理区域规范化深度,采用了边缘感知平滑损失。

$$

L_{s}=\left|\partial_{x}d_{t}^{*}\right|e^{-\left|\partial_{x}I_{t}\right|}+\left|\partial_{y}d_{t}^{*}\right|e^{-\left|\partial_{y}I_{t}\right|}\tag{3}

$$
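
A NumPy sketch of the edge-aware smoothness loss above. Assumptions: $d_t^{*}$ is the inverse depth divided by its mean, derivatives are forward differences, the image is single-channel, and each term is averaged over pixels. The function name is hypothetical.

```python
import numpy as np

def smoothness_loss(disp, img):
    """Edge-aware smoothness on mean-normalized inverse depth d* = d / mean(d)."""
    d = disp / (disp.mean() + 1e-7)
    dx_d = np.abs(d[:, 1:] - d[:, :-1])        # horizontal disparity gradient
    dy_d = np.abs(d[1:, :] - d[:-1, :])        # vertical disparity gradient
    dx_i = np.abs(img[:, 1:] - img[:, :-1])    # image gradients gate the penalty
    dy_i = np.abs(img[1:, :] - img[:-1, :])
    return (dx_d * np.exp(-dx_i)).mean() + (dy_d * np.exp(-dy_i)).mean()
```

The exponential weight makes depth discontinuities cheap where the image itself has an edge and expensive in flat regions. Normalizing by the mean keeps the network from shrinking the loss simply by scaling all predicted depths.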

We apply an auto-masking strategy to filter out stationary pixels and low-texture regions consistently observed across frames.

我们采用自动掩码策略来过滤掉在连续帧中观察到的静止像素和低纹理区域。

The final training loss combines the per-pixel smoothness loss with the masked photometric loss, improving robustness and accuracy in diverse scenarios, as given in Equation 4, where $\mu$ is the per-pixel auto-mask and $\lambda$ weights the smoothness term.

最终训练损失整合了逐像素平滑损失和掩膜光度损失,增强了不同场景下的鲁棒性和准确性,如公式4所示。

$$

\mathcal{L}=\mu L_{p}+\lambda L_{s}\tag{4}

$$
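
Putting the terms together, a minimal sketch of the final objective, assuming $\mu$ is the binary per-pixel auto-mask, $L_s$ has already been reduced to a scalar, and $\lambda = 10^{-3}$ (the smoothness weight commonly used in Monodepth2; the value used for SPIdepth is not stated here). The function name is hypothetical.

```python
import numpy as np

def total_loss(L_p, mu, L_s, lam=1e-3):
    """L = mu * L_p + lambda * L_s, with the masked photometric term averaged over pixels."""
    return float((mu * L_p).mean() + lam * L_s)

print(total_loss(np.ones((2, 2)), np.array([[1.0, 0.0], [1.0, 0.0]]), 2.0))
```

The small weight keeps the smoothness prior from overriding the photometric signal, which carries the actual geometric supervision.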

4. Results

4. 结果

Our assessment of SPIdepth covers three widely used datasets, KITTI, Cityscapes, and Make3D, using established evaluation metrics.

我们对SPIDepth的评估涵盖了三个广泛使用的数据集:KITTI、Cityscapes和Make3D,并采用了既定的评估指标。

4.1. Datasets

4.1. 数据集

4.1.1 KITTI Dataset

4.1.1 KITTI数据集

KITTI [14] provides stereo image sequences and is a staple in self-supervised monocular depth estimation. We adopt the Eigen split [9], using approximately 26k images for training and 697 for testing. Notably, our training procedure for SPIdepth on KITTI starts from scratch, without motion masks [16], additional stereo pairs, or auxiliary data. During testing, we maintain a stringent regime, using only a single frame as input, unlike methods that exploit multiple frames for enhanced accuracy.

KITTI [14] 提供了立体图像序列,这是自监督单目深度估计的基础数据集。我们采用 Eigen 划分 [9],使用约 26k 张图像进行训练,697 张用于测试。值得注意的是,我们在 KITTI 上训练 SPIdepth 时从零开始,未使用运动掩码 [16]、额外立体图像对或辅助数据。测试阶段保持严格设定,仅输入单帧图像,与依赖多帧提升精度的方法形成对比。

4.1.2 Cityscapes Dataset

4.1.2 Cityscapes数据集

Cityscapes [6] poses a unique challenge with its many dynamic objects. To gauge SPIdepth’s adaptability, we fine-tune on Cityscapes starting from models pre-trained on KITTI. Notably, we do not use motion masks, which are common in other methods, even though dynamic objects are present, so our performance improvements rest solely on SPIdepth’s design and generalization capacity. This setting lets us scrutinize SPIdepth’s robustness in dynamic environments. We follow the data preprocessing practices of [61], preprocessing image sequences into triplets for consistency.

Cityscapes [6] 因其大量动态物体带来了独特挑战。为评估 SPIDepth 的适应性,我们使用 KITTI 预训练模型在 Cityscapes 上进行微调。值得注意的是,即便存在动态物体,我们也不采用其他方法常用的运动掩模技术。性能提升完全依赖于 SPIDepth 的设计和泛化能力。该方法使我们能严格检验 SPIDepth 在动态环境中的鲁棒性。我们遵循 [61] 的数据预处理规范,通过将图像序列预处理为三元组来确保一致性。

4.1.3 Make3D Dataset

4.1.3 Make3D 数据集

Make3D [38] is a monocular depth estimation dataset containing 400 pairs of high-resolution RGB images and low-resolution depth maps for training, and 134 test samples. To evaluate SPIdepth’s generalization to unseen data, we perform zero-shot evaluation on the Make3D test set using the SPIdepth model pre-trained on KITTI.

Make3D [38] 是一个单目深度估计数据集,包含400对高分辨率RGB图像和低分辨率深度图用于训练,以及134个测试样本。为评估SPIDepth在未见数据上的泛化能力,我们使用在KITTI上预训练的SPIDepth模型对Make3D测试集进行了零样本评估。

4.2. KITTI Results

4.2. KITTI 结果

Table 1 compares SPIdepth with several state-of-the-art self-supervised depth estimation models on the KITTI dataset. SPIdepth outperforms all other models across the evaluation metrics. Notably, it achieves the lowest AbsRel (0.071), SqRel (0.531), RMSE (3.662), and RMSElog (0.153), indicating its high accuracy in predicting depth values.

我们在KITTI数据集上将SPIDepth与几种最先进的自我监督深度估计模型进行了性能对比,如表 1 所示。SPIDepth在所有评估指标上均优于其他模型,尤其以AbsRel (0.071)、SqRel (0.531)、RMSE (3.662) 和 RMSElog (0.153) 的最低值展现了卓越的深度预测精度。
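
For reference, the error metrics named above are usually defined as follows, sketched in NumPy over pixels with valid ground truth. The usual KITTI protocol details (depth capping, image cropping, and median scaling for self-supervised models) are not shown, and the function name is hypothetical.

```python
import numpy as np

def depth_metrics(pred, gt):
    """AbsRel, SqRel, RMSE, and RMSElog over pixels with valid ground truth (gt > 0)."""
    valid = gt > 0
    p = np.clip(pred[valid], 1e-3, None)   # keep log() finite for non-positive predictions
    g = gt[valid]
    return {
        "AbsRel": np.mean(np.abs(p - g) / g),
        "SqRel": np.mean((p - g) ** 2 / g),
        "RMSE": np.sqrt(np.mean((p - g) ** 2)),
        "RMSElog": np.sqrt(np.mean((np.log(p) - np.log(g)) ** 2)),
    }
```

AbsRel and SqRel normalize errors by the true depth, so they weigh near and far mistakes more evenly than RMSE, which is dominated by large absolute errors at long range.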

Table 2 compares SPIdepth with several supervised depth estimation models on the KITTI Eigen benchmark. Although SPIdepth is self-supervised (with metric fine-tuning), it outperforms the supervised methods on all these metrics, indicating its superior accuracy in predicting metric depth values.

在表 2 中,我们在KITTI Eigen基准上比较了SPIdepth与几种有监督深度估计模型的性能。尽管SPIdepth是自监督且经过度量微调的模型,但它在所有指标上都优于有监督方法,这表明其在预测度量深度值方面具有更高的准确性。

Furthermore, SPIDepth surpasses Lighted Depth, a model that operates on video sequence