Global and Local Mixture Consistency Cumulative Learning for Long-tailed Visual Recognitions

Abstract

In this paper, our goal is to design a simple learning paradigm for long-tailed visual recognition that both improves the robustness of the feature extractor and alleviates the classifier's bias toward head classes, while reducing training tricks and overhead. We propose an efficient one-stage training strategy for long-tailed visual recognition called Global and Local Mixture Consistency cumulative learning (GLMC). Our core ideas are twofold: (1) A global and local mixture consistency loss improves the robustness of the feature extractor. Specifically, we generate two augmented batches from the same batch of data, one by global MixUp and one by local CutMix, and then minimize their difference using cosine similarity. (2) A cumulative head-tail soft label reweighted loss mitigates the head class bias problem. We use empirical class frequencies to reweight the mixed labels of head-tail class pairs in long-tailed data, and then balance the conventional loss and the rebalanced loss with a coefficient accumulated over epochs. Our approach achieves state-of-the-art accuracy on the CIFAR10-LT, CIFAR100-LT, and ImageNet-LT datasets. Additional experiments on balanced ImageNet and CIFAR demonstrate that GLMC can significantly improve the generalization of backbones. Code is publicly available at https://github.com/ynu-yangpeng/GLMC.

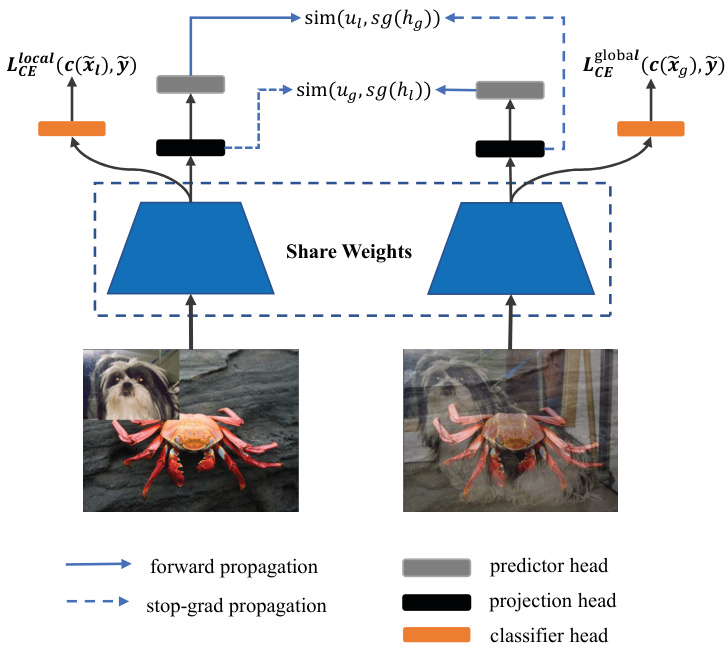

Figure 1. An overview of GLMC: two types of mixed-label augmented images are processed by an encoder network and a projection head to obtain the representations $h_{g}$ and $h_{l}$. A prediction head then transforms the two representations into outputs $u_{g}$ and $u_{l}$. We minimize their negative cosine similarity as an auxiliary loss added to the supervised loss. $\mathrm{sg}(\cdot)$ denotes the stop-gradient operation.

1. Introduction

Thanks to the availability of large-scale datasets, e.g., ImageNet [10], MS COCO [27], and Places [46], deep neural networks have achieved dominant results in image recognition [15]. Unlike these carefully balanced datasets, real-world data naturally follows a long-tailed distribution. A small number of head classes account for most of the samples, while the many tail classes have only a few samples each. Moreover, the tail classes are critical in some applications, such as medical diagnosis and autonomous driving. Unfortunately, learning directly from long-tailed data can bias model predictions heavily toward the head classes.

There are two classical rebalancing strategies for long-tailed distributions: resampling the training data [7, 13, 35] and designing cost-sensitive reweighting loss functions [3, 20]. Resampling methods oversample the tail-class data or undersample the head classes in each SGD mini-batch to balance training. Reweighting methods mainly increase the loss weight of the tail classes to strengthen their learning. However, rebalancing toward the tail classes directly distorts the original distribution [45] of the long-tailed data. This either increases the risk of overfitting on the tail classes or sacrifices the performance of the head classes. Therefore, these methods usually adopt a two-stage training process [1, 3, 45] that decouples representation learning from classifier fine-tuning. The first stage trains the feature extractor on the original data distribution, and the second stage fixes the representation and trains a balanced classifier. Although multi-stage training significantly improves long-tailed recognition performance, it also adds training tricks and overhead.

In this paper, our goal is to design a simple learning paradigm for long-tailed visual recognition that both improves the robustness of the feature extractor and alleviates the classifier's bias toward head classes, while reducing training tricks and overhead. To improve representation robustness, recent contrastive learning techniques [8, 18, 26, 47] that learn the consistency of augmented data pairs have performed very well. However, they typically train the network in two stages, which conflicts with our simplification goal, so we adapt them into an auxiliary loss within our supervised loss. For the head class bias problem, the typical approach is to initialize a new classifier for resampling or reweighting training. Inspired by the cumulative rebalancing branch strategy of [45], we adopt a more efficient adaptive method to balance the conventional and the reweighted classification losses.

Based on the above analysis, we propose an efficient one-stage training strategy for long-tailed visual recognition called Global and Local Mixture Consistency cumulative learning (GLMC). Our core ideas are twofold: (1) A global and local mixture consistency loss improves the robustness of the model. Specifically, we generate two augmented batches from the same batch of data, one by global MixUp and one by local CutMix, and then minimize their difference using cosine similarity. (2) A cumulative head-tail soft label reweighted loss mitigates the head class bias problem. Specifically, we use empirical class frequencies to reweight the mixed labels of head-tail class pairs in long-tailed data, and then balance the conventional loss and the rebalanced loss with a coefficient accumulated over epochs.

We evaluate our method mainly on three widely used long-tailed image classification benchmarks: CIFAR10-LT, CIFAR100-LT, and ImageNet-LT. Extensive experiments show that our approach outperforms other methods by a large margin, which verifies the effectiveness of the proposed training scheme. Additional experiments on balanced ImageNet and CIFAR demonstrate that GLMC can significantly improve the generalization of backbones. The main contributions of our work can be summarized as follows:

• We propose an efficient one-stage training strategy called Global and Local Mixture Consistency cumulative learning framework (GLMC), which can effectively improve the generalization of the backbone for long-tailed visual recognition.

• GLMC does not require negative sample pairs or large batches, and it can be added to the supervised loss as an auxiliary loss.

• Our GLMC achieves state-of-the-art performance on three challenging long-tailed recognition benchmarks, including CIFAR10-LT, CIFAR100-LT, and ImageNet-LT datasets. Moreover, experimental results on full ImageNet and CIFAR validate the effectiveness of GLMC under a balanced setting.

2. Related Work

2.1. Contrastive Representation Learning

The recent renaissance of self-supervised learning aims to obtain general and transferable feature representations by learning pretext tasks. In computer vision, these pretext tasks include rotation prediction [22], relative position prediction of image patches [11], solving jigsaw puzzles [30], and image colorization [23, 43]. However, these pretext tasks are usually domain-specific, which limits the generality of the learned representations.

Contrastive learning is a significant branch of self-supervised learning. Its pretext task is to pull two augmented views of the same image (treated as positive samples) closer together in the representation space than the negative samples. Recent works [17, 31, 36] have learned image embeddings by maximizing the mutual information between the latent representations of two views of an image. However, their success relies on a large number of negative samples. To address this issue, BYOL [12] removes the negative samples and directly predicts the output of one view from the other, using a momentum encoder to avoid collapse. Instead of a momentum encoder, SimSiam [5] uses a siamese network to maximize the cosine similarity between two augmentations of one image, with a simple stop-gradient technique to avoid collapse.

For long-tailed recognition, numerous works [8, 18, 26, 47] obtain a balanced representation space by introducing a contrastive loss. However, they usually require a multi-stage pipeline and large batches of negative examples for training, which adds training tricks and overhead. Our method learns the consistency of mixed images via cosine similarity and can be conveniently added to supervised training as an auxiliary loss. Moreover, our approach uses neither negative pairs nor a momentum encoder and does not rely on large-batch training.

2.2. Class Rebalance Learning

Rebalanced training has been widely studied in long-tailed recognition. Its core idea is to strengthen the tail classes by oversampling [4, 13] or by increasing their weights [2, 9, 44]. However, over-learning the tail classes increases the risk of overfitting [45]. Conversely, undersampling or down-weighting the head classes sacrifices head-class performance. Recent studies [19, 45] have shown that training directly with a rebalancing strategy degrades the quality of the learned representation. Several multi-stage training methods [1, 19, 45] therefore decouple representation learning and classifier training for long-tailed recognition. For representation learning, self-supervised [18, 26, 47] and augmentation-based [6, 32] methods can improve robustness to long-tailed distributions. For the classifier, rebalancing approaches such as multiple experts [24, 37], reweighted classifiers [1], and label-distribution-aware methods [3] can all effectively improve tail-class performance. Further, [45] proposed a unified Bilateral-Branch Network (BBN) that adaptively balances a conventional learning branch and a reversed sampling branch through a cumulative learning strategy. We follow BBN in adaptively weighting the mixed labels of long-tailed data, but we do not require an ensemble at test time.

3. The Proposed Method

In this section, we describe the GLMC framework in detail. First, we present an overview of the framework in Sec. 3.1. Then, in Sec. 3.2, we introduce how to learn global and local mixture consistency by maximizing the cosine similarity of two mixed images. Next, in Sec. 3.3, we propose a cumulative class-balanced strategy that progressively weights the labels of long-tailed data. Finally, in Sec. 3.4, we introduce how to optionally use MaxNorm [1, 16] to fine-tune the classifier weights.

3.1. Overall Framework

Our framework is divided into the following six major components:

• A predictor $pred(x)$ that maps the output of the projection to the contrastive space. The predictor is also a fully connected layer and has no activation function.

• A linear conventional classifier head $c(x)$ that maps vectors $r$ to the category space. The classifier head computes the mixed cross-entropy loss under the original data distribution.

• (optional) A linear rebalanced classifier head $cb(x)$ that maps vectors $r$ to the rebalanced category space. The rebalanced classifier computes the mixed cross-entropy loss under the reweighted data distribution.

Note that only the rebalanced classifier $c b(x)$ is retained at the end of training for the long-tailed recognition, while the predictor, projection, and conventional classifier head will be removed. However, for the balanced dataset, the rebalanced classifier $c b(x)$ is not needed.
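
To make the head layout concrete, the following NumPy sketch wires hypothetical linear heads onto encoder features $r$. The dimensions, the random initialization, and the assumption that the projection is a single linear layer are illustrative choices, not details given in the text. Only two features follow the description above: the predictor has no activation, and both classifier heads read $r$.

```python
import numpy as np

# Hypothetical sizes; the text does not specify layer dimensions.
FEAT_DIM, PROJ_DIM, NUM_CLASSES = 64, 32, 10
rng = np.random.default_rng(0)


def make_linear(in_dim, out_dim):
    """Weights and bias of a fully connected layer (no activation)."""
    return rng.normal(scale=0.02, size=(in_dim, out_dim)), np.zeros(out_dim)


def linear(layer, x):
    weight, bias = layer
    return x @ weight + bias


projection = make_linear(FEAT_DIM, PROJ_DIM)     # assumed: one linear layer, r -> h
predictor = make_linear(PROJ_DIM, PROJ_DIM)      # pred: h -> u, linear, no activation
classifier = make_linear(FEAT_DIM, NUM_CLASSES)  # c(r): conventional head
rebalanced = make_linear(FEAT_DIM, NUM_CLASSES)  # cb(r): optional rebalanced head


def forward(r):
    """Map encoder features r (batch x FEAT_DIM) to the outputs of every head."""
    h = linear(projection, r)
    u = linear(predictor, h)
    return {"h": h, "u": u,
            "logits_c": linear(classifier, r),
            "logits_cb": linear(rebalanced, r)}


out = forward(rng.normal(size=(4, FEAT_DIM)))
print({name: value.shape for name, value in out.items()})
```

For long-tailed evaluation, only the encoder and the `rebalanced` head would be kept in this sketch, in line with the note above.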

3.2. Global and Local Mixture Consistency Learning

In supervised deep learning, the model is usually divided into two parts: an encoder and a linear classifier. The classifier is label-biased and relies heavily on the quality of the representations. Therefore, improving the generalization ability of the encoder can significantly improve the final classification accuracy on long-tailed data. Self-supervised learning improves representations by learning additional pretext tasks. Inspired by this, we train the encoder with a standard supervised task and a self-supervised task in a multi-task manner, as illustrated in Fig. 1. Unlike simple pretext tasks such as rotation prediction and image colorization, we follow the global-local idea of [39] and learn global-local consistency through the strong data augmentation methods MixUp [42] and CutMix [41].

Global Mixture. MixUp is a global mixed-label data augmentation method that generates mixture samples by mixing two images of different classes. For a pair of images with label probabilities $(x_{i},p_{i})$ and $(x_{j},p_{j})$, we compute $(\tilde{x}_{g},\tilde{p}_{g})$ by

$$
\begin{aligned}
\lambda &\sim \mathrm{Beta}(\beta,\beta),\\
\tilde{x}_{g} &= \lambda x_{i}+(1-\lambda)x_{j},\\
\tilde{p}_{g} &= \lambda p_{i}+(1-\lambda)p_{j}.
\end{aligned}
$$

where $\lambda$ is sampled from a Beta distribution parameterized by the hyperparameter $\beta$. Note that the $p$ are one-hot vectors.

Local Mixture. Unlike MixUp, CutMix combines two images by locally replacing an image region with a patch from another training image. We define the combining operation as

$$
\tilde{x}_{l}=M\odot x_{i}+(\mathbf{1}-M)\odot x_{j}.
$$

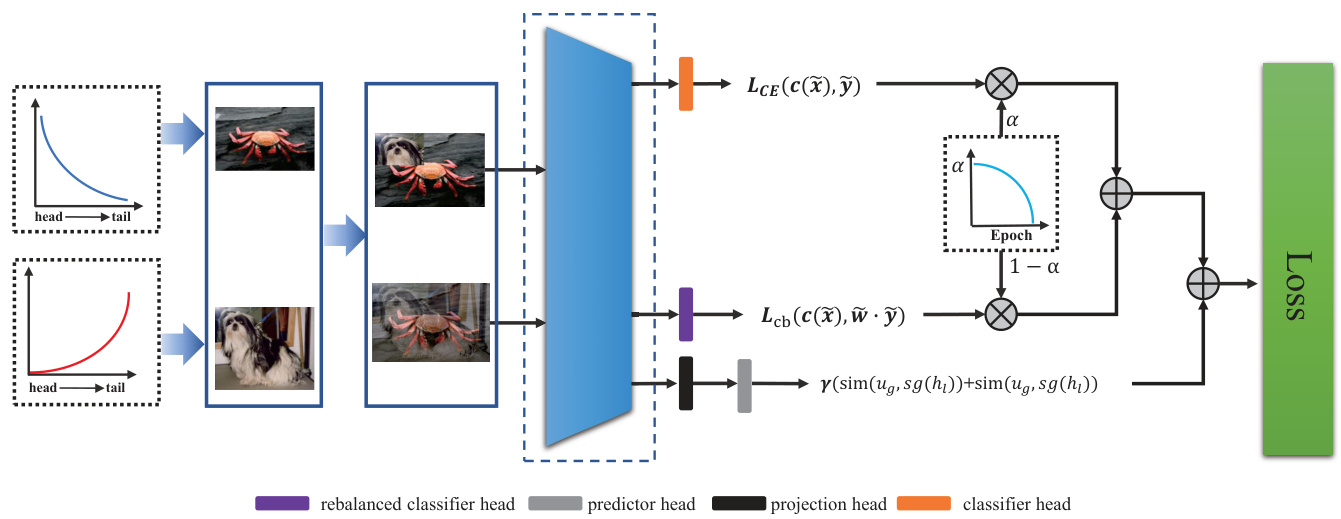

Figure 2. An illustration of the cumulative class-balanced learning pipeline. We apply uniform and reversed samplers to obtain head and tail data, and then they are synthesized into head-tail mixture samples by MixUp and CutMix. The cumulative learning strategy adaptively weights the rebalanced classifier and the conventional classifier by epochs.

where $M\in\{0,1\}^{W\times H}$ denotes the randomly selected pixel patch taken from image $x_{i}$ and pasted onto $x_{j}$, $\mathbf{1}$ is a binary mask filled with ones, and $\odot$ is element-wise multiplication. Concretely, we sample the bounding box coordinates $B=(r_{x},r_{y},r_{w},r_{h})$ that indicate the cropping regions on $x_{i}$ and $x_{j}$. The box coordinates are sampled as

$$
\begin{array}{ll}
r_{x}\sim \mathrm{Uniform}(0,W), & r_{w}=W\sqrt{1-\lambda},\\
r_{y}\sim \mathrm{Uniform}(0,H), & r_{h}=H\sqrt{1-\lambda},
\end{array}
$$

where $\lambda$ is also sampled from $\mathrm{Beta}(\beta,\beta)$, and the mixed labels are computed in the same way as in MixUp.
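
The two mixing operations can be sketched in NumPy as below, for images shaped (channels, H, W) with one-hot labels. The function names are hypothetical. Two conventions here are our assumptions, not details from the text. First, $(r_x, r_y)$ is treated as the box center and the box is clipped to the image, as in the original CutMix. Second, the mask $M$ is 1 outside the box and 0 inside it. This way $x_i$ keeps roughly a $\lambda$ fraction of the pixels, which matches its label weight $\lambda p_i$.

```python
import numpy as np


def global_mixup(x_i, x_j, p_i, p_j, lam):
    """Global MixUp: blend whole images and one-hot labels with weight lam."""
    x_g = lam * x_i + (1.0 - lam) * x_j
    p_g = lam * p_i + (1.0 - lam) * p_j
    return x_g, p_g


def local_cutmix(x_i, x_j, p_i, p_j, lam, rng):
    """Local CutMix: replace a box of x_i with the same region of x_j."""
    H, W = x_i.shape[-2:]
    r_w = int(W * np.sqrt(1.0 - lam))
    r_h = int(H * np.sqrt(1.0 - lam))
    r_x, r_y = rng.uniform(0, W), rng.uniform(0, H)  # assumed box center
    x0, x1 = int(np.clip(r_x - r_w / 2, 0, W)), int(np.clip(r_x + r_w / 2, 0, W))
    y0, y1 = int(np.clip(r_y - r_h / 2, 0, H)), int(np.clip(r_y + r_h / 2, 0, H))
    M = np.ones((H, W))
    M[y0:y1, x0:x1] = 0.0                 # 0 inside the box: those pixels come from x_j
    x_l = M * x_i + (1.0 - M) * x_j       # M broadcasts over the channel axis
    p_l = lam * p_i + (1.0 - lam) * p_j   # labels mixed exactly as in MixUp
    return x_l, p_l, M


rng = np.random.default_rng(0)
lam = rng.beta(1.0, 1.0)                  # lambda ~ Beta(beta, beta) with beta = 1
x_i, x_j = np.zeros((3, 32, 32)), np.ones((3, 32, 32))
p_i, p_j = np.eye(10)[2], np.eye(10)[7]
x_g, p_g = global_mixup(x_i, x_j, p_i, p_j, lam)
x_l, p_l, M = local_cutmix(x_i, x_j, p_i, p_j, lam, rng)
```

In the method itself, both views are built from the same sampled batch. Here a single image pair stands in for that batch.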

Self-Supervised Learning Branch. Previous works require large batches of negative samples [17, 36] or a memory bank [14] to train the network, which makes them difficult to apply on devices with limited memory. For simplicity, our goal is to maximize the cosine similarity between the global and local mixtures in representation space to obtain contrastive consistency. Specifically, the two types of augmented images are processed by an encoder network and a projection head to obtain the representations $h_{g}$ and $h_{l}$. A prediction head then transforms the two representations into outputs $u_{g}$ and $u_{l}$. We minimize their negative cosine similarity:

$$
\mathrm{sim}(u_{g},h_{l})=-\frac{u_{g}}{\|u_{g}\|_{2}}\cdot\frac{h_{l}}{\|h_{l}\|_{2}}
$$

where $\|\cdot\|_{2}$ is the $\ell_{2}$ norm. An undesired trivial solution to minimizing the negative cosine similarity of augmented images is for all outputs to "collapse" to a constant. Following SimSiam [5], we use a stop-gradient operation to prevent collapse. The SimSiam loss is defined as:

$$
\mathcal{L}_{sim}=\mathrm{sim}(u_{g},\mathrm{sg}(h_{l}))+\mathrm{sim}(u_{l},\mathrm{sg}(h_{g}))
$$

The stop-gradient operation means that $h_{l}$ and $h_{g}$ are treated as constants, so no gradients flow back through them.
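
The forward value of $\mathcal{L}_{sim}$ can be sketched in NumPy as below. Averaging the per-sample similarity over the batch is our assumption, and the function names are hypothetical. NumPy has no automatic differentiation, so the stop-gradient itself cannot be expressed here. In an autograd framework, the $h$ arguments would be detached (for example with `Tensor.detach()` in PyTorch) before the similarity is computed.

```python
import numpy as np


def neg_cosine(u, h):
    """sim(u, h) = -(u/||u||_2) . (h/||h||_2), averaged over the batch rows."""
    u = u / np.linalg.norm(u, axis=1, keepdims=True)
    h = h / np.linalg.norm(h, axis=1, keepdims=True)
    return -np.mean(np.sum(u * h, axis=1))


def simsiam_loss(u_g, u_l, h_g, h_l):
    """L_sim = sim(u_g, sg(h_l)) + sim(u_l, sg(h_g)).

    In this forward-only sketch sg(.) is the identity; it only matters for gradients.
    """
    return neg_cosine(u_g, h_l) + neg_cosine(u_l, h_g)


u = np.array([[1.0, 0.0], [0.0, 2.0]])
h = np.array([[3.0, 0.0], [0.0, 5.0]])
print(simsiam_loss(u, u, h, h))  # perfectly aligned views give the minimum, -2.0
```

Because each term is a negative cosine similarity, the loss ignores vector magnitudes and reaches its minimum of -2 only when both predictions point in the same direction as their targets.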

Supervised Learning Branch. After constructing the global and local augmented data pairs $(\tilde{x}_{g},\tilde{p}_{g})$ and $(\tilde{x}_{l},\tilde{p}_{l})$, we calculate the mixed-label cross-entropy loss:

$$
\mathcal{L}_{c}=-\frac{1}{2N}\sum_{i=1}^{N}\left(\tilde{p}_{g}^{i}\log f(\tilde{x}_{g}^{i})+\tilde{p}_{l}^{i}\log f(\tilde{x}_{l}^{i})\right)
$$

where $N$ denotes the sampling batch size and $f(\cdot)$ denotes the predicted probability of $\tilde{x}$. Note that each batch of images is augmented into both a global and a local mixture, so the actual batch size is twice the sampling batch size.
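
A NumPy sketch of $\mathcal{L}_c$ follows. It assumes that $f(\cdot)$ is a softmax over the classifier logits (the text only calls it the predicted probability) and that the soft labels $\tilde{p}$ are rows of a probability matrix. The product $\tilde{p}\log f(\tilde{x})$ is taken as a dot product over classes. All names are hypothetical.

```python
import numpy as np


def log_softmax(logits):
    """Numerically stable log-softmax over the class axis."""
    z = logits - logits.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def mixed_ce(logits_g, logits_l, p_g, p_l):
    """L_c: soft-label cross-entropy averaged over the 2N global and local samples."""
    n = logits_g.shape[0]
    total = np.sum(p_g * log_softmax(logits_g)) + np.sum(p_l * log_softmax(logits_l))
    return -total / (2 * n)


logits = np.zeros((4, 10))        # uniform predictions over 10 classes
labels = np.full((4, 10), 0.1)    # any soft labels that sum to 1 per row
print(mixed_ce(logits, logits, labels, labels))  # equals log(10) for uniform predictions
```

The division by $2N$ reflects that the global and local views together double the effective batch.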

3.3. Cumulative Class-Balanced Learning

Class-Balanced Learning. The design principle of class reweighting is to introduce a weighting factor inversely proportional to the label frequency, thereby strengthening the learning of minority classes. Following [44], the weighting factor $w_{i}$ is defined as:

$$
w_{i}=\frac{C\cdot(1/r_{i})^{k}}{\sum_{j=1}^{C}(1/r_{j})^{k}}
$$

where $r_{i}$ is the frequency of the $i$-th class in the training dataset, $C$ is the number of classes, and $k$ is a hyperparameter that scales the gap between the head and tail classes. Note that $k=0$ corresponds to no reweighting and $k=1$ corresponds to the class-balanced method [9]. We convert the scalar weights into one-hot vector form and mix the weight vectors of the two images:
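
The weighting factor can be computed from per-class sample counts as in the sketch below. The function name and the counts are hypothetical. Because the factors are normalized to sum to $C$, $k=0$ gives every class a weight of 1, and larger $k$ widens the gap between head and tail classes.

```python
import numpy as np


def class_weights(class_counts, k):
    """w_i = C * (1/r_i)^k / sum_j (1/r_j)^k, where r_i is class i's frequency."""
    counts = np.asarray(class_counts, dtype=float)
    r = counts / counts.sum()          # empirical class frequencies
    inv = (1.0 / r) ** k
    return len(counts) * inv / inv.sum()


counts = [5000, 500, 50]              # hypothetical head, medium, and tail class sizes
print(class_weights(counts, k=0))     # no reweighting
print(class_weights(counts, k=1))     # inverse-frequency weights
```

With $k=1$, the ratio between the tail and head weights equals the ratio of their sample counts, 100 in this example.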

$$
\tilde{w}=\lambda w_{i}+(1-\lambda)w_{j}.
$$

Formally, given a training dataset $\mathcal{D}=\{(x_{i},y_{i},w_{i})\}_{i=1}^{N}$, the rebalanced loss can be written as:

$$
\mathcal{L}_{cb}=-\frac{1}{2N}\sum_{i=1}^{N}\tilde{w}^{i}\left(\tilde{p}_{g}^{i}\log f(\tilde{x}_{g}^{i})+\tilde{p}_{l}^{i}\log f(\tilde{x}_{l}^{i})\right)
$$

Table 1. Top-1 accuracy (%) of ResNet-32 on CIFAR-10-LT and CIFAR-100-LT with imbalance factors (IF) of 100, 50, and 10. Using only one-stage training, GLMC consistently outperforms the previous best methods.

| Category | Method | CIFAR-10-LT IF=100 | CIFAR-10-LT IF=50 | CIFAR-10-LT IF=10 | CIFAR-100-LT IF=100 | CIFAR-100-LT IF=50 | CIFAR-100-LT IF=10 |
|---|---|---|---|---|---|---|---|
| | CE | 70.4 | 74.8 | 86.4 | 38.3 | 43.9 | 55.7 |
| Rebalanced classifier | BBN [45] | 79.82 | 82.18 | 88.32 | 42.56 | 47.02 | 59.12 |
| | CB-Focal [9] | 74.6 | 79.3 | 87.1 | 39.6 | 45.2 | 58 |
| | LogitAdjust [29] | 80.92 | - | - | 42.01 | 47.03 | 57.74 |
| | Weight balancing [1] | - | - | - | 53.35 | 57.71 | 68.67 |
| Data augmentation | Mixup [42] | 73.06 | 77.82 | 87.1 | 39.54 | 54.99 | 58.02 |
| | RISDA [6] | 79.89 | 79.89 | 79.89 | 50.16 | 53.84 | 62.38 |
| | CMO [32] | - | - | - | 47.2 | 51.7 | 58.4 |
| Self-supervised pretraining | KCL [18] | 77.6 | 81.7 | 88 | 42.8 | 46.3 | 57.6 |
| | TSC [25] | 79.7 | 82.9 | 88.7 | 42.8 | 46.3 | 57.6 |
| | BCL [47] | 84.32 | 87.24 | 91.12 | 51.93 | 56.59 | 64.87 |
| | PaCo [8] | - | - | - | 52 | 56 | 64.2 |
| | SSD [26] | - | - | - | 46 | 50.5 | 62.3 |
| Ensemble classifier | RIDE (3 experts) + CMO [32] | - | - | - | 50 | 53 | 60.2 |
| | RIDE (3 experts) [37] | - | - | - | 48.6 | 51.4 | 59.8 |
| One-stage training | Ours | 87.75 | 90.18 | 94.04 | 55.88 | 61.08 | 70.74 |
| Fine-tuned classifier | Ours + MaxNorm [1] | 87.57 | 90.22 | 94.03 | 57.11 | 62.32 | 72.33 |

where $f(\tilde{x})$ and $\tilde{w}$ denote the predicted probability and the weighting factor of the mixed image $\tilde{x}$, respectively. Note that the global and local mixed images share the same mixed weights.
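
A sketch of $\mathcal{L}_{cb}$ is below. The text leaves the exact shape of $\tilde{w}$ open. This version therefore assumes one scalar weight per mixed sample, $\tilde{w}=\lambda w_{y_i}+(1-\lambda)w_{y_j}$, built from the class weights of the two source labels and shared by the global and local views. As before, `f` is assumed to be a softmax, and all names and values are hypothetical.

```python
import numpy as np


def log_softmax(logits):
    """Numerically stable log-softmax over the class axis."""
    z = logits - logits.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def rebalanced_mixed_ce(logits_g, logits_l, p_g, p_l, class_w, y_i, y_j, lam):
    """L_cb: mixed-label cross-entropy scaled by per-sample mixed class weights."""
    w_mix = lam * class_w[y_i] + (1.0 - lam) * class_w[y_j]   # one weight per sample
    per_sample = (np.sum(p_g * log_softmax(logits_g), axis=1)
                  + np.sum(p_l * log_softmax(logits_l), axis=1))
    return -np.sum(w_mix * per_sample) / (2 * logits_g.shape[0])


class_w = np.array([0.1, 1.0, 2.0])        # hypothetical head-to-tail class weights
y_i, y_j, lam = np.array([0, 0]), np.array([2, 1]), 0.7
p = lam * np.eye(3)[y_i] + (1 - lam) * np.eye(3)[y_j]
logits = np.zeros((2, 3))
print(rebalanced_mixed_ce(logits, logits, p, p, class_w, y_i, y_j, lam))
```

When every class weight equals 1, the function reduces to the unweighted mixed cross-entropy $\mathcal{L}_c$, which is consistent with $k=0$ meaning no reweighting.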

Cumulative Class-Balanced Learning. As illustrated in Fig. 2, we use a bilateral-branch structure to learn the rebalancing branch adaptively. Unlike BBN [45], however, our cumulative learning strategy is applied to the loss functions instead of the fully connected layer weights, and it uses reweighting instead of resampling. Concretely, the loss $\mathcal{L}_{c}$ of the unweighted classification branch is multiplied by $\alpha$, and the rebalanced loss $\mathcal{L}_{cb}$ is multiplied by $1-\alpha$. The coefficient $\alpha$ automatically decreases as the current training epoch $T$ increases:

$$
\alpha=1-\left(\frac{T}{T_{\max}}\right)^{2}
$$

where $T_{\max}$ is the maximum number of training epochs.
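
The schedule is easy to tabulate. The sketch below uses a hypothetical $T_{\max}=200$ and a hypothetical function name. Because the decay is quadratic, $\alpha$ falls slowly at first (it is still 0.75 halfway through training) and then quickly. As a result, the rebalanced loss dominates mainly in the final epochs.

```python
def cumulative_alpha(epoch, max_epoch):
    """alpha = 1 - (T / T_max)^2, the weight on L_c; 1 - alpha weights L_cb."""
    return 1.0 - (epoch / max_epoch) ** 2


T_MAX = 200  # hypothetical number of training epochs
for epoch in (0, 50, 100, 150, 200):
    print(epoch, cumulative_alpha(epoch, T_MAX))
```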

Finally, the total loss is defined as a combination of the losses $\mathcal{L}_{c}$, $\mathcal{L}_{cb}$, and $\mathcal{L}_{sim}$:

$$
\mathcal{L}_{total}=\alpha\mathcal{L}_{c}+(1-\alpha)\mathcal{L}_{cb}+\gamma\mathcal{L}_{sim}
$$

where $\gamma$ is a hyperparameter that weights the $\mathcal{L}_{sim}$ loss; its default value is 10.
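
Putting the pieces together, the total objective is a weighted sum of three scalars. The sketch below takes $\alpha$ from the cumulative schedule as an argument and uses the stated default $\gamma=10$. The function name and the example loss values are hypothetical. Since $\mathcal{L}_{sim}$ is a sum of two negative cosine similarities, it lies in $[-2, 2]$. With $\gamma=10$, the auxiliary term can therefore contribute up to 20 in magnitude.

```python
def glmc_total_loss(loss_c, loss_cb, loss_sim, alpha, gamma=10.0):
    """L_total = alpha * L_c + (1 - alpha) * L_cb + gamma * L_sim."""
    return alpha * loss_c + (1.0 - alpha) * loss_cb + gamma * loss_sim


# Early in training (alpha = 1), only the conventional loss and L_sim contribute.
print(glmc_total_loss(loss_c=2.3, loss_cb=4.0, loss_sim=-1.5, alpha=1.0))
```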
