Git: Clustering Based on Graph of Intensity Topology

Git: 基于强度拓扑图的聚类方法

Abstract

摘要

Accuracy, Robustness to noises and scales, Interpret ability, Speed, and Easy to use (ARISE) are crucial requirements of a good clustering algorithm. However, achieving these goals simultan e ou sly is challenging, and most advanced approaches only focus on parts of them. Towards an overall consideration of these aspects, we propose a novel clustering algorithm, namely GIT (Clustering Based on Graph of Intensity Topology). GIT considers both local and global data structures: firstly forming local clusters based on intensity peaks of samples, and then estimating the global topological graph (topo-graph) between these local clusters. We use the Wasserstein Distance between the predicted and prior class proportions to automatically cut noisy edges in the topo-graph and merge connected local clusters as final clusters. Then, we compare GIT with seven competing algorithms on five synthetic datasets and nine real-world datasets. With fast local cluster detection, robust topo-graph construction and accurate edge-cutting, GIT shows attractive ARISE performance and significantly exceeds other non-convex clustering methods. For example, GIT outperforms its counterparts about $10%$ (F1-score) on MNIST and Fashion M NIST. Code is available at https://github.com/gao zhang yang/GIT.

准确性、抗噪性和尺度鲁棒性、可解释性、速度以及易用性(ARISE)是一个优秀聚类算法的关键要求。然而同时实现这些目标具有挑战性,大多数先进方法仅聚焦于部分特性。为全面考量这些方面,我们提出了一种新颖的聚类算法GIT(基于强度拓扑图的聚类)。该算法兼顾局部与全局数据结构:首先根据样本强度峰值形成局部聚类簇,随后估计这些局部簇之间的全局拓扑图(topo-graph)。我们通过预测类别比例与先验类别比例的Wasserstein距离来自动剪除拓扑图中的噪声边,并将连通的局部聚类簇合并为最终聚类结果。通过在五个合成数据集和九个真实数据集上与七种竞争算法的对比实验表明,凭借快速的局部簇检测、鲁棒的拓扑图构建和精确的剪边策略,GIT展现出极具吸引力的ARISE性能,显著优于其他非凸聚类方法。例如在MNIST和Fashion MNIST数据集上,GIT的F1分数平均领先其他方法约10%。代码已开源:https://github.com/gaozhangyang/GIT。

1 Introduction

1 引言

With the continuous development of the past 90 years (Driver and Kroeber 1932; Zubin 1938; Tryon 1939), numerous clustering algorithms (Jain, Murty, and Flynn 1999; Saxena et al. 2017; Gan, Ma, and Wu 2020) have promoted scientific progress in various fields, such as biology, social science and computer science. As to these approaches, Accuracy, Robustness, Interpret ability, Speed, and Easy to use (ARISE) are crucial requirements for wide usage. However, most previous works only show their superiority in certain aspects while ignoring others, leading to sub-optimal solutions. How to boost the overall ARISE performance, especially the accuracy and robustness is the critical problem this paper try to address.

在过去90年的持续发展过程中 (Driver and Kroeber 1932; Zubin 1938; Tryon 1939),大量聚类算法 (Jain, Murty, and Flynn 1999; Saxena et al. 2017; Gan, Ma, and Wu 2020) 推动了生物学、社会科学和计算机科学等多个领域的科学进步。对于这些方法而言,准确性 (Accuracy)、鲁棒性 (Robustness)、可解释性 (Interpretability)、速度 (Speed) 和易用性 (Easy to use) (简称ARISE) 是实现广泛应用的五大关键要求。然而,先前大多数工作仅在某些方面展现优势而忽略其他指标,导致解决方案难以达到最优。如何提升整体ARISE性能(尤其是准确性和鲁棒性)正是本文试图解决的核心问题。

Existing clustering methods, e.g., center-based, spectralbased and density-based, cannot achieve satisfactory ARISE performance. Typical center-based methods such as $k$ -means (Steinhaus 1956; Lloyd 1982) and $k$ -means $^{++}$ (Arthur and Vas sil v its kii 2006; Lattanzi and Sohler 2019) are fast, convenient and interpret able, but the resulted clusters must be convex and depend on the initial state. In the non-convex case, elegant spectral clustering (Dhillon, Guan, and Kulis 2004) finds clusters by minimizing the edge-cut between them with solid mathematical basis. However, it is challenging to calculate ei gen vectors of the large and dense similarity matrix and handle noisy or multiscale data for spectral clustering (Nadler and Galun 2006). Moreover, the sensitivity of eigenvectors to the similarity matrix is not intuitive (Meila 2016), limiting its interpret ability. A more explain able way to detect non-convex clusters is density-based method, which has recently attracted considerable attention. Density clustering relies on the following assumption: data points tend to form clusters in high-density areas, while noises tend to appear in low-density areas. For example, DBSCAN (Ester et al. 1996) groups closely connected points into clusters and leaves outliers as noises, but it only provides flat labeling of samples; thus, HDBSCAN (Campello, Moulavi, and Sander 2013; Campello et al. 2015; McInnes and Healy 2017) and DPA (d’Errico et al. 2021) are proposed to identify hierarchical clusters. In practice, we find that DBSCAN, HDBSCAN, and DPA usually treat overmuch valid points as outliers, resulting in the label missing issue1. In addition, Meanshift, ToMATo, FSFDP and their derivatives (Comaniciu and Meer 2002; Chazal et al. 2013; Rodriguez and Laio 2014; Ezugwu et al. 2021) are other classic density clustering algorithms with sub-optimum accuracy. Recently, some delicate algorithms appear to improve the robustness to data scales or noises, such as RECOME (Geng et al. 2018), Quickshift $^{++}$ (Jiang, Jang, and Kpotufe 2018) and SpectACI (Hess et al. 2019). However, the accuracy gain of these methods is limited, and the computational cost increases sharply on largescale datasets. Another promising direction is to combine deep learning with clustering (Hershey et al. 2016; Caron et al. 2018; Wang, Le Roux, and Hershey 2018; Zhan et al. 2020), but these methods are hard to use, time-consuming, and introduce the randomness of deep learning. In summary, as to clustering algotirhms, there is still a large room for improving ARISE performance.

现有的聚类方法,如基于中心、基于谱和基于密度的方法,均无法实现令人满意的ARISE性能。典型的基于中心方法如$k$-means (Steinhaus 1956; Lloyd 1982)和$k$-means$^{++}$ (Arthur and Vassilvitskii 2006; Lattanzi and Sohler 2019)虽然快速、便捷且可解释,但生成的簇必须为凸集且依赖初始状态。在非凸情况下,优雅的谱聚类(Dhillon, Guan, and Kulis 2004)通过最小化簇间边割来发现簇结构,具有坚实的数学基础。然而,计算大型稠密相似度矩阵的特征向量以及处理噪声或多尺度数据对谱聚类具有挑战性(Nadler and Galun 2006)。此外,特征向量对相似度矩阵的敏感性不够直观(Meila 2016),限制了其可解释性。

检测非凸簇更具可解释性的方法是基于密度的聚类,近期受到广泛关注。密度聚类基于以下假设:数据点倾向于在高密度区域形成簇,而噪声则出现在低密度区域。例如DBSCAN (Ester et al. 1996)将紧密连接的点归为簇并将离群点视为噪声,但仅提供样本的扁平标签;因此HDBSCAN (Campello, Moulavi, and Sander 2013; Campello et al. 2015; McInnes and Healy 2017)和DPA (d'Errico et al. 2021)被提出用于识别层次化簇。实践中我们发现DBSCAN、HDBSCAN和DPA常将过多有效点视为离群点,导致标签缺失问题。此外,Meanshift、ToMATo、FSFDP及其衍生算法(Comaniciu and Meer 2002; Chazal et al. 2013; Rodriguez and Laio 2014; Ezugwu et al. 2021)是其他经典密度聚类方法,但精度欠佳。

近期出现了一些精细算法以提升对数据尺度或噪声的鲁棒性,如RECOME (Geng et al. 2018)、Quickshift$^{++}$ (Jiang, Jang, and Kpotufe 2018)和SpectACI (Hess et al. 2019)。但这些方法的精度提升有限,且在大规模数据集上计算成本急剧增加。另一个有前景的方向是将深度学习与聚类结合(Hershey et al. 2016; Caron et al. 2018; Wang, Le Roux, and Hershey 2018; Zhan et al. 2020),但这些方法使用困难、耗时且引入深度学习的随机性。总之,在聚类算法方面,ARISE性能仍有很大提升空间。

To improve the overall ARISE performance, we propose a novel algorithm named GIT (Clustering Based on Graph of Intensity Topology), which contains two stages: finding local clusters, and merging them into final clusters. We detect locally high-density regions through an intensity function and collect internal points as local clusters. Unlike previous works, we take local clusters as basic units instead of sample points and further consider connect iv i ties between them to cons it it ude a topo-graph describing the global data structure. We point out that the key to improving the accuracy is cutting noisy edges in the topo-graph. Differ from thresholdbased edge-cutting, we introduce a knowledge-guidied algorithm to filter noisy edges by using prior class proportion, e.g., 1:0.5:0.1 for a dataset with three unbalanced classes. This algorithm enjoys two advantages. Firstly, it is relatively robust and easy-to-tune when only the number of classes is known, in which case we set the same sample number for all classes. Secondly, it is promising to solve the problem of unbalanced sample distribution given the actual proportion. Treat the balanced proportion as prior knowledge, there is only one parameter ( $k$ for kNN searching) need to be tuned in GIT, which is relative easy to use. We further study the robustness of various methods to data shapes, noises and scales, where GIT significantly outperform competitors. We also speed up GIT and its time complexity is ${\mathcal{O}}({\bar{d}}{s}n\log(n))$ , where $d_{s}$ is the dimension of feature channels and $n$ is the number of samples. Finally, we provide visual explanations of each step for GIT to show its interpret ability.

为提高ARISE的整体性能,我们提出了一种名为GIT(基于强度拓扑图的聚类)的新算法,该算法包含两个阶段:寻找局部聚类,并将其合并为最终聚类。我们通过强度函数检测局部高密度区域,并收集内部点作为局部聚类。与以往工作不同,我们将局部聚类作为基本单元而非样本点,并进一步考虑它们之间的连接性,以构建描述全局数据结构的拓扑图。我们指出提高精度的关键在于切割拓扑图中的噪声边。不同于基于阈值的边切割方法,我们引入了一种知识引导算法,利用先验类别比例(例如三类不平衡数据集的1:0.5:0.1)来过滤噪声边。该算法具有两大优势:首先,在仅知类别数量时(此时为所有类别设置相同样本数),其调参相对稳健且简便;其次,给定实际比例后,有望解决样本分布不平衡问题。若将平衡比例作为先验知识,GIT仅需调整一个参数(kNN搜索中的$k$),易于使用。我们进一步研究了各方法对数据形状、噪声和尺度的鲁棒性,结果表明GIT显著优于竞争对手。我们还优化了GIT的速度,其时间复杂度为${\mathcal{O}}({\bar{d}}{s}n\log(n))$,其中$d_{s}$为特征通道维度,$n$为样本数量。最后,我们提供了GIT各步骤的可视化解释以展示其可解释性。

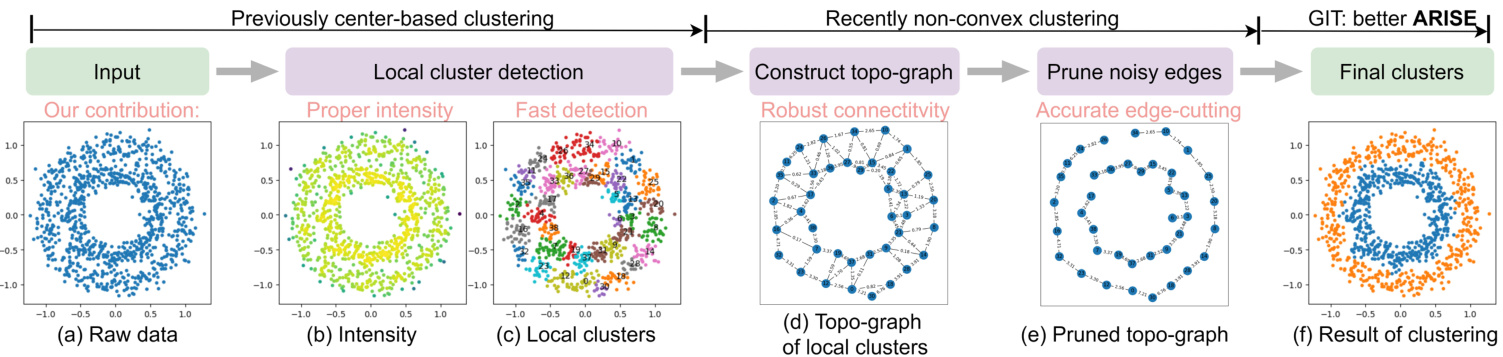

Figure 1: The pipeline of GIT: after the intensity function in (b) has been estimated from the raw data (two rings) in (a), local clusters (c) are detected through the intensity growing process, seeing Section. 2.3. Due to the limited number of colors, different local clusters may share the same color. (d) shows that each local cluster is represented as a vertex in the topological graph, and edges between them are calculated. In (e), noisy edges are pruned and the real global structure (two rings) is revealed. Finally, the clustering task is finished by merging connected local lusters, as shown in (f).

图 1: GIT流程示意图:(a)中原始数据(双环形结构)经过(b)强度函数估计后,通过第2.3节所述的强度增长过程检测出局部簇(c)。由于颜色数量有限,不同局部簇可能使用相同颜色标识。(d)展示每个局部簇被表示为拓扑图中的顶点,并计算它们之间的边连接。(e)经过噪声边剪枝后,真实的全局结构(双环形)得以显现。最终如(f)所示,通过合并相连的局部簇完成聚类任务。

In summary, we propose GIT to achieve better ARISE performance, considering both local and global data structures. Extensive experiments confirm this claim.

总之,我们提出GIT方法以同时兼顾局部和全局数据结构,从而实现更好的ARISE性能。大量实验证实了这一主张。

Table 1: Commonly used symbols

表 1: 常用符号

| 符号 | 描述 |

|---|---|

| D | 数据集,包含 c1, c2, ..., cn。 |

| n | 样本数量。 |

| k | 超参数,用于 kNN 搜索。 |

| ds | 输入特征维度数量。 |

| ri | 第 i 个局部簇的根(或强度峰值)。 |

| R(c) | 包含 c 的局部簇的根。 |

| R | 根点集合。 |

| S(·) | 局部簇之间的相似性。 |

| S(·,·) | 基于局部簇的样本间相似性。 |

| f(c) | c 的强度。 |

| L(λ) | 强度函数的超水平集,阈值为 λ。 |

| Ci(t) | 时间 t 的第 i 个局部簇。 |

2 Method

2 方法

2.1 Motivation, Symbols and Pipeline

2.1 动机、符号与流程

In Fig. 2, we illustrate the motivation that local clusters are point sets in high-intensity regions and they are connected by shared boundaries. And GIT aims to

在图 2 中,我们展示了局部簇作为高密度区域点集并通过共享边界连接的动机。GIT 的目标是

- determine local clusters via the intensity function; 2. construct the connectivity graph of local clusters; 3. cut noisy edges for reading out final clusters, each of which contains several connected local clusters.

- 通过强度函数确定局部聚类;

- 构建局部聚类的连通图;

- 切断噪声边以读取最终聚类,每个最终聚类包含多个相连的局部聚类。

The commonly used symbols and the overall pipeline are shown in Table. 1 and Fig. 1.

常用符号及整体流程如表 1 和图 1 所示。

Figure 2: Motivation with 1D data. In (a), we estimate the intensity function $f(x)$ from samples and partition data into local clusters ${v_{1},v_{2},v_{3}}$ along valleys. Points with the largest intensity are called roots of local clusters, such as $r_{1},r_{2}$ and $r_{3}$ . $(\pmb{x}{1},\pmb{x}{2})$ is a boundary pair connecting $v_{1}$ and $v_{2}$ . (b) shows the topology graph containing local clusters (nodes) and their connectivity (edges), where $e_{1,2}$ is stronger than $^{\textstyle e_{2,3},}$ . In (c), by cutting $^{e_{2,3}}$ , we get final clusters $\mathcal{V}{1}={v_{1},v_{2}}$ and $\mathcal{V}{2}={v_{3}}$ .

图 2: 一维数据动机示意图。(a) 中我们从样本估计强度函数 $f(x)$ 并将数据沿谷值划分为局部聚类 ${v_{1},v_{2},v_{3}}$。强度最大的点称为局部聚类的根,例如 $r_{1},r_{2}$ 和 $r_{3}$。$(\pmb{x}{1},\pmb{x}{2})$ 是连接 $v_{1}$ 和 $v_{2}$ 的边界对。(b) 展示了包含局部聚类(节点)及其连接性(边)的拓扑图,其中 $e_{1,2}$ 的强度大于 $^{\textstyle e_{2,3},}$。(c) 中通过切断 $^{e_{2,3}}$,我们得到最终聚类 $\mathcal{V}{1}={v_{1},v_{2}}$ 和 $\mathcal{V}{2}={v_{3}}$。

2.2 Intensity Function

2.2 强度函数

To identify local clusters, non-parametric kernel density estimation (KDE) is employed to estimate the data distribution. However, the kernel-based (Parzen 1962; Davis, Lii, and Politis 2011) or $\mathbf{k}$ -Nearest Neighborhood(KNN)-based (Lofts ga arden, Que sen berry et al. 1965) KDE either suffers from global over-smoothing or local oscillator y (Yip, Ding, and Chan 2006), both of which are not suitable for GIT. Instead, we use a new intensity function $f(x)$ for better empirical performance:

为识别局部聚类,采用非参数核密度估计(KDE)来估算数据分布。然而基于核方法(Parzen 1962; Davis, Lii和Politis 2011)或k近邻(KNN)方法(Loftsgaarden, Quesenberry等1965)的KDE会面临全局过平滑或局部振荡问题(Yip, Ding和Chan 2006),这两种情况都不适用于GIT。为此,我们采用新的强度函数$f(x)$以获得更好的实证效果:

$$

f(\pmb{x})=\frac{1}{|\mathscr{N}{\pmb{x}}|}\sum_{\pmb{y}\in\mathscr{N}{\pmb{x}}}e^{-d(\pmb{x},\pmb{y})};d(\pmb{x},\pmb{y})=\sqrt{\sum_{j=1}^{s}\frac{(x_{j}-y_{j})^{2}}{\sigma_{j}^{2}}},

$$

$$

f(\pmb{x})=\frac{1}{|\mathscr{N}{\pmb{x}}|}\sum_{\pmb{y}\in\mathscr{N}{\pmb{x}}}e^{-d(\pmb{x},\pmb{y})};d(\pmb{x},\pmb{y})=\sqrt{\sum_{j=1}^{s}\frac{(x_{j}-y_{j})^{2}}{\sigma_{j}^{2}}},

$$

where $\mathcal{N}{x}$ is ${x}$ ’s neighbors for intensity estimation and $\sigma_{j}$ is the standard deviation of the $j$ -th dimension. With the consideration of $\sigma_{j}$ , $d(\cdot,\cdot)$ is robust to the absolute data scale. We estimate the intensity for each point in its neighborhood, avoiding the global over-smoothing. The exponent kernel is helpful to avoid local oscillator y, and we show that $f(x)$ is Lipschitz continuous under a mild condition, seeing Appendix. 5.1.

其中 $\mathcal{N}{x}$ 是 ${x}$ 的邻域用于强度估计,$\sigma_{j}$ 是第 $j$ 维的标准差。通过考虑 $\sigma_{j}$,$d(\cdot,\cdot)$ 对绝对数据尺度具有鲁棒性。我们为每个点在其邻域内估计强度,避免全局过度平滑。指数核有助于避免局部振荡,并且在温和条件下可以证明 $f(x)$ 是 Lipschitz 连续的,详见附录 5.1。

2.3 Local Clusters

2.3 本地集群

A local cluster is a set of points belonging to the same highintensity region. Once the intensity function is given, how to efficiently detect these local clusters is the key problem.

局部聚类是指属于同一高密度区域的一组点。在给定强度函数后,如何高效检测这些局部聚类成为关键问题。

We introduce a fast algorithm that collects points within the same intensity peak by searching along the gradient direction of the intensity function (refer to Fig. 8, Appendix. 5.1). Although similar approaches have been proposed before (Chazal et al. 2013; Rodriguez and Laio 2014), we provide comprehensive time complexity analysis and take connected boundary pairs (introduced in Section. 2.4) of adjacent local clusters into considerations. To clarify this approach, we formally define the root, gradient flow and $l o$ - cal clusters. Then we introduce a density growing process for an efficient algorithmic implementation.

我们提出了一种快速算法,该算法通过沿强度函数的梯度方向搜索来收集同一强度峰内的点(参见附录5.1中的图8)。尽管之前已有类似方法被提出(Chazal等人2013;Rodriguez和Laio 2014),但我们提供了全面的时间复杂度分析,并考虑了相邻局部簇的连通边界对(见第2.4节)。为阐明该方法,我们正式定义了根、梯度流和局部簇,随后介绍了一种高效算法实现的密度增长过程。

Definition. (root, gradient flow, local clusters) Given intensity function $f({\pmb x})$ , the set of roots is $\mathcal{R} = {r|\nabla f(r) = 0,|\nabla^{2}f(r)| < 0,r~\in~\mathcal{X}}$ , i.e., the local maximum. For any point $\textbf{\textit{x}}\in\mathbb{R}^{d_{s}}$ , there is a gradient flow $\pi_{\pmb{x}}:[0,1]\mapsto\mathbb{R}^{d_{s}}$ , starting at $\pi_{\pmb{x}}(0) =~\pmb{x}$ and ending in $\pi_{\pmb{x}}(1)\overset{}{=\i}R(\pmb{x})$ , where $R({\pmb x})\in\mathcal{R}$ . A local cluster a set of points converging to the same root along the gradient flow, e.g., ${\pmb{x}|R(\pmb{x})\in\mathcal{R},\pmb{x}\in\mathcal{X}}$ . To better understand, please see Fig. 8 in Appendix. 5.1.

定义。 (root, gradient flow, local clusters) 给定强度函数 $f({\pmb x})$,其根集为 $\mathcal{R} = {r|\nabla f(r) = 0,|\nabla^{2}f(r)| < 0,r~\in~\mathcal{X}}$,即局部最大值点。对于任意点 $\textbf{\textit{x}}\in\mathbb{R}^{d_{s}}$,存在一条梯度流 $\pi_{\pmb{x}}:[0,1]\mapsto\mathbb{R}^{d_{s}}$,起始于 $\pi_{\pmb{x}}(0) =~\pmb{x}$ 并终止于 $\pi_{\pmb{x}}(1)\overset{}{=\i}R(\pmb{x})$,其中 $R({\pmb x})\in\mathcal{R}$。局部簇是指沿梯度流收敛至同一根点的点集,即 ${\pmb{x}|R(\pmb{x})\in\mathcal{R},\pmb{x}\in\mathcal{X}}$。具体示意图参见附录5.1中的图8。

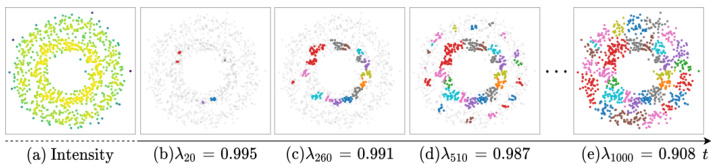

Intensity Growing Process. As shown in Fig. 3, points with higher intensities appear earlier and local clusters grow as the time goes on. We formally define the intensity growing process via a series of super-level sets w.r.t. $\lambda$ :

强度增长过程。如图 3 所示,强度较高的点出现较早,随着时间推移局部簇逐渐增长。我们通过一系列关于 $\lambda$ 的超水平集来正式定义强度增长过程:

$$

L_{\lambda}^{+}={{\pmb x}|f({\pmb x})\geq\lambda}.

$$

$$

L_{\lambda}^{+}={{\pmb x}|f({\pmb x})\geq\lambda}.

$$

We introduce a time variable $t,1\leq t\leq n$ , such that $\lambda_{t-1}\geq$ $\lambda_{t}$ . At time $t$ , the $i$ -th local cluster is

我们引入一个时间变量 $t,1\leq t\leq n$,使得 $\lambda_{t-1}\geq$ $\lambda_{t}$。在时间 $t$,第 $i$ 个本地聚类为

$$

C_{i}(t)={\pmb{x}|R(\pmb{x})=\pmb{r}{i},\pmb{x}\in L_{\lambda_{t}}^{+}},

$$

$$

C_{i}(t)={\pmb{x}|R(\pmb{x})=\pmb{r}{i},\pmb{x}\in L_{\lambda_{t}}^{+}},

$$

where $R(\cdot)$ is the root function, $\mathbf{\nabla}{\mathbf{\phi}}\mathbf{\mathit{r}}{i}$ is the $i$ -th root. It is obvious that $L_{\lambda_{t-1}}^{+}\subset L_{\lambda_{t}}^{+}$ and $L_{\lambda_{n}}^{+}=\chi$ . When $t=n$ , we can get all local clusters $C_{1}(n),C_{2}(n),...$ Next, we introduce a recursive function to efficiently compute $C_{i}(t)$ .

其中 $R(\cdot)$ 是根函数,$\mathbf{\nabla}{\mathbf{\phi}}\mathbf{\mathit{r}}{i}$ 是第 $i$ 个根。显然 $L_{\lambda_{t-1}}^{+}\subset L_{\lambda_{t}}^{+}$ 且 $L_{\lambda_{n}}^{+}=\chi$。当 $t=n$ 时,我们可以得到所有局部聚类 $C_{1}(n),C_{2}(n),...$。接下来,我们引入一个递归函数来高效计算 $C_{i}(t)$。

Figure 3: Example of the intensity growth process. (a) shows the estimated intensities; and (b), (c), (d), (e) show the growing process of local clusters as $\lambda_{t}$ gradually decays. We mark the unborn points in light gray and highlight local clusters. Limited by the number of colors, different local clusters may share the same color.

图 3: 强度增长过程示例。(a) 展示估计强度值;(b)、(c)、(d)、(e) 显示随着 $\lambda_{t}$ 逐渐衰减时局部簇的形成过程。未出生点标记为浅灰色,局部簇以高亮显示。受颜色数量限制,不同局部簇可能共用相同颜色。

Efficient Implementation. For each point $\scriptstyle{\boldsymbol{x}}{i}$ , to determine whether it creates a new local cluster or belongs to existing one, we need to compute its intensity2 and its parent point $P a(\pmb{x}{i})$ along the gradient direction. We determine $P a(\pmb{x}{i})$ as the neighbor which has maximum directional derivative from $\pmb{x}{i}$ to $P a(\pmb{x}_{i})$ , that is

高效实现。对于每个点 $\scriptstyle{\boldsymbol{x}}{i}$,为了确定它是创建新局部聚类还是属于现有聚类,我们需要计算其强度2及其沿梯度方向的父点 $P a(\pmb{x}{i})$。我们将 $P a(\pmb{x}{i})$ 确定为从 $\pmb{x}{i}$ 到 $P a(\pmb{x}_{i})$ 具有最大方向导数的邻居点,即

$$

P a(\pmb{x}{i})=\arg\operatorname*{max}{\pmb{x}{p}\in L_{f(\pmb{x}{i})}^{+}\cap\mathcal{N}{\pmb{x}{i}}}\frac{f(\pmb{x}{p})-f(\pmb{x}{i})}{||\pmb{x}{p}-\pmb{x}_{i}||},

$$

$$

P a(\pmb{x}{i})=\arg\operatorname*{max}{\pmb{x}{p}\in L_{f(\pmb{x}{i})}^{+}\cap\mathcal{N}{\pmb{x}{i}}}\frac{f(\pmb{x}{p})-f(\pmb{x}{i})}{||\pmb{x}{p}-\pmb{x}_{i}||},

$$

where $i=1,2,\ldots,n_{t-1}{}^{3}$ . Eq. 6 means that 1) if $\scriptstyle{\boldsymbol{x}}{i}$ ’s father is itself, $\boldsymbol{\mathbf{\mathit{x}}}{i}$ is the peak point and creates a new local cluster ${\pmb{x}{i}}$ , 2) if $\scriptstyle{\boldsymbol{x}}{i}$ ’s father shares the same root with $C_{i}(t-1)$ , $\scriptstyle{\boldsymbol{x}}{i}$ will be absorbed by $C_{i}(t-1)$ to generate $C_{i}(t)$ , and 3) local clusters which is irrelevant to $\scriptstyle{\boldsymbol{x}}{i}$ remain unchanged. We treat $R(\cdot)$ as a mapping table and update it on-the-fly. $C_{i}(t)$ is obviously recursive (Davis 2013). The time complexity of computing $C_{i}(n)$ is $\mathcal{O}(n\times\mathrm{cost}(P a))^{4}$ , where $\mathsf{c o s t}(P a)$ is $\mathcal{O}(k d_{s}\log n)$ for querying $k$ neighbors.

其中 $i=1,2,\ldots,n_{t-1}{}^{3}$。式6表示:1) 若 $\scriptstyle{\boldsymbol{x}}{i}$ 的父节点是其自身,则 $\boldsymbol{\mathbf{\mathit{x}}}{i}$ 为峰值点并创建新局部聚类 ${\pmb{x}{i}}$;2) 若 $\scriptstyle{\boldsymbol{x}}{i}$ 的父节点与 $C_{i}(t-1)$ 同根,则 $\scriptstyle{\boldsymbol{x}}{i}$ 将被 $C_{i}(t-1)$ 吸收生成 $C_{i}(t)$;3) 与 $\scriptstyle{\boldsymbol{x}}{i}$ 无关的局部聚类保持不变。我们将 $R(\cdot)$ 视为映射表并实时更新。显然 $C_{i}(t)$ 具有递归性 (Davis 2013)。计算 $C_{i}(n)$ 的时间复杂度为 $\mathcal{O}(n\times\mathrm{cost}(P a))^{4}$,其中 $\mathsf{c o s t}(P a)$ 为查询 $k$ 近邻的 $\mathcal{O}(k d_{s}\log n)$。

In summary, by applying Eq. 4-7, we can obtain local clusters $C_{1}\dot{(}n),\dot{C}{2}\dot{(}n),\cdot\cdot\cdot$ with time complexity $\mathscr{O}(d_{s}n\log n)$ . For simplicity, we write $C_{i}(n)$ as $C_{i}$ in later sections. The detailed local cluster detection algorithm 1 can be found in the Appendix.

总之,通过应用公式4-7,我们可以在时间复杂度$\mathscr{O}(d_{s}n\log n)$下获得局部聚类$C_{1}\dot{(}n),\dot{C}{2}\dot{(}n),\cdot\cdot\cdot$。为简化表述,后文将$C_{i}(n)$记为$C_{i}$。详细的局部聚类检测算法1见附录。

2.4 Construct Topo-graph

2.4 构建拓扑图

Instead of using local clusters as final results (Comaniciu and Meer 2002; Chazal et al. 2013; Rodriguez and Laio 2014; Jiang, Jang, and Kpotufe 2018), we further consider connect iv i ties between them for improving the results. For example, local clusters that belong to the same class usually have stronger connect iv i ties. Meanwhile, there is a risk that noisy connect iv i ties may damage performance. We manage local clusters and their connect iv i ties with a graph, namely topo-graph, and the essential problem is cutting noisy edges.

我们并未将局部聚类作为最终结果 (Comaniciu and Meer 2002; Chazal et al. 2013; Rodriguez and Laio 2014; Jiang, Jang, and Kpotufe 2018),而是进一步考虑它们之间的连接性以优化结果。例如,属于同一类别的局部聚类通常具有更强的连接性。然而,噪声连接可能损害性能。我们通过图结构(即拓扑图)管理局部聚类及其连接性,核心问题在于剔除噪声边。

Connectivity. According to UPGMA standing for unweighted pair group method using arithmetic averages (Jain and Dubes 1988; Gan, Ma, and Wu 2020), the similarity $S(\cdot,\cdot)$ between $C_{i},C_{j}\cup C_{k}$ can be obtained from the LanceWilliams formula:

连通性。根据代表算术平均非加权配对组方法的UPGMA (Jain and Dubes 1988; Gan, Ma, and Wu 2020),$C_{i},C_{j}\cup C_{k}$之间的相似度$S(\cdot,\cdot)$可通过LanceWilliams公式获得:

$$

\begin{array}{l}{{S(C_{i},C_{j}\cup C_{k})=}}\ {{\displaystyle\frac{|C_{j}|}{|C_{j}|+|C_{k}|}S(C_{i},C_{j})+\frac{|C_{k}|}{|C_{j}|+|C_{k}|}S(C_{i},C_{k}),}}\end{array}

$$

$$

\begin{array}{l}{{S(C_{i},C_{j}\cup C_{k})=}}\ {{\displaystyle\frac{|C_{j}|}{|C_{j}|+|C_{k}|}S(C_{i},C_{j})+\frac{|C_{k}|}{|C_{j}|+|C_{k}|}S(C_{i},C_{k}),}}\end{array}

$$

and

和

$$

S(C_{i},C_{j})=\frac{1}{|C_{i}||C_{j}|}\sum_{{\pmb x}\in C_{i},{\pmb y}\in C_{j}}s_{l}({\pmb x},{\pmb y}),

$$

$$

S(C_{i},C_{j})=\frac{1}{|C_{i}||C_{j}|}\sum_{{\pmb x}\in C_{i},{\pmb y}\in C_{j}}s_{l}({\pmb x},{\pmb y}),

$$

Note that $s_{l}({\pmb x},{\pmb y})$ is non-zero only if ${x}$ and $\textit{\textbf{y}}$ are mutual neighborhood and belong to different local clusters, in which case $(x,y)$ is a boundary pair of adjacent local clusters, e.g., ${x_{1}}$ and $\mathbf{\delta}{\mathbf{\delta}{x_{2}}}$ in Fig. 2. Fortunately, all the boundary pairs among local clusters can be obtained from previous intensity growing process, without further computation.

注意,$s_{l}({\pmb x},{\pmb y})$ 仅在 ${x}$ 和 $\textit{\textbf{y}}$ 互为邻域且属于不同局部聚类时非零,此时 $(x,y)$ 是相邻局部聚类的边界对,例如图 2 中的 ${x{1}}$ 和 $\mathbf{\delta}{\mathbf{\delta}{x_{2}}}$。幸运的是,所有局部聚类间的边界对都可以通过先前的强度增长过程获得,无需额外计算。

Topo-graph. We construct the topo-graph as $G=(V,E)$ , where $v_{i}$ is the $i$ -th local cluster $C_{i}$ and $e_{i,j}=S(C_{i},C_{j})$ .

拓扑图 (Topo-graph)。我们将拓扑图构建为 $G=(V,E)$,其中 $v_{i}$ 表示第 $i$ 个局部聚类 $C_{i}$,$e_{i,j}=S(C_{i},C_{j})$。

In summary, we introduce a well-defined connectivity between local clusters using connected boundary pairs. The detailed topo-graph construction algorithm 3 can be found in the Appendix, whose time complexity is $\mathcal{O}(k n)$ .

总之,我们通过连接边界对在局部聚类之间建立了明确的连通性。详细的拓扑图构建算法3可在附录中找到,其时间复杂度为 $\mathcal{O}(k n)$。

2.5 Edges Cutting and Final Clusters

2.5 边缘切割与最终聚类

To capture global data structures, connected local clusters are merged as final clusters, as shown in Fig. 1(e, f). However, the original topo-graph is usually dense, indicating redundant (or noisy) edges which lead to trivial solutions, e.g., all the local clusters are connected, and there is one final cluster. In this case, cutting noisy edges is necessary and the simplest way is to set a threshold to filter weak edges or using spectral clustering. However, we find that these methods are either sensitive, hard to tune or inaccurate, which severely limits the widely usage of similar approaches. Is there a robust and accurate way to filter noisy edges using available prior knowledge?

为捕捉全局数据结构,相连的局部聚类会被合并为最终聚类,如图1(e, f)所示。然而原始拓扑图通常较为稠密,存在冗余(或噪声)边,这会导致平凡解,例如所有局部聚类相连而仅形成一个最终聚类。此时需剪除噪声边,最简单的方法是设定阈值过滤弱边或使用谱聚类。但我们发现这些方法要么敏感难调参,要么精度不足,严重制约了同类方法的广泛应用。是否存在利用现有先验知识过滤噪声边的鲁棒且准确的方法?

Auto Edge Filtering. To make GIT more easy to use and accurate, we automatically filter edges with the help of pior class proportion. Firstly, we define a metric about final clusters by comparing the predicted and pior proportions, such that the higher the proportion score, the better the result (seeing the next paragraph). We sort edges with connectivity strengths from high to low, in which order we merge two end local clusters for each edge if this operation gets a higher score. In this process, edges that cannot increase the metric score are regarded as noisy edges and will be cutted.

自动边缘过滤。为使GIT更易用且精准,我们借助先验类别比例自动过滤边缘。首先,通过比较预测比例与先验比例定义最终聚类的评估指标(比例分数越高结果越优,详见下段)。按连接强度从高到低排序边缘,依次合并两端局部聚类(当该操作能提高分数时)。在此过程中,无法提升指标分数的边缘被视为噪声边缘并被切除。

Metric about Final Clusters. Let $\pmb{p}=[p_{1},p_{2},\dots,p_{m}]$ and $\pmb q=[q_{1},q_{2},\dots,q_{m^{\prime}}]$ be predicted and predefined class proportions, where $\begin{array}{r}{\sum_{i=1}^{m}p_{i}=1,\sum_{i}^{m^{\prime}}q_{i}=1}\end{array}$ . We take the similarity between ${p}$ and $\pmb q$ as t he aforementioned metric score. Because $m$ and $m^{\prime}$ may not be equal and the order of elements in $_{p}$ and $\pmb q$ is random, it is not feasible to use conventional Lp-norm. Instead, we use Wasser stein Distance to measure the similarity and define the metric $\mathcal{M}(\pmb{p},\pmb{q})$ as:

关于最终聚类的度量。令 $\pmb{p}=[p_{1},p_{2},\dots,p_{m}]$ 和 $\pmb q=[q_{1},q_{2},\dots,q_{m^{\prime}}]$ 分别表示预测和预定义的类别比例,其中 $\begin{array}{r}{\sum_{i=1}^{m}p_{i}=1,\sum_{i}^{m^{\prime}}q_{i}=1}\end{array}$。我们将 $\pmb{p}$ 与 $\pmb q$ 之间的相似度作为前述度量得分。由于 $m$ 和 $m^{\prime}$ 可能不相等,且 $\pmb{p}$ 和 $\pmb q$ 中元素的顺序是随机的,使用传统的 Lp 范数不可行。因此,我们采用 Wasserstein 距离来衡量相似性,并将度量 $\mathcal{M}(\pmb{p},\pmb{q})$ 定义为:

$$

\begin{array}{l}{{\mathcal{M}}({\pmb{p}},{\pmb q})=\mathrm{Exp}(-\underset{\gamma_{i,j}\geq0}{m i n}\displaystyle\sum_{i,j}\gamma_{i,j})}\ {{s.t.\qquad\displaystyle\sum_{j}\gamma_{i,j}=p_{i};\quad\displaystyle\sum_{i}\gamma_{i,j}=q_{j},}}\end{array}

$$

$$

\begin{array}{l}{{\mathcal{M}}({\pmb{p}},{\pmb q})=\mathrm{Exp}(-\underset{\gamma_{i,j}\geq0}{m i n}\displaystyle\sum_{i,j}\gamma_{i,j})}\ {{s.t.\qquad\displaystyle\sum_{j}\gamma_{i,j}=p_{i};\quad\displaystyle\sum_{i}\gamma_{i,j}=q_{j},}}\end{array}

$$

where the original Wasser stein Distance is $\begin{array}{r}{m i n\sum_{i,j}\gamma_{i,j}d_{i,j},\tilde{\gamma}{i,j}}\ {\gamma_{i,j}\geq0\sum_{i,j}\gamma_{i,j}d_{i,j},}\end{array}$ is the trans pot ation cost from $i$ to $j$ , and we set $d_{i,j}=1$ . Because $\dim(p_{i})=\dim(q_{j})=1$ , Eq. 11 can be efficiently calculated by linear programming.

原始Wasserstein距离为 $\begin{array}{r}{m i n\sum_{i,j}\gamma_{i,j}d_{i,j},\tilde{\gamma}{i,j}}\ {\gamma_{i,j}\geq0\sum_{i,j}\gamma_{i,j}d_{i,j},}\end{array}$ ,其中 $d_{i,j}$ 表示从 $i$ 到 $j$ 的传输成本,我们设 $d_{i,j}=1$ 。由于 $\dim(p_{i})=\dim(q_{j})=1$ ,公式11可通过线性规划高效计算。

In summary, we introduce a new metric of class proportion and use it to guide the process of edge filtering, then merge connected local clusters as final clusters. Compared to threshold-based or spectral-based edge cutting, this algorithm considers available prior knowledge to reduce the difficulty of parameter tuning or provide higher accuracy. By default, we set the same proportion for each category if only the number of classes is known. Besides, it is promising for dealing with sample imbalances if we know the actual proportion, seeing Section. 3.1 for ex peri metal evidence.

总结来说,我们引入了一种新的类别比例度量指标,并利用其指导边缘过滤过程,最终将连通的局部聚类合并为最终聚类。与基于阈值或谱分析的边缘切割方法相比,该算法通过整合先验知识来降低参数调优难度或提供更高精度。默认情况下,若仅已知类别数量,我们会为每个类别设置相同比例。此外,若已知实际类别分布比例,该方法在处理样本不平衡问题时表现优异 (详见第3.1节实验验证)。

3 Experiments

3 实验

In this section, we conduct experiments on a series of synthetic, small-scale and large-scale datasets to study the Accuracy, Robustness to shapes, noises and scales, Speed and Easy to use, while the Interpret ability of GIT has been demonstrated in previous sections. As an extension, we further investigate how PCA and AE (auto encoder) work with GIT to further improve the accuracy.

在本节中,我们通过一系列合成数据集、小规模和大规模数据集进行实验,研究GIT在准确度、对形状/噪声/尺度的鲁棒性、速度及易用性方面的表现(其可解释性已在前面章节得到验证)。作为扩展,我们进一步探究PCA和AE(auto encoder)如何与GIT协同工作以提升准确度。

Baselines. GIT is compared to density-based methods, such as FSFDP (Rodriguez and Laio 2014), HDBSCAN (McInnes and Healy 2017), Quickshift $^{++}$ (Jiang, Jang, and

基线方法。GIT与基于密度的方法进行比较,例如FSFDP (Rodriguez and Laio 2014)、HDBSCAN (McInnes and Healy 2017)、Quickshift$^{++}$ (Jiang, Jang和

Kpotufe 2018), SpectACl (Hess et al. 2019) and DPA (d’Errico et al. 2021). Besides, classical Spectral Clustering and $k$ -means $^{++}$ (Pedregosa et al. $201\bar{1})^{5}$ . Since there is little work comprehensively comparing recent clustering algorithms, our results can provide a reliable baseline for subsequent researches. For simplicity, we use these abbreviations: FSF (FSFDP), HDB (HDBSCAN), QSP (QuickshiftPP), SA (SpectACI), DPA (DPA), SC (Spectral Clustering) and KM ( $k$ -means $++$ ).

Kpotufe 2018)、SpectACl (Hess et al. 2019) 和 DPA (d'Errico et al. 2021)。此外还包括经典谱聚类和 $k$-means$^{++}$ (Pedregosa et al. $201\bar{1})^{5}$。由于目前缺乏对近期聚类算法的全面比较研究,我们的结果可为后续研究提供可靠基线。为简洁起见,我们使用以下缩写:FSF (FSFDP)、HDB (HDBSCAN)、QSP (QuickshiftPP)、SA (SpectACI)、DPA (DPA)、SC (谱聚类) 和 KM ($k$-means$++$)。

Metrics. We report F1-score (Evett and Spiehler 1999), Adjusted Rand Index (ARI) (Hubert and Arabie 1985) and Normalized Mutual Information (NMI) (Vinh, Epps, and Bailey 2010) to measure the clustering results. F1-score evaluates both each class’s accuracy and the bias of the model, ranging from 0 to 1. ARI is a measure of agreement between partitions, ranging from $-1$ to 1, and if it is less than 0, the model does not work in the task. NMI is a normalization of the Mutual Information (MI) score, where the result is scaled to range of 0 (no mutual information) and 1 (perfect correlation). Besides, because HDBSCAN and DPA perfer to drop some valid points as noises, we also consider