Automated Concatenation of Embeddings for Structured Prediction

Abstract

Pretrained contextualized embeddings are powerful word representations for structured prediction tasks. Recent work found that better word representations can be obtained by concatenating different types of embeddings. However, the selection of embeddings that forms the best concatenated representation usually varies with the task and the collection of candidate embeddings, and the ever-increasing number of embedding types makes the problem more difficult. In this paper, we propose Automated Concatenation of Embeddings (ACE) to automate the process of finding better concatenations of embeddings for structured prediction tasks, based on a formulation inspired by recent progress on neural architecture search. Specifically, a controller alternately samples a concatenation of embeddings, according to its current belief of the effectiveness of individual embedding types in consideration for a task, and updates the belief based on a reward. We follow strategies in reinforcement learning to optimize the parameters of the controller and compute the reward based on the accuracy of a task model, which is fed with the sampled concatenation as input and trained on a task dataset. Empirical results on 6 tasks and 21 datasets show that our approach outperforms strong baselines and achieves state-of-the-art performance with fine-tuned embeddings in all the evaluations.

1 Introduction

Recent developments on pretrained contextualized embeddings have significantly improved the performance of structured prediction tasks in natural language processing. Approaches based on contextualized embeddings, such as ELMo (Peters et al., 2018), Flair (Akbik et al., 2018), BERT (Devlin et al., 2019), and XLM-R (Conneau et al., 2020), have been consistently raising the state of the art for various structured prediction tasks. Concurrently, research has also shown that word representations based on the concatenation of multiple pretrained contextualized embeddings and traditional non-contextualized embeddings (such as word2vec (Mikolov et al., 2013) and character embeddings (Santos and Zadrozny, 2014)) can further improve performance (Peters et al., 2018; Akbik et al., 2018; Straková et al., 2019; Wang et al., 2020b). Given the ever-increasing number of embedding learning methods that operate on different granularities (e.g., word, subword, or character level) and with different model architectures, choosing the best embeddings to concatenate for a specific task becomes non-trivial, and exploring all possible concatenations can be prohibitively demanding in computing resources.

Neural architecture search (NAS) is an active area of research in deep learning to automatically search for better model architectures, and has achieved state-of-the-art performance on various tasks in computer vision, such as image classification (Real et al., 2019), semantic segmentation (Liu et al., 2019a), and object detection (Ghiasi et al., 2019). In natural language processing, NAS has been successfully applied to find better RNN structures (Zoph and Le, 2017; Pham et al., 2018b) and recently better transformer structures (So et al., 2019; Zhu et al., 2020). In this paper, we propose Automated Concatenation of Embeddings (ACE) to automate the process of finding better concatenations of embeddings for structured prediction tasks. ACE is formulated as an NAS problem. In this approach, an iterative search process is guided by a controller based on its belief that models the effectiveness of individual embedding candidates in consideration for a specific task. At each step, the controller samples a concatenation of embeddings according to the belief model and then feeds the concatenated word representations as inputs to a task model, which in turn is trained on the task dataset and returns the model accuracy as a reward signal to update the belief model. We use the policy gradient algorithm (Williams, 1992) in reinforcement learning (Sutton and Barto, 1992) to solve the optimization problem. In order to improve the efficiency of the search process, we also design a special reward function by accumulating all the rewards based on the transformation between the current concatenation and all previously sampled concatenations.

Our approach is different from previous work on NAS in the following aspects:

Empirical results show that ACE outperforms strong baselines. Furthermore, when ACE is applied to concatenate pretrained contextualized embeddings fine-tuned on specific tasks, we can achieve state-of-the-art accuracy on 6 structured prediction tasks including Named Entity Recognition (Sundheim, 1995), Part-Of-Speech tagging (DeRose, 1988), chunking (Tjong Kim Sang and Buchholz, 2000), aspect extraction (Hu and Liu, 2004), syntactic dependency parsing (Tesnière, 1959) and semantic dependency parsing (Oepen et al., 2014) over 21 datasets. Besides, we also analyze the advantage of ACE and reward function design over the baselines and show the advantage of ACE over ensemble models.

2 Related Work

2.1 Embeddings

Non-contextualized embeddings, such as word2vec (Mikolov et al., 2013), GloVe (Pennington et al., 2014), and fastText (Bojanowski et al., 2017), benefit many NLP tasks. Character embeddings (Santos and Zadrozny, 2014) are trained together with the task and applied in many structured prediction tasks (Ma and Hovy, 2016; Lample et al., 2016; Dozat and Manning, 2018). For pretrained contextualized embeddings, ELMo (Peters et al., 2018), a pretrained contextualized word embedding generated with multiple Bidirectional LSTM layers, significantly outperforms previous state-of-the-art approaches on several NLP tasks. Following this idea, Akbik et al. (2018) proposed Flair embeddings, which are a kind of contextualized character embeddings and achieved strong performance in sequence labeling tasks. Recently, Devlin et al. (2019) proposed BERT, which encodes contextualized sub-word information with Transformers (Vaswani et al., 2017) and significantly improves the performance on many NLP tasks. Much research, such as RoBERTa (Liu et al., 2019c), has focused on improving the BERT model's performance through stronger masking strategies. Moreover, multilingual contextualized embeddings have become popular. Pires et al. (2019) and Wu and Dredze (2019) showed that Multilingual BERT (M-BERT) could learn a good multilingual representation effectively with strong cross-lingual zero-shot transfer performance in various tasks. Conneau et al. (2020) proposed XLM-R, which is trained on a larger multilingual corpus and significantly outperforms M-BERT on various multilingual tasks.

2.2 Neural Architecture Search

Recent progress on deep learning has shown that network architecture design is crucial to model performance. However, designing a strong neural architecture for each task requires enormous effort, a high level of knowledge, and experience in the task domain. Therefore, automatic design of neural architectures is desired. A crucial part of NAS is search space design, which defines the discoverable NAS space. Previous work (Baker et al., 2017; Zoph and Le, 2017; Xie and Yuille, 2017) designs a global search space (Elsken et al., 2019) which incorporates structures from hand-crafted architectures. For example, Zoph and Le (2017) designed a chain-structured search space with skip connections. The global search space usually has a considerable degree of freedom. For example, the approach of Zoph and Le (2017) takes 22,400 GPU-hours to search on the CIFAR-10 dataset. Based on the observation that existing hand-crafted architectures contain repeated structures (Szegedy et al., 2016; He et al., 2016; Huang et al., 2017), Zoph et al. (2018) explored a cell-based search space which can reduce the search time to 2,000 GPU-hours.

In recent NAS research, reinforcement learning and evolutionary algorithms are the most common approaches. In reinforcement learning, the agent's actions are the generation of neural architectures and the action space is identical to the search space. Previous work usually applies an RNN layer (Zoph and Le, 2017; Zhong et al., 2018; Zoph et al., 2018) or uses a Markov Decision Process (Baker et al., 2017) to decide the hyper-parameters of each structure and the input order of each structure. Evolutionary algorithms have been applied to architecture search for many decades (Miller et al., 1989; Angeline et al., 1994; Stanley and Miikkulainen, 2002; Floreano et al., 2008; Jozefowicz et al., 2015). The algorithm repeatedly generates new populations through recombination and mutation operations and selects survivors through competition among the population. Recent work with evolutionary algorithms differs in the method of parent/survivor selection and population generation. For example, Real et al. (2017), Liu et al. (2018a), Wistuba (2018) and Real et al. (2019) applied tournament selection (Goldberg and Deb, 1991) for the parent selection, while Xie and Yuille (2017) kept all parents. Suganuma et al. (2017) and Elsken et al. (2018) chose the best model, while Real et al. (2019) chose several latest models as survivors.

3 Automated Concatenation of Embeddings

In ACE, a task model and a controller interact with each other repeatedly. The task model predicts the task output, while the controller searches for better embedding concatenation as the word representation for the task model to achieve higher accuracy.

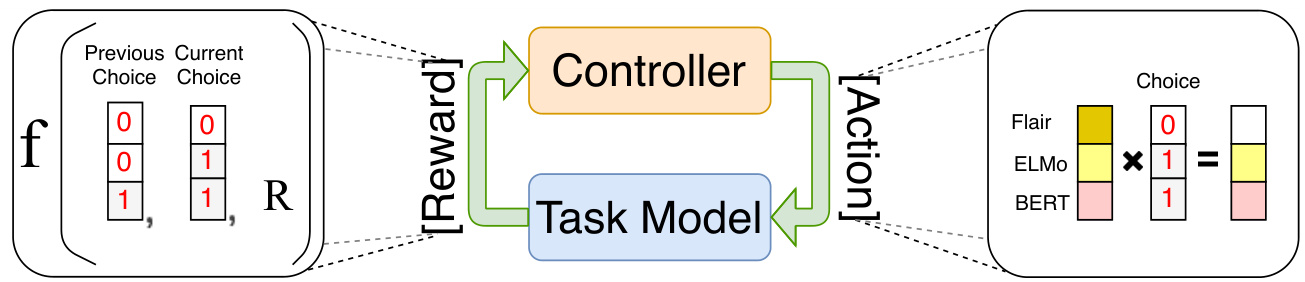

Given an embedding concatenation generated from the controller, the task model is trained over the task data and returns a reward to the controller. The controller receives the reward to update its parameter and samples a new embedding concatenation for the task model. Figure 1 shows the general architecture of our approach.

3.1 Task Model

For the task model, we focus on sequence-structured and graph-structured outputs. Given a structured prediction task with input sentence $\pmb{x}$ and structured output $\pmb{y}$, we can calculate the probability distribution $P(\pmb{y}|\pmb{x})$ by:

$$

P(\pmb{y}|\pmb{x})=\frac{\exp\left(\operatorname{Score}(\pmb{x},\pmb{y})\right)}{\sum_{\pmb{y}^{\prime}\in\mathbb{Y}(\pmb{x})}\exp\left(\operatorname{Score}(\pmb{x},\pmb{y}^{\prime})\right)}

$$

where $\mathbb{Y}(\pmb{x})$ represents all possible output structures given the input sentence $\pmb{x}$. Depending on the structured prediction task, the output structure $\pmb{y}$ can be label sequences, trees, graphs or other structures. In this paper, we use sequence-structured and graph-structured outputs as two exemplar structured prediction tasks. We use the BiLSTM-CRF model (Ma and Hovy, 2016; Lample et al., 2016) for sequence-structured outputs and the BiLSTM-Biaffine model (Dozat and Manning, 2017) for graph-structured outputs:

$$

\begin{aligned}
P^{\mathrm{seq}}(\pmb{y}|\pmb{x})&=\text{BiLSTM-CRF}(\pmb{V},\pmb{y})\\
P^{\mathrm{graph}}(\pmb{y}|\pmb{x})&=\text{BiLSTM-Biaffine}(\pmb{V},\pmb{y})
\end{aligned}

$$

where $\pmb{V}=[\pmb{v}_{1};\cdots;\pmb{v}_{n}]$, $\pmb{V}\in\mathbb{R}^{d\times n}$, is a matrix of the word representations for the input sentence $\pmb{x}$ with $n$ words, and $d$ is the hidden size of the concatenation of all embeddings. The word representation $\pmb{v}_{i}$ of the $i$-th word is a concatenation of $L$ types of word embeddings:

$$

\pmb{v}_{i}^{l}=\mathrm{embed}_{i}^{l}(\pmb{x});~\pmb{v}_{i}=[\pmb{v}_{i}^{1};\pmb{v}_{i}^{2};\ldots;\pmb{v}_{i}^{L}]

$$

where $\mathrm{embed}^{l}$ is the model of the $l$-th embedding, $\pmb{v}_{i}\in\mathbb{R}^{d}$, $\pmb{v}_{i}^{l}\in\mathbb{R}^{d^{l}}$, and $d^{l}$ is the hidden size of $\mathrm{embed}^{l}$.
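The concatenation above can be illustrated with a minimal NumPy sketch. The embedding sizes and the random matrices standing in for $\mathrm{embed}^{l}(\pmb{x})$ are hypothetical, and the matrix is stored as $n\times d$ (one row per word), i.e. the transpose of $\pmb{V}$ as written in the text.

```python
import numpy as np

rng = np.random.default_rng(0)

n = 5                      # number of words in the sentence
dims = [4, 3, 6]           # hypothetical hidden sizes d^1, d^2, d^3 (L = 3)

# Stand-ins for embed^l(x): each is an (n, d^l) matrix of word vectors.
embeddings = [rng.normal(size=(n, d_l)) for d_l in dims]

# v_i = [v_i^1; v_i^2; ...; v_i^L] for every word i, stacked row-wise.
V = np.concatenate(embeddings, axis=1)

print(V.shape)  # (5, 13): n words, d = 4 + 3 + 6
```

Each block of columns in `V` is exactly one embedding type, which is what later allows whole blocks to be masked out.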

3.2 Search Space Design

The neural architecture search space can be represented as a set of neural networks (Elsken et al., 2019). A neural network can be represented as a directed acyclic graph with a set of nodes and directed edges. Each node represents an operation, while each edge represents the inputs and outputs between these nodes. In ACE, we represent each embedding candidate as a node. The input to the nodes is the input sentence $\pmb{x}$, and the outputs are the embeddings $\pmb{v}^{l}$. Since we concatenate the embeddings as the word representation of the task model, there is no connection between nodes in our search space. Therefore, the search space can be significantly reduced. For each node, there are many options to extract word features. Taking BERT embeddings as an example, Devlin et al. (2019) concatenated the last four layers as word features while Kondratyuk and Straka (2019) applied a weighted sum of all twelve layers. However, the empirical results (Devlin et al., 2019) do not show a significant difference in accuracy. We follow the typical usage for each embedding to further reduce the search space. As a result, each embedding only has a fixed operation and the resulting search space contains $2^{L}-1$ possible combinations of nodes.
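To make the size of this search space concrete, the following sketch enumerates every non-empty selection of $L$ embedding candidates. The function name `enumerate_concatenations` is hypothetical and only serves the illustration.

```python
from itertools import product

def enumerate_concatenations(num_embeddings):
    """Yield every non-empty binary selection over the embedding candidates."""
    for mask in product((0, 1), repeat=num_embeddings):
        if any(mask):          # skip the all-zero selection (no embedding)
            yield mask

L = 4
space = list(enumerate_concatenations(L))
print(len(space))  # 15 == 2**4 - 1
```

Exhaustive enumeration is only feasible for small $L$, since each selection requires training a task model; this is the cost the controller-based search is meant to avoid.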

In NAS, weight sharing (Pham et al., 2018a) shares the weights of structures when training different neural architectures to reduce the training cost. In comparison, we fix the weights of the pretrained embedding candidates in ACE except for the character embeddings. Instead of sharing the parameters of the embeddings, we share the parameters of the task models at each step of search. However, the hidden size of the word representation varies over the concatenations, making the weight sharing of structured prediction models difficult. Instead of deciding whether each node exists in the graph, we keep all nodes in the search space and add an additional operation for each node to indicate whether the embedding is masked out. To represent the selected concatenation, we use a binary vector $\pmb{a}=[a_{1},\cdots,a_{l},\cdots,a_{L}]$ as a mask to mask out the embeddings which are not selected:

$$

\pmb{v}_{i}=[\pmb{v}_{i}^{1}a_{1};\ldots;\pmb{v}_{i}^{l}a_{l};\ldots;\pmb{v}_{i}^{L}a_{L}]

$$

where $a_{l}$ is a binary variable. Since the input $V$ is applied to a linear layer in the BiLSTM layer, multiplying the mask with the embeddings is equivalent to directly concatenating the selected embeddings:

$$

\pmb{W}^{\top}\pmb{v}_{i}=\sum_{l=1}^{L}\pmb{W}_{l}^{\top}\pmb{v}_{i}^{l}a_{l}

$$

where $\pmb{W}=[\pmb{W}_{1};\pmb{W}_{2};\ldots;\pmb{W}_{L}]$, $\pmb{W}\in\mathbb{R}^{d\times h}$ and $\pmb{W}_{l}\in\mathbb{R}^{d^{l}\times h}$. Therefore, the model weights can be shared after applying the embedding mask to the concatenation of all embedding candidates. Another benefit of our search space design is that we can remove the unused embedding candidates and the corresponding weights in $\pmb{W}$ for a lighter task model after the best concatenation is found by ACE.
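The equivalence between masking and direct concatenation can be checked numerically. The sketch below uses hypothetical sizes and random values; it compares applying the full weight matrix to the masked concatenation against applying only the selected weight blocks to the selected embeddings.

```python
import numpy as np

rng = np.random.default_rng(0)
dims = [4, 3, 6]                 # hypothetical d^1..d^L
h = 8                            # hypothetical output size of the linear layer
a = np.array([1, 0, 1])          # keep embeddings 1 and 3, mask out 2

v_parts = [rng.normal(size=d_l) for d_l in dims]          # v_i^l
W_parts = [rng.normal(size=(d_l, h)) for d_l in dims]     # W_l
W = np.concatenate(W_parts, axis=0)                       # W in R^{d x h}

# Left side: mask every block, concatenate, then apply the full linear layer.
v_masked = np.concatenate([v * a_l for v, a_l in zip(v_parts, a)])
lhs = W.T @ v_masked

# Right side: concatenate only the selected blocks and use only their weights.
keep = [l for l, a_l in enumerate(a) if a_l == 1]
v_sel = np.concatenate([v_parts[l] for l in keep])
W_sel = np.concatenate([W_parts[l] for l in keep], axis=0)
rhs = W_sel.T @ v_sel

print(np.allclose(lhs, rhs))  # True
```

The second computation also shows the pruning step mentioned above: once the best mask is known, the rows of the weight matrix belonging to masked embeddings can be dropped without changing the output.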

3.3 Searching in the Space

In the controller's sampling distribution $P^{\mathrm{ctrl}}(\pmb{a};\pmb{\theta})$, $\sigma$ is the sigmoid function. Given the mask, the task model is trained until convergence and returns an accuracy $R$ on the development set. As the accuracy cannot be back-propagated to the controller, we use a reinforcement learning algorithm for optimization. The accuracy $R$ is used as the reward signal to train the controller. The controller's target is to maximize the expected reward $J(\pmb\theta)=\mathbb{E}_{P^{\mathrm{ctrl}}(\pmb a;\pmb\theta)}[R]$ through the policy gradient method (Williams, 1992). In our approach, since calculating the exact expectation is intractable, the gradient of $J(\pmb\theta)$ is approximated by sampling only one selection following the distribution $P^{\mathrm{ctrl}}(\pmb{a};\pmb{\theta})$ at each step for training efficiency:

$$

\nabla_{\boldsymbol{\theta}}J(\boldsymbol{\theta})\approx\sum_{l=1}^{L}\nabla_{\boldsymbol{\theta}}\log P_{l}^{\mathrm{ctrl}}(a_{l};\theta_{l})(R-b)

$$

where $b$ is the baseline function to reduce the high variance of the update function. The baseline usually can be the highest accuracy during the search process. Instead of merely using the highest accuracy of development set over the search process as the baseline, we design a reward function on how each embedding candidate contributes to accuracy change by utilizing all searched concatenations’ development scores. We use a binary vector $\left|\pmb{a}^{t}-\pmb{a}^{i}\right|$ to represent the change between current embedding concatenation ${\pmb a}^{t}$ at current time step $t$ and ${\pmb a}^{i}$ at previous time step $i$ . We then define the reward function as:

$$

\pmb{r}^{t}=\sum_{i=1}^{t-1}(R_{t}-R_{i})|\pmb{a}^{t}-\pmb{a}^{i}|

$$

Figure 1: The main paradigm of our approach is shown in the middle, where an example of reward function is represented in the left and an example of a concatenation action is shown in the right.

where $\pmb{r}^{t}$ is a vector with length $L$ representing the reward of each embedding candidate, and $R_{t}$ and $R_{i}$ are the rewards at time steps $t$ and $i$. When the Hamming distance of two concatenations $\mathrm{Hamm}(\pmb{a}^{t},\pmb{a}^{i})$ gets larger, the changed candidates' contribution to the accuracy becomes less noticeable. The controller may be misled to reward a candidate that is not actually helpful. To alleviate this issue, we apply a discount factor that reduces the reward for two concatenations with a large Hamming distance. Our final reward function is:

$$

\pmb{r}^{t}=\sum_{i=1}^{t-1}(R_{t}-R_{i})\gamma^{\mathrm{Hamm}(\pmb{a}^{t},\pmb{a}^{i})-1}|\pmb{a}^{t}-\pmb{a}^{i}|

$$

where $\gamma\in(0,1)$. The policy gradient approximation (Eq. 4) is then reformulated as:

$$

\nabla_{\boldsymbol{\theta}}J_{t}(\boldsymbol{\theta})\approx\sum_{l=1}^{L}\nabla_{\boldsymbol{\theta}}\log P_{l}^{\mathrm{ctrl}}(a_{l}^{t};\theta_{l})r_{l}^{t}

$$
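The discounted reward and the reformulated gradient can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it assumes each $a_{l}$ is drawn independently with $P_{l}^{\mathrm{ctrl}}(a_{l}=1;\theta_{l})=\sigma(\theta_{l})$, which is what the per-embedding sum of log-probabilities and the sigmoid suggest; the value $\gamma=0.5$ and the example accuracies are made up.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def reward_vector(a_t, R_t, history, gamma=0.5):
    """r^t = sum_i (R_t - R_i) * gamma^(Hamm(a^t, a^i) - 1) * |a^t - a^i|."""
    a_t = np.asarray(a_t)
    r = np.zeros(len(a_t))
    for a_i, R_i in history:
        diff = np.abs(a_t - np.asarray(a_i))
        hamm = int(diff.sum())
        if hamm == 0:          # identical concatenation: nothing changed
            continue
        r += (R_t - R_i) * gamma ** (hamm - 1) * diff
    return r

def controller_gradient(theta, a_t, r_t):
    """Estimate sum_l grad log P_l(a_l^t; theta_l) * r_l^t, one entry per theta_l.

    Assumes P_l(a_l = 1) = sigmoid(theta_l), so that
    d/d theta_l log P_l(a_l) = a_l - sigmoid(theta_l).
    """
    return (np.asarray(a_t) - sigmoid(theta)) * r_t

# Two earlier concatenations with made-up development accuracies.
history = [((1, 1, 1), 0.90), ((1, 0, 1), 0.92)]
a_t, R_t = (1, 0, 0), 0.91

r = reward_vector(a_t, R_t, history)
grad = controller_gradient(np.zeros(3), a_t, r)
print(r)     # approximately [0, 0.005, -0.005]
print(grad)  # approximately [0, -0.0025, 0.0025]
```

In this example the third embedding receives a negative reward for being dropped, so a gradient ascent step raises $\theta_{3}$ and makes it more likely to be selected next time, while the first embedding, which never changed, receives no signal.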

3.4 Training

To train the controller, we use a dictionary $\mathbb{D}$ to store the concatenations and the corresponding validation scores. At $t=1$ , we train the task model with all embedding candidates concatenated. From $t=2$ , we repeat the following steps until a maximum iteration $T$ :

When sampling $\pmb{a}^{t}$, we avoid selecting the previous concatenation $\pmb{a}^{t-1}$ and the all-zero vector (i.e., selecting no embedding). If $\pmb{a}^{t}$ is in the dictionary $\mathbb{D}$, we compare $R_{t}$ with the value in the dictionary and keep the higher one.
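The overall search procedure of this section can be sketched as a short loop. This is a hedged reconstruction pieced together from the description in Section 3, not the authors' code: `toy_accuracy` is a made-up stand-in for training the task model and scoring it on the development set, the controller is assumed to sample each $a_{l}$ independently with probability $\sigma(\theta_{l})$, and the learning rate, $\gamma$ and $T$ are arbitrary.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def toy_accuracy(a):
    """Hypothetical stand-in for training the task model and scoring it on dev."""
    useful = np.array([0.03, -0.02, 0.04, 0.0])   # made-up per-embedding effect
    return 0.85 + float(useful @ a)

def search(L=4, T=30, gamma=0.5, lr=1.0, seed=0):
    rng = np.random.default_rng(seed)
    theta = np.zeros(L)                     # controller parameters
    D = {}                                  # concatenation -> best dev score
    a_prev = tuple([1] * L)                 # t = 1: all candidates concatenated
    D[a_prev] = toy_accuracy(np.array(a_prev))
    for t in range(2, T + 1):
        # Sample a^t, rejecting the previous concatenation and the all-zero mask.
        while True:
            a_t = tuple(int(x) for x in rng.random(L) < sigmoid(theta))
            if any(a_t) and a_t != a_prev:
                break
        R_t = toy_accuracy(np.array(a_t))
        # Discounted reward over all concatenations stored so far.
        r = np.zeros(L)
        for a_i, R_i in D.items():
            diff = np.abs(np.array(a_t) - np.array(a_i))
            hamm = int(diff.sum())
            if hamm > 0:
                r += (R_t - R_i) * gamma ** (hamm - 1) * diff
        theta += lr * (np.array(a_t) - sigmoid(theta)) * r   # gradient ascent
        D[a_t] = max(R_t, D.get(a_t, -np.inf))               # keep the higher score
        a_prev = a_t
    best = max(D, key=D.get)
    return best, D[best], theta

best, score, theta = search()
print(best, score)
```

Because the toy accuracy is deterministic, keeping the higher score in the dictionary has no visible effect here; with a real task model, repeated training runs of the same concatenation can give different development scores.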

4 Experiments

We use ISO 639-1 language codes to represent languages in the tables.

4.1 Datasets and Configurations

To show ACE's effectiveness, we conduct extensive experiments on a variety of structured prediction tasks ranging from syntactic tasks to semantic tasks. The tasks are Named Entity Recognition (NER), Part-Of-Speech (POS) tagging, Chunking, Aspect Extraction (AE), Syntactic Dependency Parsing (DP) and Semantic Dependency Parsing (SDP). The details of the 6 structured prediction tasks in our experiments are shown below:

• NER: We use the corpora of 4 languages from the CoNLL 2002 and 2003 shared task (Tjong Kim Sang, 2002; Tjong Kim Sang and De Meulder, 2003) with standard split.

• POS Tagging: We use three datasets, Ritter11-TPOS (Ritter et al., 2011), ARK-Twitter (Gimpel et al., 2011; Owoputi et al., 2013) and Tweebank-v2 (Liu et al., 2018b) (Ritter, ARK and TB-v2 for short). We follow the dataset split of Nguyen et al. (2020).

• Chunking: We use CoNLL 2000 (Tjong Kim Sang and Buchholz, 2000) for chunking. Since there is no standard development set for the CoNLL 2000 dataset, we split 10% of the training data as the development set.

• Aspect Extraction: Aspect extraction is a subtask of aspect-based sentiment analysis (Pontiki et al., 2014, 2015, 2016). The datasets are from the laptop and restaurant domains of SemEval 14, the restaurant domain of SemEval 15 and the restaurant domain of the SemEval 16 shared task (14Lap, 14Res, 15Res and 16Res for short). Additionally, we use another 4 languages in the restaurant domain of SemEval 16 to test our approach in multiple languages. We randomly split 10% of the training data as the development set following Li et al. (2019).

Table 1: Comparison with concatenating all embeddings and random search baselines on 6 tasks.

• Syntactic Dependency Parsing: We use Penn Treebank (PTB) 3.0 with the same dataset preprocessing as Ma et al. (2018).

• Semantic Dependency Parsing: We use DM, PAS and PSD datasets for semantic dependency parsing (Oepen e