UniNet: A Contrastive Learning-guided Unified Framework with Feature Selection for Anomaly Detection

Abstract

Anomaly detection (AD) is a crucial visual task aimed at recognizing abnormal patterns within samples. However, most existing AD methods suffer from limited generalizability, as they are primarily designed for domain-specific applications, such as industrial scenarios, and often perform poorly when applied to other domains. This challenge largely stems from the inherent discrepancies in features across domains. To bridge this domain gap, we introduce UniNet, a generic unified framework that incorporates effective feature selection and contrastive learning-guided anomaly discrimination. UniNet comprises student-teacher models and a bottleneck, featuring several vital innovations. First, we propose domain-related feature selection, where the student is guided to select and focus on representative features from the teacher with domain-relevant priors, while restoring them effectively. Second, a similarity-contrastive loss function is developed to strengthen the correlations among homogeneous features. Meanwhile, a margin loss function is proposed to enforce the separation between the similarities of abnormality and normality, effectively improving the model's ability to discriminate anomalies. Third, we propose a weighted decision mechanism for dynamically evaluating the anomaly score to achieve robust AD. Large-scale experiments on 11 datasets from various domains show that UniNet surpasses existing methods.

1. Introduction

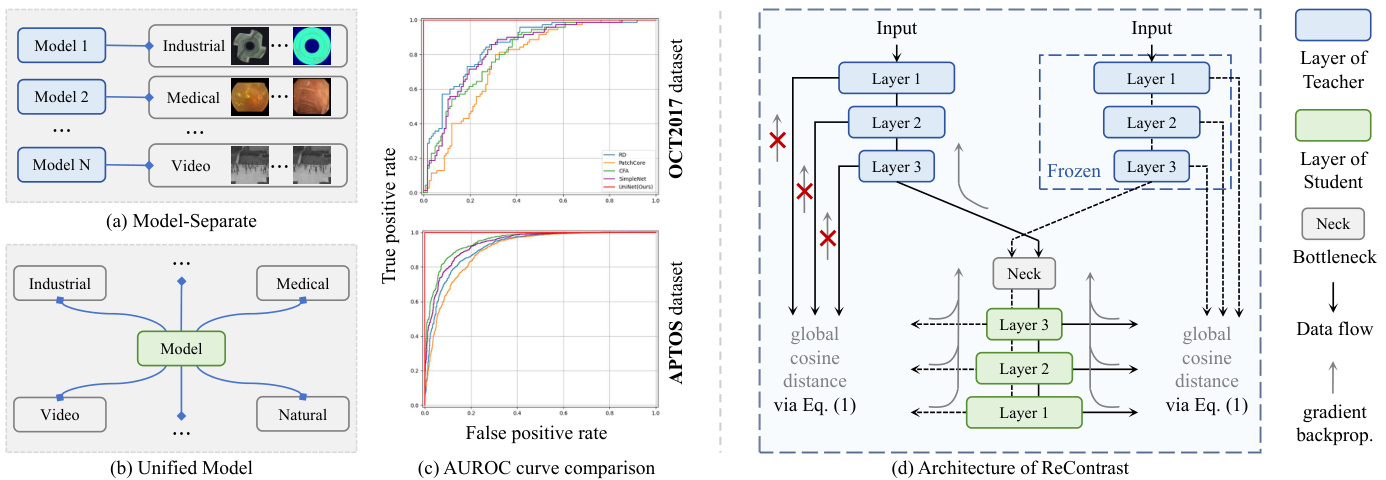

Visual anomaly detection (AD) has gained significant traction in recent years, with applications spanning various fields, such as medical image diagnosis [7, 37, 48], industrial defect inspection [9, 25, 33, 50], and video surveillance [1, 39, 44]. Prior AD paradigms typically develop separate models tailored to each domain (see Fig. 1(a)). Despite considerable advancements in domain-specific applications, these approaches often suffer from limited cross-domain applicability. This limitation primarily arises from domain differences and inherent discrepancies in features. For instance, in industrial AD, some self-supervised methods [29, 43, 55] employ external data [10] or data augmentation techniques to synthesize anomalies and learn the anomalous feature distribution. However, the anomalies generated in this manner can differ substantially from those encountered in other domains, e.g., medical imaging [11, 26, 45] or video surveillance [30], potentially resulting in insufficient learning of the anomalous distribution. In fact, beyond the stark differences in the visual appearance of anomalies (such as defects on industrial products vs. polyps on the intestine, or anomalous behavior like a cyclist in video surveillance), there are also notable differences in the normal features across different domains. This variability further complicates cross-domain application. Moreover, another main challenge hindering the effective application of most methods to other domains is their reliance on pre-trained networks, trained on source domains such as ImageNet [13], for feature extraction. Recent studies [17, 23, 57] have demonstrated that pre-trained features often bear little resemblance to those needed for the target domain owing to inherent biases in these pre-trained networks, adversely affecting performance (see Fig. 1(c)). In light of these challenges, this paper explores how to develop a unified framework capable of adapting to diverse domains while achieving accurate AD (see Fig. 1(b)).

Recently, ReContrast [17] introduced contrastive learning (CL) elements to optimize its framework for adaptation to different target domains, showing good transfer ability. Nevertheless, two limitations restrict its further development. First, it struggles to capture representative features relevant to the target domain, which impacts its ability to understand domain-related information. Second, it still faces a significant challenge in effectively discriminating between abnormality and normality, even after being trained on some anomalous samples, which limits its applicability in supervised settings [2, 6, 24, 46].

Figure 1. (a) One-model-one-domain setting. (b) One-model multi-domain setting. (c) AUROC curve comparison of UniNet and competing AD methods (reliance on pre-trained features) on medical datasets. (d) Architecture of ReContrast.

In response to these problems, we propose a novel generic unified framework based on ReContrast, termed UniNet. It consists of student-teacher (S-T) models along with a bottleneck. Concretely, UniNet first develops a lightweight-yet-powerful multi-scale embedding module (MEM) within the bottleneck to better capture the contextual relationships among features provided to the student. We then propose domain-related feature selection, a method that prompts the student to select crucial features from the teacher with prior knowledge to learn domain-related information. To effectively distinguish anomalies, a similarity-contrastive loss is first proposed to strengthen the correlations among homogeneous features. Following this, a margin loss is developed to enhance the similarity of normal features, ensuring they are separated from anomalous ones with low similarity. Finally, considering the similarity between the outputs of the S-T networks, we propose a weighted decision mechanism to adaptively calculate the anomaly score for improved AD performance. Notably, unlike ReContrast [17], which mainly focuses on unsupervised AD, UniNet is suited to both unsupervised and supervised settings. In summary, our contributions are as follows:

• This paper presents UniNet, a generic unified framework that can be oriented towards a wider range of domains and is applicable to both unsupervised and supervised settings.

• We design MEM to capture contextual information and propose domain-related feature selection to guide the student in selecting and learning target-oriented representative features from the teacher.

• A similarity-contrastive loss is developed to enhance the relationships among homogeneous features, and we then employ a margin loss to enhance the similarity of normal features for better anomaly discrimination. We implement a weighted decision mechanism to achieve superior AD performance during inference.

• Large-scale experiments conducted on 11 datasets from industrial, medical, and video domains show that UniNet achieves superior results across different metrics.

2. Related work

Unsupervised methods. Unsupervised AD methods rely solely on available anomaly-free samples to learn their distribution due to the scarcity of anomalous data. Consequently, numerous promising methods [17, 25, 33, 39, 41, 47, 50, 58] have been proposed. AST [41] employs an asymmetric S-T framework, minimizing the distance between their outputs to identify anomalies with large deviations. THF [25] proposes a new flow-based method that prevents the overlap of distributions between normal and anomalous features. Other attempts explore the use of memory banks to store additional normal prototypes to effectively detect anomalies, such as PatchCore [40] and MemKD [16]. The aforementioned methods are typically classified as one-class AD methods, as they train a separate model for each class. Recently, some efforts [17, 18, 53] have shifted toward multi-class AD, aiming to use one unified model to detect anomalies across different classes concurrently. UniAD [53] pioneers this approach, addressing the problem that a growing number of training categories often leads to increased computational time. MambaAD [18] further advances this idea by exploring state space models, achieving outstanding performance while maintaining low complexity and computational overhead.

Supervised methods. Unlike unsupervised AD methods, supervised AD approaches can train a model on anomalous samples, thereby improving the accuracy of class boundaries [42]. DevNet [38] utilizes some labeled anomalous samples and prior probabilities to enforce that the anomaly scores of the anomalous samples significantly deviate from those of the normal samples in the upper tail. FCDD [34] employs a fully convolutional neural network architecture to map normal samples towards the center of the feature space, effectively distancing anomalous samples from this central region. DRA [14] generalizes to unknown anomalies by learning disentangled anomalous representations for different types of anomalies. Due to the scarcity of available supervised datasets, these methods are primarily trained on the widely used MVTec AD dataset [4], where the labeled anomalous samples are generally derived from the test set. However, they still face the challenge of obtaining adequate anomalous samples. Consequently, they often employ anomaly synthesis strategies [29, 43, 55] to generate anomalous samples, but these anomalies hardly conform to the real-world anomaly distribution. To tackle the issue of limited supervised datasets, Baitieva et al. [2] recently introduced a new industrial supervised AD benchmark, which features a wider array of complex anomalies and substantial intra-class variability among anomaly-free images. Given that conventional AD methods struggle with this benchmark, they further incorporated a segmentation-based anomaly detector to enhance AD performance.

In this paper, our work seeks to develop a unified solution for AD across different domains, in contrast to most current mainstream methods, which design separate models for domain-specific AD.

3. Preliminaries

The prototype of ReContrast [17] is composed of a pre-trained teacher model, a bottleneck, and a learnable student model. ReContrast aims to optimize the entire framework to the target domain through CL elements. Let $F_{T}^{i},F_{S}^{i}\in\mathbb{R}^{C_{i}\times H_{i}\times W_{i}}$ denote the outputs of the $i$-th layer of the teacher and student models, respectively, where $C_{i}$, $H_{i}$, and $W_{i}$ are the number of channels, height, and width of the corresponding output. To optimize the student model, ReContrast first proposes a global cosine distance to better maintain global consistency between feature points and avoid instability during training:

$$
\mathcal{L}_{g}=\sum_{i=1}^{n}d(F_{T}^{i},F_{S}^{i})=\sum_{i=1}^{n}\left(1-\frac{\mathcal{F}(F_{T}^{i})^{\top}}{\left\Vert\mathcal{F}(F_{T}^{i})\right\Vert}\cdot\frac{\mathcal{F}(F_{S}^{i})}{\left\Vert\mathcal{F}(F_{S}^{i})\right\Vert}\right), \tag{1}
$$

where $n$ represents the number of layers, $d(\cdot,\cdot)$ is the cosine distance, $\left\Vert\cdot\right\Vert$ is the $\ell_{2}$ norm, and $\mathcal{F}(\cdot):\mathbb{R}^{C\times H\times W}\rightarrow\mathbb{R}^{CHW}$ denotes a flattening operation. Subsequently, ReContrast optimizes the pre-trained teacher model to adapt it to the target domain. However, prior works suggested that this would result in pattern collapse. Inspired by CL for self-supervised learning [8], the stop gradient operation is introduced to mitigate pattern collapse by modifying Eq. (1):

$$
\mathcal{L}_{g}=\sum_{i=1}^{n}\left(1-\frac{\mathrm{SG}(\mathcal{F}(F_{T}^{i}))^{\top}}{\left\Vert\mathrm{SG}(\mathcal{F}(F_{T}^{i}))\right\Vert}\cdot\frac{\mathcal{F}(F_{S}^{i})}{\left\Vert\mathcal{F}(F_{S}^{i})\right\Vert}\right), \tag{2}
$$

where $\mathrm{SG}(\cdot)$ is the stop gradient operation. To prevent the “identical shortcut” caused by the absence of contrastive pairs, ReContrast introduces an additional frozen teacher model that is not optimized during training. In this way, the two teacher models produce two views of one image, i.e., a target domain view and a source domain view, which plays the role of image augmentation in a CL paradigm (see Fig. 1(d)).
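To make the global cosine distance of Eq. (1) concrete, the following is a minimal NumPy sketch. The function name `global_cosine_distance`, the toy layer shapes, and the small `eps` stabilizer are illustrative assumptions rather than part of ReContrast. NumPy has no automatic differentiation, so the stop gradient of Eq. (2) is only indicated in a comment; in an autodiff framework the teacher term would be wrapped in that framework's stop-gradient primitive.

```python
import numpy as np

def global_cosine_distance(teacher_feats, student_feats, eps=1e-12):
    """Sum over layers of 1 - cos(F(F_T), F(F_S)), as in Eq. (1).

    Each feature map has shape (C, H, W); eps is an assumed numerical stabilizer.
    """
    total = 0.0
    for f_t, f_s in zip(teacher_feats, student_feats):
        t = f_t.reshape(-1)  # flattening F(.): (C, H, W) -> (C*H*W,)
        s = f_s.reshape(-1)
        # With autodiff, t would be treated as a constant here (stop gradient, Eq. (2)).
        cos = float(t @ s) / (np.linalg.norm(t) * np.linalg.norm(s) + eps)
        total += 1.0 - cos
    return total

rng = np.random.default_rng(0)
shapes = [(4, 8, 8), (8, 4, 4), (16, 2, 2)]  # hypothetical three-layer feature pyramid
teacher = [rng.standard_normal(shape) for shape in shapes]

# Identical features give a distance of ~0 per layer; sign-flipped features give 2 per layer.
loss_same = global_cosine_distance(teacher, [f.copy() for f in teacher])
loss_flipped = global_cosine_distance(teacher, [-f for f in teacher])
```

Because each feature map is flattened into a single vector before the cosine is taken, the distance measures agreement over the whole map at once rather than position by position.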

4. Methodology

4.1. Approach overview

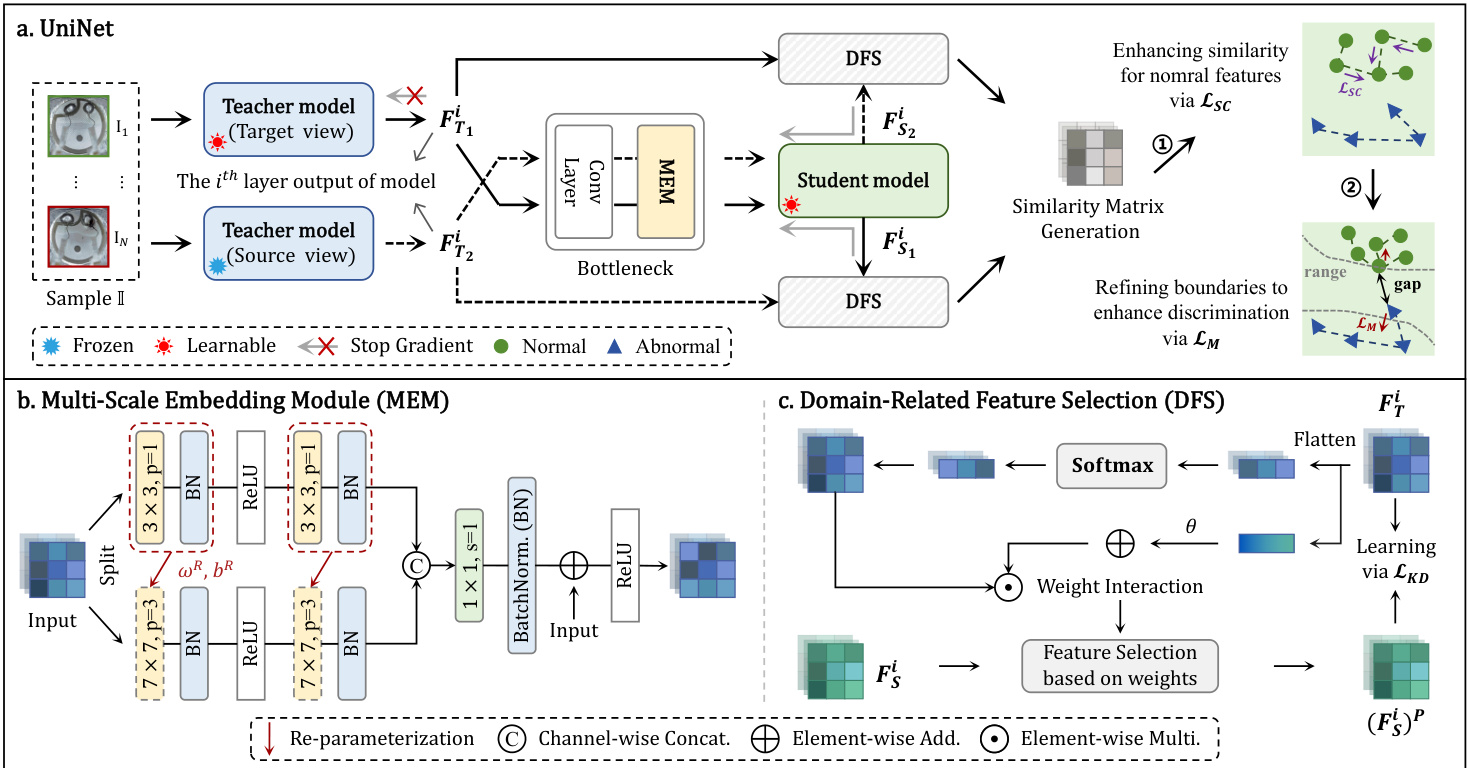

With inspiration from ReContrast [17], this paper proposes UniNet, a generic unified framework for different domains, as illustrated in Fig. 2. The goal of UniNet is to optimize the entire framework towards the target domain, while enabling domain-relevant feature selection and learning, along with effective anomaly discrimination.

To capture the contextual relationships among features, UniNet first develops a lightweight-yet-effective MEM within the bottleneck (Sec. 4.2). Then, we propose domain-related feature selection, guiding the student to select target-oriented features from the teacher with prior knowledge and prompting its learning (Sec. 4.3). In addition, a similarity-contrastive loss is proposed to enhance the correlations among homogeneous features, followed by a margin loss that preserves the distinction between the similarities of normal and anomalous features, thereby enhancing discriminability (Sec. 4.4). Based on the similarity between the outputs of the S-T networks, a weighted decision mechanism is proposed to achieve robust AD performance during inference (Sec. 4.5).

4.2. Multi-Scale Embedding Module

Motivation. Some prior approaches [12, 25] struggle to capture the contextual relationships among features, which impedes both the enhancement of feature correlations and the reduction of redundancy. These methods typically employ a set of small kernels to limit computational overhead, but recent research [15] has demonstrated that appropriately using a few larger kernels can be helpful for vision tasks. Motivated by this insight, we design a simple yet powerful Multi-Scale Embedding Module (MEM) within the bottleneck for feature extraction across various contexts while maintaining low memory consumption, as visualized in Fig. 2.

Module design. Considering the multi-scale features, we first split the input into two parts along the channel dimension and process them with kernels of two different sizes to capture global and local information, thus enriching the contextual relationships among features. Each part is fed into a $k\times k$ convolution layer (where $k$ is 3 or 7), followed by a batch normalization (BN) layer and a ReLU activation. Each part is then fed into a further $k\times k$ convolution layer and a BN layer to enhance feature extraction. Next, the two parts are concatenated, and the channel dimension is compressed by a $1\times1$ convolution layer and a BN layer. Finally, to achieve better regularization, a residual connection is applied before the final ReLU.
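The data flow of the module design above can be sketched in NumPy as follows. This is only an illustration under several assumptions that the text does not specify: an equal channel split, each branch expanding $C/2$ channels to $C$ so that the $1\times1$ layer compresses $2C$ back to $C$, stride-1 "same" padding, BN in inference mode with identity statistics, and random weights. All function and variable names are hypothetical.

```python
import numpy as np

def conv2d_same(x, weight):
    """Naive stride-1 'same' convolution. x: (C_in, H, W); weight: (C_out, C_in, k, k)."""
    c_out, _, k, _ = weight.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    _, h, w = x.shape
    out = np.zeros((c_out, h, w))
    for i in range(k):
        for j in range(k):
            # Contribution of kernel tap (i, j) to every output position.
            out += np.einsum("oc,chw->ohw", weight[:, :, i, j], xp[:, i:i + h, j:j + w])
    return out

def bn_eval(x, bn, eps=1e-5):
    """Inference-mode batch normalization with per-channel statistics."""
    gamma, beta, mean, var = bn
    scale = gamma / np.sqrt(var + eps)
    return x * scale[:, None, None] + (beta - mean * scale)[:, None, None]

def make_conv_bn(rng, c_in, c_out, k):
    """Random conv weight plus identity BN statistics (placeholders, not trained values)."""
    weight = rng.standard_normal((c_out, c_in, k, k)) * 0.1
    bn = (np.ones(c_out), np.zeros(c_out), np.zeros(c_out), np.ones(c_out))
    return weight, bn

def conv_bn(x, layer):
    weight, bn = layer
    return bn_eval(conv2d_same(x, weight), bn)

def mem_forward(x, params):
    half = x.shape[0] // 2
    outs = []
    for part, (first, second) in zip((x[:half], x[half:]), params["branches"]):
        y = np.maximum(conv_bn(part, first), 0.0)  # k x k conv -> BN -> ReLU
        outs.append(conv_bn(y, second))            # k x k conv -> BN
    fused = conv_bn(np.concatenate(outs, axis=0), params["fuse"])  # 1x1 conv -> BN
    return np.maximum(fused + x, 0.0)              # residual added before the final ReLU

rng = np.random.default_rng(0)
channels = 8
params = {
    "branches": [
        (make_conv_bn(rng, channels // 2, channels, k), make_conv_bn(rng, channels, channels, k))
        for k in (3, 7)  # local (3x3) and global (7x7) branches
    ],
    "fuse": make_conv_bn(rng, 2 * channels, channels, 1),
}
x = rng.standard_normal((channels, 16, 16))
out = mem_forward(x, params)
```

The output keeps the input shape, which is what allows the residual connection to be added directly.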

Figure 2. Overall framework of the proposed UniNet. It consists of a pair of teacher models, a bottleneck, and a student model, with several key components: MEM, DFS, the similarity-contrastive loss $\mathcal{L}_{SC}$, and the margin loss $\mathcal{L}_{M}$.

Due to the use of large kernels, the number of parameters and the inference time would increase. To mitigate this, we re-parameterize the large-kernel convolutional layers through the small-kernel convolutional layers and BN layers:

$$
\omega^{R}=\omega\cdot\frac{\gamma}{\sqrt{\sigma^{2}+\epsilon}},\quad b^{R}=b-\frac{\mu\cdot\gamma}{\sqrt{\sigma^{2}+\epsilon}}, \tag{3}
$$

where $\omega$ is the weight of the small-kernel convolutional layer, while $\omega^{R}$ and $b^{R}$ are the weight and bias of the large-kernel convolutional layer after re-parameterization. $\mu$, $\sigma^{2}$, $\gamma$, and $b$ denote the mean, variance, weight, and bias of the BN layers, respectively, and $\epsilon$ is a small constant. In this way, $7\times7$ kernel convolution layers can be viewed as equivalent to $3\times3$ kernel convolution layers, thus decreasing computational costs.
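The algebra behind Eq. (3) is the standard folding of a BN layer into the preceding bias-free convolution. The sketch below checks that identity numerically with NumPy. It uses a $1\times1$ kernel only to keep the check short (the identity holds per output channel for any kernel size, because convolution is linear), and the helper name `fold_bn` and all tensor sizes are assumptions made for illustration. It does not reproduce how kernels of different sizes are merged.

```python
import numpy as np

def fold_bn(weight, gamma, beta, mean, var, eps=1e-5):
    """Fold BN statistics into a bias-free conv weight, following Eq. (3).

    weight: (C_out, C_in, k, k); gamma, beta, mean, var: (C_out,).
    Returns the re-parameterized weight and bias.
    """
    scale = gamma / np.sqrt(var + eps)
    w_r = weight * scale[:, None, None, None]  # omega^R = omega * gamma / sqrt(var + eps)
    b_r = beta - mean * scale                  # b^R = b - mu * gamma / sqrt(var + eps)
    return w_r, b_r

rng = np.random.default_rng(0)
c_in, c_out, eps = 4, 6, 1e-5
weight = rng.standard_normal((c_out, c_in, 1, 1))
gamma = rng.standard_normal(c_out)
beta = rng.standard_normal(c_out)
mean = rng.standard_normal(c_out)
var = rng.uniform(0.5, 2.0, size=c_out)
x = rng.standard_normal((c_in, 5, 5))

# Reference path: 1x1 convolution followed by inference-mode BN.
conv = np.einsum("oc,chw->ohw", weight[:, :, 0, 0], x)
y_bn = (gamma[:, None, None] * (conv - mean[:, None, None])
        / np.sqrt(var[:, None, None] + eps) + beta[:, None, None])

# Folded path: a single convolution with re-parameterized weight and bias.
w_r, b_r = fold_bn(weight, gamma, beta, mean, var, eps)
y_folded = np.einsum("oc,chw->ohw", w_r[:, :, 0, 0], x) + b_r[:, None, None]
```

After folding, the BN layer no longer has to be evaluated separately at inference time.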

4.3. Domain-Related Feature Selection

Motivation. Despite its great transfer ability across different domains, ReContrast [17] still suffers from an insufficient capture of feature representations relevant to the target domain, resulting in the loss of crucial information. To address this challenge, we propose Domain-Related Feature Selection (DFS), which encourages the student to selectively concentrate on target-oriented features from the teacher and restore them well, thereby avoiding the inclusion of unimportant information. In particular, the student is required to learn only representative information pertaining to the target domain, rather than all available information.

Selection and learning. Introducing information from the target domain into the student is crucial for enabling it to understand and generate the feature representations required for that domain. To achieve this, we use a weight to control how much representative information should be selected from the teacher with prior knowledge. Concretely, let the features from the teacher and student models be $F_{T}^{i},F_{S}^{i}\in\mathbb{R}^{C_{i}\times H_{i}\times W_{i}}$, and let the teacher feature be flattened to $\widehat{F}_{T}^{i}\in\mathbb{R}^{C_{i}\times H_{i}W_{i}}$. The weight is generated as follows:

$$
w_{i}=\frac{\exp(\widehat{F}_{T}^{i}-\varrho)}{\sum_{j=1}^{H_{i}W_{i}}\exp(\widehat{F}_{T}^{i}(:,j)-\varrho)}, \tag{4}
$$

where $w_{i}\in\mathbb{R}^{C_{i}\times H_{i}W_{i}}$ and $\varrho=\max(\widehat{F}_{T}^{i})$. To avoid relying on local information only, we integrate global information to ensure weight interaction, enhancing awareness.

The global information $F_{i}^{g}$ can be obtained as follows:

$$
F_{i}^{g}=\frac{1}{H_{i}\cdot W_{i}}\sum_{h=1}^{H_{i}}\sum_{w=1}^{W_{i}}F_{T}^{i}(:,h,w), \tag{5}
$$

where $F_{i}^{g}\in\mathbb{R}^{C_{i}\times1\times1}$. Let the weight $w_{i}$ be further reshaped to $(w_{i})^{R}\in\mathbb{R}^{C_{i}\times H_{i}\times W_{i}}$. The interacted weight is obtained by fusing global and local information, and the student then selects domain-related feature information based on the interacted weight to generate the domain-related features $(F_{S}^{i})^{P}$:

$$
(F_{S}^{i})^{P}=F_{S}^{i}\odot{(w_{i})^{R}\odot(\theta+F_{i}^{g})}, \tag{6}
$$

where $\theta$ is a learnable parameter flexibly controlling the domain-related feature selection and $\odot$ represents elementwise multiplication. To ensure that the student effectively learns the prior knowledge, we aim to minimize the distance between its output and that of the teacher during training:

$$
\mathcal{L}_{KD}=\sum_{i=1}^{n}d(\mathrm{SG}(F_{T}^{i}),(F_{S}^{i})^{P}). \tag{7}
$$
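As an illustration of the selection step for a single layer, the sketch below computes the spatial softmax weight, the globally pooled teacher feature, and the selected student feature. It is a minimal NumPy version under stated assumptions: `theta` is a fixed scalar here, whereas the text describes it as learnable, and the function name `dfs_select` and the toy shapes are hypothetical.

```python
import numpy as np

def dfs_select(f_t, f_s, theta=1.0):
    """Domain-related feature selection for one layer.

    f_t, f_s: teacher and student features of shape (C, H, W).
    theta: scalar stand-in for the learnable parameter (an assumption).
    """
    c, h, w = f_t.shape
    f_t_flat = f_t.reshape(c, h * w)                 # flattened teacher feature, (C, HW)
    rho = f_t_flat.max()                             # global maximum, subtracted for stability
    e = np.exp(f_t_flat - rho)
    weights = e / e.sum(axis=1, keepdims=True)       # softmax over spatial positions per channel
    w_r = weights.reshape(c, h, w)                   # reshaped weight (w_i)^R
    f_g = f_t.mean(axis=(1, 2), keepdims=True)       # global average pooling, (C, 1, 1)
    selected = f_s * (w_r * (theta + f_g))           # element-wise selection
    return selected, weights

rng = np.random.default_rng(0)
f_t = rng.standard_normal((8, 4, 4))
f_s = rng.standard_normal((8, 4, 4))
selected, weights = dfs_select(f_t, f_s)
```

Each channel's weights sum to one over the spatial positions, so the weight redistributes emphasis across locations, while the pooled term rescales each channel as a whole.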

4.4. Comparing similarity and enhancing discrimination

Motivation. Most unsupervised methods [12, 17, 33, 40] may fail to establish class boundaries [38] due to the absence of anomalous samples. However, even when trained with some anomalous samples, these methods still face challenges in effectively discriminating anomalies, particularly unseen ones [14]. To overcome this limitation, we propose a similarity-contrastive loss and a margin loss. The similarity-contrastive loss is first employed to enhance the correlations among normal features, ensuring they remain tightly clustered. Since anomalous features are not expected to exhibit high similarity to normal ones, this encourages a clear gap between them. We then use the margin loss to enforce a greater separation between their similarities, further enhancing the model's discrimination ability.

Mechanism. The learned student feature $(F_{S}^{i})^{P}$ is flattened and reshaped to $(\widehat{F}_{S}^{i})^{P}\in\mathbb{R}^{H_{i}W_{i}\times C_{i}}$. The similarity matrix $m_{i}$ between the outputs of the S-T models is obtained as:

$$
m_{i}=\frac{(\widehat{F}_{S}^{i})^{P}\cdot\widehat{F}_{T}^{i}}{\left\Vert(\widehat{F}_{S}^{i})^{P}\right\Vert\cdot\left\Vert\widehat{F}_{T}^{i}\right\Vert\cdot\mathcal{T}}, \tag{8}
$$

where $\mathcal{T}$ is a temperature parameter that controls the distribution of similarity. From the similarity matrix, the normalized similarity matrix is then obtained by:

$$
\widehat{m}_{i}=\frac{\exp(m_{i})}{\sum_{k=1}^{H_{i}W_{i}}\exp(m_{i}(:,k))+\epsilon}. \tag{9}
$$

Each diagonal element of $\widehat{m}_{i}$ is the similarity between the features of an S-T pair. To strengthen the relationships among normal features within these pairs, we employ the similarity-contrastive loss to maximize their similarity:

$$
\mathcal{L}_{SC}=-\frac{1}{n}\sum_{i=1}^{n}\sum_{j=1}^{N_{1}}\log(\mathrm{diag}((\widehat{m}_{i})_{j})+\epsilon), \tag{10}
$$

where $N_{1}$ ($N_{1}\leq N$) is the number of normal samples and $\mathrm{diag}(\cdot)$ is the operation of selecting diagonal elements.
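For one layer and one normal sample, the chain from similarity matrix to loss can be sketched as below. Several details are assumptions, since the text leaves them open: the norms are taken per spatial position (so each entry of $m_{i}$ is a temperature-scaled cosine similarity), the log-diagonal is averaged into a scalar, the temperature value is a placeholder, and `eps` is also added to the first denominator for numerical safety. The function name is hypothetical.

```python
import numpy as np

def similarity_contrastive_loss(f_s_sel, f_t, temperature=0.1, eps=1e-8):
    """Similarity-contrastive loss for one layer and one normal sample.

    f_s_sel, f_t: selected student feature and teacher feature, both (C, H, W).
    temperature: placeholder value, not taken from the paper.
    """
    c, h, w = f_t.shape
    s = f_s_sel.reshape(c, h * w).T                    # (HW, C)
    t = f_t.reshape(c, h * w)                          # (C, HW)
    s_norm = np.linalg.norm(s, axis=1, keepdims=True)  # (HW, 1), assumed per-position norm
    t_norm = np.linalg.norm(t, axis=0, keepdims=True)  # (1, HW)
    m = (s @ t) / (s_norm * t_norm * temperature + eps)  # similarity matrix, (HW, HW)
    e = np.exp(m)
    m_hat = e / (e.sum(axis=1, keepdims=True) + eps)   # row-wise normalization
    diag = np.diag(m_hat)                              # similarity of matching S-T positions
    return float(-np.mean(np.log(diag + eps)))         # averaging is an assumed reduction

rng = np.random.default_rng(0)
f_t = rng.standard_normal((16, 4, 4))
loss_matched = similarity_contrastive_loss(f_t.copy(), f_t)                  # student equals teacher
loss_random = similarity_contrastive_loss(rng.standard_normal((16, 4, 4)), f_t)
```

When the student reproduces the teacher, the diagonal dominates each row and the loss is small; for unrelated features the diagonal carries no special weight and the loss is much larger.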

where $\tau$ is a hyper-parameter controlling the boundary. In this way, homogeneous features can be grouped together.

During training, the overall loss is computed as:

$$
\mathcal{L}_{U}=\lambda\mathcal{L}_{KD}+(1-\lambda)\mathcal{L}_{SC}+\mathcal{L}_{M}, \tag{12}
$$

where $\lambda$ is a balancing hyper-parameter. In supervised settings, Eq. (12) can be modified according to the task:

$$
\mathcal{L}=\mathcal{L}_{U}+\sum_{i=1}^{n-1}\mathcal{L}_{S}(\Phi(F_{S}^{i}),l), \tag{13}
$$

where $\mathcal{L}_{S}(\cdot,\cdot)$ denotes a Binary Cross-Entropy loss or a Dice loss, $\Phi(\cdot)$ is a flattening or upsampling operation, and $l$ is a label or a ground-truth mask.

4.5. Anomaly detection

Motivation. Some efforts [22, 38] use the top-$K$ or top-ranked values from the anomaly map to evaluate the anomaly score. Nevertheless, they rely on a fixed value (e.g., $K=3\%$) for score calculation, which does not ensure robust AD. To remedy this, we propose a weighted decision mechanism that dynamically calculates the image-level anomaly score for each sample, where the anomaly score is determined by a weight.

Weighted decision mechanism. Formally, we begin by obtaining the pixel-level anomaly map for each sample. With a pair of teacher models and one student model, two anomaly maps can be generated from each layer by $d(\cdot,\cdot)$ in Eq. (1), for a total of $2n$ anomaly maps. Then, we obtain $2n$ low-similarity values by taking the maximum value in each anomaly map. These low-similarity values are further transformed into a probability distribution $\mathcal{N}$ via a Softmax activation. The values in $\mathcal{N}$ that are higher than the average of $\mathcal{N}$ are added to a set $\mathcal{P}$ to dynamically calculate the weight. The weight is defined as follows:
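As a rough illustration of the steps that precede the weight, i.e., building $\mathcal{N}$ and $\mathcal{P}$ from the $2n$ anomaly maps, consider the NumPy sketch below. The anomaly maps are random placeholders and the function name `candidate_set` is hypothetical. The sketch deliberately stops at $\mathcal{P}$ and does not attempt the weight formula or the final score.

```python
import numpy as np

def candidate_set(anomaly_maps):
    """Build the distribution N and the above-average subset P from 2n anomaly maps."""
    peaks = np.array([m.max() for m in anomaly_maps])  # one low-similarity value per map
    e = np.exp(peaks - peaks.max())                    # numerically stable softmax
    probs = e / e.sum()                                # probability distribution N
    selected = probs[probs > probs.mean()]             # set P: entries above the average of N
    return probs, selected

rng = np.random.default_rng(0)
n_layers = 3
# Two maps per layer (one per teacher), at hypothetical resolutions.
maps = [rng.uniform(0.0, 2.0, size=(16, 16)) for _ in range(2 * n_layers)]
probs, selected = candidate_set(maps)
```

Because membership in $\mathcal{P}$ depends on each sample's own distribution, the number of values that contribute varies from sample to sample, in contrast to a fixed top-$K$ rule.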

(a) MVTec AD

| Method | I-AUROC | P-AUROC | PRO |
|---|---|---|---|
| RD++ [47] | 99.44 | 98.25 | 94.99 |
| DMAD [32] | 99.50 | 98.21 | — |
| GLAD [52] | 99.30 | 98.62 | 95.31 |
| ReConPatch [23] | 99.56 | 98.18 |