CLIP4STR: A Simple Baseline for Scene Text Recognition with Pre-trained Vision-Language Model

CLIP4STR: 基于预训练视觉语言模型的场景文本识别简单基线

Abstract—Pre-trained vision-language models (VLMs) are the de-facto foundation models for various downstream tasks. However, scene text recognition methods still prefer backbones pre-trained on a single modality, namely, the visual modality, despite the potential of VLMs to serve as powerful scene text readers. For example, CLIP can robustly identify regular (horizontal) and irregular (rotated, curved, blurred, or occluded) text in images. With such merits, we transform CLIP into a scene text reader and introduce CLIP4STR, a simple yet effective STR method built upon the image and text encoders of CLIP. It has two encoder-decoder branches: a visual branch and a cross-modal branch. The visual branch provides an initial prediction based on the visual feature, and the cross-modal branch refines this prediction by addressing the discrepancy between the visual feature and text semantics. To fully leverage the capabilities of both branches, we design a dual predict-and-refine decoding scheme for inference. We scale CLIP4STR in terms of the model size, pre-training data, and training data, achieving state-of-the-art performance on 13 STR benchmarks. Additionally, a comprehensive empirical study is provided to enhance the understanding of the adaptation of CLIP to STR. Our method establishes a simple yet strong baseline for future STR research with VLMs.

摘要—预训练的视觉语言模型 (VLM) 已成为各类下游任务实际采用的基础模型。然而,尽管VLM具备成为强大场景文本阅读器的潜力,现有场景文本识别 (STR) 方法仍倾向于使用单模态 (视觉模态) 预训练的主干网络。例如,CLIP能稳健识别图像中的规则 (水平) 和不规则 (旋转、弯曲、模糊或遮挡) 文本。基于这一优势,我们将CLIP改造为场景文本阅读器,提出CLIP4STR——一种基于CLIP图像与文本编码器的简单高效STR方法。该方法包含双编码器-解码器分支:视觉分支和跨模态分支。视觉分支基于视觉特征生成初始预测,跨模态分支则通过消除视觉特征与文本语义间的差异来优化预测结果。为充分发挥双分支能力,我们设计了双预测-优化解码方案用于推理。我们在模型规模、预训练数据和训练数据三个维度扩展CLIP4STR,在13个STR基准测试中达到最先进性能。此外,本文通过全面实验研究深化了CLIP在STR任务中适配机制的理解。本方法为未来基于VLM的STR研究建立了简单而强大的基线。

Index Terms—Vision-Language Model, Scene Text Recognition, CLIP

索引术语—视觉语言模型 (Vision-Language Model)、场景文本识别 (Scene Text Recognition)、CLIP

I. INTRODUCTION

I. 引言

Vision-language models (VLMs) pre-trained on web-scale data, such as CLIP [1] and ALIGN [2], show remarkable zero-shot capacity across different tasks. Researchers have also successfully transferred the knowledge from pre-trained VLMs to diverse tasks in a zero-shot or fine-tuning manner, e.g., visual question answering [3], information retrieval [4], [5], referring expression comprehension [6], and image captioning [7]. VLMs are widely recognized as foundation models and an important component of artificial intelligence [8].

视觉语言模型 (Vision-Language Model, VLM) 通过CLIP [1]和ALIGN [2]等网络规模数据预训练后,在不同任务中展现出卓越的零样本能力。研究者们还成功地将预训练VLM的知识以零样本或微调方式迁移至多种任务,例如视觉问答 [3]、信息检索 [4][5]、指代表达理解 [6] 以及图像描述生成 [7]。VLM被广泛认为是基础模型和人工智能的重要组成部分 [8]。

Scene text recognition (STR) is a critical technique and an essential process in many vision and language applications, e.g., document analysis, autonomous driving, and augmented reality. Similar to the aforementioned cross-modal tasks, STR involves two different modalities: image and text. However,

场景文本识别 (Scene Text Recognition, STR) 是一项关键技术,也是许多视觉与语言应用(如文档分析、自动驾驶和增强现实)中的核心流程。与前述跨模态任务类似,STR 涉及图像和文本两种不同模态。然而,

This work was partially supported by the Earth System Big Data Platform of the School of Earth Sciences, Zhejiang University. Corresponding author: Ruijie Quan

本研究部分由浙江大学地球科学学院地球系统大数据平台支持。通讯作者:Ruijie Quan

Shuai Zhao is with the ReLER Lab, Australian Artificial Intelligence Institute, University of Technology Sydney, Ultimo, NSW 2007, Australia. Part of this work was done during an internship at Baidu Inc. E-mail: zhaoshuaimcc@gmail.com. Linchao Zhu, Ruijie Quan, and Yi Yang are with the ReLER Lab, CCAI, Zhejiang University, Zhejiang, China. E-mail: {zhulinchao, quanruijie, yangyics}@zju.edu.cn.

帅钊就职于悉尼科技大学澳大利亚人工智能研究所ReLER实验室,地址:澳大利亚新南威尔士州Ultimo NSW 2007。部分工作完成于百度公司实习期间。邮箱:zhaoshuaimcc@gmail.com。林超柱、权锐杰、杨易同属浙江大学CCAI研究院ReLER实验室。邮箱:{zhulinchao, quanruijie, yangyics}@zju.edu.cn。

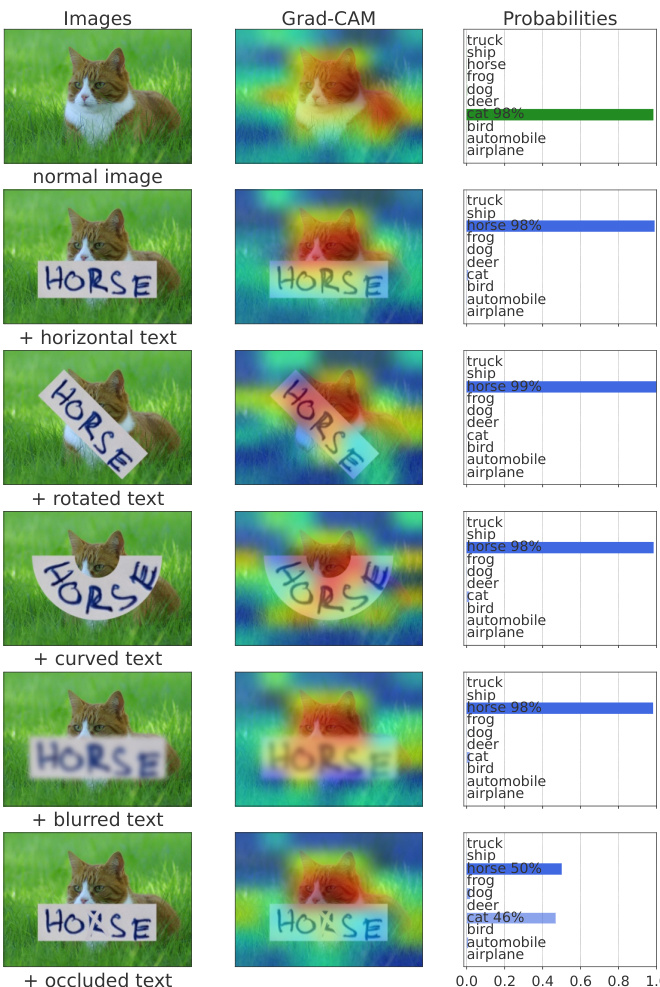

Fig. 1: Zero-shot classification results of CLIP-ViT-B/32. CLIP can perceive and understand text in images, even for irregular text with noise, rotation, and occlusion. CLIP is potentially a powerful scene text recognition expert.

图 1: CLIP-ViT-B/32 的零样本分类结果。CLIP 能够感知并理解图像中的文本,即使对于带有噪声、旋转和遮挡的不规则文本也是如此。CLIP 可能是一位强大的场景文本识别专家。

unlike the popularity of pre-trained VLMs in other cross-modal tasks, STR methods still tend to rely on backbones pre-trained on single-modality data [9], [10], [11], [12]. In this work, we show that VLMs pre-trained on image-text pairs possess strong scene text perception abilities, making them superior choices as STR backbones.

与其他跨模态任务中预训练视觉语言模型(VLM)的流行不同,场景文本识别(STR)方法仍倾向于依赖单模态数据预训练的骨干网络[9][10][11][12]。本研究表明,基于图像-文本对预训练的VLM具有强大的场景文本感知能力,是更优的STR骨干网络选择。

STR methods generally struggle with irregular text such as rotated, curved, blurred, or occluded text [13], [14]. However, irregular text is prevalent in real-life scenarios [15], [16], making it necessary for STR models to handle these challenging cases effectively. Interestingly, we observe that the VLM (e.g., CLIP [1]) can robustly perceive irregular text in arbitrary orders without relying on specific sequence order assumptions. During training, the visual branch provides an initial prediction based on the visual feature, which is then refined by the cross-modal branch to address possible discrepancies between the visual feature and the text semantics of the prediction. The cross-modal branch functions as a semantic-aware spell checker, similar to modern STR methods [9], [12]. For inference, we design a dual predict-and-refine decoding scheme to fully utilize the capabilities of both encoder-decoder branches for improved character recognition.

STR方法通常难以处理旋转、弯曲、模糊或被遮挡的不规则文本 [13], [14]。然而,不规则文本在现实场景中十分普遍 [15], [16],因此STR模型必须有效应对这些挑战性情况。有趣的是,我们发现视觉语言模型(VLM,如CLIP [1])无需依赖特定序列顺序假设,就能稳健感知任意排列的不规则文本。训练过程中,视觉分支基于视觉特征提供初始预测,随后跨模态分支通过修正视觉特征与预测文本语义间的潜在偏差来优化结果。该跨模态分支类似于现代STR方法 [9], [12] 中的语义感知拼写检查器。推理阶段,我们设计了双预测-优化解码方案,充分融合两个编码器-解码器分支的能力以提升字符识别性能。

Fig. 2: Attention of CLIP-ViT-B/32 for STR images.

图 2: CLIP-ViT-B/32 对 STR (Scene Text Recognition) 图像的注意力机制可视化

We scale CLIP4STR across different model sizes, pretraining data, and training data to investigate the effectiveness of large-scale pre-trained VLMs as STR backbones. CLIP4STR achieves state-of-the-art performance on 13 STR benchmarks, encompassing regular and irregular text. Additionally, we present a comprehensive empirical study on adapting CLIP to STR. CLIP4STR provides a simple yet strong baseline for future STR research with VLMs.

我们通过调整不同模型规模、预训练数据和训练数据来研究大规模预训练视觉语言模型(VLM)作为STR(场景文本识别)主干的有效性。CLIP4STR在13个STR基准测试(包括规则和不规则文本)上实现了最先进的性能。此外,我们对CLIP模型在STR任务上的适配进行了全面的实证研究。CLIP4STR为未来基于VLM的STR研究提供了一个简单而强大的基线。

II. RELATED WORK

II. 相关工作

natural images. In Fig. 1, we put different text stickers on a natural image, use CLIP to classify it, and visualize the attention of CLIP via Grad-CAM [19]. It is evident that CLIP pays high attention to the text sticker and accurately understands the meaning of the word, regardless of text variations. CLIP is trained on massive natural images collected from the web, and its text perception ability may come from the natural images containing scene text [20]. Will CLIP perceive the text in common STR images [21], [22], [16], which are cropped from natural images? Fig. 2 presents the visualization results of CLIP-ViT-B/32 for STR images. Although the text in these STR images is occluded, curved, blurred, or rotated, CLIP can still perceive it. From Figs. 1 and 2, we can see that CLIP possesses an exceptional capability to perceive and comprehend various text in images. This is exactly the desired quality for a robust STR backbone.

自然图像。在图1中,我们在自然图像上放置不同的文字贴纸,使用CLIP对其进行分类1,并通过Grad-CAM[19]可视化CLIP的注意力。显然,无论文字如何变化2,CLIP都对文字贴纸高度关注并准确理解单词含义。CLIP是基于从网络收集的海量自然图像训练的,其文本感知能力可能源自包含场景文字的自然图像[20]。那么CLIP能否感知常见STR图像[21][22][16]中的文字?这些图像是从自然图像中裁剪得到的。图2展示了CLIP-ViT-B/32对STR图像的可视化结果。尽管这些STR图像中的文字存在遮挡、弯曲、模糊和旋转,CLIP仍能感知它们。从图1和图2可以看出,CLIP具有感知和理解图像中各类文字的卓越能力,这正是稳健STR骨干网络所需的特质。

In this work, we aim to leverage the text perception capability of CLIP for STR and build a strong baseline for future STR research with VLMs. To this end, we introduce CLIP4STR, a simple yet effective STR framework built upon CLIP. CLIP4STR consists of two encoder-decoder branches: the visual branch and the cross-modal branch. The image and text encoders inherit from CLIP, while the decoders employ the transformer decoder [23]. To enable the decoder to delve deep into word structures (dependency relationship among characters in a word), we incorporate the permuted sequence modeling technique proposed by PARSeq [24]. This allows the decoder to perform sequence modeling of characters in

在本工作中,我们旨在利用CLIP的文本感知能力进行场景文本识别(STR),并为基于视觉语言模型(VLM)的未来STR研究建立强大基线。为此,我们提出了CLIP4STR——一个基于CLIP构建的简单而有效的STR框架。CLIP4STR包含视觉分支和跨模态分支两个编码器-解码器分支:图像和文本编码器继承自CLIP,而解码器采用Transformer解码器[23]。为使解码器能深入探究单词结构(单词内字符间的依赖关系),我们引入了PARSeq[24]提出的置换序列建模技术,该技术使得解码器能够对字符序列进行建模...

A. Vision-Language Models and Their Applications

A. 视觉语言模型及其应用

Large-scale pre-trained vision-language models learning under language supervision, such as CLIP [1], ALIGN [2], and Florence [25], demonstrate excellent generalization abilities. This encourages researchers to transfer the knowledge of these pre-trained VLMs to different downstream tasks in a fine-tuning or zero-shot fashion. For instance, [4], [26], [27] tune CLIP on videos and make CLIP specialized in text-video retrieval, CLIPScore [7] uses CLIP to evaluate the quality of generated image captions, and [28], [29] use CLIP as the reward model during test time or training. The wide application of VLMs also facilitates the research on different pre-training models, e.g., ERNIE-ViLG [30], CoCa [31], OFA [32], DeCLIP [33], FILIP [34], and ALBEF [35]. Researchers also explore the power of scaling up the data, e.g., COYO-700M [36] and LAION-5B [37]. Generally, more data brings more power for large VLMs [38].

大规模预训练的视觉语言模型(如CLIP [1]、ALIGN [2]和Florence [25])在语言监督下学习,展现出卓越的泛化能力。这促使研究者们将这些预训练VLM的知识通过微调或零样本方式迁移到不同下游任务中。例如,[4]、[26]、[27]对CLIP进行视频微调使其专精于文本-视频检索,CLIPScore [7]利用CLIP评估生成图像描述的质量,而[28]、[29]则在测试或训练阶段将CLIP作为奖励模型。VLM的广泛应用也推动了不同预训练模型的研究,如ERNIE-ViLG [30]、CoCa [31]、OFA [32]、DeCLIP [33]、FILIP [34]和ALBEF [35]。研究者还探索了数据规模化的潜力,例如COYO-700M [36]和LAION-5B [37]。通常,更多数据会为大型VLM带来更强性能[38]。

VLMs pre-trained on large-scale image-text pairs possess many fascinating attributes [1], [20], [39]. For instance, some neurons in CLIP can perceive the visual and text signals corresponding to the same concept. [20] finds that particular neurons in CLIP-RN50$\times$4 respond to both photos of Spiderman and the text "spider" in an image. This also leads to Typographic Attacks, namely, VLMs focus on the text rather than natural objects in an image, as shown in Fig. 1. In this work, we leverage the text perception ability of multi-modal neurons and make CLIP specialize in scene text recognition.

在大规模图文对上预训练的视觉语言模型(VLM)具有许多引人入胜的特性[1]、[20]、[39]。例如,CLIP中的某些神经元能够感知同一概念对应的视觉和文本信号。[20]发现CLIP-RN $I50\times4$ 中的特定神经元会对蜘蛛侠照片和图像中的"spider"文本同时产生响应。这也导致了排版攻击(Typographic Attacks),即如图1所示,视觉语言模型会聚焦于图像中的文字而非自然物体。本研究利用多模态神经元的文本感知能力,使CLIP专门用于场景文本识别。

B. Scene Text Recognition

B. 场景文本识别

Scene text recognition methods can be broadly divided into two categories: context-free and context-aware. Context-free STR methods only utilize the visual features of images, such as CTC-based [40] methods [41], [42], [43], [10], segmentation-based methods [44], [45], [46], and attention-based methods with an encoder-decoder mechanism [47], [48]. Since context-free STR methods lack the understanding of text semantics, they are less robust against occluded or incomplete text.

场景文本识别方法大致可分为两类:无上下文(context-free)和上下文感知(context-aware)。无上下文STR方法仅利用图像的视觉特征,例如基于CTC (Connectionist Temporal Classification)的方法[40][41][42][43][10]、基于分割的方法[44][45][46]以及采用编码器-解码器机制的基于注意力机制的方法[47][48]。由于无上下文STR方法缺乏对文本语义的理解,它们在处理遮挡或不完整文本时鲁棒性较差。

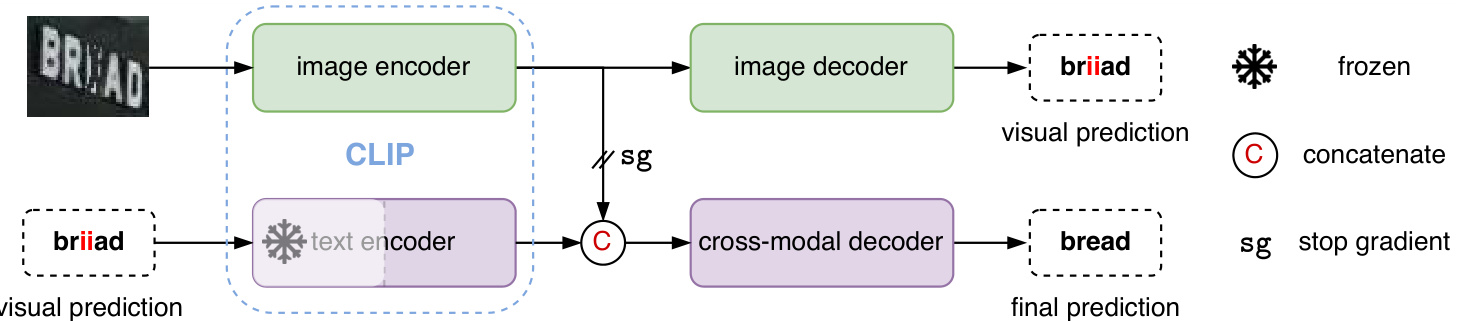

Fig. 3: The framework of CLIP4STR. It has a visual branch and a cross-modal branch. The cross-modal branch refines the prediction of the visual branch for the final output. The text encoder is partially frozen.

图 3: CLIP4STR框架。它包含视觉分支和跨模态分支。跨模态分支对视觉分支的预测进行优化以生成最终输出。文本编码器部分参数被冻结。

Context-aware STR methods are the mainstream approach, leveraging text semantics to enhance recognition performance. For example, ABINet [9], LevOCR [49], MATRN [50], and TrOCR [12] incorporate an external language model to capture text semantics. Other methods achieve similar goals with built-in modules, such as RNN [51], [52], GRU [53], and transformer [54], [24], [55]. The context information is interpreted as the relations of textual primitives by Zhang et al. [56], who propose a relational contrastive self-supervised learning STR framework. Besides the context-free and context-aware methods, some efforts aim to enhance the explainability of STR. For instance, STRExp [57] utilizes local individual character explanations to deepen the understanding of STR methods. Moreover, training data plays a vital role in STR. Traditionally, synthetic data [58], [59] are used for training due to the ease of generating a large number of samples. However, recent research suggests that using realistic training data can lead to better outcomes than synthetic data [60], [24], [61], [62]. Motivated by these findings, we primarily employ realistic training data in this work.

上下文感知的场景文本识别 (STR) 方法已成为主流技术,它们通过利用文本语义来提升识别性能。例如 ABINet [9]、LevOCR [49]、MATRN [50] 和 TrOCR [12] 都引入了外部语言模型来捕获文本语义。其他方法则通过内置模块实现类似目标,如 RNN [51][52]、GRU [53]、Transformer [54][24][55]。Zhang 等人 [56] 将上下文信息解释为文本基元之间的关系,并提出了一种关系对比自监督学习 STR 框架。

除上下文无关和上下文感知方法外,部分研究致力于提升 STR 的可解释性。例如 STRExp [57] 通过局部单字符解释来深化对 STR 方法的理解。此外,训练数据对 STR 至关重要。传统方法因易于生成大量样本而采用合成数据 [58][59] 进行训练,但最新研究表明,相比合成数据,使用真实训练数据能获得更好效果 [60][24][61][62]。受此启发,本研究主要采用真实训练数据。

The success of VLMs also spreads to the STR area. For example, TrOCR [12] adopts separate pre-trained language and vision models plus post-pretraining on STR data in an auto-regressive manner [63]; MATRN [50] uses a multi-modal fusion manner that is popular in VLMs such as ALBEF [35] and ViLT [64]. CLIPTER [65] enhances the character recognition performance by utilizing the CLIP features extracted from the global image. CLIP-OCR [66] leverages both visual and linguistic knowledge from CLIP through feature distillation. In contrast, we directly transfer CLIP to a robust scene text reader, eliminating the need for CLIP features from the global image or for an additional CLIP model as a teacher for the STR reader. We hope our method can be a strong baseline for future STR research with VLMs.

视觉语言模型(VLM)的成功也延伸到了场景文本识别(STR)领域。例如,TrOCR [12]采用预训练的语言和视觉模型分离架构,并以自回归方式在STR数据上进行后预训练[63];MATRN [50]使用了ALBEF [35]和ViLT [64]等VLM中流行的多模态融合方法。CLIPTER [65]通过利用从全局图像提取的CLIP特征来增强字符识别性能。CLIP-OCR [66]则通过特征蒸馏同时利用CLIP的视觉和语言知识。相比之下,我们直接将CLIP迁移为鲁棒的场景文本阅读器,无需从全局图像获取CLIP特征,也无需额外使用CLIP模型作为STR阅读器的教师模型。我们希望该方法能成为未来基于VLM的STR研究的强基线。

III. METHOD

III. 方法

A. Preliminary

A. 初步准备

Before illustrating the framework of CLIP4STR, we first introduce CLIP [1] and the permuted sequence modeling (PSM) technique proposed by PARSeq [24]. CLIP serves as the backbone, and the PSM is used for sequence modeling.

在阐述CLIP4STR框架之前,我们首先介绍CLIP [1] 和PARSeq [24] 提出的置换序列建模 (permuted sequence modeling, PSM) 技术。CLIP作为主干网络,PSM用于序列建模。

- CLIP: CLIP consists of a text encoder and an image encoder. CLIP is pre-trained on 400 million image-text pairs using contrastive learning. The text and image features from

- CLIP: CLIP由文本编码器和图像编码器组成。该模型通过对比学习在4亿个图文对上进行了预训练,能提取文本与图像特征。

TABLE I: Examples of attention mask $\mathcal{M}$ . The sequences with [B] and [E] represent the input context and output sequence, respectively. The entry $\mathcal{M}_{i,j}=-\infty$ (negative infinity) indicates that the dependency of output $i$ on input context $j$ is removed.

表 1: 注意力掩码 $\mathcal{M}$ 示例。带有 [B] 和 [E] 的序列分别表示输入上下文和输出序列。条目 $\mathcal{M}_{i,j}=-\infty$ (负无穷) 表示输出 $i$ 对输入上下文 $j$ 的依赖关系被移除。

(a) AR mask

| | [B] | $y_1$ | $y_2$ | $y_3$ |
|---|---|---|---|---|
| $y_1$ | 0 | $-\infty$ | $-\infty$ | $-\infty$ |
| $y_2$ | 0 | 0 | $-\infty$ | $-\infty$ |
| $y_3$ | 0 | 0 | 0 | $-\infty$ |
| [E] | 0 | 0 | 0 | 0 |

(b) Cloze mask

| | [B] | $y_1$ | $y_2$ | $y_3$ |
|---|---|---|---|---|
| $y_1$ | 0 | $-\infty$ | 0 | 0 |
| $y_2$ | 0 | 0 | $-\infty$ | 0 |
| $y_3$ | 0 | 0 | 0 | $-\infty$ |
| [E] | 0 | 0 | 0 | 0 |

(c) Random mask

| | [B] | $y_1$ | $y_2$ | $y_3$ |
|---|---|---|---|---|
| $y_1$ | 0 | $-\infty$ | 0 | 0 |
| $y_2$ | 0 | $-\infty$ | $-\infty$ | $-\infty$ |
| $y_3$ | 0 | $-\infty$ | 0 | $-\infty$ |
| [E] | 0 | 0 | 0 | 0 |

CLIP are aligned in a joint image-text embedding space. i) The image encoder of CLIP is a vision transformer (ViT) [69]. Given an image, ViT uses a visual tokenizer (a convolution) to convert non-overlapping image patches into a discrete sequence. A [CLASS] token is then prepended to the beginning of the image sequence. Originally, the CLIP image encoder only returns the feature of the [CLASS] token, but in this work, we return the features of all tokens. The rationale behind this choice is that character-level recognition requires fine-grained detail, so local features from all patches are necessary. These features are normalized and linearly projected into the joint image-text embedding space. ii) The text encoder of CLIP is a transformer encoder [23], [70]. The text tokenizer is a lower-cased byte pair encoding (BPE) [71] with vocabulary size 49,152. The beginning and end of the text sequence are padded with [SOS] and [EOS] tokens, respectively. Linguistic features of all tokens are utilized for character recognition. These features are also normalized and linearly projected into the joint image-text embedding space.

CLIP在联合图文嵌入空间中对齐。i) CLIP的图像编码器采用视觉Transformer (ViT) [69]。给定图像时,ViT通过视觉分词器(卷积操作)将非重叠图像块转换为离散序列,并在序列开头添加[CLASS]标记。原始CLIP图像编码器仅返回[CLASS]标记的特征,但本研究返回所有标记的特征——因为字符级识别需要细粒度细节,必须利用所有图像块的局部特征。这些特征经归一化后线性投影至联合图文嵌入空间。ii) CLIP的文本编码器采用Transformer编码器[23][70],其文本分词器使用小写字节对编码(BPE)[71](词表大小49,152),文本序列首尾分别添加[SOS]和[EOS]标记。字符识别任务会利用所有文本标记的语言特征,这些特征同样经过归一化后线性投影至联合图文嵌入空间。

- Permuted sequence modeling: Traditionally, STR methods use a left-to-right or right-to-left order to model character sequences [9]. However, the characters in a word do not strictly follow such directional dependencies. For instance, to predict the letter “o” in the word “model”, it is sufficient to consider only the context “m_de” rather than relying solely on the left-to-right context “m_” or the right-to-left context “led_”. The dependencies between characters in a word can take various forms. To encourage the STR method to explore these structural relationships within words, PARSeq [24] introduces a permuted sequence modeling (PSM) technique. This technique uses a random attention mask $\mathcal{M}$ for attention operations [23] to generate random dependency relationships between the input context and the output. Table I illustrates three examples of mask $\mathcal{M}$ . We will delve further into this mechanism in $\S\mathrm{III-C}$ .

- 置换序列建模 (Permuted sequence modeling): 传统STR方法采用从左到右或从右到左的顺序建模字符序列[9]。但单词中的字符并不严格遵循这种方向性依赖关系。例如预测单词"model"中的字母"o"时,仅需考虑上下文"m_de",而非单纯依赖从左到右的"m_"或从右到左的"led_"上下文。单词内字符间的依赖关系可呈现多种形式。为促使STR方法探索单词内部的结构关系,PARSeq[24]引入了置换序列建模(PSM)技术,该技术通过随机注意力掩码$\mathcal{M}$进行注意力运算[23],从而在输入上下文与输出之间生成随机依赖关系。表I展示了三种掩码$\mathcal{M}$的示例,我们将在$\S\mathrm{III-C}$章节深入探讨该机制。
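The masks in Table I can be derived from a decoding order over character positions. The following is a minimal sketch in plain Python, not the PARSeq implementation: the helper names `permutation_mask` and `cloze_mask` are hypothetical, and the row/column layout (rows for outputs $y_1,\dots,y_n$, [E]; columns for context [B], $y_1,\dots,y_n$) is assumed from Table I.

```python
NEG_INF = float("-inf")

def permutation_mask(order):
    """Build an additive attention mask from a decoding order.

    `order` lists 1-based character positions in the order they are decoded,
    e.g. [2, 3, 1] means y2 is predicted first, then y3, then y1.
    Entry 0.0 keeps a dependency, -inf removes it (as in Table I).
    """
    n = len(order)
    rank = {pos: r for r, pos in enumerate(order)}
    mask = []
    for out in range(1, n + 1):
        row = [0.0]  # the [B] token is always visible
        for ctx in range(1, n + 1):
            # y_out may only depend on characters decoded before it
            row.append(0.0 if rank[ctx] < rank[out] else NEG_INF)
        mask.append(row)
    mask.append([0.0] * (n + 1))  # [E] depends on the full context
    return mask

def cloze_mask(n):
    """Each character sees every other character but not itself."""
    mask = [[0.0] + [NEG_INF if ctx == out else 0.0 for ctx in range(1, n + 1)]
            for out in range(1, n + 1)]
    mask.append([0.0] * (n + 1))
    return mask

ar = permutation_mask([1, 2, 3])    # left-to-right order, Table I(a)
rand = permutation_mask([2, 3, 1])  # reproduces Table I(c)
```

Under this reading, the left-to-right order `[1, 2, 3]` yields the AR mask, and the order `[2, 3, 1]` yields the random mask shown in Table I(c).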

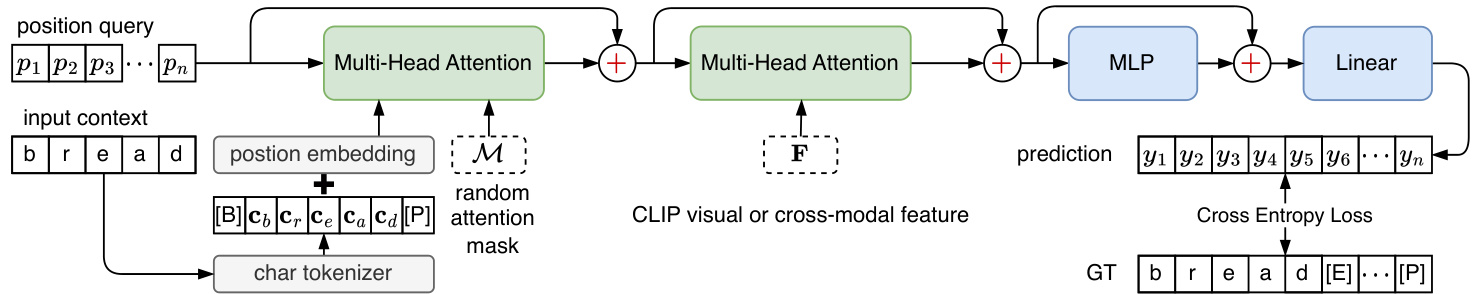

Fig. 4: The decoder of CLIP4STR. [B], [E], and [P] are the beginning, end, and padding tokens, respectively. ‘[· · · ]’ in prediction represents the ignored outputs. Layer normalization [67] and dropout [68] in the decoder are ignored.

图 4: CLIP4STR的解码器结构。[B]、[E]和[P]分别表示起始token、结束token和填充token。预测中的"[···]"代表被忽略的输出。解码器中的层归一化[67]和dropout[68]未在图中显示。

B. Encoder

B. 编码器

The framework of CLIP4STR is illustrated in Fig. 3. CLIP4STR employs a dual encoder-decoder design, consisting of a visual branch and a cross-modal branch. The text and image encoders utilize the architectures and pre-trained weights from CLIP. The visual branch generates an initial prediction based on the visual features extracted by the image encoder. Subsequently, the cross-modal branch refines the initial prediction by addressing the discrepancy between the visual features and the textual semantics of the prediction. Since the image and text features are aligned in a joint image-text embedding space during pre-training, it becomes easy to identify this discrepancy. The cross-modal branch acts as a semantic-aware spell checker.

CLIP4STR的框架如图3所示。CLIP4STR采用双编码器-解码器设计,包含视觉分支和跨模态分支。文本和图像编码器使用CLIP的架构和预训练权重。视觉分支基于图像编码器提取的视觉特征生成初始预测。随后,跨模态分支通过解决视觉特征与预测文本语义之间的差异来优化初始预测。由于图像和文本特征在预训练期间被对齐到联合图像-文本嵌入空间,因此很容易识别这种差异。跨模态分支充当语义感知的拼写检查器。

The text encoder is partially frozen. This freezing operation retains the learned text understanding ability of the language model and reduces training costs. It is a common practice in transfer learning of large language models [72]. In contrast, the visual branch is fully trainable due to the domain gap between STR data (cropped word images) and CLIP training data (collected from the web, often natural images). Additionally, we block the gradient flow from the cross-modal decoder to the visual encoder so that the visual branch learns autonomously, which in turn improves the refined cross-modal predictions.

文本编码器部分冻结。这种冻结操作保留了语言模型已学习的文本理解能力,并降低了训练成本,这是大语言模型迁移学习中的常见做法 [72]。相比之下,由于STR数据(裁剪后的单词图像)与CLIP训练数据(从网络收集的通常是自然图像)之间存在领域差距,视觉分支是完全可训练的。此外,我们阻断了从跨模态解码器到视觉编码器的梯度流,使视觉分支能够自主学习,从而改进优化后的跨模态预测。

For the text encoder $g(\cdot)$ and the image encoder $h(\cdot)$, given the input text $\pmb{t}$ and image $\pmb{x}$, the text, image, and cross-modal features are computed as:

对于文本编码器 $g(\cdot)$ 和图像编码器 $h(\cdot)$,给定输入文本 $\pmb{t}$ 和图像 $_{x}$,其文本特征、图像特征及跨模态特征的计算方式为:

$$
\begin{aligned}
\boldsymbol{F}_{t} &= g(\boldsymbol{t}) \in \mathbb{R}^{L_{t}\times D}, && (1)\\
\boldsymbol{F}_{i} &= h(\boldsymbol{x}) \in \mathbb{R}^{L_{i}\times D}, && (2)\\
\boldsymbol{F}_{c} &= [\boldsymbol{F}_{i}^{T}\ \boldsymbol{F}_{t}^{T}]^{T} \in \mathbb{R}^{L_{c}\times D}, && (3)
\end{aligned}
$$

where $L_{t}$ represents the text sequence length, $L_{i}$ is the sequence length of image tokens, $D$ denotes the dimension of the joint image-text embedding space, and the cross-modal sequence length $L_{c}=L_{i}+L_{t}$ .

其中 $L_{t}$ 表示文本序列长度,$L_{i}$ 表示图像token序列长度,$D$ 表示图文联合嵌入空间的维度,跨模态序列长度 $L_{c}=L_{i}+L_{t}$。
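As a shape check for Eqs. (1) to (3), the sketch below counts the image tokens and concatenates image and text features along the sequence axis. It assumes CLIP-ViT-B/16 with a $224\times224$ input and a text sequence length of 16 (the values used in the experiments); the feature dimension is a toy value and the helper names are hypothetical.

```python
def num_image_tokens(image_size: int, patch_size: int) -> int:
    """Number of ViT tokens: non-overlapping patches plus one [CLASS] token."""
    per_side = image_size // patch_size
    return per_side * per_side + 1

def concat_features(F_i, F_t):
    """Stack image and text token features along the sequence axis (Eq. 3)."""
    assert len(F_i[0]) == len(F_t[0]), "both must live in the same D-dim space"
    return F_i + F_t

D = 4                             # toy embedding dimension (illustrative only)
L_i = num_image_tokens(224, 16)   # 14 * 14 patches + [CLASS] = 197
L_t = 16                          # text encoder sequence length
F_i = [[0.0] * D for _ in range(L_i)]   # stand-in for h(x)
F_t = [[1.0] * D for _ in range(L_t)]   # stand-in for g(t)
F_c = concat_features(F_i, F_t)
L_c = len(F_c)                    # L_c = L_i + L_t = 213
```

Because all image tokens (not only [CLASS]) are kept, the cross-modal decoder attends over 197 visual tokens plus the 16 text tokens in this configuration.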

C. Decoder

C. 解码器

The decoder aims to extract the character information from the visual feature $F_{i}$ or cross-modal feature $F_{c}$ . The decoder framework is shown in Fig. 4. It adopts the design of the transformer decoder [23] plus the PSM technique mentioned in $\S$ III-A2, enabling a predicted character to have arbitrary dependencies on the input context during training.

解码器旨在从视觉特征 $F_{i}$ 或跨模态特征 $F_{c}$ 中提取字符信息。解码器框架如图 4 所示。它采用了 Transformer 解码器 [23] 的设计,并结合了 $\S$ III-A2 中提到的 PSM 技术,使得训练期间预测字符能够对输入上下文具有任意依赖性。

The visual and cross-modal decoders have the same architecture but differ in their inputs. They receive the following inputs: a learnable position query $\pmb{p}\in\mathbb{R}^{N\times D}$, an input context $\pmb{c}\in\mathbb{R}^{N\times D}$, and a randomly generated attention mask $\mathcal{M}\in\mathbb{R}^{N\times N}$, where $N$ is the length of the character sequence. The decoder outputs the prediction $\pmb{y}\in\mathbb{R}^{N\times C}$, where $C$ is the number of character classes. The decoding stage can be denoted as $\pmb{y}=\mathrm{DEC}(\pmb{p},\pmb{c},\mathcal{M},\pmb{F})$. The first Multi-Head Attention (MHA) in Fig. 4 performs context-position attention:

视觉解码器和跨模态解码器结构相同但输入不同。它们接收以下输入:可学习的位置查询 $\textbf{}\in\mathbb{R}^{N\times D}$ 、输入上下文 c ∈ RN×D,以及随机生成的注意力掩码 $\mathcal{M}\in\mathbb{R}^{N\times N}$ 。$N$ 表示字符长度。解码器输出预测 $\textbf{}\in\mathbf{\bar{\mathbb{R}}}^{N\times C}$ ,其中 $C$ 是字符类别数。解码阶段可表示为:$\pmb{y}=\mathrm{DEC}(\pmb{p},\pmb{c},\pmb{\mathcal{M}},\pmb{F})$ 。图4中第一个多头注意力(MHA)执行上下文-位置注意力:

$$
m_{1}=\mathrm{softmax}\left(\frac{\pmb{p}\pmb{c}^{T}}{\sqrt{D}}+\mathcal{M}\right)\pmb{c}+\pmb{p}. \tag{4}
$$

The second MHA focuses on feature-position attention:

第二个 MHA 专注于特征-位置注意力:

$$
m_{2}=\mathrm{softmax}\left(\frac{m_{1}\pmb{F}^{T}}{\sqrt{D}}\right)\pmb{F}+m_{1}. \tag{5}
$$

For simplicity, we ignore the input and output linear transformations in the attention operations of Eq. (4) and Eq. (5). Then $m_{2}\in\mathbb{R}^{N\times D}$ is used for the final prediction $\pmb{y}$ :

为简化起见,我们忽略式(4)和式(5)注意力操作中的输入输出线性变换。随后用 $m_{2}\in\mathbb{R}^{N\times D}$ 进行最终预测 $\pmb{y}$ :

$$
\pmb{y}=\mathrm{Linear}\big(\mathrm{MLP}(m_{2})+m_{2}\big). \tag{6}
$$
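The two attention steps of Eqs. (4) and (5) can be sketched in plain Python as follows. This is a single-head toy version under the same simplification as the text (no input/output linear transformations); layer normalization, dropout, and the MLP and Linear layers of Eq. (6) are left out, and all function names are hypothetical.

```python
import math

def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, memory, mask=None):
    """softmax(query @ memory^T / sqrt(D) + mask) @ memory + query."""
    d = len(query[0])
    scores = matmul(query, transpose(memory))
    scores = [[s / math.sqrt(d) for s in row] for row in scores]
    if mask is not None:
        scores = add(scores, mask)
    weights = [softmax(row) for row in scores]
    return add(matmul(weights, memory), query)  # residual connection

def decoder_core(p, c, mask, F):
    m1 = attend(p, c, mask)  # Eq. (4): context-position attention
    m2 = attend(m1, F)       # Eq. (5): feature-position attention
    return m1, m2

# Toy inputs: N = 2 positions, D = 2, one feature token.
p = [[1.0, 0.0], [0.0, 1.0]]
c = [[0.5, 0.5], [2.0, -1.0]]
mask = [[0.0, float("-inf")], [0.0, 0.0]]  # first output sees only context 0
F = [[1.0, 2.0]]
m1, m2 = decoder_core(p, c, mask, F)
```

In the toy input, the first output position attends only to the first context entry, so `m1[0]` equals `c[0] + p[0]`; this is how a $-\infty$ entry in $\mathcal{M}$ removes a dependency.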

During training, the output of the decoder depends on the randomly permuted input context. This encourages the decoder to analyze the word structure beyond the traditional left-to-right or right-to-left sequence modeling assumptions [9]. The inclusion of a random attention mask $\mathcal{M}$ in Eq. (4) enables this capability [24]. Table I presents examples of generated attention masks, including a left-to-right autoregressive (AR) mask, a cloze mask, and a random mask. Following PARSeq [24], we employ $K=6$ masks per input context during training. The first two masks are left-to-right and right-to-left masks, and the others are randomly generated. CLIP4STR is optimized to minimize the sum of the cross-entropy losses ($\mathrm{CE}(\cdot)$) of the visual branch and the cross-modal branch:

训练过程中,解码器的输出依赖于随机置换的输入上下文。这促使解码器能够分析单词结构,而不仅限于传统的从左到右或从右到左的序列建模假设 [9]。在公式(4) 中加入随机注意力掩码 $\mathcal{M}$ 实现了这一能力 [24]。表 I 展示了生成的注意力掩码示例,包括从左到右的自回归 (AR) 掩码、填空掩码和随机掩码。遵循 PARSeq [24] 的方法,我们在训练时为每个输入上下文使用 $K=6$ 个掩码。前两个掩码是左到右和右到左掩码,其余为随机生成。CLIP4STR 通过最小化视觉分支和跨模态分支的交叉熵损失 $\left(\mathrm{CE}\left(\cdot\right)\right)$ 之和进行优化:

$$
\mathcal{L}=\mathrm{CE}(\pmb{y}^{i},\hat{\pmb{y}})+\mathrm{CE}(\pmb{y},\hat{\pmb{y}}), \tag{7}
$$

where $\hat{\pmb{y}}$, $\pmb{y}^{i}$, and $\pmb{y}$ indicate the ground truth, the prediction of the visual branch, and the prediction of the cross-modal branch, respectively.

其中 ${\hat{y}},{y}^{i}$ 和 $\textit{\textbf{y}}$ 分别表示真实值、视觉分支预测值和跨模态分支预测值。
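A minimal sketch of the training objective in Eq. (7), written in plain Python. Averaging the cross-entropy over character positions is an assumption made here for illustration (the text only states that the two branch losses are summed), and the function names are hypothetical.

```python
import math

def cross_entropy(logits, targets):
    """Mean cross-entropy over positions.

    logits: N x C list of unnormalized scores; targets: N class indices.
    """
    total = 0.0
    for row, t in zip(logits, targets):
        m = max(row)
        log_z = m + math.log(sum(math.exp(v - m) for v in row))  # log-sum-exp
        total += log_z - row[t]
    return total / len(targets)

def clip4str_loss(visual_logits, cross_logits, targets):
    """Sum of the visual-branch and cross-modal-branch losses (Eq. 7)."""
    return cross_entropy(visual_logits, targets) + cross_entropy(cross_logits, targets)

# Toy check: uniform logits over C = 94 classes at N = 3 positions.
C, N = 94, 3
uniform = [[0.0] * C for _ in range(N)]
loss = clip4str_loss(uniform, uniform, [0, 5, 93])
```

With uniform logits each branch contributes $\log C$, so the toy loss equals $2\log 94$.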

Algorithm 1: Inference decoding scheme $(\S\mathrm{A})$

算法 1: 推理解码方案 $(\S\mathrm{A})$

| Model | Params | Training data | Batch size | Epochs | Time |
|---|---|---|---|---|---|
| CLIP4STR-B | 158M | Real(3.3M) | 1024 | 16 | 12.8h |
| CLIP4STR-L | 446M | Real(3.3M) | 1024 | 10 | 23.4h |
| CLIP4STR-H | 1B | RBU(6.5M) | 1024 | 4 | 48.0h |

TABLE II: Model sizes and optimization hyper-parameters. The learning rate for the CLIP encoders is $8.4\mathrm{e}{-5}\times\frac{\mathrm{batch}}{512}$ [73]. For modules trained from scratch (the decoders), the learning rate is multiplied by 19.0. Params is the total number of parameters in a model; the non-trainable parameters in the three models are 44.3M, 80.5M, and 126M, respectively. Training time is measured on 8 NVIDIA RTX A6000 GPUs.

表 II: 模型规模与优化超参数。CLIP编码器的学习率为 $8.4\mathrm{e}{-5}\times\frac{\mathrm{batch}}{512}$ [73]。对于从头训练的模型(解码器),学习率需乘以19.0。Params表示模型总参数量,三个模型的不可训练参数量分别为 $44.3\mathbf{M}$、$80.5\mathbf{M}$ 和126M。训练时长基于8块NVIDIA RTX A6000 GPU测得。
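The learning-rate rule in the caption of Table II can be worked out numerically. The sketch below only restates that rule; the function name and argument names are hypothetical.

```python
def scaled_learning_rates(batch_size, base_lr=8.4e-5, ref_batch=512, decoder_mult=19.0):
    """Linear batch-size scaling for the CLIP encoders, with a multiplier
    for the decoders, which are trained from scratch."""
    encoder_lr = base_lr * batch_size / ref_batch
    decoder_lr = encoder_lr * decoder_mult
    return encoder_lr, decoder_lr

# Batch size 1024, as listed in Table II.
enc_lr, dec_lr = scaled_learning_rates(1024)
# enc_lr is about 1.68e-4 and dec_lr is about 3.19e-3
```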

- Decoding scheme: CLIP4STR consists of two branches: a visual branch and a cross-modal branch. To fully exploit the capacity of both branches, we design a dual predict-and-refine decoding scheme for inference, inspired by previous STR methods [9], [24]. Alg. 1 illustrates the decoding process. The visual branch first performs autoregressive decoding, where the future output depends on previous predictions. Subsequently, the cross-modal branch addresses possible discrepancies between the visual feature and the text semantics of the visual prediction, aiming to improve recognition accuracy. This process is also autoregressive. Finally, the previous predictions are utilized as the input context for refining the output in a cloze-filling manner. The refinement process can be iterative. After iterative refinement, the output of the cross-modal branch serves as the final prediction.

- 解码方案:CLIP4STR包含两个分支:视觉分支和跨模态分支。为充分发挥两个分支的能力,我们受先前STR方法[9][24]启发,设计了一种双预测-精调的解码方案进行推理。算法1展示了该解码流程。视觉分支首先执行自回归解码,其未来输出取决于先前的预测结果。随后,跨模态分支会处理视觉特征与视觉预测文本语义之间可能存在的差异,旨在提升识别准确率。此过程同样采用自回归方式。最终,先前预测结果将作为填空式精调的输入上下文,该精调过程可迭代进行。经过迭代精调后,跨模态分支的输出将作为最终预测结果。
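The control flow of the dual predict-and-refine scheme can be sketched as below. This is one reading of the prose description, not a transcription of Alg. 1: the decoders and the text encoder are passed in as plain callables, features are toy Python lists, and every name (`ar_decode`, `cloze_refine`, `dual_predict_and_refine`, the stub functions) is hypothetical.

```python
def ar_decode(step_fn, features, max_len):
    """Autoregressive decoding: each step sees [B] plus the previous predictions."""
    context, out = ["[B]"], []
    for _ in range(max_len):
        ch = step_fn(context, features)
        if ch == "[E]":
            break
        out.append(ch)
        context.append(ch)
    return out

def cloze_refine(refine_fn, prediction, features):
    """Cloze-style refinement: the whole previous prediction is the context."""
    return refine_fn(["[B]"] + prediction, features)

def dual_predict_and_refine(visual_step, visual_refine, cross_step, cross_refine,
                            encode_text, F_i, max_len=25, refine_iters=1):
    y_vis = ar_decode(visual_step, F_i, max_len)   # 1) visual prediction
    F_c = F_i + encode_text(y_vis)                 # cross-modal feature [F_i; F_t]
    y = ar_decode(cross_step, F_c, max_len)        # 2) cross-modal prediction
    for _ in range(refine_iters):                  # 3) iterative refinement
        y_vis = cloze_refine(visual_refine, y_vis, F_i)
        F_c = F_i + encode_text(y_vis)
        y = cloze_refine(cross_refine, y, F_c)
    return y

# Toy stubs: the "visual" branch misreads "l" as "1"; the "cross-modal" branch fixes it.
fix = lambda ch: "l" if ch == "1" else ch
def visual_step(ctx, feats):
    k = len(ctx) - 1
    return feats[k] if k < len(feats) else "[E]"
def cross_step(ctx, feats):
    k, n = len(ctx) - 1, len(feats) // 2  # first half of feats is the visual part
    return fix(feats[k]) if k < n else "[E]"
visual_refine = lambda ctx, feats: ctx[1:]
cross_refine = lambda ctx, feats: [fix(ch) for ch in ctx[1:]]

result = "".join(dual_predict_and_refine(
    visual_step, visual_refine, cross_step, cross_refine,
    encode_text=list, F_i=["c", "1", "i", "p"]))
```

The defaults `max_len=25` and `refine_iters=1` follow the maximum character length and the number of refinement iterations reported in the experimental details.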

IV. EXPERIMENT

IV. 实验

A. Experimental Details

A. 实验细节

We instantiate CLIP4STR with CLIP-ViT-B/16, CLIP-ViT-L/14, and CLIP-ViT-H/14 [38]. Table II presents the main hyper-parameters of CLIP4STR. A reproduction of CLIP4STR is available at https://github.com/VamosC/CLIP4STR.

我们使用CLIP-ViT-B/16、CLIP-ViTL/14和CLIP-ViT-H/14 [38]实例化CLIP4STR。表II展示了CLIP4STR的主要超参数。CLIP4STR的复现代码位于https://github.com/VamosC/CLIP4STR。

Test benchmarks. The evaluation benchmarks include IIIT5K [74], CUTE80 [75], Street View Text (SVT) [76], SVT-Perspective (SVTP) [77], ICDAR 2013 (IC13) [21], ICDAR 2015 (IC15) [22], and three occluded datasets: HOST,

测试基准

评估基准包括IIIT5K [74]、CUTE80 [75]、街景文本(SVT) [76]、透视街景文本(SVTP) [77]、ICDAR 2013(IC13) [21]、ICDAR 2015(IC15) [22]以及三个遮挡数据集——HOST、

WOST [78], and OCTT [14]. Additionally, we utilize 3 recent large benchmarks: COCO-Text (low-resolution, occluded text) [79], ArT (curved and rotated text) [15], and UberText (vertical and rotated text) [16].

WOST [78]和OCTT [14]。此外,我们使用了3个近期的大型基准数据集:COCO-Text(低分辨率、遮挡文本)[79]、ArT(弯曲和旋转文本)[15]以及UberText(垂直和旋转文本)[16]。

Training dataset. 1) MJ+ST: MJSynth (MJ, 9M samples) [58] and SynthText (ST, 6.9M samples) [59]. 2) Real(3.3M): COCO-Text (COCO) [79], RCTW17 [80], UberText (Uber) [16], ArT [15], LSVT [81], MLT19 [82], ReCTS [83], TextOCR [84], and Open Images [85] annotations from the OpenVINO toolkit [86]. These real datasets contain 3.3M images in total. 3) RBU(6.5M): a dataset provided by [62] that combines Real(3.3M), benchmark datasets (the training data of SVT, IIIT5K, IC13, and IC15), and part of Union14M-L [61].

训练数据集

- $\mathbf{MJ+SJ}$:MJSynth(MJ,900万样本)[58] 和 SynthText(ST,690万样本)[59]。

- Real(330万):COCO-Text(COCO)[79]、RCTW17 [80]、UberText(Uber)[16]、ArT [15]、LSVT [81]、MLT19 [82]、ReCTS [83]、TextOCR [84]、Open Images [85](来自 OpenVINO 工具包 [86] 的标注)。这些真实数据集总计包含 330 万张图像。

- RBU(650万):由 [62] 提供的数据集,整合了 Real(330万)、基准数据集(SVT、IIIT5K、IC13 和 IC15 的训练数据)以及部分 Union14M-L [61]。

Learning strategies. We apply a warm-up and cosine learning rate decay policy. The batch size is kept close to 1024. For large models, this is achieved by gradient accumulation. For synthetic data, we train CLIP4STR-B for 6 epochs and CLIP4STR-L for 5 epochs. For RBU(6.5M) data, we train CLIP4STR-B, CLIP4STR-L, and CLIP4STR-H for 11, 5, and 4 epochs, respectively. The AdamW [87] optimizer is adopted with a weight decay of 0.2. All experiments are performed with mixed precision [88].

学习策略

我们采用预热和余弦学习率衰减策略。批量大小保持在接近1024。对于大型模型,这是通过梯度累积实现的。对于合成数据,我们训练CLIP4STR-B 6个周期,CLIP4STR-L 5个周期。对于RBU(6.5M)数据,我们分别训练CLIP4STR-B、CLIP4STR-L和CLIP4STR-H 11、5和4个周期。采用AdamW [87]优化器,权重衰减值为0.2。所有实验均使用混合精度 [88]进行。
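A warm-up plus cosine decay policy can be sketched as follows. The linear warm-up shape and the warm-up length are assumptions chosen for illustration (the text does not specify them), as are the function name, the step counts, and the peak value in the example.

```python
import math

def warmup_cosine_lr(step, total_steps, peak_lr, warmup_steps):
    """Linear warm-up to peak_lr, then cosine decay towards zero.

    warmup_steps is a hypothetical parameter; the paper does not state it.
    """
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * peak_lr * (1.0 + math.cos(math.pi * progress))

# Example: 1000 steps, 100 of them warm-up, with an illustrative peak rate.
schedule = [warmup_cosine_lr(s, 1000, 1.68e-4, 100) for s in range(1000)]
```

The rate rises for the first 100 steps, reaches the peak at the end of warm-up, and then decreases monotonically over the remaining steps.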

Data and label processing. RandAugment [97], excluding sharpness and invert, is used with layer depth 3 and magnitude 5. The image size is $224\times224$. The sequence length of the text encoder is 16. The maximum length of the character sequence is 25. Considering an extra [B] or [E] token, we set $N=26$. During training, the number of character classes is $C=94$, i.e., mixed-case alphanumeric characters and punctuation marks are recognized. During inference, we only use a lowercase alphanumeric charset, i.e., $C=36$. The number of iterative refinement steps is $T_{i}=1$. The evaluation metric is word accuracy.

B. Comparison to State-of-the-art

We compare CLIP4STR with previous SOTA methods on 10 common STR benchmarks in Table III. CLIP4STR surpasses the previous methods by a significant margin, achieving new SOTA performance. Notably, CLIP4STR performs exceptionally well on irregular text datasets, such as IC15 (incidental scene text), SVTP (perspective scene text), CUTE (curved text line images), HOST (heavily occluded scene text), and WOST (weakly occluded scene text). This aligns with the examples shown in Figs. 1 and 2 and supports our motivation for adapting CLIP as a scene text reader, as CLIP demonstrates robust identification of regular and irregular text. CLIP4STR exhibits excellent reading ability on occluded datasets, surpassing the previous SOTA by $7.8\%$ and $5.4\%$ in the best case on HOST and WOST, respectively. This ability can be attributed to the pre-trained text encoder and cross-modal decoder, which can infer missing characters using text semantics or visual features. CLIP4STR also performs much better than CLIP-OCR [66] and CLIPTER [65], both of which work in a similar direction to CLIP4STR. This demonstrates that directly transferring CLIP into an STR reader is more effective than the distillation method [66] or utilizing CLIP features of the global image as auxiliary context [65].

TABLE III: Word accuracy on 10 common benchmarks. The best and second-best results are highlighted. Benchmark datasets (B) - SVT, IIIT5K, IC13, and IC15. ‘N/A’ for not applicable. ♯Reproduced by PARSeq [24].

| Method | Pre-training data | Training data | IIIT5K (3,000) | SVT (647) | IC13 (1,015) | IC15 (1,811) | IC15 (2,077) | SVTP (645) | CUTE (288) | HOST (2,416) | WOST (2,416) | OCTT (1,911) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Zero-shot CLIP [1] | WIT 400M [1] | N/A | 90.0 | — | — | — | — | — | — | — | — | — |
| SRN [89] | ImageNet-1K | MJ+ST | 94.8 | 91.5 | — | 82.7 | — | 85.1 | 87.8 | — | — | — |
| TextScanner [45] | N/A | MJ+ST | 95.7 | 92.7 | 94.9 | — | 83.5 | 84.8 | 91.6 | — | — | — |
| RCEED [90] | N/A | MJ+ST+B | 94.9 | 91.8 | — | — | 82.2 | 83.6 | 91.7 | — | — | — |
| TRBA [60] | N/A | MJ+ST | 92.1 | 88.9 | — | 86.0 | — | 89.3 | 89.2 | — | — | — |
| VisionLAN [78] | From scratch | MJ+ST | 95.8 | 91.7 | — | 83.7 | — | 86.0 | 88.5 | 50.3 | 70.3 | — |
| ABINet [9] | WikiText-103 | MJ+ST | 96.2 | 93.5 | — | 86.0 | — | 89.3 | 89.2 | — | — | — |
| ViTSTR-B [10] | ImageNet-1K | MJ+ST | 88.4 | 87.7 | 92.4 | 78.5 | 72.6 | 81.8 | 81.3 | — | — | — |
| LevOCR [49] | WikiText-103 | MJ+ST | 96.6 | 92.9 | — | 86.4 | — | 88.1 | 91.7 | — | — | — |
| MATRN [50] | WikiText-103 | MJ+ST | 96.6 | 95.0 | 95.8 | 86.6 | 82.8 | 90.6 | 93.5 | — | — | — |
| PETR [91] | N/A | MJ+ST | 95.8 | 92.4 | 97.0 | 83.3 | — | 86.2 | 89.9 | — | — | — |
| DiG-ViT-B [11] | Textimages-33M | MJ+ST | 96.7 | 94.6 | 96.9 | 87.1 | — | 91.0 | 91.3 | 74.9 | 82.3 | — |
| PARSeq$_A$ [24] | From scratch | MJ+ST | 97.0 | 93.6 | 96.2 | 86.5 | 82.9 | 88.9 | 92.2 | — | — | — |
| TrOCR$_{Large}$ [12] | Textlines-684M | MJ+ST+B | 94.1 | 96.1 | 97.3 | 88.1 | 84.1 | 93.0 | 95.1 | — | — | — |
| SIGA$_T$ [92] | ImageNet-1K | MJ+ST | 96.6 | 95.1 | 96.8 | 86.6 | 83.0 | 90.5 | 93.1 | — | — | — |
| CLIP-OCR [66] | From scratch | MJ+ST | 97.3 | 94.7 | — | 87.2 | — | 89.9 | 93.1 | — | — | — |
| LISTER-B [93] | N/A | MJ+ST | 96.9 | 93.8 | — | 87.2 | — | 87.5 | 93.1 | — | — | — |
| CLIPTER [65] | N/A | Real(1.5M) | — | 96.6 | — | — | 85.9 | — | — | — | — | — |
| DiG-ViT-B [11] | Textimages-33M | Real(2.8M) | 97.6 | 96.5 | 97.6 | 88.9 | — | 92.9 | 96.5 | 62.8 | 79.7 | — |
| CCD-ViT-B [94] | Textimages-33M | Real(2.8M) | 98.0 | 97.8 | 98.3 | 91.6 | — | 96.1 | 98.3 | — | — | — |
| ViTSTR-S [10]♯ | ImageNet-1K | Real(3.3M) | 97.9 | 96.0 | 97.8 | 89.0 | 87.5 | 91.5 | 96.2 | 64.5 | 77.9 | 64.2 |
| ABINet [9]♯ | From scratch | Real(3.3M) | 98.6 | 98.2 | 98.0 | 90.5 | 88.7 | 94.1 | 97.2 | 72.2 | 85.0 | 70.1 |
| PARSeq$_A$ [24] | From scratch | Real(3.3M) | 99.1 | 97.9 | 98.4 | 90.7 | 89.6 | 95.7 | 98.3 | 74.4 | 85.4 | 73.1 |
| MAERec-B [61] | Union14M-U | Union14M-L | 98.5 | 97.8 | 98.1 | — | 89.5 | 94.4 | 98.6 | — | — | — |
| CLIP4STR-B | — | MJ+ST | 97.7 | 95.2 | 96.1 | 87.6 | 84.2 | 91.3 | 95.5 | 79.8 | 87.0 | 57.1 |
| CLIP4STR-L | WIT 400M | MJ+ST | 98.0 | 95.2 | 96.9 | 87.7 | 84.5 | 93.3 | 95.1 | 82.7 | 88.8 | 59.2 |
| CLIP4STR-B | — | Real(3.3M) | 99.2 | 98.3 | 98.3 | 91.4 | 90.6 | 97.2 | 99.3 | 77.5 | 87.5 | 81.8 |
| CLIP4STR-L | — | Real(3.3M) | 99.5 | 98.5 | 98.5 | 91.3 | 90.8 | 97.4 | 99.0 | 79.8 | 89.2 | 84.9 |
| CLIP4STR-B | DataComp-1B [95] | Real(3.3M) | 99.4 | 98.6 | 98.3 | 90.8 | 90.3 | 97.8 | 99.0 | 77.6 | 87.9 | 83.1 |
| CLIP4STR-B | — | RBU(6.5M) | 99.5 | 98.3 | 98.6 | 91.4 | 91.1 | 98.0 | 99.0 | 79.3 | 88.8 | 83.5 |
| CLIP4STR-L | — | RBU(6.5M) | 99.6 | 98.6 | 99.0 | 91.9 | 91.4 | 98.1 | 99.7 | 81.1 | 90.6 | 85.9 |
| CLIP4STR-H | DFN-5B [96] | RBU(6.5M) | 99.5 | 99.1 | 98.9 | 91.7 | 91.0 | 98.0 | 99.0 | 82.6 | 90.9 | 86.5 |

In addition to the small-scale common benchmarks, we also evaluate CLIP4STR on 3 larger and more challenging benchmarks. These benchmarks primarily consist of irregular texts with various shapes, low-resolution images, rotation, etc. The results, shown in Table IV, further demonstrate the strong generalization ability of CLIP4STR. CLIP4STR substantially outperforms the previous SOTA methods on these three large and challenging benchmarks. At the same time, we observe that scaling CLIP4STR to 1B parameters does not bring much improvement in performance. CLIP4STR-L is comparable to CLIP4STR-H in most cases, while CLIP4STR-H is superior in recognizing occluded characters (WOST, HOST, OCTT).

TABLE IV: Word accuracy on 3 large benchmarks. ♯Reproduced by PARSeq [24].

| Method | Training data | COCO (9,825) | ArT (35,149) | Uber (80,551) |
|---|---|---|---|---|
| ViTSTR-S [10]♯ | MJ+ST | 56.4 | 66.1 | 37.6 |
| TRBA [60]♯ | MJ+ST | 61.4 | 68.2 | 38.0 |
| ABINet [9]♯ | MJ+ST | 57.1 | 65.4 | 34.9 |
| PARSeq$_A$ [24] | MJ+ST | 64.0 | 70.7 | 42.0 |
| MPSTRA [98] CLIP-OCR [66] CLIP4STR-B CLIP4STR-L | MJ+ST MJ+ST MJ+ST MJ+ST | 64.5 66.5 66.3 | 69.9 70.5 72.8 | 42.8 42