Attention-Guided Generative Adversarial Networks for Unsupervised Image-to-Image Translation

Abstract—State-of-the-art approaches based on Generative Adversarial Networks (GANs) are able to learn a mapping function from one image domain to another with unpaired image data. However, these methods often produce artifacts and can only convert low-level information, failing to transfer the high-level semantic parts of images. The main reason is that the generators cannot detect the most discriminative semantic part of an image, which leads to low-quality generated images. To handle this limitation, in this paper we propose a novel Attention-Guided Generative Adversarial Network (AGGAN), which can detect the most discriminative semantic object and minimize changes to unwanted parts in semantic manipulation problems, without using extra data or models. The attention-guided generators in AGGAN produce attention masks via a built-in attention mechanism, and then fuse the input image with the attention mask to obtain a high-quality target image. Moreover, we propose a novel attention-guided discriminator which considers only the attended regions. The proposed AGGAN is trained in an end-to-end fashion with an adversarial loss, a cycle-consistency loss, a pixel loss and an attention loss. Both qualitative and quantitative results demonstrate that our approach generates sharper and more accurate images than existing models. The code is available at https://github.com/Ha0Tang/AttentionGAN.

Index Terms—GANs, Image-to-Image Translation, Attention

I. INTRODUCTION

Recently, Generative Adversarial Networks (GANs) [8] have received considerable attention across many communities, e.g., computer vision, natural language processing, and audio and video processing. GANs are generative models that are particularly well suited to image generation tasks. Recent works in computer vision, image processing and computer graphics have produced powerful translation systems in supervised settings such as Pix2pix [11], where paired images are required. However, paired training data are usually difficult and expensive to obtain. In particular, input-output pairs for tasks such as artistic stylization can be even harder to acquire, since the desired output is highly complex and typically requires artistic authoring. To tackle this problem, CycleGAN [47], DualGAN [43] and DiscoGAN [13] learn the mapping from one image domain to another using unpaired image data.

Despite these efforts, image-to-image translation, e.g., converting a neutral expression into a happy one, remains challenging because facial expression changes are non-linear and unaligned, and they vary with the appearance of the face. Moreover, most previous models change unwanted objects during translation and are easily affected by background changes. To address these limitations, Liang et al. propose ContrastGAN [18], which uses object mask annotations from each dataset. ContrastGAN first crops a part of the image according to the mask, then translates it, and finally pastes it back. Although this approach obtains promising results, training data with object masks are hard to collect. More importantly, it assumes that the object shape does not change after the semantic modification. Another option is to train an extra model to detect the object masks and fit them into the generated image patches [6], [12]. In this case the number of network parameters grows, which in turn increases the training complexity in both time and space.

To overcome the aforementioned issues, in this paper we propose a novel Attention-Guided Generative Adversarial Network (AGGAN) for image translation that uses no extra data or models. Similar to CycleGAN [47], the proposed AGGAN comprises two generators and two discriminators. Fig. 1 illustrates the differences between previous representative works and the proposed AGGAN. The two attention-guided generators in AGGAN have built-in attention modules. These modules disentangle the discriminative semantic object from the unwanted part by producing an attention mask and a content mask. We then fuse the input image with the new patches produced through the attention mask to obtain high-quality results. We also constrain the generators with pixel-wise and cycle-consistency loss functions, which force the generators to reduce unnecessary changes. Moreover, we propose two novel attention-guided discriminators which consider only the attended regions. The proposed AGGAN is trained in an end-to-end fashion and produces the attention mask, the content mask and the target image at the same time. Experimental results on four publicly available datasets demonstrate that AGGAN produces higher-quality images than state-of-the-art methods.

The contributions of this paper are summarized as follows: • We propose a novel Attention-Guided Generative Adversarial Network (AGGAN) for unsupervised image-to-image translation. • We propose a novel generator architecture with built-in at

本文的贡献总结如下:

- 我们提出了一种新颖的注意力引导生成对抗网络 (Attention-Guided Generative Adversarial Network, AGGAN) ,用于无监督图像到图像转换。

- 我们提出了一种新型生成器架构,内置注意力机制。

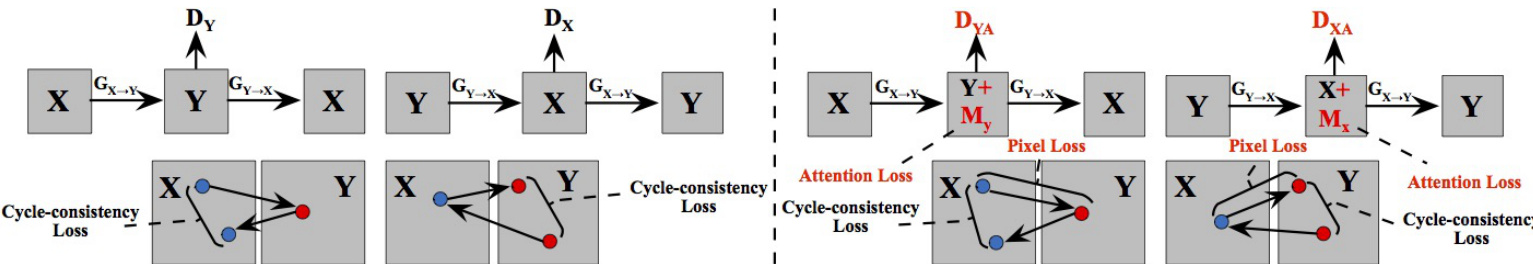

Fig. 1: Comparison of previous frameworks, e.g., CycleGAN [47], DualGAN [43] and DiscoGAN [13] (Left), and the proposed AGGAN (Right). The contribution of AGGAN is that the proposed generators can produce the attention mask $M_{x}$ and $M_{y}$ ) via the built-in attention module and then the produced attention mask and content mask mixed with the input image to obtain the targeted image. Moreover, we also propose two attention-guided disc rim in at or s $D_{X A}$ , $D_{Y A}$ , which aim to consider only the attended regions. Finally, for better optimizing the proposed AGGAN, we employ pixel loss, cycle-consistency loss and attention loss.

图 1: 现有框架(如CycleGAN [47]、DualGAN [43]和DiscoGAN [13])(左)与提出的AGGAN(右)对比。AGGAN的贡献在于:通过内置注意力模块,生成器可产生注意力掩码$M_{x}$和$M_{y}$,随后将生成的注意力掩码与内容掩码混合输入图像以获取目标图像。此外,我们还提出两个注意力引导判别器$D_{X A}$、$D_{Y A}$,其仅关注注意力区域。最后,为更好优化AGGAN,我们采用像素损失、循环一致性损失和注意力损失。

tention mechanism, which can detect the most disc rim i native semantic part of images in different domains. • We propose a novel attention-guided disc rim in at or which only consider the attended regions. Moreover, the proposed attention-guided generator and disc rim in at or can be easily used to other GAN models. • Extensive results demonstrate that the proposed AGGAN can generate sharper faces with clearer details and more realistic expressions compared with baseline models.

II. RELATED WORK

Generative Adversarial Networks (GANs) [8] are powerful generative models which have achieved impressive results on various computer vision tasks, e.g., image generation [5], [27], [9], image editing [34], [35] and image inpainting [17], [10]. To generate meaningful images that meet user requirements, Conditional GAN (CGAN) [26] uses conditioning information to guide the image generation process. The conditioning information can be discrete labels [28], text [22], [31], object keypoints [32], human skeletons [39] or reference images [11]. CGANs that use reference images as conditioning information have tackled many problems, e.g., text-to-image translation [22], image-to-image translation [11] and video-to-video translation [42].

Image-to-Image Translation models learn a translation function using CNNs. Pix2pix [11] is a conditional framework that uses a CGAN to learn a mapping function from input to output images. Similar ideas have been applied to many other tasks, such as generating photographs from sketches [33] or vice versa [38]. However, most real-world tasks suffer from having few or no paired input-output samples available. To overcome this limitation, the unpaired image-to-image translation task has been proposed. Unlike prior works, unpaired image translation tries to learn the mapping function without requiring paired training data. Specifically, CycleGAN [47] learns the mappings between two image domains (i.e., from a source domain $X$ to a target domain $Y$) rather than between paired images. Apart from CycleGAN, many other GAN variants have been proposed to tackle the cross-domain problem. For example, CoupledGAN [19] uses a weight-sharing strategy to learn a common representation across domains. The work of [37] exploits certain content features shared between input and output, even though they may differ in style. Kim et al. [13] propose a GAN-based method that learns to discover relations between different domains. A model that learns object transfiguration from two unpaired sets of images is presented in [46]. Tang et al. [41] propose $\mathbf{G}^{2}\mathbf{GAN}$, a robust and scalable approach for unpaired image-to-image translation across multiple domains. However, these models are easily affected by unwanted content and cannot focus on the most discriminative semantic part of images during translation.

Attention-Guided Image-to-Image Translation. To fix the aforementioned limitations, Liang et al. propose ContrastGAN [18], which uses the object mask annotations from each dataset as extra input data. This method assumes that an object's shape does not change after the semantic changes are applied. Another approach is to train a separate segmentation or attention model and fit it into the system. For instance, Mejjati et al. [25] propose attention mechanisms that are jointly trained with the generators and discriminators. Chen et al. propose Attention GAN [6], which uses an extra attention network to generate attention maps so that major attention can be paid to objects of interest. Kastaniotis et al. [12] present ATAGAN, which uses a teacher network to produce attention maps. Zhang et al. [45] propose Self-Attention Generative Adversarial Networks (SAGAN) for image generation. Qian et al. [30] employ a recurrent network to first generate visual attention and then transform a raindrop-degraded image into a clean one. Tang et al. [40] propose a novel Multi-Channel Attention Selection GAN for the challenging cross-view image translation task. Sun et al. [36] generate a facial mask using FCN [21] for face attribute manipulation.

All of the aforementioned methods employ extra networks or data to obtain attention masks, which increases the number of parameters, the training time and the storage space of the whole system. In this work, we propose the Attention-Guided Generative Adversarial Network (AGGAN), whose generators produce the attention masks themselves. To this end, we embed an attention mechanism into the vanilla generator, so no extra model is needed to obtain the attention masks of objects of interest.

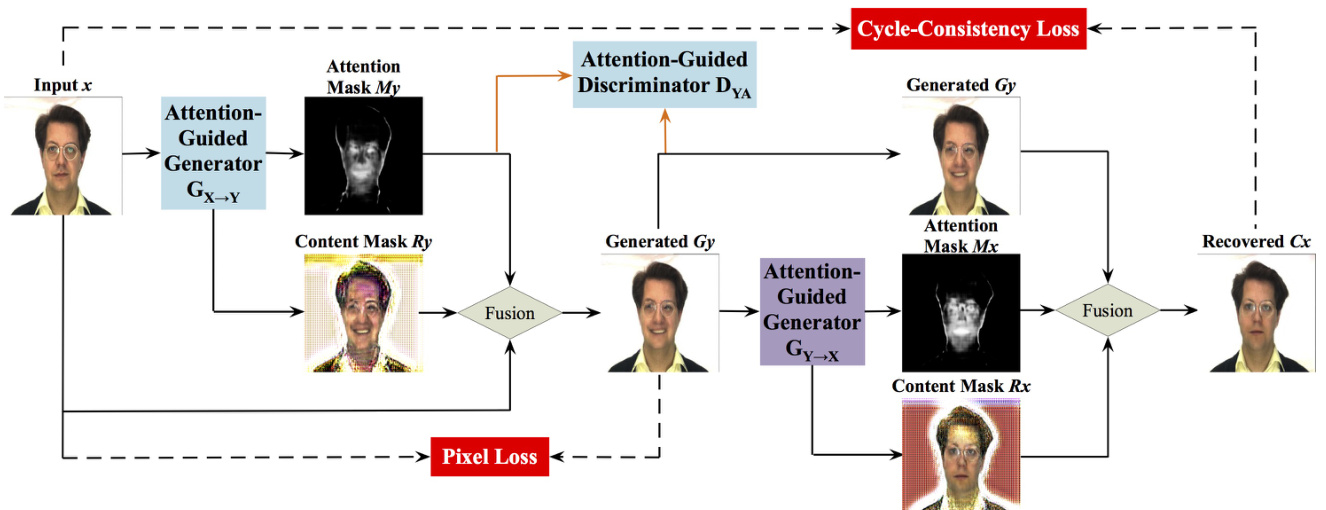

Fig. 2: The framework of the proposed AGGAN. Due to space limitations, we only show one mapping in this figure, i.e., $x\rightarrow[M_{y},R_{y},G_{y}]\rightarrow C_{x}\approx x$. The other mapping is $y\rightarrow[M_{x},R_{x},G_{x}]\rightarrow C_{y}\approx y$. The attention-guided generators have a built-in attention mechanism, which can detect the most discriminative part of images. We then mix the input image, the content mask and the attention mask to synthesize the targeted image. Moreover, to distinguish only the most discriminative content, we also propose an attention-guided discriminator $D_{YA}$. Note that our system does not require supervision, i.e., it needs no pairs of images of the same person with different expressions.

III. METHOD

We first present the attention-guided generator and discriminator of the proposed Attention-Guided Generative Adversarial Network (AGGAN), and then introduce the loss functions used to optimize the model. Finally, we present the implementation details of the whole model, including the network architecture and the training procedure.

A. Attention-Guided Generator

GANs [8] are composed of two competing modules: the generator $G_{X\rightarrow Y}$ and the discriminator $D_{Y}$, where $X$ and $Y$ denote two different image domains. The two modules are trained iteratively against each other as a two-player minimax game. More formally, let $x_{i}\in X$ and $y_{j}\in Y$ denote the training images in the source and target image domains, respectively (for simplicity, we usually omit the subscripts $i$ and $j$). Most current image translation models, e.g., CycleGAN [47] and DualGAN [43], include two mappings $G_{X\rightarrow Y}:x\rightarrow G_{y}$ and $G_{Y\rightarrow X}:y\rightarrow G_{x}$, and two corresponding adversarial discriminators $D_{X}$ and $D_{Y}$. The generator $G_{X\rightarrow Y}$ maps $x$ from the source domain to the generated image $G_{y}$ in the target domain $Y$ and tries to fool the discriminator $D_{Y}$. Meanwhile, $D_{Y}$ improves itself so that it can tell whether a sample is generated or real. The same holds for $G_{Y\rightarrow X}$ and $D_{X}$.

For the proposed AGGAN, we learn two mappings between domains $X$ and $Y$ via two generators with a built-in attention mechanism, i.e., $G_{X\rightarrow Y}:x\rightarrow[M_{y},R_{y},G_{y}]$ and $G_{Y\rightarrow X}:y\rightarrow[M_{x},R_{x},G_{x}]$. Here $M_{x}$ and $M_{y}$ are the attention masks of images $x$ and $y$, respectively; $R_{x}$ and $R_{y}$ are the content masks of images $x$ and $y$, respectively; and $G_{x}$ and $G_{y}$ are the generated images. The attention masks $M_{x}$ and $M_{y}$ define a per-pixel intensity specifying to what extent each pixel of the content masks $R_{x}$ and $R_{y}$ contributes to the final rendered image. In this way, the generator does not need to render static elements and can focus exclusively on the pixels defining the facial movements, leading to sharper and more realistic synthetic images. We then fuse the input image $x$, the generated attention mask $M_{y}$ and the content mask $R_{y}$ to obtain the targeted image $G_{y}$. This fusion disentangles the most discriminative semantic object from the unwanted part of the image. As shown in Fig. 2, the attention-guided generators focus only on the image regions responsible for generating the novel expression, such as the eyes and mouth. They leave the rest of the image, such as hair, glasses and clothes, untouched. A higher intensity in the attention mask means a larger contribution to changing the expression.

To focus on the discriminative semantic parts in two different domains, we design two generators with a built-in attention mechanism, through which the generators produce attention masks in the two domains. The input of each generator is a three-channel image, and its outputs are an attention mask and a content mask. Specifically, the input image of $G_{X\rightarrow Y}$ is $x\in\mathbb{R}^{H\times W\times 3}$, and the outputs are the attention mask $M_{y}\in[0,1]^{H\times W}$ and the content mask $R_{y}\in\mathbb{R}^{H\times W\times 3}$. We then use the following formulation to calculate the final image $G_{y}$:

$$
G_{y}=R_{y}M_{y}+x(1-M_{y}), \tag{1}
$$

where the attention mask $M_{y}$ is replicated across the three channels so that the products are element-wise. The formulation for the generator $G_{Y\rightarrow X}$ and input image $y$ is $G_{x}=R_{x}M_{x}+y(1-M_{x})$. Intuitively, the attention mask $M_{y}$ concentrates on the specific areas where the facial muscles change. Applying it to the content mask $R_{y}$ yields an image whose dynamic areas are clear and whose static areas are not. What remains is the static area, which should be similar in the generated image and the original real image. We therefore take the static area (mostly the background) from the original real image, $(1-M_{y})x$, and merge it with $R_{y}M_{y}$ to obtain the final result $R_{y}M_{y}+x(1-M_{y})$.
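
The following is a minimal sketch of the fusion rule in Eq. (1). It uses plain Python on nested lists so that it runs without dependencies; a real implementation would operate on framework tensors. The helper name `fuse` and the H x W x 3 list layout are illustrative assumptions, not the authors' code.

```python
from typing import List

Image = List[List[List[float]]]  # H x W x 3, indexed as image[h][w][c]
Mask = List[List[float]]         # H x W, values in [0, 1]


def fuse(content: Image, mask: Mask, source: Image) -> Image:
    """Return R*M + x*(1 - M), computed element-wise.

    The single-channel mask value at (h, w) is applied to all three
    colour channels, i.e. the mask is "copied into three channels".
    """
    fused: Image = []
    for h, mask_row in enumerate(mask):
        row = []
        for w, m in enumerate(mask_row):
            row.append([
                content[h][w][c] * m + source[h][w][c] * (1.0 - m)
                for c in range(3)
            ])
        fused.append(row)
    return fused


# Toy 1 x 2 image: the left pixel is fully attended, the right one is not.
x = [[[0.2, 0.2, 0.2], [0.9, 0.9, 0.9]]]
r = [[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]]
m = [[1.0, 0.0]]
print(fuse(r, m, x))  # [[[1.0, 0.0, 0.0], [0.9, 0.9, 0.9]]]
```

Where the mask is 1, the content-mask pixel is copied through. Where it is 0, the input pixel is kept unchanged, and intermediate mask values blend the two.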

B. Attention-Guided Discriminator

Eq. (1) constrains the generators to act only on the attended regions. However, the discriminators still consider the whole image. More specifically, the vanilla discriminator $D_{Y}$ takes the generated image $G_{y}$ or the real image $y$ as input and tries to distinguish between them. Similarly, the discriminator $D_{X}$ tries to distinguish the generated image $G_{x}$ from the real image $x$. To add an attention mechanism to the discriminator so that it considers only attended regions, we propose two attention-guided discriminators. An attention-guided discriminator has the same structure as the vanilla discriminator but also takes the attention mask as input. The attention-guided discriminator $D_{YA}$ tries to distinguish the fake image pairs $[M_{y},G_{y}]$ from the real image pairs $[M_{y},y]$. Similarly, $D_{XA}$ tries to distinguish the fake image pairs $[M_{x},G_{x}]$ from the real image pairs $[M_{x},x]$. In this way, the discriminators can focus on the most discriminative content.
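
The sketch below shows one plausible way to form the discriminator inputs $[M_{y},G_{y}]$ and $[M_{y},y]$. It assumes that $[\cdot,\cdot]$ denotes channel-wise concatenation, so each pixel carries the mask value followed by its three colour values. The function name `attention_pair` is hypothetical, and the discriminator network itself is omitted.

```python
from typing import List

Image = List[List[List[float]]]  # H x W x C, indexed as image[h][w][c]
Mask = List[List[float]]         # H x W


def attention_pair(mask: Mask, image: Image) -> Image:
    """Concatenate mask and image along the channel axis: H x W x (1 + C)."""
    if len(mask) != len(image) or len(mask[0]) != len(image[0]):
        raise ValueError("mask and image must share the same H x W")
    return [
        [[mask[h][w]] + list(image[h][w]) for w in range(len(mask[0]))]
        for h in range(len(mask))
    ]


m_y = [[1.0, 0.0]]                          # attention mask produced by G_{X->Y}
y = [[[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]]    # real target-domain image
g_y = [[[0.7, 0.7, 0.7], [0.4, 0.5, 0.6]]]  # generated image

real_pair = attention_pair(m_y, y)    # shown to D_YA as a "real" sample
fake_pair = attention_pair(m_y, g_y)  # shown to D_YA as a "fake" sample
print(real_pair)  # [[[1.0, 0.1, 0.2, 0.3], [0.0, 0.4, 0.5, 0.6]]]
```

Both pairs share the same mask $M_{y}$, so the discriminator can learn to weigh pixels by their attention value. Under this concatenation assumption, its first layer would take four input channels instead of three.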

C. Optimization Objective

Vanilla Adversarial Loss. Following [8], the adversarial loss $\mathcal{L}_{GAN}(G_{X\rightarrow Y},D_{Y})$ is formulated as follows:

$$
\begin{aligned}
\mathcal{L}_{GAN}(G_{X\rightarrow Y},D_{Y})={}&\mathbb{E}_{y\sim p_{\mathrm{data}}(y)}\left[\log D_{Y}(y)\right]\\
&+\mathbb{E}_{x\sim p_{\mathrm{data}}(x)}\left[\log\left(1-D_{Y}(G_{X\rightarrow Y}(x))\right)\right].
\end{aligned}\tag{2}
$$

$G_{X\rightarrow Y}$ tries to minimize the adversarial objective $\mathcal{L}_{GAN}(G_{X\rightarrow Y},D_{Y})$ while $D_{Y}$ tries to maximize it. The goal of $G_{X\rightarrow Y}$ is to generate an image $G_{y}=G_{X\rightarrow Y}(x)$ that looks similar to the images from domain $Y$. Meanwhile, $D_{Y}$ aims to distinguish between the generated images $G_{X\rightarrow Y}(x)$ and the real images $y$. An adversarial loss similar to Eq. (2) is defined for the mapping function $G_{Y\rightarrow X}$ and its discriminator $D_{X}$: $\mathcal{L}_{GAN}(G_{Y\rightarrow X},D_{X})=\mathbb{E}_{x\sim p_{\mathrm{data}}(x)}[\log D_{X}(x)]+\mathbb{E}_{y\sim p_{\mathrm{data}}(y)}[\log(1-D_{X}(G_{Y\rightarrow X}(y)))]$.
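
As a numerical sanity check of Eq. (2), the sketch below estimates the objective from a batch of discriminator outputs, replacing each expectation with a sample mean. The clipping constant `eps` is an addition of ours that keeps the logarithms finite; it is not part of the paper's formulation.

```python
import math
from typing import Sequence


def gan_objective(d_real: Sequence[float], d_fake: Sequence[float],
                  eps: float = 1e-7) -> float:
    """Sample estimate of E_y[log D(y)] + E_x[log(1 - D(G(x)))].

    d_real: discriminator probabilities on real images y.
    d_fake: discriminator probabilities on generated images G(x).
    The discriminator tries to maximise this value; the generator, to minimise it.
    """
    def clip(p: float) -> float:
        # Keep probabilities away from 0 and 1 so both logs stay finite.
        return min(max(p, eps), 1.0 - eps)

    real_term = sum(math.log(clip(p)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - clip(p)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
print(round(gan_objective([0.5, 0.5], [0.5, 0.5]), 4))  # -1.3863
# A confident, correct discriminator pushes the objective towards 0.
print(round(gan_objective([0.9, 0.9], [0.1, 0.1]), 4))  # -0.2107
```

The same function also covers the attention-guided adversarial loss $\mathcal{L}_{AGAN}$ introduced next. In that case `d_real` and `d_fake` would be the outputs of $D_{YA}$ on the pairs $[M_{y},y]$ and $[M_{y},G_{X\rightarrow Y}(x)]$.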

Attention-Guided Adversarial Loss. We propose an attention-guided adversarial loss for training the attention-guided discriminators. The min-max game between the attention-guided discriminator $D_{YA}$ and the generator $G_{X\rightarrow Y}$ is played through the following objective function:

$$
\begin{aligned}
\mathcal{L}_{AGAN}(G_{X\rightarrow Y},D_{YA})={}&\mathbb{E}_{y\sim p_{\mathrm{data}}(y)}\left[\log D_{YA}([M_{y},y])\right]\\
&+\mathbb{E}_{x\sim p_{\mathrm{data}}(x)}\left[\log\left(1-D_{YA}([M_{y},G_{X\rightarrow Y}(x)])\right)\right],
\end{aligned}
$$

where $D_{YA}$ aims to distinguish between the generated image pairs $[M_{y},G_{X\rightarrow Y}(x)]$ and the real image pairs $[M_{y},y]$. We also have an analogous loss $\mathcal{L}_{AGAN}(G_{Y\rightarrow X},D_{XA})$ for the discriminator $D_{XA}$ and the generator $G_{Y\rightarrow X}$.

Cycle-Consistency Loss. Note that CycleGAN [47] and DualGAN [43] differ from Pix2pix [11] in that their training data are unpaired. The cycle-consistency loss is used to enforce forward and backward consistency. It can be regarded as providing “pseudo” pairs of training data, even though the target domain contains no image that corresponds to a given source-domain input. The cycle-consistency loss is defined as:

$$
\begin{aligned}
\mathcal{L}_{cycle}(G_{X\rightarrow Y},G_{Y\rightarrow X})={}&\mathbb{E}_{x\sim p_{\mathrm{data}}(x)}\left[\lVert G_{Y\rightarrow X}(G_{X\rightarrow Y}(x))-x\rVert_{1}\right]\\
&+\mathbb{E}_{y\sim p_{\mathrm{data}}(y)}\left[\lVert G_{X\rightarrow Y}(G_{Y\rightarrow X}(y))-y\rVert_{1}\right].
\end{aligned}
$$

The reconstructed image $C_{x}=G_{Y\rightarrow X}(G_{X\rightarrow Y}(x))$ should closely match the input image $x$. Likewise, $C_{y}=G_{X\rightarrow Y}(G_{Y\rightarrow X}(y))$ should closely match $y$.
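
The cycle-consistency term can be illustrated with toy "generators" acting on flattened pixel lists. The sketch averages the absolute error over pixels, which differs from the summed $\ell_1$ norm only by a constant factor, and it replaces each expectation with a mean over a small batch. The scaling functions `double`, `halve` and `quarter` are hypothetical stand-ins for real networks.

```python
from typing import Callable, List, Sequence

Vector = List[float]                    # a flattened image
Generator = Callable[[Vector], Vector]


def mean_abs_error(a: Sequence[float], b: Sequence[float]) -> float:
    """Per-pixel mean of |a - b| (a normalised L1 distance)."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_loss(g_xy: Generator, g_yx: Generator,
               xs: List[Vector], ys: List[Vector]) -> float:
    """Batch mean of |G_YX(G_XY(x)) - x| plus batch mean of |G_XY(G_YX(y)) - y|."""
    forward = sum(mean_abs_error(g_yx(g_xy(x)), x) for x in xs) / len(xs)
    backward = sum(mean_abs_error(g_xy(g_yx(y)), y) for y in ys) / len(ys)
    return forward + backward


def double(v: Vector) -> Vector:
    return [2.0 * p for p in v]


def halve(v: Vector) -> Vector:
    return [0.5 * p for p in v]


def quarter(v: Vector) -> Vector:
    return [0.25 * p for p in v]


xs, ys = [[1.0, 2.0]], [[4.0]]
print(cycle_loss(double, halve, xs, ys))    # 0.0  (the mappings are exact inverses)
print(cycle_loss(double, quarter, xs, ys))  # 2.75 (reconstructions are off)
```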

Pixel Loss. To reduce changes and constrain the generators, we adopt a pixel loss between the input images and the generated images. We express this loss as:

$$
\begin{aligned}
\mathcal{L}_{pixel}(G_{X\rightarrow Y},G_{Y\rightarrow X})={}&\mathbb{E}_{x\sim p_{\mathrm{data}}(x)}\left[\lVert G_{X\rightarrow Y}(x)-x\rVert_{1}\right]\\
&+\mathbb{E}_{y\sim p_{\mathrm{data}}(y)}\left[\lVert G_{Y\rightarrow X}(y)-y\rVert_{1}\right].
\end{aligned}
$$

We adopt the $L1$ distance as the loss measurement in the pixel loss. Note that pixel losses are usually used in paired image-to-image translation models such as Pix2pix [11], whereas we use one in AGGAN for the unpaired image-to-image translation task.
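
Combined with the fusion rule of Eq. (1), the pixel loss only "sees" the attended region. Substituting $G_{y}=R_{y}M_{y}+x(1-M_{y})$ gives $G_{y}-x=M_{y}(R_{y}-x)$, so the penalty grows with both the mask value and the amount of change. The single-channel sketch below checks this on a toy example for the $X\rightarrow Y$ term only. The function names are illustrative, and the per-pixel mean stands in for the summed $L1$ norm.

```python
from typing import List, Sequence


def fuse_gray(content: Sequence[float], mask: Sequence[float],
              source: Sequence[float]) -> List[float]:
    """Single-channel version of Eq. (1): R*M + x*(1 - M)."""
    return [r * m + s * (1.0 - m) for r, m, s in zip(content, mask, source)]


def pixel_loss(generated: Sequence[float], source: Sequence[float]) -> float:
    """Per-pixel mean absolute difference between G(x) and x."""
    return sum(abs(g - s) for g, s in zip(generated, source)) / len(source)


x = [0.0, 0.0, 0.0, 0.0]
r = [1.0, 1.0, 1.0, 1.0]

# Attending to one pixel out of four changes a quarter of the image.
print(pixel_loss(fuse_gray(r, [1.0, 0.0, 0.0, 0.0], x), x))  # 0.25
# Attending to nothing reproduces the input exactly.
print(pixel_loss(fuse_gray(r, [0.0, 0.0, 0.0, 0.0], x), x))  # 0.0
```

The second case suggests why the pixel loss is only one term among several. On its own it would favour an empty mask, so the adversarial terms are what push the generator to change the attended regions at all.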

Attention Loss. When training AGGAN, we have no ground-truth annotations for the attention masks; they are learned from the resulting gradients of the attention-guided discriminators and the remaining losses. However, as indicated in GANimation [29], the attention masks can easily saturate to 1, which renders the attention-guided generator ineffective. To prevent this, we apply Total Variation Regularization to the attention masks $M_{y}$ and $M_{x}$. The attention loss of mask $M_{x}$ is therefore defined as:

$$
\mathcal{L}_{tv}(M_{x})=\sum_{w,h=1}^{W,H}\Big(\left|M_{x}(w+1,h)-M_{x}(w,h)\right|+\left|M_{x}(w,h+1)-M_{x}(w,h)\right|\Big),
$$

where $W$ and $H$ are the width and height of $M_{x}$; $\mathcal{L}_{tv}(M_{y})$ is defined analogously.
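
The sketch below is a direct transcription of the total-variation term for a single-channel mask stored as `mask[h][w]`. We read the boundary terms as skipped: differences are taken only between neighbours that lie inside the $W\times H$ grid. This boundary convention is our assumption, and the function name `tv_loss` is illustrative.

```python
from typing import List

Mask = List[List[float]]  # indexed as mask[h][w]


def tv_loss(mask: Mask) -> float:
    """Anisotropic total variation of a 2-D mask.

    Sums |M(w+1, h) - M(w, h)| + |M(w, h+1) - M(w, h)| over all positions,
    skipping neighbour pairs that would fall outside the mask (assumed
    boundary convention).
    """
    height, width = len(mask), len(mask[0])
    total = 0.0
    for h in range(height):
        for w in range(width):
            if w + 1 < width:   # horizontal neighbour
                total += abs(mask[h][w + 1] - mask[h][w])
            if h + 1 < height:  # vertical neighbour
                total += abs(mask[h + 1][w] - mask[h][w])
    return total


stripe = [[0.0, 1.0],
          [0.0, 1.0]]
checker = [[0.0, 1.0],
           [1.0, 0.0]]
saturated = [[1.0, 1.0],
             [1.0, 1.0]]
print(tv_loss(stripe), tv_loss(checker), tv_loss(saturated))  # 2.0 4.0 0.0
```

A constant mask, including one saturated at 1, has zero total variation. On its own, therefore, this term mainly rewards spatially smooth masks.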

Full Objective. Thus, the complete objective loss of AGGAN can be formulated as follows:

$$
\begin{aligned}
\mathcal{L}(G_{X\rightarrow Y},G_{Y\rightarrow X},D_{X},D_{Y},D_{XA},D_{YA})={}&\lambda_{gan}\big[\mathcal{L}_{GAN}(G_{X\rightarrow Y},D_{Y})+\mathcal{L}_{GAN}(G_{Y\rightarrow X},D_{X})\\
&\qquad+\mathcal{L}_{AGAN}(G_{X\rightarrow Y},D_{YA})+\mathcal{L}_{AGAN}(G_{Y\rightarrow X},D_{XA})\big]\\
&+\lambda_{cycle}\mathcal{L}_{cycle}(G_{X\rightarrow Y},G_{Y\rightarrow X})\\
&+\lambda_{pixel}\mathcal{L}_{pixel}(G_{X\rightarrow Y},G_{Y\rightarrow X})\\
&+\lambda_{tv}\big[\mathcal{L}_{tv}(M_{x})+\mathcal{L}_{tv}(M_{y})\big],
\end{aligned}
$$

where $\lambda_{gan}$, $\lambda_{cycle}$, $\lambda_{pixel}$ and $\lambda_{tv}$ are parameters controlling the relative importance of the objective terms. We aim to solve:

$$
G_{X\rightarrow Y}^{*},G_{Y\rightarrow X}^{*}=\arg\min_{G_{X\rightarrow Y},G_{Y\rightarrow X}}\;\max_{D_{X},D_{Y},D_{XA},D_{YA}}\mathcal{L}(G_{X\rightarrow Y},G_{Y\rightarrow X},D_{X},D_{Y},D_{XA},D_{YA}).
$$
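
The bracket structure of the full objective can be made explicit in code. The four adversarial terms share the single weight $\lambda_{gan}$, and the two total-variation terms share $\lambda_{tv}$. The term values and weights below are hypothetical placeholders chosen to keep the arithmetic easy to follow; they are not the settings used in the paper.

```python
from typing import Dict


def full_objective(terms: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum mirroring the full AGGAN objective."""
    adversarial = (terms["gan_xy"] + terms["gan_yx"]        # L_GAN, both mappings
                   + terms["agan_xy"] + terms["agan_yx"])   # L_AGAN, both mappings
    return (weights["gan"] * adversarial
            + weights["cycle"] * terms["cycle"]
            + weights["pixel"] * terms["pixel"]
            + weights["tv"] * (terms["tv_x"] + terms["tv_y"]))


terms = {"gan_xy": 1.0, "gan_yx": 2.0, "agan_xy": 0.5, "agan_yx": 0.5,
         "cycle": 0.25, "pixel": 2.0, "tv_x": 1.0, "tv_y": 3.0}
weights = {"gan": 1.0, "cycle": 10.0, "pixel": 0.5, "tv": 0.25}  # hypothetical values
print(full_objective(terms, weights))  # 8.5
```

In the min-max problem above, the generators are updated to decrease this quantity, while the four discriminators are updated to increase its adversarial part. The cycle, pixel and total-variation terms do not depend on the discriminators at all.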

D. Implementation Details

Network Architecture. For fair comparison, we use the generat