Universal Domain Adaptation via Compressive Attention Matching

Abstract

Universal domain adaptation (UniDA) aims to transfer knowledge from the source domain to the target domain without any prior knowledge about the label set. The challenge lies in determining whether target samples belong to common categories. Mainstream methods make this judgment based on sample features, which overemphasizes global information while ignoring the most crucial local objects in the image, resulting in limited accuracy. To address this issue, we propose a Universal Attention Matching (UniAM) framework that exploits the self-attention mechanism in the vision transformer to capture crucial object information. The proposed framework introduces a novel Compressive Attention Matching (CAM) approach to explore the core information by compressively representing attentions. Furthermore, CAM incorporates a residual-based measurement to determine sample commonness. Using this measurement, UniAM achieves domain-wise and category-wise Common Feature Alignment (CFA) and Target Class Separation (TCS). Notably, UniAM is the first method to directly use the attention in the vision transformer for classification. Extensive experiments show that UniAM outperforms the current state-of-the-art methods on various benchmark datasets.

1. Introduction

While deep neural networks have achieved remarkable success on visual tasks [12, 25, 19, 73, 51, 69, 70], their performance relies heavily on the assumption that training and test data are independently and identically distributed (i.i.d.) [57]. However, this assumption is frequently violated in real-world scenarios because of domain shift [52, 33, 34, 68, 67, 74, 72]. Unsupervised Domain Adaptation (DA) [1] has emerged as a promising solution to this limitation by adapting models trained on a source domain to perform well on an unlabeled target domain. Nevertheless, most existing DA approaches [14, 56, 49, 40, 39, 38] assume that the label spaces of the source and target domains are identical, which may not hold in practice. Partial Domain Adaptation (PDA) [4] and Open Set Domain Adaptation (OSDA) [43] have been proposed to handle cases where the label space of one domain includes that of the other, but they still rely on prior knowledge of the label sets, which limits generalization from one scenario to another. Universal domain adaptation (UniDA) [66] considers a more practical and challenging scenario in which the relationship between the source and target label spaces is completely unknown, i.e., there may be any number of common, source-private, and target-private classes.

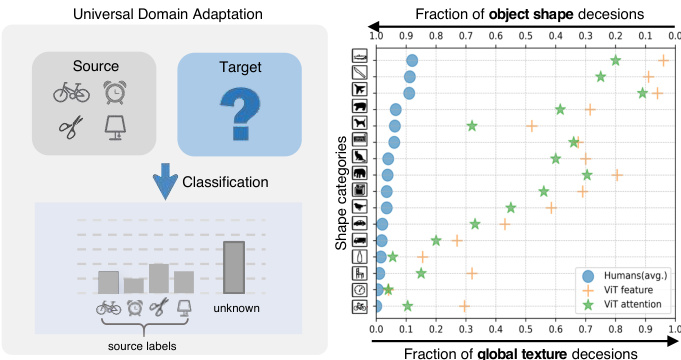

Figure 1: Left: Illustration of Universal Domain Adaptation. Right: Shape-bias analysis. The plot shows the shape-texture trade-off for attention and features in ViT and for humans.

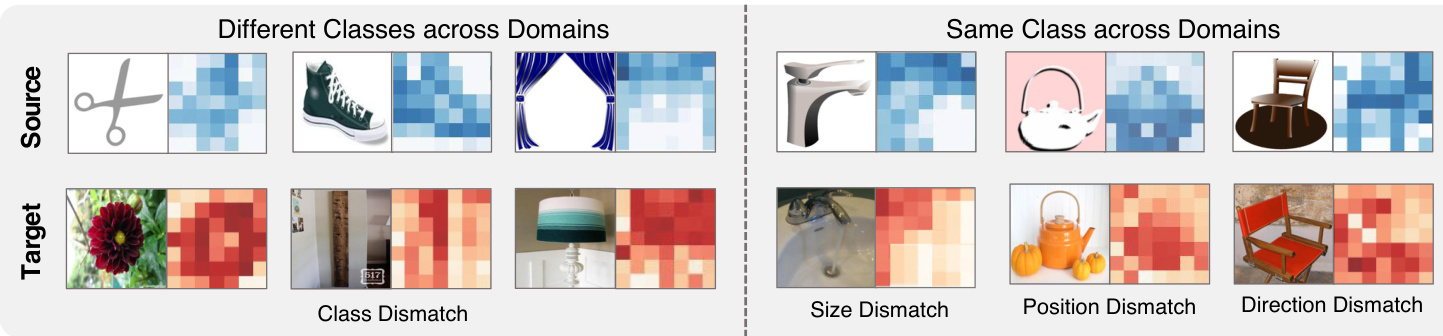

In UniDA, the primary objective is to develop a model capable of precisely categorizing target samples as one of the common classes or as an "unknown" class, as shown in Fig. 1 (left). Existing UniDA methods aim to design a transferability criterion to detect common and private classes solely based on the discriminability of deep features [6, 7, 8, 9, 13, 27, 29, 47, 48, 50, 66]. However, over-reliance on deep features can impede adaptation performance, as they are strongly biased towards global information such as texture rather than essential object information such as shape [16, 20], which humans treat as the most critical cue for recognition [28]. Fortunately, recent studies have demonstrated that the vision transformer (ViT) [24] exhibits a stronger shape bias than Convolutional Neural Networks (CNNs) [41, 55]. As shown in Fig. 1 (right), we confirmed that this strong object shape bias is mainly attributable to the self-attention mechanism, verified in a similar way to [16]. Figure 2 shows the attention vectors of samples across domains. Although we can leverage attention to focus on more object parts, an attention mismatch problem may still exist due to domain shift: the attention vectors of same-class samples from different domains differ to some degree because of potential variations in object size, orientation, and position across domains. Attention mismatch can hinder the accurate classification of samples, especially when objects of different classes share similar sizes or positions. For example, in Figure 2, the kettle in the source domain and the flower in the target domain have similar attention patterns despite belonging to different classes. Therefore, the key challenge in utilizing attention is to effectively explore and leverage the object information embedded in attention while mitigating the negative impact of attention mismatch.

Figure 2: Attention visualization across domains. Attention patterns vary significantly between different classes of images. However, within the same class, attention can also vary due to differences in object size, position, and orientation. These variations are collectively referred to as attention mismatch.

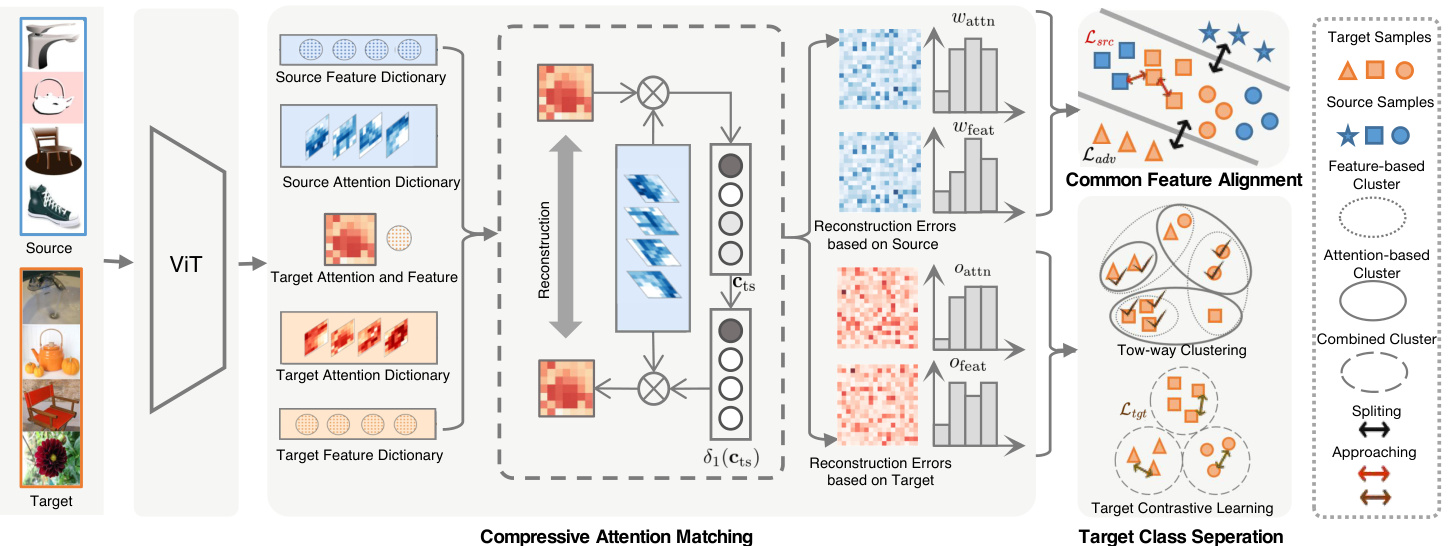

In this paper, we propose a novel Universal Attention Matching (UniAM) framework to address the UniDA problem by leveraging feature and attention information in a complementary way. Specifically, UniAM introduces a Compressive Attention Matching (CAM) approach that solves the attention mismatch problem implicitly by sparsely representing target attentions using source attention prototypes. This allows CAM to identify the most relevant attention prototype for each target sample and to distinguish irrelevant private labels. Furthermore, a residual-based measurement is proposed in CAM to explicitly distinguish common and private samples across domains. By integrating attention information with features, we can mitigate the interference caused by domain shift and focus on label shift to some extent. With the guidance of CAM, the UniAM framework achieves domain-wise and category-wise common feature alignment (CFA) and target class separation (TCS). Using an adversarial loss and a source contrastive loss, CFA identifies and aligns the common features across domains, ensuring their consistency and transferability. TCS, in turn, enhances the compactness of the target clusters, leading to better separation among all target classes. This is accomplished through a target contrastive loss, which encourages samples from the same target class to be closer together and farther from samples of other classes.

Main Contributions: (1) We propose the UniAM framework, which comprehensively considers both attention and feature information and thereby allows more accurate identification of common and private samples. (2) We validate the strong object bias of attention in ViT. To the best of our knowledge, we are the first to directly utilize attention in ViT for classification prediction. (3) We implicitly explore object information by sparsely reconstructing attention, enabling better common feature alignment (CFA) and target class separation (TCS). (4) We conduct extensive experiments to show that UniAM can outperform current state-of-the-art approaches.

2. Related Works

2.1. Universal Domain Adaptation

UniDA [66] does not require prior knowledge of the relationship between label sets. To address this problem, UAN [66] proposes a criterion based on entropy and domain similarity to quantify sample transferability. CMU [13] follows this paradigm and detects open classes using the mean of three uncertainty scores (entropy, consistency, and confidence) as a new measurement. Afterward, [27] proposes a real-time adaptive source-free UniDA method. In [47] and [29], clustering is developed to solve this problem. [31]. OVANet [48] employs a One-vs-All classifier for each class and uses its output to decide whether a sample is known or unknown. Recent works have shifted their focus towards finding mutually nearest neighbor samples of target samples [7, 8, 9, ?] or constructing relationships between target samples and source prototypes [26, 6].

2.2. Vision Transformer

Inspired by the success of the Transformer [58, 23] in NLP, many researchers have attempted to exploit it for computer vision tasks. One of the most pioneering works is the Vision Transformer (ViT) [24], which decomposes input images into a sequence of fixed-size patches. Unlike CNNs, which rely on image-specific inductive biases, ViT takes advantage of large-scale pre-training data and global context modeling over the entire image. Owing to the outstanding performance of ViT, many approaches have been built on it [54, 36, 60, 21, 37]. For example, Touvron et al. [54] propose DeiT, which introduces a distillation strategy specific to transformers to reduce computational costs. In general, ViT and its variants have achieved excellent results on many computer vision tasks, such as object detection [5, 76, 60], image segmentation [75, 61], and video understanding [17, 42].

Recently, ViT has been adopted for the DA task in several works. TVT [65] proposes a transferable adaptation module to capture discriminative features and achieve domain alignment. SSRT [53] formulates a comprehensive framework that pairs a transformer backbone with a safe self-refinement strategy to handle large domain gaps effectively. CDTrans [64] designs a triple-branch framework that applies self-attention and cross-attention for source-target domain feature alignment. In contrast, this paper focuses on investigating the superior discriminability of the attention mechanism across different classes in universal domain adaptation.

2.3. Sparse Representation Classification

Sparse Representation Classification (SRC) [62] and Collaborative Representation Classification (CRC) [71], along with their numerous extensions [35, 63, 11, 10, 18], have been extensively investigated in face recognition from single images and videos. These methods have demonstrated promising performance in the presence of occlusions and variations in illumination. By modeling the test data as a sparse linear combination of dictionary atoms, SRC can capture non-linear relationships between features. Our UniAM is inspired by these methods but uses a novel measurement instead of a sparsity concentration index.

3. Problem Formulation and Preliminary

3.1. Problem Formulation

Denote $\mathbb{X}$, $\mathbb{Y}$, and $\mathbb{Z}$ as the input space, label space, and latent space, respectively, with elements denoted by $\pmb{x}$, $y$, and $\pmb{z}$. Let $P_{s}$ and $P_{t}$ be the source distribution and target distribution, respectively. We are given a labeled source domain $\mathbb{D}_{s}=\{(\pmb{x}_{i},y_{i})\}_{i=1}^{m}$ and an unlabeled target domain $\mathbb{D}_{t}=\{\pmb{x}_{i}\}_{i=1}^{n}$, sampled from $P_{s}$ and $P_{t}$, respectively, where $m$ and $n$ denote the number of samples in the source and target domains. Denote $\mathbb{L}_{s}$ and $\mathbb{L}_{t}$ as the label sets of the source and target domains, respectively. Let $\mathbb{L}=\mathbb{L}_{s}\cap\mathbb{L}_{t}$ be the common label set shared by both domains, while $\overline{\mathbb{L}}_{s}=\mathbb{L}_{s}\backslash\mathbb{L}$ and $\overline{\mathbb{L}}_{t}=\mathbb{L}_{t}\backslash\mathbb{L}$ are the label sets private to the source and target domains, respectively. Denote $M=\left|\mathbb{L}_{s}\right|$ as the number of source labels. Universal domain adaptation aims to predict the labels of target data in $\mathbb{L}$ while rejecting the target data in $\overline{\mathbb{L}}_{t}$, based on $\mathbb{D}_{s}$ and $\mathbb{D}_{t}$.
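
As a small worked illustration of the label-set notation above, the following sketch computes the common and private label sets for a toy pair of domains. The class names are hypothetical and chosen only for illustration; they are not taken from any benchmark used in the paper.

```python
# Hypothetical label sets, chosen only for illustration.
source_labels = {"bike", "kettle", "laptop", "mug"}      # L_s
target_labels = {"kettle", "laptop", "flower", "shoe"}   # L_t

common = source_labels & target_labels      # L = L_s ∩ L_t
source_private = source_labels - common     # L_s \ L
target_private = target_labels - common     # L_t \ L
M = len(source_labels)                      # number of source labels


def ideal_prediction(true_label):
    """Ideal UniDA output: the label itself if it is common, else 'unknown'."""
    return true_label if true_label in common else "unknown"
```

In practice the target labels are unavailable, so the model has to infer whether a sample is common or private from the data alone; the sketch only makes the set definitions concrete.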

Our overall architecture consists of a ViT-based feature extractor, an adversarial domain classifier, and a label classifier. The feature extractor is $G_{f}:\mathbb{X}\rightarrow\mathbb{Z}\subseteq\mathbb{R}^{d_{z}}$, where $d_{z}$ is the length of each feature vector; the label classifier is $G_{c}:\mathbb{Z}\to\mathbb{Y}\subseteq\mathbb{R}^{M}$; and the domain classifier is $G_{d}:\mathbb{Z}\to\mathbb{R}^{1}$.

3.2. Preliminary

To start with, we provide an overview of the self-attention mechanism used in ViT. First, the input image $\pmb{x}$ is divided into $N$ fixed-size patches, which are linearly embedded into a sequence of vectors. Next, a special token called the class token is prepended to the sequence of image patches for classification. The resulting sequence of length $N+1$ is then projected into three matrices: queries $Q\in\mathbb{R}^{(N+1)\times d_{k}}$, keys $K\in\mathbb{R}^{(N+1)\times d_{k}}$, and values $V\in\mathbb{R}^{(N+1)\times d_{v}}$, with $d_{k}$ and $d_{v}$ being the length of each query and value vector, respectively. Then, $Q$ and $K$ are passed to the self-attention layer to compute the patch-to-patch similarity matrix $\pmb{A}\in\mathbb{R}^{(N+1)\times(N+1)}$, which is given by

$$\pmb{A}=\operatorname{softmax}\left(\frac{QK^{\top}}{\sqrt{d_{k}}}\right).$$

For ease of further processing, we flatten $\pmb{A}$ into a vector of length $(N+1)^{2}$. It is worth noting that multiple attention heads are utilized in the self-attention mechanism. Each head outputs a separate attention, and the final attention vector is obtained by concatenating the flattened vectors from all heads. As a result, $\pmb{a}\in\mathbb{R}^{d_{a}\times1}$, where $d_{a}=N_{H}\times(N+1)^{2}$ and $N_{H}$ is the number of attention heads. The use of multiple heads allows the model to jointly attend to information from different feature subspaces at different positions.
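
The construction of the attention vector can be sketched as follows. This is a minimal pure-Python illustration, assuming the standard scaled dot-product form of attention and row-by-row flattening; the function names (`head_attention`, `attention_vector`) and the toy values are hypothetical, and the text does not state which transformer layer the attention is taken from.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def head_attention(Q, K):
    """Q, K: lists of (N+1) token vectors of length d_k for one head.
    Returns the (N+1) x (N+1) matrix softmax(Q K^T / sqrt(d_k))."""
    scale = math.sqrt(len(Q[0]))
    return [softmax([sum(qi * ki for qi, ki in zip(q, k)) / scale for k in K])
            for q in Q]


def attention_vector(heads):
    """heads: list of (Q, K) pairs, one per head.
    Flattens each head's matrix row by row and concatenates all heads."""
    a = []
    for Q, K in heads:
        for row in head_attention(Q, K):
            a.extend(row)
    return a


# Toy example: N_H = 2 heads, N + 1 = 3 tokens, d_k = 2 (arbitrary values).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
a = attention_vector([(Q, K), (K, Q)])
```

With $N_{H}=2$ and $N+1=3$, the resulting vector has length $d_{a}=2\times3^{2}=18$, and every consecutive group of three entries is one softmax-normalized row.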

Figure 3: Illustration of the proposed UniAM framework. The framework consists of three integral components: Compressive Attention Matching (CAM), Common Feature Alignment (CFA), and Target Class Separation (TCS). At its core, CAM reconstructs all target attentions and features based on the source dictionary (feature reconstruction is omitted in the figure for simplicity), and the attention and feature commonness scores $w_{\mathrm{attn}}$ and $w_{\mathrm{feat}}$ are computed from the residual vectors. Then, domain- and category-wise CFA is achieved by minimizing $\mathcal{L}_{adv}$ and $\mathcal{L}_{src}$, guided by $w_{\mathrm{attn}}$ and $w_{\mathrm{feat}}$. Similarly, $O_{\mathrm{attn}}$ and $O_{\mathrm{feat}}$ are obtained by reconstructing target attentions and features based on the target dictionary in CAM. TCS performs two-way clustering from both the attention and feature views and minimizes $\mathcal{L}_{tgt}$ to achieve effective separation of target classes.

Once the attention vector $\pmb{a}$ is available, the $k$-th attention prototype $\pmb{p}_{k}$ is calculated by averaging the attention vectors of all samples in class $k$; these prototypes are used in the subsequent matching process.
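
The prototype computation is a per-class mean, which can be sketched as below. This is a minimal illustration with hypothetical names; returning `None` for a class without samples is an assumption of the sketch, not something the text specifies.

```python
def class_prototypes(vectors, labels, num_classes):
    """Average the vectors of each class.

    Returns a list where prototypes[k] is the mean vector of class k,
    or None if class k has no samples (an assumption of this sketch).
    """
    dim = len(vectors[0])
    sums = [[0.0] * dim for _ in range(num_classes)]
    counts = [0] * num_classes
    for vec, k in zip(vectors, labels):
        counts[k] += 1
        for j, v in enumerate(vec):
            sums[k][j] += v
    return [[s / counts[k] for s in sums[k]] if counts[k] else None
            for k in range(num_classes)]


# Toy attention vectors of dimension 2 with integer class labels.
attention_vectors = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0]]
labels = [0, 0, 1]
prototypes = class_prototypes(attention_vectors, labels, num_classes=2)
```

Stacking the returned prototypes as columns gives a dictionary of shape $d_{a}\times M$.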

4. Proposed Methodology

4.1. Compressive Attention Matching

As mentioned in Section 1, attention mismatch arises from domain shift, so effectively using the core object information while avoiding interference from redundant information is a challenge when applying attention to UniDA. To address this challenge, Compressive Attention Matching (CAM) is proposed to capture the most informative object structures by sparsely representing target attentions. The attention dictionary in CAM is defined as the collection of source attention prototypes for efficient matching, i.e., $P_{s}=[\pmb{p}_{1}^{s},\pmb{p}_{2}^{s},\cdots,\pmb{p}_{M}^{s}]\in\mathbb{R}^{d_{a}\times M}$. Definition 1 formalizes CAM.

Definition 1 (Compressive Attention Matching). Given an attention vector $\pmb{a}_{t}\in\mathbb{R}^{d_{a}\times1}$ of the target sample $\pmb{x}_{t}$ and a source attention dictionary $P_{s}$, Compressive Attention Matching aims to match $\pmb{a}_{t}$ with one prototype in $P_{s}$ to determine its commonness, which is achieved by assuming that $\pmb{a}_{t}$ can be approximated by a linear combination of $P_{s}$:

$$\pmb{a}_{t}=P_{s}\pmb{c}_{ts},\tag{2}$$

where the coefficient vector $\pmb{c}_{ts}\in\mathbb{R}^{M\times1}$ satisfies a sparsity constraint in order to achieve a compressive representation. Based on $\pmb{c}_{ts}$, $\pmb{x}_{t}$ is regarded as belonging to the common classes from an attention perspective when the following inequality is satisfied:

$$w_{\mathrm{attn}}(\pmb{x}_{t})>\delta,$$

where $w_{\mathrm{attn}}(\cdot)$ is a measurement of the commonness of $\pmb{x}_{t}$, defined later, and $\delta$ is a threshold.

Why is Compressive Attention Matching desirable? By enforcing sparsity on the coefficients in CAM, we obtain a compressive representation of the attention vectors, which facilitates the extraction and use of low-dimensional structures embedded in high-dimensional attention vectors. In the context of UniDA, this compressive representation enables us to identify the most relevant attention prototype for each target sample and to distinguish irrelevant private labels, which is crucial for effective detection of common and private classes. Therefore, CAM with sparse coefficients plays a vital role in solving UniDA.

To solve Eq. 2 in CAM, the coefficient vector $c_{t s}$ is estimated by:

where $\|\cdot\|_{p}$ denotes the $\ell_{p}$-norm. The $\ell_{1}$-minimization term in Eq. 3 yields a sparse solution, which enforces that $\pmb{c}_{ts}$ has only a small number of non-zero coefficients.
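
To make the role of the $\ell_{1}$ term concrete, the sketch below solves one common formulation of sparse coding, the Lasso objective $\min_{\pmb{c}}\tfrac{1}{2}\|\pmb{a}_{t}-P_{s}\pmb{c}\|_{2}^{2}+\lambda\|\pmb{c}\|_{1}$, with the iterative shrinkage-thresholding algorithm (ISTA). The exact form of Eq. 3, the weight `lam`, and the choice of solver are assumptions made here for illustration; the text does not specify them, and all function names are hypothetical.

```python
def soft_threshold(v, tau):
    """Shrink v toward zero by tau (proximal operator of the l1 norm)."""
    if v > tau:
        return v - tau
    if v < -tau:
        return v + tau
    return 0.0


def reconstruct(prototypes, c):
    """Return sum_k c[k] * prototypes[k], i.e. the product P c."""
    dim = len(prototypes[0])
    return [sum(c[k] * p[j] for k, p in enumerate(prototypes))
            for j in range(dim)]


def sparse_code(a, prototypes, lam=0.1, n_iter=500):
    """ISTA for min_c 0.5 * ||a - P c||_2^2 + lam * ||c||_1 (assumed form)."""
    # The squared Frobenius norm upper-bounds the gradient's Lipschitz
    # constant, so 1 / lip is a safe (if conservative) step size.
    lip = sum(v * v for p in prototypes for v in p) or 1.0
    c = [0.0] * len(prototypes)
    for _ in range(n_iter):
        residual = [r - t for r, t in zip(reconstruct(prototypes, c), a)]
        grad = [sum(pj * rj for pj, rj in zip(p, residual)) for p in prototypes]
        c = [soft_threshold(ck - g / lip, lam / lip) for ck, g in zip(c, grad)]
    return c


# Orthonormal toy dictionary: the solution is a soft-thresholded projection.
prototypes = [[1.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 0.0]]
a_t = [2.0, 0.05, 0.0, 0.0]
c_ts = sparse_code(a_t, prototypes, lam=0.1)
```

In this toy case the small component along the second prototype is shrunk exactly to zero, so only the first coefficient remains non-zero, which is the behavior the sparsity constraint is meant to produce.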

Then we can compute the class reconstruction error vector $\pmb{r}_{ts}\in\mathbb{R}^{M}$ for each target sample using the sparse coefficient vector $\pmb{c}_{ts}$. The $k$-th entry of $\pmb{r}_{ts}$ is given by:

$$r_{ts}(k)=\left\|\pmb{a}_{t}-P_{s}\,\delta_{k}(\pmb{c}_{ts})\right\|_{2},$$

where $\delta_{k}(\pmb{c}_{ts})$ is the vector that keeps the $k$-th entry of $\pmb{c}_{ts}$ and sets all other entries to zero. If $\pmb{x}_{t}$ corresponds to a common class $k$, then the reconstruction error for class $k$, $r_{ts}(k)$, should be much lower than the errors for the other classes. Conversely, if $\pmb{x}_{t}$ belongs to a private class, the entries of the reconstruction error vector $\pmb{r}_{ts}$ should be relatively close to one another, with no significant difference between the errors corresponding to different classes.
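
The class-wise residual can be sketched as follows, assuming an $\ell_{2}$ distance between the attention vector and its single-class reconstruction (the norm is an assumption of this sketch). Since $\delta_{k}(\pmb{c}_{ts})$ has one non-zero entry, the product with the dictionary reduces to the $k$-th prototype scaled by the $k$-th coefficient. Names and toy values are hypothetical.

```python
import math


def class_residuals(a, prototypes, c):
    """r[k] = || a - c[k] * prototypes[k] ||_2 for every class k.

    P @ delta_k(c) reduces to c[k] times the k-th prototype.
    """
    return [math.sqrt(sum((aj - c[k] * pj) ** 2 for aj, pj in zip(a, p)))
            for k, p in enumerate(prototypes)]


prototypes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
a_t = [2.0, 0.0, 0.0]
c_ts = [1.9, 0.0]   # e.g. the output of a sparse solver
r_ts = class_residuals(a_t, prototypes, c_ts)
```

Here the first class reconstructs `a_t` almost perfectly, while the second class, whose coefficient is zero, leaves the full norm of `a_t` as residual.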

As a result, the reconstruction error vector $\pmb{r}_{ts}$ is a crucial component of CAM. It serves as the foundation for the measurement $w_{\mathrm{attn}}(\cdot)$ in Definition 1, called the Attention Commonness Degree (ACD), which is defined as follows:

Definition 2 (Attention Commonness Degree). Given the residual vector $\pmb{r}_{ts}$ of $\pmb{x}_{t}$, the ACD is defined as the difference between the average non-matched error and the matched error:

$$w_{\mathrm{attn}}(\pmb{x}_{t})=\mathrm{non\text{-}match}(\pmb{r}_{ts})-\mathrm{match}(\pmb{r}_{ts}),$$

where $\mathrm{match}(\pmb{r}_{ts})=r_{ts}(\hat{y})$, $\hat{y}=\arg\min_{k}r_{ts}(k)$, and $\mathrm{non\text{-}match}(\pmb{r}_{ts})=\frac{1}{M-1}\sum_{k\neq\hat{y}}r_{ts}(k)$ is the average of the reconstruction errors over all classes except $\hat{y}$.

Remark 1. ACD measures the degree of commonness of a target sample $\pmb{x}_{t}$, which represents the probability of belonging to the common classes. A higher ACD value indicates a larger difference between the non-matched and matched errors, suggesting that there is an attention prototype similar to $\pmb{x}_{t}$ and, consequently, a higher degree of sample commonness. Conversely, a smaller ACD value implies that the reconstruction errors between $\pmb{x}_{t}$ and all source prototypes are similar, indicating a lower degree of sample commonness and a higher degree of privateness.
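
A minimal sketch of the commonness score, following the verbal definition of ACD (average non-matched error minus matched error), is given below. The residual values and the threshold `delta` are illustrative assumptions, not values from the paper, and the function name is hypothetical.

```python
def commonness_degree(r):
    """Return (score, y_hat): mean non-matched error minus the matched error."""
    y_hat = min(range(len(r)), key=lambda k: r[k])   # best-matching class
    match = r[y_hat]
    others = [v for k, v in enumerate(r) if k != y_hat]
    non_match = sum(others) / len(others)            # needs at least two classes
    return non_match - match, y_hat


# One prototype fits much better than the rest: likely a common class.
w_common, k_common = commonness_degree([0.1, 0.9, 1.0, 0.8])
# All prototypes fit about equally badly: likely a private class.
w_private, _ = commonness_degree([0.8, 0.9, 0.85, 0.9])

delta = 0.5   # illustrative threshold, not a value from the paper
is_common = w_common > delta
```

The same function applies unchanged to a feature residual vector, which corresponds to the feature-based score defined from feature prototypes.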

To complement the attention information, we retain features that reflect global information. The target feature $z_{t}$ can be also represented by the linear span of source feature prototypes $Q_{s}=[\pmb{q}{1}^{s},\pmb{q}{2}^{s},\cdot\cdot\cdot,\pmb{q}{M}^{s}]$ , i.e., ${z{t}=Q_{s}c_{t s}}$ . The corresponding residual vector $r_{t s}^{\prime}(k)$ is computed based on $c_{t s}$ . The Feature Commonness Degree (FCD) can be defined as $w_{\mathrm{feat}}({\pmb x}_{t})=\mathrm{non}\mathrm{-match}({\pmb r}_{t s}^{\prime})-\mathrm{match}({\pmb r}_{t s}^{\prime}).$ .

为补充注意力信息,我们保留了反映全局信息的特征。目标特征 $\boldsymbol{z}_{t}$ 也可表示为源特征原型 $Q_{s}=[\boldsymbol{q}_{1}^{s},\boldsymbol{q}_{2}^{s},\cdots,\boldsymbol{q}_{M}^{s}]$ 的线性组合,即 $\boldsymbol{z}_{t}=Q_{s}\boldsymbol{c}_{ts}$。对应的残差向量 $\boldsymbol{r}_{ts}^{\prime}(k)$ 基于 $\boldsymbol{c}_{ts}$ 计算得出。特征共性度 (FCD) 可定义为 $w_{\mathrm{feat}}(\boldsymbol{x}_{t})=\mathrm{non\text{-}match}(\boldsymbol{r}_{ts}^{\prime})-\mathrm{match}(\boldsymbol{r}_{ts}^{\prime})$。
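As an illustration of how a feature residual vector might be obtained from $\boldsymbol{c}_{ts}$, the sketch below assumes a class-wise residual in the style of sparse-representation classification, where the $k$-th residual keeps only the $k$-th coefficient: $r(k)=\lVert \boldsymbol{z}_{t}-c_{k}\boldsymbol{q}_{k}^{s}\rVert_{2}$. That residual form, the function name `classwise_residuals`, and the toy prototypes are assumptions made for this example. The coefficient vector is supplied by hand rather than obtained from the coding step.

```python
import math


def classwise_residuals(z, prototypes, coeffs):
    """Assumed residual: r(k) = || z - c_k * q_k ||_2, one prototype per class."""
    residuals = []
    for q_k, c_k in zip(prototypes, coeffs):
        diff = [z_i - c_k * q_i for z_i, q_i in zip(z, q_k)]
        residuals.append(math.sqrt(sum(d * d for d in diff)))
    return residuals


# Toy orthonormal prototypes, so z_t = Q_s c_ts holds exactly with c_ts = z_t.
prototypes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
z_t = [0.1, 0.9, 0.0]
c_ts = [0.1, 0.9, 0.0]

r = classwise_residuals(z_t, prototypes, c_ts)

# FCD as defined in the text: non-match(r') - match(r').
y_hat = r.index(min(r))
non_match = sum(v for k, v in enumerate(r) if k != y_hat) / (len(r) - 1)
w_feat = non_match - r[y_hat]

print(r, w_feat)
```

Here the second prototype explains almost all of `z_t`, so its residual is the smallest and the resulting score is large.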

It is worth noting that by replacing $P_{s}$ in Definition 2 with the target dictionary $P_{t}$, we can obtain compressive representations of target attentions with respect to $P_{t}$. This leads to a score analogous to that in Definition 2, denoted as $O_{\mathrm{attn}}$. The same holds for $O_{\mathrm{feat}}$. These scores help determine the probability that two target samples belong to the same class; more details are provided in Section 4.3.

值得注意的是,将定义 2 中的 $P_{s}$ 替换为目标字典 $P_{t}$,我们可以获得针对 $P_{t}$ 的目标注意力压缩表示。这将产生一个类似于定义 2 中的分数,记为 $O_{\mathrm{attn}}$。同理适用于 $O_{\mathrm{feat}}$。这些分数有助于确定两个目标样本属于同一类别的概率,更多细节将在 4.3 节中提供。

In summary, attention and features both affect the perceived similarity between categories. Attention captures the structural properties of objects, while features capture the appearance properties of the whole image. Combining the two therefore yields a more comprehensive and accurate private-class detection model that accounts for both object information and global information.

总之,注意力和特征特性都是影响不同类别间相似性感知的重要因素。注意力捕捉物体的结构属性,而特征捕捉全局图像的外观属性。因此,我们可以构建一个更全面、更准确的私有类别检测模型,兼顾物体信息和全局信息。

4.2. Common Feature Alignment

4.2. 共有特征对齐

To identify and align the common class features across domains, we propose a domain-wise and category-wise Common Feature Alignment (CFA) technique, which considers both attention and feature information.

为了识别并对齐跨领域的共同类别特征,我们提出了一种基于领域和类别的共同特征对齐(Common Feature Alignment, CFA)技术,该技术同时考虑了注意力机制和特征信息。

Domain-wise Alignment. To achieve domain-wise alignment, we first propose a residual-based transferability score $d_{\mathrm{t}}$ that measures the probability that a target sample belongs to the common classes, which can be summarized as:

领域对齐。为实现领域对齐,我们首先提出一种基于残差的迁移能力评分 $d_{\mathrm{t}}$ ,用于衡量目标样本属于公共类的概率,其计算公式可表示为:

where $\lambda$ is a hyperparameter balancing their contributions. Meanwhile, to measure the probability that a source sample $\boldsymbol{x}_{s}$ with label $j$ belongs to the common label set, we compute $w_{j}^{s}$ from the sums of all target samples’ attention and feature reconstruction errors, respectively, i.e.,

其中 $\lambda$ 是平衡两者贡献的超参数。同时,为了衡量带标签 $j$ 的源样本 $\boldsymbol{x}_{s}$ 属于公共标签集的概率,我们分别利用所有目标样本的注意力重构误差之和与特征重构误差之和来计算 $w_{j}^{s}$,即

where $\boldsymbol{r}_{ts}^{i}$ denotes the reconstruction error of the $i$-th target sample. The operator $\sigma(\boldsymbol{r})=\frac{\boldsymbol{r}-\min(\boldsymbol{r})}{\max(\boldsymbol{r})-\min(\boldsymbol{r})}$ denotes min-max normalization, applied to the sum of all target attention or feature residual vectors. A larger value of $w_{j}^{s}$ indicates a higher probability that the source label $j$ belongs to the common label set, while a lower value suggests that it is more likely to be a source-private label. It is worth noting that source samples with the same label are assigned the same weight.

其中 $\boldsymbol{r}_{ts}^{i}$ 表示第 $i$ 个目标样本的重构误差。运算符 $\sigma(\boldsymbol{r})=\frac{\boldsymbol{r}-\min(\boldsymbol{r})}{\max(\boldsymbol{r})-\min(\boldsymbol{r})}$ 表示对所有目标注意力或特征残差向量之和进行的最小-最大归一化。$w_{j}^{s}$ 值越大,表明源标签 $j$ 属于公共标签集的概率越高;值越小,则越可能是源私有标签。值得注意的是,具有相同标签的源样本会被赋予相同的权重。
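The normalization operator $\sigma$ can be sketched in a few lines. This example only illustrates summing per-sample residual vectors over the target set and min-max normalizing the result; it does not reproduce the full expression for $w_{j}^{s}$, which is not shown here. The function names, the sample values, and the handling of the degenerate case where all entries are equal are assumptions.

```python
def min_max_normalize(r):
    """sigma(r) = (r - min(r)) / (max(r) - min(r)), applied element-wise."""
    lo, hi = min(r), max(r)
    if hi == lo:
        # Assumption: a constant vector carries no ranking information, so map it to zeros.
        return [0.0 for _ in r]
    return [(v - lo) / (hi - lo) for v in r]


def summed_residuals(residual_vectors):
    """Sum per-sample residual vectors class by class."""
    return [sum(column) for column in zip(*residual_vectors)]


# Hypothetical residual vectors of three target samples over three source classes.
target_residuals = [
    [0.2, 0.9, 0.8],
    [0.3, 0.7, 0.9],
    [0.1, 0.8, 1.0],
]

total = summed_residuals(target_residuals)
normalized = min_max_normalize(total)

print(normalized)
```

In this toy data the first source class has by far the smallest summed residual, meaning the target samples are consistently well reconstructed by its prototype, so it receives the smallest normalized value.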

Based on the above two weights, we can derive a domain-wise adversarial loss that aligns the common classes across domains as follows:

基于上述两种权重,我们可以推导出一个跨域对抗损失函数,用于对齐不同领域间的共有类别,具体如下:

In addition, to avoid interference from the knowledge of source-private samples, we employ an indicator as the weight in the weighted cross-entropy loss $\mathcal{L}_{\mathrm{cls}}$ for the source domain, as shown below:

此外,为避免受到源私有样本知识的干扰,我们采用一个指示器作为源域加权交叉熵损失 $\mathcal{L}_{\mathrm{cls}}$ 的权重,如下所示:

where ${l}_{c e}$ is the standard cross-entropy loss.

其中 ${l}_{c e}$ 是标准交叉熵损失。

Category-wise Alignment. To enhance the source discriminability and align the common features across domains from a category-wise perspective, we propose a contrastive

类别对齐。为了增强源可辨别性并从跨领域的类别视角对齐共有特征,我们提出了一种对比式

Thus the category-wise common feature alignment can be improved by minimizing the source contrastive loss $\mathcal{L}_{\mathrm{src}}$ :

因此,通过最小化源对比损失 $\mathcal{L}_{\mathrm{src}}$ 可以改进类别间共同特征的对齐:

with

其中