Do ImageNet Classifiers Generalize to ImageNet?

ImageNet分类器能否泛化到ImageNet?

Benjamin Recht∗ Rebecca Roelofs Ludwig Schmidt Vaishaal Shankar UC Berkeley UC Berkeley UC Berkeley UC Berkeley

Benjamin Recht∗ Rebecca Roelofs Ludwig Schmidt Vaishaal Shankar 加州大学伯克利分校 加州大学伯克利分校 加州大学伯克利分校 加州大学伯克利分校

Abstract

摘要

We build new test sets for the CIFAR-10 and ImageNet datasets. Both benchmarks have been the focus of intense research for almost a decade, raising the danger of over fitting to excessively re-used test sets. By closely following the original dataset creation processes, we test to what extent current classification models generalize to new data. We evaluate a broad range of models and find accuracy drops of 3%-15% on CIFAR-10 and 11%-14% on ImageNet. However, accuracy gains on the original test sets translate to larger gains on the new test sets. Our results suggest that the accuracy drops are not caused by adaptivity, but by the models’ inability to generalize to slightly “harder” images than those found in the original test sets.

我们为CIFAR-10和ImageNet数据集构建了新的测试集。这两个基准测试近十年来一直是密集研究的焦点,存在对过度重复使用测试集过拟合的风险。通过严格遵循原始数据集创建流程,我们测试了当前分类模型对新数据的泛化程度。我们评估了多种模型,发现CIFAR-10准确率下降3%-15%,ImageNet下降11%-14%。然而,原始测试集上的准确率提升会转化为新测试集上更大的提升。结果表明,准确率下降并非由适应性导致,而是模型无法泛化到比原始测试集图像稍"难"的图像。

1 Introduction

1 引言

The over arching goal of machine learning is to produce models that generalize. We usually quantify generalization by measuring the performance of a model on a held-out test set. What does good performance on the test set then imply? At the very least, one would hope that the model also performs well on a new test set assembled from the same data source by following the same data cleaning protocol.

机器学习的核心目标是构建具有泛化能力的模型。我们通常通过在保留的测试集上评估模型表现来量化泛化能力。那么测试集上的优异表现意味着什么?至少可以预期,该模型在遵循相同数据清洗流程、从同一数据源构建的新测试集上也能表现良好。

In this paper, we realize this thought experiment by replicating the dataset creation process for two prominent benchmarks, CIFAR-10 and ImageNet [10, 35]. In contrast to the ideal outcome, we find that a wide range of classification models fail to reach their original accuracy scores. The accuracy drops range from 3% to $15%$ on CIFAR-10 and $11%$ to $14%$ on ImageNet. On ImageNet, the accuracy loss amounts to approximately five years of progress in a highly active period of machine learning research.

在本文中,我们通过复现两个重要基准数据集CIFAR-10和ImageNet [10, 35]的构建过程来实现这一思想实验。与理想结果相反,我们发现多种分类模型均无法达到原始准确率:CIFAR-10的准确率下降幅度为3%至$15%$,ImageNet则下降$11%$至$14%$。在ImageNet上,这一准确率损失相当于机器学习研究高度活跃时期约五年的进展成果。

Conventional wisdom suggests that such drops arise because the models have been adapted to the specific images in the original test sets, e.g., via extensive hyper parameter tuning. However, our experiments show that the relative order of models is almost exactly preserved on our new test sets: the models with highest accuracy on the original test sets are still the models with highest accuracy on the new test sets. Moreover, there are no diminishing returns in accuracy. In fact, every percentage point of accuracy improvement on the original test set translates to a larger improvement on our new test sets. So although later models could have been adapted more to the test set, they see smaller drops in accuracy. These results provide evidence that exhaustive test set evaluations are an effective way to improve image classification models. Adaptivity is therefore an unlikely explanation for the accuracy drops.

传统观点认为,这种准确率下降源于模型通过超参数调优等方式过度适配了原始测试集中的特定图像。但我们的实验表明,模型在新测试集上的相对性能排序几乎完全保持:在原测试集上准确率最高的模型,在新测试集上依然表现最优。此外,准确率提升并未出现收益递减现象——原测试集上每提升1%准确率,在新测试集上会带来更大的改进幅度。尽管后期模型可能对测试集有更高适配度,但其准确率下降幅度反而更小。这些结果证明,详尽的测试集评估是提升图像分类模型的有效手段,适配性假设难以解释准确率下降现象。

Instead, we propose an alternative explanation based on the relative difficulty of the original and new test sets. We demonstrate that it is possible to recover the original ImageNet accuracies almost exactly if we only include the easiest images from our candidate pool. This suggests that the accuracy scores of even the best image class if i ers are still highly sensitive to minutiae of the data cleaning process. This brittleness puts claims about human-level performance into context [20, 31, 48]. It also shows that current class if i ers still do not generalize reliably even in the benign environment of a carefully controlled reproducibility experiment.

相反,我们基于原始测试集与新测试集的相对难度提出了另一种解释。研究表明,若仅从候选图像池中选取最易识别的样本,几乎可以完全复现原始ImageNet准确率。这表明即使最优图像分类器的精度得分,仍高度依赖于数据清洗过程的细微差异 [20, 31, 48]。这种脆弱性使得关于人类水平性能的论断需要重新审视,同时也表明当前分类器即使在精心控制的复现实验环境中,仍无法实现可靠的泛化。

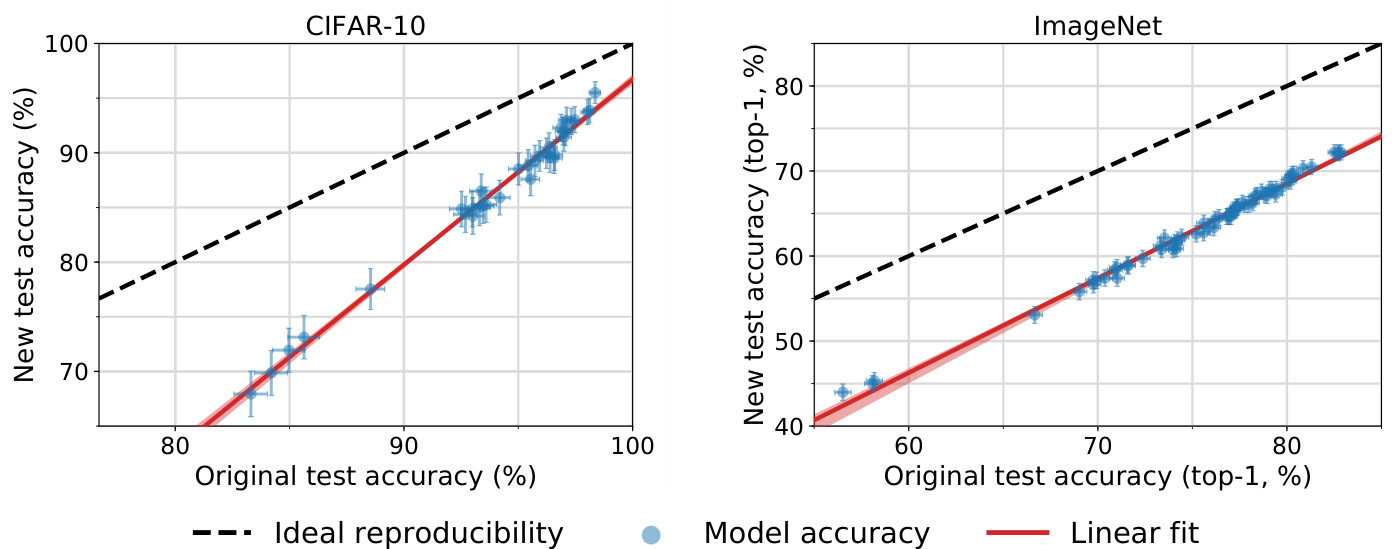

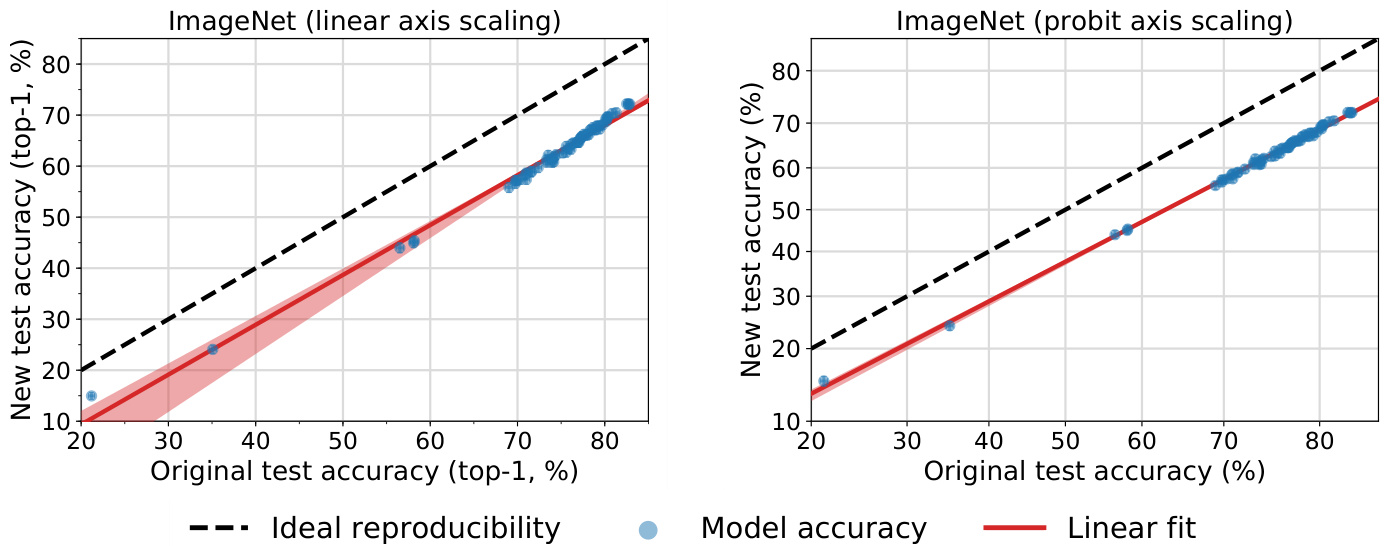

Figure 1 shows the main result of our experiment. Before we describe our methodology in Section 3, the next section provides relevant background. To enable future research, we release both our new test sets and the corresponding code.1

图 1: 展示了我们实验的主要结果。在第3节描述我们的方法之前,下一节将提供相关背景。为了支持未来研究,我们同时发布了新的测试集和对应代码。1

Figure 1: Model accuracy on the original test sets vs. our new test sets. Each data point corresponds to one model in our testbed (shown with $95%$ Clopper-Pearson confidence intervals). The plots reveal two main phenomena: (i) There is a significant drop in accuracy from the original to the new test sets. (ii) The model accuracies closely follow a linear function with slope greater than 1 (1.7 for CIFAR-10 and 1.1 for ImageNet). This means that every percentage point of progress on the original test set translates into more than one percentage point on the new test set. The two plots are drawn so that their aspect ratio is the same, i.e., the slopes of the lines are visually comparable. The red shaded region is a 95% confidence region for the linear fit from 100,000 bootstrap samples.

图 1: 原始测试集与新测试集的模型准确率对比。每个数据点对应测试平台中的一个模型 (显示为 $95%$ Clopper-Pearson置信区间)。图表揭示两个主要现象:(i) 从原始测试集到新测试集存在显著的准确率下降。(ii) 模型准确率严格遵循斜率大于1的线性函数 (CIFAR-10为1.7,ImageNet为1.1)。这意味着原始测试集上每提升1个百分点,对应新测试集的提升幅度会超过1个百分点。两个图表采用相同纵横比绘制,使得直线斜率具有视觉可比性。红色阴影区域是通过10万次bootstrap采样得到的线性拟合95%置信区间。

2 Potential Causes of Accuracy Drops

2 准确率下降的潜在原因

We adopt the standard classification setup and posit the existence of a “true” underlying data distribution $\mathcal{D}$ over labeled examples $(x,y)$ . The overall goal in classification is to find a model $\hat{f}$

我们采用标准分类设置,假设存在一个基于标注样本$(x,y)$的"真实"潜在数据分布$\mathcal{D}$。分类的总体目标是找到一个模型$\hat{f}$

that minimizes the population loss

最小化总体损失

$$

L_{\mathcal{D}}(\hat{f})=\underset{(x,y)\sim\mathcal{D}}{\mathbb{E}}\Big[\mathbb{I}[\hat{f}(x)\neq y]\Big].

$$

$$

L_{\mathcal{D}}(\hat{f})=\underset{(x,y)\sim\mathcal{D}}{\mathbb{E}}\Big[\mathbb{I}[\hat{f}(x)\neq y]\Big].

$$

Since we usually do not know the distribution $\textit{D}$ , we instead measure the performance of a trained classifier via a test set $S$ drawn from the distribution $\mathcal{D}$ :

由于我们通常不知道分布 $\textit{D}$,因此我们改为通过从分布 $\mathcal{D}$ 中抽取的测试集 $S$ 来衡量训练分类器的性能:

$$

L_{S}(\hat{f})=\frac{1}{|S|}\sum_{(x,y)\in S}\mathbb{I}[\hat{f}(x)\neq y].

$$

$$

L_{S}(\hat{f})=\frac{1}{|S|}\sum_{(x,y)\in S}\mathbb{I}[\hat{f}(x)\neq y].

$$

We then use this test error $L_{S}(\hat{f})$ as a proxy for the population loss $L_{\mathcal{D}}(\hat{f})$ . If a model $\hat{f}$ achieves a low test error, we assume that it will perform similarly well on future examples from the distribution $\mathcal{D}$ . This assumption underlies essentially all empirical evaluations in machine learning since it allows us to argue that the model $\hat{f}$ generalizes.

然后我们用这个测试误差 $L_{S}(\hat{f})$ 作为总体损失 $L_{\mathcal{D}}(\hat{f})$ 的代理。如果一个模型 $\hat{f}$ 实现了较低的测试误差,我们假设它在来自分布 $\mathcal{D}$ 的未来样本上也会表现良好。这个假设是机器学习中几乎所有实证评估的基础,因为它使我们能够论证模型 $\hat{f}$ 具有泛化能力。

In our experiments, we test this assumption by collecting a new test set $S^{\prime}$ from a data distribution ${\mathcal{D}^{\prime}}$ that we carefully control to resemble the original distribution $\mathcal{D}$ . Ideally, the original test accuracy $L_{S}({\hat{f}})$ and new test accuracy $L_{S^{\prime}}(\hat{f})$ would then match up to the random sampling error. In contrast to this idealized view, our results in Figure 1 show a large drop in accuracy from the original test set $S$ set to our new test set $S^{\prime}$ . To understand this accuracy drop in more detail, we decompose the difference between $L_{S}(\hat{f})$ and $L_{S^{\prime}}(\hat{f})$ into three parts (dropping the dependence on $\hat{f}$ to simplify notation):

在我们的实验中,我们通过从数据分布 ${\mathcal{D}^{\prime}}$ 中收集一个新的测试集 $S^{\prime}$ 来验证这一假设,该分布经过精心控制以接近原始分布 $\mathcal{D}$。理想情况下,原始测试准确率 $L_{S}({\hat{f}})$ 和新测试准确率 $L_{S^{\prime}}(\hat{f})$ 应仅存在随机抽样误差范围内的差异。然而,与这一理想化观点相反,图 1 中的结果显示,从原始测试集 $S$ 到新测试集 $S^{\prime}$ 的准确率出现了大幅下降。为了更详细地理解这一准确率下降现象,我们将 $L_{S}(\hat{f})$ 和 $L_{S^{\prime}}(\hat{f})$ 之间的差异分解为三个部分(为简化表示,省略对 $\hat{f}$ 的依赖):

$$

L_{S}-L_{S^{\prime}}=\underbrace{\left(L_{S}-L_{\mathcal{D}}\right)}_ {\mathrm{Adaptivitygap}}+\underbrace{\left(L_{\mathcal{D}}-L_{\mathcal{D}^{\prime}}\right)}_ {\mathrm{DistributionGap}}+\underbrace{\left(L_{\mathcal{D}^{\prime}}-L_{S^{\prime}}\right)}_{\mathrm{Generalization~gap}}.

$$

$$

L_{S}-L_{S^{\prime}}=\underbrace{\left(L_{S}-L_{\mathcal{D}}\right)}_ {\mathrm{Adaptivitygap}}+\underbrace{\left(L_{\mathcal{D}}-L_{\mathcal{D}^{\prime}}\right)}_ {\mathrm{DistributionGap}}+\underbrace{\left(L_{\mathcal{D}^{\prime}}-L_{S^{\prime}}\right)}_{\mathrm{Generalization~gap}}.

$$

We now discuss to what extent each of the three terms can lead to accuracy drops.

我们现在讨论这三项因素各自在多大程度上会导致准确率下降。

Generalization Gap. By construction, our new test set $S^{\prime}$ is independent of the existing classifier $\hat{f}$ . Hence the third term $L_{\mathit{D}^{\prime}}-L_{\mathit{S}^{\prime}}$ is the standard generalization gap commonly studied in machine learning. It is determined solely by the random sampling error.

泛化差距。根据构造,我们的新测试集 $S^{\prime}$ 独立于现有分类器 $\hat{f}$。因此,第三项 $L_{\mathit{D}^{\prime}}-L_{\mathit{S}^{\prime}}$ 是机器学习中常见的标准泛化差距,完全由随机抽样误差决定。

A first guess is that this inherent sampling error suffices to explain the accuracy drops in Figure 1 (e.g., the new test set $S^{\prime}$ could have sampled certain “harder” modes of the distribution $\mathcal{D}$ more often). However, random fluctuations of this magnitude are unlikely for the size of our test sets. With 10,000 data points (as in our new ImageNet test set), a Clopper-Pearson 95% confidence interval for the test accuracy has size of at most $\pm1%$ . Increasing the confidence level to $99.99%$ yields a confidence interval of size at most $\pm2%$ . Moreover, these confidence intervals become smaller for higher accuracies, which is the relevant regime for the best-performing models. Hence random chance alone cannot explain the accuracy drops observed in our experiments.2

初步猜测是,这种固有的采样误差足以解释图1中的准确率下降(例如,新测试集$S^{\prime}$可能更频繁地采样了分布$\mathcal{D}$中某些"更难"的模式)。然而,对于我们的测试集规模而言,这种量级的随机波动不太可能发生。在拥有10,000个数据点(如我们的新ImageNet测试集)的情况下,测试准确率的Clopper-Pearson 95%置信区间大小最多为$\pm1%$。将置信水平提高到$99.99%$时,置信区间大小最多为$\pm2%$。此外,对于准确率更高的模型(即性能最佳模型所处的区间),这些置信区间会更小。因此,仅凭随机因素无法解释我们实验中观察到的准确率下降[20]。

Adaptivity Gap. We call the term $L_{S}-L_{\mathcal{D}}$ the adaptivity gap. It measures how much adapting the model $\hat{f}$ to the test set $S$ causes the test error $L_{S}$ to underestimate the population loss $L_{\mathcal{D}}$ . If we assumed that our model $\hat{f}$ is independent of the test set $S$ , this terms would follow the same concentration laws as the generalization gap ${\cal L}_ {\cal D^{\prime}}-{\cal L}_ {S^{\prime}}$ above. But this assumption is undermined by the common practice of tuning model hyper parameters directly on the test set, which introduces dependencies between the model $\hat{f}$ and the test set $S$ . In the extreme case, this can be seen as training directly on the test set. But milder forms of adaptivity may also artificially inflate accuracy scores by increasing the gap between $L_{S}$ and $L_{\mathcal{D}}$ beyond the purely random error.

自适应差距。我们将 $L_{S}-L_{\mathcal{D}}$ 称为自适应差距。它衡量了模型 $\hat{f}$ 对测试集 $S$ 的适应导致测试误差 $L_{S}$ 低估总体损失 $L_{\mathcal{D}}$ 的程度。如果我们假设模型 $\hat{f}$ 独立于测试集 $S$,这一项将遵循与上述泛化差距 ${\cal L}_ {\cal D^{\prime}}-{\cal L}_ {S^{\prime}}$ 相同的集中规律。但这种假设被直接在测试集上调整模型超参数的常见做法所削弱,这引入了模型 $\hat{f}$ 和测试集 $S$ 之间的依赖关系。在极端情况下,这可以视为直接在测试集上训练。但较温和的自适应形式也可能通过扩大 $L_{S}$ 和 $L_{\mathcal{D}}$ 之间的差距(超出纯粹的随机误差)人为地提高准确率分数。

Distribution Gap. We call the term $L_{D}-L_{\mathcal{D}^{\prime}}$ the distribution gap. It quantifies how much the change from the original distribution $\mathcal{D}$ to our new distribution ${\mathcal{D}^{\prime}}$ affects the model $\hat{f}$ . Note that this term is not influenced by random effects but quantifies the systematic difference between sampling the original and new test sets. While we went to great lengths to minimize such systematic differences, in practice it is hard to argue whether two high-dimensional distributions are exactly the same. We typically lack a precise definition of either distribution, and collecting a real dataset involves a plethora of design choices.

分布差距 (Distribution Gap)。我们将 $L_{D}-L_{\mathcal{D}^{\prime}}$ 这一项称为分布差距。它量化了从原始分布 $\mathcal{D}$ 到新分布 ${\mathcal{D}^{\prime}}$ 的变化对模型 $\hat{f}$ 的影响程度。需要注意的是,这一项不受随机效应影响,而是量化了原始测试集与新测试集抽样之间的系统性差异。尽管我们竭尽全力最小化此类系统性差异,但在实践中很难论证两个高维分布是否完全相同。我们通常缺乏对任一分布的准确定义,而收集真实数据集涉及大量设计选择。

2.1 Distinguishing Between the Two Mechanisms

2.1 区分两种机制

For a single model $\hat{f}$ , it is unclear how to disentangle the adaptivity and distribution gaps. To gain a more nuanced understanding, we measure accuracies for multiple models $\hat{f}_ {1},\ldots,\hat{f}_{k}$ . This provides additional insights because it allows us to determine how the two gaps have evolved over time.

对于单个模型 $\hat{f}$,我们难以区分适应性差距和分布差距。为了获得更细致的理解,我们测量了多个模型 $\hat{f}_ {1},\ldots,\hat{f}_{k}$ 的准确率。这种方法能提供更多洞见,因为它使我们能够追踪这两个差距随时间的变化趋势。

For both CIFAR-10 and ImageNet, the classification models come from a long line of papers that increment ally improved accuracy scores over the past decade. A natural assumption is that later models have experienced more adaptive over fitting since they are the result of more successive hyper parameter tuning on the same test set. Their higher accuracy scores would then come from an increasing adaptivity gap and reflect progress only on the specific examples in the test set $S$ but not on the actual distribution $\mathcal{D}$ . In an extreme case, the population accuracies $L_{\mathcal{D}}(\hat{f}_ {i})$ would plateau (or even decrease) while the test accuracies $L_{S}(\hat{f}_ {i})$ would continue to grow for successive models $\hat{f}_{i}$ . However, this idealized scenario is in stark contrast to our results in Figure 1. Later models do not see diminishing returns but an increased advantage over earlier models. Hence we view our results as evidence that the accuracy drops mainly stem from a large distribution gap. After presenting our results in more detail in the next section, we will further discuss this point in Section 5.

在CIFAR-10和ImageNet上,分类模型的发展源自一系列论文,这些论文在过去十年间逐步提升了准确率分数。一个自然的假设是,后期模型经历了更多的适应性过拟合 (adaptive overfitting),因为它们是同一测试集上连续超参数调优的结果。它们更高的准确率分数可能源于不断增大的适应性差距,仅反映了测试集$S$中特定样本的进步,而非实际分布$\mathcal{D}$上的提升。极端情况下,总体准确率$L_{\mathcal{D}}(\hat{f}_ {i})$可能停滞(甚至下降),而连续模型$\hat{f}_ {i}$的测试准确率$L_{S}(\hat{f}_{i})$却持续增长。然而,这种理想化场景与我们在图1中的结果形成鲜明对比——后期模型并未出现收益递减,反而比早期模型更具优势。因此,我们认为这些结果表明准确率下降主要源于较大的分布差距。下一节详细展示结果后,我们将在第5节进一步讨论这一点。

3 Summary of Our Experiments

3 我们的实验总结

We now give an overview of the main steps in our reproducibility experiment. Appendices B and C describe our methodology in more detail. We begin with the first decision, which was to choose informative datasets.

我们现在概述复现实验的主要步骤。附录B和C更详细地描述了我们的方法。我们从第一个决策开始,即选择信息丰富的数据集。

3.1 Choice of Datasets

3.1 数据集选择

We focus on image classification since it has become the most prominent task in machine learning and underlies a broad range of applications. The cumulative progress on ImageNet is often cited as one of the main breakthroughs in computer vision and machine learning [42]. State-of-the-art models now surpass human-level accuracy by some measure [20, 48]. This makes it particularly important to check if common image classification models can reliably generalize to new data from the same source.

我们聚焦于图像分类任务,因为该任务已成为机器学习领域最突出的研究方向,并支撑着广泛的应用场景。ImageNet数据集上的累积进展常被引用为计算机视觉和机器学习领域的主要突破之一 [42]。当前最先进的模型在某些指标上已超越人类水平准确率 [20, 48]。这使得验证常见图像分类模型能否可靠地泛化至同源新数据变得尤为重要。

We decided on CIFAR-10 and ImageNet, two of the most widely-used image classification benchmarks [18]. Both datasets have been the focus of intense research for almost ten years now. Due to the competitive nature of these benchmarks, they are an excellent example for testing whether adaptivity has led to over fitting. In addition to their popularity, their carefully documented dataset creation process makes them well suited for a reproducibility experiment [10, 35, 48].

我们选择了CIFAR-10和ImageNet这两个最广泛使用的图像分类基准数据集 [18]。近十年来,这两个数据集一直是密集研究的焦点。由于这些基准的竞争性质,它们成为测试适应性是否导致过拟合的绝佳示例。除了流行度之外,它们详尽记录的数据集创建过程也使其非常适合重现性实验 [10, 35, 48]。

Each of the two datasets has specific features that make it especially interesting for our replication study. CIFAR-10 is small enough so that many researchers developed and tested new models for this dataset. In contrast, ImageNet requires significantly more computational resources, and experimenting with new architectures has long been out of reach for many research groups. As a result, CIFAR-10 has likely experienced more hyper parameter tuning, which may also have led to more adaptive over fitting.

这两个数据集各自的特点使它们对我们的复现研究特别有价值。CIFAR-10规模较小,许多研究者曾基于此数据集开发和测试新模型;而ImageNet需要更多计算资源,新架构的实验长期超出许多研究团队的能力范围。因此,CIFAR-10可能经历了更多超参数调优,这也可能导致更多适应性过拟合现象。

On the other hand, the limited size of CIFAR-10 could also make the models more susceptible to small changes in the distribution. Since the CIFAR-10 models are only exposed to a constrained visual environment, they may be unable to learn a robust representation. In contrast, ImageNet captures a much broader variety of images: it contains about 24 $\times$ more training images than CIFAR-10 and roughly $100\times$ more pixels per image. So conventional wisdom (such as the claims of human-level performance) would suggest that ImageNet models also generalize more reliably .

另一方面,CIFAR-10的有限规模也可能使模型更容易受到分布微小变化的影响。由于CIFAR-10模型仅暴露于受限的视觉环境中,它们可能无法学习到稳健的表征。相比之下,ImageNet涵盖了更广泛的图像多样性:其训练图像数量约为CIFAR-10的24倍,且每张图像的像素量大约是CIFAR-10的100倍。因此传统观点(例如宣称达到人类水平性能的说法)会认为ImageNet模型也具有更可靠的泛化能力。

As we will see, neither of these conjectures is supported by our data: CIFAR-10 models do not suffer from more adaptive over fitting, and ImageNet models do not appear to be significantly more robust.

正如我们将看到的,这些猜想都没有得到数据支持:CIFAR-10模型并未出现更多自适应过拟合现象,而ImageNet模型也并未表现出显著更强的鲁棒性。

3.2 Dataset Creation Methodology

3.2 数据集构建方法

One way to test generalization would be to evaluate existing models on new i.i.d. data from the original test distribution. For example, this would be possible if the original dataset authors had collected a larger initial dataset and randomly split it into two test sets, keeping one of the test sets hidden for several years. Unfortunately, we are not aware of such a setup for CIFAR-10 or ImageNet.

测试泛化能力的一种方法是在原始测试分布的新独立同分布(i.i.d.)数据上评估现有模型。例如,如果原始数据集作者收集了更大的初始数据集并将其随机分成两个测试集,并将其中一个测试集隐藏数年,这种方法就可行。遗憾的是,我们尚未发现CIFAR-10或ImageNet存在此类实验设置。

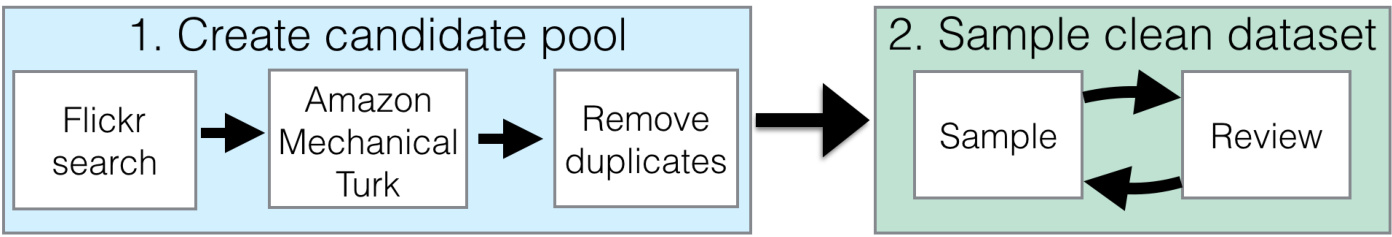

In this paper, we instead mimic the original distribution as closely as possible by repeating the dataset curation process that selected the original test set $^{3}$ from a larger data source. While this introduces the difficulty of disentangling the adaptivity gap from the distribution gap, it also enables us to check whether independent replication affects current accuracy scores. In spite of our efforts, we found that it is astonishingly hard to replicate the test set distributions of CIFAR-10 and ImageNet. At a high level, creating a new test set consists of two parts:

在本文中,我们通过重复从更大数据源筛选原始测试集的数据集构建流程 $^{3}$ ,尽可能贴近原始分布。虽然这会带来难以区分适应性差距与分布差距的难题,但也使我们能够检验独立复现是否会影响当前准确率分数。尽管付出诸多努力,我们发现复现CIFAR-10和ImageNet的测试集分布异常困难。总体而言,创建新测试集包含两个部分:

Gathering Data. To obtain images for a new test set, a simple approach would be to use a different dataset, e.g., Open Images [34]. However, each dataset comes with specific biases [54]. For instance, CIFAR-10 and ImageNet were assembled in the late 2000s, and some classes such as car or cell_phone have changed significantly over the past decade. We avoided such biases by drawing new images from the same source as CIFAR-10 and ImageNet. For CIFAR-10, this was the larger Tiny Image dataset [55]. For ImageNet, we followed the original process of utilizing the Flickr image hosting service and only considered images uploaded in a similar time frame as for ImageNet. In addition to the data source and the class distribution, both datasets also have rich structure within each class. For instance, each class in CIFAR-10 consists of images from multiple specific keywords in Tiny Images. Similarly, each class in ImageNet was assembled from the results of multiple queries to the Flickr API. We relied on the documentation of the two datasets to closely match the sub-class distribution as well.

收集数据。为获取新测试集的图像,一种简单方法是使用不同数据集,例如Open Images [34]。但每个数据集都存在特定偏差[54]。例如CIFAR-10和ImageNet构建于2000年代末,其中汽车(car)或手机(cell_phone)等类别在过去十年已发生显著变化。我们通过从与CIFAR-10和ImageNet相同来源采集新图像来避免此类偏差:对CIFAR-10使用更大的Tiny Image数据集[55],对ImageNet则沿用其原始构建流程——通过Flickr图片托管服务,仅采集与ImageNet同期上传的图像。除数据源和类别分布外,这两个数据集在每个类别内部还具有丰富结构:CIFAR-10的每个类别由Tiny Images中多个特定关键词对应的图像组成,而ImageNet的每个类别则是通过向Flickr API发起多个查询后汇编而成。我们还依据两个数据集的文档说明,确保子类分布也高度匹配。

Cleaning Data. Many images in Tiny Images and the Flickr results are only weakly related to the query (or not at all). To obtain a high-quality dataset with correct labels, it is therefore necessary to manually select valid images from the candidate pool. While this step may seem trivial, our results in Section 4 will show that it has major impact on the model accuracies.

清理数据。Tiny Images和Flickr结果中的许多图像与查询仅存在微弱关联(或完全无关)。为获得标注准确的高质量数据集,必须从候选池中手动筛选有效图像。尽管此步骤看似简单,但第4节的实验结果将证明其对模型准确率具有重大影响。

The authors of CIFAR-10 relied on paid student labelers to annotate their dataset. The researchers in the ImageNet project utilized Amazon Mechanical Turk (MTurk) to handle the large size of their dataset. We again replicated both annotation processes. Two graduate students authors of this paper impersonated the CIFAR-10 labelers, and we employed MTurk workers for our new ImageNet test set. For both datasets, we also followed the original labeling instructions, MTurk task format, etc.

CIFAR-10 的作者们依靠付费学生标注员来注释他们的数据集。ImageNet 项目的研究人员则利用 Amazon Mechanical Turk (MTurk) 来处理其庞大规模的数据集。我们再次复现了这两种标注流程:本文的两位研究生作者模拟了 CIFAR-10 的标注员,同时我们雇佣 MTurk 工作者来标注新的 ImageNet 测试集。对于这两个数据集,我们也遵循了原始标注说明、MTurk 任务格式等规范。

After collecting a set of correctly labeled images, we sampled our final test sets from the filtered candidate pool. We decided on a test set size of 2,000 for CIFAR-10 and 10,000 for ImageNet. While these are smaller than the original test sets, the sample sizes are still large enough to obtain 95% confidence intervals of about $\pm1%$ . Moreover, our aim was to avoid bias due to CIFAR-10 and ImageNet possibly leaving only “harder” images in the respective data sources. This effect is minimized by building test sets that are small compared to the original datasets (about 3% of the overall CIFAR-10 dataset and less than $1%$ of the overall ImageNet dataset).

在收集了一组正确标注的图像后,我们从筛选后的候选池中抽取了最终测试集。我们决定将CIFAR-10的测试集规模定为2,000张,ImageNet的测试集规模定为10,000张。虽然这些数字小于原始测试集,但样本量仍足以获得约$\pm1%$的95%置信区间。此外,我们的目标是避免因CIFAR-10和ImageNet可能仅保留各自数据源中"更难"图像而导致的偏差。通过构建相对于原始数据集较小的测试集(约占整个CIFAR-10数据集的3%,不到整个ImageNet数据集的$1%$),这种影响被降至最低。

3.3 Results on the New Test Sets

3.3 新测试集上的结果

After assembling our new test sets, we evaluated a broad range of image classification models spanning a decade of machine learning research. The models include the seminal AlexNet [36], widely used convolutional networks [21, 27, 49, 52], and the state-of-the-art [8, 39]. For all deep architectures, we used code previously published online. We relied on pre-trained models whenever possible and otherwise ran the training commands from the respective repositories. In addition, we also evaluated the best-performing approaches preceding convolutional networks on each dataset. These are random features for CIFAR-10 [7, 46] and Fisher vectors for ImageNet [44].4 We wrote our own implementations for these models, which we also release publicly.5

在构建新的测试集后,我们评估了跨越十年机器学习研究的广泛图像分类模型。这些模型包括开创性的AlexNet [36]、广泛使用的卷积网络 [21, 27, 49, 52] 以及最先进的模型 [8, 39]。对于所有深度架构,我们使用了先前在线发布的代码。只要可能就依赖预训练模型,否则从各自的代码库运行训练命令。此外,我们还评估了卷积网络之前在每个数据集上表现最佳的方法:CIFAR-10采用随机特征 [7, 46],ImageNet采用Fisher向量 [44]。我们为这些模型编写了自己的实现代码并公开。

| CIFAR-10 | ||||||||

| Orig. Rank Model | Orig. Accuracy | New Accuracy | Gap | New Rank | △ Rank | |||

| 1 | autoaug-pyramid_net_tf | 98.4 [98.1, 98.6] | 95.5 [94.5, 96.4] | 2.9 | 1 | 0 | ||

| 6 | shake_shake_64d_cutout | 97.1 [96.8, 97.4] | 93.0 [91.8, 94.1] | 4.1 | 5 | 1 | ||

| 16 | wide_resnet_28_10 | 95.9 [95.5, 96.3] | 89.7 [88.3, 91.0] | 6.2 | 14 | 2 | ||

| 23 | resnet_basic_110 | 93.5 [93.0, 93.9] | 85.2 [83.5, 86.7] | 8.3 | 24 | -1 | ||

| 27 | vgg-15_BN_64 | 93.0 [92.5, 93.5] | 84.9 [83.2, 86.4] | 8.1 | 27 | 0 | ||

| 30 | cudaconvnet | 88.5 [87.9, 89.2] | 77.5 [75.7, 79.3] | 11.0 | 30 | 0 | ||

| 31 | random_features_256k_aug | 85.6 [84.9, 86.3] | 73.1 [71.1, 75.1] | 12.5 | 31 | 0 | ||

| ImageNet Top-1 | ||||||||

| Orig. Rank | Model | Orig. Accuracy | New Accuracy | Gap | New Rank | △ Rank | ||

| 1 | pnasnet_large_tf | 82.9 [82.5, 83.2] | 72.2 [71.3, 73.1] | 10.7 | 3 | -2 | ||

| 4 | nasnetalarge | 82.5 [82.2, 82.8] | 72.2 [71.3, 73.1] | 10.3 | 1 | 3 | ||

| 21 | resnet152 | 78.3 [77.9, 78.7] | 67.0 [66.1, 67.9] | 11.3 | 21 | 0 | ||

| 23 | inception_v3_tf | 78.0 [77.6, 78.3] | 66.1 [65.1, 67.0] | 11.9 | 24 | -1 | ||

| 30 | densenet161 | 77.1 [76.8, 77.5] | 65.3 [64.4, 66.2] | 11.8 | 30 | 0 | ||

| 43 | vgg19_bn | 74.2 [73.8, 74.6] | 61.9 [60.9, 62.8] | 12.3 | 44 | -1 | ||

| 64 | alexnet | 56.5 [56.1, 57.0] | 44.0 [43.0, 45.0] | 12.5 | 64 | 0 | ||

| 65 | fv_64k | 35.1 [34.7, 35.5] | 24.1 [23.2, 24.9] | 11.0 | 65 | 0 | ||

| 原始排名 | 模型 | 原始准确率 | 新准确率 | 差距 | 新排名 | 排名变化 | ||

|---|---|---|---|---|---|---|---|---|

| 1 | autoaug-pyramid_net_tf | 98.4 [98.1, 98.6] | 95.5 [94.5, 96.4] | 2.9 | 1 | 0 | ||

| 6 | shake_shake_64d_cutout | 97.1 [96.8, 97.4] | 93.0 [91.8, 94.1] | 4.1 | 5 | 1 | ||

| 16 | wide_resnet_28_10 | 95.9 [95.5, 96.3] | 89.7 [88.3, 91.0] | 6.2 | 14 | 2 | ||

| 23 | resnet_basic_110 | 93.5 [93.0, 93.9] | 85.2 [83.5, 86.7] | 8.3 | 24 | -1 | ||

| 27 | vgg-15_BN_64 | 93.0 [92.5, 93.5] | 84.9 [83.2, 86.4] | 8.1 | 27 | 0 | ||

| 30 | cudaconvnet | 88.5 [87.9, 89.2] | 77.5 [75.7, 79.3] | 11.0 | 30 | 0 | ||

| 31 | random_features_256k_aug | 85.6 [84.9, 86.3] | 73.1 [71.1, 75.1] | 12.5 | 31 | 0 |

ImageNet Top-1

| 原始排名 | 模型 | 原始准确率 | 新准确率 | 差距 | 新排名 | 排名变化 | ||

|---|---|---|---|---|---|---|---|---|

| 1 | pnasnet_large_tf | 82.9 [82.5, 83.2] | 72.2 [71.3, 73.1] | 10.7 | 3 | -2 | ||

| 4 | nasnetalarge | 82.5 [82.2, 82.8] | 72.2 [71.3, 73.1] | 10.3 | 1 | 3 | ||

| 21 | resnet152 | 78.3 [77.9, 78.7] | 67.0 [66.1, 67.9] | 11.3 | 21 | 0 | ||

| 23 | inception_v3_tf | 78.0 [77.6, 78.3] | 66.1 [65.1, 67.0] | 11.9 | 24 | -1 | ||

| 30 | densenet161 | 77.1 [76.8, 77.5] | 65.3 [64.4, 66.2] | 11.8 | 30 | 0 | ||

| 43 | vgg19_bn | 74.2 [73.8, 74.6] | 61.9 [60.9, 62.8] | 12.3 | 44 | -1 | ||

| 64 | alexnet | 56.5 [56.1, 57.0] | 44.0 [43.0, 45.0] | 12.5 | 64 | 0 | ||

| 65 | fv_64k | 35.1 [34.7, 35.5] | 24.1 [23.2, 24.9] | 11.0 | 65 | 0 |

Table 1: Model accuracies on the original CIFAR-10 test set, the original ImageNet validation set, and our new test sets. $\Delta$ Rank is the relative difference in the ranking from the original test set to the new test set in the full ordering of all models (see Appendices B.3.3 and C.4.4). For example, $\Delta\mathrm{Rank}=-2$ means that a model dropped by two places on the new test set compared to the original test set. The confidence intervals are $95%$ Clopper-Pearson intervals. Due to space constraints, references for the models can be found in Appendices B.3.2 and C.4.3.

表 1: 模型在原始CIFAR-10测试集、原始ImageNet验证集及新测试集上的准确率。$\Delta$ Rank表示所有模型完整排序中,从原始测试集到新测试集的排名相对变化 (详见附录B.3.3和C.4.4)。例如,$\Delta\mathrm{Rank}=-2$ 表示该模型在新测试集上的排名比原始测试集下降了两位。置信区间为$95%$ Clopper-Pearson区间。由于篇幅限制,模型参考文献见附录B.3.2和C.4.3。

Overall, the top-1 accuracies range from 83% to 98% on the original CIFAR-10 test set and 21% to $83%$ on the original ImageNet validation set. We refer the reader to Appendices C.4.3 and B.3.2 for a full list of models and source repositories.

总体而言,在原始CIFAR-10测试集上的top-1准确率介于83%至98%之间,在原始ImageNet验证集上则达到21%至$83%$。完整模型列表及源代码仓库详见附录C.4.3和B.3.2。

Figure 1 in the introduction plots original vs. new accuracies, and Table 1 in this section summarizes the numbers of key models. The remaining accuracy scores can be found in Appendices B.3.3 and C.4.4. We now briefly describe the two main trends and discuss the results further in Section 5.

引言中的图1绘制了原始准确率与新准确率的对比,本节中的表1总结了关键模型的数量。其余准确率分数可在附录B.3.3和C.4.4中找到。我们现在简要描述两个主要趋势,并在第5节进一步讨论结果。

A Significant Drop in Accuracy. All models see a large drop in accuracy from the original test sets to our new test sets. For widely used architectures such as VGG [49] and ResNet [21], the drop is $8%$ on CIFAR-10 and $11%$ on ImageNet. On CIFAR-10, the state of the art [8] is more robust and only drops by 3% from 98.4% to $95.5%$ . In contrast, the best model on ImageNet [39] sees an $11%$ drop from 83% to 72% in top-1 accuracy and a 6% drop from 96% to 90% in top-5 accuracy. So the top-1 drop on ImageNet is larger than what we observed on CIFAR-10.

准确率显著下降。所有模型从原始测试集到我们新测试集的准确率都出现了大幅下降。对于VGG [49]和ResNet [21]等广泛使用的架构,在CIFAR-10上下降了8%,在ImageNet上下降了11%。在CIFAR-10上,当前最优模型[8]表现更为稳健,仅从98.4%下降3%至95.5%。相比之下,ImageNet上的最佳模型[39]在top-1准确率上从83%降至72%,下降了11%;在top-5准确率上从96%降至90%,下降了6%。因此,ImageNet的top-1下降幅度大于我们在CIFAR-10上观察到的结果。

To put these accuracy numbers into perspective, we note that the best model in the ILSVRC $^6$ 2013 competition achieved 89% top-5 accuracy, and the best model from ILSVRC 2014 achieved 93% top-5 accuracy. So the 6% drop in top-5 accuracy from the 2018 state-of-the-art corresponds to approximately five years of progress in a very active period of machine learning research.

为了更直观地理解这些准确率数据,我们注意到 ILSVRC [6] 2013 年竞赛中最佳模型的 top-5 准确率为 89%,而 ILSVRC 2014 年最佳模型的 top-5 准确率为 93%。因此,与 2018 年最先进水平相比,top-5 准确率下降 6% 大致相当于机器学习研究高度活跃时期五年的进展。

Few Changes in the Relative Order. When sorting the models in order of their original and new accuracy, there are few changes in the respective rankings. Models with comparable original accuracy tend to see a similar decrease in performance. In fact, Figure 1 shows that the original accuracy is highly predictive of the new accuracy and that the relationship can be summarized well with a linear function. On CIFAR-10, the new accuracy of a model is approximately given by the following formula:

相对顺序变化不大。按原始准确率和调整后准确率对模型进行排序时,各模型的排名变化很小。原始准确率相近的模型,其性能下降幅度也较为接近。实际上,图1显示原始准确率对新准确率具有高度预测性,且两者关系可用线性函数很好地概括。在CIFAR-10数据集上,模型的新准确率大致遵循以下公式:

$$

\mathrm{acc}_ {\mathrm{new}}=1.69\cdot\mathrm{acc}_{\mathrm{orig}}-72.7%.

$$

$$

\mathrm{acc}_ {\mathrm{new}}=1.69\cdot\mathrm{acc}_{\mathrm{orig}}-72.7%.

$$

On ImageNet, the top-1 accuracy of a model is given by

在ImageNet上,模型的top-1准确率由

$$

\mathrm{acc}_ {\mathrm{new}}=1.11\cdot\mathrm{acc}_{\mathrm{orig}}-20.2%.

$$

$$

\mathrm{acc}_ {\mathrm{new}}=1.11\cdot\mathrm{acc}_{\mathrm{orig}}-20.2%.

$$

Computing a $95%$ confidence interval from 100,000 bootstrap samples gives [1.63, 1.76] for the slope and $[-78.6,-67.5]$ for the offset on CIFAR-10, and [1.07, 1.19] and $[-26.0,-17.8]$ respectively for ImageNet.

通过10万次自助采样(bootstrap)计算得到的95%置信区间显示,在CIFAR-10数据集上斜率范围为[1.63, 1.76],截距为$[-78.6,-67.5]$;而在ImageNet数据集上斜率范围为[1.07, 1.19],截距为$[-26.0,-17.8]$。

On both datasets, the slope of the linear fit is greater than 1. So models with higher original accuracy see a smaller drop on the new test sets. In other words, model robustness improves with increasing accuracy. This effect is less pronounced on ImageNet (slope 1.1) than on CIFAR-10 (slope 1.7). In contrast to a scenario with strong adaptive over fitting, neither dataset sees diminishing returns in accuracy scores when going from the original to the new test sets.

在两个数据集上,线性拟合的斜率均大于1。因此原始准确率较高的模型在新测试集上的性能下降幅度更小。换言之,模型鲁棒性随准确率提升而增强。ImageNet数据集(斜率1.1)的这种效应弱于CIFAR-10数据集(斜率1.7)。与存在严重自适应过拟合的情况不同,两个数据集从原始测试集切换到新测试集时,准确率均未出现收益递减现象。

3.4 Experiments to Test Follow-Up Hypotheses

3.4 后续假设验证实验

Since the drop from original to new accuracies is concerning ly large, we investigated multiple hypotheses for explaining this drop. Appendices B.2 and C.3 list a range of follow-up experiments we conducted, e.g., re-tuning hyper parameters, training on part of our new test set, or performing cross-validation. However, none of these effects can explain the size of the drop. We conjecture that the accuracy drops stem from small variations in the human annotation process. As we will see in the next section, the resulting changes in the test sets can significantly affect model accuracies.

由于原始准确率与新准确率之间的下降幅度异常显著,我们针对这一现象提出了多种解释假设。附录B.2和C.3列举了我们开展的一系列后续实验,例如重新调整超参数、在部分新测试集上训练或进行交叉验证。但所有这些因素都无法解释如此大幅度的准确率下降。我们推测准确率下降源于人工标注过程中细微的差异性变化。正如下一节将展示的,测试集中这些细微变化可能对模型准确率产生显著影响。

4 Understanding the Impact of Data Cleaning on ImageNet

4 理解数据清洗对ImageNet的影响

A crucial aspect of ImageNet is the use of MTurk. There is a broad range of design choices for the MTurk tasks and how the resulting annotations determine the final dataset. To better understand the impact of these design choices, we assembled three different test sets for ImageNet. All of these test sets consist of images from the same Flickr candidate pool, are correctly labeled, and selected by more than 70% of the MTurk workers on average. Nevertheless, the resulting model accuracies vary by $14%$ . To put these numbers in context, we first describe our MTurk annotation pipeline.

ImageNet的一个关键方面是使用了MTurk。MTurk任务的设计选择范围广泛,这些选择如何决定最终数据集取决于生成的标注。为了更好地理解这些设计选择的影响,我们为ImageNet组装了三个不同的测试集。所有这些测试集都由来自同一Flickr候选池的图像组成,标注正确,并且平均超过70%的MTurk工作者选择了这些图像。然而,由此产生的模型准确率差异高达$14%$。为了理解这些数字的背景,我们首先描述我们的MTurk标注流程。

MTurk Tasks. We designed our MTurk tasks and user interface to closely resemble those originally used for ImageNet. As in ImageNet, each MTurk task contained a grid of 48 candidate images for a given target class. The task description was derived from the original ImageNet instructions and included the definition of the target class with a link to a corresponding Wikipedia page. We asked the MTurk workers to select images belonging to the target class regardless of “occlusions, other objects, and clutter or text in the scene” and to avoid drawings or paintings (both as in ImageNet). Appendix C.4.1 shows a screenshot of our UI and a screenshot of the original UI for comparison.

MTurk任务。我们将MTurk任务和用户界面设计成与最初用于ImageNet的界面高度相似。与ImageNet一样,每个MTurk任务包含一个由48张候选图像组成的网格,对应给定的目标类别。任务说明源自原始ImageNet指导,包含目标类别的定义及对应维基百科页面的链接。我们要求MTurk工作者选择属于目标类别的图像,忽略"遮挡物、其他对象、场景中的杂乱或文字",并避免选择绘图或油画(均与ImageNet标准一致)。附录C.4.1展示了我们的用户界面截图和原始界面对比截图。

For quality control, we embedded at least six randomly selected images from the original validation set in each MTurk task (three from the same class, three from a class that is nearby in the WordNet hierarchy). These images appeared in random locations of the image grid for each task. In total, we collected sufficient MTurk annotations so that we have at least 20 annotated validation images for each class.

为了质量控制,我们在每个MTurk任务中嵌入了至少六张从原始验证集中随机选取的图像(三张来自同一类别,三张来自WordNet层级结构相邻的类别)。这些图像在每个任务的图像网格中随机出现。我们总共收集了足够的MTurk标注,确保每个类别至少有20张标注过的验证图像。

The main outcome of the MTurk tasks is a selection frequency for each image, i.e., what fraction of MTurk workers selected the image in a task for its target class. We recruited at least ten MTurk workers for each task (and hence for each image), which is similar to ImageNet. Since each task contained original validation images, we could also estimate how often images from the original dataset were selected by our MTurk workers.

MTurk任务的主要结果是每张图片的选择频率,即在该任务中,MTurk工作者为目标类别选择该图片的比例。我们为每项任务(即每张图片)招募了至少十名MTurk工作者,这与ImageNet的做法类似。由于每项任务都包含原始验证图像,我们还可以估算原始数据集中图片被MTurk工作者选中的频率。

Sampling Strategies. In order to understand how the MTurk selection frequency affects the model accuracies, we explored three sampling strategies.

采样策略。为了理解MTurk选择频率如何影响模型准确率,我们探索了三种采样策略。

• Matched Frequency: First, we estimated the selection frequency distribution for each class from the annotated original validation images. We then sampled ten images from our candidate pool for each class according to these class-specific distributions (see Appendix C.1.2 for details). • Threshold0.7: For each class, we sampled ten images with selection frequency at least 0.7. • TopImages: For each class, we chose the ten images with highest selection frequency.

• 匹配频率:首先,我们从标注的原始验证图像中估算每个类别的选择频率分布。然后根据这些类别特定分布,从候选池中为每个类别采样十张图像(详见附录 C.1.2)。

• 阈值0.7:对于每个类别,我们采样了选择频率至少为0.7的十张图像。

• 顶部图像:对于每个类别,我们选择了选择频率最高的十张图像。

In order to minimize labeling errors, we manually reviewed each dataset and removed incorrect images. The average selection frequencies of the three final datasets range from 0.93 for TopImages over 0.85 for Threshold0.7 to 0.73 for Matched Frequency. For comparison, the original validation set has an average selection frequency of 0.71 in our experiments. Hence all three of our new test sets have higher selection frequencies than the original ImageNet validation set. In the preceding sections, we presented results on Matched Frequency for ImageNet since it is closest to the validation set in terms of selection frequencies.

为了尽量减少标注错误,我们手动审核了每个数据集并删除了错误图像。三个最终数据集的平均选择频率分别为:TopImages 0.93、Threshold0.7 0.85、Matched Frequency 0.73。作为对比,原始验证集在我们的实验中平均选择频率为0.71。因此,我们新建的三个测试集选择频率均高于原始ImageNet验证集。在前述章节中,我们展示了ImageNet的Matched Frequency结果,因为其选择频率最接近验证集水平。

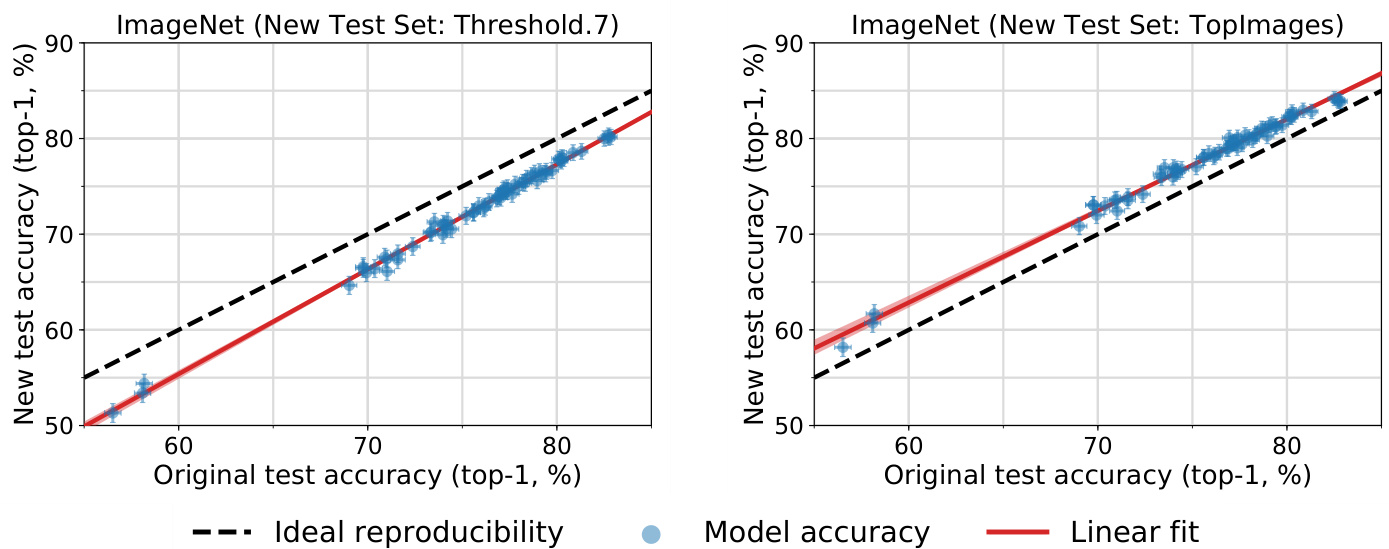

Results. Table 2 shows that the MTurk selection frequency has significant impact on both top-1 and top-5 accuracy. In particular, TopImages has the highest average MTurk selection frequency and sees a small increase of about $2%$ in both average top-1 and top-5 accuracy compared to the original validation set. This is in stark contrast to Matched Frequency, which has the lowest average selection frequency and exhibits a significant drop of $12%$ and $8%$ , respectively. The Threshold0.7 dataset is in the middle and sees a small decrease of $3%$ in top-1 and $1%$ in top-5 accuracy.

结果。表 2 显示 MTurk 选择频率对 top-1 和 top-5 准确率均有显著影响。其中,TopImages 的平均 MTurk 选择频率最高,其平均 top-1 和 top-5 准确率较原始验证集均小幅提升约 $2%$ 。这与 Matched Frequency 形成鲜明对比——后者平均选择频率最低,top-1 和 top-5 准确率分别大幅下降 $12%$ 和 $8%$ 。Threshold0.7 数据集处于中间位置,top-1 和 top-5 准确率分别小幅下降 $3%$ 和 $1%$ 。

In total, going from TopImages to Matched Frequency decreases the accuracies by about $14%$ (top-1) and $10%$ (top-5). For comparison, note that after excluding AlexNet (and the SqueezeNet models

从TopImages到Matched Frequency的转变使准确率总体下降了约$14%$ (top-1)和$10%$ (top-5)。作为对比,需注意在排除AlexNet(以及SqueezeNet模型)后

| Sampling Strategy | Average MTurk Selection Freq. | Average Top-1 Accuracy Change | Average Top-5 Accuracy Change |

| MatchedFrequency | 0.73 | -11.8% | -8.2% |

| Threshold0.7 | 0.85 | -3.2% | -1.2% |

| Toplmages | 0.93 | +2.1% | +1.8% |

| 采样策略 (Sampling Strategy) | 平均MTurk选择频率 (Average MTurk Selection Freq.) | 平均Top-1准确率变化 (Average Top-1 Accuracy Change) | 平均Top-5准确率变化 (Average Top-5 Accuracy Change) |

|---|---|---|---|

| MatchedFrequency | 0.73 | -11.8% | -8.2% |

| Threshold0.7 | 0.85 | -3.2% | -1.2% |

| Toplmages | 0.93 | +2.1% | +1.8% |

Table 2: Impact of the three sampling strategies for our ImageNet test sets. The table shows the average MTurk selection frequency in the resulting datasets and the average changes in model accuracy compared to the original validation set. We refer the reader to Section 4 for a description of the three sampling strategies. All three test sets have an average selection frequency of more than 0.7, yet the model accuracies still vary widely. For comparison, the original ImageNet validation set has an average selection frequency of 0.71 in our MTurk experiments. The changes in average accuracy span $14%$ and $10%$ in top-1 and top-5, respectively. This shows that details of the sampling strategy have large influence on the resulting accuracies.

表 2: 三种采样策略对我们的ImageNet测试集的影响。该表显示了所得数据集中MTurk平均选择频率以及模型准确率相较于原始验证集的平均变化。关于这三种采样策略的详细说明,请参阅第4节。所有三个测试集的平均选择频率均超过0.7,但模型准确率仍存在显著差异。作为对比,原始ImageNet验证集在我们的MTurk实验中平均选择频率为0.71。平均准确率变化在top-1和top-5中分别达到$14%$和$10%$。这表明采样策略的细节对最终准确率有重大影响。

tuned to match AlexNet [28]), the range of accuracies spanned by all remaining convolutional networks is roughly $14%$ (top-1) and $8%$ (top-5). So the variation in accuracy caused by the three sampling strategies is larger than the variation in accuracy among all post-AlexNet models we tested.

经过调校以匹配AlexNet [28]后,其余所有卷积网络的准确率范围大约为14%(top-1)和8%(top-5)。因此,三种采样策略导致的准确率差异大于我们测试的所有后AlexNet模型之间的准确率差异。

Figure 2 plots the new vs. original top-1 accuracies on Threshold0.7 and TopImages, similar to Figure 1 for Matched Frequency before. For easy comparison of top-1 and top-5 accuracy plots on all three datasets, we refer the reader to Figure 1 in Appendix C.4.4. All three plots show a good linear fit.

图 2 展示了 Threshold0.7 和 TopImages 数据集上新旧 top-1 准确率的对比,类似于前文 Matched Frequency 的图 1。为方便比较三个数据集的 top-1 和 top-5 准确率曲线,请参阅附录 C.4.4 中的图 1。所有曲线均显示出良好的线性拟合度。

Figure 2: Model accuracy on the original ImageNet validation set vs. accuracy on two variants of our new test set. We refer the reader to Section 4 for a description of these test sets. Each data point corresponds to one model in our testbed (shown with $95%$ Clopper-Pearson confidence intervals). On Threshold0.7, the model accuracies are $3%$ lower than on the original test set. On TopImages, which contains the images most frequently selected by MTurk workers, the models perform $2%$ better than on the original test set. The accuracies on both datasets closely follow a linear function, similar to Matched Frequency in Figure 1. The red shaded region is a 95% confidence region for the linear fit from 100,000 bootstrap samples.

图 2: 原始ImageNet验证集上的模型准确率与我们新测试集两个变体上的准确率对比。关于这些测试集的详细说明请参阅第4节。每个数据点对应我们测试平台中的一个模型(显示为95% Clopper-Pearson置信区间)。在Threshold0.7数据集上,模型准确率比原始测试集低3%。在包含MTurk工作者最常选择图像的TopImages数据集上,模型表现比原始测试集高2%。两个数据集上的准确率都紧密遵循线性函数关系,与图1中的Matched Frequency情况相似。红色阴影区域是通过100,000次bootstrap采样得到的线性拟合95%置信区域。

5 Discussion

5 讨论

We now return to the main question from Section 2: What causes the accuracy drops? As before, we distinguish between two possible mechanisms.

我们现在回到第2节中的主要问题:是什么导致了准确率下降?和之前一样,我们区分了两种可能的机制。

5.1 Adaptivity Gap

5.1 适应性差距

In its prototypical form, adaptive over fitting would manifest itself in diminishing returns observed on the new test set (see Section 2.1). However, we do not observe this pattern on either CIFAR-10 or ImageNet. On both datasets, the slope of the linear fit is greater than 1, i.e., each point of accuracy improvement on the original test set translates to more than 1% on the new test set. This is the opposite of the standard over fitting scenario. So at least on CIFAR-10 and ImageNet, multiple years of competitive test set adaptivity did not lead to diminishing accuracy numbers.

在其典型形式中,自适应过拟合会表现为新测试集上的收益递减现象(参见第2.1节)。然而,我们在CIFAR-10和ImageNet上均未观察到这种模式。在这两个数据集上,线性拟合的斜率均大于1,即原始测试集每提升1%准确率,新测试集上会获得超过1%的提升。这与标准过拟合情景完全相反。因此至少在CIFAR-10和ImageNet上,多年来的竞争性测试集自适应并未导致准确率数值的递减。

While our experiments rule out the most dangerous form of adaptive over fitting, we remark that they do not exclude all variants. For instance, it could be that any test set adaptivity leads to a roughly constant drop in accuracy. Then all models are affected equally and we would see no diminishing returns since later models could still be better. Testing for this form of adaptive over fitting likely requires a new test set that is truly i.i.d. and not the result of a separate data collection effort. Finding a suitable dataset for such an experiment is an interesting direction for future research.

虽然我们的实验排除了最危险的自适应过拟合形式,但需要说明的是,这些实验并未涵盖所有变体。例如,任何测试集自适应性都可能导致准确率出现大致恒定的下降。这种情况下所有模型会受到同等影响,我们就不会观察到收益递减现象,因为后续模型仍可能表现更优。要检测这种形式的自适应过拟合,可能需要一个真正独立同分布(i.i.d.)的新测试集,而非通过额外数据收集获得的集合。寻找适合此类实验的数据集是未来研究中有趣的方向。

The lack of adaptive over fitting contradicts conventional wisdom in machine learning. We now describe two mechanisms that could have prevented adaptive over fitting:

缺乏适应性过拟合与传统机器学习观念相矛盾。我们接下来将阐述两种可能防止适应性过拟合的机制:

The Ladder Mechanism. Blum and Hardt introduced the Ladder algorithm to protect machine learning competitions against adaptive over fitting [3]. The core idea is that constrained interaction with the test set can allow a large number of model evaluations to succeed, even if the models are chosen adaptively. Due to the natural form of their algorithm, the authors point out that it can also be seen as a mechanism that the machine learning community implicitly follows.

梯子机制 (The Ladder Mechanism)。Blum 和 Hardt 提出了梯子算法 (Ladder algorithm) 来防止机器学习竞赛中的自适应过拟合现象 [3]。该机制的核心思想是:即使模型被自适应地选择,通过限制与测试集的交互形式,仍能确保大量模型评估成功。由于该算法的自然形式,作者指出它也可以被视为机器学习社区隐式遵循的一种机制。

Limited Model Class. Adaptivity is only a problem if we can choose among models for which the test set accuracy differs significantly from the population accuracy. Importantly, this argument does not rely on the number of all possible models (e.g., all parameter settings of a neural network), but only on those models that could actually be evaluated on the test set. For instance, the standard deep learning workflow only produces models trained with SGD-style algorithms on a fixed training set, and requires that the models achieve high training accuracy (otherwise we would not consider the corresponding hyper parameters). Hence the number of different models arising from the current methodology may be small enough so that uniform convergence holds.

有限模型类别。只有当我们可以选择那些在测试集上准确率与总体准确率存在显著差异的模型时,适应性才会成为问题。关键的是,这一论点并不依赖于所有可能模型的数量(例如神经网络的所有参数设置),而仅依赖于那些实际上可以在测试集上评估的模型。例如,标准的深度学习工作流仅产生通过SGD类算法在固定训练集上训练的模型,并要求这些模型达到较高的训练准确率(否则我们不会考虑相应的超参数)。因此,当前方法所产生的不同模型数量可能足够少,从而满足一致收敛条件。

Our experiments offer little evidence for favoring one explanation over the other. One observation is that the convolutional networks shared many errors on CIFAR-10, which could be an indicator that the models are rather similar. But to gain a deeper understanding into adaptive over fitting, it is likely necessary to gather further data from more machine learning benchmarks, especially in scenarios where adaptive over fitting does occur naturally.

我们的实验几乎没有提供支持某一解释优于另一解释的证据。一个观察是,卷积网络在CIFAR-10上共享了许多错误,这可能表明这些模型相当相似。但要更深入地理解自适应过拟合 (adaptive over fitting),可能需要从更多机器学习基准中收集额外数据,特别是在自然发生自适应过拟合的场景中。

5.2 Distribution Gap

5.2 分布差距

The lack of diminishing returns in our experiments points towards the distribution gap as the primary reason for the accuracy drops. Moreover, our results on ImageNet show that changes in the sampling strategy can indeed affect model accuracies by a large amount, even if the data source and other parts of the dataset creation process stay the same.

实验中未出现收益递减现象表明,分布差距是准确率下降的主因。此外,我们在ImageNet上的结果表明,即使数据源和数据集创建过程的其他部分保持不变,采样策略的改变仍会大幅影响模型准确率。

So in spite of our efforts to match the original dataset creation process, the distribution gap is still our leading hypothesis for the accuracy drops. This demonstrates that it is surprisingly hard to accurately replicate the distribution of current image classification datasets. The main difficulty likely is the subjective nature of the human annotation step. There are many parameters that can affect the quality of human labels such as the annotator population (MTurk vs. students, qualifications, location & time, etc.), the exact task format, and compensation. Moreover, there are no exact definitions for many classes in ImageNet (e.g., see Appendix C.4.8). Understanding these aspects in more detail is an important direction for designing future datasets that contain challenging images while still being labeled correctly.

尽管我们努力匹配原始数据集的创建流程,分布差异仍是准确率下降的主要原因。这表明精确复现当前图像分类数据集的分布出人意料地困难。主要难点可能在于人工标注步骤的主观性——影响标注质量的因素众多,包括标注人员群体(MTurk工作者vs学生、资质、地域与时间等)、具体任务格式和报酬标准。此外,ImageNet中许多类别缺乏精确定义(例如参见附录C.4.8)。更深入地理解这些因素,对于设计既包含挑战性图像又能保证标注准确性的未来数据集至关重要。

The difficulty of clearly defining the data distribution, combined with the brittle behavior of the tested models, calls into question whether the black-box and i.i.d. framework of learning can produce reliable class if i ers. Our analysis of selection frequencies in Figure 15 (Appendix C.4.7) shows that we could create a new test set with even lower model accuracies. The images in this hypothetical dataset would still be correct, from Flickr, and selected by more than half of the MTurk labelers on average. So in spite of the impressive accuracy scores on the original validation set, current ImageNet models still have difficulty generalizing from “easy” to “hard” images.

数据分布难以明确定义,加之测试模型表现脆弱,这让人质疑黑箱学习和独立同分布(i.i.d.)框架能否产出可靠的分类器。我们对图15(附录C.4.7)中筛选频率的分析表明,完全可以构建一个模型准确率更低的新测试集。这个假设数据集中的图像仍将符合要求——来自Flickr且平均获得超半数MTurk标注者选择。因此尽管原始验证集上的准确率令人瞩目,当前ImageNet模型在从"简单"图像推广到"困难"图像时仍存在障碍。

5.3 A Model for the Linear Fit

5.3 线性拟合模型

Finally, we briefly comment on the striking linear relationship between original and new test accuracies that we observe in all our experiments (for instance, see Figure 1 in the introduction or Figures 12 and 13 in the appendix). To illustrate how this phenomenon could arise, we present a simple data model where a small modification of the data distribution can lead to significant changes in accuracy, yet the relative order of models is preserved as a linear relationship. We emphasize that this model should not be seen as the true explanation. Instead, we hope it can inform future experiments that explore natural variations in test distributions.

最后,我们简要评论在所有实验中观察到的原始测试准确率与新测试准确率之间惊人的线性关系(例如,参见引言中的图1或附录中的图12和13)。为了说明这种现象如何产生,我们提出了一个简单的数据模型,其中对数据分布的微小修改可能导致准确率的显著变化,但模型的相对顺序仍保持线性关系。我们强调,该模型不应被视为真实解释,而是希望它能启发未来探索测试分布自然变化的实验。

First, as we describe in Appendix C.2, we find that we achieve better fits to our data under a probit scaling of the accuracies. Over a wide range from $21%$ to $83%$ (all models in our ImageNet testbed), the accuracies on the new test set, $\alpha_{\mathrm{new}}$ , are related to the accuracies on the original test set, $\alpha_{\mathrm{orig}}$ by the relationship

首先,如附录 C.2 所述,我们发现对准确率进行概率单位 (probit) 缩放能获得更好的数据拟合效果。在 21% 到 83% 的广泛范围内(我们 ImageNet 测试平台中的所有模型),新测试集的准确率 $\alpha_{\mathrm{new}}$ 与原始测试集的准确率 $\alpha_{\mathrm{orig}}$ 存在以下关系:

$$

\Phi^{-1}(\alpha_{\mathrm{new}})~=~u\cdot\Phi^{-1}(\alpha_{\mathrm{orig}})+v

$$

$$

\Phi^{-1}(\alpha_{\mathrm{new}})~=~u\cdot\Phi^{-1}(\alpha_{\mathrm{orig}})+v

$$

where $\Phi$ is the Gaussian CDF, and $u$ and $\upsilon$ are scalars. The probit scale is in a sense more natural than a linear scale as the accuracy numbers are probabilities. When we plot accuracies on a probit scale in Figures 6 and 13, we effectively visualize $\Phi^{-1}(\alpha)$ instead of $\alpha$ .

其中 $\Phi$ 是高斯累积分布函数 (CDF),$u$ 和 $\upsilon$ 是标量。从准确率为概率值的角度来看,概率比尺度 (probit scale) 比线性尺度更自然。当我们在图 6 和图 13 中使用概率比尺度绘制准确率时,实际上是在可视化 $\Phi^{-1}(\alpha)$ 而非 $\alpha$。

We now provide a simple plausible model where the original and new accuracies are related linearly on a probit scale. Assume that every example $i$ has a scalar “difficulty” $\tau_{i}\in\mathbb{R}$ that quantifies how easy it is to classify. Further assume the probability of a model $j$ correctly classifying an image with

我们现在提供一个简单的合理模型,其中原始准确率与新准确率在概率单位尺度上呈线性关系。假设每个样本$i$都有一个标量"难度"$\tau_{i}\in\mathbb{R}$用于量化其分类难易程度。进一步假设模型$j$正确分类具有...

difficulty $\tau$ is given by an increasing function $\zeta_{j}(\tau)$ . We show that for restricted classes of difficulty functions $\zeta_{j}$ , we find a linear relationship between average accuracies after distribution shifts.

难度 $\tau$ 由递增函数 $\zeta_{j}(\tau)$ 给出。我们证明,对于受限类别的难度函数 $\zeta_{j}$,可以找到分布偏移后平均准确率之间的线性关系。

To be specific, we focus on the following parameter iz ation. Assume the difficulty distribution of images in a test set follows a normal distribution with mean $\mu$ and variance $\sigma^{2}$ . Further assume that

具体而言,我们关注以下参数化设定。假设测试集中图像的难度分布服从均值为$\mu$、方差为$\sigma^{2}$的正态分布。进一步假设

$$

\zeta_{j}(\tau)=\Phi(s_{j}-\tau),

$$

$$

\zeta_{j}(\tau)=\Phi(s_{j}-\tau),

$$

where $\Phi:\mathbb{R}\rightarrow(0,1)$ is the CDF of a standard normal distribution, and $s_{j}$ is the “skill” of model $j$ . Models with higher skill have higher classification accuracy, and images with higher difficulty lead to smaller classification accuracy. Again, the choice of $\Phi$ here is somewhat arbitrary: any sigmoidal function that maps $(-\infty,+\infty)$ to $(0,1)$ is plausible. But using the Gaussian CDF yields a simple calculation illustrating the linear phenomenon.

其中 $\Phi:\mathbb{R}\rightarrow(0,1)$ 是标准正态分布的累积分布函数 (CDF),$s_{j}$ 表示模型 $j$ 的"技能值"。技能值越高的模型分类准确率越高,图像难度越高则分类准确率越低。此处 $\Phi$ 的选择具有一定随意性:任何将 $(-\infty,+\infty)$ 映射到 $(0,1)$ 的S型函数都适用。但采用高斯CDF能简化计算过程,便于展示线性现象。

Using the above notation, the accuracy $\alpha_{j,\mu,\sigma}$ of a model $j$ on a test set with difficulty mean $\mu$ and variance $\sigma$ is then given by

使用上述符号,模型 $j$ 在难度均值为 $\mu$、方差为 $\sigma$ 的测试集上的准确率 $\alpha_{j,\mu,\sigma}$ 由下式给出

$$

\alpha_{j,\mu,\sigma}=\underset{\tau\sim\mathcal{N}(\mu,\sigma)}{\mathbb{E}}\left[\Phi(s_{j}-\tau)\right].

$$

$$

\alpha_{j,\mu,\sigma}=\underset{\tau\sim\mathcal{N}(\mu,\sigma)}{\mathbb{E}}\left[\Phi(s_{j}-\tau)\right].

$$

We can expand the CDF into an expectation and combine the two expectations by utilizing the fact that a linear combination of two Gaussians is again Gaussian. This yields:

我们可以将累积分布函数(CDF)展开为一个期望,并利用两个高斯分布的线性组合仍然是高斯分布这一性质,将两个期望合并。最终得到:

$$

\alpha_{j,\mu,\sigma}=\Phi\left({\frac{s_{j}-\mu}{\sqrt{\sigma^{2}+1}}}\right).

$$

$$

\alpha_{j,\mu,\sigma}=\Phi\left({\frac{s_{j}-\mu}{\sqrt{\sigma^{2}+1}}}\right).

$$

On a probit scale, the quantities we plot are given by

在概率单位尺度上,我们绘制的量由

$$

{\tilde{\alpha}}_ {j,\mu,\sigma}=\Phi^{-1}(\alpha_{j,\mu,\sigma})=\frac{s_{j}-\mu}{\sqrt{\sigma^{2}+1}}.

$$

$$

{\tilde{\alpha}}_ {j,\mu,\sigma}=\Phi^{-1}(\alpha_{j,\mu,\sigma})=\frac{s_{j}-\mu}{\sqrt{\sigma^{2}+1}}.

$$

Next, we consider the case where we have multiple models and two test sets with difficulty parameters $\mu_{k}$ and $\sigma_{k}$ respectively for $k\in{1,2}$ . Then $\tilde{\alpha}_ {j,2}$ , the probit-scaled accuracy on the second test set, is a linear function of the accuracy on the first test set, $\tilde{\alpha}_{j,1}$ :

接下来,我们考虑存在多个模型和两个测试集的情况,这两个测试集的难度参数分别为 $\mu_{k}$ 和 $\sigma_{k}$(其中 $k\in{1,2}$)。此时,第二个测试集上的概率单位尺度准确率 $\tilde{\alpha}_ {j,2}$ 是第一个测试集准确率 $\tilde{\alpha}_ {j,1}$ 的线性函数:

$$

\tilde{\alpha}_ {j,2}=u\cdot\tilde{\alpha}_{j,1}+v,

$$

$$

\tilde{\alpha}_ {j,2}=u\cdot\tilde{\alpha}_{j,1}+v,

$$

with

与

$$

u=\frac{\sqrt{\sigma_{1}^{2}+1}}{\sqrt{\sigma_{2}^{2}+1}}\mathrm{and}v=\frac{\mu_{1}-\mu_{2}}{\sqrt{\sigma_{2}^{2}+1}}.

$$

$$

u=\frac{\sqrt{\sigma_{1}^{2}+1}}{\sqrt{\sigma_{2}^{2}+1}}\mathrm{and}v=\frac{\mu_{1}-\mu_{2}}{\sqrt{\sigma_{2}^{2}+1}}.

$$

Hence, we see that the Gaussian difficulty model above yields a linear relationship between original and new test accuracy in the probit domain. While the Gaussian assumptions here made the calculations simple, a variety of different simple classes of $\zeta_{j}$ will give rise to the same linear relationship between the accuracies on two different test sets.

因此,我们看到上述高斯难度模型在概率域中产生了原始测试准确率与新测试准确率之间的线性关系。虽然这里的高斯假设使计算变得简单,但多种不同的简单类别的$\zeta_{j}$都会在两个不同测试集的准确率之间产生相同的线性关系。

6 Related Work

6 相关工作

We now briefly discuss related threads in machine learning. To the best of our knowledge, there are no reproducibility experiments directly comparable to ours in the literature.

我们现在简要讨论机器学习中的相关研究方向。据我们所知,目前文献中尚无与我们的实验直接可比的可复现性研究。

Dataset Biases. The computer vision community has a rich history of creating new datasets and discussing their relative merits, e.g., [10, 13, 15, 38, 45, 48, 54, 60]. The paper closest to ours is [54], which studies dataset biases by measuring how models trained on one dataset generalize to other datasets. The main difference to our work is that the authors test generalization across different datasets, where larger changes in the distribution (and hence larger drops in accuracy) are expected. In contrast, our experiments explicitly attempt to reproduce the original data distribution and demonstrate that even small variations arising in this process can lead to significant accuracy drops. Moreover, [54] do not test on previously unseen data, so their experiments cannot rule out adaptive over fitting.

数据集偏差。计算机视觉领域在创建新数据集和讨论其相对优劣方面有着丰富的历史,例如 [10, 13, 15, 38, 45, 48, 54, 60]。与我们的研究最接近的论文是 [54],该研究通过测量在一个数据集上训练的模型如何泛化到其他数据集来研究数据集偏差。与我们工作的主要区别在于,作者测试了不同数据集之间的泛化能力,这种情况下预期会有更大的分布变化(从而导致准确率显著下降)。相比之下,我们的实验明确尝试复现原始数据分布,并证明即使在此过程中产生的微小变化也可能导致准确率显著下降。此外,[54] 并未在未见过的数据上进行测试,因此其实验无法排除自适应过拟合的可能性。

Transfer Learning From ImageNet. Kornblith et al. [33] study how well accuracy on ImageNet transfers to other image classification datasets. An important difference from both our work and [54] is that the the ImageNet models are re-trained on the target datasets. The authors find that better ImageNet models usually perform better on the target dataset as well. Similar to [54], these experiments cannot rule out adaptive over fitting since the authors do not use new data. Moreover, the experiments do not measure accuracy drops due to small variations in the data generating process since the models are evaluated on a different task with an explicit adaptation step. Interestingly, the authors also find an approximately linear relationship between ImageNet and transfer accuracy.

基于ImageNet的迁移学习。Kornblith等人[33]研究了ImageNet上的准确率如何迁移到其他图像分类数据集。与我们的工作和[54]的一个重要区别在于,ImageNet模型会在目标数据集上重新训练。作者发现,更好的ImageNet模型通常在目标数据集上表现也更好。与[54]类似,这些实验无法排除自适应过拟合的可能性,因为作者没有使用新数据。此外,由于模型是在具有显式适应步骤的不同任务上进行评估,这些实验无法衡量数据生成过程中微小变化导致的准确率下降。有趣的是,作者还发现ImageNet准确率与迁移准确率之间存在近似线性关系。

Adversarial Examples. While adversarial examples [2, 50] also show that existing models are brittle, the perturbations have to be finely tuned since models are much more robust to random perturbations. In contrast, our results demonstrate that even small, benign variations in the data sampling process can already lead to a significant accuracy drop without an adversary.

对抗样本 (Adversarial Examples)。虽然对抗样本 [2, 50] 同样表明现有模型具有脆弱性,但由于模型对随机扰动具有更强的鲁棒性,这些扰动必须经过精细调整。相比之下,我们的结果表明,即使数据采样过程中存在微小且良性的变化,也足以在没有对抗者的情况下导致准确率显著下降。

A natural question is whether adversarial ly robust models are also more robust to the distribution shifts observed in our work. As a first data point, we tested the common $\ell_{\infty}$ -robustness baseline from [41] for CIFAR-10. Interestingly, the accuracy numbers of this model fall almost exactly on the linear fit given by the other models in our testbed. Hence $\ell_{\infty}$ -robustness does not seem to offer benefits for the distribution shift arising from our reproducibility experiment. However, we note that more forms of adversarial robustness such as spatial transformations or color space changes have been studied [12, 14, 24, 30, 57]. Testing these variants is an interesting direction for future work.

一个自然的问题是,对抗性鲁棒模型是否对我们工作中观察到的分布偏移也更具鲁棒性。作为第一个数据点,我们测试了[41]中针对CIFAR-10的常见$\ell_{\infty}$鲁棒性基线。有趣的是,该模型的准确率数值几乎完全落在我们测试平台中其他模型给出的线性拟合线上。因此,$\ell_{\infty}$鲁棒性似乎并未对我们的可复现性实验产生的分布偏移带来益处。不过我们注意到,已有研究探讨了更多形式的对抗鲁棒性(如空间变换或色彩空间变化)[12, 14, 24, 30, 57]。测试这些变体是未来工作的一个有趣方向。

Non-Adversarial Image Perturbations. Recent work also explores less adversarial changes to the input, e.g., [17, 23]. In these papers, the authors modify the ImageNet validation set via well-specified perturbations such as Gaussian noise, a fixed rotation, or adding a synthetic snow-like pattern. Standard ImageNet models then achieve significantly lower accuracy on the perturbed examples than on the unmodified validation set. While this is an interesting test of robustness, the mechanism underlying the accuracy drops is significantly different from our work. The aforementioned papers rely on an intentional, clearly-visible, and well-defined perturbation of existing validation images. Moreover, some of the interventions are quite different from the ImageNet validation set (e.g., ImageNet contains few images of falling snow). In contrast, our experiments use new images and match the distribution of the existing validation set as closely as possible. Hence it is unclear what properties of our new images cause the accuracy drops.

非对抗性图像扰动。近期研究也探索了对输入进行非对抗性修改的方法,例如 [17, 23]。这些论文通过明确定义的扰动(如高斯噪声、固定旋转或添加合成雪状图案)来修改ImageNet验证集。标准ImageNet模型在扰动样本上的准确率明显低于原始验证集。虽然这是对鲁棒性的有趣测试,但其准确率下降的机制与我们的工作存在本质差异:前述研究依赖于对现有验证图像进行人为设计、清晰可见且定义明确的扰动,且部分干预方式与ImageNet验证集差异显著(例如ImageNet极少包含落雪图像)。相比之下,我们的实验使用全新图像,并尽可能匹配原验证集的分布特性,因此难以确定新图像的哪些属性导致了准确率下降。

7 Conclusion & Future Work

7 结论与未来工作

The expansive growth of machine learning rests on the aspiration to deploy trained systems in a variety of challenging environments. Common examples include autonomous vehicles, content moderation, and medicine. In order to use machine learning in these areas responsibly, it is important that we can both train models with sufficient generalization abilities, and also reliably measure their performance. As our results show, these goals still pose significant hurdles even in a benign environment.

机器学习的高速发展依赖于将训练好的系统部署到各种具有挑战性的环境中的愿景。常见例子包括自动驾驶汽车、内容审核和医疗领域。为了负责任地在这些领域使用机器学习,我们既要训练出具备足够泛化能力的模型,也要能可靠地测量其性能。正如我们的研究结果所示,即使在良性环境中,这些目标仍存在重大障碍。

Our experiments are only one step in addressing this reliability challenge. There are multiple promising avenues for future work:

我们的实验只是应对这一可靠性挑战的第一步。未来工作存在多个有前景的方向:

Understanding Adaptive Over fitting. In contrast to conventional wisdom, our experiments show that there are no diminishing returns associated with test set re-use on CIFAR-10 and ImageNet. A more nuanced understanding of this phenomenon will require studying whether other machine learning problems are also resilient to adaptive over fitting. For instance, one direction would be to conduct similar reproducibility experiments on tasks in natural language processing, or to analyze data from competition platforms such as Kaggle and CodaLab.7

理解自适应过拟合 (Adaptive Over fitting)。与传统观点相反,我们的实验表明,在CIFAR-10和ImageNet数据集上重复使用测试集并不会导致收益递减。要更细致地理解这一现象,需要研究其他机器学习问题是否也对自适应过拟合具有抵抗力。例如,可以在自然语言处理任务中进行类似的复现实验,或分析来自Kaggle和CodaLab等竞赛平台的数据。

Characterizing the Distribution Gap. Why do the classification models in our testbed perform worse on the new test sets? The selection frequency experiments in Section 4 suggest that images selected less frequently by the MTurk workers are harder for the models. However, the selection frequency analysis does not explain what aspects of the images make them harder. Candidate hypotheses are object size, special filters applied to the images (black & white, sepia, etc.), or unusual object poses. Exploring whether there is a succinct description of the difference between the original and new test distributions is an interesting direction for future work.

表征分布差异。为何我们测试平台中的分类模型在新测试集上表现更差?第4节的选择频率实验表明,被MTurk工作者较少选择的图像对模型而言更难识别。但选择频率分析并未揭示图像中哪些具体特征导致了识别困难。可能的假设因素包括物体尺寸、图像应用的特殊滤镜(黑白、棕褐色调等)或异常物体姿态。探索能否用简洁描述来界定原始测试集与新测试集之间的分布差异,是未来研究的一个有趣方向。

Learning More Robust Models. An over arching goal is to make classification models more robust to small variations in the data. If the change from the original to our new test sets can be characterized accurately, techniques such as data augmentation or robust optimization may be able to close some of the accuracy gap. Otherwise, one possible approach could be to gather particularly hard examples from Flickr or other data sources and expand the training set this way. However, it may also be necessary to develop entirely novel approaches to image classification.

学习更鲁棒的模型。一个核心目标是使分类模型对数据中的微小变化更具鲁棒性。如果能准确描述从原始测试集到新测试集的变化特征,数据增强或鲁棒优化等技术或许能缩小部分准确率差距。另一种可行方案是从Flickr等数据源收集特别困难的样本,以此扩展训练集。然而,开发全新的图像分类方法可能同样必要。

Measuring Human Accuracy. One interesting question is whether our new test sets are also harder for humans. As a first step in this direction, our human accuracy experiment on CIFAR10 (see Appendix B.2.5) shows that average human performance is not affected significantly by the distribution shift between the original and new images that are most difficult for the models. This suggests that the images are only harder for the trained models and not for humans. But a more comprehensive understanding of the human baseline will require additional human accuracy experiments on both CIFAR-10 and ImageNet.

测量人类准确率。一个有趣的问题是,我们的新测试集对人类来说是否也更难。作为这一方向的第一步,我们在CIFAR-10上进行的人类准确率实验(见附录B.2.5)表明,人类平均表现并未因模型最难识别的原始图像与新图像之间的分布偏移而受到显著影响。这表明这些图像仅对训练后的模型更难,而非对人类。但要更全面地理解人类基线水平,还需要在CIFAR-10和ImageNet上进行更多人类准确率实验。

Building Further Test Sets. The dominant paradigm in machine learning is to evaluate the performance of a classification model on a single test set per benchmark. Our results suggest that this is not comprehensive enough to characterize the reliability of current models. To understand their generalization abilities more accurately, new test data from various sources may be needed. One intriguing question here is whether accuracy on other test sets will also follow a linear function of the original test accuracy.

构建更多测试集。机器学习领域的主流范式是在每个基准测试中使用单一测试集来评估分类模型的性能。我们的结果表明,这种做法不足以全面表征当前模型的可靠性。为了更准确地理解其泛化能力,可能需要来自不同来源的新测试数据。这里存在一个有趣的问题:其他测试集上的准确率是否也会遵循原始测试准确率的线性函数关系。

Suggestions For Future Datasets. We found that it is surprisingly difficult to create a new test set that matches the distribution of an existing dataset. Based on our experience with this process, we provide some suggestions for improving machine learning datasets in the future:

未来数据集的建议。我们发现创建一个与现有数据集分布匹配的新测试集出人意料地困难。基于这一过程的经验,我们为改进未来的机器学习数据集提供以下建议:

• Code release. It is hard to fully document the dataset creation process in a paper because it involves a long list of design choices. Hence it would be beneficial for reproducibility efforts if future dataset papers released not only the data but also all code used to create the datasets. • Annotator quality. Our results show that changes in the human annotation process can have significant impact on the difficulty of the resulting datasets. To better understand the quality of human annotations, it would be valuable if authors conducted a standardized test with their annotators (e.g., classifying a common set of images) and included the results in the description of the dataset. Moreover, building variants of the test set with different annotation processes could also shed light on the variability arising from this data cleaning step. • “Super hold-out”. Having access to data from the original CIFAR-10 and ImageNet data collection could have clarified the cause of the accuracy drops in our experiments. By keeping an additional test set hidden for multiple years, future benchmarks could explicitly test for adaptive over fitting after a certain time period. • Simpler tasks for humans. The large number of classes and fine distinctions between them make ImageNet a particularly hard problem for humans without special training. While classifying a large variety of objects with fine-grained distinctions is an important research goal, there are also trade-offs. Often it becomes necessary to rely on images with high annotator agreement to ensure correct labels, which in turn leads to bias by excluding harder images. Moreover, the large number of classes causes difficulties when characterizing human performance. So an alternative approach for a dataset could be to choose a task that is simpler for humans in terms of class structure (fewer classes, clear class boundaries), but contains a larger variety of object poses, lighting conditions, occlusions, image corruptions, etc. • Test sets with expert annotations. Compared to building a full training set, a test set requires a smaller number of human annotations. This makes it possible to employ a separate labeling process for the test set that relies on more costly expert annotations. While this violates the assumption that train and test splits are i.i.d. from the same distribution, the expert labels can also increase quality both in terms of correctness and example diversity.

• 代码发布。由于涉及大量设计选择,很难在论文中完整记录数据集创建过程。因此,未来数据集论文若能同时发布数据和全部创建代码,将极大提升可复现性。

• 标注者质量。我们的结果表明,人工标注流程的变化会显著影响最终数据集的难度。为更好评估标注质量,建议作者对标注者进行标准化测试(例如对同一组图像进行分类),并将结果写入数据集描述。此外,通过不同标注流程构建测试集变体,也能揭示数据清洗环节带来的差异性。

• "超级保留集"。若能获取原始CIFAR-10和ImageNet数据收集过程的信息,将有助于解释本实验中准确率下降的原因。通过长期隐藏额外测试集,未来基准测试可显式检测特定时间段后的自适应过拟合现象。

• 简化人类任务。ImageNet庞大的类别数量和精细分类对未经专业训练的人类极具挑战。虽然细粒度多物体分类是重要研究方向,但也需权衡取舍——通常必须依赖高标注一致性的图像来确保标签正确,这会导致排除困难样本而产生偏差。此外,过多类别也增加了人类表现评估难度。替代方案是选择类别结构更简单(更少类别、清晰类边界)但包含更丰富物体姿态、光照条件、遮挡、图像损坏等要素的任务。

• 专家标注测试集。相比完整训练集,测试集所需人工标注量更少,这使得采用成本更高的专家标注流程成为可能。虽然这违背了训练/测试集同分布的i.i.d.假设,但专家标注能同时提升标签正确性和样本多样性。

Finally, we emphasize that our recommendations here should not be seen as flaws in CIFAR-10 or ImageNet. Both datasets were assembled in the late 2000s for an accuracy regime that is very different from the state-of-the-art now. Over the past decade, especially ImageNet has successfully guided the field to increasingly better models, thereby clearly demonstrating the immense value of this dataset. But as models have increased in accuracy and our reliability expectations have grown accordingly, it is now time to revisit how we create and utilize datasets in machine learning.

最后,我们要强调,本文提出的建议不应被视为CIFAR-10或ImageNet的缺陷。这两个数据集均构建于2000年代末期,当时追求的准确率标准与现今最先进水平截然不同。过去十年间,尤其是ImageNet成功引领了领域内模型性能的持续提升,充分证明了该数据集的巨大价值。但随着模型准确率提高及我们对可靠性的期望相应增长,现在正是重新审视机器学习领域数据集创建与使用方式的时机。

Acknowledgements

致谢

We would like to thank Tudor Achim, Alex Berg, Orianna DeMasi, Jia Deng, Alexei Efros, David Fouhey, Moritz Hardt, Piotr Indyk, Esther Rolf, and Olga Russ a kov sky for helpful discussions while working on this paper. Moritz Hardt has been particularly helpful in all stages of this project and – among other invaluable advice – suggested the title of this paper and a precursor to the data model in Section 5.3. We also thank the participants of our human accuracy experiment in Appendix B.2.5 (whose names we keep anonymous following our IRB protocol).

我们要感谢Tudor Achim、Alex Berg、Orianna DeMasi、Jia Deng、Alexei Efros、David Fouhey、Moritz Hardt、Piotr Indyk、Esther Rolf和Olga Russakovsky在本文撰写过程中提供的宝贵讨论。Moritz Hardt在本项目的各个阶段都给予了特别帮助,除了其他无价的建议外,他还建议了本文的标题以及第5.3节中数据模型的雏形。我们还要感谢附录B.2.5中参与人类准确性实验的各位参与者(根据IRB协议,我们隐去了他们的姓名)。

This research was generously supported in part by ONR awards N00014-17-1-2191, N00014-17-1- 2401, and N00014-18-1-2833, the DARPA Assured Autonomy (FA8750-18-C-0101) and Lagrange (W911NF-16-1-0552) programs, an Amazon AWS AI Research Award, and a gift from Microsoft Research. In addition, LS was supported by a Google PhD fellowship and a Microsoft Research Fellowship at the Simons Institute for the Theory of Computing.

本研究得到了以下机构的慷慨资助:ONR奖项N00014-17-1-2191、N00014-17-1-2401和N00014-18-1-2833,DARPA的Assured Autonomy (FA8750-18-C-0101)和Lagrange (W911NF-16-1-0552)项目,亚马逊AWS AI研究奖,以及微软研究院的捐赠。此外,LS获得了Google博士生奖学金和微软研究院在Simons理论计算研究所的研究员资助。

References

参考文献

[63] Barret Zoph, Vijay Vasudevan, Jonathon Shlens, and Quoc V Le. Learning transferable architectures for scalable image recognition. In Conference on Computer Vision and Pattern Recognition (CVPR), 2018. https://arxiv.org/abs/1707.07012.

[63] Barret Zoph, Vijay Vasudevan, Jonathon Shlens, Quoc V Le. 学习可迁移架构以实现可扩展图像识别. 计算机视觉与模式识别会议 (CVPR), 2018. https://arxiv.org/abs/1707.07012.

A Overview of the Appendix

附录概述

Contents

目录

C Details of the ImageNet Experiments 40

C ImageNet实验细节 40

C.1 Dataset Creation Methodology 41

C.1 数据集创建方法 41

B Details of the CIFAR-10 Experiments

B CIFAR-10实验细节

We first present our reproducibility experiment for the CIFAR-10 image classification dataset [35]. There are multiple reasons why CIFAR-10 is an important example for measuring how well current models generalize to unseen data.

我们首先在CIFAR-10图像分类数据集[35]上进行了可复现性实验。选择CIFAR-10作为衡量当前模型对未见数据泛化能力的重要基准有以下多方面的原因。

Compared to ImageNet, CIFAR-10 is significantly smaller both in the number of images and in the size of each image. This makes it easier to conduct various follow-up experiments that require training new classification models. Moreover, the smaller size of CIFAR-10 also means that the dataset has been accessible to more researchers for a longer time. Hence it is plausible that CIFAR-10 experienced more test set adaptivity than ImageNet, where it is much more costly to tune hyper parameters.

与ImageNet相比,CIFAR-10在图像数量和单图尺寸上都显著更小。这使得开展需要训练新分类模型的各种后续实验更为便捷。此外,CIFAR-10的较小规模也意味着该数据集能被更多研究者长期使用。因此可以合理推测,CIFAR-10比ImageNet经历了更多测试集适应性调整,因为后者调整超参数的成本要高得多。

Before we describe how we created our new test set, we briefly review relevant background on CIFAR-10 and Tiny Images.

在介绍我们如何创建新测试集之前,我们先简要回顾CIFAR-10和Tiny Images的相关背景。

Tiny Images. The dataset contains 80 million RGB color images with resolution $32\times32$ pixels and was released in 2007 [55]. The images are organized by roughly 75,000 keywords that correspond to the non-abstract nouns from the WordNet database [43] Each keyword was entered into multiple Internet search engines to collect roughly 1,000 to 2,500 images per keyword. It is important to note that Tiny Images is a fairly noisy dataset. Many of the images filed under a certain keyword do not clearly (or not at all) correspond to the respective keyword.

Tiny Images。该数据集包含8000万张分辨率为$32\times32$像素的RGB彩色图像,于2007年发布[55]。这些图像按约75,000个关键词组织,这些关键词对应于WordNet数据库[43]中的非抽象名词。每个关键词被输入多个互联网搜索引擎,每个关键词收集约1000至2500张图像。值得注意的是,Tiny Images是一个噪声较多的数据集。许多归类在特定关键词下的图像并不明确(或完全不)对应于相应的关键词。

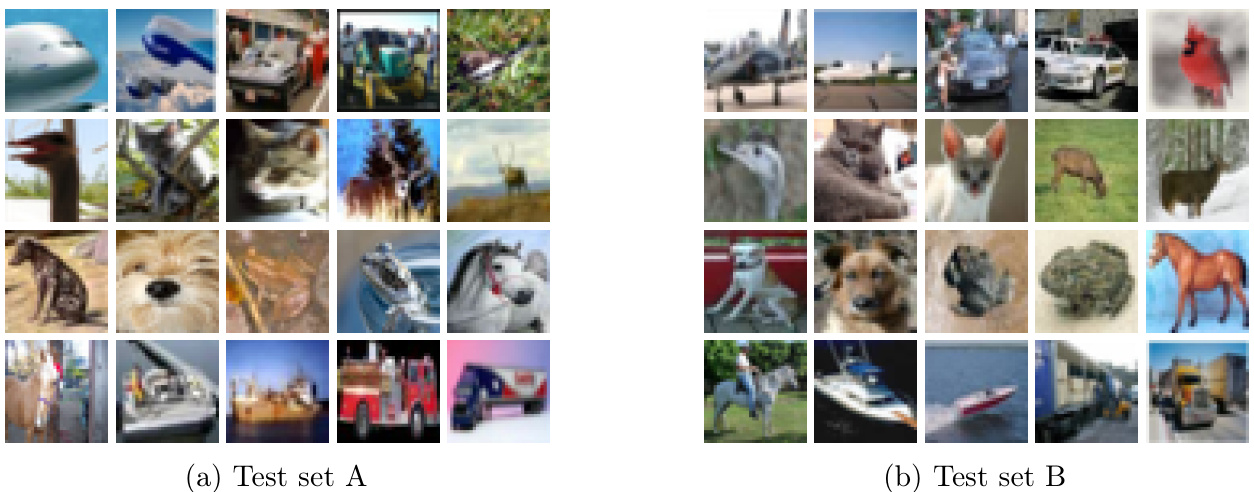

CIFAR-10. The CIFAR-10 dataset was created as a cleanly labeled subset of Tiny Images for experiments with multi-layer networks. To this end, the researchers assembled a dataset consisting of ten classes with 6,000 images per class, which was published in 2009 [35]. These classes are airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck. The standard train / test split is class-balanced and contains 50,000 training images and 10,000 test images.

CIFAR-10。CIFAR-10数据集是从Tiny Images中精选出的标注清晰的子集,用于多层网络实验。为此,研究人员构建了一个包含10个类别、每类6,000张图像的数据集,并于2009年发布[35]。这些类别包括飞机、汽车、鸟、猫、鹿、狗、青蛙、马、船和卡车。标准训练/测试集按类别均衡划分,包含50,000张训练图像和10,000张测试图像。

The CIFAR-10 creation process is well-documented [35]. First, the researchers assembled a set of relevant keywords for each class by using the hyponym relations in WordNet [43] (for instance, “Chihuahua” is a hyponym of “dog”). Since directly using the corresponding images from Tiny Images would not give a high quality dataset, the researchers paid student annotators to label the images from Tiny Images. The labeler instructions can be found in Appendix C of [35] and include a set of specific guidelines (e.g., an image should not contain two object of the corresponding class). The researchers then verified the labels of the images selected by the annotators and removed near-duplicates from the dataset via an $\ell_{2}$ nearest neighbor search.

CIFAR-10的创建过程有详细记录[35]。首先,研究人员利用WordNet[43]中的下位词关系为每个类别组装了一组相关关键词(例如"吉娃娃"是"狗"的下位词)。由于直接使用Tiny Images中的对应图像无法获得高质量数据集,研究人员付费让学生标注员对Tiny Images中的图像进行标注。标注指南可在[35]的附录C中找到,其中包含一系列具体规则(例如,图像不应包含两个对应类别的物体)。随后,研究人员核验了标注员选择的图像标签,并通过$\ell_{2}$最近邻搜索去除了数据集中的近似重复项。

B.1 Dataset Creation Methodology

B.1 数据集创建方法

Our overall goal was to create a new test set that is as close as possible to being drawn from the same distribution as the original CIFAR-10 dataset. One crucial aspect here is that the CIFAR-10 dataset did not exhaust any of the Tiny Image keywords it is drawn from. So by collecting new images from the same keywords as CIFAR-10, our new test set can match the sub-class distribution of the original dataset.

我们的总体目标是创建一个尽可能接近原始CIFAR-10数据集分布的新测试集。这里的一个关键方面是CIFAR-10数据集并未耗尽其所来源的Tiny Image关键词。因此,通过从与CIFAR-10相同的关键词中收集新图像,我们的新测试集可以匹配原始数据集的子类分布。

Understanding the Sub-Class Distribution. As the first step, we determined the Tiny Image keyword for every image in the CIFAR-10 dataset. A simple nearest-neighbor search sufficed since every image in CIFAR-10 had an exact duplicate ( $\ell_{2}$ -distance 0) in Tiny Images. Based on this information, we then assembled a list of the 25 most common keywords for each class. We decided on 25 keywords per class since the 250 total keywords make up more than $95%$ of CIFAR-10. Moreover, we wanted to avoid accidentally creating a harder dataset with infrequent keywords that the class if i ers had little incentive to learn based on the original CIFAR-10 dataset.

理解子类分布。作为第一步,我们为CIFAR-10数据集中的每张图像确定了Tiny Image关键词。由于CIFAR-10中的每张图像在Tiny Images中都有一个完全相同的副本($\ell_{2}$距离为0),因此简单的最近邻搜索就足够了。基于这些信息,我们为每个类别整理了25个最常见关键词的列表。我们选择每个类别25个关键词,是因为这250个关键词总共占CIFAR-10数据集的95%以上。此外,我们希望避免因使用不常见关键词而意外创建一个更难的数据集,这些关键词在原始CIFAR-10数据集中分类器学习的动机较弱。

The keyword distribution can be found in Appendix B.3.1. Inspecting this list reveals the importance of matching the sub-class distribution. For instance, the most common keyword in the airplane class is stealth bomber and not a more common civilian type of airplane. In addition, the third most common keyword for the airplane class is stealth fighter. Both types of planes are highly distinctive. There are more examples where certain sub-classes are considerably different. For instance, trucks from the keyword fire_truck are mostly red, which is quite different from pictures for dump_truck or other keywords.

关键词分布可在附录B.3.1中查看。观察该列表可发现匹配子类分布的重要性。例如,飞机类目中最常见的关键词是隐形轰炸机(stealth bomber)而非更常见的民用飞机类型。此外,飞机类目第三常见的关键词是隐形战斗机(stealth fighter)。这两类飞机都具有高度独特性。还有许多其他例子表明某些子类存在显著差异,例如关键词消防车(fire_truck)对应的卡车多为红色,这与自卸车(dump_truck)或其他关键词对应的图片截然不同。