Feature Fusion Transferability Aware Transformer for Unsupervised Domain Adaptation

Abstract

Unsupervised domain adaptation (UDA) aims to leverage the knowledge learned from labeled source domains to improve performance on unlabeled target domains. While Convolutional Neural Networks (CNNs) have dominated previous UDA methods, recent research has shown promise in applying Vision Transformers (ViTs) to this task. In this study, we propose a novel Feature Fusion Transferability Aware Transformer (FFTAT) to enhance ViT performance in UDA tasks. Our method introduces two key innovations. First, we introduce a patch discriminator to evaluate the transferability of patches, generating a transferability matrix. We integrate this matrix into self-attention, directing the model to focus on transferable patches. Second, we propose a feature fusion technique to fuse embeddings in the latent space, enabling each embedding to incorporate information from all others, thereby improving generalization. These two components work in synergy to enhance feature representation learning. Extensive experiments on widely used benchmarks demonstrate that our method significantly improves UDA performance, achieving state-of-the-art (SOTA) results.

1. Introduction

Deep neural networks (DNNs) have achieved remarkable breakthroughs across various application fields owing to their impressive automatic feature extraction capabilities. However, such success often relies on the availability of large labeled datasets, which can be challenging to acquire in many real-world scenarios due to the significant time and labor required. Fortunately, unsupervised domain adaptation (UDA) techniques [40] offer a promising solution by harnessing rich labeled data from a source domain and transferring knowledge to target domains with limited or no labeled examples. The essence of UDA lies in identifying discriminative and domain-invariant features shared between the source and target domains within a common latent space [44]. Over the past decade, as interest in domain adaptation research has grown, numerous UDA methods have emerged and evolved [20, 24, 50]. One example is adversarial adaptation, which discriminates between domain-invariant and domain-variant features and acquires domain-invariant feature representations through adversarial learning [24, 52]. In addition, deep unsupervised domain adaptation techniques usually employ a pre-trained Convolutional Neural Network (CNN) backbone [19].

Recently, the self-attention mechanism and vision transformers (ViTs) [7, 41, 48] have received growing interest in the vision community. Convolutional neural networks gather information from local receptive fields of the given image. ViTs, by contrast, leverage the self-attention mechanism to capture long-range dependencies among patch features through a global view. In ViT and many of its variants, each image is partitioned into a series of non-overlapping fixed-size patches. These patches are projected into a latent space as patch tokens and combined with position embeddings. A class token, representing the entire image, is prepended to the patch tokens. All tokens are then fed into a fixed number of transformer layers to learn visual representations of the input image. Leveraging the superior global content capture capability of the self-attention mechanism, ViTs have demonstrated impressive performance across various vision tasks, including image classification [7], video understanding [11], and object detection [1].

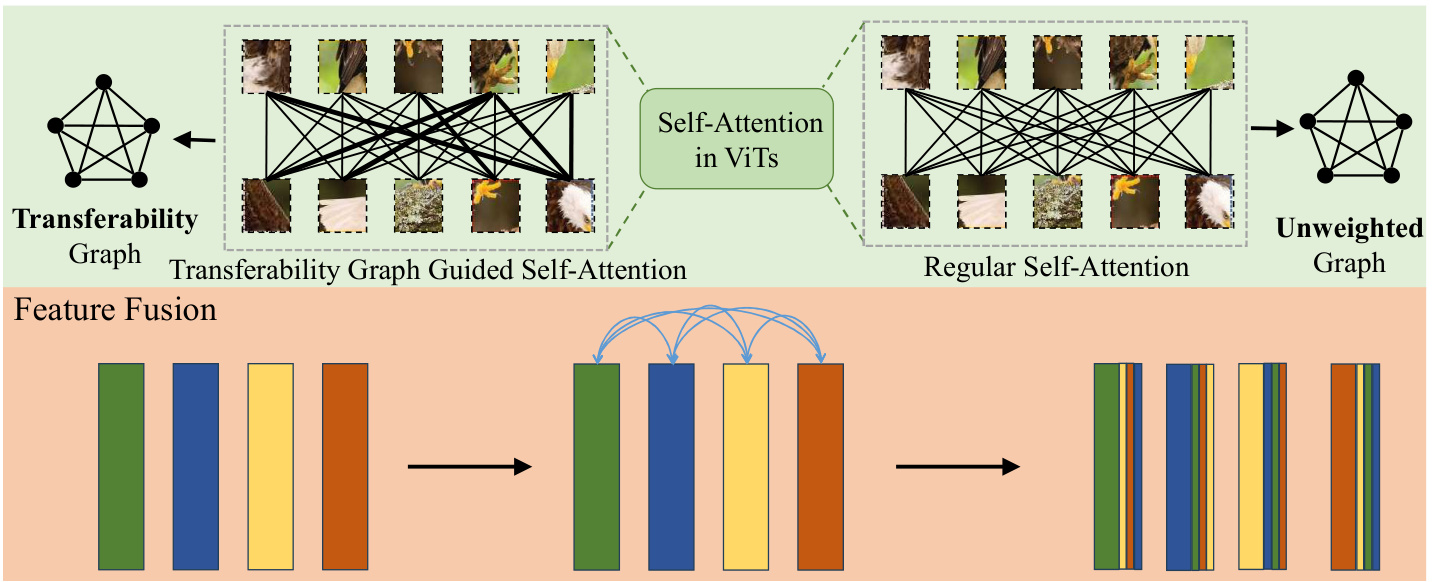

Despite increasing interest, only a few studies have explored the application of ViTs to unsupervised domain adaptation tasks [34, 43, 44, 50]. In this work, we introduce a novel Feature Fusion Transferability Aware Transformer (FFTAT) designed for unsupervised domain adaptation. FFTAT builds upon TVT [44], the first ViT-based UDA model, and introduces two key components:

(1) a transferability graph-guided self-attention (TG-SA) mechanism that enhances information from highly transferable features while suppressing information from less transferable features;

(2) a carefully designed feature fusion (FF) operation that makes each embedding incorporate information from other embeddings in the same batch.

Fig. 1 illustrates the transferability graph-guided self-attention and feature fusion.

Figure 1. Illustration of transferability graph-guided self-attention and feature fusion. The upper section (green) shows the transferability graph-guided self-attention and compares it with vanilla self-attention. The lower section (orange) illustrates the feature fusion mechanism, where the features of each sample are summed with the features of all other images in the same batch. Each bar represents the features of a sample.

From a graph view, vanilla self-attention among patches can be seen as an unweighted graph. The patches are the nodes, and the attention between two nodes is the edge connecting them. Our transferability graph-guided self-attention is instead controlled by a weighted graph. Large-weight edges emphasize information exchange between highly transferable patches, and small-weight edges attenuate information exchange between less transferable patches [47, 48]. The transferability graph is learned automatically and updated over training iterations in the transferability aware layer. In that layer, we design a patch discriminator to evaluate the transferability of each patch. TG-SA supports integrative information processing. It helps the model focus on domain-invariant features shared between domains and gather important information for domain adaptation. The feature fusion (FF) operation enables each embedding to integrate information from other embeddings. Unlike the recent UDA method PMTrans [53], which fuses at the image level, our feature fusion occurs in the latent space.

These two new components synergistically enhance robust feature representation learning and generalization in UDA tasks. Extensive experiments on widely used UDA benchmarks demonstrate that FFTAT significantly improves UDA performance, achieving new state-of-the-art results. In summary, our contributions are as follows:

• We introduce a novel transferability graph-guided attention mechanism in the ViT architecture for UDA. It improves performance by promoting attention between highly transferable features while suppressing attention between less transferable ones.
• We propose a feature fusion technique that enhances feature learning and generalization capabilities for UDA.
• Our proposed model, FFTAT, integrates transferability graph-guided attention and feature fusion mechanisms, resulting in notable advancements and state-of-the-art performance on widely used UDA benchmarks.

2. Related Work

2.1. Unsupervised Domain Adaptation

UDA aims to learn transferable knowledge across the source and target domains with different distributions [25,

UDA旨在从具有不同分布的源域和目标域中学习可迁移知识[25,

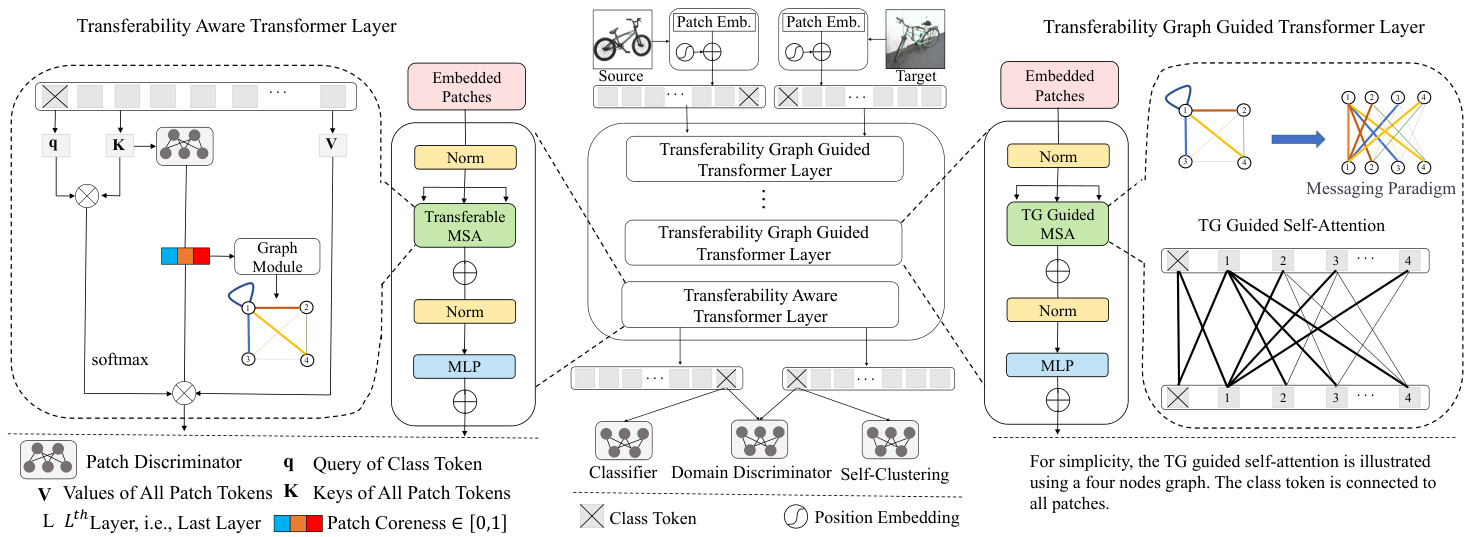

Figure 2. The overview of the FFTAT framework. In FFTAT, source and target images are divided into non-overlapping fixed-size patches which are linearly projected into the latent space and concatenated with positional information. A class token is prepended to the image tokens. The tokens are subsequently processed by a transformer encoder. The Feature Fusion Layer mixes the features as illustrated in Fig. 1. The patch disc rim in at or assesses the transfer ability of each patch and generates a transfer ability graph, which is used to guide the attention mechanism in the transformer layers. The classifier head and self-clustering module operate on source domain images and target domain images, respectively. The Domain Disc rim in at or predicts whether an image belongs to the source or target domain.

图 2: FFTAT框架概览。在FFTAT中,源图像和目标图像被划分为不重叠的固定大小图像块(patch),经线性投影至潜在空间并与位置信息拼接。图像token前会添加一个类别token。这些token随后由Transformer编码器处理。特征融合层如图1所示混合特征。图像块判别器评估每个图像块的迁移能力并生成迁移能力图,用于指导Transformer层中的注意力机制。分类器头部和自聚类模块分别处理源域图像和目标域图像。域判别器负责预测图像属于源域还是目标域。

39]. Various techniques for UDA have been proposed. For example, the discrepancy techniques measure the distribution divergence between source and target domains [24, 33, 35]. Adversarial adaptation discriminates domaininvariant and domain-specific representations by playing an adversarial game between the feature extractor and a domain disc rim in at or [9,44]. Metric learning aims to optimize new metrics that explicitly capture both the intra-class domain variation and the inter-class domain variation [16, 54]. While prevailing UDA methods focus on learning domaininvariant (transferable) features, another line of work emphasizes the importance of domain-specific features [31].

[39]。人们提出了多种无监督域适应(UDA)技术。例如,差异度量技术通过计算源域与目标域之间的分布差异来实现适应 [24, 33, 35]。对抗式适应通过在特征提取器与域判别器之间进行对抗博弈,区分域不变特征与域特定特征 [9,44]。度量学习则致力于优化新度量标准,显式捕捉类内域差异和类间域差异 [16, 54]。主流UDA方法主要关注学习域不变(可迁移)特征,而另一类研究则强调域特定特征的重要性 [31]。

2.2. Data Augmentation

Data augmentation has been an integral part of modern deep learning vision models. The general idea is to transform the given data without severely altering its semantics. Common data augmentation techniques include random flip and crop [17], MixUp [51], CutOut [6], AutoAugment [14], RandAugment [3], and so on. These techniques are generally employed in the image space. Recent works have also explored data augmentation in the embedding space and reported promising outcomes in several applications, such as supervised image classification [37, 46] and semi-supervised learning [15]. Our feature fusion can be viewed as data augmentation in the embedding space for unsupervised domain adaptation.

3. Method

3.1. Preliminaries

Let $D_s = \{(\boldsymbol{x}_i^s, y_i^s)\}_{i=1}^{n_s}$ represent data in the labeled source domain. Here $\boldsymbol{x}_i^s$ denotes the images, $y_i^s$ denotes the corresponding labels, and $n_s$ denotes the number of samples in the labeled source domain. Similarly, let $D_t = \{x_j^t\}_{j=1}^{n_t}$ represent data in the target domain, consisting of $n_t$ images but no labels. UDA algorithms aim to learn transferable knowledge that minimizes domain discrepancy, thereby achieving the desired prediction performance on unlabeled target data. A common approach is to devise an objective function that jointly learns feature embeddings and a classifier. This objective function is formulated as follows:

$$\mathcal{L} = L_{CE}(D_s) + \alpha\, L_{dis}(D_s, D_t)$$

where $L_{CE}$ is the standard cross-entropy loss supervised in the source domain and $L_{dis}$ denotes the divergence loss, whose implementation varies across algorithms. The hyperparameter $\alpha$ balances the weight of $L_{dis}$.

3.2. Overview of FFTAT

We aim to advance ViT-based solutions for unsupervised domain adaptation. Fig. 2 illustrates the overall framework of our proposed FFTAT. The framework consists of the following parts:

• a vision transformer backbone;
• a domain discriminator for the images, which uses the class tokens;
• a patch discriminator that assesses the transferability of the patches;
• a self-clustering module;
• a classifier head.

The vision transformer backbone is equipped with our proposed transferability graph-guided attention mechanism, which comprises the Transferability Graph Guided Transformer Layer and the Transferability Aware Transformer Layer in Fig. 2. It is also equipped with a feature fusion mechanism, implemented as the Feature Fusion Layer in Fig. 2.

During training, both the source and target images are used. Each image is divided into non-overlapping fixed-size patches, which are linearly projected into the latent space and concatenated with positional information. A class token is prepended to the patch tokens. All tokens are subsequently processed by the vision transformer backbone. From a graph view, we consider the patches as nodes and the attention between patches as edges. This lets us control the strength of self-attention among patches by adaptively learning a transferability graph during training. In the first iteration, we initialize the transferability graph as an unweighted graph. At the end of each iteration, the patch discriminator evaluates the transferability scores of the patches and updates the transferability graph. The learned transferability graph rescales the self-attention in the Transferability Aware Transformer Layer and the Transferability Graph Guided Transformer Layers. This amplifies information from highly transferable features and dampens less transferable ones.

The Feature Fusion Layer is placed before the Transferability Aware Transformer Layer to mix patch token embeddings for better generalization. The classifier head predicts class labels from the output class tokens of the labeled source domain images. The domain discriminator takes the output class token embeddings of both domains and predicts whether each image belongs to the source or target domain. The self-clustering module uses the class tokens of the target domain to encourage clustering of the learned target domain image representations. Details are introduced below.

3.3. Transferability Aware Transformer Layer

The patch tokens correspond to partial regions of the image and capture visual features as fine-grained local representations. Existing work [44, 45, 49] shows that patch tokens differ in semantic importance. In this work, we define a transferability score for each patch to assess its transferability (defined below). A patch with a higher transferability score is more likely to correspond to highly transferable features.

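To obtain the transferability scores of patch tokens, we adopt a patch-level domain discriminator $D_l$ to evaluate the local features with the loss function:

$$L_{pat} = -\frac{1}{nP}\sum_{x_i \in D}\sum_{p=1}^{P}\Big[y_{ip}^d \log D_l(f_{ip}) + \big(1 - y_{ip}^d\big)\log\big(1 - D_l(f_{ip})\big)\Big]$$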

where:

• $P$ is the number of patches;
• $D = D_s \cup D_t$;
• $G_f$ is the feature encoder, implemented as a ViT;
• $n = n_s + n_t$ is the total number of images in $D$;
• $ip$ denotes the $p$th patch of the $i$th image;
• $y_{ip}^d$ denotes the domain label of the $p$th token of the $i$th image, with $y_{ip}^d = 1$ for the source domain and $y_{ip}^d = 0$ for the target domain;
• $D_l(f_{ip})$ gives the probability that the patch belongs to the source domain, where $f_{ip} = G_f(x_{ip})$ denotes the features of the $p$th token of the $i$th image.

During training, $D_l$ tries to discriminate the patches correctly, assigning 1 to patches from the source domain and 0 to those from the target domain.
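As described, $D_l$ is trained to output 1 for source patches and 0 for target patches. That amounts to a binary cross-entropy averaged over all $nP$ patches. The plain-Python sketch below illustrates this under that reading only. It takes nested lists of discriminator outputs $D_l(f_{ip})$ and labels $y_{ip}^d$. The function name `patch_discriminator_loss` and the `eps` clamp are illustrative assumptions, not part of FFTAT.

```python
import math


def patch_discriminator_loss(probs, domain_labels, eps=1e-12):
    """Binary cross-entropy averaged over all n * P patches (illustrative sketch).

    probs[i][p]: D_l(f_ip), predicted probability that patch p of image i
        comes from the source domain.
    domain_labels[i][p]: y^d_ip, 1 for a source patch and 0 for a target patch.
    """
    total, count = 0.0, 0
    for img_probs, img_labels in zip(probs, domain_labels):
        for d, y in zip(img_probs, img_labels):
            d = min(max(d, eps), 1.0 - eps)  # clamp so log() never sees 0
            total -= y * math.log(d) + (1 - y) * math.log(1.0 - d)
            count += 1
    return total / count  # normalize by the number of patches, n * P


# A discriminator that outputs 0.5 everywhere cannot tell the domains apart.
print(patch_discriminator_loss([[0.5, 0.5], [0.5, 0.5]], [[1, 1], [0, 0]]))  # about 0.693, i.e. ln 2
```

The loss is largest in relative terms when the discriminator is confidently wrong. Per patch, it stays at $\ln 2$ when the output is 0.5, which is the case the transferability score below singles out.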

Empirically, patches that the patch discriminator cannot easily distinguish (i.e., $D_l$ outputs around 0.5) are more likely to correspond to highly transferable features. These features capture underlying patterns that remain consistent despite differences in data distribution between the source and target domains. In this paper, we use the term "transferability" for this property. We define the transferability score of a patch as:

$$c_{ip} = H\big(D_l(f_{ip})\big)$$

where $H(\cdot)$ is the standard (binary) entropy function, computed with base-2 logarithms so that its maximum value of 1 is reached when $D_l$ outputs 0.5. If the output of the patch discriminator $D_l$ is around 0.5, the transferability score is close to 1, indicating that the features in the patch are highly transferable. A low score indicates the opposite. Assessing the transferability of patches gives a finer-grained view of the image, separating it into highly transferable and less transferable patches. Features from highly transferable patches are then amplified, while features from less transferable patches are suppressed.
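The entropy-based score can be made concrete with a short sketch. It assumes $H$ is the binary entropy measured in bits. The base-2 logarithm is what maps a discriminator output of 0.5 to a score of exactly 1. The function name and the clamping constant are illustrative assumptions.

```python
import math


def transferability_score(d, eps=1e-12):
    """c_ip = H(D_l(f_ip)): binary entropy, in bits, of the discriminator output d.

    Base-2 logs bound the score to [0, 1], with the maximum of 1 at d = 0.5.
    """
    d = min(max(d, eps), 1.0 - eps)  # avoid log2(0) for saturated outputs
    return -(d * math.log2(d) + (1.0 - d) * math.log2(1.0 - d))


# The 0.5 row prints 1.0; the score shrinks as the output saturates toward 0 or 1.
for d in (0.5, 0.6, 0.9, 0.99):
    print(d, round(transferability_score(d), 3))
```

The score is symmetric in $d$ and $1 - d$. A patch the discriminator confidently assigns to either domain is treated as poorly transferable.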

Let $\mathcal{C}_i = \{c_{i1}, \ldots, c_{iP}\}$ be the transferability scores of the patches of image $i$. The adjacency matrix of the transferability graph can be formulated as:

where $B$ is the batch size, $\mathcal{H}$ is the number of heads, and $[\cdot]_{\times}$ means that no gradients are back-propagated through the adjacency matrix of the generated transferability graph. $M_{ts}$ controls the connection strength of attention between patches.
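The following is a hypothetical construction consistent with the description of $M_{ts}$, not the authors' formula. It takes the outer product of each image's scores and repeats it for every head to form a $B \times \mathcal{H} \times P \times P$ structure. Both the outer-product form and the function name `transferability_adjacency` are assumptions made for illustration.

```python
def transferability_adjacency(scores, num_heads):
    """Build a B x H x P x P adjacency structure (hypothetical outer-product form).

    scores[i] is [c_i1, ..., c_iP] for image i. The edge weight between patches
    p and q is assumed to be c_ip * c_iq: edges between two highly transferable
    patches stay strong, and edges touching a poorly transferable patch shrink.
    In an autograd framework the result would be detached, matching [.]_x.
    """
    adjacency = []
    for c in scores:
        per_image = [[cp * cq for cq in c] for cp in c]
        # Every head shares the same graph; copy rows so the heads stay independent.
        adjacency.append([[row[:] for row in per_image] for _ in range(num_heads)])
    return adjacency


M = transferability_adjacency([[1.0, 0.5]], num_heads=2)
print(M[0][0])  # [[1.0, 0.5], [0.5, 0.25]]
```

When every score equals 1, the result is all ones. That corresponds to the unweighted graph used in the first training iteration.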

Vanilla self-attention (SA) can then be reformulated as transferability aware self-attention (TSA) in the Transferability Aware Transformer Layer by integrating the transferability scores:

where:

• $q_{cls}$ is the query of the class token;
• $K$ represents the keys of all tokens, including the class token and the patch tokens;
• $K_{patch}$ denotes the keys of the patch tokens;
• $C_{K_{patch}}$ denotes the transferability scores of the patch tokens;
• $\odot$ is the element-wise product;
• $[;]$ is the concatenation operation.

TSA encourages the class token to take more information from patches with higher transferability scores while suppressing information from patches with low transferability scores. The transferability aware multi-head self-attention is therefore defined as:
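The single-head sketch below shows one reading of TSA. It assumes that the class token's softmax attention over all tokens is rescaled element-wise by $[1; C_{K_{patch}}]$ before being applied to the values, so the class token itself keeps a weight of 1. The exact placement of the rescaling, the function names, and the nested-list representation are assumptions rather than the authors' code.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def tsa_class_token(q_cls, keys, values, patch_scores):
    """Hypothetical single-head TSA output for the class token.

    keys/values: token 0 is the class token, tokens 1..P are patch tokens.
    patch_scores: transferability scores C_{K_patch} of the P patch tokens.
    """
    d = len(q_cls)
    logits = [sum(q * k for q, k in zip(q_cls, key)) / math.sqrt(d) for key in keys]
    weights = softmax(logits)
    scale = [1.0] + list(patch_scores)  # [1; C_{K_patch}]
    weights = [w * s for w, s in zip(weights, scale)]  # element-wise rescaling
    dim_v = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim_v)]


# With equal logits, a patch whose score is 0 contributes nothing to the class token.
out = tsa_class_token([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
                      [[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]], [1.0, 0.0])
print(out)  # approximately [1.0, 0.0]
```

In this reading the rescaled weights no longer sum to 1. Low-transferability patches are damped rather than having their attention mass redistributed to other tokens.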

where $head_i = \mathrm{TSA}\left(q_{cls}W_i^{q_{cls}}, KW_i^K, VW_i^V\right)$. Taken together, the operations in the Transferability Aware Transformer Layer can be formulated as:

where $z^{l-1}$ is the output of the previous layer. In this way, the Transferability Aware Transformer Layer focuses on fine-grained features that are highly transferable and discriminative for classification. Here $l = L$, where $L$ is the total number of transformer layers in the ViT architecture; that is, this layer is the last transformer layer.

3.4. Feature Fusion

Emerging evidence shows that adding perturbations enhances model robustness [15, 34, 46]. We want the generated transferability graphs to be more robust and the model to resist noisy perturbations. To that end, we incorporate a novel feature fusion technique into our FFTAT framework. It is implemented as a Feature Fusion Layer placed before the Transferability Aware Transformer Layer. Given an image $x_i$, let $b_i = \{b_{i1}, \ldots, b_{iP}\}$ denote the embeddings of its patches. As illustrated in Fig. 1, each embedding is perturbed by incorporating information from all the other embeddings. Following [46], we perform the embedding fusion for the source and target domains separately:

where $B$ is the batch size, and $s$ and $t$ indicate the source and target domains. FF helps generate more robust transferability graphs and improves model generalizability.
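Fig. 1 describes each sample's features being summed with those of the other samples in its batch, with source and target handled separately. The sketch below assumes the update $b_{ip} \leftarrow b_{ip} + \frac{1}{B}\sum_{j \neq i} b_{jp}$, so it does not reproduce the paper's exact fusion equation. The $1/B$ scaling, the patch-wise pairing of embeddings, and the function names are all assumptions.

```python
def feature_fusion(batch):
    """Hypothetical FF for one domain: b_ip <- b_ip + (1/B) * sum_{j != i} b_jp.

    batch: list of B samples; each sample is a list of P patch embeddings,
    and each embedding is a list of floats.
    """
    B = len(batch)
    fused = []
    for i, sample in enumerate(batch):
        new_sample = []
        for p, emb in enumerate(sample):
            others = [batch[j][p] for j in range(B) if j != i]  # same patch, other samples
            new_sample.append([x + sum(o[k] for o in others) / B for k, x in enumerate(emb)])
        fused.append(new_sample)
    return fused


def fuse_by_domain(source_batch, target_batch):
    # Source and target embeddings are fused separately and never mixed across domains.
    return feature_fusion(source_batch), feature_fusion(target_batch)


print(fuse_by_domain([[[1.0]], [[3.0]]], [[[2.0]]]))  # ([[[2.5]], [[3.5]]], [[[2.0]]])
```

Keeping the two domains apart means the perturbation never leaks target statistics into source embeddings, in line with the separate fusion described above.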

3.5. Transferability Graph Guided Transformer Layer

As introduced in Section 3.2, we consider the patches as nodes and the attention between patches as edges. Updating the graph lets the learned transferability information be integrated into the self-attention mechanism effectively and conveniently, as illustrated in the right part of Fig. 2. Guided by the transferability graph, the self-attention in the Transferability Graph Guided Transformer Layers focuses on patches with more transferable features. This steers the model to learn transferable knowledge across the source and target domains.

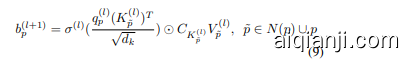

The transferability graph can be represented by $\mathcal{G} = (\mathcal{V}, \mathcal{E})$, with nodes $\mathcal{V} = \{\nu_1, \ldots, \nu_P\}$, edges $\mathcal{E} = \{(\nu_p, \nu_{\tilde{p}}) \mid \nu_p, \nu_{\tilde{p}} \in \mathcal{V}\}$, and adjacency matrix $M_{ts}$. The transferability graph-guided self-attention for a specific patch $p$ at the $l$-th layer in the Transferability Graph Guided Transformer Layer is:

where:

• $\sigma(\cdot)$ is the activation function, usually the softmax function in ViTs;
• $q_p^{(l)}$ is the query of the $p$th patch (the $p$th node in $\mathcal{G}$);
• $N(p)$ is the set of neighboring nodes of node $p$;
• $d_k$ is the dimension of the queries and keys, used as the scaling factor;
• $K_{\tilde{p}}^{(l)}$ and $V_{\tilde{p}}^{(l)}$ are the key and value of node $\tilde{p}$;
• $C_{K_{\tilde{p}}^{(l)}}$ denotes the transferability score of node $\tilde{p}$.

The transferability graph-guided self-attention conducted at the patch level can therefore be formulated as:

where the queries, keys, and values of all patches are packed into matrices $Q$, $K$, and $V$, respectively, and $M_{ts}$ is the adjacency matrix defined in Eq. (4). The transferability graph-guided multi-head attention is then formulated as:
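The following is a single-head, single-image sketch of the matrix form. It assumes the adjacency weights rescale the softmax attention element-wise, i.e. $(\sigma(QK^\top/\sqrt{d_k}) \odot M_{ts})V$. That placement of $M_{ts}$ relative to the softmax is an assumption, as are the function names. With $M_{ts}$ set to all ones, which is the unweighted graph of the first iteration, the sketch reduces to vanilla self-attention. A zero entry effectively removes patch $\tilde{p}$ from $N(p)$.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def tg_self_attention(Q, K, V, M):
    """Hypothetical single-head TG-SA: (softmax(Q K^T / sqrt(d_k)) * M_ts) V.

    Q, K, V: lists of P row vectors, one per patch; M: P x P edge weights.
    """
    d_k = len(K[0])
    out = []
    for p, q in enumerate(Q):
        logits = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = [w * m for w, m in zip(softmax(logits), M[p])]  # weight of edge p -> p~
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


Q = K = [[0.0], [0.0]]
V = [[2.0], [4.0]]
print(tg_self_attention(Q, K, V, [[1.0, 1.0], [1.0, 1.0]]))  # [[3.0], [3.0]], same as vanilla SA
print(tg_self_attention(Q, K, V, [[1.0, 0.0], [0.0, 1.0]]))  # [[1.0], [2.0]]
```

In a multi-head setting, the same $M_{ts}$ slice would be applied inside every head before the outputs are concatenated and projected by $W^O$.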

where $head_i = \mathrm{TG\text{-}SA}(QW_i^Q, KW_i^K, VW_i^V, M_{ts})$. The learnable parameter matrices $W_i^Q$, $W_i^K$, $W_i^V$, and $W^O$ are the projections. Multi-head attention helps the model jointly aggregate information from different representation subspaces at various positions. In this work, we apply the transferability guidance to each representation subspace.

3.6. Overall Objective Function

Our proposed FFTAT has a classifier head, a self-clustering module, a patch discriminator, and a domain discriminator, so the overall objective function has four terms. The classification loss is formulated as:

$$L_{cls} = \frac{1}{n_s}\sum_{x_i^s \in D_s} L_{CE}\big(G_c(G_f(x_i^s)), y_i^s\big)$$

where $G_c$ is the classifier head and $G_f$ is the feature extractor. In our work, $G_f$ is the ViT with transferability graph-guided self-attention and feature fusion.

The domain discriminator takes the class tokens of images from the source and target domains and tries to discriminate

Table 1. Comparison with SOTA methods on the Office-Home dataset. The best performance is marked in bold. The methods above the horizontal line are CNN-based methods, while the methods below the horizontal line are ViT-based methods.

| Method | Ar→Cl | Ar→Pr | Ar→Re | Cl→Ar | Cl→Pr | Cl→Re | Pr→Ar | Pr→Cl | Pr→Re | Re→Ar | Re→Cl | Re→Pr | Avg. |
|------|-------|-------|-------|-------|-------|-------|------