Big Bird: Transformers for Longer Sequences

Big Bird: 更长序列的Transformer

Abstract

摘要

Transformers-based models, such as BERT, have been one of the most successful deep learning models for NLP. Unfortunately, one of their core limitations is the quadratic dependency (mainly in terms of memory) on the sequence length due to their full attention mechanism. To remedy this, we propose, BIGBIRD, a sparse attention mechanism that reduces this quadratic dependency to linear. We show that BIGBIRD is a universal approx im at or of sequence functions and is Turing complete, thereby preserving these properties of the quadratic, full attention model. Along the way, our theoretical analysis reveals some of the benefits of having $O(1)$ global tokens (such as CLS), that attend to the entire sequence as part of the sparse attention mechanism. The proposed sparse attention can handle sequences of length up to 8x of what was previously possible using similar hardware. As a consequence of the capability to handle longer context, BIGBIRD drastically improves performance on various NLP tasks such as question answering and sum mari z ation. We also propose novel applications to genomics data.

基于Transformer的模型(如BERT)已成为自然语言处理(NLP)领域最成功的深度学习模型之一。然而,由于其全注意力机制,这类模型存在一个核心限制:对序列长度的二次方依赖(主要体现在内存消耗上)。为解决这一问题,我们提出了BIGBIRD——一种将二次方依赖降为线性关系的稀疏注意力机制。我们证明BIGBIRD是序列函数的通用逼近器,具备图灵完备性,从而保留了全注意力模型的这些特性。理论分析揭示了稀疏注意力机制中设置$O(1)$全局token(如CLS)的优势,这些token能够关注整个序列。该稀疏注意力机制可处理的序列长度达到原有硬件条件下最大长度的8倍。得益于处理长上下文的能力,BIGBIRD在问答和摘要等NLP任务上显著提升了性能。我们还提出了其在基因组数据中的创新应用。

1 Introduction

1 引言

Models based on Transformers [91], such as BERT [22, 63], are wildly successful for a wide variety of Natural Language Processing (NLP) tasks and consequently are mainstay of modern NLP research. Their versatility and robustness are the primary drivers behind the wide-scale adoption of Transformers. The model is easily adapted for a diverse range of sequence based tasks – as a seq2seq model for translation [91], sum mari z ation [66], generation [15], etc. or as a standalone encoders for sentiment analysis [83], POS tagging [65], machine reading comprehension [93], etc. – and it is known to vastly outperform previous sequence models like LSTM [37]. The key innovation in Transformers is the introduction of a self-attention mechanism, which can be evaluated in parallel for each token of the input sequence, eliminating the sequential dependency in recurrent neural networks, like LSTM. This parallelism enables Transformers to leverage the full power of modern SIMD hardware accelerators like GPUs/TPUs, thereby facilitating training of NLP models on datasets of unprecedented size. This ability to train on large scale data has led to surfacing of models like BERT [22] and T5 [75], which pretrain transformers on large general purpose corpora and transfer the knowledge to down-stream task. The pre training has led to significant improvement in low data regime downstream tasks [51] as well as tasks with sufficient data [101] and thus have been a major force behind the ubiquity of transformers in contemporary NLP.

基于Transformer [91]的模型,如BERT [22, 63],在各种自然语言处理(NLP)任务中取得了巨大成功,因此成为现代NLP研究的主流。其多功能性和鲁棒性是Transformer被广泛采用的主要原因。该模型能轻松适配多种基于序列的任务——作为seq2seq模型用于翻译[91]、摘要生成[66]、文本生成[15]等任务,或作为独立编码器用于情感分析[83]、词性标注[65]、机器阅读理解[93]等任务——且已知其性能远超LSTM [37]等传统序列模型。Transformer的核心创新是自注意力(self-attention)机制,该机制可并行计算输入序列中每个token的关联权重,消除了LSTM等循环神经网络的时序依赖性。这种并行性使Transformer能充分利用GPU/TPU等现代SIMD硬件加速器,从而支持前所未有的大规模NLP模型训练。这种大规模训练能力催生了BERT [22]和T5 [75]等模型,它们先在通用语料库上预训练Transformer,再将知识迁移至下游任务。预训练技术显著提升了小数据量下游任务[51]和充足数据任务[101]的性能,成为当前NLP领域普遍采用Transformer的重要推动力。

The self-attention mechanism overcomes constraints of RNNs (namely the sequential nature of RNN) by allowing each token in the input sequence to attend independently to every other token in the sequence. This design choice has several interesting repercussions. In particular, the full self-attention have computational and memory requirement that is quadratic in the sequence length. We note that while the corpus can be large, the sequence length, which provides the context in many applications is very limited. Using commonly available current hardware and model sizes, this requirement translates to roughly being able to handle input sequences of length 512 tokens. This reduces its direct applicability to tasks that require larger context, like QA [60], document classification, etc.

自注意力机制通过允许输入序列中的每个token独立关注序列中的其他所有token,克服了RNN的局限性(即RNN的顺序性)。这一设计选择带来了一些有趣的影响。特别是,完整的自注意力在计算和内存需求上与序列长度呈平方关系。我们注意到,虽然语料库可能很大,但在许多应用中提供上下文的序列长度非常有限。使用当前常见的硬件和模型规模,这一需求大致转化为能够处理512个token长度的输入序列。这限制了其直接适用于需要更大上下文的任务,如问答[60]、文档分类等。

However, while we know that self-attention and Transformers are useful, our theoretical understanding is rudimentary. What aspects of the self-attention model are necessary for its performance? What can we say about the expressivity of Transformers and similar models? Apriori, it was not even clear from the design if the proposed self-attention mechanism was as effective as RNNs. For example, the self-attention does not even obey sequence order as it is permutation e qui variant. This concern has been partially resolved, as Yun et al. [104] showed that transformers are expressive enough to capture all continuous sequence to sequence functions with a compact domain. Meanwhile, Pérez et al. [72] showed that the full transformer is Turing Complete (i.e. can simulate a full Turing machine). Two natural questions arise: Can we achieve the empirical benefits of a fully quadratic self-attention scheme using fewer inner-products? Do these sparse attention mechanisms preserve the expressivity and flexibility of the original network?

然而,尽管我们知道自注意力机制和Transformer模型很有用,但对其理论理解仍处于初级阶段。自注意力模型的哪些方面对其性能至关重要?关于Transformer及其类似模型的表现力,我们能得出什么结论?从设计上看,最初甚至无法确定所提出的自注意力机制是否与循环神经网络(RNN)同样有效。例如,自注意力机制并不遵循序列顺序,因为它具有排列等变性。这一担忧已得到部分解决,因为Yun等人[104]证明Transformer具有足够强的表现力,能够捕捉定义在紧致域上的所有连续序列到序列函数。同时,Pérez等人[72]表明完整版的Transformer具备图灵完备性(即能模拟完整的图灵机)。由此衍生出两个自然问题:能否通过更少的内积运算实现完全二次自注意力方案的经验优势?这些稀疏注意力机制是否能保持原始网络的表现力和灵活性?

In this paper, we address both the above questions and produce a sparse attention mechanism that improves performance on a multitude of tasks that require long contexts. We systematically develop BIGBIRD, an attention mechanism whose complexity is linear in the number of tokens (Sec. 2). We take inspiration from graph spars if i cation methods and understand where the proof for expressiveness of Transformers breaks down when full-attention is relaxed to form the proposed attention pattern. This understanding helped us develop BIGBIRD, which is theoretically as expressive and also empirically useful. In particular, our BIGBIRD consists of three main part:

在本文中,我们同时解决了上述两个问题,并提出了一种稀疏注意力机制,该机制在需要长上下文的多项任务中提升了性能。我们系统性地开发了BIGBIRD,这是一种注意力机制,其复杂度与token数量呈线性关系(见第2节)。我们从图稀疏化方法中汲取灵感,并理解了当将全注意力放宽以形成所提出的注意力模式时,Transformer表达能力的证明在何处失效。这一理解帮助我们开发了BIGBIRD,该机制在理论上具有同等表达能力,并在实践中也表现出实用性。具体而言,我们的BIGBIRD包含三个主要部分:

This leads to a high performing attention mechanism scaling to much longer sequence lengths (8x).

这使得注意力机制的性能得到提升,能够扩展到更长的序列长度(8倍)。

To summarize, our main contributions are:

总结来说,我们的主要贡献包括:

1.1 Related Work

1.1 相关工作

There have been a number of interesting attempts, that were aimed at alleviating the quadratic dependency of Transformers, which can broadly categorized into two directions. First line of work embraces the length limitation and develops method around it. Simplest methods in this category just employ sliding window [93], but in general most work fits in the following general paradigm: using some other mechanism select a smaller subset of relevant contexts to feed in the transformer and optionally iterate, i.e. call transformer block multiple time with different contexts each time. Most prominently, SpanBERT [42], ORQA [54], REALM [34], RAG [57] have achieved strong performance for different tasks. However, it is worth noting that these methods often require significant engineering efforts (like back prop through large scale nearest neighbor search) and are hard to train.

为缓解Transformer的二次方依赖问题,已有许多有趣的尝试,这些方法可大致分为两个方向。第一种思路接受长度限制并围绕其开发方法。此类中最简单的方法仅采用滑动窗口[93],但大多数工作遵循以下通用范式:通过其他机制选择较小的相关上下文子集输入Transformer,并可选择迭代(即每次用不同上下文多次调用Transformer模块)。其中最突出的SpanBERT[42]、ORQA[54]、REALM[34]和RAG[57]在不同任务中取得了强劲性能。但值得注意的是,这些方法通常需要大量工程努力(如通过大规模最近邻搜索进行反向传播)且训练难度较高。

Second line of work questions if full attention is essential and have tried to come up with approaches that do not require full attention, thereby reducing the memory and computation requirements. Prominently, Dai et al. [21], Sukhbaatar et al. [82], Rae et al. [74] have proposed auto-regresive models that work well for left-to-right language modeling but suffer in tasks which require bidirectional context. Child et al. [16] proposed a sparse model that reduces the complexity to $O(n{\sqrt{n}})$ , Kitaev et al. [49] further reduced the complexity to $O(n\log(n))$ by using LSH to compute nearest neighbors.

第二条研究方向质疑全注意力机制的必要性,并尝试提出无需全注意力的方法以降低内存和计算需求。其中,Dai等人[21]、Sukhbaatar等人[82]、Rae等人[74]提出的自回归模型在从左到右的语言建模中表现良好,但在需要双向上下文的任务中存在局限。Child等人[16]提出稀疏模型将复杂度降至$O(n{\sqrt{n}})$,Kitaev等人[49]则通过局部敏感哈希(LSH)计算最近邻将复杂度进一步降至$O(n\log(n))$。

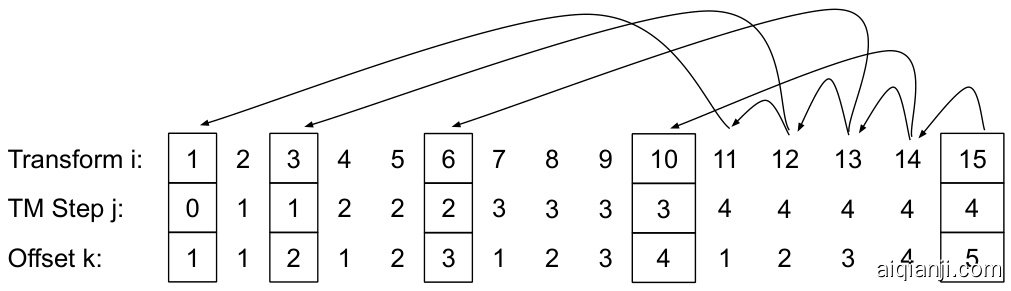

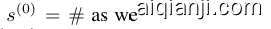

Figure 1: Building blocks of the attention mechanism used in BIGBIRD. White color indicates absence of attention. (a) random attention with $r=2$ , (b) sliding window attention with $w=3$ (c) global attention with $g=2$ . (d) the combined BIGBIRD model.

图 1: BIGBIRD中使用的注意力机制构建模块。白色表示无注意力。(a) 随机注意力(r=2),(b) 滑动窗口注意力(w=3),(c) 全局注意力(g=2),(d) 组合后的BIGBIRD模型。

Ye et al. [103] proposed binary partitions of the data where as Qiu et al. [73] reduced complexity by using block sparsity. Recently, Longformer [8] introduced a localized sliding window based mask with few global mask to reduce computation and extended BERT to longer sequence based tasks. Finally, our work is closely related to and built on the work of Extended Transformers Construction [4]. This work was designed to encode structure in text for transformers. The idea of global tokens was used extensively by them to achieve their goals. Our theoretical work can be seen as providing a justification for the success of these models as well. It is important to note that most of the aforementioned methods are heuristic based and empirically are not as versatile and robust as the original transformer, i.e. the same architecture do not attain SoTA on multiple standard benchmarks. (There is one exception of Longformer which we include in all our comparisons, see App. E.3 for a more detailed comparison). Moreover, these approximations do not come with theoretical guarantees.

Ye等人[103]提出了数据的二元划分方法,而Qiu等人[73]则通过使用块稀疏性来降低复杂度。最近,Longformer[8]引入了一种基于局部滑动窗口的掩码机制,辅以少量全局掩码,以减少计算量,并将BERT扩展到更长序列的任务中。最后,我们的工作与Extended Transformers Construction[4]密切相关,并在此基础上构建。该工作旨在为Transformer编码文本结构,他们广泛使用了全局token的概念来实现目标。我们的理论研究也可以视为对这些模型成功的一种理论支撑。值得注意的是,上述大多数方法都是基于启发式的,经验上不如原始Transformer那样通用和鲁棒,即同一架构无法在多个标准基准测试中达到最优水平(Longformer是个例外,我们将其纳入所有比较中,详见附录E.3的详细对比)。此外,这些近似方法缺乏理论保证。

2 BIGBIRD Architecture

2 BIGBIRD 架构

In this section, we describe the BIGBIRD model using the generalised attention mechanism that is used in each layer of transformer operating on an input sequence ${\pmb X}=({\pmb x}_ {1},...,{\pmb x}_{n})\in\mathbb{R}^{n\times d}$ The generalized attention mechanism is described by a directed graph $D$ whose vertex set is $[n]=$ ${1,\ldots,n}$ . The set of arcs (directed edges) represent the set of inner products that the attention mechanism will consider. Let $N(i)$ denote the out-neighbors set of node $i$ in $D$ , then the $i^{\mathrm{th}}$ output vector of the generalized attention mechanism is defined as

在本节中,我们使用广义注意力机制来描述BIGBIRD模型,该机制作用于输入序列 ${\pmb X}=({\pmb x}_ {1},...,{\pmb x}_{n})\in\mathbb{R}^{n\times d}$ 的每一层Transformer。广义注意力机制通过一个有向图 $D$ 来描述,其顶点集为 $[n]=$ ${1,\ldots,n}$。弧集(有向边)表示注意力机制将考虑的内积集合。设 $N(i)$ 表示节点 $i$ 在 $D$ 中的出邻居集,则广义注意力机制的第 $i^{\mathrm{th}}$ 个输出向量定义为

$$

\operatorname{ArfN}_ {D}(X)_ {i}={\pmb x}_ {i}+\sum_{h=1}^{H}\sigma\left(Q_{h}({\pmb x}_ {i})K_{h}({\pmb X}_ {N(i)})^{T}\right)\cdot V_{h}({\pmb X}_{N(i)})

$$

$$

\operatorname{ArfN}_ {D}(X)_ {i}={\pmb x}_ {i}+\sum_{h=1}^{H}\sigma\left(Q_{h}({\pmb x}_ {i})K_{h}({\pmb X}_ {N(i)})^{T}\right)\cdot V_{h}({\pmb X}_{N(i)})

$$

where $Q_{h},K_{h}:\mathbb{R}^{d}\rightarrow\mathbb{R}^{m}$ are query and key functions respectively, $V_{h}:\mathbb{R}^{d}\rightarrow\mathbb{R}^{d}$ is a value function, $\sigma$ is a scoring function (e.g. softmax or hardmax) and $H$ denotes the number of heads. Also note $X_{N(i)}$ corresponds to the matrix formed by only stacking ${\pmb{x}_{j}:j\in N(i)}$ and not all the inputs. If $D$ is the complete digraph, we recover the full quadratic attention mechanism of Vaswani et al. [91]. To simplify our exposition, we will operate on the adjacency matrix $A$ of the graph $D$ even though the underlying graph maybe sparse. To elaborate, $\dot{A}\in[0,\dot{1}]^{n\times n}$ with $A(i,j)=1$ if query $i$ attends to key $j$ and is zero otherwise. For example, when $A$ is the ones matrix (as in BERT), it leads to quadratic complexity, since all tokens attend on every other token. This view of self-attention as a fully connected graph allows us to exploit existing graph theory to help reduce its complexity. The problem of reducing the quadratic complexity of self-attention can now be seen as a graph spars if i cation problem. It is well-known that random graphs are expanders and can approximate complete graphs in a number of different contexts including in their spectral properties [80, 38]. We believe sparse random graph for attention mechanism should have two desiderata: small average path length between nodes and a notion of locality, each of which we discuss below.

其中 $Q_{h},K_{h}:\mathbb{R}^{d}\rightarrow\mathbb{R}^{m}$ 分别是查询 (query) 和键 (key) 函数,$V_{h}:\mathbb{R}^{d}\rightarrow\mathbb{R}^{d}$ 是值 (value) 函数,$\sigma$ 是评分函数(如 softmax 或 hardmax),$H$ 表示头数。注意 $X_{N(i)}$ 对应仅由 ${\pmb{x}_{j}:j\in N(i)}$ 堆叠而成的矩阵,而非全部输入。若 $D$ 为完整有向图,则恢复 Vaswani 等人 [91] 的完全二次注意力机制。为简化表述,我们将基于图 $D$ 的邻接矩阵 $A$ 进行操作(即使底层图可能是稀疏的)。具体而言,$\dot{A}\in[0,\dot{1}]^{n\times n}$ 满足 $A(i,j)=1$(当查询 $i$ 关注键 $j$ 时)否则为零。例如,当 $A$ 为全1矩阵(如 BERT)时,由于所有 token 都相互关注,会导致二次复杂度。将自注意力视为全连接图的视角,使我们能利用现有图论来降低其复杂度。降低自注意力二次复杂度的问题可视为图稀疏化问题。众所周知,随机图具有扩展性,能在包括谱特性在内的多种情境中近似完全图 [80, 38]。我们认为注意力机制的稀疏随机图应具备两个特性:节点间较短的平均路径长度和局部性概念,下文将分别讨论。

Let us consider the simplest random graph construction, known as Erd6s-Rényi model, where each edge is independently chosen with a fixed probability. In such a random graph with just $\tilde{\Theta}(n)$ edges, the shortest path between any two nodes is logarithmic in the number of nodes [17, 43]. As a consequence, such a random graph approximates the complete graph spectrally and its second eigenvalue (of the adjacency matrix) is quite far from the first eigenvalue [9, 10, 6]. This property leads to a rapid mixing time for random walks in the grpah, which informally suggests that information can flow fast between any pair of nodes. Thus, we propose a sparse attention where each query attends over $r$ random number of keys i.e. $A(i,\cdot)=1$ for $r$ randomly chosen keys (see Fig. 1a).

让我们考虑最简单的随机图构建方法,即Erd6s-Rényi模型,其中每条边都以固定概率独立选择。在这种仅有$\tilde{\Theta}(n)$条边的随机图中,任意两个节点之间的最短路径与节点数量呈对数关系[17, 43]。因此,这种随机图在谱意义上近似于完全图,其邻接矩阵的第二特征值与第一特征值相差较大[9, 10, 6]。这一特性使得图中随机游走的混合时间很短,非正式地说,这表明信息可以在任意节点对之间快速流动。因此,我们提出了一种稀疏注意力机制,其中每个查询关注$r$个随机数量的键,即对于随机选择的$r$个键,$A(i,\cdot)=1$(见图1a)。

The second viewpoint which inspired the creation of BIGBIRD is that most contexts within NLP and computational biology have data which displays a great deal of locality of reference. In this phenomenon, a great deal of information about a token can be derived from its neighboring tokens. Most pertinent ly, Clark et al. [19] investigated self-attention models in NLP tasks and concluded that that neighboring inner-products are extremely important. The concept of locality, proximity of tokens in linguistic structure, also forms the basis of various linguistic theories such as transformation algenerative grammar. In the terminology of graph theory, clustering coefficient is a measure of locality of connectivity, and is high when the graph contains many cliques or near-cliques (subgraphs that are almost fully interconnected). Simple Erdos-Renyi random graphs do not have a high clustering coefficient [84], but a class of random graphs, known as small world graphs, exhibit high clustering coefficient [94]. A particular model introduced by Watts and Strogatz [94] is of high relevance to us as it achieves a good balance between average shortest path and the notion of locality. The generative process of their model is as follows: Construct a regular ring lattice, a graph with $n$ nodes each connected to $w$ neighbors, $w/2$ on each side.

激发BIGBIRD创建的第二个观点是:自然语言处理(NLP)和计算生物学领域中的大多数上下文数据都表现出极强的引用局部性。这种现象下,一个token的大量信息可以从其相邻token中推导得出。最具相关性的是,Clark等人[19]研究了NLP任务中的自注意力(self-attention)模型,发现相邻内积运算极其重要。这种语言结构中token邻近的局部性概念,也构成了转换生成语法(transformational-generative grammar)等多种语言学理论的基础。在图论术语中,聚类系数是连接局部性的度量指标,当图中存在大量团或近团结构(几乎完全互联的子图)时,该系数会保持较高值。简单的Erdos-Renyi随机图不具备高聚类系数[84],但被称为小世界网络(small world graphs)的一类随机图则表现出高聚类特性[94]。Watts和Strogatz提出的特定模型[94]与我们高度相关,因为它在平均最短路径和局部性概念之间实现了良好平衡。该模型的生成过程如下:构建一个规则环状网格,即包含$n$个节点的图,每个节点连接$w$个邻居(每侧各$w/2$个)。

In other words we begin with a sliding window on the nodes. Then a random subset $(k%)$ of all connections is replaced with a random connection. The other $(100-k)%$ local connections are retained. However, deleting such random edges might be inefficient on modern hardware, so we retain it, which will not affect its properties. In summary, to capture these local structures in the context, in BIGBIRD, we define a sliding window attention, so that during self attention of width $w$ , query at location $i$ attends from $i-w/2$ to $i+w/2$ keys. In our notation, $A(i,i-w/2:i+w/2)=\mathbf{\bar{1}}$ (see Fig. 1b). As an initial sanity check, we performed basic experiments to test whether these intuitions are sufficient in getting performance close to BERT like models, while keeping attention linear in the number of tokens. We found that random blocks and local window were insufficient in capturing all the context necessary to compete with the performance of BERT.

换句话说,我们首先在节点上应用滑动窗口。然后,将所有连接中的随机子集 $(k%)$ 替换为随机连接,其余 $(100-k)%$ 的局部连接则保留。不过,在现代硬件上删除这类随机边可能效率不高,因此我们选择保留它们,这不会影响其特性。总之,为了捕捉上下文中的这些局部结构,在 BIGBIRD 中,我们定义了滑动窗口注意力机制,使得在宽度为 $w$ 的自注意力过程中,位置 $i$ 的查询会关注从 $i-w/2$ 到 $i+w/2$ 的键。在我们的表示中,$A(i,i-w/2:i+w/2)=\mathbf{\bar{1}}$ (见图 1b)。作为初步验证,我们进行了基础实验,测试这些直觉是否足以在保持注意力与 token 数量呈线性关系的同时,获得接近 BERT 类模型的性能。我们发现,随机块和局部窗口不足以捕捉与 BERT 性能竞争所需的全部上下文。

Table 1: Building block comparison $\ @512$

| Model | MLM | SQuAD | MNLI |

| BERT-base | 64.2 | 88.5 | 83.4 |

| Random (R) | 60.1 | 83.0 | 80.2 |

| Window (W) | 58.3 | 76.4 | 73.1 |

| R+W | 62.7 | 85.1 | 80.5 |

表 1: 基础模块对比 $\ @512$

| Model | MLM | SQuAD | MNLI |

|---|---|---|---|

| BERT-base | 64.2 | 88.5 | 83.4 |

| Random (R) | 60.1 | 83.0 | 80.2 |

| Window (W) | 58.3 | 76.4 | 73.1 |

| R+W | 62.7 | 85.1 | 80.5 |

The final piece of BIGBIRD is inspired from our theoretical analysis (Sec. 3), which is critical for empirical performance. More specifically, our theory utilizes the importance of “global tokens” (tokens that attend to all tokens in the sequence and to whom all tokens attend to (see Fig. 1c). These global tokens can be defined in two ways:

BIGBIRD的最后一个组成部分源于我们的理论分析(第3节),这对实证性能至关重要。具体而言,我们的理论利用了"全局token"(即关注序列中所有token并被所有token关注的token,见图1c)的重要性。这些全局token可以通过两种方式定义:

The final attention mechanism for BIGBIRD (Fig. 1d) has all three of these properties: queries attend to $r$ random keys, each query attends to $w/2$ tokens to the left of its location and $w/2$ to the right of its location and they contain $g$ global tokens (The global tokens can be from existing tokens or extra added tokens). We provide implementation details in App. D.

BIGBIRD的最终注意力机制(图 1d)具有以下三个特性:查询关注$r$个随机键,每个查询关注其位置左侧的$w/2$个token和右侧的$w/2$个token,并包含$g$个全局token(全局token可以来自现有token或额外添加的token)。具体实现细节见附录D。

3 Theoretical Results about Sparse Attention Mechanism

3 稀疏注意力机制 (Sparse Attention Mechanism) 的理论结果

In this section, we will show that that sparse attention mechanisms are as powerful and expressive as full-attention mechanisms in two respects. First, we show that when sparse attention mechanisms are used in a standalone encoder (such as BERT), they are Universal Ap proxima tors of sequence to sequence functions in the style of Yun et al. [104]. We note that this property was also explored theoretically in contemporary work Yun et al. [105]. Second, unlike [105], we further show that sparse encoder-decoder transformers are Turing Complete (assuming the same conditions defined in [72]). Complementing the above positive results, we also show that moving to a sparse-attention mechanism incurs a cost, i.e. there is no free lunch. In Sec. 3.4, we show lower bounds by exhibiting a natural task where any sufficiently sparse mechanism will require polynomial ly more layers.

在本节中,我们将从两个方面证明稀疏注意力机制 (sparse attention mechanism) 与全注意力机制 (full-attention mechanism) 具有同等表达能力和性能。首先,我们证明当稀疏注意力机制作为独立编码器 (如 BERT) 使用时,它能以 Yun 等人 [104] 提出的方式通用逼近序列到序列函数。值得注意的是,Yun 等人 [105] 在同期工作中也从理论角度探讨了这一特性。其次,与 [105] 不同,我们进一步证明稀疏编码器-解码器 Transformer 具备图灵完备性 (Turing Complete) (假设满足 [72] 中定义的相同条件)。作为上述积极结论的补充,我们也证明采用稀疏注意力机制需要付出代价——即不存在免费午餐。在 3.4 节中,我们通过构建一个自然任务来展示下界:任何足够稀疏的机制都需要多项式级数量的额外层。

3.1 Notation

3.1 符号表示

The complete Transformer encoder stack is nothing but the repeated application of a single-layer encoder (with independent parameters). We denote class of such Transformer encoders stack, defined using generalized encoder (Sec. 2), by T DH,m,q which consists of H-heads with head size m and q is the hidden layer size of the output network, and the attention layer is defined by the directed graph $D$ .

完整的Transformer编码器堆栈只是单层编码器(具有独立参数)的重复应用。我们将这类Transformer编码器堆栈定义为T DH,m,q类(使用广义编码器定义,见第2节),其中包含H个头(head),头尺寸为m,q是输出网络的隐藏层大小,注意力层由有向图$D$定义。

The key difference between our proposed attention mechanism to that of Vaswani et al. [91], Yun et al. [104] is that we add a special token at the beginning of each sequence and assign it a special vector. We will refer to this as $\pmb{x}_{0}$ . Therefore our graph $D$ will have vertex set ${0}\cup[\bar{n}]={0,1,2,\ldots,n}$ We will assume that this extra node and its respective vector will be dropped at the final output layer of transformer. To avoid cumbersome notation, we will still treat transformer as mapping sequences $\pmb{X}\in\mathbb{R}^{n\times d}$ to $\mathbb{R}^{n\times d}$ . We will also allow the transformer to append position embeddings $E\in\mathbb{R}^{d\times n}$ to matrix $X$ in the input layer.

我们提出的注意力机制与Vaswani等人[91]、Yun等人[104]的关键区别在于,我们在每个序列开头添加了一个特殊token并为其分配了特殊向量。该向量将记为$\pmb{x}_{0}$。因此,我们的图$D$将具有顶点集${0}\cup[\bar{n}]={0,1,2,\ldots,n}$。我们假设该额外节点及其对应向量会在Transformer的最终输出层被丢弃。为避免繁琐的符号表示,我们仍将Transformer视为从序列$\pmb{X}\in\mathbb{R}^{n\times d}$到$\mathbb{R}^{n\times d}$的映射。同时允许Transformer在输入层为矩阵$X$添加位置嵌入$E\in\mathbb{R}^{d\times n}$。

Finally, we need to define the function class and distance measure for proving universal approximation property. Let ${\mathcal{F}}_ {C D}$ denote the set of continuous functions $f:[0,1]^{n\times\dot{d}}\rightarrow\mathbb{R}^{\stackrel{\leftarrow}{n}\times d}$ which are continuous with respect to the topology defined by $\ell_{p}$ norm. Recall for any $p\geq1$ , the $\ell_{p}$ distance is $d_{p}(f_{1},f_{2}) = \left( \int ||f_{1}(X) - f_{2}(X)||_{p}^{p} , dX \right)^{1/p}$.

最后,我们需要定义用于证明通用逼近性质的函数类和距离度量。设 ${\mathcal{F}}_ {C D}$ 表示连续函数 $f:[0,1]^{n\times\dot{d}}\rightarrow\mathbb{R}^{\stackrel{\leftarrow}{n}\times d}$ 的集合,这些函数在由 $\ell_{p}$ 范数定义的拓扑下是连续的。回顾对于任意 $p\geq1$,$\ell_{p}$ 距离为 $d_{p}(f_{1},f_{2}) = \left( \int ||f_{1}(X) - f_{2}(X)||_{p}^{p} , dX \right)^{1/p}$。

3.2 Universal Ap proxima tors

3.2 通用逼近器

Definition 1. The star-graph $S$ centered at 0 is the graph defined on ${0,\ldots,n}$ . The neighborhood of all vertices $i$ is $N(i)={0,i}$ for $i\in{1\ldots n}$ and $N(0)={1,\ldots\overset{\cdot}{n}}$ .

定义 1. 以0为中心的星图 $S$ 是定义在 ${0,\ldots,n}$ 上的图。对于所有顶点 $i\in{1\ldots n}$ ,其邻域为 $N(i)={0,i}$ ,而 $N(0)={1,\ldots\overset{\cdot}{n}}$ 。

Our main theorem is that the sparse attention mechanism defined by any graph containing $S$ is a universal approx im at or:

我们的主要定理是:任何包含 $S$ 的图所定义的稀疏注意力机制 (sparse attention mechanism) 都是一个通用逼近器 (universal approximator) :

Theorem 1. Given $1<p<\infty$ and $\epsilon>0$ , for any $f\in\mathcal{F}_ {C D}$ , there exists a transformer with sparse-attention, $g\in\mathcal{T}_ {D}^{H,m,q}$ such that $d_{p}(f,g)\leq\epsilon$ where $D$ is any graph containing star graph $S$

定理 1. 给定 $1<p<\infty$ 和 $\epsilon>0$,对于任意 $f\in\mathcal{F}_ {C D}$,存在一个具有稀疏注意力 (sparse-attention) 的 transformer $g\in\mathcal{T}_ {D}^{H,m,q}$,使得 $d_{p}(f,g)\leq\epsilon$,其中 $D$ 是包含星图 $S$ 的任意图。

To prove the theorem, we will follow the standard proof structure outlined in [104].

为了证明该定理,我们将遵循[104]中概述的标准证明结构。

Step 1: Approximate ${\mathcal{F}}_ {C D}$ by piece-wise constant functions. Since $f$ is a continuous function with bounded domain $[0,1)^{n\times d}$ , we will approximate it with a suitable piece-wise constant function. This is accomplished by a suitable partition of the region $[0,1)$ into a grid of granularity $\delta$ to get a discrete set $\mathbb{G}_ {\delta}$ . Therefore, we can assume that we are dealing with a function ${\bar{f}}:\mathbb{G}_{\delta}\rightarrow\mathbb{R}^{n\times d}$ , where dp(f, f¯) ≤ 3ϵ .

步骤1:用分段常数函数逼近 ${\mathcal{F}}_ {C D}$。由于 $f$ 是一个定义在有界域 $[0,1)^{n\times d}$ 上的连续函数,我们将用适当的分段常数函数来逼近它。这通过将区域 $[0,1)$ 以粒度 $\delta$ 划分成网格,得到离散集合 $\mathbb{G}_ {\delta}$ 来实现。因此,我们可以假设我们正在处理一个函数 ${\bar{f}}:\mathbb{G}_{\delta}\rightarrow\mathbb{R}^{n\times d}$,其中 dp(f, f¯) ≤ 3ϵ。

Step 2: Approximate piece-wise constant functions by modified transformers. This is the key step of the proof where the self-attention mechanism is used to generate a contextual-mapping of the input. Informally, a contextual mapping is a unique code for the pair consisting of a matrix $(X,x_{i})$ and a column. Its uniqueness allows the Feed forward layers to use each code to map it to a unique output column.

步骤2:用改进版Transformer逼近分段常数函数。这是证明的关键步骤,利用自注意力机制生成输入的上下文映射。非正式地说,上下文映射是由矩阵$(X,x_{i})$和列组成的对的唯一编码。其唯一性使前馈层能利用每个编码将其映射到唯一的输出列。

The main technical challenge is computing the contextual mapping using only sparse attention mechanism. This was done in [104] using a “selective” shift operator which shift up entries that are in a specific interval. Key to their proof was the fact that the shift, was exactly the range of the largest entry to the smallest entry.

主要技术挑战在于仅通过稀疏注意力机制计算上下文映射。[104] 中采用了一种"选择性"位移算子来实现这一点,该算子会将特定区间内的条目进行位移。其证明的关键在于:位移范围恰好等于最大条目与最小条目的差值。

Creating a contextual mapping with a sparse attention mechanism is quite a challenge. In particular, because each query only attends to a few keys, it is not at all clear that sufficient information can be corralled to make a contextual embedding of the entire matrix. To get around this, we develop a sparse shift operator which shifts the entries of the matrices if they lie in a certain range. The exact amount of the shift is controlled by the directed sparse attention graphg $D$ . The second key ingredient is the use of additional global token. By carefully applying the operator to a set of chosen ranges, we will show that each column will contain a unique mapping of the full mapping. Therefore, we can augment the loss of inner-products in the self attention mechanism by using multiple layers and an auxiliary global token.

使用稀疏注意力机制创建上下文映射是一项相当大的挑战。特别是由于每个查询仅关注少量键值,很难确保能收集足够信息来构建整个矩阵的上下文嵌入。为解决这个问题,我们开发了一种稀疏位移算子,当矩阵元素处于特定范围内时对其进行位移。具体位移量由有向稀疏注意力图 $D$ 控制。第二个关键要素是引入额外的全局token。通过将该算子精确应用于选定范围集,我们将证明每列都能包含完整映射的唯一表示。因此,可以通过使用多层结构和辅助全局token来增强自注意力机制中内积运算的信息捕获能力。

Step 3: Approximate modified transformers by original Transformers: The final step is to approximate the modified transformers by the original transformer which uses ReLU and softmax.

步骤3:用原始Transformer近似改进版Transformer:最后一步是使用带ReLU和softmax的原始Transformer来近似改进版Transformer。

We provide the full details in App. A.

完整细节见附录 A。

3.3 Turing Completeness

3.3 图灵完备性

Transformers are a very general class. In the original paper of Vaswani et al. [91], they were used in both an encoder and a decoder. While the previous section outlined how powerful just the encoders were, another natural question is to ask what the additional power of both a decoder along with an encoder is? Pérez et al. [72] showed that the full transformer based on a quadratic attention mechanism is Turing Complete. This result makes one unrealistic assumption, which is that the model works on arbitrary precision model. Of course, this is necessary as otherwise, Transformers are bounded finite state machines and cannot be Turing Complete.

Transformer 是一个非常通用的类别。在 Vaswani 等人 [91] 的原始论文中,它们同时被用于编码器和解码器。虽然前一节概述了仅编码器的强大能力,但另一个自然的问题是:编码器与解码器结合还能带来哪些额外能力?Pérez 等人 [72] 证明了基于二次注意力机制的完整 Transformer 具有图灵完备性。该结果做了一个不切实际的假设,即模型可以处理任意精度的数据。当然,这是必要的,否则 Transformer 就是有限状态机,无法实现图灵完备性。

It is natural to ask if the full attention mechanism is necessary. Or can a sparse attention mechanism also be used to simulate any Turing Machine? We show that this is indeed the case: we can use a sparse encoder and sparse decoder to simulate any Turing Machine.

很自然地会问,完整的注意力机制是否必要。或者说,稀疏注意力机制是否也能用来模拟任何图灵机?我们证明事实确实如此:可以使用稀疏编码器和稀疏解码器来模拟任何图灵机。

To use the sparse attention mechanism in the transformer architecture, we need to define a suitable modification where each token only reacts to previous tokens. Unlike the case for BERT, where the entire attention mechanism is applied once, in full transformers, the sparse attention mechanism at decoder side is used token by token. Secondly the work of Pérez et al. [72], uses each token as a representation of the tape history and uses the full attention to move and retrieve the correct tape symbol. Most of the construction of Pérez et al. [72] goes through for sparse attentions, except for their addressing scheme to point back in history (Lemma B.4 in [72]). We show how to simulate this using a sparse attention mechanism and defer the details to App. B.

要在Transformer架构中使用稀疏注意力机制,我们需要定义一个合适的修改方案,使每个token仅对先前的token产生响应。与BERT一次性应用整个注意力机制不同,在完整Transformer中,解码器端的稀疏注意力机制是逐个token使用的。其次,Pérez等人[72]的研究将每个token作为磁带历史的表征,并利用完整注意力机制来移动和检索正确的磁带符号。除了他们用于回溯历史的寻址方案([72]中的引理B.4)外,Pérez等人[72]的大部分构建方法同样适用于稀疏注意力机制。我们展示了如何通过稀疏注意力机制模拟这一过程,并将细节留待附录B阐述。

3.4 Limitations

3.4 局限性

We demonstrate a natural task which can be solved by the full attention mechanism in $O(1)$ -layers. However, under standard complexity theoretic assumptions, this problem requires $\tilde{\Omega}(n)$ -layers for any sparse attention layers with ${\tilde{O}}(n)$ edges (not just BIGBIRD). (Here $\tilde{O}$ hides poly-logarthmic factors). Consider the simple problem of finding the corresponding furthest vector for each vector in the given sequence of length $n$ . Formally,

我们展示了一个自然任务,该任务可以通过完整的注意力机制在 $O(1)$ 层内解决。然而,根据标准复杂性理论假设,对于任何具有 ${\tilde{O}}(n)$ 条边的稀疏注意力层 (不仅仅是 BIGBIRD) ,此问题需要 $\tilde{\Omega}(n)$ 层。(此处 $\tilde{O}$ 隐藏了多对数因子)。考虑一个简单问题:在给定长度为 $n$ 的序列中为每个向量找到对应的最远向量。形式化地,

Task 1. Given $n$ unit vectors ${u_{1},\ldots,u_{n}}$ , find $f(u_{1},\dots,u_{n})\to(u_{1^{ * }},\dots,u_{n^{ * }})$ where for a fixed $j\in[n]$ , we define $j^{ * }=\arg\operatorname * {max}_ {k}|u_{k}-u_{j}|_{2}^{2}$ .

任务1. 给定 $n$ 个单位向量 ${u_{1},\ldots,u_{n}}$,找到映射 $f(u_{1},\dots,u_{n})\to(u_{1^{ * }},\dots,u_{n^{ * }})$,其中对于固定的 $j\in[n]$,定义 $j^{ * }=\arg\operatorname * {max}_ {k}|u_{k}-u_{j}|_{2}^{2}$。

Finding vectors that are furthest apart boils down to minimize inner product search in case of unit vectors. For a full-attention mechanism with appropriate query and keys, this task is very easy as we can evaluate all pair-wise inner products.

寻找相距最远的向量可归结为在单位向量情况下最小化内积搜索。对于具有适当查询(query)和键(keys)的全注意力机制(full-attention),这项任务非常简单,因为我们可以计算所有成对内积。

The impossibility for sparse-attention follows from hardness results stemming from Orthogonal Vector Conjecture(OVC) [1, 2, 7, 96]. The OVC is a widely used assumption in fine-grained complexity. Informally, it states that one cannot determine if the minimum inner product among $n$ boolean vectors is 0 in sub quadratic time. In App. C, we show a reduction using OVC to show that if a transformer $g\in\mathcal{T}_{D}^{H=\bar{1},m=2d,q=0}$ for any sparse directed graph $D$ can evaluate the Task 1, it can solve the orthogonal vector problem.

稀疏注意力机制的不可能性源于正交向量猜想(OVC) [1, 2, 7, 96]的硬度结果。OVC是细粒度复杂度理论中广泛使用的假设,非正式地讲,它表明无法在次二次时间内判断n个布尔向量的最小内积是否为0。在附录C中,我们通过OVC归约证明:若对于任意稀疏有向图D,存在Transformer $g\in\mathcal{T}_{D}^{H=\bar{1},m=2d,q=0}$ 能评估任务1,则该模型可解决正交向量问题。

4 Experiments: Natural Language Processing

4 实验:自然语言处理

In this section our goal is to showcase benefits of modeling longer input sequence for NLP tasks, for which we select three representative tasks. We begin with basic masked language modeling (MLM; Devlin et al. 22) to check if better contextual representations can be learnt by utilizing longer contiguous sequences. Next, we consider QA with supporting evidence, for which capability to handle longer sequence would allow us to retrieve more evidence using crude systems like TF-IDF/BM25.

在本节中,我们的目标是展示为NLP任务建模更长输入序列的优势,为此我们选择了三个代表性任务。首先从基础的掩码语言建模(MLM; Devlin et al. [22])开始,验证是否通过利用更长的连续序列可以学习到更好的上下文表示。接着,我们考虑带有支持证据的问答任务,处理更长序列的能力将使我们能够使用TF-IDF/BM25等简单系统检索更多证据。

| Model | HotpotQA | NaturalQ | TriviaQA | WikiHop | |||

| Ans | Sup | Joint | LA | SA | Full | MCQ | |

| RoBERTa | 73.5 | 83.4 | 63.5 | 74.3 | 72.4 | ||

| Longformer | 74.3 | 84.4 | 64.4 | 一 | 75.2 | 75.0 | |

| BIGBIRD-ITC | 75.7 | 86.8 | 67.7 | 70.8 | 53.3 | 79.5 | 75.9 |

| BIGBIRD-ETC | 75.5 | 87.1 | 67.8 | 73.9 | 54.9 | 78.7 | 75.9 |

Table 2: QA Dev results using Base size models. We report accuracy for WikiHop and F1 for HotpotQA, Natural Questions, and TriviaQA.

| 模型 | HotpotQA | NaturalQ | TriviaQA | WikiHop | |||

|---|---|---|---|---|---|---|---|

| Ans | Sup | Joint | LA | SA | Full | MCQ | |

| RoBERTa | 73.5 | 83.4 | 63.5 | 74.3 | 72.4 | ||

| Longformer | 74.3 | 84.4 | 64.4 | — | 75.2 | 75.0 | |

| BIGBIRD-ITC | 75.7 | 86.8 | 67.7 | 70.8 | 53.3 | 79.5 | 75.9 |

| BIGBIRD-ETC | 75.5 | 87.1 | 67.8 | 73.9 | 54.9 | 78.7 | 75.9 |

表 2: 使用Base尺寸模型的问答开发结果。WikiHop报告准确率,HotpotQA、Natural Questions和TriviaQA报告F1分数。

Table 3: Fine-tuning results on Test set for QA tasks. The Test results (F1 for HotpotQA, Natural Questions, TriviaQA, and Accuracy for WikiHop) have been picked from their respective leader board. For each task the top-3 leaders were picked not including BIGBIRD-etc. For Natural Questions Long Answer (LA), TriviaQA, and WikiHop, BIGBIRD-ETC is the new state-of-the-art. On HotpotQA we are third in the leader board by F1 and second by Exact Match (EM).

| Model | HotpotQA | NaturalQ | TriviaQA | WikiHop | ||||

| Ans | Sup | Joint | LA | SA | Full | Verified | MCQ | |

| HGN [26] | 82.2 | 88.5 | 74.2 | |||||

| GSAN | 81.6 | 88.7 | 73.9 | |||||

| ReflectionNet[32] | 77.1 | 64.1 | ||||||

| RikiNet-v2[61] | 76.1 | 61.3 | ||||||

| Fusion-in-Decoder[39] | 84.4 | 90.3 | ||||||

| SpanBERT [42] | 79.1 | 86.6 | ||||||

| MRC-GCN [87] | 78.3 | |||||||

| MultiHop [14] | 76.5 | |||||||

| Longformer [8] | 81.2 | 88.3 | 73.2 | 77.3 | 85.3 | 81.9 | ||

| BIGBIRD-ETC | 81.2 | 89.1 | 73.6 | 77.8 | 57.9 | 84.5 | 92.4 | 82.3 |

表 3: QA任务测试集的微调结果。测试结果(HotpotQA和Natural Questions采用F1值,TriviaQA和WikiHop采用准确率)均取自各自排行榜。每个任务选取前三名(不含BIGBIRD-ETC)。在Natural Questions长答案(LA)、TriviaQA和WikiHop任务上,BIGBIRD-ETC创造了新的最优性能。HotpotQA任务中,我们的F1值排名第三,精确匹配(EM)排名第二。

| 模型 | HotpotQA | NaturalQ | TriviaQA | WikiHop | ||||

|---|---|---|---|---|---|---|---|---|

| Ans | Sup | Joint | LA | SA | Full | Verified | MCQ | |

| HGN [26] | 82.2 | 88.5 | 74.2 | |||||

| GSAN | 81.6 | 88.7 | 73.9 | |||||

| ReflectionNet[32] | 77.1 | 64.1 | ||||||

| RikiNet-v2[61] | 76.1 | 61.3 | ||||||

| Fusion-in-Decoder[39] | 84.4 | 90.3 | ||||||

| SpanBERT [42] | 79.1 | 86.6 | ||||||

| MRC-GCN [87] | 78.3 | |||||||

| MultiHop [14] | 76.5 | |||||||

| Longformer [8] | 81.2 | 88.3 | 73.2 | 77.3 | 85.3 | 81.9 | ||

| BIGBIRD-ETC | 81.2 | 89.1 | 73.6 | 77.8 | 57.9 | 84.5 | 92.4 | 82.3 |

Finally, we tackle long document classification where discriminating information may not be located in first 512 tokens. Below we summarize the results for BIGBIRD using sequence length $4096^{1}$ , while we defer all other setup details including computational resources, batch size, step size, to App. E.

最后,我们处理长文档分类任务,其中关键判别信息可能不在前512个token中。以下总结了BIGBIRD模型在序列长度$4096^{1}$下的实验结果,其他实验设置细节(包括计算资源、批量大小、步长等)详见附录E。

Pre training and MLM We follow [22, 63] to create base and large versions of BIGBIRD and pretrain it using MLM objective. This task involves predicting a random subset of tokens which have been masked out. We use four standard data-sets for pre training (listed in App. E.1, Tab. 9), warm-starting from the public RoBERTa checkpoint 2. We compare performance in predicting the masked out tokens in terms of bits per character, following [8]. As seen in App. E.1, Tab. 10, both BIGBIRD and Longformer perform better than limited length RoBERTa, with BIGBIRD-ETC performing the best. We note that we trained our models on a reasonable $16G B$ memory/chip with batch size of 32-64. Our memory efficiency is due to efficient blocking and sparsity structure of the sparse attention mechanism described in Sec. 2.

预训练与掩码语言模型(MLM)

我们遵循[22, 63]的方法创建BIGBIRD的基础版和大型版本,并使用MLM目标进行预训练。该任务需要预测被掩码的随机Token子集。我们使用四个标准数据集进行预训练(列于附录E.1表9),并从公开的RoBERTa检查点2进行热启动。按照[8]的方法,我们通过每字符比特数来比较预测掩码Token的性能。如附录E.1表10所示,BIGBIRD和Longformer的性能均优于有限长度的RoBERTa,其中BIGBIRD-ETC表现最佳。需要说明的是,我们的模型是在单芯片16GB内存、批量大小为32-64的合理配置下训练的。这种内存效率得益于第2节描述的稀疏注意力机制的高效分块和稀疏结构。

Question Answering (QA) We considered following four challenging datasets:

问答 (QA) 我们考虑了以下四个具有挑战性的数据集:

- Natural Questions [52]: For the given question, find a short span of answer (SA) from the given evidences as well highlight the paragraph from the given evidences containing information about the correct answer (LA).

- 自然问题 [52]: 对于给定问题,从提供的证据中找出简短答案片段 (SA) ,同时标注出包含正确答案相关信息的证据段落 (LA) 。

- HotpotQA-distractor [100]: Similar to natural questions, it requires finding the answer (Ans) as well as the supporting facts (Sup) over different documents needed for multi-hop reasoning from the given evidences.

- HotpotQA-distractor [100]: 类似于自然问题,它需要从给定的证据中通过多跳推理在不同文档中寻找答案(Ans)和支持事实(Sup)。

- TriviaQA-wiki [41]: We need to provide an answer for the given question using provided Wikipedia evidence, however, the answer might not be present in the given evidence. On a

- TriviaQA-wiki [41]: 我们需要利用提供的维基百科证据回答给定问题,但答案可能不在给定证据中。

- WikiHop [95]: Chose correct option from multiple-choice questions (MCQ), by aggregating information spread across multiple documents given in the evidences.

- WikiHop [95]: 从多项选择题 (MCQ) 中选择正确答案,通过整合证据中提供的多个文档中的信息。

As these tasks are very competitive, multiple highly engineered systems have been designed specific each dataset confirming to respective output formats. For a fair comparison, we had to use some additional regular iz ation for training BIGBIRD, details of which are provided in App. E.2 along with exact architecture description. We experiment using the base sized model and select the best configuration on the development set for each dataset (as reported in Tab. 2). We can see that BIGBIRD-ETC, with expanded global tokens consistently outperforms all other models. Thus, we chose this configuration to train a large sized model to be used for evaluation on the hidden test set.

由于这些任务竞争激烈,多个高度工程化的系统已针对每个数据集设计,并严格遵循相应的输出格式。为确保公平比较,我们不得不为训练BIGBIRD使用额外的正则化方法,具体细节及完整架构描述详见附录E.2。我们基于基础尺寸模型进行实验,并为每个数据集在开发集上选择最佳配置(如Tab. 2所示)。结果表明,采用扩展全局token的BIGBIRD-ETC始终优于其他所有模型。因此,我们选择该配置训练大尺寸模型,用于隐藏测试集的评估。

In Tab. 3, we compare BIGBIRD-ETC model to top-3 entries from the leader board excluding BIGBIRD. One can clearly see the importance of using longer context as both Longformer and BIGBIRD outperform models with smaller contexts. Also, it is worth noting that BIGBIRD submission is a single model, whereas the other top-3 entries for Natural Questions are ensembles, which might explain the slightly lower accuracy in exact answer phrase selection.

在表3中,我们将BIGBIRD-ETC模型与排行榜中除BIGBIRD外的前三名参赛模型进行了比较。可以明显看出使用更长上下文的重要性,因为Longformer和BIGBIRD的表现都优于上下文较小的模型。此外值得注意的是,BIGBIRD提交的是单一模型,而自然问答任务的其他前三名参赛模型均为集成模型,这可能解释了其在精确答案短语选择上略低的准确率。

Classification We experiment on datasets of different lengths and contents, specifically various document classification and GLUE tasks. Following BERT, we used one layer with cross entropy loss on top of the first [CLS] token. We see that gains of using BIGBIRD are more significant when we have longer documents and fewer training examples. For instance, using base sized model, BIGBIRD improves state-of-the-art for Arxiv dataset by about $\textbf{5%}$ points. On Patents dataset, there is improvement over using simple BERT/RoBERTa, but given the large size of training data the improvement over SoTA (which is not BERT based) is not significant. Note that this performance gain is not seen for much smaller IMDb dataset. Along with experimental setup detail, we present detailed results in App. E.4 which show competitive performance.

分类

我们在不同长度和内容的数据集上进行实验,具体包括各类文档分类任务和GLUE基准任务。遵循BERT的做法,我们在首个[CLS] token上添加了一个带交叉熵损失的单层分类器。实验表明,当处理较长文档且训练样本较少时,使用BIGBIRD带来的性能提升更为显著。例如,在基础模型规模下,BIGBIRD将Arxiv数据集的最先进水平提升了约$\textbf{5%}$个点。在Patents数据集上,其表现优于简单BERT/RoBERTa方案,但由于训练数据量庞大,相较于非BERT架构的现有最优方法(SoTA)提升有限。值得注意的是,在规模小得多的IMDb数据集上未观测到此类性能增益。除实验配置细节外,我们在附录E.4中提供了详实结果,显示其具备竞争优势。

4.1 Encoder-Decoder Tasks

4.1 编码器-解码器任务

For an encoder-decoder setup, one can easily see that both suffer from quadratic complexity due to the full self attention. We focus on introducing the sparse attention mechanism of BIGBIRD only at the encoder side. This is because, in practical generative applications, the length of output sequence is typically small as compared to the input. For example for text sum mari z ation, we see in realistic scenarios (c.f. App. E.5 Tab. 18) that the median output sequence length is $\sim200$ where as the input sequence’s median length is $>3000$ . For such applications, it is more efficient to use sparse attention mechanism for the encoder and full self-attention for the decoder.

对于编码器-解码器架构,可以明显看出两者都因全自注意力机制而面临二次复杂度问题。我们重点介绍仅在编码器侧引入BIGBIRD的稀疏注意力机制。这是因为在实际生成式应用中,输出序列长度通常远小于输入序列。以文本摘要为例,真实场景数据显示(参见附录E.5表18)输出序列中位数长度约为200个token,而输入序列中位数长度超过3000个token。在此类应用中,编码器采用稀疏注意力机制、解码器保留全自注意力机制是更高效的方案。

| Model | Arxiv | PubMed | BigPatent | |||||||

| R-1 | R-2 | R-L | R-1 | R-2 | R-L | R-1 | R-2 | R-L | ||

| Art | SumBasic [68] | 29.47 | 6.95 | 26.30 | 37.15 | 11.36 | 33.43 | 27.44 | 7.08 | 23.66 |

| LexRank [25] | 33.85 | 10.73 | 28.99 | 39.19 | 13.89 | 34.59 | 35.57 | 10.47 | 29.03 | |

| LSA [97] | 29.91 | 7.42 | 25.67 | 33.89 | 9.93 | 29.70 | ||||

| Attn-Seq2Seq[85] | 29.30 | 6.00 | 25.56 | 31.55 | 8.52 | 27.38 | 28.74 | 7.87 | 24.66 | |

| Pntr-Gen-Seq2Seq [77] | 32.06 | 9.04 | 25.16 | 35.86 | 10.22 | 29.69 | 33.14 | 11.63 | 28.55 | |

| Long-Doc-Seq2Seq [20] | 35.80 | 11.05 | 31.80 | 38.93 | 15.37 | 35.21 | ||||

| Sent-CLF [81] | 34.01 | 8.71 | 30.41 | 45.01 | 19.91 | 41.16 | 36.20 | 10.99 | 31.83 | |

| Sent-PTR [81] | 42.32 | 15.63 | 38.06 | 43.30 | 17.92 | 39.47 | 34.21 | 10.78 | 30.07 | |

| Extr-Abst-TLM [81] | 41.62 | 14.69 | 38.03 | 42.13 | 16.27 | 39.21 | 38.65 | 12.31 | 34.09 | |

| Dancer [31] | 42.70 | 16.54 | 38.44 | 44.09 | 17.69 | 40.27 | ||||

| Base | Transformer | 28.52 | 6.70 | 25.58 | 31.71 | 8.32 | 29.42 | 39.66 | 20.94 | 31.20 |

| + RoBERTa [76] | 31.98 | 8.13 | 29.53 | 35.77 | 13.85 | 33.32 | 41.11 | 22.10 | 32.58 | |

| + Pegasus [107] | 34.81 | 10.16 | 30.14 | 39.98 | 15.15 | 35.89 | 43.55 | 20.43 | 31.80 | |

| BIGBIRD-RoBERTa | 41.22 | 16.43 | 36.96 | 43.70 | 19.32 | 39.99 | 55.69 | 37.27 | 45.56 | |

| Large | Pegasus (Reported) [107] | 44.21 | 16.95 | 38.83 | 45.97 | 20.15 | 41.34 | 52.29 | 33.08 | 41.75 |

| Pegasus (Re-eval) | 43.85 | 16.83 | 39.17 | 44.53 | 19.30 | 40.70 | 52.25 | 33.04 | 41.80 | |

| BIGBIRD-Pegasus | 46.63 | 19.02 | 41.77 | 46.32 | 20.65 | 42.33 | 60.64 | 42.46 | 50.01 | |

Table 4: Sum mari z ation ROUGE score for long documents.

| 模型 | Arxiv | PubMed | BigPatent |

|---|---|---|---|

| R-1 | R-2 | R-L | |

| Art | |||

| SumBasic [68] | 29.47 | 6.95 | 26.30 |

| LexRank [25] | 33.85 | 10.73 | 28.99 |

| LSA [97] | 29.91 | 7.42 | 25.67 |

| Attn-Seq2Seq[85] | 29.30 | 6.00 | 25.56 |

| Pntr-Gen-Seq2Seq [77] | 32.06 | 9.04 | 25.16 |

| Long-Doc-Seq2Seq [20] | 35.80 | 11.05 | 31.80 |

| Sent-CLF [81] | 34.01 | 8.71 | 30.41 |

| Sent-PTR [81] | 42.32 | 15.63 | 38.06 |

| Extr-Abst-TLM [81] | 41.62 | 14.69 | 38.03 |

| Dancer [31] | 42.70 | 16.54 | 38.44 |

| Base | |||

| Transformer | 28.52 | 6.70 | 25.58 |

| + RoBERTa [76] | 31.98 | 8.13 | 29.53 |

| + Pegasus [107] | 34.81 | 10.16 | 30.14 |

| BIGBIRD-RoBERTa | 41.22 | 16.43 | 36.96 |

| Large | |||

| Pegasus (Reported) [107] | 44.21 | 16.95 | 38.83 |

| Pegasus (Re-eval) | 43.85 | 16.83 | 39.17 |

| BIGBIRD-Pegasus | 46.63 | 19.02 | 41.77 |

表 4: 长文档摘要 ROUGE 分数。

Sum mari z ation Document sum mari z ation is a task of creating a short and accurate summary of a text document. We used three long document datasets for testing our model details of which are mention in Tab. 18. In this paper we focus on abstract ive sum mari z ation of long documents where using a longer contextual encoder should improve performance. The reasons are two fold: First, the salient content can be evenly distributed in the long document, not just in first 512 tokens, and this is by design in the BigPatents dataset [78]. Second, longer documents exhibit a richer discourse structure and summaries are considerably more abstract ive, thereby observing more context helps. As has been pointed out recently [76, 107], pre training helps in generative tasks, we warm start from our general purpose MLM pre training on base-sized models as well as utilizing state-of-the-art sum mari z ation specific pre training from Pegasus [107] on large-sized models. The results of training BIGBIRD sparse encoder along with full decoder on these long document datasets are presented in Tab. 4. We can clearly see modeling longer context brings significant improvement. Along with hyper parameters, we also present results on shorter but more widespread datasets in App. E.5, which show that using sparse attention does not hamper performance either.

文档摘要是一项为文本文档创建简短而准确的摘要的任务。我们使用了三个长文档数据集来测试模型,其详细信息见表18。在本文中,我们专注于长文档的抽象摘要,使用更长的上下文编码器应能提高性能。原因有二:首先,重要内容可能均匀分布在长文档中,而不仅限于前512个token,这在BigPatents数据集[78]中是设计如此。其次,长文档展现出更丰富的论述结构,摘要也更为抽象,因此观察更多上下文有助于提升效果。正如最近指出的[76, 107],预训练对生成任务有帮助,我们基于基础尺寸模型的通用MLM预训练进行热启动,并在大型模型上利用Pegasus[107]最先进的摘要专用预训练。在这些长文档数据集上训练BIGBIRD稀疏编码器和完整解码器的结果见表4。我们可以清楚地看到,建模更长上下文带来了显著改进。除了超参数外,我们还在附录E.5中展示了更短但更广泛的数据集上的结果,这些结果表明使用稀疏注意力也不会影响性能。

5 Experiments: Genomics

5 实验:基因组学

There has been a recent upsurge in using deep learning for genomics data [86, 106, 13], which has resulted in improved performance on several biologically-significant tasks such as promoter site prediction [71], methyl ation analysis [55], predicting functional effects of non-coding variant [109], etc. These approaches consume DNA sequence fragments as inputs, and therefore we believe longer input sequence handling capability of BIGBIRD would be beneficial as many functional effects in DNA are highly non-local [12]. Furthermore, taking inspiration from NLP, we learn powerful contextual representations for DNA fragments utilizing abundant unlabeled data (e.g. human reference genome, Saccharomyces Genome Database) via MLM pre training. Next, we showcase that our long input BIGBIRD along with the proposed pre training significantly improves performances in two downstream tasks. Detailed experimental setup for the two tasks are provided in App. F.

近年来,深度学习在基因组学数据中的应用激增[86, 106, 13],显著提升了多项生物学重要任务的性能表现,如启动子位点预测[71]、甲基化分析[55]、非编码变异功能效应预测[109]等。这些方法以DNA序列片段作为输入,因此我们认为BIGBIRD处理长输入序列的能力将具有优势,因为DNA中的许多功能效应具有高度非局部性[12]。此外,受自然语言处理(NLP)启发,我们通过掩码语言建模(MLM)预训练,利用大量未标注数据(如人类参考基因组、酵母基因组数据库)学习DNA片段的强上下文表征。随后,我们证明长输入版BIGBIRD结合所提出的预训练方法,能显著提升两项下游任务的性能。两项任务的详细实验设置见附录F。

Pre-training and MLM As explored in Liang [58], instead of operating on base pairs, we propose to first segment DNA into tokens so as to further increase the context length (App. F, Fig. 7). In particular, we build a byte-pair encoding [50] table for the DNA sequence of size 32K, with each token representing 8.78 base pairs on average. We learn contextual representation of these token on the human reference genome $(\mathrm{GRCh}37)^{3}$ using MLM objective. We then report the bits per character (BPC) on a held-out set in Tab. 5. We find that attention based contextual representation of DNA does improve BPC, which is further improved by using longer context.

预训练与MLM

如Liang [58]所述,我们提出先将DNA分割为token(而非直接操作碱基对)以进一步增加上下文长度(附录F,图7)。具体而言,我们为DNA序列构建了一个32K大小的字节对编码[50]表,每个token平均代表8.78个碱基对。我们在人类参考基因组$(\mathrm{GRCh}37)^{3}$上使用MLM目标学习这些token的上下文表示,随后在表5中报告了保留集的每字符比特数(BPC)。研究发现,基于注意力机制的DNA上下文表示确实能提升BPC,而更长的上下文会带来进一步改进。

Table 5: MLM BPC

| Model BPC |

| SRILM[58] 1.57 |

| BERT (sqln.512) 1.23 |

| BIGBIRD (sqln. 4096) 1.12 |

表 5: MLM BPC

| Model BPC |

|---|

| SRILM[58] 1.57 |

| BERT (sqln.512) 1.23 |

| BIGBIRD (sqln.4096) 1.12 |

Promoter Region Prediction Promoter is a DNA region typically located upstream of the gene, which is the site of transcription initiation. Multiple methods have been proposed to identify the promoter regions in a given DNA sequence [99, 59, 11, 98, 71], as it is an important first step in understanding gene regulation. The corresponding machine learning task is to classify a given DNA fragment as promoter or non-promoter sequence. We use the dataset compiled by Oubounyt et al. [71] which was built from Eukaryotic Promoter Database (EPDnew) [24] . We finetuned the pretrained BIGBIRD model from above, using the training data and report F1 on test dataset. We compare our results to the previously reported best method in Tab. 6. We see that BIGBIRD achieve nearly perfect accuracy with a $5%$ jump from the previous best reported accuracy.

启动子区域预测

启动子是通常位于基因上游的DNA区域,是转录起始的位点。理解基因调控的重要第一步是识别给定DNA序列中的启动子区域,为此已提出多种方法[99, 59, 11, 98, 71]。对应的机器学习任务是将给定DNA片段分类为启动子或非启动子序列。我们使用了Oubounyt等人[71]从真核启动子数据库(EPDnew)[24]编译的数据集。基于上述预训练的BIGBIRD模型进行微调,使用训练数据并在测试数据集上报告F1值。我们将结果与表6中先前报道的最佳方法进行比较,发现BIGBIRD实现了近乎完美的准确率,比之前报道的最佳准确率提高了5%。

| Model | F1 |

| CNNProm [90] | 69.7 |

| DeePromoter [71] | 95.6 |

| BIGBIRD | 99.9 |

Table 6: Comparison.

表 6: 对比结果

| 模型 | F1 |

|---|---|

| CNNProm [90] | 69.7 |

| DeePromoter [71] | 95.6 |

| BIGBIRD | 99.9 |

Chromatin-Profile Prediction Non-coding regions of DNA do not code for proteins. Majority of diseases and other trait associated single-nucleotide polymorphism are correlated to non-coding genomic variations [109, 46]. Thus, understanding the functional effects of non-coding regions of DNA is a very important task. An important step in this process, as defined by Zhou and Troy an s kaya [109], is to predict large-scale chromatin-profiling from non-coding genomic sequence. To this effect, DeepSea [109], compiled 919 chromatin-profile of 2.4M non-coding variants from Encyclopedia of DNA Elements (ENCODE)5 and Roadmap Epi genomics projects6. The corresponding ML task is to predict, for a given non-coding region of DNA, these 919 chromatin-profile including 690 transcription factors (TF) binding profiles for 160 different TFs, 125 DNase I sensitivity (DHS) profiles and 104 histone-mark (HM) profiles. We jointly learn 919 binary class if i ers to predict these functional effects from sequence of DNA fragments. On held-out chromosomes, we compare AUC with the baselines in Tab. 7 and see that we significantly improve on performance on the harder task HM, which is known to have longer-range correlations [27] than others.

染色质谱预测

DNA的非编码区不编码蛋白质。大多数疾病及其他性状相关的单核苷酸多态性与非编码基因组变异相关[109, 46]。因此,理解DNA非编码区的功能效应是一项非常重要的任务。根据Zhou和Troyanskaya[109]的定义,这一过程中的关键步骤是从非编码基因组序列预测大规模染色质谱。为此,DeepSea[109]从DNA元件百科全书(ENCODE)5和Roadmap表观基因组计划6中收集了240万个非编码变体的919个染色质谱。对应的机器学习任务是为给定的DNA非编码区预测这919个染色质谱,包括160种不同转录因子(TF)的690个TF结合谱、125个DNase I敏感性(DHS)谱和104个组蛋白标记(HM)谱。我们联合训练了919个二元分类器,从DNA片段序列预测这些功能效应。在保留染色体上,我们将AUC与表7中的基线进行比较,发现我们在更难的任务HM上显著提升了性能,已知该任务比其他任务具有更长程相关性[27]。

| Model | TF | HM | DHS |

| gkm-SVM[30] | 89.6 | - | |

| DeepSea [109] | 95.8 | 85.6 | 92.3 |

| BIGBIRD | 96.1 | 88.7 | 92.1 |

Table 7: Chromatin-Profile Prediction

表 7: 染色质图谱预测

| 模型 | TF | HM | DHS |

|---|---|---|---|

| gkm-SVM[30] | 89.6 | - | |

| DeepSea[109] | 95.8 | 85.6 | 92.3 |

| BIGBIRD | 96.1 | 88.7 | 92.1 |

6 Conclusion

6 结论

We propose BIGBIRD: a sparse attention mechanism that is linear in the number of tokens. BIGBIRD satisfies a number of theoretical results: it is a universal approx im at or of sequence to sequence functions and is also Turing complete. Theoretically, we use the power of extra global tokens preserve the expressive powers of the model. We complement these results by showing that moving to sparse attention mechanism do incur a cost. Empirically, BIGBIRD gives state-of-the-art performance on a number of NLP tasks such as question answering and long document classification. We further introduce attention based contextual language model for DNA and fine-tune it for down stream tasks such as promoter region prediction and predicting effects of non-coding variants.

我们提出BIGBIRD:一种Token数量呈线性增长的稀疏注意力机制。BIGBIRD满足多项理论成果:它是序列到序列函数的通用近似器,同时具备图灵完备性。理论上,我们通过额外全局Token的引入保留了模型的表达能力。这些成果的补充研究表明,转向稀疏注意力机制确实会带来性能损耗。实证显示,BIGBIRD在问答和长文档分类等NLP任务中达到了最先进水平。我们进一步提出基于注意力的DNA上下文语言模型,并针对启动子区域预测和非编码变体效应预测等下游任务进行微调。

References

参考文献

Big Bird: Transformers for Longer Sequences – Appendix

Big Bird: 更长序列的Transformer – 附录

A Universal Ap proxima tors

通用逼近器

A.1 Notation

A.1 符号说明

We begin by setting up some notations following Pérez et al. [72] to formally describe the complete architecture of Transformers. A single layer of Transformer encoder is a parametric function Enc receiving a sequence $\pmb{X}=(\pmb{x}_ {1},...,\pmb{x}_ {n})$ of vectors in $\mathbb{R}^{d}$ and returning a sequence $Z=(z_{1},...,z_{n})$ of the same length. Each $z_{i}$ is a $d$ dimensional vector as well. We interchangeably treat the sequence $\boldsymbol{X}$ as a matrix in $\mathbb{R}^{n\times d}$ . Enc has two components:

我们首先按照Pérez等人[72]的设定建立一些符号,以形式化描述Transformer的完整架构。单层Transformer编码器是一个参数化函数Enc,它接收$\mathbb{R}^{d}$空间中的向量序列$\pmb{X}=(\pmb{x}_ {1},...,\pmb{x}_ {n})$,并返回相同长度的序列$Z=(z_{1},...,z_{n})$。每个$z_{i}$同样是一个$d$维向量。我们将序列$\boldsymbol{X}$视为$\mathbb{R}^{n\times d}$矩阵来处理。Enc包含两个组件:

- An attention mechanism ATTN that takes in the sequence $\boldsymbol{X}$ and returns sequence $\left(\pmb{a}_ {1},...,\pmb{a}_{n}\right)$ of the same length and dimensionality; and 2. A two layer fully connected network $O$ that takes in a vector in $\mathbb{R}^{d}$ and returns a vector in $\mathbb{R}^{d}$ .

- 一种注意力机制 (ATTN),接收序列 $\boldsymbol{X}$ 并返回相同长度和维度的序列 $\left(\pmb{a}_ {1},...,\pmb{a}_{n}\right)$;

- 一个双层全连接网络 $O$,接收 $\mathbb{R}^{d}$ 中的向量并返回 $\mathbb{R}^{d}$ 中的向量。

Then $i$ -th output vector of $\operatorname{Enc}(X)$ is computed as follows:

然后 $\operatorname{Enc}(X)$ 的第 $i$ 个输出向量计算如下:

$$

z_{i}=O(\pmb{a}_ {i})+\pmb{a}_ {i}\qquad\mathrm{where}\qquad\pmb{a}_ {i}=\mathrm{ATTN}(\pmb{X})_ {i}+\pmb{x}_{i}

$$

$$

z_{i}=O(\pmb{a}_ {i})+\pmb{a}_ {i}\qquad\mathrm{where}\qquad\pmb{a}_ {i}=\mathrm{ATTN}(\pmb{X})_ {i}+\pmb{x}_{i}

$$

Now it remains to define ATTN and $O$ which we do next.

现在需要定义 ATTN 和 $O$,我们接下来进行这一步骤。

As described in Sec. 2, an attention mechanism is parameterized by three functions: $Q,K,V:$ $\mathbb{R}^{d}\rightarrow\mathbb{R}^{m}$ . In this paper, we assume that they are simply matrix products: $Q({\pmb x})={\pmb x}W_{Q}$ , $K({\pmb x})=$ ${\pmb x}W_{K}$ , and $V({\pmb x})={\pmb x}W_{V}$ , where $W_{Q},W_{K},W_{V}\in\mathbb{R}^{d\times m}$ and $W_{V}\in\mathbb{R}^{d\times d}$ . In reality a multiheaded attention is used, i.e. we have not only one, but $H$ -sets of Query/Key/Value weight matrices, $W_{Q}^{h},W_{V}^{h},W_{K}^{h}$ for $h=1,...,H$ . Thus, for a directed graph $D$ over $[n]$ , the $i^{\mathrm{th}}$ output vector of the generalized attention mechanism would be

如第2节所述,注意力机制由三个函数参数化:$Q,K,V:$ $\mathbb{R}^{d}\rightarrow\mathbb{R}^{m}$。本文假设它们仅为矩阵乘积:$Q({\pmb x})={\pmb x}W_{Q}$,$K({\pmb x})=$ ${\pmb x}W_{K}$,以及$V({\pmb x})={\pmb x}W_{V}$,其中$W_{Q},W_{K},W_{V}\in\mathbb{R}^{d\times m}$且$W_{V}\in\mathbb{R}^{d\times d}$。实际采用多头注意力机制,即拥有$H$组查询/键/值权重矩阵$W_{Q}^{h},W_{V}^{h},W_{K}^{h}$($h=1,...,H$)。因此,对于$[n]$上的有向图$D$,广义注意力机制的第$i^{\mathrm{th}}$个输出向量为

$$

\operatorname{ATTN}_ {D}(X)_ {i}=\sum_{h=1}^{H}\sigma\left((\pmb{x}_ {i}W_{Q}^{h})(\pmb{X}_ {N(i)}W_{K}^{h})^{T}\right)\cdot(\pmb{X}_ {N(i)}W_{V}^{h})

$$

$$

\operatorname{ATTN}_ {D}(X)_ {i}=\sum_{h=1}^{H}\sigma\left((\pmb{x}_ {i}W_{Q}^{h})(\pmb{X}_ {N(i)}W_{K}^{h})^{T}\right)\cdot(\pmb{X}_ {N(i)}W_{V}^{h})

$$

where $N(i)$ denote the out-neighbors set of node $i$ in $D$ . In other words, the set of arcs (directed edges) in $D$ represents the set of inner products that our attention mechanism will consider. Also recall that $\sigma$ is a scoring function such as softmax or hardmax.

其中 $N(i)$ 表示节点 $i$ 在有向图 $D$ 中的出边邻居集合。换句话说,$D$ 中的弧集(有向边)代表注意力机制将考虑的内积集合。同时请注意 $\sigma$ 是评分函数(如 softmax 或 hardmax)。

Lastly, we define the output fully connected network as follows:

最后,我们将输出全连接网络定义如下:

$$

O(\pmb{a}_ {i})=\mathrm{ReLU}\left(\pmb{a}_ {i}W_{1}+b_{1}\right)W_{2}\cdot+b_{2}

$$

$$

O(\pmb{a}_ {i})=\mathrm{ReLU}\left(\pmb{a}_ {i}W_{1}+b_{1}\right)W_{2}\cdot+b_{2}

$$

Here $W_{1}\in\mathbb{R}^{d\times q},W_{2}\in\mathbb{R}^{q\times d},b_{1}\in\mathbb{R}^{p}$ , and $b_{2}\in\mathbb{R}^{d}$ are parameters of output network $O$ .

这里 $W_{1}\in\mathbb{R}^{d\times q},W_{2}\in\mathbb{R}^{q\times d},b_{1}\in\mathbb{R}^{p}$ 和 $b_{2}\in\mathbb{R}^{d}$ 是输出网络 $O$ 的参数。

Additional Notation We introduce a few pieces of additional notation that will be useful. Let $[a,b)_ {\delta}={a,a+\delta,\ldots,a+\lfloor\frac{b-a}{\delta}\rfloor\cdot\delta}$ . Therefore, $[0,1)_{\delta}={0,\delta,2\delta,\dots,(1-\delta)}$ . We use $\mathbf{1}[\mathcal{E}]$ to denote the indicator variable; it is $1$ if the event $\mathcal{E}$ occurs and 0 otherwise.

附加符号

我们引入一些额外的符号以便后续使用。设 $[a,b)_ {\delta}={a,a+\delta,\ldots,a+\lfloor\frac{b-a}{\delta}\rfloor\cdot\delta}$ ,因此 $[0,1)_{\delta}={0,\delta,2\delta,\dots,(1-\delta)}$ 。我们用 $\mathbf{1}[\mathcal{E}]$ 表示指示变量:若事件 $\mathcal{E}$ 发生则为 $1$ ,否则为 $0$ 。

A.2 Proof

A.2 证明

In this section, we will present the full proof of theorem 1. The proof will contain three parts. The first and the third part will largely follow standard techniques. The main innovation lies is in the second part.

在本节中,我们将完整证明定理1。该证明包含三部分:第一和第三部分主要沿用标准技术,核心创新点在于第二部分。

A.2.1 ![image.png]()

A.2.1 用分段常数函数近似 ${\mathcal{F}}_{C D}$

First, we consider a suitable partition of the region $(0,1)$ into a grid of granularity $\delta$ , which we denote by $G_{\delta}$ . We do this using Lemma 8 from Yun et al. [104], which we restate for completeness:

首先,我们考虑将区域 $(0,1)$ 划分为粒度 $\delta$ 的网格 $G_{\delta}$。为此,我们使用了 Yun 等人 [104] 中的引理 8,为完整起见,我们在此重述该引理:

Lemma 1 (Lemma 8 [104]). For any given $\underline{{f}}\in\mathcal{F}_ {C D}$ and $1\leq p\leq\infty$ , there exists a $\delta>0$ such that there exists a piece-wise constant function $f$ with $d_{p}(f,\bar{f})\leq\frac{\epsilon}{3}$ . Concretely, $\bar{f}$ is defined as

引理1 (引理8 [104])。对于任意给定的 $\underline{{f}}\in\mathcal{F}_ {C D}$ 和 $1\leq p\leq\infty$,存在 $\delta>0$ 使得存在一个分段常数函数 $f$ 满足 $d_{p}(f,\bar{f})\leq\frac{\epsilon}{3}$。具体而言,$\bar{f}$ 定义为

$$

{\bar{f}}(X)=\sum_{P\in\mathbb{G}_ {\delta}}{f}(P)\cdot{\mathbf{1}}\left[|\mathrm{ReLU}(X-P)|_{\infty}\leq\delta\right]

$$

$$

{\bar{f}}(X)=\sum_{P\in\mathbb{G}_ {\delta}}{f}(P)\cdot{\mathbf{1}}\left[|\mathrm{ReLU}(X-P)|_{\infty}\leq\delta\right]

$$

Since transformers can learn a positional embedding $E$ , without any loss of generality, we can consider the translated function. In particular, define

由于Transformer可以学习位置嵌入$E$,不失一般性,我们可以考虑平移后的函数。具体而言,定义

$$

E=\left[\begin{array}{c c c c c}{{0}}&{{0}}&{{0}}&{{...}}&{{0}}\ {{\delta^{-d}}}&{{\delta^{-d}}}&{{\delta^{-d}}}&{{...}}&{{\delta^{-d}}}\ {{\delta^{-2d}}}&{{\delta^{-2d}}}&{{\delta^{-2d}}}&{{...}}&{{\delta^{-2d}}}\ {{\vdots}}&{{}}&{{}}&{{}}&{{}}\ {{\delta^{-(n-1)d}}}&{{\delta^{-(n-1)d}}}&{{\delta^{-(n-1)d}}}&{{...}}&{{\delta^{-(n-1)d}}}\end{array}\right]

$$

$$

E=\left[\begin{array}{c c c c c}{{0}}&{{0}}&{{0}}&{{...}}&{{0}}\ {{\delta^{-d}}}&{{\delta^{-d}}}&{{\delta^{-d}}}&{{...}}&{{\delta^{-d}}}\ {{\delta^{-2d}}}&{{\delta^{-2d}}}&{{\delta^{-2d}}}&{{...}}&{{\delta^{-2d}}}\ {{\vdots}}&{{}}&{{}}&{{}}&{{}}\ {{\delta^{-(n-1)d}}}&{{\delta^{-(n-1)d}}}&{{\delta^{-(n-1)d}}}&{{...}}&{{\delta^{-(n-1)d}}}\end{array}\right]

$$

We will try to approximate $g(X)=f(X-E)$ where $g$ is defined on the domain $[0,1]^{d}\times[\delta^{-d},\delta^{-d}+$ $1]^{d}\times\cdot\cdot\cdot\times[\delta^{-(n-1)d},\delta^{-(n-1)d}+1]^{d}$ . To do so, we will apply a suitable modification of Lemma 1, which will consider the disc ret i zed grid

我们将尝试近似 $g(X)=f(X-E)$,其中 $g$ 定义在域 $[0,1]^{d}\times[\delta^{-d},\delta^{-d}+$ $1]^{d}\times\cdot\cdot\cdot\times[\delta^{-(n-1)d},\delta^{-(n-1)d}+1]^{d}$ 上。为此,我们将对引理1进行适当修改,考虑离散化网格。

$$

\mathbf{G}_ {\delta}^{E}:=[0,1]_ {\delta}^{d}\times[\delta^{-d},\delta^{-d}+1]_ {\delta}^{d}\times\cdots\times[\delta^{-(n-1)d},\delta^{-(n-1)d}+1]_{\delta}^{d}.

$$

$$

\mathbf{G}_ {\delta}^{E}:=[0,1]_ {\delta}^{d}\times[\delta^{-d},\delta^{-d}+1]_ {\delta}^{d}\times\cdots\times[\delta^{-(n-1)d},\delta^{-(n-1)d}+1]_{\delta}^{d}.

$$

Therefore, it suffices to approximate a function $\bar{f}:{\bf G}_{\delta}^{E}\rightarrow\mathbb{R}^{n\times d}$ defined as

因此,只需近似一个函数 $\bar{f}:{\bf G}_{\delta}^{E}\rightarrow\mathbb{R}^{n\times d}$,其定义为

$$

\bar{f}(X)=\sum_{P\in\mathbf{G}_ {\delta}^{E}}f(P-E)\cdot\mathbf{1}\left[|\mathrm{ReLU}(X-P)|_{\infty}\leq\delta\right].

$$

$$

\bar{f}(X)=\sum_{P\in\mathbf{G}_ {\delta}^{E}}f(P-E)\cdot\mathbf{1}\left[|\mathrm{ReLU}(X-P)|_{\infty}\leq\delta\right].

$$

A.2.2 Contextual Mappings and Sparse Attention Mechanisms

A.2.2 上下文映射与稀疏注意力机制

Throughout this section, we will assume that we are given a function that has an extra global token at index 0 and all vectors have an extra dimension appended to them. The latter assumption is without loss of generality as we can use the Feed-Forward Network to append sparse dimensions. In particular, we will associate $X\in\mathbb{R}^{(n+1)\times(d+1)}$ where we write $X=(x_{0},x_{1},\ldots,x_{n})$ . Although our function is only defined for $\mathbf{G}_{\delta}^{E}\subset\mathbb{R}^{n\times d}$ , we can amend the function in a natural way by making it ignore the first column. To avoid excessive clutter, we will assume that the function value is evaluated on the last $n$ columns.

在本节中,我们将假设给定一个在索引0处具有额外全局token的函数,且所有向量都附加了一个额外维度。后一个假设不失一般性,因为我们可以使用前馈网络 (Feed-Forward Network) 来附加稀疏维度。具体而言,我们将关联 $X\in\mathbb{R}^{(n+1)\times(d+1)}$ ,其中记 $X=(x_{0},x_{1},\ldots,x_{n})$ 。尽管我们的函数仅定义于 $\mathbf{G}_{\delta}^{E}\subset\mathbb{R}^{n\times d}$ ,但可以通过使其忽略第一列来自然修正该函数。为避免过度混乱,我们假设函数值是在最后 $n$ 列上计算的。

The main idea in this section is the use of contextual mapping to enable Transformers to compute any disc ret i zed function. A contextual mapping is an unique encoding of each tuple $(X,x_{i})$ where $X\in\mathbf{G}_ {\delta}^{E}$ , and each column $x_{i}\in[\delta^{-(i-1)\bar{d}},\delta^{-\bar{(}i-1)d}+1)\bar{^{d}}\qquad$ for all $i\in[n]$ . We restate the definition adapted to our setting below

本节的核心思想是利用上下文映射(contextual mapping)使Transformer能够计算任意离散化函数。上下文映射是对每个元组$(X,x_{i})$的唯一编码,其中$X\in\mathbf{G}_ {\delta}^{E}$,且对于所有$i\in[n]$,每列$x_{i}\in[\delta^{-(i-1)\bar{d}},\delta^{-\bar{(}i-1)d}+1)\bar{^{d}}\qquad$。我们在下文中重新定义了适用于本场景的定义

Definition 2 (Defn 3.1 [104]). (Contextual Mapping) $A$ contextual mapping is a function mapping $q:{\bf G}_{\delta}^{E}\rightarrow\mathbb{R}^{n}$ if it satisfies the following:

定义2 (定义3.1 [104]). (上下文映射) 上下文映射是一个函数映射 $q:{\bf G}_{\delta}^{E}\rightarrow\mathbb{R}^{n}$,若其满足以下条件:

The key technical novelty of the proof is computing a contextual mapping using only the sparse attention mechanism. We create a “selective shift” operator which only shifts entries of a vector that lie in a certain range. We will use this shift operator strategically to ensure that we attain a contextual mapping at the end of the process. The lemma below, which is based on parts of the proof of Lemma 6 of [104], states that we can implement a suitable “selective” shift operator using a sparse attention mechanism.

该证明的关键技术创新在于仅通过稀疏注意力机制计算上下文映射。我们设计了一种"选择性偏移"算子,它仅对向量中处于特定范围内的元素进行位移。通过策略性运用该偏移算子,确保最终获得上下文映射。以下引理基于[104]中引理6的部分证明,表明我们可以利用稀疏注意力机制实现合适的"选择性"偏移算子。

Lemma 2. Given a function $\psi:\mathbb{R}^{(n+1)\times(d+1)}\times\mathbb{R}^{2}\to\mathbb{R}^{(n+1)\times1}$ and a vector $u\in\mathbb{R}^{d+1}$ and a sparse attention mechanism based on the directed graph $D$ , we can implement a selective shift operator that receives as input a matrix $X\in\mathbb{R}^{(n+1)\times(\bar{d}+\bar{1})}$ and outputs $X+\rho\cdot\psi_{u}(X,b_{1},b_{2})$ where

引理 2. 给定函数 $\psi:\mathbb{R}^{(n+1)\times(d+1)}\times\mathbb{R}^{2}\to\mathbb{R}^{(n+1)\times1}$ 和向量 $u\in\mathbb{R}^{d+1}$ 以及基于有向图 $D$ 的稀疏注意力机制,我们可以实现一个选择性位移算子。该算子接收输入矩阵 $X\in\mathbb{R}^{(n+1)\times(\bar{d}+\bar{1})}$ 并输出 $X+\rho\cdot\psi_{u}(X,b_{1},b_{2})$ 其中

$$

\psi_{u}(Z;b_{1},b_{2})_ {i} =\begin{cases}\left(\max_{j\in N(i)} u^{T}Z_{j} - \min_{j\in N(i)} u^{T}Z_{j}\right)e_{1} & \text{if } b_{1} \leq u^{T}Z_{j} \leq b_{2} \

0 & \text{else.}\end{cases}

$$

$$

\psi_{u}(Z;b_{1},b_{2})_ {i} =\begin{cases}\left(\max_{j\in N(i)} u^{T}Z_{j} - \min_{j\in N(i)} u^{T}Z_{j}\right)e_{1} & \text{if } b_{1} \leq u^{T}Z_{j} \leq b_{2} \

0 & \text{else.}\end{cases}

$$

Note that $e_{1}\in R^{d+1}$ denotes $(1,0,\ldots,0)$ .

注意,$e_{1}\in R^{d+1}$ 表示 $(1,0,\ldots,0)$。

Proof. Consider the function , which can be implemented by a sparse attention mechanism :

证明。考虑函数,它可以通过稀疏注意力机制实现:

$$

\boldsymbol{\tilde{\psi}}(\boldsymbol{X},\boldsymbol{b})_ {i}=\sigma_{H}\Big[(\boldsymbol{u}^{T}\cdot\boldsymbol{X}_ {i})^{T}\cdot(\boldsymbol{u}^{T}\boldsymbol{X}_ {N(i)}-\boldsymbol{b}\boldsymbol{1}_ {N(i)}^{T})e^{(1)}(\boldsymbol{u}^{T}\boldsymbol{X}_{N(i)})\Big]

$$

$$

\boldsymbol{\tilde{\psi}}(\boldsymbol{X},\boldsymbol{b})_ {i}=\sigma_{H}\Big[(\boldsymbol{u}^{T}\cdot\boldsymbol{X}_ {i})^{T}\cdot(\boldsymbol{u}^{T}\boldsymbol{X}_ {N(i)}-\boldsymbol{b}\boldsymbol{1}_ {N(i)}^{T})e^{(1)}(\boldsymbol{u}^{T}\boldsymbol{X}_{N(i)})\Big]

$$

This is because the Key, Query and Value functions are simply affine transformations of $X$ .

这是因为Key、Query和Value函数只是$X$的仿射变换。

Given any graph $D$ , the above function will evaluate to the following:

给定任意图 $D$,上述函数将评估为以下结果:

$$

\tilde{\psi}(Z;b)_ {i} = \begin{cases}\left(\max\limits_{j\in N(i)} u^{T}Z_{j}\right)e_{1} & \text{if } u^{T}Z_{j} > b \ \left(\min\limits_{j\in N(i)} u^{T}Z_{j}\right)e_{1} & \text{if } u^{T}Z_{j} < b\end{cases}

$$

$$

\tilde{\psi}(Z;b)_ {i} = \begin{cases}\left(\max\limits_{j\in N(i)} u^{T}Z_{j}\right)e_{1} & \text{if } u^{T}Z_{j} > b \ \left(\min\limits_{j\in N(i)} u^{T}Z_{j}\right)e_{1} & \text{if } u^{T}Z_{j} < b\end{cases}

$$

Therefore we can say that $\tilde{\psi}(Z;b_{Q})-\tilde{\psi}(Z;b_{Q^{\prime}})$ satisfies

因此我们可以说 $\tilde{\psi}(Z;b_{Q})-\tilde{\psi}(Z;b_{Q^{\prime}})$ 满足

$$

\psi(Z;b_{1},b_{2})_ {i} = \begin{cases}\left(\max_{j\in N(i)} u^{T}Z_{j} - \min_{j\in N(i)} u^{T}Z_{j}\right)e_{1} & \text{if } b_{1} \leq u^{T}Z_{j} \leq b_{2} \

0 & \text{else}\end{cases}

$$

$$

\psi(Z;b_{1},b_{2})_ {i} = \begin{cases}\left(\max_{j\in N(i)} u^{T}Z_{j} - \min_{j\in N(i)} u^{T}Z_{j}\right)e_{1} & \text{if } b_{1} \leq u^{T}Z_{j} \leq b_{2} \

0 & \text{else}\end{cases}

$$

The following lemma, which is the heart of the proof, uses the above selective shift operators to construct contextual mappings.

以下引理是证明的核心,它利用上述选择性位移算子来构建上下文映射。

Lemma 3. There exists a function $g_{c}:\mathbb{R}^{(n+1)\times(d+1)}\rightarrow\mathbb{R}^{(n+1)}$ and a unique vector $u$ , such that for all $P\in{\bf G}_ {\delta}^{E}~g_{c}(P):=\langle u,g(P)\rangle$ satisfies the property that $g_{c}$ is a contextual mapping of $P$ . Furthermore, $g_{c}\in\mathcal{T}_{D}^{2,1,1}$ using a composition of sparse attention layers as long as $D$ contains the star graph.

引理3. 存在函数 $g_{c}:\mathbb{R}^{(n+1)\times(d+1)}\rightarrow\mathbb{R}^{(n+1)}$ 和唯一向量 $u$ ,使得对所有 $P\in{\bf G}_ {\delta}^{E}~g_{c}(P):=\langle u,g(P)\rangle$ 满足 $g_{c}$ 是 $P$ 的上下文映射。此外,只要 $D$ 包含星图,通过稀疏注意力层的组合可使 $g_{c}\in\mathcal{T}_{D}^{2,1,1}$ 。

Proof. Define $u\in\mathbb{R}^{d+1}=[1,\delta^{-1},\delta^{-2},\ldots,\delta^{-d+1},\delta^{-n d}]$ and let $X_{0}=(0,\dots,0,1)$ . We will assume that $\langle x_{i},x_{0}\rangle=0$ , by assuming that all the columns $x_{1},\ldots,x_{n}$ are appended by 0.

证明。定义 $u\in\mathbb{R}^{d+1}=[1,\delta^{-1},\delta^{-2},\ldots,\delta^{-d+1},\delta^{-n d}]$,并设 $X_{0}=(0,\dots,0,1)$。我们将假设 $\langle x_{i},x_{0}\rangle=0$,即假设所有列向量 $x_{1},\ldots,x_{n}$ 末尾都补零。

To successfully encode the entire context in each token, we will interleave the shift operator to target the original columns $1,\ldots,n$ and to target the global column 0. After a column $i$ is targeted, its inner product with $u$ will encode the entire context of the first $i$ columns. Next, we will shift the global token to take this context into account. This can be subsequently used by the remaining columns.

为了在每个token中成功编码整个上下文,我们将交错使用移位算子来分别指向原始列$1,\ldots,n$和全局列0。当列$i$被指向后,其与$u$的内积将编码前$i$列的全部上下文。随后,我们会移动全局token以纳入该上下文信息,供后续列使用。

For $i\in{0,1,\ldots,n}$ , we will use $l_{i}$ to denote the inner products $\langle u,x_{i}\rangle$ at the beginning. For $f_{i}=\langle u,x_{i}\rangle$ after the $i^{t h}$ column has changed for $i\in{1,\ldots,n}$ and we will use $f_{0}^{k}$ to denote $\langle u,x_{0}\rangle$ after the $k^{t h}$ phase. We need to distinguish the global token further as it’s inner product will change in each phase. Initially, given $X\in\mathbf{G}_{\delta}^{\mathbf{\tilde{E}}}$ , the following are true:

对于 $i\in{0,1,\ldots,n}$,我们将使用 $l_{i}$ 表示初始内积 $\langle u,x_{i}\rangle$。当 $i\in{1,\ldots,n}$ 时,$f_{i}=\langle u,x_{i}\rangle$ 表示第 $i^{t h}$ 列变更后的值,而 $f_{0}^{k}$ 表示第 $k^{t h}$ 阶段后的 $\langle u,x_{0}\rangle$。由于全局token (global token) 的内积会在每个阶段变化,需要进一步区分它。初始时,给定 $X\in\mathbf{G}_{\delta}^{\mathbf{\tilde{E}}}$,以下条件成立:

$$

\begin{cases}\delta^{-(i-1)d} \leq \langle u, X_{i} \rangle \leq \delta^{-i d} - \delta & \text{for all } i \in [n] \

\delta^{-(n+1)d} = \langle u, X_{0} \rangle\end{cases}

$$

$$

\begin{cases}\delta^{-(i-1)d} \leq \langle u, X_{i} \rangle \leq \delta^{-i d} - \delta & \text{for all } i \in [n] \

\delta^{-(n+1)d} = \langle u, X_{0} \rangle\end{cases}

$$

Note that all $l_{i}$ ordered in distinct buckets $l_{1}<l_{2}<\cdots<l_{n}<l_{0}.$ .

请注意,所有 $l_{i}$ 被分配到不同的桶中,且满足 $l_{1}<l_{2}<\cdots<l_{n}<l_{0}$。

We do this in phases indexed from $i\in{1,\ldots,n}$ . Each phase consists of two distinct parts: The low shift operation: These operation will be of the form

我们分阶段进行,阶段索引为 $i\in{1,\ldots,n}$。每个阶段包含两个不同的部分:低位移动操作:这些操作的形式为

$$

X\leftarrow X+\delta^{-d}\psi\left(X,v-\delta/2,v+\delta/2\right)

$$

$$

X\leftarrow X+\delta^{-d}\psi\left(X,v-\delta/2,v+\delta/2\right)

$$

for values $v\in[\delta^{-i d}),\delta^{-(i+1)d})_ {\delta}$ . The range is chosen so that only $l_{i}$ will be in the range and no other $\operatorname{\it{l}}_ {j}\operatorname{\it{j}}\ne i$ is in the range. This will shift exactly the $i^{t h}$ column $x_{i}$ so that the new inner product $f_{i}=\langle\bar{u},x_{i}\rangle$ is substantially larger than $l_{i}$ . Furthermore, no other column of $X$ will be affected. The high shift operation: These operation will be of the form

对于值 $v\in[\delta^{-i d}),\delta^{-(i+1)d})_ {\delta}$,选择该范围是为了确保只有 $l_{i}$ 会落在范围内,而其他 $\operatorname{\it{l}}_ {j}\operatorname{\it{j}}\ne i$ 不会。这将精确地移动第 $i^{t h}$ 列 $x_{i}$,使得新的内积 $f_{i}=\langle\bar{u},x_{i}\rangle$ 显著大于 $l_{i}$。此外,矩阵 $X$ 的其他列不会受到影响。高位移操作的形式如下:

$$

X\leftarrow X+\delta^{-n d}\cdot\psi\left(X,v-\delta/2,v+\delta/2\right)

$$

$$

X\leftarrow X+\delta^{-n d}\cdot\psi\left(X,v-\delta/2,v+\delta/2\right)

$$

for values $v\in[S_{i},T_{i})_ {\delta}$ . The range $[S_{i},T_{i})_ {\delta}$ is chosen to only affect the column $x_{0}$ (corresponding to the global token) and no other column. In particular, this will shift the global token by a further $\delta^{-n d}$ . Let $\tilde{f}_ {0}^{i}$ denote the value of $\tilde{f}_ {0}^{i}=\langle u,x_{0}\rangle$ at the end of $i^{t h}$ high operation.

对于值 $v\in[S_{i},T_{i})_ {\delta}$。选择范围 $[S_{i},T_{i})_ {\delta}$ 仅影响列 $x_{0}$(对应全局 token)而不影响其他列。特别地,这将使全局 token 进一步偏移 $\delta^{-n d}$。设 $\tilde{f}_ {0}^{i}$ 表示在第 $i^{t h}$ 次高位操作结束时 $\tilde{f}_ {0}^{i}=\langle u,x_{0}\rangle$ 的值。

Each phase interleaves a shift operation to column $i$ and updates the global token. After each phase, the updated $i^{t h}$ column $f_{i}=\bar{\langle}u,x_{i}\rangle$ will contain a unique token encoding the values of all the $l_{1},\ldots,l_{i}$ . After the high update, $\tilde{f}_ {0}^{i}=\langle u,x_{0}\rangle$ will contain information about the first $i$ tokens.

每个阶段交替执行对列 $i$ 的移位操作并更新全局 token。每阶段完成后,更新后的 $i^{t h}$ 列 $f_{i}=\bar{\langle}u,x_{i}\rangle$ 将包含一个唯一 token,该 token 编码了所有 $l_{1},\ldots,l_{i}$ 的值。在高位更新后,$\tilde{f}_ {0}^{i}=\langle u,x_{0}\rangle$ 将包含前 $i$ 个 token 的信息。

Finally, we define the following constants for all $k\in{0,1,\ldots,n}$ .

最后,我们为所有 $k\in{0,1,\ldots,n}$ 定义以下常量。

$$

\begin{array}{c}{{T_{k}=(\delta^{-(n+1)d}+1)^{k}\cdot\delta^{-n d}-\displaystyle\sum_{t=2}^{k}(\delta^{-(n+1)d}+1)^{k-t}(2\delta^{-n d-d}+\delta^{-n d}+1)\delta^{-t d}}}\ {{-\displaystyle(\delta^{-(n+1)d}+1)^{k-1}(\delta^{-n d-d}+\delta^{-n d})\delta^{-d}-\delta^{-(k+1)d}}}\end{array}

$$

$$

\begin{array}{c}{{T_{k}=(\delta^{-(n+1)d}+1)^{k}\cdot\delta^{-n d}-\displaystyle\sum_{t=2}^{k}(\delta^{-(n+1)d}+1)^{k-t}(2\delta^{-n d-d}+\delta^{-n d}+1)\delta^{-t d}}}\ {{-\displaystyle(\delta^{-(n+1)d}+1)^{k-1}(\delta^{-n d-d}+\delta^{-n d})\delta^{-d}-\delta^{-(k+1)d}}}\end{array}

$$

$$

\begin{array}{c}{{S_{k}=(\delta^{-(n+1)d}+1)^{k}\cdot\delta^{-n d}-\displaystyle\sum_{t=2}^{k}(\delta^{-(n+1)d}+1)^{k-t}(2\delta^{-n d-d}+\delta^{-n d}+1)\delta^{-(t-1)d}}}\ {{-\displaystyle(\delta^{-(n+1)d}+1)^{k-1}(\delta^{-n d-d}+\delta^{-n d})-\delta^{-k d}}}\end{array}

$$

$$

\begin{array}{c}{{S_{k}=(\delta^{-(n+1)d}+1)^{k}\cdot\delta^{-n d}-\displaystyle\sum_{t=2}^{k}(\delta^{-(n+1)d}+1)^{k-t}(2\delta^{-n d-d}+\delta^{-n d}+1)\delta^{-(t-1)d}}}\ {{-\displaystyle(\delta^{-(n+1)d}+1)^{k-1}(\delta^{-n d-d}+\delta^{-n d})-\delta^{-k d}}}\end{array}

$$

After each $k$ phases, we will maintain the following invariants:

每经过 $k$ 个阶段后,我们将维持以下不变式:

- $S_{k}<\tilde{f}_ {0}^{k}<T_{k}$ for all $k\in{0,1,\ldots,n}$ .

- 对于所有 $k\in{0,1,\ldots,n}$,满足 $S_{k}<\tilde{f}_ {0}^{k}<T_{k}$。

- $T_{k-1}\leq f_{k}<S_{k}$

- $T_{k-1}\leq f_{k}<S_{k}$

- The order of the inner products after $k^{t h}$ phase is

- 第 $k^{t h}$ 阶段后的内积顺序为

$$

l_{k+1}<l_{k+2}\dotsm<l_{n}<f_{1}<f_{2}<\dotsm<f_{k}<\tilde{f}_{0}^{k}.

$$

$$

l_{k+1}<l_{k+2}\dotsm<l_{n}<f_{1}<f_{2}<\dotsm<f_{k}<\tilde{f}_{0}^{k}.

$$

Inductive Step First, in the low shift operation is performed in the range $[\delta^{-(k-1)d},\delta^{-k d})_ {\delta}$ Due to the invariant, we know that there exists only one column $x_{k}$ that is affected by this shift. In particular, for column $k$ , we will have $\begin{array}{r}{\operatorname*{max}_ {j\in N(k)}\left\langle u,x_{j}\right\rangle=\left\langle u,x_{0}\right\rangle=\tilde{f}_ {0}^{k-1}}\end{array}$ . The minimum is $l_{k}$ . Thus the update will be $f_{k}=\delta^{-d}(\tilde{f}_ {0}^{k-1}-l_{k})+l_{k}$ . Observe that for small enough $\delta$ , $f_{k}\geq\tilde{f}_{0}^{k-1}$ . Hence the total ordering, after this operation is

归纳步骤

首先,在低位移操作中,范围是 $[\delta^{-(k-1)d},\delta^{-k d})_ {\delta}$。根据不变性,我们知道仅有一列 $x_{k}$ 会受到该位移的影响。具体来说,对于列 $k$,我们有 $\begin{array}{r}{\operatorname*{max}_ {j\in N(k)}\left\langle u,x_{j}\right\rangle=\left\langle u,x_{0}\right\rangle=\tilde{f}_ {0}^{k-1}}\end{array}$。最小值是 $l_{k}$。因此更新后的值为 $f_{k}=\delta^{-d}(\tilde{f}_ {0}^{k-1}-l_{k})+l_{k}$。观察到当 $\delta$ 足够小时,$f_{k}\geq\tilde{f}_{0}^{k-1}$。因此,在此操作后的总排序为

$$

l_{k}+1<l_{k+2}\cdot\cdot\cdot<l_{n}<f_{1}<f_{2}<\cdot\cdot\cdot<\tilde{f}_ {0}^{k-1}<f_{k}

$$

$$

l_{k}+1<l_{k+2}\cdot\cdot\cdot<l_{n}<f_{1}<f_{2}<\cdot\cdot\cdot<\tilde{f}_ {0}^{k-1}<f_{k}

$$

Now when we operate a higher selective shift operator in the range $[S_{k-1},T_{k-1})_ {\delta}$ . Since only global token’s inner product f˜ 0k is in this range, it will be the only column affected by the shift operator. The global token operates over the entire range, we know from Eq. (2) that, $f_{k}=\operatorname*{max}_ {i\in[n]}\left\langle u,x_{i}\right\rangle$ and $\begin{array}{r}{l_{k+1}=\operatorname*{min}_ {i\in[n]}\left\langle u,x_{i}\right\rangle}\end{array}$ . The new value $\tilde{f}_ {0}^{k}=\delta^{-n d}$ · $(f_{k}-l_{k+1})+\tilde{f}_{0}^{k-1}$ . Expanding and simplifying we get,