Playing Atari with Deep Reinforcement Learning

Volodymyr Mnih Koray Kavukcuoglu David Silver Alex Graves Ioannis Antonoglou

Daan Wierstra Martin Riedmiller

DeepMind Technologies

{vlad,koray,david,alex.graves,ioannis,daan,martin.riedmiller}@deepmind.com

Abstract

We present the first deep learning model to successfully learn control policies directly from high-dimensional sensory input using reinforcement learning. The model is a convolutional neural network, trained with a variant of Q-learning, whose input is raw pixels and whose output is a value function estimating future rewards. We apply our method to seven Atari 2600 games from the Arcade Learning Environment, with no adjustment of the architecture or learning algorithm. We find that it outperforms all previous approaches on six of the games and surpasses a human expert on three of them.

1 Introduction

Learning to control agents directly from high-dimensional sensory inputs like vision and speech is one of the long-standing challenges of reinforcement learning (RL). Most successful RL applications that operate on these domains have relied on hand-crafted features combined with linear value functions or policy representations. Clearly, the performance of such systems heavily relies on the quality of the feature representation.

Recent advances in deep learning have made it possible to extract high-level features from raw sensory data, leading to breakthroughs in computer vision [11, 22, 16] and speech recognition [6, 7]. These methods utilise a range of neural network architectures, including convolutional networks, multilayer perceptrons, restricted Boltzmann machines and recurrent neural networks, and have exploited both supervised and unsupervised learning. It seems natural to ask whether similar techniques could also be beneficial for RL with sensory data.

However, reinforcement learning presents several challenges from a deep learning perspective. Firstly, most successful deep learning applications to date have required large amounts of hand-labelled training data. RL algorithms, on the other hand, must be able to learn from a scalar reward signal that is frequently sparse, noisy and delayed. The delay between actions and resulting rewards, which can be thousands of time-steps long, seems particularly daunting when compared to the direct association between inputs and targets found in supervised learning. Another issue is that most deep learning algorithms assume the data samples to be independent, while in reinforcement learning one typically encounters sequences of highly correlated states. Furthermore, in RL the data distribution changes as the algorithm learns new behaviours, which can be problematic for deep learning methods that assume a fixed underlying distribution.

This paper demonstrates that a convolutional neural network can overcome these challenges to learn successful control policies from raw video data in complex RL environments. The network is trained with a variant of the Q-learning [26] algorithm, with stochastic gradient descent to update the weights. To alleviate the problems of correlated data and non-stationary distributions, we use an experience replay mechanism [13] which randomly samples previous transitions, and thereby smooths the training distribution over many past behaviors.

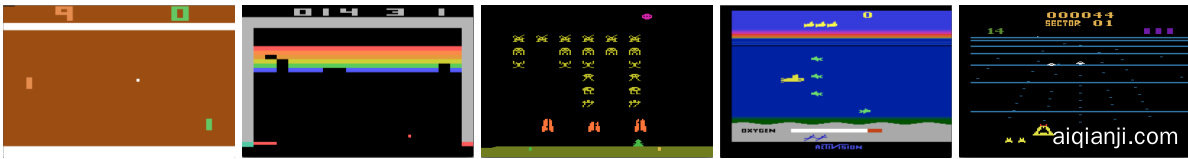

Figure 1: Screenshots from five Atari 2600 games (left to right): Pong, Breakout, Space Invaders, Seaquest, Beam Rider

We apply our approach to a range of Atari 2600 games implemented in The Arcade Learning Environment (ALE) [3]. Atari 2600 is a challenging RL testbed that presents agents with a high-dimensional visual input ($210\times160$ RGB video at $60\,\mathrm{Hz}$) and a diverse and interesting set of tasks that were designed to be difficult for human players. Our goal is to create a single neural network agent that is able to successfully learn to play as many of the games as possible. The network was not provided with any game-specific information or hand-designed visual features, and was not privy to the internal state of the emulator; it learned from nothing but the video input, the reward and terminal signals, and the set of possible actions, just as a human player would. Furthermore, the network architecture and all hyperparameters used for training were kept constant across the games. So far the network has outperformed all previous RL algorithms on six of the seven games we have attempted and surpassed an expert human player on three of them. Figure 1 provides sample screenshots from five of the games used for training.

2 Background

We consider tasks in which an agent interacts with an environment $\mathcal{E}$, in this case the Atari emulator, in a sequence of actions, observations and rewards. At each time-step the agent selects an action $a_{t}$ from the set of legal game actions, $\mathcal{A}=\{1,\ldots,K\}$. The action is passed to the emulator and modifies its internal state and the game score. In general $\mathcal{E}$ may be stochastic. The emulator's internal state is not observed by the agent; instead it observes an image $x_{t}\in\mathbb{R}^{d}$ from the emulator, which is a vector of raw pixel values representing the current screen. In addition it receives a reward $r_{t}$ representing the change in game score. Note that in general the game score may depend on the whole prior sequence of actions and observations; feedback about an action may only be received after many thousands of time-steps have elapsed.

Since the agent only observes images of the current screen, the task is partially observed and many emulator states are perceptually aliased, i.e. it is impossible to fully understand the current situation from only the current screen $x_{t}$. We therefore consider sequences of actions and observations, $s_{t}=x_{1},a_{1},x_{2},\ldots,a_{t-1},x_{t}$, and learn game strategies that depend upon these sequences. All sequences in the emulator are assumed to terminate in a finite number of time-steps. This formalism gives rise to a large but finite Markov decision process (MDP) in which each sequence is a distinct state. As a result, we can apply standard reinforcement learning methods for MDPs, simply by using the complete sequence $s_{t}$ as the state representation at time $t$.

The goal of the agent is to interact with the emulator by selecting actions in a way that maximises future rewards. We make the standard assumption that future rewards are discounted by a factor of $\gamma$ per time-step, and define the future discounted return at time $t$ as $R_{t}=\sum_{t^{\prime}=t}^{T}\gamma^{t^{\prime}-t}r_{t^{\prime}}$, where $T$ is the time-step at which the game terminates. We define the optimal action-value function $Q^{*}(s,a)$ as the maximum expected return achievable by following any strategy, after seeing some sequence $s$ and then taking some action $a$, $Q^{*}(s,a)=\max_{\pi}\mathbb{E}\left[R_{t}\mid s_{t}=s,a_{t}=a,\pi\right]$, where $\pi$ is a policy mapping sequences to actions (or distributions over actions).
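
As a small illustration of the discounted return defined above, the following sketch computes $R_{t}$ for a finite list of rewards. The function name `discounted_return` and the example rewards are illustrative assumptions, not part of the original method.

```python
def discounted_return(rewards, gamma):
    """Compute R_t = sum_{t'=t}^{T} gamma^(t'-t) * r_{t'}, with t at the start of the list."""
    ret = 0.0
    # Work backwards so that each step applies R_t = r_t + gamma * R_{t+1}.
    for reward in reversed(rewards):
        ret = reward + gamma * ret
    return ret


# Hypothetical episode: reward 1 now, nothing next step, reward 2 at termination.
print(discounted_return([1.0, 0.0, 2.0], gamma=0.5))  # 1 + 0.5*0 + 0.25*2 = 1.5
```

The backward recursion is equivalent to the explicit sum and avoids computing powers of $\gamma$.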

The optimal action-value function obeys an important identity known as the Bellman equation. This is based on the following intuition: if the optimal value $Q^{* }(s^{\prime},a^{\prime})$ of the sequence $s^{\prime}$ at the next time-step was known for all possible actions $a^{\prime}$ , then the optimal strategy is to select the action $a^{\prime}$ maximising the expected value of $r+\gamma Q^{* }(s^{\prime},a^{\prime})$ ,

$$
Q^{*}(s,a)=\mathbb{E}_{s^{\prime}\sim\mathcal{E}}\left[r+\gamma\max_{a^{\prime}}Q^{*}(s^{\prime},a^{\prime})\,\middle|\,s,a\right] \tag{1}
$$
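
To make the Bellman identity concrete, the sketch below applies its right-hand side repeatedly as a backup on a tiny tabular MDP until the values stop changing. The two-state MDP, its transition table `TRANSITIONS`, and the function names are hypothetical and chosen only for illustration; the Atari setting is far too large for such a table.

```python
GAMMA = 0.9
ACTIONS = (0, 1)

# Hypothetical MDP: (state, action) -> list of (probability, reward, next_state, terminal).
TRANSITIONS = {
    (0, 0): [(1.0, 0.0, 0, False)],                        # stay in state 0
    (0, 1): [(0.8, 0.0, 1, False), (0.2, 0.0, 0, False)],  # try to advance, may slip
    (1, 0): [(1.0, 0.0, 0, False)],                        # go back to state 0
    (1, 1): [(1.0, 1.0, 1, True)],                         # collect reward 1 and terminate
}


def bellman_backup(q):
    """Return E[r + gamma * max_a' Q(s', a')] for every (s, a), given the table q."""
    new_q = {}
    for (state, action), outcomes in TRANSITIONS.items():
        total = 0.0
        for prob, reward, next_state, terminal in outcomes:
            future = 0.0 if terminal else max(q[(next_state, a)] for a in ACTIONS)
            total += prob * (reward + GAMMA * future)
        new_q[(state, action)] = total
    return new_q


def value_iteration(iterations=200):
    """Start from Q = 0 and apply the backup a fixed number of times."""
    q = {key: 0.0 for key in TRANSITIONS}
    for _ in range(iterations):
        q = bellman_backup(q)
    return q


q_star = value_iteration()
for key in sorted(q_star):
    print(key, round(q_star[key], 4))
```

After enough iterations the table is (numerically) a fixed point of the backup, which is exactly what the Bellman equation states for $Q^{*}$.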

The basic idea behind many reinforcement learning algorithms is to estimate the action-value function by using the Bellman equation as an iterative update, $Q_{i+1}(s,a)=\mathbb{E}\left[r+\gamma\max_{a^{\prime}}Q_{i}(s^{\prime},a^{\prime})\mid s,a\right]$. Such value iteration algorithms converge to the optimal action-value function, $Q_{i}\to Q^{*}$ as $i\to\infty$ [23]. In practice, this basic approach is impractical, because the action-value function is estimated separately for each sequence, without any generalisation. Instead, it is common to use a function approximator to estimate the action-value function, $Q(s,a;\theta)\approx Q^{*}(s,a)$. In the reinforcement learning community this is typically a linear function approximator, but sometimes a non-linear function approximator is used instead, such as a neural network. We refer to a neural network function approximator with weights $\theta$ as a Q-network. A Q-network can be trained by minimising a sequence of loss functions $L_{i}(\theta_{i})$ that changes at each iteration $i$,

$$
L_{i}(\theta_{i})=\mathbb{E}_{s,a\sim\rho(\cdot)}\left[\left(y_{i}-Q(s,a;\theta_{i})\right)^{2}\right], \tag{2}
$$

where $y_ {i}=\mathbb{E}_ {s^{\prime}\sim\mathcal{E}}[r+\gamma\operatorname* {max}_ {a^{\prime}}Q(s^{\prime},a^{\prime};\theta_ {i-1})|s,a]$ is the target for iteration $i$ and $\rho(s,a)$ is a probability distribution over sequences $s$ and actions $a$ that we refer to as the behaviour distribution. The parameters from the previous iteration $\theta_ {i-1}$ are held fixed when optimising the loss function $L_ {i}\left(\theta_ {i}\right)$ . Note that the targets depend on the network weights; this is in contrast with the targets used for supervised learning, which are fixed before learning begins. Differentiating the loss function with respect to the weights we arrive at the following gradient,

$$
\nabla_{\theta_{i}}L_{i}(\theta_{i})=\mathbb{E}_{s,a\sim\rho(\cdot);\,s^{\prime}\sim\mathcal{E}}\left[\left(r+\gamma\max_{a^{\prime}}Q(s^{\prime},a^{\prime};\theta_{i-1})-Q(s,a;\theta_{i})\right)\nabla_{\theta_{i}}Q(s,a;\theta_{i})\right]. \tag{3}
$$

Rather than computing the full expectations in the above gradient, it is often computationally expedient to optimise the loss function by stochastic gradient descent. If the weights are updated after every time-step, and the expectations are replaced by single samples from the behaviour distribution $\rho$ and the emulator $\mathcal{E}$ respectively, then we arrive at the familiar $Q$ -learning algorithm [26].
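
The single-sample update described above can be sketched as follows. For brevity this uses a linear approximator $Q(s,a;\theta)=\theta_{a}^{\top}\phi$ instead of a neural network, so the gradient with respect to $\theta_{a}$ is just the feature vector. The names `q_value` and `q_learning_step`, the feature vectors, and the learning rate are assumptions made for illustration.

```python
def q_value(theta, features, action):
    """Linear action value: dot product of the action's weight row with the features."""
    return sum(w * x for w, x in zip(theta[action], features))


def q_learning_step(theta, features, action, reward, next_features, terminal,
                    gamma=0.99, lr=0.1):
    """Apply one stochastic gradient step on (y - Q(s, a; theta))^2 and return the TD error."""
    if terminal:
        target = reward
    else:
        # The target is computed from the weights before this step and then held fixed.
        best_next = max(q_value(theta, next_features, a) for a in range(len(theta)))
        target = reward + gamma * best_next
    td_error = target - q_value(theta, features, action)
    # Descent on the squared error moves theta[action] along +td_error * features
    # (the constant factor 2 is absorbed into the learning rate).
    for i, x in enumerate(features):
        theta[action][i] += lr * td_error * x
    return td_error


# Hypothetical transition: two features, two actions, terminal reward of 1.
theta = [[0.0, 0.0], [0.0, 0.0]]
error = q_learning_step(theta, [1.0, 0.0], action=1, reward=1.0,
                        next_features=[0.0, 1.0], terminal=True, lr=0.5)
print(error, theta)
```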

Note that this algorithm is model-free: it solves the reinforcement learning task directly using samples from the emulator $\mathcal{E}$, without explicitly constructing an estimate of $\mathcal{E}$. It is also off-policy: it learns about the greedy strategy $a=\arg\max_{a}Q(s,a;\theta)$, while following a behaviour distribution that ensures adequate exploration of the state space. In practice, the behaviour distribution is often selected by an $\epsilon$-greedy strategy that follows the greedy strategy with probability $1-\epsilon$ and selects a random action with probability $\epsilon$.
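
A minimal sketch of $\epsilon$-greedy action selection, assuming the action values for the current state are already available as a list. The function name `epsilon_greedy` and the example values are illustrative.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a uniformly random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    # Greedy action: index of the largest estimated action value.
    return max(range(len(q_values)), key=lambda a: q_values[a])


rng = random.Random(0)
print(epsilon_greedy([0.1, 0.7, 0.3], epsilon=0.0, rng=rng))  # always greedy: action 1
```

With `epsilon=0.0` the choice is purely greedy; with `epsilon=1.0` it is uniformly random over the actions.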

3 Related Work

Perhaps the best-known success story of reinforcement learning is TD-gammon, a backgammon-playing program which learnt entirely by reinforcement learning and self-play, and achieved a superhuman level of play [24]. TD-gammon used a model-free reinforcement learning algorithm similar to Q-learning, and approximated the value function using a multi-layer perceptron with one hidden layer$^{1}$.

However, early attempts to follow up on TD-gammon, including applications of the same method to chess, Go and checkers, were less successful. This led to a widespread belief that the TD-gammon approach was a special case that only worked in backgammon, perhaps because the stochasticity in the dice rolls helps explore the state space and also makes the value function particularly smooth [19].

Furthermore, it was shown that combining model-free reinforcement learning algorithms such as Q-learning with non-linear function approximators [25], or indeed with off-policy learning [1], could cause the Q-network to diverge. Subsequently, the majority of work in reinforcement learning focused on linear function approximators with better convergence guarantees [25].

More recently, there has been a revival of interest in combining deep learning with reinforcement learning. Deep neural networks have been used to estimate the environment $\mathcal{E}$; restricted Boltzmann machines have been used to estimate the value function [21] or the policy [9]. In addition, the divergence issues with Q-learning have been partially addressed by gradient temporal-difference methods. These methods are proven to converge when evaluating a fixed policy with a nonlinear function approximator [14], or when learning a control policy with linear function approximation using a restricted variant of Q-learning [15]. However, these methods have not yet been extended to nonlinear control.

Perhaps the most similar prior work to our own approach is neural fitted Q-learning (NFQ) [20]. NFQ optimises the sequence of loss functions in Equation 2, using the RPROP algorithm to update the parameters of the Q-network. However, it uses a batch update that has a computational cost per iteration that is proportional to the size of the data set, whereas we consider stochastic gradient updates that have a low constant cost per iteration and scale to large data-sets. NFQ has also been successfully applied to simple real-world control tasks using purely visual input, by first using deep autoencoders to learn a low-dimensional representation of the task, and then applying NFQ to this representation [12]. In contrast, our approach applies reinforcement learning end-to-end, directly from the visual inputs; as a result it may learn features that are directly relevant to discriminating action-values. Q-learning has also previously been combined with experience replay and a simple neural network [13], but again starting with a low-dimensional state rather than raw visual inputs.

The use of the Atari 2600 emulator as a reinforcement learning platform was introduced by [3], who applied standard reinforcement learning algorithms with linear function approximation and generic visual features. Subsequently, results were improved by using a larger number of features, and using tug-of-war hashing to randomly project the features into a lower-dimensional space [2]. The HyperNEAT evolutionary architecture [8] has also been applied to the Atari platform, where it was used to evolve (separately, for each distinct game) a neural network representing a strategy for that game. When trained repeatedly against deterministic sequences using the emulator’s reset facility, these strategies were able to exploit design flaws in several Atari games.

4 Deep Reinforcement Learning

Recent breakthroughs in computer vision and speech recognition have relied on efficiently training deep neural networks on very large training sets. The most successful approaches are trained directly from the raw inputs, using lightweight updates based on stochastic gradient descent. By feeding sufficient data into deep neural networks, it is often possible to learn better representations than handcrafted features [11]. These successes motivate our approach to reinforcement learning. Our goal is to connect a reinforcement learning algorithm to a deep neural network which operates directly on RGB images and efficiently processes training data by using stochastic gradient updates.

Tesauro’s TD-Gammon architecture provides a starting point for such an approach. This architecture updates the parameters of a network that estimates the value function, directly from on-policy samples of experience, $s_ {t},a_ {t},r_ {t},s_ {t+1},a_ {t+1}$ , drawn from the algorithm’s interactions with the environment (or by self-play, in the case of backgammon). Since this approach was able to outperform the best human backgammon players 20 years ago, it is natural to wonder whether two decades of hardware improvements, coupled with modern deep neural network architectures and scalable RL algorithms might produce significant progress.

In contrast to TD-Gammon and similar online approaches, we utilize a technique known as experience replay [13] where we store the agent's experiences at each time-step, $e_{t}=(s_{t},a_{t},r_{t},s_{t+1})$, in a data-set $\mathcal{D}=e_{1},\ldots,e_{N}$, pooled over many episodes into a replay memory. During the inner loop of the algorithm, we apply Q-learning updates, or minibatch updates, to samples of experience, $e\sim\mathcal{D}$, drawn at random from the pool of stored samples. After performing experience replay, the agent selects and executes an action according to an $\epsilon$-greedy policy. Since using histories of arbitrary length as inputs to a neural network can be difficult, our Q-function instead works on a fixed-length representation of histories produced by a function $\phi$. The full algorithm, which we call deep Q-learning, is presented in Algorithm 1.
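
A replay memory of this kind can be sketched with a bounded queue and uniform random sampling. The class name `ReplayMemory`, its method names, and the extra `done` flag stored with each transition (used later to distinguish terminal targets) are assumptions for illustration, not the authors' implementation.

```python
import random
from collections import deque


class ReplayMemory:
    """Fixed-capacity store of transitions with uniform random sampling."""

    def __init__(self, capacity, seed=None):
        # A deque with maxlen silently drops the oldest item once it is full.
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def store(self, phi, action, reward, phi_next, done):
        """Append one transition e_t = (phi_t, a_t, r_t, phi_{t+1}) plus a terminal flag."""
        self.buffer.append((phi, action, reward, phi_next, done))

    def sample(self, batch_size):
        """Draw batch_size distinct stored transitions uniformly at random."""
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


memory = ReplayMemory(capacity=3, seed=0)
for t in range(5):
    memory.store(phi=t, action=0, reward=0.0, phi_next=t + 1, done=False)
print(len(memory))  # 3: the two oldest transitions were overwritten
```

Sampling from the whole buffer, rather than using the most recent transition, is what decouples the training data from the agent's current trajectory.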

This approach has several advantages over standard online Q-learning [23]. First, each step of experience is potentially used in many weight updates, which allows for greater data efficiency.

Algorithm 1: Deep Q-learning with Experience Replay

| Initialize replay memory $\mathcal{D}$ to capacity $N$ |

| Initialize action-value function $Q$ with random weights |

| **for** episode $=1,M$ **do** |

| Initialise sequence $s_{1}=\{x_{1}\}$ and preprocessed sequence $\phi_{1}=\phi(s_{1})$ |

| **for** $t=1,T$ **do** |

| With probability $\epsilon$ select a random action $a_{t}$ |

| otherwise select $a_{t}=\arg\max_{a}Q^{*}(\phi(s_{t}),a;\theta)$ |

| Execute action $a_{t}$ in emulator and observe reward $r_{t}$ and image $x_{t+1}$ |

| Set $s_{t+1}=s_{t},a_{t},x_{t+1}$ and preprocess $\phi_{t+1}=\phi(s_{t+1})$ |

| Store transition $(\phi_{t},a_{t},r_{t},\phi_{t+1})$ in $\mathcal{D}$ |

| Sample random minibatch of transitions $(\phi_{j},a_{j},r_{j},\phi_{j+1})$ from $\mathcal{D}$ |

| Set $y_{j}=r_{j}$ for terminal $\phi_{j+1}$, and $y_{j}=r_{j}+\gamma\max_{a^{\prime}}Q(\phi_{j+1},a^{\prime};\theta)$ for non-terminal $\phi_{j+1}$ |

| Perform a gradient descent step on $(y_{j}-Q(\phi_{j},a_{j};\theta))^{2}$ according to equation 3 |

| **end for** |

| **end for** |

Second, learning directly from consecutive samples is inefficient, due to the strong correlations between the samples; randomizing the samples breaks these correlations and therefore reduces the variance of the updates. Third, when learning on-policy the current parameters determine the next data sample that the parameters are trained on. For example, if the maximizing action is to move left then the training samples will be dominated by samples from the left-hand side; if the maximizing action then switches to the right then the training distribution will also switch. It is easy to see how unwanted feedback loops may arise and the parameters could get stuck in a poor local minimum, or even diverge catastrophically [25]. By using experience replay the behavior distribution is averaged over many of its previous states, smoothing out learning and avoiding oscillations or divergence in the parameters. Note that when learning by experience replay, it is necessary to learn off-policy (because our current parameters are different to those used to generate the sample), which motivates the choice of Q-learning.
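
The overall loop of Algorithm 1 can be sketched on a toy problem. Everything specific here is an assumption for illustration: a four-cell corridor stands in for the emulator, a one-hot position stands in for $\phi$, a linear Q-function (initialised to zero rather than randomly) stands in for the convolutional network, and the hyperparameter values are arbitrary.

```python
import random
from collections import deque

N_STATES, GOAL = 4, 3   # hypothetical corridor with cells 0..3, reward at cell 3
ACTIONS = (0, 1)        # 0 = move left, 1 = move right


def phi(state):
    """Fixed-length representation of the state: a one-hot position vector."""
    return [1.0 if i == state else 0.0 for i in range(N_STATES)]


def step(state, action):
    """Toy emulator: returns (next_state, reward, terminal)."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    return nxt, (1.0 if nxt == GOAL else 0.0), nxt == GOAL


def q(theta, features, action):
    return sum(w * x for w, x in zip(theta[action], features))


def greedy(theta, features):
    return max(ACTIONS, key=lambda a: q(theta, features, a))


def deep_q_learning(episodes=300, max_steps=30, capacity=1000, batch_size=8,
                    gamma=0.9, epsilon=0.5, lr=0.5, seed=0):
    rng = random.Random(seed)
    memory = deque(maxlen=capacity)               # replay memory D with capacity N
    theta = [[0.0] * N_STATES for _ in ACTIONS]   # Q weights (zeros, an assumption)
    for _episode in range(episodes):
        state = 0
        for _t in range(max_steps):
            # Epsilon-greedy behaviour policy.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(theta, phi(state))
            nxt, reward, done = step(state, action)
            memory.append((phi(state), action, reward, phi(nxt), done))
            if len(memory) >= batch_size:
                batch = rng.sample(list(memory), batch_size)
                # Compute all errors y_j - Q(phi_j, a_j) before changing the weights.
                errors = []
                for f, a, r, f_next, terminal in batch:
                    y = r if terminal else r + gamma * max(q(theta, f_next, b) for b in ACTIONS)
                    errors.append(y - q(theta, f, a))
                # Averaged gradient step over the minibatch.
                for (f, a, _r, _f_next, _terminal), err in zip(batch, errors):
                    for i, x in enumerate(f):
                        theta[a][i] += lr * err * x / batch_size
            state = nxt
            if done:
                break
    return theta


theta = deep_q_learning()
print([greedy(theta, phi(s)) for s in range(GOAL)])  # greedy action in each non-goal cell
```

Because the updates draw on randomly sampled past transitions, the learned greedy policy does not depend on the order in which the behaviour policy happened to visit the cells.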

In practice, our algorithm only stores the last $N$ experience tuples in the replay memory, and samples uniformly at random from $\mathcal{D}$ when performing updates. This approach is in some respects limited since the memory buffer does not differentiate important transitions and always overwrites with recent transitions due to the finite memory size $N$ . Similarly, the uniform sampling gives equal importance to all transitions in the replay memory. A more sophisticated sampling strategy might emphasize transitions from