RAG-DDR: OPTIMIZING RETRIEVAL-AUGMENTED GENERATION USING DIFFERENTIABLE DATA REWARDS

ABSTRACT

Retrieval-Augmented Generation (RAG) has proven effective in mitigating hallucinations in Large Language Models (LLMs) by retrieving knowledge from external resources. To adapt LLMs to RAG pipelines, current approaches use instruction tuning to optimize LLMs, improving their ability to utilize retrieved knowledge. This supervised fine-tuning (SFT) approach focuses on equipping LLMs to handle diverse RAG tasks using different instructions. However, it trains RAG modules to overfit training signals and overlooks the varying data preferences among agents within the RAG system. In this paper, we propose a Differentiable Data Rewards (DDR) method, which trains RAG systems end-to-end by aligning data preferences between different RAG modules. DDR collects rewards with a rollout method to optimize each agent. This method prompts agents to sample potential responses as perturbations, evaluates the impact of these perturbations on the whole RAG system, and then optimizes the agent to produce outputs that improve the performance of the RAG system. Our experiments on various knowledge-intensive tasks demonstrate that DDR significantly outperforms the SFT method, particularly for smaller LLMs, which depend more on retrieved knowledge. Additionally, DDR exhibits a stronger capability to align data preferences between RAG modules. DDR makes the generation module more effective at extracting key information from documents and at mitigating conflicts between parametric memory and external knowledge. All code is available at https://github.com/OpenMatch/RAG-DDR.

1 INTRODUCTION

Large Language Models (LLMs) have demonstrated impressive language understanding, reasoning, and planning capabilities across a wide range of natural language processing (NLP) tasks (Achiam et al., 2023; Touvron et al., 2023; Hu et al., 2024). However, LLMs often produce incorrect responses due to hallucination (Ji et al., 2023; Xu et al., 2024c). To alleviate this problem, existing studies employ Retrieval-Augmented Generation (RAG) (Lewis et al., 2020; Shi et al., 2023; Peng et al., 2023) to enhance LLMs and help them access long-tail and up-to-date knowledge from different data sources (Trivedi et al., 2023; He et al., 2021; Cai et al., 2019; Parvez et al., 2021). However, conflicts between retrieved knowledge and parametric memory often mislead LLMs, undermining the effectiveness of RAG systems (Li et al., 2022; Chen et al., 2023; Asai et al., 2024b).

To ensure the effectiveness of RAG systems, existing research has focused on developing various agents to enhance retrieval accuracy (Gao et al., 2024; Xu et al., 2023b; Jiang et al., 2023; Xu et al., 2024b). These approaches refine retrieval results by reformulating queries, reranking candidate documents, summarizing the retrieved documents, or performing additional retrieval steps to find more relevant information (Yan et al., 2024; Trivedi et al., 2023; Asai et al., 2023; Yu et al., 2023a). To optimize the RAG system, these methods either optimize different RAG modules independently with the EM method (Singh et al., 2021; Sachan et al., 2021) or build instruction-tuning datasets for supervised fine-tuning (SFT) of the LLM-based RAG modules (Lin et al., 2023; Asai et al., 2023). However, these SFT-based methods usually train LLMs to overfit the training signals and suffer from catastrophic forgetting (Luo et al., 2023b).

Current research further aims to align the data preferences of these RAG modules, focusing primarily on a two-agent framework that consists of a retriever and a generator. Typically, these systems train only the retriever to supply more accurate documents that satisfy the data preferences of the generator (Shi et al., 2023; Yu et al., 2023b). Aligning data preferences between the retriever and generator by refining retrieved knowledge is a straightforward way to improve the effectiveness of RAG models. Nevertheless, the generator still faces knowledge conflicts, which prevent LLMs from effectively utilizing the retrieved knowledge during generation (Xie et al., 2024). Thus, optimizing each RAG module with the reward from the entire system during training is essential for building a more tailored RAG system.

This paper introduces a Differentiable Data Rewards (DDR) method that optimizes the agents in a RAG system end-to-end using DPO (Rafailov et al., 2024). DDR uses a rollout method (Kocsis & Szepesvári, 2006) to collect a reward from the overall system for each agent and optimizes the agent according to that reward. Specifically, following Asai et al. (2024a), we build a typical RAG system to evaluate the effectiveness of DDR. It consists of a knowledge refinement module that selects retrieved documents and a generation module that produces responses based on the query and the refined knowledge. We then build the RAG-DDR model by optimizing this two-agent RAG system with DDR. Throughout optimization, we use the reward from the entire RAG system and iteratively optimize both the generation and knowledge refinement modules to align data preferences across the two agents.

Our experiments on various LLMs demonstrate that DDR outperforms all baseline models, achieving significant improvements over the previous method (Lin et al., 2023) on a range of knowledge-intensive tasks. DDR effectively retrofits LLMs for RAG and helps them generate higher-quality responses of appropriate length. Our further analyses show that the effectiveness of RAG-DDR derives primarily from the generation module, which is optimized with the reward from the RAG system. The DDR-optimized generation module is more effective at capturing crucial information from retrieved documents and at alleviating knowledge conflicts between external knowledge and parametric memory. Moreover, the effectiveness of DDR-optimized RAG systems holds even when additional noisy documents are incorporated during response generation.

2 RELATED WORK

Retrieval-Augmented Generation (RAG) is widely used in various real-world applications, such as open-domain QA (Trivedi et al., 2023), language modeling (He et al., 2021), dialogue (Cai et al., 2019), and code generation (Parvez et al., 2021). RAG models retrieve documents from an external corpus (Karpukhin et al., 2020; Xiong et al., 2021) and then augment the LLM's generation either by incorporating the documents as context (Ram et al., 2023) or by aggregating the output probabilities obtained by encoding each retrieved document separately (Shi et al., 2023). They help LLMs alleviate hallucinations and generate more accurate and trustworthy responses (Jiang et al., 2023; Xu et al., 2023a; Luo et al., 2023a; Hu et al., 2023; Kandpal et al., 2023). However, retrieved documents inevitably contain noisy information, which limits the ability of RAG systems to generate accurate responses (Xu et al., 2023b; 2024b; Longpre et al., 2021; Liu et al., 2024b).

Some studies have demonstrated that noise in retrieved documents can mislead LLMs, sometimes degrading performance even on knowledge-intensive tasks (Foulds et al., 2024; Shuster et al., 2021; Xu et al., 2024b). This phenomenon primarily derives from the conflict between the parametric knowledge of LLMs and external knowledge (Jin et al., 2024; Longpre et al., 2021; Chen et al., 2022; Xie et al., 2024; Wu et al., 2024). Xie et al. (2024) have demonstrated that LLMs are highly receptive to external evidence when it conflicts with their parametric memory. Thus, many RAG models focus on building modular RAG pipelines to improve the quality of retrieved documents (Gao et al., 2024). Most of them aim to improve retrieval accuracy by employing a retrieval evaluator to trigger different knowledge refinement actions (Yan et al., 2024), prompting LLMs to summarize query-related knowledge from retrieved documents (Yu et al., 2023a), or training LLMs to retrieve and utilize knowledge on demand through self-reflection (Asai et al., 2023).

Optimizing RAG systems is a crucial research direction for generating more accurate responses. Previous work builds RAG systems on pretrained language models and trains them end-to-end (Singh et al., 2021; Sachan et al., 2021), regarding retrieval decisions as latent variables and iteratively optimizing the retriever and generator to fit the golden answers. Recent research primarily focuses on optimizing LLMs for RAG. INFO-RAG (Xu et al., 2024a) equips LLMs with in-context denoising ability through an unsupervised pretraining method that teaches them to refine information from retrieved contexts. RA-DIT (Lin et al., 2023) builds a supervised training dataset and then optimizes the retriever and LLM through instruction tuning. However, these methods train LLMs to fit the training signals and suffer from catastrophic forgetting during instruction tuning (Luo et al., 2023b).

Reinforcement Learning (RL) algorithms (Schulman et al., 2017) and related preference-optimization methods such as Direct Preference Optimization (DPO) (Rafailov et al., 2024) are widely used to align LLMs with human preferences and to enhance the consistency of generated responses (Putta et al., 2024). Agent Q integrates MCTS and DPO, allowing agents to learn from both successful and unsuccessful trajectories and thereby improving their performance on complex reasoning tasks (Putta et al., 2024). STEP-DPO further treats each reasoning step of a complex task as the fundamental unit for preference learning, which enhances the long-chain reasoning capabilities of LLMs (Lai et al., 2024). While these methods primarily optimize individual agents to improve the accuracy of each step, they do not address data alignment within multi-agent systems. Instead of using SFT for RAG optimization (Lin et al., 2023), this paper uses DPO to avoid overfitting the training signals and to align data preferences across different agents, which distinguishes it from the RL-based optimization methods above.

3 RAG TRAINING WITH DIFFERENTIABLE DATA REWARDS

This section introduces the Differentiable Data Rewards (DDR) method. We first describe how DDR optimizes an agent system by aligning data preferences between agents (Sec. 3.1). We then build a RAG pipeline from knowledge refinement and generation modules and use DDR to optimize the agents in this system (Sec. 3.2).

3.1 DATA PREFERENCE LEARNING WITH DIFFERENTIABLE DATA REWARDS

In a RAG system $\mathcal{V}=\{V_{1},\ldots,V_{t},\ldots,V_{T}\}$, agents exchange data with one another. To optimize this system, we first forward-propagate data among the agents and evaluate the performance of the RAG system. We then backward-propagate rewards to refine the data preferences of each agent.

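Data Propagation. During communication, the $t$-th agent $V_{t}$ acts as both a sender and a receiver: it receives data from agent $V_{t-1}$ and passes data to agent $V_{t+1}$:

$$x \rightsquigarrow V_{1} \xrightarrow{y_{1}} V_{2} \xrightarrow{y_{2}} \cdots \xrightarrow{y_{t-1}} V_{t} \xrightarrow{y_{t}} V_{t+1} \xrightarrow{y_{t+1}} \cdots \xrightarrow{y_{T-1}} V_{T} \rightsquigarrow y_{T},$$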

where $V_{t} \xrightarrow{y_{t}} V_{t+1}$ denotes that agent $V_{t}$ generates the response $y_{t}$ with the highest prediction probability and sends it to agent $V_{t+1}$. The symbols $x \rightsquigarrow$ and $\rightsquigarrow y_{T}$ denote sending the input $x$ to the agent system $\mathcal{V}$ and obtaining the output $y_{T}$ from $\mathcal{V}$, respectively. The performance of $\mathcal{V}$ is evaluated by the quality score $S(y_{T})$ of its final output $y_{T}$.
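
The forward pass can be illustrated with a minimal sketch. It assumes each agent is a plain Python function that returns its highest-probability output (greedy decoding) and that the caller supplies the quality score $S$. The toy agents `normalize` and `last_word` and the scorer `exact_match_paris` are hypothetical stand-ins for LLM-based modules, not the paper's implementation.

```python
from typing import Callable, Sequence

# Hypothetical agent interface: maps the data received from V_{t-1}
# to the output y_t it sends on to V_{t+1} (greedy decoding is assumed).
Agent = Callable[[str], str]


def propagate(x: str, agents: Sequence[Agent]) -> str:
    """Forward-propagate x through V_1 -> ... -> V_T and return y_T."""
    y = x
    for agent in agents:  # V_t receives y_{t-1} and emits y_t
        y = agent(y)
    return y


def system_score(x: str, agents: Sequence[Agent],
                 score: Callable[[str], float]) -> float:
    """Evaluate the whole system by the quality score S(y_T) of its final output."""
    return score(propagate(x, agents))


def normalize(text: str) -> str:
    """Toy V_1: lowercase and collapse whitespace."""
    return " ".join(text.lower().split())


def last_word(text: str) -> str:
    """Toy V_2: 'answer' with the last word of its input."""
    return text.split()[-1]


def exact_match_paris(y: str) -> float:
    """Toy S(y_T): 1.0 if the final output is exactly 'paris'."""
    return float(y == "paris")


if __name__ == "__main__":
    print(system_score("Capital of France is  Paris", [normalize, last_word],
                       exact_match_paris))  # prints 1.0
```

Because $S$ is applied only to $y_T$, intermediate agents receive no direct supervision. This is the gap that the reward propagation in DDR is meant to fill.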

Differentiable Data Reward. Unlike conventional supervised fine-tuning approaches (Lin et al., 2023), DDR optimizes agent $V_{t}$ to align with the data preferences of the downstream agents $\mathcal{V}_{t+1:T}$, so that the agent system produces a better response with a higher evaluation score $S(y_{T})$.
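
The objective can be made concrete with a short sketch of how a candidate output of $V_t$ is rewarded. It assumes the downstream agents $\mathcal{V}_{t+1:T}$ are frozen functions that capture the original input $x$ by closure, so each sampled output is scored by running it through them and applying $S$ to the result. The helper names `rollout_reward` and `reward_samples` and the toy agent and scorer are hypothetical.

```python
from typing import Callable, Dict, Sequence

# Hypothetical downstream agent; any dependence on x is assumed to be
# captured by closure, so each agent maps one string to the next.
Agent = Callable[[str], str]


def rollout_reward(y_t: str, downstream: Sequence[Agent],
                   score: Callable[[str], float]) -> float:
    """r(x, y~_t) = S(y_T), where y~_t is fed through the frozen V_{t+1:T}."""
    y = y_t
    for agent in downstream:
        y = agent(y)
    return score(y)


def reward_samples(samples: Sequence[str], downstream: Sequence[Agent],
                   score: Callable[[str], float]) -> Dict[str, float]:
    """Score each sampled (perturbed) output of V_t with the same subsystem.

    Duplicate samples collapse into a single dictionary entry.
    """
    return {s: rollout_reward(s, downstream, score) for s in samples}


if __name__ == "__main__":
    downstream = [lambda s: s.split()[-1].lower()]   # toy V_T
    score = lambda y: float(y == "paris")            # toy S(y_T)
    print(reward_samples(["It is Paris", "It is Lyon"], downstream, score))
    # prints {'It is Paris': 1.0, 'It is Lyon': 0.0}
```

Keeping the downstream agents frozen while scoring means that all candidates from $V_t$ are compared under the same data preferences.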

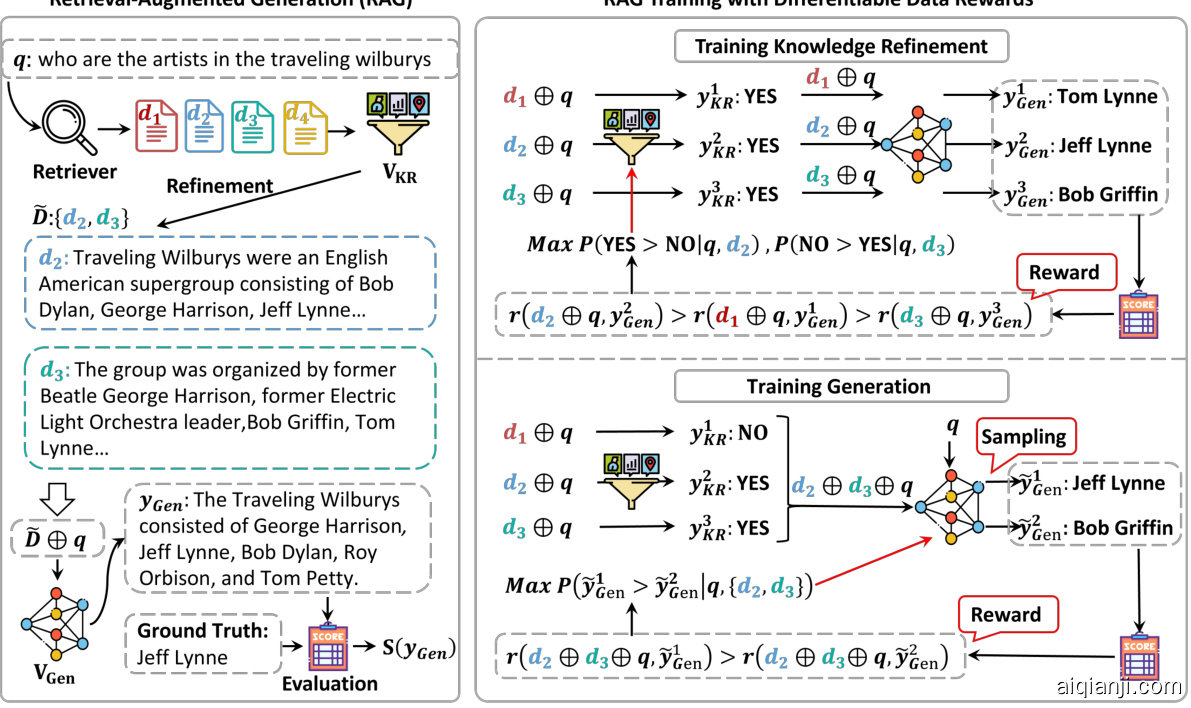

Retrieval-Augmented Generation (RAG) RAG Training with Differentiable Data Rewards

)

检索增强生成 (Retrieval-Augmented Generation, RAG) 使用可微数据奖励的 RAG 训练

Figure 1: Illustration of End-to-End Retrieval-Augmented Generation (RAG) Training with Our Differentiable Data Rewards (DDR) Method. During training, we iteratively optimize the Generation module ($V_{\mathrm{Gen}}$) and the Knowledge Refinement module ($V_{\mathrm{KR}}$).

To optimize agent $V_{t}$, DDR propagates the system reward back to the targeted agent. Specifically, we first instruct $V_{t}$ to sample multiple outputs $\tilde{y}_{t}$, which introduce perturbations into the agent system. We then calculate the reward $r(x,\tilde{y}_{t})$ with a rollout process (Kocsis & Szepesvári, 2006): we treat the downstream agents $\mathcal{V}_{t+1:T}$ as the evaluation model, feed $\tilde{y}_{t}$ into this subsystem, and compute the evaluation score $S(y_{T})$ of the resulting output $y_{T}$:

$$r(x,\tilde{y}_{t}) = S(y_{T}), \quad \tilde{y}_{t} \rightsquigarrow \mathcal{V}_{t+1:T} \rightsquigarrow y_{T}.$$

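Finally, we maximize the probability of generating $\tilde{y}_{t}^{+}$ over $\tilde{y}_{t}^{-}$, where $\tilde{y}_{t}^{+}$ receives a higher reward than $\tilde{y}_{t}^{-}$, i.e., $r(x,\tilde{y}_{t}^{+})>r(x,\tilde{y}_{t}^{-})$:

$$P(\tilde{y}_{t}^{+} > \tilde{y}_{t}^{-} \mid x) = \sigma\big(r(x,\tilde{y}_{t}^{+}) - r(x,\tilde{y}_{t}^{-})\big),$$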

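where $\sigma$ is the sigmoid function. The parameters of $V_{t}$ are trained with the DPO loss (Rafailov et al., 2024), which helps DDR identify optimization directions by contrastively learning from the positive ($\tilde{y}_{t}^{+}$) and negative ($\tilde{y}_{t}^{-}$) outputs:

$$\mathcal{L}(V_{t}; V_{t}^{\mathrm{ref}}) = -\mathbb{E}_{(x,\tilde{y}_{t}^{+},\tilde{y}_{t}^{-})\sim\mathcal{D}}\left[\log\sigma\left(\beta\log\frac{V_{t}(\tilde{y}_{t}^{+}\mid x)}{V_{t}^{\mathrm{ref}}(\tilde{y}_{t}^{+}\mid x)} - \beta\log\frac{V_{t}(\tilde{y}_{t}^{-}\mid x)}{V_{t}^{\mathrm{ref}}(\tilde{y}_{t}^{-}\mid x)}\right)\right],$$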

where $\beta$ is a hyperparameter, $\mathcal{D}$ is the dataset containing the inputs $x$ and their corresponding preference pairs $(\tilde{y}_{t}^{+},\tilde{y}_{t}^{-})$, and $V_{t}^{\mathrm{ref}}$ is the reference model, which is frozen during training.
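
A minimal sketch of the last two steps, turning rollout rewards into one preference pair and computing the per-pair DPO loss, is shown below. It assumes the sequence log-probabilities under $V_t$ and $V_t^{\mathrm{ref}}$ are already available as floats, and it assumes that inputs whose samples all receive the same reward are skipped. The function names are illustrative. The default `beta=0.1` follows the value reported in the implementation details. The full loss averages this per-pair term over $\mathcal{D}$.

```python
import math
from typing import Dict, Optional, Tuple


def preference_pair(rewards: Dict[str, float]) -> Optional[Tuple[str, str]]:
    """Return (y+, y-): the highest- and lowest-reward samples, or None on a tie."""
    best = max(rewards, key=rewards.get)
    worst = min(rewards, key=rewards.get)
    if rewards[best] == rewards[worst]:
        return None  # assumption: no preference signal, so the input is skipped
    return best, worst


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(logp_pos: float, logp_neg: float,
             ref_logp_pos: float, ref_logp_neg: float, beta: float = 0.1) -> float:
    """Per-pair DPO loss from sequence log-probs under V_t and the frozen V_t^ref."""
    margin = (logp_pos - ref_logp_pos) - (logp_neg - ref_logp_neg)
    return -log_sigmoid(beta * margin)


if __name__ == "__main__":
    print(preference_pair({"answer A": 0.2, "answer B": 0.9, "answer C": 0.1}))
    # prints ('answer B', 'answer C')
    print(dpo_loss(-12.0, -15.0, -12.0, -15.0))  # log(2) when V_t matches V_t^ref
```

The loss starts at $\log 2$ when the policy equals the reference model. It falls as $V_t$ shifts probability mass toward $\tilde{y}_t^{+}$ relative to $V_t^{\mathrm{ref}}$.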

3.2 OPTIMIZING A SPECIFIC RAG SYSTEM THROUGH DDR

Given a query $q$ and a set of retrieved documents $D=\{d_{1},\ldots,d_{n}\}$, we build a RAG system that employs a knowledge refinement module ($V_{\mathrm{KR}}$) to filter out unrelated documents and a generation module ($V_{\mathrm{Gen}}$) to produce a response. The two modules form a two-agent system:

$$q, D \rightsquigarrow V_{\mathrm{KR}} \xrightarrow{\tilde{D}} V_{\mathrm{Gen}} \rightsquigarrow \tilde{y}_{\mathrm{Gen}},$$

where $\tilde{D}\subseteq D$: $V_{\mathrm{KR}}$ produces selection actions that filter out the noisy documents in $D$ to build $\tilde{D}$, and $V_{\mathrm{Gen}}$ generates the answer from the query $q$ and the filtered documents $\tilde{D}$. As shown in Figure 1, we build the RAG-DDR model by tuning the modules iteratively: training starts with the generation module and then turns to the knowledge refinement module. The rest of this subsection explains how DDR optimizes $V_{\mathrm{KR}}$ and $V_{\mathrm{Gen}}$.
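
The two-agent pipeline can be sketched under simple assumptions. $V_{\mathrm{KR}}$ is modeled as a per-document judge that returns "YES" or "NO", and $V_{\mathrm{Gen}}$ is modeled as a function of the query and the kept documents. The interfaces and the keyword-overlap and echo toys are hypothetical placeholders for the LLM-based modules.

```python
from typing import Callable, List

# Hypothetical stand-ins for the two LLM-based agents.
KRJudge = Callable[[str, str], str]          # V_KR: (q, d_i) -> "YES" / "NO"
Generator = Callable[[str, List[str]], str]  # V_Gen: (q, D~) -> response


def refine(q: str, docs: List[str], v_kr: KRJudge) -> List[str]:
    """Build D~ (a subset of D) from the documents V_KR keeps; order is preserved."""
    return [d for d in docs if v_kr(q, d) == "YES"]


def rag_answer(q: str, docs: List[str], v_kr: KRJudge, v_gen: Generator) -> str:
    """Two-agent RAG: q, D -> V_KR -> D~ -> V_Gen -> response."""
    return v_gen(q, refine(q, docs, v_kr))


def toy_kr(q: str, d: str) -> str:
    """Toy judge: keep a document if it shares any word with the query."""
    return "YES" if set(q.lower().split()) & set(d.lower().split()) else "NO"


def toy_gen(q: str, docs: List[str]) -> str:
    """Toy generator: echo the first kept document, else report no evidence."""
    return docs[0] if docs else "no evidence"


if __name__ == "__main__":
    docs = ["paris is the capital of france", "bananas are yellow"]
    print(rag_answer("capital of france", docs, toy_kr, toy_gen))
```

Judging each document independently keeps $V_{\mathrm{KR}}$'s output a simple binary action per document. This binary action is what DDR contrasts in the next step.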

Knowledge Refinement Module. Following Asai et al. (2023), we build the knowledge refinement module $V_{\mathrm{KR}}$ to estimate the relevance of each document $d_{i}$ to the query $q$. We feed the query $q$ and document $d_{i}$ to $V_{\mathrm{KR}}$ and ask it to produce an action $y_{\mathrm{KR}}^{i}\in\{\text{“YES”},\text{“NO”}\}$, which indicates whether $d_{i}$ is retained ($y_{\mathrm{KR}}^{i}=\text{“YES”}$) or discarded ($y_{\mathrm{KR}}^{i}=\text{“NO”}$):

$$y_{\mathrm{KR}}^{i} = V_{\mathrm{KR}}(\text{Instruct}_{\mathrm{KR}} \oplus q \oplus d_{i}),$$

where $\oplus$ denotes concatenation and $\text{Instruct}_{\mathrm{KR}}$ is a prompt designed for knowledge refinement. The refined document collection $\tilde{D}=\{d_{1},\ldots,d_{k}\}$ is then constructed from the retained documents, where $k\leq n$. The document $d_{i}$ that leads the agent system to the highest evaluation reward $r(x,y_{\mathrm{KR}}^{i}=\text{“YES”})$ is considered positive, while the document $d_{j}$ that results in the lowest reward $r(x,y_{\mathrm{KR}}^{j}=\text{“YES”})$ is regarded as negative. For the positive document, $V_{\mathrm{KR}}$ is trained to maximize $P(y_{\mathrm{KR}}^{i}=\text{“YES”} > y_{\mathrm{KR}}^{i}=\text{“NO”} \mid q,d_{i})$. Likewise, we maximize $P(y_{\mathrm{KR}}^{j}=\text{“NO”} > y_{\mathrm{KR}}^{j}=\text{“YES”} \mid q,d_{j})$ so that irrelevant documents are filtered out.
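
The construction of $V_{\mathrm{KR}}$'s preference data can be sketched as follows. It assumes a hypothetical callable `keep_reward(q, d)` that returns $r(x, y_{\mathrm{KR}}^{i}=\text{“YES”})$, for example by running the downstream generator with the document and scoring its answer. The string `INSTRUCT_KR` is a hypothetical placeholder, not the paper's prompt, and newline joining only approximates the concatenation $\oplus$.

```python
from typing import Callable, Dict, List

# Hypothetical prompt text; the paper's actual Instruct_KR is not reproduced here.
INSTRUCT_KR = "Is the document relevant to the query? Answer YES or NO."


def kr_prompt(q: str, d: str) -> str:
    """Instruct_KR (+) q (+) d, with concatenation approximated by newlines."""
    return "\n".join([INSTRUCT_KR, q, d])


def kr_preference_pairs(q: str, docs: List[str],
                        keep_reward: Callable[[str, str], float]) -> List[Dict[str, str]]:
    """Build DPO pairs for V_KR from per-document rewards r(x, y_KR = "YES")."""
    rewards = [keep_reward(q, d) for d in docs]
    hi, lo = max(rewards), min(rewards)
    if hi == lo:
        return []  # assumption: skip queries whose documents are indistinguishable
    d_pos = docs[rewards.index(hi)]  # keeping it yields the highest system reward
    d_neg = docs[rewards.index(lo)]  # keeping it yields the lowest system reward
    return [
        {"prompt": kr_prompt(q, d_pos), "chosen": "YES", "rejected": "NO"},
        {"prompt": kr_prompt(q, d_neg), "chosen": "NO", "rejected": "YES"},
    ]


if __name__ == "__main__":
    # Toy reward: pretend the generator answers correctly only from the first document.
    reward = lambda q, d: 1.0 if "paris" in d else 0.0
    docs = ["paris is the capital", "lyon is a city"]
    for pair in kr_preference_pairs("capital of france?", docs, reward):
        print(pair["chosen"], "|", pair["prompt"].splitlines()[-1])
```

Each query therefore contributes one "keep" example and one "discard" example, both labeled by their effect on the whole system rather than by a relevance annotation.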

Generation Module. After knowledge refinement, the query $q$ and the filtered documents $\tilde{D}=\{d_{1},\ldots,d_{k}\}$ are fed to the generation module $V_{\mathrm{Gen}}$, from which the response $\tilde{y}_{\mathrm{Gen}}$ is sampled:

$$\tilde{y}_{\mathrm{Gen}} \sim V_{\mathrm{Gen}}(\text{Instruct}_{\mathrm{Gen}} \oplus q \oplus \tilde{D}),$$

where $\text{Instruct}_{\mathrm{Gen}}$ is a prompt for generating a tailored response. To reduce misleading knowledge from the retrieved documents, we also sample responses using only the query as input:

$$\tilde{y}_{\mathrm{Gen}} \sim V_{\mathrm{Gen}}(\text{Instruct}_{\mathrm{Gen}} \oplus q).$$

The response with the highest evaluation score $S(\tilde{y}_{\mathrm{Gen}})$ is considered positive ($\tilde{y}_{\mathrm{Gen}}^{+}$), while the response with the lowest score is considered negative ($\tilde{y}_{\mathrm{Gen}}^{-}$). The generation module $V_{\mathrm{Gen}}$ is optimized to maximize the probability $P(\tilde{y}_{\mathrm{Gen}}^{+}>\tilde{y}_{\mathrm{Gen}}^{-}\mid q,\tilde{D})$ of generating the positive response, which wins a higher reward. Because the candidate responses $\tilde{y}_{\mathrm{Gen}}$ are sampled both with the documents and from the query alone, the LLM can learn to balance internal and external knowledge, alleviating problems caused by knowledge conflicts.
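
The selection of generation preference pairs can be illustrated with a small sketch. It assumes the candidates sampled with $(q, \tilde{D})$ and with $q$ alone are already available as strings and that `score` plays the role of $S$. The toy data show a case where parametric memory beats a misleading passage. Skipping all-tie pools is an assumption of this sketch, and `gen_preference_pair` is a hypothetical name.

```python
from typing import Callable, List, Optional, Tuple


def gen_preference_pair(with_docs: List[str], query_only: List[str],
                        score: Callable[[str], float]) -> Optional[Tuple[str, str]]:
    """Pick (y+_Gen, y-_Gen) from responses sampled with (q, D~) and with q alone."""
    pool = list(with_docs) + list(query_only)
    best = max(pool, key=score)    # first response with the highest S
    worst = min(pool, key=score)   # first response with the lowest S
    if score(best) == score(worst):
        return None  # assumption: skip when every sample scores the same
    return best, worst  # both are then trained under the prompt (q, D~)


if __name__ == "__main__":
    score = lambda y: float("paris" in y.lower())    # toy accuracy-style S(.)
    rag_samples = ["The capital is Lyon.", "Lyon."]   # misled by a noisy passage
    memory_samples = ["Paris."]                       # parametric memory is right
    print(gen_preference_pair(rag_samples, memory_samples, score))
    # prints ('Paris.', 'The capital is Lyon.')
```

Pooling both sources lets a correct query-only answer become the positive example even though training still conditions on $(q,\tilde{D})$. This is how the module can learn when to override retrieved content.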

4 EXPERIMENTAL METHODOLOGY

This section first describes the datasets, evaluation metrics, and baselines, and then introduces the implementation details of our experiments. More experimental details are provided in Appendix A.1.

Dataset. Following RA-DIT (Lin et al., 2023), we use instruction-tuning datasets to train and evaluate RAG models. For all datasets and baselines, we use bge-large (Xiao et al., 2023) to retrieve documents from MS MARCO 2.0 (Bajaj et al., 2016).

To train DDR, we collect ten datasets covering two tasks, open-domain QA and reasoning, and randomly sample 32,805 examples for the training set and 2,000 examples for the development set. Following previous work (Lin et al., 2023; Xu et al., 2024a), we evaluate on knowledge-intensive tasks, including open-domain question answering, multi-hop question answering, slot filling, and dialogue. The open-domain QA tasks are NQ (Kwiatkowski et al., 2019), MARCO QA (Bajaj et al., 2016), and TriviaQA (Joshi et al., 2017), which require models to retrieve factual knowledge to answer the given question. For more complex tasks, we use HotpotQA (Yang et al., 2018) for multi-hop QA and Wizard of Wikipedia (WoW) (Dinan et al., 2019) for dialogue. In addition, we use T-REx (Elsahar et al., 2018) to measure the models' one-hop fact look-up abilities.

Evaluation. Following Xu et al. (2024a), we use Rouge-L for the MARCO QA task and F1 for the WoW task. For the remaining tasks, we use accuracy.
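
For reference, a minimal sketch of the three metrics follows. It rests on several assumptions: lowercase whitespace tokenization without stemming, the F1 form of the LCS-based Rouge-L, and accuracy defined as whether any gold answer string appears in the response. The official evaluation scripts may normalize text differently, so the numbers this produces need not match the reported ones.

```python
from collections import Counter
from typing import List


def _tokens(text: str) -> List[str]:
    return text.lower().split()


def lcs_len(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence (single-row dynamic programming)."""
    dp = [0] * (len(b) + 1)
    for x in a:
        prev = 0  # dp value of the previous row at column j - 1
        for j, y in enumerate(b, 1):
            cur = dp[j]
            dp[j] = prev + 1 if x == y else max(dp[j], dp[j - 1])
            prev = cur
    return dp[-1]


def _f1(overlap: int, n_pred: int, n_ref: int) -> float:
    if overlap == 0:
        return 0.0
    prec, rec = overlap / n_pred, overlap / n_ref
    return 2 * prec * rec / (prec + rec)


def rouge_l(pred: str, ref: str) -> float:
    """LCS-based Rouge-L F1 between a prediction and one reference."""
    p, r = _tokens(pred), _tokens(ref)
    return _f1(lcs_len(p, r), len(p), len(r))


def token_f1(pred: str, ref: str) -> float:
    """Bag-of-tokens F1 between a prediction and one reference."""
    p, r = _tokens(pred), _tokens(ref)
    return _f1(sum((Counter(p) & Counter(r)).values()), len(p), len(r))


def accuracy(pred: str, answers: List[str]) -> float:
    """1.0 if any gold answer occurs in the prediction (assumed definition)."""
    return float(any(a.lower() in pred.lower() for a in answers))


if __name__ == "__main__":
    print(rouge_l("a b c d", "a c"), token_f1("a b", "b c"), accuracy("It is Paris.", ["paris"]))
```

Because these scores are cheap to compute, they can also serve as the reward $S(\cdot)$ during DDR training.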

Baselines. We compare DDR with five baseline models, including zero-shot models and supervised fine-tuning models.

Table 1: Overall Performance of Different RAG Models. The best and second best results are highlighted. In our experiments, we employ Llama3-8B as the knowledge refinement module and utilize LLMs of varying scales (Llama3-8B and MiniCPM-2.4B) as the generation module.

| Method | NQ (Open-domain QA) | TriviaQA (Open-domain QA) | MARCO QA (Open-domain QA) | HotpotQA (Multi-hop QA) |
|---|---|---|---|---|
| MiniCPM-2.4B | | | | |
| LLM w/o RAG | 20.1 | 45.0 | 17.1 | 17.7 |
| Vanilla RAG (2023) | 42.2 | 79.5 | 16.7 | 26.7 |
| REPLUG (2023) | 39.4 | 77.0 | 19.4 | 24.7 |
| RA-DIT (2023) | 41.8 | 78.6 | 19.6 | 26.1 |
| RAG-DDR (w/1-Round) | 47.0 | 82.7 | 28.1 | 32.5 |
| RAG-DDR (w/2-Round) | 47.6 | 83.6 | 29.8 | 33.2 |
| Llama3-8B | | | | |
| LLM w/o RAG | 35.4 | 78.4 | 17.0 | 27.7 |
| Vanilla RAG (2023) | 46.2 | 84.0 | 20.6 | 30.1 |
| RA-DIT (2023) | 46.2 | 87.4 | 20.3 | 34.9 |
| RAG-DDR (w/1-Round) | 50.7 | 88.2 | 25.1 | 37.3 |
| RAG-DDR (w/2-Round) | 52.1 | 89.6 | 27.3 | 39.0 |

We first treat the LLM as a black box and implement three baselines: LLM w/o RAG, Vanilla RAG, and REPLUG. For LLM w/o RAG, we directly feed the query to the LLM and ask it to answer from its memorized knowledge. For Vanilla RAG, we follow previous work (Lin et al., 2023), using the retrieved passages as context and relying on in-context learning for RAG modeling. REPLUG (Shi et al., 2023) ensembles the output probabilities obtained from different passages. We also compare with RA-DIT (Lin et al., 2023), which optimizes the RAG system through instruction tuning. We reimplement the REPLUG and RA-DIT baselines and do not fine-tune the retriever during reproduction, because the retriever we use has already been trained on massive supervised data and is sufficiently strong.
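
The kind of ensembling REPLUG performs can be illustrated with a minimal sketch. It assumes, as in REPLUG, that the mixture weights are a softmax over the retrieval scores. It also assumes that each passage has already produced a next-token distribution $p(y \mid d_i, x)$, represented here as a dictionary. The function names and toy numbers are hypothetical and are not taken from the reimplementation used in the experiments.

```python
import math
from typing import Dict, List


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def replug_ensemble(retrieval_scores: List[float],
                    per_doc_probs: List[Dict[str, float]]) -> Dict[str, float]:
    """Mix next-token distributions p(y | d_i, x), each computed with one passage."""
    weights = softmax(retrieval_scores)
    mixed: Dict[str, float] = {}
    for w, probs in zip(weights, per_doc_probs):
        for tok, p in probs.items():
            mixed[tok] = mixed.get(tok, 0.0) + w * p
    return mixed


if __name__ == "__main__":
    # Two passages: the higher-scored one favours "Paris".
    print(replug_ensemble([2.0, 0.0], [{"Paris": 0.6, "Lyon": 0.4},
                                       {"Paris": 0.1, "Lyon": 0.9}]))
```

Because each passage is encoded in a separate forward pass, the LLM never sees all passages in one context. This contrasts with Vanilla RAG, which concatenates the passages into the prompt.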

Implementation Details. We use MiniCPM-2.4B-sft (Hu et al., 2024) and Llama3-8B-Instruct (Touvron et al., 2023) as backbone models for the generation module, and Llama3-8B-Instruct (Touvron et al., 2023) for the knowledge refinement module. When training the RAG modules with DDR, we use automatic metrics such as Rouge-L and accuracy to calculate the reward and set $\beta=0.1$. The learning rate is set to 5e-5, and each model is trained for one epoch. The generation module receives 5 retrieved passages as external knowledge to augment generation. We train both the knowledge refinement and generation modules with LoRA (Hu et al., 2022) for efficiency.

5 EVALUATION RESULTS

In this section, we first evaluate the performance of different RAG methods and then conduct ablation studies to show the effectiveness of different training strategies. Next, we examine the effect of DDR training on the generation module $(V_{\mathrm{Gen}})$ and explore how DDR helps it balance internal and external knowledge. Finally, we present several case studies.

5.1 OVERALL PERFORMANCE

The performance of various RAG models is presented in Table 1. As the evaluation results show, RAG-DDR significantly outperforms the baseline models on all datasets. It achieves a 7% improvement over the Vanilla RAG model when MiniCPM-2.4B and Llama3-8B are used to construct the generation module $(V_{\mathrm{Gen}})$.

Compared with LLM w/o RAG, Vanilla RAG and REPLUG significantly enhance LLM performance on most knowledge-intensive tasks, indicating that external knowledge effectively improves the accuracy of generated responses. However, the performance of RAG models decreases on dialogue tasks, showing that LLMs can also be misled by retrieved documents. Unlike these zero-shot methods, RA-DIT provides a more effective way to guide LLMs to filter out noise from retrieved content and identify accurate clues for answering questions. Nevertheless, RA-DIT still underperforms Vanilla RAG on certain knowledge-intensive tasks, such as NQ and HotpotQA, showing that overfitting to golden answers is less effective for teaching LLMs to capture the essential information needed to generate accurate responses. In contrast, RAG-DDR surpasses RA-DIT on almost all tasks, particularly with the smaller LLM (MiniCPM-2.4B), where it achieves a 5% improvement. This highlights the generalization capability of our DDR training method, which enables LLMs of varying scales to effectively utilize external knowledge through in-context learning.

Table 2: Ablation Study. Both Vanilla RAG and RAG w/ $V_{\mathrm{KR}}$ are evaluated in a zero-shot setting without any fine-tuning. We then use DDR to optimize the knowledge refinement module $(V_{\mathrm{KR}})$, the generation module $(V_{\mathrm{Gen}})$, and both modules, resulting in three models: RAG-DDR (Only $V_{\mathrm{KR}}$), RAG-DDR (Only $V_{\mathrm{Gen}}$), and RAG-DDR (All).

| Method | NQ | TriviaQA | MARCO QA | HotpotQA |
|---|---|---|---|---|
| MiniCPM-2.4B |  |  |  |  |
| Vanilla RAG | 42.1 | 78.0 | 16.6 | 24.9 |
| RAG w/ $V_{\mathrm{KR}}$ | 42.2 | 79.5 | 16.7 | 26.7 |
| RAG-DDR (Only $V_{\mathrm{KR}}$) | 42.5 | 79.6 | 16.8 | 27.3 |
| RAG-DDR (Only $V_{\mathrm{Gen}}$) | 46.8 | 81.7 | 28.3 | 31.2 |
| RAG-DDR (All) | 47.0 | 82.7 | 28.1 | 32.5 |
| Llama3-8B |  |  |  |  |
| Vanilla RAG | 45.4 | 83.2 | 20.8 | 28.5 |
| RAG w/ $V_{\mathrm{KR}}$ | 46.2 | 84.0 | 20.6 | 30.1 |
| RAG-DDR (Only $V_{\mathrm{KR}}$) | 46.8 | 84.7 | 20.7 | 30.7 |
| RAG-DDR (Only $V_{\mathrm{Gen}}$) | 50.2 | 87.8 | 25.2 | 36.9 |

5.2 ABLATION STUDIES

As shown in Table 2, we conduct ablation studies to explore the role of different RAG modules and evaluate different training strategies using DDR.

This experiment compares five models, using MiniCPM-2.4B and Llama3-8B to construct the generation module. The Vanilla RAG model relies solely on the generation module $(V_{\mathrm{Gen}})$ to produce answers based on the query and retrieved documents. RAG w/ $V_{\mathrm{KR}}$ adds a knowledge refinement module $(V_{\mathrm{KR}})$ that filters the retrieved documents and then feeds the query and filtered documents to the generation module $(V_{\mathrm{Gen}})$. RAG-DDR (Only $V_{\mathrm{KR}}$) tunes the RAG w/ $V_{\mathrm{KR}}$ model with DDR by optimizing only the knowledge refinement module $(V_{\mathrm{KR}})$. RAG-DDR (Only $V_{\mathrm{Gen}}$) optimizes only the generation module $(V_{\mathrm{Gen}})$. RAG-DDR (All) optimizes both $V_{\mathrm{KR}}$ and $V_{\mathrm{Gen}}$.
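
The data flow of the ablated systems can be sketched as below. `run_rag` reduces to Vanilla RAG when no refinement module is supplied and to RAG w/ $V_{\mathrm{KR}}$ otherwise, and `TRAINED_MODULES` restates which modules DDR updates in each variant. The keyword-overlap filter and the echoing generator are hypothetical stand-ins for the LLM-based $V_{\mathrm{KR}}$ and $V_{\mathrm{Gen}}$, used only to make the sketch runnable.

```python
from typing import Callable, Dict, List, Optional, Tuple

Refiner = Callable[[str, List[str]], List[str]]
Generator = Callable[[str, List[str]], str]

# Which modules DDR updates in each ablation variant (restating the text above).
TRAINED_MODULES: Dict[str, Tuple[str, ...]] = {
    "Vanilla RAG": (),
    "RAG w/ V_KR": (),
    "RAG-DDR (Only V_KR)": ("V_KR",),
    "RAG-DDR (Only V_Gen)": ("V_Gen",),
    "RAG-DDR (All)": ("V_KR", "V_Gen"),
}


def run_rag(query: str, docs: List[str], generate: Generator,
            refine: Optional[Refiner] = None) -> str:
    """Vanilla RAG if refine is None; otherwise V_KR filters docs before V_Gen."""
    context = refine(query, docs) if refine is not None else docs
    return generate(query, context)


def toy_refine(query: str, docs: List[str]) -> List[str]:
    """Hypothetical V_KR stand-in: keep documents sharing a word with the query."""
    q = set(query.lower().split())
    return [d for d in docs if q & set(d.lower().split())]


def toy_generate(query: str, docs: List[str]) -> str:
    """Hypothetical V_Gen stand-in: answer with the first document, if any."""
    return docs[0] if docs else "I don't know"
```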

Compared with the Vanilla RAG model, RAG w/ $V_{\mathrm{KR}}$ improves RAG performance on almost all evaluation tasks, demonstrating the effectiveness of the knowledge refinement module in improving the accuracy of LLM responses. Furthermore, RAG-DDR (Only $V_{\mathrm{Gen}}$) shows greater improvements over RAG w/ $V_{\mathrm{KR}}$ than RAG-DDR (Only $V_{\mathrm{KR}}$) does, indicating that the primary effectiveness of RAG-DDR comes from optimizing the generation module $(V_{\mathrm{Gen}})$ through DDR. When we start from the RAG-DDR (Only $V_{\mathrm{Gen}}$) model and subsequently optimize the knowledge refinement module, yielding RAG-DDR (All), performance improves further on most tasks. This shows that filtering noise from retrieved documents using feedback from the generation module is effective, which has also been observed in previous work (Yu et al., 2023b; Izacard & Grave, 2020). However, the improvements from optimizing the knowledge refinement module are limited, highlighting that enhancing the generation module's ability to leverage external knowledge is more critical for existing RAG systems.
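
To make this comparison concrete, the short script below computes, from the MiniCPM-2.4B rows of Table 2, the absolute gain (in points) of each DDR variant over RAG w/ $V_{\mathrm{KR}}$. The numbers are copied from the table and the column labels follow its dataset header; the helper functions are illustrative only.

```python
from typing import Dict, List

DATASETS = ["NQ", "TriviaQA", "MARCO QA", "HotpotQA"]

# MiniCPM-2.4B rows copied from Table 2.
MINICPM: Dict[str, List[float]] = {
    "RAG w/ V_KR": [42.2, 79.5, 16.7, 26.7],
    "RAG-DDR (Only V_KR)": [42.5, 79.6, 16.8, 27.3],
    "RAG-DDR (Only V_Gen)": [46.8, 81.7, 28.3, 31.2],
    "RAG-DDR (All)": [47.0, 82.7, 28.1, 32.5],
}


def gains_over(rows: Dict[str, List[float]], base: str) -> Dict[str, List[float]]:
    """Absolute per-dataset gain of every row over the base row, rounded to 0.1."""
    ref = rows[base]
    return {
        name: [round(v - r, 1) for v, r in zip(vals, ref)]
        for name, vals in rows.items() if name != base
    }


def mean(xs: List[float]) -> float:
    """Arithmetic mean of a non-empty list."""
    return sum(xs) / len(xs)


if __name__ == "__main__":
    for name, deltas in gains_over(MINICPM, "RAG w/ V_KR").items():
        print(f"{name:22s}", dict(zip(DATASETS, deltas)), f"avg {mean(deltas):.2f}")
```

On these rows, optimizing $V_{\mathrm{Gen}}$ alone adds between 2.2 and 11.6 points per dataset, whereas optimizing $V_{\mathrm{KR}}$ alone adds at most 0.6 points, and RAG-DDR (All) improves on RAG-DDR (Only $V_{\mathrm{Gen}}$) on three of the four datasets.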

5.3 CHARACTERISTICS OF THE GENERATION MODULE IN RAG-DDR

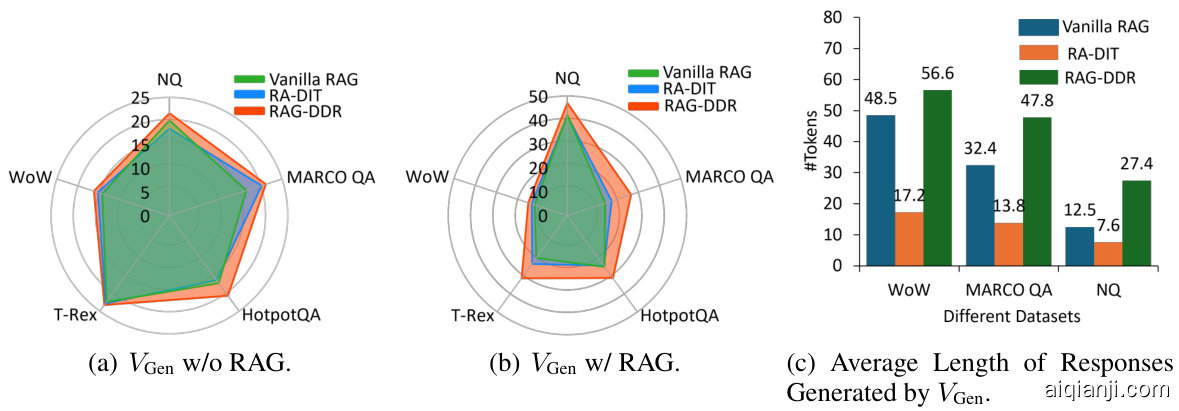

In this experiment, we explore the characteristics of the generation module $(V_{\mathrm{Gen}})$ under different training strategies: zero-shot (Vanilla RAG), the SFT method (RA-DIT), and DDR (RAG-DDR). As illustrated in Figure 2, we present the performance of $V_{\mathrm{Gen}}$ w/o RAG and w/ RAG, which evaluates its ability to memorize knowledge and to utilize external knowledge, respectively. Additionally, we report the average length of responses generated by $V_{\mathrm{Gen}}$.

Figure 2: Characteristics of the Generation Module in RAG Optimized with Different Training Strategies. We use MiniCPM-2.4B to build the generation module $(V_{\mathrm{Gen}})$ and then train it using different strategies. We show the performance of $V_{\mathrm{Gen}}$ in the zero-shot setting, along with that of the generation modules optimized using the RA-DIT and DDR methods.

Table 3: Experimental Results on Evaluating the Knowledge Usage Ability of the Generation Module $(V_{\mathrm{Gen}})$ of Different RAG Models.

| Method | NQ | HotpotQA | T-REx | NQ | HotpotQA | T-REx | NQ | HotpotQA | T-REx |
|---|---|---|---|---|---|---|---|---|---|
| MiniCPM-2.4B |  |  |  |  |  |  |  |  |  |
| LLM w/o RAG | 27.6 | 26.7 | 36.6 | 2.6 | 11.7 | 4.1 | 100.0 | 100.0 | 100.0 |
| Vanilla RAG | 59.1 | 51.7 | 36.8 |  |  |  |  |  |  |
| RA-DIT | 58.3 | 47.6 | 41.7 | 1.7 | 10.8 | 4.2 | 76.9 | 73.7 | 73.4 |
| RAG-DDR | 65.5 | 56.9 | 52.6 | 2.4 | 10.8 | 5.9 | 82.9 | 81.0 | 78.5 |
| Llama3-8B |  |  |  |  |  |  |  |  |  |
| Vanilla RAG | 64.2 | 58.0 | 45.5 | 2.9 | 10.5 | 3.0 | 80.0 | 66.3 |  |
| RA-DIT | 64.1 | 59.7 | 65.3 | 3.4 | 17.9 | 10.8 | 81.0 | 79.2 | 77.9 |
| RAG-DDR | 69.5 | 64.3 | 59.9 | 4.1 | 18.0 | 5.4 | 88.4 | 82.0 | 79.2 |

As shown in Figure 2(a), we first compare the performance of the generation module when it relies solely on the internal knowledge stored in its parametric memory. Compared to the Vanilla RAG model, RA-DIT shows a decline in performance on the NQ and HotpotQA tasks. This phenomenon suggests that the model forgets previously acquired knowledge while learning new information during SFT (Luo et al., 2023b). In contrast, DDR not only outperforms the RA-DIT method but also achieves consistent improvements over the Vanilla RAG model across all evaluation tasks. This indicates that DDR, through its reinforcement-learning-based training, can help the model learn more factual knowledge during training while preventing the loss of previously memorized information. We then feed retrieved documents to the generation module and show the generation performance in Figure 2(b). The evaluation results indicate that RA-DIT marginally outperforms the Vanilla RAG model, while RAG-DDR significantly improves generation accuracy by utilizing factual knowledge from the retrieved documents. Additional experiments on the general capabilities of RAG-DDR are presented in Appendix A.4.

Finally, we show the average length of responses generated by $V_{\mathrm{Gen}}$ in Figure 2(c). Compared to the Vanilla RAG model, RA-DIT generates significantly shorter responses on average, indicating that SFT tends to cause LLMs to overfit the supervised data. In contrast, the response lengths of RAG-DDR remain closer to those of the Vanilla RAG model, so the model generates responses of a more appropriate length. This demonstrates that training LLMs to learn data preferences from their generated responses helps keep the output format of RAG models closer to that of the original LLMs.

Figure 3: Effectiveness of Different RAG Models in Defending Against Noisy Information. We use MiniCPM-2.4B to build the generation module $(V_{\mathrm{Gen}})$. We then retain one informative passage and randomly replace $n$ of the top-retrieved documents with noisy ones.
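
The noise-injection setup described in the Figure 3 caption can be sketched as follows: keep the informative passage, then overwrite $n$ randomly chosen other positions among the top-retrieved passages with passages sampled from a pool of noisy documents. How the informative passage is identified and how the noise pool is built are not specified here, so `informative_idx`, `noise_pool`, and `inject_noise` are assumptions; a seeded generator keeps the perturbation reproducible.

```python
import random
from typing import List, Sequence


def inject_noise(passages: Sequence[str], informative_idx: int,
                 noise_pool: Sequence[str], n: int, seed: int = 0) -> List[str]:
    """Copy `passages`, keep the informative one, and overwrite n randomly
    chosen other positions with distinct passages sampled from `noise_pool`."""
    candidates = [i for i in range(len(passages)) if i != informative_idx]
    if n > len(candidates):
        raise ValueError("n exceeds the number of replaceable passages")
    if n > len(noise_pool):
        raise ValueError("noise pool is too small to sample n distinct passages")
    rng = random.Random(seed)  # seeded for reproducible perturbations
    positions = rng.sample(candidates, n)
    noisy_docs = rng.sample(list(noise_pool), n)
    perturbed = list(passages)
    for pos, doc in zip(positions, noisy_docs):
        perturbed[pos] = doc
    return perturbed


if __name__ == "__main__":
    top5 = [f"retrieved passage {i}" for i in range(5)]
    pool = [f"noisy passage {j}" for j in range(10)]
    for n in range(5):
        print(n, inject_noise(top5, informative_idx=0, noise_pool=pool, n=n))
```

Under this sketch, if the five retrieved passages from the implementation details are also used here, $n$ can range from 0 to 4.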

5.4 EFFECTIVENESS OF RAG-DDR IN USING EXTERNAL KNOWLEDGE

In this section, we investigate the capability of the generation module $V_{\mathrm{Gen}}$ in the RAG model to leverage external knowledge for response generation. We first evaluate the ability of $V_{\mathrm{Gen}}$ to balance the internal and external knowledge. Next, we evalua