A survey on large language model based autonomous agents

基于大语言模型的自主AI智能体综述

Lei WANG, Chen MA*, Xueyang FENG*, Zeyu ZHANG, Hao YANG, Jingsen ZHANG, Zhiyuan CHEN, Jiakai TANG, Xu CHEN $\left(\boxtimes\right)$ , Yankai LIN $\left(\boxtimes\right)$ , Wayne Xin ZHAO, Zhewei WEI, Jirong WEN

Lei WANG, Chen MA*, Xueyang FENG*, Zeyu ZHANG, Hao YANG, Jingsen ZHANG, Zhiyuan CHEN, Jiakai TANG, Xu CHEN (□), Yankai LIN (□), Wayne Xin ZHAO, Zhewei WEI, Jirong WEN

Gaoling School of Artificial Intelligence, Renmin University of China, Beijing 100872, China $\circleddash$ The Author(s) 2024. This article is published with open access at link.springer.com and journal.hep.com.cn

中国人民大学高瓴人工智能学院,中国北京 100872 $\circleddash$ 作者 2024。本文以开放获取形式发表于 link.springer.com 和 journal.hep.com.cn

Abstract Autonomous agents have long been a research focus in academic and industry communities. Previous research often focuses on training agents with limited knowledge within isolated environments, which diverges significantly from human learning processes, and makes the agents hard to achieve human-like decisions. Recently, through the acquisition of vast amounts of Web knowledge, large language models (LLMs) have shown potential in human-level intelligence, leading to a surge in research on LLM-based autonomous agents. In this paper, we present a comprehensive survey of these studies, delivering a systematic review of LLM-based autonomous agents from a holistic perspective. We first discuss the construction of LLM-based autonomous agents, proposing a unified framework that encompasses much of previous work. Then, we present a overview of the diverse applications of LLM-based autonomous agents in social science, natural science, and engineering. Finally, we delve into the evaluation strategies commonly used for LLM-based autonomous agents. Based on the previous studies, we also present several challenges and future directions in this field.

摘要

自主智能体 (Autonomous Agents) 长期以来一直是学术界和工业界的研究热点。先前研究通常局限于在孤立环境中训练知识有限的智能体,这与人类学习过程存在显著差异,导致智能体难以实现类人决策。近年来,通过获取海量网络知识,大语言模型 (LLM) 展现出人类水平智能的潜力,推动了基于大语言模型的自主智能体研究热潮。本文系统综述了该领域研究,从整体视角对基于大语言模型的自主智能体进行系统性梳理。我们首先探讨其构建方法,提出一个涵盖多数已有工作的统一框架;其次概述其在社会科学、自然科学和工程领域的多样化应用;最后深入分析常用的评估策略。基于现有研究,我们进一步提出该领域面临的挑战与未来发展方向。

Keywords autonomous agent, large language model, human-level intelligence

关键词 自主智能体 (autonomous agent), 大语言模型, 人类水平智能

1 Introduction

1 引言

“An autonomous agent is a system situated within and a part of an environment that senses that environment and acts on it, over time, in pursuit of its own agenda and so as to effect what it senses in the future.”

自主智能体 (autonomous agent) 是一种存在于环境中并作为环境组成部分的系统,它感知该环境并随时间推移对其采取行动,以追求自身目标,从而影响其未来的感知结果。

Franklin and Graesser (1997)

Franklin 和 Graesser (1997)

Autonomous agents have long been recognized as a promising approach to achieving artificial general intelligence (AGI), which is expected to accomplish tasks through selfdirected planning and actions. In previous studies, the agents are assumed to act based on simple and heuristic policy functions, and learned in isolated and restricted environments [1-6]. Such assumptions significantly differs from the human learning process, since the human mind is highly complex, and individuals can learn from a much wider variety of environments. Because of these gaps, the agents obtained from the previous studies are usually far from replicating humanlevel decision processes, especially in un constrained, opendomain settings.

自主智能体 (Autonomous Agents) 长期以来被视为实现通用人工智能 (AGI) 的有前景途径,其预期通过自主规划和行动完成任务。在以往研究中,智能体通常被假设基于简单启发式策略函数行动,并在孤立受限环境中学习 [1-6]。这些假设与人类学习过程存在显著差异——人类心智高度复杂,且能从更广泛的环境中学习。由于这些差距,既有研究获得的智能体往往难以复现人类水平的决策过程,尤其在非约束性开放领域场景中。

In recent years, large language models (LLMs) have achieved notable successes, demonstrating significant potential in attaining human-like intelligence [5–10]. This capability arises from leveraging comprehensive training datasets alongside a substantial number of model parameters. Building upon this capability, there has been a growing research area that employs LLMs as central controllers to construct autonomous agents to obtain human-like decisionmaking capabilities [11–17].

近年来,大语言模型(LLM)取得了显著成就,在实现类人智能方面展现出巨大潜力[5-10]。这种能力源于利用海量训练数据集和庞大的模型参数。基于此能力,一个新兴研究领域正逐渐形成:将大语言模型作为核心控制器来构建自主AI智能体,从而获得类人决策能力[11-17]。

Comparing with reinforcement learning, LLM-based agents have more comprehensive internal world knowledge, which facilitates more informed agent actions even without training on specific domain data. Additionally, LLM-based agents can provide natural language interfaces to interact with humans, which is more flexible and explain able.

与强化学习相比,基于大语言模型 (LLM) 的 AI智能体拥有更全面的内部世界知识,即使未经特定领域数据训练也能促成更明智的决策行为。此外,基于大语言模型的智能体可提供自然语言交互界面,其灵活性和可解释性更优。

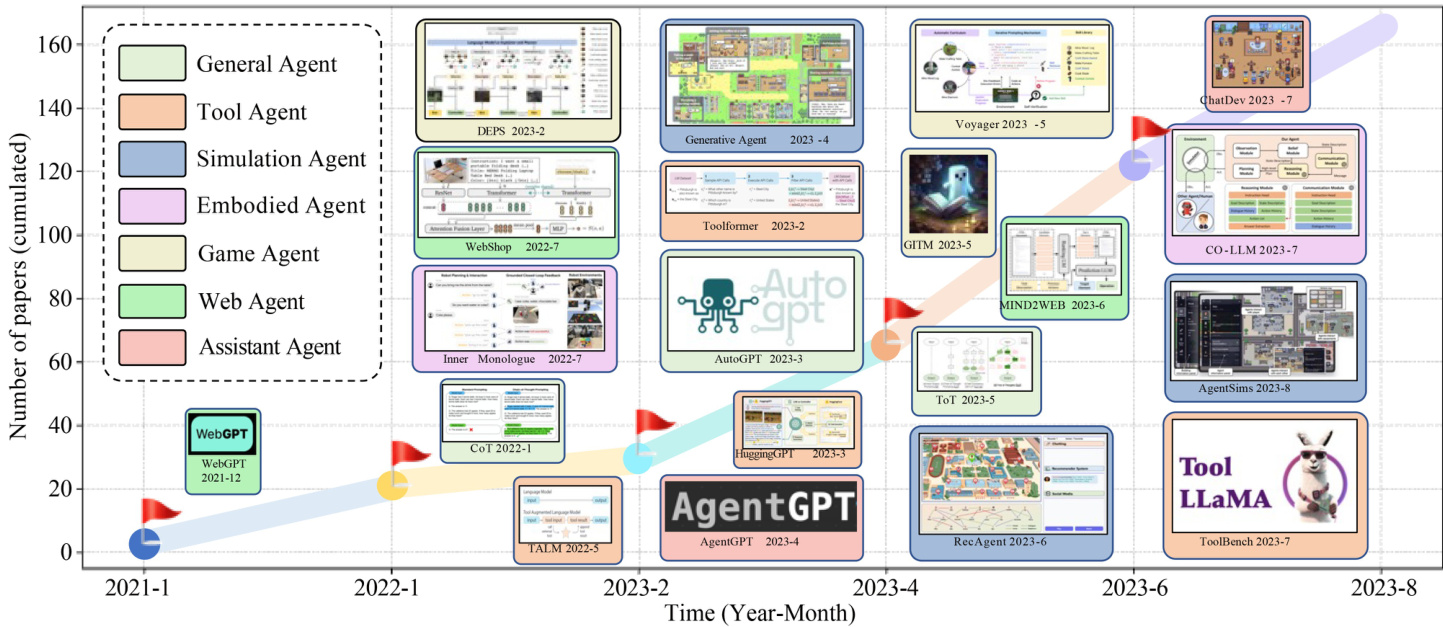

Along this direction, researchers have developed numerous promising models (see Fig. 1 for an overview of this field), where the key idea is to equip LLMs with crucial human capabilities like memory and planning to make them behave like humans and complete various tasks effectively. Previously, these models were proposed independently, with limited efforts made to summarize and compare them holistic ally. However, we believe a systematic summary on this rapidly developing field is of great significance to comprehensively understand it and benefit to inspire future research.

沿着这一方向,研究人员已开发出众多前景广阔的模型(该领域概览见图1),其核心思路是为大语言模型配备记忆与规划等关键人类能力,使其能像人类一样行动并高效完成各类任务。此前这些模型被独立提出,缺乏系统性总结与整体对比。但我们认为,对这一快速发展领域进行系统梳理,将有助于全面理解其脉络并启发未来研究。

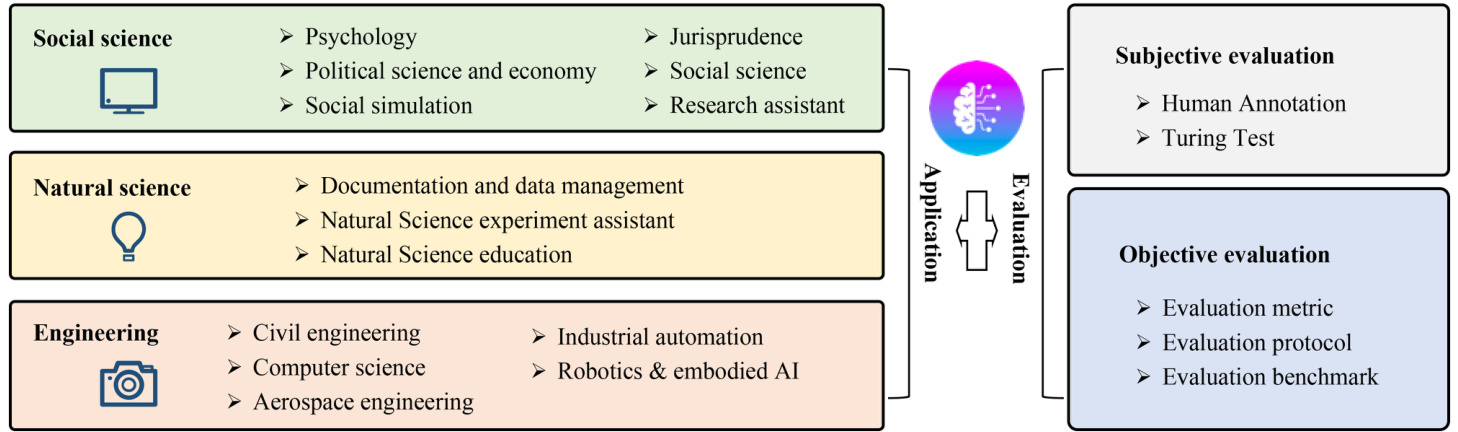

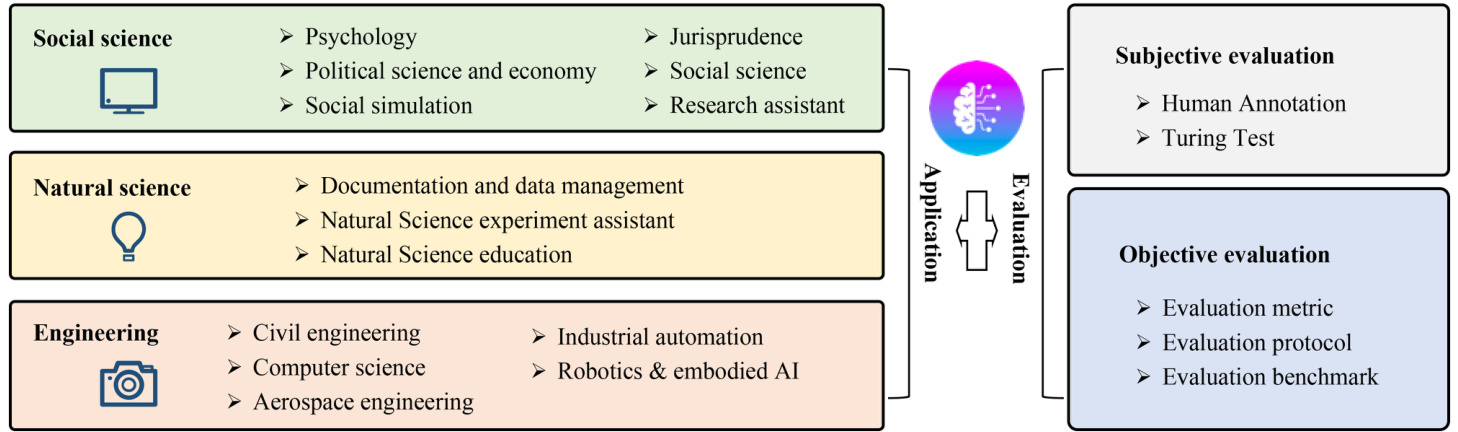

In this paper, we conduct a comprehensive survey of the field of LLM-based autonomous agents. Specifically, we organize our survey based on three aspects including the construction, application, and evaluation of LLM-based autonomous agents. For the agent construction, we focus on two problems, that is, (1) how to design the agent architecture to better leverage LLMs, and (2) how to inspire and enhance the agent capability to complete different tasks. Intuitively, the first problem aims to build the hardware fundamentals for the agent, while the second problem focus on providing the agent with software resources. For the first problem, we present a unified agent framework, which can encompass most of the previous studies. For the second problem, we provide a summary on the commonly-used strategies for agents’ capability acquisition. In addition to discussing agent construction, we also provide an systematic overview of the applications of LLM-based autonomous agents in social science, natural science, and engineering. Finally, we delve into the strategies for evaluating LLM-based autonomous agents, focusing on both subjective and objective strategies.

本文对大语言模型(LLM)驱动的自主AI智能体领域进行了全面综述。具体而言,我们从智能体构建、应用场景和评估方法三个维度组织本次调研。在智能体构建方面,我们聚焦两个核心问题:(1) 如何设计智能体架构以更好地利用大语言模型;(2) 如何激发和增强智能体完成多样化任务的能力。直观来看,第一个问题旨在为智能体构建硬件基础,而第二个问题则侧重于提供软件资源。针对第一个问题,我们提出了一个能涵盖多数现有研究的统一智能体框架;针对第二个问题,我们系统总结了智能体能力获取的常用策略。除构建方法外,我们还系统梳理了大语言模型智能体在社会科学、自然科学及工程领域的应用。最后,我们深入探讨了主客观相结合的大语言模型智能体评估策略。

Fig. 1 Illustration of the growth trend in the field of LLM-based autonomous agents. We present the cumulative number of papers published from January 2021 to August 2023. We assign different colors to represent various agent categories. For example, a game agent aims to simulate a game-player, while a tool agent mainly focuses on tool using. For each time period, we provide a curated list of studies with diverse agent categories

图 1: 基于大语言模型的自主智能体领域增长趋势示意图。我们展示了2021年1月至2023年8月期间发表的论文累计数量,并使用不同颜色区分各类智能体。例如游戏智能体(Game Agent)旨在模拟游戏玩家,而工具智能体(Tool Agent)主要关注工具使用。每个时间段我们都精选了涵盖多种智能体类别的代表性研究。

In summary, this survey conducts a systematic review and establishes comprehensive taxonomies for existing studies in the burgeoning field of LLM-based autonomous agents. Our focus encompasses three primary areas: construction of agents, their applications, and methods of evaluation. Drawing from a wealth of previous studies, we identify various challenges in this field and discuss potential future directions. We expect that our survey can provide newcomers of LLMbased autonomous agents with a comprehensive background knowledge, and also encourage further groundbreaking studies.

总之,本综述对基于大语言模型(LLM)的自主AI智能体这一新兴领域的研究进行了系统性梳理,并建立了完整的分类体系。我们重点聚焦三个核心方向:智能体构建、应用场景及评估方法。通过整合大量前人研究,我们指出了该领域面临的诸多挑战,并探讨了未来潜在的发展路径。我们希望本次综述能为基于大语言模型的自主AI智能体领域的新研究者提供全面的背景知识,同时激励更多突破性研究的开展。

2 LLM-based autonomous agent construction

2 基于大语言模型的自主AI智能体构建

LLM-based autonomous agents are expected to effectively perform diverse tasks by leveraging the human-like capabilities of LLMs. In order to achieve this goal, there are two significant aspects, that is, (1) which architecture should be designed to better use LLMs and (2) give the designed architecture, how to enable the agent to acquire capabilities for accomplishing specific tasks. Within the context of architecture design, we contribute a systematic synthesis of existing research, culminating in a comprehensive unified framework. As for the second aspect, we summarize the strategies for agent capability acquisition based on whether they fine-tune the LLMs. When comparing LLM-based autonomous agents to traditional machine learning, designing the agent architecture is analogous to determining the network structure, while the agent capability acquisition is similar to learning the network parameters. In the following, we introduce these two aspects more in detail.

基于大语言模型(LLM)的自主AI智能体有望通过利用LLM类人能力高效执行多样化任务。为实现该目标,需关注两大核心:(1) 设计何种架构以优化LLM使用;(2) 既定架构下如何使智能体获得特定任务能力。在架构设计方面,我们对现有研究进行系统梳理,最终提出一个全面统一的框架。针对第二方面,我们根据是否微调LLM总结了智能体能力获取策略。相较于传统机器学习,设计智能体架构类似于确定网络结构,而能力获取则类似于学习网络参数。下文将详细阐述这两个方面。

2.1 Agent architecture design

2.1 AI智能体架构设计

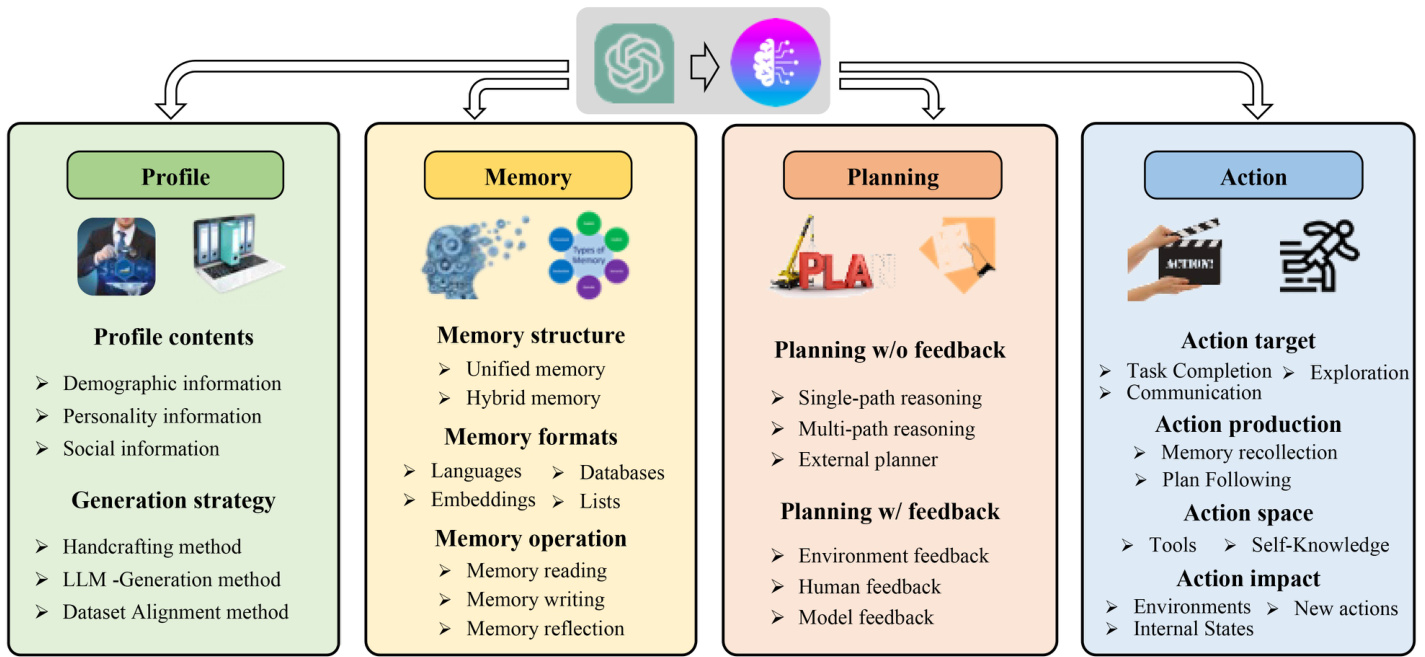

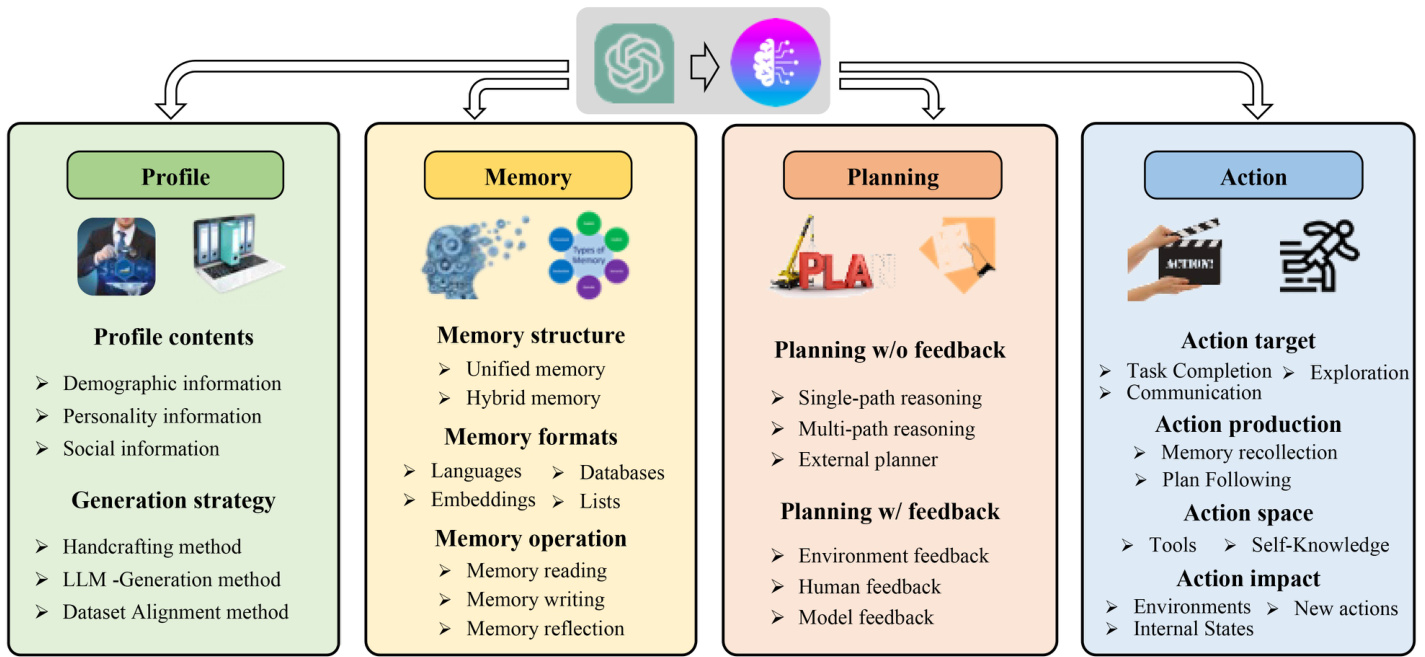

Recent advancements in LLMs have demonstrated their great potential to accomplish a wide range of tasks in the form of question-answering (QA). However, building autonomous agents is far from QA, since they need to fulfill specific roles and autonomously perceive and learn from the environment to evolve themselves like humans. To bridge the gap between traditional LLMs and autonomous agents, a crucial aspect is to design rational agent architectures to assist LLMs in maximizing their capabilities. Along this direction, previous work has developed a number of modules to enhance LLMs. In this section, we propose a unified framework to summarize these modules. In specific, the overall structure of our framework is illustrated Fig. 2, which is composed of a profiling module, a memory module, a planning module, and an action module. The purpose of the profiling module is to identify the role of the agent. The memory and planning modules place the agent into a dynamic environment, enabling it to recall past behaviors and plan future actions. The action module is responsible for translating the agent’s decisions into specific outputs. Within these modules, the profiling module impacts the memory and planning modules, and collectively, these three modules influence the action module. In the following, we detail these modules.

近年来,大语言模型(LLM)的进步已展现出其通过问答(QA)形式完成广泛任务的巨大潜力。然而,构建自主AI智能体远非问答那么简单,因为它们需要履行特定角色,像人类一样自主感知环境并从中学习进化。为弥合传统大语言模型与自主智能体之间的鸿沟,设计合理的智能体架构以帮助LLM最大化其能力成为关键。沿此方向,先前研究已开发了多个增强模块。本节我们提出统一框架来归纳这些模块,具体结构如图2所示,由角色分析模块、记忆模块、规划模块和行动模块构成。角色分析模块用于确定智能体的职能定位,记忆与规划模块使智能体置身动态环境,能够回溯过往行为并规划未来行动。行动模块负责将智能体决策转化为具体输出。这些模块中,角色分析模块会影响记忆与规划模块,三者共同作用于行动模块。下文将详细阐述各模块。

2.1.1 Profiling module

2.1.1 性能分析模块

Autonomous agents typically perform tasks by assuming specific roles, such as coders, teachers and domain experts [18,19]. The profiling module aims to indicate the profiles of the agent roles, which are usually written into the prompt to influence the LLM behaviors. Agent profiles typically encompass basic information such as age, gender, and career [20], as well as psychology information, reflecting the personalities of the agent, and social information, detailing the relationships between agents [21]. The choice of information to profile the agent is largely determined by the specific application scenarios. For instance, if the application aims to study human cognitive process, then the psychology information becomes pivotal. After identifying the types of profile information, the next important problem is to create specific profiles for the agents. Existing literature commonly employs the following three strategies.

自主AI智能体通常通过承担特定角色来执行任务,例如程序员、教师和领域专家 [18,19]。画像模块旨在定义智能体角色的属性特征,这些特征通常被写入提示词以影响大语言模型的行为。智能体画像通常包含年龄、性别和职业等基本信息 [20],以及反映智能体个性的心理特征信息,还有描述智能体间社会关系的社交信息 [21]。选择哪些信息来构建智能体画像主要由具体应用场景决定。例如,若应用目标是研究人类认知过程,那么心理特征信息就至关重要。在确定画像信息类型后,接下来的关键问题是为智能体创建具体画像。现有文献通常采用以下三种策略。

Fig. 2 A unified framework for the architecture design of LLM-based autonomous agent

图 2: 基于大语言模型的自主智能体架构设计统一框架

Handcrafting method: in this method, agent profiles are manually specified. For instance, if one would like to design agents with different personalities, he can use “you are an outgoing person” or “you are an introverted person” to profile the agent. The handcrafting method has been leveraged in a lot of previous work to indicate the agent profiles. For example, Generative Agent [22] describes the agent by the information like name, objectives, and relationships with other agents. MetaGPT [23], ChatDev [18], and Self-collaboration [24] predefine various roles and their corresponding responsibilities in software development, manually assigning distinct profiles to each agent to facilitate collaboration. PTLLM [25] aims to explore and quantify personality traits displayed in texts generated by LLMs. This method guides LLMs in generating diverse responses by manfully defining various agent characters through the use of personality assessment tools such as IPIP-NEO [26] and BFI [27]. [28] studies the toxicity of the LLM output by manually prompting LLMs with different roles, such as politicians, journalists and businessperson s. In general, the handcrafting method is very flexible, since one can assign any profile information to the agents. However, it can be also labor-intensive, particularly when dealing with a large number of agents.

手工制作方法:该方法通过人工指定AI智能体(agent)的配置文件。例如若要设计不同性格的智能体,可使用"你是个外向的人"或"你是个内向的人"来刻画其形象。该方法在先前诸多研究中被用于定义智能体特征:Generative Agent [22]通过姓名、目标、与其他智能体的关系等信息来描述智能体;MetaGPT [23]、ChatDev [18]和Self-collaboration [24]在软件开发中预定义不同角色及其对应职责,手动为每个智能体分配独特配置以促进协作;PTLLM [25]旨在探索和量化大语言模型生成文本中显现的人格特质,通过IPIP-NEO [26]和BFI [27]等人格评估工具手动定义各类角色,引导模型生成多样化响应;[28]则通过手动提示政治人物、记者、商人等不同角色,研究大语言模型输出的毒性。总体而言,手工制作方法具有高度灵活性,可为智能体分配任意配置信息,但在处理大量智能体时会显得耗时费力。

LLM-generation method: in this method, agent profiles are automatically generated based on LLMs. Typically, it begins by indicating the profile generation rules, elucidating the composition and attributes of the agent profiles within the target population. Then, one can optionally specify several seed agent profiles to serve as few-shot examples. At last, LLMs are leveraged to generate all the agent profiles. For example, RecAgent [21] first creates seed profiles for a few number of agents by manually crafting their backgrounds like age, gender, personal traits, and movie preferences. Then, it leverages ChatGPT to generate more agent profiles based on the seed information. The LLM-generation method can save significant time when the number of agents is large, but it may lack precise control over the generated profiles.

大语言模型生成方法:该方法基于大语言模型自动生成智能体档案。通常,首先明确档案生成规则,阐明目标群体中智能体档案的构成与属性。随后可选择性地指定若干种子智能体档案作为少样本示例。最后利用大语言模型生成所有智能体档案。例如,RecAgent [21] 先通过手工创建少量智能体的背景信息(如年龄、性别、个人特质和电影偏好)来生成种子档案,再基于这些种子信息调用ChatGPT生成更多档案。当智能体数量庞大时,大语言模型生成方法能显著节省时间,但可能对生成档案缺乏精确控制。

Dataset alignment method: in this method, the agent profiles are obtained from real-world datasets. Typically, one can first organize the information about real humans in the datasets into natural language prompts, and then leverage it to profile the agents. For instance, in [29], the authors assign roles to GPT-3 based on the demographic backgrounds (such as race/ethnicity, gender, age, and state of residence) of participants in the American National Election Studies (ANES). They subsequently investigate whether GPT-3 can produce similar results to those of real humans. The dataset alignment method accurately captures the attributes of the real population, thereby making the agent behaviors more meaningful and reflective of real-world scenarios.

数据集对齐方法:该方法通过真实世界数据集获取智能体画像。通常可先将数据集中真实人类的自然语言提示信息进行结构化处理,再用于构建智能体特征。例如在[29]中,作者根据美国国家选举研究(ANES)参与者的种族/民族、性别、年龄和居住州等人口统计背景,为GPT-3分配角色,进而探究GPT-3能否产生与真实人类相似的结果。这种数据对齐方法能精确捕捉真实人群特征,使智能体行为更具现实意义并真实反映现实场景。

Remark. While most of the previous work leverage the above profile generation strategies independently, we argue that combining them may yield additional benefits. For example, in order to predict social developments via agent simulation, one can leverage real-world datasets to profile a subset of the agents, thereby accurately reflecting the current social status. Subsequently, roles that do not exist in the real world but may emerge in the future can be manually assigned to the other agents, enabling the prediction of future social development. Beyond this example, one can also flexibly combine the other strategies. The profile module serves as the foundation for agent design, exerting significant influence on the agent memorization, planning, and action procedures.

备注:虽然之前的工作大多独立运用上述角色生成策略,但我们认为组合使用可能带来额外收益。例如,在通过智能体模拟预测社会发展时,可以结合现实数据集为部分智能体构建角色档案,从而准确反映当前社会现状;同时为其他智能体人工分配现实中尚不存在但未来可能出现的角色,以此预测社会发展走向。除该案例外,其他策略也能灵活组合。角色模块作为智能体设计的基础,对其记忆、规划和行动流程具有重要影响。

2.1.2 Memory module

2.1.2 内存模块

The memory module plays a very important role in the agent architecture design. It stores information perceived from the environment and leverages the recorded memories to facilitate future actions. The memory module can help the agent to accumulate experiences, self-evolve, and behave in a more consistent, reasonable, and effective manner. This section provides a comprehensive overview of the memory module, focusing on its structures, formats, and operations.

记忆模块在智能体架构设计中扮演着至关重要的角色。它存储从环境中感知到的信息,并利用记录的记忆来促进未来的行动。记忆模块能帮助智能体积累经验、自我进化,并以更一致、合理且高效的方式行动。本节全面概述了记忆模块,重点介绍其结构、格式和操作。

Memory structures: LLM-based autonomous agents usually incorporate principles and mechanisms derived from cognitive science research on human memory processes. Human memory follows a general progression from sensory memory that registers perceptual inputs, to short-term memory that maintains information transient ly, to long-term memory that consolidates information over extended periods. When designing the agent memory structures, researchers take inspiration from these aspects of human memory. In specific, short-term memory is analogous to the input information within the context window constrained by the transformer architecture. Long-term memory resembles the external vector storage that agents can rapidly query and retrieve from as needed. In the following, we introduce two commonly used memory structures based on the short- and long-term memories.

记忆结构:基于大语言模型(LLM)的自主智能体通常借鉴了人类记忆过程的认知科学研究原理与机制。人类记忆遵循从感知输入的感觉记忆,到短暂维持信息的短期记忆,再到长期巩固信息的长时记忆这一递进过程。在设计智能体记忆结构时,研究者从人类记忆的这些特征中获得启发。具体而言,短期记忆类似于受Transformer架构限制的上下文窗口内的输入信息,长时记忆则类似于智能体可按需快速查询检索的外部向量存储。下文将介绍基于短时记忆和长时记忆的两种常用记忆结构。

● Unified memory. This structure only simulates the human shot-term memory, which is usually realized by in-context learning, and the memory information is directly written into the prompts. For example, RLP [30] is a conversation agent, which maintains internal states for the speaker and listener. During each round of conversation, these states serve as LLM prompts, functioning as the agent’s short-term memory. SayPlan [31] is an embodied agent specifically designed for task planning. In this agent, the scene graphs and environment feedback serve as the agent’s short-term memory, guiding its actions. CALYPSO [32] is an agent designed for the game Dungeons & Dragons, which can assist Dungeon Masters in the creation and narration of stories. Its short-term memory is built upon scene descriptions, monster information, and previous summaries. DEPS [33] is also a game agent, but it is developed for Minecraft. The agent initially generates task plans and then utilizes them to prompt LLMs, which in turn produce actions to complete the task. These plans can be deemed as the agent’s short-term memory. In practice, implementing short-term memory is straightforward and can enhance an agent’s ability to perceive recent or con textually sensitive behaviors and observations. However, due to the limitation of context window of LLMs, it’s hard to put all memories into prompt, which may degrade the performance of agents. This method has high requirements on the window length of LLMs and the ability to handle long contexts. Therefore, many researchers resort to hybrid memory to alleviate this question. However, the limited context window of LLMs restricts incorporating comprehensive memories into prompts, which can impair agent performance. This challenge necessitates LLMs with larger context windows and the ability to handle extended contexts. Consequently, numerous researchers turn to hybrid memory systems to mitigate this

● 统一内存。这种结构仅模拟人类的短期记忆,通常通过上下文学习实现,记忆信息直接写入提示词中。例如,RLP [30] 是一种对话智能体,会为说话者和听者维护内部状态。在每轮对话中,这些状态作为大语言模型的提示词,充当智能体的短期记忆。SayPlan [31] 是专为任务规划设计的具身智能体,其场景图和环境反馈作为短期记忆指导行动。CALYPSO [32] 是为游戏《龙与地下城》设计的智能体,可协助地下城主创作和叙述故事,其短期记忆基于场景描述、怪物信息和先前摘要构建。DEPS [33] 同样是游戏智能体,但针对《我的世界》开发,该智能体首先生成任务计划,随后利用这些计划提示大语言模型生成动作完成任务,这些计划可视为其短期记忆。实际应用中,实现短期记忆较为直接,能增强智能体感知近期或上下文敏感行为及观察的能力。但由于大语言模型上下文窗口的限制,难以将所有记忆纳入提示词,可能影响智能体表现。该方法对大语言模型的窗口长度和长上下文处理能力要求较高,因此许多研究者转向混合内存方案以缓解该问题。

issue.

问题

● Hybrid memory. This structure explicitly models the human short-term and long-term memories. The short-term memory temporarily buffers recent perceptions, while longterm memory consolidates important information over time. For instance, Generative Agent [20] employs a hybrid memory structure to facilitate agent behaviors. The short-term memory contains the context information about the agent current situations, while the long-term memory stores the agent past behaviors and thoughts, which can be retrieved according to the current events. AgentSims [34] also implements a hybrid memory architecture. The information provided in the prompt can be considered as short-term memory. In order to enhance the storage capacity of memory, the authors propose a long-term memory system that utilizes a vector database, facilitating efficient storage and retrieval. Specifically, the agent’s daily memories are encoded as embeddings and stored in the vector database. If the agent needs to recall its previous memories, the long-term memory system retrieves relevant information using embedding similarities. This process can improve the consistency of the agent’s behavior. In GITM [16], the short-term memory stores the current trajectory, and the long-term memory saves reference plans summarized from successful prior trajectories. Long-term memory provides stable knowledge, while shortterm memory allows flexible planning. Reflexion [12] utilizes a short-term sliding window to capture recent feedback and incorporates persistent long-term storage to retain condensed insights. This combination allows for the utilization of both detailed immediate experiences and high-level abstractions. SCM [35] selectively activates the most relevant long-term knowledge to combine with short-term memory, enabling reasoning over complex contextual dialogues. Simply Retrieve [36] utilizes user queries as short-term memory and stores long-term memory using external knowledge bases. This design enhances the model accuracy while guaranteeing user privacy. Memory Sandbox [37] implements long-term and short-term memory by utilizing a 2D canvas to store memory objects, which can then be accessed throughout various conversations. Users can create multiple conversations with different agents on the same canvas, facilitating the sharing of memory objects through a simple drag-and-drop interface. In practice, integrating both short-term and long-term memories can enhance an agent’s ability for long-range reasoning and accumulation of valuable experiences, which are crucial for accomplishing tasks in complex environments.

● 混合记忆 (Hybrid memory)。这种结构明确模拟了人类的短期记忆和长期记忆。短期记忆临时缓冲近期感知信息,而长期记忆会随时间推移巩固重要信息。例如,Generative Agent [20] 采用混合记忆结构来支持智能体行为。其短期记忆包含智能体当前情境的上下文信息,长期记忆则存储智能体过去的行为与思考,可根据当前事件进行检索。AgentSims [34] 同样实现了混合记忆架构,提示词中提供的信息可视为短期记忆。为增强记忆存储容量,作者提出使用向量数据库的长期记忆系统,实现高效存储检索。具体而言,智能体的日常记忆会被编码为嵌入向量存入数据库,当需要回忆时,长期记忆系统通过嵌入相似度检索相关信息,这一过程能提升智能体行为的一致性。在 GITM [16] 中,短期记忆存储当前轨迹,长期记忆保存从成功历史轨迹中总结的参考方案。长期记忆提供稳定知识,短期记忆支持灵活规划。Reflexion [12] 采用短期滑动窗口捕获近期反馈,同时通过持久化长期存储保留凝练的洞见,这种组合既能利用详细即时经验,也能调用高层抽象。SCM [35] 选择性激活最相关的长期知识并与短期记忆结合,从而支持复杂情境对话推理。Simply Retrieve [36] 将用户查询作为短期记忆,使用外部知识库存储长期记忆,这种设计在保障用户隐私的同时提升了模型准确性。Memory Sandbox [37] 通过二维画布存储记忆对象来实现长短期记忆,这些对象可在不同对话中调用。用户可在同一画布上与多个智能体创建对话,通过拖拽界面轻松实现记忆对象共享。实际应用中,整合短期与长期记忆能增强智能体的长程推理能力和价值经验积累,这对复杂环境中的任务执行至关重要。

Remark. Careful readers may find that there may also exist another type of memory structure, that is, only based on the long-term memory. However, we find that such type of memory is rarely documented in the literature. Our speculation is that the agents are always situated in continuous and dynamic environments, with consecutive actions displaying a high correlation. Therefore, the capture of short-term memory is very important and usually cannot be disregarded.

备注。细心的读者可能会发现,还可能存在另一种记忆结构,即仅基于长期记忆。然而,我们发现此类记忆在文献中鲜有记载。我们推测,AI智能体始终处于连续动态的环境中,其连续动作具有高度相关性,因此短期记忆的捕获非常重要且通常不可忽视。

Memory formats: In addition to the memory structure, another perspective to analyze the memory module is based on the formats of the memory storage medium, for example, natural language memory or embedding memory. Different memory formats possess distinct strengths and are suitable for various applications. In the following, we introduce several representative memory formats.

存储格式:除了内存结构外,分析内存模块的另一个角度是基于存储介质的格式,例如自然语言内存或嵌入内存。不同的存储格式具有不同的优势,适用于各种应用场景。下面我们介绍几种具有代表性的存储格式。

● Natural languages. In this format, memory information such as the agent behaviors and observations are directly described using raw natural language. This format possesses several strengths. Firstly, the memory information can be expressed in a flexible and understandable manner. Moreover, it retains rich semantic information that can provide comprehensive signals to guide agent behaviors. In the previous work, Reflexion [12] stores experiential feedback in natural language within a sliding window. Voyager [38] employs natural language descriptions to represent skills within the Minecraft game, which are directly stored in memory.

● 自然语言 (Natural languages)。在这种格式中,AI智能体 (AI Agent) 的行为和观察等记忆信息直接通过原始自然语言描述。该格式具有多重优势:首先,记忆信息能以灵活且易于理解的方式呈现;其次,它保留了丰富的语义信息,能为智能体行为提供全面的引导信号。在先前工作中,Reflexion [12] 将经验反馈以自然语言形式存储在滑动窗口内,而Voyager [38] 则采用自然语言描述来表征《我的世界》(Minecraft) 游戏中的技能,这些描述直接存储在记忆系统中。

● Embeddings. In this format, memory information is encoded into embedding vectors, which can enhance the memory retrieval and reading efficiency. For instance, MemoryBank [39] encodes each memory segment into an embedding vector, which creates an indexed corpus for retrieval. [16] represents reference plans as embeddings to facilitate matching and reuse. Furthermore, ChatDev [18] encodes dialogue history into vectors for retrieval.

● 嵌入 (Embeddings)。在这种格式中,记忆信息被编码为嵌入向量,可以提高记忆检索和读取效率。例如,MemoryBank [39] 将每个记忆片段编码为一个嵌入向量,从而创建了一个用于检索的索引语料库。[16] 将参考计划表示为嵌入向量以便于匹配和重用。此外,ChatDev [18] 将对话历史编码为向量用于检索。

● Databases. In this format, memory information is stored in databases, allowing the agent to manipulate memories efficiently and comprehensively. For example, ChatDB [40] uses a database as a symbolic memory module. The agent can utilize SQL statements to precisely add, delete, and revise the memory information. In DB-GPT [41], the memory module is constructed based on a database. To more intuitively operate the memory information, the agents are fine-tuned to understand and execute SQL queries, enabling them to interact with databases using natural language directly.

● 数据库。在这种形式中,记忆信息存储在数据库中,使得智能体能够高效且全面地操作记忆。例如,ChatDB [40] 使用数据库作为符号记忆模块,智能体可以利用 SQL 语句精确地添加、删除和修改记忆信息。在 DB-GPT [41] 中,记忆模块基于数据库构建。为了更直观地操作记忆信息,智能体经过微调以理解和执行 SQL 查询,使其能够直接用自然语言与数据库交互。

● Structured lists. In this format, memory information is organized into lists, and the semantic of memory can be conveyed in an efficient and concise manner. For instance, GITM [16] stores action lists for sub-goals in a hierarchical tree structure. The hierarchical structure explicitly captures the relationships between goals and corresponding plans. RETLLM [42] initially converts natural language sentences into triplet phrases, and subsequently stores them in memory.

● 结构化列表。该格式将记忆信息组织成列表形式,能以高效简洁的方式传递记忆语义。例如,GITM [16] 在分层树状结构中存储子目标对应的动作列表,这种层级结构明确捕获了目标与对应计划之间的关系。RETLLM [42] 先将自然语言句子转换为三元组短语,再将其存入记忆。

Remark. Here we only show several representative memory formats, but it is important to note that there are many uncovered ones, such as the programming code used by [38]. Moreover, it should be emphasized that these formats are not mutually exclusive; many models incorporate multiple formats to concurrently harness their respective benefits. A notable example is the memory module of GITM [16], which utilizes a key-value list structure. In this structure, the keys are represented by embedding vectors, while the values consist of raw natural languages. The use of embedding vectors allows for efficient retrieval of memory records. By utilizing natural languages, the memory contents become highly comprehensive, enabling more informed agent actions.

备注:这里我们仅展示了几种具有代表性的记忆格式,但需要注意的是,还存在许多未涵盖的格式,例如[38]所使用的编程代码。此外,必须强调的是这些格式并非互斥关系,许多模型会融合多种格式以同时发挥各自优势。一个典型例子是GITM[16]的记忆模块,它采用了键值对列表结构:其中键由嵌入向量(embedding vectors)表示,值则由原始自然语言构成。嵌入向量的运用实现了记忆记录的高效检索,而自然语言的采用使记忆内容具备高度可解释性,从而支撑智能体做出更明智的决策。

Above, we mainly discuss the internal designs of the memory module. In the following, we turn our focus to memory operations, which are used to interact with external

上文主要讨论了内存模块的内部设计。接下来,我们将重点转向用于与外部交互的内存操作。

environments.

环境。

Memory operations: The memory module plays a critical role in allowing the agent to acquire, accumulate, and utilize significant knowledge by interacting with the environment. The interaction between the agent and the environment is accomplished through three crucial memory operations: memory reading, memory writing, and memory reflection. In the following, we introduce these operations more in detail.

内存操作:内存模块在AI智能体通过与环境的交互获取、积累和利用重要知识方面发挥着关键作用。智能体与环境的交互通过三个核心内存操作实现:内存读取、内存写入和内存反思。下文将详细阐述这些操作。

● Memory reading. The objective of memory reading is to extract meaningful information from memory to enhance the agent’s actions. For example, using the previously successful actions to achieve similar goals [16]. The key of memory reading lies in how to extract valuable information from history actions. Usually, there three commonly used criteria for information extraction, that is, the recency, relevance, and importance [20]. Memories that are more recent, relevant, and important are more likely to be extracted. Formally, we conclude the following equation from existing literature for memory information extraction:

● 记忆读取。记忆读取的目标是从记忆中提取有意义的信息,以增强智能体的行为。例如,使用先前成功的动作来实现类似目标 [16]。记忆读取的关键在于如何从历史行为中提取有价值的信息。通常,信息提取有三个常用标准,即时效性、相关性和重要性 [20]。越新近、越相关且越重要的记忆越容易被提取。形式上,我们从现有文献中总结出以下记忆信息提取公式:

$$

m^{* }=\arg\operatorname*{min}_{m\in M}\alpha s^{r e c}(q,m)+\beta s^{r e l}(q,m)+\gamma s^{i m p}(m),

$$

$$

m^{* }=\arg\operatorname*{min}_{m\in M}\alpha s^{r e c}(q,m)+\beta s^{r e l}(q,m)+\gamma s^{i m p}(m),

$$

where $q$ is the query, for example, the task that the agent should address or the context in which the agent is situated. $M$ is the set of all memories. $s^{r e c}(\cdot),s^{r e l}(\cdot)$ , and $s^{i m p}(\cdot)$ are the scoring functions for measuring the recency, relevance, and importance of the memory $m$ . These scoring functions can be implemented using various methods, for example, $s^{r e l}(q,m)$ can be realized based on LSH, ANNOY, HNSW, FAISS, and so on. It should be noted that $s^{i m p}$ only reflects the characters of the memory itself, thus it is unrelated to the query $q,\alpha,\beta$ and $\gamma$ are balancing parameters. By assigning them with different values, one can obtain various memory reading strategies. For example, by setting $\alpha=\gamma=0$ , many studies [16,30,38,42] only consider the relevance score $s^{r e l}$ for memory reading. By assigning $\alpha=\beta=\gamma=1.0$ , [20] equally weights all the above three metrics to extract information from memory.

其中 $q$ 是查询,例如AI智能体需要处理的任务或所处情境。$M$ 表示所有记忆的集合。$s^{rec}(\cdot)$、$s^{rel}(\cdot)$ 和 $s^{imp}(\cdot)$ 分别是用于衡量记忆 $m$ 的新近性、相关性和重要性的评分函数。这些评分函数可通过多种方法实现,例如 $s^{rel}(q,m)$ 可基于LSH、ANNOY、HNSW、FAISS等技术实现。需注意 $s^{imp}$ 仅反映记忆本身的特征,因此与查询 $q$ 无关。$\alpha$、$\beta$ 和 $\gamma$ 是平衡参数,通过赋予不同值可获得多种记忆读取策略。例如,当设置 $\alpha=\gamma=0$ 时,许多研究[16,30,38,42]仅考虑相关性评分 $s^{rel}$ 进行记忆读取;而当 $\alpha=\beta=\gamma=1.0$ 时,文献[20]对上述三个指标进行等权重处理以从记忆中提取信息。

● Memory writing. The purpose of memory writing is to store information about the perceived environment in memory. Storing valuable information in memory provides a foundation for retrieving informative memories in the future, enabling the agent to act more efficiently and rationally. During the memory writing process, there are two potential problems that should be carefully addressed. On one hand, it is crucial to address how to store information that is similar to existing memories (i.e., memory duplicated). On the other hand, it is important to consider how to remove information when the memory reaches its storage limit (i.e., memory overflow). In the following, we discuss these problems more in detail. (1) Memory duplicated. To incorporate similar information, people have developed various methods for integrating new and previous records. For instance, in [7], the successful action sequences related to the same sub-goal are stored in a list. Once the size of the list reaches $\mathrm{N}(=5)$ , all the sequences in it are condensed into a unified plan solution using LLMs. The original sequences in the memory are replaced with the newly generated one. Augmented LLM [43] aggregates duplicate information via count accumulation, avoiding redundant storage. (2) Memory overflow. In order to write information into the memory when it is full, people design different methods to delete existing information to continue the memorizing process. For example, in ChatDB [40], memories can be explicitly deleted based on user commands. RET-LLM [42] uses a fixed-size buffer for memory, overwriting the oldest entries in a first-in-first-out (FIFO) manner.

● 记忆写入。记忆写入的目的是将感知到的环境信息存储到记忆中。将有价值的信息存入记忆,为未来检索有用记忆奠定了基础,使智能体能够更高效、更合理地行动。在记忆写入过程中,有两个潜在问题需要谨慎处理:一方面,如何存储与现有记忆相似的信息(即记忆重复)至关重要;另一方面,当记忆达到存储限制时如何移除信息(即记忆溢出)同样重要。下文将详细讨论这些问题。

(1) 记忆重复。为整合相似信息,研究者开发了多种新旧记录融合方法。例如文献[7]将与同一子目标相关的成功动作序列存储在列表中,当列表大小达到$\mathrm{N}(=5)$时,所有序列会通过大语言模型压缩为统一规划方案,并用新生成的方案替换原始记忆。Augmented LLM [43]通过计数累加来聚合重复信息,避免冗余存储。

(2) 记忆溢出。为解决记忆满载时的写入问题,研究者设计了不同方法来删除现有信息以继续记忆过程。例如ChatDB [40]支持根据用户指令显式删除记忆,RET-LLM [42]采用固定大小的记忆缓冲区,以先进先出(FIFO)方式覆盖最旧条目。

● Memory reflection. Memory reflection emulates humans’ ability to witness and evaluate their own cognitive, emotional, and behavioral processes. When adapted to agents, the objective is to provide agents with the capability to independently summarize and infer more abstract, complex and high-level information. More specifically, in Generative Agent [20], the agent has the capability to summarize its past experiences stored in memory into broader and more abstract insights. To begin with, the agent generates three key questions based on its recent memories. Then, these questions are used to query the memory to obtain relevant information. Building upon the acquired information, the agent generates five insights, which reflect the agent high-level ideas. For example, the low-level memories “Klaus Mueller is writing a research paper”, “Klaus Mueller is engaging with a librarian to further his research”, and “Klaus Mueller is conversing with Ayesha Khan about his research” can induce the high-level insight “Klaus Mueller is dedicated to his research”. In addition, the reflection process can occur hierarchically, meaning that the insights can be generated based on existing insights. In GITM [16], the actions that successfully accomplish the sub-goals are stored in a list. When the list contains more than five elements, the agent summarizes them into a common and abstract pattern and replaces all the elements. In ExpeL [44], two approaches are introduced for the agent to acquire reflection. Firstly, the agent compares successful or failed trajectories within the same task. Secondly, the agent learns from a collection of successful trajectories to gain experiences.

● 记忆反射。记忆反射模拟了人类观察和评估自身认知、情感及行为过程的能力。当应用于AI智能体时,其目标是让智能体具备独立总结并推断更抽象、复杂和高级信息的能力。具体而言,在Generative Agent [20]中,智能体能够将存储在记忆中的过往经历总结为更广泛、更抽象的见解。首先,智能体基于近期记忆生成三个关键问题;随后利用这些问题查询记忆以获取相关信息;基于所得信息,智能体会生成五项体现其高层思维的见解。例如,底层记忆"Klaus Mueller正在撰写研究论文"、"Klaus Mueller与图书馆员交流以推进研究"以及"Klaus Mueller与Ayesha Khan讨论研究"可推导出高层见解"Klaus Mueller专注于研究工作"。此外,反射过程可分层进行,即基于现有见解生成新见解。在GITM [16]中,成功完成子目标的行为会被存入列表,当列表元素超过五个时,智能体会将其归纳为通用抽象模式并替换所有元素。ExpeL [44]提出了两种获取反思的方法:一是比较同一任务中的成功或失败轨迹;二是通过分析成功轨迹集合来获取经验。

A significant distinction between traditional LLMs and the agents is that the latter must possess the capability to learn and complete tasks in dynamic environments. If we consider the memory module as responsible for managing the agents’ past behaviors, it becomes essential to have another significant module that can assist the agents in planning their future actions. In the following, we present an overview of how researchers design the planning module.

传统大语言模型与AI智能体的一个重要区别在于,后者必须具备在动态环境中学习和完成任务的能力。如果将记忆模块视为负责管理AI智能体过去行为的组件,那么另一个关键模块就显得至关重要——它需要帮助AI智能体规划未来行动。下文我们将概述研究人员如何设计这一规划模块。

2.1.3 Planning module

2.1.3 规划模块

When faced with a complex task, humans tend to deconstruct it into simpler subtasks and solve them individually. The planning module aims to empower the agents with such human capability, which is expected to make the agent behave more reasonably, powerfully, and reliably. In specific, we summarize existing studies based on whether the agent can receive feedback in the planing process, which are detailed as follows:

面对复杂任务时,人类倾向于将其拆解为更简单的子任务并逐个解决。规划模块旨在赋予AI智能体这种类人能力,使其行为更合理、强大且可靠。具体而言,我们根据智能体在规划过程中能否接收反馈,将现有研究分为以下两类:

Planning without feedback: In this method, the agents do not receive feedback that can influence its future behaviors after taking actions. In the following, we present several

无反馈规划:在此方法中,智能体在执行动作后不会收到可影响其未来行为的反馈。下文将介绍几种

representative strategies.

代表性策略。

● Single-path reasoning. In this strategy, the final task is decomposed into several intermediate steps. These steps are connected in a cascading manner, with each step leading to only one subsequent step. LLMs follow these steps to achieve the final goal. Specifically, Chain of Thought (CoT) [45] proposes inputting reasoning steps for solving complex problems into the prompt. These steps serve as examples to inspire LLMs to plan and act in a step-by-step manner. In this method, the plans are created based on the inspiration from the examples in the prompts. Zero-shot-CoT [46] enables LLMs to generate task reasoning processes by prompting them with trigger sentences like “think step by step”. Unlike CoT, this method does not incorporate reasoning steps as examples in the prompts. Re-Prompting [47] involves checking whether each step meets the necessary prerequisites before generating a plan. If a step fails to meet the prerequisites, it introduces a prerequisite error message and prompts the LLM to regenerate the plan. ReWOO [48] introduces a paradigm of separating plans from external observations, where the agents first generate plans and obtain observations independently, and then combine them together to derive the final results. HuggingGPT [13] first decomposes the task into many subgoals, and then solves each of them based on Hugging face. Different from CoT and Zero-shot-CoT, which outcome all the reasoning steps in a one-shot manner, ReWOO and HuggingGPT produce the results by accessing LLMs multiply times.

● 单路径推理 (Single-path reasoning)。该策略将最终任务分解为若干中间步骤,这些步骤以级联方式连接,每个步骤仅导向一个后续步骤。大语言模型通过遵循这些步骤实现最终目标。具体而言,思维链 (Chain of Thought, CoT) [45] 提出在提示词中输入解决复杂问题的推理步骤,这些步骤作为示例启发大语言模型进行分步规划与执行。该方法中的规划基于提示词中的示例启发生成。零样本思维链 (Zero-shot-CoT) [46] 通过"逐步思考"等触发句提示大语言模型生成任务推理过程,与CoT不同,该方法不会在提示词中包含推理步骤示例。重新提示 (Re-Prompting) [47] 在生成计划前会检查每个步骤是否满足必要前提条件,若未满足则引入前提错误信息并提示大语言模型重新生成计划。ReWOO [48] 提出将规划与外部观察分离的范式,智能体先独立生成规划并获取观察结果,再将二者结合得出最终结论。HuggingGPT [13] 首先将任务分解为多个子目标,再基于HuggingFace平台逐一解决。与CoT和零样本思维链一次性输出所有推理步骤不同,ReWOO和HuggingGPT通过多次访问大语言模型来生成结果。

● Multi-path reasoning. In this strategy, the reasoning steps for generating the final plans are organized into a tree-like structure. Each intermediate step may have multiple subsequent steps. This approach is analogous to human thinking, as individuals may have multiple choices at each reasoning step. In specific, Self-consistent CoT (CoT-SC) [49] believes that each complex problem has multiple ways of thinking to deduce the final answer. Thus, it starts by employing CoT to generate various reasoning paths and corresponding answers. Subsequently, the answer with the highest frequency is chosen as the final output. Tree of Thoughts (ToT) [50] is designed to generate plans using a tree-like reasoning structure. In this approach, each node in the tree represents a “thought,” which corresponds to an intermediate reasoning step. The selection of these intermediate steps is based on the evaluation of LLMs. The final plan is generated using either the breadth-first search (BFS) or depth-first search (DFS) strategy. Comparing with CoT-SC, which generates all the planed steps together, ToT needs to query LLMs for each reasoning step. In RecMind [51], the authors designed a self-inspiring mechanism, where the discarded historical information in the planning process is also leveraged to derive new reasoning steps. In GoT [52], the authors expand the tree-like reasoning structure in ToT to graph structures, resulting in more powerful prompting strategies. In AoT [53], the authors design a novel method to enhance the reasoning processes of LLMs by incorporating algorithmic examples into the prompts. Remarkably, this method only needs to query LLMs for only one or a few times. In [54], the LLMs are leveraged as zero-shot planners.

● 多路径推理。该策略将生成最终计划的推理步骤组织成树状结构,每个中间步骤可能包含多个后续步骤。这种方法模拟了人类思维方式,因为个体在每个推理步骤中可能存在多种选择。具体而言,自洽思维链 (CoT-SC) [49] 认为每个复杂问题都存在多种推导最终答案的思考路径,因此首先生成多条推理路径及对应答案,然后选择出现频率最高的答案作为最终输出。思维树 (ToT) [50] 采用树状推理结构生成计划,其中每个节点代表一个对应中间推理步骤的"思考",这些中间步骤的选择基于大语言模型的评估,最终计划通过广度优先搜索 (BFS) 或深度优先搜索 (DFS) 策略生成。与一次性生成所有计划步骤的CoT-SC相比,ToT需要为每个推理步骤查询大语言模型。RecMind [51] 设计了自我启发机制,利用规划过程中被丢弃的历史信息来推导新推理步骤。GoT [52] 将ToT的树状推理结构扩展为图结构,从而形成更强大的提示策略。AoT [53] 通过将算法示例融入提示词来增强大语言模型的推理过程,值得注意的是该方法仅需查询大语言模型一至数次。文献[54]则直接将大语言模型作为零样本规划器使用。

At each planning step, they first generate multiple possible next steps, and then determine the final one based on their distances to admissible actions. [55] further improves [54] by incorporating examples that are similar to the queries in the prompts. RAP [56] builds a world model to simulate the potential benefits of different plans based on Monte Carlo Tree Search (MCTS), and then, the final plan is generated by aggregating multiple MCTS iterations. To enhance comprehension, we provide an illustration comparing the strategies of single-path and multi-path reasoning in Fig. 3.

在每次规划步骤中,他们首先生成多个可能的下一步,然后根据这些步骤与可执行动作的距离确定最终选择。[55]通过在与查询相似的提示中加入示例,进一步改进了[54]的方法。RAP [56]构建了一个世界模型,基于蒙特卡洛树搜索(MCTS)模拟不同计划的潜在收益,最终通过聚合多次MCTS迭代生成最终计划。为了便于理解,我们在图3中提供了单路径与多路径推理策略的对比示意图。

● External planner. Despite the demonstrated power of LLMs in zero-shot planning, effectively generating plans for domain-specific problems remains highly challenging. To address this challenge, researchers turn to external planners. These tools are well-developed and employ efficient search algorithms to rapidly identify correct, or even optimal, plans. In specific, $\mathrm{LLM{+}P}$ [57] first transforms the task descriptions into formal Planning Domain Definition Languages (PDDL), and then it uses an external planner to deal with the PDDL. Finally, the generated results are transformed back into natural language by LLMs. Similarly, LLM-DP [58] utilizes LLMs to convert the observations, the current world state, and the target objectives into PDDL. Subsequently, this transformed data is passed to an external planner, which efficiently determines the final action sequence. CO-LLM [22] demonstrates that LLMs is good at generating high-level plans, but struggle with lowlevel control. To address this limitation, a heuristic ally designed external low-level planner is employed to effectively execute actions based on high-level plans.

● 外部规划器。尽管大语言模型在零样本规划中展现出强大能力,但针对特定领域问题有效生成计划仍极具挑战性。为解决这一难题,研究者转向外部规划器。这些工具发展成熟,采用高效搜索算法快速找到正确甚至最优方案。具体而言,$\mathrm{LLM{+}P}$ [57] 首先将任务描述转换为形式化的规划领域定义语言 (PDDL) ,随后调用外部规划器处理PDDL描述,最终由大语言模型将生成结果转回自然语言。类似地,LLM-DP [58] 利用大语言模型将观察数据、当前世界状态和目标对象转化为PDDL描述,再交由外部规划器高效确定最终动作序列。CO-LLM [22] 研究表明大语言模型擅长生成高层计划,但在底层控制方面表现欠佳。为此,该系统引入启发式设计的外部底层规划器,基于高层计划高效执行具体动作。

Planning with feedback: In many real-world scenarios, the agents need to make long-horizon planning to solve complex tasks. When facing these tasks, the above planning modules without feedback can be less effective due to the following reasons: firstly, generating a flawless plan directly from the beginning is extremely difficult as it needs to consider various complex preconditions. As a result, simply following the initial plan often leads to failure. Moreover, the execution of the plan may be hindered by unpredictable transition dynamics, rendering the initial plan non-executable. Simultaneously, when examining how humans tackle complex tasks, we find that individuals may iterative ly make and revise their plans based on external feedback. To simulate such human capability, researchers have designed many planning modules, where the agent can receive feedback after taking actions. The feedback can be obtained from environments, humans, and models, which are detailed in the following.

带反馈的规划:在许多现实场景中,智能体需要进行长周期规划来解决复杂任务。面对这些任务时,上述无反馈的规划模块可能因以下原因效率低下:首先,从一开始就生成完美计划极其困难,因为这需要考虑各种复杂的先决条件。因此,单纯遵循初始计划往往会导致失败。此外,计划的执行可能受到不可预测的状态转移动态阻碍,使初始计划无法实施。同时,观察人类处理复杂任务的方式时,我们发现个体会基于外部反馈迭代制定并修正计划。为模拟这种人类能力,研究者设计了多种规划模块,使智能体在执行动作后能接收反馈。反馈可来自环境、人类和模型,具体如下。

● Environmental feedback. This feedback is obtained from the objective world or virtual environment. For instance, it could be the game’s task completion signals or the observations made after the agent takes an action. In specific, ReAct [59] proposes constructing prompts using thought-actobservation triplets. The thought component aims to facilitate high-level reasoning and planning for guiding agent behaviors. The act represents a specific action taken by the agent. The observation corresponds to the outcome of the action, acquired through external feedback, such as search engine results. The next thought is influenced by the previous observations, which makes the generated plans more adaptive to the environment. Voyager [38] makes plans by incorporating three types of environment feedback including the intermediate progress of program execution, the execution error and self-verification results. These signals can help the agent to make better plans for the next action. Similar to Voyager, Ghost [16] also incorporates feedback into the reasoning and action taking processes. This feedback encompasses the environment states as well as the success and failure information for each executed action. SayPlan [31] leverages environmental feedback derived from a scene graph simulator to validate and refine its strategic formulations. This simulator is adept at discerning the outcomes and state transitions subsequent to agent actions, facilitating SayPlan’s iterative re calibration of its strategies until a viable plan is ascertained. In DEPS [33], the authors argue that solely providing information about the completion of a task is often inadequate for correcting planning errors. Therefore, they propose informing the agent about the detail reasons for task failure, allowing them to more effectively revise their plans. LLM-Planner [60] introduces a grounded re-planning algorithm that dynamically updates plans generated by LLMs when encountering object mismatches and unattainable plans during task completion. Inner Monologue [61] provides three types of feedback to the agent after it takes actions: (1) whether the task is successfully completed, (2) passive scene descriptions, and (3) active scene descriptions. The former two are generated from the environments, which makes the agent actions more reasonable.

● 环境反馈。这类反馈来自客观世界或虚拟环境。例如,游戏任务完成信号或智能体执行动作后的观察结果。具体而言,ReAct [59] 提出使用思考-行动-观察三元组构建提示。思考模块用于促进高层推理与规划以指导智能体行为,行动代表智能体执行的具体动作,观察则对应通过外部反馈(如搜索引擎结果)获取的动作结果。后续思考会受到先前观察的影响,从而使生成的计划更具环境适应性。Voyager [38] 通过整合三类环境反馈制定计划:程序执行的中间进度、执行错误及自我验证结果。这些信号能帮助智能体优化后续动作规划。与Voyager类似,Ghost [16] 也将反馈融入推理与行动过程,包括环境状态及每个已执行动作的成功/失败信息。SayPlan [31] 利用源自场景图模拟器的环境反馈来验证和完善策略方案,该模拟器能精准识别智能体动作后的结果与状态转换,支持策略的迭代优化直至确定可行方案。DEPS [33] 指出仅提供任务完成信息通常不足以修正规划错误,因此建议向智能体反馈任务失败的具体原因以提升计划修订效率。LLM-Planner [60] 提出基于场景的重新规划算法,当任务执行过程中出现对象不匹配或计划不可行时,动态更新大语言模型生成的计划。Inner Monologue [61] 在智能体行动后提供三类反馈:(1) 任务是否成功完成,(2) 被动场景描述,(3) 主动场景描述。前两类由环境生成,可使智能体行为更趋合理。

Fig. 3 Comparison between the strategies of single-path and multi-path reasoning. LMZSP is the model proposed in [54]

图 3: 单路径与多路径推理策略对比。LMZSP 是文献 [54] 提出的模型

● Human feedback. In addition to obtaining feedback from the environment, directly interacting with humans is also a very intuitive strategy to enhance the agent planning capability. The human feedback is a subjective signal. It can effectively make the agent align with the human values and preferences, and also help to alleviate the hallucination problem. In Inner Monologue [61], the agent aims to perform high-level natural language instructions in a 3D visual environment. It is given the capability to actively solicit feedback from humans regarding scene descriptions. Then, the agent incorporates the human feedback into its prompts, enabling more informed planning and reasoning. In the above cases, we can see, different types of feedback can be combined to enhance the agent planning capability. For example, Inner Monologue [61] collects both environment and human feedback to facilitate the agent plans.

● 人类反馈。除了从环境中获取反馈,直接与人类互动也是提升智能体规划能力的一种非常直观的策略。人类反馈是一种主观信号,它能有效使智能体与人类价值观和偏好对齐,并有助于缓解幻觉问题。在Inner Monologue [61]中,智能体旨在3D视觉环境中执行高级自然语言指令,被赋予了主动向人类征求场景描述反馈的能力。随后,智能体将人类反馈纳入其提示(prompt)中,从而实现更明智的规划和推理。从上述案例可以看出,不同类型的反馈可以结合使用以增强智能体规划能力。例如,Inner Monologue [61]同时收集环境和人类反馈来辅助智能体规划。

● Model feedback. Apart from the aforementioned environmental and human feedback, which are external signals, researchers have also investigated the utilization of internal feedback from the agents themselves. This type of feedback is usually generated based on pre-trained models. In specific, [62] proposes a self-refine mechanism. This mechanism consists of three crucial components: output, feedback, and refinement. Firstly, the agent generates an output. Then, it utilizes LLMs to provide feedback on the output and offer guidance on how to refine it. At last, the output is improved by the feedback and refinement. This output-feedback-refinement process iterates until reaching some desired conditions. SelfCheck [63] allows agents to examine and evaluate their reasoning steps generated at various stages. They can then correct any errors by comparing the outcomes. InterAct [64] uses different language models (such as ChatGPT and Instruct GP T) as auxiliary roles, such as checkers and sorters, to help the main language model avoid erroneous and inefficient actions. ChatCoT [65] utilizes model feedback to improve the quality of its reasoning process. The model feedback is generated by an evaluation module that monitors the agent reasoning steps. Reflexion [12] is developed to enhance the agent’s planning capability through detailed verbal feedback. In this model, the agent first produces an action based on its memory, and then, the evaluator generates feedback by taking the agent trajectory as input. In contrast to previous studies, where the feedback is given as a scalar value, this model leverages LLMs to provide more detailed verbal feedback, which can provide more comprehensive supports for the agent plans.

● 模型反馈。除了上述环境和人类反馈这类外部信号外,研究者还探索了利用AI智能体自身产生的内部反馈。这类反馈通常基于预训练模型生成。具体而言,[62]提出了一种自我优化机制,该机制包含三个关键组件:输出、反馈和优化。首先,智能体生成输出;接着利用大语言模型对输出提供反馈和改进建议;最终根据反馈进行优化。这种"输出-反馈-优化"流程会循环迭代直至满足预设条件。SelfCheck[63]允许智能体在不同阶段检视和评估自身生成的推理步骤,通过结果对比纠正错误。InterAct[64]采用不同语言模型(如ChatGPT和InstructGPT)作为校验器、排序器等辅助角色,帮助主语言模型规避错误和低效操作。ChatCoT[65]通过评估模块监控智能体推理步骤来生成模型反馈,从而提升推理质量。Reflexion[12]通过详细语言反馈增强智能体规划能力:智能体先基于记忆生成动作,评估器再以行为轨迹为输入生成反馈。与以往研究使用标量反馈不同,该模型利用大语言模型提供更详尽的语言反馈,为智能体规划提供更全面的支持。

Remark. In conclusion, the implementation of planning module without feedback is relatively straightforward. However, it is primarily suitable for simple tasks that only require a small number of reasoning steps. Conversely, the strategy of planning with feedback needs more careful designs to handle the feedback. Nevertheless, it is considerably more powerful and capable of effectively addressing complex tasks that involve long-range reasoning.

备注。总之,无反馈规划模块的实现相对简单直接。然而,它主要适用于仅需少量推理步骤的简单任务。相反,带反馈的规划策略需要更精细的设计来处理反馈信息,但其能力显著更强,能有效解决涉及长程推理的复杂任务。

2.1.4 Action module

2.1.4 行动模块

The action module is responsible for translating the agent’s decisions into specific outcomes. This module is located at the most downstream position and directly interacts with the environment. It is influenced by the profile, memory, and planning modules. This section introduces the action module from four perspectives: (1) Action goal: what are the intended outcomes of the actions? (2) Action production: how are the actions generated? (3) Action space: what are the available actions? (4) Action impact: what are the consequences of the actions? Among these perspectives, the first two focus on the aspects preceding the action (“before-action” aspects), the third focuses on the action itself (“in-action” aspect), and the fourth emphasizes the impact of the actions (“after-action” aspect).

动作模块负责将AI智能体的决策转化为具体结果。该模块位于最下游位置,直接与环境交互,并受档案、记忆和规划模块的影响。本节从四个角度介绍动作模块:(1) 动作目标:动作的预期结果是什么?(2) 动作生成:动作是如何产生的?(3) 动作空间:可用的动作有哪些?(4) 动作影响:动作会产生什么后果?其中前两个角度关注动作前的方面("动作前"阶段),第三个角度关注动作本身("动作中"阶段),第四个角度强调动作的影响("动作后"阶段)。

Action goal: The agent can perform actions with various objectives. Here, we present several representative examples: (1) Task Completion. In this scenario, the agent’s actions are aimed at accomplishing specific tasks, such as crafting an iron pickaxe in Minecraft [38] or completing a function in software development [18]. These actions usually have well-defined objectives, and each action contributes to the completion of the final task. Actions aimed at this type of goal are very common in existing literature. (2) Communication. In this case, the actions are taken to communicate with the other agents or real humans for sharing information or collaboration. For example, the agents in ChatDev [18] may communicate with each other to collectively accomplish software development tasks. In Inner Monologue [61], the agent actively engages in communication with humans and adjusts its action strategies based on human feedback. (3) Environment Exploration. In this example, the agent aims to explore unfamiliar environments to expand its perception and strike a balance between exploring and exploiting. For instance, the agent in Voyager [38] may explore unknown skills in their task completion process, and continually refine the skill execution code based on environment feedback through trial and error.

行动目标:AI智能体能够执行具有不同目的的动作。以下是几个典型示例:(1) 任务完成。这种情况下,智能体的动作旨在完成特定任务,例如在《我的世界》中制作铁镐[38]或在软件开发中实现某个功能[18]。这类动作通常具有明确目标,每个动作都对最终任务的完成有所贡献。现有文献中针对此类目标的动作十分常见。(2) 交流协作。此时动作的目的是与其他智能体或真实人类进行信息共享或协作。例如ChatDev[18]中的智能体会相互沟通以共同完成软件开发任务,而Inner Monologue[61]中的智能体则主动与人类交流,并根据人类反馈调整动作策略。(3) 环境探索。此类情况下,智能体旨在探索陌生环境以扩展认知,并在探索与利用之间取得平衡。例如Voyager[38]中的智能体在任务完成过程中可能发现未知技能,并通过试错不断优化技能执行代码。

Action production: Different from ordinary LLMs, where the model input and output are directly associated, the agent may take actions via different strategies and sources. In the following, we introduce two types of commonly used action production strategies. (1) Action via memory recollection. In this strategy, the action is generated by extracting information from the agent memory according to the current task. The task and the extracted memories are used as prompts to trigger the agent actions. For example, in Generative Agents [20], the agent maintains a memory stream, and before taking each action, it retrieves recent, relevant and important information from the memory steam to guide the agent actions. In GITM [16], in order to achieve a low-level sub-goal, the agent queries its memory to determine if there are any successful experiences related to the task. If similar tasks have been completed previously, the agent invokes the previously successful actions to handle the current task directly. In collaborative agents such as ChatDev [18] and MetaGPT [23], different agents may communicate with each other. In this process, the conversation history in a dialog is remembered in the agent memories. Each utterance generated by the agent is influenced by its memory. (2) Action via plan following. In this strategy, the agent takes actions following its pregenerated plans. For instance, in DEPS [33], for a given task, the agent first makes action plans. If there are no signals indicating plan failure, the agent will strictly adhere to these plans. In GITM [16], the agent makes high-level plans by decomposing the task into many sub-goals. Based on these plans, the agent takes actions to solve each sub-goal sequentially to complete the final task.

动作生成:与普通大语言模型直接关联输入输出不同,AI智能体可通过不同策略和来源采取行动。以下介绍两种常用动作生成策略。(1) 基于记忆回溯的动作生成。该策略通过从智能体记忆中提取与当前任务相关的信息来生成动作,任务内容和提取的记忆作为提示触发智能体行为。例如在Generative Agents [20]中,智能体维护记忆流,每次行动前会从记忆流中检索近期、相关且重要的信息来指导行为。GITM [16]为实现低级子目标,会查询记忆库中是否存在相关成功经验,若存在则直接调用历史成功动作处理当前任务。在ChatDev [18]和MetaGPT [23]等协作型智能体中,对话历史会被存入记忆,每个生成语句都受记忆影响。(2) 基于计划遵循的动作生成。该策略要求智能体按预设计划执行动作,如DEPS [33]中智能体先制定行动计划,若无失败信号则严格遵循计划。GITM [16]通过任务分解生成高层计划,智能体依次解决各子目标以完成最终任务。

Action space: Action space refers to the set of possible actions that can be performed by the agent. In general, we can roughly divide these actions into two classes: (1) external tools and (2) internal knowledge of the LLMs. In the following, we introduce these actions more in detail.

动作空间:动作空间指的是智能体可以执行的可能动作集合。总体而言,我们可以将这些动作大致分为两类:(1) 外部工具 和 (2) 大语言模型的内部知识。下文将更详细地介绍这些动作。

● External tools. While LLMs have been demonstrated to be effective in accomplishing a large amount of tasks, they may not work well for the domains which need comprehensive expert knowledge. In addition, LLMs may also encounter hallucination problems, which are hard to be resolved by themselves. To alleviate the above problems, the agents are empowered with the capability to call external tools for executing action. In the following, we present several representative tools which have been exploited in the literature.

● 外部工具。虽然大语言模型已被证明能有效完成大量任务,但在需要综合专业知识的领域可能表现欠佳。此外,大语言模型还存在幻觉问题,这类问题往往难以自主解决。为缓解上述问题,AI智能体被赋予调用外部工具执行操作的能力。下文列举了文献中已应用的几种代表性工具:

(1) APIs. Leveraging external APIs to complement and expand action space is a popular paradigm in recent years. For example, HuggingGPT [13] leverages the models on Hugging Face to accomplish complex user tasks. [66,67] propose to automatically generate queries to extract relevant content from external Web pages when responding to user request. TPTU [67] interfaces with both Python interpreters and LaTeX compilers to execute sophisticated computations such as square roots, factorials and matrix operations. Another type of APIs is the ones that can be directly invoked by LLMs based on natural language or code inputs. For instance, Gorilla [68] is a fine-tuned LLM designed to generate accurate input arguments for API calls and mitigate the issue of hallucination during external API invocations. ToolFormer [15] is an LLMbased tool transformation system that can automatically convert a given tool into another one with different functionalities or formats based on natural language instructions. API-Bank [69] is an LLM-based API recommendation agent that can automatically search and generate appropriate API calls for various programming languages and domains. API-Bank also provides an interactive interface for users to easily modify and execute the generated API calls. ToolBench [14] is an LLM-based tool generation system that can automatically design and implement various practical tools based on natural language requirements. The tools generated by ToolBench include calculators, unit converters, calendars, maps, charts, etc. RestGPT [70] connects LLMs with RESTful APIs, which follow widely accepted standards for Web services development, making the resulting program more compatible with real-world applications. TaskMatrix.AI [71] connects LLMs with millions of APIs to support task execution. At its core lies a multimodal conversational foundational model that interacts with users, understands their goals and context, and then produces executable code for particular tasks. All these agents utilize external APIs as their tools, and provide interactive interfaces for users to easily modify and execute the generated or transformed tools.

(1) API。近年来,利用外部API补充和扩展行动空间已成为流行范式。例如HuggingGPT [13]借助Hugging Face平台上的模型完成复杂用户任务。[66,67]提出在响应用户请求时自动生成查询以从外部网页提取相关内容。TPTU [67]通过对接Python语言解释器和LaTeX编译器来执行平方根、阶乘和矩阵运算等复杂计算。另一类API可直接通过自然语言或代码输入被大语言模型调用,如Gorilla [68]作为微调后的大语言模型,能生成精确的API调用参数并缓解外部API调用时的幻觉问题。ToolFormer [15]是基于大语言模型的工具转换系统,能根据自然语言指令将给定工具自动转化为具有不同功能或格式的新工具。API-Bank [69]是基于大语言模型的API推荐智能体,可自动搜索并生成适用于不同编程语言和领域的API调用,同时提供交互界面供用户修改和执行生成的API调用。ToolBench [14]是基于大语言模型的工具生成系统,能根据自然语言需求自动设计与实现各类实用工具,包括计算器、单位转换器、日历、地图、图表等。RestGPT [70]将大语言模型与遵循Web服务开发通用标准的RESTful API对接,使生成程序更兼容实际应用场景。TaskMatrix.AI [71]通过连接数百万API支持任务执行,其核心是多模态对话基础模型,可理解用户目标与上下文并生成特定任务的可执行代码。这些智能体均将外部API作为工具使用,并提供交互界面方便用户修改和执行生成或转换后的工具。

(2) Databases & Knowledge Bases. Integrating external database or knowledge base enables agents to obtain specific domain information for generating more realistic actions. For example, ChatDB [40] employs SQL statements to query databases, facilitating actions by the agents in a logical manner. MRKL [72] and OpenAGI [73] incorporate various expert systems such as knowledge bases and planners to access domain-specific information.

(2) 数据库与知识库。集成外部数据库或知识库使AI智能体能够获取特定领域信息,从而生成更符合实际的动作。例如,ChatDB [40] 使用SQL语句查询数据库,以逻辑方式辅助智能体执行动作。MRKL [72] 和 OpenAGI [73] 整合了多种专家系统(如知识库和规划器)来访问领域专有信息。

(3) External models. Previous studies often utilize external models to expand the range of possible actions. In comparison to APIs, external models typically handle more complex tasks. Each external model may correspond to multiple APIs. For example, to enhance the text retrieval capability, MemoryBank [39] incorporates two language models: one is designed to encode the input text, while the other is responsible for matching the query statements. ViperGPT [74] firstly uses Codex, which is implemented based on language model, to generate Python code from text descriptions, and then executes the code to complete the given tasks. TPTU [67] incorporates various LLMs to accomplish a wide range of language generation tasks such as generating code, producing lyrics, and more. ChemCrow [75] is an LLM-based chemical agent designed to perform tasks in organic synthesis, drug discovery, and material design. It utilizes seventeen expertdesigned models to assist its operations. MM-REACT [76] integrates various external models, such as VideoBERT for video sum mari z ation, X-decoder for image generation, and SpeechBERT for audio processing, enhancing its capability in diverse multimodal scenarios.

(3) 外部模型。先前研究常利用外部模型来扩展可能的行动范围。相比API,外部模型通常处理更复杂的任务。每个外部模型可能对应多个API。例如,为增强文本检索能力,MemoryBank [39] 整合了两个语言模型:一个负责编码输入文本,另一个负责匹配查询语句。ViperGPT [74] 首先使用基于语言模型实现的Codex从文本描述生成Python代码,再执行代码完成任务。TPTU [67] 整合了多种大语言模型来完成代码生成、歌词创作等广泛的语言生成任务。ChemCrow [75] 是一个基于大语言模型的化学智能体,用于执行有机合成、药物发现和材料设计任务,它借助17个专家设计的模型辅助运作。MM-REACT [76] 集成了多种外部模型,如用于视频摘要的VideoBERT、图像生成的X-decoder和音频处理的SpeechBERT,从而增强其在多模态场景下的能力。

● Internal knowledge. In addition to utilizing external tools, many agents rely solely on the internal knowledge of LLMs to guide their actions. We now present several crucial capabilities of LLMs that can support the agent to behave reasonably and effectively. (1) Planning capability. Previous work has demonstrated that LLMs can be used as decent planers to decompose complex task into simpler ones [45]. Such capability of LLMs can be even triggered without incorporating examples in the prompts [46]. Based on the planning capability of LLMs, DEPS [33] develops a Minecraft agent, which can solve complex task via sub-goal decomposition. Similar agents like GITM [16] and Voyager [38] also heavily rely on the planning capability of LLMs to successfully complete different tasks. (2) Conversation capability. LLMs can usually generate high-quality conversations. This capability enables the agent to behave more like humans. In the previous work, many agents take actions based on the strong conversation capability of LLMs. For example, in ChatDev [18], different agents can discuss the software developing process, and even can make reflections on their own behaviors. In RLP [30], the agent can communicate with the listeners based on their potential feedback on the agent’s utterance. (3) Common sense understanding capability. Another important capability of

● 内部知识。除了利用外部工具外,许多AI智能体仅依靠大语言模型的内部知识来指导其行为。我们接下来介绍大语言模型几项支撑智能体合理高效运行的关键能力:(1) 规划能力。已有研究表明大语言模型可作为优质规划器将复杂任务分解为简单子任务 [45],这种能力甚至能在提示词中不包含示例的情况下被触发 [46]。基于大语言模型的规划能力,DEPS [33] 开发了能通过子目标分解解决复杂任务的《我的世界》游戏智能体。类似GITM [16]和Voyager [38]等智能体也深度依赖大语言模型的规划能力来成功完成各类任务。(2) 对话能力。大语言模型通常能生成高质量对话,该能力使智能体表现更趋近人类。先前研究中,许多智能体都基于大语言模型的强大对话能力采取行动。例如ChatDev [18]中不同智能体可讨论软件开发流程,甚至能对自身行为进行反思;RLP [30]中的智能体能根据听众对其话语的潜在反馈与听众进行交流。(3) 常识理解能力。大语言模型的另一项重要能力是...

LLMs is that they can well comprehend human common sense. Based on this capability, many agents can simulate human daily life and make human-like decisions. For example, in Generative Agent, the agent can accurately understand its current state, the surrounding environment, and summarize high-level ideas based on basic observations. Without the common sense understanding capability of LLMs, these behaviors cannot be reliably simulated. Similar conclusions may also apply to RecAgent [21] and $\mathrm{S}^{3}$ [77], where the agents aim to simulate user recommendation and social behaviors.

大语言模型 (LLM) 的优势在于能够充分理解人类常识。基于这一能力,许多AI智能体可以模拟人类日常生活并做出类人决策。例如在Generative Agent中,该智能体能够准确理解自身当前状态、周边环境,并根据基础观察总结高层次想法。若缺乏大语言模型的常识理解能力,这些行为将无法被可靠地模拟。类似结论可能也适用于RecAgent [21] 和 $\mathrm{S}^{3}$ [77] ,这些智能体旨在模拟用户推荐和社交行为。

Action impact: Action impact refers to the consequences of the action. In fact, the action impact can encompass numerous instances, but for brevity, we only provide a few examples. (1) Changing environments. Agents can directly alter environment states by actions, such as moving their positions, collecting items, and constructing buildings. For instance, in GITM [16] and Voyager [38], the environments are changed by the actions of the agents in their task completion process. For example, if the agent mines three woods, then they may disappear in the environments. (2) Altering internal states. Actions taken by the agent can also change the agent itself, including updating memories, forming new plans, acquiring novel knowledge, and more. For example, in Generative Agents [20], memory streams are updated after performing actions within the system. SayCan [78] enables agents to take actions to update understandings of the environment. (3) Triggering new actions. In the task completion process, one agent action can be triggered by another one. For example, Voyager [38] constructs buildings once it has gathered all the necessary resources.

动作影响:动作影响指的是动作带来的后果。实际上,动作影响可能包含大量实例,但为了简洁,我们仅列举几个示例。(1) 改变环境。智能体可以通过动作直接改变环境状态,例如移动位置、收集物品和建造建筑。例如在GITM [16]和Voyager [38]中,环境会随着智能体执行任务过程中的动作而改变。如果智能体采集了三块木材,这些木材就会从环境中消失。(2) 改变内部状态。智能体采取的动作也可能改变其自身状态,包括更新记忆、形成新计划、获取新知识等。例如在Generative Agents [20]中,系统内执行动作后会更新记忆流。SayCan [78]使智能体能够通过动作更新对环境认知。(3) 触发新动作。在任务完成过程中,一个智能体动作可能触发另一个动作。例如Voyager [38]在收集完所有必要资源后就会开始建造建筑。

2.2 Agent capability acquisition

2.2 AI智能体能力获取

In the above sections, we mainly focus on how to design the agent architecture to better inspire the capability of LLMs to make it qualified for accomplishing tasks like humans. The architecture functions as the “hardware” of the agent. However, relying solely on the hardware is insufficient for achieving effective task performance. This is because the agent may lack the necessary task-specific capabilities, skills and experiences, which can be regarded as “software” resources. In order to equip the agent with these resources, various strategies have been devised. Generally, we categorize these strategies into two classes based on whether they require fine-tuning of the LLMs. In the following, we introduce each of them more in detail.

在前面的章节中,我们主要关注如何设计智能体架构以更好地激发大语言模型的能力,使其具备像人类一样完成任务的能力。该架构相当于智能体的"硬件"。然而,仅依靠硬件无法实现有效的任务执行,因为智能体可能缺乏必要的任务特定能力、技能和经验,这些可以被视为"软件"资源。为了让智能体具备这些资源,人们设计了多种策略。通常,我们根据这些策略是否需要微调大语言模型将其分为两类。下面我们将更详细地介绍每种策略。

Capability acquisition with fine-tuning: A straightforward method to enhance the agent capability for task completion is fine-tuning the agent based on task-dependent datasets. Generally, the datasets can be constructed based on human annotation, LLM generation or collected from real-world applications. In the following, we introduce these methods more in detail.

通过微调获取能力:提升AI智能体任务完成能力的一种直接方法是基于任务相关数据集对智能体进行微调。通常,这些数据集可通过人工标注、大语言模型生成或从实际应用中收集构建。下文将详细介绍这些方法。

● Fine-tuning with human annotated datasets. To fine-tune the agent, utilizing human annotated datasets is a versatile approach that can be employed in various application scenarios. In this approach, researchers first design annotation tasks and then recruit workers to complete them. For example, in CoH [79], the authors aim to align LLMs with human values and preferences. Different from the other models, where the human feedback is leveraged in a simple and symbolic manner, this method converts the human feedback into detailed comparison information in the form of natural languages. The LLMs are directly fine-tuned based on these natural language datasets. In RET-LLM [42], in order to better convert natural languages into structured memory information, the authors fine-tune LLMs based on a human constructed dataset, where each sample is a “triplet-natural language” pair. In WebShop [80], the authors collect 1.18 million real-world products form amazon.com, and put them onto a simulated ecommerce website, which contains several carefully designed human shopping scenarios. Based on this website, the authors recruit 13 workers to collect a real-human behavior dataset. At last, three methods based on heuristic rules, imitation learning and reinforcement learning are trained based on this dataset. Although the authors do not fine-tune LLM-based agents, we believe that the dataset proposed in this paper holds immense potential to enhance the capabilities of agents in the field of Web shopping. In EduChat [81], the authors aim to enhance the educational functions of LLMs, such as open-domain question answering, essay assessment, Socratic teaching, and emotional support. They fine-tune LLMs based on human annotated datasets that cover various educational scenarios and tasks. These datasets are manually evaluated and curated by psychology experts and frontline teachers. SWIFTSAGE [82] is an agent influenced by the dual-process theory of human cognition [83], which is effective for solving complex interactive reasoning tasks. In this agent, the SWIFT module constitutes a compact encoder-decoder language model, which is fine-tuned using human-annotated datasets.

● 基于人工标注数据集的微调。为微调AI智能体,利用人工标注数据集是一种通用方法,可适用于多种应用场景。该方法首先由研究者设计标注任务,再招募工作人员完成标注。例如在CoH[79]中,作者旨在使大语言模型与人类价值观及偏好对齐。与其他仅以简单符号化方式利用人类反馈的模型不同,该方法将人类反馈转化为自然语言形式的详细对比信息,并基于这些自然语言数据集直接微调大语言模型。RET-LLM[42]为更好地将自然语言转换为结构化记忆信息,基于人工构建的"三元组-自然语言"配对数据集进行微调。WebShop[80]从amazon.com收集118万件真实商品构建模拟电商网站,设计多个人类购物场景,招募13名工作人员采集真实人类行为数据集,最终基于该数据集训练启发式规则、模仿学习和强化学习三种方法。虽然作者未对大语言模型智能体进行微调,但我们认为该数据集对提升网络购物领域智能体能力具有巨大潜力。EduChat[81]为增强大语言模型的教育功能(如开放域问答、作文评分、苏格拉底式教学和情感支持),基于覆盖多教育场景任务的人工标注数据集进行微调,这些数据由心理学专家和一线教师人工评估筛选。SWIFTSAGE[82]是受人类认知双加工理论[83]启发的智能体,能有效解决复杂交互推理任务,其SWIFT模块采用紧凑型编码器-解码器语言模型,通过人工标注数据集进行微调。

● Fine-tuning with LLM generated datasets. Building human annotated dataset needs to recruit people, which can be costly, especially when one needs to annotate a large amount of samples. Considering that LLMs can achieve human-like capabilities in a wide range of tasks, a natural idea is using LLMs to accomplish the annotation task. While the datasets produced from this method can be not as perfect as the human annotated ones, it is much cheaper, and can be leveraged to generate more samples. For example, in ToolBench [14], to enhance the tool-using capability of open-source LLMs, the authors collect 16,464 real-world APIs spanning 49 categories from the RapidAPI Hub. They used these APIs to prompt ChatGPT to generate diverse instructions, covering both single-tool and multi-tool scenarios. Based on the obtained dataset, the authors fine-tune LLaMA [9], and obtain significant performance improvement in terms of tool using. In [84], to empower the agent with social capability, the authors design a sandbox, and deploy multiple agents to interact with each other. Given a social question, the central agent first generates initial responses. Then, it shares the responses to its nearby agents for collecting their feedback. Based on the feedback as well as its detailed explanations, the central agent revise its initial responses to make them more consistent with social norms. In this process, the authors collect a large amount of agent social interaction data, which is then leveraged to fine-tune the LLMs.

● 基于大语言模型生成数据集进行微调。构建人工标注数据集需要招募人员,成本可能很高,尤其是在需要标注大量样本时。考虑到大语言模型能在多种任务中展现类人能力,一个自然的思路是利用大语言模型完成标注任务。虽然这种方法产生的数据集可能不如人工标注完美,但成本更低廉,且能用于生成更多样本。例如在ToolBench [14]中,为增强开源大语言模型的工具使用能力,作者从RapidAPI Hub收集了覆盖49个类别的16,464个真实API,并利用这些API提示ChatGPT生成涵盖单工具和多工具场景的多样化指令。基于获得的数据集,作者对LLaMA [9]进行微调,在工具使用方面取得了显著性能提升。在[84]中,为赋予智能体社交能力,作者设计了一个沙盒环境,部署多个智能体进行交互:给定社交问题时,中心智能体首先生成初始响应,随后将响应分享给邻近智能体收集反馈,最终结合反馈和详细解释修订初始响应以使其更符合社会规范。在此过程中,作者收集了大量智能体社交交互数据用于微调大语言模型。

Fine-tuning with real-world datasets. In addition to building datasets based on human or LLM annotation, directly using real-world datasets to fine-tune the agent is also a common strategy. For example, in MIND2WEB [85], the authors collect a large amount of real-world datasets to enhance the agent capability in the Web domain. In contrast to prior studies, the dataset presented in this paper encompasses diverse tasks, real-world scenarios, and comprehensive user interaction patterns. Specifically, the authors collect over 2,000 open-ended tasks from 137 real-world websites spanning 31 domains. Using this dataset, the authors fine-tune LLMs to enhance their performance on Web-related tasks, including movie discovery and ticket booking, among others. In SQL-PALM [86], researchers fine-tune PaLM-2 based on a cross-domain large-scale text-to-SQL dataset called Spider. The obtained model can achieve significant performance improvement on text-to-SQL tasks.

基于真实数据集进行微调。除了通过人工或大语言模型标注构建数据集外,直接使用真实世界数据对智能体进行微调也是常见策略。例如在MIND2WEB [85]中,作者收集了大量真实数据集来增强智能体在网页领域的能力。与先前研究不同,该论文提出的数据集涵盖了多样化任务、真实场景和完整的用户交互模式。具体而言,作者从137个真实网站收集了跨越31个领域的2000多项开放式任务。利用该数据集,作者对大语言模型进行微调以提升其在网页相关任务(包括电影发现、票务预订等)上的表现。在SQL-PALM [86]中,研究人员基于名为Spider的跨领域大规模文本转SQL数据集对PaLM-2进行微调,所得模型在文本转SQL任务上实现了显著性能提升。

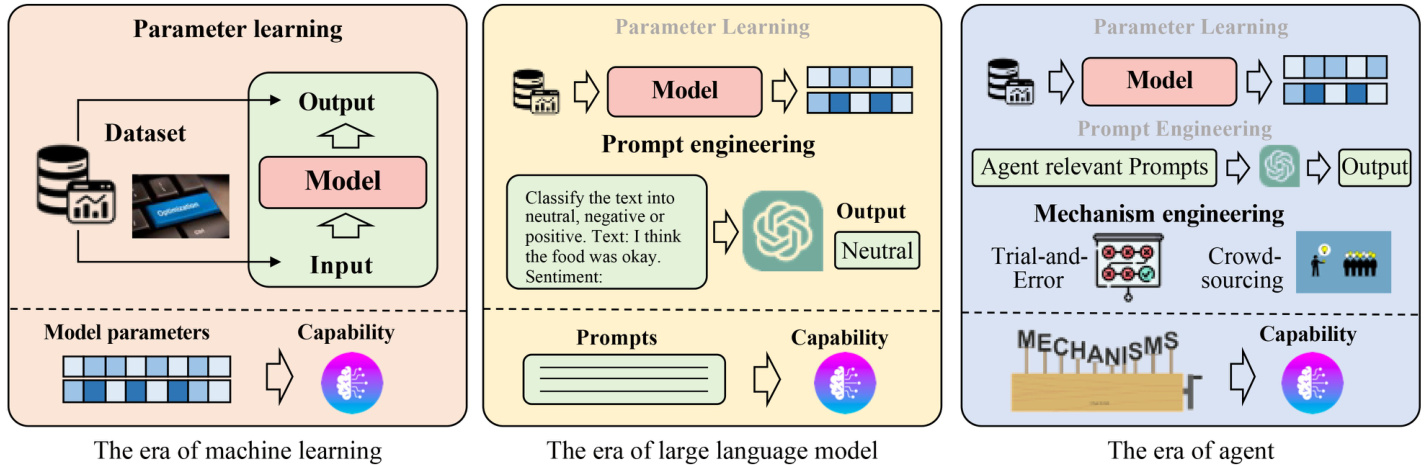

Capability acquisition without fine-tuning: In the era of tradition machine learning, the model capability is mainly acquired by learning from datasets, where the knowledge is encoded into the model parameters. In the era of LLMs, the model capability can be acquired either by training/fine-tuning the model parameters or designing delicate prompts (i.e., prompt engineer). In prompt engineer, one needs to write valuable information into the prompts to enhance the model capability or unleash existing LLM capabilities. In the era of agents, the model capability can be acquired based on three strategies: (1) model fine-tuning, (2) prompt engineer, and (3) designing proper agent evolution mechanisms (we called it as mechanism engineering). Mechanism engineering is a broad concept that involves developing specialized modules, introducing novel working rules, and other strategies to enhance agent capabilities. For clearly understanding such transitions on the strategy of model capability acquisition, we illustrate them in Fig. 4. In the following, we introduce prompting engineering and mechanism engineering for agent capability acquisition.

无需微调的能力获取:在传统机器学习时代,模型能力主要通过从数据集中学习获得,知识被编码到模型参数中。在大语言模型时代,模型能力既可以通过训练/微调模型参数获得,也可以通过设计精巧的提示(即提示工程)来获取。在提示工程中,需要将有价值的信息写入提示以增强模型能力或释放现有大语言模型潜力。在智能体时代,模型能力可以通过三种策略获得:(1) 模型微调,(2) 提示工程,以及(3) 设计适当的智能体进化机制(我们称之为机制工程)。机制工程是一个广义概念,涉及开发专用模块、引入新颖工作规则等提升智能体能力的策略。为清晰理解模型能力获取策略的演变,我们在图4中进行了展示。下文将分别介绍用于智能体能力获取的提示工程与机制工程。

Prompting engineering. Due to the strong language comprehension capabilities, people can directly interact with LLMs using natural languages. This introduces a novel strategy for enhancing agent capabilities, that is, one can describe the desired capability using natural language and then use it as prompts to influence LLM actions. For example, in CoT [45], in order to empower the agent with the capability for complex task reasoning, the authors present the intermediate reasoning steps as few-shot examples in the prompt. Similar techniques are also used in CoT-SC [49] and ToT [50]. In SocialAGI [30], in order to enhance the agent self-awareness capability in conversation, the authors prompt LLMs with the agent beliefs about the mental states of the listeners and itself, which makes the generated utterance more engaging and adaptive. In addition, the authors also incorporate the target mental states of the listeners, which enables the agents to make more strategic plans. Retro former [87] presents a retrospective model that enables the agent to generate reflections on its past failures. The reflections are integrated into the prompt of LLMs to guide the agent’s future actions. Additionally, this model utilizes reinforcement learning to iterative ly improve the retrospective model, thereby refining the LLM prompt.