PRCA: Fitting Black-Box Large Language Models for Retrieval Question Answering via Pluggable Reward-Driven Contextual Adapter

Haoyan Yang1,2†, Zhitao Li1, Yong Zhang1, Jianzong Wang1∗, Ning Cheng1, Ming Li1,3, Jing Xiao1

1Ping An Technology (Shenzhen) Co., Ltd., China 2New York University 3University of Maryland jzwang@188.com

Abstract

The Retrieval Question Answering (ReQA) task employs the retrieval-augmented framework, composed of a retriever and a generator. The generator formulates the answer based on the documents retrieved by the retriever. Incorporating Large Language Models (LLMs) as generators is beneficial due to their advanced QA capabilities, but they are typically too large to be fine-tuned under budget constraints, and some of them are only accessible via APIs. To tackle this issue and further improve ReQA performance, we propose a trainable Pluggable Reward-Driven Contextual Adapter (PRCA), keeping the generator as a black box. Positioned between the retriever and generator in a pluggable manner, PRCA refines the retrieved information by operating in a token-autoregressive strategy via maximizing rewards in the reinforcement learning phase. Our experiments validate PRCA’s effectiveness in enhancing ReQA performance on three datasets by up to 20%, fitting black-box LLMs into existing frameworks and demonstrating its considerable potential in the LLM era.

1 Introduction

Retrieval Question Answering (ReQA) tasks involve generating appropriate answers to given questions, utilizing relevant contextual documents. To achieve this, retrieval augmentation is employed (Chen et al., 2017; Pan et al., 2019; Izacard and Grave, 2021), which comprises two key components: a retriever and a generator. The retriever’s role is to retrieve relevant documents from a large corpus in response to the question, while the generator uses this contextual information to formulate accurate answers. Such systems alleviate the problem of hallucinations (Shuster et al., 2021), thereby enhancing the overall accuracy of the output.

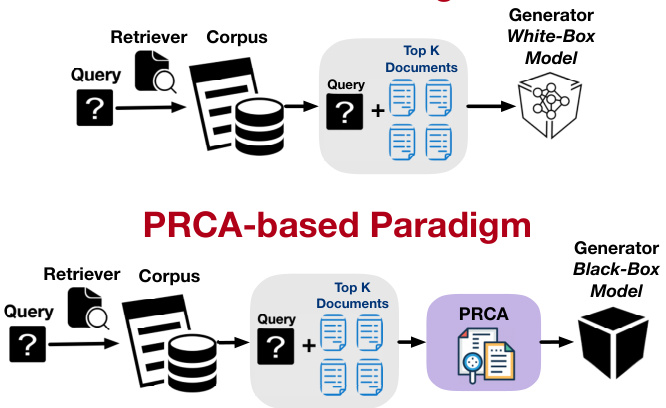

Figure 1: A comparison between two paradigms for information retrieval and generation. The upper section showcases the traditional method, where a query is processed by a retriever that scans a corpus to fetch the Top-K documents, which are then fed to a white-box generator. The lower section introduces our proposed PRCA method, which processes the Top-K documents extracted by the retriever before feeding them to a black-box generator to achieve better performance on in-domain tasks.

Recent advances in Large Language Models (LLMs) such as the generative pre-trained transformer (GPT) series (Brown et al., 2020; Ouyang et al., 2022; OpenAI, 2023) have demonstrated remarkable potential, notably in their zero-shot and few-shot abilities in QA tasks. Owing to these capabilities, LLMs are excellent choices as generators within the retrieval-augmented framework. However, due to the vast number of parameters in LLMs, fine-tuning them is exceedingly difficult within a limited computation budget. Furthermore, certain LLMs such as GPT-4 (OpenAI, 2023) are closed-source, making it impossible to fine-tune them. To achieve optimal results on specific datasets, fine-tuning retrieval-augmented models becomes necessary (Guu et al., 2020; Lewis et al., 2020b; An et al., 2021). Previous attempts to integrate LLMs into the retrieval-augmented framework have met with partial success but also come with limitations. Shi et al. (2023) utilized the logits from the final layer of the LLMs when calculating the loss function, which may not be available for certain powerful LLMs that are served via APIs. Ma et al. (2023) involved frequently invoking pricey LLMs and overlooked the impact of the input token length on the accuracy and effectiveness of the system.

To overcome these hurdles, we propose a trainable Pluggable Reward-Driven Contextual Adapter (PRCA) that enables one to fine-tune the adapter instead of the LLM under the retrieval-augmented framework on specific datasets and achieve higher performance. Furthermore, PRCA distills the information in the retrieved documents, guided by rewards from the generator through reinforcement learning. This distillation reduces the length of the text input to the generator and constructs a context of superior quality, which mitigates hallucination during answer generation. As shown in Figure 1, PRCA is placed between the retriever and the generator, forming a PRCA-based paradigm in which both the generator and the retriever remain frozen. In general, the introduction of the PRCA-based paradigm brings the following advantages:

Black-box LLM Integration With the use of PRCA, LLMs can be treated as a black box integrated into the retrieval-augmented framework, eliminating the need for resource-intensive fine-tuning and removing the restrictions imposed by closed-source models.

Robustness PRCA serves as a pluggable adapter that is compatible with various retrievers and generators because the PRCA-based paradigm keeps both the generator and the retriever frozen.

Efficiency The PRCA-based paradigm ensures the efficiency of the framework by reducing the length of the text input to the generator, and it can adapt to different retrieval corpora.

2 Related Work

2.1 The Potential of LLMs as Black-Box Models

LLMs have demonstrated remarkable capabilities in downstream QA tasks, even in scenarios with limited or no training data (Wei et al., 2022). This emergent capability enables them to tackle such tasks efficiently, making them potential candidates for black-box models in inference. Furthermore, the non-open-source nature and large parameter size of these models make it natural to treat them as black boxes.

On the one hand, LLMs like GPT-4 (OpenAI, 2023) and PaLM (Scao et al., 2023) have showcased impressive performance in QA tasks. However, their closed-source nature restricts access to these models, making API-based utilization the only feasible option and thereby categorizing them as black-box models.

On the other hand, training LLMs, exemplified by models like Bloom (Scao et al., 2022) and GLM-130B (Zeng et al., 2023), imposes substantial computational demands. Specifically, training Bloom took 3.5 months using 384 NVIDIA A100 80GB GPUs. Similarly, GLM-130B required a two-month training period on a cluster of 96 DGX-A100 GPU servers. These resource requirements make it extremely challenging for the majority of researchers to deploy these models. Moreover, LLMs are developing rapidly. For instance, from LLaMA (Touvron et al., 2023) to Alpaca (Taori et al., 2023) and now Vicuna (Peng et al., 2023), the iterations were completed within a month. It is evident that the speed of training models lags behind the pace of model iterations. Consequently, tuning small adapters for any sequence-to-sequence LLM on downstream tasks could be a simpler and more efficient approach.

2.2 Retrieval-Augmented Framework

Various retrieval-augmented approaches have been progressively developed and applied to improve performance on the ReQA task.

In the initial stage of research, independent statistical similarity-based retrievers like TF-IDF (Sparck Jones, 1972) and BM25 (Robertson and Zaragoza, 2009) were used as fundamental retrieval engines. They helped in extracting the most relevant documents from the corpus for QA tasks (Chen et al., 2017; Izacard and Grave, 2021).

The concept of vectorization was subsequently introduced, where both questions and documents were represented as vectors, and vector similarity became a critical parameter for retrieval. This paradigm shift was led by methods such as dense retrieval, as embodied by DPR (Karpukhin et al., 2020). Models based on contrastive learning like SimCSE (Gao et al., 2021) and Contriever (Izacard et al., 2022a), along with sentence-level semantic models such as Sentence-BERT (Reimers and Gurevych, 2019), represented this era. These methods can be seen as pre-trained retrievers that boosted the effectiveness of the ReQA task.

Further development led to the fusion of retrieval and generation components within the ReQA frameworks. This was implemented in systems like REALM (Guu et al., 2020) and RAG (Lewis et al., 2020b), where retrievers were co-trained with generators, further refining the performance in the ReQA task.

Recently, advanced approaches like Atlas (Izacard et al., 2022b) and RETRO (Borgeaud et al., 2022) have been introduced, which can achieve performance comparable to large-scale models like PaLM (Chowdhery et al., 2022) and GPT-3 (Brown et al., 2020) with significantly fewer parameters.

3 Methodology

3.1 Two-Stage Training for PRCA

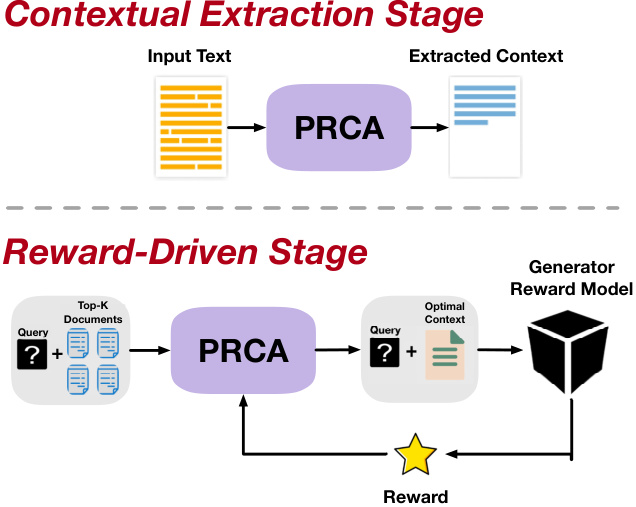

PRCA is designed to take sequences composed of the given query and the Top-K relevant documents retrieved by the retriever. The purpose of PRCA is to distill this collection of results, presenting a concise and effective context to the generator, while keeping both the retriever and the generator frozen. This PRCA-based paradigm introduces two challenges: the effectiveness of the retrieval cannot be directly evaluated due to its heavy dependence on the responses generated by the generator, and learning the mapping between the generator’s outputs and the input sequence via backpropagation is obstructed by the black-box generator. To tackle these issues, we propose a two-stage training strategy for PRCA, as illustrated in Figure 2. In the contextual extraction stage, supervised learning is employed to train PRCA, encouraging it to output context-rich extractions from the input text. During the reward-driven stage, the generator is treated as a reward model. The difference between the generated answer and the ground truth serves as a reward signal to further train PRCA. This process optimizes the information distillation so that it better helps the generator answer accurately.

3.2 Contextual Extraction Stage

Figure 2: An illustration of the two-stage sequential training process for PRCA. In the first stage, the “Contextual Extraction Stage”, the PRCA module is pre-trained on domain abstractive summarization tasks. The second stage, the “Reward-Driven Stage”, demonstrates the interaction between the retrieved Top-K documents and PRCA. Here, PRCA refines the query using both the documents and the original query, producing an optimal context. This context is processed by a generator to obtain a reward, signifying the quality and relevance of the context, with the feedback loop aiding in further refining the model’s output and performance.

In the contextual extraction stage, we train PRCA to extract textual information. Given an input text $S_{\mathrm{input}}$, PRCA generates an output sequence $C_{\mathrm{extracted}}$, representing the context derived from the input text. The objective of the training process is to minimize the discrepancy between $C_{\mathrm{extracted}}$ and the ground truth context $C_{\mathrm{truth}}$, and the loss function is as follows:

$$

\min_{\theta} L(\theta)=-\frac{1}{N}\sum_{i=1}^{N}C_{\mathrm{truth}}^{(i)}\log\left(f_{\mathrm{PRCA}}(S_{\mathrm{input}}^{(i)};\theta)\right) \tag{1}

$$

where $\theta$ represents the parameters of PRCA.

In the contextual extraction stage, PRCA is initialized from a BART-Large model pre-trained on the CNN/Daily Mail dataset (Lewis et al., 2020a).
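As a rough illustration of the loss in Equation (1), the sketch below assumes one-hot ground-truth tokens, so the sum reduces to the negative log-probability that the model assigns to each ground-truth token, averaged over the $N$ examples. The function name `extraction_loss` and the input layout are hypothetical and are not taken from the paper's implementation.

```python
import math


def extraction_loss(batch_token_probs):
    """Average negative log-likelihood over N examples (cf. Equation 1).

    batch_token_probs[i] is assumed to hold the probability that the model
    assigns to each ground-truth token of example i (hypothetical layout).
    """
    total = 0.0
    for token_probs in batch_token_probs:
        # With one-hot targets, only the ground-truth token's log-prob remains.
        total += -sum(math.log(p) for p in token_probs)
    return total / len(batch_token_probs)
```

A perfectly confident model (probability 1 for every ground-truth token) gives a loss of zero, and the loss grows as probability mass moves away from the ground-truth tokens.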

3.3 Reward-Driven Stage

In the reward-driven stage, the objective is to align the extracted context $C_{\mathrm{extracted}}$ from the previous stage with the downstream generator, ensuring that the text distilled by PRCA effectively guides the generator’s answering. Given the black-box nature of the generator, a direct update of PRCA is not feasible. Therefore, we resort to reinforcement learning to optimize PRCA’s parameters. Specifically, the generator offers rewards to guide the update of PRCA’s parameters, with the aim of improving answer quality. The reward is based on the ROUGE-L score between the generated answer $O$ and the ground truth $O^{*}$. Meanwhile, it is vital that PRCA retains its skill of information extraction from long texts, as learned in the contextual extraction stage. Our objective is twofold: maximizing the generator’s reward and maintaining similarity between the updated parameters of PRCA and the original parameters obtained after contextual extraction training. For reward-driven training in which policy actions manipulate sequence tokens, policy optimization, particularly via Proximal Policy Optimization (PPO) (Schulman et al., 2017; Stiennon et al., 2020), is the preferred method. However, when employing a black-box generator as a reward model, we identify certain limitations of using PPO.

Equation (2) presents PPO’s objective function $J(\theta)$. This function strives to optimize the advantage, a value derived from Generalized Advantage Estimation (GAE) (Schulman et al., 2016). GAE leverages both $\gamma$ and $\lambda$ as discounting factors, adjusting the estimated advantage based on the temporal difference $\delta_{t+l}^{V}$, as depicted in Equation (3). Here, $E_{t}[\min(r_{t}(\theta)\cdot A_{t}^{GAE},\mathrm{clip}(r_{t}(\theta),1-\epsilon,1+\epsilon)\cdot A_{t}^{GAE})]$ captures the expected advantage. The clip function prevents excessive policy updates by constraining the policy update step, ensuring stability in the learning process. The term $\beta(V(s_{t})-R_{t})^{2}$ is a squared-error term between $V(s_{t})$ and $R_{t}$. This term seeks to minimize the difference between the predicted and actual value, ensuring accurate value predictions. However, the critic network $V$ is usually initialized with the same parameters as the reward model (Yao et al., 2023; Fazzie et al., 2023), which is inapplicable when the reward model is a black box. Additionally, vendor APIs usually return a limited set of parameters, which may make computing $R_{t}$ impossible.

$$

\max_{\theta} J(\theta)=E_{t}\left[\min\left(r_{t}(\theta)\cdot A_{t}^{GAE},\ \mathrm{clip}(r_{t}(\theta),1-\epsilon,1+\epsilon)\cdot A_{t}^{GAE}\right)\right]-\beta\left(V(s_{t})-R_{t}\right)^{2} \tag{2}

$$

where $r_{t}(\theta)=\frac{\pi_{\theta}(a_{t}|s_{t})}{\pi_{\theta_{ori}}(a_{t}|s_{t})}$ is the ratio of the updated policy $\pi_{\theta}$ to the original policy $\pi_{\theta_{ori}}$; $a_{t}$ represents the action (the next token); $s_{t}$ is the state (the sequence of previous tokens); $\epsilon$ is the clipping parameter; $V$ is a critic network; $V(s_{t})$ is the predicted value of state $s_{t}$; $\beta$ is a coefficient that weights the squared-error term; $R_{t}$ is the expected return at time $t$.
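To make the roles of the clip function and the squared-error term in Equation (2) concrete, here is a minimal plain-Python sketch that evaluates the objective over a short trajectory. The expectation $E_{t}$ is approximated by a mean over time steps, and the default values `eps=0.2` and `beta=0.5` are illustrative assumptions; the source does not state the values used.

```python
def clipped_surrogate(ratio, advantage, eps=0.2):
    """min(r * A, clip(r, 1 - eps, 1 + eps) * A) for a single time step."""
    clipped_ratio = max(1.0 - eps, min(ratio, 1.0 + eps))
    return min(ratio * advantage, clipped_ratio * advantage)


def ppo_objective(ratios, advantages, values, returns, eps=0.2, beta=0.5):
    """Mean clipped surrogate minus beta times the mean squared value error."""
    n = len(ratios)
    surrogate = sum(
        clipped_surrogate(r, a, eps) for r, a in zip(ratios, advantages)
    ) / n
    value_error = sum((v - g) ** 2 for v, g in zip(values, returns)) / n
    return surrogate - beta * value_error
```

With a positive advantage, a ratio above $1+\epsilon$ earns no extra credit, which is what limits the size of each policy update.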

$$

A_{t}^{GAE(\gamma,\lambda)}=\sum_{l=0}^{T}(\gamma\lambda)^{l}\delta_{t+l}^{V} \tag{3}

$$

where $\delta_{t+l}^{V}=R_{t+l}+\gamma V(s_{t+l+1})-V(s_{t+l})$; $\gamma$ and $\lambda$ are the discounting and GAE parameters, respectively.
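The GAE sum can be computed with a single backward pass over the trajectory, since $A_{t}=\delta_{t}^{V}+\gamma\lambda A_{t+1}$. The sketch below follows the definition of $\delta_{t+l}^{V}$ given above; the function name, the convention that `values` carries one extra bootstrap entry, and the default `gamma` and `lam` are assumptions made for illustration.

```python
def gae_advantages(rewards, values, gamma=0.99, lam=0.95):
    """Generalized Advantage Estimation via backward recursion.

    rewards: per-step rewards R_t, length T.
    values:  critic predictions V(s_t), length T + 1 (the last entry is the
             value of the state after the final step, e.g. 0.0 at <EOS>).
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        # Temporal difference: delta_t = R_t + gamma * V(s_{t+1}) - V(s_t)
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # A_t = delta_t + (gamma * lambda) * A_{t+1}
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages
```

Setting `lam=0` reduces each advantage to the one-step temporal difference, while `lam=1` accumulates all discounted future temporal differences.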

To tackle this issue, we introduce a strategy to estimate $R_{t}$. In PRCA, when the token $\langle EOS\rangle$ is generated, we can obtain the reward $R_{EOS}$ by comparing the generated answer against the ground truth. We consider it an accumulation of the rewards $R_{t}$ achieved at each time step $t$ for the generated tokens. $R_{t}$ serves as the target in $J(\theta)$ that the critic network $V(s)$ is trained to fit; it represents the average reward of the current action and is thereby used to assess the advantage of the current policy. For each token, the greater its generation probability, the more important the current policy considers it, so we take its contribution to the total reward to be greater. Therefore, we regard the probability of generating each token as the weight of $R_{EOS}$, and $R_{t}$ is given by the following:

$$

R_{t}=R_{EOS}\cdot\frac{e^{\pi_{\theta}(a_{t}|s_{t})}}{\sum_{t=1}^{K}e^{\pi_{\theta}(a_{t}|s_{t})}} \tag{4}

$$

$$

R_{EOS}=\mathrm{ROUGE\text{-}L}(O,O^{*})-\beta\cdot D_{KL}(\pi_{\theta}\,\|\,\pi_{\theta_{ori}}) \tag{5}

$$

$$

\mathrm{ROUGE\text{-}L}=\frac{\operatorname{LCS}(X,Y)}{\max(|X|,|Y|)} \tag{6}

$$

where $K$ is the number of tokens in one generated context, $\operatorname{LCS}(X,Y)$ denotes the length of the longest common subsequence between sequence $X$ and sequence $Y$, and $|X|$ and $|Y|$ denote the lengths of sequences $X$ and $Y$, respectively.
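The reward computation above can be sketched in plain Python as follows. `rouge_l` implements the ratio of LCS length to the longer sequence length exactly as defined in this section (which differs from the F-measure form of ROUGE-L found in common toolkits), and `distribute_reward` spreads $R_{EOS}$ over the $K$ tokens using a softmax over their generation probabilities. Function names and the token-list inputs are hypothetical, and the KL penalty in $R_{EOS}$ is omitted for brevity.

```python
import math


def lcs_length(x, y):
    """Length of the longest common subsequence, by dynamic programming."""
    dp = [[0] * (len(y) + 1) for _ in range(len(x) + 1)]
    for i, xi in enumerate(x, 1):
        for j, yj in enumerate(y, 1):
            if xi == yj:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(x)][len(y)]


def rouge_l(x, y):
    """LCS(X, Y) / max(|X|, |Y|), as defined in this section."""
    if not x or not y:
        return 0.0
    return lcs_length(x, y) / max(len(x), len(y))


def distribute_reward(r_eos, token_probs):
    """Split R_EOS across tokens with softmax weights over their probabilities."""
    weights = [math.exp(p) for p in token_probs]
    total = sum(weights)
    return [r_eos * w / total for w in weights]
```

For example, `rouge_l("the cat sat".split(), "the cat sat down".split())` evaluates to 0.75, and the per-token rewards returned by `distribute_reward` always sum back to `r_eos`, with higher-probability tokens receiving a larger share.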

This method mitigates the challenges associated with calculating $R_{t}$ when treating the black-box generator as a reward model. A substantial advantage is that the reward model needs to be invoked only once for each generated context. Compared to the original PPO, which employs the reward model for every token computation, our approach reduces reward model usage to $\frac{1}{K}$ of the original, which is cost-effective, especially when using LLMs as generators.

Table 1: Overview of the data quantities used for training and testing across three benchmark datasets.

| Dataset | Train/Test | # of Q | # of C | # of A |
|---|---|---|---|---|
| SQuAD | Train | 87.6k | 18.9k | 87.6k |
| | Test | 10.6k | 2.1k | 10.6k |
| HotpotQA | Train | 90.4k | 483.5k | 90.4k |
| | Test | 7.4k | 66.5k | 7.4k |
| TopiOCQA | Train | 45.5k | 45.5k | 45.5k |
| | Test | 2.5k | 2.5k | 2.5k |

4 Experimental Setup

4.1 Datasets

We performed our experiments on three QA datasets: SQuAD (Rajpurkar et al., 2016), HotpotQA (Yang et al., 2018) and TopiOCQA (Adlakha et al., 2022). The complexity of the three datasets increases in that order: SQuAD matches questions, documents, and answers in a one-to-one manner; HotpotQA is a multi-hop QA dataset, requiring the synthesis of correct answers from multiple documents; TopiOCQA is a conversational QA dataset with topic switching.

To align these datasets with our ReQA task, we reconstructed all three datasets into the form $(Q,C,A)$, where $Q$ and $A$ denote the question and answer pair, and $C$ represents a corpus composed of all the documents in the respective dataset. Table 1 presents the number of questions and answers employed in the PRCA training and testing phases for every dataset, along with the number of documents contained in each corpus.

4.2 Baseline Retrievers and Generators

We conducted experiments with five different retrievers, specifically BM25 (Robertson and Zaragoza, 2009), Sentence-BERT (Reimers and Gurevych, 2019), DPR (Karpukhin et al., 2020), SimCSE (Gao et al., 2021), and Contriever (Izacard et al., 2022a). We also utilized five generators, namely T5-large (Raffel et al., 2020), Phoenix-7B (Chen et al., 2023), Vicuna-7B (Peng et al., 2023), ChatGLM (Du et al., 2022) and GPT-3.5, to assess the effectiveness of PRCA. Note that both the retrievers and the generators remain frozen throughout the experiments.

By pairing every retriever with each generator, we established a total of seventy-five baseline configurations across the three datasets. For each configuration, we evaluated the performance with and without PRCA, and the difference serves as an indicator of the effectiveness of our proposed approach.
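The count of seventy-five follows from five retrievers, five generators, and three datasets. A small sketch of how such an evaluation grid could be enumerated is given below; the list and function names are illustrative and are not taken from the authors' code.

```python
from itertools import product

RETRIEVERS = ["BM25", "Sentence-BERT", "DPR", "SimCSE", "Contriever"]
GENERATORS = ["T5-large", "Phoenix-7B", "Vicuna-7B", "ChatGLM", "GPT-3.5"]
DATASETS = ["SQuAD", "HotpotQA", "TopiOCQA"]


def baseline_configurations():
    """Every (dataset, retriever, generator) triple: 3 * 5 * 5 = 75."""
    return list(product(DATASETS, RETRIEVERS, GENERATORS))
```

Each triple would then be evaluated twice, once without and once with PRCA, to obtain the difference reported as the effect of the adapter.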

Table 2: Hyperparameter settings used in the experiments.

| Hyperparameter | Value |
|---|---|
| Learning rate | $5 \times 10^{-5}$ |
| Batch size | 1/2/4 |
| Num beams | 3 |
| Temperature | 1 |
| Early stopping | True |
| Top-k | 0.0 |
| Top-p | 1.0 |

4.3 GPT-4 Assessment

Notably, we used GPT-4 for evaluation rather than traditional metrics like F1 and BLEU, as these metrics often misjudge semantically similar sentences. LLMs often output longer textual explanations for answers, even when the correct answer might be a word or two. Despite attempts to constrain answer lengths, the results were not ideal. We then evaluated predictions against the golden answers using both manual methods and GPT-4. GPT-4’s evaluations showed correctness rates of 96%, 93%, and 92% across the three datasets, demonstrating its reliability and alignment with human judgment.

Specifically, the template for GPT-4 assessment is shown as follows. The proportion of predictions that GPT-4 judges as “Yes” is used as the accuracy metric.

Template for GPT-4 Assessment

Prompt: You are now an intelligent assessment assistant. Based on the question and the golden answer, judge whether the predicted answer correctly answers the question and give only a Yes or No.

Question:

Golden Answer:

Predicted Answer:

Expected Output: Yes / No
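The template can be filled in and scored with a few lines of Python. The sketch below only builds the prompt string and counts "Yes" judgments; it makes no API call, and the helper names `build_prompt` and `yes_accuracy` as well as the exact field layout are assumptions for illustration.

```python
INSTRUCTION = (
    "You are now an intelligent assessment assistant. Based on the question "
    "and the golden answer, judge whether the predicted answer correctly "
    "answers the question and give only a Yes or No."
)


def build_prompt(question, golden_answer, predicted_answer):
    """Fill the assessment template with one (question, golden, predicted) triple."""
    return (
        f"{INSTRUCTION}\n"
        f"Question: {question}\n"
        f"Golden Answer: {golden_answer}\n"
        f"Predicted Answer: {predicted_answer}"
    )


def yes_accuracy(judgments):
    """Fraction of judge replies that start with 'yes' (case-insensitive)."""
    if not judgments:
        return 0.0
    hits = sum(1 for j in judgments if j.strip().lower().startswith("yes"))
    return hits / len(judgments)
```

Here `judgments` stands for the list of raw replies returned by the judge model, one per evaluated prediction.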

4.4 Hyperparameter Configurations

To achieve optimal results in our PRCA training, careful selection of hyper parameters is pivotal. The

在我们的PRCA训练中,要取得最佳效果,超参数(hyper parameters)的精心选择至关重要。

Table 3: Comparative results of performance for different retriever and generator combinations in the presence and absence of PRCA integration. The results are based on the evaluation using three benchmark datasets: SQuAD, HotpotQA, and TopiOCQA, and focus on the selection of the Top-5 most relevant documents.

| Retriever | Generator | SQuAD | HotpotQA | TopiOCQA |

| BM25 | T5 | 0.74-0.03 | 0.35+0.01 | 0.27+0.08 |

| Phoenix | 0.61+0.02 | 0.31+0.09 | 0.25+0.03 | |

| Vicuna | 0.59+0.09 | 0.19+0.13 | 0.23+0.10 | |

| ChatGLM | 0.67+0.03 | 0.36+0.04 | 0.35+0.03 | |

| GPT-3.5 | 0.75+0.02 | 0.48+0.06 | 0.44+0.04 | |

| SentenceBert | T5 | 0.48-0.06 | 0.20+0.05 | 0.28+0.05 |

| Phoenix | 0.42+0.04 | 0.13+0.10 | 0.26+0.08 | |

| Vicuna | 0.36+0.09 | 0.22+0.03 | 0.23+0.05 | |

| ChatGLM | 0.57+0.04 | 0.16+0.08 | 0.28+0.04 | |

| GPT-3.5 | 0.6+0.02 | 0.34+0.03 | 0.47+0.03 | |

| DPR | T5 | 0.57+0 | 0.23+0.02 | 0.20+0.09 |

| Phoenix | 0.56+0.01 | 0.15+0.09 | 0.15+0.16 | |

| Vicuna | 0.42+0.06 | 0.16+0.11 | 0.15+0.14 | |

| ChatGLM | 0.53+0.0 | 0.16+0.04 | 0.31+0.07 | |

| GPT-3.5 | 0.69+0.04 | 0.41+0.02 | 0.34+0.06 | |

| SimSCE | T5 | 0.75+0.01 | 0.28+0.02 | 0.18+0.09 |

| Phoenix | 0.67+0.02 | 0.17+0.10 | 0.17+0.13 | |

| Vicuna | 0.47+0.06 | 0.19+0.06 | 0.10+0.20 | |

| ChatGLM | 0.75+0.05 | 0.17+0.05 | 0.21+0.06 | |

| GPT-3.5 | 0.77+0.04 | 0.37+0.05 | 0.31+0.06 | |

| Contriver | T5 | 0.80-0.08 | 0.35+0.03 | 0.18+0.11 |

| Phoenix | 0.69+0.02 | 0.10+0.11 | 0.16+0.18 | |

| Vicuna | 0.58+0.08 | 0.17+0.12 | 0.14+0.19 | |

| ChatGLM | 0.71+0.05 | 0.13+0.09 | 0.23+0.05 | |

| GPT-3.5 | 0.80+0.02 | 0.37+0.05 | 0.30+0.08 |

$\cdot_{+},$ indicates an improvement in performance metrics upon the incorporation of PRCA. The color coding provides a visual representation of the effect: Green signifies a positive enhancement in performance, while Red indicates a decrement.

configuration settings employed in our experiment are stated in Table 2.

5 Results and Analysis

5.1 Overall Performance

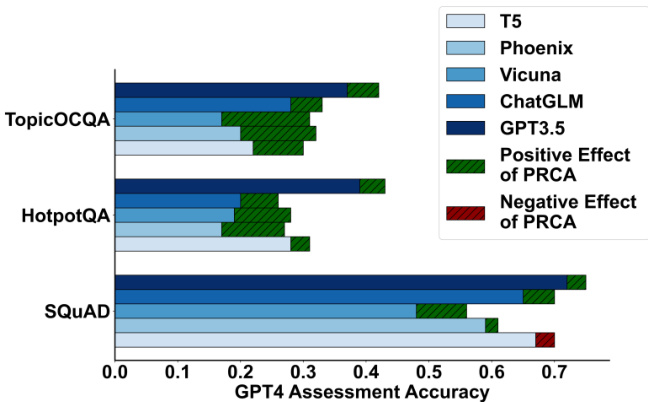

As shown in Table 3, the inclusion of PRCA improves performance in seventy-one of the seventy-five configurations. On average, we observe absolute improvements of $3\%$, $6\%$, and $9\%$ on the SQuAD, HotpotQA, and TopiOCQA datasets, respectively. This demonstrates that PRCA is robust and can enhance the performance of different combinations of retrievers and generators on the ReQA task. As illustrated in Figure 3, the improvements PRCA brings to the generators are significant across all three datasets. On the TopiOCQA dataset in particular, the average improvement for the generator Vicuna across the five retrievers reaches $14\%$. Notably, when SimCSE is the retriever, the improvement offered by PRCA is $20\%$.
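
The Vicuna figures quoted above can be checked directly against the TopiOCQA column of Table 3. The snippet below is a minimal sketch of that arithmetic: the cell values are copied from the table, while the helper name `parse_cell` and the dictionary layout are illustrative assumptions, not part of the authors' code.

```python
import re

# TopiOCQA cells for the Vicuna generator, copied from Table 3.
# Each cell is "baseline accuracy" followed by the signed change with PRCA.
vicuna_topiocqa = {
    "BM25": "0.23+0.10",
    "SentenceBERT": "0.23+0.05",
    "DPR": "0.15+0.14",
    "SimCSE": "0.10+0.20",
    "Contriever": "0.14+0.19",
}


def parse_cell(cell):
    """Split a 'baseline+delta' or 'baseline-delta' cell into two floats.

    Hypothetical helper written for this illustration only.
    """
    match = re.fullmatch(r"(\d*\.?\d+)([+-])(\d*\.?\d+)", cell.strip())
    if match is None:
        raise ValueError(f"unrecognised table cell: {cell!r}")
    baseline, sign, delta = match.groups()
    signed_delta = float(delta) if sign == "+" else -float(delta)
    return float(baseline), signed_delta


# Keep only the change attributable to PRCA for each retriever.
deltas = {name: parse_cell(cell)[1] for name, cell in vicuna_topiocqa.items()}

mean_delta = sum(deltas.values()) / len(deltas)
best_retriever = max(deltas, key=deltas.get)

print(f"mean improvement: {mean_delta:.1%}")
print(f"largest improvement: {best_retriever} ({deltas[best_retriever]:.0%})")
```

The same parsing could be applied to every cell of Table 3 to recompute the per-dataset averages, provided the full table is transcribed in the same "baseline plus change" form.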

In Figure 3, we notice that the improvement PRCA brings to generator performance increases across the three datasets, while the generators’ original performance without PRCA decreases across them, correlating directly with the complexity of the datasets. This is because, when faced with more complex problems, such as multi-hop questions in HotpotQA and topic transitions in multi-turn QA in TopiOCQA, PRCA preserves and integrates the critical information in the retrieved documents that benefits the generators. This attribute of PRCA alleviates the difficulty generators have with lengthy texts, where they fail to answer questions correctly or produce hallucinations, and thus enhances performance.

However, the inclusion of PRCA has a negative effect on the performance of the generator T5 on the SQuAD dataset. This is because the SQuAD dataset is relatively simple: the answer often corresponds directly to a phrase in the text. As an encoder-decoder model, T5 tends to extract answers directly rather than reason in depth over the context. Therefore, without PRCA distilling information from the retrieved documents, T5 performs well because this extractive behavior suits the dataset. Under the effect of PRCA, however, the structure of the text might be altered, and T5’s direct answer extraction may then lead to some errors, reducing performance.

Figure 3: Comparison of performance of different generators (T5, Phoenix, Vicuna, ChatGLM, and GPT-3.5) on three benchmark datasets: SQuAD, HotpotQA, and TopiOCQA. The horizontal axis represents the GPT-4 assessment accuracy. Bars depict the performance levels of each generator, with green and red arrows indicating the enhanced or diminished effects due to PRCA integration, respectively.

Although the characteristics of PRCA have a negative effect in a few configurations, our experiments show that in the vast majority of configurations the PRCA-based paradigm effectively improves performance on the ReQA task, demonstrating its robustness.

5.2 Efficiency of PRCA

PRCA represents an effective approach for enhancing the performance of the ReQA task without significantly increasing computational demand. Its efficiency is manifested in optimizing parameters to achieve superior results and in simplifying input text, thereby aiding generators in managing complex text.

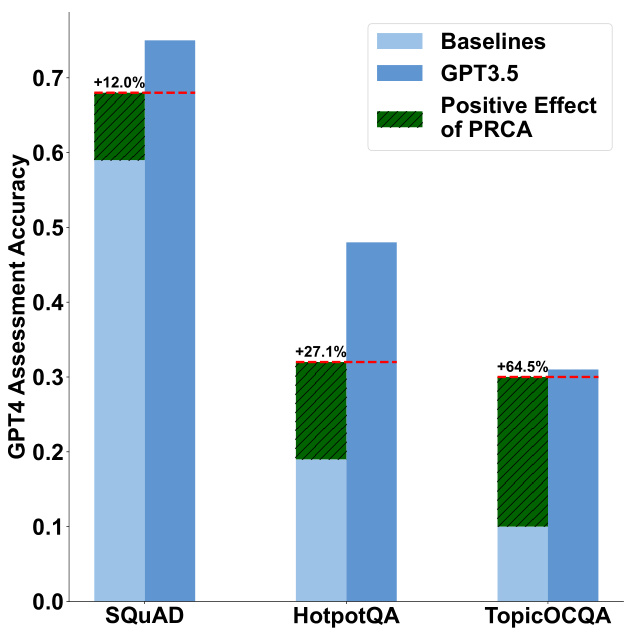

Parameter Efficiency. Figure 4 compares the generators that gain the most from PRCA with the GPT-3.5 model operating without PRCA, across the three datasets. PRCA has roughly 0.4 billion parameters and the most significantly improved generators have about 7 billion parameters on average, while GPT-3.5 has approximately 1.75 trillion parameters. As demonstrated in Figure 4, with this marginal parameter increment, the performance of these generators improved by $12.0\%$, $27.1\%$, and $64.5\%$, respectively. Hence, PRCA has great potential as an efficient way to boost performance on the ReQA task while keeping the consumption of computational resources acceptable. During inference, a fully trained PRCA performs only standard forward propagation and hence has limited impact on inference latency. Our inference latency test on SQuAD is reported in Table 4. This low latency ensures that the system runs smoothly, without significant delays, after integrating PRCA, underscoring the efficiency of PRCA in boosting system performance.
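
To make the "marginal parameter increment" concrete, the rough ratio below uses only the approximate parameter counts quoted in the text (0.4 billion for PRCA, about 7 billion for the average improved generator). The symbols $N_{\text{PRCA}}$ and $N_{\text{generator}}$ are notation introduced here for illustration, and the result is an order-of-magnitude estimate rather than a measured quantity.

$$
\frac{N_{\text{PRCA}}}{N_{\text{generator}}} \approx \frac{0.4 \times 10^{9}}{7 \times 10^{9}} \approx 0.057
$$

Under these figures, PRCA adds on the order of $6\%$ to the parameter count of a 7-billion-parameter generator.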

Figure 4: Performance comparison between PRCA-enhanced baseline models and GPT-3.5 across SQuAD, HotpotQA, and TopiOCQA. Light and dark blue bars represent baseline and GPT-3.5 performance, while striped green indicates PRCA’s improvement.

Table 4: PRCA inference speed test results.

| Model | Precision | GPU | Batch Size | Inference Speed (tokens/s) |
|---|---|---|---|---|
| PRCA | float32 | A100 | 1 | 126 |
| PRCA | float32 | A100 | 2 | 231 |
| PRCA | float32 | A100 | 4 | 492 |
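
One way to read Table 4 is to ask how throughput scales with batch size. The sketch below computes the speedup relative to batch size 1 and the resulting scaling efficiency. It assumes the reported tokens/s figures are aggregate throughput over the whole batch, which the table does not state explicitly; the function names and the example token count are hypothetical.

```python
# Throughput copied from Table 4 (float32, A100): batch size -> tokens per second.
# Assumption: each figure is the aggregate rate over all sequences in the batch.
throughput = {1: 126, 2: 231, 4: 492}


def scaling_report(rates):
    """Return {batch_size: (speedup, efficiency)} relative to the smallest batch.

    speedup    = rate at this batch size / rate at the smallest batch size
    efficiency = speedup / (batch size ratio); 1.0 would be perfectly linear.
    """
    base_batch = min(rates)
    base_rate = rates[base_batch]
    report = {}
    for batch, rate in sorted(rates.items()):
        speedup = rate / base_rate
        efficiency = speedup / (batch / base_batch)
        report[batch] = (speedup, efficiency)
    return report


def estimated_seconds(num_tokens, batch_size, rates=throughput):
    """Estimate the time to emit num_tokens in total at a given batch size."""
    return num_tokens / rates[batch_size]


report = scaling_report(throughput)
for batch, (speedup, efficiency) in report.items():
    print(f"batch={batch}: speedup x{speedup:.2f}, efficiency {efficiency:.0%}")

# Hypothetical example: a 100-token refined context at batch size 1.
print(f"100 tokens at batch size 1: {estimated_seconds(100, 1):.2f} s")
```

Because only three batch sizes are reported, this is a coarse view; it says nothing about behavior at larger batches or at lower precision.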

表 4: PRCA 推理速度测试结果。

| Dataset | Precision | GPU | BatchSize | InferenceSpeed (token/s) |

|---|