Rethinking Recurrent Neural Networks and Other Improvements for Image Classification


Abstract: Over the long history of machine learning, which dates back several decades, recurrent neural networks (RNNs) have been used mainly for sequential data and time series, generally with 1D information. Even in the rare studies on 2D images, these networks are used merely to learn and generate data sequentially rather than for image recognition tasks. In this study, we propose integrating an RNN as an additional layer when designing image recognition models. We also develop end-to-end multimodel ensembles that produce expert predictions using several models. In addition, we extend the training strategy so that our model performs comparably to leading models and can even match the state-of-the-art models on several challenging datasets (e.g., SVHN (0.99), Cifar-100 (0.9027) and Cifar-10 (0.9852)). Moreover, our model sets a new record on the Surrey dataset (0.949). The source code of the methods provided in this article is available at https://github.com/leonlha/e2e-3m and http://nguyenhuuphong.me.


1 INTRODUCTION


Recently, the image recognition task has been transformed by the availability of high-performance computing hardware, particularly modern graphics processing units (GPUs), and of large-scale datasets. The early convolutional neural networks (ConvNets) of the 1990s were shallow and included only a few layers; however, as the ever-increasing volume of higher-resolution image data required a concomitant increase in computing power, the field has evolved, and modern ConvNets have deeper and wider layers with improved efficiency and accuracy [1], [2], [3], [4], [5], [6], [7]. Later developments involved a balancing act among network depth, width and image resolution [8] and determining appropriate augmentation policies [9].


During this same period, recurrent neural networks have proven successful in various applications, including natural language processing [10], [11], machine translation [12], speech recognition [13], [14], weather forecasting [15], human action recognition [16], [17], [18], and drug discovery [19]. However, in the image recognition field, RNNs are largely used merely to generate image pixel sequences [20], [21] rather than being applied for whole-image recognition purposes.


As the architecture of RNNs has evolved through several forms and become optimized, it could be interesting to study whether these spectacular advances have a direct effect on image classification. In this study, we take a distinct approach by integrating an RNN and considering it as an essential layer when designing an image recognition model.


In addition, we propose end-to-end (E2E) multiple-model ensembles that learn expertise through the various models. This approach was the result of a critical observation: when training models for specific datasets, we often select the most accurate models or group some models into an ensemble. We argue that merged predictions can provide a better solution than a single model can. Moreover, the conventional ensembling process essentially breaks the complete operation (from obtaining input data to final prediction) into separate stages, and each stage may be performed on a different platform. This separation can cause serious issues that may even make it impossible to integrate the operation into a single location (e.g., on real-time systems) [22], [23] or to port it to a future platform such as a system on a chip (SoC) [24], [25].


Additionally, we explore other key techniques, such as the learning rate strategy and test-time augmentation, to obtain overall improvements. The remainder of this article is organized as follows. Our main contributions are discussed in Section 2. Various RNN formulations, including a typical RNN and more advanced RNNs, are the focus of subsection 3.1. In addition, our core idea for designing ConvNet models that gain experience from expert models is highlighted in subsection 3.2. In subsection 4.1, we evaluate our design on the iNaturalist dataset, and in subsection 4.2, the performances of various image recognition models integrated with RNNs are thoroughly analyzed. In subsection 4.3, we evaluate our design on the iCassava dataset. In subsections 4.4 and 4.5, we discuss our learning rate strategy and the softmax pruning technique. Additionally, we extend our experiments in subsection 4.6 to include more challenging datasets (i.e., SVHN, Cifar-100, and Cifar-10) and show that our model's performance can match the state-of-the-art models even under limited resources. In subsection 4.7, we show that our approach outperforms the state-of-the-art methods by a large margin. Finally, we conclude our research in Section 5.


2 CONTRIBUTIONS


Our research differs from previous works in several ways. First, most studies utilize RNNs for sequential data and time series. Except for rare cases, RNNs are used largely to generate sequences of image pixels. Instead, we propose integrating RNNs as an essential layer of ConvNets.


Second, we present our core idea for designing a ConvNet in which the model is able to learn decisions from expert models. Typically, we choose predictions from a single model or an ensemble of models.


Another main contribution of this work involves a training strategy that allows our models to perform competitively, matching previous approaches on several datasets and outperforming some state-of-the-art models.


We make our source code available so that other researchers can replicate this work. The program is written in the Jupyter Notebook environment using a web-based interface with a few extra libraries.


3 METHODOLOGIES


Our key idea is to integrate an RNN layer into ConvNet models. We consider several RNNs and present their computational formulas. The concept of training a model to learn predictions from multiple individual models and the design of such a model are also discussed.


3.1 Recurrent Neural Networks


For the purpose of performance analysis and comparison, we adopt both a typical RNN and some more advanced RNNs, i.e., a long short-term memory (LSTM) network and a gated recurrent unit (GRU), as well as a bidirectional RNN (BRNN). The formulations of the selected RNNs are presented as follows.


Considering a standard RNN with a given input sequence $x_{1},x_{2},...,x_{T},$ the hidden cell state is updated at a time step $t$ as follows:


$$
h_{t}=\sigma(W_{h}h_{t-1}+W_{x}x_{t}+b),
$$

where $W_{h}$ and $W_{x}$ denote weight matrices, $b$ represents the bias, and $\sigma$ is a sigmoid function that outputs values between 0 and 1.


The output of a cell, for ease of notation, is defined as


$$

y_{t}=h_{t},

$$


but can also be shown using the softmax function, in which $\hat{y}_{t}$ is the output and $y_{t}$ is the target:


$$
\hat{y}_{t}=\mathrm{softmax}(W_{y}h_{t}+b_{y}).
$$
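The standard RNN update and its softmax readout can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation: the helper names (`matvec`, `rnn_step`, `rnn_forward`), the toy weights and the choice to classify from the last hidden state are all assumptions made for the example.

```python
import math

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def softmax(v):
    m = max(v)  # subtract the maximum for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]

def rnn_step(h_prev, x_t, W_h, W_x, b):
    """h_t = sigma(W_h h_{t-1} + W_x x_t + b)."""
    rec = matvec(W_h, h_prev)
    inp = matvec(W_x, x_t)
    return [sigmoid(r + i + bias) for r, i, bias in zip(rec, inp, b)]

def rnn_forward(xs, h0, W_h, W_x, b, W_y, b_y):
    """Run the cell over the sequence, then apply y_hat = softmax(W_y h_T + b_y)."""
    h = h0
    for x_t in xs:
        h = rnn_step(h, x_t, W_h, W_x, b)
    logits = [a + c for a, c in zip(matvec(W_y, h), b_y)]
    return h, softmax(logits)

# Hypothetical toy sizes: 2 hidden units, 3 input features, 2 classes.
W_h = [[0.1, -0.2], [0.3, 0.05]]
W_x = [[0.2, 0.0, -0.1], [-0.3, 0.4, 0.1]]
b = [0.0, 0.1]
W_y = [[1.0, -1.0], [-0.5, 0.5]]
b_y = [0.0, 0.0]
xs = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5]]
h_T, y_hat = rnn_forward(xs, [0.0, 0.0], W_h, W_x, b, W_y, b_y)
```

Because the sigmoid is applied element-wise, every entry of `h_T` lies strictly between 0 and 1, and `y_hat` sums to 1.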

A more sophisticated RNN, the LSTM, which includes the concept of a forget gate, can be expressed as shown in the following equations:


$$
\begin{aligned}
f_{t}&=\sigma(W_{fh}h_{t-1}+W_{fx}x_{t}+b_{f}),\\
i_{t}&=\sigma(W_{ih}h_{t-1}+W_{ix}x_{t}+b_{i}),\\
c_{t}^{\prime}&=\tanh(W_{c^{\prime}h}h_{t-1}+W_{c^{\prime}x}x_{t}+b_{c}^{\prime}),\\
c_{t}&=f_{t}\odot c_{t-1}+i_{t}\odot c_{t}^{\prime},\\
o_{t}&=\sigma(W_{oh}h_{t-1}+W_{ox}x_{t}+b_{o}),\\
h_{t}&=o_{t}\odot\tanh(c_{t}),
\end{aligned}
$$

where the $\odot$ operation represents an element-wise vector product, and $f$, $i$, $o$ and $c$ are the forget gate, input gate, output gate and cell state, respectively. Information is retained when the forget gate $f_{t}$ becomes 1 and eliminated when $f_{t}$ is set to 0.
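The role of the forget gate can be checked numerically with a small sketch of one LSTM step. The code below follows the six equations above term by term; the parameter dictionary, its key names and the one-unit toy values are assumptions chosen only to push the gates close to 0 or 1.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def affine(W_h, h, W_x, x, b):
    """Return W_h h + W_x x + b for list-of-rows matrices."""
    return [
        sum(wh * hv for wh, hv in zip(row_h, h))
        + sum(wx * xv for wx, xv in zip(row_x, x))
        + bias
        for row_h, row_x, bias in zip(W_h, W_x, b)
    ]

def lstm_step(h_prev, c_prev, x_t, p):
    """One LSTM step; p maps hypothetical names such as 'W_fh' to parameters."""
    f = [sigmoid(a) for a in affine(p["W_fh"], h_prev, p["W_fx"], x_t, p["b_f"])]
    i = [sigmoid(a) for a in affine(p["W_ih"], h_prev, p["W_ix"], x_t, p["b_i"])]
    c_cand = [math.tanh(a) for a in affine(p["W_ch"], h_prev, p["W_cx"], x_t, p["b_c"])]
    c = [ft * cp + it * cc for ft, cp, it, cc in zip(f, c_prev, i, c_cand)]
    o = [sigmoid(a) for a in affine(p["W_oh"], h_prev, p["W_ox"], x_t, p["b_o"])]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c

def toy_params(b_f, b_i):
    """One hidden unit, one input feature, all weights zero (illustration only)."""
    zero = [[0.0]]
    return {"W_fh": zero, "W_fx": zero, "b_f": [b_f],
            "W_ih": zero, "W_ix": zero, "b_i": [b_i],
            "W_ch": zero, "W_cx": zero, "b_c": [0.0],
            "W_oh": zero, "W_ox": zero, "b_o": [0.0]}

# Forget gate near 1: the previous cell state 0.7 is retained.
h_keep, c_keep = lstm_step([0.0], [0.7], [1.0], toy_params(b_f=50.0, b_i=-50.0))
# Forget gate near 0: the previous cell state is erased.
h_drop, c_drop = lstm_step([0.0], [0.7], [1.0], toy_params(b_f=-50.0, b_i=-50.0))
```

With the large positive forget bias, `c_keep` stays at the previous value 0.7; with the large negative bias, `c_drop` collapses to almost zero.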


Because LSTMs require powerful computing resources, we also use a lighter variation, the GRU, for optimization purposes. The GRU combines the input gate and forget gate into a single gate, namely the update gate. The mathematical formulas are expressed as follows:


$$
\begin{aligned}
r_{t}&=\sigma(W_{rh}h_{t-1}+W_{rx}x_{t}+b_{r}),\\
z_{t}&=\sigma(W_{zh}h_{t-1}+W_{zx}x_{t}+b_{z}),\\
h_{t}^{\prime}&=\tanh(W_{h^{\prime}h}(r_{t}\odot h_{t-1})+W_{h^{\prime}x}x_{t}+b_{z}),\\
h_{t}&=(1-z_{t})\odot h_{t-1}+z_{t}\odot h_{t}^{\prime}.
\end{aligned}
$$
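A single GRU step can be sketched in the same way to show how the update gate $z_{t}$ interpolates between the previous state and the candidate state. The key names and toy values are assumptions, and the candidate bias is passed as a separate hypothetical parameter `b_h`, whereas the equation above writes it as $b_{z}$.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def affine(W_h, h, W_x, x, b):
    """Return W_h h + W_x x + b for list-of-rows matrices."""
    return [
        sum(wh * hv for wh, hv in zip(row_h, h))
        + sum(wx * xv for wx, xv in zip(row_x, x))
        + bias
        for row_h, row_x, bias in zip(W_h, W_x, b)
    ]

def gru_step(h_prev, x_t, p):
    """One GRU step: reset gate r, update gate z, candidate h', new state h."""
    r = [sigmoid(a) for a in affine(p["W_rh"], h_prev, p["W_rx"], x_t, p["b_r"])]
    z = [sigmoid(a) for a in affine(p["W_zh"], h_prev, p["W_zx"], x_t, p["b_z"])]
    gated = [rt * hv for rt, hv in zip(r, h_prev)]  # r_t applied element-wise to h_{t-1}
    h_cand = [math.tanh(a) for a in affine(p["W_hh"], gated, p["W_hx"], x_t, p["b_h"])]
    return [(1.0 - zt) * hv + zt * hc for zt, hv, hc in zip(z, h_prev, h_cand)]

def toy_params(b_z):
    """One hidden unit, one input feature, all weights zero (illustration only)."""
    zero = [[0.0]]
    return {"W_rh": zero, "W_rx": zero, "b_r": [0.0],
            "W_zh": zero, "W_zx": zero, "b_z": [b_z],
            "W_hh": zero, "W_hx": zero, "b_h": [0.3]}

h_old = gru_step([0.4], [1.0], toy_params(b_z=-50.0))  # z near 0: keep h_{t-1}
h_new = gru_step([0.4], [1.0], toy_params(b_z=50.0))   # z near 1: take the candidate
```

In this toy setting, `h_old` stays at 0.4 and `h_new` equals the candidate value `tanh(0.3)`.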

Finally, while a typical RNN essentially takes only previous information, bidirectional RNNs integrate both past and future information:


$$
\begin{aligned}
h_{t}&=\sigma(W_{hx}x_{t}+W_{hh}h_{t-1}+b_{h}),\\
z_{t}&=\sigma(W_{ZX}x_{t}+W_{HX}h_{t+1}+b_{z}),\\
\hat{y}_{t}&=\mathrm{softmax}(W_{yh}h_{t}+W_{yz}z_{t}+b_{y}),
\end{aligned}
$$

where $h_{t-1}$ and $h_{t+1}$ indicate hidden cell states at the previous time step $(t-1)$ and the future time step $(t+1)$ .
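The bidirectional idea can be illustrated by running one recurrent cell from left to right and a second one from right to left, then pairing the two states at each time step. This sketch assumes the common BRNN convention in which the backward pass carries its own state $z_{t+1}$; that reading, the function names and the toy weights are assumptions and are not taken from the source.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def cell(prev, x, W_rec, W_in, b):
    """sigma(W_rec prev + W_in x + b) for list-of-rows matrices."""
    return [
        sigmoid(sum(wr * pv for wr, pv in zip(row_r, prev))
                + sum(wi * xv for wi, xv in zip(row_i, x))
                + bias)
        for row_r, row_i, bias in zip(W_rec, W_in, b)
    ]

def bidirectional(xs, W_hh, W_hx, b_h, W_zz, W_zx, b_z):
    """Return, for each time step, the forward state h_t followed by the backward state z_t."""
    forward, h = [], [0.0] * len(b_h)
    for x in xs:                      # past -> future
        h = cell(h, x, W_hh, W_hx, b_h)
        forward.append(h)
    backward, z = [], [0.0] * len(b_z)
    for x in reversed(xs):            # future -> past
        z = cell(z, x, W_zz, W_zx, b_z)
        backward.append(z)
    backward.reverse()                # realign with the time index
    return [hf + zb for hf, zb in zip(forward, backward)]

# Hypothetical toy setup: one unit per direction, one input feature.
xs = [[1.0], [0.0], [-1.0]]
states = bidirectional(xs, [[0.5]], [[1.0]], [0.0], [[0.5]], [[1.0]], [0.0])
```

A softmax layer applied to each concatenated pair then plays the role of $\hat{y}_{t}$, since a linear map on the concatenation is equivalent to $W_{yh}h_{t}+W_{yz}z_{t}$.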


For more details on the RNN, LSTM, GRU and BRNN models, please refer to the following articles [26], [27], [28], [29], [30], [26], [31] and [32], [33] respectively.


3.2 End-to-end Ensembles of Multiple Models


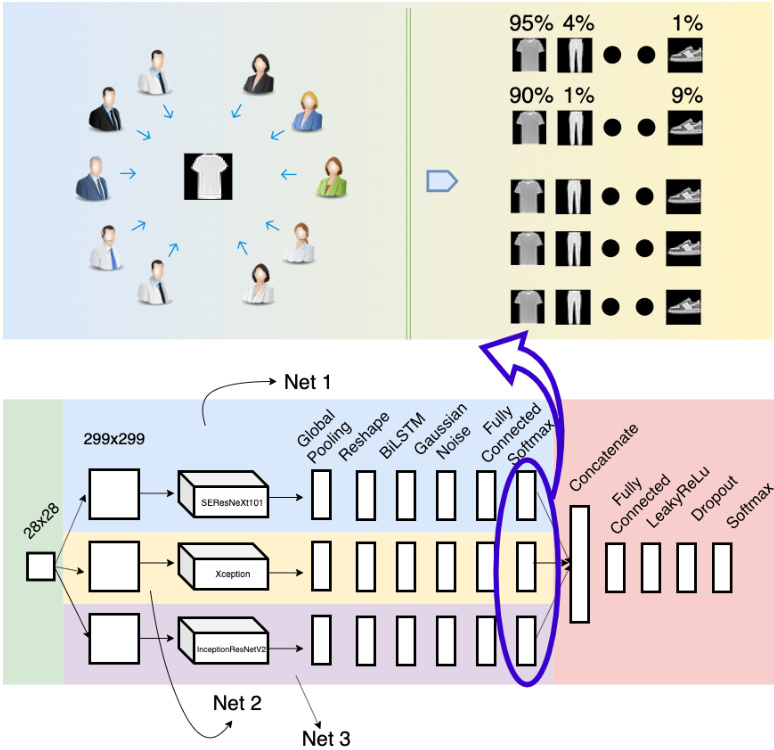

Our main idea for this design is that when several models are trained on a certain dataset, we would typically choose the model that yields the best accuracy. However, we could also construct a model that combines expertise from all the individual models. We illustrate this idea in Figure 1.


As shown in the upper part, each actor represents a trained single model. For each presented sample, each actor predicts the probability that the sample belongs to each category. These probabilities are then combined and utilized to train our model.


The bottom part of the figure represents our ConvNet design for this idea. We essentially select three models to make predictions instead of two. Using only two models may result in a situation where one model dominates the other. In other words, we would use the predictions primarily from only one model. Adding one additional model provides a balance that offsets this weakness of a two-model architecture. Note that we limit our designs to just three models because of resource limitations (i.e., GPU memory). We name this model E2E-3M, where "E2E" is an abbreviation of the "end-to-end" learning process [34], [35], [36], in which a model performs all phases from training until final prediction. The "3M" simply documents the combination of three models.


Each individual model (named Net 1, Net 2, and Net 3) is a fine-tuned model in which the last layer is removed and replaced by several additional layers, e.g., global pooling to reduce the network size and an RNN module (comprising a reshape layer and an RNN layer). The models also employ Gaussian noise to prevent overfitting and include a fully connected layer, and each model has its own softmax layer. The outputs from these three models are concatenated and utilized to train the subsequent neural network module, which consists of a fully connected layer, a LeakyReLU [37] layer, a dropout [38] layer and a softmax layer for classification.


Fig. 1. End-to-end Ensembles of Multiple Models: Concept and Design. The upper part of this image illustrates our key idea, in which several actors make predictions for a sample and output the probability of the sample belonging to each category. The lower part presents a ConvNet design in which three distinct and recent models are aggregated and trained using advanced neural networks. This design also illustrates our proposal of integrating an RNN into an image recognition model. (Best viewed in color)


Ensemble learning is a key aspect of this design. An ensemble refers to an aggregation of weaker models (base learners) combined to construct a model that performs more efficiently (a strong learner) [39]. The ensembling technique is more prevalent in machine learning than in deep learning, especially in the image recognition field, because convolution requires considerable computational power. Most of the recent related studies focus on a simple averaging or voting mechanism [40], [41], [42], [43], [44], and few have investigated integrating trainable neural networks [45], [46]. Our research differs from prior studies, because we study the design on a much larger scale using a larger number of up-to-date ConvNets.


Suppose that our ConvNet design has $n$ fine-tuned models or classifiers and that the dataset contains $c$ classes. The output of each classifier can be represented as a distribution vector:


$$

\Delta_{j}=[\delta_{1j}\quad\delta_{2j}\quad\ldots\quad\delta_{c j}],

$$


where


$$
\begin{gathered}
1\leq j\leq n,\\
0\leq\delta_{ij}\leq1\quad\forall\,1\leq i\leq c,\\
\sum_{i=1}^{c}\delta_{ij}=1.
\end{gathered}
$$

After concatenating the outputs of the $n$ classifiers, the distribution vector becomes


$$

\Delta=[\Delta_{1}\quad\Delta_{2}\quad...\quad\Delta_{n}].

$$


For formulaic convenience, we assume that the neural network has only one layer and that the number of neurons is equal to the number of classes. As usual, the network's weights, $\Theta$, are initialized randomly. The distribution vector is computed as follows:


$$
\Delta^{\prime}=\Theta\cdot\Delta=[\delta_{1}^{\prime}\quad\delta_{2}^{\prime}\quad\ldots\quad\delta_{c}^{\prime}],
$$

where


$$
\begin{gathered}
1\leq j\leq c,\\
\delta_{j}^{\prime}=\sum_{i=1}^{nc}\delta_{i}w_{ij}.
\end{gathered}
$$

Finally, after the softmax activation, the output is


$$
\eta_{j}^{\prime}=\frac{e^{\delta_{j}^{\prime}}}{\sum_{i=1}^{c}e^{\delta_{i}^{\prime}}}.
$$
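The simplified single-layer ensemble head described by these formulas can be written out directly. The sketch below concatenates the $n$ distribution vectors, applies an $nc\times c$ weight matrix and normalizes with softmax. The function name and the fixed weights are illustrative assumptions; in the actual design the weights are learned, and the head also contains LeakyReLU and dropout layers that are omitted here.

```python
import math

def softmax(v):
    m = max(v)  # subtract the maximum for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]

def ensemble_head(distributions, weights):
    """distributions: n lists of length c; weights: an (n*c) x c matrix w[i][j]."""
    delta = [p for dist in distributions for p in dist]  # concatenated vector
    c = len(weights[0])
    logits = [sum(delta[i] * weights[i][j] for i in range(len(delta)))
              for j in range(c)]                         # delta'_j
    return softmax(logits)                               # eta'_j

# Hypothetical example: n = 3 classifiers, c = 2 classes.
dists = [[0.9, 0.1], [0.6, 0.4], [0.2, 0.8]]
n, c = len(dists), len(dists[0])
# Fixed weights that simply add up the per-class probabilities (not learned).
weights = [[1.0 if i % c == j else 0.0 for j in range(c)] for i in range(n * c)]
eta = ensemble_head(dists, weights)
```

With these hand-picked weights the head reduces to summing the class probabilities before the softmax, so class 0 (total 1.7) wins over class 1 (total 1.3). A trained $\Theta$ can instead weight each classifier differently per class.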

4 EXPERIMENTS


In this section, we present the experiments used to evaluate our initial design (without an RNN) and then analyze the performance when RNNs are integrated. We also describe our end-to-end ensembles of multiple models, the developed training strategy, and the extension of the softmax layer. These experiments were performed on the iNaturalist’19 [47] and iCassava’19 Challenges [48], and on the Cifar-10 [49] and Fashion-MNIST [50] datasets. We also extend our experiments to Cifar-100 [49], SVHN [51] and Surrey [52].


4.1 Experiment 1


Deep learning [53] and convolutional neural networks (ConvNets) have achieved notable successes in the image recognition field. From the early LeNet model [54], first proposed several decades ago, to the recent AlexNet [55], Inception [2], [3], [56], ResNet [4], SENet [7] and EfficientNet [8] models, these ConvNets have leveraged automated classification to exceed human performance in several applications. This efficiency is due to the high availability of powerful computer hardware, specifically GPUs and big data.


In this subsection, we examine the significance of our design, which utilizes several ConvNets constructed based on leading architectures such as InceptionV3, ResNet50, Inception-ResNetV2, Xception, MobileNetV1 and SEResNeXt101. We adopt InceptionV3 as a baseline model since the popularity of ConvNets among deep learning researchers means they can often be used as standard testbeds. In addition, InceptionV3 is known for employing sliding kernels, e.g., $1\times1$, $3\times3$ or $5\times5$, in parallel, which essentially reduces the computation and increases the accuracy. We also use the simplest version of residual networks, i.e., ResNet50 (ResNet has several versions, including ResNet50, ResNet101 and ResNet152, named according to the number of layers), which introduces shortcut connections across network layers that greatly reduce the training time. In addition, we explore other ConvNets to facilitate the comparisons and evaluations.


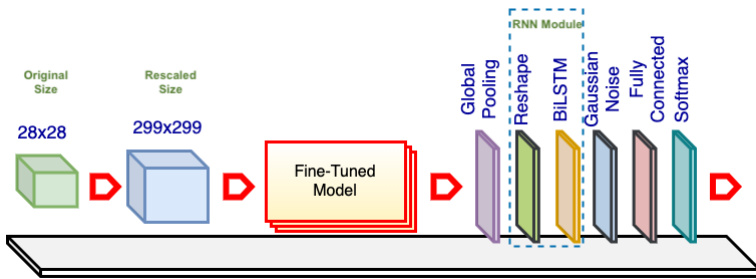

Fig. 2. Single E2E-3M Model. The fine-tuned model is a pretrained ConvNet (e.g., InceptionV3) with the top layers excluded and the weights retrained. The base model comes preloaded with ImageNet weights. The original images are rescaled as needed to match the required input size of the fine-tuned model or for image resolution analysis. The global pooling layer reduces the network's size, and the reshaping layer converts the data to a standard input form for the RNN layer. The Gaussian noise layer improves the variation among samples to prevent overfitting. The fully connected layer aims to improve classification. The softmax layer is another fully connected layer that has the same number of neurons as the number of dataset categories, and it utilizes softmax activation.


Our initial design is depicted in Figure 2. The core of this process implements one of the above-mentioned ConvNet models. While the architectures of these ConvNets differ, their top layers usually function as classifiers and can be replaced to adapt the models to different datasets. For example, Xception and ResNet50 use a global pooling layer and a fully connected layer as the top layers, while VGG19 [57] uses a flatten layer and two fully connected layers (the original article used max pooling, but for some reason the Keras implementation uses a flatten layer).


Our design adds global pooling to decrease the output size of the networks (this is in line with most ConvNets but contrasts with VGGs, in which flatten layers are utilized extensively). Notably, we also insert an RNN module to evaluate our proposed approach. The module comprises a reshape layer and an RNN layer as described in subsection 3.1. Moreover, we add a Gaussian noise layer to increase sample variation and prevent overfitting. In the fully connected layer, the number of neurons varies (e.g., 256, 512, 1024 or 2048); the actual value is based on the specific experiment. The softmax layer uses the same number of outputs as the number of iNaturalist’19 categories.
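The data flow through the pooling, reshape and noise layers can be sketched on a toy feature map. The text does not state the target shape of the reshape layer, so the `timesteps` split below is an assumption, as are the function names and the use of average (rather than max) pooling.

```python
import random

def global_average_pool(fmap):
    """fmap[h][w][c] -> list of C per-channel means."""
    H, W, C = len(fmap), len(fmap[0]), len(fmap[0][0])
    return [sum(fmap[h][w][c] for h in range(H) for w in range(W)) / (H * W)
            for c in range(C)]

def reshape_for_rnn(vec, timesteps):
    """Split a flat vector into `timesteps` equal chunks (assumed layout)."""
    if len(vec) % timesteps:
        raise ValueError("vector length must be divisible by timesteps")
    step = len(vec) // timesteps
    return [vec[t * step:(t + 1) * step] for t in range(timesteps)]

def add_gaussian_noise(vec, stddev=0.1, rng=random):
    """Additive zero-mean Gaussian noise, applied during training only."""
    return [v + rng.gauss(0.0, stddev) for v in vec]

# Toy 2 x 2 feature map with 4 channels; channel c holds the constant value c.
fmap = [[[float(c) for c in range(4)] for _ in range(2)] for _ in range(2)]
pooled = global_average_pool(fmap)            # one value per channel
sequence = reshape_for_rnn(pooled, timesteps=2)
noisy = add_gaussian_noise(pooled, stddev=0.1, rng=random.Random(0))
```

Pooling collapses the spatial grid to one number per channel, which is what makes the subsequent RNN and fully connected layers small compared with a flatten layer.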


All the networks’ layers from the ConvNets are unfrozen; we reuse only the models’ architectures and pretrained weights (these ConvNets are pretrained on the ImageNet dataset [58], [59]). Reusing the trained weights offers several advantages because retraining from scratch takes days, weeks or even months on a large dataset such as ImageNet. Typically, transfer learning can be used for most applications based on the concept that the early layers act like edge and curve filters; once trained, ConvNets can be reused on other, similar datasets [57]. However, when the target dataset differs substantially from the pretrained dataset, retraining or fine-tuning can increase the accuracy. To distinguish these ConvNets from the original ones, we refer to each model as a fine-tuned model.


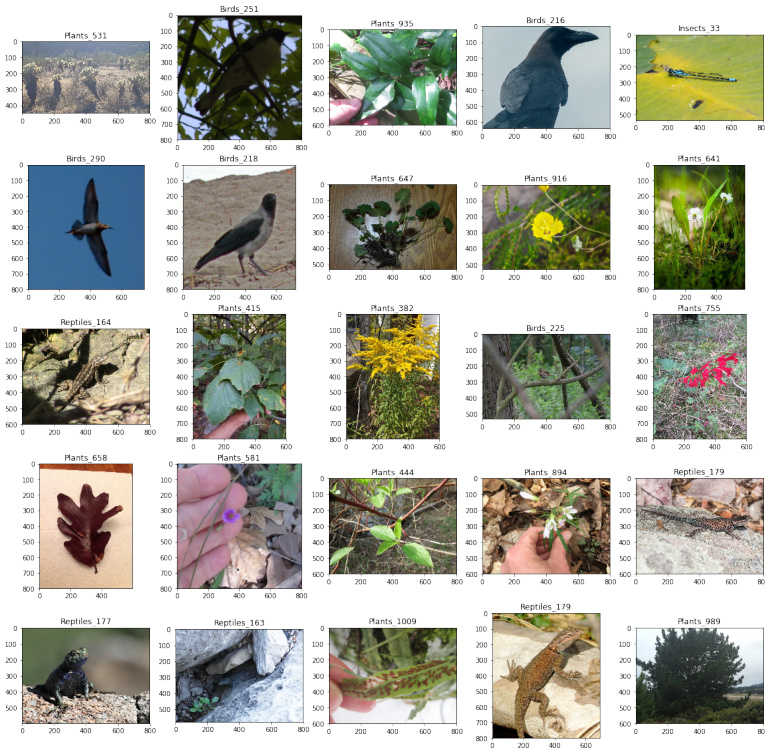

We conduct our experiments on the iNaturalist’19 dataset, which was originally compiled for the iNaturalist Challenge, conducted at the 6th Fine-grained Visual Categorization (FGVC6) workshop at CVPR 2019. In the computer vision area, FGVC has been a focus of researchers since approximately 2011 [60], [61], [62], although research on similar topics appeared long before [63], [64]. FGVC, or subordinate categorization, aims to classify visual objects at a more subtle level of detail than basic-level categories [65], for example, bird species rather than just birds [60], dog types [62], car brands [66], and aircraft models [67]. The iNaturalist dataset was created in line with the development of FGVC [47], and the dataset is comparable with ImageNet regarding size and category variation. The specific dataset used in this research (iNaturalist’19) focuses on more similar categories than previous versions and is composed of 1,010 species collected from approximately two hundred thousand real plants and animals. Figure 3 shows some random images from this dataset and indicates the species shown as well as their respective classes and subcategories.


Fig. 3. Random Samples from the iNaturalist’19 dataset. Each image is labeled with the species name and its subcategory.


The dataset is randomly split into training and test sets at a ratio of $80:20$. In addition, the images are resized to appropriate resolutions; e.g., for the InceptionV3 standard, the rescaled images have a size of $299\times299$. In some runs, the resolution is also increased to $401\times401$ or even $421\times421$. In the Gaussian noise layer, we set the amount of noise to 0.1, and in the fully connected layer, we chose 1,024 neurons based on experience, because it is impractical to evaluate all the layers with every setting.
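A random 80:20 split of this kind can be reproduced with a seeded shuffle. The sketch below is only illustrative: the function name, the seed and the integer placeholders standing in for image records are assumptions, and the source does not say whether the split was stratified by species.

```python
import random

def split_dataset(items, train_fraction=0.8, seed=0):
    """Shuffle a copy of `items` and cut it into training and test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)   # seeded for repeatability
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]

# Hypothetical stand-in for image records: 1,000 integer identifiers.
train, test = split_dataset(range(1000))
```

Fixing the seed keeps the partition identical across runs, so that models trained at different resolutions are compared on the same held-out images.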


We configured a Jupyter Notebook server running on a Linux operating system (OS) using 4 GPUs (GeForce® GTX 1080 Ti) each with 12 GB of RAM. For coding, we used Keras with a TensorFlow backend [68] as our platform. Keras is written in the Python programming language and has been developed as an independent wrapper that runs on top of several backend platforms, including TensorFlow. The project was recently acquired by Google Inc. and has become a part of TensorFlow.


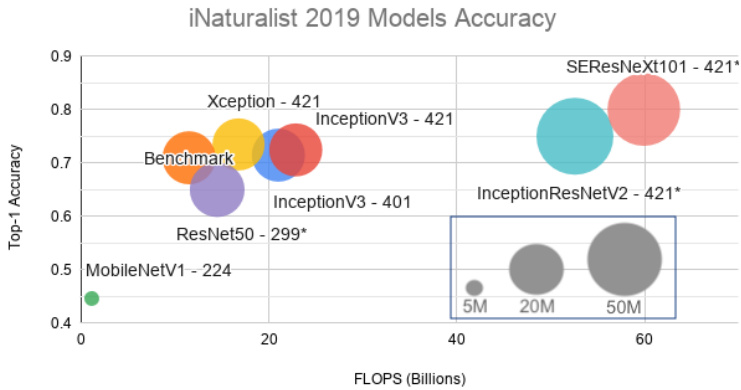

Figure 4 shows the results of this experiment, in which the top-1 accuracy is plotted against the floating-point operations per second (FLOPS). The size of each model, i.e., the total number of parameters, is also displayed. The top-1 accuracy was obtained by submitting predictions to the challenge website, obtaining the top-1 error from the private leaderboard and subtracting it from 1. During a tournament, the public leaderboard is computed based on 51% of the official test data. After the tournament, the private leaderboard contains a summary of all the data.


Fig. 4. iNaturalist’19 Model Accuracy. Here, "Benchmark" denotes the InceptionV3 result using the default input setting (an image resolution of $299\times299$). The sizes of the input images for the other models are indicated along with their respective names. For example, Xception-421 indicates that the input images for that model have been rescaled to $421\times421$.


As expected, a higher image resolution yields greater accuracy but also uses more computing power for the same model (InceptionV3). As a side note, our benchmark achieved an accuracy of 0.7097, which is marginally below that of the benchmark model on the Challenge website (0.7139). Because we have no knowledge of the organizers' ConvNet designs, settings and working environments, we cannot delve further into the reasons for this difference. Later, we increased the image resolutions from $299\times299$ to $401\times401$ and $421\times421$ and switched the fine-tuned models. Using Xception-421, our model obtained an accuracy of approximately 0.7347.


Because our server is shared, training takes approximately one week each time. This is the reason why we could increase the image size to only $421\times421$. In addition, we did not obtain the results for SEResNeXt101-421, InceptionResNetV2-421 and ResNet50-299 in this experiment, but projected their approximate accuracies; we will use these models in later experiments. Additionally, because adding an RNN module would substantially increase the training time, the RNN module is not analyzed on the iNaturalist’19 dataset.

数据集

4.2 Experiment 2

4.2 实验 2

As mentioned in the previous section, most of the research regarding RNNs has focused on sequential data or time series. Although a few studies have applied RNNs to images, their main goal has been to generate sequences of pixels rather than to perform image recognition directly. Our approach differs significantly from typical image recognition models, in which all the image pixels are presented simultaneously rather than in several time steps.

如前一节所述,大多数关于RNN的研究都集中在序列数据或时间序列上。尽管很少有人关注将RNN用于图像,但其主要目标是生成像素序列而非直接的图像识别。我们的方法与典型的图像识别模型有很大不同,后者所有图像像素是同时呈现的,而非分多个时间步呈现。

We systematically evaluated the proposed design using distinct recurrent neural networks. These models include a typical RNN, an advanced GRU and a bidirectional RNN (BiLSTM), and we compared them against a standard (STD) model without an RNN module. In addition, we selected representative fine-tuned models, namely, InceptionV3, Xception, ResNet50, Inception-ResNetV2, MobileNetV1, VGG19 and SEResNeXt101, for comparison and analysis.

我们系统评估了所提出的采用不同循环神经网络的设计方案。这些模型包括典型RNN、先进的GRU以及双向RNN——BiLSTM,并将其与不含RNN模块的标准(STD)模型进行对比。此外,我们选取了具有代表性的微调模型进行比较分析,包括Inception V3、Xception、ResNet50、Inception-ResNetV2、MobileNetV1、VGG19以及SEResNeXt 101。

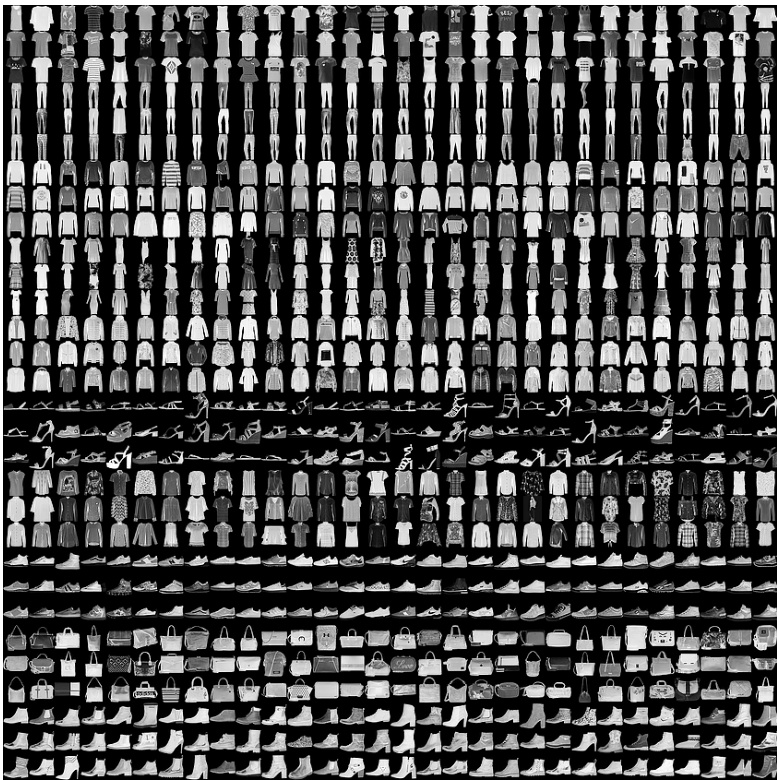

Fig. 5. Some Samples from the Fashion-MNIST dataset

图 5: Fashion-MNIST 数据集中的部分样本

In this experiment, we employ the Fashion-MNIST dataset, which was recently created by Zalando SE as a direct replacement for the MNIST dataset as a machine learning benchmark, because some models have already achieved nearly $100\%$ accuracy on MNIST. The Fashion-MNIST dataset contains the same amount of data as MNIST (60,000 training and 10,000 testing samples) and includes 10 categories. Figure 5 shows some samples from this dataset; each sample is a $28\times28$ grayscale image.

在本实验中,我们采用了由Zalando SE最新创建的Fashion-MNIST数据集。该数据集旨在作为MNIST数据集的直接替代品,成为机器学习的新基准,因为部分模型在MNIST上已能达到接近$100\%$的准确率。Fashion-MNIST与MNIST数据量相同(包含60,000个训练样本和10,000个测试样本),并涵盖10个类别。图5展示了该数据集中的部分样本:每个样本均为$28\times28$的灰度图像。

Our initial design (as discussed previously) is reused, now with the RNN module incorporated. The number of units for each RNN is set to 2,048 (in the BiLSTM, the number of units is 1,024); the number of time steps is simply one, so the entire image is presented at once. In addition, because the images in the Fashion-MNIST dataset are smaller than the desired resolutions (e.g., $244\times244$ or $299\times299$ for MobileNetV1 and InceptionV3, respectively), all the images are upsampled.

我们沿用了初始设计 (如前所述),并特别注明已整合RNN模块。每个RNN单元数设置为2,048 (BiLSTM中单元数为1,024);时间步数仅为1,表示每次呈现完整图像。此外,由于FashionMNIST数据集的图像尺寸小于目标分辨率 (例如MobileNetV1和InceptionV3所需的$244\times244$或$299\times299$),所有图像都进行了上采样处理。
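To make the one-time-step setting concrete, the sketch below implements a plain (Elman-style) RNN layer in pure Python and feeds it a sequence of length one. It is only an illustration under stated assumptions: the function name `simple_rnn`, the toy weight matrices and the all-zero initial hidden state are hypothetical choices that the text does not specify, and the tiny sizes stand in for the 2,048 units used in the experiments.

```python
import math

def simple_rnn(sequence, w_in, w_rec, bias):
    """Run a vanilla RNN over `sequence` and return the last hidden state.

    sequence: list of input vectors, one per time step.
    w_in:     units x features input weights.
    w_rec:    units x units recurrent weights.
    bias:     list with one entry per unit.
    Assumption: the initial hidden state is all zeros.
    """
    units = len(bias)
    hidden = [0.0] * units
    for x in sequence:
        new_hidden = []
        for u in range(units):
            total = bias[u]
            total += sum(w * xi for w, xi in zip(w_in[u], x))
            total += sum(w * hi for w, hi in zip(w_rec[u], hidden))
            new_hidden.append(math.tanh(total))
        hidden = new_hidden
    return hidden

# Toy example: a 3-dimensional feature vector and 2 recurrent units.
features = [0.5, -1.0, 2.0]
w_in = [[0.1, 0.2, 0.3], [-0.3, 0.0, 0.1]]
w_rec = [[0.5, -0.5], [0.25, 0.75]]
bias = [0.0, 0.1]

# One time step: the whole feature vector is shown to the RNN at once.
one_step_output = simple_rnn([features], w_in, w_rec, bias)
```

Under these assumptions (one time step, zero initial state), the recurrent term of a vanilla RNN contributes nothing, so each unit reduces to `tanh(w_in · x + bias)`.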

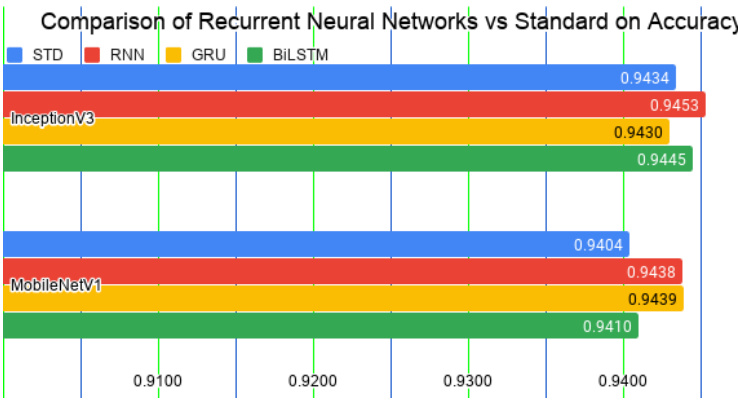

TABLE 1 Accuracy comparison of models using distinct recurrent neural networks built on InceptionV3 and MobileNetV1

表 1: 基于 Inception V3 和 MobileNetV1 构建的不同循环神经网络模型准确率对比

| Fine-tuned model | STD | RNN | GRU | BiLSTM |
|---|---|---|---|---|
| InceptionV3 | 0.9434 | 0.9453 | 0.9430 | 0.9445 |
| MobileNetV1 | 0.9404 | 0.9438 | 0.9439 | 0.9410 |

We performed these experiments on Google Colab$^{1}$, even though our server runs faster. The main reason is that Jupyter Notebook occupies all the GPUs for the first login session; in other words, only one program can execute with full capability. In contrast, Colab can run multiple environments in parallel. A secondary reason is that the virtual environment allows rapid development (i.e., it supports the installation of additional libraries and can run programs instantly). All the experiments are set to run for 12 hours; some finish sooner because the model starts to overfit, whereas others would need more than the maximum time allotted. We repeated each experiment 3 times. Moreover, in this study, we often submit the results to challenge websites and record only the highest accuracy rather than employing other metrics. The accuracy metric is defined as follows:

我们在Google Colab1上进行了这些实验,尽管我们的服务器运行速度更快。主要原因是Jupyter Notebook在首次登录时会占用所有GPU资源,换句话说,只有单个程序能全性能执行。相比之下,Colab可以并行运行多个环境。次要原因是虚拟环境支持快速开发(即支持安装额外库并能即时运行程序)。所有实验均设置为运行12小时:部分实验在过拟合前耗时较短,但其他实验会超出最大分配时长。每组实验重复3次。此外,本研究中我们常将结果提交至挑战网站,仅记录最高准确率而非采用其他指标。准确率指标定义如下:

$$
\mathrm{Accuracy}=\frac{\text{Number of correct predictions}}{\text{Total number of predictions made}}.
$$
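As a small worked example of the accuracy definition, the sketch below computes the metric for three repeated runs and keeps the highest value, mirroring the practice of recording only the best accuracy. The label and prediction lists are made-up illustrative data, and the helper name `accuracy` is an assumption rather than code from the paper.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("at least one prediction is required")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

# Hypothetical labels and three repeated runs of the same experiment.
labels = [0, 1, 2, 2, 1, 0, 1, 2]
runs = [
    [0, 1, 2, 1, 1, 0, 0, 2],  # 6 of 8 correct
    [0, 1, 2, 2, 1, 0, 1, 1],  # 7 of 8 correct
    [0, 2, 2, 2, 0, 0, 1, 1],  # 5 of 8 correct
]
run_accuracies = [accuracy(labels, preds) for preds in runs]
best_accuracy = max(run_accuracies)  # only the highest value is recorded
```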

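Table 1 compares the models that use different RNN modules built on InceptionV3 and MobileNetV1. The former is often chosen as a baseline model, whereas the latter is the lightest model used here in terms of parameters and computation. The results in Figure 6 show that the models with the additional RNN modules generally achieve higher accuracy than the STD models: in Table 1, five of the six RNN variants exceed their STD counterparts, with gains of up to 0.0035 (MobileNetV1 with GRU), and only InceptionV3 with GRU is slightly lower (0.9430 vs. 0.9434).

表 1 对比了基于 InceptionV3 和 MobileNetV1 构建、采用不同 RNN 模块的模型。前者常被选作基线模型,而后者是本文所用参数量和计算量最小的模型。图 6 的结果显示,增加 RNN 模块的模型总体上比 STD 模型取得了更高的准确率:在表 1 中,六个 RNN 变体中有五个超过了对应的 STD 模型,最大提升为 0.0035 (MobileNetV1 搭配 GRU),仅 InceptionV3 搭配 GRU 略低 (0.9430 对 0.9434)。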

Fig. 6. Accuracy comparison of models integrated with recurrent neural networks vs. the standard model (STD). The integrated models significantly outperform the STD models.

图 6: 集成循环神经网络 (RNN) 的模型与标准模型 (STD) 的准确率对比。集成模型显著优于标准模型。

$^{1}$ https://colab.research.google.com/, which began as an internal project built on Jupyter Notebook and was opened for public use in 2018. At the time of this writing, the virtual environment supports only a single 12 GB NVIDIA Tesla K80 GPU.

$^{1}$ https://colab.research.google.com/,最初是基于Jupyter Notebook构建的内部项目,于2018年开放公众使用。截至本文撰写时,该虚拟环境仅支持单个12 GB NVIDIA Tesla K80 GPU。

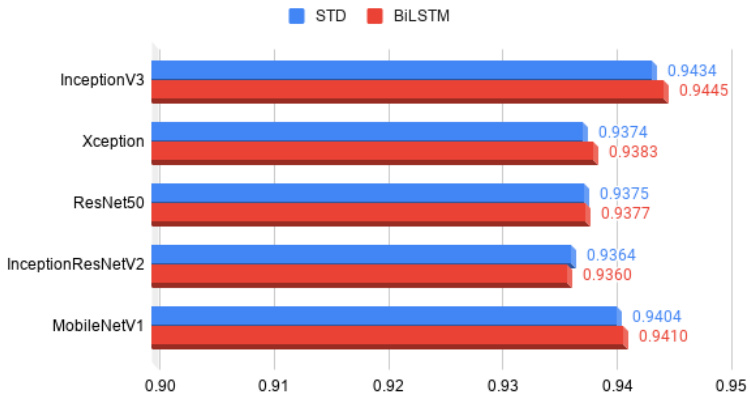

TABLE 2 Comparison of BiLSTM and STD on different fine-tuned models, including InceptionV3, Xception, ResNet50, Inception-ResNetV2, MobileNetV1, VGG19 and SEResNeXt101.

表 2: BiLSTM 和 STD 在不同微调模型上的对比,包括 Inception V3、Xception、ResNet50、Inception-ResNetV2、MobileNetV1、VGG19 和 SEResNeXt 101。

| Fine-tuned model | STD | BiLSTM |
|---|---|---|
| InceptionV3 | 0.9434 | 0.9445 |
| Xception | 0.9374 | 0.9383 |
| ResNet50 | 0.9375 | 0.9377 |
| Inception-ResNetV2 | 0.9364 | 0.9360 |
| MobileNetV1 | 0.9404 | 0.9410 |
| VGG19 | 0.9075 | N/A |
| SEResNeXt101 | N/A | N/A |

Fig. 7. Comparison of the accuracy of BiLSTM and STD models using InceptionV3, Xception, ResNet50, Inception-ResNetV2 and MobileNetV1. The BiLSTM models substantially outperform the STD models in several instances.

图 7: 使用 Inception V3、Xception、ResNet50、Inception-ResNetV2 和 MobileNetV1 的 BiLSTM 与 STD 模型准确率对比。BiLSTM 模型在多数情况下显著优于 STD 模型。

We also extended this experiment to cover more fine-tuned models, namely Xception, ResNet50, Inception-ResNetV2, VGG19 and SEResNeXt101. Table 2 compares the models integrated with the BiLSTM module against the standard models. Most of the models integrated with a BiLSTM outperform their STD counterparts, although the margins are small (0.0002 to 0.0011); the exception is Inception-ResNetV2 (0.9360 vs. 0.9364). Please note that the VGG19 model required excessive training time; it did not reach the same accuracy level as the other models even after 12 hours of training. Similarly, we were unable to obtain results for the VGG19-BiLSTM or SEResNeXt101 models. Therefore, Figure 7 shows only the results for InceptionV3, Xception, ResNet50, Inception-ResNetV2 and MobileNetV1.

我们还扩展了实验范围,纳入了更多微调模型,包括Xception、ResNet50、Inception-ResNetV2、VGG19和SEResNeXt101。表2展示了集成BiLSTM模块的模型与标准模型的对比结果。大多数集成BiLSTM的模型优于对应的标准模型,但幅度较小(0.0002至0.0011);例外是Inception-ResNetV2(0.9360对0.9364)。需注意,VGG19模型的训练耗时过长,即使经过12小时训练仍未达到其他模型的精度水平。同样,我们未能获得VGG19-BiLSTM和SEResNeXt101模型的实验结果。因此,图7仅展示了InceptionV3、Xception、ResNet50、Inception-ResNetV2和MobileNetV1的结果。

4.3 Experiment 3

4.3 实验3

Often, when training a model, we randomly split a dataset into training and testing sets with a desired ratio. Then, we repeat the evaluation several times and finally report the results using a summary measure, e.g., the mean and standard deviation of the accuracy. However, in competitions such as those on Kaggle, the test set is completely separate from the training set. If we naively divide the training set into a training set and a validation set, we face a dilemma: not all the samples of the original training set can be used for training because a portion of the dataset is always needed for validation. To solve this problem, we apply $k$-fold cross-validation to the training set by dividing the dataset into $k$ subsets, of which one is reserved for validation. We extend this process by also making predictions on the official test set. This method allows our models (one model for each subset) to learn from all the images in the official training set.

在训练模型时,我们通常会将数据集按所需比例随机划分为训练集和测试集。随后进行多次评估,最终通过某种测量方法(如准确率均值和标准差)获得结果。然而,在Kaggle等竞赛中,测试集与训练集是完全分离的。若简单地将训练集划分为训练集和验证集,就会面临原始训练集无法全部用于训练的困境,因为总需要保留部分数据用于验证。为解决这个问题,我们对训练集采用$k$折交叉验证:将数据集分成$k$个子集,其中留一个子集作为验证集。通过扩展这一流程并对官方测试集进行预测,我们的模型(每个子集对应一个模型)就能从官方训练集的所有图像中学习。
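The splitting step described above can be sketched as follows. This is a minimal standard-library illustration, not the authors' implementation: the helper name `k_fold_splits`, the shuffling seed and the round-robin assignment of samples to folds are assumptions, while the values 9,436 and 5 are taken from the iCassava setup described in this section.

```python
import random

def k_fold_splits(num_samples, k, seed=0):
    """Yield (train_indices, val_indices) for each of the k folds."""
    if not 2 <= k <= num_samples:
        raise ValueError("k must satisfy 2 <= k <= num_samples")
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th shuffled index, so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        val = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val

# Example: 5 folds over a training set of 9,436 images.
# In each fold, one model would be trained on `train`, validated on `val`,
# and then used to predict the official test set.
splits = list(k_fold_splits(num_samples=9436, k=5))
```

Every image appears in exactly one validation fold and in the training part of the other four folds, which is why the set of five models collectively sees the whole official training set.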

We also attempted to increase model robustness via data augmentation techniques. The goal of such techniques is to transform an original training dataset into an expanded dataset whose true labels are known [69], [70], [71]. Importantly, this teaches the model to be invariant to input variations [72]. For example, flipping an image of a car horizontally does not change its category. We also applied the augmentation approach from [55] to the test set. In this approach, a sample is cropped multiple times and the model makes a prediction for each crop. The procedure has recently become standard practice in image recognition tasks and is referred to as "test time augmentation." In this study, we cropped the images at random locations instead of at only the four corners and the center. In addition, we utilized the most prevalent augmentation techniques for geometric and texture transformations, such as rotation, width/height shifts, shear and zoom, horizontal and vertical flips and channel shift.

我们还尝试通过数据增强技术提高模型的鲁棒性。这类技术的目标是将原始训练数据集转换为已知真实标签的扩展数据集 [69], [70], [71]。关键在于,这能教会模型对输入变化保持不变性且不受其影响 [72]。例如,将汽车图片水平翻转不会改变其对应类别。我们在测试集上应用了 [55] 提出的增强方法:对样本进行多次裁剪,模型对每个裁剪实例进行预测。该流程近期已成为图像识别任务的标准实践,称为"测试时增强 (test time augmentation)"。本研究中,我们采用随机位置裁剪而非仅限四角和中心裁剪。此外,我们运用了最普遍的几何与纹理变换增强技术,包括旋转、宽/高偏移、剪切缩放、水平/垂直翻转以及通道偏移。
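The random-crop variant of test time augmentation can be illustrated with the sketch below. It is a hedged example rather than the authors' code: the number of crops, the crop size, the averaging of per-crop probabilities and the stand-in classifier `toy_model` are all assumptions introduced for illustration (the text states only that each crop is predicted separately).

```python
import random

def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window from a 2-D image at a random location."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop_h)
    left = rng.randint(0, width - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

def predict_with_tta(model, image, crop_h, crop_w, num_crops=5, seed=0):
    """Average the class probabilities predicted for several random crops."""
    rng = random.Random(seed)
    totals = None
    for _ in range(num_crops):
        probs = model(random_crop(image, crop_h, crop_w, rng))
        if totals is None:
            totals = [0.0] * len(probs)
        totals = [t + p for t, p in zip(totals, probs)]
    return [t / num_crops for t in totals]

def toy_model(crop):
    """Stand-in classifier (hypothetical): two-class score from mean intensity."""
    pixels = [value for row in crop for value in row]
    bright = sum(pixels) / (255.0 * len(pixels))
    return [1.0 - bright, bright]

# An 8x8 grayscale image whose intensity grows from left to right.
image = [[32 * col for col in range(8)] for _ in range(8)]
tta_probs = predict_with_tta(toy_model, image, crop_h=6, crop_w=6)
```

In practice, `toy_model` would be replaced by the trained network's prediction function, and the crops would be taken from the full-resolution test images.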

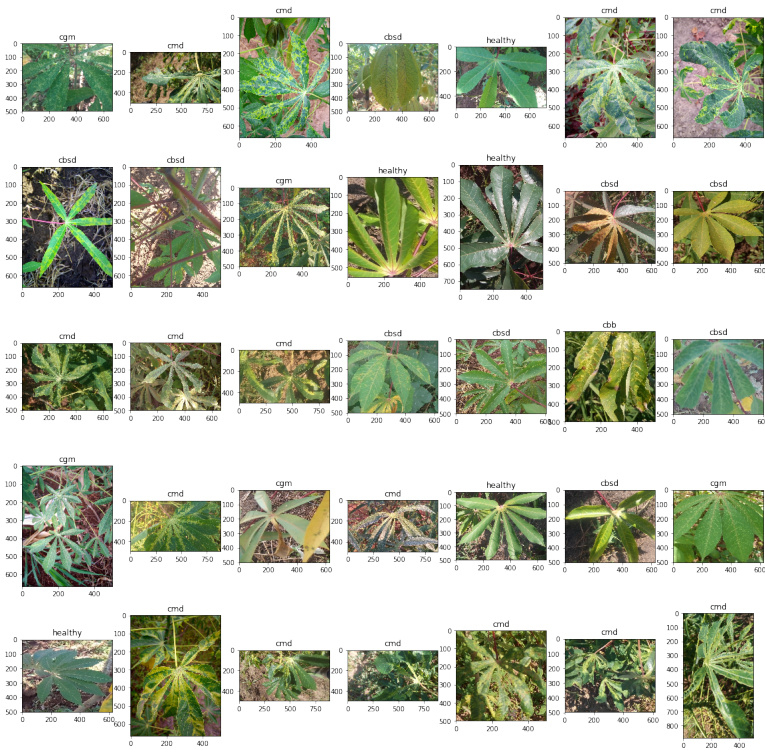

Fig. 8. Random Samples from the iCassava dataset. CMD, CGM, CBB and CBSD denote cassava mosaic disease, cassava green mite disease, cassava bacterial blight, and cassava brown streak disease, respectively.

图 8: iCassava数据集中的随机样本。CMD、CGM、CBB和CBSD分别表示木薯花叶病、木薯绿螨病、木薯细菌性枯萎病和木薯褐条病。

We also applied state-of-the-art ensemble learning because an ensemble is generally more accurate than a prediction from a single model. We use a simple averaging approach to aggregate all the models (a.k.a. AVG-3M). Additionally, it is important to ensure variety among the fine-tuned models to increase classifier diversity because combining multiple redundant classifiers would be meaningless. We finally chose the SEResNeXt101, Xception and Inception-ResNetV2 fine-tuned models, as these ConvNets yield higher results than the others. Please note that the RNN module (including the reshape and BiLSTM layers) is excluded.

我们还应用了最先进的集成学习技术,因为该技术通常比单一模型的预测更准确。我们采用简单的平均方法聚合所有模型(即AVG-3M)。此外,确保微调模型的多样性以提升分类器差异性至关重要,因为组合多个冗余分类器毫无意义。最终我们选择了SEResNeXt101、Xception和Inception-ResNetV2微调模型,这些卷积网络的性能优于其他模型。需注意,RNN模块(包括重塑层和双向LSTM层)被排除在外。
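A simple averaging ensemble of three models can be sketched as shown below. The example assumes that the averaging is performed over per-class probabilities (the text does not say whether probabilities or other scores are averaged), and the probability values and the names `average_ensemble` and `argmax` are hypothetical.

```python
def average_ensemble(model_probs):
    """Average per-class probabilities over models for every sample.

    model_probs[m][n][c] is the probability that model m assigns to
    class c for sample n.
    """
    num_models = len(model_probs)
    num_samples = len(model_probs[0])
    averaged = []
    for n in range(num_samples):
        per_model = [model_probs[m][n] for m in range(num_models)]
        averaged.append([sum(ps) / num_models for ps in zip(*per_model)])
    return averaged

def argmax(values):
    """Index of the largest value (first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)

# Hypothetical outputs of three models for two samples and three classes.
probs_a = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]]
probs_b = [[0.2, 0.5, 0.3], [0.1, 0.3, 0.6]]
probs_c = [[0.5, 0.4, 0.1], [0.1, 0.2, 0.7]]

avg_probs = average_ensemble([probs_a, probs_b, probs_c])
predictions = [argmax(p) for p in avg_probs]
```

For the first sample, the second model alone would choose class 1, but the averaged probabilities favor class 0, which illustrates how diverse models can correct one another.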

One last crucial technique is our training strategy, which helps to reduce the loss and increase the accuracy by searching for a better minimum of the loss function (more details will be presented in the next section).

最后一项关键技术是我们的训练策略,它通过寻找损失函数更好的极小值来帮助减少损失并提高准确度 (更多细节将在下一节中介绍)。

We evaluated our approach using the iCassava Challenge dataset, which was also compiled for the FGVC6 workshop at CVPR19. In the iCassava dataset, the leaf images of cassava plants are divided into 4 disease categories (cassava mosaic disease, cassava green mite disease, cassava bacterial blight and cassava brown streak disease) and 1 category of healthy plants, comprising 9,436 labeled images in total. The challenge was organized on the Kaggle website$^{2}$, ran from the 26th of April to the 2nd of June 2019, and attracted nearly 100 teams from around the world. The proposed models were evaluated on 3,774 official test samples, and the results were submitted to the website. The public leaderboard is computed from $40\%$ of the test data, whereas the private leaderboard is computed from all the test data. Figure 8 shows some random samples from this dataset.

我们使用iCassava Challenge数据集评估了我们的方法,该数据集也是为CVPR19的FGVC6研讨会所整理。在iCassava数据集中,木薯植物的叶片图像被分为4种病害类别:木薯花叶病、木薯绿螨病、木薯细菌性枯萎病和木薯褐条病,以及1类健康植物,共包含9,436张标注图像。该挑战赛于2019年4月26日至6月2日在Kaggle网站2上举办,吸引了来自全球近100支团队参赛。所提出的模型在3,774个官方测试样本上进行了评估,结果提交至网站。公开排行榜基于40%的测试数据计算,而私有排行榜则基于全部测试数据计算。图8展示了该数据集中的一些随机样本。

The iCassava official training set is split into 5 subsets for $k$-fold cross-validation ($k=5$): each subset in turn is held out for testing while the others are used for training. We performed these experiments on a server equipped with four GeForce® GTX 1080 Ti GPUs, each with 12 GB of RAM.

iCassava官方训练集被划分为5个子集用于k折交叉验证,其中1个子集留作测试,其余子集依次用于训练。我们在配备GeForce