Shrinking Class Space for Enhanced Certainty in Semi-Supervised Learning

Abstract

Semi-supervised learning is attracting growing attention due to its success in exploiting unlabeled data. To mitigate potentially incorrect pseudo labels, recent frameworks mostly set a fixed confidence threshold to discard uncertain samples. This practice ensures high-quality pseudo labels, but incurs a relatively low utilization of the whole unlabeled set. In this work, our key insight is that these uncertain samples can be turned into certain ones, as long as the confusion classes for the top-1 class are detected and removed. Motivated by this, we propose a novel method dubbed ShrinkMatch to learn from uncertain samples. For each uncertain sample, it adaptively seeks a shrunk class space, which contains only the original top-1 class and the remaining less likely classes. Since the confusion classes are removed in this space, the re-calculated top-1 confidence can satisfy the pre-defined threshold. We then impose a consistency regularization between a pair of strongly and weakly augmented samples in the shrunk space to obtain discriminative representations. Furthermore, considering the varied reliability among uncertain samples and the gradually improved model during training, we correspondingly design two reweighting principles for our uncertain loss. Our method exhibits impressive performance on widely adopted benchmarks.

1. Introduction

In the last decade, the computer vision community has witnessed inspiring progress, thanks to large-scale datasets [21, 10]. Nevertheless, annotating massive numbers of images is laborious and costly, which hinders this progress from benefiting a broader range of real-world scenarios. To address this, semi-supervised learning (SSL) was proposed to utilize unlabeled data with the assistance of limited labeled data.

Frameworks in SSL are typically based on pseudo labeling. Briefly, the model acquires knowledge from the labeled data and then assigns predictions to the unlabeled data. The two sources of data are finally combined to train a better model. During this process, predictions on unlabeled data are clearly not always reliable. If the model is iteratively trained with incorrect pseudo labels, it will suffer from confirmation bias [1]. To address this dilemma, recent works [28] simply set a fixed confidence threshold to discard potentially unreliable samples. This simple strategy effectively retains high-quality pseudo labels, but it also incurs a low utilization of the whole unlabeled set. As evidenced by our pilot study on CIFAR-100 [17], nearly 20% of unlabeled images are filtered out for not satisfying the threshold of 0.95. Instead of blindly throwing them away, we believe there should exist a more promising approach. This work aims to fully leverage previously uncertain samples in an informative yet safe manner.

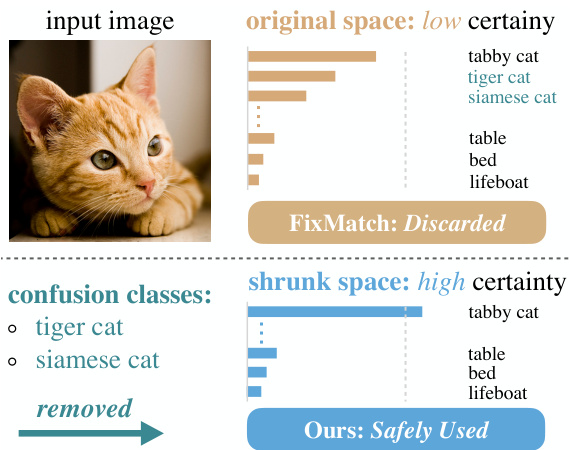

Figure 1: Illustration of our motivation. Due to confusion classes for the top-1 class, the certainty fails to reach the pre-defined threshold (gray dotted line). FixMatch discards such uncertain samples. Our method, however, detects and removes confusion classes to enhance certainty, thereby enabling full and safe utilization of all unlabeled images.

So first, why are these samples uncertain? According to our observations on CIFAR-100 and ImageNet, although the top-1 accuracy could be low, the top-5 accuracy is much higher. This indicates that, in most cases, the model struggles to discriminate among only a small portion of classes. As illustrated in Fig. 1, given a cat image, the model is not sure whether it belongs to tabby cat, tiger cat, or other cats. On the other hand, it is absolutely certain that the object is more like a tabby cat than a table or anything else. In other words, the model can reliably distinguish the top class from the remaining less likely classes.

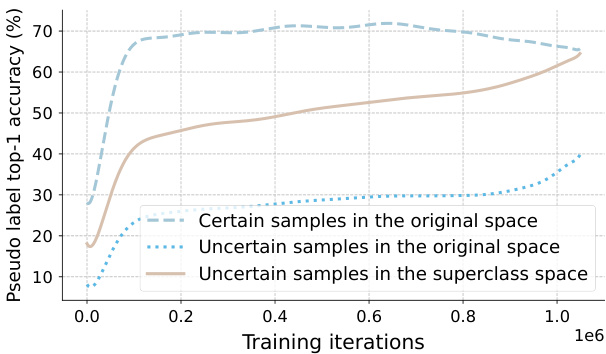

Figure 2: Pseudo label accuracy on CIFAR-100 with 400 labels. We highlight that even for uncertain samples, their top-1 predictions are of high accuracy in the superclass space (20 classes). This accuracy can even be comparable to the delicately selected certain samples in the original class space.

Motivated by these observations, we propose a novel method dubbed ShrinkMatch to learn from uncertain samples. The prediction fails to satisfy the pre-defined threshold due to the existence of confusion classes for the top-1 class. Hence, our approach adaptively seeks a shrunk class space where the confusion classes are removed, so that the re-calculated confidence reaches the original threshold. Moreover, the obtained shrunk class space is also required to be the largest among those that can satisfy the threshold. In short, we seek a certain and largest shrunk space for each uncertain sample. Then, logits of the strongly augmented image are correspondingly gathered in the new space, and a consistency regularization is imposed between the shrunk weak and strong predictions.

Note that the confusion classes are detected and removed in a fully automatic and instance-adaptive fashion. Moreover, even if the predicted top-1 class is not fully in line with the ground truth label, they mostly belong to the same superclass. To verify this, as shown in Fig. 2, uncertain samples exhibit much higher pseudo label accuracy in the superclass space than in the original space. This accuracy is even comparable to that of certain samples in the original class space. Therefore, contrasting these ground truth-related classes against the remaining unlikely classes is still highly beneficial, yielding more discriminative representations. To avoid affecting the main classifier, we further adopt an auxiliary classifier to disentangle the learning in the shrunk space.

Despite its effectiveness, the above optimization target still has two main drawbacks. (1) First, it treats all uncertain samples equally. In the original class space, however, the top-1 confidence of different uncertain samples can vary dramatically, and samples with larger confidence should clearly be given more importance. To this end, we propose to balance different uncertain samples by their confidence in the original space. (2) Moreover, the regularization term also overlooks the gradually improved model state during training. At the start of training, there are abundant uncertain samples, but their predictions are extremely noisy, or even random, so even the highest scored class may share no relationship with the true class. As training proceeds, the top classes become reliable. Considering this, we further propose to adaptively reweight the uncertain loss according to the model state. The model state is tracked and approximately estimated by performing an exponential moving average on the proportion of certain samples in each mini-batch. With the two reweighting principles, the model becomes more stable and avoids accumulating much noise from uncertain samples, especially at early training iterations.

To summarize, our contributions lie in three aspects:

• We first point out that low certainty is typically caused by a small portion of confusion classes. To enhance the certainty, we propose to shrink the original class space by adaptively detecting and removing the confusion classes for the top-1 class, so that it becomes certain in the new space.

• We reweight the uncertain loss from two perspectives: the image-based varied reliability among different uncertain samples, and the model-based gradually improved state as training proceeds.

• Our proposed ShrinkMatch establishes new state-of-the-art results on widely acknowledged benchmarks.

2. Related Work

Semi-supervised learning (SSL). The primary concern in SSL [19, 28, 36, 20, 25, 16, 37, 35, 41, 43, 14, 24, 29, 23, 4, 30, 11, 33, 2, 42] is to design effective constraints for unlabeled samples. Decades ago, pioneering works [19, 38] integrated unlabeled data by assigning pseudo labels to them, using the knowledge acquired from labeled data. In the era of deep learning, subsequent methods mainly follow such a bootstrapping fashion, but greatly boost it with some key components. Specifically, to enhance the quality of pseudo labels, Π-model [18] and Mean Teacher [31] ensemble model predictions and model parameters, respectively. Later works start to exploit the role of perturbations. Within this trend, [27] proposes to apply stochastic perturbations on inputs or features, and to enforce consistency across these predictions. Then, UDA [34] emphasizes the necessity of strengthening the perturbation pool. It also follows VAT [22] and MixMatch [3] to supervise the prediction under strong perturbations with that under weak perturbations. Since then, weak-to-strong consistency regularization has become a standard practice in SSL. Eventually, the milestone work FixMatch [28] presents a simplified framework using a fixed confidence threshold to discard uncertain samples. Our ShrinkMatch is built upon FixMatch. However, we highlight the value of previously neglected uncertain samples, and leverage them in an informative yet safe manner.

More recently, DST [7] decouples the generation and utilization of pseudo labels with a main and an auxiliary head, respectively. Besides, SimMatch [45] explores instance-level relationships with labeled embeddings to supplement the original class-level relations. Compared with them, our ShrinkMatch achieves larger improvements.

Defining uncertain samples. Earlier works estimate the uncertainty with Bayesian Neural Networks [15], or its faster approximation, e.g., Monte Carlo Dropout [12]. Some other works measure the prediction disagreement among multiple randomly augmented inputs [32]. The latest trend is to directly use the entropy of predictions [39], cross entropy [36], or softmax confidence [28] as a measurement for uncertainty. Our work is not aimed at the optimal uncertainty estimation strategy, so we adopt the simplest solution from FixMatch, i.e., using the maximum softmax output as the certainty.

Utilizing uncertain samples. UPS [26] leverages negative class labels whose confidence is below a pre-defined threshold, from a reversed multi-label classification perspective. In comparison, our model is forced to identify the most likely class without being misled by the less likely ones. Our supervision on uncertain samples is therefore more informative and produces more discriminative representations. Moreover, we do not introduce any extra hyper-parameters, e.g., the lower threshold in [26], into our framework.

3. Method

We first provide some notations and review a common practice in semi-supervised learning (SSL) (Sec. 3.1). Next, we present our ShrinkMatch in detail (Sec. 3.2 and Sec. 3.3). Finally, we summarize our approach and provide a further discussion (Sec. 3.4 and Sec. 3.5).

3.1. Preliminaries

Semi-supervised learning aims to learn a model with limited labeled images $\mathcal{D}^{l}=\{(x_{k},y_{k})\}$, aided by a large number of unlabeled images $\mathcal{D}^{u}=\{u_{k}\}$. Recent frameworks commonly follow the FixMatch practice. Concretely, an unlabeled image $u$ is first transformed by a weak augmentation pool $\mathcal{A}^{w}$ and a strong augmentation pool $\mathcal{A}^{s}$ to yield a pair of weakly and strongly augmented images $(u^{w},u^{s})$. Then, they are fed into the model together to produce corresponding predictions $(p^{w},p^{s})$. Typically, $p^{w}$ is of much higher accuracy than $p^{s}$, while $p^{s}$ is beneficial to learning. Therefore, $p^{w}$ serves as the pseudo label for $p^{s}$. Moreover, a core practice introduced by FixMatch is that, to improve the quality of selected pseudo labels, a fixed confidence threshold is pre-defined to abandon uncertain ones. So the unsupervised loss $\mathcal{L}_{u}$ can be formulated as:

$$
\mathcal{L}_{u}=\frac{1}{B_{u}}\sum_{k=1}^{B_{u}}\mathbb{1}(\xi(p_{k}^{w})\geq\tau)\cdot\mathrm{H}(p_{k}^{w},p_{k}^{s}), \tag{1}
$$

where $B_{u}$ is the batch size of unlabeled images and $\tau$ is the pre-defined threshold. $\xi(p_{k}^{w})$ computes the confidence of logits $p_{k}^{w}$ by $\xi(\cdot)=\max(\sigma(\cdot))$, where $\sigma$ is the softmax function. $\mathrm{H}$ denotes the consistency regularization between the two distributions. It is typically the cross entropy loss.

In addition, the labeled images are learned with a regular cross entropy loss to obtain the supervised loss $\mathcal{L}_{x}$ . The overall loss in each mini-batch then will be:

$$
\mathcal{L}=\mathcal{L}_{x}+\lambda_{u}\cdot\mathcal{L}_{u}, \tag{2}
$$

where $\lambda_{u}$ acts as a trade-off term between the two losses.
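The FixMatch objective reviewed above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the authors' implementation: the function names `softmax`, `fixmatch_unsup_loss`, and `total_loss` are hypothetical, $\mathrm{H}$ is taken to be cross entropy against the hard pseudo label (the argmax of the weak prediction), and logits are plain lists of floats.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def fixmatch_unsup_loss(weak_logits, strong_logits, tau=0.95):
    """Masked consistency loss in the spirit of Eq. (1).

    Assumption: H is cross entropy with a hard pseudo label.
    """
    batch_loss = 0.0
    for p_w, p_s in zip(weak_logits, strong_logits):
        probs_w = softmax(p_w)
        confidence = max(probs_w)              # xi(p_w)
        if confidence >= tau:                  # indicator 1(xi(p_w) >= tau)
            pseudo_label = probs_w.index(confidence)
            batch_loss += -math.log(softmax(p_s)[pseudo_label])
    # Average over the full unlabeled batch B_u, not only the kept samples.
    return batch_loss / len(weak_logits)

def total_loss(sup_loss, unsup_loss, lambda_u=1.0):
    """Overall mini-batch loss: supervised term plus weighted unsupervised term."""
    return sup_loss + lambda_u * unsup_loss
```

Note that uncertain samples contribute nothing to the sum while still counting in the denominator $B_{u}$, which is exactly the low utilization that the next section sets out to address.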

3.2. Shrinking the Class Space for Certainty

Our motivation. As reviewed above, FixMatch discards the samples whose confidence is lower than a pre-defined threshold, because their pseudo labels are empirically found to be relatively noisier. These samples are named uncertain samples in this work. Although this practice retains high-quality pseudo labels, it incurs a low utilization of the whole unlabeled set, especially when the scenario is challenging and the selection criterion is strict. Take the CIFAR-100 dataset as an example: with a common threshold of 0.95 and 4 labels per class, nearly 20% of unlabeled samples are ignored due to their low certainty. We argue that these uncertain samples can still benefit the model optimization, as long as we can design appropriate constraints (loss functions) on them. So we first investigate the cause of low certainty to gain better intuitions.

The reason for the low certainty of an unlabeled image is that, the model tends to be confused among some top classes. For example, given a cat image, the score of the class tabby cat and class tiger cat may be both high, so the model is not absolutely certain what the concrete class is. Motivated by this observation, we propose to shrink the original class space via adaptively detecting and removing the confusion classes for the top-1 class. Then the shrunk space is only composed of the original top-1 class, as well as the remaining less likely classes. After this process, re-calculated confidence of the top-1 class will satisfy the pre-defined threshold. Thereby, we can enforce the model to learn the previously uncertain samples in this new certain space, as shown in Fig. 3. Following the previous cat example, if the top-1 class is tabby cat, our method will scan scores of all other classes, and remove confusion ones (e.g., tiger cat and siamese cat) to construct a confident shrunk space, where the model is sure that the image is a tabby cat, rather than a table. Since we do not ask the model to discriminate among several top classes, it will avoid suffering from the noise when it makes a wrong judgment in the original space.

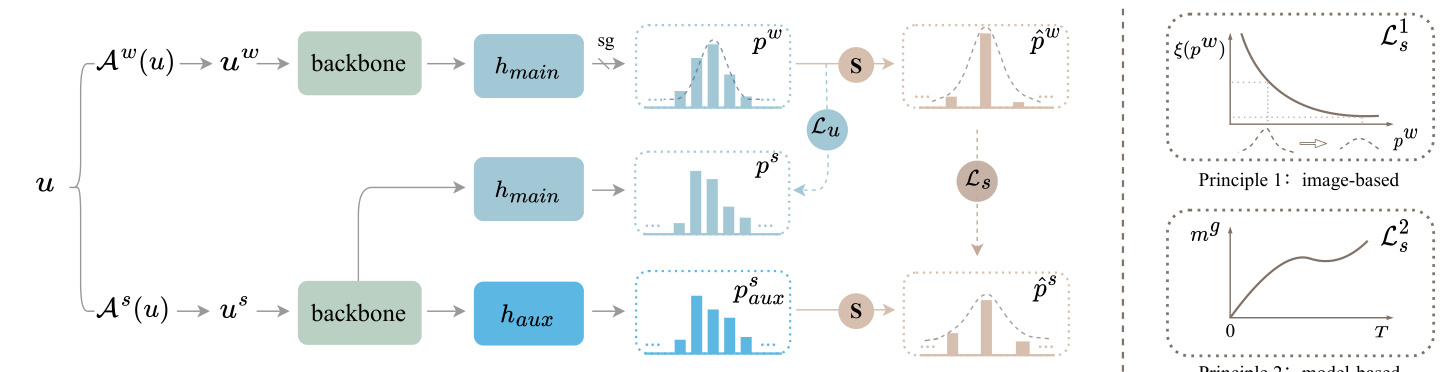

Figure 3: An overview of our proposed ShrinkMatch. Our motivation is to fully leverage the originally uncertain samples. “S” denotes shrinking the class space. The confusion classes for the top-1 class are detected and removed in a fully automatic and instance-adaptive fashion, to construct a shrunk space where the top-1 class is turned certain. $\mathcal{L}_{u}$ is the original certain loss, while $\mathcal{L}_{s}$ calculates the uncertain loss in the shrunk class space. We add an auxiliary head $h_{aux}$ to learn in the new space. On the right, we further reweight $\mathcal{L}_{s}$ based on two principles. Principle 1 (image-based): image predictions with larger reliability are given more importance. Principle 2 (model-based): we track the model state during training for reweighting.

How to seek the shrunk class space? Now, how can we enable our method to automatically seek the optimal shrunk space of an uncertain unlabeled image? Ideally, we hope this seeking process is free from any human prior knowledge, e.g., class relationships, and also does not require any extra hyper-parameters. Considering these, we opt to inherit the pre-defined confidence threshold as the criterion. To be specific, we first sort the predicted logits in descending order to obtain $p^{w}=\{s_{n_{i}}^{w}\}_{i=1}^{C}$ for classes $\{n_{i}\}_{i=1}^{C}$, where $s_{n_{i}}^{w}\geq s_{n_{i+1}}^{w}$. In the shrunk space, we retain the original top-1 class, because it is still the most likely one to be true. Then, we find a set of less likely classes $\{n_{i}\}_{i=K}^{C}$ by enforcing two constraints on $K$:

$$
\xi(\{s_{n_{1}}^{w}\}\cup\{s_{n_{i}}^{w}\}_{i=K}^{C})\geq\tau, \tag{3}
$$

$$
\xi(\{s_{n_{1}}^{w}\}\cup\{s_{n_{i}}^{w}\}_{i=K-1}^{C})<\tau, \tag{4}
$$

where $\xi$ is defined the same as that in Eq. (1), calculating the confidence of the re-assembled logits. The shrunk class space is composed of the re-assembled classes $\{n_{1}\}\cup\{n_{i}\}_{i=K}^{C}$. The two constraints on $K$ not only ensure that the top-1 class is turned certain in the new space (Eq. (3)), but also select the largest space among all candidates (Eq. (4)).
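The search for $K$ can be illustrated with a short plain-Python sketch. It is a minimal illustration rather than the authors' code: the function name `find_shrunk_space` is hypothetical, ranks are 0-indexed (so the loop variable `k` corresponds to $K-1$ in the text), and confusion classes are dropped one at a time from the most likely downward until the top-1 confidence first reaches $\tau$.

```python
import math

def find_shrunk_space(weak_logits, tau=0.95):
    """Return the class indices of the shrunk space, top-1 class first.

    Hypothetical helper: keeps the top-1 class plus the largest possible
    tail of less likely classes such that the re-computed softmax
    confidence of the top-1 class is at least tau.
    """
    # Class indices sorted by descending logit (n_1, n_2, ..., n_C).
    order = sorted(range(len(weak_logits)),
                   key=lambda c: weak_logits[c], reverse=True)
    peak = weak_logits[order[0]]
    # Shifted exponentials; the top-1 entry is exactly 1.0.
    exps = [math.exp(weak_logits[c] - peak) for c in order]
    for k in range(1, len(order)):
        tail = sum(exps[k:])               # mass of the retained tail classes
        if 1.0 / (1.0 + tail) >= tau:      # first k that satisfies Eq. (3)
            return [order[0]] + order[k:]  # smaller k already failed, Eq. (4)
    return [order[0]]                      # only the top-1 class remains

# Softmax probabilities [0.15, 0.5, 0.05, 0.3]: the top-1 class is index 1.
example_logits = [math.log(p) for p in [0.15, 0.5, 0.05, 0.3]]
kept = find_shrunk_space(example_logits, tau=0.9)
```

In this example the top-1 confidence is 0.5 in the full space, about 0.71 after removing the class with probability 0.3, and about 0.91 after also removing the class with probability 0.15, so the shrunk space keeps only the top-1 class and the least likely class. Because removing more classes can only raise the top-1 confidence, the first `k` that passes the threshold gives the largest admissible space.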

How to learn in the shrunk class space? For a weakly augmented uncertain image $x^{w}$, the model is certain about its top-1 class in the shrunk class space. To learn effectively in this space, we follow the popular practice of weak-to-strong consistency regularization. The correspondingly shrunk prediction on the strongly augmented image is enforced to match that on the weakly augmented one. Concretely, for clarity, the re-assembled logits from $p^{w}$ are denoted as $\hat{p}^{w}$, which means $\hat{p}^{w}=\{s_{n_{1}}^{w}\}\cup\{s_{n_{i}}^{w}\}_{i=K}^{C}$. We use the re-assembled classes $\{n_{1}\}\cup\{n_{i}\}_{i=K}^{C}$ in the shrunk space to correspondingly gather the logits $p^{s}$ on $x^{s}$, yielding $\hat{p}^{s}=\{s_{n_{1}}^{s}\}\cup\{s_{n_{i}}^{s}\}_{i=K}^{C}$. Then we can regularize the consistency between the two shrunk distributions $\hat{p}^{s}$ and $\hat{p}^{w}$, similar to that in Eq. (1):

$$
\mathcal{L}_{s}=\frac{1}{B_{u}}\sum_{k=1}^{B_{u}}\mathbb{1}(\xi(p_{k}^{w})<\tau)\cdot\hat{\mathrm{H}}(\hat{p}_{k}^{w},\hat{p}_{k}^{s}), \tag{5}
$$

where the indicator function selects the uncertain samples.
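A minimal sketch of this uncertain loss follows, under assumptions that are not stated in the text: $\hat{\mathrm{H}}$ is taken to be cross entropy against the hard top-1 pseudo label, the kept class indices of each sample are given as a list whose first entry is the top-1 class, and the helper names `shrunk_cross_entropy` and `uncertain_loss` are hypothetical.

```python
import math

def shrunk_cross_entropy(strong_logits, kept_classes):
    """Cross entropy of the strong prediction restricted to the shrunk space.

    Assumption: kept_classes[0] is the top-1 class from the weak view and
    serves as a hard pseudo label.
    """
    gathered = [strong_logits[c] for c in kept_classes]   # shrunk strong logits
    peak = max(gathered)
    log_norm = peak + math.log(sum(math.exp(v - peak) for v in gathered))
    return log_norm - gathered[0]      # -log softmax(gathered)[0]

def uncertain_loss(weak_confidences, strong_logits_batch, kept_batch, tau=0.95):
    """Average shrunk-space loss over the batch, counting only uncertain samples."""
    total = 0.0
    for conf, p_s, kept in zip(weak_confidences, strong_logits_batch, kept_batch):
        if conf < tau:                 # indicator 1(xi(p_w) < tau)
            total += shrunk_cross_entropy(p_s, kept)
    return total / len(weak_confidences)
```

Because the softmax is normalized only over the kept classes, a large strong-view logit on a removed confusion class does not affect the loss at all, which is the intended effect of shrinking the space.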

Empirically, we observe that if we use the original linear head $h_{main}$ to learn this auxiliary supervision, the confidence of our model increases aggressively, and most noisy unlabeled samples are blindly judged as certain ones. We conjecture that this is because $\mathcal{L}_{s}$ strengthens the weights of the classes that are frequently uncertain, so these classes are incorrectly turned certain. With this in mind, our solution is simple. We adopt an auxiliary MLP head $h_{aux}$ that shares the backbone with $h_{main}$ to deal with this auxiliary optimization target, as shown in Fig. 3. So $\hat{p}^{s}$ is actually gathered from the predictions of $h_{aux}$ ($\hat{p}^{w}$ is still from $h_{main}$). This modification enables our feature extractor to acquire more discriminative representations, and meanwhile does not affect the predictions of the main head. Note that $h_{aux}$ is only applied for training, bringing no burden to the test stage.

3.3. Reweighting the Uncertain Loss

Despite the effectiveness of the above uncertain loss, there still exist two main drawbacks. (1) On the one hand, it overlooks the varied reliability of the top-1 class among different uncertain images. For example, suppose two uncertain images $u_{1}$ and $u_{2}$ have softmax predictions [0.8, 0.1, 0.1] and [0.5, 0.3, 0.2] in the original space. They should not be treated equally in the shrunk space: the top-1 class of $u_{1}$, with a confidence of 0.8, is more likely to be true than that of $u_{2}$, and thereby should be given more attention. (2) On the other hand, it ignores the gradually improved model performance as training proceeds. To be concrete, at the very start of training, the predictions are extremely noisy or even random, while at later stages they become more and more reliable. So the uncertain predictions at different training stages should not be treated equally. Therefore, we further design two reweighting principles for the two concerns.

Principle 1: Reweighting with image-based varied reliability. According to the above intuition, we directly reweight the uncertain loss of each uncertain image by its top-1 confidence $\xi(p_{k}^{w})$ in the original class space, which is:

$$
\mathcal{L}_{s}^{1}=\frac{1}{B_{u}}\sum_{k=1}^{B_{u}}\mathbb{1}(\xi(p_{k}^{w})<\tau)\cdot\hat{\mathrm{H}}(\hat{p}_{k}^{w},\hat{p}_{k}^{s})\cdot\xi(p_{k}^{w}). \tag{6}
$$

We do not use the top-1 confidence $\xi(\hat{p}_{k}^{w})$ in the shrunk space as the weight, because after shrinking, this value is very close across different predictions. So generally, $\xi(p_{k}^{w})$ is more discriminative than $\xi(\hat{p}_{k}^{w})$ as the weight.

Principle 2: Reweighting with model-based gradually improved state. One naïve solution is to linearly increase the loss weight of $\mathcal{L}_{s}$ from 0 at the beginning to $\mu$ at iteration $T$, and then keep $\mu$ until the end. However, this practice has two severe disadvantages. First, the two additional hyper-parameters $\mu$ and $T$ are not easy to determine, and could be sensitive. More importantly, the linear scheduling criterion simply assumes that the model state also improves linearly, which does not hold in practice. Thus, we here present a more promising reweighting principle that is free from any extra hyper-parameters. It adjusts the loss weight according to the model state in a fully adaptive fashion. To be specific, we use the certain ratio of the unlabeled set as an indicator of the model state. The certain ratio is tracked at each iteration and accumulated globally in an exponential moving average (EMA) manner. Formally, the certain ratio in a single mini-batch is given by:

$$
m=\frac{1}{B_{u}}\sum_{k=1}^{B_{u}}\mathbb{1}(\xi(p_{k}^{w})\geq\tau). \tag{7}
$$

The global certain ratio $m^{g}$ is initialized as 0, and accumulated at each training iteration by:

$$
m^{g}\gets\gamma\cdot m^{g}+(1-\gamma)\cdot m, \tag{8}
$$

where $\gamma$ is the momentum coefficient. It is a hyper-parameter already defined in FixMatch (baseline), where it is used to update the teacher parameters for final evaluation.
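The two update rules above amount to a running average of the fraction of certain samples. A minimal sketch, with hypothetical function names and with $\gamma$ passed explicitly because its value is not given in this section:

```python
def certain_ratio(weak_confidences, tau=0.95):
    """Fraction of samples in the mini-batch whose confidence reaches tau."""
    certain = sum(1 for conf in weak_confidences if conf >= tau)
    return certain / len(weak_confidences)

def update_global_ratio(m_g, m, gamma):
    """One EMA step: blend the running ratio m_g with the batch ratio m."""
    return gamma * m_g + (1.0 - gamma) * m

# Toy run: the global ratio starts at 0 and moves toward the batch ratio.
m_g = 0.0
for _ in range(3):
    m = certain_ratio([0.96, 0.5, 0.99, 0.7], tau=0.95)   # m == 0.5
    m_g = update_global_ratio(m_g, m, gamma=0.9)
```

With a momentum close to 1, $m^{g}$ stays near 0 for many early iterations even if a single batch happens to look confident, which is what suppresses the uncertain loss while the predictions are still noisy.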

Obviously, $m^{g}$ falls between 0 and 1. And it will approximately increase from 0 to a nearly saturated value. Then, the reweighted uncertain loss is given by:

$$
\mathcal{L}_{s}^{2}=m^{g}\cdot\frac{1}{B_{u}}\sum_{k=1}^{B_{u}}\mathbb{1}(\xi(p_{k}^{w})<\tau)\cdot\hat{\mathrm{H}}(\hat{p}_{k}^{w},\hat{p}_{k}^{s}). \tag{9}
$$

Integrating the above two intuitions and principles, the final reweighted uncertain loss will be:

$$
\mathcal{L}_{s}=m^{g}\cdot\frac{1}{B_{u}}\sum_{k=1}^{B_{u}}\mathbb{1}(\xi(p_{k}^{w})<\tau)\cdot\hat{\mathrm{H}}(\hat{p}_{k}^{w},\hat{p}_{k}^{s})\cdot\xi(p_{k}^{w}). \tag{10}
$$
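To make the combined weighting concrete, the sketch below applies both principles to per-sample losses that are assumed to be already computed in the shrunk space. The function name `reweighted_uncertain_loss` and its argument layout are hypothetical.

```python
def reweighted_uncertain_loss(weak_confidences, shrunk_losses, m_g, tau=0.95):
    """Uncertain loss with both weights applied.

    weak_confidences: top-1 confidence of each sample in the ORIGINAL space.
    shrunk_losses:    per-sample consistency loss in the shrunk space.
    m_g:              global certain ratio tracked by EMA (model-based weight).
    """
    total = 0.0
    for conf, loss in zip(weak_confidences, shrunk_losses):
        if conf < tau:                  # only uncertain samples contribute
            total += conf * loss        # Principle 1: image-based weight
    return m_g * total / len(weak_confidences)   # Principle 2: model-based weight

# Two uncertain samples (0.8 and 0.5) and one certain sample (0.97).
value = reweighted_uncertain_loss([0.8, 0.5, 0.97], [1.0, 2.0, 3.0], m_g=0.5)
```

Here the sample with confidence 0.8 keeps 80% of its loss while the one with confidence 0.5 keeps only half, and the whole term is further scaled by the current global certain ratio. The certain sample is excluded because it is already handled by the certain loss.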

3.4. Summary

To summarize, the final loss in a mini-batch is a combination of the supervised loss ($\mathcal{L}_{x}$), the certain loss ($\mathcal{L}_{u}$, Eq. (1)), and the uncertain loss in the shrunk class space ($\mathcal{L}_{s}$, Eq. (10)):

$$
\mathcal{L}=\mathcal{L}_{x}+\lambda_{u}\cdot(\mathcal{L}_{u}+\mathcal{L}_{s}). \tag{11}
$$

We do not carefully tune the fusion weight between $\mathcal{L}_{u}$ and $\mathcal{L}_{s}$, but use 1:1 by default to avoid introducing extra hyper-parameters.

3.5. Discussions

Our uncertain loss in the shrunk space owns two properties: informative a