LayoutDiffusion: Controllable Diffusion Model for Layout-to-image Generation

LayoutDiffusion: 面向布局到图像生成的可控扩散模型

(a) layout-guided vs. text-guided image generation for diffusion model

(a) 扩散模型的布局引导与文本引导图像生成

(b) Image-Layout Fusion in a unified space

(b) 统一空间中的图像-布局融合

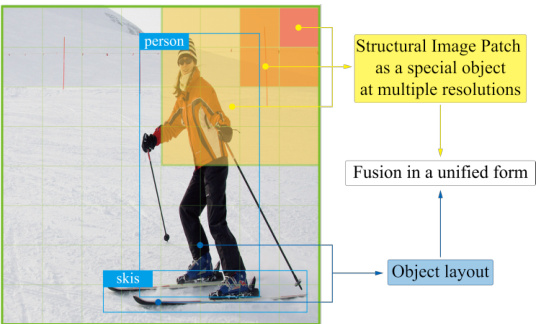

Figure 1. Compared to text, a layout allows diffusion models to obtain more control over the objects while maintaining high quality. Unlike the prevailing methods, we propose a diffusion model named LayoutDiffusion for layout-to-image generation. We transform the difficult multimodal fusion of image and layout into a unified form by constructing structural image patches with region information and regarding the patched image as a special layout.

图 1: 与纯文本相比,布局使扩散模型能在保持高质量的同时获得更精准的对象控制。不同于主流方法,我们提出名为LayoutDiffusion的扩散模型用于布局到图像生成。通过构建带有区域信息的结构化图像块,并将拼接后的图像视为特殊布局,我们将图像与布局的多模态融合难题转化为统一形式。

Abstract

摘要

Recently, diffusion models have achieved great success in image synthesis. However, in layout-to-image generation, where an image often contains a complex scene of multiple objects, exerting strong control over both the global layout map and each detailed object remains challenging. In this paper, we propose a diffusion model named LayoutDiffusion that achieves higher generation quality and greater controllability than previous works. To overcome the difficult multimodal fusion of image and layout, we propose to construct a structural image patch with region information and transform the patched image into a special layout, which is then fused with the normal layout in a unified form. Moreover, the Layout Fusion Module (LFM) and Object-aware Cross Attention (OaCA) are proposed to model the relationships among multiple objects; both are designed to be object-aware and position-sensitive, allowing precise control of spatially related information. Extensive experiments show that LayoutDiffusion outperforms the previous SOTA methods on FID and CAS by relatively 46.35% and 26.70% on COCO-stuff, and by 44.29% and 41.82% on VG. Code is available at https://github.com/ZGCTroy/LayoutDiffusion.

最近,扩散模型 (diffusion models) 在图像合成领域取得了巨大成功。然而,当涉及包含多个对象的复杂场景的布局到图像生成 (layout-to-image generation) 时,如何同时精确控制全局布局图和每个细节对象仍是一项具有挑战性的任务。本文提出了一种名为 LayoutDiffusion 的扩散模型,其生成质量和控制能力均优于先前工作。为解决图像与布局的多模态融合难题,我们提出构建具有区域信息的结构化图像块 (structural image patch),并将分块图像转换为特殊布局形式,从而实现与常规布局的统一融合。此外,本文提出的布局融合模块 (Layout Fusion Module, LFM) 和对象感知交叉注意力 (Object-aware Cross Attention, OaCA) 能够建模多对象间关系,其设计具备对象感知和位置敏感性,可精确控制空间相关信息。大量实验表明,我们的 LayoutDiffusion 在 COCO-stuff 和 VG 数据集上分别以 46.35%、26.70% 和 44.29%、41.82% 的相对优势超越先前 SOTA 方法 (FID, CAS 指标)。代码已开源:https://github.com/ZGCTroy/LayoutDiffusion。

1. Introduction

1. 引言

Recently, diffusion models have achieved encouraging progress in conditional image generation, especially in text-to-image generation, e.g., GLIDE [28], Imagen [36], and Stable Diffusion [35]. However, text-guided diffusion models may still fail in the following situations. As shown in Fig. 1 (a), when generating a complex image with multiple objects, it is hard to design a prompt that is both appropriate and comprehensive. Even with well-designed prompts, state-of-the-art text-guided diffusion models [28, 35, 36] still suffer from problems such as missing objects and objects generated with incorrect positions, shapes, or categories.

最近,扩散模型在条件图像生成领域取得了令人鼓舞的进展,尤其是在文本到图像生成方面,如 GLIDE [28]、Imagen [36] 和 Stable Diffusion [35]。然而,文本引导的扩散模型在以下情况下仍可能失败。如图 1 (a) 所示,当试图生成包含多个对象的复杂图像时,很难设计出恰当且全面的提示词。即使输入精心设计的提示词,在最先进的文本引导扩散模型 [28, 35, 36] 中仍会出现对象缺失、对象位置/形状/类别生成错误等问题。

This is mainly due to the ambiguity of text and its weakness in precisely expressing positions in image space [6, 15, 22, 43–45]. Fortunately, this is not a problem when a coarse layout, i.e., a set of objects annotated with bounding boxes (bbox) and object categories, is used as guidance. With both spatial and high-level semantic information, the diffusion model can obtain more powerful controllability while maintaining high quality.

这主要源于文本的模糊性及其在精确表达图像空间位置方面的不足 [6, 15, 22, 43-45]。幸运的是,使用粗粒度布局作为引导时不存在此问题——布局是一组带有边界框 (bbox) 和物体类别标注的对象。凭借空间信息与高层语义信息的结合,扩散模型能在保持高质量的同时获得更强的控制能力。

However, early studies [2, 16, 47, 51] on layout-to-image generation are almost exclusively based on generative adversarial networks (GANs) and often suffer from unstable convergence [1] and mode collapse [31]. Despite the advantages of diffusion models in easy training [11] and significant quality improvements [8], few studies have applied diffusion models to the layout-to-image generation task. To our knowledge, only LDM [35] supports layout conditions and has shown encouraging progress in this field.

然而,早期关于布局到图像生成的研究 [2, 16, 47, 51] 几乎仅限于生成对抗网络 (GANs),并且经常面临收敛不稳定 [1] 和模式崩溃 [31] 的问题。尽管扩散模型在训练简便 [11] 和质量显著提升 [8] 方面具有优势,但很少有研究考虑将扩散模型应用于布局到图像生成任务。据我们所知,只有 LDM [35] 支持布局条件,并在该领域展现了令人鼓舞的进展。

In this paper, unlike LDM, which applies simple multimodal fusion methods (e.g., cross attention) or direct input concatenation to all conditional inputs, we specifically design the fusion mechanism between layout and image. Moreover, instead of conditioning only in the second stage as LDM does, we propose an end-to-end one-stage model that considers the condition throughout the whole process, which may help mitigate information loss in tasks that require fine-grained accuracy in pixel space [35]. Fusing image and layout is a difficult multimodal fusion problem. Compared with text, a layout imposes more restrictions on the position, size, and category of objects. This requires higher controllability from the model and often reduces the naturalness and diversity of the generated images. Furthermore, the layout is more sensitive to each token: losing the information of a single layout token directly leads to missing objects.

本文与LDM采用简单的多模态融合方法(如交叉注意力)或对所有条件输入直接拼接不同,我们旨在专门设计布局与图像间的融合机制。此外,不同于LDM仅在第二阶段施加条件,我们提出了一个端到端的单阶段模型,该模型在整个生成过程中都考虑条件约束,这可能有助于缓解像素空间需要精细准确度的任务中的信息损失[35]。图像与布局的融合是一个困难的多模态融合问题。相比文本与图像的融合,布局对物体的位置、尺寸和类别有更多限制。这需要模型具备更强的控制能力,同时往往会导致生成图像的自然性和多样性下降。此外,布局对每个token更为敏感,布局token的缺失会直接导致物体丢失。

To address these problems, we propose treating the patched image and the input layout in a unified form. Specifically, we construct structural image patches at multiple resolutions by adding the concept of a region, which contains position and size information. As a result, each image patch is transformed into a special type of object, and the entire patched image can be regarded as a layout. The difficult problem of multimodal fusion between image and layout is thereby transformed into a simple fusion in a unified form within the same spatial space of the image. We name our model LayoutDiffusion, a layout-conditional diffusion model with a Layout Fusion Module (LFM), an Object-aware Cross Attention mechanism (OaCA), and corresponding classifier-free training and sampling schemes. In detail, LFM fuses the information of each object and models the relationships among multiple objects, providing a latent representation of the entire layout. To make the model pay more attention to object-related information, we propose an object-aware fusion module named OaCA, which computes cross attention between image patch features and the layout in a unified coordinate space by representing the positions of both as bounding boxes. To further improve the user experience of LayoutDiffusion, we also make several optimizations to the speed of the classifier-free sampling process; with only 25 sampling iterations, LayoutDiffusion significantly outperforms SOTA models.

为解决上述问题,我们提出以统一形式处理分块图像与输入布局。具体而言,通过引入包含位置和尺寸信息的区域概念,我们在多分辨率下构建结构化图像块。这使得每个图像块被转化为特殊类型的对象,整个分块图像也将被视为一种布局。最终,图像与布局间的困难多模态融合问题,将转化为同一图像空间内统一形式的简单融合。我们将模型命名为LayoutDiffusion,这是一个包含布局融合模块(LFM)、对象感知交叉注意力机制(OaCA)以及对应无分类器训练与采样方案的布局条件扩散模型。具体来说,LFM融合各对象信息并建模多对象关系,提供整个布局的潜在表征。为使模型更关注对象相关信息,我们提出名为OaCA的对象感知融合模块。通过将图像块特征与布局的位置表示为边界框,在统一坐标空间内进行交叉注意力计算。为进一步提升LayoutDiffusion的用户体验,我们对无分类器采样过程的速度进行了多项优化,在25次迭代中即可显著超越SOTA模型。

Experiments are conducted on COCO-stuff [5] and Visual Genome (VG) [21]. Metrics covering quality, diversity, and controllability show that LayoutDiffusion significantly outperforms both state-of-the-art GAN-based and diffusion-based methods.

实验在COCO-stuff [5] 和 Visual Genome (VG) [21] 数据集上进行。生成质量、多样性和可控性等多维度指标均表明,LayoutDiffusion显著优于当前最先进的基于GAN和扩散模型的方法。

Our main contributions are listed below.

我们的主要贡献如下。

2. Related work

2. 相关工作

The related works are mainly from layout-to-image generation and diffusion models.

相关工作主要涉及布局到图像生成和扩散模型。

Layout-to-Image Generation. Before layout-to-image generation was formally proposed, layouts were usually used as a complementary feature [17, 34, 49] or as an intermediate representation in text-to-image [13] and scene-to-image generation [16]. Layout2Im [56] was the first to generate images directly from a layout, which it defines as a set of objects annotated with categories and bboxes. Models that work well with fine-grained pixel-level semantic maps can also be easily adapted to this setting [14, 30, 52]. Inspired by StyleGAN [18], LostGAN-v1 [46] and LostGAN-v2 [47] use a reconfigurable layout to obtain better control over individual objects. For interactive image synthesis, PLGAN [51] employs panoptic theory [20], constructing stuff and instance layouts in separate branches, and proposes Instance- and Stuff-Aware Normalization to fuse them into panoptic layouts. Despite encouraging progress in this field, almost all approaches are limited to generative adversarial networks (GANs) and may suffer from unstable convergence [1] and mode collapse [31]. As a multimodal diffusion model, LDM [35] supports coarse layout conditions and has shown great potential in layout-guided image generation.

布局到图像生成。在布局到图像生成被正式提出之前,布局通常被用作补充特征[17, 34, 49]或文本到图像[13]、场景到图像生成[16]的中间表示。首个直接从布局生成图像的工作出现在Layout2Im[56]中,其将布局定义为带有类别和边界框标注的对象集合。在像素级别能良好处理细粒度语义图的模型也能轻松适配此设定[14, 30, 52]。受StyleGAN[18]启发,LostGAN-v1[46]和LostGAN-v2[47]采用可重构布局实现对单个对象的更好控制。针对交互式图像合成,PLGAN[51]通过将场景布局和实例布局构建为独立分支,运用全景理论[20]并提出实例与场景感知归一化方法来实现全景布局融合。尽管该领域取得显著进展,几乎所有方法都局限于生成对抗网络(GAN),存在收敛不稳定[1]和模式坍塌[31]问题。作为多模态扩散模型,LDM[35]支持粗粒度布局条件输入,在布局引导的图像生成中展现出巨大潜力。

Diffusion Model. Diffusion models [3, 11, 29, 35, 39, 41, 42, 53] are recognized as a promising family of generative models that achieve state-of-the-art sample quality on a variety of image generation benchmarks [7, 50, 54], including class-conditional image generation [8, 57], text-to-image generation [28, 35, 36], and image-to-image translation [19, 26, 37]. Classifier guidance was introduced in ADM-G [8] to condition diffusion models on class labels: during sampling, the gradient of a classifier trained on noised images is added to the image. Ho et al. [12] then proposed a classifier-free training and sampling strategy that interpolates between the predictions of a diffusion model with and without the condition input. To accelerate training and sampling, LDM proposed first compressing the image to a lower resolution and then performing denoising training in the latent space.

扩散模型 (Diffusion Model) [3, 11, 29, 35, 39, 41, 42, 53] 正被视为一类极具前景的生成式模型,其在多种图像生成基准测试中展现出最先进的样本质量 [7,50,54],包括类别条件图像生成 [8,57]、文本到图像生成 [28,35,36] 以及图像到图像转换 [19, 26, 37]。ADM-G [8] 引入了分类器引导机制,使扩散模型能够基于类别标签进行条件生成。采样过程中可将经过噪声图像训练的分类器梯度叠加至图像。随后 Ho 等人 [12] 提出了一种无分类器训练与采样策略,通过对有条件输入和无条件输入的扩散模型预测结果进行插值实现。为加速训练与采样过程,LDM 提出先将图像压缩至较低分辨率,再在潜在空间中进行去噪训练。

3. Method

3. 方法

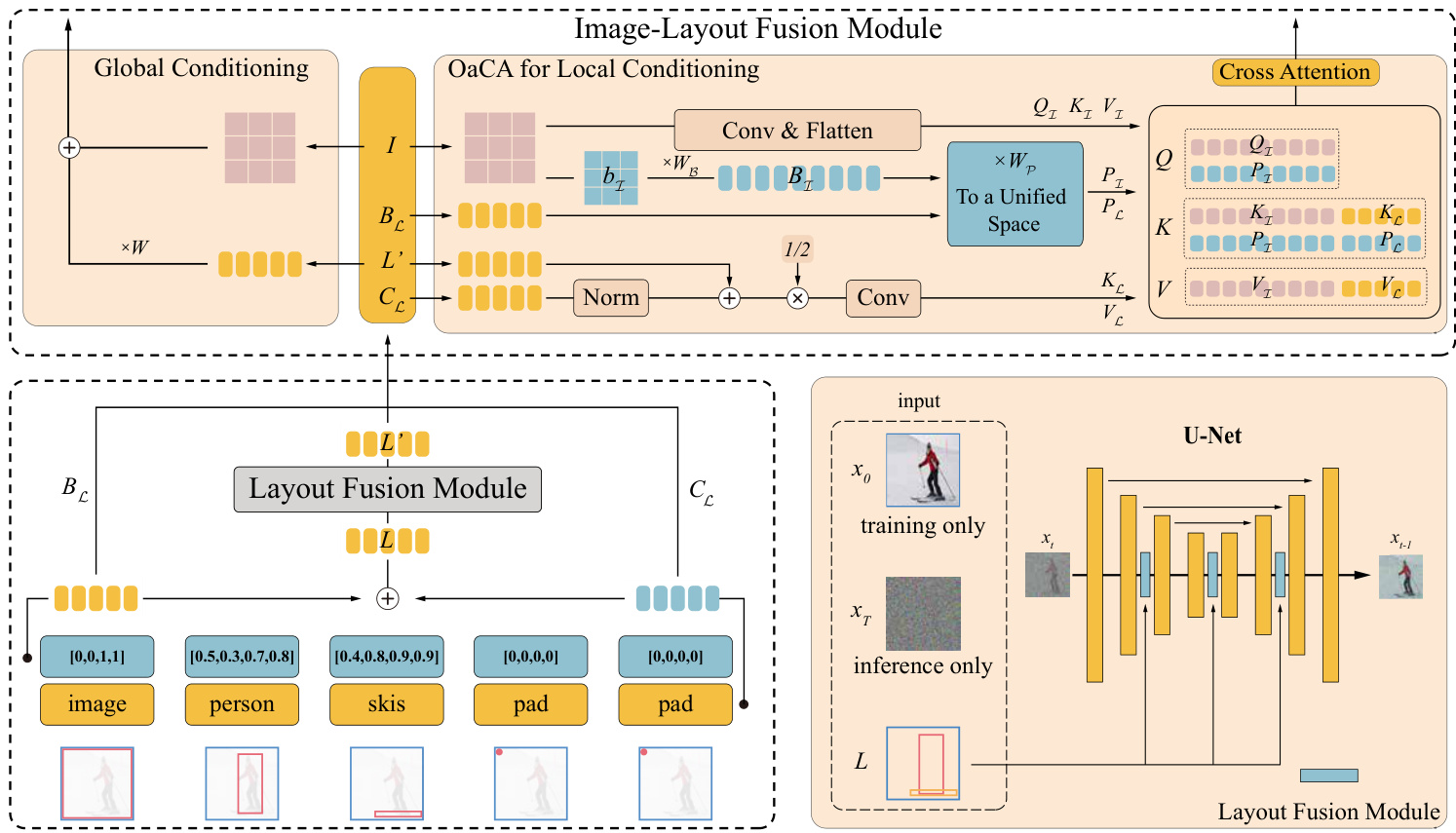

In this section, we present LayoutDiffusion, as shown in Fig. 2. The whole framework consists mainly of four parts: (a) layout embedding, which preprocesses the layout input; (b) the layout fusion module, which encourages more interaction between the objects of the layout; (c) the image-layout fusion module, which constructs structural image patches and an object-aware cross attention specifically designed for layout and image fusion; and (d) the layout-conditional diffusion model with its training and accelerated sampling methods.

在本节中,我们提出 LayoutDiffusion,如图 2 所示。整个框架主要包含四个部分: (a) 布局嵌入 (layout embedding) ,用于预处理布局输入; (b) 布局融合模块 (layout fusion module) ,用于增强布局中对象间的交互; (c) 图像-布局融合模块 (image-layout fusion module) ,用于构建结构化图像块 (structural image patch) 和对象感知交叉注意力机制 (object-aware cross attention) ,该模块专为布局与图像融合而设计; (d) 布局条件扩散模型 (layout-conditional diffusion model) ,包含训练方法和加速采样方法。

3.1. Layout Embedding

3.1. 布局嵌入

A layout $l=\{o_{1},o_{2},\cdots,o_{n}\}$ is a set of $n$ objects. Each object $o_{i}$ is represented as $o_{i}=\{b_{i},c_{i}\}$, where $b_{i}=(x_{0}^{i},y_{0}^{i},x_{1}^{i},y_{1}^{i})\in[0,1]^{4}$ denotes a bounding box (bbox) and $c_{i}\in[0,\mathcal{C}+1]$ is its category id.

布局 $l=\{o_{1},o_{2},\cdots,o_{n}\}$ 是一组 $n$ 个对象的集合。每个对象 $o_{i}$ 表示为 $o_{i}=\{b_{i},c_{i}\}$,其中 $b_{i}=(x_{0}^{i},y_{0}^{i},x_{1}^{i},y_{1}^{i})\in[0,1]^{4}$ 表示边界框 (bbox),而 $c_{i}\in[0,\mathcal{C}+1]$ 是其类别 ID。

To support variable-length input sequences, we pad $l$ to a fixed length $k$ by adding one $o_{l}$ at the front and padding objects $o_{p}$ at the end, where $o_{l}$ represents the entire layout and $o_{p}$ represents no object. Specifically, $b_{l}=(0,0,1,1)$, $c_{l}=0$ denotes an object that covers the whole image, and $b_{p}=(0,0,0,0)$, $c_{p}=\mathcal{C}+1$ denotes an empty object that has no shape or does not appear in the image.

为了支持可变长度序列的输入,我们需要将 $l$ 填充到固定长度 $k$ ,方法是在前面添加一个 $o_{l}$ ,并在末尾添加一些填充 $o_{p}$ ,其中 $o_{l}$ 表示整个布局, $o_{p}$ 表示无对象。具体来说, $b_{l}=(0,0,1,1)$ , $c_{l}=0$ 表示一个覆盖整个图像的对象,而 $b_{p}=(0,0,0,0)$ , $c_{p}=\mathcal{C}+1$ 表示一个没有形状或未出现在图像中的空对象。
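The padding rule above can be sketched in a few lines of Python. This is a minimal illustration, assuming real category ids lie in $[1,\mathcal{C}]$ so that 0 and $\mathcal{C}+1$ are free for $o_l$ and $o_p$; the helper name `pad_layout` and its `num_classes` argument (standing for $\mathcal{C}$) are hypothetical, not taken from the authors' code.

```python
from typing import List, Tuple

BBox = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in [0, 1]


def pad_layout(boxes: List[BBox], cats: List[int], k: int, num_classes: int):
    """Pad a layout of n objects to length k (hypothetical helper).

    Prepends the whole-image object o_l (bbox (0, 0, 1, 1), category 0) and
    appends empty objects o_p (bbox (0, 0, 0, 0), category num_classes + 1).
    """
    if len(boxes) != len(cats):
        raise ValueError("boxes and cats must have the same length")
    if len(boxes) + 1 > k:
        raise ValueError("layout has too many objects for length k")
    n_pad = k - 1 - len(boxes)
    padded_boxes = [(0.0, 0.0, 1.0, 1.0)] + list(boxes) + [(0.0, 0.0, 0.0, 0.0)] * n_pad
    padded_cats = [0] + list(cats) + [num_classes + 1] * n_pad
    return padded_boxes, padded_cats
```

For example, `pad_layout([(0.1, 0.2, 0.5, 0.6)], [3], k=4, num_classes=180)` yields the categories `[0, 3, 181, 181]`.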

After padding, we obtain a padded layout $l=\{o_{l},o_{1},\cdots,o_{n},o_{p},\cdots,o_{p}\}$ consisting of $k$ objects, each with its own position, size, and category. The layout $l$ is then transformed into a layout embedding $L=\{O_{1},O_{2},\cdots,O_{k}\}\in\mathbb{R}^{k\times d_{\mathcal{L}}}$ using the projection matrices $W_{\mathcal{B}}\in\mathbb{R}^{4\times d_{\mathcal{L}}}$ and $W_{\mathcal{C}}\in\mathbb{R}^{1\times d_{\mathcal{L}}}$:

填充处理后,我们得到包含 $k$ 个对象的填充布局 $l=\{o_{l},o_{1},\cdots,o_{n},o_{p},\cdots,o_{p}\}$,每个对象都有其特定的位置、大小和类别。随后,通过投影矩阵 $W_{\mathcal{B}}\in\mathbb{R}^{4\times d_{\mathcal{L}}}$ 和 $W_{\mathcal{C}}\in\mathbb{R}^{1\times d_{\mathcal{L}}}$,布局 $l$ 被转换为布局嵌入 $L=\{O_{1},O_{2},\cdots,O_{k}\}\in\mathbb{R}^{k\times d_{\mathcal{L}}}$,转换公式如下:

$$
\begin{aligned}
L&=B_{\mathcal{L}}+C_{\mathcal{L}}\\
B_{\mathcal{L}}&=bW_{\mathcal{B}}\\
C_{\mathcal{L}}&=cW_{\mathcal{C}}
\end{aligned}
$$


where $B_{\mathcal{L}},C_{\mathcal{L}}\in\mathbb{R}^{k\times d_{\mathcal{L}}}$ are the bounding box embedding and the category embedding of the layout $l$, respectively. $L$ is thus defined as the sum of $B_{\mathcal{L}}$ and $C_{\mathcal{L}}$ so that it contains both the content and the positional information of the entire layout, and $d_{\mathcal{L}}$ is the dimension of the layout embedding.

其中 $B_{\mathcal{L}},C_{\mathcal{L}}\in\mathbb{R}^{k\times d_{\mathcal{L}}}$ 分别是布局 $l$ 的边界框嵌入和类别嵌入。因此,$L$ 被定义为 $B_{\mathcal{L}}$ 与 $C_{\mathcal{L}}$ 之和,以包含整个布局的内容和位置信息,$d_{\mathcal{L}}$ 是布局嵌入的维度。
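A minimal NumPy sketch of $L=B_{\mathcal{L}}+C_{\mathcal{L}}$ follows. One assumption deviates in form from the text: the text states $W_{\mathcal{C}}\in\mathbb{R}^{1\times d_{\mathcal{L}}}$, whereas this sketch uses a learned lookup table with $\mathcal{C}+2$ rows (equivalent to multiplying a one-hot encoding of $c$ by a $(\mathcal{C}+2)\times d_{\mathcal{L}}$ matrix), a common way to embed categorical ids. The function name `layout_embedding` is hypothetical.

```python
import numpy as np


def layout_embedding(boxes, cats, W_B, W_C):
    """Compute L = B_L + C_L for a padded layout (illustrative sketch).

    boxes: (k, 4) bbox coordinates in [0, 1]
    cats:  (k,) integer category ids in [0, C + 1]
    W_B:   (4, d_L) bbox projection matrix
    W_C:   (C + 2, d_L) category lookup table (assumption, see lead-in)
    """
    b = np.asarray(boxes, dtype=np.float64)
    c = np.asarray(cats, dtype=np.int64)
    B_L = b @ W_B        # (k, d_L) bounding-box embedding
    C_L = W_C[c]         # (k, d_L) category embedding, row c of the table
    return B_L + C_L, B_L, C_L
```

Note that the empty box $b_p=(0,0,0,0)$ projects to a zero bbox embedding, so a padding object is described only by its category embedding.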

3.2. Layout Fusion Module

3.2. 布局融合模块

At this stage, each object in the layout is embedded independently of the other objects. This limits the model's understanding of the whole scene, especially when multiple objects overlap and occlude each other. Therefore, to encourage interaction among the objects of the layout and to better understand the entire layout before the layout embedding is used for conditioning, we propose the Layout Fusion Module (LFM), a transformer encoder that fuses the layout embedding with multiple layers of self-attention. It can be denoted as

目前,布局中的每个对象与其他对象之间没有关联。这导致对整个场景的理解不足,尤其在多个对象相互重叠和遮挡时更为明显。因此,为促进布局中多个对象间的更多交互,从而在输入布局嵌入前更好地理解整体布局,我们提出了布局融合模块 (Layout Fusion Module, LFM) ——一种通过多层自注意力机制融合布局嵌入的 Transformer 编码器,可表示为

$$
L^{\prime}=\operatorname{LFM}(L)
$$


where the output $L^{\prime}=\{O_{1}^{\prime},O_{2}^{\prime},\cdots,O_{k}^{\prime}\}\in\mathbb{R}^{k\times d_{\mathcal{L}}}$ is the fused layout embedding.

其中输出 $L^{\prime}=\{O_{1}^{\prime},O_{2}^{\prime},\cdots,O_{k}^{\prime}\}\in\mathbb{R}^{k\times d_{\mathcal{L}}}$ 为融合布局嵌入。
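To illustrate what a transformer-encoder LFM computes, here is a simplified single-head, pre-norm encoder layer in NumPy. It is a sketch under assumptions, not the authors' architecture: the number of heads, the normalization placement (without learned gains), and the MLP width are illustrative choices, and `lfm` / `encoder_layer` are hypothetical names.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def layer_norm(x, eps=1e-5):
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def encoder_layer(L, Wq, Wk, Wv, Wo, W1, W2):
    """One pre-norm encoder layer: self-attention over objects, then an MLP."""
    h = layer_norm(L)
    q, k, v = h @ Wq, h @ Wk, h @ Wv
    attn = softmax(q @ k.T / np.sqrt(k.shape[-1]))  # (k, k): each object attends to all objects
    L = L + (attn @ v) @ Wo                         # residual connection
    h = layer_norm(L)
    L = L + np.maximum(h @ W1, 0.0) @ W2            # position-wise ReLU MLP with residual
    return L


def lfm(L, layers):
    """L' = LFM(L): apply a stack of encoder layers to the (k, d_L) layout embedding."""
    for params in layers:
        L = encoder_layer(L, *params)
    return L
```

Because this sketch adds no sequence-position encoding, it is permutation-equivariant: reordering the objects only reorders the outputs, and each object's location enters solely through its bbox embedding.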

3.3. Image-Layout Fusion Module

3.3. 图像-布局融合模块

Structural Image Patch. Fusing image and layout is a difficult multimodal fusion problem, and one of its most important parts is the fusion of position and size. However, an ordinary image patch carries only the semantic information of the feature and lacks spatial information. Therefore, we construct structural image patches by adding the concept of a region, which contains position and size information.

结构化图像块 (Structural Image Patch)。图像与布局的融合是一个困难的多模态融合问题,其中最关键的部分在于位置和尺寸信息的融合。然而传统图像块仅包含特征的语义信息,缺乏空间信息。为此,我们通过引入包含位置与尺寸信息的区域概念,构建了结构化图像块。

Specifically, let $I\in\mathbb{R}^{h\times w\times d_{\mathcal{I}}}$ denote the feature map of an entire image with height $h$, width $w$, and $d_{\mathcal{I}}$ channels. We denote by $I_{u,v}$ the patch in the $u^{\mathrm{th}}$ row and $v^{\mathrm{th}}$ column of $I$; its bounding box, i.e., its region information, $b_{\mathcal{I}_{u,v}}$, is defined as

具体来说,令 $I\in\mathbb{R}^{h\times w\times d_{\mathcal{I}}}$ 表示整个图像的特征图,其高度为 $h$,宽度为 $w$,通道数为 $d_{\mathcal{I}}$。我们将 $I_{u,v}$ 定义为 $I$ 第 $u$ 行、第 $v$ 列的图像块,其边界框 (即区域信息) $b_{\mathcal{I}_{u,v}}$ 定义如下:

$$
b_{\mathcal{I}_{u,v}}=\left(\frac{u}{h},\frac{v}{w},\frac{u+1}{h},\frac{v+1}{w}\right)
$$


The set of bounding boxes of the patched image $I$ is defined as $b_{\mathcal{I}}=\{b_{\mathcal{I}_{0,0}},b_{\mathcal{I}_{0,1}},\cdots,b_{\mathcal{I}_{h-1,w-1}}\}$. As a result, the positional information of both image patches and layout objects is expressed as bounding boxes in the same unified coordinate space, which leads to better fusion of image and layout.

分块图像 $I$ 的边界框集合定义为 $b_{\mathcal{I}}=\{b_{\mathcal{I}_{0,0}},b_{\mathcal{I}_{0,1}},\cdots,b_{\mathcal{I}_{h-1,w-1}}\}$。因此,图像块和布局对象的位置信息都被包含在同一空间中定义的统一边界框内,从而实现图像和布局更好的融合。
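The region information for all patches of an $h\times w$ feature map can be computed in one vectorized step. The sketch below follows the formula for $b_{\mathcal{I}_{u,v}}$ literally ($u$ indexes rows, $v$ indexes columns) and returns the boxes in row-major order; the function name `patch_bboxes` is hypothetical. Calling it once per feature-map resolution (for example $8\times8$, $16\times16$, $32\times32$) gives the multi-resolution boxes.

```python
import numpy as np


def patch_bboxes(h, w):
    """Region information b_I of an h x w feature map, shape (h * w, 4).

    Row r = u * w + v holds (u / h, v / w, (u + 1) / h, (v + 1) / w).
    """
    u, v = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    u = u.reshape(-1).astype(np.float64)
    v = v.reshape(-1).astype(np.float64)
    return np.stack([u / h, v / w, (u + 1) / h, (v + 1) / w], axis=1)
```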

Figure 2. The whole pipeline of LayoutDiffusion. The layout, consisting of bounding boxes $b$ and object categories $c$, is transformed into the embeddings $B_{\mathcal{L}},C_{\mathcal{L}},L$. The Layout Fusion Module then fuses the layout embedding $L$ and outputs the fused layout embedding $L^{\prime}$. Finally, the Image-Layout Fusion Module, which uses direct addition for global conditioning and Object-aware Cross Attention (OaCA) for local conditioning, fuses the layout-related $B_{\mathcal{L}},C_{\mathcal{L}},L^{\prime}$ with the image feature $I$ at multiple resolutions.

图 2: LayoutDiffusion 的整体流程。由边界框 $b$ 和物体类别 $c$ 组成的布局被转换为嵌入 $B_{\mathcal{L}},C_{\mathcal{L}},L$。随后,布局融合模块 (Layout Fusion Module) 将布局嵌入 $L$ 融合,输出融合后的布局嵌入 $L^{\prime}$。最后,图像-布局融合模块 (Image-Layout Fusion Module) 通过直接相加 (direct addition) 实现全局条件控制,并利用物体感知交叉注意力 (Object-aware Cross Attention, OaCA) 实现局部条件控制,将布局相关的 $B_{\mathcal{L}},C_{\mathcal{L}},L^{\prime}$ 与多分辨率下的图像特征 $I$ 进行融合。

Positional Embedding in Unified Space. We define the positional embeddings of the image and the layout, $P_{\mathcal{I}}$ and $P_{\mathcal{L}}$, as follows:

统一空间中的位置嵌入。我们将图像和布局的位置嵌入 $P_{\mathcal{I}}$ 和 $P_{\mathcal{L}}$ 定义如下:

$$
\begin{aligned}
B_{\mathcal{I}}&=b_{\mathcal{I}}W_{\mathcal{B}}\\
P_{\mathcal{I}}&=B_{\mathcal{I}}W_{\mathcal{P}}\\
P_{\mathcal{L}}&=B_{\mathcal{L}}W_{\mathcal{P}}
\end{aligned}
$$


where $W_{\mathcal{B}}\in\mathbb{R}^{4\times d_{\mathcal{L}}}$ is the projection matrix defined in Eq. 2 (Sec. 3.1); it is shared between image and layout and maps bounding box coordinates to $d_{\mathcal{L}}$-dimensional embeddings. $W_{\mathcal{P}}\in\mathbb{R}^{d_{\mathcal{L}}\times d_{\mathcal{I}}}$ is the projection matrix that maps the bounding box embedding $B$ to the positional embedding $P$.

其中 $W_{\mathcal{B}}\in\mathbb{R}^{4\times d_{\mathcal{L}}}$ 是公式 2 (第 3.1 节) 中定义的共享投影矩阵,用于将边界框坐标转换为 $d_{\mathcal{L}}$ 维嵌入。$W_{\mathcal{P}}\in\mathbb{R}^{d_{\mathcal{L}}\times d_{\mathcal{I}}}$ 是将边界框嵌入 $B$ 转换为位置嵌入 $P$ 的投影矩阵。
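The point of sharing $W_{\mathcal{B}}$ is that an image patch and a layout object occupying the same box receive the same positional embedding. A minimal NumPy sketch, assuming $W_{\mathcal{P}}$ has shape $d_{\mathcal{L}}\times d_{\mathcal{I}}$ so that the products are defined; the function name `positional_embeddings` is hypothetical.

```python
import numpy as np


def positional_embeddings(b_I, b_L, W_B, W_P):
    """P_I = (b_I W_B) W_P and P_L = (b_L W_B) W_P.

    b_I: (hw, 4) patch boxes, b_L: (k, 4) layout boxes
    W_B: (4, d_L) shared bbox projection, W_P: (d_L, d_I) assumed shape
    """
    B_I = b_I @ W_B      # patch boxes embedded with the same map as layout boxes
    B_L = b_L @ W_B
    return B_I @ W_P, B_L @ W_P
```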

Pointwise Addition for Global Conditioning. With the help of LFM (Eq. 4), $O_{1}^{\prime}$ can be regarded as the global information of the entire layout, while $O_{i}^{\prime}\,(i\in[2,k])$ is the local embedding of a single object that also accounts for its related objects. One of the simplest ways to condition the image on the layout is to add $O_{1}^{\prime}$, the global information of the layout, directly to the image features at multiple resolutions. Specifically, the conditioning process is defined as

全局条件化的逐点加法。借助式 4 中的 LFM,$O_{1}^{\prime}$ 可视为整个布局的全局信息,而 $O_{i}^{\prime}\,(i\in[2,k])$ 则被视为单个对象及其相关对象的局部信息嵌入。以布局对图像进行条件化的最简单方法之一,是将布局的全局信息 $O_{1}^{\prime}$ 直接加到多分辨率的图像特征上。具体而言,该条件化过程可定义为

$$
I^{\prime}=I+O_{1}^{\prime}W
$$


where $W\in\mathbb{R}^{d_{\mathcal{L}}\times d_{\mathcal{I}}}$ is a projection matrix and $I^{\prime}$ is the image feature conditioned on the global layout embedding. The projected vector $O_{1}^{\prime}W$ is added at every one of the $h\times w$ spatial positions.

其中 $W\in\mathbb{R}^{d_{\mathcal{L}}\times d_{\mathcal{I}}}$ 是投影矩阵,$I^{\prime}$ 为融合布局全局嵌入的图像特征。
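Global conditioning amounts to a single broadcast addition. This sketch assumes $I$ is stored as an $(h, w, d_{\mathcal{I}})$ array and that row 0 of $L^{\prime}$ holds $O_{1}^{\prime}$, the $o_l$ token (NumPy indexes from 0); the function name `global_condition` is hypothetical.

```python
import numpy as np


def global_condition(I, L_fused, W):
    """I' = I + O'_1 W, broadcasting the global layout vector over all positions.

    I:       (h, w, d_I) image feature map
    L_fused: (k, d_L) fused layout embedding L'; row 0 is O'_1
    W:       (d_L, d_I) projection matrix
    """
    g = L_fused[0] @ W   # (d_I,) global layout vector
    return I + g         # NumPy broadcasts g over the h and w axes
```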

Object-aware Cross Attention for Local Conditioning.

面向局部条件化的对象感知交叉注意力

Cross attention has been successfully applied in [28] to condition image features on text: the sequence of image patches is used as the query, and the concatenation of the image patch sequence and the text sequence is used as the key and value. Cross attention is defined as

交叉注意力在[28]中被成功应用于将文本条件融入图像特征,其中图像块的序列作为查询(query),图像块与文本的拼接序列作为键(key)和值(value)。交叉注意力的计算公式定义为

$$
\mathrm{Attention}(Q,K,V)=\operatorname{softmax}\left(\frac{QK^{T}}{\sqrt{d_{k}}}\right)V
$$


where $Q,K,V$ denote the query, key, and value embeddings, respectively, and $d_{k}$ is the dimension of the keys. In the rest of the paper, we use the subscripts $\mathcal{I}$ and $\mathcal{L}$ to denote image patch features and layout features, respectively.

其中 $Q,K,V$ 分别表示查询 (query)、键 (key) 和值 (value) 的嵌入向量,$d_{k}$ 为键的维度。在下文中,我们使用下标 $\mathcal{I}$ 和 $\mathcal{L}$ 分别表示图像块特征和布局特征。
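For reference, the attention formula translates directly to NumPy. Subtracting the row-wise maximum before exponentiating is a standard numerical-stability trick that leaves the softmax unchanged. This is a generic sketch, not code from [28].

```python
import numpy as np


def attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, with the softmax taken over the keys."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)               # (n_q, n_k)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V                            # (n_q, d_v)
```

If all keys are identical, every query attends uniformly, so each output row is the mean of the rows of $V$.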

In text-to-image generation, each token in the text sequence is a word, and the words together constitute the semantics of a sentence. After a transformer encoder, the first token of the text sequence carries rich semantic information that summarizes the whole text, although it may not preserve the meaning of each individual word. Losing the information of a single token, however, is far more harmful for a layout than for text. Each token in the layout sequence is a single object with a specific category, size, and position, so losing the information of one layout token directly leads to a missing or wrong object in the generated image.

在文本到图像生成中,文本序列中的每个token都是一个单词。这些单词的聚合构成了句子的语义。经过transformer编码器处理后,文本序列中的第一个token会成为概括整体文本的强语义信息,但可能无法还原每个单词的语义含义。然而,在布局序列中,单个token的信息丢失问题比文本序列更为严重。布局序列中的每个token都代表具有特定类别、尺寸和位置的独立对象。布局token的信息丢失会直接导致生成图像像素空间中出现缺失或错误的对象。

Therefore, we jointly take into account the location, size, and category of objects and define our object-aware cross attention (OaCA) as

因此,我们综合考虑物体的位置、尺寸和类别融合,并将物体感知交叉注意力 (OaCA) 定义为

$$
\begin{aligned}
Q&=\Psi_{1}(Q_{\mathcal{I}},P_{\mathcal{I}})\\
K&=\Psi_{1}\big(\Psi_{2}(K_{\mathcal{I}},K_{\mathcal{L}}),\Psi_{2}(P_{\mathcal{I}},P_{\mathcal{L}})\big)\\
V&=\Psi_{2}(V_{\mathcal{I}},V_{\mathcal{L}})
\end{aligned}
$$


where $Q\in\mathbb{R}^{hw\times2d_{\mathcal{I}}}$, $K\in\mathbb{R}^{(hw+k)\times2d_{\mathcal{I}}}$, and $V\in\mathbb{R}^{(hw+k)\times d_{\mathcal{I}}}$. $\Psi_{1}$ and $\Psi_{2}$ denote concatenation along the channel dimension and along the sequence dimension, respectively.

其中查询 $Q\in\mathbb{R}^{hw\times2d_{\mathcal{I}}}$、键 $K\in\mathbb{R}^{(hw+k)\times2d_{\mathcal{I}}}$ 和值 $V\in\mathbb{R}^{(hw+k)\times d_{\mathcal{I}}}$。$\Psi_{1}$ 和 $\Psi_{2}$ 分别表示在通道维度和序列长度维度上的拼接操作。

We first construct the key and value of the layout:

我们首先构建布局的键和值:

$$
K_{\mathcal{L}},V_{\mathcal{L}}=\operatorname{Conv}\left(\frac{1}{2}\big(\operatorname{Norm}(C_{\mathcal{L}})+L^{\prime}\big)\right)
$$


where $K_{\mathcal{L}},V_{\mathcal{L}}\in\mathbb{R}^{k\times d_{\mathcal{I}}}$ and Conv denotes a convolution. The key and value embeddings of the layout depend on both the category embedding $C_{\mathcal{L}}$ and the fused layout embedding $L^{\prime}$. $C_{\mathcal{L}}$ focuses on the category information of the layout, whereas $L^{\prime}$ carries comprehensive information about both the object itself and other objects that may be related to it. Averaging $L^{\prime}$ and $C_{\mathcal{L}}$ therefore captures the general information of each object while emphasizing its category.

其中 $K_{\mathcal{L}},V_{\mathcal{L}}\in\mathbb{R}^{k\times d_{\mathcal{I}}}$,Conv 表示卷积运算。布局中键与值的嵌入与类别嵌入 $C_{\mathcal{L}}$ 及融合后的布局嵌入 $L^{\prime}$ 相关。$C_{\mathcal{L}}$ 聚焦于布局的类别信息,而 $L^{\prime}$ 则综合了物体自身及其可能关联对象的整体信息。通过对 $L^{\prime}$ 和 $C_{\mathcal{L}}$ 取平均,既能获取物体的通用信息,又能强化其类别特征。
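The layout key/value construction can be sketched as follows, under two assumptions that the text leaves open: Norm is taken to be a per-token normalization without learned scale and shift, and Conv is modeled as a kernel-size-1 convolution over the $k$ tokens (i.e., a per-token linear map `W_conv`) whose output is split into $K_{\mathcal{L}}$ and $V_{\mathcal{L}}$. All names are hypothetical.

```python
import numpy as np


def normalize(x, eps=1e-5):
    """Per-token normalization without learned affine parameters (assumed form of Norm)."""
    mu = x.mean(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)


def layout_key_value(C_L, L_fused, W_conv):
    """K_L, V_L = Conv(0.5 * (Norm(C_L) + L')).

    C_L, L_fused: (k, d_L); W_conv: (d_L, 2 * d_I), a kernel-size-1 convolution.
    """
    x = 0.5 * (normalize(C_L) + L_fused)  # average of category and fused embeddings
    kv = x @ W_conv                       # (k, 2 * d_I)
    d_I = W_conv.shape[1] // 2
    return kv[:, :d_I], kv[:, d_I:]       # keys, values
```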

We construct the query, key, and value of the image feature as follows:

我们按如下方式构建图像特征的查询(query)、键(key)和值(value):

$$
Q_{\mathcal{I}},K_{\mathcal{I}},V_{\mathcal{I}}=\operatorname{Conv}(\operatorname{Norm}(I))
$$

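Putting the pieces of OaCA together for one feature map gives the sketch below. It assumes the image tensors are already flattened to $hw$ rows, that all inputs share the channel width $d_{\mathcal{I}}$, and that a single attention head is used; these are illustrative choices, and `oaca` is a hypothetical name.

```python
import numpy as np


def softmax_rows(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def oaca(Q_I, K_I, V_I, P_I, K_L, V_L, P_L):
    """Object-aware cross attention for one (flattened) feature map.

    Image tensors: (hw, d_I); layout tensors: (k, d_I).
    Psi_1 = concatenation along channels (axis=1); Psi_2 = along the sequence (axis=0).
    """
    Q = np.concatenate([Q_I, P_I], axis=1)                          # (hw, 2 d_I)
    K = np.concatenate([np.concatenate([K_I, K_L], axis=0),
                        np.concatenate([P_I, P_L], axis=0)], axis=1)  # (hw + k, 2 d_I)
    V = np.concatenate([V_I, V_L], axis=0)                          # (hw + k, d_I)
    weights = softmax_rows(Q @ K.T / np.sqrt(K.shape[1]))           # (hw, hw + k)
    return weights @ V                                              # (hw, d_I)
```

Because $Q$ and $K$ are channel-wise concatenations, the score between patch $i$ and token $j$ is a content term (image query times key) plus a term comparing the patch's positional embedding with the token's; in this sketch, that second term is what makes the attention position-sensitive.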

3.4. Layout-conditional Diffusion Model

3.4. 布局条件扩散模型

Here, we follow the Gaussian diffusion models as improved by [11, 41]. Given a data point sampled from the real data distribution, $x_{0}\sim q(x_{0})$, the forward diffusion process adds a small amount of Gaussian noise to $x_{0}$ over $T$ steps:

这里,我们遵循[11,41]改进的高斯扩散模型。给定从真实数据分布 $x_{0}\sim q(x_{0})$ 中采样的数据点,前向扩散过程通过在 $T$ 步中向 $x_{0}$ 添加少量高斯噪声来定义:

$$
q(x_{t}\mid x_{t-1}):=\mathcal{N}\big(x_{t};\sqrt{\alpha_{t}}\,x_{t-1},(1-\alpha_{t})\mathbf{I}\big)
$$

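Unrolling the single-step definition shows why $x_T$ ends up close to $\mathcal{N}(0,\mathbf{I})$: given $x_0$, $x_t$ is Gaussian with mean $m_t x_0$ and variance $v_t\mathbf{I}$, where $m_t=\sqrt{\alpha_t}\,m_{t-1}$ and $v_t=\alpha_t v_{t-1}+(1-\alpha_t)$ with $m_0=1$, $v_0=0$. This solves to $m_t=\sqrt{\bar\alpha_t}$ and $v_t=1-\bar\alpha_t$, where $\bar\alpha_t=\prod_{s=1}^{t}\alpha_s$. The NumPy sketch below (function name hypothetical) computes the recursion so it can be checked against the closed form.

```python
import numpy as np


def forward_marginal_coeffs(alphas):
    """Mean coefficient m_t and variance v_t of q(x_t | x_0), from the one-step recursion."""
    m, v = 1.0, 0.0
    means, variances = [], []
    for a in alphas:
        m = np.sqrt(a) * m          # mean shrinks by sqrt(alpha_t) each step
        v = a * v + (1.0 - a)       # old noise shrinks, fresh noise is added
        means.append(m)
        variances.append(v)
    return np.array(means), np.array(variances)
```

For example, with the linear schedule `alphas = 1 - np.linspace(1e-4, 0.02, 1000)` used in [11] (shown only for illustration, not necessarily the schedule used here), the final variance exceeds 0.99, so $x_T$ is nearly pure noise.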

If the total noise added throughout the Markov chain is large enough, $x_{T}$ is well approximated by $\mathcal{N}(0,\mathbf{I})$. If the noise added at each step has a sufficiently small magnitude $1-\alpha_{t}$, the posterior $q(x_{t-1}\mid x_{t})$ is well approximated by a diagonal Gaussian. This property allows us to reverse the forward process and generate samples starting from Gaussian noise $x_{T}\sim\mathcal{N}(0,\mathbf{I})$. However, computing this posterior exactly requires the entire data distribution, so it cannot be estimated easily. Instead, we learn a model $p_{\theta}(x_{t-1}\mid x_{t})$ to approximate it:

如果整个马尔可夫链中添加的总噪声足够大,$x_{T}$ 将很好地近似于 $\mathcal{N}(0,\mathbf{I})$。如果我们以足够小的幅度 $1-\alpha_{t}$ 在每一步添加噪声,后验分布 $q(x_{t-1}|x_{t})$ 将很好地近似为一个对角高斯分布。这一优良特性确保我们可以反转上述前向过程,并从高斯噪声 $x_{T}\sim\mathcal{N}(0,\mathbf{I})$ 中采样。然而,由于需要整个数据集,我们无法轻松估计后验分布,而必须通过学习模型 $p_{\theta}(x_{t-1}|x_{t})$ 来近似它:

$$
p_{\theta}(x_{t-1}\mid x_{t}):=\mathcal{N}\big(x_{t-1};\mu_{\theta}(x_{t}),\Sigma_{\theta}(x_{t})\big)
$$


Instead of directly optimizing the tractable variational lower bound (VLB) on $\log p_{\theta}(x_{0})$, Ho et al. [11] proposed reweighting the terms of the VLB to obtain a surrogate objective. Specifically, we first add $t$ steps of Gaussian noise to a clean sample $x_{0}$ to obtain a noised sample $x_{t}\sim q(x_{t}\mid x_{0})$, and then train a model $\epsilon_{\theta}$ to predict the added noise with the following loss:

Ho等人[11]提出对变分下界(VLB)进行项重加权来优化替代目标,而非直接使用$\log p_{\theta}(x_{0})$中的可处理变分下界。具体而言,我们首先对干净样本$x_{0}$添加$t$步高斯噪声生成带噪样本$x_{t}\sim q(x_{t}|x_{0})$,随后训练模型$\epsilon_{\theta}$通过以下损失函数预测所添加噪声:

$$
\mathcal{L}:=\mathbb{E}_{t\sim[1,T],\,x_{0}\sim q(x_{0}),\,\epsilon\sim\mathcal{N}(0,\mathbf{I})}\left[\|\epsilon-\epsilon_{\theta}(x_{t},t)\|^{2}\right]
$$


This is a standard mean-squared error loss.

这是一个标准的均方误差损失函数。
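A single Monte Carlo estimate of this loss can be sketched as follows. It uses the closed form $x_t=\sqrt{\bar\alpha_t}\,x_0+\sqrt{1-\bar\alpha_t}\,\epsilon$ with $\bar\alpha_t=\prod_{s\le t}\alpha_s$ (obtained by unrolling the forward process), averages over elements rather than summing the squared norm (which only rescales the loss), and treats `eps_model` as any callable standing in for $\epsilon_\theta$. All names are hypothetical.

```python
import numpy as np


def diffusion_loss(eps_model, x0, alpha_bar, rng):
    """One-sample estimate of E ||eps - eps_theta(x_t, t)||^2 for a batch x0.

    alpha_bar[t - 1] holds abar_t for t = 1..T; rng is a numpy Generator.
    """
    T = len(alpha_bar)
    t = rng.integers(1, T + 1, size=x0.shape[0])               # t ~ Uniform{1, ..., T}
    eps = rng.standard_normal(x0.shape)                         # eps ~ N(0, I)
    ab = alpha_bar[t - 1].reshape(-1, *([1] * (x0.ndim - 1)))   # broadcast per sample
    x_t = np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * eps            # sample from q(x_t | x_0)
    return np.mean((eps - eps_model(x_t, t)) ** 2)
```

An oracle that inverts the closed form drives the loss to zero, while a model that always predicts zero gives a loss close to 1, the variance of $\epsilon$.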

To support the layout condition, we apply classifier-free guidance, a technique proposed by Ho et al. [12] for conditional generation that does not require training a separate classifier. It works by interpolating between the predictions of a diffusion model with and without the condition input. For the layout condition, we first construct a padding layout $l_{\phi}=\{o_{l},o_{p},\cdots,o_{p}\}$. During training, the layout condition $l$ of the diffusion model is replaced with $l_{\phi}$ with a fixed probability. During sampling, the following equation is used to sample a layout-conditional image:

为支持布局条件,我们采用 Ho 等人 [12] 提出的无分类器引导 (classifier-free guidance) 技术进行条件生成,该技术无需额外训练分类器。具体实现方式是在有/无条件输入的扩散模型预测结果之间进行插值。对于布局条件,我们首先构建填充布局 $l_{\phi}=\{o_{l},o_{p},\cdots,o_{p}\}$。训练过程中,扩散模型的布局条件 $l$ 会以固定概率被替换为 $l_{\phi}$。采样时使用以下方程生成布局条件图像:

$$
\hat{\epsilon}_{\theta}(x_{t},t\mid l)=(1-s)\cdot\epsilon_{\theta}(x_{t},t\mid l_{\phi})+s\cdot\epsilon_{\theta}(x_{t},t\mid l)
$$


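where the guidance scale $s$ can be used to increase the gap between $\epsilon_{\theta}(x_{t},t\mid l_{\phi})$ and $\epsilon_{\theta}(x_{t},t\mid l)$ to strengthen the conditional guidance. Rewriting the right-hand side as $\epsilon_{\theta}(x_{t},t\mid l_{\phi})+s\,\big(\epsilon_{\theta}(x_{t},t\mid l)-\epsilon_{\theta}(x_{t},t\mid l_{\phi})\big)$ shows that $s>1$ extrapolates beyond the conditional prediction.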

其中,比例因子 $s$ 可用于增大 $\epsilon_{\theta}(x_{t},t\mid l_{\phi})$ 与 $\epsilon_{\theta}(x_{t},t\mid l)$ 之间的差距,从而增强条件引导的强度。
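The two halves of classifier-free guidance, condition dropout during training and the guided combination at sampling time, are sketched below. The helper names and the `p_uncond` argument are hypothetical (the text only says the replacement happens with a fixed probability), and `num_classes` stands for $\mathcal{C}$, so $c_p=\mathcal{C}+1$.

```python
import numpy as np


def drop_layout(boxes, cats, p_uncond, rng, num_classes):
    """Training: with probability p_uncond replace l by l_phi = {o_l, o_p, ..., o_p}."""
    if rng.random() < p_uncond:
        k = len(cats)
        boxes = [(0.0, 0.0, 1.0, 1.0)] + [(0.0, 0.0, 0.0, 0.0)] * (k - 1)
        cats = [0] + [num_classes + 1] * (k - 1)
    return boxes, cats


def guided_eps(eps_uncond, eps_cond, s):
    """Sampling: (1 - s) * eps(x_t, t | l_phi) + s * eps(x_t, t | l)."""
    return (1.0 - s) * eps_uncond + s * eps_cond
```

With `s = 1` this returns the purely conditional prediction, and with `s = 0` the unconditional one; in practice the two predictions come from two forward passes of the same network.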

To further improve the user experience of LayoutDiffusion, we also make several optimizations to the speed of the classifier-free sampling process; with only 25 sampling iterations, LayoutDiffusion significantly outperforms SOTA models. Specifically, to accelerate conditional sampling, we adapt DPM-Solver [25], a fast dedicated high-order solver for diffusion ODEs [42] with a convergence-order guarantee, to classifier-free sampling.

为进一步提升 LayoutDiffusion 的用户体验,我们对无分类器采样过程的速度进行了多项优化,仅用 25 次迭代即可显著超越当前最优 (SOTA) 模型。具体而言,我们将 DPM-Solver [25] 适配于无分类器条件采样以加速条件采样过程;DPM-Solver 是一种专为扩散 ODE [42] 设计的快速高阶求解器,具有收敛阶保证。
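As an illustration of the kind of update DPM-Solver performs, here is its first-order special case (DPM-Solver-1, which coincides with DDIM), written in terms of $\bar\alpha$. The higher-order solver adapted in the text adds correction terms that are not shown. The function name is hypothetical; in guided sampling, `eps` would be the classifier-free prediction $\hat\epsilon_\theta(x_s,s\mid l)$.

```python
import numpy as np


def dpm_solver_1_step(x_s, eps, abar_s, abar_t):
    """First-order DPM-Solver step from a noisier time s to a less noisy time t.

    With alpha = sqrt(abar), sigma = sqrt(1 - abar), lambda = log(alpha / sigma),
    h = lambda_t - lambda_s:  x_t = (alpha_t / alpha_s) x_s - sigma_t (e^h - 1) eps.
    """
    a_s, a_t = np.sqrt(abar_s), np.sqrt(abar_t)
    sig_s, sig_t = np.sqrt(1.0 - abar_s), np.sqrt(1.0 - abar_t)
    h = np.log(a_t / sig_t) - np.log(a_s / sig_s)   # step in half-log-SNR
    return (a_t / a_s) * x_s - sig_t * np.expm1(h) * eps
```

A quick sanity check: if `eps` equals the true noise in $x_s=\sqrt{\bar\alpha_s}x_0+\sqrt{1-\bar\alpha_s}\,\epsilon$, the step lands exactly on $\sqrt{\bar\alpha_t}x_0+\sqrt{1-\bar\alpha_t}\,\epsilon$.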

4. Experiments

4. 实验

In this section, we evaluate LayoutDiffusion on different benchmarks using various metrics. We first introduce the datasets and evaluation metrics, then present qualitative and quantitative comparisons with other methods, and finally report ablation studies and analysis. More details, including the model architecture, training hyperparameters, reproduction results, additional experimental results, and visualizations, can be found in the Appendix.

在本节中,我们从不同指标评估LayoutDiffusion在多个基准测试上的表现。首先介绍数据集和评估指标,其次展示与其他策略的定性与定量对比结果,最后提及部分消融实验与分析。更多细节详见附录,包括模型架构、训练超参数、复现结果、补充实验数据及可视化效果。

4.1. Datasets

4.1. 数据集

We conduct our experiments on two popular datasets, COCO-Stuff [5] and Visual Genome [21].

我们在两个流行数据集 COCO-Stuff [5] 和 Visual Genome [21] 上进行了实验。

COCO-Stuff contains 164K images from COCO 2017, annotated with bounding boxes and pixel-level segmentation masks for 80 thing categories and 91 stuff categories. Following the settings of LostGANv2 [47], we use the COCO 2017 Stuff Segmentation Challenge subset. It contains $40\mathrm{K}/5\mathrm{K}/5\mathrm{K}$ images for the train / val / test-dev sets, respectively. From the train and val sets, we keep images with 3 to 8 objects, counting only objects that cover more than $2\%$ of the image and are not crowd annotations. This yields 25,210 train and 3,097 val images.

COCO-Stuff数据集包含来自COCO 2017的164K张图像,标注了80类物体(thing)和91类材料(stuff)的边界框及像素级分割掩码。遵循LostGANv2 [47]的设置,我们采用COCO 2017 Stuff Segmentation Challenge子集,该子集分别包含$40\mathrm{K}/5\mathrm{K}/5\mathrm{K}$张图像用于训练集/验证集/测试开发集。我们从训练集和验证集中筛选包含3至8个对象的图像,其中只统计覆盖图像面积超过$2\%$且不属于crowd标注的对象,最终得到25,210张训练图像和3,097张验证图像。
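The filtering rule above can be sketched as follows, under these assumptions:

- Annotations are COCO-style dicts with an `iscrowd` flag and `bbox = [x, y, w, h]`.
- The 2% threshold is applied to bounding-box area rather than mask area. The text does not say which.

The helper names are hypothetical.

```python
def filter_objects(objects, img_w, img_h, min_area_frac=0.02):
    """Keep non-crowd objects whose bounding box covers more than min_area_frac of the image."""
    img_area = float(img_w * img_h)
    return [
        o for o in objects
        if not o["iscrowd"] and o["bbox"][2] * o["bbox"][3] / img_area > min_area_frac
    ]


def keep_image(objects, img_w, img_h, min_objects=3, max_objects=8):
    """An image is kept if 3 to 8 objects survive the per-object filter."""
    kept = filter_objects(objects, img_w, img_h)
    return min_objects <= len(kept) <= max_objects
```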

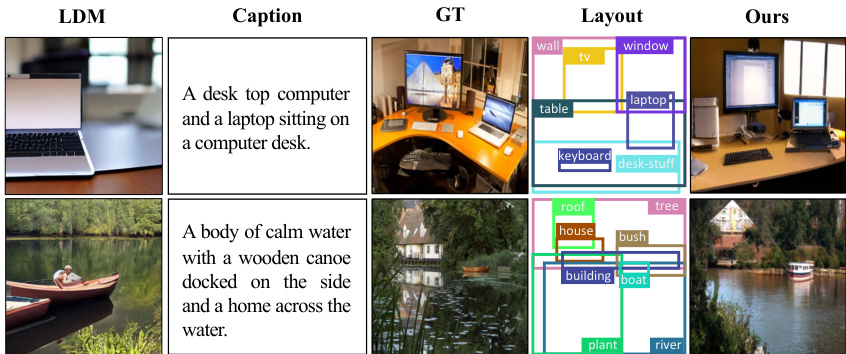

Figure 3. Visual comparison with SOTA methods on COCO-stuff $256\times256$. Layout Diffusion achieves better generation quality and stronger controllability than the other methods.

Table 1. Quantitative results on COCO-stuff [5] and VG [21]. The proposed diffusion method makes large gains on all evaluation metrics, showing better quality, controllability, diversity, and accuracy than previous works. For COCO-stuff, we evaluate on 3,097 layouts and sample 5 images per layout. For VG, we evaluate on 5,096 layouts and sample 1 image per layout. Reproduction scores of previous works are reported in the Appendix.

图 3: 在COCO-stuff $256\times256$ 数据集上与SOTA方法的对比可视化。Layout Diffusion相比其他方法具有更好的生成质量和更强的控制能力。

| Method | COCO-stuff FID ↓ | COCO-stuff IS ↑ | COCO-stuff DS ↑ | COCO-stuff CAS ↑ | COCO-stuff YOLOScore ↑ | VG FID ↓ | VG DS ↑ | VG CAS ↑ |
|---|---|---|---|---|---|---|---|---|
| 128×128 | | | | | | | | |
| Grid2Im [2] | 59.50 | 12.50±0.30 | 0.28±0.11 | 4.05 | 6.80 | | | |
| LostGAN-v2 [47] | 24.76 | 14.21±0.40 | 0.45±0.09 | 39.91 | 13.60 | 29.00 | 0.42±0.09 | 29.74 |
| PLGAN [51] | 22.70 | 15.60±0.30 | 0.16±0.72 | 38.70 | 13.40 | 20.62 | | |
| LayoutDiffusion | 16.57 | 20.17±0.56 | 0.47±0.09 | 43.60 | 27.00 | 16.35 | 0.49±0.09 | 36.45 |
| 256×256 | | | | | | | | |
| Grid2Im [2] | 65.20 | 16.40±0.70 | 0.34±0.13 | 4.81 | 9.70 | | | |
| LostGAN-v2 [47] | 31.18 | 18.01±0.50 | 0.56±0.10 | 40.00 | 17.50 | 32.08 | 0.53±0.10 | 34.48 |
| PLGAN [51] | 29.10 | 18.90±0.30 | 0.52±0.10 | 37.65 | 14.40 | 28.06 | | |
| LayoutDiffusion | 15.61 | 28.36±0.75 | 0.57±0.10 | 47.74 | 32.00 | 15.63 | 0.59±0.10 | 48.90 |

表 1: COCO-stuff [5]和VG [21]数据集的定量结果。提出的扩散方法在所有评估指标上都取得了显著进展,展现出比之前工作更好的质量、控制能力、多样性和准确性。对于COCO-stuff,我们在3097个布局上进行评估,并为每个布局采样5张图像。对于VG,我们在5096个布局上进行评估,并为每个布局采样1张图像。我们还在附录中报告了之前工作的复现分数。

Table 2. Ablation study of the Layout Fusion Module (LFM), Object-aware Cross Attention (OaCA), and Cross Attention (CA). All models are trained for 300,000 iterations on COCO-stuff $128\times128$. The value in brackets denotes the difference from our proposed method (+LFM+OaCA), where red denotes better and green denotes worse.

表 2. 布局融合模块 (LFM) 、物体感知交叉注意力 (OaCA) 和交叉注意力 (CA) 的消融研究。我们使用在 COCO-stuff $128\times128$ 数据集上训练 300,000 次迭代的模型。括号中的数值表示与本文提出的方法 (+LFM+OaCA) 的差异,其中红色表示更好,绿色表示更差。

| LFM | OaCA | CA | FID ↓ | IS ↑ | DS ↑ | CAS ↑ | YOLOScore ↑ |
|---|---|---|---|---|---|---|---|
| | | | 29.94 (+13.37) | 13.59±0.29 (-6.58) | 0.70±0.08 (+0.23) | 3.83 (-39.77) | 0.00 (-27.00) |
| | | | 17.06 (+0.49) | 19.21±0.53 (-0.96) | 0.52±0.09 (+0.05) | 30.86 (-12.74) | 6.90 (-20.10) |
| | | | 16.76 (+0.19) | 19.57±0.40 (-0.60) | 0.48±0.09 (+0.01) | 40.67 (-2.93) | 18.80 (-8.20) |
| | | | 16.46 (-0.11) | 19.79±0.40 (-0.38) | 0.48±0.10 (+0.01) | 42.47 (-1.13) | 23.60 (-3.40) |
| ✓ | ✓ | | 16.57 | 20.17±0.56 | 0.47±0.09 | 43.60 | 27.00 |

Visual Genome contains 108,077 images with dense annotations of objects, attributes, and relationships. Following the setting of $\mathrm{SG}2\mathrm{Im}$ [16], we split the data into $80\%$, $10\%$, and $10\%$ for the train, val, and test sets, respectively. We keep the object and relationship categories that occur at least 2,000 and 500 times in the train set, respectively. We then select images with 3 to 30 bounding boxes, ignoring all small objects. The resulting train / val / test sets contain 62,565 / 5,062 / 5,096 images, respectively.

Visual Genome 收集了 108,077 张带有密集物体、属性和关系标注的图像。按照 $\mathrm{SG}2\mathrm{Im}$ [16] 的设置,我们将数据分别按 $80\%$、$10\%$、$10\%$ 的比例划分为训练集、验证集和测试集。我们筛选出在训练集中分别出现至少 2000 次和 500 次的对象类别和关系类别,并选择包含 3 到 30 个边界框的图像,同时忽略所有小物体。最终,训练集/验证集/测试集分别包含 62565/5062/5096 张图像。
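A comparable sketch of the Visual Genome preprocessing follows. It assumes simplified per-image records:

- `width` and `height` of the image.
- `objects`, each with `name`, `w` and `h`.
- `relationships`, each with `predicate`.

These are not the raw Visual Genome JSON layout. The text only says "small objects", so the 2% area threshold `min_size_frac` is an assumption borrowed from the COCO-Stuff setting. All names are hypothetical.

```python
from collections import Counter


def build_vocab(train_images, min_obj_count=2000, min_rel_count=500):
    """Object and relationship categories that occur often enough in the train split."""
    obj_counts = Counter(o["name"] for img in train_images for o in img["objects"])
    rel_counts = Counter(r["predicate"] for img in train_images for r in img["relationships"])
    obj_vocab = {name for name, n in obj_counts.items() if n >= min_obj_count}
    rel_vocab = {pred for pred, n in rel_counts.items() if n >= min_rel_count}
    return obj_vocab, rel_vocab


def keep_vg_image(img, obj_vocab, min_boxes=3, max_boxes=30, min_size_frac=0.02):
    """Count in-vocabulary, non-small boxes and keep the image if the count is in range."""
    img_area = float(img["width"] * img["height"])
    boxes = [
        o for o in img["objects"]
        if o["name"] in obj_vocab and o["w"] * o["h"] / img_area >= min_size_frac
    ]
    return min_boxes <= len(boxes) <= max_boxes
```

The vocabulary is built from the train split only and then applied to all three splits.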

4.2. Evaluation Metrics & Protocols

4.2. 评估指标与协议

We use five metrics to evaluate the quality, diversity, and controllability of generation.

我们使用五个指标来评估生成的质量、多样性和控制能力。

Fréchet Inception Distance (FID) [10] measures the overall visual quality of generated images. It computes the distance between the feature distributions of real and generated images, using features extracted by an ImageNet-pretrained Inception-V3 [48] network.

Fréchet Inception Distance (FID) [10] 通过测量真实图像与生成图像在ImageNet预训练Inception-V3 [48]网络上的特征分布差异,反映生成图像的整体视觉质量。
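Concretely, FID fits a Gaussian to each feature set and computes $\|\mu_r-\mu_g\|_2^2+\operatorname{Tr}\big(\Sigma_r+\Sigma_g-2(\Sigma_r\Sigma_g)^{1/2}\big)$. Lower is better. The NumPy sketch below assumes the Inception-V3 features have already been extracted; that step is omitted. It computes the matrix square-root term through an eigendecomposition instead of `scipy.linalg.sqrtm`.

```python
import numpy as np


def _sqrtm_psd(mat):
    """Symmetric square root of a positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^{1/2}) between two Gaussians."""
    diff = mu1 - mu2
    root1 = _sqrtm_psd(sigma1)
    # Tr((S1 S2)^{1/2}) = Tr((S1^{1/2} S2 S1^{1/2})^{1/2}); the inner matrix is symmetric PSD.
    inner = root1 @ sigma2 @ root1
    tr_covmean = np.sum(np.sqrt(np.clip(np.linalg.eigvalsh(inner), 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


def fid_from_features(feats_real, feats_gen):
    """feats_*: (N, D) arrays of network features with D >= 2 (extraction not shown)."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    return frechet_distance(mu_r, cov_r, mu_g, cov_g)
```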

Inception Score (IS) [38] uses an ImageNet-pretrained Inception-V3 [48] network to score the class predictions on generated images. The score is high when each image is confidently classified and the predicted classes are diverse across images.

Inception Score (IS) [38] 使用在ImageNet上预训练的Inception-V3 [48]网络来计算生成图像的统计分数。
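The Inception Score is $\exp\big(\mathbb{E}_{x}\,\mathrm{KL}(p(y\mid x)\,\|\,p(y))\big)$, where $p(y\mid x)$ is the classifier's softmax output and $p(y)$ is its average over generated images. Higher is better. Below is a minimal sketch that starts from precomputed class probabilities. Common implementations also average the score over several splits of the samples, which is omitted here.

```python
import numpy as np


def inception_score(probs, eps=1e-12):
    """probs: (N, K) softmax outputs p(y|x) of the classifier on generated images."""
    p_y = probs.mean(axis=0, keepdims=True)  # marginal class distribution p(y)
    kl = np.sum(probs * (np.log(probs + eps) - np.log(p_y + eps)), axis=1)
    return float(np.exp(kl.mean()))          # exp(E_x KL(p(y|x) || p(y)))
```

If every image is confidently assigned to a different one of K classes, the score approaches K. Identical predictions for all images give 1.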

Diversity Score (DS) measures the diversity between two images generated from the same layout as their LPIPS [55] distance, which is computed in a deep network feature space.

多样性分数 (DS) 通过比较同一布局下两幅生成图像在DNN特征空间中的LPIPS [55] 指标来计算它们之间的多样性。
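Below is a sketch of the DS protocol with a pluggable distance. In practice `distance_fn` would be LPIPS, for example from the `lpips` Python package. The mean absolute pixel difference used in the check is only a stand-in. Averaging over all image pairs of each layout is an assumption about how more than two samples per layout are combined.

```python
import itertools

import numpy as np


def diversity_score(samples_per_layout, distance_fn):
    """Mean pairwise distance between images generated from the same layout.

    samples_per_layout: one list of generated images per layout (at least one pair overall).
    distance_fn: perceptual distance between two images, e.g. LPIPS.
    """
    scores = []
    for samples in samples_per_layout:
        for a, b in itertools.combinations(samples, 2):
            scores.append(distance_fn(a, b))
    return float(np.mean(scores))
```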

Classification Accuracy Score (CAS) [32] first crops the ground-truth box regions of the images, resizes each crop to $32\times32$, and labels it with its object class. A ResNet-101 [9] classifier is then trained on crops from generated images and tested on crops from real images.

分类准确率分数 (CAS) [32] 首先裁剪图像的真实标注框区域,并将其调整为 $32\times32$ 分辨率并保留类别标签。随后使用生成图像的裁剪块训练一个 ResNet-101 [9] 分类器,并在真实图像的裁剪块上进行测试。
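The data-preparation step of CAS can be sketched as below. The sketch assumes boxes in pixel corner format `(x0, y0, x1, y1)` and nearest-neighbour resizing; the interpolation used in the original protocol is not specified here. The ResNet-101 classifier that is trained on crops of generated images and evaluated on crops of real images is omitted. Function names are hypothetical.

```python
import numpy as np


def crop_and_resize(image, box, size=32):
    """Crop box (x0, y0, x1, y1) from an H x W x C image and resize it to size x size."""
    x0, y0, x1, y1 = (int(round(v)) for v in box)
    crop = image[y0:y1, x0:x1]
    # Nearest-neighbour sampling of rows and columns.
    rows = (np.arange(size) * crop.shape[0] / size).astype(int)
    cols = (np.arange(size) * crop.shape[1] / size).astype(int)
    return crop[rows][:, cols]


def build_cas_dataset(images, layouts, size=32):
    """Turn every ground-truth (label, box) pair of every image into a (patch, label) sample."""
    patches, labels = [], []
    for img, layout in zip(images, layouts):
        for label, box in layout:
            patches.append(crop_and_resize(img, box, size))
            labels.append(label)
    return np.stack(patches), np.array(labels)
```

The same function is applied once to generated images (training set for the classifier) and once to real images (test set). CAS is the test accuracy.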

YOLOScore [23] runs a pretrained YOLOv4 [4] detector on generated images and computes the bounding-box mAP over the 80 thing categories against the input layout. It thus measures how precisely a generative model controls object placement.

YOLOScore [23] 使用预训练的 YOLOv4 [4] 模型评估生成图像中80个物体类别的边界框平均精度 (bbox mAP),并展示生成模型的控制精度。
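Assuming YOLOScore follows COCO-style bbox evaluation, it averages AP over the 80 thing classes and over IoU thresholds 0.50:0.95. The sketch below shows only the core building block: AP for one class at a single IoU threshold, with VOC-style all-point interpolation. The input layout boxes serve as ground truth, and the detector's boxes on the generated image serve as predictions. The YOLOv4 detector itself is omitted, and the function names are hypothetical.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x0, y0, x1, y1)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, gt_boxes, iou_thr=0.5):
    """AP for one class at one IoU threshold.

    detections: list of (score, box) predicted on a generated image.
    gt_boxes: layout boxes of that class, used as ground truth.
    """
    if not gt_boxes:
        return 0.0
    matched = [False] * len(gt_boxes)
    hits = []
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        ious = [box_iou(box, g) for g in gt_boxes]
        best = max(range(len(gt_boxes)), key=ious.__getitem__)
        if ious[best] >= iou_thr and not matched[best]:
            matched[best] = True  # true positive
            hits.append(1)
        else:
            hits.append(0)        # false positive: low IoU or duplicate match
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gt_boxes))
    # Interpolate: each precision becomes the maximum precision at any later rank.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += p * (r - prev_recall)
        prev_recall = r
    return ap
```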

In summary, FID and IS measure generation quality, DS measures diversity, and CAS and YOLOScore measure controllability. Our network follows the architecture of ADM [8], which is primarily a UNet. All experiments are conducted on 32 NVIDIA RTX 3090 GPUs with mixed-precision training [27]. We use a batch size of 24, a learning rate of 1e-5, and a fixed linear variance schedule. More details can be found in the Appendix.

总之,FID和IS反映生成质量,DS体现多样性,CAS与YOLOScore衡量控制能力。我们采用ADM[8]的UNet主干架构,所有实验均在32块NVIDIA 3090显卡上采用混合精度训练[27]完成,批次大小设为24,学习率1e-5,并使用固定线性方差调度表。详见附录。
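The training settings above can be collected into a configuration, together with the fixed linear variance schedule.

- From the text: batch size, learning rate, GPU count, and mixed precision.
- Assumed (common DDPM defaults): the number of timesteps and the $\beta$ endpoints.
- Not stated here: whether the batch size of 24 is per GPU or global.

```python
import numpy as np

config = {
    "backbone": "ADM-style UNet",
    "num_gpus": 32,          # NVIDIA RTX 3090
    "batch_size": 24,        # per-GPU vs. global not specified in this section
    "learning_rate": 1e-5,
    "mixed_precision": True,
    "num_timesteps": 1000,   # assumption
    "beta_start": 1e-4,      # assumption (DDPM default)
    "beta_end": 0.02,        # assumption (DDPM default)
}


def linear_beta_schedule(T, beta_start, beta_end):
    """Fixed linear variance schedule beta_1..beta_T and the cumulative alpha_bar_t."""
    betas = np.linspace(beta_start, beta_end, T)
    alpha_bar = np.cumprod(1.0 - betas)
    return betas, alpha_bar


betas, alpha_bar = linear_beta_schedule(
    config["num_timesteps"], config["beta_start"], config["beta_end"]
)
```

With these assumed endpoints, $\bar{\alpha}_T$ ends up on the order of $10^{-5}$. The final noisy state is therefore almost pure Gaussian noise.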

4.3. Qualitative results

4.3. 定性结果

Fig. 3 compares $256\times256$ images generated on COCO-Stuff [5] by our method and by previous works [2, 47, 51].

在COCO-Stuff [5]数据集上生成的$256~\times~256$图像与我们的方法及先前工作[2, 47, 51]的对比结果如图3所示。

| Method | FID ↓ | #Params | Throughput (images/s) | First-stage V100-days | Conditional-stage V100-days |
|---|---|---|---|---|---|
| LDM-8 (100 steps) | 42.06 | 345M | 0.457 | 66 | 3.69 |
| LDM-4 (200 steps) | 40.91 | 306M | 0.267 | 29 | 95.49 |
| ours-small (25 steps) | 36.16 | 142M | 0.608 | - | 75.83 |
| ours (25 steps) | 31.68 | 569M | 0.308 | - | 216.55 |

Table 3. Comparison with the SOTA diffusion-based method LDM [35] on COCO-stuff $256\times256$. For a fair comparison, we generate the same 2,048 images as LDM.

表 3: 与基于扩散的 SOTA 方法 LDM 在 COCO-stuff $256\times256$ 上的对比。为公平比较,我们生成与 LDM 相同的 2048 张图像。

Layout Diffusion generates more accurate, higher-quality images, whose objects are more recognizable and better aligned with their layouts. In contrast, Grid2Im [2], LostGANv2 [47] and PLGAN [51] generate images with distorted and unrealistic objects.

布局扩散 (Layout Diffusion) 生成的图像质量更高且更精准,其物体与布局的对应关系更清晰准确。Grid2Im [2]、LostGANv2 [47] 和 PLGAN [51] 生成的图像存在物体扭曲失真的问题。

This gap is largest when the input is a set of multiple objects with complex relationships: previous works can hardly generate recognizable objects at the positions given by the layout. For example, in Fig. 3 (a), (c), and (e), previous works generate the main objects (e.g. train, zebra, bus) poorly, while our Layout Diffusion generates them well. In Fig. 3 (b), only Layout Diffusion generates the laptop in the right place. Overall, the images generated by Layout Diffusion are perceptually closer to the real ones.

尤其是当输入一组具有复杂关系的多个对象时,先前的工作很难在布局对应位置生成可识别的物体。例如在图3(a)、(c)和(e)中,先前工作生成的图像主体(如火车、斑马、公交车)效果较差,而我们的Layout Diffusion则生成良好。图3(b)中,只有我们的Layout Diffusion在正确位置生成了笔记本电脑。本方法生成的图像在感官上更接近真实图像。

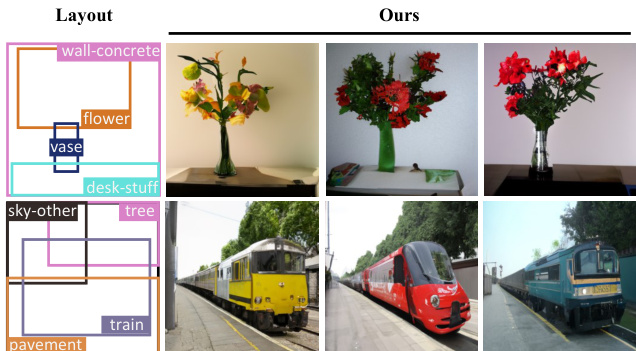

We show the diversity of Layout Diffusion in Fig. 4. Images generated from the same layout show both high quality and diversity (different lighting, textures, colors, and details).

我们在图 4 中展示了 Layout Diffusion 的多样性。来自相同布局的图像具有高质量和多样性(不同的光照、纹理、颜色和细节)。

As shown in Fig. 5, we start from an initial layout (upper-left corner) and add one object at a time. At each step, Layout Diffusion places the new object at a precise location while keeping image quality consistent, showing user-friendly interactivity.

如图5所示,我们从初始布局(左上角)出发,每次添加一个新对象。在每一步中,布局扩散(Layout Diffusion)都能将新对象精确放置到指定位置,并保持稳定的图像质量,展现出用户友好的交互性。

4.4. Quantitative results

4.4. 定量结果

Tab. 1 compares previous works and our method in FID, IS, DS, CAS and YOLOScore. The proposed method achieves the best performance on every metric compared with previous SOTA methods.

表 1 对比了先前工作与本文方法在 FID、IS、DS、CAS 和 YOLOScore 指标上的表现。相较于当前最优(SOTA)方法,所提方法在各项对比中均取得最佳性能。

Figure 4. The diversity of Layout Diffusion. Each row of images is generated from the same layout and shows substantial variation.

图 4: Layout Diffusion 的多样性。每行图像均源自相同布局但存在显著差异。

In overall generation quality, Layout Diffusion outperforms the SOTA model by up to 46.35% in FID and 29.29% in IS. While maintaining high image quality, it also offers precise and accurate controllability, outperforming the SOTA model by up to 122.22% in YOLOScore and 41.82% in CAS. For diversity, Layout Diffusion still achieves an improvement of up to 11.30% in DS. These results show that our method generates higher-quality images with better control over object location and quantity.

在整体生成质量上,我们的Layout Diffusion在FID和IS指标上分别以最高46.35%和29.29%的优势超越了当前最优(SOTA)模型。在保持高整体图像质量的同时,Layout Diffusion还展现出精准的控制能力,其YOLOScore和CAS指标分别以最高122.22%和41.82%的幅度领先SOTA模型。多样性方面,根据DS指标,Layout Diffusion仍实现了最高11.30%的提升。这些指标的实验表明,我们的方法能成功生成更高质量、具有更优位置与数量控制能力的图像。
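The relative gains quoted above can be reproduced from Table 1. For FID the gain is (baseline - ours) / baseline; for the other metrics it is (ours - baseline) / baseline.

The baseline entries below are the ones that reproduce the quoted numbers, and they are not always the strongest prior entry. For example, the COCO-stuff 256×256 YOLOScore gain of 122.22% is relative to PLGAN's 14.40, not LostGAN-v2's 17.50. Matching the 11.30% DS figure to VG 256×256 vs. LostGAN-v2 is an assumption.

```python
def relative_improvement(baseline, ours, lower_is_better=False):
    """Relative gain over a baseline score, in percent."""
    gain = baseline - ours if lower_is_better else ours - baseline
    return 100.0 * gain / baseline


# (metric, baseline entry in Table 1, baseline value, LayoutDiffusion value, lower_is_better)
claims = [
    ("COCO-stuff 256 FID", "PLGAN", 29.10, 15.61, True),
    ("COCO-stuff 128 IS", "PLGAN", 15.60, 20.17, False),
    ("COCO-stuff 256 YOLOScore", "PLGAN", 14.40, 32.00, False),
    ("VG 256 CAS", "LostGAN-v2", 34.48, 48.90, False),
    ("VG 256 DS", "LostGAN-v2", 0.53, 0.59, False),
]
for name, base_name, base, ours, lower in claims:
    print(f"{name} vs {base_name}: {relative_improvement(base, ours, lower):.2f}%")
```

Running it prints 46.36%, 29.29%, 122.22%, 41.82% and 11.32%. The differences in the last digit relative to the text come from truncation and from the two-decimal rounding of the DS values.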

In particular, we compare with LDM [35] in Tab. 3. With comparable GPU resources, "Ours-small" achieves better FID than LDM-8 with far fewer parameters and higher throughput, and it outperforms LDM-4 in all respects. The results of "Ours" show that Layout Diffusion can reach an even better FID of 31.68 at a higher cost. Overall, Layout Diffusion achieves better performance than LDM [35] at every cost level tested.

特别地,我们在表3中与LDM [35]进行了对比实验。"Ours-small"在GPU资源相当的情况下,以更少的参数量和更高的吞吐量实现了优于LDM-8的FID性能,同时在所有方面都超越了LDM-4。"Ours"的结果表明,Layout Diffusion能以更高成本实现更优的FID性能(31.68)。这些结果表明,与LDM [35]相比,Layout Diffusion在不同成本级别下始终能获得更好的性能。
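The cost comparison in Tab. 3 can be checked directly under two assumptions:

- The single V100-days figure of each of our models is its total training cost, since pixel-space diffusion has no first stage.
- LDM's total cost is the sum of its two stages.

The `Row` type and the `dominates` helper are hypothetical. `dominates` tests whether one row is at least as good as another on every column.

```python
from dataclasses import dataclass


@dataclass
class Row:
    name: str
    fid: float          # lower is better
    params_m: float     # parameters in millions, lower is better
    throughput: float   # images/s, higher is better
    v100_days: float    # total training cost, lower is better


ldm8 = Row("LDM-8 (100 steps)", 42.06, 345, 0.457, 66 + 3.69)
ldm4 = Row("LDM-4 (200 steps)", 40.91, 306, 0.267, 29 + 95.49)
ours_small = Row("ours-small (25 steps)", 36.16, 142, 0.608, 75.83)


def dominates(a, b):
    """True if row a is at least as good as row b on every column of Tab. 3."""
    return (a.fid <= b.fid and a.params_m <= b.params_m
            and a.throughput >= b.throughput and a.v100_days <= b.v100_days)
```

`dominates(ours_small, ldm4)` holds. Against LDM-8, ours-small wins on FID, parameters and throughput but costs 75.83 rather than 69.69 V100-days, which matches the "comparable GPU resources" described in the text.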

4.5. Ablation studies

4.5. 消融实验

We validate the effectiveness of LFM and OaCA in Tab. 2 using the evaluation metrics of Sec. 4.2. The significant improvements in FID, IS, CAS, and YOLOScore show that LFM and OaCA yield higher generation quality along with stronger controllability. Applying both modules improves FID / IS / CAS / YOLOScore by 13.37 / 6.58 / 39.77 / 27.00 over the baseline in the first row of Tab. 2.

我们在表 2 中验证了 LFM 和 OaCA 的有效性,使用了第 4.2 节的评估指标。FID、IS、CAS 和 YOLOScore 的显著提升表明,应用 LFM 和 OaCA 能够带来更高的生成质量和更强的控制能力。同时应用两者时,相比表 2 第一行的基线,FID / IS / CAS / YOLOScore 分别提升了 13.37 / 6.58 / 39.77 / 27.00。

An interesting phenomenon is that the Diversity Score (DS) changes in the opposite direction to the other metrics. This is because diversity, measured by DS, is inherently in tension with the controllability measured by CAS and YOLOScore. Precise control over the generated image places more constraints on its diversity. As a result, DS decreases compared with the baseline.

一个有趣的现象是,多样性分数 (Diversity Score, DS) 的变化方向与其他指标相反。这是因为代表多样性的 DS 在本质上与 CAS、YOLOScore 等指标所代表的控制能力相互制约。对生成图像的精确控制会导致多样性受到更多约束。因此,与基线相比,多样性分数 (DS) 有所下降。

5. Limitations & Societal Impacts

5. 局限性与社会影响

Limitations. Despite the significant improvements in various metrics, it is still difficult to generate a realistic image with no distortion and overlap, especially for a complex multi-