Far3D: Expanding the Horizon for Surround-view 3D Object Detection

Abstract

Recently, 3D object detection from surround-view images has made notable advancements thanks to its low deployment cost. However, most works have focused on close perception ranges, leaving long-range detection less explored. Directly extending existing methods to cover long distances poses challenges such as heavy computation costs and unstable convergence. To address these limitations, this paper proposes a novel sparse query-based framework, dubbed Far3D. Utilizing high-quality 2D object priors, we generate 3D adaptive queries that complement the 3D global queries. To efficiently capture discriminative features across different views and scales for long-range objects, we introduce a perspective-aware aggregation module. Additionally, we propose a range-modulated 3D denoising approach to address query error propagation and mitigate convergence issues in long-range tasks. Notably, Far3D demonstrates SoTA performance on the challenging Argoverse 2 dataset, which covers a wide range of 150 meters, surpassing several LiDAR-based approaches. The code is available at https://github.com/megvii-research/Far3D.

1 Introduction

3D object detection plays an important role in 3D scene understanding for autonomous driving, aiming to provide accurate localization and categories of objects around the ego vehicle. Surround-view methods (Huang and Huang 2022; Li et al. 2023; Liu et al. 2022b; Li et al. 2022c; Yang et al. 2023; Park et al. 2022; Wang et al. 2023a), with their advantages of low cost and wide applicability, have achieved remarkable progress. However, most of them focus on close-range perception (e.g., ~50 meters on nuScenes (Caesar et al. 2020)), leaving long-range detection less explored. Detecting distant objects is essential for real-world driving to maintain a safe distance, especially at high speeds or in complex road conditions.

Existing surround-view methods can be broadly categorized into two groups based on the intermediate representation, dense Bird’s-Eye-View (BEV) based methods and sparse query-based methods. BEV based methods (Huang et al. 2021; Huang and Huang 2022; Li et al. 2023, 2022c; Yang et al. 2023) usually convert perspective features to BEV features by employing a view transformer (Philion and Fidler 2020), then utilizing a 3D detector head to produce the 3D bounding boxes. However, dense BEV features come at the cost of high computation even for the close-range perception, making it more difficult to scale up to long-range perception. Instead, following DETR (Carion et al. 2020) style, sparse query-based methods (Wang et al. 2022; Liu et al. 2022a,b; Wang et al. 2023a) adopt learnable global queries to represent 3D objects, and interact with surroundview image features to update queries. Although sparse design can avoid the squared growth of query numbers, its global fixed queries cannot adapt to dynamic scenarios and usually miss targets in long-range detection. We adopt the sparse query design to maintain detection efficiency and introduce 3D adaptive queries to address the inflexibility

现有环视方法根据中间表示可大致分为两类:基于密集鸟瞰图(BEV)的方法和基于稀疏查询的方法。基于BEV的方法(Huang et al. 2021; Huang and Huang 2022; Li et al. 2023, 2022c; Yang et al. 2023)通常通过视图转换器(Philion and Fidler 2020)将透视图特征转换为BEV特征,再使用3D检测头生成3D边界框。然而密集BEV特征即使对近距离感知也会带来高计算成本,使其更难扩展至远距离感知。基于稀疏查询的方法(Wang et al. 2022; Liu et al. 2022a,b; Wang et al. 2023a)遵循DETR(Carion et al. 2020)范式,采用可学习的全局查询来表示3D物体,并通过与环视图像特征交互来更新查询。虽然稀疏设计能避免查询数量的平方增长,但其全局固定查询无法适应动态场景,通常在远距离检测中漏检目标。我们采用稀疏查询设计以保持检测效率,并引入3D自适应查询来解决灵活性不足的问题

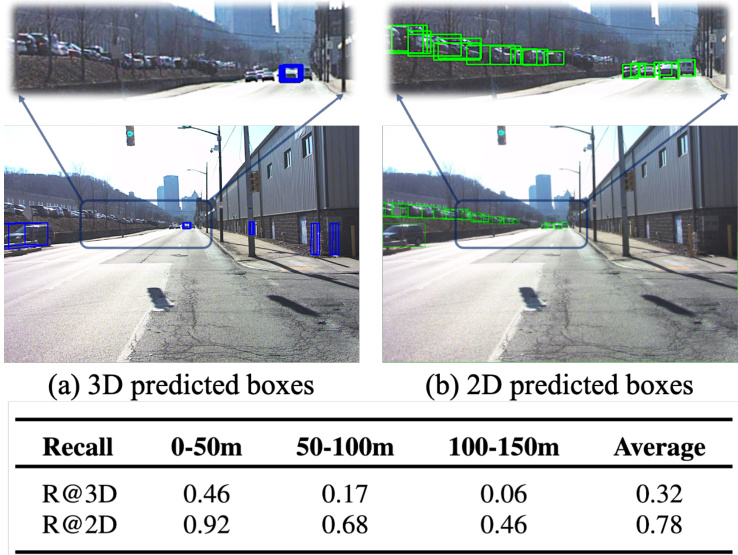

Figure 1: Peformance comparisons on Argoverse 2 between 3D detection and 2D detection. (a) and (b) demonstrate predicted boxes of StreamPETR and YOLOX, respectively. (c) imply that 2D recall is notably better than 3D recall and can act as a bridge to achieve high-quality 3D detection. Note that 2D recall does not represent 3D upper bound due to different recall criteria. () Recall comparison

图 1: Argoverse 2 数据集上 3D 检测与 2D 检测的性能对比。(a) 和 (b) 分别展示了 StreamPETR 与 YOLOX 的预测框。(c) 表明 2D 召回率显著优于 3D 召回率,可作为实现高质量 3D 检测的桥梁。需注意由于召回标准不同,2D 召回率并不代表 3D 检测的上限。() 召回率对比

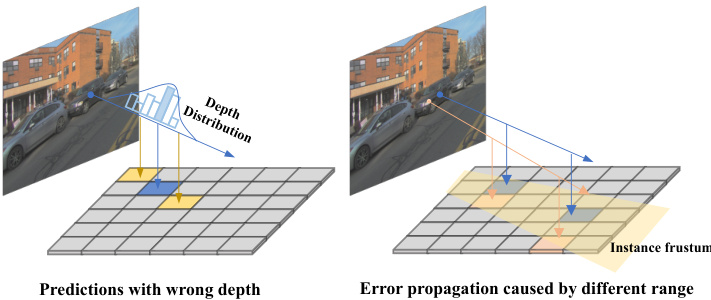

Figure 2: Different cases of transform img 2D points into 3D space. The blue dots indicate the centers of 3D objects in images. (a) shows the redundant prediction with the wrong depth, which is in yellow. (b) illustrates the error propagation problem dominated by different ranges.

图 2: 将二维图像点转换到三维空间的不同情况。蓝点表示图像中三维物体的中心。(a) 展示了深度错误导致的冗余预测(黄色部分)。(b) 呈现了由不同范围主导的误差传播问题。

weaknesses.

弱点。

The primary challenge in applying the sparse query-based paradigm to long-range detection is poor recall. Due to query sparsity in 3D space, assignments between predictions and ground-truth objects are affected, yielding only a small number of matched positive queries. As illustrated in Fig. 1, 3D detector recalls are quite low, whereas the recalls of an existing 2D detector are much higher, revealing a significant performance gap between them. Motivated by this, leveraging high-quality 2D object priors to improve 3D proposals is a promising approach for achieving accurate localization and comprehensive coverage. Although previous methods such as SimMOD (Zhang et al. 2023) and MV2D (Wang et al. 2023b) have explored using 2D predictions to initialize 3D object proposals, they primarily focus on close-range tasks and discard learnable object queries. Moreover, as depicted in Fig. 2, directly introducing 3D queries derived from 2D proposals into long-range tasks encounters two issues: 1) inferior, redundant predictions caused by the uncertain depth distribution along object rays, and 2) deviations in 3D space that grow with range due to the frustum transformation. These noisy queries can impair training stability, calling for an effective denoising strategy. Furthermore, during training, the model tends to overfit densely populated close objects while neglecting sparsely distributed distant objects.

To address the aforementioned challenges, we design a novel 3D detection paradigm that expands the perception horizon. In addition to the 3D global queries learned from the dataset, our approach incorporates auxiliary 2D proposals into 3D adaptive query generation. Specifically, we first produce reliable pairs of 2D object proposals and corresponding depths, then project them to 3D proposals via spatial transformation. We compose 3D adaptive queries from the projected positional embedding and semantic context, and these queries are refined in the subsequent decoder. In the decoder layers, perspective-aware aggregation is employed across different image scales and views. It learns sampling offsets for each query and dynamically enables interactions with favorable features. For instance, queries of distant objects benefit from attending to high-resolution features, whereas close objects are better served by low-resolution features that capture high-level context. Lastly, we design a range-modulated 3D denoising technique to mitigate query error propagation and slow convergence. Considering the different regression difficulties at various ranges, noisy queries are constructed from ground-truth (GT) boxes with reference to their distances and scales. Our method feeds multiple groups of noisy proposals around the GT into the decoder and trains the model to a) recover the 3D GT from positive ones and b) reject negative ones. Query denoising also alleviates the unbalanced distribution of objects across ranges.

Our proposed method achieves remarkable performance gains over state-of-the-art (SoTA) approaches on the challenging long-range Argoverse 2 dataset and also surpasses several prior LiDAR-based methods. To evaluate its generalization capability, we further validate it on the nuScenes dataset, where it also achieves SoTA results.

In summary, our contributions are:

• We propose a novel sparse query-based framework that expands the perception range of 3D detection by incorporating high-quality 2D object priors into 3D adaptive queries.

• We develop perspective-aware aggregation, which captures informative features from diverse scales and views, as well as a range-modulated 3D denoising technique to address query error propagation and convergence problems.

• On the challenging long-range Argoverse 2 dataset, our method surpasses existing surround-view methods and outperforms several LiDAR-based methods. Its generalization is further validated on the nuScenes dataset.

2 Related Work

2.1 Surround-view 3D Object Detection

Recently, 3D object detection from surround-view images has attracted much attention and achieved great progress due to its low deployment cost and rich semantic information. Based on feature representation, existing methods (Wang et al. 2021, 2022; Liu et al. 2022a; Huang and Huang 2022; Li et al. 2023, 2022b; Jiang et al. 2023; Liu et al. 2022b; Li et al. 2022c; Yang et al. 2023; Park et al. 2022; Wang et al. 2023a; Zong et al. 2023; Liu et al. 2023) can be broadly classified into BEV-based methods and sparse query-based methods.

BEV-based methods (Huang et al. 2021; Huang and Huang 2022; Li et al. 2023, 2022c) extract image features from surround views and transform them into BEV space using estimated depths or attention layers; a 3D detector head then predicts the localization and other properties of 3D objects. For instance, BEVFormer (Li et al. 2022c) exploits both spatial and temporal features by interacting with spatial and temporal space through predefined grid-shaped BEV queries. BEVDepth (Li et al. 2023) proposes a 3D detector with trustworthy depth estimation by introducing a camera-aware depth estimation module. On the other hand, sparse query-based paradigms (Wang et al. 2022; Liu et al. 2022a) learn global object queries from representative data, then feed them into the decoder to predict 3D bounding boxes during inference. This line of work has the advantage of lightweight computation.

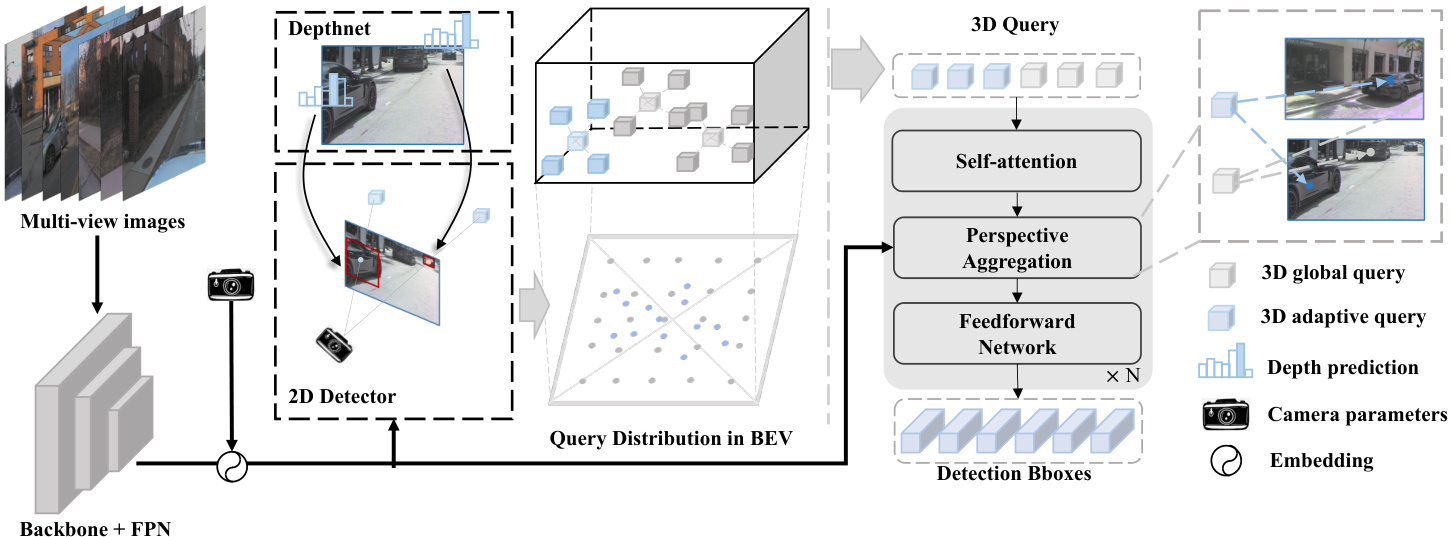

Figure 3: Overview of our proposed Far3D. Feeding surround-view images into the backbone and FPN neck, we obtain 2D image features and encode them with camera parameters for perspective-aware transformation. Using a 2D detector and DepthNet, we generate reliable 2D box proposals and their corresponding depths, which are then concatenated and projected into 3D space. The generated 3D adaptive queries, combined with the initial 3D global queries, are iteratively refined by the decoder layers to predict 3D bounding boxes. Furthermore, temporal modeling is enabled through long-term query propagation.

Furthermore, temporal modeling for surround-view 3D detection can significantly improve detection performance and reduce velocity errors, and many works (Huang and Huang 2022; Liu et al. 2022b; Park et al. 2022; Wang et al. 2023a; Lin et al. 2022, 2023) aim to extend single-frame frameworks to multi-frame designs. BEVDet4D (Huang and Huang 2022) lifts the BEVDet paradigm from the spatial-only 3D space to the spatial-temporal 4D space by fusing features with the previous frame. PETRv2 (Liu et al. 2022b) extends the 3D position embedding of PETR to temporal modeling through the temporal alignment of different frames. However, these methods use only limited history. To leverage both short-term and long-term history, SOLOFusion (Park et al. 2022) balances the impacts of spatial resolution and temporal difference on localization potential, then uses this analysis to design a powerful temporal 3D detector. StreamPETR (Wang et al. 2023a) develops an online, object-centric temporal mechanism in which long-term historical information is propagated through object queries.

2.2 2D Auxiliary Tasks for 3D Detection

3D detection from surround-view images can be improved through 2D auxiliary tasks, and several works (Xie et al. 2022; Zhang et al. 2023; Wang, Jiang, and Li 2022; Yang et al. 2023; Wang et al. 2023b) aim to exploit this potential. Approaches include 2D pretraining, auxiliary supervision, and proposal generation. SimMOD (Zhang et al. 2023) exploits sample-wise object proposals and designs a two-stage training scheme, in which perspective object proposals are generated and then iteratively refined in a DETR3D style. Focal-PETR (Wang, Jiang, and Li 2022) performs 2D object supervision to adaptively focus the attention of 3D queries on discriminative foreground regions. BEVFormer v2 (Yang et al. 2023) presents a two-stage BEV detector in which perspective proposals are fed into the BEV head for final predictions. MV2D (Wang et al. 2023b) designs a 3D detector head that is initialized by the RoI regions of 2D predicted proposals.

Compared to the above methods, our framework differs in the following aspects. First, we aim to resolve the challenges of long-range detection from surround views, which previous methods have explored less. Besides learning 3D global queries, we explicitly leverage 2D predicted boxes and depths to build 3D adaptive queries, utilizing the positional prior and semantic context simultaneously. Furthermore, perspective-aware aggregation and 3D denoising are integrated to address the issues specific to long-range detection.

3 Method

3.1 Overview

Fig. 3 shows the overall pipeline of our sparse query-based framework. Given surround-view images $\mathbf{I}=\{\mathbf{I}^{1},\dots,\mathbf{I}^{n}\}$, we extract multi-level image features $\mathbf{F}=\{\mathbf{F}^{1},\dots,\mathbf{F}^{n}\}$ using a backbone network (e.g., ResNet, ViT) and an FPN (Lin et al. 2017) neck. To generate 3D adaptive queries, we first obtain 2D proposals and depths using a 2D detector head and a depth network, then filter the reliable ones and transform them into 3D space to generate 3D object queries. In this way, informative object priors from 2D detections are encoded into the 3D adaptive queries.

In the 3D detector head, we concatenate 3D adaptive queries and 3D global queries, then input them to transformer decoder layers, which consist of self-attention among queries and perspective-aware aggregation between queries and features. Perspective-aware aggregation efficiently captures rich features across multiple views and scales by considering the projection of 3D objects. Besides, range-modulated 3D denoising is introduced to alleviate query error propagation and stabilize convergence when training with long-range objects that follow an imbalanced distribution. Sec. 3.4 describes the denoising technique in detail.

3.2 Adaptive Query Generation

Directly extending existing 3D detectors from short range (e.g., ~50 m) to long range (e.g., ~150 m) suffers from several problems: heavy computation costs, inefficient convergence, and declining localization ability. For instance, the number of queries would have to grow at least quadratically to cover possible objects in a larger range, yet such a computational burden is unacceptable in realistic scenarios. Besides, small and sparse distant objects hinder convergence and can even hurt the localization of close objects. Motivated by the high performance of 2D proposals, we propose to generate adaptive queries as object priors to assist 3D localization. This paradigm compensates for the weakness of the global fixed query design and allows the detector to generate adaptive queries near the ground-truth (GT) boxes for different images. In this way, the model gains better generalization and practicality.
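
As a back-of-the-envelope illustration, assume that queries are spread with uniform density over the BEV area, so their count $N$ scales with the square of the perception range. Tripling the range then multiplies the required number of queries by nine:

$$
\frac{N(150\,\mathrm{m})}{N(50\,\mathrm{m})}=\left(\frac{150\,\mathrm{m}}{50\,\mathrm{m}}\right)^{2}=9
$$

Under this assumption, a hypothetical detector that needs $N$ queries at 50 m would need at least $9N$ queries at 150 m, which is consistent with the "at least quadratic" growth stated above.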

Specifically, given image features from the FPN neck, we feed them into the anchor-free detector head of YOLOX (Ge et al. 2021) and a lightweight depth estimation network, which output 2D box coordinates, scores, and a depth map. The 2D detector head follows the original design, while depth estimation is formulated as a classification task by discretizing depth into bins (Reading et al. 2021; Zhang et al. 2022). We then pair the 2D boxes with their corresponding depths. To avoid interference from low-quality proposals, we set a score threshold $\tau$ (e.g., 0.1) and keep only reliable ones. For each view $i$, the box centers $(\mathbf{c}_{w},\mathbf{c}_{h})$ from 2D predictions and the depth $\mathbf{d}_{wh}$ from the depth map are combined and projected to 3D proposal centers $\mathbf{c}_{3d}$:

$$
\mathbf{c}_{3d}=K_{i}^{-1}I_{i}^{-1}\left[\mathbf{c}_{w}\mathbf{d}_{wh},\ \mathbf{c}_{h}\mathbf{d}_{wh},\ \mathbf{d}_{wh},\ 1\right]^{T}
$$

where $K_{i}$ and $I_{i}$ denote the extrinsic and intrinsic matrices of camera $i$, respectively.
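
A minimal NumPy sketch of this lifting step follows. It assumes 4×4 homogeneous matrices, with $I_i$ as the intrinsic matrix and $K_i$ as the world-to-camera extrinsic matrix, and a depth map that has already been decoded from depth bins into metric depth. The function name `lift_proposals` and the nearest-pixel depth lookup at the box center are illustrative choices, not the released Far3D code.

```python
import numpy as np

def lift_proposals(centers, scores, depth_map, intrinsic, extrinsic, tau=0.1):
    """Back-project reliable 2D box centers to 3D proposal centers (illustrative).

    centers:   (N, 2) box centers (c_w, c_h) in pixels
    scores:    (N,) 2D confidence scores
    depth_map: (H, W) per-pixel metric depth (assumed already decoded from bins)
    intrinsic: (4, 4) homogeneous intrinsic matrix I_i
    extrinsic: (4, 4) homogeneous world-to-camera extrinsic matrix K_i
    Returns (N_kept, 3) 3D centers and the boolean keep mask.
    """
    keep = scores > tau                               # drop low-quality proposals
    c = centers[keep]
    h, w = depth_map.shape
    # Nearest-pixel depth lookup at each box center (clipped to the image).
    cols = np.clip(np.round(c[:, 0]).astype(int), 0, w - 1)
    rows = np.clip(np.round(c[:, 1]).astype(int), 0, h - 1)
    d = depth_map[rows, cols]
    # Homogeneous vector [c_w * d, c_h * d, d, 1]^T, one column per proposal.
    uvd = np.stack([c[:, 0] * d, c[:, 1] * d, d, np.ones_like(d)], axis=0)
    # c_3d = K_i^{-1} I_i^{-1} [...]: pixels -> camera frame -> world frame.
    pts = np.linalg.inv(extrinsic) @ np.linalg.inv(intrinsic) @ uvd
    return pts[:3].T, keep
```

With an identity extrinsic and a focal length of 100 px centered at (50, 50), a center at pixel (150, 50) with depth 10 lifts to (10, 0, 10), and proposals scoring below `tau` are discarded before lifting.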

After obtaining projected 3D proposals, we encode them into 3D adaptive queries as follows,

$$
\mathbf{Q}_{pos}=\mathrm{PosEmbed}(\mathbf{c}_{3d})
$$

$$
\mathbf{Q}_{sem}=\mathrm{SemEmbed}(\mathbf{z}_{2d},\mathbf{s}_{2d})
$$

$$
\mathbf{Q}=\mathbf{Q}_{pos}+\mathbf{Q}_{sem}
$$

where $\mathbf{Q}_{pos}$ and $\mathbf{Q}_{sem}$ denote the positional embedding and semantic embedding, respectively. $\mathbf{z}_{2d}$, sampled from $\mathbf{F}$, is the semantic context at position $(\mathbf{c}_{w},\mathbf{c}_{h})$, and $\mathbf{s}_{2d}$ is the confidence score of the 2D box. $\mathrm{PosEmbed}(\cdot)$ consists of a sinusoidal transformation (Vaswani et al. 2017) and an MLP, while $\mathrm{SemEmbed}(\cdot)$ is another MLP.
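
The query construction can be sketched in NumPy as below. This sketch rests on several assumptions. The sinusoidal encoding follows the common DETR/PETR-style per-coordinate form. Both embeddings are two-layer ReLU MLPs with randomly initialized weights, and the score is appended to the sampled feature as one extra channel. The names `sinusoidal`, `init_mlp`, `adaptive_queries`, and `build_decoder_queries` are hypothetical. Any coordinate normalization Far3D applies before the encoding is not specified here and is omitted.

```python
import numpy as np

def sinusoidal(x, num_feats=32, temperature=10000.0):
    """Per-coordinate sine/cosine encoding: (N, 3) -> (N, 3 * num_feats)."""
    dim_t = temperature ** (2 * (np.arange(num_feats) // 2) / num_feats)
    pos = x[..., None] / dim_t                                   # (N, 3, num_feats)
    pos = np.concatenate([np.sin(pos[..., 0::2]), np.cos(pos[..., 1::2])], axis=-1)
    return pos.reshape(x.shape[0], -1)

def init_mlp(rng, d_in, d_hidden, d_out):
    """Random weights for a two-layer MLP (hypothetical initialization)."""
    return (rng.normal(0.0, 0.02, (d_in, d_hidden)), np.zeros(d_hidden),
            rng.normal(0.0, 0.02, (d_hidden, d_out)), np.zeros(d_out))

def mlp(x, w1, b1, w2, b2):
    """Two-layer MLP with a ReLU in between."""
    return np.maximum(x @ w1 + b1, 0.0) @ w2 + b2

def adaptive_queries(c3d, z2d, s2d, params):
    """Q = PosEmbed(c_3d) + SemEmbed(z_2d, s_2d)."""
    q_pos = mlp(sinusoidal(c3d), *params["pos"])                 # positional embedding
    sem_in = np.concatenate([z2d, s2d[:, None]], axis=1)          # sampled feature + 2D score
    q_sem = mlp(sem_in, *params["sem"])                          # semantic embedding
    return q_pos + q_sem

def build_decoder_queries(adaptive, global_queries):
    """Concatenate per-frame adaptive queries with the learned global queries."""
    return np.concatenate([adaptive, global_queries], axis=0)
```

For example, with 16-channel sampled features and a 64-dimensional query space, `params = {"pos": init_mlp(rng, 96, 64, 64), "sem": init_mlp(rng, 17, 64, 64)}` turns five proposals into five 64-dimensional queries. These queries are then stacked on top of the global queries before entering the decoder.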

Lastly, the proposed 3D adaptive queries are concatenated with initialized global queries, and fed to subsequent transformer layers in the decoder.

3.3 Perspective-aware Aggregation

Existing sparse query-based approaches usually adopt a single-level feature map for computational efficiency (e.g., StreamPETR). However, a single feature level is not optimal for object queries at all ranges. For example, small distant objects require high-resolution features for precise localization, while high-level features are better suited for large close objects. To overcome this limitation, we propose perspective-aware aggregation, which enables efficient feature interactions across different scales and views.

Inspired by the deformable attention mechanism (Zhu et al. 2020), we apply a 3D spatial deformable attention that consists of 3D offset sampling followed by view transformation. Formally, we first equip the image features $\mathbf{F}$ with camera information, including the intrinsic parameters $\mathbf{I}$ and extrinsic parameters $\mathbf{K}$. A squeeze-and-excitation block (Hu, Shen, and Sun 2018) is used to explicitly enrich the features. Given the enhanced features $\mathbf{F}^{\prime}$, we employ 3D deformable attention instead of the global attention used in the PETR series (Liu et al. 2022a,b; Wang et al. 2023a). For each query reference point in 3D space, the model learns $M$ sampling offsets around it and projects the resulting sampling points into different 2D scales and views:

$$
\mathbf{P}_{q}^{2d}=\mathbf{I}\cdot\mathbf{K}\cdot\left(\mathbf{P}_{q}^{3d}+\Delta\mathbf{P}_{q}^{3d}\right)
$$

where $\mathbf{P}_{q}^{3d}$ and $\Delta\mathbf{P}_{q}^{3d}$ are the 3D reference point and the learned offsets for query $q$, respectively, and $\mathbf{P}_{q}^{2d}$ denotes the projected 2D reference points at different scales and views. For simplicity, we omit the subscripts for scales and views.
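
A NumPy sketch of this projection follows. It assumes homogeneous 4×4 matrices, with $\mathbf{K}$ mapping world to camera coordinates and $\mathbf{I}$ mapping camera coordinates to pixels. The equation leaves the perspective division implicit, so the sketch divides by depth. It then normalizes by a given image size (a hypothetical `img_w`, `img_h`) so that the same coordinates can index every FPN level, and it marks points behind the camera or outside the image as invalid. The function name is illustrative.

```python
import numpy as np

def project_sampling_points(ref_3d, offsets, intrinsics, extrinsics, img_w, img_h, eps=1e-5):
    """P_q^{2d} = I . K . (P_q^{3d} + dP_q^{3d}) for every view, plus a perspective divide.

    ref_3d:     (Q, 3) reference points P_q^{3d}
    offsets:    (Q, M, 3) learned offsets dP_q^{3d}
    intrinsics: (V, 4, 4) homogeneous intrinsic matrices I
    extrinsics: (V, 4, 4) homogeneous world-to-camera extrinsic matrices K
    Returns normalized coords (V, Q, M, 2) and a visibility mask (V, Q, M).
    """
    pts = ref_3d[:, None, :] + offsets                                  # (Q, M, 3)
    pts_h = np.concatenate([pts, np.ones(pts.shape[:-1] + (1,))], axis=-1)
    proj = intrinsics @ extrinsics                                      # (V, 4, 4): I . K
    cam = np.einsum("vij,qmj->vqmi", proj, pts_h)                       # (V, Q, M, 4)
    depth = cam[..., 2]
    uv = cam[..., :2] / np.maximum(depth, eps)[..., None]               # pixel coords
    uv = uv / np.array([img_w, img_h], dtype=float)                     # scale-independent [0, 1]
    inside = np.all((uv >= 0.0) & (uv <= 1.0), axis=-1)
    return uv, (depth > eps) & inside                                   # in front and in image
```

Normalized coordinates let a single projection serve all feature levels; each level only needs to rescale by its own width and height when sampling.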

Next, 3D object queries interact with multi-scale features sampled from $\mathbf{F}^{\prime}$ at the 2D reference points $\mathbf{P}_{q}^{2d}$. In this way, diverse features from various views and scales are aggregated into the 3D queries according to their relative importance.
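
The aggregation step can be sketched as deformable-attention-style sampling. Each view and level is bilinearly sampled at the normalized points, and the samples are combined in a weighted sum. Several details here are assumptions rather than details confirmed by the text. The attention logits are passed in directly (in practice they would be predicted from the query). The softmax is taken jointly over views, levels, and points, with invisible points masked out. Only a single attention head is used. The names `bilinear_sample` and `aggregate_query` are hypothetical.

```python
import numpy as np

def bilinear_sample(feat, uv):
    """Sample a (C, H, W) map at normalized coords uv (N, 2); returns (N, C)."""
    _, h, w = feat.shape
    uv = np.clip(uv, 0.0, 1.0)                 # keep indices inside the map
    x = uv[:, 0] * (w - 1)
    y = uv[:, 1] * (h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return (top * (1 - wy) + bottom * wy).T

def aggregate_query(levels, uv, valid, logits):
    """Aggregate features for one query over V views, L levels and M points.

    levels: list of L arrays, each (V, C, H_l, W_l)
    uv:     (V, M, 2) normalized sampling coords for this query
    valid:  (V, M) visibility mask
    logits: (V, L, M) attention logits (assumed given here)
    """
    num_channels = levels[0].shape[1]
    if not valid.any():                                      # query visible in no view
        return np.zeros(num_channels)
    masked = np.where(valid[:, None, :], logits, -np.inf)    # hide invisible points
    weights = np.exp(masked - masked.max())
    weights /= weights.sum()                                 # joint softmax
    out = np.zeros(num_channels)
    for l, feat in enumerate(levels):
        for v in range(feat.shape[0]):
            sampled = bilinear_sample(feat[v], uv[v])        # (M, C)
            out += (weights[v, l][:, None] * sampled).sum(axis=0)
    return out
```

Because the weights are learned per level, a distant query can put most of its mass on the high-resolution level while a close query favors coarser levels, which matches the motivation given earlier in this section.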

3.4 Range-modulated 3D Denoising

3D object queries at different distances have different regression difficulties. This differs from 2D queries, which existing 2D denoising methods such as DN-DETR (Li et al. 2022a) usually treat equally. The difficulty discrepancy stems from query density and error propagation. On the one hand, queries corresponding to distant objects are matched less often than those of close objects. On the other hand, small errors in 2D proposals can be amplified when 2D priors are lifted to 3D adaptive queries, as illustrated in Fig. 2, and this effect grows with object distance. As a result, some query proposals near GT boxes can be regarded as noisy candidates, whereas others with notable deviations should be treated as negatives. We therefore aim to recall the potential positive ones and directly reject the clear negative ones, by developing a method called range-modulated 3D denoising.

Concretely, we construct noisy queries from GT objects by simultaneously adding positive and negative groups. For both types, random noise is applied according to object positions and sizes to facilitate denoising learning in long-range perception. Formally, we define the positions of noisy queries as:

$$
\tilde{\mathbf{P}}=\mathbf{P}_{GT}+\alpha f_{p}(\mathbf{S}_{GT})+(1-\alpha)f_{n}(\mathbf{P}_{GT})
$$

where $\alpha\in\{0,1\}$ corresponds to the generation of negative and positive queries, respectively. $\mathbf{P}_{GT},\mathbf{S}_{GT}\in\mathbb{R}^{3}$ represent the 3D center $(x,y,z)$ and box scale $(w,l,h)$ of the GT, and $\tilde{\mathbf{P}}$ denotes the noisy coordinates. We use the functions $f_{p}$ and $f_{n}$ to encode position-aware noise for positive and negative samples.
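
Because $f_p$ and $f_n$ are not defined in this excerpt, the sketch below uses hypothetical stand-ins. The stand-in `f_pos` draws a uniform offset bounded by half the box scale. The stand-in `f_neg` draws a random-direction offset whose length is a fraction of the object's BEV distance, so perturbations grow with range. Only the combination rule $\tilde{\mathbf{P}}=\mathbf{P}_{GT}+\alpha f_{p}(\mathbf{S}_{GT})+(1-\alpha)f_{n}(\mathbf{P}_{GT})$ comes from the text. The ratios `0.5`, `0.1`, `0.3` and the `min_dist` floor are illustrative, and the sketch does not enforce that negatives fall outside the positive region.

```python
import numpy as np

def f_pos(scale, rng, ratio=0.5):
    """Hypothetical f_p: uniform offset of at most ratio * box scale per axis."""
    return rng.uniform(-1.0, 1.0, scale.shape) * ratio * scale

def f_neg(center, rng, lo=0.1, hi=0.3, min_dist=1.0):
    """Hypothetical f_n: random-direction offset whose length is a fraction of
    the object's BEV distance, so distant objects get larger perturbations."""
    dist = np.maximum(np.linalg.norm(center[:, :2], axis=1, keepdims=True), min_dist)
    direction = rng.normal(size=center.shape)
    direction /= np.linalg.norm(direction, axis=1, keepdims=True) + 1e-9
    return direction * rng.uniform(lo, hi, (center.shape[0], 1)) * dist

def noisy_queries(p_gt, s_gt, num_groups, rng):
    """P~ = P_GT + alpha * f_p(S_GT) + (1 - alpha) * f_n(P_GT), alpha in {0, 1}.

    Each group holds one positive (alpha = 1) and one negative (alpha = 0) copy
    of every GT center. Returns (2 * num_groups * N, 3) centers and alpha labels.
    """
    centers, labels = [], []
    for _ in range(num_groups):
        for alpha in (1, 0):
            noise = alpha * f_pos(s_gt, rng) + (1 - alpha) * f_neg(p_gt, rng)
            centers.append(p_gt + noise)
            labels.append(np.full(len(p_gt), alpha))
    return np.concatenate(centers), np.concatenate(labels)
```

During training, the decoder outputs for positive copies would be supervised to regress the GT box, while negative copies would be supervised as background. This mirrors the recover-or-reject objective described in the introduction.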
