UCPhrase: Unsupervised Context-aware Quality Phrase Tagging

UCPhrase: 无监督上下文感知的高质量短语标注

ABSTRACT

摘要

Identifying and understanding quality phrases from context is a fundamental task in text mining. The most challenging part of this task arguably lies in uncommon, emerging, and domain-specific phrases. The infrequent nature of these phrases significantly hurts the performance of phrase mining methods that rely on sufficient phrase occurrences in the input corpus. Context-aware tagging models, though not restricted by frequency, heavily rely on domain experts for either massive sentence-level gold labels or handcrafted gazetteers. In this work, we propose UCPhrase, a novel unsupervised context-aware quality phrase tagger. Specifically, we induce high-quality phrase spans as silver labels from consistently co-occurring word sequences within each document. Compared with typical context-agnostic distant supervision based on existing knowledge bases (KBs), our silver labels are deeply rooted in the input domain and context, thus having unique advantages in preserving contextual completeness and capturing emerging, out-of-KB phrases. Training a conventional neural tagger based on silver labels usually faces the risk of overfitting phrase surface names. Alternatively, we observe that the contextualized attention maps generated from a Transformer-based neural language model effectively reveal the connections between words in a surface-agnostic way. Therefore, we pair such attention maps with the silver labels to train a lightweight span prediction model, which can be applied to new input to recognize (unseen) quality phrases regardless of their surface names or frequency. Thorough experiments on various tasks and datasets, including corpus-level phrase ranking, document-level keyphrase extraction, and sentence-level phrase tagging, demonstrate the superiority of our design over state-of-the-art pre-trained, unsupervised, and distantly supervised methods.

从上下文中识别和理解高质量短语是文本挖掘的一项基础任务。该任务最具挑战性的部分在于罕见、新兴和领域特定短语的识别。这些短语的低频特性严重影响了依赖语料库中充分出现次数的传统短语挖掘方法性能。虽然基于上下文感知的标注模型不受频率限制,但其严重依赖领域专家提供大量句子级黄金标注或手工编纂的术语表。

本研究提出UCPhrase——一种新型无监督上下文感知高质量短语标注器。具体而言,我们通过文档内部持续共现的词语序列诱导出高质量短语跨度作为银标注。相比传统基于现有知识库(KB)的上下文无关远程监督方法,我们的银标注深度扎根于输入领域和上下文,在保持上下文完整性和捕捉新兴、知识库外短语方面具有独特优势。

基于银标注训练常规神经标注器通常面临过度拟合短语表面形式的风险。我们观察到,基于Transformer的神经语言模型生成的上下文注意力图能以表面无关的方式有效揭示词语关联。因此,我们将此类注意力图与银标注结合,训练了一个轻量级跨度预测模型。该模型可应用于新输入数据,实现不受表面形式或频率影响的(未见过)高质量短语识别。

在包括语料库级短语排序、文档级关键词抽取和句子级短语标注等多项任务和数据集上的全面实验表明,我们的设计优于当前最先进的预训练、无监督和远程监督方法。

1 INTRODUCTION

1 引言

Quality phrases refer to informative multi-word sequences that “appear consecutively in the text, forming a complete semantic unit in certain contexts or the given document” [10]. Identifying and understanding quality phrases from context is a fundamental task in text mining. Automated quality phrase tagging serves as a cornerstone in a broad spectrum of downstream applications, including but not limited to entity recognition [35], text classification [1], and information retrieval [5].

优质短语 (quality phrases) 指那些"在文本中连续出现,在特定语境或给定文档中形成完整语义单元" [10] 的信息性多词序列。从上下文中识别和理解优质短语是文本挖掘的基础任务。自动化优质短语标注作为众多下游应用的基石,包括但不限于实体识别 [35]、文本分类 [1] 和信息检索 [5]。

The most challenging open problem in this task is how to recognize uncommon, emerging phrases, especially in specific domains. These phrases are essential in the sense of their significant semantic meanings and the large volume—following a typical Zipfian distribution, uncommon phrases can add up to a significant portion of quality phrases [37]. Moreover, emerging phrases are critical in understanding domain-specific documents, such as scientific papers, since new terminologies often come along with transformative innovations. However, mining such sparse long-tail phrases is nontrivial, since a frequency threshold has long ruled them out in traditional phrase mining methods [6, 8, 23, 25, 34] due to the lack of reliable frequency-related corpus-level signals (e.g., the mutual information of its sub-ngrams). For instance, AutoPhrase [34] only recognizes phrases with at least 10 occurrences by default.

该任务中最具挑战性的开放性问题是如何识别不常见的新兴短语,尤其在特定领域内。这些短语的重要性体现在其显著的语义含义和庞大的数量上——根据典型的齐夫分布 (Zipfian distribution) ,不常见短语可能占据高质量短语的很大比例 [37]。此外,新兴短语对于理解领域特定文档(如科学论文)至关重要,因为新术语往往伴随着变革性创新出现。然而,挖掘这类稀疏的长尾短语并非易事,由于缺乏可靠的频率相关语料库级信号(例如其子n-gram的互信息),传统短语挖掘方法 [6, 8, 23, 25, 34] 长期通过频率阈值将其排除在外。例如,AutoPhrase [34] 默认仅识别出现至少10次的短语。

For infrequent phrases, the tagging process largely relies on local context. Recent advances in neural language models have unleashed the power of sentence-level contextualized features in building chunking- and tagging-based models [27, 36]. These context-aware models can even recognize unseen phrases from new input texts, and are thus no longer restricted by frequency. However, training a domain-specific tagger of reasonably high quality requires expensive, hard-to-scale effort from domain experts for massive sentence-level gold labels or handcrafted gazetteers.

对于低频短语,标注过程主要依赖局部上下文。近年来,神经语言模型的进展释放了句子级上下文特征在构建基于分块和标注模型中的潜力 [27, 36]。这些上下文感知模型甚至能识别新输入文本中未出现的短语,从而不再受频率限制。然而,训练一个质量尚可的领域专用标注器,需要领域专家付出昂贵且难以扩展的努力,以获取大量句子级黄金标注或手工编纂的词典。

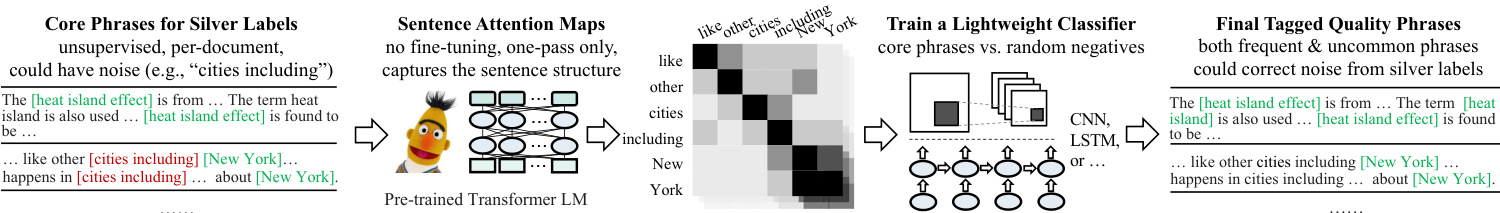

In this work, we propose UCPhrase, a novel unsupervised context-aware quality phrase tagger. It first induces high-quality silver labels directly from the corpus under the unsupervised setting, and then trains a tailored Transformer-based neural model that can recognize quality phrases in new sentences. Figure 1 presents an overview of UCPhrase. The two major steps are detailed as follows.

在本工作中,我们提出了UCPhrase,一种新型的无监督上下文感知质量短语标注器。它首先在无监督设置下直接从语料库中生成高质量的银标签,然后训练一个定制的基于Transformer的神经模型,用于识别新句子中的质量短语。图1展示了UCPhrase的概览。两个主要步骤如下。

By imitating the reading process of humans, we derive supervision directly from the input corpus. Given a document, human readers can quickly recognize new phrases or terminologies from the consistently used word sequences within the document. The “document” here refers to a collection of sentences centered on the same topic, such as sentences from an abstract of a scientific paper or tweets mentioning the same hashtag. Inspired by this observation, we propose to extract core phrases from each document, which are maximal contiguous word sequences that appear in the document more than once. “Maximal” here means that if one expands the word sequence further towards the left or right, its frequency within the document will drop. To avoid uninformative phrases (e.g., “of a”), we conduct simple stopword filtering before finalizing the silver labels. Note that our silver labels are generated on a per-document basis. Therefore, compared with typical context-agnostic distant supervision based on existing knowledge bases or dictionaries [34–36], our supervision is deeply rooted in the input domain and context, thus having unique advantages in preserving the contextual completeness of matched spans and capturing many more emerging phrases.

通过模仿人类的阅读过程,我们直接从输入语料中获取监督信号。给定一篇文档,人类读者能快速从文档中反复出现的连贯词序列里识别出新短语或术语。这里的"文档"指围绕同一主题的句子集合,例如科学论文摘要中的句子、提及同一话题标签的推文等。受此启发,我们提出从每篇文档中提取核心短语——这些是在文档中出现超过一次的极大连续词序列。"极大"意味着若向左右任意方向扩展该词序列,其在当前文档中的出现频率就会下降。为避免无意义短语(如"of a"),我们在确定银标签前进行了简单的停用词过滤。值得注意的是,我们提出的银标签生成遵循逐文档处理方式。因此,相比基于现有知识库或词典的典型上下文无关远程监督[34-36],我们的监督信号深植于输入领域和上下文环境,在保持匹配文本片段上下文完整性、捕获更多新兴短语方面具有独特优势。

Figure 1: An overview of our UCPhrase: unsupervised context-aware quality phrase tagging.

图 1: 我们的 UCPhrase 方法概览:无监督上下文感知质量短语标注。

We further design a tailored neural tagger to fit our silver labels better. Training a conventional neural tagger based on silver labels usually faces a high risk of overfitting the observed labels [24]. With access to word-identifiable embedding features, it is easy for the model to achieve nearly zero training error by rigidly memorizing the surface names of training labels. Alternatively, we find that the contextualized attention distributions generated from a Transformer-based neural language model can capture the connections between words in a surface-agnostic way [19]. Intuitively, the attention maps of quality phrases should reveal patterns distinct from those of ordinary word spans. Moreover, attention-based features block direct access to the surface names of training labels and force the model to learn more general context patterns. Therefore, we pair such surface-agnostic features based on attention maps with the silver labels to train a neural tagging model, which can be applied to new input to recognize (unseen) quality phrases. Specifically, given an unlabeled sentence of $N$ words, we first encode the sentence with a pre-trained Transformer-based language model and obtain the attention maps as features. The $N\times N$ matrices from different Transformer layers and attention heads can be viewed as a multi-channel image to be classified. A lightweight CNN-based classifier is then trained to distinguish quality phrases from randomly sampled negative spans.

我们进一步设计了一个定制的神经标注器,以更好地适配我们的银标签。基于银标签训练传统神经标注器通常会面临过拟合已观测标签的高风险 [24]。借助词可识别的嵌入特征,模型很容易通过死记硬背训练标签的表面名称来实现近乎零的训练误差。相反,我们发现基于Transformer的神经语言模型生成的上下文注意力分布能以表面无关的方式捕捉词间关联 [19]。直观上,优质短语的注意力图应展现出与普通词跨度截然不同的模式。此外,基于注意力的特征阻断了直接访问训练标签表面名称的途径,迫使模型学习更通用的上下文模式。因此,我们将这种基于注意力图的表面无关特征与银标签配对,训练了一个可应用于新输入以识别(未见过的)优质短语的神经标注模型。具体而言,给定一个包含$N$个词的未标注句子,我们首先用预训练的基于Transformer的语言模型编码该句子,并获取注意力图作为特征。来自不同Transformer层和注意力头的$N×N$矩阵可视为待分类的多通道图像。随后训练一个轻量级基于CNN的分类器,用于从随机采样的负跨度中区分优质短语。

Thorough experiments on various tasks and datasets, including corpus-level phrase ranking, document-level keyphrase extraction, and sentence-level phrase tagging, demonstrate the superiority of our design over state-of-the-art unsupervised, distantly supervised methods, and pre-trained off-the-shelf tagging models. It is noteworthy that our trained model is robust to the noise in the core phrases—our case studies in Section 4.7 show that the model can identify inferior training labels by assigning extremely low scores.

在多种任务和数据集上的全面实验,包括语料库级短语排序、文档级关键词抽取和句子级短语标注,证明了我们的设计优于当前最先进的无监督方法、远程监督方法以及预训练的现成标注模型。值得注意的是,我们训练的模型对核心短语中的噪声具有鲁棒性——第4.7节的案例研究表明,该模型能通过分配极低分数来识别劣质训练标签。

Efficiency-wise, thanks to the rich semantic and syntactic knowledge in the pre-trained language model, we can simply use the generated attention maps as informative features without fine-tuning the language model. Hence we only need to update the lightweight classification model during training, making the training process as fast as one inference pass of the language model through the corpus, with limited resource consumption.

效率方面,得益于预训练语言模型中丰富的语义和句法知识,我们可以直接使用生成的注意力图作为信息特征,无需微调语言模型。因此训练过程中只需更新轻量级分类模型,使得训练过程与语言模型在语料库上的一次推理过程同样快速,且资源消耗有限。

To the best of our knowledge, UCPhrase is the first unsupervised context-aware quality phrase tagger. It enjoys the rich knowledge from the pre-trained neural language models. The learned phrase tagger works efficiently and effectively without reliance on human annotations, existing knowledge bases, or phrase dictionaries. We summarize our key contributions as follows:

据我们所知,UCPhrase 是首个无监督上下文感知的高质量短语标注工具。它充分利用了预训练神经语言模型中的丰富知识。学习得到的短语标注器无需依赖人工标注、现有知识库或短语词典即可高效且有效地工作。我们的主要贡献总结如下:

• We propose to mine silver labels that are deeply rooted in the input domain and context by recognizing core phrases, i.e., maximal word sequences that occur consistently within each document.

• We propose to replace the conventional contextualized word representations with surface-agnostic attention maps generated by pre-trained Transformer-based language models to alleviate the risk of overfitting silver labels.

• We conduct extensive experiments, ablation studies, and case studies to compare UCPhrase with state-of-the-art unsupervised and distantly supervised methods, as well as pre-trained off-the-shelf tagging models. The results verify the superiority of our method.

• 我们提出通过识别核心短语(即每篇文档中持续出现的最大词序列)来挖掘深植于输入领域和上下文中的银标签。

• 我们提出用预训练基于Transformer的语言模型生成的与表面形式无关的注意力图替代传统的上下文词表示,以减轻过拟合银标签的风险。

• 我们进行了大量实验、消融研究和案例分析,将UCPhrase与最先进的无监督方法、远程监督方法以及预训练现成标注模型进行对比。结果验证了我们方法的优越性。

2 PROBLEM DEFINITION

2 问题定义

Given a sequence of words $\left[w_{1},\ldots,w_{N}\right]$ , a quality phrase is a contiguous span of words $[w_{i},\dots,w_{i+k}]$ that form a complete and informative semantic unit in context. Though some studies also view unigrams as potential phrases, in this work, we focus on multi-word phrases $(k>0)$ , which are more informative, yet more challenging to get due to both diversity and sparsity.

给定一个词序列 $\left[w_{1},\ldots,w_{N}\right]$,质量短语是指构成完整且信息丰富语义单元的连续词跨度 $[w_{i},\dots,w_{i+k}]$。尽管部分研究也将单字词视为潜在短语,但本工作专注于多词短语 $(k>0)$,这类短语信息量更大,却因多样性和稀疏性而更难获取。

To effectively capture phrases with potential overlaps, e.g., “information extraction” in “information extraction systems”, we adopt the span prediction framework, where each possible span in the sentence is assigned a binary label. To avoid a quadratic growth in the number of candidate spans, we follow previous work [25, 34] and set a maximum span length $K$. We also explore alternative classifiers based on the sequence labeling framework in Section 3.

为有效捕捉可能存在重叠的短语(例如“信息抽取系统”中的“信息抽取”),我们采用跨度预测框架,为句子中每个可能的跨度分配二元标签。为避免候选跨度数量呈二次增长,我们遵循先前研究 [25, 34] 设置最大跨度长度 $K$。第3节还将探讨基于序列标注框架的替代分类器。
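The effect of the length cap $K$ can be illustrated with a minimal sketch. The function name `candidate_spans` and the example sentence are illustrative assumptions, not part of UCPhrase; the sketch only enumerates multi-word spans ($k>0$) of at most $K$ words, so the number of candidates grows roughly as $N\cdot K$ instead of $N^{2}$.

```python
def candidate_spans(num_words, max_len):
    """Enumerate multi-word spans (start, end), end inclusive, of at most max_len words."""
    spans = []
    for start in range(num_words):
        # end > start guarantees at least two words (k > 0);
        # end < start + max_len caps the span at max_len words.
        for end in range(start + 1, min(start + max_len, num_words)):
            spans.append((start, end))
    return spans


words = "we study information extraction systems".split()
spans = candidate_spans(len(words), max_len=3)
for start, end in spans:
    print(" ".join(words[start:end + 1]))
```

Overlapping candidates such as "information extraction" and "information extraction systems" both appear in the output, which is what lets a span classifier label each of them independently.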

3 UCPHRASE: METHODOLOGY

3 UCPHRASE: 方法论

Figure 1 presents an overview of UCPhrase. As an unsupervised method, UCPhrase first mines core phrases directly from each document as silver labels and extracts surface-agnostic attention features with a pre-trained language model. A lightweight classifier is then trained with silver labels and randomly sampled negative labels. Algorithm 1 shows the detailed training process.

图 1: UCPhrase 概述。作为一种无监督方法,UCPhrase 首先直接从每篇文档中挖掘核心短语作为银标签 (silver labels) ,并使用预训练语言模型提取与表面形式无关的注意力特征。随后通过银标签和随机采样的负标签 (negative labels) 训练一个轻量级分类器。算法 1 展示了详细的训练流程。

3.1 Silver Label Generation

3.1 银标签生成

As the first step, we seek to collect high-quality phrases in the input corpus following an unsupervised way, which will be our silver labels for the tagging model training. A common practice for automated label fetching is to conduct a context-agnostic matching between the corpus and a given quality phrase list, either mined

第一步,我们尝试以无监督方式从输入语料库中收集高质量短语,这些短语将作为标注模型训练的银标准标签。自动获取标签的常见做法是在语料库与给定质量短语列表之间进行上下文无关匹配,这些短语列表可通过挖掘...

Distant Supervision based on Wiki Entities

基于维基实体的远程监督

Doc1: … study about heat [island effect] … The heat [island effect] arises because the buildings…of their heat [island effect]…

Doc1: … 关于热岛效应 (heat island effect) 的研究 … 热岛效应的产生是由于建筑物…的热岛效应…

Doc2: … propose to extract core phrases … robust to potential noise in core phrases … the surface names of core phrases…

Doc2: …提出提取核心短语…对核心短语中的潜在噪声具有鲁棒性…核心短语的表面名称…

Core Phrase Mining

核心短语挖掘

Doc1: …a study about [heat island effect]… The [heat island effect] arises because the buildings…of their [heat island effect]…

Doc1: …一项关于[热岛效应]的研究… [热岛效应]的产生是由于建筑物…的[热岛效应]…

Doc2: …propose to extract [core phrases]… robust to potential noise in [core phrases]… the surface names of [core phrases]…

文档2:…提出提取[核心短语]…对[核心短语]中的潜在噪声具有鲁棒性…[核心短语]的表面名称…

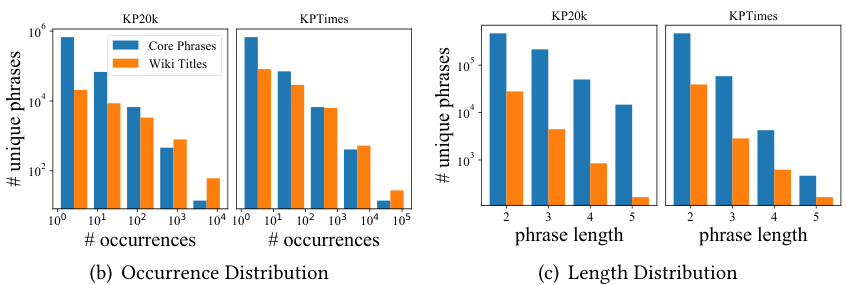

Figure 2: Comparing core phrases with context-agnostic distant supervision. (a) An illustrative example with context. Our core phrases preserve better contextual completeness and discover emerging new concepts introduced in the document. (b) Distributions of the generated silver labels with their occurrences in the corpus. The X-axis represents bins of phrase occurrences in the corpus. The Y-axis (exponential) represents the number of unique phrases in each bin. (c) Distributions of the generated silver labels with their lengths (# of words).

图 2: 核心短语与上下文无关的远距离监督对比。(a) 带上下文的示例。我们的核心短语保持了更好的上下文完整性,并发现了文档中引入的新兴概念。(b) 生成的银标签在语料库中出现次数的分布。X轴表示短语在语料库中出现次数的区间。Y轴(指数)表示每个区间内独特短语的数量。(c) 生成的银标签长度(单词数)的分布。

Algorithm 1: UCPhrase: unsupervised model training

算法 1: UCPhrase: 无监督模型训练

with unsupervised models or collected from an existing knowledge base (KB). Such methods, as we show later, can suffer from incomplete labels due to the negligence of context.

使用无监督模型或从现有知识库(KB)收集。正如我们稍后展示的,这类方法可能因忽略上下文而导致标签不完整。

On the contrary, based on the definition of phrases, we look for consistently used word sequences in context. We propose to treat documents as context and collect high-quality phrases directly from each document. The “document” here refers to a collection of sentences centered on the same topic, such as sentences from an abstract of a scientific paper and tweets mentioning the same hashtag. This way, we expect to preserve better contextual completeness that reflects the original writing intention.

相反,基于短语的定义,我们在上下文中寻找一致使用的词序列。我们建议将文档视为上下文,并直接从每篇文档中收集高质量短语。这里的"文档"指围绕同一主题的句子集合,例如科学论文摘要中的句子和提及相同话题标签的推文。通过这种方式,我们期望保留更好的上下文完整性,以反映原始写作意图。

We view a document $d_{m}$ as a contiguous word sequence $[w_{1},\ldots,w_{N}]$ and then mine max contiguous sequential patterns. A valid pattern here is a word span $[w_{i},\dots,w_{j}]$ that appears more than once in the input sequence. One can easily adjust the frequency threshold to balance the quality and quantity of valid patterns. In this work, we simply use the minimum requirement of two occurrences without further tuning, and find that it works well for both short documents like paper abstracts and long documents like news reports. To preserve completeness, we only keep max patterns, i.e., those that are not sub-patterns of any other valid pattern. Uninformative patterns like “of a” are removed with a stopword list widely used by previous work [25, 34]. We treat the remaining max patterns as core phrases of document $d_{m}$, and add them to the positive training samples $\mathcal{P}_{m}^{+}$. An equal number of negative samples are randomly drawn from the remaining spans in $d_{m}$, denoted as $\mathcal{P}_{m}^{-}$.

我们将文档 $d_{m}$ 视为连续的词序列 $[w_{1},\ldots,w_{N}]$,然后挖掘最大连续序列模式。这里的有效模式是指词片段 $[w_{i},\dots,w_{j}]$ 在输入序列中出现超过一次。可以轻松调整频率阈值以平衡有效模式的质量和数量。在本工作中,我们简单地使用最低要求两次出现而不进行进一步调整,发现它对于论文摘要等短文档和新闻报道等长文档都效果良好。为了保持完整性,我们只保留那些不是其他有效模式子模式的最大模式。通过使用先前工作广泛采用的停用词列表 [25, 34],我们移除了诸如“of a”之类的无信息模式。我们将剩余的最大模式视为文档 $d_{m}$ 的核心短语,并将它们添加到正训练样本 $\mathcal{P}_{m}^{+}$ 中。从 $d_{m}$ 的剩余片段中随机抽取同等数量的负样本,记为 $\mathcal{P}_{m}^{-}$。
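The mining step described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the authors' implementation: the function name `mine_core_phrases`, the `max_len` cap, the toy stopword list, and the rule "drop patterns made only of stopwords" are all hypothetical choices made for the example. The paper only states that a widely used stopword list removes uninformative patterns such as "of a".

```python
from collections import Counter

# Toy stopword list (assumption); the paper uses a list from previous work.
STOPWORDS = {"a", "an", "the", "of", "in", "to", "and", "is"}


def mine_core_phrases(tokens, min_freq=2, max_len=5, stopwords=STOPWORDS):
    """Return max contiguous patterns that occur at least min_freq times in one document."""
    # Count every contiguous n-gram with 2..max_len words.
    counts = Counter()
    for n in range(2, max_len + 1):
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    valid = {gram for gram, c in counts.items() if c >= min_freq}

    def contains(longer, shorter):
        m = len(shorter)
        return any(longer[i:i + m] == shorter for i in range(len(longer) - m + 1))

    # Keep only max patterns: valid patterns that are not sub-patterns
    # of any longer valid pattern.
    maximal = {g for g in valid
               if not any(len(h) > len(g) and contains(h, g) for h in valid)}
    # Assumed filter: drop patterns consisting only of stopwords, e.g. ("of", "a").
    return {g for g in maximal if not all(w in stopwords for w in g)}


doc = ("a study about heat island effect and the size of a city . "
       "heat island effect arises from the shape of a building").split()
core = mine_core_phrases(doc)
print(core)
```

In this toy document, "heat island", "island effect", and "of a" all repeat, but only "heat island effect" survives: the first two are sub-patterns of it, and "of a" is removed by the stopword filter.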

Figure 2 compares the silver labels generated by our core phrases with those generated by distant supervision, which performs context-agnostic string matching against Wikipedia entities. In the real example in Figure 2(a), “heat island effect” is not a Wikipedia entity, but “island effect” is one. Distant supervision hence generates a flawed label by partially matching the real phrase. Similar examples are quite common, especially for compound phrases like “biomedical data mining”. Distant supervision tends to favor popular phrases and generate incomplete matches in context. On the contrary, our core phrase mining can generate labels with better contextual completeness. Core phrase mining can also dynamically capture concepts or expressions newly introduced in each document, such as the “core phrases” in this paper. Figure 2(b) confirms this by showing the distribution of unique phrases of the two types of silver labels, mined from the KP20k CS publication corpus and the KPTimes news corpus, with respect to their frequency. In particular, core phrase mining discovers more unique phrases with fewer than 10 occurrences in the corpus (30x on KP20k, 9x on KPTimes). As Figure 2(c) demonstrates, core phrases outnumber matched Wiki titles in all length ranges. Overall, core phrase mining discovers many more unique phrases than distant supervision (20x on KP20k, 6x on KPTimes).

图 2: 将我们生成的核心短语银标签与远程监督生成的标签进行对比。远程监督采用与上下文无关的维基百科实体字符串匹配方式。从图 2(a)的实际案例可见,"heat island effect"并非维基百科实体,但"island effect"是。因此远程监督通过部分匹配生成了错误标签。这种案例在复合短语(如"biomedical data mining")中尤为常见——远程监督会倾向流行短语并产生上下文不完整的匹配。相反,我们的核心短语挖掘能生成上下文更完整的标签,还能动态捕捉每篇文档新引入的概念或表述(如本文的"core phrases")。图 2(b)通过KP20k学术文献库和KPTimes新闻语料中两类银标签的唯一短语分布验证了这一点:核心短语挖掘在出现次数少于10次的低频短语上具有显著优势(KP20k语料30倍,KPTimes语料9倍)。如图 2(c)所示,核心短语在所有长度区间均超过匹配的维基标题数量。总体而言,核心短语挖掘发现的唯一短语数量远超远程监督(KP20k语料20倍,KPTimes语料6倍)。

Of course, the mined core phrases inevitably contain noise, since some random word combinations are used consistently in certain documents, e.g., “countries including”. Fortunately, since we collect core phrases from each document independently, such noisy labels do not spread to, or get amplified across, the entire corpus. In fact, among the tagged core phrases randomly sampled from the two datasets, the overall proportion of high-quality labels is over 90%. The large volume of reasonably high-quality silver labels provides a robust foundation for training a span classifier that learns general context patterns to distinguish noisy spans. As Section 4.7 shows, the final classifier can assign extremely low scores to false-positive phrases in the training labels.

当然,由于某些文档中持续使用的随机词汇组合(例如“countries including”),挖掘出的核心短语中也不可避免地存在噪声。幸运的是,由于我们独立地从每篇文档中收集核心短语,此类噪声标签不会扩散并放大到整个语料库。事实上,在从两个数据集中随机抽样的已标注核心短语中,高质量标签的总体占比超过90%。大量合理的高质量银标签为我们训练一个学习通用上下文模式以区分噪声片段的跨度分类器奠定了坚实基础。如第4.7节所示,最终分类器可以为训练标签中的假阳性短语分配极低分数。

In summary, document-level core phrase mining provides a simple and effective way to automatically fetch abundant contextaware silver labels of reasonably good quality without relying on external KBs. In ablation studies (Section 4.6) we show that models trained with such free silver labels can outperform the same models

总之,文档级核心短语挖掘提供了一种简单有效的方法,无需依赖外部知识库(KB)即可自动获取大量质量尚可的上下文感知银标签。消融研究(第4.6节)表明,使用这类免费银标签训练的模型性能可超越同等模型。

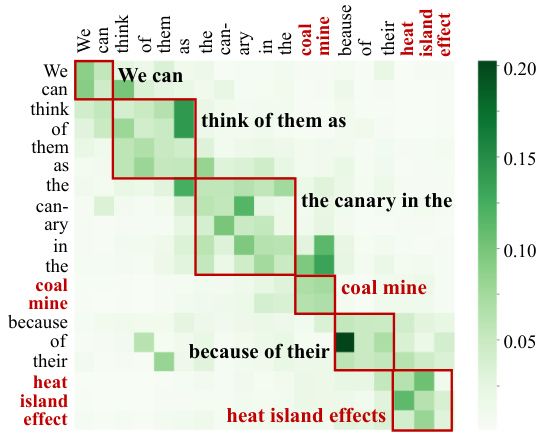

Figure 3: Illustration of the attention map generated by one of the pre-trained RoBERTa layers, averaged over all attention heads.

图 3: 预训练 RoBERTa 某一层生成的平均注意力图 (attention map) 可视化结果 (对所有注意力头取平均)。

trained with distant supervision.

通过远监督训练。

3.2 Surface-agnostic Feature Extraction

3.2 表面无关的特征提取

To build an effective context-aware tagger for quality phrases, in addition to labels, we need a contextualized feature representation for each span. Traditional word-identifiable features (e.g., contextualized embeddings) make it easy for a classification model to overfit the silver labels by rigidly memorizing the surface names of training labels. A degenerate name-matching model can easily achieve zero training error without really learning any useful, generalizable features.

为了构建一个有效的上下文感知标签器来识别优质短语,除了标签外,我们还需要为每个文本片段提取上下文特征表示。传统的可识别词特征(例如上下文嵌入)容易导致分类模型通过死记硬背训练标签的表面名称而过拟合银标签。一个退化的名称匹配模型即使没有学习到任何有用且可泛化的特征,也能轻松实现零训练误差。

In principle, the recognition of a phrase should depend on the role that it plays in the sentence. Kim et al. [19] show that the structural information of a sentence can be largely captured by its attention distribution. Therefore, we propose to obtain surface-agnostic features from the attention distributions generated by a pre-trained Transformer-based language model encoder (LM), such as BERT [7] and RoBERTa [26].

原则上,短语的识别应取决于其在句子中的作用。Kim等人[19]表明,句子的结构信息很大程度上可以通过其注意力分布来捕捉。因此,我们提出从预训练的基于Transformer的语言模型编码器(LM)(如BERT[7]和RoBERTa[26])生成的注意力分布中获取表面无关特征。

Given a sentence $[w_{1},\dots,w_{N}]$, we encode it with a language model pre-trained on a massive, unlabeled corpus from the same domain (e.g., the general domain or the scientific domain). Suppose this language model has $L$ layers, and each layer has $H$ attention heads. Each attention head $h$ in layer $l$ produces an attention map $\mathbf{A}^{l,h}\in\mathbb{R}^{N\times N}$ of the sentence. The aggregated attention map from the attention heads of all layers is denoted as $\mathbf{A}\in\mathbb{R}^{N\times N\times(H\cdot L)}$, where $\mathbf{A}_{i,j}\in\mathbb{R}^{H\cdot L}$ is a vector that contains the attention scores from $w_{i}$ to $w_{j}$. Finally, for each candidate span $p=[w_{i},\ldots,w_{j}]$, we denote its feature as $\mathbf{X}_{p}=\mathbf{A}_{i\ldots j,\,i\ldots j}$.

给定一个句子 $[w_{1},\dots,w_{N}]$,我们使用在相同领域(例如通用领域和科学领域)的大规模未标注语料上预训练的语言模型对其进行编码。假设该语言模型有 $L$ 层,每层有 $H$ 个注意力头。层 $l$ 中的每个注意力头 $h$ 会生成该句子的注意力图 $\mathbf{A}^{l,h}\in\mathbb{R}^{N\times N}$。所有层注意力头的聚合注意力图表示为 $\mathbf{A}\in\mathbb{R}^{N\times N\times(H\cdot L)}$,其中 $\mathbf{A}_{i,j}\in\mathbb{R}^{H\cdot L}$ 是一个包含从 $w_{i}$ 到 $w_{j}$ 注意力分数的向量。最后,对于每个候选片段 $p=[w_{i},\ldots,w_{j}]$,我们将其特征表示为 $\mathbf{X}_{p}=\mathbf{A}_{i\ldots j,\,i\ldots j}$。
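The indexing in $\mathbf{X}_{p}=\mathbf{A}_{i\ldots j,\,i\ldots j}$ can be made concrete with a small sketch that uses plain nested lists. The helper names `stack_attention` and `span_feature` are hypothetical, and the numbers are placeholders rather than real attention scores; in practice the per-head maps would come from the language model (for example, the Hugging Face `transformers` library returns per-layer attention tensors when a model is called with `output_attentions=True`).

```python
def stack_attention(maps):
    """Stack H*L per-head maps (each N x N) into an N x N x (H*L) nested list."""
    n = len(maps[0])
    return [[[m[i][j] for m in maps] for j in range(n)] for i in range(n)]


def span_feature(attn, i, j):
    """Slice X_p = A[i..j, i..j] (inclusive, 0-based) for the span [w_i, ..., w_j]."""
    return [row[i:j + 1] for row in attn[i:j + 1]]


# Placeholder "attention" values: channel c, row i, column j -> 100*c + 10*i + j.
N, CHANNELS = 4, 2
maps = [[[100 * c + 10 * i + j for j in range(N)] for i in range(N)]
        for c in range(CHANNELS)]
attn = stack_attention(maps)

X = span_feature(attn, 1, 2)  # feature of the two-word span [w_1, w_2]
print(len(X), len(X[0]), len(X[0][0]))  # k x k x (H*L)
```

The feature of a $k$-word span is therefore a $k\times k$ block with $H\cdot L$ channels, independent of which words the span contains.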

Ideally, the attention maps of quality phrases should reveal distinct patterns of word connections. Figure 3 shows a real example of the generated attention map of a sentence. The chunks on the attention map clearly separate the different parts of the sentence. Among all chunks, our final span classifier (Section 3.3) accurately distinguishes the quality phrases (“coal mine”, “heat island effects”) from ordinary spans (e.g., “We can”), indicating the informativeness of the attention features.

理想情况下,质量短语的注意力图应呈现清晰的词语关联模式。图 3 展示了一个句子生成的注意力图实例,图中区块能清晰区分句子的不同部分。通过所有区块分析,我们的最终跨度分类器 (第 3.3 节) 能准确区分质量短语 ("coal mine", "heat island effects") 与普通片段 (如 "We can"),这证明了注意力特征的信息有效性。

Efficient Implementation. Thanks to the rich syntactic and semantic knowledge in the pre-trained language model, the generated attention maps are already informative enough for phrase tagging.

高效实现。得益于预训练语言模型中丰富的句法和语义知识,生成的注意力图已经足够丰富,可用于短语标注。

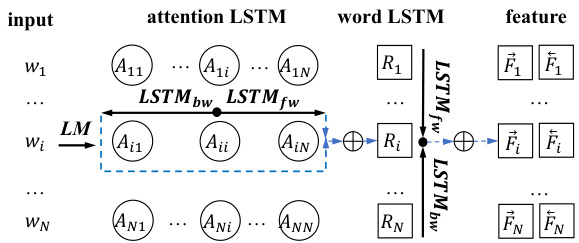

Figure 4: An alternative classifier based on attention-level LSTM.

图 4: 基于注意力级别 LSTM 的替代分类器。

In this work, we adopt the RoBERTa model [26], one of the state-of-the-art Transformer-based language models, as a feature extractor without the need for further fine-tuning. We only need to apply the pre-trained RoBERTa model for one inference pass through the target corpus for feature extraction.

在本工作中,我们采用RoBERTa模型[26]作为特征提取器,无需进一步微调。该模型是基于Transformer的先进语言模型之一。我们只需将预训练的RoBERTa模型对目标语料库进行一次推理遍历即可完成特征提取。

The overall efficiency now mainly depends on the size of the attention map, which is $N\times N\times(H\cdot L)$. During training, $N$ is restricted to the length of each span; for inference, we apply sentence-level encoding, with each sentence restricted to at most 64 tokens. Depth-wise, existing studies have observed considerable redundancy in the outputs of different Transformer layers, including attention distributions [14, 15]. For this reason, as the default setting of UCPhrase, we only preserve attention maps from the first 3 layers of RoBERTa (i.e., $L=3$). As RoBERTa has 12 layers in total, this saves 75% of resource consumption. We quantitatively compare the final tagging performance of using 3 layers vs. using all 12 layers in Section 4.6. As the experimental results suggest, using 3 layers yields performance comparable to the full model.

整体效率现在主要取决于注意力图的大小,即 $N\times N\times(H\cdot L)$。训练时 $N$ 受限于每个跨度的长度,而在推理阶段,我们采用句子级编码,每句最多限制为64个token。在深度方面,现有研究发现不同Transformer层的输出存在显著冗余,包括注意力分布 [14, 15]。因此,UCPhrase默认设置仅保留RoBERTa前3层的注意力图(即 $L=3$)。由于RoBERTa共有12层,这一设定可节省75%的资源消耗。我们在4.6节定量比较了使用3层与全部12层的最终标注性能,实验结果表明:3层配置与完整模型具有相当的表现力。
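The 75% figure follows directly from the size formula $N\times N\times(H\cdot L)$, as the short calculation below illustrates. The value $H=12$ is an assumption (the head count of a RoBERTa-base-sized model); the text above only states the layer count and the 64-token limit, and the function name is hypothetical.

```python
def attention_feature_size(n_tokens, n_heads, n_layers):
    """Number of attention scores in an N x N x (H*L) feature map."""
    return n_tokens * n_tokens * n_heads * n_layers


H = 12  # assumption: attention heads per layer in a RoBERTa-base-sized model
full = attention_feature_size(64, H, 12)  # all 12 layers
kept = attention_feature_size(64, H, 3)   # first 3 layers only (default L = 3)
saving = 1 - kept / full
print(full, kept, f"{saving:.0%}")
```

Because the size is linear in $L$, the saving is $1-3/12$ regardless of the assumed $H$ or the sentence length.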

3.3 Lightweight Span Classifier

3.3 轻量级跨度分类器

With the labels and features in-house, we are ready to build a classifier to recognize spans of quality phrases. Our framework is general and compatible with various classification models. For the sake of efficiency, we wish to find a lightweight classifier.

有了内部的标签和特征,我们就可以构建一个分类器来识别质量短语的范围。我们的框架具有通用性,兼容多种分类模型。出于效率考虑,我们希望找到一个轻量级的分类器。

Given the attention map of a $k$-word span, an accurate classifier should effectively capture inter-word relationships from different LM layers and at different ranges. Naturally, the attention map can be viewed as a square image of $k$ pixels in both height and width, with $H\cdot L$ channels. We can now transform the phrase classification problem into an image classification problem: given a multi-channel image (attention map), we want to predict whether the corresponding word span is a quality phrase. Specifically, we apply a two-layer convolutional neural network (CNN) model to the multi-channel attention map. The output is then fed to a logistic regression layer to assign a binary label to the corresponding span. During training, the classification model $f(\cdot;\theta)$ parameterized by $\theta$ is learned by minimizing the loss over the training set $\{\mathbf{X}_{p},\mathcal{P}\}$:

给定一个 $k$ 词跨度的注意力图,一个准确的分类器应能有效捕捉来自不同语言模型层和不同范围的词间关系。自然地,注意力图可视为高度和宽度均为 $k$ 像素的方形图像,具有 $H\cdot L$ 个通道。现在我们可以将短语分类问题转化为图像分类问题:给定一个多通道图像(注意力图),我们需要预测对应的词跨度是否为优质短语。具体而言,我们在多通道注意力图上应用一个双层卷积神经网络(CNN)模型,其输出随后被送入逻辑回归层,为对应跨度分配二元标签。在训练过程中,通过最小化训练集 $\{\mathbf{X}_{p},\mathcal{P}\}$ 上的损失,学习由参数 $\theta$ 定义的分类模型 $f(\cdot;\theta)$:

$$
\hat{\theta}=\underset{\theta}{\operatorname{argmin}}\;\frac{1}{|\mathcal{P}|}\sum_{i=1}^{|\mathcal{P}|}\ell\left(p_{i},f(\mathbf{X}_{p_{i}};\theta)\right),
$$

where $p_{i}\in\mathcal{P}=\bigcup_{m=1}^{M}\{\mathcal{P}_{m}^{-},\mathcal{P}_{m}^{+}\}$ represents the $i$-th labeled span, and $\ell$ is the binary cross-entropy loss function. The model is updated with minibatch-based stochastic gradient descent.

其中 $p_{i}\in\mathcal{P}=\bigcup_{m=1}^{M}\{\mathcal{P}_{m}^{-},\mathcal{P}_{m}^{+}\}$ 表示第 $i$ 个标注片段,$\ell$ 为二元交叉熵损失函数。模型采用基于小批量的随机梯度下降法进行更新。
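As a small illustration of the objective, the sketch below computes the mean binary cross-entropy over a handful of labeled spans. It assumes the classifier output $f(\mathbf{X}_{p_i};\theta)$ has already been turned into a probability, and that a span's label is 1 if it comes from $\mathcal{P}_{m}^{+}$ and 0 if it comes from $\mathcal{P}_{m}^{-}$; the function names and the clipping constant `eps` are illustrative choices.

```python
import math


def bce(label, prob, eps=1e-12):
    """Binary cross-entropy for one span; prob is the predicted P(quality phrase)."""
    prob = min(max(prob, eps), 1 - eps)  # clip to avoid log(0)
    return -(label * math.log(prob) + (1 - label) * math.log(1 - prob))


def mean_loss(labels, probs):
    """Average loss over the labeled spans, i.e. (1/|P|) * sum of per-span losses."""
    return sum(bce(y, p) for y, p in zip(labels, probs)) / len(labels)


labels = [1, 1, 0, 0]                                   # two core phrases, two negatives
good = mean_loss(labels, [0.9, 0.8, 0.1, 0.2])          # mostly correct predictions
uninformed = mean_loss(labels, [0.5, 0.5, 0.5, 0.5])    # equals log(2)
print(round(good, 4), round(uninformed, 4))
```

Minimizing this average with minibatch stochastic gradient descent is what the argmin above expresses; only the classifier parameters $\theta$ are updated.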

Note that $\theta$ here only includes the parameters in the two CNN layers and the logistic regression layer during training, which makes

请注意,这里的 $\theta$ 仅在训练期间包含两个 CNN 层和逻辑回归层中的参数,这使得

Corpus

语料库

Task I. Corpus-level Phrase Ranking

任务 I. 语料库级短语排序

Task II. Document-level Keyphrase Extraction

任务 II. 文档级关键词抽取

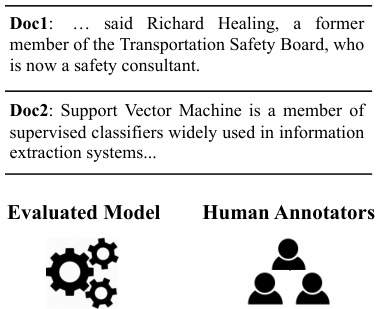

Doc1 Gold Keyphrases: - Richard Healing - Transportation Safety Board

Doc1 黄金关键词: - Richard Healing - Transportation Safety Board

Tagged phrases as candidates -- fRo irc mh a err d m Hee mal bien rg Rec. = 100% - Transportation Safety Board

标记短语作为候选 -- fRo irc mh a err d m Hee mal bien rg 召回率 = 100% - 运输安全委员会

Ranked by TF-IDF

按TF-IDF排序

- Transportation Safety Board - Richard Healing - safety consultant $F_{I}@3=80%$

- 运输安全委员会 - Richard Healing - 安全顾问 $F_{I}@3=80%$

Task III. Sentence-level Phrase Tagging

任务 III. 句子级短语标注

Human Annotators (* 3):

人工标注员 (* 3):

[Support Vector Machine] is a member of [supervised class if i ers] widely used in [information extraction systems] .

[支持向量机]是[信息抽取系统]中广泛使用的[监督分类器]之一。

System Prediction:

系统预测:

[Support Vector Machine] is a [member of] [supervised class if i ers] widely used in [information extraction] systems.

支持向量机 (Support Vector Machine) 是信息抽取 (information extraction) 系统中广泛使用的监督分类器 (supervised classifiers) 成员之一。

Rec. = 66.7%, Prec. $=50%$ , $F_{I}=57.2%$ (average over all annotators)

Rec. = 66.7%, Prec. $=50%$, $F_{I}=57.2%$ (所有标注者的平均值)

Fine-grained

细粒度

the training process efficient in terms of resource consumption. In fact, the checkpoint of training parameters from each epoch can be stored in a 22 KB file on disk.

训练过程在资源消耗方面非常高效。实际上,每个训练周期的参数检查点可以存储在磁盘上22 KB的文件中。

Another bidirectional LSTM layer is built upon the word representations $\mathbf{R}$ to extract the feature F, i.e.,

另一个双向 LSTM 层基于词表示 $\mathbf{R}$ 构建,用于提取特征 F,即

$$
\begin{aligned}
\overrightarrow{\mathbf{F}}_{1,2,\ldots,N} &= \mathrm{LSTM}(\mathbf{R}_{1},\mathbf{R}_{2},\ldots,\mathbf{R}_{N}),\\
\overleftarrow{\mathbf{F}}_{N,N-1,\ldots,1} &= \mathrm{LSTM}(\mathbf{R}_{N},\mathbf{R}_{N-1},\ldots,\mathbf{R}_{1}).
\end{aligned}
$$

Scheme wise, there are two popular labeling schemes in sequence labeling: (1) Tie-or-Break, which is predicting whether each consecutive pair of words belong to the same phrase, and (2) BIO Tagging, which is tagging phrases in the sentence through a Begin-InsideOutside scheme [32]. We are not using BIOES [33] as we focus on multi-word phrases. For the Tie-or-Break tagging scheme, we apply a 2-layer Multi-layer Perceptron followed by a Sigmoid classification function to predict whether the $[\overrightarrow{\textbf{F}}{i},\overleftarrow{\textbf{F}}{i+1}]$ representation corresponds to a tie between word $i$ and word $i+1$ or a break. For the BIO tagging scheme, in a word-wise manner, we concatenate the represent at ions $\vec{\textbf{F}}{1,2,...,N}$ and $\overleftarrow{\mathbf{F}}_{N,N-1,...,1}$ into $N$ representations for each sentence, and then send them through a Conditional Random Field (CRF) layer [18, 22] to predict the BIO tags for phrases.

在序列标注中,有两种流行的标注方案:(1) Tie-or-Break,用于预测每对相邻单词是否属于同一短语;(2) BIO标注,通过Begin-Inside-Outside方案对句子中的短语进行标注[32]。由于我们关注多词短语,因此不使用BIOES[33]。对于Tie-or-Break标注方案,我们采用2层多层感知机(MLP)和Sigmoid分类函数来预测$[\overrightarrow{\mathbf{F}}_{i},\overleftarrow{\mathbf{F}}_{i+1}]$表示是否对应单词$i$与单词$i+1$之间的连接或断开。对于BIO标注方案,我们以逐词方式将表示$\overrightarrow{\mathbf{F}}_{1,2,\ldots,N}$和$\overleftarrow{\mathbf{F}}_{N,N-1,\ldots,1}$拼接为每个句子的$N$个表示,然后通过条件随机场(CRF)层[18,22]预测短语的BIO标签。
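To make the two labeling schemes concrete, the sketch below decodes each kind of prediction into multi-word phrase spans. It is only an illustration: the function names, the 0.5 threshold, and the inclusive `(start, end)` span convention are assumptions, not details taken from the paper, and the CRF and MLP that would produce these inputs are not modeled.

```python
from typing import List, Tuple

Span = Tuple[int, int]  # inclusive word indices (start, end); a convention assumed here


def spans_from_tie_or_break(tie_probs: List[float], threshold: float = 0.5) -> List[Span]:
    """Decode Tie-or-Break predictions into multi-word spans.

    tie_probs[i] is the predicted probability that word i and word i+1
    belong to the same phrase, so a sentence of N words has N-1 entries.
    The threshold value is an assumption for illustration.
    """
    spans: List[Span] = []
    n_words = len(tie_probs) + 1
    start = 0
    for i in range(n_words):
        is_last = i == n_words - 1
        tied_to_next = (not is_last) and tie_probs[i] >= threshold
        if not tied_to_next:
            if i > start:  # keep only spans of two or more words
                spans.append((start, i))
            start = i + 1
    return spans


def spans_from_bio(tags: List[str]) -> List[Span]:
    """Decode a B/I/O tag sequence into multi-word spans."""
    spans: List[Span] = []
    start = None
    for i, tag in enumerate(tags + ["O"]):  # sentinel "O" closes a trailing span
        if tag == "I" and start is not None:
            continue  # still inside the currently open phrase
        if start is not None and i - 1 > start:
            spans.append((start, i - 1))
        start = i if tag == "B" else None
    return spans
```

Both decoders drop single-word spans, which mirrors the stated focus on multi-word phrases and is also why a dedicated single-token tag (the S in BIOES) is not needed.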

Other training procedures for both classifiers are the same as those of the aforementioned default span classifier. These alternative classifiers have comparable performance, as confirmed in Section 4.6.

两类分类器的其他训练流程与前述默认跨度分类器相同。这些替代分类器具有可比性能,如第4.6节所验证。

4 EXPERIMENTS

4 实验

We compare UCPhrase with previous methods for multi-word phrase mining on two datasets and three tasks of different granularity: corpus-level phrase ranking, document-level keyphrase extraction, and sentence-level phrase tagging.

我们在两个数据集和三个不同粒度的任务上,将UCPhrase与以往的多词短语挖掘研究进行对比:语料库级短语排序、文档级关键词抽取以及句子级短语标注。

Figure 5: Illustration of the Three Evaluation Tasks and their Evaluation Metrics.

Table 1: Dataset statistics on KP20k and KPTimes.

图 5: 三项评估任务及其评估指标示意图。

表 1: KP20k 和 KPTimes 数据集统计信息。

| Statistics | KP20k | KPTimes |
|---|---|---|
| Train set | | |
| #documents | 527,090 | 259,923 |
| #words per document | 176 | 907 |
| Test set | | |
| #documents | 20,000 | 20,000 |
| #multi-word keyphrases | 37,289 | 24,920 |
| #unique | 24,626 | 8,970 |
| #absent in training corpus | 4,171 | 2,940 |

4.1 Evaluation Tasks and Metrics

4.1 评估任务与指标

We evaluate all methods on the following three tasks. Figure 5 illustrates the tasks and evaluation metrics with some examples.

我们在以下三个任务上评估所有方法。图 5 用示例展示了这些任务和评估指标。

Task I: Phrase Ranking is a popular evaluation task in previous statistics-based phrase mining work [6, 8, 23, 25, 34]. Specifically, it evaluates the “global” rank list of phrases that a method finds from the input corpus. Since UCPhrase does not explicitly compute a “global” score for each phrase, we use the average logits of all occurrences of a predicted phrase to rank phrases.

任务 I: 短语排序是先前基于统计的短语挖掘工作中常见的评估任务 [6, 8, 23, 25, 34]。具体而言,它评估方法从输入语料库中发现的短语的"全局"排名列表。由于 UCPhrase 并未显式计算每个短语的"全局"分数,我们使用预测短语所有出现位置的平均 logits 值对短语进行排序。
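The averaging step described for Task I can be sketched as follows. This is a minimal illustration assuming each predicted occurrence is available as a `(phrase, logit)` pair; the function name and the alphabetical tie-breaking rule are hypothetical choices, not part of the paper.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def rank_phrases_by_mean_logit(
    occurrences: Iterable[Tuple[str, float]],
) -> List[Tuple[str, float]]:
    """Build a corpus-level rank list from per-occurrence logits.

    Each item in `occurrences` is one predicted occurrence of a phrase
    together with the logit the tagger assigned to that occurrence.
    """
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for phrase, logit in occurrences:
        totals[phrase] += logit
        counts[phrase] += 1
    means = {phrase: totals[phrase] / counts[phrase] for phrase in totals}
    # Highest mean logit first; ties broken alphabetically (an assumption).
    return sorted(means.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    demo = [
        ("neural network", 2.0),
        ("neural network", 4.0),
        ("of the", -1.0),
        ("topic model", 3.5),
    ]
    for phrase, score in rank_phrases_by_mean_logit(demo):
        print(f"{score:6.2f}  {phrase}")
```

Averaging rather than summing keeps the score independent of how often a phrase occurs, which fits the goal of not penalizing infrequent phrases.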

In our experiments, for each method$^{2}$ on each dataset, we quantitatively evaluate the precision of the phrases among the top-ranked 5,000 and 50,000 phrases, denoted as $\mathbf{P}@5\mathbf{K}$ and $\mathbf{P}@50\mathbf{K}$. Since it is expensive to hire annotators to annotate all these phrases, we estimate the precision scores by randomly sampling 200 phrases from the rank lists. Phrases extracted by different methods are shuffled and mixed before being presented to the annotators.

在我们的实验中,对于每个数据集上的每种方法$^{2}$,我们定量评估了排名前5,000和50,000个短语的准确率,分别表示为$\mathbf{P}@5\mathbf{K}$和$\mathbf{P}@50\mathbf{K}$。由于雇佣标注人员标注所有这些短语成本高昂,我们通过从排名列表中随机抽取200个短语来估算准确率分数。不同方法提取的短语在呈现给标注人员之前会被打乱并混合。
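The sampling-based estimate of P@K can be sketched as below. Several details are assumptions made for illustration only: that the 200 phrases are drawn uniformly from the top K of one rank list, that annotator judgments are exposed through a hypothetical `is_quality` callback, and that the mixed annotation pool is deduplicated before shuffling.

```python
import random
from typing import Callable, Dict, List, Sequence


def estimate_precision_at_k(
    ranked_phrases: Sequence[str],
    k: int,
    is_quality: Callable[[str], bool],
    sample_size: int = 200,
    seed: int = 0,
) -> float:
    """Estimate P@K from a random sample of the top-k phrases.

    `is_quality` stands in for a human annotator's judgment.
    """
    top_k = list(ranked_phrases[:k])
    if not top_k:
        return 0.0
    rng = random.Random(seed)
    if len(top_k) <= sample_size:
        sample = top_k  # too few phrases to subsample; judge them all
    else:
        sample = rng.sample(top_k, sample_size)
    return sum(1 for phrase in sample if is_quality(phrase)) / len(sample)


def build_annotation_pool(samples_by_method: Dict[str, List[str]], seed: int = 0) -> List[str]:
    """Mix sampled phrases from all methods and shuffle them, so annotators
    cannot tell which method produced which phrase."""
    pool = sorted({p for phrases in samples_by_method.values() for p in phrases})
    random.Random(seed).shuffle(pool)
    return pool
```

With 200 samples the estimate carries sampling noise, so small differences between methods should be read with that in mind.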

Task II: Keyphrase Extraction is a classic task to extract salient phrases that best summarize a document [9], which essentially has two stages: candidate generation and keyphrase ranking. In the first stage, we treat all compared methods as candidate phrase extractors and evaluate the recall of the generated candidates. For each document, recall measures the fraction of gold keyphrases that appear in the candidate list. For a fair comparison, we keep the same number of candidates from the rank list of each method for evaluation.

任务 II: 关键词提取是一项经典任务,旨在提取最能概括文档的显著短语 [9],主要包含两个阶段:候选生成和关键词排序。第一阶段,我们将所有对比方法视为候选短语提取器,评估生成候选的召回率。在每篇文档中,召回率衡量黄金关键词中出现在候选列表里的比例。为保证公平比较,我们从每种方法的排序列表中保留相同数量的候选进行评估。
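The candidate-recall measurement in the first stage can be illustrated with a short sketch. The normalization used here (lowercasing and whitespace collapsing) and the macro-average over documents are assumptions; the paper may match phrases differently, for example after stemming.

```python
from typing import List, Sequence, Tuple


def normalize(phrase: str) -> str:
    """Simple matching key; a stand-in for whatever normalization is really used."""
    return " ".join(phrase.lower().split())


def candidate_recall(ranked_candidates: Sequence[str], gold: Sequence[str], top_n: int) -> float:
    """Fraction of gold keyphrases found among the first top_n candidates."""
    gold_set = {normalize(g) for g in gold}
    if not gold_set:
        raise ValueError("document has no gold keyphrases")
    kept = {normalize(c) for c in ranked_candidates[:top_n]}
    return len(gold_set & kept) / len(gold_set)


def mean_candidate_recall(docs: List[Tuple[Sequence[str], Sequence[str]]], top_n: int) -> float:
    """Average per-document recall over (candidates, gold) pairs, using the
    same top_n for every method so the comparison stays fair."""
    scores = [candidate_recall(cands, gold, top_n) for cands, gold in docs if gold]
    return sum(scores) / len(scores) if scores else 0.0
```

For instance, a document with three gold keyphrases of which two appear among the kept candidates gets a recall of 2/3, about 66.7%.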

For the end-to-end performance, we apply the classic TF-IDF model to rank the candidate phrases extracted by different methods. In each document, we follow the standard evaluation method [13] to calculate the $F_{1}$ score of the top-10 ranked phra