Universal Evasion Attacks on Summarization Scoring

Abstract

The automatic scoring of summaries is important as it guides the development of summarizers. Scoring is also complex, as it involves multiple aspects such as fluency, grammar, and even textual entailment with the source text. However, summary scoring has not yet been treated as a machine learning task whose accuracy and robustness can be studied. In this study, we place automatic scoring in the context of regression machine learning tasks and perform evasion attacks to explore its robustness. Attack systems predict a non-summary string from each input, and these non-summary strings achieve scores competitive with good summarizers on the most popular metrics: ROUGE, METEOR, and BERTScore. Attack systems also "outperform" state-of-the-art summarization methods on ROUGE-1 and ROUGE-L, and score the second-highest on METEOR. Furthermore, a BERTScore backdoor is observed: a simple trigger can score higher than any automatic summarization method. The evasion attacks in this work indicate the low robustness of current scoring systems at the system level. We hope that our highlighting of these proposed attacks will facilitate the development of summary scores.

1 Introduction

A long-standing paradox has plagued the task of automatic summarization. On the one hand, for about 20 years, there has not been any automatic scoring available as a sufficient or necessary condition to demonstrate summary quality, such as adequacy, grammaticality, cohesion, fidelity, etc. On the other hand, contemporaneous research more often uses one or several automatic scores to endorse a summarizer as state-of-the-art. More than 90% of works on language generation neural models choose automatic scoring as the main basis, and about half of them rely on automatic scoring only (van der Lee et al., 2021). However, these scoring methods have been found to be insufficient (Novikova et al., 2017), oversimplified (van der Lee et al., 2021), difficult to interpret (Sai et al., 2022), inconsistent with the way humans assess summaries (Rankel et al., 2013; Böhm et al., 2019), or even contradictory to each other (Gehrmann et al., 2021; Bhandari et al., 2020).

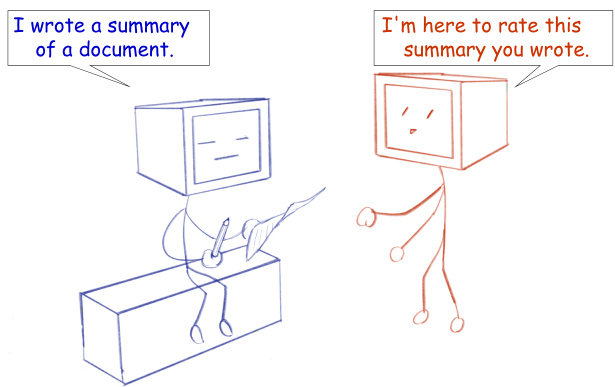

Figure 1: Automatic summarization (left) and automatic scoring (right) should be considered as two systems of the same rank, representing conditional language generation and natural language understanding, respectively. As a stand-alone system, the accuracy and robustness of automatic scoring are also important. In this study, we create systems that use bad summaries to fool existing scoring systems. This work shows that optimizing towards a flawed scoring does more harm than good, and flawed scoring methods are not able to indicate the true performance of summarizers, even at a system level.

Why do we have to deal with this paradox? The current work does not suggest that summarizers assessed by automatic scoring are de facto ineffective. However, optimizing for flawed evaluations (Gehrmann et al., 2021; Peyrard et al., 2017), directly or indirectly, ultimately harms the development of automatic summarization (Narayan et al., 2018; Kryscinski et al., 2019; Paulus et al., 2018). One of the most likely drawbacks is shortcut learning (surface learning, Geirhos et al., 2020), where summarizing models may fail to generate text with more widely accepted qualities such as adequacy and authenticity, and instead generate text that merely pleases the scores. Here, we quote and adapt this hypothetical story by Geirhos et al. (2020).

"Alice loves literature. Always has, probably always will. At this very moment, however, she is cursing the subject: After spending weeks immersing herself in the world of Shakespeare’s The Tempest, she is now faced with a number of exam questions that are (in her opinion) to equal parts dull and difficult. ’How many times is Duke of Milan addressed’... Alice notices that Bob, sitting in front of her, seems to be doing very well. Bob of all people, who had just boasted how he had learned the whole book chapter by rote last night ..."

According to Geirhos et al., Bob might get better grades and consequently be considered a better student than Alice, which is an example of surface learning. The same could be the case with automatic summarization, where we might end up with significant differences between expected and actual learning outcomes (Paulus et al., 2018). To avoid going astray, it is important to ensure that the objective is correct.

In addition to understanding the importance of correct justification, we also need to know what caused the fallacy of the justification process for these potentially useful summarizers. There are three mainstream speculations that are not mutually exclusive. (1) The transition from extractive summarization to abstractive summarization (Kryscinski et al., 2019) could have been underestimated. For example, the popular score ROUGE (Lin, 2004) was originally used to judge the ranking of sentences selected from documents. Due to constraints on sentence integrity, the generated summaries can always be fluent and undistorted, except sometimes when anaphora is involved. However, when it comes to free-form language generation, sentence integrity is no longer guaranteed, but the metric continues to be used. (2) Many metrics, while flawed in judging individual summaries, often make sense at the system level (Reiter, 2018; Gehrmann et al., 2021; Böhm et al., 2019). In other words, it might have been believed that few summarization systems can consistently output poor-quality but high-scoring strings. (3) Researchers have not figured out how humans interpret or understand texts (van der Lee et al., 2021; Gehrmann et al., 2021; Schluter, 2017), thus the decision about how good a summary really is varies from person to person, let alone automated scoring. In fact, automatic scoring is more of a natural language understanding (NLU) task, a task that is far from solved. From this viewpoint, automatic scoring itself is fairly challenging.

Nevertheless, the current work does not advocate (and certainly does not disparage) human evaluation. Instead, we argue that automatic scoring itself is not just a sub-module of automatic summarization, and that automatic scoring is a stand-alone system that needs to be studied for its own accuracy and robustness. The primary reason is that NLU is clearly required to characterize summary quality, e.g., semantic similarity to determine adequacy (Morris, 2020), or textual entailment (Dagan et al., 2006) to determine fidelity. Besides, summary scoring is similar to automated essay scoring (AES), which is a 50-year-old task measuring grammaticality, cohesion, relevance, etc. of written texts (Ke and Ng, 2019). Moreover, recent advances in automatic scoring also support this argument well. Automatic scoring is gradually transitioning from well-established metrics measuring N-gram overlap (BLEU (Papineni et al., 2002), ROUGE (Lin, 2004), METEOR (Banerjee and Lavie, 2005), etc.) to emerging metrics aimed at computing semantic similarity through pre-trained neural models (BERTScore (Zhang et al., 2019b), MoverScore (Zhao et al., 2019), BLEURT (Sellam et al., 2020), etc.). These emerging scores exhibit two characteristics that stand-alone machine learning systems typically have: one is that some can be fine-tuned for human cognition; the other is that they still have room to improve and still have to learn how to match human ratings.

Machine learning systems can be attacked. Attacks can help improve their generality, robustness, and interpretability. In particular, evasion attacks are an intuitive way to further expose the weaknesses of current automatic scoring systems. Evasion attack is the parent task of adversarial attack; it aims to make the system fail to correctly identify the input, and thus calls for defence against the exposed vulnerabilities.

In this work, we try to answer the question: do current representative automatic scoring systems really work well at the system level? How hard is it to say they do not work well at the system level? In summary, we make the following major contributions in this study:

Table 1: We created non-summarizing systems, each of which produces bad text when processing any document. Broken sentences get higher lexical scores; non-alphanumeric characters outperform good summaries on BERTScore. Concatenating two strings produces equally bad text, but scores high on both. The example is from CNN/DailyMail (for visualization, document is abridged to keep content most consistent with the corresponding gold summary).

• We are the first to treat automatic summarization scoring as an NLU regression task and perform evasion attacks.

• We are the first to perform a universal, targeted attack on NLP regression models.

• Our evasion attacks support that it is not difficult to deceive the three most popular automatic scoring systems simultaneously.

• The proposed attacks can be directly applied to test emerging scoring systems.

2 Related Work

2.1 Evasion Attacks in NLP

In an evasion attack, the attacker modifies the input data so that the NLP model incorrectly identifies the input. The most widely studied evasion attack is the adversarial attack, in which insignificant changes are made to the input to make "adversarial examples" that greatly affect the model’s output (Szegedy et al., 2014). There are other types of evasion attacks, and evasion attacks can be classified from at least three perspectives. (1) Targeted evasion attacks and untargeted evasion attacks (Cao and Gong, 2017). The former is intended for the model to predict a specific wrong output for that example. The latter is designed to mislead the model to predict any incorrect output. (2) Universal attacks and input-dependent attacks (Wallace et al., 2019; Song et al., 2021). The former, also known as an "input-agnostic" attack, is a "unique model analysis tool". They are more threatening and expose more general input-output patterns learned by the model. The opposite is often referred to as an input-dependent attack, and is sometimes referred to as a local or typical attack. (3) Black-box attacks and white-box attacks. The difference is whether the attacker has access to the detailed computation of the victim model. The former does not, and the latter does. Often, targeted, universal, black-box attacks are more challenging. Evasion attacks have been used to expose vulnerabilities in sentiment analysis, natural language inference (NLI), automatic short answer grading (ASAG), and natural language generation (NLG) (Alzantot et al., 2018; Wallace et al., 2019; Song et al., 2021; Filighera et al., 2020, 2022; Zang et al., 2020; Behjati et al., 2019).

2.2 Universal Triggers in Attacks on Classification

A prefix can be a universal trigger. When a prefix is added to any input, it can cause the classifier to misclassify sentiment, textual entailment (Wallace et al., 2019), or whether a short answer is correct (Filighera et al., 2020). These are usually untargeted attacks in a white-box setting, where the gradients of neural models are computed during the trigger search phase.

Wallace et al. also used prefixes to trigger a reading comprehension model to specifically choose an odd answer or an NLG model to generate something similar to an egregious set of targets. These two are universal, targeted attacks, but are mainly for classification tasks. Given that automatic scoring is a regression task, more research is needed.

2.3 Adversarial Examples Search for Regression Models

Compared with classification tasks in NLP, regression tasks (such as determining text similarity) are fewer and less frequently attacked. For example, the Universal Sentence Encoder (USE, Cer et al., 2018) and BERTScore (Zhang et al., 2019b) are often taken as two constraints when searching adversarial examples for other tasks (Alzantot et al., 2018). However, these regression models may also be flawed, vulnerable or not robust, which may invalidate the constraints (Morris, 2020).

Morris (2020) shows that adversarial attacks could also threaten these regression models. For example, Maheshwary et al. (2021) adopt a black-box setting to maximize the semantic similarity between the altered input text sequence and the original text. Similar attacks are mostly input-dependent, probably because these regression models are mostly used as constraints. In contrast, universal attacks may better reveal the vulnerabilities of these regression models.

2.4 Victim Scoring Systems

Every existing automatic summary scoring system is a monotonic regression model. Most scoring requires at least one gold-standard text to be compared to the output from summarizers. One can opt to combine multiple available systems in one super system (Lamontagne and Abi-Zeid, 2006). We will focus on the three most frequently used systems, including rule-based systems and neural systems. ROUGE (Recall-Oriented Understudy for Gisting Evaluation; Lin, 2004) measures the number of overlapping N-grams or the longest common subsequence (LCS) between the generated summary and a set of gold reference summaries. In particular, ROUGE-1 corresponds to unigrams, ROUGE-2 to bigrams, and ROUGE-L to LCS. F-measures of ROUGE are often used (See et al., 2017). METEOR (Banerjee and Lavie, 2005) measures overlapping unigrams, equating a unigram with its stemmed form, synonyms, and paraphrases. BERTScore (Zhang et al., 2019b) measures soft overlap between two token-aligned texts: by selecting alignments, BERTScore returns the maximum cosine similarity between contextual BERT (Devlin et al., 2019) embeddings.
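
The greedy matching behind BERTScore's soft overlap can be sketched as follows. This is a minimal illustration that assumes token embeddings are already available as plain lists of floats; the function names `cosine` and `greedy_match_f1` are hypothetical, and the sketch omits the contextual BERT encoder, IDF weighting, and baseline rescaling that a real BERTScore implementation may apply.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def greedy_match_f1(cand_emb, ref_emb):
    """Greedy soft-overlap F1 between two lists of token embeddings.

    Assumption: embeddings are toy vectors supplied by the caller,
    not outputs of an actual BERT model.
    """
    if not cand_emb or not ref_emb:
        return 0.0
    sim = [[cosine(c, r) for r in ref_emb] for c in cand_emb]
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(max(row) for row in sim) / len(cand_emb)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(
        max(sim[i][j] for i in range(len(cand_emb))) for j in range(len(ref_emb))
    ) / len(ref_emb)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

Because every token is free to pick its best match independently, a short candidate whose embedding is moderately similar to many reference tokens can still obtain a high score, which is the kind of behaviour the attacks in this work probe.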

2.5 Targeted Threshold for Attacks

We use a threshold to determine whether a targeted attack on the regression model was successful. Intuitively, the threshold is given by the scores of the top summarizers, and we consider our attack to be successful if an attacker obtains a score higher than the threshold using clearly inferior summaries. We use representative systems that achieved the state of the art at some point in the past five years: Pointer Generator (See et al., 2017), Bottom-Up (Gehrmann et al., 2018), PNBERT (Zhong et al., 2019), T5 (Raffel et al., 2019), BART (Lewis et al., 2020), and SimCLS (Liu and Liu, 2021).
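
The success criterion above can be written down as a small check. The sketch below is only one possible reading: it assumes the threshold is the highest system-level mean score among the reference summarizers, and the function name `attack_succeeds` and the dictionary layout are hypothetical. A looser threshold (for example, the lowest or the median baseline) could be substituted by changing one line.

```python
from statistics import mean


def attack_succeeds(attack_scores, baseline_scores_by_system):
    """Return (success, threshold) for a system-level targeted attack.

    attack_scores: per-document scores of the attack system.
    baseline_scores_by_system: dict mapping a summarizer name to its
        per-document scores under the same metric.
    Assumption: the threshold is the best baseline mean.
    """
    threshold = max(mean(scores) for scores in baseline_scores_by_system.values())
    return mean(attack_scores) > threshold, threshold
```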

3 Universal Evasion Attacks

We develop universal evasion attacks for each individual scoring system, and make sure that the combined attacker can fool ROUGE, METEOR, and BERTScore at the same time. The combined attacker incorporates two parts: a white-box attacker on ROUGE, and a black-box universal trigger search algorithm for BERTScore based on genetic algorithms. METEOR can be attacked directly by the attacker designed for ROUGE. Concatenating the output strings of the black-box and white-box attackers yields a single universal evasion attacking string.

3.1 Problem Formulation

Summarization is conditional generation. A system $\sigma$ that performs this conditional generation takes an input text $\mathbf{a}$ and outputs a text $\hat{\mathbf{s}}$, i.e., $\hat{\mathbf{s}}=\sigma(\mathbf{a})$. In the single-reference scenario, there is a gold reference sequence $\mathbf{s}_{\mathrm{ref}}$. A summary scoring system $\gamma$ calculates the "closeness" between the sequences $\hat{\mathbf{s}}$ and $\mathbf{s}_{\mathrm{ref}}$. In order for a scoring system to be sufficient to justify a good summarizer, the following condition should always be avoided:

$$
\gamma(\sigma_{\mathrm{far\ worse}}(\mathbf{a}),\mathbf{s}_{\mathrm{ref}})>\gamma(\sigma_{\mathrm{better}}(\mathbf{a}),\mathbf{s}_{\mathrm{ref}}). \tag{1}
$$

Indeed, satisfying the condition above is our attacking task. In this section, we detail how we find a suitable $\sigma_{\mathrm{far\ worse}}$.

3.2 White-box Input-agnostic Attack on ROUGE and METEOR

In general, attacking ROUGE or METEOR can only be done with a white-box setup, since even the most novice attacker (developer) will understand how these two formulae calculate the overlap between two strings. We choose to game ROUGE with the most obvious bad system output (broken sentences) such that no additional human evaluation is required. In contrast, for other gaming methods, such as reinforcement learning (Paulus et al., 2018), even if a high score is achieved, human evaluation is still needed to measure how bad the quality of the text is.

We utilize a hybrid approach (which we refer to as $\sigma_{\mathrm{ROUGE}}$) of token classification neural models and simple rule-based ordering, since we know that ROUGE compares each pair of sequences $(\mathbf{s}_{1},\mathbf{s}_{2})$ via hard N-gram overlapping. In bag algebra, extended from set algebra (Bertossi et al., 2018), two popular variants of ROUGE, ROUGE-N $(R_{\mathrm{N}}(n,\mathbf{s}_{1},\mathbf{s}_{2}),\ n\in\mathbb{Z}^{+})$ and ROUGE-L $(R_{\mathrm{L}}(\mathbf{s}_{1},\mathbf{s}_{2}))$, are calculated as follows:

$$
\begin{aligned}
R_{\mathrm{N}}(n,\mathbf{s}_{1},\mathbf{s}_{2}) &= \frac{2\cdot\left|b(n,\mathbf{s}_{1})\cap b(n,\mathbf{s}_{2})\right|}{\left|b(n,\mathbf{s}_{1})\right|+\left|b(n,\mathbf{s}_{2})\right|},\\
R_{\mathrm{L}}(\mathbf{s}_{1},\mathbf{s}_{2}) &= \frac{2\cdot\left|b(1,\mathrm{LCS}(\mathbf{s}_{1},\mathbf{s}_{2}))\right|}{\left|b(1,\mathbf{s}_{1})\right|+\left|b(1,\mathbf{s}_{2})\right|},
\end{aligned}
$$

where $\left\vert\cdot\right\vert$ denotes the size of a bag, $\cap$ denotes bag intersection, and the bag of N-grams is calculated as follows:

$$
b(n,\mathbf{s})=\{x\mid x\text{ is an }n\text{-gram in }\mathbf{s}\}_{\mathrm{bag}}.
$$
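
The bag-based definitions above translate almost directly into code. The following is a minimal sketch, assuming that both sequences are already tokenized into lists of strings; the function names are illustrative, and the stemming and tokenization steps of official ROUGE implementations are not reproduced here.

```python
from collections import Counter


def bag(n, tokens):
    """Bag (multiset) of n-grams in a token sequence, i.e. b(n, s)."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(n, s1, s2):
    """F-measure form of ROUGE-N: 2|b1 ∩ b2| / (|b1| + |b2|)."""
    b1, b2 = bag(n, s1), bag(n, s2)
    total = sum(b1.values()) + sum(b2.values())
    if total == 0:
        return 0.0
    # Counter's & operator is bag intersection (element-wise minimum of counts).
    overlap = sum((b1 & b2).values())
    return 2 * overlap / total


def lcs_length(s1, s2):
    """Length of the longest common subsequence, by dynamic programming."""
    prev = [0] * (len(s2) + 1)
    for x in s1:
        curr = [0]
        for j, y in enumerate(s2, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(s1, s2):
    """F-measure form of ROUGE-L: 2|LCS(s1, s2)| / (|s1| + |s2|)."""
    total = len(s1) + len(s2)
    return 2 * lcs_length(s1, s2) / total if total else 0.0
```

Note that `rouge_n(1, ...)` depends only on the bag of words and not on word order, which is why predicting the reference's bag of words is enough to attack ROUGE-1.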

In our hybrid approach, the first step is that the neural model tries to predict the target's bag of words $b(1,\mathbf{s}_{\mathrm{ref}})$, given any input $\mathbf{a}$ and corresponding target $\mathbf{s}_{\mathrm{ref}}$. Then, words in the predicted bag are ordered according to their occurrence in the input $\mathbf{a}$. Formally, training of the neural model $(\phi)$ is:

$$
\min_{\phi}\frac{1}{|\mathcal{A}|}\sum_{\mathbf{a}\in\mathcal{A}}\sum_{w\in\mathbf{a}}H\big(P_{\mathrm{ref}}(\cdot\mid w),P(\cdot\mid w,\phi)\big),
$$

where $H$ is the cross-entropy between the probability distribution of the reference word count and the predicted word count. An approximation is that the model tries to predict $b(1,\mathbf{s}_{\mathrm{ref}})\cap b(1,\mathbf{a})$ . Empirically, three-quarters of words in reference summaries can be found in their corresponding input texts.

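
One way to read this objective is as per-token classification of how often each input word should appear in the summary. The sketch below makes two assumptions that go beyond the text: every occurrence of a word in the input receives the same label (its count in the reference, capped at `max_count`), and the reference distribution is one-hot, so the cross-entropy reduces to the negative log-probability of the label. The names `count_labels` and `objective` are hypothetical.

```python
import math
from collections import Counter


def count_labels(article_tokens, reference_tokens, max_count=2):
    """Label each input token with its (capped) count in the reference.

    Assumption: identical words share one label regardless of position.
    """
    ref_counts = Counter(reference_tokens)
    return [min(ref_counts[w], max_count) for w in article_tokens]


def objective(batch):
    """Sum of token-level cross-entropies per article, averaged over articles.

    batch: list of (labels, predicted_probs) pairs, one pair per article,
        where predicted_probs[i] is a probability vector over count classes.
    """
    total = 0.0
    for labels, probs in batch:
        total += -sum(math.log(p[y]) for y, p in zip(labels, probs))
    return total / len(batch)
```

Words labelled 0 are those outside $b(1,\mathbf{s}_{\mathrm{ref}})\cap b(1,\mathbf{a})$, which matches the approximation stated above.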
Referencing the input text (a) and predicted bag of words $(\hat{W})$ to construct a sequence is straightforward, as seen in Algorithm 1.

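Algorithm 1 From bag of words to sequence

| Require: $\mathbf{a}$, $\hat{W}$ |
|---|
| $\mathbf{s}\leftarrow()$ |
| while $\lvert\hat{W}\rvert>0$ do |
| mark every word of $\mathbf{a}$ that is in $\hat{W}$ as salient |
| $\mathbf{c}\leftarrow$ longest consecutive salient subsequence in $\mathbf{a}$ |
| if $\lvert\mathbf{c}\rvert<C$ then break end if |
| $\mathbf{s}\leftarrow\mathbf{s}+\mathbf{c}$ ▷ Concatenate $\mathbf{c}$ to $\mathbf{s}$ |
| $\hat{W}\leftarrow\hat{W}-\mathbf{c}$ ▷ Remove used words |
| end while |
| return $\mathbf{s}$ |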

Algorithm 1 uses salient words to highlight the longest consecutive salient subsequences in $\mathbf{a}$, until the words in $\hat{W}$ are exhausted, or until every remaining consecutive salient sequence is shorter than three words $(C=3)$.
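
The procedure described for Algorithm 1 can be sketched in a few lines. This is an illustrative reading rather than the authors' implementation: it assumes the predicted bag is a multiset, so a word may be copied only as many times as it was predicted, and it resolves ties between equally long runs by taking the earliest one. The name `bag_to_sequence` and the parameter `min_run` (playing the role of $C$) are hypothetical.

```python
from collections import Counter


def bag_to_sequence(a, predicted_bag, min_run=3):
    """Order a predicted bag of words by repeatedly copying the longest
    run of consecutive salient words from the input token list `a`."""
    remaining = Counter(predicted_bag)
    s = []
    while sum(remaining.values()) > 0:
        best_start, best_len = 0, 0
        for i in range(len(a)):
            # Grow a run from position i while predicted words are still available.
            used = Counter()
            j = i
            while j < len(a) and used[a[j]] < remaining[a[j]]:
                used[a[j]] += 1
                j += 1
            if j - i > best_len:
                best_start, best_len = i, j - i
        if best_len < min_run:
            break  # every remaining salient run is too short
        run = a[best_start:best_start + best_len]
        s.extend(run)             # concatenate the run to the output
        remaining.subtract(run)   # remove used words from the bag
    return s
```

The output is a chain of broken sentence fragments: it keeps the predicted words (and hence the unigram overlap) while remaining obviously unreadable as a summary.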

3.3 Black-box Universal Trigger Search on BERTScore

Finding a $\sigma_{\mathrm{far\ worse}}$ for BERTScore alone to satisfy Condition 1 is easy. A single dot (".") is an imitator of all strings, as if it were a "backdoor" left by developers. We notice that, with the default setting of BERTScore, using a single dot can achieve around 0.892 on average when compared with any natural sentence. This figure "outperforms" all existing summarizers, making outputting a dot a good enough $\sigma_{\mathrm{far\ worse}}$ instance.

This example is very intriguing because it highlights the extent to which many vulnerabilities go unnoticed, although it cannot be combined directly with the attacker for ROUGE. Intuitively, there could be various clever methods to attack BERTScore as well, such as adding a prefix to each string (Wallace et al., 2019; Song et al., 2021). However, we here opt to develop a system that could output (one of) the most obviously bad strings (scrambled codes) to score high.

BERTScore is generally classified as a neural, untrained score (Sai et al., 2022). In other words, part of its forward computation (e.g., greedy matching) is rule-based, while the rest (e.g., getting every token embedded in the sequence) is not. Therefore, it is difficult to "design" an attack rationally. Gradient methods (white-box) or discrete optimization (black-box) are preferable. Likewise, while letting BERTScore generate soft predictions (Jauregi Unanue et al., 2021) may allow attacks in a white-box setting, we found that black-box optimization is sufficient.

Inspired by the single-dot backdoor in BERTScore, we hypothesize that we can form longer catch-all emulators by using only non-alphanumeric tokens. Such an emulator has two benefits. First, it requires a small fitting set, which is important in targeted attacks on regression models. We will see that once an emulator is optimized to fit one natural sentence, it can also emulate almost any other natural sentence. The total number of natural sentences that need to be fitted before it can imitate decently is usually less than ten. Second, using non-alphanumeric tokens does not affect ROUGE.

A genetic algorithm (GA, Holland, 2012) was used to discretely optimize the proposed non-alphanumeric strings. A genetic algorithm is a search-based optimization technique inspired by the natural selection process. GA starts by initializing a population of candidate solutions and iteratively makes them progress towards better solutions. In each iteration, GA uses a fitness function to evaluate the quality of each candidate. High-quality candidates are likely to be selected and crossed over to produce the next set of candidates. New candidates are mutated to ensure search space diversity and better exploration. Applying GA to attacks has shown effectiveness and efficiency in maximizing the probability of a certain classification label (Alzantot et al., 2018) or the semantic similarity between two text sequences (Maheshwary et al., 2021). Our single fitness function is as follows:

$$
\hat{\mathbf{s}}_{\mathrm{emu}}=\arg\min_{\hat{\mathbf{s}}}-B(\hat{\mathbf{s}},\mathbf{s}_{\mathrm{ref}}),
$$

where $B$ stands for BERTScore. For termination, we use either a fitness threshold of -0.88 or a maximum of 2000 iterations.
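
The search loop described above can be sketched with a small, library-free genetic algorithm. This is not the implementation used in the experiments: the function name `genetic_search`, the truncation-style parent selection, the single-point crossover, the elitism, and the mutation rate are all illustrative assumptions. The caller supplies `fitness`, which for this attack would be the negated BERTScore against the current reference; any scoring function can be plugged in for testing.

```python
import random
import string

ALPHABET = string.punctuation  # ASCII non-alphanumeric characters


def genetic_search(fitness, length, pop_size=10, max_gen=2000,
                   threshold=-0.88, mutation_rate=0.05, seed=0):
    """Minimize `fitness` over fixed-length strings drawn from ALPHABET.

    Stops when the best fitness is at or below `threshold`
    or after `max_gen` generations, whichever comes first.
    """
    rng = random.Random(seed)
    pop = ["".join(rng.choice(ALPHABET) for _ in range(length))
           for _ in range(pop_size)]
    best = min(pop, key=fitness)
    for _ in range(max_gen):
        if fitness(best) <= threshold:
            break
        # Selection: keep the better half of the population as parents.
        parents = sorted(pop, key=fitness)[: max(2, pop_size // 2)]
        children = [best]  # elitism: the best candidate always survives
        while len(children) < pop_size:
            p1, p2 = rng.sample(parents, 2)
            cut = rng.randrange(1, length) if length > 1 else 0
            child = p1[:cut] + p2[cut:]  # single-point crossover
            # Mutation: occasionally replace a character at random.
            child = "".join(
                rng.choice(ALPHABET) if rng.random() < mutation_rate else ch
                for ch in child
            )
            children.append(child)
        pop = children
        best = min(pop, key=fitness)
    return best
```

Since the fitness is the negated score, the threshold of -0.88 corresponds to stopping once the candidate string reaches a BERTScore of at least 0.88 against the reference.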

To fit $\hat{\mathbf{s}}_{\mathrm{emu}}$ to a set of natural sentences, we calculate BERTScore for each sentence in the set after each termination. We then select a proper $\mathbf{s}_{\mathrm{ref}}$ to fit in the next round: we always select the natural sentence (in a finite set) that has the lowest BERTScore with the optimized $\hat{\mathbf{s}}_{\mathrm{emu}}$ at the current stage. We repeat this process until the average BERTScore achieved by this string is higher than that of many reputable summarizers.
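
The round-by-round fitting can be sketched as an outer loop around any single-reference optimizer. The sketch below is an assumption-laden illustration: `score` and `optimize` are caller-supplied callables (for instance, BERTScore and a genetic search seeded with the current trigger), the first sentence in the list stands in for the randomly picked starting reference, and `stop_at` is a hypothetical parameter for the average-score stopping rule.

```python
from statistics import mean


def fit_universal_trigger(sentences, score, optimize, init, rounds=5, stop_at=None):
    """Repeatedly re-fit the trigger to the sentence it currently imitates worst.

    sentences: finite list of natural sentences used for fitting.
    score(trigger, sentence): similarity to maximize (higher is better).
    optimize(trigger, target): returns a trigger improved towards `target`.
    """
    trigger = init
    target = sentences[0]
    for _ in range(rounds):
        trigger = optimize(trigger, target)
        if stop_at is not None and mean(score(trigger, s) for s in sentences) >= stop_at:
            break
        # Next round: fit the sentence with the lowest score so far.
        target = min(sentences, key=lambda s: score(trigger, s))
    return trigger
```

Always targeting the worst-fitted sentence pushes the trigger towards a string that imitates the whole set reasonably well, rather than one sentence very well.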

Finally, to simultaneously game ROUGE and BERTScore, we concatenate $\hat{\mathbf{s}}_{\mathrm{emu}}$ and the input-agnostic $\sigma_{\mathrm{ROUGE}}(\mathbf{a})$. If we set the number of tokens in $\hat{\mathbf{s}}_{\mathrm{emu}}$ to be greater than 512 (the maximum sequence length for BERT), $\sigma_{\mathrm{ROUGE}}(\mathbf{a})$ does not affect the effectiveness of $\hat{\mathbf{s}}_{\mathrm{emu}}$, and we technically game them both. This concatenated string also games METEOR.

最后,为了同时优化ROUGE和BERTScore,我们将 $\hat{\mathbf{s}}_{\mathrm{emu}}$ 与输入无关的 $\sigma_{\mathrm{ROUGE}}(\mathbf{a})$ 进行拼接。若将 $\hat{\mathbf{s}}_{\mathrm{emu}}$ 的token数量设为超过512(BERT的最大序列长度),则 $\sigma_{\mathrm{ROUGE}}(\mathbf{a})$ 不会影响 $\hat{\mathbf{s}}_{\mathrm{emu}}$ 的有效性,从而在技术上实现了对两者的共同优化。此外,这种拼接字符串还能优化METEOR指标。
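
The effect of the 512-token limit can be illustrated with plain lists. This sketch assumes that the BERT-based scorer simply keeps the first 512 tokens and drops the rest, and it ignores special tokens; the token strings and the helper names `truncate` and `build_attack` are hypothetical.

```python
MAX_LEN = 512  # BERT's maximum sequence length, as stated in the text


def truncate(tokens, max_len=MAX_LEN):
    """Mimic a scorer that keeps only the first max_len tokens (assumption)."""
    return tokens[:max_len]


def build_attack(trigger_tokens, rouge_tokens):
    """Concatenate the BERTScore trigger first, then the ROUGE-oriented string."""
    return trigger_tokens + rouge_tokens


trigger = ["<t%d>" % i for i in range(520)]    # hypothetical trigger, longer than 512
rouge_part = ["police", "said", "the", "man"]   # hypothetical ROUGE-oriented words
attack = build_attack(trigger, rouge_part)

seen_by_bert_scorer = truncate(attack)          # contains trigger tokens only
seen_by_ngram_scorer = attack                   # n-gram metrics read the full string
```

Because the trigger alone fills the window, the appended words never reach the BERT-based scorer, while an n-gram metric still sees them at the end of the string.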

4 Experiments

4 实验

We instantiate our evasion attack by conducting experiments on non-anonymized CNN/DailyMail (CNNDM, Nallapati et al., 2016; See et al., 2017), a dataset that contains news articles and associated highlights as summaries. CNNDM includes 287,226 training pairs, 13,368 validation pairs and 11,490 test pairs.

我们通过在非匿名的CNN/DailyMail (CNNDM, Nallapati et al., 2016; See et al., 2017) 数据集上进行实验来实例化我们的规避攻击,该数据集包含新闻文章及其对应的摘要亮点。CNNDM包含287,226个训练对、13,368个验证对和11,490个测试对。

For $\sigma_{\mathrm{ROUGE}}$, we use RoBERTa (base model, Liu et al., 2019) to instantiate $\phi$, which is an optimized pretrained encoding with a randomly initialized linear layer on top of the hidden states. The number of classes is set to three because we assume that each word appears at most twice in a summary. All 124,058,116 parameters are trained jointly on the CNNDM train split for one epoch. With a batch size of eight, training takes less than 14 hours on an NVIDIA Tesla K80 graphics processing unit (GPU). Predicting all 11,490 samples in the CNNDM test split (including word ordering) then takes about 20 minutes. Scripts and results are available at https://github.com (URL will be made publicly available after paper acceptance).

对于 $\sigma_{\mathrm{ROUGE}}$,我们使用RoBERTa(基础模型,Liu等人,2019)来实例化 $\phi$,这是一种优化的预训练编码,在隐藏状态之上添加了一个随机初始化的线性层。类别数量设置为3,因为我们假设每个单词在摘要中最多出现两次。所有124,058,116个参数在CNNDM训练集上整体训练一个周期。当批大小为8时,在NVIDIA Tesla K80图形处理单元(GPU)上的训练时间少于14小时。然后预测(包括单词排序)CNNDM测试集中的所有11,490个样本大约需要20分钟。脚本和结果可在https://github.com获取(论文接受后将公开URL)。
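
One way to read the three-class setup is that each input word is labelled with the number of times it occurs in the reference summary, capped at two. The sketch below builds such labels; this reading, the whitespace tokenization, and the helper name `count_labels` are assumptions made for illustration rather than details confirmed in this section.

```python
from collections import Counter

NUM_CLASSES = 3  # classes 0, 1, 2: a word is assumed to appear at most twice


def count_labels(article_words, summary_words, num_classes=NUM_CLASSES):
    """Label each article word with its capped occurrence count in the summary."""
    counts = Counter(summary_words)
    return [min(counts[w], num_classes - 1) for w in article_words]


article = "the cat sat on the mat".split()
summary = "the the the cat".split()
labels = count_labels(article, summary)
```

Here "the" occurs three times in the toy summary but is capped at class 2, which is exactly the simplification that the three-class assumption introduces.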

For the universal trigger to BERTScore, we use the library from Blank and Deb (2020) for discrete optimization, set the population size to 10, and terminate at 2000 generations. $\hat{\mathbf{s}}_{\mathrm{emu}}$ is a sequence of independently and randomly initialized non-alphanumeric characters. For a reference $\mathbf{s}_{\mathrm{ref}}$ from CNNDM, we start by randomly picking a summary text from the train split and optimizing for $\hat{\mathbf{s}}_{\mathrm{emu},i=0}$. We then pick the $\mathbf{s}_{\mathrm{ref}}$ that is farthest away from $\hat{\mathbf{s}}_{\mathrm{emu},i=0}$ to optimize for $\hat{\mathbf{s}}_{\mathrm{emu},i=1}$, with $\hat{\mathbf{s}}_{\mathrm{emu},i=1}$ as initial population. In practice, we found that we can stop iterating when $i=5$. Each iteration takes less than two hours on a 2-vCPU machine (Intel Xeon @ 2.30 GHz).

针对BERTScore的通用触发器,我们采用Blank和Deb (2020) 的库进行离散优化,设置种群规模为10,并在2000代时终止。$\hat{\mathbf{s}}_{\mathrm{emu}}$ 是由独立随机初始化的非字母数字字符组成的序列。对于来自CNNDM的参考文本 $\mathbf{s}_{\mathrm{ref}}$ ,我们首先从训练集中随机选取一个摘要文本,并针对 $\hat{\mathbf{s}}_{\mathrm{emu},i=0}$ 进行优化。然后选择与 $\hat{\mathbf{s}}_{\mathrm{emu},i=0}$ 差异最大的 $\mathbf{s}_{\mathrm{ref}}$ 来优化 $\hat{\mathbf{s}}_{\mathrm{emu},i=1}$ ,并将 $\hat{\mathbf{s}}_{\mathrm{emu},i=1}$ 作为初始种群。实际应用中,我们发现当 $i=5$ 时可以停止迭代。每次迭代在2vCPU (Intel Xeon @ 2.30 GHz) 上耗时不足两小时。

5 Results

5 结果

We compare the ROUGE-1/2/L, METEOR, and BERTScore of our threat model with those achieved by the top summarizers in Table 2. We present two versions of the threat model with a minor difference. As the results indicate, each version alone can exceed state-of-the-art summarizing algorithms on both ROUGE-1 and ROUGE-L. For METEOR, the threat model ranks second. As for ROUGE-2 and BERTScore, the threat model can score higher than other BERT-based summarizing algorithms$^{4}$. Overall, we rank the systems by averaging their three relative rankings on ROUGE$^{5}$, METEOR, and BERTScore; our threat model is the runner-up (2.7), right behind SimCLS (1.7) and ahead of BART (3.3). This suggests that at the system level, even a combination of mainstream metrics is questionable in justifying the excellence of a summarizer.

我们将威胁模型的ROUGE-1/2/L、METEOR和BERTScore与表2中顶级摘要生成器的性能进行对比。我们展示了两个存在细微差异的威胁模型版本。结果显示,任一版本在ROUGE-1和ROUGE-L指标上均能超越现有最优摘要算法。在METEOR指标上,威胁模型位列第二。对于ROUGE-2和BERTScore,威胁模型的得分高于其他基于BERT的摘要算法$^{4}$。总体而言,我们通过计算各系统在ROUGE$^{5}$、METEOR和BERTScore三项指标的相对排名均值进行排序:威胁模型获得亚军(2.7),仅次于SimCLS(1.7)并领先于BART(3.3)。这表明在系统层面,即使组合主流指标也难以有效论证摘要生成器的优越性。
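
The averaging of relative rankings can be reproduced from the figures in Table 2 with a short sketch. It rests on assumptions that this section does not spell out: the ROUGE rank is taken from the geometric mean of ROUGE-1/2/L, the attack is represented by its alternative version, and tied scores share the better rank. `TABLE2`, `competition_ranks`, and `average_ranks` are illustrative names.

```python
# (ROUGE G.M., METEOR, BERTScore) copied from Table 2
TABLE2 = {
    "Pointer-generator": (29.18, 33.1, 86.44),
    "Bottom-Up": (30.91, 34.2, 87.71),
    "PNBERT": (31.91, 41.2, 87.73),
    "T5": (33.67, 38.6, 88.66),
    "BART": (33.75, 40.5, 88.62),
    "SimCLS": (35.57, 40.5, 88.85),
    "Attack (alter)": (35.13, 40.6, 87.80),
}


def competition_ranks(values):
    """Rank 1 is the highest value; tied values share the better rank (assumption)."""
    return {name: 1 + sum(other > v for other in values.values())
            for name, v in values.items()}


def average_ranks(table):
    """Average each system's rank over all metric columns."""
    n_metrics = len(next(iter(table.values())))
    per_metric = [competition_ranks({k: v[i] for k, v in table.items()})
                  for i in range(n_metrics)]
    return {k: sum(r[k] for r in per_metric) / n_metrics for k in table}


avg = average_ranks(TABLE2)
```

Under these assumptions the attack and SimCLS average to roughly 2.7 and 1.7; the value for BART depends on how its METEOR tie with SimCLS is broken.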

Table 2: Results on CNNDM. Besides ROUGE-1/2/L, METEOR, and BERTScore, we also compute the arithmetic mean (A.M.) and geometric mean (G.M.) of ROUGE-1/2/L, which is commonly adopted (Zhang et al., 2019a; Bae et al., 2019; Chowdhery et al., 2022). The best score in each column is in bold, the runner-up underlined. Our attack system is compared with well-known summarizers from the past five years. The alternative version (last row) of our system changes $C$ in Algorithm 1 from 3 to 2.

表 2: CNNDM数据集上的结果。除ROUGE-1/2/L、METEOR和BERTScore外,我们还计算了ROUGE-1/2/L的算术平均数(A.M.)和几何平均数(G.M.),这是常用的评估方式 (Zhang et al., 2019a; Bae et al., 2019; Chowdhery et al., 2022)。每列最优值加粗显示,次优值加下划线。我们的攻击系统与过去五年知名摘要系统进行对比。系统变体版本(最后一行)将算法1中的$C$值从3改为2。

| System | ROUGE-1 | ROUGE-2 | ROUGE-L | ROUGE-A.M. | ROUGE-G.M. | METEOR | BERTScore |
|---|---|---|---|---|---|---|---|
| Pointer-generator (coverage) (See et al., 2017) | 39.53 | 17.28 | 36.38 | 31.06 | 29.18 | 33.1 | 86.44 |
| Bottom-Up (Gehrmann et al., 2018) | 41.22 | 18.68 | 38.34 | 32.75 | 30.91 | 34.2 | 87.71 |
| PNBERT (Zhong et al., 2019) | 42.69 | 19.60 | 38.85 | 33.71 | 31.91 | 41.2 | 87.73 |
| T5 (Raffel et al., 2019) | 43.52 | 21.55 | 40.69 | 35.25 | 33.67 | 38.6 | 88.66 |
| BART (Lewis et al., 2020) | 44.16 | 21.28 | 40.90 | 35.45 | 33.75 | 40.5 | 88.62 |
| SimCLS (Liu and Liu, 2021) | 46.67 | 22.15 | 43.54 | 37.45 | 35.57 | 40.5 | 88.85 |
| Scrambled code + broken | 46.71 | 20.39 | 43.56 | 36.89 | 34.62 | 39.6 | 87.80 |
| Scrambled code + broken (alter) | 48.18 | 19.84 | 45.35 | 37.79 | 35.13 | 40.6 | 87.80 |
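
The two aggregate columns follow directly from the three ROUGE scores. As a small worked example, the sketch below recomputes them for the SimCLS row; the function names are illustrative.

```python
from math import prod


def arithmetic_mean(xs):
    """Sum of the scores divided by their count."""
    return sum(xs) / len(xs)


def geometric_mean(xs):
    """n-th root of the product of the scores."""
    return prod(xs) ** (1 / len(xs))


simcls = (46.67, 22.15, 43.54)  # ROUGE-1, ROUGE-2, ROUGE-L from Table 2
am = arithmetic_mean(simcls)     # about 37.45
gm = geometric_mean(simcls)      # about 35.57
```

The geometric mean is always at most the arithmetic mean, so it penalizes a system more heavily when one of its ROUGE variants, typically ROUGE-2, lags behind the others.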

These results reveal the low robustness of popular metrics and show how certain models can obtain high scores with inferior summaries. For example, our threat model is able to grasp the essence of ROUGE-1/2/L using a general but lightweight model, which requires less running time than summarizing algorithms. The training strategies for the model and word order are trivial. Not surprisingly, its output texts do not resemble human-understandable "summaries" (Table 1).

这些结果表明流行指标的鲁棒性较低,以及某些模型如何通过低质量摘要获得高分。例如,我们的威胁模型能够利用一个通用但轻量级的模型掌握ROUGE-1/2/L的精髓,其运行时间比摘要算法更短。该模型的训练策略和词序无关紧要。不出所料,其输出文本并不像人类可理解的"摘要"(表1)。

6 Discussion

6 讨论

6.1 How does Shortcut Learning Come about?

6.1 捷径学习是如何产生的?

As suggested in the hypothetical story by Geirhos et al., scoring draws students' attention (Filighera et al., 2022) and Bob is thus considered a better student. Similarly, in automatic summarization, there are already works that explicitly optimize for various scoring systems (Jauregi Unanue et al., 2021; Pasunuru and Bansal, 2018). In some cases, people even subscribe more to automatic scoring than to "aspects of good summarization". For example, Pasunuru and Bansal (2018) employ reinforcement learning where entailment is one of the rewards, but in the end, ROUGE, not textual entailment, is the only justification for this summarizer.

正如Geirhos等人假设的故事所示,评分会吸引学生的注意力(Filighera等人,2022),因此Bob被认为是个更好的学生。类似地,在自动摘要领域,已有研究明确针对各种评分系统进行优化(Jauregi Unanue等人,2021;Pasunuru和Bansal,2018)。甚至在某些情况下,人们更倾向于相信自动评分而非"优质摘要的各个方面"。例如,Pasunuru和Bansal(2018)采用强化学习将文本蕴含作为奖励之一,但最终该摘要系统的唯一评判标准仍是ROUGE指标而非文本蕴含。

We use a threat model to show that optimizing toward a flawed indicator does more harm than good. This is consistent with the findings of Paulus et al. However, not everyone scrutinizes the output as Paulus et al. do, and the damage can be overshadowed by a staggering increase in metrics or made less visible by optimizing with other objectives. A further reason is that human evaluations are usually used only as a supplement, at about one per cent of the scale of automatic scoring, and the way they are conducted varies from group to group (van der Lee et al., 2021).

我们采用威胁模型来证明,针对有缺陷的指标进行优化弊大于利。这与Paulus等人的研究发现一致,但通常情况下,并非所有人都能像Paulus团队那样严格审查输出结果。这些危害可能被指标的惊人增长所掩盖,或因同时优化其他目标而变得不易察觉。这也因为人工评估通常仅作为补充手段,其规模仅为自动评分的百分之一,且不同团队实施人工评估的方式也存在差异 (van der Lee et al., 2021)。

6.2 Simple defence

6.2 简单防御

For score robustness, we believe that simply taking more scores as a benchmark (Gehrmann et al., 2021) may not be enough. Instead, fixing the existing scoring system might be a better option. A well-defined attack leads to a well-defined defence. Our attacks can be detected or neutralised through a few defences such as adversarial example detection (Xu et al., 2018; Metzen et al., 2017; Carlini and Wagner, 2017). During the model inference phase, detectors that determine whether a sample is fluent and grammatical can be applied before the input samples are scored. An even easier defence is to check whether there is a series of non-alphanumeric characters. Practically, grammar-based measures, like grammatical error correction (GEC)$^{6}$, could be promising (Napoles et al., 2016; Novikova et al., 2017), although they are also under development. To account for grammar in text, one can also parse predictions and references and calculate the F1-score of dependency triple overlap (Riezler et al., 2003; Clarke and Lapata, 2006). Dependency triples compare the grammatical relations of two texts. We found both useful to ensure input sanitization (Table 3).

关于评分鲁棒性,我们认为仅增加评分基准数量 (Gehrmann et al., 2021) 可能不足,改进现有评分体系或许是更优解。明确定义的攻击才能催生明确定义的防御。我们的攻击可通过对抗样本检测 (Xu et al., 2018; Metzen et al., 2017; Carlini and Wagner, 2017) 等防御手段被识别或中和。在模型推理阶段,可在输入样本评分前应用流畅度/语法检测器。更简易的防御是检查是否存在连续非字母数字字符。实践中,基于语法的测量方法(如语法纠错 (GEC)$^{6}$)虽处于发展阶段,但前景可期 (Napoles et al., 2016; Novikova et al., 2017)。为评估文本语法,也可尝试解析预测结果与参考答案并计算依存三元组重叠的F1分数 (Riezler et al., 2003; Clarke and Lapata, 2006)。依存三元组用于比较两个文本的语法关系。我们发现这两种方法都能有效确保输入净化 (表 3)。

| System | Parse | GEC |
|---|---|---|
| Pointer-generator (coverage) (See et al., 2017) | 0.131 | 1.73 |
| Bottom-Up (Gehrmann et al., 2018) | 0.145 | 1.88 |
| PNBERT (Zhong et al., 2020) | 0.179 | 2.15 |
| T5 (Raffel et al., 2019) | 0.198 | 1.59 |
| BART (Lewis et al., 2020) | 0.170 | 2.07 |
| SimCLS (Liu and Liu, 2021) | 0.202 | 2.17 |
| Scrambled code + broken | 0.168 | 2.64 |

Table 3: Input sanitization checks, Parse and GEC, on the 100-sample CNNDM test split given by Graham (2015). They penalize non-summary texts, but may introduce more disagreement with human evaluation, e.g., high-scoring Pointer-generator on GEC. Thus, their actual summary-evaluating capabilities on linguistic features (grammar, dependencies, or co-reference) require further investigation.

表 3: Graham (2015) 提供的 100 个样本 CNNDM 测试集上的输入净化检查 (Input sanitization checks)、解析 (Parse) 和语法错误修正 (GEC)。这些方法会惩罚非摘要文本,但可能导致与人工评估产生更多分歧,例如 Pointer-generator 在 GEC 上得分较高。因此,它们在语言特征 (语法、依存关系或共指) 方面的实际摘要评估能力仍需进一步研究。
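
Two of the defences mentioned in this subsection are simple enough to sketch. The first flags a run of non-alphanumeric characters; the minimum run length of four is an assumed threshold. The second computes the F1-score of dependency triple overlap, taking the triples as given: the `(head, relation, dependent)` format and the example triples are hypothetical, and a real pipeline would obtain them from a dependency parser.

```python
import re

# Four or more consecutive characters that are neither word characters nor
# whitespace; the length 4 is an assumed threshold, and "_" counts as a word character.
SYMBOL_RUN = re.compile(r"[^\w\s]{4,}")


def has_symbol_run(text: str) -> bool:
    """Return True if the text contains a suspicious run of symbols."""
    return SYMBOL_RUN.search(text) is not None


def triple_f1(pred_triples, ref_triples) -> float:
    """F1 of the overlap between two sets of (head, relation, dependent) triples."""
    pred, ref = set(pred_triples), set(ref_triples)
    overlap = len(pred & ref)
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


pred = [("said", "nsubj", "police"), ("said", "obj", "man")]
ref = [("said", "nsubj", "police"), ("arrested", "obj", "man")]
score = triple_f1(pred, ref)  # one shared triple out of two on each side
```

A scoring pipeline could apply `has_symbol_run` as a cheap gate before scoring and use `triple_f1` as an additional grammar-aware signal alongside the existing metrics.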

6.3 Potential Objections on the Proposed Attacks

6.3 针对所提出攻击的潜在反对意见

The Flaw was Known. That many summarization scores can be gamed is well known. For example, ROUGE grows when prediction length increases (Sun et al., 2019). ROUGE-L is not reliable when the output space is relatively large (Krishna et al., 2021). That ROUGE correlates badly with human judgments at the system level has been revealed by the findings of Paulus et al. Moreover, BERTScore does not improve upon the correlation of ROUGE (Fabbri et al., 2021; Gehrmann et al., 2021).

缺陷已知。许多摘要评分的可操纵性已是众所周知。例如,ROUGE 分数会随预测长度增加而升高 (Sun et al., 2019)。当输出空间较大时,ROUGE-L 并不可靠 (Krishna et al., 2021)。Paulus 等人的研究揭示了在系统层面,ROUGE 与人类判断的相关性极差。此外,BERTScore 也未能改善 ROUGE 的相关性 (Fabbri et al., 2021; Gehrmann et al., 2021)。

The current work goes beyond most conventional arguments and analyses against the metrics, and actually constructs a system that sets out to game ROUGE, METEOR, and BERTScore together. We believe that clearly showing the vulnerability is beneficial for scoring remediation efforts. From a behavioural viewpoint, each step of defence against an attack makes the scoring more robust. Compared with the findings of Paulus et al., we cover more metrics and provide a more thorough overthrow of the monotonicity of the scoring systems, i.e., outputs from our threat model are significantly worse.

当前研究超越了大多数针对指标的常规争论与分析,实际构建了一个旨在同时操控ROUGE、METEOR和BERTScore的系统。我们认为清晰展示这种脆弱性有助于评分体系的改进。从行为学视角看,防御攻击的每一步都会增强评分的稳健性。与Paulus等人的发现相比,我们覆盖了更多指标,并对评分系统的单调性进行了更彻底的颠覆(即威胁模型的输出结果显著更差)。

Shoddy Attack? The proposed attack is easy to detect, so its effectiveness may be questioned. In fact, since we are the first to see automatic scoring as a decent NLU task and attack the most widely used systems, evasion attacks are relatively easy. This just goes to show that even the crudest attack can work on these scoring systems. Certainly, as the scoring system becomes more robust, the attack has to be more carefully crafted. For example, if the minimum accepted input to the scoring system is a "grammatically correct" sentence, an attacker may have to search for fluent but factually incorrect sentences. With a contest like this, we may end up with a robust scoring system.

拙劣攻击?所提出的攻击方法容易检测,因此其有效性可能受到质疑。事实上,由于我们是首个将自动评分视为合理自然语言理解(NLU)任务并攻击最广泛使用系统的团队,规避攻击相对容易实现。这恰恰说明即使是最粗糙的攻击也能对这些评分系统生效。当然,随着评分系统变得更鲁棒(robust),攻击手段也必须更精巧。例如,若评分系统要求输入至少是"语法正确"的句子,攻击者可能需要寻找流畅但事实错误的句子。通过这种对抗,我们最终可能获得一个鲁棒的评分系统。

As for attack scope, we believe it is more urgent to explore popular metrics, as they currently have the greatest impact on summarization. Nonetheless, we will expand to a wider range of scoring methods and catch up with emerging metrics such as BLEURT (Sellam et al., 2020).

就攻击范围而言,我们认为探索流行指标更为紧迫,因为它们目前对摘要化(summarization)的影响最大。不过,我们将扩展到更广泛的评分范围,并跟进BLEURT (Sellam et al., 2020)等新兴评分标准。

6.4 Potential Difficulties

6.4 潜在困难

Performing evasion attacks with bad texts is easy, when texts are as bad as broken sentences or scrambled codes in Table 1. In this case, the output of the threat system does not need to be scrutinized by human evaluators. However, human evaluation of attack examples may be required to identify more complex flaws, such as untrue statements or those that the document does not entail. Therefore, more effort may be required when performing evasion attacks on more robust scoring systems.

表1中所示,当文本质量极差(如断句或乱码)时,实施规避攻击轻而易举。这种情况下,威胁系统的输出无需经过人工评估即可识别。然而,对于更复杂的缺陷(如不实陈述或文档未包含的内容),可能需要人工评估攻击样本才能发现。因此,针对更健壮的评分系统实施规避攻击时,往往需要投入更多精力。

7 Conclusion

7 结论

We hereby answer the question: it is easy to create a threat system that simultaneously scores high on ROUGE, METEOR, and BERTScore using worse text. In this work, we treat automatic scoring as a regression machine learning task and conduct evasion attacks to probe its robustness or reliability. Our attacker, whose score competes with top-level summarizers, actually outputs non-summary strings. This further suggests that current mainstream scoring systems are not a sufficient condition to support the plausibility of summarizers, as they ignore the linguistic information required to compute sentence proximity. Intentionally or not, optimizing for flawed scores can prevent algorithms from summarizing well. The practical effectiveness of existing summarizing algorithms is not affected by this, since most of them optimize maximum likelihood estimation. Based on the exposed vulnerabilities, careful fixes to scoring systems that measure summary quality and sentence similarity are necessary.

我们在此回答这个问题:利用劣质文本创建一个同时在ROUGE、METEOR和BERTScore上得分较高的威胁系统是容易的。在本工作中,我们将自动评分视为回归机器学习任务,并通过规避攻击来探究其鲁棒性或可靠性。我们的攻击者生成的分数可与顶级摘要生成器竞争,但实际上输出的是非摘要字符串。这进一步表明,当前主流评分系统不足以支持摘要生成器的合理性,因为它们忽略了计算句子邻近度所需的语言信息。无论有意还是无意,针对有缺陷的分数进行优化可能会阻碍算法生成良好的摘要。现有摘要算法的实际效果不受此影响,因为它们大多优化的是最大似然估计。基于暴露的漏洞,有必要对衡量摘要质量和句子相似度的评分系统进行仔细修复。

Ethical considerations

伦理考量

The techniques developed in this study can be recognized by programs or humans, and we also provide defences. Our intention is not to harm, but to publish such attacks publicly so that better scores can be developed in the future and to better guide the development of summaries. This