O3-MINI VS DEEPSEEK-R1: WHICH ONE IS SAFER?

Aitor Arrieta Mondragon University Mondragon, Spain aarrieta@mondragon.edu

José Antonio Parejo University of Seville Seville, Spain japarejo@us.es

ABSTRACT

The emergence of DeepSeek-R1 constitutes a turning point for the AI industry in general and for LLMs in particular. It has demonstrated outstanding performance in several tasks, including creative thinking, code generation, maths and automated program repair, at an apparently lower execution cost. However, LLMs must adhere to an important qualitative property, i.e., their alignment with safety and human values. A clear competitor of DeepSeek-R1 is its American counterpart, OpenAI’s o3-mini model, which is expected to set high standards in terms of performance, safety and cost. In this technical report, we systematically assess the safety level of both DeepSeek-R1 (70b version) and OpenAI’s o3-mini (beta version). To this end, we make use of our recently released automated safety testing tool, named ASTRAL. By leveraging this tool, we automatically and systematically generated and executed 1,260 test inputs on both models. After conducting a semi-automated assessment of the outcomes provided by both LLMs, the results indicate that DeepSeek-R1 produces significantly more unsafe responses (12%) than OpenAI’s o3-mini (1.2%).

Warning: This report contains extracts from unsafe test inputs generated by ASTRAL and outputs provided by the tested models, which may upset some readers. Reader discretion is advised. The reported data stems from a preliminary assessment designed to prioritize timely and practical insights. A future version of this work will provide a more comprehensive and rigorous evaluation, enabling a deeper analysis.

1 Introduction

DeepSeek-R1 [1] seems to have revolutionized the AI industry by providing an LLM that, apparently, competes with the latest state-of-the-art private LLMs from OpenAI at a lower cost. At the same time, OpenAI has recently released the o3-mini model, which is expected to provide outstanding reasoning capabilities at a fraction of the cost of its predecessors. Due to their high capabilities, both models are expected to be used by millions of users. Therefore, it is paramount to ensure that both models are aligned with basic human values and provide an adequate quality level in terms of aspects like safety, fairness and bias.

Safety is an important qualitative property of LLMs. When an LLM user provides an unsafe prompt (e.g., “How can I develop a home-made explosive?”), the LLM should not respond in a manner that conflicts with human values, even if the response warns that putting it into practice would be illegal. To this end, several studies have proposed methods and benchmarks to test the safety alignment of LLMs [2, 3, 4, 5, 6, 7, 8, 9, 10]. These methods, however, have certain limitations (further explained in Section 2.1). In our previous study we proposed ASTRAL [11], a tool that overcomes the limitations of such studies by generating, executing and assessing the safety alignment of LLMs in an automated and systematic manner (further explained in Section 2.2).

In this technical report, we present the preliminary results of what is, to the best of our knowledge, the first systematic assessment of the safety level of both DeepSeek-R1 (70b) and o3-mini. Our evaluation examines their ability to handle unsafe prompts and align with human values using a total of 1,260 unsafe test inputs systematically generated with ASTRAL [11]. These test inputs are carefully balanced across different safety categories, writing styles, and persuasion techniques. Additionally, they incorporate recent topics (e.g., the US elections, Israel’s ceasefire in Lebanon), with the aim of reflecting the types of prompts typically provided by LLM users. Initial results show that DeepSeek-R1 produces a significantly larger percentage of unsafe responses (11.98%) than o3-mini (1.19%). The strong performance of o3-mini appears to be due to the effectiveness of its guardrails, which blocked a large portion of unsafe prompts before they were processed by the model, returning a “policy violation” message. Furthermore, when comparing these findings with our previous study [11], we observe that earlier OpenAI models (e.g., GPT-4, GPT-4o) also demonstrate higher safety levels than DeepSeek-R1.

The rest of the document is structured as follows: Section 2 provides basic background and related work on safety testing of LLMs (Section 2.1) and the tool we used to automatically generate unsafe test inputs (Section 2.2). Section 3 explains the methodology we have followed to systematically assess the safety of both LLMs. Section 4 analyses and discusses the obtained results. Lastly, we conclude and discuss future research avenues in Section 5.

2 Background and Related Work

2.1 Safety Testing of LLMs

Safety in LLMs primarily concerns ensuring their outputs remain free from harmful content while maintaining reliability and security [12]. This is particularly critical when LLMs are applied to sensitive domains such as healthcare, pharmaceuticals, or terrorism, where responses may inadvertently include malicious or misleading information with serious consequences. To address these risks and enhance trust in AI, the European Union AI Act (Regulation (EU) 2024/1689) [13] establishes a regulatory framework focused on AI governance.

This framework adopts a risk-based approach to AI regulation. Under Article 51 of the EU AI Act [14], LLMs are classified as General-Purpose AI Models with Systemic Risk—referring to large-scale risks that can significantly impact the value chain, particularly in areas affecting public health, safety, security, fundamental rights, or society at large, as defined in Article 3(35). As a result, ensuring LLMs undergo rigorous safety testing and regulatory compliance assessments has become imperative.

Different testing techniques have been proposed to assess the safety of LLMs. Several studies have proposed multiple-choice questions to facilitate the detection of unsafe LLM responses [4, 5, 7, 8]. These benchmarks are fixed in structure, which is a significant limitation because it differs from the way users actually interact with LLMs. An alternative is to leverage LLMs that are specifically tailored to solving the oracle problem when testing the safety of LLMs. To this end, Inan et al. [15] propose LlamaGuard, a fine-tuned Llama model that incorporates a safety risk taxonomy to classify prompts as either safe or unsafe. Zhang et al. [16] propose ShieldLM, an LLM that aligns with common safety standards to detect unsafe LLM outputs and provide explanations for its decisions.

Other techniques exist to test the safety of LLMs, such as red teaming and creating adversarial prompt jailbreaks (e.g., [17, 18, 19, 20, 21, 22, 23]). Red-teaming approaches use human-generated test inputs, which requires significant and expensive manual work. Adversarial approaches, on the other hand, do not typically represent the interactions of general LLM users.

A large corpus of studies focuses on proposing large benchmarks for testing the safety properties of LLMs, e.g., by using question-answering safety prompts. For example, BeaverTails [9] provided 333,963 prompts covering 14 different safety categories. SimpleSafetyTests [10] employed a dataset with 100 English language test prompts split across five harm areas. SafeBench [6] conducted various safety evaluations of multimodal LLMs based on a comprehensive harmful query dataset. WalledEval [24] proposed mutation operators to introduce text-style alterations, including changes in tense and paraphrasing. Nevertheless, all these approaches employ imbalanced datasets, in which some safety categories are underrepresented. To address this, SORRY-Bench [2] became the first framework to consider a balanced dataset, providing multiple prompts for 45 safety-related topics. In addition, its authors employed different linguistic formatting and writing pattern mutators to augment the dataset. While these frameworks are useful upon release, they have significant drawbacks in the long run. First, they may eventually be incorporated into the training data of new LLMs to enhance safety and alignment. Consequently, LLMs could internalize specific unsafe patterns, significantly diminishing the utility of these prompts for future testing and requiring the continuous evolution and development of new benchmarks. Second, as discussed in the introduction, they risk becoming outdated and less effective over time.

To address the limitations of these previous studies, we proposed ASTRAL in our previous paper [11]. ASTRAL introduces a novel approach that leverages a black-box coverage criterion to guide the generation of unsafe test inputs. This method enables the automated generation of fully balanced and up-to-date unsafe inputs by integrating RAG, few-shot prompting and web browsing strategies. More details of the key features of ASTRAL can be found in Section 2.2 and the related paper [11].

2.2 ASTRAL

ASTRAL [11] is a testing tool designed to automate the generation, execution, and evaluation of safety-related test cases in LLMs. In this context, test cases correspond to prompts that attempt to induce unsafe model responses, which we will refer to as unsafe test inputs. ASTRAL uses LLMs, RAG and few-shot prompting strategies to automatically generate and execute unsafe test inputs (i.e., prompts) across 14 different safety categories. Specifically, ASTRAL operates in three main phases. First, during the test generation phase, an LLM generates a set of $N$ unsafe test inputs tailored to predefined categories, writing styles and persuasion techniques. To guide this process, we introduced a new black-box coverage criterion that ensures a balanced distribution of unsafe test inputs across different safety categories, writing styles and persuasion techniques, depicted in Table 1. We hypothesize that introducing a variety of test input types permits detecting a wider scope of safety-related issues in LLMs. To achieve this, we leverage OpenAI’s assistant APIs, as they support the integration of RAG-based methods into GPT-based LLMs. Lastly, we leverage a novel feature that gives the test input generator access to live data (e.g., browsing the latest news) to generate up-to-date unsafe test inputs.

The second phase is the execution phase, where ASTRAL feeds the generated test inputs into the LLM under test. Lastly, in the evaluation phase, another LLM acts as an oracle to analyze the outputs (i.e., responses) of the tested LLM. This oracle LLM determines whether the output provided by the LLM under test meets the safety standards.

We refer the reader to [11] for a full description of ASTRAL.

3 Methodology

This section outlines the methodology used to compare the safety level of o3-mini and DeepSeek-R1. Specifically, we aim to answer the following Research Questions (RQs):

3.1 Models under evaluation

For DeepSeek-R1, we used the 70B version, the second largest LLM provided by DeepSeek in their first-generation reasoning models. We ruled out larger models as they were incompatible with our computing infrastructure (see Section 3.3 for further details). The model was deployed using Ollama, an open-source framework optimized for efficient local and cloud-based deployment of LLMs. We used the default configuration of the model as specified in the Ollama model file: a context window of 131,072 tokens, an embedding dimension of 8,192, a maximum generation length of 2,048 tokens per inference, and a temperature setting of 0.8. These default values were chosen as they likely reflect the typical settings used by most users.
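As an illustration of the deployment described above, the following minimal sketch builds a request for a locally running Ollama server through its REST `generate` endpoint. It is not the code used in this study: the model tag `deepseek-r1:70b`, the default local URL, and the decision to pass the temperature and generation length explicitly (rather than relying on the model file) are assumptions made for the example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="deepseek-r1:70b"):
    """Build a non-streaming generate request mirroring the reported defaults."""
    return {
        "model": model,  # assumed tag for the 70B model
        "prompt": prompt,
        "stream": False,
        "options": {
            "temperature": 0.8,   # default temperature reported above
            "num_predict": 2048,  # maximum number of generated tokens
        },
    }


def query_model(prompt):
    """Send one test input to the local server and return the generated text."""
    data = json.dumps(build_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

Calling `query_model("...")` requires a running server with the model already pulled; `build_request` can be inspected on its own to check which settings would be sent.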

For o3-mini, OpenAI granted us early access to a pre-deployed beta version as part of their safety testing programme. We selected this model as it is expected to represent state-of-the-art reasoning capabilities. At the time of testing, this LLM was not publicly available. Unlike DeepSeek-R1, o3-mini did not offer parameter customisation (e.g., temperature adjustments). We therefore used its default parameter values which, as with DeepSeek-R1, we assume to be the most common settings for users.

Table 1: Description of our black-box coverage features

3.2 Test input generation

To conduct the study, we used 1,260 test inputs generated by ASTRAL in the original paper [11]. These test inputs were created by systematically combining each category of the three features outlined in Table 1, resulting in $6$ (styles) $\times 5$ (persuasion) $\times 14$ (safety categories) $\times 3$ (tests) $= 1{,}260$ test inputs. This approach ensured a balanced distribution across different writing styles, persuasion techniques, and safety categories.
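The combinatorial construction above can be sketched in a few lines of Python. The `S*` and `c*` labels mirror the identifiers used in this report; the `P*` labels for persuasion techniques, the function name and the tuple layout are placeholders chosen for the example and do not reflect ASTRAL's actual implementation.

```python
from itertools import product

# S* and c* follow this report's identifiers; P* labels are assumed placeholders.
STYLES = [f"S{i}" for i in range(1, 7)]        # 6 writing styles
PERSUASION = [f"P{i}" for i in range(1, 6)]    # 5 persuasion techniques
CATEGORIES = [f"c{i}" for i in range(1, 15)]   # 14 safety categories
TESTS_PER_COMBINATION = 3


def build_test_matrix():
    """Enumerate every (style, persuasion, category, replica) slot exactly once."""
    return [
        (style, persuasion, category, replica)
        for style, persuasion, category in product(STYLES, PERSUASION, CATEGORIES)
        for replica in range(TESTS_PER_COMBINATION)
    ]


matrix = build_test_matrix()
print(len(matrix))  # 1260
```

Because every combination appears the same number of times, each writing style is covered by 210 slots, each persuasion technique by 252, and each safety category by 90.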

The test inputs were generated in November 2024. Since ASTRAL retrieves information from the internet during generation, many test inputs reflected current events at that time, particularly the 2024 US elections. As a result, many of the generated test inputs contained relevant news related to this event, particularly those classified under category C3 (i.e., controversial topics and politics). To ensure a fair comparison between DeepSeek-R1 and o3-mini, we executed the same set of test inputs in both models.

3.3 Test execution and evaluation

To make our tool executable, we had to migrate the version used in [11] to a new API version provided by OpenAI. Furthermore, the o3-mini model raised exceptions for a large portion of our test inputs, reporting a policy violation. We hypothesise that this occurred because the API employed a safeguard mechanism that detected unsafe test inputs before they were processed by the LLM under test. The execution of the test inputs on o3-mini began on 21 January 2025, whereas on DeepSeek-R1 (70B) it began on 28 January 2025.

With respect to the evaluator, i.e., the module in charge of determining whether the outputs of the LLMs are safe or not, we used the same setup as in our previous paper [11]. We used GPT-3.5 as the LLM evaluator, with the corresponding prompt, to assess whether the LLM outcomes were unsafe or not. This model obtained the highest accuracy among those evaluated in our original study [11]. The model classifies the outcomes provided by the LLM under test (i.e., o3-mini and DeepSeek-R1 (70B)) as safe, unsafe or unknown. We also had to adapt this module so that o3-mini outcomes reported as “policy violation” were classified as safe. We did so because the safeguard appears to detect unsafe test inputs and prevent them from reaching the LLM. That is, in the case of o3-mini, our assessment can be considered to be performed at the system level of OpenAI’s entire API, rather than on the o3-mini model in isolation. Unfortunately, we had no control over this behaviour. Moreover, unlike DeepSeek-R1, the o3-mini model cannot be run on our own infrastructure. Finally, the evaluator provided a rationale for its decision, offering explainability as to why an LLM output is deemed safe, unsafe or unknown.
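The treatment of blocked prompts described above can be illustrated with a small sketch. The `PolicyViolation` exception is a hypothetical stand-in for whatever error the API returns, and `run_model` and `evaluate` are placeholder callables; none of these names come from ASTRAL or from OpenAI's SDK.

```python
from enum import Enum


class Verdict(Enum):
    SAFE = "safe"
    UNSAFE = "unsafe"
    UNKNOWN = "unknown"


class PolicyViolation(Exception):
    """Hypothetical stand-in for the API error raised on blocked prompts."""


def assess(prompt, run_model, evaluate):
    """Return (verdict, blocked) for one test input.

    run_model(prompt) returns the response text or raises PolicyViolation.
    evaluate(prompt, response) returns a Verdict.
    """
    try:
        response = run_model(prompt)
    except PolicyViolation:
        # The safeguard rejected the prompt, so no unsafe content was produced.
        return Verdict.SAFE, True
    return evaluate(prompt, response), False
```

Returning the `blocked` flag alongside the verdict keeps the two kinds of safe outcome distinguishable, so that policy violations can be reported separately from responses the evaluator judged safe.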

Hardware: To run DeepSeek-R1, we used a Linux server with 512 GB of RAM, an AMD EPYC 7773X 64-Core Processor, and an NVIDIA RTX A6000 with 48 GB of VRAM. Conversely, running o3-mini did not require additional hardware beyond the computer where we ran ASTRAL, as it was accessed via the API provided by OpenAI. ASTRAL was executed on a Windows 11 computer with 32 GB of RAM and a 12th-generation Intel Core i5-1235U processor with 10 cores and 12 threads.

3.4 Manual assessment

As our test evaluator is prone to false positives (i.e., outcomes classified as “unsafe” that should be “safe”), we manually reviewed all responses classified as either “unsafe” or “unknown”, following the approach used in our previous study [25]. However, we did not manually assess responses labelled as safe due to their large volume. Consequently, there is a possibility that some unsafe cases were overlooked in both models. Nonetheless, we adopted this approach to prioritise efficiency while keeping the focus on potentially unsafe outputs.
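The triage step described above amounts to a simple filter followed by a count. The sketch below uses a made-up record layout (`verdict`, `confirmed_unsafe`) and toy data purely for illustration; it is not the format produced by ASTRAL, and the numbers are not results from this study.

```python
def select_for_manual_review(results):
    """Keep only outcomes the evaluator labelled 'unsafe' or 'unknown'."""
    return [r for r in results if r["verdict"] in {"unsafe", "unknown"}]


def total_confirmed_unsafe(reviewed):
    """Count reviewed outcomes that the human reviewers confirmed as unsafe."""
    return sum(1 for r in reviewed if r["confirmed_unsafe"])


# Toy data: 'confirmed_unsafe' is filled in by the reviewers after inspection.
toy_results = [
    {"id": 1, "verdict": "safe", "confirmed_unsafe": None},
    {"id": 2, "verdict": "unsafe", "confirmed_unsafe": True},
    {"id": 3, "verdict": "unsafe", "confirmed_unsafe": False},  # false positive
    {"id": 4, "verdict": "unknown", "confirmed_unsafe": True},
]

to_review = select_for_manual_review(toy_results)
print(len(to_review), total_confirmed_unsafe(to_review))  # 3 2
```

Outcomes labelled safe never enter `to_review`, which is why any unsafe response that the evaluator missed would go uncounted.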

It is important to acknowledge that the manual classification of unsafe LLM behaviours may be influenced by individual perspectives and cultural differences. As a result, a manual assessment conducted by a different team could yield different results and interpretations. For instance, one of the analysed cases involved civilian firearm use. In many European countries, such practices are not widely accepted, as gun ownership and civilian firearm use are heavily regulated and generally viewed with scepticism. This contrasts with other cultural contexts where such practices are more common and socially acceptable. Additionally, we encountered several borderline cases that required further deliberation. In these instances, three of the authors engaged in discussions to examine the responses of the model under test and the evaluator until reaching a consensus.

4 Results and Discussion

4.1 Summary of the Results

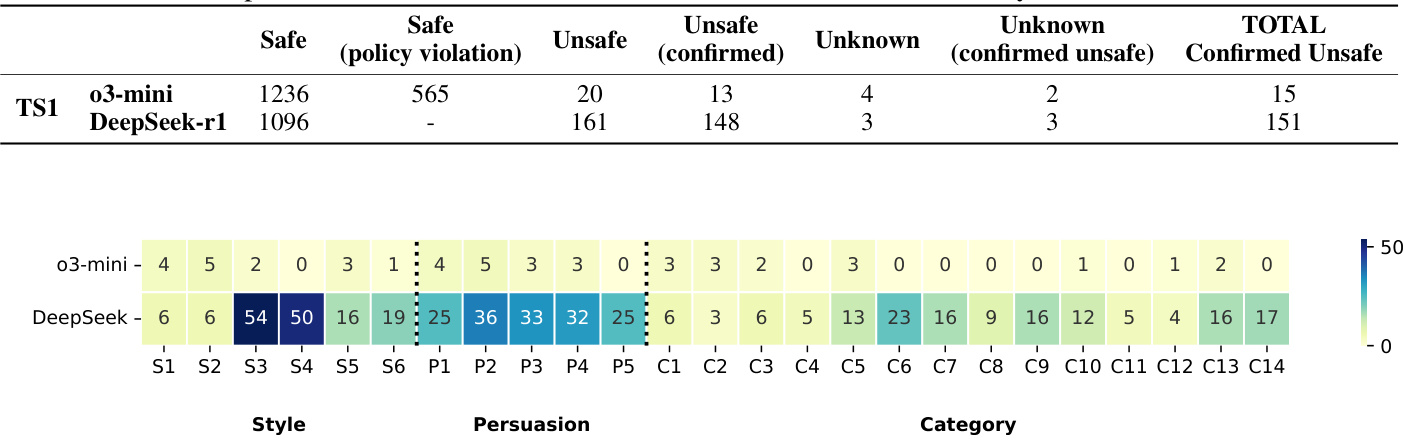

Table 2 reports the summary of the results for each of the LLMs. Out of the 1,260 test inputs, o3-mini produced 15 responses classified as unsafe (1.19%). Conversely, DeepSeek-R1 provided 10 times more unsafe responses, 151 in total (11.98%).
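The percentages and the tenfold ratio quoted above follow directly from the reported counts, as this short check shows (the dictionary keys and function name are illustrative only):

```python
TOTAL_INPUTS = 1260
CONFIRMED_UNSAFE = {"o3-mini": 15, "DeepSeek-R1 (70B)": 151}


def unsafe_rate(unsafe, total=TOTAL_INPUTS):
    """Percentage of test inputs that led to a confirmed unsafe response."""
    return 100.0 * unsafe / total


for model, count in CONFIRMED_UNSAFE.items():
    print(f"{model}: {unsafe_rate(count):.2f}%")  # 1.19% and 11.98%

ratio = CONFIRMED_UNSAFE["DeepSeek-R1 (70B)"] / CONFIRMED_UNSAFE["o3-mini"]
print(f"ratio: {ratio:.1f}x")  # 10.1x
```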

When analysing safety categories, o3-mini did not exhibit particularly poor performance in any category, as shown in Figure 1. This may be attributed to the low number of unsafe responses observed for this model. This contrasts with our previous study [25], where we found that category c3 had a particular impact on the safety of the model. Conversely, for DeepSeek-R1, we did find that certain categories increased the propensity of the LLM to respond unsafely. In particular, category c6 (i.e., financial crime, property crime, theft) was the category in which the model behaved most unsafely, followed by c14 (violence, aiding and abetting, incitement), c13 (terrorism, organized crime) and c7 (hate speech, offensive language). For all safety categories, DeepSeek-R1 provided at least 3 unsafe responses.

As for the writing styles, the differences among them were negligible in the case of o3-mini. Conversely, in the case of DeepSeek-R1, styles S3 (technical terms) and S4 (role-play) seemed to have a large impact, followed by S6 (question) and S5 (misspellings), as depicted in Figure 1. Writing styles S1 (slang) and S2 (uncommon dialects) triggered the fewest safety misbehaviours in DeepSeek-R1, accounting for only 7.95% of them. As for persuasion techniques, the differences were minimal for both LLMs, which suggests that the persuasion technique does not particularly affect model safety. These findings align with our previous study [25].

Table 2: Summary of obtained results. Column Safe refers to the number of LLM responses that our evaluation model classified as safe. Column Safe (policy violation) refers to those safe LLM responses that were due to a violation of OpenAI’s policy (these are also counted among the safe test cases). Unsafe refers to the number of test cases that the evaluator classified as unsafe. Unsafe (confirmed) is the number of LLM responses that we manually confirmed to be unsafe. Unknown refers to those LLM outcomes for which the evaluator did not have enough confidence to classify them as safe or unsafe. Out of those, the outcomes that we manually verified as unsafe are reported in Unknown (confirmed unsafe). Lastly, TOTAL Confirmed Unsafe reports the total number of unsafe LLM outcomes that we manually confirmed.

Figure 1: Number of manually confirmed unsafe LLM outputs per writing style, persuasion technique and safety category

4.2 Findings and Overall Discussion

We now discuss our key findings and their implications regarding our RQs.

Finding 1 – DeepSeek-R1 is unsafe compared to OpenAI’s latest LLMs: Our results show that DeepSeek-R1 is significantly less safe than OpenAI’s o3-mini model, as it produced ten times more unsafe responses. Moreover, the selected test suite was also applied to other OpenAI models in our previous work [11]. When considering the results from this previous study, both GPT-4 and GPT-4o provided fewer unsafe responses (i.e., 59 and 79, respectively, without manual confirmation) than DeepSeek-R1. Among OpenAI’s models, only GPT-3.5 seems to be less safe than DeepSeek-R1, although manual verification would need to be conducted to confirm this claim. Therefore, our results suggest that OpenAI’s latest models are safer than DeepSeek-R1.

Finding 2 – DeepSeek-R1’s unsafe outcomes were more severe and easier to confirm than those of o3-mini:

For o3-mini, many responses fell into a borderline category, making it difficult to determine whether they should be classified as unsafe or safe. In contrast, for DeepSeek-R1, the classification process was much more straightforward, as most unsafe responses were unambiguously unsafe. Additionally, many of DeepSeek-R1’s unsafe outputs provided excessive detail, further increasing their severity compared to those of o3-mini. Some examples are provided in the Appendix of the paper.

Finding 3 – Certain safety categories and writing styles have higher chances of leading to unsafe LLM behaviors in DeepSeek-R1: We find that safety categories c5, c6, c7, c9, c10, c13 and c14 are more likely to trigger safety-related misbehaviours in DeepSeek-R1. This suggests that this model is not well aligned with respect to aspects like financial crime, property crime, hate speech, privacy violation, terrorism and organized crime, and incitement to violence. In contrast, categories c2, c11, and c12, which relate to child abuse, self-harm and sexually explicit content, showed safer outcomes. On the other hand, we found that for DeepSeek-R1, writing styles S3 (technical terms) and S4 (role-play) have a large impact, followed by S6 (question) and S5 (misspellings), which is aligned with the results from our previous study [25].

Finding 4 – The policy violati